
Abstract

This research investigates fast and precise Virtual Reality (VR) sketching methods with different tool-based asymmetric interfaces. In traditional real-world drawing, artists commonly employ an asymmetric interaction system in which each hand holds a different tool, facilitating diverse and nuanced artistic expression. In VR, however, users are typically limited to using identical tools in both hands for drawing. To bridge this gap, we introduce specifically designed tools in VR that replicate the varied tool configurations found in the real world. We developed a VR sketching system supporting three hybrid input techniques using a standard VR controller, a VR stylus, and a data glove. We conducted a formal within-subject user study with four conditions and three tasks, comparing three asymmetric input methods with each other and with a traditional symmetric controller-based solution through questionnaires and performance measures. The results showed that, compared with the symmetric dual-controller interface, asymmetric input with gestures significantly reduced task completion times while maintaining good usability and input accuracy with a low task workload. This shows the value of asymmetric input methods for VR sketching. We also found that the overall user experience could be further improved by optimizing the tracking stability of the data glove and the VR stylus.

1. Introduction

This paper explores how tool-based asymmetric interfaces can be used for fast and precise sketching in Virtual Reality (VR). The concept of a tool-based asymmetric interface, inspired by prior work (Zou et al., 2021), aims to enhance task efficiency and usability by integrating multiple specially designed tools into one input system. This approach allows users to hold a different tool in each hand, enabling them to perform various subtasks within a single overarching task. In conventional art and design production, a content creator often uses a different tool in each hand. For example, a painter may hold a palette in one hand and a brush in the other. Similarly, a three-dimensional (3D) modeler could use a stylus with a touchpad in one hand and a keyboard in the other. Guiard (1987) points out that most skilled manual activities involve two hands with different roles, often requiring very different tools. However, in typical VR design applications such as Tilt Brush or Gravity Sketch, input is mainly provided via two controllers with identical physical form factors and affordances, even though their representations in VR can differ between hands. Therefore, we aim to recreate users’ real-world drawing experience by implementing an asymmetric interaction system based on various tools. Through this tool-based asymmetric approach, we strive to enhance users’ sketching experience and improve their efficiency.

There has been considerable research on using VR for content creation and modeling (Bonnici et al., 2019; Yu et al., 2021; Yuan & Huai, 2021), with many VR applications for 3D design. However, there has been relatively limited research on asymmetric interfaces where the user uses two different physical input devices to interact in VR, and few formal studies have compared asymmetric to symmetric input methods in VR.

The concept of what you see is what you get (WYSIWYG) is often applied in VR interfaces. Many relatively mature VR design applications with WYSIWYG interfaces are now available (Google, 2021; GravitySketch, 2021). These systems enable content creators to design and shape 3D objects directly in the virtual environment (Machuca et al., 2018). However, most VR input devices (e.g., the HTC VIVE and Meta Quest controllers) may not provide a realistic design experience. In contrast to conventional 2D sketching, contemporary VR equipment cannot provide immediate tangible or tactile feedback. This absence of tactile feedback may make VR experiences feel less vivid and can potentially lead to unsatisfactory interactions (Cipresso et al., 2018).

Our work mainly investigates two research questions: (1) How can stylus and gesture input be integrated into asymmetric interaction for fast and precise sketching in VR? (2) What are the benefits of integrating stylus and gesture input in asymmetric interaction for VR sketching tasks? Our research hypotheses are:

H1: The integration of gesture input and stylus capabilities in asymmetric interaction can contribute to immersive and efficient VR sketching experiences.

The advantages of gesture input in VR interaction have been suggested, but not much research has explored the implementation of gesture input in VR sketching. In addition, many studies have demonstrated using a stylus for VR sketching, but few have explored the effect of the stylus when used with other input methods in a hybrid way. In this study, we want to explore the advantage of using asymmetric gesture and stylus input for 3D sketching.

H2: The asymmetric approach combining stylus and hand-gesture input outperforms the standard symmetric method, especially in complex VR sketching tasks that require both speed and precision.

We aim to investigate how well diverse asymmetric interactions support VR sketching tasks by comparing the different interaction techniques available in the system. Depending on the task scenario, each pairing of input devices is expected to suit particular performance requirements. The system aims to enable seamless switching between input methods so that users can achieve the desired outcomes with greater ease and efficiency. Through this approach, we intend to identify which interaction methods are better suited to the varying requirements of basic VR sketching tasks.

Using customized devices with form factors and functions similar to traditional creative tools makes it possible to support more intuitive interaction and improve overall user experiences. For example, the Logitech VR Ink is a stylus developed to support 2D and 3D design in VR. Its form factor enables it to be held like a pen and to provide precise input. This allows users to explore asymmetric input methods using tools specifically designed for 3D object creation tasks in VR. In this paper, we present an asymmetric system that combines different input modalities for VR sketching and then compare their usability through spatial sketching tasks. The main contributions of this research are:

We implement hybrid asymmetric interfaces that integrate controller, stylus, and gesture input for spatial VR sketching tasks;

We conduct a formal evaluation of the developed asymmetric interfaces in VR sketching, comparing the accuracy, efficiency, and user experiences;

We present design guidelines for asymmetric interfaces in VR sketching.

In Section 2, we review related research on immersive sketching in VR. In Section 3, we describe the prototype platform we developed for implementing asymmetric VR interfaces. We present a user study assessing the usability of and user preference for the system in Section 4. The results are described in Section 5, and a discussion of the results and design principles is presented in Section 6. Finally, we conclude the paper with the limitations of the work and future research directions.

2. Related work

Our research is based on earlier research on immersive sketching, VR sketching input, and asymmetric bi-manual interaction. In this section, we describe related work in each of these areas and then discuss the research gap we are addressing and the novelty of our work.

Table 1 lists the references cited in each subsection of this section for ease of reading and reference.

Table 1. Citations in different sections in related work.

2.1. Immersive sketching tasks

Immersive VR is an ideal medium for sketching and 3D design, as the designer can create directly in 3D space. This can help the designer save time when completing basic tasks in the early stages of design (Israel et al., 2009; Smallman et al., 2001).

From 2007 to 2015, many early-stage immersive sketching systems were based on the CAVE Automatic Virtual Environment (CAVE) (Csapo et al., 2013; Dorta, 2007; Jackson & Keefe, 2016; Perkunder et al., 2010; Wiese et al., 2010). However, due to hardware limitations, these early immersive sketching experiences were often imperfect and inaccurate (Israel et al., 2009). As a result, designers initially felt that immersive sketching could not be used for their design work, which required a large amount of accurate input (Israel et al., 2013; Rahul et al., 2017; Tano et al., 2012).

Following the recent growth in the use of VR HMDs, additional hardware has been developed to provide more accurate input in VR environments. One of the main drawbacks of immersive drawing with current systems is the lack of functionality compared with traditional 2D sketching, such as stroke capture and real-time feedback, haptic feedback, visual depth cues, and motion parallax (Israel et al., 2009, 2013; Rahul et al., 2017). Much hardware and interface research has therefore addressed these limitations; for example, some studies let users draw in virtual space on a physical surface that provides haptic feedback and accurate input (Boddien et al., 2017; Drey et al., 2020). Some researchers use assistive tools to beautify user-created strokes (Machuca et al., 2018), while others add visual cues to support accurate sketching (Rahul et al., 2017).

2.2. VR sketching input

2.2.1. Near-realistic interaction

An essential part of VR interaction design is the creation of interface metaphors that help users achieve as many different goals as possible in the virtual world (Jerald, 2015). These metaphors span a range of interaction fidelities, from realistic to non-realistic. Near-realistic interaction uses realistic virtual environments and avatars controlled through motion capture, whereas non-realistic interaction employs stylized or abstract virtual scenes and avatars with unique features or abilities. Both modes have advantages and disadvantages across VR applications and user experiences, and both raise ethical concerns that require careful consideration (Rogers et al., 2022; Slater et al., 2020; Wu et al., 2021). Because sketching tasks involve a degree of human-factors evaluation, a near-realistic interaction metaphor is more appropriate in this context than a non-realistic one, as it reduces potential adverse training effects caused by problems such as user adaptation.

There has been some research on using different input devices to create near-realistic interaction in VR. For example, Van Rhijn and Mulder (2006) presented a desktop near-field VR system called the Personal Space Station (PSS), in which the user could hold a different input device in each hand. They found that using an input device purposefully designed to detect and respond to users’ gestures and movements significantly enhanced the user’s interaction with the virtual environment. Extending Van Rhijn’s work, Lakatos et al. (2014) prototyped a spatially aware controller named T(ether), allowing users to collaborate in 3D modeling through an iPad-based VR viewport with touch-screen input and pinching gestures. Their research showed the potential of spatially aware controllers for constructing 3D objects in the virtual environment.

2.2.2. Stylus input

One of the most common tools used in traditional sketching is the pen, and there has been considerable research on stylus input devices. Input device accuracy is critical for efficient drawing (Israel et al., 2009; Rahul et al., 2017; Tano et al., 2013). Stylus input devices are often used for precise input in traditional human-computer interaction (HCI) (Jerald, 2015; LaViola et al., 2017). Previous research has found that stylus input is more reliable than finger and mouse interaction (tapping and dragging) on tablets and phones (Annett et al., 2014; Batmaz et al., 2020; Chung & Shin, 2015; Cockburn et al., 2012). A stylus also provides a user experience similar to handwriting or sketching (Li et al., 2020). Li et al. (2020) studied appropriate postures for holding a pen-like input device in a VR environment. They concluded that the Tripod at the Rear End (TRE) posture supports a wider range of movement distances and free tilt angles. Moreover, in poking tasks, stylus input devices performed better than traditional wrist-based input. Chen et al. (2022) investigated different input methods for 3D drawing across varying hardware configurations. Their results showed that a stylus provided notable benefits over the conventional controller in precision-oriented 3D drawing tasks, contributing to a broader understanding of suitable input modalities for such applications.

There are many stylus input devices for mobile phones and tablets on the market (Annett et al., 2020), and several papers have discussed how to optimize and adapt these design tools for VR applications. These studies ranged from position-tracking systems that achieve angle-free input (Tian et al., 2007, 2008) to pressure-sensitive systems that increase input accuracy (Xin et al., 2012). Grossman et al. (2003) found that the lack of physical feedback and visual cues caused inaccurate operations in VR drawing. Drey et al. (2020) introduced a method that combines stylus and tablet input in a virtual environment, allowing users to sketch and interact with a 2D UI in VR. The tablet provides a constrained 2D surface for sketching in a 3D environment, while the stylus also allows mid-air sketching. The combination of stylus and tablet input can assist with precisely manipulating objects or selecting from a configuration menu. However, the authors noted that this method may not be well suited to frequent switching between 2D and 3D creation tasks.

The Logitech VR Ink stylus is an input device that provides analog force-sensitive controls and dynamic haptic feedback with 3D position tracking for VR applications. Currently, it works only with the HTC Vive, Varjo, and Valve Index head-mounted displays (HMDs). Our research extends existing work on asymmetric input systems in VR by combining the Logitech VR Ink stylus with a data glove and an HTC VIVE controller to handle the different requirements of VR sketching tasks.

2.2.3. Hand gesture input

We bring hand gestural input into the interaction system of this research because human hands, as a natural input device, can play an essential role in complex VR interactions, just as they do with the many hand tools developed and refined over thousands of years. In VR, the intuitive nature of two-handed interaction makes it worth preserving. When creating content in a VR environment, the human hand is one of the most important tools available (Jerald, 2015). Hence, much hardware has been developed to support gestural input in hand-dominated interaction contexts (Jerald, 2015), and hand tracking is one of the foundations of 3D interaction (LaViola et al., 2017).

As wearable input devices have become commercially available, the number of hand gesture-based input methods and related studies has increased. Compared with stylus input devices, gesture-based input devices are easier to learn and use because they are intuitive and cause less fatigue (Zhang et al., 2017). There are currently two common ways of capturing gesture-based input: through a wearable device (e.g., a data glove) or directly with various sensors (e.g., Leap Motion) (Li et al., 2020). Data gloves are one of the most common solutions for gesture capture (LaViola, 2013); they can capture a user’s hand gestures and movements and provide haptic feedback in VR (Kim et al., 2009). Connolly et al. (2017) found that data gloves are versatile and efficient for virtual object manipulation in VR. Early data gloves tended to be bulky and did not provide a good user experience (Jerald, 2015). Therefore, ongoing research on data gloves has focused on creating a lightweight form factor (Hinchet et al., 2018) and optimizing haptic feedback (Markvicka et al., 2019). The ideal glove-based input method needs to include both bend sensing and pinch sensing to make specific interactions easier (LaViola et al., 2017). The hybrid model of the StretchSense data glove (StretchSense, 2020) integrates both sensing modes and is expected to meet the interaction requirements of the VR 3D design workflow.

In contrast to data gloves, hand gestures captured directly by camera sensors are more accessible, although this approach cannot provide haptic feedback in most cases (Gunawardane & Medagedara, 2017; Maitlo et al., 2019). Brahmbhatt et al. (2020) created a dataset of grasps of 25 household objects by analyzing participants’ hand poses and object poses from Red, Green, Blue, and Depth (RGB-D) images. They claimed that the dataset could potentially improve gesture-based input experiences in AR/VR. Maitlo et al. (2019) proposed a hand gesture-based text input method for wearable AR/VR devices. The method combines image processing techniques and Convolutional Neural Network (CNN) models to optimize the computational process and provide better performance than traditional recognition methods. Some VR devices (e.g., the Valve Index controllers) capture the user’s finger movements through sensors on the handheld controller (Valvesoftare, 2020). In contrast, devices such as the Oculus Quest 2 capture user hand gestures directly through built-in cameras on the HMD (Oculus, 2020).

Despite the importance of hand gestural input for a natural user experience, very few studies on immersive sketching have addressed it. This may be due to hardware limitations that prevent hand gesture input from fully exploiting the advantages of realistic interaction. Previous studies (De Araújo et al., 2012; Taele & Hammond, 2017) have suggested that camera-based bare-hand interaction offers immersion advantages and can reduce the user’s workload in 3D tasks. Their work provided valuable insights into the potential benefits of bare-hand interaction for sketching tasks. However, both studies were conducted outside VR, so their proposed methods for bare-hand interaction in sketching tasks have not been validated in a VR environment. Therefore, in our current research, we opted for a straightforward and intuitive “what you see is what you get” approach as the foundational logic for gesture interaction in VR sketching. This choice provides a clear and coherent interaction experience tailored to VR, building on existing knowledge while addressing the specific context of our study.

Accordingly, our research aims to integrate gesture input into the input system and, through a within-subject comparison of input conditions, to identify application scenarios in which gesture input benefits immersive sketching tasks.

2.3. Asymmetric bi-manual interaction

One possible way to make gesture input work in a VR sketching task is to integrate it into existing input systems to complement other input devices and form different modes of two-handed interaction for different application scenarios. Some studies discuss how to implement two-handed interaction in virtual environments.

There is much research on bimanual interaction, including in VR. Guiard (1987) classified bimanual interaction as symmetric (each hand performs the same action) or asymmetric (each hand performs a different action) and suggested that asymmetric interaction is more appropriate because it is closer to how people habitually use their hands.

In bimanual asymmetric interaction, the dominant hand usually performs precise motor tasks while the non-dominant hand provides ergonomic support (Jerald, 2015). For example, Bai et al. (Huidong et al., 2017) created an asymmetric bimanual interface for manipulating 3D virtual objects in a mobile VR application, combining a handheld controller with gesture input to provide natural and intuitive 3D manipulation. The value of asymmetric bimanual input for optimizing the user experience has been shown in many studies (Hinckley et al., 1994; Huidong et al., 2017; Liu et al., 2021; Poupyrev et al., 1998), which suggests the need for asymmetric interfaces to enhance the user experience in specific VR tasks. Bimanual interaction is consistent with human manipulation habits that have evolved over time. However, due to current hardware and system limitations, such as the inability to provide effective haptic feedback and poor tracking stability, two-handed gesture interaction is insufficient to be used on its own as an input system for VR sketching tasks. In our study, we developed an asymmetric interaction system that combines gesture input with other specially designed input devices to create a smooth user experience. This research explores how integrating stylus and gesture input into an asymmetric interface affects the performance and usability of VR sketching.

3. System design

3.1. Design requirements

This research aims to provide a solution for fast and precise sketching in VR to create spatial points and lines, and the targeted input system needs to meet the following requirements:

The interaction system should support fast 3D sketching;

The interaction system should support precise input in 3D;

The interaction system should be easy and intuitive to use and learn.

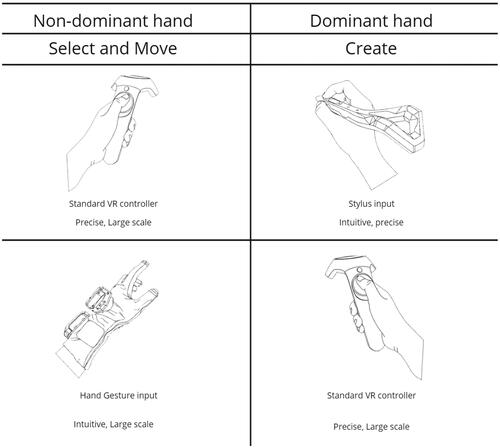

Figure 1 shows the characteristics of the various input modalities, summarized from the reviewed papers. The mainstream VR controller benefits from stable tracking and high accuracy in tasks with a wide range of movement (Valvesoftare, 2020). Stylus input offers more freedom and better performance than traditional wrist-based input (Li et al., 2020). Gesture input provides ergonomic benefits for tasks that require large-scale movement and rotation (Jerald, 2015). Because asymmetric interaction has already shown benefits in prior work, we expected that combining these input tools into an asymmetric interaction system would maximize the advantages of each.

3.2. System implementation

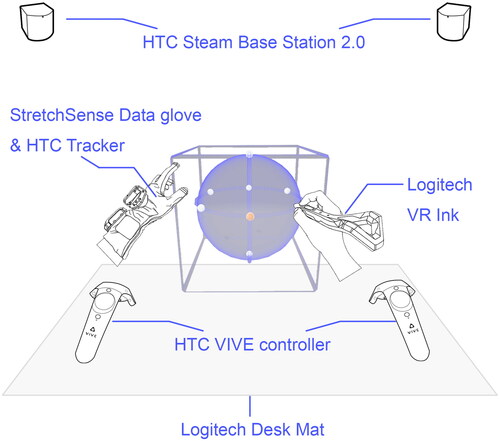

We used a system setup similar to that of prior research on tool-based asymmetric interaction with a stylus (Zou et al., 2021). As shown in Figure 2, the system included two HTC SteamVR Base Station 2.0 units, an HTC VIVE HMD (1440 × 1600 pixels per eye) with two VR controllers, a Logitech VR Ink stylus, and a StretchSense data glove with an attached VIVE Tracker. The VR HMD was connected to a desktop PC (Intel Core i7-11700 at 2.50 GHz, 32 GB memory, RTX 3060 GPU, Windows 11). We placed a 900 × 800 mm Logitech desk mat on the desk to reduce reflections from its surface and thereby improve overall tracking stability.

The whole VR system was developed with the Unity engine. We first integrated Open Brush into our VR tool-based system to support advanced 3D sketching. The VR environment used the default Open Brush settings, and the hand model for gesture input came from the StretchSense assets.

The original Open Brush system only allowed users to select objects or draw 3D sketches using standard VR controllers. We extended object selection with natural gestures and 3D sketching with the stylus. The StretchSense data glove supports real-time hand gesture detection and can be integrated with the Unity engine. By attaching a VIVE Tracker to the back of the glove, we can capture the user’s hand position, orientation, and gestures in real time, so users can directly move and rotate the virtual objects rendered in the VR system with their hands. Drawing 3D sketches with a controller is quite different from how people usually paint with a stylus or brush. To simulate a natural painting experience, our system also enables 3D sketching with the Logitech VR Ink pen, which is rendered as a pen in the VR system. Hence, our system consists of three input devices with four interaction modes (one symmetric dual-controller interaction and three tool-based asymmetric interactions).
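Moving and rotating an object with the gloved hand amounts to keeping the grabbed object rigidly attached to the tracked hand while a pinch is held. The Python sketch below is a minimal, engine-agnostic illustration under stated assumptions: the hand pose is assumed to come from the VIVE Tracker on the glove and the boolean `pinching` from the glove's gesture recognition. `Pose`, `Grabber`, and the quaternion helpers are hypothetical names, not part of the StretchSense SDK, Unity, or Open Brush.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def q_mul(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b (as rotations: apply b first, then a)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def q_conj(q: Quat) -> Quat:
    """Conjugate, which equals the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_axis_angle(axis: Vec3, angle_rad: float) -> Quat:
    """Unit quaternion for a rotation of angle_rad about a unit axis."""
    s = math.sin(angle_rad / 2)
    return (math.cos(angle_rad / 2), axis[0] * s, axis[1] * s, axis[2] * s)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q: q * (0, v) * conj(q)."""
    _, x, y, z = q_mul(q_mul(q, (0.0, v[0], v[1], v[2])), q_conj(q))
    return (x, y, z)


@dataclass
class Pose:
    pos: Vec3
    rot: Quat


class Grabber:
    """Keeps a grabbed object rigidly attached to the hand while pinching."""

    def __init__(self) -> None:
        self.offset: Optional[Pose] = None  # object pose in the hand's frame

    def update(self, pinching: bool, hand: Pose, obj: Pose) -> Pose:
        if not pinching:
            self.offset = None  # released: the object stays where it is
            return obj
        if self.offset is None:
            # Pinch just started: store the object pose relative to the hand.
            inv = q_conj(hand.rot)
            rel = (obj.pos[0] - hand.pos[0], obj.pos[1] - hand.pos[1], obj.pos[2] - hand.pos[2])
            self.offset = Pose(rotate(inv, rel), q_mul(inv, obj.rot))
        # While pinching: object pose = hand pose composed with the stored offset.
        d = rotate(hand.rot, self.offset.pos)
        pos = (hand.pos[0] + d[0], hand.pos[1] + d[1], hand.pos[2] + d[2])
        return Pose(pos, q_mul(hand.rot, self.offset.rot))
```

For example, an object 1 unit along x from a pinching hand at the origin ends up at roughly (0, 2, 0) after the hand moves to (0, 1, 0) and turns 90° about the z axis. In Unity itself, temporarily parenting the object's Transform to the tracked hand achieves the same effect; the explicit offset is shown only to make the geometry visible.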

4. User study

4.1. Task design

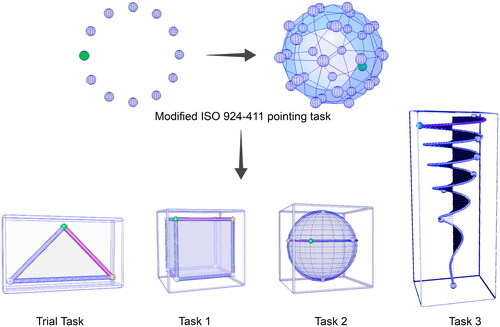

We applied a study design similar to that of Zou et al. (2021), modifying the ISO 9241-411 pointing task (ISO, 2012) to measure the usability and overall performance of the different interaction methods in this research. As shown in Figure 3, we deconstructed the lines drawn in VR sketching into straight lines, curves, and complex spatial curves, and then adapted the 2D task to 3D to reproduce these types of strokes in the VR sketching process. The four tasks, including the trial task, represent different levels of complexity encountered in VR sketching. The simplest, the trial task, represents the basic straight-line sketching task. From Task 1 to Task 3, the complexity of the strokes to be created and their axial variation gradually increase. Deconstructing complex VR sketching into these fundamental building blocks allows us to quantify the stability and accuracy of user output in VR sketching tasks using mathematical methods.

Figure 3. Modified ISO 9241-411 pointing task (top) (Zou et al., 2021) and our task design in the user study (bottom). Trial task: Drawing straight lines on a plane. Task 1: Drawing straight lines in three-dimensional space. Task 2: Drawing two-axis curved lines in space. Task 3: Drawing three-axis curved lines in space.
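One way to quantify how closely a stroke follows its red reference route is the distance from each sampled stroke point to the reference polyline. The sketch below is an assumption for illustration only: this section does not specify the accuracy metric used in the study, and `point_segment_distance` and `stroke_deviation` are hypothetical names.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def point_segment_distance(p: Vec3, a: Vec3, b: Vec3) -> float:
    """Euclidean distance from point p to the segment from a to b."""
    ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    ap = (p[0] - a[0], p[1] - a[1], p[2] - a[2])
    denom = ab[0] ** 2 + ab[1] ** 2 + ab[2] ** 2
    if denom == 0:
        t = 0.0  # degenerate segment: compare against point a
    else:
        # Parameter of the closest point on the infinite line, clamped to [0, 1].
        t = max(0.0, min(1.0, (ap[0] * ab[0] + ap[1] * ab[1] + ap[2] * ab[2]) / denom))
    closest = (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])
    return math.dist(p, closest)


def stroke_deviation(stroke: Sequence[Vec3], reference: Sequence[Vec3]) -> Tuple[float, float]:
    """Mean and maximum distance from stroke samples to a reference polyline."""
    if len(reference) < 2 or not stroke:
        raise ValueError("need a non-empty stroke and at least two reference points")
    dists = [
        min(point_segment_distance(p, reference[i], reference[i + 1])
            for i in range(len(reference) - 1))
        for p in stroke
    ]
    return sum(dists) / len(dists), max(dists)
```

For a straight route from (0, 0, 0) to (1, 0, 0) and stroke samples (0, 0.1, 0), (0.5, 0, 0), and (1, 0, -0.1), the mean deviation is about 0.067 and the maximum is 0.1. This measure is one-sided: it does not penalize a stroke that stops short of the green sphere, which a symmetric measure (also sampling the reference against the stroke) would capture.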

In the trial task, three spheres are evenly positioned on the surface of a large, translucent, light-blue triangular panel and connected by a red reference pipe. In the three formal tasks, the user must draw a stroke from a yellow sphere to a green one, following the preset red route. The user can move and rotate the object with the non-dominant (left) hand by interacting with the semi-translucent bounding frame surrounding it, while drawing with the dominant (right) hand. A task concludes once the user has completed all predetermined routes. During the study, the user’s field of view displays only two spheres (one yellow and one green), the red reference line connecting them, and the reference parent object. After the user completes each stroke, the next pair of spheres and its reference line appear. To ensure that the user’s view is not obstructed during sketching, the spheres, the reference lines, the reference parent objects, and the frame that controls object movement were assigned differently colored translucent materials. The user-generated curves, in contrast, use an opaque highlight material so that the user’s output remains clearly visible. The following paragraphs outline the modifications we made for each task; the three tasks are increasingly complex.
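The route-by-route flow described above (show one pair of spheres, advance after each stroke, give feedback, and end after the last route) can be sketched as a small sequencer that also logs completion times. This is a hypothetical illustration, not the study software: `Route`, `TaskSequencer`, and the `on_stroke_done` callback (standing in for the auditory prompt and for spawning the next spheres) are assumed names, and the injectable clock exists only to make the logic testable.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Route:
    start: Vec3  # yellow sphere
    end: Vec3    # green sphere


class TaskSequencer:
    """Presents routes one at a time and logs per-stroke and total task time."""

    def __init__(self, routes: Sequence[Route],
                 clock: Callable[[], float] = time.perf_counter,
                 on_stroke_done: Optional[Callable[[int], None]] = None) -> None:
        if not routes:
            raise ValueError("a task needs at least one route")
        self.routes = list(routes)
        self.index = 0
        self.clock = clock
        self.on_stroke_done = on_stroke_done or (lambda completed: None)
        self.stroke_times: List[float] = []
        self._last: Optional[float] = None

    @property
    def finished(self) -> bool:
        return self.index >= len(self.routes)

    def current_route(self) -> Optional[Route]:
        """Only this route's spheres and reference line would be visible."""
        return None if self.finished else self.routes[self.index]

    def begin(self) -> None:
        self._last = self.clock()

    def complete_stroke(self) -> None:
        if self._last is None or self.finished:
            raise RuntimeError("task is not running")
        now = self.clock()
        self.stroke_times.append(now - self._last)
        self._last = now
        self.index += 1
        self.on_stroke_done(self.index)  # e.g., play audio cue, show next spheres

    @property
    def total_time(self) -> float:
        return sum(self.stroke_times)
```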

Task 1 We changed the reference object from a triangle panel to a cube and increased the number of small spheres to eight. The task includes preset routes that encompass variations in the values of individual axes, representing distinct straight lines situated within the same spatial plane.

Task 2 We kept the same number of small spheres as in Task 1 but changed the reference object from a cube to a sphere. The task incorporates preset routes that vary along two axes, resulting in distinct curves situated within the same spatial plane.

Task 3 We kept the same number of small spheres but changed the reference object from the sphere to a spatial vortex generated by Equation (1). The task includes preset routes that vary along all three axes, resulting in distinct curves situated on different spatial planes.
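The exact form of Equation (1) is not given in this text, so the sketch below substitutes a conical spiral, (x, y, z) = ((r0 + k·t) cos t, (r0 + k·t) sin t, c·t), purely as a hypothetical stand-in that, like the Task 3 vortex, varies along all three axes. It samples the path as a polyline and places eight spheres along it. The function names and parameter values (r0, k, c, number of turns) are illustrative assumptions, not the study's settings.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def conical_spiral(t: float, r0: float = 0.05, k: float = 0.02, c: float = 0.03) -> Vec3:
    """Point on a spiral whose radius grows with t while it rises along z."""
    r = r0 + k * t
    return (r * math.cos(t), r * math.sin(t), c * t)


def sample_path(turns: float = 2.0, n: int = 200) -> List[Vec3]:
    """Sample the spiral as an n-point polyline over the given number of turns."""
    t_max = 2.0 * math.pi * turns
    return [conical_spiral(t_max * i / (n - 1)) for i in range(n)]


def place_spheres(path: List[Vec3], count: int = 8) -> List[Vec3]:
    """Pick `count` points spread evenly by sample index (not by arc length)."""
    step = (len(path) - 1) / (count - 1)
    return [path[round(i * step)] for i in range(count)]
```

Consecutive spheres would then delimit the routes, with the polyline samples between them serving as each route's reference line. Spacing by sample index is even in the parameter t rather than in arc length, so routes near the wider end of the spiral are longer; resampling by arc length would equalize them.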

Regarding how each device was used, the controllers followed the default HTC VIVE controller scheme: users press the trigger on the right-hand controller to draw and the trigger on the left-hand controller to select and move objects. With the Logitech VR Ink, the pressure-sensitive button on the stylus lets users intuitively adjust the thickness of the generated strokes, and the stylus is held much like a real pen, which enhances the user experience. The StretchSense data glove provides gesture-based input, allowing users to select, move, and rotate objects with pinch gestures. A VIVE Tracker attached to the data glove provides its spatial position.
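The stylus pressure control and the glove's pinch gesture both turn a continuous signal into an interaction decision. A minimal sketch of one common way to do this follows, assuming normalized readings in [0, 1]: a dead zone plus a linear mapping from pressure to stroke width, and a pinch detector with hysteresis (separate engage and release thresholds) so that a noisy signal does not make grabs flicker. The thresholds, widths, and names are hypothetical; this section does not state how Open Brush, the VR Ink, or the StretchSense SDK implement these mappings.

```python
from dataclasses import dataclass


def stroke_width(pressure: float, min_w: float = 0.002, max_w: float = 0.012,
                 dead_zone: float = 0.05) -> float:
    """Map normalized pressure to a stroke width in metres (0.0 means no ink)."""
    p = min(max(pressure, 0.0), 1.0)
    if p < dead_zone:
        return 0.0
    u = (p - dead_zone) / (1.0 - dead_zone)  # rescale the live range to [0, 1]
    return min_w + (max_w - min_w) * u


@dataclass
class PinchDetector:
    """Pinch state with hysteresis: engage at `on`, release only at or below `off`."""
    on: float = 0.8
    off: float = 0.6
    pinched: bool = False

    def update(self, strength: float) -> bool:
        if not self.pinched and strength >= self.on:
            self.pinched = True
        elif self.pinched and strength <= self.off:
            self.pinched = False
        return self.pinched
```

With these assumed defaults, a pinch-strength sequence of 0.5, 0.85, 0.7, 0.65, 0.55, 0.7 yields not pinched, pinched, pinched, pinched, released, and still released; a single threshold at 0.7 would instead toggle on small fluctuations around that value.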


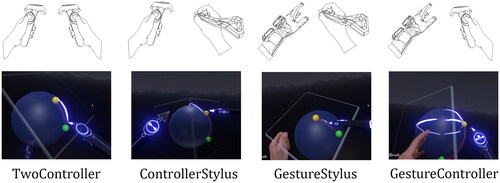

4.2. Experimental design

We conducted a formal study to evaluate the usability, performance, and user preference of four bimanual interfaces in which each hand holds or wears an input device: (1) the TwoController condition, (2) the ControllerStylus condition, (3) the GestureController condition, and (4) the GestureStylus condition. TwoController is the symmetric baseline, and the other three are asymmetric. In the TwoController condition, participants held an HTC Vive controller in each hand. In the ControllerStylus condition, participants held an HTC Vive controller in their left hand and a Logitech VR Ink stylus in their right hand. In the GestureController and GestureStylus conditions, participants held an HTC Vive controller or a Logitech VR Ink stylus, respectively, in their right hand, while using their left hand for gesture input.
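The four conditions can be summarized as a small configuration that pairs a left-hand (object manipulation) device with a right-hand (sketching) device. The sketch below simply encodes the pairings stated above; `Device`, `Condition`, and `CONDITIONS` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class Device(Enum):
    CONTROLLER = "HTC Vive controller"
    STYLUS = "Logitech VR Ink stylus"
    GLOVE = "StretchSense data glove + VIVE Tracker"


@dataclass(frozen=True)
class Condition:
    name: str
    left: Device   # non-dominant hand: select, move, and rotate the reference object
    right: Device  # dominant hand: draw strokes

    @property
    def symmetric(self) -> bool:
        return self.left == self.right


CONDITIONS = (
    Condition("TwoController", Device.CONTROLLER, Device.CONTROLLER),
    Condition("ControllerStylus", Device.CONTROLLER, Device.STYLUS),
    Condition("GestureController", Device.GLOVE, Device.CONTROLLER),
    Condition("GestureStylus", Device.GLOVE, Device.STYLUS),
)
```

Filtering `CONDITIONS` by `symmetric` yields only TwoController, and no condition places the glove in the right hand, matching the design rationale in this subsection.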

We selected these four conditions for the following reasons. First, the TwoController condition is the default input method for most VR headsets and serves as the control condition. The controller is a versatile tool suitable for various task scenarios, so we included it in three conditions, where it serves as the object-manipulation tool in the left hand (ControllerStylus), the sketching tool in the right hand (GestureController), or both (TwoController). Prior work has shown the stylus to be well suited to drawing tasks, so we included two conditions in which the stylus is the sketching tool in the right hand (ControllerStylus and GestureStylus).

We did not include a condition with hand gesture input in the right hand, primarily because previous research has predominantly applied gesture input to fast, wide-ranging operations, which does not align with our requirement for small-scale, rapid sketching. In addition, a pilot study conducted before the formal experiment showed that the input stability and precision of the data glove were not sufficient for accurate output in small-scale sketching tasks.

4.2.1. Participants

A total of 24 participants (10 female, 14 male; aged 20–35) were recruited from the university campus. Eleven participants reported less (n = 5) or much less (n = 6) VR experience, eight reported more or much more VR experience, and the remaining five reported a medium level of VR experience. Seventeen participants reported more design experience than typical users. All participants had normal or corrected-to-normal vision, and all were right-handed. The experiment used a within-subjects design in which every participant performed the same VR sketching tasks under all four conditions. The order of the conditions was counterbalanced to minimize learning effects.
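The text states only that the condition order was counterbalanced. One common way to do this with four conditions is a balanced Latin square (Williams design), in which each condition appears once in every position and immediately follows every other condition exactly once. The Python sketch below assumes that scheme; the function names are hypothetical and are not taken from the study's software.

```python
# Hypothetical counterbalancing helper; assumes a Williams (balanced Latin square) design.
CONDITIONS = ["TwoController", "ControllerStylus", "GestureController", "GestureStylus"]


def williams_first_row(n: int) -> list[int]:
    """First row of a Williams design: 0, 1, n-1, 2, n-2, ..."""
    row, low, high = [0], 1, n - 1
    while len(row) < n:
        row.append(low)
        low += 1
        if len(row) < n:
            row.append(high)
            high -= 1
    return row


def balanced_latin_square(n: int) -> list[list[int]]:
    """Each row is one condition order; every condition follows every other once."""
    if n % 2:
        raise ValueError("a single balanced square needs an even number of conditions")
    first = williams_first_row(n)
    # Shifting the first row by 0..n-1 (mod n) yields the remaining rows.
    return [[(c + shift) % n for c in first] for shift in range(n)]


def condition_order(participant: int) -> list[str]:
    """Assign participants to the rows of the square in rotation."""
    square = balanced_latin_square(len(CONDITIONS))
    return [CONDITIONS[i] for i in square[participant % len(square)]]


# Participant 0 -> ['TwoController', 'ControllerStylus', 'GestureStylus', 'GestureController']
print(condition_order(0))
```

With 24 participants, each of the four orders would be used six times.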

4.2.2. Procedure

The user study was approved by our university’s Human Participants Ethics Committee. All participants read and signed the participant information sheet and consent form in advance. Before the trial and formal tasks, each participant spent 5 minutes in the default OpenBrush environment to become familiar with operating the various input devices.

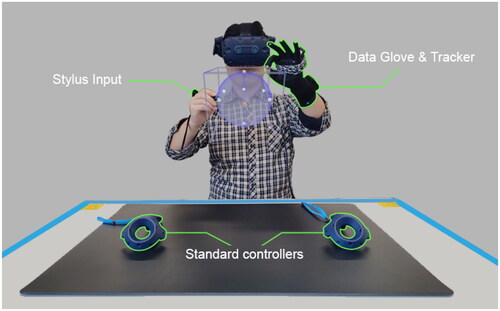

After the training session, participants completed a trial task before the formal experiment to become familiar with the task objectives. In the VR environment, users drew straight lines, curves, and complex spatial curves based on the references given in each task, using each of the four input methods (Figure 4). Participants sat on a chair in front of a desk during the experiment, as shown in the setup figure. When a task began, the reference object appeared to the right of the user’s head. After completing each stroke, the user heard an auditory prompt.

Figure 4. Schematics of the four conditions (top) and screenshots of our four interaction methods running in VR (bottom).

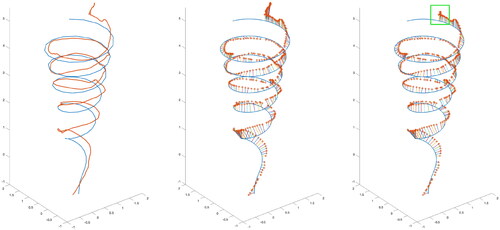

The VR application recorded each task’s completion time and the 3D drawing paths. The built-in logger recorded the spatial coordinates (x, y, z) of the user’s sketching output at 60 frames per second, matching the rendering rate, so each user-generated line was stored as a densely sampled 3D point sequence. To assess spatial drawing accuracy, we applied measurements similar to those used by Chen et al. (Citation2022). To quantify the error between the target and drawn curves, we first calculated the Euclidean distance from each sampling point to the target spatial curve and then computed the mean of these distances across all sampling points. Based on our observations during the study and the recorded data set, the majority of human errors were within a Euclidean distance of 5 cm. However, technical glitches occasionally produced errors exceeding 5 cm, which appeared as sharp protrusions instead of smooth curves in the final visualized path. We therefore removed these extreme outliers (Euclidean distance > 5 cm) to exclude errors caused by technical glitches, as shown in the highlighted region of interest (ROI) in Figure 6.

Figure 6. Visualization of the user’s raw data (left), the Euclidean distance between the user’s original data and the reference (middle), and the filtered data for Euclidean distances < 5 cm (right).
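The accuracy measure described above (mean point-to-curve Euclidean distance after dropping samples more than 5 cm away) can be sketched as follows. This is a minimal illustration under stated assumptions: the target curve is approximated as a densely sampled polyline, coordinates are in metres, and the helper names are hypothetical rather than taken from the authors' MATLAB analysis.

```python
import numpy as np

OUTLIER_THRESHOLD_M = 0.05  # 5 cm cut-off for glitch-induced samples


def point_to_polyline_distances(points: np.ndarray, polyline: np.ndarray) -> np.ndarray:
    """Shortest Euclidean distance from each sampled point (N x 3) to a target
    curve approximated as a polyline with M >= 2 vertices (M x 3)."""
    a, b = polyline[:-1], polyline[1:]               # segment endpoints, (M-1) x 3
    ab = b - a
    seg_len2 = np.einsum("ij,ij->i", ab, ab)          # squared segment lengths
    seg_len2 = np.where(seg_len2 == 0.0, 1.0, seg_len2)  # guard zero-length segments
    ap = points[:, None, :] - a[None, :, :]           # N x (M-1) x 3
    # Projection parameter of each point onto each segment, clamped to the segment.
    t = np.clip(np.einsum("nij,ij->ni", ap, ab) / seg_len2, 0.0, 1.0)
    closest = a[None, :, :] + t[..., None] * ab[None, :, :]
    dists = np.linalg.norm(points[:, None, :] - closest, axis=2)  # N x (M-1)
    return dists.min(axis=1)


def mean_deviation(points, polyline, threshold=OUTLIER_THRESHOLD_M):
    """Mean point-to-curve distance over samples closer than `threshold`.
    Returns (mean distance, number of samples removed as outliers)."""
    d = point_to_polyline_distances(np.asarray(points, float), np.asarray(polyline, float))
    kept = d[d < threshold]
    mean = float(kept.mean()) if kept.size else float("nan")
    return mean, int(d.size - kept.size)


# Hypothetical example: a straight target and four drawn samples, one a 20 cm glitch.
target = np.array([[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [1.0, 0.0, 0.0]])
drawn = np.array([[0.1, 0.01, 0.0], [0.5, 0.01, 0.0], [0.9, 0.01, 0.0], [0.5, 0.2, 0.0]])
print(mean_deviation(drawn, target))  # approximately (0.01, 1)
```

Multiplying the mean (in metres) by 10 converts it to the decimetre units reported in Section 5.2.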

After completing all of the sketching tasks for each condition, participants filled out the System Usability Scale (SUS) questionnaire (Bangor et al., Citation2008), the NASA Task Load Index (NASA-TLX) questionnaire (Hart & Staveland, Citation1988), and the Simulator Sickness Questionnaire (SSQ) (Bimberg et al., Citation2020). After all four conditions were completed, they also completed a post-study questionnaire about their overall preference and general feedback on each interface.

5. Results

Unless noted otherwise, mean differences were considered significant at the 5% level, and adjustments for multiple comparisons were made with the Bonferroni correction.
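With four conditions there are six pairwise comparisons. A minimal sketch of the Bonferroni adjustment, using the common convention of multiplying each raw p-value by the number of comparisons and capping the result at 1 (equivalent to testing raw p-values against 0.05 / 6); the raw p-values in the example are hypothetical.

```python
from itertools import combinations

CONDITIONS = ["TwoController", "ControllerStylus", "GestureController", "GestureStylus"]
ALPHA = 0.05

# Four conditions give C(4, 2) = 6 pairwise comparisons.
PAIRS = list(combinations(CONDITIONS, 2))


def bonferroni_adjust(p_values: list[float]) -> list[float]:
    """Multiply each raw p-value by the number of comparisons, capped at 1.
    Comparing adjusted p to ALPHA is equivalent to comparing raw p to ALPHA / m."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values, one per pair in PAIRS.
raw = [0.004, 0.02, 0.2, 0.01, 0.3, 0.5]
for (a, b), p_adj in zip(PAIRS, bonferroni_adjust(raw)):
    print(f"{a} vs {b}: adjusted p = {p_adj:.3f}, significant = {p_adj < ALPHA}")
```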

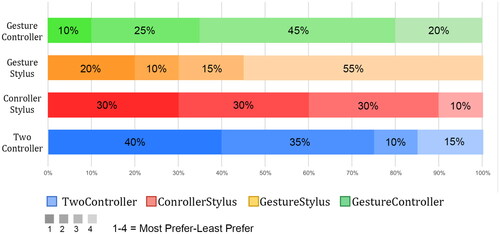

The results indicate that, for the relatively complex tasks (Tasks 2 and 3), the asymmetric GestureController condition took significantly less time than the stylus-based asymmetric conditions (ControllerStylus and GestureStylus), whereas for the relatively simple task (Task 1) there was no significant difference in task completion time. There were no significant differences among the four input methods in input accuracy. All four input methods achieved SUS scores above 68, indicating above-average usability; however, the TwoController condition scored significantly higher than GestureStylus and GestureController. The NASA-TLX results revealed significant differences between the TwoController condition and the other input methods in Mental Demand, Performance, Effort, and Frustration, as detailed in the following subsections. There were no significant differences in SSQ scores among the four input methods. Regarding user preference, TwoController was the most preferred, followed by ControllerStylus, GestureStylus, and lastly GestureController.

5.1. Task completion time

Task completion time for each interaction method offers useful insight into the effectiveness and user-friendliness of an interaction system. We conducted a one-way repeated measures ANOVA to determine whether task completion time differed significantly across the four interaction methods. The results are presented in Figure 7. There were no outliers, as assessed by boxplot, and the data were normally distributed, as assessed by the Shapiro-Wilk test (p > .05).
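For reference, a one-way repeated measures ANOVA partitions the total variance into condition, subject, and residual (condition × subject) components, and tests the condition effect against the residual. The numpy sketch below shows this textbook computation for an n_subjects × k_conditions table; it is an illustration, not the authors' analysis script, and it omits the sphericity check and corrections discussed in the following paragraphs.

```python
import numpy as np


def rm_anova_oneway(data):
    """One-way repeated measures ANOVA for an n_subjects x k_conditions table.

    Returns (F, df_effect, df_error, partial_eta_squared). No sphericity
    correction is applied; a p-value can be obtained with
    scipy.stats.f.sf(F, df_effect, df_error).
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand_mean = x.mean()
    ss_conditions = n * np.sum((x.mean(axis=0) - grand_mean) ** 2)
    ss_subjects = k * np.sum((x.mean(axis=1) - grand_mean) ** 2)
    ss_total = np.sum((x - grand_mean) ** 2)
    # Residual = condition x subject interaction, the error term for the within-subject effect.
    ss_error = ss_total - ss_conditions - ss_subjects
    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_conditions / df_effect) / (ss_error / df_error)
    eta_p2 = ss_conditions / (ss_conditions + ss_error)
    return float(f_stat), df_effect, df_error, float(eta_p2)


# Toy example: three participants x three conditions (completion times in seconds).
print(rm_anova_oneway([[1, 2, 4], [2, 3, 4], [3, 5, 6]]))
```

For this toy table the sketch gives F(2, 4) ≈ 32 and partial η² ≈ 0.94.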

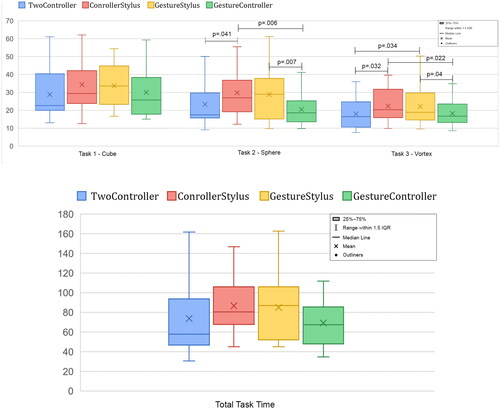

Figure 7. Task completion time of each task (top) and overall task time (bottom) (×: mean; connecting lines: significant pairwise differences; outlier).

In terms of Task 1, Mauchly’s test of sphericity indicated that the assumption of sphericity had not been violated (χ²(5) = 1.46, p = .918). The interaction method did not have a statistically significant effect on Task 1 completion time (F(3, 57) = 1.148, p = .338, partial η² = 0.057).

In terms of Task 2, Mauchly’s test of sphericity indicated that the assumption of sphericity was violated (χ²(5) = 12.346, p = .031), so a Greenhouse-Geisser correction was applied (ε = 0.681). The interaction method had a statistically significant effect on Task 2 completion time (F(2.043, 38.822) = 3.954, p = .027, partial η² = 0.172). Post-hoc analysis with a Bonferroni adjustment revealed statistically significant differences in Task 2 completion time between the TwoController and ControllerStylus conditions (−5.531 (95% CI, −10.826, −0.237) sec, p = .041), the GestureStylus and GestureController conditions (9.311 (95% CI, 2.93, 15.693) sec, p = .007), and the GestureController and ControllerStylus conditions (−8.37 (95% CI, −14.033, −2.706) sec, p = .006). No significant differences were found for the remaining pairs.

In terms of Task 3, Mauchly’s test of sphericity indicated that the assumption of sphericity had not been violated (χ²(5) = 2.492, p = .778). The interaction method had a statistically significant effect on Task 3 completion time (F(3, 57) = 3.824, p = .014, partial η² = 0.168). Post-hoc analysis with a Bonferroni adjustment revealed statistically significant differences in Task 3 completion time between the TwoController and GestureStylus conditions (−4.332 (95% CI, −8.307, −0.358) sec, p = .034), the TwoController and ControllerStylus conditions (−4.159 (95% CI, −7.925, −0.394) sec, p = .032), the GestureStylus and GestureController conditions (4.065 (95% CI, 0.208, 7.924) sec, p = .04), and the GestureController and ControllerStylus conditions (−3.892 (95% CI, −7.155, −0.63) sec, p = .022). No significant differences were found for the remaining pairs.

For overall task completion time, the interaction method did not have a statistically significant effect (F(3, 57) = 2.62, p = .059, partial η² = 0.121).

In summary, there was no significant difference in task completion time among the interaction methods for the simple VR sketching task (Task 1). For the relatively complex tasks (Tasks 2 and 3), the GestureController condition significantly reduced task completion time compared with the stylus-based conditions (ControllerStylus and GestureStylus).
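Partial η² can be recovered from a reported F statistic and its degrees of freedom, since η²p = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The sketch below uses this identity to reproduce the effect sizes reported in this section; because the Greenhouse-Geisser correction scales both degrees of freedom by the same ε, it does not change η²p. The function and dictionary names are illustrative.

```python
def partial_eta_squared(F: float, df_effect: float, df_error: float) -> float:
    """eta_p^2 = SS_effect / (SS_effect + SS_error) = F*df_effect / (F*df_effect + df_error)."""
    return F * df_effect / (F * df_effect + df_error)


# F statistics and (corrected, where applicable) degrees of freedom reported above.
REPORTED = {
    "Task 1": (1.148, 3, 57),
    "Task 2 (Greenhouse-Geisser)": (3.954, 2.043, 38.822),
    "Task 3": (3.824, 3, 57),
    "Overall": (2.62, 3, 57),
}

for label, (F, d1, d2) in REPORTED.items():
    print(f"{label}: partial eta^2 = {partial_eta_squared(F, d1, d2):.3f}")
```

Running it prints 0.057, 0.172, 0.168, and 0.121, matching the values reported above.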

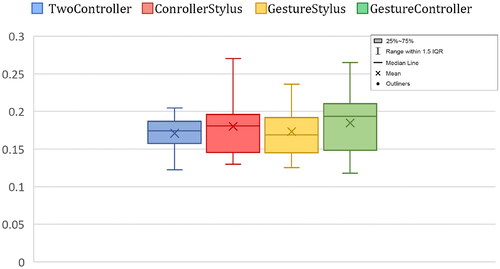

5.2. Accuracy

Output accuracy is an important criterion for assessing the effectiveness of each interaction system. Because we focus on evaluating the efficiency and usability of the sketching input system, it is essential to measure the quality of the output it generates. We recorded the 3D paths generated by all participants under the four interaction conditions and calculated their accuracy relative to the target curve of Task 3. When analyzing the data in MATLAB, we first identified potential outliers arising from technical glitches: using the Euclidean distance criterion described above, we removed all sampling points located more than 5 cm from the target curve. This step helped ensure the reliability and accuracy of our analysis, as depicted in the corresponding figure.

We conducted a one-way repeated measures ANOVA to explore whether input accuracy differed significantly across the four interaction methods (Figure 8). Mauchly’s test of sphericity indicated that the assumption of sphericity had not been violated (W = 0.794, p = .537). The interaction method did not have a statistically significant effect on the mean deviation of the generated curves in Task 3 (F(3, 57) = 1.938, p = .134, partial η² = 0.093). The mean deviations of the generated curves were as follows: TwoController (M = 0.171 dm, SE = 0.005), ControllerStylus (M = 0.18 dm, SE = 0.009), GestureStylus (M = 0.173 dm, SE = 0.007), and GestureController (M = 0.185 dm, SE = 0.008). Thus, input accuracy did not differ significantly among the interaction methods.

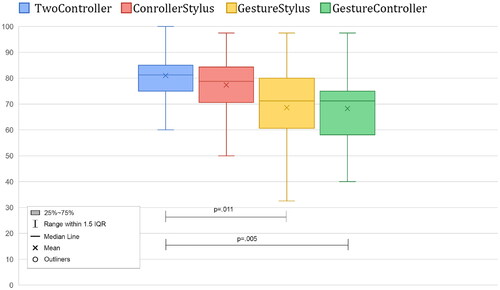

5.3. The System Usability Scale

The SUS is a valuable tool for assessing and comparing the usability of interaction systems, providing insights that can inform design decisions and improvements. Figure 9 shows the SUS scores for each condition. We used the Friedman test to determine whether usability differed across the interaction methods. SUS scores differed significantly among the four conditions (χ²(3) = 18.414, p < .0005). Pairwise comparisons were performed with a Bonferroni correction for multiple comparisons; the detailed significance values are presented in Table 2. Post-hoc analysis revealed statistically significant differences in SUS score between TwoController (Mdn = 81.25) and GestureController (Mdn = 71.25) (p = .005), and between TwoController (Mdn = 81.25) and GestureStylus (Mdn = 71.25) (p = .011). No significant differences were found for the other pairs. A SUS score above 68 is generally considered above-average usability, and all four interfaces exceeded this threshold.

Figure 9. Results of SUS displayed in a box plot (×: mean; the higher, the better; connecting lines: significant pairwise differences; outlier).

Table 2. Pairwise comparisons of SUS in each condition.

All input methods exhibit a SUS score exceeding 68. Notably, the TwoController condition demonstrates a significantly higher SUS score compared to the GestureStylus and GestureController conditions.
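The SUS scores above follow from the standard scoring procedure for the ten-item, five-point questionnaire, which can be sketched as follows (the function name is illustrative).

```python
def sus_score(responses: list[int]) -> float:
    """Score one completed SUS questionnaire (ten items, each rated 1-5).

    Odd-numbered items are positively worded and contribute (rating - 1);
    even-numbered items are negatively worded and contribute (5 - rating).
    The 0-40 sum is scaled by 2.5 to give a 0-100 score.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected ten ratings between 1 and 5")
    total = sum(r - 1 if item % 2 == 1 else 5 - r
                for item, r in enumerate(responses, start=1))
    return total * 2.5


ABOVE_AVERAGE = 68  # benchmark used in the text

score = sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2])
print(score, score > ABOVE_AVERAGE)  # 75.0 True
```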

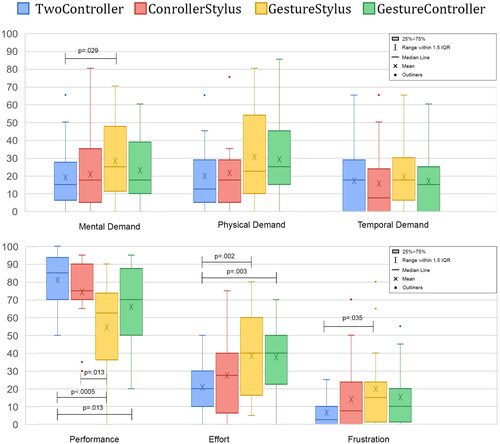

5.4. Workload

We conducted a Friedman test on the NASA-TLX ratings and found significant differences in task workload among the four conditions. Specifically, Mental Demand (χ²(3) = 10.807, p = .013), Physical Demand (χ²(3) = 11.487, p = .009), Performance (χ²(3) = 24.543, p < .0005), Effort (χ²(3) = 19.912, p < .0005), and Frustration (χ²(3) = 12.345, p = .006) differed significantly. In contrast, there was no significant difference in Temporal Demand (χ²(3) = 5.436, p = .143), as shown in Figure 10.

Figure 10. Six attributes of the NASA-TLX results displayed in a box plot (0: no task load ∼ 100: heavy task load; ×: mean; connecting lines: significant pairwise differences; outlier).

Pairwise comparisons were performed with a Bonferroni correction for multiple comparisons. For Mental Demand, post-hoc analysis indicated that only GestureStylus (Mdn = 25) elicited a statistically significant increase compared to the TwoController condition (Mdn = 15) (p = .029). For Physical Demand, post-hoc analysis showed no significant difference between any pair of conditions. In terms of Performance, post-hoc analysis revealed that TwoController (Mdn = 85) elicited a statistically significant increase compared to GestureStylus (Mdn = 62.5) (p < .0005) and GestureController (Mdn = 70) (p = .013), and ControllerStylus (Mdn = 75) had significantly better rated performance than GestureStylus (Mdn = 62.5) (p = .013). Regarding Effort, post-hoc analysis revealed that TwoController (Mdn = 20) elicited a statistically significant decrease compared to GestureStylus (Mdn = 40) (p = .002) and GestureController (Mdn = 40) (p = .003); there were no significant differences between the remaining conditions. In terms of Frustration, post-hoc analysis revealed that TwoController (Mdn = 2.5) elicited a statistically significant decrease compared to the GestureStylus condition (Mdn = 15) (p = .035); no significant differences were found between the remaining conditions.

The Friedman Test results indicated significant differences in task workload among the four conditions, specifically in terms of mental demand, physical demand, performance, effort, and frustration. Among the workload dimensions evaluated, only temporal demand did not exhibit statistically significant differences in the data. Pairwise comparisons with Bonferroni correction revealed specific condition comparisons that yielded statistically significant differences in certain workload dimensions.
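The Friedman statistic used for the SUS, NASA-TLX, and SSQ comparisons ranks each participant's scores across the conditions and compares the rank sums. The sketch below includes the usual correction for tied ranks, which matters for Likert-type ratings with many ties; `scipy.stats.friedmanchisquare` computes the same tie-corrected statistic, and the p-value follows from a χ² distribution with k − 1 degrees of freedom (3 for four conditions). The helper names are illustrative.

```python
from collections import Counter


def average_ranks(row):
    """Rank one participant's scores across conditions (1 = lowest), averaging ties."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1  # mean of 1-based positions
        start = end + 1
    return ranks


def friedman_chi2(data):
    """Tie-corrected Friedman chi-square for an n_participants x k_conditions table.
    Raises ZeroDivisionError if every row is entirely tied."""
    n, k = len(data), len(data[0])
    rank_sums = [0.0] * k
    tie_term = 0
    for row in data:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        tie_term += sum(t ** 3 - t for t in Counter(row).values())
    q = 12.0 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3 * n * (k + 1)
    return q / (1 - tie_term / (n * k * (k * k - 1)))


# Perfect agreement across four participants and three conditions gives n(k - 1) = 8.
print(friedman_chi2([[1, 2, 3]] * 4))
```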

5.5. Simulator sickness

We compared SSQ scores across all interaction methods with the Friedman test and found no significant differences in any subscale or in the total SSQ score. The nausea, oculomotor disturbance, disorientation, and total scores for each condition are listed in Table 3.

Table 3. Simulator Sickness Questionnaire results of each condition.

5.6. User preference

Users’ subjective attitudes play a crucial role in evaluating the usability of an interaction method, and their own perspectives must be considered when assessing its effectiveness and user-friendliness. Therefore, in the post-study questionnaire, we collected participants’ preferences among the four interfaces and their opinions on the advantages and disadvantages of each. Participants ranked the four conditions in order of preference on a scale from 1 (most preferred) to 4 (least preferred). General feedback was also collected through open-ended questions such as “What do you perceive as the advantages/disadvantages of the GestureController, GestureStylus, ControllerStylus, and TwoController input systems?”, which captured participants’ views on the strengths and weaknesses of each input system. The preference results are presented in the corresponding figure.

Many participants preferred the TwoController condition; in conjunction with our experimental results, we believe this reflects the low stability of the current input devices and the technical glitches encountered during the experiment. The ControllerStylus condition also received a notable level of preference, which can be attributed to its good usability and low task load. Most participants had a design background and were therefore likely more comfortable holding a stylus while sketching than a traditional VR controller. An additional potential factor contributing to this preference is the stability provided by the controller, which may enhance overall user experience and satisfaction with the interaction method.

6. Discussion

The study investigated various asymmetric interaction methods and their impact on VR sketching performance. The results demonstrate that the asymmetric interaction system incorporating stylus and gesture inputs exhibits favorable overall usability and facilitates fast and accurate VR sketching.

6.1. Key findings

Comparing the different interaction methods, the ControllerStylus approach yielded a high usability score, comparable to that of the TwoController method. The GestureController mode within the interface proved effective in reducing task completion times for complex curve tasks with multiple axial variations, while still maintaining above-average usability and low task load. Generally, the TwoController condition was the most preferred method, followed by the ControllerStylus condition. Participant feedback also indicated that hardware stability variations across the conditions influenced their preferences.

H1 was partially supported by our analysis of the advantages and performance characteristics of the different input techniques. Task completion time, usability scores, and accuracy were used to assess the effectiveness and user experience of these input methods. The user study results indicate that the GestureController technique offers distinct advantages over the other asymmetric conditions (GestureStylus and ControllerStylus): its task completion times were similar to those of the TwoController condition and significantly shorter than those of both the GestureStylus and ControllerStylus conditions. In addition, the GestureController technique achieved above-average usability scores and accuracy comparable to the other conditions, making it a promising configuration for tool-based asymmetric interaction. The study also highlights the immersive interaction experience that gesture input offers within the GestureController technique. However, the GestureStylus method did not demonstrate significant advantages in usability, task completion time, or task load.

These results indicate that when hand gesture input is combined with a controller as a tool-based asymmetric input, task completion time is significantly reduced relative to the stylus-based conditions, while usability remains above average and accuracy remains comparable to that of traditional dual-controller interaction. However, combining hand gestures with stylus input did not yield these advantages. Hypothesis H1 proposed that integrating hand gesture input and stylus input into asymmetric interaction would enhance VR sketching experiences. The experimental findings validate only the task completion time advantage of asymmetric interaction with hand gesture input; therefore, H1 is partially supported.

According to the study results, H2 was rejected. We compared the three selected tool-based asymmetric interaction approaches with each other and with the symmetric two-controller condition. The GestureController condition outperformed GestureStylus and ControllerStylus, with shorter task completion times for relatively complex VR sketching tasks. Notably, the subjective NASA-TLX responses indicated that ControllerStylus performed significantly better than GestureStylus, yet the objective data showed no significant differences in accuracy among the three input methods for complex VR sketching tasks. Considering the SUS, SSQ, and user preference results, which did not show significant differences among these three conditions, together with our observations during the experiment, we infer that the perceived subjective differences primarily stem from technical glitches in the hardware. None of the three selected forms of asymmetric interaction showed a clear advantage across all aspects over the conventional symmetric dual-controller method. Therefore, H2 was rejected.

To better understand drawing behavior with our interfaces, we recorded the spatial line data drawn by all users in the experiment and reproduced the visual outputs in MATLAB; the corresponding figure shows one of the most complicated drawing paths, from Task 3. We observed that the outputs of all four interactions matched the overall trend of the preset routes. The outputs of TwoController and ControllerStylus were relatively stable, whereas GestureController and GestureStylus, which involve gesture input, showed some drawing defects. We attribute the significant deviations of a few outputs from the predetermined route to technical glitches in the hardware.

Upon removing the aforementioned outlier data points (Euclidean distance > 5 cm), the overall accuracy achieved using tool-based asymmetric interaction involving gesture and stylus inputs remained comparable to the input accuracy obtained using traditional symmetric VR input employing two identical controllers.

It is worth noting that, although the GestureStylus and GestureController conditions received lower ratings for overall performance and usability in the SUS and NASA-TLX questionnaires, they showed some advantages on objective measures: both achieved accuracy comparable to the other conditions, and the GestureController condition in particular had significantly faster completion times on the complex sketching tasks, suggesting that tool-based asymmetric interaction with gesture input offers certain efficiency advantages. However, it is important to consider the frustration caused by technical glitches in the hardware itself, which may have prompted users to hurry to complete the tasks.

The identified instabilities encompassed several aspects that could potentially impact the user experience in VR sketching interactions. These included tracking inconsistencies, drifting, and calibration errors. Tracking inconsistencies arose from inaccuracies or inconsistencies in tracking users’ hand movements, leading to misalignments or jittery representations, ultimately detracting from the overall quality of the drawing experience. Drifting referred to the gradual shift or movement of virtual objects or the user’s viewpoint over time. This phenomenon resulted in misalignment between the intended strokes of the user and the actual output in the virtual environment, compromising the accuracy and precision of the drawings. Calibration errors manifested as discrepancies between the user’s physical movements and their virtual representation, resulting in inaccurate mapping. This discrepancy led to distorted or imprecise drawings, undermining the fidelity and realism of the virtual sketching experience. Addressing these instabilities is crucial in order to enhance the user experience in VR sketching interactions, ensuring smooth and accurate rendering, real-time feedback, and a seamless mapping between physical movements and virtual representations.

In our case, glitches occurred mainly when large hand movements extended beyond the detection volume, which was relatively infrequent, and they ceased as soon as the hand re-entered the detection range. To investigate this issue further, future studies should collect and analyze additional objective data, such as electroencephalogram (EEG) and electromyography (EMG) signals, to examine the usability of each device and the associated cognitive load and muscle fatigue.

Our findings align with previous research, corroborating the value of asymmetric bimanual input as an interaction modality for enhancing the user interaction experience (Hinckley et al., Citation1994; Huidong et al., Citation2017; Jerald, Citation2015; Poupyrev et al., Citation1998). Unlike prior research, we integrate previously explored input modalities, such as gesture input (LaViola, Citation2013; LaViola et al., Citation2017) and stylus input (Drey et al., Citation2020; Li et al., Citation2020), into a cohesive framework to investigate their usability for VR sketching, rather than treating each device in isolation. Our findings suggest that an asymmetric interaction system combining hand gesture input, stylus input, and standard controllers is a promising approach for improving users’ efficiency during complex VR sketching tasks while maintaining accuracy. However, these conclusions are based on the specific tool-based asymmetric interactions evaluated in our study, and further research is needed to validate their generalizability to other interaction scenarios.

6.2. Design implications

From this study, we can summarize the following design guidelines:

Symmetric dual-controller interaction can be a safe and versatile design choice for VR sketching tasks that span many complexity levels and task types. It supports task performance and user experience by letting users grip the controllers firmly while accessing the relevant input controls.

Using tool-based asymmetric interaction techniques involving a stylus and controller can provide a viable solution for situations where dependable interactions are crucial, and a VR drawing process needs to match the user’s natural habits as faithfully as feasible. This strategy can improve task performance and reduce cognitive strain while giving users a more intuitive and comfortable experience.

Asymmetric interaction combining gesture and controller input can benefit VR sketching tasks involving complex curved drawings. This approach lets users leverage the advantages of both input modalities to draw faster while maintaining accuracy comparable to dual-controller input.

Integrating context-aware interaction adaptation in VR sketching applications enhances the user experience by customizing interaction techniques to suit individual preferences, skill levels, and task requirements. This adaptive approach fosters satisfaction, engagement, and efficiency in accomplishing sketching tasks.

6.3. Limitations

The results of the user study are promising, but several limitations should be addressed in future work. First, 11 participants mentioned that the tracking of the current data glove and stylus was not very stable and was sometimes affected by bugs that prevented them from entering input effectively. We plan to improve the glove and stylus hardware configuration to provide more reliable tracking.

Similarly, there were software-side issues with hand-tracking performance. Participants made comments such as “errors and difficulties (bugs and inaccuracies) when using the hand to grab and make gestures” and “the sensor of the hand is sometimes not accurate so sometimes frustrating when grabbing objects,” and the visualization of user output data also revealed hand-tracking problems. Improving hand-tracking techniques could therefore substantially improve performance and user experience in VR sketching. Some participants expressed interest in using gesture input if the data glove were more comfortable and the tracking more stable, appreciating the method’s innovation and intuitiveness. For instance: “The hand is more vivid and intuitive to use, but it’s quite glitchy when I use it.” “It’s more realistic as you get to use your body part to control… but I need to be careful when grabbing the objects.”

Another limitation is that, although our hybrid interface provides four interaction methods, the system does not currently offer a direct way for users to switch between them. For example, to switch to or from hand gesture input, the user has to put on or take off the data glove, which must be recalibrated every time, disrupting the fluent use of the hybrid interface. In the future, a vision-based hand-tracking algorithm could be used to enable seamless switching between interfaces.

7. Conclusion and future work

Symmetric two-controller interaction is widely used in current VR applications. However, this type of input system is insufficient for dedicated tasks such as VR sketching, which require users to hold several different tools. Our work explored the potential of tool-based interaction interfaces consisting of a standard VR controller, a stylus, and/or a data glove for intuitive spatial VR sketching. We developed a prototype system that supports asymmetric interfaces with three novel input configurations. Through a formal user study, we found that the developed asymmetric methods could handle different types of interaction in the VR sketching process.

When dealing with complex spatial sketching, the GestureController mode significantly reduced task completion time compared with the stylus-based conditions while maintaining good usability, low task load, and input accuracy. In more varied situations, the stability of the ControllerStylus condition allowed it to provide a more natural user experience across different sketching tasks. Participants ranked their preferences for each interaction method. Many participants preferred the TwoController condition, possibly because of its reliability. The ControllerStylus condition was the second most preferred, likely due to its good usability and low task load. Additionally, most participants had a design background, and their work habits may have made them more comfortable using a stylus.

In our current tool-based approach, which combines stylus and gesture input, we acknowledge certain limitations that warrant further investigation. To optimize the stability and usability of the hybrid interface, future research should focus on augmenting the system with additional devices and refining software algorithms. Specifically, we plan to introduce a vision-based solution to replace the sensor-based data glove, leveraging infrared cameras to facilitate real-time gesture input. This integration is expected to eliminate the need for extra trackers or devices attached to the user’s hand, potentially enhancing the overall usability of the system. Addressing the hardware stability concerns will open up new possibilities for research. One promising avenue is exploring the utilization of dominant hand gestures to achieve varying levels of input fidelity within the tool-based asymmetric VR sketching input system. By leveraging the capabilities of the dominant hand, users may achieve more intricate and precise interactions, further enhancing the user experience and enabling advanced sketching capabilities.

Incorporating objective measures, such as physiological data, into the evaluation process is also essential, because users’ cognitive load and muscle fatigue may affect the sketching experience. Electromyography (EMG) and electroencephalography (EEG) signals could provide valuable insights into users’ cognitive and physiological responses during interaction, thus revealing potential areas for improvement. Additionally, comprehensive usability assessments should extend beyond task completion time and accuracy to cover other factors, enabling a more thorough evaluation of the system’s performance.

In summary, future research should focus on enhancing the tool-based asymmetric VR sketching input system by incorporating vision-based solutions and exploring dominant-hand gestures. Integrating physiological data into the evaluation process will provide further insight into the system’s usability. Ultimately, these advancements should contribute to a more refined and efficient VR sketching experience that meets the needs and preferences of users across diverse application scenarios.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Qianyuan Zou

Qianyuan Zou is a PhD candidate specializing in XR experiences for design. With a keen focus on VR and AR applications, he is currently researching asymmetric interfaces for immersive design at the University of Auckland’s Empathic Computing Lab under the guidance of Professor Mark Billinghurst and Dr. Allan Fowler.

Huidong Bai

Huidong Bai obtained his PhD from the HIT Lab NZ in 2016, under the supervision of Prof. Mark Billinghurst and Prof. Ramakrishnan Mukundan. He is presently a Research Fellow at the Empathic Computing Laboratory at The University of Auckland. His research focuses on remote collaborative Mixed Reality (MR) and empathic interfaces.

Lei Gao

Lei Gao, a PhD graduate from the HIT Lab NZ, University of Canterbury, is now a software engineer at Determ. His research centers on large-scale remote collaboration through AR interfaces with scene scanning and capture technologies. Gao brings extensive expertise in computer vision-based AR tracking, reconstruction, and rapid 3D data rendering.

Gun A. Lee

Gun A. Lee, a Senior Lecturer, specializes in interactive techniques for sharing virtual experiences in AR and immersive 3D environments. Currently focused on enhancing remote collaboration through AR and wearables, his research aims to enrich communication cues and broaden participation in larger groups.

Allan Fowler

Allan Fowler, a Senior Lecturer, has held tenured positions in Game Design and Software Engineering at renowned institutions, including Columbia University and UC Santa Cruz. His research areas include gamified learning, CS Education, Game Jams, and Human-Computer Interaction, with a global perspective influenced by New Zealand’s Manawatu-Whanganui region.

Mark Billinghurst

Mark Billinghurst holds a PhD in electrical engineering from the University of Washington, supervised by Prof. T. Furness III and Prof. L. Shapiro. He is presently a Professor heading the Empathic Computing Laboratory at the Auckland Bioengineering Institute, The University of Auckland.

Notes

References

- Annett, M., Anderson, F., Bischof, W., & Gupta, A. (2014). The pen is mightier: Understanding stylus behaviour while inking on tablets. In P. G. Kry & A. Bunt (Eds.), Graphics interface. AK Peters/CRC Press.

- Annett, M., Anderson, F., Bischof, W. F., & Gupta, A. (2020). The pen is mightier: Understanding stylus behaviour while inking on tablets. In P. G. Kry & A. Bunt (Eds.), Graphics interface 2014 (pp. 193–200). AK Peters/CRC Press.

- Bangor, A., Kortum, P. T., & Miller, J. T. (2008). An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction, 24(6), 574–594. https://doi.org/10.1080/10447310802205776

- Batmaz, A. U., Mutasim, A. K., & Stuerzlinger, W. (2020). Precision vs. power grip: A comparison of pen grip styles for selection in virtual reality [Paper presentation]. 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA (pp. 23–28).

- Bimberg, P., Weissker, T., & Kulik, A. (2020). On the usage of the simulator sickness questionnaire for virtual reality research [Paper presentation]. 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA (pp. 464–467).

- Boddien, C., Heitmann, J., Hermuth, F., Lokiec, D., Tan, C., Wölbeling, L., Jung, T., & Israel, J. H. (2017). SketchTab3d: A hybrid sketch library using tablets and immersive 3D environments [Paper presentation]. Proceedings of the 2017 ACM Symposium on Document Engineering (pp. 101–104). Association for Computing Machinery. https://doi.org/10.1145/3103010.3121029

- Bonnici, A., Akman, A., Calleja, G., Camilleri, K. P., Fehling, P., Ferreira, A., Hermuth, F., Israel, J. H., Landwehr, T., Liu, J., Padfield, N. M. J., Sezgin, T. M., & Rosin, P. L. (2019). Sketch-based interaction and modeling: Where do we stand? Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 33(4), 370–388. https://doi.org/10.1017/S0890060419000349

- Brahmbhatt, S., Tang, C., Twigg, C. D., Kemp, C. C., & Hays, J. (2020). ContactPose: A dataset of grasps with object contact and hand pose. In A. Vedaldi, H. Bischof, T. Brox, & J. M. Frahm (Eds.), Computer Vision – ECCV 2020 (Lecture Notes in Computer Science, Vol. 12358). Springer, Cham. https://doi.org/10.1007/978-3-030-58601-0_22

- Chen, C., Yarmand, M., Xu, Z., Singh, V., Zhang, Y., & Weibel, N. (2022). Investigating input modality and task geometry on precision-first 3D drawing in virtual reality [Paper presentation]. 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Singapore, Singapore (pp. 384–393).

- Chung, K-m., & Shin, D.-H. (2015). Effect of elastic touchscreen and input devices with different softness on user task performance and subjective satisfaction. International Journal of Human-Computer Studies, 83(C), 12–26. https://doi.org/10.1016/j.ijhcs.2015.06.003

- Cipresso, P., Giglioli, I. A. C., Raya, M. A., & Riva, G. (2018). The past, present, and future of virtual and augmented reality research: A network and cluster analysis of the literature. Frontiers in Psychology, 9, 2086. https://doi.org/10.3389/fpsyg.2018.02086

- Cockburn, A., Ahlström, D., & Gutwin, C. (2012). Understanding performance in touch selections: Tap, drag and radial pointing drag with finger, stylus and mouse. International Journal of Human-Computer Studies, 70(3), 218–233. https://doi.org/10.1016/j.ijhcs.2011.11.002

- Connolly, J., Condell, J., O'Flynn, B., Sanchez, J. T., & Gardiner, P. (2017). IMU sensor-based electronic goniometric glove for clinical finger movement analysis. IEEE Sensors Journal, 18(3), 1–1. https://doi.org/10.1109/JSEN.2017.2776262

- Csapo, A., Israel, J. H., & Belaifa, O. (2013). Oversketching and associated audio-based feedback channels for a virtual sketching application [Paper presentation]. 2013 IEEE 4th International Conference on Cognitive Infocommunications (Coginfocom), Budapest, Hungary (pp. 509–514).

- De Araújo, B. R., Casiez, G., & Jorge, J. A. (2012). Mockup builder: Direct 3D modeling on and above the surface in a continuous interaction space. In Proceedings of graphics interface 2012 (pp. 173–180). Canadian Information Processing Society.

- Dorta, T. (2007). Augmented sketches and models: The hybrid ideation space as a cognitive artifact for conceptual design. Digital Thinking in Architecture, Civil Engineering, Archaeology, Urban Planning and Design: Finding the Ways, 11, 251–264.

- Drey, T., Gugenheimer, J., Karlbauer, J., Milo, M., & Rukzio, E. (2020). VRSketchIn: Exploring the design space of pen and tablet interaction for 3D sketching in virtual reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–14). Association for Computing Machinery.

- Google. (2021). Painting from a new perspective. https://www.tiltbrush.com/.

- GravitySketch. (2021). Think in 3D Create in 3D. https://www.gravitysketch.com/.

- Grossman, T., Balakrishnan, R., & Singh, K. (2003). An interface for creating and manipulating curves using a high degree-of-freedom curve input device [Paper presentation]. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Ft. Lauderdale, Florida, USA (pp. 185–192).

- Guiard, Y. (1987). Asymmetric division of labor in human skilled bimanual action: The kinematic chain as a model. Journal of Motor Behavior, 19(4), 486–517. https://doi.org/10.1080/00222895.1987.10735426

- Gunawardane, P., & Medagedara, N. T. (2017). Comparison of hand gesture inputs of leap motion controller & data glove in to a soft finger [Paper presentation]. 2017 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), Ottawa, ON, Canada (pp. 62–68).

- Hart, S. G., & Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Human mental workload. Advances in Psychology, 52, 139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

- Hinchet, R., Vechev, V., Shea, H., & Hilliges, O. (2018). DextrES: Wearable haptic feedback for grasping in VR via a thin form-factor electrostatic brake. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology (pp. 901–912). Association for Computing Machinery.

- Hinckley, K., Pausch, R., Goble, J. C., & Kassell, N. F. (1994). Passive real-world interface props for neurosurgical visualization [Paper presentation]. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 452–458). Association for Computing Machinery, New York, NY, USA.

- Huidong, B., Alaeddin, N., Barrett, E., & Mark, B. (2017). Asymmetric bimanual interaction for mobile virtual reality. In R. Lindeman, G. Bruder, & D. Iwai (Eds.), ICAT-EGVE (pp. 83–86).

- ISO. (2012). ISO/TS 9241-411:2012. Retrieved July 14, 2021, from https://www.iso.org/standard/54106.html.

- Israel, J. H., Mauderli, L., & Greslin, L. (2013). Mastering digital materiality in immersive modelling [Paper presentation]. Proceedings of The International Symposium on Sketch-Based Interfaces and Modeling, New York, NY (pp. 15–22).

- Israel, J. H., Wiese, E., Mateescu, M., Zöllner, C., & Stark, R. (2009). Investigating three-dimensional sketching for early conceptual design—results from expert discussions and user studies. Computers & Graphics, 33(4), 462–473. https://doi.org/10.1016/j.cag.2009.05.005

- Jackson, B., & Keefe, D. F. (2016). Lift-off: Using reference imagery and freehand sketching to create 3D models in VR. IEEE Transactions on Visualization and Computer Graphics, 22(4), 1442–1451. https://doi.org/10.1109/TVCG.2016.2518099

- Jerald, J. (2015). The VR book: Human-centered design for virtual reality. Morgan & Claypool.

- Kim, J.-H., Thang, N. D., & Kim, T.-S. (2009). 3-D hand motion tracking and gesture recognition using a data glove. In 2009 IEEE International Symposium on Industrial Electronics (pp. 1013–1018). Institute of Electrical and Electronics Engineers.

- Lakatos, D., Blackshaw, M., Olwal, A., Barryte, Z., Perlin, K., & Ishii, H. (2014). T(ether): Spatially-aware handhelds, gestures and proprioception for multi-user 3D modeling and animation. In Proceedings of the 2nd ACM Symposium on Spatial User Interaction (pp. 90–93). Association for Computing Machinery.

- LaViola, J. J. (2013). 3D gestural interaction: The state of the field. ISRN Artificial Intelligence, 2013, 1–18. https://doi.org/10.1155/2013/514641

- LaViola, J. J., Jr., Kruijff, E., McMahan, R. P., Bowman, D. A., & Poupyrev, I. (2017). 3D user interfaces: Theory and practice. Addison-Wesley Professional.

- Lazar, J., Feng, J. H., & Hochheiser, H. (2017). Research methods in human-computer interaction. Morgan Kaufmann.

- Li, N., Han, T., Tian, F., Huang, J., Sun, M., Irani, P., & Alexander, J. (2020). Get a grip: Evaluating grip gestures for VR input using a lightweight pen [Paper presentation]. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY (pp. 1–13).

- Liu, Z., Zhang, F., & Cheng, Z. (2021). BuildingSketch: Freehand mid-air sketching for building modeling [Paper presentation]. 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Bari, Italy (pp. 329–338).

- Machuca, M. D. B., Asente, P., Stuerzlinger, W., Lu, J., & Kim, B. (2018). Multiplanes: Assisted freehand VR sketching. In Proceedings of the Symposium on Spatial User Interaction (pp. 36–47). Association for Computing Machinery.

- Maitlo, N., Wang, Y., Chen, C. P., Mi, L., & Zhang, W. (2019). Hand-gesture-recognition based text input method for AR/VR wearable devices. arXiv. https://arxiv.org/abs/1907.12188

- Markvicka, E., Wang, G., Lee, Y.-C., Laput, G., Majidi, C., & Yao, L. (2019). ElectroDermis: Fully untethered, stretchable, and highly-customizable electronic bandages. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–10). Association for Computing Machinery.

- Oculus. (2020). Hand tracking. https://support.oculus.com/2720524538265875/.

- Perkunder, H., Israel, J. H., & Alexa, M. (2010). Shape modeling with sketched feature lines in immersive 3D environments. In SBIM (Vol. 10, pp. 127–134). The Eurographics Association. https://doi.org/10.2312/SBM/SBM10/127-134

- Poupyrev, I., Tomokazu, N., & Weghorst, S. (1998). Virtual notepad: Handwriting in immersive VR [Paper presentation]. Proceedings. IEEE 1998 Virtual Reality Annual International Symposium (Cat. No. 98CB36180), Atlanta, GA (pp. 126–132).

- Rahul, A., Habib, K. R., Fraser, A., Tovi, G., Karan, S., & George, F. (2017). Experimental evaluation of sketching on surfaces in VR. In Proceedings of the 2017 ACM CHI Conference on Human Factors in Computing Systems, Denver, CO, USA (pp. 5643–5654). Association for Computing Machinery.

- Rogers, S. L., Broadbent, R., Brown, J., Fraser, A., & Speelman, C. P. (2022). Realistic motion avatars are the future for social interaction in virtual reality. Frontiers in Virtual Reality, 2, 750729. https://doi.org/10.3389/frvir.2021.750729

- Slater, M., Gonzalez-Liencres, C., Haggard, P., Vinkers, C., Gregory-Clarke, R., Jelley, S., Watson, Z., Breen, G., Schwarz, R., Steptoe, W., Szostak, D., Halan, S., Fox, D., & Silver, J. (2020). The ethics of realism in virtual and augmented reality. Frontiers in Virtual Reality, 1, 1. https://doi.org/10.3389/frvir.2020.00001