Abstract

The increase of digital health solutions to mitigate work-related mental health issues has been marked, and the COVID-19 pandemic has further reinforced this trend. This paper evaluates the usability and acceptability of a digital platform developed to provide tools and resources to support key organizational stakeholders. Through semi-structured interviews with think-aloud and cognitive walkthrough techniques, 31 potential end-users identified critical factors influencing the usability and acceptability of the platform. Key usability themes included the perceived intuitiveness of the system, clarity of information, and users’ emotional response to the platform tools, namely perceived enjoyment. Acceptability themes included performance expectancy, trust, and facilitating conditions. The findings provide insights for both research and practice, enriching the understanding of the usability and acceptability of digital health platforms from the perspectives of different organizational stakeholders. This knowledge can also guide designers and developers of eHealth applications in the workplace.

1. Introduction

According to recent surveys (EU-OSHA, Citation2019), many European managers express concern about workplace stress and mental disorders. However, less than 30% of European workplaces have adequate procedures to address these issues (ibid). Despite efforts of international bodies to provide guidelines to organizations on how to promote mental health in the workplace (Walsh, Citation2022; World Health Organization, Citation2022), employers and managers often struggle to select, implement, and evaluate appropriate mental health interventions. At the same time, managers may represent one of the groups most at risk of developing mental health problems (St-Hilaire & Gilbert, Citation2019; Skakon et al., Citation2011).

In response to these challenges, digital platforms addressing mental health may offer an accessible and informative solution for employers. In recent years, organizations have increasingly leveraged digital health solutions to help their managers and employees prevent work-related mental health disorders (Stratton et al., Citation2022). These digital interventions have permeated the workplace, manifesting as platforms explicitly designed to provide practical tools, guide individuals in effectively managing workplace stress, and promote healthier behaviors, thereby fostering well-being at work. Gayed et al. (Citation2018), for instance, provide a notable example of evidence regarding the feasibility, usability, and effectiveness of an online training program aimed at building managers’ confidence in supporting the mental health needs of their staff and fostering a mentally healthy work environment through their behavior.

Therefore, the pervasiveness of eHealth applications in society has significantly increased, especially during the COVID-19 pandemic (e.g., Keuper et al., Citation2021; Tomaino et al., Citation2022). These interventions, particularly those targeting mental health and well-being at work, are gaining popularity due to their potential to involve many individuals simultaneously while preserving anonymity (Armaou et al., Citation2019; Carolan & De Visser, Citation2018; Griffiths et al., Citation2006; Stratton et al., Citation2017) and increasing accessibility (Bunyi et al., Citation2021). The inherent reachability of these applications and their sizable and sustained effects are key factors driving users toward them (Howarth et al., Citation2018, Citation2019; Lecomte et al., Citation2020). However, while numerous mental health platforms are available for the public (e.g., Headspace, Calm) or are specifically designed to provide self-help tools (World Health Organization & International Labor Organization, Citation2020), fewer resources are tailored to address mental health at work in a more comprehensive way or support managers in their decision-making journey towards selecting, implementing, and evaluating comprehensive interventions. Furthermore, the evaluation of digital mental health interventions should extend beyond their effectiveness because although there is supporting data, there is still some concern about their actual use and engagement (Carolan & De Visser, Citation2018). Indeed, as even indicated in reviews (e.g., Chan & Honey, Citation2022; Chan et al., Citation2023), whereas mental health apps are generally viewed positively by consumers, factors such as ease of use, usefulness, and content and privacy need to be considered to maximize and sustain users’ engagement with the app.

Usability and acceptability are crucial aspects that must be assessed when evaluating digital platforms at work (Balcombe & De Leo, Citation2023). Usability evaluation measures the extent to which a digital solution is user-friendly, efficient, and satisfying for the intended users (Martins et al., Citation2023). Acceptance, on the other hand, refers to the degree to which a product, system, or service is deemed satisfactory or agreeable by its users. It is a critical factor in user experience and can significantly influence the adoption and continued use of a digital tool (Davis, Citation1989). In this regard, the most widely used acceptability framework, namely the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh et al., Citation2003), defines four key dimensions of acceptance that influence users’ behavioral intention and actual use. These are performance expectancy (i.e., the degree to which an individual believes that using the system will help them achieve improvements in job performance), effort expectancy (the degree of ease associated with using the system), social influence (the extent to which an individual perceives that important others believe they should use the new system), and facilitating conditions (the degree to which an individual believes that the necessary organizational and technical infrastructure exists to support the use of the system). The UTAUT is recognized as the most predictive technology acceptance model, explaining up to 70% of the variance in technology acceptance (Venkatesh et al., Citation2003). Moreover, beyond the UTAUT constructs, studies have emphasized the role of the trust-risk relationship (Arfi et al., Citation2021) and of perceived reliability, defined as the belief that an IT system will deliver a service safely and accurately (Alam et al., Citation2020), as pivotal factors influencing the adoption of eHealth technologies.

These aspects, usability and acceptability, are particularly critical in developing digital health solutions that aim to assist in health-related tasks. Poor usability and engagement can compromise the quality of these digital solutions and undermine their suitability for users (Couper et al., Citation2010; Martins et al., Citation2023). Diehl and colleagues’ recent synthesis and analysis of 179 existing user interface recommendations (2022) has shed light on crucial factors that inform digital technologies’ design and ensure usability. These principles include feedback, recognition, flexibility, customization, consistency, errors, help, accessibility, navigation, and privacy, and serve as general guidelines or design rules that outline characteristics of systems and inform the design process for digital solutions, ultimately enhancing user interaction.

On the one hand, the design of effective digital solutions should follow usability standardization principles (Diehl et al., Citation2022; Goundar et al., Citation2022) to be inclusive towards a broad and diverse audience. Nonetheless, the ever-increasing heterogeneous audience of digital solutions calls for a context-oriented approach in which careful user requirement analysis is needed. Notably, the complexity of usability evaluation is underlined by the need to assess the users and their relationship with the information technology and the environment, carefully incorporating contextual factors impacting the adoption in natural settings (Yen & Bakken, Citation2012). In this sense, experts have stressed the need for designing highly effective, easy-to-use, engaging, and trustworthy tools to fully benefit from the results of digital mental health solutions (Carolan et al., Citation2017; Roland et al., Citation2020; Schreiweis et al., Citation2019). This context-driven approach to usability and acceptability testing is even more relevant to the work context, primarily when the technology investigated covers potentially stigmatized topics such as mental health. As a result, the user-centered design of eHealth tools, which implies users’ involvement in the developmental process, has been increasingly deployed through various methods, including, among others, mining user reviews of mental health apps (Alqahtani & Orji, Citation2019).

1.1. The present study

This paper presents an evaluation of the usability and acceptability of the H-WORK Mental Health at Work Platform (https://www.mentalhealth-atwork.eu), designed to address the lack of resources and tools supporting managers, employers, and Human Resource (HR) directors and Occupational Health and Safety (OHS) professionals in addressing mental health at work. The tested platform is one of the results of the H-WORK project (De Angelis et al., Citation2020), funded under the European research and innovation program Horizon 2020, which aimed to implement and assess multilevel organizational interventions to improve mental health at work. Developed through a comprehensive evaluation of existing digital platforms and a thorough examination of scientific literature, the platform offers services and tools to address these challenges. The platform comprises three main sections: Interactive Tools, Roadmap, and Policy Briefs selection.

The Interactive Tools section includes the H-WORK Benchmarking Tool (H-BT), connected to the European Survey of Enterprises on New and Emerging Risks Databank (EU-OSHA, Citation2019), which allows organizations to compare their mental health and well-being performance indicators to those of similar organizations from the same country or from Europe as a whole. The Tools also comprise the H-WORK Decision Support System (H-DSS), which provides a tailored list of recommended interventions based on the organization’s mental health (“Mental Health at Work questionnaire”) and psychosocial well-being scores (“Psychosocial Wellbeing questionnaire”), assessed through responses to a set of items. Moreover, the Interactive Tools section offers the H-WORK Economic Calculator (H-EC), which calculates potential savings from implementing mental health actions.

The H-WORK Roadmap was designed as a step-by-step downloadable handbook that guides end-users through preparing, planning, implementing, and evaluating multilevel interventions to promote mental health and well-being in the workplace. It covers aspects such as adopting the H-WORK approach, preparing the ground by involving the relevant stakeholders, prioritizing the needs, planning the appropriate actions, implementing the interventions while monitoring and sustaining the ongoing progress, and measuring the impact and effectiveness of such strategies.

Lastly, the platform provides access to H-WORK Policy Briefs produced by the project, offering guidelines and recommendations to practitioners and policymakers on best practices in mental health management at national and European levels.

The present study aimed to test the usability and acceptability of the H-WORK Mental Health at Work Platform. Building upon the stratified view of health information technology (Yen & Bakken, Citation2012), interviews were run to identify the potential factors influencing usability and acceptability. The platform’s usability, intended as its ease of use and user-friendliness (Martins et al., Citation2023), and acceptability, the extent to which employees are willing to utilize it (Davis, Citation1989), were tested by potential end users. In so doing, this research aimed to contribute to theory and practice. On the one hand, the study aimed to contribute to theory by expanding the knowledge on acceptability and usability studies in digital organizational health and identifying essential factors impacting the usability and acceptability of eHealth platforms at work. Moreover, the study aimed to contribute to practice by providing valuable insights for occupational health platform developers regarding potential usability and acceptability barriers indicated by the target user group (e.g., managers, employers), which could prevent them from integrating eHealth solutions in their work and organizational processes.

2. Materials and methods

2.1. Participants

Thirty-eight people were initially contacted to participate in the present study. Of these, seven declined the invitation due to concurrent work commitments. As a result, thirty-one participants were included in the final sample, corresponding to a response rate of approximately 82%. The sample size rationale was informed by the latest systematic reviews, which pointed to 7 to 17 interviews to reach data saturation (Hennink & Kaiser, Citation2022). Participants were employers, managers, HR directors, and OHS professionals from organizations based in thirteen different countries, including Spain (n = 8; 27%), Italy (n = 6; 20%), the United States of America (n = 3; 11%), the Netherlands (n = 2; 6%), Germany (n = 2; 6%), Czech Republic (n = 2; 6%), Slovenia (n = 2; 6%), Austria (n = 1; 3%), Turkey (n = 1; 3%), France (n = 1; 3%), Norway (n = 1; 3%), Romania (n = 1; 3%), and Poland (n = 1; 3%). Table 1 shows basic sociodemographic data describing the respondents included in the final sample. To meet the guidelines outlined by Martins et al. (Citation2023) on usability evaluation reporting, which emphasize the need for heterogeneity in the evaluators’ domains, the recruitment process aimed at sampling participants from different sectors, such as manufacturing, academia, consulting, education, and communication. None of them had previous experience with usability evaluation, and all were external to the platform development team. Participants were recruited via convenience sampling, through posts on the H-WORK social media pages, direct contact (i.e., email), and the consortium partners’ contacts. Sixteen participants were male (51%), and fifteen were female (49%). The mean age was 44 (SD = 11). The mean tenure was ten years (SD = 10).

Table 1. Respondents’ sociodemographic data.

2.2. Procedure

Drawing from previous literature (Cai et al., Citation2017; Juristo, Citation2009; Newton et al., Citation2020; Yen & Bakken, Citation2012), a qualitative evaluation procedure was designed and deployed using the Think Aloud (TA) technique (Hartson & Pyla, Citation2012) and the Cognitive Walkthrough (CW) protocol (Mahatody et al., Citation2010), two approaches particularly used in early-stage evaluation of digital technology development (Hartson & Pyla, Citation2012). Formative evaluation, as the primary focus of the current study, aims at refining interaction design to identify user hesitations and barriers (ibid.). The application of TA and CW techniques has shown a significant impact on enhancing the technical aspects and usability of digital applications (Maramba et al., Citation2019).

The TA technique encourages users to verbalize their thoughts during their interaction with a system, thereby providing a deeper understanding of user behavior, motivations, intentions, and perceptions of the user experience (Hartson & Pyla, Citation2012; Nielsen, Citation1993). By eliciting real-time feedback, it facilitates the identification of potential usability issues and informs the iterative design process, ultimately enhancing the overall user experience. Recent reviews comparing usability measures used in eHealth applications supported TA protocols in addressing and improving usability over stand-alone quantitative metrics such as the System Usability Scale (SUS; Broekhuis et al., Citation2019). Similarly, the Cognitive Walkthrough (CW) is a distinct usability inspection method, primarily focusing on evaluating cognitive activities and the alignment of action execution with user interface design (Hartson & Pyla, Citation2012; Mahatody et al., Citation2010; Polson et al., Citation1992). A variant of this method is the Cognitive Walkthrough with Users (CWU; Mahatody et al., Citation2010), which involves users in performing tasks while verbalizing their thoughts, feelings, and opinions about any aspect of the system or prototype experience. This addresses the traditional CW's lack of user involvement (Granollers & Lorés, Citation2006) and is particularly effective in assessing ease of use and the simplicity of interface exploration or learning (Mahatody et al., Citation2010).

2.3. Data collection

Data collection took place in February and March 2023. After participants provided informed consent, individual semi-structured interviews lasting fifty minutes on average were run online on Microsoft Teams, which helped reach our digital platform’s intended European audience. Following previous CW protocol guidelines (Mahatody et al., Citation2010), the interviews were video recorded after interview approval, allowing for a more comprehensive understanding of user interactions and potential usability issues (e.g., mouse movement). This research, as part of the H-WORK project (De Angelis et al., Citation2020), adhered to ethical standards, received approval from the Bioethics Committee of the Alma Mater Studiorum University of Bologna (Prot. n. 0185076), and was conducted in compliance with the Declaration of Helsinki (World Medical Association, Citation2013).

The data collection methodology was developed based on relevant systematic and scoping reviews (Balcombe & De Leo, Citation2023; Maramba et al., Citation2019; Yen & Bakken, Citation2012) and was inspired by similar studies (Boucher et al., Citation2021; Bucci et al., Citation2018; Farzanfar & Finkelstein, Citation2012; Gray et al., Citation2020; Smit et al., Citation2021; Venning et al., Citation2021). The protocol questions focused on the following features of usability and acceptability testing (Hartson & Pyla, Citation2012): aesthetics (i.e., colors, layout, attractiveness); information clarity and thoroughness; design (i.e., tools environment and organization); relevance; language (i.e., user-centered terminology); usefulness (i.e., the impact of the platform on mental health); and intention to use.

The interview protocol was structured into five distinct sections, as follows:

Initiation: This phase involved introducing and presenting the landing page, during which participants were prompted to share their initial impressions.

Cognitive Walkthrough with Think-Aloud Technique: Participants were invited to experiment with the tools and to express their insights. To maintain participant engagement and prevent potential drop-out resulting from a lengthy interview process, participants selected which tool or section to test according to their interests. In total, the H-BT was tested by 10 participants, the H-DSS by 22, the H-EC by 9, the Policy Briefs by 6, and the Roadmap by 13. Also, to obtain synthesizable data and minimize potential differences arising from tools that differ in nature and purpose, we made sure that each participant tested at least one interactive tool (i.e., H-DSS, H-BT, H-EC) and one non-interactive tool (i.e., H-WORK Roadmap, H-WORK Policy Briefs). Guidance on task execution was provided solely at the interviewee’s request.

Relevance-Associated Queries: This section was designed to elicit feedback on the platform’s overarching rationale and primary objective.

Layout-Based Queries: This part explored the user’s opinions of the overall platform design.

Conclusion: The final phase investigated participants’ thoughts on the potential impact of such a platform on their workplace, specifically how it might serve as a valuable resource to address mental health at work.

In each segment of the protocol, participants were invited to share recommendations and suggestions for enhancement. The complete interview protocol can be found in the Appendix.

2.4. Data analysis

The recorded interviews were transcribed verbatim, and thematic content analysis (Braun & Clarke, Citation2006) was performed with the MAXQDA 2020 software. The analysis used a hybrid inductive/deductive approach (Armat et al., Citation2018; Fereday & Muir-Cochrane, Citation2006), thus balancing pre-existing categories of acceptability and usability (e.g., Hartson & Pyla, Citation2012) while identifying emerging sub-themes. Initially, we drew upon well-established criteria from the UTAUT acceptability framework (Venkatesh et al., Citation2003; Arfi et al., Citation2021), in which Trust and Perceived Risk were included, and upon the usability-related user interface recommendations that emerged from a comprehensive synthesis of previous studies (Diehl et al., Citation2022). Using this hybrid methodology ensured our analysis was both theoretically robust and aligned with our target audience’s perspectives, which is crucial for influencing key organizational decisions like policy adoption, investment returns, and strategic workplace responses.

The interview transcripts were analyzed by a panel of three researchers. We implemented a coding process to identify segments related to pre-established categories (Table 2), detect new emerging topics, define themes, and systematically identify data patterns. Initially, each researcher conducted independent coding, followed by a joint session to compare, harmonize, and reach a consensus on the coded labels. Table 2 illustrates this study’s theme definitions and the pre-existing framework categories for usability and acceptability labels that guided the analysis.

Table 2. Definitions of this study’s themes and related pre-existing categories.

3. Results

Overall, three sub-themes were identified in the usability category, namely, “system intuitiveness,” “information clarity,” and “perceived enjoyment.” Three sub-themes were also identified in the acceptability category, namely, “performance expectancy,” “trust,” and “facilitating conditions.”

3.1. Category “usability and related factors”

Regarding the usability category, the first sub-theme included instances, comments, and suggestions related to the intuitiveness of the design. Although some interviewees deemed the design straightforward, quick, intuitive, easy, and engaging, others outlined shortcomings that could prevent users from maximizing their platform experience. The most relevant design-related aspect was the soft log-in required to access the Interactive Tools, as one participant expressed:

I was interested if you had a database of interventions, and I started looking for that, and I could not find that immediately. But I saw here the interactive tools, and that triggered me. However, then I saw that you had to log in. So, I left it for a minute.

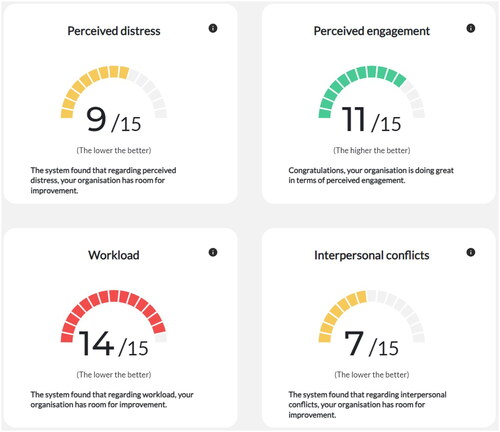

Similarly, some interviewees needed help with interpreting the results section of the Decision Support System (H-DSS). This part presents traffic light color-coded dashboards indicating how well their organization is doing on each dimension (e.g., stigma about mental health) covered in the mental health at work and psychosocial wellbeing questionnaires. Given that the feedback of the results is given on different subdimensions that change in direction (e.g., the higher, the better or, the lower, the better), some participants highlighted how this lack of consistency across scales’ direction could require additional mental effort from the users.
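The scale-direction issue raised by participants can be illustrated with a minimal sketch. It is not the H-DSS implementation: the 0–100 score range, the color thresholds, and the "supervisor support" subdimension are all hypothetical assumptions introduced only for illustration. The sketch shows one possible way to re-express every subdimension so that a higher value always means a better result before a traffic-light color is assigned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subdimension:
    name: str
    score: float            # raw score; a 0-100 range is assumed here
    higher_is_better: bool  # direction of the original scale


def aligned_score(item: Subdimension) -> float:
    """Re-express the score so that higher always means better."""
    return item.score if item.higher_is_better else 100.0 - item.score


def traffic_light(item: Subdimension,
                  amber_from: float = 40.0,
                  green_from: float = 70.0) -> str:
    """Map the aligned score to a color; thresholds are hypothetical."""
    value = aligned_score(item)
    if value >= green_from:
        return "green"
    if value >= amber_from:
        return "amber"
    return "red"


# Same raw score, opposite scale directions.
items = [
    Subdimension("stigma about mental health", 80.0, higher_is_better=False),
    Subdimension("supervisor support", 80.0, higher_is_better=True),  # hypothetical
]
for item in items:
    print(f"{item.name}: {traffic_light(item)}")
```

With a single aligned direction, the same raw value of 80 is shown as red for a "lower is better" subdimension and green for a "higher is better" one, so readers of the dashboard would not need to track the direction of each scale themselves.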

The relationship between intuitiveness in design and data visualization becomes evident in the results section of the H-DSS. Here, using traffic light color-coded dashboards was perceived as intuitive and visually engaging. On the other hand, the clarity of information is less directly connected to data visualization, as demonstrated by the need for further clarifications in the Economic Calculator section. In this section, users’ behaviour was more focused on retrieving actual information related to the contents and words used to describe the various tools, their purpose, and how to then translate and apply them in the real organizational context.

Indeed, as a general comment, interviewees also emphasized how they experienced difficulties understanding promptly the functionalities and aim of the platform due to a lack of information. Participants would have appreciated a more explicit brief elevator pitch on the landing page as a design feature to ensure a smoother and faster understanding of the overall aim of the platform and the differences in functionality of each tool.

Therefore, the theme of information clarity pertained to the degree of comprehensibility attributed to the tool’s instructions or the feedback received from the system, focusing on instances in which participants explicitly sought additional information and expressed uncertainty over which data to fill in. This theme mainly emerged among those who tried the Economic Calculator (H-EC). All interviewees who assessed the H-EC (N = 9) had to ask for clarifications about at least one of the indexes and figures required to process the calculations. Uncertainty over what data to fill in was reported, especially concerning the “productivity loss due to high-stress levels” percentage. Despite the tool providing a default percentage (i.e., 9%) set by the H-WORK experts, many participants shared their doubts over the definition of “high-stress levels”:

Now I take off my employer’s hat and put on my worker’s hat, and I am always stressed, always, because stress is anything: I missed the bus, I am already stressed, and then the concept of work-related stress linked to work issues is also trivialized, and things start to get mixed up, don’t they?

Additionally, two participants expressed concerns about the inability of this percentage to adequately represent the fluctuation in stress levels over time, as follows:

You are asking for a value for profit for a year, but stress, you know, could be, you know, a specific episode through a couple of months, could be a whole year, could be somebody who is on sick leave for extended periods. I think it is a lot more elaborate than just putting in a percentage.

The theme of perceived enjoyment delves into the positive emotional responses elicited from the users while interacting with the platform. It is not merely about the platform serving its functional purpose but also about the added layer of users feeling a sense of satisfaction, amusement, or even delight during its use. This enjoyment goes beyond the basic instrumental value, adding an experiential dimension to the interaction. A salient example emerged when participants were exposed to the results section of the H-DSS. Their reactions indicated not just appreciation for the information provided but a genuine pleasure derived from the manner of its presentation and the interactive experience.

The dashboard and the traffic light colors were deemed very eye-catching (Figure 2), as one stated:

“I feel like it is like it turns it into, like, a fun quiz people wanna feel like they got good results.”

Figure 2. The psychosocial well-being tool dashboard showing example results provided by the decision support system.

Moreover, the platform’s color palette, tools, and available material were considered by most participants as calming, pleasing, and welcoming, suggesting that users feel at ease when using it. Similarly, one stressed how the different logos of the consortium partners, which encompass universities, small- and medium-sized enterprises (SMEs), large public organizations, and European-level OHS networks, conveyed a feeling of safety:

“I feel safe when I see [names of academic partners and European level networks]. I think it is a safe environment.”

3.2. Category “acceptability and related factors”

Acceptability encompasses three sub-themes: performance expectancy, trust, and facilitating conditions.

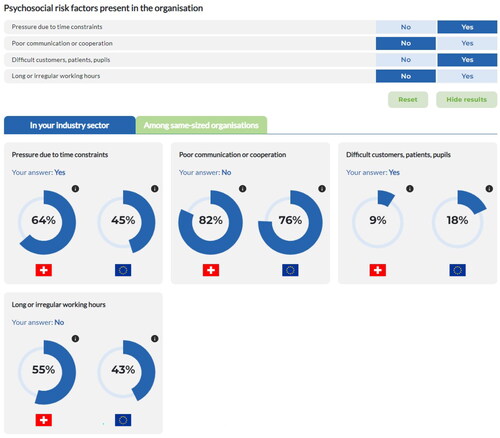

Performance expectancy grouped those instances where participants stated how the tools would effectively achieve the objectives of the platform (i.e., lead them to commit to mental health by increasing their awareness and motivation). For instance, a few participants stressed how the Benchmark calculator could be an eye-opener by making employers recognize how their company may trail behind comparable organizations, both domestically and at the European level. This may result in them feeling nudged to close this gap and promote mental health initiatives.

Similarly, the “Mental Health at Work” and the “Psychosocial Wellbeing” questionnaires were considered invaluable aids in stimulating employer self-reflection and enhancing awareness. In this context, the Interactive Tools were uniformly recognized as beneficial for managerial evaluations of the present working conditions in terms of practices, most notably through the Benchmarking Tool (H-BT) and the Decision Support System (H-DSS), and of costs, via the Economic Calculator (H-EC). These connections to the practical, day-to-day aspects of managing the organization link directly to performance expectancy, as they demonstrate how the tools’ functionality aligns with the platform’s goal of improving the organization’s and managers’ awareness of mental health issues, thereby incentivizing greater motivation for its promotion. One participant stated that these tools could be employed to transform an organization from a state of ‘unknown incompetence’ to one of ‘known incompetence’, a transition she considered crucial for shifting how organizational well-being is approached.

Trust emerged as a sub-theme representing participants’ perception of the platform’s trustworthiness in relation to privacy and the effectiveness of the tools. A few interviewees highlighted the platform’s significance as a tangible outcome of a substantial project financed by the European Union (EU). In this context, the EU brand served as a key factor in instilling trust among users, as it signified a commitment to adhering to data and privacy regulations and to the quality of the material presented. However, most participants who assessed the H-EC and H-DSS expressed the need for further information to enhance the platform’s explainability of results. Specifically, some pointed out that the lack of a more precise explanation of the link between the H-DSS outcomes and the recommended interventions, and of the computation backing the H-EC, might lead managers to doubt the overall results. Notably, a few of those who tried the H-EC reacted to the overall return on investment (ROI) with surprise and shock:

“Okay, super nice, but again, it looks like a bit wild.” Or: “My goodness. Thank goodness it is only five percent. Let’s see. [changes absence to 10%] Wow! […] I mean, I am a bit focused on mine, but it’s tremendous. It is a bit dizzying.”

“I was kind of like suspicious like, why should I, you know, tell someone how much money my company makes, […] I was, like, thinking about quitting because I wouldn’t be confident to provide such information.”

I’m the General Manager of a company. If someone asked me nine questions and told me, “You should do leadership training” after nine questions and to invest so much money in it, I would say, “Okay, no, sorry. How do you know that? There are so many other things which you do not know about the company?”

Let us say that it seems to me – so, briefly – that the number of data that are considered are relatively few to have an accurate figure, that is. And then I cannot understand, as I said before, what the calculations behind this are at the base.

In this sense, while the actual benefits in being able to take advantage of tools with these purposes are known (see performance expectancy), users raise doubts and share concerns about the reliability of the data or calculations, reflecting issues of trust not only in the currency and relevance of the data, but also in the platform’s ability to provide actionable and reliable insights. Therefore, it is not a question of meaning and purpose, but of internal mechanisms that are clear, retrievable and auditable.

The sub-theme of facilitating conditions covered many contextual and organizational factors that users described as affecting the extent to which they would engage with the platform and its tools. First, given the international background of the sample, most interviewees who were not native English speakers stressed that the opportunity to access the information in their own language would make it more easily understandable and usable in their own companies. Second, the mental-health-specific terminology was raised as an accessibility concern. Specifically, a few users felt that terms such as “vulnerable workers”, “mental health conditions”, and “good practices” might not be accessible to, or interpretable by, people who lack basic mental health knowledge. Relatedly, a few emphasized the gap between the organization’s health commitments and what is done in practice, stating that this responsibility is sometimes blurred across leadership levels. This results in difficulties in adequately answering the questions and ultimately accessing the results. As expressed by two interviewees:

“[…] Is this my immediate supervisor, the CEO? Who would this be? I wouldn’t know that, but yeah, some people do – I know some people do.”

Yeah, at least in consulting, we have different leaders every five months. So, your experience… changes completely from one project to another one… Then, we have other leaders that are just focused on numbers and deliverables, so you suffer a lot.

[…] There is, in my opinion, I do not know how to say, a gap to be considered, which is the prejudice, the difficulty, the impact that the term “mental health” has on the context we are referring to, which is not a clinical context, but a regular, working context. And the immediate reaction that I found, but I say it about myself, because we are all children of this culture, was “I do not need it, I am not crazy, I do not have problems” […] I think this is something to be reckoned with. It might make it more difficult for people to perceive the instrument’s usefulness.

The organization type could additionally influence the accessibility of tools: for instance, one participant underlined that the H-EC would not be suitable for organizations with different core values and performance criteria, such as non-governmental or not-for-profit organizations. A few emphasized the need for greater clarity about the pricing of each intervention, as that would improve users’ perceived financial accessibility of a given intervention and ultimately speed up decision-making.

4. Discussion

The digital revolution continues to shape our lives, presenting various platforms for a wide range of needs. Among these, platforms that address mental health in the work environment are becoming increasingly essential. The "Mental Health at Work Platform" has been created to serve this specific need (De Angelis et al., Citation2020), offering valuable guidance and practical tools. To ensure its effectiveness, a thorough assessment of the user-friendliness and accessibility of the digital platform is essential.

We aimed to identify the key strengths of the platform’s user interface and the areas where it could improve to ensure user engagement, focusing specifically on usability and acceptability factors as perceived by the platform’s target group (e.g., managers and employers).

4.1. System intuitiveness

Regarding usability, the first pre-determined category, three factors emerged as influential: system intuitiveness, information clarity, and positive emotional reactions. Managers and employers expressed the need for smoother design features and efficient navigation, especially in terms of information hierarchy, which concerns the level of detail and interdependency of the presented information (Diehl et al., Citation2022). The lack of clarity on the landing page, the cumbersome log-in procedures, and the time-consuming process of downloading material indicated a preference for a more direct and intuitive presentation of information. For example, based on user feedback, we optimized how the Roadmap is made available, allowing users to preview the document and flip through the pages online, reducing the time and clicks required for access. In digital system design, navigation is a paramount factor for specific target users (i.e., managers) who often seek streamlined access to vital information, guidelines, or instructions. Following this principle, to address the information gap on the landing page, we introduced pop-up video previews for each interactive tool, enhancing user understanding of tool functionalities and clarifying user expectations regarding the usability of the tools.

As the literature suggests, the core of user interaction relies heavily on effective navigation, which significantly influences the speed and ease of achieving user objectives. As Fang and Holsapple (Citation2007) indicate, an optimally designed navigation system reduces the steps needed for user-system interaction. From our results, the concept extends beyond the basic hierarchical layout of web pages, involving strategic categorization and material accessibility, balancing between the necessity of additional clicks for a quick preview (e.g., demo preview) and the evaluation of interactive online versus downloadable offline content. Essentially, this highlights a user’s tendency to efficiently process information facilitated by a well-structured and agile navigation approach in digital platforms.

User feedback also pointed to the need for consistent and congruent data presentation, noting that irregular variations could reduce comprehension and thus impede the rapid processing of information. In response to this feedback, subsequent iterations of the platform have prioritized ensuring that the scales convey information consistently, thus mitigating unnecessary cognitive strain on users. This aligns with the principle that users more easily understand applications that are consistent within the digital system, which reduces cognitive load and facilitates learning how an application functions, as emphasized in usability research (Lowdermilk, Citation2013).

A close examination of the user experience revealed that not only the workflow was paramount but also how users interacted with the platform. Diehl et al. (Citation2022) outline these considerations on two distinct levels, namely consistency and navigation. However, the insights gleaned from this study support integrating these levels into a broader category: ‘system intuitiveness’. Under this umbrella, principles such as consistency and navigation emerge as intertwined and mutually reinforcing contributors to an optimal user experience.

Another significant usability aspect is the clarity of the language used by the system, particularly in the mental health questionnaires and the economic calculator. More academic or generic terms may hamper the platform’s ability to communicate with users. Using familiar and easily recognizable language is crucial to enhancing user experience and increasing accessibility (Diehl et al., Citation2022), especially for mental health platforms, where users’ varied knowledge levels can influence intervention effectiveness (Kitchener & Jorm, Citation2002). The updated version of the platform now includes a glossary, which can be consulted online and offline via download, explaining in more detail some key terms related to the issues addressed by the platform. In this sense, it becomes relevant to consider the types of users who may interact with the system, since different cultural, professional, or organizational backgrounds may affect their understanding of the contents.

4.2. Emotional triggers, trust and explainability

Participants’ emotional reactions to certain features of the platform (e.g., the color palette, the traffic-light dashboard) highlight the affective dimension of interface design, stressing the role of visual elements and gamification in enhancing user engagement and overall user experience. An interesting observation from our research concerns the profound influence of institutional logos on users’ perceptions. Serving as signs of authoritative approval, these logos intensified positive emotions (i.e., a sense of safety), increasing the inclination to use the platform tools. The interaction between brand design and user experience is well established in the literature (Septianto & Paramita, Citation2021; Kato, Citation2021). However, in our study, aimed primarily at senior decision-makers, we discovered a two-dimensional impact of these logos.

From a usability perspective, the presence of valued institutional emblems gave the platform a further impression of effectiveness and validity. On the acceptability front, because trust resonates with people’s emotional and cognitive spheres and with a sense of vulnerability (Vianello et al., Citation2023), institutional logos (e.g., EU brand) fostered a sense of safety and ethical assurance. Participants inferred that those renowned entities, known for their rigorous research ethics and strict data management standards, endorsed the platform.

The usability and acceptability of interactive tools hinge on the clarity of system mechanics and logic. Participants emphasized the need for greater transparency and explainability, particularly in terms of platform outputs such as suggested interventions and costs. This underlines the importance of users’ perceived reliability of a given information technology system as an additional element to consider when evaluating acceptability (Alam et al., Citation2020). In today’s increasingly data-driven landscape, transparency and explainability are not merely beneficial features but non-negotiable prerequisites. This study outlines how a system that remains an enigma to its users can erode trust and hinder widespread adoption. Accordingly, while tooltips were already present, they have been enhanced in the updated version to place greater emphasis on transparency and explainability alongside each indicator.

Additionally, participants expressed a need for clear and available information on data management, aligning with studies that highlight privacy, data security, and anonymity as essential organizational factors promoting or hindering eHealth adoption (Alshahrani et al., Citation2022; Jimenez & Bregenzer, Citation2018; Schreiweis et al., Citation2019).

4.3. Digital nudging

Managers and key decision-makers regard information hierarchy, predictive consistency, language, emotional triggers, and transparency and explainability as relevant features of user interface design and interaction, driven above all by the need to find the information they are looking for as quickly as possible. This urgency underscores the relevance of efficient user experience design for mental health tools in the workplace, in particular for managers who are under increasing time pressure (St-Hilaire & Gilbert, Citation2019). Techniques like ‘digital nudging’, which guide user behavior in digital environments (Weinmann et al., Citation2016), are essential for streamlining interactions, enhancing intuitiveness, and reducing cognitive load. In mental health contexts, digital nudges can direct managers towards effective mental well-being strategies and data interpretation (Jesse & Jannach, Citation2021; Carolan & De Visser, Citation2018).

The benchmarking tool, a key aspect of acceptability, is a relevant example of digital nudging. Its strength lies in its ability to provide users with a comparative picture that is informative and, at the same time, triggers a social comparison mechanism, which amplifies the user’s recognition of the perceived relevance of the digital solution and drives adoption (Venkatesh et al., Citation2003). When users see their performance compared to that of peers, the comparison not only emphasizes the practicality of the solution but also induces introspection and awareness of issues related to promoting mental health in the workplace. However, it is crucial to delineate the boundary between ‘Performance Expectancy’ and ‘Social Norms’, as outlined by the UTAUT model. Benchmarking does not show the opinions of others on the usage of the system (i.e., Social Norms) but clarifies how an organization measures itself, potentially influencing users’ perceptions of the usefulness and performance of the system in showing possible ways to improve.

However, although benchmarking may resonate strongly with many, its effectiveness may vary depending on user profiles and situations. Future studies on these aspects are highly encouraged. From the qualitative results of our study, using social pressure-driven ‘nudging’ can enhance tool acceptability, prompting users to reflect on their practices. A well-implemented benchmarking feature in a digital tool not only facilitates acceptance but also encourages proactive engagement with mental health initiatives.

Similarly, other organizational-level factors, such as access to the company’s data (as required by some of the platform’s tools), might also represent facilitating conditions for users’ intended and actual take-up. In this sense, as stated by some interviewees, small and medium-sized enterprises may not gather stress-related data, and line managers might not have permission to access sensitive company information, thus limiting their chance to accept the platform and use its digital tools. The feedback gathered in this respect allowed us to further refine some of our tools, in particular with regard to economic estimates, which are now governed by more common standards that are accepted in small and medium-sized enterprises as well.

4.4. Limitations

This study has some limitations. We did not control for the participants’ digital literacy level, which may have influenced the data gathered regarding the usability and acceptability themes that emerged, as it directly influences the users’ capability to interact with the system (van Deursen & van Dijk, Citation2019; Martins et al., Citation2023). For example, the user’s understanding of how to navigate and manipulate the platform, along with their ability to understand and interpret the content it presents, might have been affected by their level of digital literacy. Moreover, at the time of testing the H-WORK Mental Health at Work Platform for the present study, it was only offered in English, so this was the language in which the platform’s tools and resources were administered to participants. Incorporating these considerations in future studies can enhance the overall acceptability and usability of the platform.

An additional limitation of our study is the participants’ lack of familiarity with usability evaluation, which might have influenced the comprehensiveness of their responses. Self-selection bias and recruitment bias might have also conditioned the data. As a proactive measure to mitigate the risk of participant drop-out from a lengthy interview procedure, not all participants tested all parts of the platform. This limited participants’ exposure to different tools or features of the platform, so essential insights or variations in usability and acceptability across different components may have been missed. However, the sample represented a heterogeneous population, including employers, managers, HR directors, and OHS professionals from organizations based in multiple countries. The study used a hybrid deductive/inductive methodology, which is uncommon.

4.5. Recommendations

The results of our research offer tangible guidelines for the development and refinement of digital health platforms, particularly those that aim to promote mental health in the workplace. First, user experience is greatly enhanced when platforms prioritize the intuitiveness of the system. This means that the design of a platform should not only be aesthetically pleasing but should also be consistent and facilitate easy navigation. Decision-makers, who often face time constraints, greatly value the ability to quickly access and interpret relevant information. This is particularly relevant considering that eHealth tools and apps are gradually entering the work context. In this context, offering features such as an online document preview, as opposed to time-consuming downloads, or providing video previews and demos of interactive tools can greatly increase user engagement.

Consistency in data representation emerged as another essential aspect. Users, especially in professional settings, where the amount of data to be interpreted could be cumbersome, expect a uniform presentation of data to avoid confusion and cognitive stress. A consistent platform increases trust, and gamified visual elements may reduce the time to understand the provided content. In addition, the language used plays a key role in the understandability of the platform. Since these platforms could target users with different professional, cultural, and organizational backgrounds, using clear, relatable, and easily understandable language is critical. The inclusion of glossaries or explanatory sections can help in this regard.

One interesting finding was the impact of institutional logos on users’ perceptions. From a practical standpoint, platforms seeking to convey positive emotions and build trust might consider partnering with reputable institutions or integrating recognized endorsements into their design. However, although visual validations can increase user trust, transparency and explainability in data management and system processes remain critical. Users are more likely to engage with a platform they perceive as open and secure. Accordingly, platforms should be forthcoming about their data management practices, providing clarity and accessibility to this information.

Finally, digital nudging, in which users are gently guided toward optimal choices, seems to have great potential to improve user engagement. By integrating features that tap into users’ inherent desire for comparison and validation, such as benchmarking tools, platforms can stimulate users’ self-awareness and proactive organizational actions when it comes to promoting mental health at work.

5. Conclusion

In conclusion, our study provides valuable insights into the usability and acceptability of a digital platform developed to promote mental well-being in the workplace. The research underscores the importance of several factors, including the intuitiveness of the system, the clarity of the platform’s language and structure, and the trustworthiness and explainability of the tools it offers.

Additionally, the research brings to light potential areas for improvement in platform design, such as simplifying the access procedure, enhancing the consistency and information hierarchy, and increasing transparency around data management. Implementing these changes could significantly enhance the platform’s usability and acceptability, thereby increasing its potential for widespread adoption in various organizational contexts. This research contributes to the broader discourse around digital well-being tools to be applied in the workplace, providing vital insights for developers, stakeholders, and end-users.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Marco De Angelis

Marco De Angelis, a graduate of the University of Bologna, specializes in human factors and technology adoption research. With a focus on gender and age dynamics from the University of Groningen, he teaches industry safety and human factors. He is keen on the impact of AI and Industry 5.0 studies.

Lucia Volpi

Lucia Volpi has recently concluded an international research master’s in Work and Organizational Psychology. She studied HR and Psychology in her bachelor’s, and now works as a research assistant at the University of Bologna. Her past research has focused on physiological changes at work related to gender.

Davide Giusino

Davide Giusino is a Ph.D. Candidate at the Department of Psychology of the University of Bologna. His research covers digital-based interventions for teams in the workplace and mental health and psychosocial well-being in working environments. He is involved in the EU-H2020 project H-WORK.

Luca Pietrantoni

Luca Pietrantoni, a Full Professor at the University of Bologna, specializes in Work and Organizational Psychology. He is an active contributor to Horizon Europe projects, focusing on integrating AI and robotics in organizations. His expertise lies in safety, risk, and human factors within industrial workplaces.

Federico Fraboni

Federico Fraboni, with a Ph.D. from the University of Bologna, specializes in Human Factors and technology interaction. A background in Work Psychology underpins his roles in European research and private projects. Now at Bologna’s Safety research unit, he focuses on human-robot collaboration, workload, and risky work behaviors.

References

- Alam, M. K., Hoque, M. R., Hu, W., & Barua, Z. (2020). Factors influencing the adoption of mHealth services in a developing country: A patient-centric study. International Journal of Information Management, 50, 128–143. https://doi.org/10.1016/j.ijinfomgt.2019.04.016

- Alqahtani, F., & Orji, R. (2019). Usability issues in mental health applications [Paper presentation]. Adjunct Publication of the 27th Conference on User Modeling, Adaptation and Personalization, June; pp. 343–348. https://doi.org/10.1145/3314183.3323676

- Alshahrani, A., Williams, H., & MacLure, K. (2022). Investigating health managers’ perspectives of factors influencing their acceptance of eHealth services in the Kingdom of Saudi Arabia: A quantitative study. Saudi Journal of Health Systems Research, 2(3), 114–127. https://doi.org/10.1159/000525423

- Arfi, W. B., Nasr, I. B., Kondrateva, G., & Hikkerova, L. (2021). The role of trust in intention to use the IoT in eHealth: Application of the modified UTAUT in a consumer context. Technological Forecasting and Social Change, 167, 120688. https://doi.org/10.1016/j.techfore.2021.120688

- Armaou, M., Konstantinidis, S., & Blake, H. (2019). The effectiveness of digital interventions for psychological well-being in the workplace: A systematic review protocol. International Journal of Environmental Research and Public Health, 17(1), 255. https://doi.org/10.3390/ijerph17010255

- Armat, M. R., Assarroudi, A., Rad, M., Sharifi, H., & Heydari, A. (2018). Inductive and deductive: Ambiguous labels in qualitative content analysis. The Qualitative Report, 23(1), 219–221. https://doi.org/10.46743/2160-3715/2018.2872

- Balcombe, L., & De Leo, D. (2023). Evaluation of the use of digital mental health platforms and interventions: Scoping review. International Journal of Environmental Research and Public Health, 20(1), 362. https://doi.org/10.3390/ijerph20010362

- Boucher, E. M., McNaughton, E. C., Harake, N., Stafford, J., & Parks, A. C. (2021). The impact of a digital intervention (Happify) on loneliness during COVID-19: Qualitative focus group. JMIR Mental Health, 8(2), e26617. https://doi.org/10.2196/26617

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Broekhuis, M., Van Velsen, L., & Hermens, H. J. (2019). Assessing usability of eHealth technology: A comparison of usability benchmarking instruments. International Journal of Medical Informatics, 128, 24–31. https://doi.org/10.1016/j.ijmedinf.2019.05.001

- Bucci, S., Morris, R., Berry, K., Berry, N., Haddock, G., Barrowclough, C., Lewis, S., & Edge, D. (2018). Early psychosis service user views on digital technology: Qualitative analysis. JMIR Mental Health, 5(4), e10091. https://doi.org/10.2196/10091

- Bunyi, J., Ringland, K. E., & Schueller, S. M. (2021). Accessibility and digital mental health: Considerations for more accessible and equitable mental health apps. Frontiers in Digital Health, 3, 742196. https://doi.org/10.3389/fdgth.2021.742196

- Cai, R., Beste, D., Chaplin, H., Varakliotis, S., Suffield, L., Josephs, F., Sen, D., Wedderburn, L. R., Ioannou, Y., Hailes, S., & Eleftheriou, D. (2017). Developing and evaluating JIApp: Acceptability and usability of a smartphone app system to improve self-management in young people with juvenile idiopathic arthritis. JMIR mHealth and uHealth, 5(8), e121. https://doi.org/10.2196/mhealth.7229

- Carolan, S., & De Visser, R. O. (2018). Employees’ perspectives on the facilitators and barriers to engaging with digital mental health interventions in the workplace: Qualitative study. JMIR Mental Health, 5(1), e8. https://doi.org/10.2196/mental.9146

- Carolan, S., Harris, P. C., & Cavanagh, K. (2017). Improving employee well-being and effectiveness: Systematic review and meta-analysis of web-based psychological interventions delivered in the workplace. Journal of Medical Internet Research, 19(7), e271. https://doi.org/10.2196/jmir.7583

- Chan, A. H. Y., & Honey, M. L. (2022). User perceptions of mobile digital apps for mental health: Acceptability and usability‐An integrative review. Journal of Psychiatric and Mental Health Nursing, 29(1), 147–168. https://doi.org/10.1111/jpm.12744

- Chan, G., Alslaity, A., Wilson, R., & Orji, R. (2023). Feeling moodie: Insights from a usability evaluation to improve the design of mHealth apps. International Journal of Human–Computer Interaction, 1–19. https://doi.org/10.1080/10447318.2023.2241613

- Couper, M. P., Alexander, G. L., Zhang, N., Little, R. J. A., Maddy, N., Nowak, M. A., McClure, J. B., Calvi, J., Rolnick, S. J., Stopponi, M. A., & Johnson, C. C. (2010). Engagement and retention: Measuring breadth and depth of participant use of an online intervention. Journal of Medical Internet Research, 12(4), e52. https://doi.org/10.2196/jmir.1430

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008

- De Angelis, M., Giusino, D., Nielsen, K., Aboagye, E., Christensen, M., Innstrand, S. T., Mazzetti, G., Van Den Heuvel, M., Sijbom, R. B. L., Pelzer, V., Chiesa, R., & Pietrantoni, L. (2020). H-WORK project: Multilevel interventions to promote mental health in SMEs and public workplaces. International Journal of Environmental Research and Public Health, 17(21), 8035. https://doi.org/10.3390/ijerph17218035

- Diehl, C., Martins, A., Almeida, A., Silva, T., Ribeiro, Ó., Santinha, G., Rocha, N., & Silva, A. G. (2022). Defining recommendations to guide user interface design: Multimethod approach. JMIR Human Factors, 9(3), e37894. https://doi.org/10.2196/37894

- EU-OSHA. (2019). European survey of enterprises on new and emerging risks (ESENER-3): Managing safety and health at work (pp. 1–160). Retrieved December 19, 2022, from https://visualisation.osha.europa.eu/esener/en/survey/overview/2019

- EU-OSHA. (2019). Psychosocial risks in Europe: Prevalence and strategies for prevention. Publications of the European Union. Retrieved June 15, 2019, from https://osha.europa.eu/en/tools-andpublications/publications/reports

- Fang, X., & Holsapple, C. W. (2007). An empirical study of web site navigation structures’ impacts on web site usability. Decision Support Systems, 43(2), 476–491. https://doi.org/10.1016/j.dss.2006.11.004

- Farzanfar, R., & Finkelstein, D. (2012). Evaluation of a workplace technology for mental health assessment: A meaning-making process. Computers in Human Behavior, 28(1), 160–165. https://doi.org/10.1016/j.chb.2011.08.022

- Fereday, J., & Muir-Cochrane, E. (2006). Demonstrating rigor using thematic analysis: A hybrid approach of inductive and deductive coding and theme development. International Journal of Qualitative Methods, 5(1), 80–92. https://doi.org/10.1177/160940690600500107

- Gayed, A., LaMontagne, A. D., Milner, A., Deady, M., Calvo, R. A., Christensen, H., Mykletun, A., Glozier, N., & Harvey, S. B. (2018). A new online mental health training program for workplace managers: Pre-post pilot study assessing feasibility, usability, and possible effectiveness. JMIR Mental Health, 5(3), e10517. https://doi.org/10.2196/10517

- Goundar, M. S., Kumar, B. A., & Ali, A. S. (2022). Development of usability guidelines: A systematic literature review. International Journal of Human–Computer Interaction, 1–19. https://doi.org/10.1080/10447318.2022.2141009

- Granollers, T., & Lorés, J. (2006). Incorporation of users in the evaluation of usability by cognitive walkthrough. In R. Navarro-Prieto & J. Vidal (Eds.), HCI related papers of interacción. 2004 (pp. 243–255). Springer. https://doi.org/10.1007/1-4020-4205-1_20

- Gray, R., Kelly, P. J., Beck, A. K., Baker, A. L., Deane, F. P., Neale, J., Treloar, C., Hides, L., Manning, V., Shakeshaft, A., Kelly, J. M., Argent, A., & McGlaughlin, R. (2020). A qualitative exploration of SMART Recovery meetings in Australia and the role of a digital platform to support routine outcome monitoring. Addictive Behaviors, 101, 106144. https://doi.org/10.1016/j.addbeh.2019.106144

- Griffiths, F., Lindenmeyer, A., Powell, J., Lowe, P., & Thorogood, M. (2006). Why are health care interventions delivered over the internet? A systematic review of the published literature. Journal of Medical Internet Research, 8(2), e10. https://doi.org/10.2196/jmir.8.2.e10

- Hartson, R., & Pyla, P. S. (2012). The UX Book: Process and guidelines for ensuring a quality user experience. Morgan Kaufmann.

- Hennink, M., & Kaiser, B. N. (2022). Sample sizes for saturation in qualitative research: A systematic review of empirical tests. Social Science & Medicine (1982), 292, 114523. https://doi.org/10.1016/j.socscimed.2021.114523

- Howarth, A., Quesada, J. I. P., Donnelly, T., & Mills, P. D. (2019). The development of ‘Make One Small Change’: An e-health intervention for the workplace developed using the person-based approach. Digital Health, 5, 2055207619852856. https://doi.org/10.1177/2055207619852856

- Howarth, A., Quesada, J. I. P., Silva, J., Judycki, S., & Mills, P. D. (2018). The impact of digital health interventions on health-related outcomes in the workplace: A systematic review. Digital Health, 4, 2055207618770861. https://doi.org/10.1177/2055207618770861

- Jesse, M., & Jannach, D. (2021). Digital nudging with recommender systems: Survey and future directions. Computers in Human Behavior Reports, 3, 100052. https://doi.org/10.1016/j.chbr.2020.100052

- Jimenez, P., & Bregenzer, A. (2018). Integration of eHealth tools in the process of workplace health promotion: Proposal for design and implementation. Journal of Medical Internet Research, 20(2), e65. https://doi.org/10.2196/jmir.8769

- Juristo, N. (2009). Impact of usability on software requirements and design. In A. De Lucia & F. Ferrucci (Eds.), Software engineering. ISSSE 2007 2008 2006. Lecture notes in computer science (vol. 5413, pp. 55–77). Springer. https://doi.org/10.1007/978-3-540-95888-8_3

- Kato, T. (2021). Functional value vs emotional value: A comparative study of the values that contribute to a preference for a corporate brand. International Journal of Information Management Data Insights, 1(2), 100024. https://doi.org/10.1016/j.jjimei.2021.100024

- Keuper, J., Batenburg, R., & van Tuyl, L. (2021). Use of e-health in general practices during the Covid-19 pandemic. ScienceOpen Posters. https://scholar.archive.org/work/ffdyu3aayfgkzaighm3zb27ale/access/wayback/https://www.scienceopen.com/document_file/f9a3631c-9d3b-4066-abfd-c24a0e4151e6/ScienceOpen/e-Poster%20Use%20of%20E-health%20in%20general%20practices%20during%20Covid-19%20pandemic.pdf

- Kitchener, B. A., & Jorm, A. F. (2002). Mental health first aid training for the public: Evaluation of effects on knowledge, attitudes and helping behavior. BMC Psychiatry, 2(1), 10. https://doi.org/10.1186/1471-244X-2-10

- Lecomte, T., Potvin, S., Corbière, M., Guay, S., Samson, C., Cloutier, B., Francoeur, A., Pennou, A., & Khazaal, Y. (2020). Mobile apps for mental health issues: Meta-review of meta-analyses. JMIR mHealth and uHealth, 8(5), e17458. https://doi.org/10.2196/17458

- Lowdermilk, T. (2013). User-centered design: A developer’s guide to building user-friendly applications. O'Reilly Media, Inc.

- Mahatody, T., Sagar, M., & Kolski, C. (2010). State of the art on the cognitive walkthrough method, its variants, and evolutions. International Journal of Human-Computer Interaction, 26(8), 741–785. https://doi.org/10.1080/10447311003781409

- Maramba, I., Chatterjee, A., & Newman, C. W. (2019). Methods of usability testing in the development of eHealth applications: A scoping review. International Journal of Medical Informatics, 126, 95–104. https://doi.org/10.1016/j.ijmedinf.2019.03.018

- Martins, A. I., Santinha, G., Almeida, A. M., Ribeiro, O., Silva, T., Da Rocha, N. P., & Silva, A. G. (2023). Consensus on the terms and procedures for planning and reporting usability evaluation of health-related digital solutions: A Delphi study and a resulting checklist. Journal of Medical Internet Research, 25(1), e44326. https://doi.org/10.2196/44326

- Newton, A. S., Bagnell, A., Rosychuk, R. J., Duguay, J., Wozney, L., Huguet, A., Henderson, J., & Curran, J. (2020). A mobile phone-based app for use during cognitive behavioral therapy for adolescents with anxiety (MindClimb): User-centered design and usability study. JMIR mHealth and uHealth, 8(12), e18439. https://doi.org/10.2196/18439

- Nielsen, J. (1993). Usability engineering. Academic Press.

- Pal, S., Biswas, B., Gupta, R., Kumar, A., & Gupta, S. (2023). Exploring the factors that affect user experience in mobile-health applications: A text-mining and machine-learning approach. Journal of Business Research, 156, 113484. https://doi.org/10.1016/j.jbusres.2022.113484

- Polson, P. G., Lewis, C., Rieman, J., & Wharton, C. (1992). Cognitive walkthroughs: A method for theory-based evaluation of user interfaces. International Journal of Man-Machine Studies, 36(5), 741–773. https://doi.org/10.1016/0020-7373(92)90039-n

- Roland, J., Lawrance, E., Insel, T., & Christensen, H. (2020). The digital mental health revolution: Transforming care through innovation and scale-up. World Innovation Summit for Health. Retrieved June 5, 2023, from https://www.wish.org.qa/reports/the-digital-mental-health-revolution-transforming-care-through-innovation-and-scale-up/

- Schreiweis, B., Pobiruchin, M., Strotbaum, V., Suleder, J., Wiesner, M., & Bergh, B. (2019). Barriers and facilitators to the implementation of eHealth services: Systematic literature analysis. Journal of Medical Internet Research, 21(11), e14197. https://doi.org/10.2196/14197

- Septianto, F., & Paramita, W. (2021). Cute brand logo enhances favorable brand attitude: The moderating role of hope. Journal of Retailing and Consumer Services, 63, 102734. https://doi.org/10.1016/j.jretconser.2021.102734

- Skakon, J., Kristensen, T. S., Christensen, K. B., Lund, T., & Labriola, M. (2011). Do managers experience more stress than employees? Results from the Intervention Project on Absence and Well-being (IPAW) study among Danish managers and their employees. Work (Reading, MA), 38(2), 103–109. https://doi.org/10.3233/wor-2011-1112

- Smit, D., Vrijsen, J. N., Groeneweg, B. F., Vellinga-Dings, A., Peelen, J., & Spijker, J. (2021). A newly developed online peer support community for depression (Depression Connect): Qualitative study. Journal of Medical Internet Research, 23(7), e25917. https://doi.org/10.2196/25917

- St-Hilaire, F., & Gilbert, F. (2019). What do leaders need to know about managers’ mental health? Organizational Dynamics, 48(3), 85–92. https://doi.org/10.1016/j.orgdyn.2018.11.002

- Stratton, E., Lampit, A., Choi, I., Calvo, R. A., Harvey, S. B., & Glozier, N. (2017). Effectiveness of eHealth interventions for reducing mental health conditions in employees: A systematic review and meta-analysis. PloS One, 12(12), e0189904. https://doi.org/10.1371/journal.pone.0189904

- Stratton, E., Lampit, A., Choi, I., Malmberg Gavelin, H., Aji, M., Taylor, J., Calvo, R. A., Harvey, S. B., & Glozier, N. (2022). Trends in effectiveness of organizational eHealth interventions in addressing employee mental health: Systematic review and meta-analysis. Journal of Medical Internet Research, 24(9), e37776. https://doi.org/10.2196/37776

- Tomaino, S. C. M., Viganò, G., & Cipolletta, S. (2022). The COVID-19 crisis as an evolutionary catalyst of online psychological interventions. A systematic review and qualitative synthesis. International Journal of Human–Computer Interaction, 40(2), 160–172. https://doi.org/10.1080/10447318.2022.2111047

- van Deursen, A. J., & van Dijk, J. A. (2019). The first-level digital divide shifts from inequalities in physical access to inequalities in material access. New Media & Society, 21(2), 354–375. https://doi.org/10.1177/1461444818797082

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. Management Information Systems Quarterly, 27(3), 425. https://doi.org/10.2307/30036540

- Venning, A., Herd, M. C. E., Oswald, T. K., Razmi, S., Glover, F., Hawke, T., Quartermain, V., & Redpath, P. (2021). Exploring the acceptability of a digital mental health platform incorporating a virtual coach: The good, the bad, and the opportunities. Health Informatics Journal, 27(1), 1460458221994873. https://doi.org/10.1177/1460458221994873

- Vianello, A., Laine, S., & Tuomi, E. (2023). Improving trustworthiness of AI solutions: A qualitative approach to support ethically-grounded AI design. International Journal of Human–Computer Interaction, 39(7), 1405–1422. https://doi.org/10.1080/10447318.2022.2095478

- Walsh, M. (2022). Report on mental health in the digital world of work. European Parliament. https://www.europarl.europa.eu/doceo/document/A-9-2022-0184_EN.html

- Weinmann, M., Schneider, C., & Brocke, J. V. (2016). Digital nudging. Business & Information Systems Engineering, 58, 433–436.

- World Health Organization & International Labour Organization. (2020). Mental health and psychosocial support in the workplace: Action for healthy and decent work. World Health Organization.

- World Health Organization. (2022). Guidelines on mental health at work. https://www.who.int/publications/i/item/9789240053052

- World Medical Association. (2013). World Medical Association Declaration of Helsinki. JAMA, 310(20), 2191–2194. https://doi.org/10.1001/jama.2013.281053

- Yen, P., & Bakken, S. (2012). Review of health information technology usability study methodologies. Journal of the American Medical Informatics Association: JAMIA, 19(3), 413–422. https://doi.org/10.1136/amiajnl-2010-0

Appendix

Introduction: The interviewer greets the participant; explains the aim, procedure, and duration of the interview; covers ethical issues (voluntary participation, the right to withdraw, the right to skip questions, confidentiality, and privacy); asks for permission to video-record the session; and checks whether the participant has any questions before beginning.

User info: The interviewer collects basic information about the participant, such as sex, country, age, job position, tenure, sector, industry, and browser used.

Platform familiarization: The interviewer sends a link to the platform and asks the participant to navigate it. The participant is asked about any prior use of the platform or of similar tools, and gives first impressions of the platform’s aesthetics and organization.

Cognitive walkthrough with think-aloud technique: The participant explores specific tools on the platform while thinking aloud, sharing impressions of the platform’s layout, information, language, colors, and clarity. The participant engages with different groups of tools, such as Benchmarking + Policy briefs, DSS + Roadmap, and Economic Calculator.

General questions related to tools: The participant answers questions about each tool they used on the platform, how helpful they found it for their work, and how easy it was to use. They are also asked to compare the tools and to discuss any difficulties they encountered.

Questions related to the platform: The participant provides their opinion on the potential impact of using the platform on mental health at their workplace, how often they would use it, what might prevent them from using it, and how these barriers might be overcome. They also discuss the platform’s relevance to their profession, the sufficiency of the information provided, the clarity of the language, the aesthetics of the layout, and their intention to recommend it to others.

Closure: Finally, the participant shares any additional thoughts they might have, and the interviewer summarizes the main points and next steps. The interview is then concluded.