Abstract

The increasing prevalence of human-AI collaboration is reshaping the manufacturing sector, fundamentally changing the nature of human work and training needs. While high automation improves performance when it functions correctly, it can degrade human performance (e.g., defect detection accuracy, response time) when operators must intervene and take manual control of decision-making. As AI reaches higher levels of automation and human–AI collaboration becomes ubiquitous, addressing these performance issues is crucial. Proper worker training that targets skill-based, cognitive, and affective outcomes and nurtures motivation and engagement can be a mitigation strategy. However, most training research in manufacturing has prioritized the effectiveness of a technology for training rather than how training design influences motivation and engagement, which are key to training success and longevity. The current study explored how training workers with an AI system affected their motivation, engagement, and skill acquisition. Specifically, we manipulated the level of automation of decision selection of an AI used to train 102 participants on a quality control task. Findings indicated that fully automated decision selection negatively affected perceived autonomy, self-determined motivation, behavioral task engagement, and skill acquisition during training. Conversely, partially automated AI enhanced motivation and engagement, enabling participants to better adapt to AI failure by developing the necessary skills. The results suggest that involving workers in decision-making during training, using AI as a decision aid rather than a decision selector, yields more positive outcomes. This approach ensures that the human aspect of manufacturing work is not overlooked, maintaining a balance between technological advancement and human skill development, motivation, and engagement. 
These findings can be applied to enhance real-world manufacturing practices by designing training programs that better develop operators’ technical, methodological, and personal skills, though companies may face challenges in allocating substantial resources for training redevelopment and continuously adapting these programs to keep pace with evolving technology.

1. Introduction

Advances in technological interconnectivity, decision-making speed, and automation have greatly improved the capability of artificial intelligence (AI)-based manufacturing work systems, changing the nature of the work performed in the manufacturing sector. Owing to this wave of technological advancement, also known as Industry 4.0 (I4.0) or the fourth industrial revolution, AI is becoming more integrated and pervasive in industrial processes (Jan et al., Citation2022). The main objective of this integration is to support human workers rather than replace them. This focus is driven by the recognition that completely replacing humans with AI could lead to several detrimental outcomes and would be infeasible in many manufacturing contexts.

First, it risks losing the critical thinking, creative problem-solving, and adaptability that human workers bring, which are essential for handling unpredictable situations and innovations that AI cannot yet replicate (Kolade & Owoseni, Citation2022; Strenge & Schack, Citation2021). Second, many organizations, particularly those embracing Lean Management principles, place a high value on worker autonomy (Rosin et al., Citation2020). They aim for a transition from a technology-centered to a value-centered vision, in which AI tools are designed to support rather than replace human decision-making (Enang et al., Citation2023; Kumar et al., Citation2021). This approach aligns with the findings of Rosin et al. (Citation2021, Citation2022), who demonstrated various ways to support decision-making in manufacturing with Industry 4.0 tools, including AI, suggesting that complete automation is not always desirable. Indeed, research has shown that automating decision-making aspects of work may reduce workers’ perceived autonomy/agency and thus their subjective well-being, as reflected in lower motivation and a weaker sense of meaningfulness at work (Legaspi et al., Citation2024; Nazareno & Schiff, Citation2021).

Third, fully automating tasks without human oversight might create systemic vulnerabilities, where AI systems may fail to adapt to novel scenarios or detect nuanced anomalies, potentially compromising safety and efficiency. Indeed, AI tools vary greatly in performance depending on task complexity and data quality (Usuga Cadavid et al., Citation2020). Particularly notable are the challenges arising from the personalized product strategies adopted in I4.0. The inherent variability in data associated with customized approaches frequently hinders the development of robust and reliable AI systems, necessitating more advanced and adaptable technological solutions (Neumann et al., Citation2022). Finally, manufacturing environments change frequently as new products are continuously developed and brought to market. This necessitates regular adjustments to manufacturing and assembly processes, including shifts in raw materials (Martínez-Olvera & Mora-Vargas, Citation2019; Zhou et al., Citation2022). These regular changes can reduce AI tool reliability and even render tools obsolete in cases of significant technological shifts (Mellal, Citation2020).

Recognizing the strengths and limitations of both AI and humans leads to the acknowledgment that both have distinct but complementary roles to play in modern manufacturing. Humans, with their versatility, creativity, and problem-solving capabilities, complement AI's proficiency in performing repetitive tasks quickly, accurately, and consistently. Compared with humans or AI acting alone, the two working together are expected to increase efficiency, productivity, and cost-effectiveness (Klumpp et al., Citation2019; Wilson & Daugherty, Citation2018). As a result, human-AI collaboration in manufacturing systems is becoming more common in the age of I4.0. This partnership fundamentally alters the nature of human work in terms of job responsibilities and skill/training requirements (Avril et al., Citation2022; Da Silva et al., Citation2022; Gagné et al., Citation2022; Magnani, Citation2021; Parker & Grote, Citation2022; Soo et al., Citation2021). For example, the automation of repetitive manual tasks shifts human work towards a higher-level supervisory role that involves manual takeover, troubleshooting, or problem-solving when automation malfunctions.

This role requires workers to be able to detect malfunctioning or failing automation and to take over manually and efficiently. Research has shown this to be a challenging aspect of human-automation collaboration: increasing the automation of AI systems is a double-edged sword with regard to human task performance. As levels of automation increase, so does worker performance during routine system operation. However, when automation fails or malfunctions, higher levels of automation lead to worse performance (Bainbridge, Citation1983; Onnasch et al., Citation2014). Essentially, workers may become complacent and over-rely on automation, leaving them unable to respond adequately and resulting in precarious performance (Liu, Citation2023).

As human–AI collaboration becomes more prevalent and the capability of AI increases, it is necessary to find ways to mitigate worker task performance issues in the inevitable event of automation malfunction. One such mitigation, put forward by many authors, is proper worker training (Büth et al., Citation2018; Cazeri et al., Citation2022; Da Silva et al., Citation2022; Molino et al., Citation2020; Parker & Grote, Citation2022; Saniuk et al., Citation2021). Indeed, providing workers with problem-solving, analytical, and decision-making skills, as well as the motivation to learn and improve, can help them adapt to the growing capability of AI, fostering efficient human–AI collaboration (Bell et al., Citation2017; Hecklau et al., Citation2016; Zirar et al., Citation2023). Despite its noted importance, training workers with highly automated AI systems has received relatively little research attention in the manufacturing domain. Rather, most research has focused on operational or technical aspects instead of adopting a human-centred focus, which values the worker as an indispensable resource for the success of I4.0 work systems in which humans and AI collaborate.

This issue has been echoed by the scientific community (European Commission et al., Citation2021; Gagné et al., Citation2022; Kaasinen et al., Citation2019; Kadir et al., Citation2019; Neumann et al., Citation2021; Rauch et al., Citation2020). Indeed, in their systematic review, Kadir et al. (Citation2019) found that less than 2% of all papers about I4.0 had a human-centred focus. Generally speaking, this limits our understanding of how AI systems should be designed and implemented. More specific to the current study, it limits our understanding of how to create training that gives workers the capabilities needed to adapt adequately when AI systems malfunction. The European Commission has highlighted the lack of human-centric research and has put forward the concept of Industry 5.0 (I5.0), defined as a manufacturing paradigm that leverages technology to promote worker well-being and empowerment, societal development, and environmental sustainability (European Commission et al., Citation2021; Humayun, Citation2021; Leng et al., Citation2022). Essentially, I5.0 aims to address the shortcomings of I4.0 research by stimulating human-centric research in which human empowerment and augmentation are paramount.

From an I5.0 perspective, the current paper presents an experiment exploring an under-researched aspect of human–AI collaboration that plays a pivotal role in the long-term success of work systems: worker training in a highly automated manufacturing environment. More specifically, we investigate the impact of the AI's level of decision-selection automation during training for a quality control task on worker skill acquisition, motivation, and engagement. We address two research questions: (1) Does the AI's level of automation during training affect a worker’s ability to perform when the AI fails? (2) Does the AI's level of decision-selection automation affect worker motivation and engagement during training?

The rest of the article is structured as follows. The next section reviews the literature on worker competencies in relation to automation and on worker training within I4.0. We then present our hypotheses, experimental methodology, and results before concluding the paper with a discussion of this research’s main contributions and limitations.

2. Literature review

The following section will review the relevant literature on I4.0, automation, worker competencies, training, motivation, and engagement.

2.1. Industry 4.0, automation, and the impact on work and workers

I4.0 is characterized by technological advancements such as decentralized decision-making, real-time data, and technology interconnectivity (Danjou et al., Citation2017). These advancements have increased the capability of AI-based systems, allowing more cognitively complex tasks to be automated. Nevertheless, humans will not be completely replaced by AI, as entire jobs cannot be automated (Brynjolfsson et al., Citation2018; Parker & Grote, Citation2022). Additionally, AI and humans each have their own strengths. For example, humans are better at complex decision-making requiring contextual understanding, while AI is better at collecting and processing large amounts of data (Bainbridge, Citation1983). Rather than whole jobs, it is tasks within jobs that are being automated, meaning that humans and AI are working collaboratively more than ever.

Increased automation comes with both benefits and drawbacks, as is well documented in human factors research. For example, automated systems can improve worker safety by taking over dangerous tasks and can improve company profitability through better process efficiency (Parker & Grote, Citation2022). On the other hand, higher automation is associated with significant human performance issues when AI systems malfunction, as they inevitably do (Bindewald et al., Citation2020; Onnasch et al., Citation2014; Wickens, Citation2018). This issue, commonly known as the out-of-the-loop performance problem, stems from overreliance on automation, complacency, and/or cognitive overload (Endsley & Kiris, Citation1995; Onnasch et al., Citation2014). Automation can be classified according to the stage of information processing on which it acts: (1) information acquisition, (2) information analysis, (3) decision and action selection, and (4) action implementation (Kaber & Endsley, Citation2004; Parasuraman, Citation2000; Wickens, Citation2018). Within each stage, the level of automation can vary from no automation to full automation. In a meta-analysis, Onnasch et al. (Citation2014) found that the performance issues arising when automation fails are exacerbated by higher levels of automation in stages 3 and 4 (decision selection and action implementation) compared to stages 1 and 2. Essentially, high levels of automation of decision-making and action implementation can cause serious performance issues when manual takeover is required. It is thus recommended that workers be kept in the loop for decision selection and action implementation (Wickens, Citation2018). 
This can be done by allocating decision selection and/or action implementation to the worker rather than to an AI, or by keeping the level of automation for these two stages of information processing at medium or below (Onnasch et al., Citation2014).
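The four-stage classification and the "medium or below" recommendation can be made concrete with a small data model. The sketch below is illustrative only: the five-step ordinal `Level` scale, the names `Stage`, `violations`, and `keeps_worker_in_loop`, and the example profiles are assumptions chosen to mirror the text, not a standard taxonomy or the authors' tooling.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Four stages of information processing that automation can act on."""
    INFORMATION_ACQUISITION = 1
    INFORMATION_ANALYSIS = 2
    DECISION_SELECTION = 3
    ACTION_IMPLEMENTATION = 4


class Level(IntEnum):
    """Hypothetical ordinal scale from no automation to full automation."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    FULL = 4


# Stages for which workers should be kept in the loop.
CRITICAL_STAGES = (Stage.DECISION_SELECTION, Stage.ACTION_IMPLEMENTATION)


def violations(profile: dict, ceiling: Level = Level.MEDIUM) -> list:
    """List critical stages whose automation level exceeds the ceiling.

    Stages missing from the profile are treated as not automated.
    """
    return [s for s in CRITICAL_STAGES if profile.get(s, Level.NONE) > ceiling]


def keeps_worker_in_loop(profile: dict, ceiling: Level = Level.MEDIUM) -> bool:
    """True if no critical stage is automated beyond the ceiling."""
    return not violations(profile, ceiling)


# Example profiles (illustrative values, not taken from the study).
decision_aid = {
    Stage.INFORMATION_ACQUISITION: Level.FULL,
    Stage.INFORMATION_ANALYSIS: Level.HIGH,
    Stage.DECISION_SELECTION: Level.MEDIUM,
    Stage.ACTION_IMPLEMENTATION: Level.NONE,
}
decision_selector = {**decision_aid, Stage.DECISION_SELECTION: Level.FULL}

print(keeps_worker_in_loop(decision_aid))       # True
print(keeps_worker_in_loop(decision_selector))  # False
print([s.name for s in violations(decision_selector)])  # ['DECISION_SELECTION']
```

Using `IntEnum` keeps levels ordered, so the ceiling check is a plain comparison; the ceiling is a parameter because the cited recommendation is a guideline rather than a fixed threshold.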

As the capability and ubiquity of AI are increasing, so too is the level of automation for the later stages of information processing. AI systems are increasingly capable of processing complex information, resulting in a greater capacity to perform tasks involving higher-level decision-making, which used to be performed exclusively by workers. AI systems can now recognize patterns, handle a large amount of data, and make real-time decisions. This increased capability of automation in the advanced stages of information processing leads to quicker and more accurate decision-making when all is well but leads to problematic performance when a human takeover is required due to malfunction, as demonstrated by the out-of-the-loop performance problem (Onnasch et al., Citation2014).

It is necessary to find ways to mitigate this issue and thus improve overall system performance, specifically when automation malfunctions. One such mitigation is the development of competencies through worker training (Bahner et al., Citation2008; Dattel et al., Citation2023; Parasuraman & Riley, Citation1997). I4.0 and the resulting increase in human-AI collaboration have changed the necessary worker competencies, i.e., the skills, abilities, knowledge, and attitudes needed to do one’s job effectively (Armstrong & Taylor, Citation2020; Da Silva et al., Citation2022; Hecklau et al., Citation2016; Oberländer et al., Citation2020). Indeed, three categories of competencies that are changing in the context of I4.0 have been identified: technical, methodological, and personal (Hecklau et al., Citation2016; Kowal et al., Citation2022). Here, we present only the competencies relevant to human–AI interaction; for a complete description of all competencies, refer to Hecklau et al. (Citation2016). Technical competencies include a greater and deeper understanding of processes due to the increased complexity of work systems, as well as more comprehensive technical skills for manual takeover in case of AI malfunction (Gehrke et al., Citation2015; Pacher et al., Citation2023). Methodological competencies include greater analytical and problem-solving capability to detect the source of an error within complex systems (World Economic Forum, Citation2016; Morgan, Citation2014; Pacher et al., Citation2023). Personal competencies include the motivation to learn and the ability to work under pressure, which help workers adapt to changing technology and shorter product life cycles (UK Commission for Employment and Skills, Citation2014; Pacher et al., Citation2023).

2.2. Worker training

Proper training is necessary for workers to develop these competencies. Training research has a century-long history, which provides “evidence-based recommendations and best practices for maximizing training effectiveness” (Bell et al., Citation2017; Salas et al., Citation2012, p. 80). Four research themes have emerged, each contributing to the effectiveness of training: (1) training criteria, (2) trainee characteristics, (3) training context, and (4) training design and delivery. A short description of each theme will be presented, focusing on the relevant findings; for a comprehensive description, refer to Bell et al. (Citation2017) and Salas et al. (Citation2012).

2.2.1. Training criteria

Evaluating the effectiveness of training should be done using multi-dimensional outcomes (Bell et al., Citation2017; Kraiger et al., Citation1993). Specifically, skill-based outcomes, cognitive outcomes, and affective outcomes should be considered. Skill-based outcomes include performance metrics and related outcomes. Cognitive outcomes refer to knowledge organization and cognitive state; for example, task engagement represents a cognitive state that facilitates knowledge acquisition. Affective outcomes relate to trainee motivation and related constructs. Considering all three dimensions provides a better understanding of a training program’s success, since training can affect multiple individual and organizational factors that directly affect a company’s well-being (Salas et al., Citation2012).

2.2.2. Trainee characteristics

Individual characteristics that affect trainees’ motivation to learn should be considered. This includes personality traits, such as trainee trait engagement (general causality orientation), which refers to one’s perception of control over actions and events (Deci & Ryan, Citation1985). Trainee skills should also be taken into consideration so that the training can be adequately adapted.

2.2.3. Training context

The success of training depends not only on the training itself but also on a variety of factors related to the organizational context. Training effectiveness is heavily influenced by supervisor and peer support, as well as by organizational learning culture. Additionally, it is essential to understand that skills decay over time and that refresher training may be needed (Bell et al., Citation2017; Salas et al., Citation2012). Training context is outside the scope of the current experiment, since our focus is on the short-term outcomes of training.

2.2.4. Training design and delivery

Training should focus on active rather than passive learning. Active learning should involve hands-on practice with the work system, allowing trainees to practice decision-making. In addition, errors should be incorporated into training so that trainees are better prepared when errors occur during actual work (Salas et al., Citation2012; Sauer et al., Citation2016). Active learning techniques, such as problem-based learning or simulation-based learning, allow trainees to develop the flexible and adaptive skills necessary to deal with complex work systems, such as those seen in I4.0 (Bell et al., Citation2017; Kozlowski et al., Citation2001; Léger et al., Citation2012). Active learning also promotes trainee motivation and engagement during learning. It is essential to design training in a way that promotes trainee motivation and engagement, as they are crucial determinants of training effectiveness and sustainment (Bell et al., Citation2017; Lazzara et al., Citation2021; Salas et al., Citation2012; Van der Klink & Streumer, Citation2002). Additionally, they are strong predictors of employee performance, turnover, absenteeism, innovation, organizational learning culture, and technology acceptance, among others (Akhlaq & Ahmed, Citation2013; Deci et al., Citation2017; Gerhart & Fang, Citation2015; Molino et al., Citation2020; Salas et al., Citation2012; Schmid & Dowling, Citation2020). The following sections present the concepts of motivation and engagement within the context of employee training.

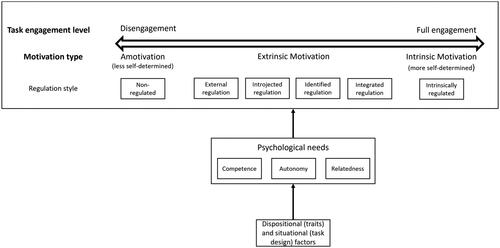

2.3. Worker motivation

Several theories of human motivation and work engagement are supported by meta-analytic evidence. One of these theories, self-determination theory (SDT), provides a robust theoretical framework that can be leveraged to understand how elements of training design affect trainee motivation and engagement. At its core, SDT aims to explain how situations and environments affect a worker’s motivation and engagement (Deci & Ryan, Citation2008). SDT states that workers have innate and universal needs: the need to feel a sense of self-efficacy (competence), to feel in control of their actions and decisions (autonomy), and to have meaningful social interactions (relatedness). The satisfaction of these needs dictates the extent to which workers experience more self-determined motivation, i.e., motivation resulting from a greater internalization of the motive for completing an action. Motivation lies on a continuum, from intrinsic motivation at one end, through extrinsic motivation at the center, to amotivation at the other end. Figure 1 illustrates this continuum. Intrinsic motivation relates to performing an action for its inherent enjoyment and because it is in line with the individual’s values, interests, or aspirations. Intrinsic motivation is the most self-determined type and the strongest predictor of worker well-being and absenteeism (Van den Broeck et al., Citation2021). Extrinsic motivation relates to performing a task because of some external demand, such as an external reward or punishment avoidance. Extrinsic motivation is further divided into four types that vary in internalization, i.e., the degree to which the motive for performing an action is in line with the individual’s values, interests, or aspirations. A more self-determined subtype of extrinsic motivation, identified motivation, is of particular importance. This type of motivation represents completing a task because it is perceived as meaningful. 
Identified motivation is the strongest predictor of workplace performance, continuous effort investment, and other organizational citizenship behaviors (Van den Broeck et al., Citation2021). Amotivation represents a complete lack of motivation to perform an action. SDT’s main premise is that satisfying workers’ psychological needs for competence, autonomy, and relatedness leads to a greater internalization of the motive for learning, which is synonymous with a more intrinsic type of motivation and regulation. In turn, more intrinsically regulated motivation leads workers to be more engaged, more innovative, generally happier, less likely to change jobs, and more accepting of technology (Deci et al., Citation2017; Meyer & Gagné, Citation2008; Venkatesh et al., Citation2002). When these needs are thwarted, workers are less intrinsically motivated, which is associated with burnout, stress, and disengagement, all of which affect their well-being (Deci et al., Citation2017). Unsurprisingly, training effectiveness, skill acquisition, and work performance are also negatively affected.

Figure 1. Motivation and engagement continuum (adapted from Meyer et al., Citation2010; Ryan & Deci, Citation2000; Szalma, Citation2014).
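The continuum described above is often collapsed into a single score of how self-determined a person's motivation is. A minimal sketch, assuming a four-subscale situational motivation questionnaire (intrinsic, identified, external, amotivation) rated on a 1 to 7 scale and the common weighting 2 × intrinsic + identified − external − 2 × amotivation. The text does not state which instrument or index this study used, and the names `WEIGHTS` and `self_determination_index` and the response values are hypothetical.

```python
from statistics import mean

# Assumed weights: positive for more self-determined types, negative otherwise.
WEIGHTS = {"intrinsic": 2, "identified": 1, "external": -1, "amotivation": -2}


def self_determination_index(item_scores: dict) -> float:
    """Weighted sum of subscale means; higher means more self-determined motivation."""
    missing = WEIGHTS.keys() - item_scores.keys()
    if missing:
        raise ValueError(f"missing subscales: {sorted(missing)}")
    return float(sum(w * mean(item_scores[name]) for name, w in WEIGHTS.items()))


# Hypothetical responses of one trainee (four items per subscale, 1 to 7 scale).
responses = {
    "intrinsic": [6, 5, 6, 7],
    "identified": [5, 5, 6, 6],
    "external": [2, 3, 2, 1],
    "amotivation": [1, 1, 2, 1],
}
print(self_determination_index(responses))  # 13.0
```

With a 1 to 7 scale the index ranges from -18 to +18; positive values indicate that the more self-determined forms of motivation dominate.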

2.4. Worker engagement

A worker’s engagement during training or a task is a direct consequence of their motivation and is associated with the same outcomes as motivation (e.g., well-being, performance, technology acceptance). Task engagement is a multi-dimensional concept consisting of (1) a dispositional (trait) dimension, (2) a psychological state dimension, and (3) a behavioral dimension (Macey & Schneider, Citation2008; Meyer et al., Citation2010). Trait engagement is defined as a worker’s predisposition “to experience work in positive, active, and energetic ways and to behave adaptively” (Macey & Schneider, Citation2008, p. 21). Within the context of SDT, this means that some workers are more likely than others to perceive, behave, and think in ways that will satisfy their psychological needs (Deci & Ryan, Citation1985; Meyer et al., Citation2010). In essence, a worker’s personality traits affect whether they cognitively evaluate a situation as controlling or autonomy-supportive, which determines whether they experience more intrinsic or extrinsic motivation and, consequently, more or less engagement (Ryan & Deci, Citation2008; Szalma, Citation2020). State engagement is composed of a cognitive and an emotional component (Kahn, Citation1990; Schaufeli et al., Citation2002). Cognitive engagement is characterized by full concentration and mental absorption in a task. Emotional engagement encompasses positive and negative affect (valence) and emotional arousal/activation (Lang, Citation1995; Macey & Schneider, Citation2008). Behavioral engagement refers to observable indicators of engagement within a work task (Macey & Schneider, Citation2008). Both state and behavioral engagement are viewed as consequences of need satisfaction, i.e., of a more intrinsically regulated type of motivation (Meyer et al., Citation2010).

2.5. Worker training in Industry 4.0

Training workers to develop the competencies needed to be motivated and to perform well within I4.0 work systems has consistently been raised as a main concern by researchers and practitioners alike (Cazeri et al., Citation2022; UK Commission for Employment and Skills, Citation2014; World Economic Forum, Citation2016; Ivaldi et al., Citation2022; Pacher et al., Citation2023; Saniuk et al., Citation2021). Nevertheless, I4.0 research has focused mainly on technical and operational aspects rather than on human-centric issues such as worker training (European Commission et al., Citation2021; Gagné et al., Citation2022; Kaasinen et al., Citation2019; Kadir et al., Citation2019; Neumann et al., Citation2021; Rauch et al., Citation2020). Some authors have taken a step forward by assessing how I4.0 technology can be used to support worker training, with a strong focus on virtual, augmented, and mixed reality (Carretero et al., Citation2021; Dhalmahapatra et al., Citation2021; Lopez et al., Citation2021; Simões et al., Citation2021; Ulmer et al., Citation2020; Vidal-Balea et al., Citation2020; Zawadzki et al., Citation2020). For example, Dhalmahapatra et al. (Citation2021) evaluated the effectiveness of virtual reality training to improve the safety of crane operators; Zawadzki et al. (Citation2020) also assessed the effectiveness of virtual reality training to improve operational performance; in a similar vein, Casillo et al. (Citation2020) evaluated the effectiveness of training using chatbots in a manufacturing setting. This research generally adopts an active learning approach, as recommended by the training literature. In other words, workers are able to practice their decision-making skills and make errors safely with the help of virtual, augmented, or mixed reality. 
However, it does not seem to account for the importance of worker motivation and engagement, as main drivers of training effectiveness and other positive outcomes related to worker and company well-being (e.g., turnover, technology acceptance, worker performance). This hinders our ability to design training that enables workers to effectively collaborate with highly automated AI systems. To our knowledge, no paper has experimentally evaluated, using a multi-dimensional approach recommended in the training literature, the impact of using AI as a tool to train workers. In other words, no paper has used trainee performance, cognitive state, and affective/motivational state in conjunction to better understand how an AI can impact training effectiveness.

3. Hypothesis development

In the current study, we aim to understand how training design, in the context of highly automated AI, affects worker training effectiveness, motivation, and engagement. Specifically, we aim to understand how the level of automation of an AI system used to train workers affects their motivation and engagement during training, as well as their ability to perform when the AI malfunctions. The literature indicates that full automation of decision selection (Stage 3 of information processing) represents the critical boundary beyond which human performance is strongly affected when automation fails (the out-of-the-loop performance effect) (Onnasch et al., Citation2014). Logically, workers trained with an AI error-detection system (AIEDS) that fully automates the decision selection stage can be expected not to acquire the technical, methodological, and personal competencies needed to respond properly to a malfunctioning AI. On the other hand, training with an AIEDS that only partially automates decision selection should mitigate the out-of-the-loop performance effect. Indeed, partial automation during training is more aligned with an active learning approach, allowing workers to practice their decision-making and build the necessary competencies and motivation. Thus, partial automation of decision selection should lead trainees to gain a deeper understanding of the work process being learned, more comprehensive technical skills, and better problem-solving capabilities, among others, which translates into better overall performance after training. As such, we hypothesize that:

H1:

Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to better performance after training

Using a fully automated AI system during training also has implications for trainee motivation and engagement. Removing decision-making from training can have deleterious effects on worker autonomy and self-efficacy, two drivers of self-determined motivation and engagement. Indeed, full automation leaves participants no choice but to agree with the AI’s recommendation, leaving little room for decisional latitude or opportunities to feel a sense of self-efficacy. In contrast, partially automated decision selection merely suggests a decision, allowing participants to override the AI’s recommendation. This may give participants a sense of decisional freedom while providing enough error-detection support for them to feel competent. Thus, partial automation of decision selection should better motivate and engage trainees by satisfying their psychological needs, leading to greater skill acquisition. As such, we hypothesize that:

H2:

Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more self-determined motivation during training

H3:

Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more engagement during training

4. Materials and methods

The following section will present the experimental design, sample, task, setup, procedure, as well as variable operationalization, statistical analysis, and a priori power analysis.

4.1. Experimental design and sample

A total of 102 participants completed the laboratory experiment (67 men, 35 women). Gender was self-reported. Participants were recruited through a mass email sent to all undergraduate students. The average participant age was 21.97 years (SD = 2.69). No participant had any prior experience with the task chosen for this experiment. This experiment was reviewed and approved by HEC Montreal’s research ethics board (certificate #2023-5058). Informed consent was obtained, and each participant was paid €40 at the end of the experiment. The experiment was conducted in French.

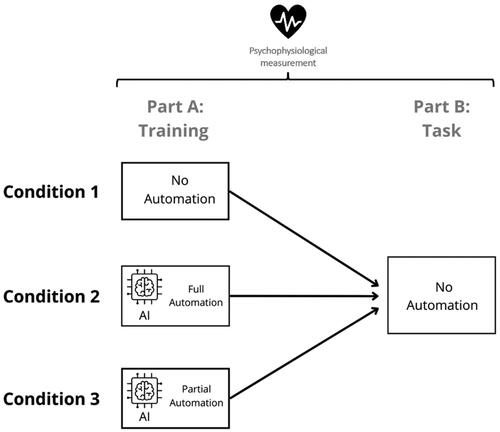

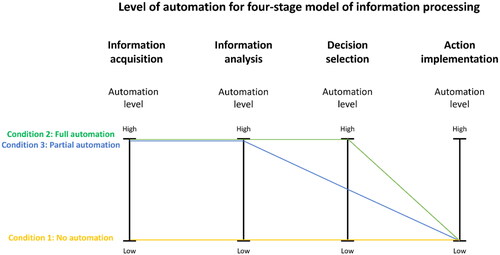

This study used a between-subjects design. We devised the experiment based on findings from the worker training literature, i.e., considering the importance of training criteria (multidimensional evaluation of training effectiveness), trainee characteristics (personality factors affecting motivation to learn), and training design (active learning with a strong focus on motivation and engagement). We also incorporated theoretical knowledge from SDT, which allows us to better understand how elements of training affect trainee motivation and engagement. To this end, we manipulated the level of automation of decision selection of the AIEDS during training (Part A), resulting in three conditions (1. No automation, 2. Full automation, 3. Partial automation). During the experimental task itself (Part B), automation was removed to simulate a failure of the AIEDS and thus evaluate skill acquisition. Figure 3 shows the level of automation for each condition based on the four stages of information processing (Parasuraman et al., Citation2000); see Section 2.1 for more details about this model and its stages. Participants were randomly assigned to one of the three conditions. This experiment was part of a larger experiment, which consisted of nine conditions after the training.

Figure 3. Level of automation for each condition (adapted from Parasuraman et al., Citation2000).

4.2. Training, experimental task, and setup

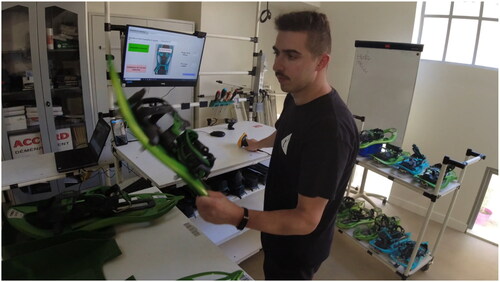

To create the experimental task (Part B), members of the research team visited the factory where the product used in this study (snowshoes) is built. We observed and filmed the employees completing their work tasks. We ascertained that each employee on the production line was responsible for assembling one component of the snowshoe and for checking for defects or errors that previous employees may have committed. Employees spent approximately 20 seconds on each snowshoe before passing it on to the next worker. To maximize the study's ecological validity, we designed the experimental task in accordance with what was observed in the factory. Snowshoes, which are composed of many pieces that vary in assembly difficulty, were an ideal product because they allowed us to fully control the presence of errors.

We designed the training based on the training literature. We took an active learning approach: participants were trained (Part A) with exactly the same work system (interface) and the same task as the experimental task they performed afterwards (Part B). In addition, errors were incorporated into the training, allowing participants to practice their decision-making and develop their technical, methodological, and personal competencies.

Participants were told that they were the fourth and final worker on a four-worker snowshoe assembly line. Their goal was to detect any errors made by the previous three workers and to finish assembling the snowshoe if no error was detected. Participants received snowshoes that were 90% assembled. Both Part A and Part B consisted of 30 of these snowshoes, which were placed on racks (120 cm × 71 cm × 103 cm) next to the participant (see Figure 5 for the workstation). For each snowshoe, participants scanned its barcode with a barcode scanner, placed it on the workstation, checked it for errors, indicated on the computer interface whether they had detected an error, assembled the remaining 10%, and then put it back in its original place. The researchers introduced errors artificially and systematically into specific snowshoes: six of the 30 snowshoes contained an error. Each error was unique and always appeared in the same snowshoe across participants. In the planning phase of the experiment, each of the six errors was randomly assigned to one of the 30 snowshoes, and the order in which the errors appeared differed between Part A and Part B.
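The error-placement scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' planning procedure: the error labels, the seed value, and the use of Python's `random` module are hypothetical. A single fixed seed reproduces the same plan for every participant, and the Part B plan is redrawn until its error order differs from Part A.

```python
import random

N_SNOWSHOES = 30
# Hypothetical labels for the six unique errors (the actual errors are listed in Appendix A)
ERRORS = ["error_1", "error_2", "error_3", "error_4", "error_5", "error_6"]


def plan_errors(rng: random.Random) -> dict:
    """Assign each error to a distinct snowshoe position (1..30)."""
    positions = rng.sample(range(1, N_SNOWSHOES + 1), k=len(ERRORS))
    return dict(zip(ERRORS, positions))


def error_order(plan: dict) -> list:
    """Order in which the errors appear as the 30 snowshoes are processed."""
    return [error for error, _ in sorted(plan.items(), key=lambda item: item[1])]


def plan_both_parts(seed: int = 12345):
    """Fixed plans for Part A and Part B, identical for every participant.

    The seed is arbitrary (an assumption); fixing it is what keeps each error
    on the same snowshoe across participants.
    """
    rng = random.Random(seed)
    part_a = plan_errors(rng)
    part_b = plan_errors(rng)
    while error_order(part_b) == error_order(part_a):  # enforce a different order
        part_b = plan_errors(rng)
    return part_a, part_b


part_a, part_b = plan_both_parts()
print("Part A:", error_order(part_a))
print("Part B:", error_order(part_b))
```

Fixing the plan once, rather than drawing it per participant, is what makes error position a constant rather than a source of between-participant variance.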

Figure 5. Participant workstations (Passalacqua et al., Citation2024).

The experiment was conducted at the DynEO learning factory in Aix-en-Provence (France). The room contained two identical workstations (see Figure 5), separated by a room divider so that participants could not see each other. The experimental setup can be seen in the video in the supplementary material, available at https://youtu.be/xtcpxqcyz8k.

4.3. Procedure

Participants were told that the experiment involved evaluating their ability to detect production errors in snowshoes, and that they would be trained to complete error-detection and assembly tasks comprising 30 snowshoes. They were also told that the training task (Part A) and the experimental task (Part B) were identical, except that the AI would be removed for Part B. After signing the consent form, participants put on physiological vests under their clothes. They were then shown the workstation, and the AI interface was explained to them. The task was also explained, and the six possible errors were shown. Participants then completed a pre-experiment questionnaire covering demographics and personality (trait engagement) before moving on to Part A and Part B of the experiment. Each part was followed by a questionnaire. The full experimental procedure is illustrated in the accompanying figure.

In the no automation condition, participants were trained without any help from an artificial intelligence error-detection system (AIEDS). In the full automation condition, decision selection (stage 3 of information processing), i.e., error versus no error, was fully automated by the AIEDS, and the decision made by the AIEDS was always correct. The accompanying figure shows the computer interface both when the AIEDS detected an error and when no error was detected; Appendix A shows all possible errors that can be detected. In the partial automation condition, decision selection was partially automated: the AIEDS acted as a decision-support tool by suggesting a decision, and participants had to make the final decision about the presence of an error. The AIEDS detected 83% of the errors: since each task contained six errors, one of them was missed by the AIEDS (a false negative). False positives, i.e., the AIEDS flagging an error when none is present, were not possible. Participants were informed of the AIEDS's reliability beforehand, since prior studies have found that giving users accurate information about a system's reliability improves their performance when using that system (Avril, Citation2022). The reliability level itself was chosen based on past research. Reliability levels below 70% have been found not to benefit performance (Onnasch, Citation2015; Wickens & Dixon, Citation2007), so we opted to approximately split the difference between 100% and 70%. Additionally, a reliability of approximately 85% has commonly been used in past studies (e.g., Avril et al., Citation2022; Wickens & Dixon, Citation2007).
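The reliability arithmetic above can be checked in a few lines. This is an illustrative calculation only (the variable names are ours): it makes explicit that the 83% figure is a hit rate over the six errors (5 of 6), which sits just below the 85% midpoint between the 70% floor and perfect reliability.

```python
from fractions import Fraction

ERRORS_PER_TASK = 6
MISSED_BY_AIEDS = 1  # the single false negative in the partial automation condition
FLOOR = 70           # reliability below this level has been reported as not beneficial
CEILING = 100        # perfect reliability

hit_rate = Fraction(ERRORS_PER_TASK - MISSED_BY_AIEDS, ERRORS_PER_TASK)  # 5/6
midpoint = (FLOOR + CEILING) / 2  # halfway between 70% and 100%

print(f"AIEDS hit rate: {float(hit_rate):.1%}")  # 83.3%
print(f"Midpoint of 70-100%: {midpoint:.0f}%")   # 85%
```

Because false positives were impossible, the only way the AIEDS could err was by missing an error, so the hit rate fully describes its reliability in this design.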

4.4. Variable operationalization and measures

As recommended, training efficacy was evaluated using skill-based, cognitive, and affective outcomes (Bell et al., Citation2017; Salas et al., Citation2012). See Table 1 for a summary of variable operationalization and measurement. Whenever possible, constructs were evaluated using a multi-method approach (perceptual and psychophysiological). Psychophysiological measures allowed us to measure a participant's state continuously throughout the whole task, thus limiting the biases associated with relying solely on perceptual measures.

Table 1. Summary of variable operationalization and measurement.

The Hexoskin smart vest (Carré Technologies, Montreal, Canada) was used to capture the psychophysiological data, i.e., cardiac, respiratory, and movement data. The vest captured 1-lead electrocardiogram data at 256 Hz using a built-in electrode, respiration data at 128 Hz using two built-in respiratory inductive plethysmography sensors, and acceleration/activity data at 64 Hz using a built-in 3-axis accelerometer. The Hexoskin smart vest has been evaluated and validated in several studies (Cherif et al., Citation2018; Jayasekera et al., Citation2021; Smith et al., Citation2019).

4.4.1. Performance

4.4.1.1. Time

The first of two key performance indicators is the amount of time a participant took to complete the task (hereinafter, performance time). A shorter time indicates better performance.

4.4.1.2. Error detection

The second indicator is the participants' error-detection accuracy, operationalized as a percentage of correctness (hereinafter, error detection performance). The percentage of correctness decreases both when participants fail to detect an error and when they falsely report an error. Each mistake reduces the percentage of correctness by 1/30 (about 3.33 percentage points), since there are 30 items per task. For example, one mistake in the whole task yields a percentage of correctness of 96.67%, two mistakes yield 93.33%, and so on. Therefore, a higher percentage indicates better performance.
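The scoring rule above can be written as a small function. This is a sketch of the described rule, not the authors' scoring script; the function and parameter names are hypothetical. Misses and false alarms are passed separately only to make explicit that both kinds of mistake cost the same 1/30.

```python
N_ITEMS = 30  # snowshoes per task


def percent_correct(misses: int, false_alarms: int, n_items: int = N_ITEMS) -> float:
    """Percentage of correctness: every miss or false alarm costs 1/n_items."""
    mistakes = misses + false_alarms
    if not 0 <= mistakes <= n_items:
        raise ValueError("mistakes must be between 0 and n_items")
    return 100 * (n_items - mistakes) / n_items


for misses, false_alarms in [(0, 0), (1, 0), (1, 1)]:
    print(misses, false_alarms, f"{percent_correct(misses, false_alarms):.2f}%")
```

With one mistake the function returns 96.67%, and with two mistakes 93.33%, matching the worked example in the text.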

4.4.2. Motivational needs

Motivational needs were measured using the autonomy (self-determination) and competence subscales of the empowerment scale (Spreitzer, Citation1995). Each subscale is composed of 3 items on a five-point Likert scale. The third psychological need, relatedness, was not addressed in this study because it relates to positive social interactions within the workplace. The scope of this study did not include any social interactions.

4.4.3. Motivation

Motivation was assessed using the French version of the situational motivation scale, created and validated by Guay et al. (Citation2000). This questionnaire is composed of 16 items scored on a seven-point Likert scale. It is divided into four subscales, each of which represents a type of motivational regulation: intrinsic regulation, identified regulation, external regulation, and amotivation.

4.4.4. Cognitive task engagement

4.4.4.1. Self-reported

Cognitive engagement was measured using the absorption subscale of the Utrecht Work Engagement Scale (Schaufeli et al., Citation2003). The French version of the questionnaire was used, which has been validated (Zecca et al., Citation2015). This questionnaire is composed of 9 items scored on a seven-point Likert scale.

4.4.4.2. Psychophysiological

Using the Fast Fourier Transform on interbeat intervals (RR intervals), we derived the absolute power of the low-frequency (LF; 0.04-0.15 Hz) and high-frequency (HF; 0.15-0.4 Hz) bands of heart rate variability (HRV). The LF band of HRV is produced by both the parasympathetic (PNS) and the sympathetic nervous system (SNS), whereas the HF band has been shown to be produced mainly by the PNS (Shaffer et al., Citation2014). The SNS mainly controls “fight-or-flight” responses, while the PNS mainly controls “rest-and-digest” responses. The LF/HF ratio is intended to estimate the balance between SNS and PNS activity and has been shown to be an indicator of cognitive engagement during a task (Gao et al., Citation2020).
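The frequency-domain computation described above can be sketched with NumPy. This is a minimal illustration, not the processing pipeline used in the study: the 4 Hz resampling rate, the linear interpolation, the plain periodogram (no windowing or Welch averaging), and the synthetic RR generator are all assumptions. Because RR intervals are unevenly spaced in time, they are first resampled on a uniform grid before the FFT.

```python
import numpy as np

LF_BAND = (0.04, 0.15)  # Hz
HF_BAND = (0.15, 0.40)  # Hz


def lf_hf_ratio(rr_ms, fs=4.0):
    """Return (LF power, HF power, LF/HF ratio) from RR intervals given in ms."""
    rr_ms = np.asarray(rr_ms, dtype=float)
    beat_times = np.cumsum(rr_ms) / 1000.0  # time (s) at which each interval ends
    # RR intervals are unevenly spaced in time: resample on a uniform grid first
    grid = np.arange(beat_times[0], beat_times[-1], 1.0 / fs)
    rr_even = np.interp(grid, beat_times, rr_ms)
    rr_even = rr_even - rr_even.mean()  # remove the DC component

    # FFT periodogram; absolute scaling conventions differ between tools,
    # but the LF/HF ratio does not depend on the scaling constant
    psd = np.abs(np.fft.rfft(rr_even)) ** 2 / (fs * rr_even.size)
    freqs = np.fft.rfftfreq(rr_even.size, d=1.0 / fs)
    bin_width = freqs[1] - freqs[0]

    def band_power(lo, hi):
        in_band = (freqs >= lo) & (freqs < hi)
        return float(psd[in_band].sum() * bin_width)

    lf = band_power(*LF_BAND)
    hf = band_power(*HF_BAND)
    return lf, hf, lf / hf


def synthetic_rr(lf_amp, hf_amp, seconds=300.0):
    """Hypothetical RR series (ms) modulated at 0.10 Hz (LF) and 0.25 Hz (HF)."""
    t, rr = 0.0, []
    while t < seconds:
        r = 800 + lf_amp * np.sin(2 * np.pi * 0.10 * t) + hf_amp * np.sin(2 * np.pi * 0.25 * t)
        rr.append(r)
        t += r / 1000.0
    return rr


print(lf_hf_ratio(synthetic_rr(50, 10))[2])  # LF-dominated series: ratio well above 1
print(lf_hf_ratio(synthetic_rr(10, 50))[2])  # HF-dominated series: ratio well below 1
```

Dedicated HRV software typically adds detrending, windowing, and artifact correction; the sketch only shows how the two band powers and their ratio relate to the RR series.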

4.4.5. Emotional task engagement

4.4.5.1. Self-reported

The emotional component of state task engagement can be further subdivided into two sub-components: valence (happiness/sadness) and arousal (interest/boredom) (Lang, Citation1995; Macey & Schneider, Citation2008; Passalacqua et al., Citation2020). We used Betella and Verschure’s (Citation2016) affective slider, which consists of two sliders measuring valence and arousal on a scale of 0 to 100. The valence slider has sadness on one end and happiness on the other end, while the arousal slider has boredom on one end and interest on the other.

4.4.5.2. Psychophysiological

We used respiration rate as an indicator of emotional arousal. This measure has been validated as an indicator of sympathetic and emotional arousal (Bradley & Lang, Citation2007). Respiration rate was measured using the two built-in respiratory inductive plethysmography sensors of the Hexoskin vest. Respiration data were baselined at the participant level, using a period of idle standing as the baseline.
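Participant-level baselining can be illustrated as follows. The text does not specify the correction formula, so this sketch assumes simple subtraction of the participant's mean idle-standing rate; a ratio or percent change would be an equally plausible choice. The participant IDs and values are hypothetical, not study data.

```python
from statistics import mean


def baseline_corrected_rate(task_rates, baseline_rates):
    """Mean task respiration rate minus the participant's idle-standing baseline.

    Assumption: correction by subtraction (breaths/min).
    """
    return mean(task_rates) - mean(baseline_rates)


def correct_by_participant(task_by_pid, baseline_by_pid):
    """Apply the baseline correction separately for each participant ID."""
    return {
        pid: baseline_corrected_rate(rates, baseline_by_pid[pid])
        for pid, rates in task_by_pid.items()
    }


# Hypothetical values (breaths/min), not study data
task = {"P01": [18, 20], "P02": [15, 15]}
baseline = {"P01": [14, 16], "P02": [16, 16]}
print(correct_by_participant(task, baseline))
```

Correcting each participant against their own baseline removes stable individual differences in resting respiration, so that condition comparisons reflect task-related changes.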

4.4.6. Behavioral task engagement

4.4.6.1. Self-reported

Behavioral engagement was measured using the vigor subscale of the French version of the Utrecht Work Engagement Scale (Schaufeli et al., Citation2003).

4.4.6.2. Psychophysiological

Behavioral engagement is operationalized as the standard deviation of the intensity of movement (physical effort) during a task, measured in g-force (Gao et al., Citation2020). This is measured by the 3-axis accelerometer of the Hexoskin vest. A smaller standard deviation in movement intensity suggests that individuals are maintaining a consistent level of movement, reflecting sustained engagement, while a larger standard deviation indicates variability in movement, reflecting fluctuations in engagement (Gao et al., Citation2020).
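The behavioral engagement measure can be sketched from raw 3-axis accelerometer samples. This is an illustration under a stated assumption: movement intensity is taken here as the Euclidean norm of the acceleration vector (in g), whereas the vest's own activity metric may be computed differently. The synthetic signals are hypothetical.

```python
import numpy as np


def movement_intensity(acc_xyz):
    """Per-sample movement intensity (g): Euclidean norm of the 3-axis signal.

    Assumption: intensity is the acceleration magnitude; the vest's own
    activity metric may be derived differently.
    """
    acc = np.asarray(acc_xyz, dtype=float)
    return np.linalg.norm(acc, axis=1)


def behavioral_engagement_sd(acc_xyz):
    """SD of movement intensity over the task (lower SD = steadier effort)."""
    return float(np.std(movement_intensity(acc_xyz), ddof=1))


FS = 64  # Hz, accelerometer sampling rate reported for the vest
steady = np.tile([0.0, 0.0, 1.0], (FS * 10, 1))            # 10 s of constant 1 g
rng = np.random.default_rng(0)
jerky = steady + rng.normal(0.0, 0.2, size=steady.shape)    # same posture, fluctuating effort
print(behavioral_engagement_sd(steady), behavioral_engagement_sd(jerky))
```

The steady signal yields an SD of zero, whereas the fluctuating one yields a positive SD; under the operationalization above, the former corresponds to more sustained behavioral engagement.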

4.4.7. Trait task engagement

Trait task engagement was measured using the French version of the general causality orientation scale (Deci & Ryan, Citation1985; Meyer et al., Citation2010). The French version has been validated by Vallerand et al. (Citation1987). It consists of 12 vignettes, each depicting an achievement-oriented situation, for which participants answer three questions on a seven-point Likert scale. Each question corresponds to one subscale of the questionnaire: autonomy, control, and impersonal. Participants scoring higher in autonomy are more likely to perceive situations or tasks as autonomy-promoting and, thus, are more likely to experience intrinsic, integrated, or identified motivation and higher engagement. Participants scoring higher in control are more likely to perceive situations or tasks as controlled by an external source and, thus, are more likely to experience introjected or external motivation and lower engagement than those scoring higher in autonomy. Participants scoring higher in impersonal are more likely to feel unable to affect or control situations or tasks. They may experience a sense of helplessness and are more likely to experience amotivation and a lack of engagement (Deci et al., Citation2017; Ryan & Deci, Citation2008; Szalma, Citation2020).

4.5 Statistical analysis and research model

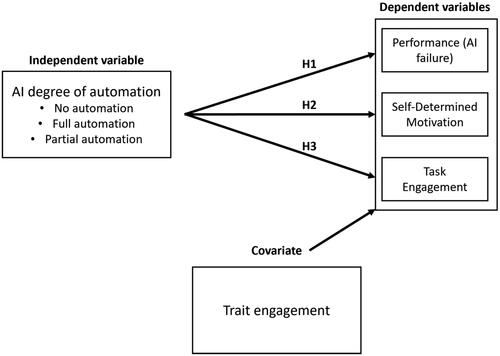

A type-3 analysis of variance (ANOVA) was conducted to determine the global effect of AI level of automation on each dependent variable. As recommended by both the training and motivation literature, trait engagement (individuals' predisposition to experience certain types of motivation and a certain level of engagement) was controlled for. Not controlling for these personality traits could introduce within-group variability in our experimental conditions that could influence how AI type (independent variable) affects our dependent variables. Therefore, we attempted to reduce the confounding effect of trait engagement by entering it as a covariate in our statistical model. The research model is presented in the accompanying figure. When we found globally significant effects, we used linear regressions to compare the pairwise least-squares means. These tests were adjusted for multiple comparisons using the Holm method.
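The Holm adjustment named above can be sketched as a generic step-down procedure. This is not the authors' code; in R, which the authors report using, the same adjustment is available as `p.adjust(p, method = "holm")`. The raw p-values below are hypothetical. Adjusted values are capped at 1, which is why some pairwise comparisons can be reported as p = 1.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by m - k + 1, capped at 1 ...
        candidate = min(1.0, (m - rank) * p_values[i])
        # ... and adjusted values are kept monotone (never smaller than the previous one)
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Three pairwise comparisons among the training conditions (hypothetical raw p-values)
raw = [0.01, 0.04, 0.03]
print(holm_adjust(raw))
```

With three conditions there are three pairwise comparisons, so the smallest raw p-value is multiplied by 3, the next by 2, and the largest by 1.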

4.6 A Priori statistical power calculation

A priori statistical power calculations allow us to estimate the sample size necessary to achieve a sufficient level of statistical power. Statistical power is the probability of correctly rejecting the null hypothesis when a true effect of a given size exists (Cohen, Citation1992). G*Power software (Faul et al., Citation2009) was used to calculate power in the planning phase of the experiment with the following parameters. Because the effect size could not be estimated from past studies, we selected a small value (f = 0.15) to be as conservative as possible. A power of 0.90 was selected, whereas a minimum of 0.80 is recommended (Cohen, Citation1992). A correlation of 0.80 among repeated measures was derived from pilot tests. In short, the power calculation indicated that a sample size of 99 participants would be sufficient to reject the null hypothesis with a probability of 0.90 if an effect of this size exists.

4.7. Transparency and openness

All raw data, processed data, and statistical outputs on which the study’s conclusions are based are available at https://data.mendeley.com/datasets/7njpg3g33g/2. Feel free to contact the corresponding author should you have any questions. This study’s design and its analysis were not preregistered. We used R version 4.3.1 (R Core Team, Citation2021) and Statistical Product and Service Solutions (SPSS) version 26 to conduct our analyses.

5. Results

Two participants were excluded due to technical issues with the equipment. A total of 100 participants were retained for analysis. See Appendix B for descriptive data separated by Training/Task and AI level of automation. Error bars on all graphs represent the standard error of the mean. No significant gender differences were observed.

5.1. H1: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to better performance after training

5.1.1. Performance time

A type-3 ANOVA showed no significant main effect of AI level of automation on performance time in the experimental task after controlling for trait engagement, F(2, 27) = 1.74, p = .195.

5.1.2. Error detection performance

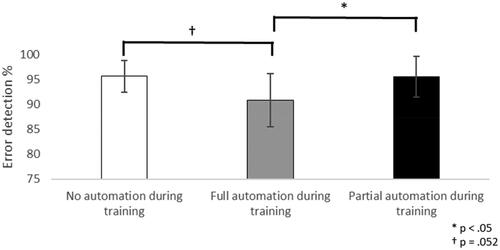

A type-3 ANOVA showed a significant main effect of AI level of automation on error detection performance in the experimental task after controlling for trait engagement, F(2, 27) = 5.65, p = .009, ηp² = .30. Post-hoc pairwise linear regressions revealed that error detection performance was significantly worse after training with the fully automated AI than after training with partial automation (t = −3.02, p = .022) or no automation (t = −2.80, p = .052). However, there were no significant differences between participants who were trained with partial automation and those trained with no automation (t = 0.22, p = 1). These results are shown in the accompanying figure: the x-axis shows the condition participants were assigned to during training, while the y-axis shows the participants' percentage of error detection correctness during the experimental task.
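The reported effect sizes can be recovered from the F statistics with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The sketch below is a consistency check on values reported in the Results, not the authors' analysis code.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F statistics as reported in the Results (error detection, identified regulation,
# SD of movement intensity)
for label, f, df1, df2 in [
    ("error detection", 5.65, 2, 27),
    ("identified regulation", 3.62, 2, 94),
    ("SD of movement intensity", 15.34, 2, 91),
]:
    print(f"{label}: {partial_eta_squared(f, df1, df2):.2f}")
```

The three values round to .30, .07, and .25, respectively, in line with the ηp² values reported alongside these tests.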

5.2. H2: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more self-determined motivation during training

5.2.1. Motivational needs

5.2.1.1. Autonomy

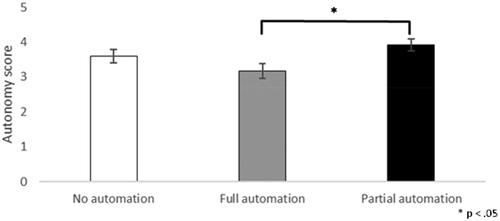

A type-3 ANOVA showed a significant main effect of AI condition on autonomy after controlling for trait engagement, F(2, 94) = 3.80, p = .026, ηp² = .08. Post-hoc pairwise linear regressions revealed that autonomy was significantly higher with the partially automated AI than with the fully automated AI (t = 2.67, p = .024). However, no differences were observed between partial automation and no automation (t = 0.97, p = .992) or between full automation and no automation (t = −1.72, p = .266). These results are shown in the accompanying figure: the x-axis shows the condition to which participants were assigned, while the y-axis shows the score on the autonomy subscale of the empowerment scale during training (5-point Likert scale).

5.2.1.2. Competence

A type-3 ANOVA showed no significant main effect of AI condition on competence after controlling for trait engagement, F(2, 94) = 1.61, p = .206.

5.2.2. Motivation

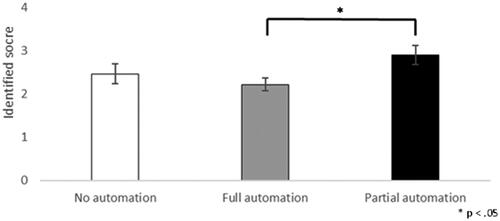

A type-3 ANOVA showed a significant main effect of AI condition on identified regulation after controlling for trait engagement, F(2, 94) = 3.62, p = .031, ηp² = .07. However, no significant main effect was found for intrinsic motivation (F(2, 94) = 1.47, p = .236), external regulation (F(2, 94) = 1.164, p = .317), or amotivation (F(2, 94) = 1.03, p = .363).

Post-hoc pairwise linear regressions revealed that identified regulation was significantly higher with the partially automated AI than with the fully automated AI (t = 2.64, p = .029). However, no differences were observed between partial and no automation (t = 1.80, p = .224) or between full automation and no automation (t = −0.84, p = 1). These results are shown in the accompanying figure: the x-axis shows the condition to which participants were assigned, while the y-axis shows the score on the identified regulation subscale of the situational motivation scale during training (7-point Likert scale).

5.3. H3: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more engagement during training

5.3.1. Cognitive (state) engagement

5.3.1.1. Self-report

A type-3 ANOVA showed no significant main effect of AI condition on self-reported absorption after controlling for trait engagement, F(2, 94) = 2.88, p = .061.

5.3.1.2. Psychophysiological

A type-3 ANOVA showed no significant main effect of AI condition on LF/HF ratio (HRV) after controlling for trait engagement, F(2, 91) = 0.06, p = .944.

5.3.2. Emotional (state) engagement

5.3.2.1. Self-report

A type-3 ANOVA showed no significant main effect of AI condition on self-reported arousal after controlling for trait engagement, F(2, 94) = 1.37, p = .260.

A type-3 ANOVA showed no significant main effect of AI condition on self-reported valence after controlling for trait engagement, F(2, 94) = 0.23, p = .796.

5.3.2.2. Psychophysiological

A type-3 ANOVA showed no significant main effect of AI condition on respiration rate after controlling for trait engagement, F(2, 86) = 2.09, p = .130.

5.3.3. Behavioral engagement

5.3.3.1. Self-report

A type-3 ANOVA showed no significant main effect of AI condition on self-reported vigor after controlling for trait engagement, F(2, 94) = 0.362, p = .697.

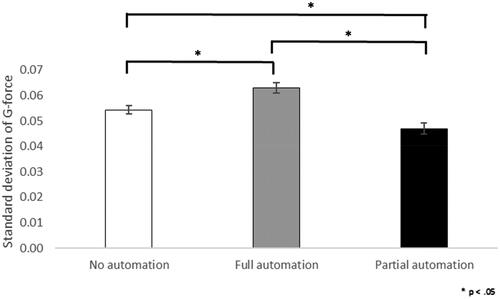

5.3.3.2. Psychophysiological

A type-3 ANOVA showed a significant main effect of AI condition on the standard deviation (SD) of the intensity of physical effort after controlling for trait engagement, F(2, 91) = 15.34, p < .001, ηp² = .25. Post-hoc pairwise linear regressions revealed that the SD of the intensity of physical effort was significantly lower with the partially automated AI than with the fully automated AI (t = −5.18, p < .001) and than with no automation (t = −2.29, p = .024). The SD was also lower with no automation than with the fully automated AI (t = −2.86, p = .01). Figure 11 shows these results. The x-axis shows the condition to which participants were assigned, while the y-axis shows the standard deviation of movement intensity (g-force) during training, measured using a 3-axis accelerometer. A lower standard deviation indicates higher behavioral engagement.

Figure 11. Intensity of movement (behavioral engagement) during training.

Note. A lower standard deviation is indicative of higher behavioral engagement (Gao et al., Citation2020).

6. Discussion

The following section will discuss the results pertaining to each hypothesis, as well as the practical contributions and limitations.

6.1. H1: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to better performance after training

Results indicate that training with full automation of decision selection proved problematic once the AI was removed. Participants who were trained with the fully automated version of the AIEDS had significantly worse error-detection performance in the experimental task (AIEDS malfunction) than participants who were trained with partial or no automation. Using a fully automated AI during training appears to have hindered participants' acquisition of competencies, i.e., the skills, abilities, and knowledge necessary for manual takeover of the error-detection task. No such effect was found for partial automation: the error-detection performance of participants trained with partial automation was equivalent to that of participants trained without automation, thus supporting H1. These results are in line with previous research on automation indicating that full automation of decision selection (stage 3) represents a critical boundary, beyond which humans are highly vulnerable to automation failure. In contrast, when humans make the final decision (partial automation of stage 3), manual performance is less affected when automation malfunctions (Onnasch et al., Citation2014; Parasuraman et al., Citation2000). Our results build upon these findings, indicating that the level of automation matters during training as well. The critical boundary of automation affects worker skill acquisition, suggesting that training with high automation may impede the development of the technical, methodological, and personal competencies necessary to manually take over from a malfunctioning AI. Full automation of decision selection keeps trainees out of the decisional loop and prevents them from practicing decision-making and making errors, creating a passive rather than an active learning approach. Practitioners designing training should avoid automation past the critical boundary, even as the capabilities of AI increase.
They should focus on creating training curricula that employ an active learning approach, i.e., problem- or simulation-based learning in which trainees can experiment with decision-making and with errors. Trainees will then be better able to understand and manage I4.0 work systems, which grow in complexity with every advance in technology.

6.2. H2: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more self-determined motivation during training

6.2.1. Motivational needs

Participants felt the strongest sense of autonomy when trained with the partially automated AIEDS. To put this result into context, it is necessary to break down the participant/worker role in each condition. In the no automation condition, participants were responsible for examining each snowshoe and deciding whether an error was present. While they had complete decision-making freedom, error detection without the AIEDS is repetitive, resulting in very low task variety, which may have negatively affected their perception of autonomy during training. In the full automation condition, the AI made the decisions, and participants simply had to implement them, i.e., assemble or discard the snowshoe, with little leeway in terms of decision-making. Participants may have felt that the AI, rather than themselves, was in control of the training, negatively affecting their perception of autonomy. In the partial automation condition, participants monitored the AI's decisions and were free to accept or reject them. While the AI did most of the repetitive work (error detection), participants were left with a supervisory role, which is generally characterized as more meaningful and gratifying and may have positively affected their perception of autonomy.

Regarding participants’ perception of competence during training, we observed no differences between the three conditions. When looking at the mean values for each condition, we see that all values are above 4.25 on a five-point Likert scale, indicating that participants felt a rather strong sense of self-efficacy or capability to successfully complete the training. A lack of differentiation between conditions could be due to the training not being difficult enough. A higher degree of difficulty could have put participants’ feeling of competence to the test, which could have resulted in significant variability between conditions. Nevertheless, equally high competence between conditions indicates that participants did not feel less supported or empowered by partially automated compared to fully automated AI.

6.2.2. Motivation

From most to least self-determined (internalized), four types of motivation were measured: intrinsic regulation, identified regulation, external regulation, and amotivation. Results indicate that identified regulation was higher when participants used partially automated AI than when they used fully automated AI; no differences were found for the other types of motivation. Identified regulation refers to performing a task because it is perceived as meaningful. It implies substantial internalization, meaning that the motive for completing the task has been integrated into the self and holds a certain means-to-end value for the participant. In context, participants in the partial automation condition attributed greater meaning to the completion of the training than those in the full automation condition. This may be explained by their higher perceived autonomy, since participants in this condition had decision authority over the AI. Identified motivation has been shown to be the strongest predictor of performance and organizational citizenship behaviors (e.g., continuous effort investment, commitment) (Van den Broeck et al., Citation2021). These results suggest that partial automation may lead to the best long-term outcomes for organizations. Overall, results support H2: the partial automation condition led to more self-determined motivation than full automation, and self-determined motivation is crucial for the success and sustainment of any training (Bell et al., Citation2017; Lazzara et al., Citation2021; Salas et al., Citation2012; Van der Klink & Streumer, Citation2002). As such, maximizing the level of automation may not be the ideal solution for successful skill acquisition.
As the prevalence of human-AI collaboration increases, it is more important than ever for practitioners to strive for a balanced level of automation and to design training that allows trainees to feel in control of their decisions and actions rather than controlled by an automated system.

6.3. H3: Training completed with an AI system that partially automates decision selection (compared to full automation) will lead to more engagement during training

6.3.1. Cognitive, emotional, and behavioral engagement

No significant differences were observed when looking at self-reported cognitive engagement, i.e., mental absorption during a task, or cognitive engagement measured psychophysiologically (LF/HF HRV ratio).

We observed no differences in emotional arousal, whether measured perceptually or psychophysiologically (respiration rate), nor in self-reported emotional valence. The mean valence values for each condition were between 52 and 61 (on a 0-100 scale), indicating a neutral valence, neither positive nor negative. The lack of significant differences may be due to the training being unable to elicit a substantial affective response from participants.

We observed no significant differences between conditions in self-reported behavioral engagement, i.e., the perceived investment of physical energy into the task. For the psychophysiological measure of behavioral engagement (standard deviation of the intensity of movement), partial automation led to the highest engagement and full automation to the lowest, meaning that participants were more behaviorally engaged during training in the partial automation condition.

Overall, results show some support for H3. The partial automation condition led to the best outcomes only in terms of (psychophysiologically measured) behavioral engagement, meaning that participants were most physically engaged in the training when decision selection was only partially automated. As posited by SDT, this is most likely due to stronger feelings of autonomy and more self-determined motivation (Deci et al., Citation2017), which are antecedents of task engagement. Task engagement, in turn, is expected to foster skill acquisition, which could have contributed to the better post-training performance in the partial automation condition compared with full automation. For all other engagement variables, we observed no significant differences between conditions, indicating that no condition led to better or worse outcomes than the others.

7. Conclusion

Using skill-based, cognitive, and affective criteria to evaluate training effectiveness, we found that partial automation led to the most positive outcomes. Workers who retained decision-selection authority during training experienced a stronger sense of decisional latitude (autonomy), more self-determined motivation, and greater behavioral engagement. This, in turn, allowed them to better develop their technical, methodological, and personal skills, so that they were better able to adapt to AI failure, as indicated by better error-detection performance. Within the context of worker training in Industry 5.0, these results imply that AI may be more valuable as a decision aid than as a decision selector during training.

From a practical perspective, those responsible for designing training should carefully consider the level of automation an AI system provides at the decision-selection stage of information processing. Care should be taken to nurture trainees' perceptions of autonomy, i.e., their perception of being in control of their behaviors and actions, for example by increasing workers' decisional power (Gagné et al., 2022). These considerations will positively impact both the worker and the organization. Workers will be more motivated and engaged during training and perceive it as more meaningful, improving their competency acquisition. Positive effects are also seen after training through improved performance, technology acceptance, and well-being (Bell et al., 2017; Deci et al., 2017; Molino et al., 2020). Organizations will benefit from improved productivity and reduced turnover, safety incidents, and absenteeism, among other outcomes (Lazzara et al., 2021; Mann & Harter, 2016; Schmid & Dowling, 2020). From a societal perspective, policymakers can use these insights to guide regulations and initiatives that support workforce development amid rapid technological advancement. By promoting training programs that balance AI capabilities with human skill and decision-making, policies can foster a workforce that is adaptable and competent in an increasingly automated world. Socially, this research contributes to a narrative that values the human element in the age of AI. By ensuring that technological advancements complement, rather than diminish, the importance of humans in manufacturing systems and operations, we can influence public attitudes towards technology in the workplace and enhance overall quality of life.

Our study has two main limitations. First, our dependent variables were measured only in the short term. Although long-term effects can be extrapolated through the lens of our theoretical framework (SDT), a longitudinal study would be ideal to test skill retention, motivation, and engagement over time. Second, our sample consisted of university students rather than actual factory workers. University students may differ in their familiarity with AI systems, may show varying motivation levels due to the experimental context, and have less experience in manufacturing settings. Factory workers, by contrast, may have more practical experience, pre-existing skills specific to manufacturing environments, and resistance to change. These differences could influence how each group interacts with AI systems, their learning curve, and how they perceive and adapt to AI-assisted training; skill acquisition, motivation, and engagement could therefore differ between groups. Accordingly, our results require further validation with actual factory workers to establish their applicability in real-world manufacturing settings. Nevertheless, we attempted to maximize ecological validity by selecting an experimental task identical to one of the tasks performed in the actual factory and by conducting the experiment within a learning factory setting.

The current research reinforces the need for a deeper understanding of the impact of new technology on manufacturing workers. More research applying self-determination theory to the training of workers using highly automated AI systems is needed to explore how to effectively support workers' motivational needs. Future research should also validate our findings longitudinally and with a sample of actual factory workers. Lastly, future research could experimentally evaluate other I4.0 technologies for worker training (e.g., augmented/virtual reality, digital twins) using the multi-method, multi-dimensional, human-centered approach used in this paper.

CRediT author statement

Mario Passalacqua: conceptualization, methodology, validation, investigation, writing - original draft, visualization, project administration. Robert Pellerin: conceptualization, methodology, resources, writing - review & editing, supervision, funding acquisition. Esma Yahia: conceptualization, methodology, software. Florian Magnani: conceptualization, methodology, investigation, writing - review & editing. Frédéric Rosin: conceptualization, methodology, resources. Laurent Joblot: conceptualization, methodology, resources, writing - review & editing. Pierre-Majorique Léger: conceptualization, methodology, resources, writing - review & editing, supervision, funding acquisition.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Mario Passalacqua

Mario Passalacqua is a Ph.D. candidate in industrial engineering at Polytechnique Montréal. He holds a master’s degree in user experience from HEC Montréal and a bachelor’s degree in psychology from McGill. His research examines the psychosocial component of human-AI interaction in the workplace, focusing on worker motivation, engagement, and trust.

Robert Pellerin

Robert Pellerin is a Full Professor in the Department of Mathematics and Industrial Engineering at Polytechnique Montréal. He holds degrees in engineering management (B.Eng.) and industrial engineering (Ph.D.). He currently holds the Jarislowsky/AtkinsRéalis Research Chair and is a member of the CIRRELT and Poly-Industry 4.0 research groups.

Esma Yahia

Esma Yahia is an associate professor at the École Nationale Supérieure des Arts et Métiers, Aix-en-Provence campus. She conducts her research activities at the Laboratory of Physical and Digital Systems Engineering (LISPEN). Her research activities contribute to human-centered agile systems engineering in the context of Industry 5.0.

Florian Magnani

Florian Magnani is an associate professor at École Centrale Méditerranée and conducts research in operations, industrial organization, and human resources at CERGAM (IAE Aix-Marseille). His current research focuses on the adoption of organizational innovations (Lean, Industry 4.0, Industry 5.0) and pays special attention to the human dimension of these innovations.

Frédéric Rosin

Frédéric Rosin is a professor at Arts et Métiers within the LISPEN laboratory. He holds a Ph.D. in industrial engineering. His research focuses on new production models combining the benefits of operational excellence and Industry 5.0. He is the founder of DynEO, a learning factory that integrates a factory lab.

Laurent Joblot

Laurent Joblot is a researcher at Arts et Métiers Cluny and holds a Ph.D. in Industrial Engineering. He specializes in training and teaching Lean principles through learning labs. He also explores the impact of Industry 4.0 technologies on human factors such as motivation, involvement, and decision-making in the manufacturing and construction sectors.

Pierre-Majorique Léger

Pierre-Majorique Léger is a full professor at HEC Montréal and holds the NSERC-Prompt Industrial Research Chair in User Experience. His research aims to improve the user experience (UX) of information technology by using the biophysiological data generated during an interaction to characterize the user's emotion and cognition.

References

- Akhlaq, A., & Ahmed, E. (2013). The effect of motivation on trust in the acceptance of internet banking in a low-income country. International Journal of Bank Marketing, 31(2), 115–125. https://doi.org/10.1108/02652321311298690

- Armstrong, M., & Taylor, S. (2020). Armstrong’s handbook of human resource management practice. Kogan Page Publishers.

- Avril, E. (2022). Providing different levels of accuracy about the reliability of automation to a human operator: Impact on human performance. Ergonomics, 66(2), 217–226. https://doi.org/10.1080/00140139.2022.2069870

- Avril, E., Cegarra, J., Wioland, L., & Navarro, J. (2022). Automation type and reliability impact on visual automation monitoring and human performance. International Journal of Human–Computer Interaction, 38(1), 64–77. https://doi.org/10.1080/10447318.2021.1925435

- Bahner, J. E., Hüper, A.-D., & Manzey, D. (2008). Misuse of automated decision aids: Complacency, automation bias and the impact of training experience. International Journal of Human-Computer Studies, 66(9), 688–699. https://doi.org/10.1016/j.ijhcs.2008.06.001

- Bainbridge, L. (1983). Ironies of automation. In Analysis, design and evaluation of man–machine systems (pp. 129–135). Elsevier.

- Bell, B. S., Tannenbaum, S. I., Ford, J. K., Noe, R. A., & Kraiger, K. (2017). 100 years of training and development research: What we know and where we should go. The Journal of Applied Psychology, 102(3), 305–323. https://doi.org/10.1037/apl0000142

- Betella, A., & Verschure, P. F. (2016). The affective slider: A digital self-assessment scale for the measurement of human emotions. PloS One, 11(2), e0148037. https://doi.org/10.1371/journal.pone.0148037

- Bindewald, J. M., Miller, M. E., & Peterson, G. L. (2020). Creating effective automation to maintain explicit user engagement. International Journal of Human-Computer Interaction, 36(4), 341–354. https://doi.org/10.1080/10447318.2019.1642618

- Bradley, M. M., & Lang, P. J. (2007). Emotion and motivation. In Handbook of psychophysiology (3rd ed., pp. 581–607). Cambridge University Press. https://doi.org/10.1017/CBO9780511546396.025

- Brynjolfsson, E., Mitchell, T., & Rock, D. (2018). What can machines learn, and what does it mean for occupations and the economy? AEA Papers and Proceedings, 108, 43–47. https://doi.org/10.1257/pandp.20181019

- Büth, L., Blume, S., Posselt, G., & Herrmann, C. (2018). Training concept for and with digitalization in learning factories: An energy efficiency training case. Procedia Manufacturing, 23, 171–176. https://doi.org/10.1016/j.promfg.2018.04.012

- Carretero, M. d P., García, S., Moreno, A., Alcain, N., & Elorza, I. (2021). Methodology to create virtual reality assisted training courses within the Industry 4.0 vision. Multimedia Tools and Applications, 80(19), 29699–29717. https://doi.org/10.1007/s11042-021-11195-2

- Casillo, M., Colace, F., Fabbri, L., Lombardi, M., Romano, A., & Santaniello, D. (2020). Chatbot in industry 4.0: An approach for training new employees [Paper presentation]. 2020 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE). https://doi.org/10.1109/TALE48869.2020.9368339

- Cazeri, G. T., de Santa-Eulália, L. A., Serafim, M. P., & Anholon, R. (2022). Training for Industry 4.0: A systematic literature review and directions for future research. Brazilian Journal of Operations & Production Management, 19(3), 1–19. https://doi.org/10.14488/BJOPM.2022.007

- Cherif, N., Mezghani, N., Gaudreault, N., Ouakrim, Y., Mouzoune, I., & Boulay, P. (2018). Physiological data validation of the hexoskin smart textile. In Proceedings of the 11th international joint conference on biomedical engineering systems and technologies (BIOSTEC 2018) - BIODEVICES; ISBN 978-989-758-277-6; ISSN 2184-4305 (pp. 150–156). SciTePress.

- Cohen, J. (1992). Statistical power analysis. Current Directions in Psychological Science, 1(3), 98–101. https://doi.org/10.1111/1467-8721.ep10768783

- Da Silva, L., Soltovski, R., Pontes, J., Treinta, F., Leitão, P., Mosconi, E., De Resende, L., & Yoshino, R. (2022). Human resources management 4.0: Literature review and trends. Computers & Industrial Engineering, 168, 108111. https://doi.org/10.1016/j.cie.2022.108111

- Danjou, C., Rivest, L., & Pellerin, R. (2017). Industrie 4.0: Des pistes pour aborder l’ère du numérique et de la connectivité. CEFRIO, p. 27. https://espace2.etsmtl.ca/id/eprint/14934/1/le-passage-au-num%C3%A9rique.pdf

- Dattel, A. R., Babin, A. K., & Wang, H. (2023). Human factors of flight training and simulation. In Human Factors in Aviation and Aerospace (pp. 217–255). Elsevier.

- Deci, E. L., Olafsen, A. H., & Ryan, R. M. (2017). Self-determination theory in work organizations: The state of a science. Annual Review of Organizational Psychology and Organizational Behavior, 4(1), 19–43. https://doi.org/10.1146/annurev-orgpsych-032516-113108

- Deci, E. L., & Ryan, R. M. (1985). The general causality orientations scale: Self-determination in personality. Journal of Research in Personality, 19(2), 109–134. https://doi.org/10.1016/0092-6566(85)90023-6

- Deci, E. L., & Ryan, R. M. (2008). Self-determination theory: A macrotheory of human motivation, development, and health. Canadian Psychology/Psychologie Canadienne, 49(3), 182–185. https://doi.org/10.1037/a0012801

- Dhalmahapatra, K., Maiti, J., & Krishna, O. (2021). Assessment of virtual reality-based safety training simulator for electric overhead crane operations. Safety Science, 139, 105241. https://doi.org/10.1016/j.ssci.2021.105241

- Enang, E., Bashiri, M., & Jarvis, D. (2023). Exploring the transition from techno centric industry 4.0 towards value centric industry 5.0: A systematic literature review. International Journal of Production Research, 61(22), 7866–7902. https://doi.org/10.1080/00207543.2023.2221344

- Endsley, M. R., & Kiris, E. O. (1995). The out-of-the-loop performance problem and level of control in automation. Human Factors: The Journal of the Human Factors and Ergonomics Society, 37(2), 381–394. https://doi.org/10.1518/001872095779064555

- European Commission, Directorate-General for Research and Innovation, Breque, M., De Nul, L., & Petridis, A. (2021). Industry 5.0 – Towards a sustainable, human-centric and resilient European industry. Publications Office of the European Union. https://op.europa.eu/en/publication-detail/-/publication/468a892a-5097-11eb-b59f-01aa75ed71a1/language-en