Abstract

The recent pandemic and the rapid increase in the number of media platforms have significantly influenced the media-watching behaviors of individuals. Specifically, individuals now want a continuous media-watching experience (MWE) for long periods in different positions, while also engaging in various other activities. Considering these trends, a new concept for display robots, equipped with mobility and screen adjustment functions, is required to improve the MWE. Hence, this study focused on introducing a new concept for display robots and proposed interaction guidelines, derived through expert interviews, for designing such robots. In a case study, the Wizard of Oz method was used with actual users to confirm the user value of the display robot and verify the proposed guidelines. The results show that display robots can improve the MWE by supporting emerging media-watching behaviors. Furthermore, strategies for facilitating the implementation of the suggested principles are elucidated. These findings validate the feasibility of creating a display robot for enhancing the MWE of users.

1. Introduction

In today’s interconnected world, daily life and media have become intertwined, impacting our lives from various perspectives. In particular, the COVID-19 era has witnessed a drastic increase in the amount of time dedicated by individuals to media consumption, irrespective of age, gender, profession, etc. (Chauhan & Shah, 2020). With the media becoming more meaningful from both social and personal perspectives, diverse technologies and devices have emerged to enrich the media-watching experience (MWE).

Because displays are a pivotal means for interacting with media, a number of advancements have been made to enhance the MWE through novel display concepts. For instance, the surge in multimedia distribution platforms such as YouTube, Netflix, Twitch, and Facebook Live has amplified the use of personal display devices, particularly smartphones, over traditional TV screens (Dao et al., 2022). Additionally, as media penetrate human life more deeply, maintaining uninterrupted watching experiences has become paramount (Barwise et al., 2020). Instead of confining media consumption to a fixed location, individuals seek seamless transitions with large screens while moving, such as from the living room to the bedroom or kitchen. The evolving patterns of media consumption underscore the necessity for an innovative display. In particular, there is increasing demand for displays capable of adapting their positions based on user movements, thereby enriching the overall MWE. Aligned with recent user requirements, displays are shifting from static devices to robots that can adjust their direction or follow user movements.

Various novel display concepts, such as “everywhere display,” “immersive display,” and “ubiquitous display,” have been suggested (Choi et al., 2013). In the same context, Alexander et al. introduced the concept of a “tilt display,” which integrates multiaxis tilting and vertical actuation for a comfortable MWE (Alexander et al., 2012). Similarly, Choi and Kim proposed a projector robot that combines a handheld projector, mobile robot, RGB-D sensor, and pan/tilt device (Choi et al., 2013). This interactive display robot moves freely indoors and projects content onto any surface. Therefore, we predict that the evolution of displays will culminate in the emergence of display robots in the near future.

In this paper, a display robot is defined as a robot equipped with a screen for playing media that makes independent decisions based on predetermined rules. The robot navigates within the user's living spaces, allowing users to access and play media content wherever they are. Thus, display robots can help people to seamlessly and continuously experience media. They also fulfill the desire for large screens, which smartphones and tablets cannot satisfy. This novel concept necessitates the formulation of interaction-design guidelines to facilitate the effective development of such robots. Despite the growing prominence of these robots, a significant gap exists in the design guidelines for interactions with innovative devices (Choi et al., 2013). Consequently, this study focused on the formulation of tailored design guidelines for intelligent display robots. We developed a new concept for display robots and verified the effectiveness of the guidelines through a practical case study.

The main contributions of this study are as follows:

Functions that display robots should possess to enhance the evolving MWE were proposed, and the user value of these functions was verified.

Together with human–robot interaction (HRI) experts, the principles required when designing a display robot to provide a great MWE were identified, and interaction-design guidelines were derived accordingly.

Through a case study of actual users, the application of the proposed guidelines to display robots was identified.

The subsequent sections of this paper are organized as follows: Section 2 presents an overview of relevant studies on personalized and innovative display robots, followed by a literature review of current state-of-the-art interaction-design guidelines and a discussion of their limitations. Section 3 outlines the development of interaction-design guidelines for display robots based on expert perspectives. To this end, we conducted expert interviews and generated novel interaction-design guidelines for display robots based on the insights obtained. In Section 4, we discuss the creation of a high-fidelity prototype and the execution of a case study to confirm the efficacy of the developed design guidelines and verify the user value of the proposed display robot. Section 5 discusses the results obtained in this study, and Section 6 highlights the conclusions drawn from the major findings of this study.

2. Related works

In this section, we first examine previous display robots and their associated interaction-design principles. Following this, we delve into the limitations identified in prior research.

2.1. Background on the display robot

Novel display concepts have emerged in response to evolving media consumption behavior. Conventional static displays, which require users to adapt their position for optimal media viewing, hinder the attainment of seamless and organic MWEs. To address this issue, recent studies have focused on facilitating uninterrupted media viewing while promoting a natural posture.

Several relevant studies have been conducted on novel types of display robots (Table 1). First, Choi et al. (2013) presented the concept of a projection robot. It combines handheld projectors with mobile robots, enabling the user to manipulate the display’s projection onto various surfaces within a space. This concept provides mobility and user control, but it may require more user intervention in terms of navigation and setup. Additionally, the mobile projection device must be fixed separately by the user, which limits its ability to dramatically improve the media viewing experience. Alexander et al. (2012) suggested tilting displays. The concept of a tilting display is characterized by the ability to adjust the display’s orientation in multiple axes and provide vertical actuation. This allows viewers to access content from various angles. While tilting displays offer versatility in terms of viewing angles, they may still be confined to a fixed location. Another related concept involves using a rotating mirror to project displays onto any wall or surface within a space (Pinhanez, 2001). This approach offers flexibility in display placement, making it adaptable to different room configurations. However, it may require precise calibration and can be limited by line-of-sight constraints. Finally, using drones for display projection actively introduces mobility (Scheible et al., 2013). Drones can provide flexible display projection locations and dynamic positioning. Drone-type display robots can project onto various surfaces, but their indoor use is limited.

Table 1. Summary of previous display robots.

2.2. Interaction-design principles for display robot

As robots continue to be integrated into human lives, it has become important to emphasize HRIs so that robots can deliver their value (Lindblom et al., 2020). However, a considerable number of existing studies have adopted a robot-centric approach, focusing heavily on technological issues and solutions, and often neglecting the human perspective (Coronado et al., 2022). Regardless of their type and usage context, robots can fundamentally aid humans. Hence, it is imperative to involve user viewpoints across all stages of developing a new robot concept to enhance users’ overall satisfaction. Numerous studies have aimed to integrate human perspectives into HRIs. To effectively integrate user insights during the early design phase, numerous design considerations concerning HRIs for intelligent devices have emerged (Amershi et al., 2019; Frijns & Schmidbauer, 2021; Park et al., 2020).

We conducted a literature review to understand the design considerations of existing studies related to display robots. Academic papers were collected from Google Scholar using keywords such as “user experience of robot,” “HRI design principle,” “design guidelines for movable display,” and “automation design consideration.” The papers were published in peer-reviewed journals and conference proceedings. Studies emphasizing non-user perspectives, such as algorithms for enhancing robot performance and guidelines for determining machine autonomy levels, were excluded to maintain a user-centric perspective. Following the extraction of design principles from the selected papers, those with congruent meanings were categorized (Table 2). Table 2 presents the design principles suggested in existing studies, which are categorized as Reaction, Modality, Personalization, and Movement.

Table 2. Design principles derived from existing studies.

The Reaction category refers to the robot’s response after perceiving the user’s inputs or actions. Interactive robots are engineered to react to human behavior or commands. In previous studies, two critical aspects related to robot reactions have emerged. First, robots must be designed to respond promptly to human input (Amershi et al., 2019). The speed of a robot’s reaction plays a critical role in effective HRI. Responses that are excessively delayed or rapid, and fail to align with the situation, can undermine user confidence and disrupt effective communication. Second, robot responses to humans should be foreseeable and should not require human reinterpretation (Cooney et al., 2011). This approach is particularly crucial in the context of potential risks.

The Modality category encapsulates the principles related to the communication channel employed during the interaction with the robot. Typically, HRIs differ from human-human interactions (Novanda et al., 2016). With the proliferation of devices such as smartphones and tablets, people have become accustomed to diverse interaction modalities such as touch, voice, and gestures. Consequently, designers of new robots must meticulously consider the interaction modality that best aligns with the robot’s form and purpose (Frijns & Schmidbauer, 2021). This requires comprehending the usage context and carefully observing the target users. Some studies advocate enabling users to freely select their preferred interaction method among multiple modalities, depending on the situation. Although multimodal approaches offer familiarity, they also pose challenges in system development and finding the optimal combination (Argall & Billard, 2010). Hence, when designing a robot that requires entirely new interaction paradigms, modality must be addressed from the outset.

The Personalization category indicates the importance of a display robot’s adaptability to the needs and preferences of individual users. To ensure robot–user adaptability, the correlation between customization factors and user individuality must be explored (Syrdal et al., 2007). Personalization can increase user satisfaction with robot utilization, particularly in scenarios characterized by pronounced individual variance in robot usage behavior (Lee et al., 2012). Individual differences relevant to display robots may include user height, media viewing habits (positioning), and primary viewing spaces (Block et al., 2023). In addition, the ability to learn from user behavior is important (Amershi et al., 2019).

The Movement category includes guidelines for the navigation of a display robot. Considering the user’s interpretation of a robot’s movements is necessary when designing a robot (Hoffman & Ju, 2014). The robot’s motion should neither appear intimidating nor awkward to the user while achieving its intended purpose (Samarakoon et al., 2023). Given that the main objective of display robots is to present screens to users, their movements predominantly involve moving around the users. Consequently, an inadequately designed motion may lead to unintended collisions with users or negatively impact the MWE by placing the robot too close to or too far from the user (Samarakoon et al., 2023). In addition, the robot’s range of motion should be within human expectations (Bartoli et al., 2014).

2.3. Limitations of existing studies

Research has suggested that display robots with new functionalities can enhance the MWE. However, there are several challenges in this domain. First, current studies have not fully incorporated the rapid technological advancements of recent years. Artificial intelligence has progressed significantly over the past decade, leading to notable enhancements in the learning, predictive capabilities, and performance of robots (Sghir et al., 2022). However, most related research was conducted approximately 10 years ago with a forward-looking perspective on technology. With regard to real-world technologies, there have been developments in recent years, including the introduction of large-screen monitors equipped with wheels, which have reached the market without academic investigation (Faulkner, 2023). Consequently, although the new display robot is likely to be commercially available in the near future, these studies are far from the commercialization stage considering the prevalent rapid technological advances.

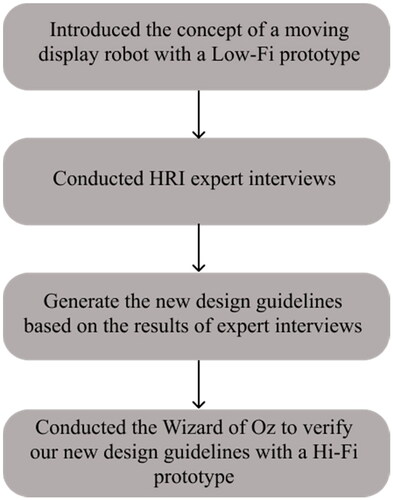

Second, there is a lack of related studies on display robots from the user’s perspective compared to the general robot field represented by the term HRI. In the development and design of innovative devices, integrating user preferences and requirements through a multidisciplinary approach is imperative (Kujala et al., 2005). One effective strategy involves the formulation of interaction-design guidelines. These guidelines facilitate the design and evaluation of display robots that are understandable, trustworthy, and acceptable to the public (Amershi et al., 2019). Constructing guidelines to enhance the MWE by fostering seamless interactions between users and display robots during the early stages of product development requires input from both experts and users. Experts can effectively contribute to the principles required at this stage of a product. However, a gap remains between expert and actual user opinions, which can be addressed through user-based investigations. In addition, the existing studies do not adequately reflect users’ perspectives. Alexander et al. (2012) did not analyze the ways in which an individual uses the innovative display concept in real-world applications. Similarly, Choi et al. (2013) and Pinhanez (2001) did not conduct a user study, resulting in an incomplete examination of the user experience. Although numerous previous studies have suggested rules for enhancing HRIs, such rules do not apply identically to all similar products owing to variations in context. Thus, the significance and application of each guideline differ according to the device type. Consequently, interaction-design guidelines tailored to the proposed display robot need to be presented, supplemented by real-world usage scenarios involving general users. Therefore, we introduce innovative interaction-design guidelines for display robots. These guidelines were formulated based on expert interviews and subsequently verified through a case study involving a high-fidelity prototype.

3. Method

To enhance the MWE and address the identified research gap, we generally followed the method outlined by Amershi et al. (2019). First, we developed a low-fidelity prototype of a moving display robot to introduce our novel concept. At this stage, we incorporated three core functions: screen adjustment, user following, and multimodal integration. Second, we conducted expert interviews with 12 HRI specialists, assigning them interactive scenarios. Third, their responses and feedback were analyzed to formulate the new design guidelines for moving display robots. Finally, leveraging these guidelines, we developed a high-fidelity prototype and conducted a case study involving 16 participants to verify the efficacy of our design guidelines.

3.1. Concepts of the display robot

In this study, we introduce a novel display robot concept based on existing research and evolving media-watching behaviors. Fundamental considerations of display robots include screen adjustment, user following, and multimodality integration.

First, we implemented screen adjustment functionality to facilitate a comfortable MWE. Maintaining a fixed viewing posture for a prolonged period strains the musculoskeletal system. With the proliferation of portable displays, users can now alter their viewing postures. However, manual screen adjustments can be cumbersome. An automated robot capable of adapting screen settings based on environmental conditions, user preferences, and content types can significantly enhance the convenience of media consumption.

Second, our display robot features a user-following function aimed at delivering uninterrupted MWEs. Contemporary lifestyles increasingly demand flexibility in navigating living spaces without disrupting media consumption. Seamlessly transitioning between different rooms while maintaining the progress in the movies or TV shows being watched enhances the overall convenience. To achieve this seamless transition, users often utilize multiple devices for media consumption. A seamless experience empowers users to switch between devices effortlessly, eliminates interruptions, and preserves a consistent viewing journey. Consequently, manual synchronization of progress across devices becomes unnecessary, saving time and allowing viewers to immerse themselves in the content without distraction.

Finally, we embraced diverse input modalities for the novel display robot. When individuals interact with a robot, several input methods, such as voice, touch, and gestures, are available. The motivation behind introducing a new control modality for this innovative display robot concept stems from the aspiration to optimize user interactions, fully harness the robot’s capabilities, and deliver an exceptional and unique user experience congruent with its distinct features and objectives. The novel display robot concept may encompass unique capabilities that traditional control modalities struggle to fully exploit. Furthermore, this concept may prioritize a streamlined and intuitive interaction model, necessitating a distinct modality aligned with the new functionalities. Introducing a novel modality enables users to extract the maximum value from a robot’s capabilities.

3.2. In-depth interview with HRI experts

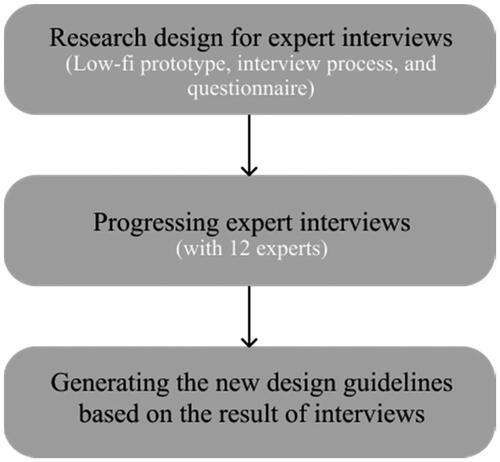

Expert interviews were conducted as the first step in deriving interaction-design guidelines for display robots. Expert interviews are a valuable qualitative research method that holds significance in various domains, including HRIs and design research (Amershi et al., 2019). In the context of a new display-robot concept, HRI experts can provide informed insights, identify potential challenges, and offer recommendations based on their understanding of user needs, technological possibilities, and design considerations. Therefore, it is crucial to involve experts early in the design process when dealing with novel concepts. Their insights can help to refine the initial concept, making it more feasible and effective. In this study, 12 HRI experts (six males and six females) participated in the expert interviews. All the experts recruited were robot developers and HRI designers. Their average age was 40.5 years (range: 30–51 years). On average, they had 14 years of professional experience. The study received approval from the company, as it solely involved asking participants about guidelines without posing any ethical hazards. Before proceeding with the study, we explained its purpose and obtained consent forms from all participants. We designed various types of interaction tasks for use in the test, considering that the new guidelines might be applied differently. In the expert interviews, we intended the participants to gain experience using the moving display robot. Thus, we employed the Cognitive Walkthrough method to assign tasks to the experts using the moving robot and extract elements for the guidelines. Our objective was to extract insights at each stage of interaction. In this way, the experts could fully understand the behavior of various types of novel robots and gain in-depth insight into the effects of a robot’s behavior on users.

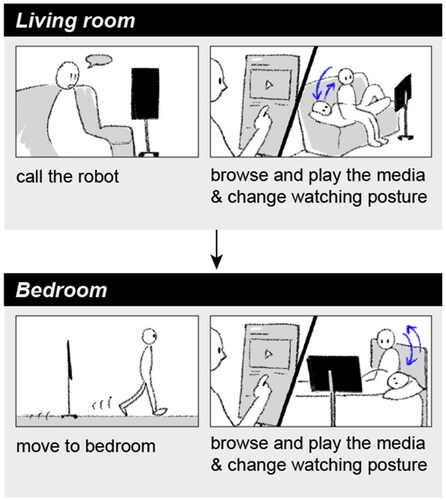

Before the expert interviews, the researchers discussed and described several situations in which the display robot would be useful, so that the participants could experience it effectively. The derived situations were combined into one continuous scenario, summarized as follows. First, the participants went to the sofa in the living room and called the display robot. When the display robot approached, they searched for media and started watching it. While watching the media, participants could freely change their posture on the sofa anytime they wanted. They could lie down or turn their bodies in different directions. After watching the media for a period of time in an altered position, the participants were asked to move to a bedroom. The same activities performed in the living room were repeated in the bedroom. After freely positioning themselves on the bed, they changed their posture while exploring and enjoying the media.

While experiencing the scenario, the participants were free to engage in behaviors beyond those prescribed. For example, experts were allowed to experiment with any interaction method they wanted across scenarios. In addition, the participants could adjust the preset position of the display robot or the angle and distance of the screen. By promoting the autonomous behavior of experts, we were able to test various design considerations for the new concept of display robots and present insights from a wide perspective.

A space equipped with furniture, including a bed and sofa, was configured for the expert interviews. The space provided an environment similar to a real home, including a bedroom, living room, kitchen, and bathroom. In addition, a display robot was prepared to provide a realistic experience. Mobility was ensured by mounting wheels on the lower part of the robot’s body, which was connected to a 27-inch display. The display could be continuously adjusted in height from 1 m to 1.4 m to accommodate the various media-watching postures of the participants. In addition, the display could rotate by 180°, swivel by 130°, and tilt by 50°, allowing screen adjustments over a wide range. The media were played by mirroring a separate media device on the display robot. YouTube, which is well known to most people, was used as the media platform so that feedback would concentrate solely on the display robot itself, excluding any usability concerns about the platform. So that participants could imagine and experience the functions of the display robot freely, rather than being limited to predefined and implemented functions, a human operator performed all the movements of the robot.
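The adjustment ranges reported above can be expressed as a simple limit check. The following is only a minimal sketch: the study used a human operator rather than software control, and the names `ScreenPose` and `clamp_pose` are hypothetical. It also assumes that each angular range is centered on a neutral pose of 0°, which the text does not state.

```python
from dataclasses import dataclass

# Height range reported for the prototype display, in meters.
HEIGHT_RANGE_M = (1.0, 1.4)
# Assumption (not stated in the text): each angular range is symmetric
# around a neutral pose of 0 degrees.
ROTATE_RANGE_DEG = (-90.0, 90.0)  # 180 degrees in total
SWIVEL_RANGE_DEG = (-65.0, 65.0)  # 130 degrees in total
TILT_RANGE_DEG = (-25.0, 25.0)    # 50 degrees in total


@dataclass(frozen=True)
class ScreenPose:
    """Hypothetical description of a requested screen pose."""
    height_m: float
    rotate_deg: float
    swivel_deg: float
    tilt_deg: float


def clamp(value: float, bounds: tuple) -> float:
    """Limit a value to the closed interval given by bounds."""
    low, high = bounds
    return max(low, min(high, value))


def clamp_pose(requested: ScreenPose) -> ScreenPose:
    """Return the closest pose that stays within the reported ranges."""
    return ScreenPose(
        height_m=clamp(requested.height_m, HEIGHT_RANGE_M),
        rotate_deg=clamp(requested.rotate_deg, ROTATE_RANGE_DEG),
        swivel_deg=clamp(requested.swivel_deg, SWIVEL_RANGE_DEG),
        tilt_deg=clamp(requested.tilt_deg, TILT_RANGE_DEG),
    )
```

For example, a request for a height of 1.6 m would be limited to 1.4 m, while a tilt of 10° would pass through unchanged.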

Three researchers participated as the primary operators in each interview. One acted as a moderator, explained the experiment to the participants, facilitated the sequential execution of the scenario, and conducted an interview after all the scenario experiences were completed. Another researcher moved the display robot so that it followed the participant as the participant moved. The last researcher controlled the media content through the device mirrored on the display robot when the participant searched for media or wanted to control it. Interviews were conducted after participants understood the concept of a display robot. The interviews consisted of three sessions: mobility, screen adjustment, and input modality. Without a set interview format, the experts freely shared their opinions with researchers regarding their feelings, design considerations for each function, and concerns. The entire experimental process took 60–90 min for each participant and was video-recorded with their consent.

3.3. New interaction-design guidelines for the display robot

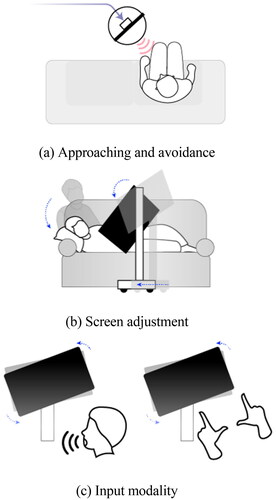

In the expert interviews, we collected observations of the participants’ behavior together with their opinions. After the expert interviews, we held a session to understand and organize the experts’ opinions. Subsequently, we formulated interaction-design guidelines based on the outcomes of the expert interviews, which spanned three distinct usage categories: movement, screen adjustment, and input modality. Eight practical guidelines were derived: three each in the movement and screen adjustment categories and two in the input modality category (Table 3). The remainder of this section explains the rationale behind the guidelines based on expert opinions and existing research.

Table 3. New interaction design guidelines for the display robot.

3.3.1. Movement

In relation to mobility, experts consistently emphasized two key functions that display robots must perform effectively: relocating to positions conducive to optimal media viewing and avoiding interference with users. These operations play a crucial role in providing seamless and immersive media viewing experiences. Several factors were considered in the implementation of these functionalities. The initial focus was on the need for quick detection and response to user movement. The experts anticipated that these mobility-related functions would be automated rather than initiated by user commands. Given the human inclination toward real-time interactions, the display robot’s swift and adept responsiveness mirrors the natural rhythm of communication, fostering heightened engagement. This cultivates a positive perception of the display robot and ultimately boosts user satisfaction. Moreover, timely responses reduce ambiguity in HRIs, which bolsters user confidence through the immediate acknowledgment of their actions.

However, rapid responses should not be equated to fast movements. Research on HRIs underscores the importance of user comfort and safety in cultivating positive interactions. Excessive speed can startle users or evoke feelings of discomfort, running counter to the overarching goal of enhancing the MWE (Olatunji et al., 2020). Such rapid motion may not align with the users’ natural cognitive pace, introducing cognitive strain and creating a sense of disconnected interactions (Fong et al., 2003). Furthermore, quick robot movements may inadvertently convey the impression of unpredictability or a lack of control, thereby eroding user trust (Aéraïz-Bekkis et al., 2020). Consequently, experts stress the need to calibrate the display robot’s speed to match users’ comfort levels, ensuring a stable experience.
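One way to illustrate the distinction between responding quickly and moving fast is a rate-limited speed command: the robot begins to react on the first control tick, but its speed ramps up gradually and never exceeds a comfort limit. The sketch below is illustrative only. The function name `next_speed` and every numeric value in it are hypothetical placeholders, not values from the study; suitable limits would have to be determined with users.

```python
def next_speed(current_speed: float,
               distance_to_target: float,
               max_speed: float = 0.5,       # hypothetical comfort limit, m/s
               max_accel: float = 0.25,      # hypothetical ramp limit, m/s^2
               dt: float = 0.1,              # hypothetical control period, s
               stop_tolerance: float = 0.05  # hypothetical arrival margin, m
               ) -> float:
    """Return the speed command for the next control tick.

    The command changes on every tick (prompt response), but the change
    per tick is bounded and the speed is capped (gentle movement).
    """
    # Desired speed: cruise at the comfort limit until the target is reached.
    desired = max_speed if distance_to_target > stop_tolerance else 0.0
    # Bound the change in speed per tick so motion ramps instead of jumping.
    max_step = max_accel * dt
    change = max(-max_step, min(max_step, desired - current_speed))
    return current_speed + change
```

Under these placeholder values, the robot starts moving within one control period of detecting that the user has moved, yet takes about two seconds to reach its capped cruising speed.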

Finally, it is crucial for the display robot not to obstruct human pathways under any circumstances. Numerous studies on HRIs have emphasized the importance of robot navigation that prioritizes the safety of both humans and the robot itself (Dautenhahn et al., 2005). Favorable user reception and positive interactions with robots often hinge on respecting the users’ personal space and movement preferences (Goetz et al., 2003; Nomura et al., 2006). This includes physical barriers as well as psychological comfort. As a result, experts underscore the necessity of thoroughly examining user movement patterns and determining which robot movements users perceive as obstructing their pathways before designing a display robot.

3.3.2. Screen adjustments

The experts expected the screen adjustment function to be a useful feature for users’ media viewing experiences. Nevertheless, certain concerns were raised during the evaluation. Primarily, the experts recognized the inherent diversity in individuals’ preferences for screen tilt. Notably, even in identical contexts and postures for media consumption, users’ physical attributes such as height and visual acuity influence optimal screen orientation. Additionally, when accounting for variables such as user height, eye level, posture, and habitual viewing tendencies, it is anticipated that the angle and tilt of the screen will vary based on individual viewing behaviors. Consequently, the experts advocated implementing a screen adjustment function with learning capabilities in mind. The outcomes of screen adjustment can be substantially improved by assimilating the aforementioned insights into user viewing patterns. This learning process could involve consistent observation of users’ media consumption behaviors or eliciting direct feedback during the onboarding phase.

Furthermore, experts underscored a crucial consideration regarding screen adjustment: the final authority for adjustment should always remain with humans. Although this principle has mainly been emphasized in contexts fraught with risks, such as autonomous driving, it also offers valuable insights into the design of display robots. Despite impressive advancements in automation technologies, human behavior encompasses intricacies that may deviate from machine predictions (Klumpp et al., 2019). For instance, even in identical postures, various circumstances, such as the presence or absence of others, may necessitate different viewing angles. Therefore, while the display robot operates based on user recognition outcomes, users should always be able to adjust the settings manually, granting them autonomy to fine-tune configurations at their discretion. Notably, the contextual environment profoundly influences the requirements for screen adjustment, and the anticipated subtle variations in adjustment angles among individuals underscore the frequent need for manual interventions.

These expert perspectives provide a comprehensive approach that integrates user behavior learning, context sensitivity, and user access to manual control for the screen adjustment. This approach aligns with the intricacies of human behavior and preferences while augmenting the overall effectiveness of the display robot’s screen adjustment capability.
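The combination of learning from user behavior and retaining manual control can be sketched as follows. This is a minimal illustration under stated assumptions, not the method used in the study: the class `TiltPreferences`, the function `resolve_tilt`, the per-user and per-posture keying, and the simple update rule that moves the stored value partway toward each manual correction are all hypothetical.

```python
class TiltPreferences:
    """Hypothetical store of learned tilt angles per (user, posture)."""

    def __init__(self, default_tilt_deg: float = 0.0, learning_rate: float = 0.5):
        self.default_tilt_deg = default_tilt_deg
        self.learning_rate = learning_rate
        self._learned = {}

    def suggest(self, user: str, posture: str) -> float:
        """Return the learned tilt, or the default if nothing is learned yet."""
        return self._learned.get((user, posture), self.default_tilt_deg)

    def record_manual_adjustment(self, user: str, posture: str,
                                 chosen_tilt_deg: float) -> None:
        """Move the stored tilt partway toward the user's manual choice."""
        previous = self.suggest(user, posture)
        updated = previous + self.learning_rate * (chosen_tilt_deg - previous)
        self._learned[(user, posture)] = updated


def resolve_tilt(prefs: TiltPreferences, user: str, posture: str,
                 manual_tilt_deg=None) -> float:
    """Pick the tilt to apply; a manual setting always takes precedence."""
    if manual_tilt_deg is not None:
        # The user has the final say, and the correction is also learned from.
        prefs.record_manual_adjustment(user, posture, manual_tilt_deg)
        return manual_tilt_deg
    return prefs.suggest(user, posture)
```

In this sketch, a manual correction is applied exactly as the user set it, and only later automatic suggestions for the same user and posture drift toward that choice.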

3.3.3. Input modality

In the final round of interviews, valuable insights pertaining to the interactive control aspects of display robots were gathered. When individuals encounter novel devices, their natural inclination is to gravitate toward the most suitable mode of interaction (Clemmensen et al., 2017). Based on this phenomenon, experts have emphasized that display robots should offer interaction methods that optimally align with various scenarios. Given the role of a display robot as an intermediary between the mobility of portable displays and the conventional functionality of stationary screens, embracing diverse input modalities could facilitate comfortable interactions across diverse contexts. This perspective is grounded in the understanding that each interaction method has distinct advantages and limitations. Experts commonly mentioned three input modalities as highly suitable: voice, touch, and air gestures.

Voice commands are useful in situations where users maintain a standard viewing distance, eliminating the need for direct physical interaction with the display. Historically, numerous efforts have been invested in integrating voice interaction into human–robot communication (Bures, 2012). However, experts have highlighted the inherent challenges that hinder the widespread adoption of voice interactions. Although natural voice interaction allows seamless usage without a steep learning curve, several voice-based interactions require users to undergo separate training, which acts as a barrier to adoption (Gao et al., 2018). Additionally, concerns regarding privacy intrusion present a significant challenge, potentially compromising user satisfaction (Kim, 2008). Addressing these concerns in the design of voice-controlled display robots is imperative for fostering user acceptance.

In contrast, touch, the most intuitive modality following the proliferation of smartphones, has emerged as a straightforward means of engaging with portable displays (Lee & Hui, 2018). Experts have noted that a display robot capable of recognizing specific users and tailoring experiences could be perceived as similar to personal devices, such as tablets or smartphones, rather than traditional, distance-oriented displays. However, physical proximity constraints have also been introduced in the context of display robots. For touch interaction to be effective, the display robot must adeptly approach users when they signal their intent to engage through physical contact. Experts have mentioned that to implement touch input in display robots, designers need to properly identify and configure the action cues the user regards as an appropriate starting point for touch interactions.

Air-gesture interaction, which offers the advantage of remote control through gestures similar to familiar touch motions (Yee, 2009), was also considered. This approach eliminates the need for location changes for both the user and robot, mitigates voice interference, and enables user interaction without disrupting the viewing behavior. Nevertheless, experts anticipate that gesture-based control may not be preferred by users for display robots. Persistent issues related to gesture learnability, cultural divergence in gesture interpretation, and challenges in device gesture recognition have contributed to this projection. Furthermore, alternative control methods, such as remote controllers, have also been explored; however, concerns have been raised regarding their potential incongruity with the mobility-centric concept of display robots.

Experts have highlighted the significance of gaining a nuanced understanding of the advantages and drawbacks associated with each modality when applied to display robots. This understanding serves as a foundation for offering users a range of interaction options in subsequent studies. The inherent limitations of each interaction method can be addressed systematically through a collection of preferences and appropriateness assessments in various situations.

4. Case study

The proposed interaction guidelines were the result of a comprehensive review of the literature and expert perspectives. In the initial stages of conceptualizing products and services, tapping into expert knowledge offers a pragmatic means of comprehensively understanding their functionality and potential user impact. However, expert viewpoints may be limited in fully encapsulating real-world user experiences (Clemmensen, 2011). Consequently, numerous studies on nascent technologies have addressed this challenge by amalgamating authentic user feedback and expert insights (Jang et al., 2020; Park et al., 2020; Park & Kim, 2024; Ringgenberg et al., 2022). This study therefore sought not only to verify the guidelines through genuine user input but also to confirm the inherent value of the proposed display robot. The Wizard of Oz (WOZ) method was a suitable tool for this purpose. The case study’s outcomes establish a strong foundation for demonstrating both the inherent value of the display robot and the effectiveness of the simplified interaction guidelines.

4.1. Prototype

According to the experts, the hardware specifications of the robot used in the interviews were suitable for implementing the proposed display robot concept. In the case study, we therefore used a high-fidelity (Hi-Fi) prototype with hardware specifications similar to those of that robot. Unlike in the expert interviews, in which the person controlling the movement of the robot was revealed, the Hi-Fi prototype was controlled remotely in the case study, which allowed users to believe that the display robot was operating on its own. Several rules related to the operation of the display robot were established by referring to expert opinions, and the initial guidelines were created based on them (see ).

With respect to mobility, our design guidelines stipulate that ‘The movement of the display robot should not be seen as dangerous to the user’, ‘The display robot does not respond to all movements of the user and must change its position after recognizing the user’s intention’, and ‘The display robot must move to avoid colliding with the user, as well as positional movement for comfortable viewing.’ Hence, it was assumed that the display robot could prevent collisions by recognizing its distance from the user and automatically maintaining a certain distance. Therefore, in all situations where the user did not command an approach, the robot always moved while maintaining a certain distance. In addition, when a user who was watching media showed an intention to move, such as getting up, the robot moved sideways to avoid obstructing the user’s path. The speed of the display robot was almost the same as that of the user; therefore, the user did not feel threatened by fast movement of the display robot.

With respect to screen adjustment, our design guidelines stipulate that ‘It is more important to adjust the screen stably than to adjust the screen in real time’, ‘The display robot should incorporate a manual mode that allows users to make precise adjustments when necessary’, and ‘The display robot needs to adjust after determining the angle and distance of the display preferred by each individual.’ Hence, the display robot’s screen was adjusted to the height and angle of the user’s eyes, and the user’s face and screen were placed face-to-face. The screen was always kept at a certain distance from the user’s face, and when the user changed posture or moved his/her head, the screen moved along with it. All movements of the display robot, including mobility and screen adjustment, are automated, but manual adjustment is possible if the user desires. After manual adjustment, the value is learned and used immediately for the next movement.
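The rule that a manually adjusted value is learned and reused for the next movement can be sketched as follows. This is only an illustrative sketch, not the prototype's implementation (which was operated by a human wizard): the class name `ScreenPreferenceStore`, the posture labels, and the tilt values are all hypothetical, and we assume that preferences are keyed by user and viewing posture.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class ScreenPreferenceStore:
    """Remembers the tilt a user last set manually for a given posture.

    Hypothetical helper: names and the (user, posture) keying are assumptions.
    """
    _learned: Dict[Tuple[str, str], float] = field(default_factory=dict)

    def target_tilt(self, user_id: str, posture: str,
                    eye_aligned_tilt_deg: float) -> float:
        # Default behaviour: face the user's eyes. A learned manual value,
        # if one exists for this user and posture, takes precedence.
        return self._learned.get((user_id, posture), eye_aligned_tilt_deg)

    def record_manual_adjustment(self, user_id: str, posture: str,
                                 tilt_deg: float) -> None:
        # The most recent manual setting is used from the next movement on.
        self._learned[(user_id, posture)] = tilt_deg


store = ScreenPreferenceStore()
print(store.target_tilt("P4", "lying", eye_aligned_tilt_deg=35.0))  # 35.0
store.record_manual_adjustment("P4", "lying", tilt_deg=28.0)
print(store.target_tilt("P4", "lying", eye_aligned_tilt_deg=35.0))  # 28.0
print(store.target_tilt("P4", "sitting", eye_aligned_tilt_deg=5.0))  # 5.0
```

Keeping the manual value as an override rather than averaging it with the automatic estimate is one way to leave the final authority with the user.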

Finally, for the input modality, our design guidelines stipulate that ‘The display robot should offer a comfortable means of interaction’, and ‘The display robot should offer a range of interaction methods based on the situation.’ The experts opined that it is desirable to support multiple modalities so that users can freely use their preferred interaction method according to various purposes and contexts. Reflecting this, participants were told that touch, voice, and air gestures, all of which were mentioned as candidate input modalities, could be used in any situation. However, in the case of touch, experts were concerned about distance limitations; therefore, when users made a pointing gesture, the display robot moved to within touching distance. Today, many people are familiar with interacting with machines, and users generally agree on common commands, just as people naturally swipe to move to the next media item. Therefore, in this study, the users were asked to control the robot naturally without a detailed interaction guide.

4.2. Case study design

We recruited 16 participants (eight males and eight females) who watched media almost daily. Their ages ranged from 28 to 48 years, with an average age of 38.8 years. The study received approval from the company, as it solely involved asking participants about guidelines without posing any ethical hazards. Before proceeding with the study, we explained its purpose and obtained consent forms from all participants. We designed various types of interaction tasks for use in the test, considering that the new guidelines might be applied differently. A case study using WOZ was conducted with actual users to verify the user value of the proposed display robot and to propose initial guidelines for it. The WOZ technique is frequently used in HRI research. It involves simulating an interactive robot’s behavior, as if it were autonomously operating, while in reality, a human “wizard” behind the scenes controls its actions (Dahlbäck et al., 1993). It allows researchers to gather user feedback and evaluate user interactions with a concept or prototype before full development, facilitating early-stage insights, identifying design improvements, and refining user-centered features (Hoffman & Ju, 2014; Novanda et al., 2016).

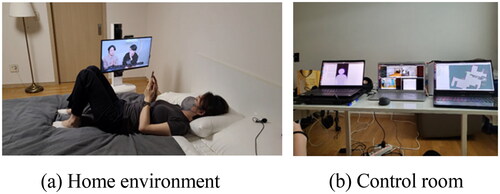

The experimental location for the WOZ was the same as that in the expert interview, based on the expert opinion that the home environment was suitable for experiencing the given scenario. Four researchers participated as the main operators of the WOZ. All researchers had fixed roles without any rotation. Two researchers were present in the same room as the participants. The main facilitator moderated the overall experiment and controlled the robot’s movements using a remote controller. We ensured that the remote controller was kept out of the participants’ sight so that they believed that the display robot was moving independently. The assistant facilitator recorded the experiments and sent videos to the other two researchers for real-time observation. The remaining two researchers, who watched the experiment through video, were in a separate space that users could not see, namely, the control room (see ). These two researchers acted as wizards who controlled the media according to user commands through devices that were remotely connected to the display robot.

The scenario for WOZ was identical to that of the expert interviews (). Participants’ tasks followed a sequential process. Initially seated on the sofa, participants summoned the robot. They then explored and consumed media through multimodal control, navigating and viewing the content. Subsequently, participants were instructed to adjust their viewing posture. Following this, they moved to the bedroom, where they continued to explore media using the control functionalities. Finally, participants were asked to change their viewing posture to match their personal preferences.

Using the WOZ method, we sought to substantiate the effectiveness of our newly formulated design guidelines. To this end, we assessed satisfaction with the overall MWE as well as with each of its key features. After experiencing the scenario under the guidance of the moderator, the participants rated their satisfaction with the overall MWE and the main features they experienced, namely, mobility, screen adjustment, and input modality, on a 5-point Likert scale (1 = very dissatisfied, 5 = very satisfied). Subsequently, the users freely expressed their opinions on each response. These user responses encompassed explanations of each item’s score, the positive aspects they identified, and suggestions for potential enhancements.

The entire experimental process took 2 hours for each participant and was video recorded with the consent of the participants. The responses of all participants were analyzed. After WOZ was completed, the researchers reviewed the experimental videos, observed and analyzed the behavior of all participants, and combined the observed results with the collected user opinions to derive meaningful interpretations.

4.3. Results

As mentioned previously, the two main purposes of the case study were to confirm the value of display robots for potential users and to verify the effectiveness of the guidelines. To achieve the first objective, a brief satisfaction survey was conducted on the overall MWE and three main features of the display robot. The case study results were analyzed in an integrated manner with the qualitative opinions of users, thereby confirming the user value of the display robot. In addition, the grounds for verifying the initial guidelines were obtained from user behaviors observed throughout the case study and interview results.

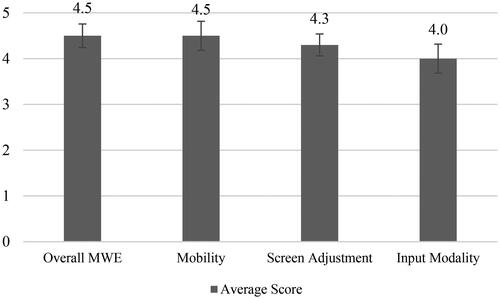

4.3.1. User value of display robot

First, indicates that our investigation established the positive impact of the display robot on the overall MWE, as evidenced by the noteworthy high satisfaction rating of 4.5 (SD = 0.516). This result highlights the effectiveness of the proposed robot. It is particularly encouraging to note that all participants responded favorably to the core functionalities of the display robot, garnering an impressive average rating of 4.5 for mobility (SD = 0.632) and 4.3 for screen adjustment (SD = 0.479). The average satisfaction score for the input method was 4.0 points (SD = 0.632), which was slightly lower than that for the other items but still showed satisfactory results.
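For readers who wish to recompute such summary values from raw 5-point ratings, a minimal sketch is given below. The rating lists are hypothetical and are not the participants' actual responses; they are simply distributions of 16 ratings that happen to reproduce the reported means and standard deviations, under the assumption that the sample standard deviation (n − 1 denominator) was used.

```python
import statistics


def summarize(ratings):
    """Return (mean, sample SD) of Likert ratings, rounded as in the text."""
    mean = round(statistics.mean(ratings), 1)
    sd = round(statistics.stdev(ratings), 3)  # stdev() uses the n - 1 denominator
    return mean, sd


# Hypothetical ratings (NOT the study's raw data), 16 participants each.
overall_mwe = [4] * 8 + [5] * 8
mobility = [3] + [4] * 6 + [5] * 9

print(summarize(overall_mwe))  # (4.5, 0.516)
print(summarize(mobility))     # (4.5, 0.632)
```

The two lists share the same mean but differ in spread, which is why reporting the standard deviation alongside the mean is informative for a sample of this size.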

First, regarding the robot’s mobility, it was encouraging to observe that the participants showed a clear tendency to prefer the ability of the display robot to synchronize its movements with those of the user. This perception extends beyond intuitiveness to encompass recognition of its high effectiveness. This functionality addresses their aspirations for an uninterrupted and smooth journey through their media consumption experiences. This underscores the pivotal role of integrating mobility as a strategic approach to fulfilling the intrinsic longing for seamlessly integrated and unobtrusive interaction with the display robot.

P1: “It is great not having to pause the media when I move to another room. I can enjoy media while cooking in the kitchen or even while doing some chores.”

P2: “Being able to watch media from different spots in the room is truly convenient. I can switch between the couch and my favorite chair without any hassle.”

P8: “It is convenient to watch while engaging in other activities such as cooking or working out.”

Regarding screen adjustment, participants often expressed a desire for comfortable neck and shoulder positioning while engaging in extended media viewing. The participants believed that the screen adjustment feature could help them achieve this comfort. They also agreed that having the option to customize the screen angle according to their preferences would significantly improve their overall MWE. Many users stressed the importance of staying relaxed and strain-free while watching content for longer periods. They hoped that the display robot’s design would ensure ergonomic alignment between the screen and their eyes, minimizing the discomfort associated with extended media watching.

P5: “I love that the screen adjusts automatically to match my face direction. It truly reduces the strain on my neck, especially for long media, such as movies.”

P6: “It is gentler on the hands and neck compared to using a mobile phone.”

P11: “The screen was not fixed and adjusted to fit my posture, making it comfortable to view.”

The introduction of the proposed display robot demonstrated its positive impact on enhancing the overall media viewing experience. Users expressed high satisfaction with both the mobility and screen adjustment functions, which were highlighted as intuitive and effective solutions for seamless media consumption. The automatic screen adjustment function has been particularly praised for its potential to reduce strain during extended viewing sessions. However, the potential distraction caused by the robot’s movement, while occasionally affecting immersion, emphasizes the importance of aligning the robot’s actions with the user’s needs and context. Despite this, the general consensus remains that the robot’s movement augments immersion and engagement, making the viewing experience more dynamic and interactive.

4.3.2. Verifying design guidelines

In the case study, users experienced the display robot prototype by referring to the proposed initial guidelines and freely presented various opinions. Some of these opinions were consistent with the experts’ insights, whereas others required further research. In this section, user opinions related to the derived guidelines are collected, and qualitative analysis results are provided.

4.3.2.1. Mobility

User opinions collected through empirical studies on mobility are mostly consistent with expert opinions. In response to concerns that the robot’s movements could inadvertently exacerbate user discomfort, the participants consistently emphasized that the robot’s speed of movement should not exceed their own. This remarkable consensus highlights the importance of synchronizing a robot’s movement dynamics with a user’s natural speed to infuse familiarity into an interaction. Another important finding from user feedback is the approach angle adopted by the display robot. Surprisingly, the participants preferred the robot to approach them from around the side rather than directly from the front. This implies that users perceive the frontal approach to be more intrusive and potentially disturbing. Moreover, the need to maintain an appropriate distance between the user and display robot emerged as a consistent theme throughout the user responses. The participants articulated the need for buffer zones that preserve personal space to enhance security and a sense of control. In addition, some users were concerned that the display robot would collide with furniture or people. Therefore, the movement of a display robot must be designed to provide users with confidence in safety at all times.

P8: “I liked how the display robot matched my speed, which felt natural. A faster movement could be unsettling, whereas slower movement might disrupt the media-watching experience.”

P15: “I was a bit concerned when a robot came right in front of my face while I was lying down. Perhaps it gets better with time, but I'd feel more at ease if it approaches from the side.”

Users preferred that the display robot reposition itself based on detected user intent instead of mirroring every movement in real time. Many users responded that it is preferable for the display robot to follow the user only when the user is outside a certain range rather than always following the user at a certain distance. They explained that they intended to move to another space only when they moved a certain distance away from the robot. When the user moves within a certain range, a seamless experience can instead be maintained by fixing the position of the robot and adjusting only the direction of the display. In addition, some users preferred the robot to follow on command rather than move as soon as the user starts moving. These insights highlight the significance of finding a middle ground between robot autonomy and user control. Users prefer display robots that can adapt to their intentions and movements but do not want full automation. By understanding these preferences, we can design display robots that respond in ways that users find meaningful and harmonious, contributing to positive and effective HRIs.

P1: “If the robot always follows me even during brief movements, I think it could actually disrupt my media-watching experience.”

P7: “I think it would be nice to have an option to activate following after a command so that the robot can follow only when I want it to.”
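The range-based following behavior described by these users can be illustrated with a minimal sketch. It is not the prototype's control logic (the robot was teleoperated in the WOZ study); the class `FollowPolicy`, its thresholds, and the planar coordinate convention are hypothetical assumptions, since the study reports no numeric values.

```python
import math
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    HOLD = "hold position"
    ROTATE_ONLY = "rotate display toward user"
    FOLLOW = "follow user"


@dataclass
class FollowPolicy:
    # Hypothetical thresholds; the study does not report numeric values.
    follow_range_m: float = 2.5           # beyond this, the user is assumed to be leaving
    rotate_deadband_deg: float = 10.0     # smaller bearing changes are ignored
    follow_on_command_only: bool = False  # option suggested by P7

    def decide(self, user_xy, robot_xy, display_heading_deg,
               follow_commanded=False):
        dx = user_xy[0] - robot_xy[0]
        dy = user_xy[1] - robot_xy[1]
        distance = math.hypot(dx, dy)

        # Follow only when the user has left the range (and, optionally,
        # only after an explicit command).
        may_follow = follow_commanded or not self.follow_on_command_only
        if distance > self.follow_range_m and may_follow:
            return Action.FOLLOW

        # Within range: keep the base fixed and only turn the display.
        bearing = math.degrees(math.atan2(dy, dx))
        diff = (bearing - display_heading_deg + 180.0) % 360.0 - 180.0
        if abs(diff) > self.rotate_deadband_deg:
            return Action.ROTATE_ONLY
        return Action.HOLD


policy = FollowPolicy()
print(policy.decide((1.0, 0.0), (0.0, 0.0), 0.0))  # Action.HOLD
print(policy.decide((0.0, 1.0), (0.0, 0.0), 0.0))  # Action.ROTATE_ONLY
print(policy.decide((3.0, 0.0), (0.0, 0.0), 0.0))  # Action.FOLLOW
```

Setting `follow_on_command_only=True` corresponds to the command-activated following that some participants requested.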

Finally, the users strongly agreed that display robots should be able to move to avoid collisions. In particular, a positive response was observed when the display robot automatically moved out of the user’s way while they were lying down. Contrary to previous concerns, this ‘step aside’ movement was accepted by users as natural and useful. This implies that users have a shared conviction that the integration of motion capabilities into display robots is not only a matter of convenience, but also a vital means of preempting collisions and fostering a secure environment.

4.3.2.2. Screen adjustment

From the user’s perspective, it was observed that the real-time movement of the screen as the user shifts their gaze can lead to dizziness and discomfort. To address this issue, the participants expressed a preference for screen adjustments to be executed after the user’s movement had concluded, thus minimizing any potential negative effects on their viewing experiences.

P10: “The robot detects even my minor movements and frequently adjusts the screen angle, which is quite unsettling. I would prefer that it responds only to larger movements rather than to smaller ones.”
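One way to express this preference, that the screen should ignore minor movements and re-aim only after a larger movement has concluded, is a simple dead band combined with a settle timer. The sketch below is an illustration under stated assumptions rather than the prototype's behavior: the class `SettledScreenAdjuster`, the single-angle head-pose representation, and both threshold values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SettledScreenAdjuster:
    # Hypothetical thresholds; the study does not report numeric values.
    min_change_deg: float = 8.0   # movements smaller than this are ignored
    settle_time_s: float = 1.5    # pose must stay stable this long before adjusting
    screen_angle_deg: float = 0.0
    _candidate: Optional[float] = None
    _candidate_since: float = 0.0

    def update(self, head_angle_deg: float, now_s: float) -> bool:
        """Feed one head-pose sample; return True if the screen was re-aimed."""
        if abs(head_angle_deg - self.screen_angle_deg) < self.min_change_deg:
            self._candidate = None  # minor movement: leave the screen alone
            return False
        if (self._candidate is None
                or abs(head_angle_deg - self._candidate) >= self.min_change_deg):
            # New pose, or the user is still moving: restart the settle timer.
            self._candidate = head_angle_deg
            self._candidate_since = now_s
            return False
        if now_s - self._candidate_since >= self.settle_time_s:
            # The movement has concluded: adjust once.
            self.screen_angle_deg = head_angle_deg
            self._candidate = None
            return True
        return False


adjuster = SettledScreenAdjuster()
print(adjuster.update(3.0, 0.0))   # False: minor movement
print(adjuster.update(20.0, 1.0))  # False: large movement just started
print(adjuster.update(21.0, 2.0))  # False: not yet settled
print(adjuster.update(20.5, 2.6))  # True: pose stable for 1.6 s
```

Larger thresholds trade responsiveness for stability, which matches the guideline that stable adjustment matters more than real-time adjustment.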

Researchers have observed divergent opinions on the optimal display angle. Some participants favored aligning the display angle with that of their eyes, while others advocated for a fixed horizontal alignment similar to that of conventional TVs. Additionally, even among individual users, discrepancies in preferred display angles and distances were noted based on distinct viewing postures. This variance highlights the importance of accommodating a spectrum of preferences to cater to diverse user needs.

P4: “At times, the screen angle did not match my posture perfectly, so I adjusted the screen angle.”

With regard to individual differences, several users indicated the importance of having an option for manual screen alignment. The rationale behind this preference is the dynamic nature of viewing conditions, which can necessitate tailored adjustments. However, a significant number of users ultimately wanted the screen-adjustment process to be automated without user intervention. The core of achieving satisfactory screen adjustment functionality lies in the capacity to learn and adapt to users’ individual viewing patterns, thereby delivering a personalized and optimal viewing experience.

4.3.2.3. Input modality

User perspectives on input modalities highlight the importance of tailoring interaction methods to suit specific functions. Notably, a distinct dichotomy in the preferred input modalities emerged between two contexts related to media engagement: media control and robot movement control. Users displayed a proclivity for touch interaction when performing media-oriented tasks such as volume adjustments and content searches. This predisposition can be attributed to the seamless transition of a familiar personalized touch experience from handheld devices to the proposed display robot. Users envisaged a scenario in which explicit gestures, such as pointing with an index finger, could trigger the robot’s movement and align it with their desired position. Although touch was favored for most media controls, it is imperative to recognize a subset of users who gravitate toward voice commands for media searches, indicating the need for nuanced deliberation.

P2: “Given my prior experiences with personal viewing on mobile devices and tablets, I naturally expected touch interaction to be fundamental to the concept of a display robot.”

P11: “I appreciate the robot getting closer when I extend my finger for touch interaction.”

P16: “I struggle to conceive how I'd use voice commands to control video playback.”

In contrast to touch’s dominance in media control, voice interaction emerged as the preferred mode for controlling robot movements. As elucidated earlier, users expressed a desire to fine-tune the robot’s position or prompt its movement after changing their own location. In such instances, users considered voice commands to be the most intuitive and natural means of interaction. Similarly, when summoning a display robot from a distance, users favored voice over direct physical contact such as touch. However, it is noteworthy that users who were less familiar with voice commands exhibited reservations, corroborating the experts’ apprehensions about the potential discomfort with voice interaction.

P9: “Given that robot repositioning often occurs when the display robot and I are spatially distant, voice commands feel much more intuitive and comfortable.”

P13: “Controlling a non-human machine with my voice felt awkward for me.”

Numerous participants contended that multiple modalities are required because controlling media and controlling the robot share similar concepts. For instance, potential confusion could arise between instructing the robot to move backward and ordering it to rewind media content. Users tended to use brief voice commands (e.g., “move back”) instead of complex natural language instructions (e.g., “rewind the media by 10 s”). Such brief voice commands can perplex both users and robots, underscoring the necessity for clear distinctions between robot and media control commands to foster intuitive interactions. Air gestures were not preferred for similar reasons. For example, when a hand gesture points to the right, it is difficult for both the user and robot to intuitively determine whether it means skipping to the next media item or moving the robot to the right.

P5: “When attempting to adjust the robot’s posture, I felt embarrassed, as the command resembled a media control command (move forward).”

5. Discussion

5.1. Interaction design guidelines for the display robots

5.1.1. Differences between expert and user opinions

We conducted experiments involving both experts and the general public to better understand their opinions regarding display robots. Although their viewpoints were similar, some differences emerged, which led to insightful findings. These differences support the claims of previous studies that expert opinions do not perfectly reflect actual user experiences.

First, the mobility function of the display robot received positive feedback from users. However, it is interesting to note that users did not strongly demand the full automation of mobility, contrary to the experts’ expectations. Users preferred automation in several specific situations, such as when starting or finishing media viewing or moving around. In other instances, users preferred to control the robot’s movement through their own commands. This finding suggests that finding the correct balance between automation and user control is important for enhancing the overall experience.

Second, regarding the screen adjustment function, users expressed more lenient views than the experts did. Users did not want the screen adjustment function to be as detailed and strict as the experts believed, indicating a certain level of tolerance for screen adjustment. In addition, users demonstrated a stronger demand for automation of screen adjustment in most contexts than they did for the mobility function. This finding suggests that users can perceive value through automated screen adjustments that satisfy their preferences. This highlights the importance of understanding user preferences in different aspects of the interactions with a display robot.

Furthermore, offering too many interaction methods could lead to confusion among users. This suggests a slightly different meaning from what the experts stated when they argued that multimodality should be supported in all situations. Several initial HRI studies offer guidance without restricting the interaction method. These studies aim to ascertain whether a dominant input modality exists or if users prefer a free interaction approach. This study revealed that a more effective approach might involve identifying a dominant modality based on a context that reflects user preferences. For example, using voice commands when summoning a robot from a distance and employing touch for media control can lead to smoother interactions.
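The idea of a context-dependent dominant modality can be made concrete with a small lookup, sketched below. The task categories, the mapping, and the per-user override mechanism are illustrative assumptions drawn from the tendencies reported in the case study (voice for summoning and repositioning the robot, touch for media control); they are not a validated specification.

```python
from enum import Enum
from typing import Dict, Optional


class Modality(Enum):
    VOICE = "voice"
    TOUCH = "touch"
    AIR_GESTURE = "air gesture"


class Task(Enum):
    SUMMON_ROBOT = "summon robot"
    REPOSITION_ROBOT = "reposition robot"
    MEDIA_PLAYBACK = "media playback"
    MEDIA_SEARCH = "media search"


# Hypothetical default mapping, reflecting the preferences observed in the study.
DOMINANT_MODALITY: Dict[Task, Modality] = {
    Task.SUMMON_ROBOT: Modality.VOICE,
    Task.REPOSITION_ROBOT: Modality.VOICE,
    Task.MEDIA_PLAYBACK: Modality.TOUCH,
    Task.MEDIA_SEARCH: Modality.TOUCH,
}


def suggest_modality(task: Task,
                     user_overrides: Optional[Dict[Task, Modality]] = None
                     ) -> Modality:
    """Return the modality to foreground for a task, honoring user overrides."""
    if user_overrides and task in user_overrides:
        return user_overrides[task]
    return DOMINANT_MODALITY[task]


print(suggest_modality(Task.SUMMON_ROBOT))    # Modality.VOICE
print(suggest_modality(Task.MEDIA_PLAYBACK))  # Modality.TOUCH
# A user who prefers voice for media search can override the default.
print(suggest_modality(Task.MEDIA_SEARCH, {Task.MEDIA_SEARCH: Modality.VOICE}))
```

Binding each task to one foregrounded modality also separates robot commands from media commands, which may reduce the “move back” versus “rewind” ambiguity that participants reported.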

5.1.2. Maximizing the effectiveness of guidelines

The development of an interaction guideline for display robots with the intention of enhancing the MWE by integrating user perspectives has yielded significant insights for display robot designers. These insights can be effectively applied to inform design processes in several ways.

Our study shows that it is important to know where personalization is crucial. For example, the results of this study indicate that designers must decide whether the display should match the angle of the user’s eyes or remain straight. The designers must also determine the optimum height of the display as well as the minimum distance between the display and the user’s face. By considering these issues in the guidelines, designers can ensure that the display is personalized for each user.

Our research also showed that users share some general preferences, although these are not yet well known. For example, designers must determine the contexts in which users prefer manual commands over automated functions. Furthermore, the preferred interaction modalities for distinct situations should be considered. Designers are encouraged to consider these nuanced preferences and tailor guidelines to accommodate users’ general preferences. Consequently, the utility of the suggested guidelines could be increased by developing each guideline in detail or by adding subsequent items to each guideline.

5.2. Limitations and future outlook

Further studies are required to effectively utilize the findings of this study and overcome its limitations. First, it is necessary to investigate opinions regarding the long-term use of the proposed display robot. Along with the development of media content, the pandemic has had a significant impact on overall MWE. Specifically, people are spending an increasing amount of time watching media (Common Sense, 2021). The display robot exhibits key features that enhance the MWE, making it more suitable for longer periods of media consumption. For example, the proposed display robot can mitigate the physical fatigue that occurs when a user remains in the same posture for a long time while watching a fixed display. Therefore, the user value of a display robot is expected to increase as media-watching sessions become longer. Furthermore, user evaluations and the perceived value of interacting with the robot can change over time (Jost et al., 2020). However, due to the limitations of our experimental environment, we were unable to gather opinions after prolonged media viewing. We anticipate that by collecting feedback from users who have used the display robot during extended media consumption, we can obtain results that align better with the intended purpose of the display robot.

Second, subsequent research could explore potential differences in opinions based on age group. While our study involved participants in their 20s and 30s, media personalization and increased viewing times were trends across various age groups (Statista, 2023). Therefore, additional studies encompassing a wider age range are needed. Given that age significantly influences acceptance of automation (Haghzare et al., 2021), the perceived value of a display robot may vary depending on the age group. It would also be valuable to investigate whether each interaction guideline can be tailored to different age-related changes in physical ability and lifestyle.

Third, once the design of our robot is finalized, comprehensive guidelines that include specific quantitative data must be proposed. A high-fidelity robot that applies our new design guidelines should be tested using both quantitative and qualitative methods. Such testing should include precise measurements, such as the distance and angle between the user and the robot, among other relevant parameters (Yousuf et al., 2019). In the future, the development of more advanced prototypes could strongly support the outcomes of this study.

Fourth, we must investigate diverse types of interactions, including nonverbal interactions, and apply our design guidelines to them. Even though it is in the early stages of development, our moving display robot shows the potential to play a pivotal role in home settings as a social robot. While offering an enriched MWE serves as an initial and fundamental function, we anticipate that users will seek more extensive interactions and functionalities from the robot. In line with this vision, we must explore additional interaction methods, including capabilities such as eye tracking and the recognition of human emotions (Chadalavada et al., 2020; Hong et al., 2020; Saunderson & Nejat, 2019).

6. Conclusion

A review of existing literature revealed that displays currently in use do not sufficiently reflect the changing MWE. Hence, in this study, a novel display robot concept with characteristics of mobility, screen adjustment, and input modality was proposed to address this gap. The value of display robots has been demonstrated, and a design direction suitable for new-concept display robots has been explored. This exploration has led to valuable insights that can significantly impact the field of HRI and the design of display robots.

This study also underscores the importance of harmonizing expert insights with the nuanced expectations of users. Notably, we have shown that users often prioritize a delicate balance between automation and user control, particularly in scenarios such as media commencement, conclusion, and physical repositioning. Understanding these preferences is critical in crafting an overall positive user experience with display robots.

While these findings have direct implications for display robot design, they can also be extended to the broader field of HRI. Specifically, this study is foundational to advancing the development of interactive user-centric robots. The principles derived from this study can inform the creation of robots capable of delivering tailored, context-sensitive experiences beyond media consumption.

Future research can encompass an extended period of use and a wider age range to explore potential user preferences, given the significant role of usage time and age. In addition, it is crucial to develop comprehensive guidelines with precise quantitative data as the robot’s design matures. This approach will facilitate the practical implementation of our insights into the creation of user-friendly display robots.

This study advances our understanding of user preferences and the role of experts in display robot design and contributes significantly to the broader landscape of HRI research. As we continue to refine our findings, we anticipate that the impact of this work will resonate throughout the development of similar devices in various contexts, fostering a new era of human–robot collaboration that prioritizes user satisfaction and interaction quality.

Acknowledgments

We would like to express our heartfelt appreciation to all the individuals who contributed to the creation of this paper. We extend our gratitude to the dedicated members of our team at the UX Innovation Lab of Samsung Research. We are especially indebted to Bosung Kim and Sangmin Hyun, whose unwavering support throughout this journey ensured the successful completion of this study. Additionally, we wish to express our deep gratitude to Youjin Won, Serin Koh, and Seunghee Hwang for their pivotal roles in orchestrating the experimental procedure that enriched the content and insights of this study.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Additional information

Notes on contributors

Hyeji Jang

Hyeji Jang works at UX Innovation Lab, Samsung Research. She received a Ph.D. degree from the Department of Industrial Management and Engineering, POSTECH, in 2022. Her research interests include user experience, human–computer interaction, human–automation interaction, and human data analysis.

Daehee Park

Daehee Park is a Principal Designer at Samsung Research. He received a Ph.D. in Knowledge Service Engineering from KAIST, a B.Sc. in Psychology from the University of York, and an M.Sc. in Human and Computer Interaction from University College London. His research interests are human–computer interaction and cognitive engineering.

Sowoon Bae

Sowoon Bae was born in Seoul, South Korea, in 1993. She received a B.Des. degree from the College of Art and Design, Ewha Womans University, Seoul, South Korea, in 2016. Her research interests include user experience, user interfaces, human–computer interaction, visual communication design, visual interaction design, and design systems.

References

- Aéraïz-Bekkis, D., Ganesh, G., Yoshida, E., & Yamanobe, N. (2020, April). Robot movement uncertainty determines human discomfort in co-worker scenarios [Paper presentation]. 2020 6th International Conference on Control, Automation and Robotics (ICCAR) (pp. 59–66). IEEE.

- Alexander, J., Lucero, A., & Subramanian, S. (2012, September). Tilt displays: Designing display surfaces with multi-axis tilting and actuation. In Proceedings of the 14th International Conference on Human-Computer Interaction with Mobile Devices and Services (pp. 161–170).

- Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., & Horvitz, E. (2019, May). Guidelines for human-AI interaction [Paper presentation]. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (pp. 1–13). https://doi.org/10.1145/3290605.3300233

- Argall, B. D., & Billard, A. G. (2010, October). A survey of tactile human–robot interactions. Robotics and Autonomous Systems, 58(10), 1159–1176. https://doi.org/10.1016/j.robot.2010.07.002

- Bartoli, L., Garzotto, F., Gelsomini, M., Oliveto, L., & Valoriani, M. (2014, June). Designing and evaluating touchless playful interaction for ASD children [Paper presentation]. Proceedings of the 2014 Conference Interaction Design and Children (pp. 17–26). https://doi.org/10.1145/2593968.2593976

- Barwise, P., Bellman, S., & Beal, V. (2020, June). Why do people watch so much television and video?: Implications for the future of viewing and advertising. Journal of Advertising Research, 60(2), 121–134. https://doi.org/10.2501/JAR-2019-024

- Block, A. E., Seifi, H., Hilliges, O., Gassert, R., & Kuchenbecker, K. J. (2023, March). In the arms of a robot: Designing autonomous hugging robots with intra-hug gestures. ACM Transactions on Human-Robot Interaction, 12(2), 1–49. https://doi.org/10.1145/3526110

- Bures, V. (2012, June). Interactive digital television and voice interaction: Experimental evaluation and subjective perception by elderly. Elektronika ir Elektrotechnika, 122(6), 87–90. https://doi.org/10.5755/j01.eee.122.6.1827

- Chadalavada, R. T., Andreasson, H., Schindler, M., Palm, R., & Lilienthal, A. J. (2020). Bi-directional navigation intent communication using spatial augmented reality and eye-tracking glasses for improved safety in human–robot interaction. Robotics and Computer-Integrated Manufacturing, 61, 101830. https://doi.org/10.1016/j.rcim.2019.101830

- Chauhan, V., & Shah, M. H. (2020). An empirical analysis into sentiments, media consumption habits, and consumer behaviour during the Coronavirus (COVID-19) outbreak. UGC Care Journal, 31(20), 353–378.

- Choi, S. W., Kim, W. J., & Lee, C. H. (2013, March). Interactive display robot: Projector robot with natural user interface. In 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI) (pp. 109–110). IEEE.

- Clemmensen, T. (2011). Templates for cross-cultural and culturally specific usability testing: Results from field studies and ethnographic interviewing in three countries. International Journal of Human-Computer Interaction, 27(7), 634–669. https://doi.org/10.1080/10447318.2011.555303

- Clemmensen, T., Nielsen, J. A., & Andersen, K. N. (2017). Service robots: Interpretive flexibility or physical appropriation? https://robots-in-context.mpi.aass.oru.se/wp-content/uploads/2017/12/ECCE-2017-RiC-Clemmensen.pdf

- Common Sense. (2021). The common sense census: Media use by tweens and teens. https://www.commonsensemedia.org/sites/default/files/research/report/8-18-census-integrated-report-final-web_0.pdf

- Cooney, M. D., Kanda, T., Alissandrakis, A., & Ishiguro, H. (2011). Interaction design for an enjoyable play interaction with a small humanoid robot. In 2011 11th IEEE-RAS International Conference on Humanoid Robots (pp. 112–119). IEEE.

- Coronado, E., Kiyokawa, T., Ricardez, G. A. G., Ramirez-Alpizar, I. G., Venture, G., & Yamanobe, N. (2022, April). Evaluating quality in human–robot interaction: A systematic search and classification of performance and human-centered factors, measures and metrics towards an industry 5.0. Journal of Manufacturing Systems, 63, 392–410. https://doi.org/10.1016/j.jmsy.2022.04.007

- Dahlbäck, N., Jönsson, A., & Ahrenberg, L. (1993, February). Wizard of Oz studies: Why and how. In Proceedings of the 1st International Conference on Intelligent User Interfaces (pp. 193–200).

- Dao, N.-N., Tran, A.-T., Tu, N. H., Thanh, T. T., Bao, V. N. Q., & Cho, S. (2022, November). A contemporary survey on live video streaming from a computation-driven perspective. ACM Computing Surveys, 54(10s), 1–38. https://doi.org/10.1145/3519552

- Dautenhahn, K., Woods, S., Kaouri, C., Walters, M. L., Koay, K. L., & Werry, I. (2005, August). What is a robot companion-friend, assistant or butler? [Paper presentation]. 2005 IEEE/RSJ International Conference Intelligent Robots and Systems (pp. 1192–1197). IEEE.

- Faulkner, C. (2023). LG’s StanbyME is a so-so TV on a stellar stand. The Verge. https://www.theverge.com/23554060/lg-stanbyme-monitor-tv-stand-features-specs-review

- Fong, T., Nourbakhsh, I., & Dautenhahn, K. (2003, March). A survey of socially interactive robots. Robotics and Autonomous Systems, 42(3–4), 143–166. https://doi.org/10.1016/S0921-8890(02)00372-X

- Frijns, H. A., & Schmidbauer, C. (2021, August). Design guidelines for collaborative industrial robot user interfaces [Paper presentation]. Human-Computer Interaction–INTERACT 2021: 18th IFIP TC 13 International Conference, Bari, Italy (pp. 407–427).

- Gao, F., Yu, C., & Xie, J. (2018, July). Study on design principles of voice interaction design for smart mobile devices [Paper presentation]. Cross-Cultural Design. Methods, Tools, and Users: 10th International Conference, Las Vegas, NV (Vol. 15–20, pp. 398–411).

- Goetz, J., Kiesler, S., & Powers, A. (2003, November). Matching robot appearance and behavior to tasks to improve human–robot cooperation [Paper presentation]. The 12th IEEE International Workshop on Robot and Human Interactive Communication (pp. 55–60). IEEE.

- Haghzare, S., Campos, J. L., Bak, K., & Mihailidis, A. (2021, February). Older adults’ acceptance of fully automated vehicles: Effects of exposure, driving style, age, and driving conditions. Accident Analysis & Prevention, 150, 105919. https://doi.org/10.1016/j.aap.2020.105919

- Hoffman, G., & Ju, W. (2014, February). Designing robots with movement in mind. Journal of Human-Robot Interaction, 3(1), 91–122. https://doi.org/10.5898/JHRI.3.1.Hoffman

- Hong, A., Lunscher, N., Hu, T., Tsuboi, Y., Zhang, X., Franco Dos Reis Alves, S., Nejat, G., & Benhabib, B. (2020). A multimodal emotional human–robot interaction architecture for social robots engaged in bidirectional communication. IEEE Transactions on Cybernetics, 51(12), 5954–5968. https://doi.org/10.1109/TCYB.2020.2974688

- Jang, H., Han, S. H., & Kim, J. H. (2020, December). User perspectives on blockchain technology: User-centered evaluation and design strategies for DApps. IEEE Access, 8, 226213–226223. https://doi.org/10.1109/ACCESS.2020.3042822

- Jost, C., Le Pévédic, B., Belpaeme, T., Bethel, C., Chrysostomou, D., Crook, N., & Mirnig, N. (2020). Human–robot interaction. Springer International Publishing.

- Kim, H. C. (2008, September). Weaknesses of voice interaction [Paper presentation]. 2008 4th International Conference on Networked Computing and Advanced Information Management (Vol. 2, pp. 740–745). IEEE.

- Klumpp, M., Hesenius, M., Meyer, O., Ruiner, C., & Gruhn, V. (2019, December). Production logistics and human-computer interaction—state-of-the-art, challenges and requirements for the future. The International Journal of Advanced Manufacturing Technology, 105(9), 3691–3709. https://doi.org/10.1007/s00170-019-03785-0

- Kujala, S., Kauppinen, M., Lehtola, L., & Kojo, T. (2005). The role of user involvement in requirements quality and project success [Paper presentation]. 13th IEEE International Conference on Requirements Engineering (RE'05) (pp. 75–84). IEEE.

- Lee, L. H., & Hui, P. (2018, May). Interaction methods for smart glasses: A survey. IEEE Access, 6, 28712–28732. https://doi.org/10.1109/ACCESS.2018.2831081

- Lee, M. K., Forlizzi, J., Kiesler, S., Rybski, P., Antanitis, J., & Savetsila, S. (2012, March). Personalization in HRI: A longitudinal field experiment. In Proceedings of the 7th Annual ACM/IEEE International Conference on Human-Robot Interaction (pp. 319–326).

- Lindblom, J., Alenljung, B., & Billing, E. (2020). Evaluating the user experience of human–robot interaction. In Human–robot interaction: Evaluation methods and their standardization. Springer Series on Bio- and Neurosystems (Vol. 12, pp. 231–256).

- Nomura, T., Kanda, T., & Suzuki, T. (2006, March). Experimental investigation into influence of negative attitudes toward robots on human–robot interaction. AI & Society, 20(2), 138–150. https://doi.org/10.1007/s00146-005-0012-7

- Novanda, O., Salem, M., Saunders, J., Walters, M. L., & Dautenhahn, K. (2016). What communication modalities do users prefer in real time HRI? arXiv preprint arXiv:1606.03992.