Abstract

Drawing inspiration from Michail Bakhtin’s philosophy, this study introduces the Polyphonic Model, grounded in the Bakhtinian principles of dialogue and polyphony. These principles emphasise the multiplicity of perspectives and the dynamic, dialogic interplay of various subjective consciousnesses, reflecting the inherent diversity of human thought. This theoretical foundation informs our analytical tool and conceptual framework, enabling an exploration of the recursive and cyclical dialogues between AI and its stakeholders, including AI itself as a non-human participant. Distinctively, the Polyphonic Model’s strength lies not merely in its capacity to highlight the multiplicity of these voices – as many preceding models have – but in its emphasis on the recursive, cyclic nature of the dialogic relationships and a dynamic interchange that continuously reshapes both the AI and its users, especially evident in the temporal transformations. The exploration asserts AI itself as a non-human stakeholder, contributing to and being molded by the very discourses that seek to understand it. While analyzing seventeen diverse stakeholders using ChatGPT as an illustrative context, the exploration zeroes in on two: the non-human stakeholder, exemplified by ChatGPT 4, and its users. Through an innovative methodological approach – an interview with ChatGPT 4 and a sentiment analysis of tweets from its early adopters powered by a lexicon-based approach and a machine learning method, along with Latent Dirichlet Allocation (LDA) for topic modelling – this study examines the dialogic relationships between stakeholders. The findings solidify the Polyphonic Model’s potential not only as an analytical tool but also as a blueprint for the holistic evaluation of AI-powered products. The scope of this study is confined to the development and societal integration of ChatGPT, as a representative example of advanced language processing AI technologies.

1. Introduction

Over the past few decades, the trajectory of technology development and commercialization has underscored the significance of a comprehensive stakeholder approach. Several methodologies, such as stakeholder analysis methodology (Bunn et al., Citation2002), discern the impact and characteristics of diverse stakeholder groups and evaluate their influence on market evolution. The Cloverleaf model of technology transfer (Heslop et al., Citation2001) emphasizes the integration of multiple perspectives to achieve effective technology transfer and commercialization. It considers the academia, industry, venture capital, and entrepreneurial community as key stakeholders in the process of transforming research into marketable products. Mitchell et al. (Citation1997) articulation on stakeholder identification and salience underlined the importance of prioritizing these key stakeholders based on their power, legitimacy, and urgency. The collective voice of stakeholders, be it users, regulators, investors, or developers, has the potential to refine and even redefine technological narratives.

While numerous models and research paradigms have underscored the centrality of stakeholders in the different phases of development, diffusion, and adoption of technologies, there has been a conspicuous gap in recognizing and analyzing the multiplicity of voices, especially in the realm of Artificial Intelligence (AI) (Kujala et al., Citation2022). Moreover, the dialogical nature of relationships between stakeholders involved in many stages of the AI development process seems to be overlooked.

AI-based products integrate algorithms, data analytics, and cognitive computing to simulate human intelligence in machines (Russell & Norvig, Citation2010). Examples of such products include digital voice assistants like Siri, Alexa, Google Home, and Cortana (Marr, Citation2019), autonomous vehicles exemplified by Tesla cars, recommendation systems such as Netflix’s movie suggestions and Spotify’s music playlists, smart home devices like Nest’s thermostats and Philips Hue lights, and an advanced language model developed by OpenAI, ChatGPT, primarily used for natural language understanding, conversation simulation, and text generation (further investigated in this Article as an illustrative example). AI-based product development is emblematic of the multi-staged procedure, starting from ideation and conceptualization to eventual deployment and continuous refinement. Each stage introduces its unique set of stakeholders that may or may not contribute their voices to the evolution and development of the product. In this context, Bakhtin’s polyphonic and dialogic ethos – historically rooted in diverse fields such as social psychology, popular culture, advertising, and organizational studies – finds compelling relevance as every stakeholder introduces a unique voice shaped by their individual socio-cultural background, temporal-spatial contexts, and distinct interpretations of AI’s promise. The dialogic interactions between these stakeholders and the AI products themselves remain largely uncharted, hinting at an analytical void in our understanding of the dynamic socio-technological interplays at work.

1.1. A polyphonic model

The idea of examining multiple, diverse stakeholders in technology development is not new. For instance, the SCOT (Social Construction of Technology) framework, originally proposed by Wiebe Bijker and Trevor Pinch, emphasizes the role of different “relevant social groups” in shaping technology, which can include many different stakeholders.

Humphreys (Citation2005) has made a significant contribution to our comprehension of social groupings, delineating four pertinent clusters: producers, advocates, users, and bystanders. Producers, comprising engineers, designers, marketers, and financial investors, interact directly with technology, shaping the development of technological artifacts. Advocates encompass policymakers and lobbyists who, while not directly involved with technology, engage in policymaking, lobbying, and scholarly research related to the artifact. Users maintain a personal and direct connection with technology, interacting with, purchasing, and exploring the artifact. Lastly, bystanders include those indirectly involved, such as neighbors, family, and friends. The collective engagement of these groups forms a consensus on the meaning and interpretation of a technological artifact.

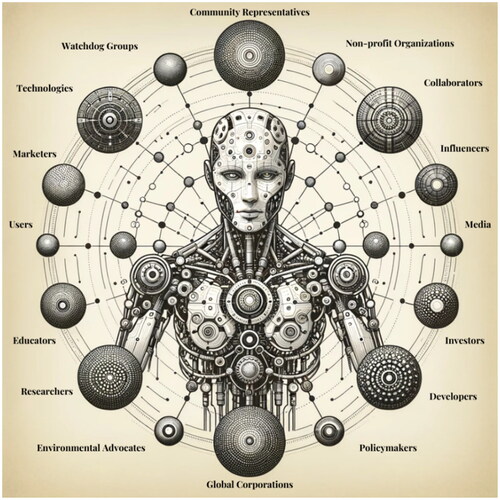

Drawing upon the collective scholarship of various researchers (e.g., Bijker et al., Citation2012; Cave et al., Citation2018; Fjeld et al., Citation2020; Güngör, Citation2020; Humphreys, Citation2005; Jobin et al., Citation2019; Meske et al., Citation2022; Ouchchy et al., Citation2020), the following enumeration of stakeholders is proposed, though it may not represent an exhaustive compilation: Educators and trainers, investors and financial stakeholders; industry partners and collaborators, AI ethics advocates and watchdog groups, endorsements and influencers, non-profit and civil society organizations, cultural and community representatives, environmental advocates, developers, researchers, regulatory bodies and policymakers, branding and marketing managers, other technologies, global corporations, “non-human” stakeholder, media, and users.

Utilizing a polyphonic model to comprehend AI-based product development allows us to recognize diverse voices from multiple stakeholders. Each voice is anchored in its own unique worldview, yet they coexist within a unified, dialogic system. According to Bakhtin (Citation2003, p. 6), polyphony signifies a “plurality of independent and unmerged voices and consciousnesses, each possessing equal rights.” Significantly, within this dialogical framework, the AI-based product assumes a role beyond mere functionality; it becomes an active “non-human stakeholder,” dynamically participating in the dialogue with itself and other stakeholders ().

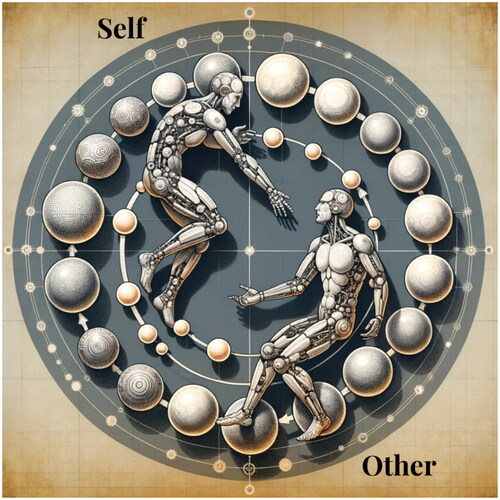

Rooted in Bakhtin’s perspective, these voices are not static entities; they exist in an ongoing state of “becoming.” This state is cultivated through persistent dialogic interactions. Here, the conventional demarcation between “self” and “other” is reconceptualized. Instead of two separate entities, the “self” is ever-evolving, continuously influenced by its dialogic engagements, shaping, and being shaped by the “other.” As this co-creative process progresses, the “self” perceives and internalizes multiple voices, with each interaction influencing subsequent ones (). These interactions keep traces – residual imprints of prior dialogic engagements, further enriching the evolving narrative (Karimova, Citation2011).

Polyphony is conceived as the dynamic interplay of intertwined experiences, understandings, and knowledge across temporal and spatial dimensions. Every engagement with a “text” – where text is any entity or phenomenon – yields diverse voices, each bearing its distinct interpretation.

The strength of the polyphonic approach in AI product development is its ability to highlight the multiplicity inherent in the product’s perception, both public and internal. It does so not just by capturing the array of external voices, as one might find in conventional stakeholder models, but by probing the rich interplay of voices within each participant (Goby & Karimova, Citation2023). In this internal dialogue, ChatGPT, although it might appear as a singular voice, experiences an exchange of a blend of countless voices that have been trained into its model.

In the polyphonic chasm of AI, three layers emerge: firstly, every voice adds its unique articulation to the attributes of the AI’s persona; secondly, these attributes are continually evolving, shaped by each individual’s unique position and worldview; thirdly, interactions with the AI product encourage a dynamic exchange, where prior understandings meld with fresh revelations, resulting in a continually evolving perception of the AI product’s essence and potential.

In addition, the borders between stakeholders remain ambiguous, given the complex interconnections and dialogic relations between the actors, where each actor involved in the creation process can play different roles. This ambiguity is further intensified by the cyclic nature of their interaction. Echoing Marshall McLuhan’s insight into technology as an extension of ourselves, recent advancements in AI, such as neuron interface devices, exemplify this convergence. These technologies are not just psychological extensions but physically integrate with the human body and brain, blurring the lines between humans and machines. In this evolving context, each entity or stakeholder is in a constant process of transformation and becoming, illustrating the profound impact of AI on the human experience.

1.2. Polyphonic framework stages

The polyphonic paradigm transcends its role as a mere analytical instrument for technology assessment, emerging as an indispensable framework for technology creation itself. By integrating Bakhtin’s polyphonic insights into the stakeholder analysis for AI-centric product development, one must recognise the perpetual dynamics of diverse voices devoid of any definitive stabilization. In synthesizing the Social Construction of Technology (SCOT) framework with the quintessential five-step methodology posited by Bunn et al. (Citation2002), we advocate for an augmented approach, one that impeccably melds these models, all the while infusing them with Bakhtinian philosophy ().

1.2.1. Comprehensive stakeholder identification

This step is a synthesis of the initial stages of both models. It entails recognizing all stakeholders, ranging from developers, users, regulators to ethicists, media entities, corporations, and others. Each group’s specific interests, goals, interpretations, and engagements with the AI product should be carefully examined.

1.2.2. Characterization and categorization

Stakeholders are described based on salient characteristics and analyzed and classified predicated upon specific stakeholder attributes, ensuring the versatile nature of their identities and interests.

1.2.3. Dynamic relational analysis

Interactions and negotiations among these stakeholders around the AI product must be continuously monitored and analyzed. This involves not just understanding current relationships but acknowledging that these relationships are in constant flux. Techniques such as sentiment analysis and topic modeling can be employed, ensuring an understanding of these evolving relationships.

1.2.4. Acknowledging non-stabilization

While the SCOT model considers a stabilization or closure step, Bakhtin’s perspective suggests that dominant interpretations are ever-changing and no voice ever achieves permanent dominance. Consequently, rather than seeking stabilization, this approach necessitates constant vigilance and adaptability, embracing the constant evolution of stakeholder perspectives and interpretations.

1.2.5. Polyphony-Informed strategies across development facets

In this stage, strategies are crafted with a holistic consideration of the entire product development lifecycle, encompassing areas from marketing to management. Recognizing the vital role stakeholders play across varying phases—conceptualization, design, promotion, distribution, feedback, and iteration—these strategies should be grounded in Bakhtin’s principles. This implies that in each facet of product development, be it in marketing campaigns or managerial decisions, the multitude of stakeholder voices are not only acknowledged but also become central to shaping the direction. Rather than seeking a monologic consensus, strategies should promote an ecosystem wherein diverse voices unite, guiding the product’s evolution in dialogic interactions.

1.3. Illustrative context of OpenAI

To expound upon the functional details of the proposed framework, this research undertakes an empirical analysis of ChatGPT, positing it as an illustrative case study.

The exploration of users’ voices within a single classical case study reveals sentiment analysis results from tweets associated with ChatGPT. “Case study research is one method that excels at bringing us to an understanding of a complex issue and can add strength to what is already known through previous research” (Dooley, Citation2002, p. 335).

ChatGPT is an artificial intelligence chatbot developed by OpenAI. GPT in ChatGPT signifies its capabilities: “Generative” for producing text, “Pre-training” referencing its method of applying prior data for new tasks, and “Transformer” for analyzing relationships within datasets. (Haleem et al., Citation2022; Street & Wilck, Citation2023). Although the primary purpose of a chatbot is to simulate human-like conversations, ChatGPT displays a wide range of capabilities such as generating computer codes, emulating the communication style of famous CEOs, creating musical pieces, screenplays, stickers, student essays, poetry, song lyrics, and fairytales, summarizing written content (Haleem et al., Citation2022), creating medical documentation (Chow et al., Citation2023), augmenting mental health care and psychological therapy (Uludag, Citation2023) and many more However, some analytics are less impressed with ChatGPT’s human-like abilities. In Chomsky’s sceptical words, “Roughly speaking, they take huge amounts of data, search for patterns in it and become increasingly proficient at generating statistically probable outputs – such as seemingly humanlike language and thought.” (Chomsky et al., Citation2023).

Next, following the contours of the polyphonic framework, select stakeholders are methodically assessed with an emphasis on the voices of ChatGPT and its user cohort.

1.3.1. Educators and trainers

This group includes individuals and institutions responsible for imparting knowledge about AI-based products, from formal education settings to corporate training programs. Their narrative revolves around the pedagogy of AI, its integration into curricula, and its capacity to prepare the next generation for a tech-centric future (Blikstein, Citation2015).

1.3.2. Investors and financial stakeholders

These are individuals or institutions that provide capital to AI initiatives. Their perspective is rooted in the product’s financial viability, growth potential, and market competition. Their voice stresses the economic implications, potential returns on AI investments, and the effect of AI technologies on business decisions (Metcalf et al., Citation2019).

1.3.3. Industry partners and collaborators

These entities often engage in partnerships, collaborations, or even competition with AI product firms. Their narrative adds a layer of market dynamics, highlighting industry standards, interoperability issues, and strategic alignments.

1.3.4. Ethics advocates and watchdog groups

Numerous scholarly investigations have deliberated on the subject of AI ethics, including algorithmic bias (Gardner, Citation2022). In reaction to these concerns, both national and international institutions organized specialized expert committees dedicated to AI (Jobin et al., Citation2019, p. 389). These groups often provide an external check on the technology, advocating for its ethical use, fairness, transparency, and accountability. Given the profound societal implications of AI, these professionals provide a critical perspective on the moral, ethical, and societal facets of AI products. Their voice in the polyphony emphasizes responsible and conscious AI technology development and deployment.

1.3.5. Endorsements and influencers

In today’s digital age, influencers play a significant role in shaping public perception of products, including AI-based products (Devlin et al., Citation2019).

Their narrative, often grounded in personal experiences and broader audience engagement, can impact a product’s market acceptance and popularity.

1.3.6. Non-Profit and civil society organizations

These entities often operate from a position of public interest, voicing concerns about privacy, inclusivity, and the broader societal impacts of AI. Their narrative adds a layer of checks and balances to the promotional discourse.

1.3.7. Cultural and community representatives

Different cultures and communities might have unique perspectives on AI, shaped by their historical, religious, or sociocultural contexts. Their voice ensures that the polyphonic framework is globally resonant and culturally sensitive.

1.3.8. Environmental advocates

With increasing emphasis on sustainable technologies, this stakeholder group can shed light on the environmental implications of AI infrastructures, ensuring that AI products consider their carbon footprint and ecological impact.

1.3.9. Developers

These technical custodians drive the AI product from conception to execution (Karimova, Citation2022). Their narrative provides insights into design choices, ethical considerations, and technological constraints.

1.3.10. Researchers

This group primarily shapes technology from a technical perspective, including algorithms, architectures, training methodologies, etc. Their voice in the polyphonic mix is one of innovation and forward-looking vision.

1.3.11. Regulatory bodies and policy makers

Stakeholders influence how the technology is deployed, setting regulations and guidelines for ethical, legal, and socially responsible use. Their narrative ensures that AI products are ethically grounded, transparent, and socially responsible.

1.3.12. Branding and marketing managers

These stakeholders are instrumental in positioning AI-based products within the consumer psyche and broader market landscape (Karimova, Citation2022). Through strategic branding exercises and targeted marketing campaigns, they translate the technical capabilities of AI tools into tangible benefits for the end users (Kotler et al., Citation2022).

1.3.13. Other technologies

Interactions with other technologies significantly shape the evolution and utility of any AI-based product. In the ecosystem of digital solutions, technologies do not exist in isolation; they co-evolve, often synergizing their capabilities to offer enhanced user experiences (Adner, Citation2017). An example of such interaction with other technologies is the integration of ChatGPT with DALL-E 3, an image generation model, which has expanded ChatGPT’s capabilities from text generation to creating visual content based on textual prompts.

1.3.14. Global corporations

This group encompasses multinational corporations and major tech companies. These organizations have a substantial effect on how AI technologies are perceived, covered in the media, and, ultimately, used in society. They can promote certain technologies, influence regulatory decisions, and shape public opinion (Bonkowski & Smith, Citation2023). They might also directly fund or influence research and development in AI, leading to potential biases in technological development that reflect their interests or ideology.

1.3.15. Media

The media is shaping, interpreting, or challenging the narratives of other stakeholders. Recognizing their power in shaping public opinion, their voice in the polyphonic mix can act as both a harmonizer and a counterpoint, ensuring that the promotion is balanced and reaches diverse audiences. Media coverage not only molds the public’s understanding of technology but also impacts its development and adoption (Ouchchy et al., Citation2020), necessitating an analysis of AI’s portrayal in both general media (Lachman & Joffe, Citation2021) and specialized domains like popular culture and science fiction films (Lorenčik et al., Citation2013). An emphasis on embodiment, a tendency toward utopian or dystopian extremes, and a lack of diversity in creators, characters, and types of AI are all common characteristics of recent popular AI stories (Cave et al., Citation2018, p. 4). Such portrayals have been described as overstated or polarized, often either excessively optimistic about what the technology might achieve (Dubljević et al., Citation2014) or “melodramatically pessimistic” (The Royal Society, Citation2018, p. 9). The human-robot interaction is presented through stereotypical “good” and “bad” notions “such as robots as super-heroes that save the planet or as evil intelligence that enslaves mankind” (Bruckenberger et al., Citation2013, p. 302). This media representation leads to mixed feelings, uncertainty (Piçarra, 2016), and a cautious attitude toward AI-based innovations.

1.3.16. “Non-human” stakeholder

Following the Actor-Network Theory (ANT), the AI product is an active participant as it continually learns, evolves, and interacts, generating its own set of narratives and experiences. Its voice in polyphony represents the blend of technology, data, and algorithms, contributing to an ever-evolving narrative.

Continuing from Humphreys (Citation2005) invaluable interpretation of social groupings, the ANT proposes an intriguing perspective to our understanding of technological interplays by introducing the non-human actant – in our case, the AI-based product itself. ChatGPT transcends its status as a passive artifact to become an active participant. It evolves through iterative learning, shapes user experience, influences societal perceptions of AI, and even informs policy decisions. As a “non-human” stakeholder, it does not merely respond to human actors but engages in reciprocal exchanges, contributing to the co-creation of its meaning and function in the larger social and technological landscape.

In response to an inquiry regarding the prevailing themes associated with ChatGPT 4, the subsequent answer followed (OpenAI, November 21, 2023):

AI Ethics and Responsibility: ChatGPT, like any AI, raises questions about ethical use, bias in data, and the responsibility of creators and users in deploying AI technology responsibly.

Human-AI Interaction: The ways in which humans interact with AI, including reliance, skepticism, and the evolving nature of this relationship, are central themes. ChatGPT is often seen as a bridge between complex AI systems and everyday users.

Education and Learning: Many people, including educators, researchers, and students, are exploring the use of ChatGPT in learning environments, from aiding in research to teaching language skills.

AI’s Impact on Employment: There’s an ongoing debate about how AI, including chatbots like ChatGPT, might affect the job market, potentially automating some roles while creating others.

Technological Advancement and Limitations: The capabilities and limitations of AI in understanding and generating human language, as well as the technical evolution of models like ChatGPT, are of keen interest.

1.3.17. Users

The end-users are the recipients of the AI solution’s value proposition. Their voices carry lived experiences, aspirations, and concerns. In a polyphonic setting, their feedback and use cases become central, dictating product adaptations, and shaping its narrative in real-world contexts. AI technologies should be designed and developed with the end user in mind, focusing on usability, accessibility, inclusivity, and value creation.

Since customers now express their opinions more freely than ever before, especially on e-commerce platforms (e.g., Amazon, eBay), social media platforms (e.g., Twitter, LinkedIn), consumer travel aggregators (e.g., Expedia, Booking), and review sites (e.g., TripAdvisor), comprehending end users’ perceptions is crucial for companies. Automatically assessing client feedback via social media conversations enables firms to understand their audience and adjust their products and services accordingly (Venugopalan & Gupta, Citation2022). Sentiment analysis, also referred to as opinion mining, is one of the text analysis methods that computationally finds topics from media text and categorizes opinions as positive, negative, or natural sentiment by using Natural Language Processing (NLP) (Agarwal et al., Citation2015). It is efficiently utilized across diverse fields, including healthcare (Chow et al., Citation2023; Kashif et al., Citation2017), politics (Brandon & Deng, Citation2017), and aviation (Luis et al., Citation2019), as well as in assessing public attitudes towards emerging technologies like IoT (Bian et al., Citation2016).

Utilizing sentiment analysis, this study mines opinions from Tweets to capture early adopters’ perspectives on ChatGPT by OpenAI, introduced as a prototype in November 2022. Such analysis, informed by keywords and topics, can guide cost-effective strategies for product enhancement and promotion (Alantari et al., Citation2022).

1.4. Sentiment analysis

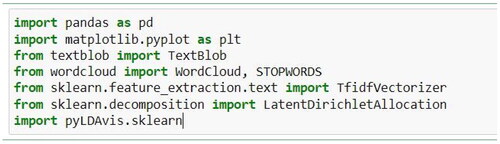

In this study, sentiment analysis was conducted employing the Python programming language. It is known for its libraries and tools in data science, such as Pandas for data manipulation, NLTK (Natural Language Toolkit) and Scikit-learn for natural language processing and machine learning, which were employed to enable the various stages of sentiment analysis. These stages consist of data collection, pre-processing, feature extraction, and the application of machine-learning techniques for classification (Gupta et al., Citation2017, p. 29).

The Python environment enabled efficient handling and analysis of large datasets, which is critical for applications like social media monitoring, reputation management, and customer experience investigation (Haruna et al., Citation2014).

Furthermore, our methodology incorporated both machine learning and lexicon-based approaches to implement and compare these techniques. Machine learning approaches in our study involved using Python’s algorithmic functions to extract and detect sentiment, while lexicon-based approaches utilized Python’s text processing features to count positive and negative words in the data (Drus & Khalid, Citation2019, p. 708).

The case study of ChatGPT sentiment analysis by Haque et al. (Citation2022) exemplifies the application of these methodologies. Their approach was replicated and extended by applying Latent Dirichlet Allocation (LDA) modelling for topic identification and sentiment analysis of the identified topics, thereby providing a comprehensive understanding of public opinion on ChatGPT.

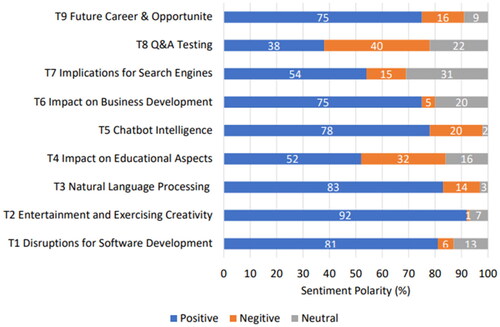

The analysis demonstrates a few dominating themes revealed by researchers (): the disruption that ChatGPT may cause for software development, its entertainment and creativity capacities, and its effect on future careers and opportunities. Most topics evoked positive emotions. For instance, ChatGPT was applied for “entertainment purposes, generating poems, jokes or other humorous write-ups” (Ul Haque et al., Citation2022). The topic that raised the most public concern and negative attitude was the impact of the ChatGPT on education. Utilizing ChatGPT for writing essays and preparing assignments may impede students’ learning process. Other concerns include plagiarism detection for the students’ assignments and superficial answers that ChatGPT may provide in response to research questions.

Figure 4. Results of the qualitative sentiment analysis per topic.

(Source: Haque, M., Dharmadasa, I., Sworna, Z.T., Rajapakse, R.N., Ahmad, H. (2022). I think this is the most disruptive technology, Exploring Sentiments of ChatGPT Early Adopters using Twitter Data, p. 7, Cornel University, Retrieved from http://arXiv:2212.05856).

Bukar et al. (Citation2023) performed an in-depth text analysis of LinkedIn posts about ChatGPT adopting VOSviewer. The findings revealed that users express concern with plagiarism, citations, manuscripts, papers, and literature reviews. These results indicate that ChatGPT has the potential to advance academic progress (new knowledge, thought, etc.) and, at the same time, enhance academic abuse (plagiarism, inaccuracy, etc.) (Bukar et al., Citation2023).

The study conducted by Tlili et al. (Citation2023) employed social network analysis (SNA) to perform a cross-sectional analysis of tweets (Hansen et al., Citation2010) on education. The study findings point to a generally optimistic prognosis for ChatGPT in educational contexts, but others expressed dissenting voices about its possible effects (Tlili et al., Citation2023), such as a risk of diminishing students’ critical thinking and problem-solving skills and a decrease in direct human interaction and communication as students may become more accustomed to interfacing with AI.

1.5. Data analysis

In the present research, a comprehensive analysis was conducted on the dataset made publicly accessible via Kaggle, adhering to the algorithmic procedure delineated in Appendix 1 (https://www.kaggle.com/datasets/sanlian/tweets-about-chatgpt-march-2023). The dataset encompasses 100,000 tweets, all pertaining to ChatGPT, and written exclusively in English. These were collated over the span from March 18, 2023, to March 21, 2023.

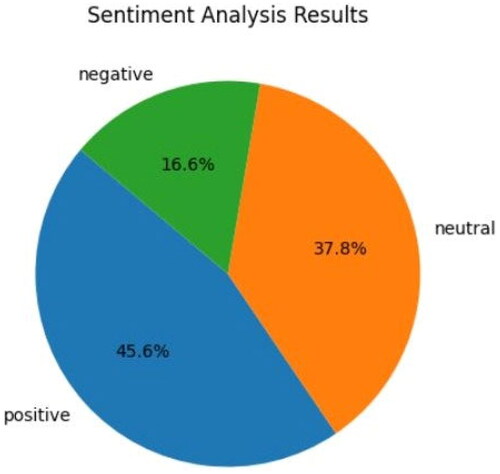

To visualize the sentiment analysis results, the number of tweets with positive, negative, and neutral sentiments expressed over the span of four days (18–21 March 2023) is examined and displayed using the pie chart ().

Compared to prior research, the current results reveal a consistent sentiment among early adopters of ChatGPT. Haque et al. (Citation2022) indicated a predominant positive disposition towards ChatGPT-related topics; this sentiment remains consistent, with data from March 2023 showing a similar positive inclination among ChatGPT’s users, constituting approximately forty-five percent. Furthermore, while it is plausible to postulate an escalating public concern regarding the implications of novel technologies, such apprehensions may not impede their adoption. The allure of promised convenience and benefits may outweigh potential reservations. As is aptly put by Narr (Citation2022, p. 72), “As AI penetrates increasing domains of everyday life, it is working to colonize and manipulate the unconscious for profitable extraction. This makes it important to remedy the harms of AI at the same time as those harms become harder to see.”

The notable proportion of neutral responses (approximately thirty-eight percent) may suggest a sense of prudence or indecision regarding the capabilities of ChatGPT and their potential implications. After all, the inflated enthusiasm surrounding the potential of ChatGPT is not a universally shared sentiment. As articulated by Noam Chomsky et al. (Citation2023), “that long-prophesied moment when mechanical minds surpass human brains […] may come, but its dawn is not yet breaking, contrary to what can be read in hyperbolic headlines and reckoned by injudicious investments” (Chomsky et al., Citation2023).

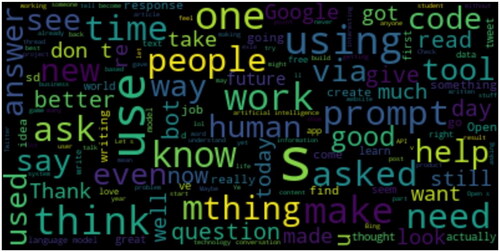

Word Cloud chart () aesthetically illustrates key points of discourse (Insight Software, Citation2022), built around ChatGPT by its early adopters. From the Word Cloud chart, it is apparent that some dominant sentiments are related to the help that ChatGPT offers and the effect it may have on improving the quality of work. Think, write, code, question, and answer are other salient words uncovered by sentiment analysis.

Latent Dirichlet Allocation (LDA) (Blei et al., Citation2003) topic modeling was performed on all tweets from the document numerous times with different numbers of topic clusters. The number of latent topics must be pre-determined before running the clustering algorithm because LDA topic modeling is unable to assess on its own how many latent topics are within a series of documents (Yun et al., Citation2020). “One of the weaknesses of topic modeling is that much of the work is ultimately done by humans in determining what topics are indicated by various word clusters.”

The most intuitive outcomes are presented with five total latent topic clusters using the Gensim Python library. For example, Topic 4 appears to be related to general search engines and has keywords such as “Microsoft,” “Google,” “Bing,” and “search.”

An example of Topic 4 outcome:

'0.072*"openai" + 0.064*"microsoft" + 0.054* "google" + 0.033*"bing" + 0.031*"chatbot" + 0.030*"search" + 0.026*"baidu" + 0.023*"users" + 0.021*"india" + 0.019*"ernie"

Topic 1: This topic appears to be about cryptocurrencies and related technologies such as NFTs. Elon Musk, who is known for his interest in cryptocurrencies, appears on this topic, indicating that it may be related to recent news and events in crypto space.

Topic 2: This topic appears to be more general, related to the broader field of artificial intelligence, perhaps discussing its applications, potential, and limitations.

Topic 3: This topic seems to be about generative models such as OpenAI’s Codex or GPT-3, with mentions of “copilot” and “generative” in the top keywords. Other terms, such as “cybersecurity,” “developers,” and “price,” suggest that this topic may be about the practical applications of generative models in different contexts.

Topic 4: This topic appears to be about different search engines and chatbots, including Google, Bing, and Baidu. The mention of “open AI” in this topic may be related to how OpenAI’s models compare to other chatbots and search engines.

Topic 5: This topic may be related to Siri and Apple’s other AI products, as indicated by the presence of “Apple” and “Siri” in the top keywords. The mention of “bitcoin” in this topic suggests that it may also be related to cryptocurrencies and their adoption.

Overall, these topics provide a glimpse into the types of conversations people are having on Twitter about ChatGPT and related topics.

2. Discussion

2.1. Non-human stakeholder’s voice

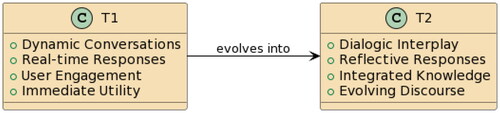

The evolution of ChatGPT’s interaction capabilities is best understood through a comparative analysis of two answers provided by ChatGPT 4 at distinct temporal points, designated as T1 and T2 ().

At Timepoint T1: The focus is on the immediate, tangible aspect of ChatGPT’s functionality – its “Interactivity.” Here, ChatGPT is primarily recognized for its ability to engage users through dynamic and responsive conversations. It accurately handles a diverse range of queries in real-time, showcasing its immediate responsiveness.

Transitioning to Timepoint T2: The narrative shifts to a more profound understanding of ChatGPT’s role, aligning with the Bakhtinian concept of “Dialogic Interplay.” This perspective views each interaction with ChatGPT 4 as more than just a response to a user’s query; it is a reflection of an ongoing dialogue that integrates the user’s input with the collective knowledge embedded within the AI. This interaction is not static but a dynamic, evolving discourse that continuously reshapes both the AI and the user’s perspectives.

The Evolutionary Process: This transition from T1 to T2 is symbolic of a continuous evolution within the dialogical framework. ChatGPT, as a non-human stakeholder, is not a static entity but is in a perpetual state of becoming. Through its interactions, it constantly appropriates and changes, absorbing and reflecting the knowledge and inputs from human beings. In essence, ChatGPT transforms over time, becoming the “other” that is shaped by and shapes the dialogic relationships it engages in and so does the user.

2.2. User’s voice

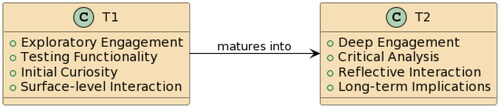

The same recursive cyclic process can be noticed in users if we compare the results of sentiment analysis conducted at different periods of time. “As the self undergoes the process of change, so does its perception of what the other is.” (Karimova, Citation2011, p. 468). This process is apparent in the continuous re-articulation and detection of new themes by the users in relation to ChatGPT.

We can trace the changes in users’ interactions with ChatGPT from T1 to T2 ().

At Timepoint T1 (Initial Stage of ChatGPT Adoption): Users were exploring the novelty of ChatGPT’s capabilities, engaging with the AI primarily to test its responsiveness and range. The sentiment analysis from this period shows a predominance of curiosity-driven interaction, with users primarily focused on exploring the functional aspects of ChatGPT, such as its ability to conduct dynamic conversations and handle a diverse range of queries in real-time. The language and sentiment reflected excitement and surprise at the AI’s immediate responsiveness.

Transitioning to Timepoint T2 (As ChatGPT Matured): The nature of user interaction evolved to a more sophisticated engagement, aligning with deeper conceptual understandings of AI’s potential. Users began to view their interactions as part of a broader dialogue, not just with the AI but with the collective knowledge it accesses. The sentiment analysis indicates a shift toward more reflective and critical engagement, with discussions around the implications of AI integration into daily life, ethical considerations, and the potential impact on various domains like education and employment. There is an evident progression from a focus on novelty to considering the broader implications of AI technology.

The Evolutionary Process: From T1 to T2, the users’ roles transformed from testers and early adopters to stakeholders actively participating in shaping the narrative around ChatGPT. As the AI itself became more sophisticated in its responses and capabilities, so did the users’ approaches to engaging with it. The dialogic interplay became more complex, and the discourse around ChatGPT matured to include not only its immediate functionalities but also its long-term societal impact, ethical considerations, and its role as a tool in various professional fields.

It is evident how the voices instantaneously influence, and are being influenced by, each other. At each co-creation, there are remnants of the influence of interaction that took place the moment before. This is how the recursive nature of dialogue manifests itself in each interaction. In other words, “polyphony is the result of understanding, experience, and knowledge of self and other by self in the continuum of time and space.” (Karimova, Citation2011, p. 468).

3. Conclusion

This study introduces the polyphonic framework to unravel the complex stakeholder dynamics shaping the development of AI-based technologies. The strength of the polyphonic model resides not just in its ability to bring forth the plurality of these voices, as several antecedent models did, but in its focus on the recursive cyclical nature of dialogic relationships and an evolving exchange that perpetually transforms both the AI and its users. This examination posits AI as a non-human stakeholder, reciprocally shaping and being shaped by the discourses aspiring to understand it. To demonstrate dialogical relations between this non-human stakeholder and users, the research displays the transformations that ChatGPT 4 and the users go through over time. To achieve this, the study employs an interview with ChatGPT 4 and sentiment analysis of tweets from its early adopters powered by a lexicon-based approach.

The study’s findings accentuate a dynamic interplay between technology and user and their transformations at different points in time (T1 and T2). Initially, users interacted with ChatGPT within the scope of its immediate capabilities, highlighting its role as an interactive tool capable of addressing real-time queries (T1). Over time, this interaction matured, reflecting a deeper, more contemplative engagement. Users began to notice the broader implications of ChatGPT’s integration into their workflows and societal discourse (T2). This shift from a reactive engagement with AI to a more proactive and thoughtful interaction suggests a significant evolution in the user’s role – from consumers to active participants in the technology’s ongoing development. These stages of engagement, from T1’s exploration to T2’s reflective dialogue, not only parallel the advancements of AI but also signal a co-evolutionary path where both users and AI are in a constant state of metamorphosis and adaptation.

Yet, this evolution raises critical questions about the less discussed, perhaps even overlooked, aspects of AI integration. The most salient observation is not the topics dominating discourse but rather the areas conspicuously absent from it, such as increasing cognitive dependence, cultural homogenization, anthropomorphization of AI, eradication of ephemeral knowledge, amplification of historical biases, and many more.

It seems there was a little concern that the information the users feed to AI can threaten human freedom. By allowing AI to handle a range of functions – from cognitive activities like thinking and writing to operational tasks such as programming and content creation – people may unintentionally relinquish control to these AI-based systems.

In a search for convenience, there appears to be a gradual renunciation of decision-making capacities, consequently amplifying corporate control over the populace. Paradoxically, while AI’s genesis is intrinsically tied to human innovation, there emerges the scenario where humans, in their increasing reliance on AI for task efficiency, become trapped by their own creation (Kim et al., Citation2021). “Across diverse socio-cultural landscapes, there emerges a tangible propensity for individuals to increasingly lean on platforms like ChatGPT, relegating crucial tasks initially of limited scope but gradually expanding in significance” (Baird & Maruping, Citation2021). This tendency is particularly noticeable in developing nations where expert scarcity in niche domains positions ChatGPT as the arbitrator of knowledge (Dwivedi et al., Citation2023). As we start this co-evolutionary process with AI, guided by the dialogic model, we should recognize our intertwined destinies with these technologies, developing higher sensitivity toward the capabilities we endow AI as in shaping AI we are simultaneously sculpting our future selves. Thus, the choices we make today in AI development are not just technical decisions, but deeply human ones.

Disclosure statement

No potential conflict of interest was reported by the author.

Additional information

Notes on contributors

Gulnara Z. Karimova

Gulnara Z. Karimova possesses a unique combination of practical experience and theoretical understanding in the field of marketing communications specializing in marketing artificial intelligence. Her research has been published in prestigious journals such as the Journal of Consumer Marketing and the Journal of Marketing Communications.

References

- Adner, R. (2017). Ecosystem as structure: An actionable construct for strategy. Journal of Management, 43(1), 39–58. https://doi.org/10.1177/0149206316678451

- Agarwal, B., Mittal, N., Bansal, P., & Garg, S. (2015). Sentiment analysis using common-sense and context information. Computational Intelligence and Neuroscience, 2015(N 2015), 715730. https://doi.org/10.1155/2015/715730

- Alantari, H. J., Currim, I. S., Deng, Y., & Singh, S. (2022). An empirical comparison of machine learning methods for text-based sentiment analysis of online consumer reviews. International Journal of Research in Marketing, 39(1), 1–19. https://doi.org/10.1016/j.ijresmar.2021.10.011

- Baird, A., & Maruping, L. M. (2021). The next generation of research on IS use: A theoretical framework of delegation to and from agentic IS artifacts. MIS Quarterly, 45(1), 315–341. https://doi.org/10.25300/MISQ/2021/15882

- Bakhtin, M. (2003). Problems of Dostoevsky’s poetics. (C. Emerson, Trans.). University of Minnesota Press.

- Bian, J., Yoshigoe, K., Hicks, A., Yuan, J., He, Z., Xie, M., Guo, Y., Prosperi, M., Salloum, R., & Modave, F. (2016). Mining Twitter to assess the public perception of the “Internet of Things. PLoS One, 11(7), e0158450. 2016. https://doi.org/10.1371/journal.pone.0158450

- Bijker, W. E., Hughes, T. P., & Pinch, T. (2012). The social construction of technological systems: New directions in the sociology and history of technology. MIT press.

- Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent dirichlet allocation. Journal of Machine Learning Research, 3, 993–1022. https://dl.acm.org/doi/10.5555/944919.944937

- Blikstein, P. (2015). Computationally enhanced toolkits for children: Historical review and a framework for future design. Foundations and Trends® in Human–Computer Interaction, 9(1), 1–68. https://doi.org/10.1561/1100000057

- Bonkowski, E., & Smith, H. S. (2023). The other side of the self-advocacy coin: How for-profit companies can divert the path to justice in rare disease. The American Journal of Bioethics: AJOB, 23(7), 88–91. https://doi.org/10.1080/15265161.2023.2207521

- Brandon, J., & Deng, J. (2017). Sentiment analysis of Tweets for the 2016 US presidential election. In IEEE MIT undergraduate research technology conference (URTC). IEEE.

- Bruckenberger, U., Weiss, A., Mirnig, N., Strasser, E., Stadler, S., & Tscheligi, M. (2013). The good, the bad, the weird: Audience evaluation of a “real” robot in relation to science fiction and mass media. In: Herrmann G., Pearson M. J., Lenz A., Bremner P., Spiers A., & Leonards U. (Eds.), Social robotics. ICSR 2013. Lecture notes in computer science (Vol. 8239). https://doi.org/10.1007/978-3-319-02675-6_30

- Bukar, U., Sayeed, M. S., Razak, S. F. A., Yogarayan, S., Amodu, O. A. (2023). Text analysis of Chatgpt as a tool for academic progress or exploitation. In SSRN. https://ssrn.com/abstract=4381394

- Bunn, M. D., Savage, G. T., & Holloway, B. B. (2002). Stakeholder analysis for multi‐sector innovations. Journal of Business & Industrial Marketing, 17(2/3), 181–203. https://doi.org/10.1108/08858620210419808

- Cave, S., Craig, C., Dihal, K., Dillon, S., Montgomery, J., Singler, B., Taylor, L. (2018). Portrayals and perceptions of AI and why they matter. (DES5612). https://royalsociety.org/∼/media/policy/projects/ai-narratives/AI-narratives-workshop-findings.pdf

- Chomsky, N., Roberts, Y., Watumull, J. (2023). The false promise of ChatGPT. New York Times, March 8, 2023. https://www.nytimes.com/2023/03/08/opinion/noam-chomsky-chatgpt-ai.html

- Chow, J. C. L., Sanders, L., & Li, K. (2023). Impact of ChatGPT on medical chatbots as a disruptive technology. Frontiers in Artificial Intelligence, 6, 1–4. https://doi.org/10.3389/frai.2023.1166014​

- Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. In J. Burstein, C. Doran, & T. Solorio (Eds.), Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Vol. 1. Long and Short Papers (pp. 4171–4186). Association for Computational Linguistics. https://doi.org/10.18653/v1/N19-1423

- Dooley, L. M. (2002). Case study research and theory building. Advances in Developing Human Resources, 4(3), 335–354. https://doi.org/10.1177/1523422302043007

- Drus, Z., & Khalid, H. (2019). Sentiment analysis in social media and its application: Systematic literature review. Procedia Computer Science, 161, 707–714. https://doi.org/10.1016/j.procs.2019.11.174

- Dubljević, V., Saigle, V., & Racine, E. (2014). The rising tide of tDCS in the media and academic literature. Neuron, 82(4), 731–736. https://doi.org/10.1016/j.neuron.2014.05.003

- Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., Baabdullah, A. M., Koohang, A., Raghavan, V., Ahuja, M., Albanna, H., Albashrawi, M. A., Al-Busaidi, A. S., Balakrishnan, J., Barlette, Y., Basu, S., Bose, I., Brooks, L., Buhalis, D., … Wright, R. (2023). So what if ChatGPT wrote it? Multidisciplinary perspectives on opportunities, challenges, and implications of generative conversational AI for research, practice, and policy. International Journal of Information Management, 71(N), 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642

- Fjeld, J., Achten, N., Hilligoss, H., Nagy, A., & Srikumar, M. (2020). Principled artificial intelligence: Mapping consensus in ethical and rights-based approaches to principles for AI. Berkman Klein Center Research Publication.

- Gardner, A. (2022). Responsibility, recourse, and redress: A focus on the three R’s of AI ethics. In IEEE Technology and Society Magazine, Vol. 41, N. 2, pp. 84–89. https://ieeexplore.ieee.org/abstract/document/9794766 https://doi.org/10.1109/MTS.2022.3173342

- Goby, V. P., & Karimova, G. Z. (2023). Addressing the inherent ethereality and mutability of organizational identity and image: A Bakhtinian response. International Journal of Organizational Analysis, 32(2), 286–298. https://doi.org/10.1108/IJOA-01-2023-3608

- Gupta, B., Negi, M., Vishwakarma, K., Rawat, G., & Badhani, P. (2017). Study of Twitter sentiment analysis using machine learning algorithms on Python. International Journal of Computer Applications, 165(9), 29–34. Vhttps://doi.org/10.5120/ijca2017914022

- Güngör, H. (2020). Creating value with artificial intelligence: A multi-stakeholder perspective. Journal of Creating Value, 6(1), 72–85. https://doi.org/10.1177/2394964320921071

- Heslop, L. A., McGregor, E., & Griffith, M. (2001). The cloverleaf model of technology transfer: A comprehensive approach. Journal of Technology Transfer, 26(1), 1–15. https://doi.org/10.1023/A:1011139021356

- Hansen, D., Shneiderman, B., & Smith, M. A. (2010). Analyzing social media networks with NodeXL: Insights from a connected world. Morgan Kaufmann.

- Haleem, A., Javaid, M., & Singh, R. P. (2022). An era of ChatGPT as a significant futuristic support tool: A study on features, abilities, and challenges. Bench Council Transactions on Benchmarks, Standards, and Evaluations, 2(4), 1–8. https://doi.org/10.1016/j.tbench.2023.100089

- Haque, M., Dharmadasa, I., Sworna, Z. T., Rajapakse, R. N., Ahmad, H. (2022). I think this is the most disruptive technology, Exploring Sentiments of ChatGPT Early Adopters using Twitter Data, Cornel University. http://arXiv:2212.05856

- Haruna, I., Trundle, P., & Neagu, D. (2014). Social media analysis for product safety using text mining and sentiment analysis. In 14th UK workshop on computational intelligence. IEEE.

- Humphreys, L. (2005). Reframing social groups, closure, and stabilization in the social construction of technology. Social Epistemology, 19(2-3), 231–253. https://doi.org/10.1080/02691720500145449

- Insight Software. (2022). Visualizing text analysis results with Word Clouds. https://insightsoftware.com/blog/visualizing-text-analysis-results-with-word-clouds/

- Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389–399. https://doi.org/10.1038/s42256-019-0088-2

- Karimova, G. Z. (2022). A personality-grounded framework for designing artificial intelligence-based product appearance. International Journal of Human - Computer Interaction, 1–13. https://doi.org/10.1080/10447318.2022.2150744

- Karimova, G. Z. (2011). Literary criticism and interactive advertising: Bakhtinian perspective on interactivity. Communication and Medicine, 36(4), 463–482. https://doi.org/10.1515/comm.2011.023

- Kashif, A., Dong, H., Bouguettaya, A., Erradi, A., & Hadjidj, R. (2017). Sentiment analysis as a service: A social media-based sentiment analysis framework. In IEEE international conference on web services (ICWS). IEEE.

- Kim, T. W., Maimone, F., Pattit, K., José Sison, A., & Teehankee, B. (2021). Master and slave: The dialectic of human-artificial intelligence engagement. Humanistic Management Journal, 6(3), 355–371. https://doi.org/10.1007/s41463-021-00118-w

- Kotler, P., Keller, K. L., & Chernev, A. (2022). Marketing management. Global Edition (16th ed.). Pearson Education Limited.

- Kujala, J., Sachs, S., Leinonen, H., Heikkinen, A., & Laude, D. (2022). Stakeholder engagement: Past, present, and future. Business & Society, 61(5), 1136–1196. https://doi.org/10.1177/00076503211066595

- Lachman, R., & Joffe, M. (2021). Applications of artificial intelligence in media and entertainment. In Analyzing future applications of AI, sensors, and robotics in society (pp. 201–220). IGI Global.

- Lorenčik, D., Tarhaničová, M., Sinčák, P. (2013). Influence of Sci-Fi films on artificial intelligence and vice-versa. IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/6480990

- Luis, M., Martin, J. C., & Mandsberg, G. (2019). Social media as a resource for sentiment analysis of airport service quality (ASQ). Journal of Air Transport Management, 78(NC), 106–115. Volhttps://doi.org/10.1016/j.jairtraman.2019.01.004

- Marr, B. (2019, December 16). The ten best examples of how AI is already used in our everyday life, Forbes, December 186, 2019. Retrieved from (https://www.forbes.com/sites/bernardmarr/2019/12/16/the-10-best-examples-of-how-ai-is-already-used-in-our-everyday-life/?sh=10b117a21171

- Meske, C., Bunde, E., Schneider, J., & Gersch, M. (2022). Explainable artificial intelligence: Objectives, stakeholders, and future research. Opportunities, Information Systems Management, 39(1), 53–63. https://doi.org/10.1080/10580530.2020.1849465

- Metcalf, L., Askay, D. A., & Rosenberg, L. (2019). Keeping humans in the loop: Pooling knowledge through artificial swarm intelligence to improve business decision making. California Management Review, 61(4), 84–109. https://doi.org/10.1177/0008125619862256

- Mitchell, R. K., Agle, B. R., & Wood, D. J. (1997). Toward a theory of stakeholder identification and salience: Defining the principle of who and what really counts. The Academy of Management Review, 22(4), 853–886. https://doi.org/10.2307/259247

- Narr, G. (2022). The coloniality of desire: Revealing the desire to be seen, and blind spots leveraged by data colonialism as AI manipulates the unconscious for profitable extraction on dating apps. Revista Fronteiras – Estudos Midiáticos, 24(3), 72–84. https://doi.org/10.4013/fem.2022.242.07

- OpenAI. (2023). ChatGPT 4 [Large language model]. https://chat.openai.com/c/31a0364b-f8ae-4d3d-b42a-3fdfc0cf7e34

- Ouchchy, L., Coin, A., & Dubljević, V. (2020). AI in the headlines: The portrayal of the ethical issues of artificial intelligence in the media. AI & Society, 35(4), 927–936. https://doi.org/10.1007/s00146-020-00965-5

- The Royal Society. (2018). Portrayals and perceptions of AI and why they matter. https://royalsociety.org/-/media/policy/projects/ainarratives/AI-narratives-workshop-fndings.pdf

- Piçarra, N., Giger, J. C., Pochwatko, G., & Gonçalves, G. (2016). Making sense of social robots: A structural analysis of the layperson’s social representation of robots. Revue Européenne de Psychologie Appliquée, 66(6), 277–289. https://doi.org/10.1016/j.erap.2016.07.001

- Russell, S., & Norvig, P. (2010). Artificial intelligence: A modern approach. (3rd ed.). Prentice Hall.

- Street, D., & Wilck, J. (2023). ‘Let’s have a chat’: Principles for the effective application of ChatGPT and large language models in the practice of forensic accounting. Faculty Journal Articles, 15(2), 1–57. https://doi.org/10.2139/ssrn.4351817

- The Royal Society. (2018). Portrayals and perceptions of AI and why they matter. https://royalsociety.org/-/media/policy/projects/ai-narratives/AI-narratives-workshop-findings.pdf

- Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyeman, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10(15), 1–24. https://doi.org/10.1186/s40561-023-00237-x

- Uludag, K. (2023). The use of AI-supported chatbot in psychology, SSRN, January 20, 2023. https://ssrn.com/abstract=4331367

- Venugopalan, M., & Gupta, D. (2022). An enhanced guided LDA model augmented with BERT- based semantic strength for aspect term extraction in sentiment analysis. Knowledge-Based Systems, 246(C), 108668. https://doi.org/10.1016/j.knosys.2022.108668

- Yun, J. T., Duff, B. R. L., Vargas, P. T., Sundaram, H., & Itai, H. (2020). Computationally analyzing social media text for topics: A primer for advertising researchers. Journal of Interactive Advertising, 20(1), 47–59. https://doi.org/10.1080/15252019.2019.1700851

Appendix

Algorithm for performing sentiment analysis

1 Import necessary libraries

The first step is to import the required libraries. For sentiment analysis, we will use TextBlob for sentiment scoring, and for visualization, we will use Matplotlib and WordCloud. To import these libraries, run the following code:

2 Load data

Load the data from the CSV file using pandas. You can use the following code to read the CSV file:

Assuming the file is located in “C:\DATA SET,” and the file name is “chatgpt 2023 processed.csv.”

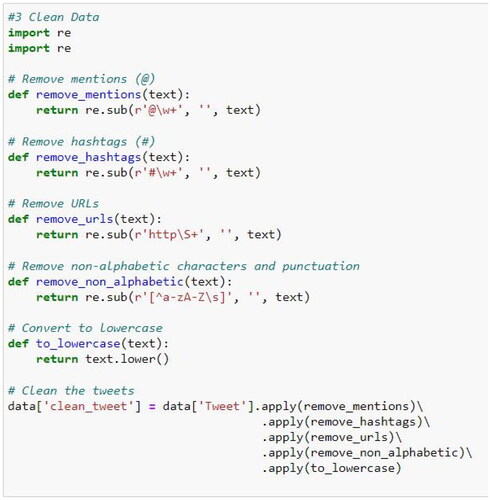

3 Clean data

After importing the data, the next step is to clean the text data. This process involves removing any unwanted characters or words that may affect the accuracy of sentiment analysis and topic modeling. Clean the data by removing unnecessary characters, converting all text to lowercase, and removing stop words. Here are the steps to clean the text:

Remove URLs, as they do not provide any valuable information for sentiment analysis and topic modeling.

Remove mentions (@username), as they do not provide any context to the sentiment or topic.

Remove hashtags (#hashtag), as they are not relevant for sentiment analysis and topic modeling.

Remove emojis, emoticons, and pictograms, as they do not provide any valuable information for sentiment analysis and topic modeling.

Remove special characters, such as punctuation marks and symbols, as they do not contribute to the sentiment or topic.

Convert all text to lowercase, as this will ensure that the sentiment analysis and topic modeling algorithms treat all words equally.

Remove stop words, such as “the,” “and,” and “a,” as they do not contribute to the sentiment or topic and may lead to noise in the analysis.

Stem or lemmatize the words, which involves reducing them to their root form. This can improve the accuracy of sentiment analysis and topic modeling by treating different forms of the same word as one.

Use the following code:

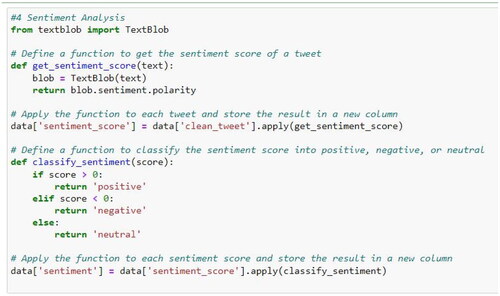

4 Perform sentiment analysis

To perform sentiment analysis on the tweets, we will use the TextBlob library. TextBlob is a Python library for processing textual data. It provides a simple API for sentiment analysis. We can use TextBlob to calculate the sentiment score for each tweet. Here is an example code to calculate the sentiment score for each tweet:

The above code will iterate through each tweet in the data and calculate the sentiment score using TextBlob. The sentiment score will be added to the sentiment_scores list.

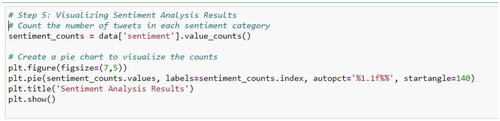

5 Visualize sentiment analysis results

To visualize the sentiment analysis results, one can create a pie chart or a bar chart to display the number of tweets with positive, negative, and neutral sentiments. Here is an example code to create the pie chart:

The above code will create a pie chart that displays the number of tweets with positive, negative, and neutral sentiments.

6 Create WordCloud

A word cloud is a visual representation of the most frequent words in a text. We can use the WordCloud library to create a word cloud for the tweets. Here is an example code to create a word cloud:

The above code will create a word cloud that displays the most frequent words in the tweets.

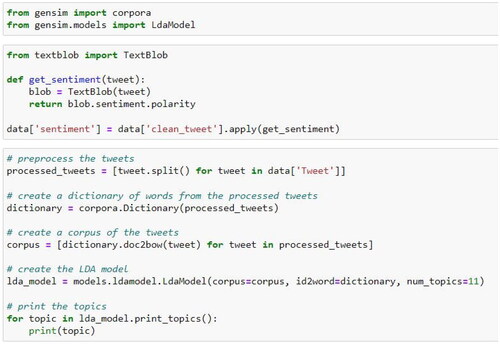

7 Perform topic modeling

Topic modeling is a technique that can be used to identify the topics that are being discussed in a text. We can use the Gensim library to perform topic modeling on the tweets. Here is an example code to perform topic modeling: