Abstract

This article presents a comprehensive Virtual Reality (VR) grasping taxonomy, which represents the common grasping patterns employed by users in VR and is directly comparable to real object grasping taxonomies. Because grasping is one of our primary interfaces with the physical world, seminal work has explored explicit grasping actions with real objects, aiming to define structured reasoning in the form of taxonomies. However, limited work has replicated this approach for immersive technology (i.e., VR). To address this, we present the first complete taxonomy of grasping interaction for VR, which builds on the body of work on real object grasping alongside recent approaches applied in VR. We present a formal elicitation study in which a Wizard of Oz (WoZ) methodology is applied with users (N = 50) tasked to grasp and translate virtual twins of real objects. We analyse 4800 grasps and structure the results into a formal taxonomy that details the frequency of all potential real grasps and draws comparisons with prior work in both real and VR grasping studies. Results highlight the reduced number of grasp types used in VR (27), and the differences in commonality, frequency and grasping approach between real and VR grasping taxonomies. Particular focus is given to the nuances of the grasp: via an in-depth evaluation of object properties, namely shape and size, we illustrate common trends in VR grasping. Results from this work are also combined with VR grasping findings from prior published work, leading to the presentation of the five most common grasps for VR and recommendations for future analysis and use in intuitive and natural VR systems.

1. Introduction

Driven by recent developments in consumer hardware, Virtual Reality (VR) is in a period of unprecedented growth, with off-the-shelf VR headsets providing highly immersive interactive environments and near-realistic experiences. However, the usability and effectiveness of VR systems commonly depend on several factors, and beyond immersion, one of the main components of VR quality is interaction (Heim, 2000). This is especially evident when VR systems aim to deliver presence (Hudson et al., 2019) or to act as a digital twin of a real scenario.

Within interaction, explicit hand interaction has gained popularity in recent VR systems, as it aims to provide a near-realistic representation of tasks we may complete in a natural environment (e.g., picking up, moving or manipulating objects). However, owing to the dexterity of the hand and the ease with which humans acquire and manipulate objects, replicating hand interaction is a complex process, and hand-held VR controllers therefore remain the standard interaction method for VR. Yet controllers have been shown to be limited in providing natural interactions, with users often reporting that controller-based interactions are not intuitive and require a longer learning curve, which can in turn affect wider knowledge transfer. Consequently, while hand interactions have been widely explored within the VR community, initially through wearables such as gloves and more recently via predefined gestures, priority is often given to optimal recognition rather than naturalness. This means that the interactions can often be arbitrary and not intuitive enough (Piumsomboon et al., 2013).

Hand-based grasping has been explored in recent years as a technical and computational challenge, and current VR devices (e.g., Meta Quest 3) now support off-the-shelf hand and finger tracking for interaction. However, with current hand-based grasping approaches, users are often trained to use particular grasps, commonly designed as a direct interpretation of the body of knowledge from real object grasping. While this approach is valid for specific scenarios, it makes the considerable assumption that intuitive and natural grasping in VR will be the same as real grasping. As a result, differences in the VR environment and objects, notably shape, texture and haptics, are often overlooked (Islam & Lim, 2022), and grasping interaction decisions are made based on visual perception assumed from real grasping only.

Recent work in VR, notably by Blaga et al. (2021a, 2021b), has illustrated key differences between virtual and real object grasping when compared with the work of Feix et al. (2014a), with shape being the primary factor influencing users' grasping choices in VR. This calls into question the viability of using real grasping taxonomies directly within VR, and makes it pertinent to explore VR grasping taxonomies fully.

In this work we focus on exploring virtual object properties, such as shape, size and dimensions, building on the work of Blaga et al. (2021a, 2021b) to develop the first complete VR grasping taxonomy that considers all grasps from the real object grasping literature. We draw findings from an in-depth analysis of this new taxonomy and of prior VR grasping results (Blaga et al., 2021a, 2021c) to determine the most common VR grasps for providing usable VR grasping interaction. Results from this work can inform the design of virtual grasping approaches, intuitive VR environments and usable interactive virtual objects. Additionally, we provide an overview of key user behaviours, limitations and problems when grasping in VR, providing a taxonomy that can be applied to natural and intuitive interactions in VR and that could also contribute to current research trends aiming to move the Metaverse from science fiction to an upcoming reality (Wang et al., 2023).

The article is structured as follows. Section 2 presents the literature on grasping studies in real and virtual environments, alongside the use of elicitation methods for developing real grasping taxonomies. Sections 3 through 6 present the methodology of the work, detailing the experimental framework, the user testing, the grasp categorisation and the metrics employed. Sections 7 and 8 present the hypotheses and data analysis, with Section 9 detailing the results. Finally, Section 10 presents a discussion of the main taxonomy alongside the most common VR grasps, synthesising insights from this work and from prior published work.

2. Related works

To inform the development of a VR taxonomy, this section presents an overview of grasping work in real and virtual environments, covering grasping theories, existing taxonomies and the methods that can be employed.

2.1. Grasping real objects

Grasping is the primary and most frequent physical interaction technique people perform in everyday life (Holz et al., 2008) and is formally defined as every static hand posture with which an object can be held securely with one hand (Feix et al., 2009). Grasping real objects is a complex and demanding task (Supuk et al., 2011), which has resulted in significant research focused on studying and characterising aspects of human hand usage when interacting with objects (Redmond et al., 2010), especially in areas such as anthropology (Monaco et al., 2014), hand surgery (Sollerman & Ejeskär, 1995), hand rehabilitation (Lukos et al., 2013) and robotics (Bullock et al., 2013; Cutkosky, 1989; Feix et al., 2014b, 2009).

The numerous skeletal and muscular degrees of freedom of the hand provide humans with a dexterity that has not been achieved by any other species on earth (Nowak, 2009). However, the movement and function of the hand are not only a product of its internal degrees of freedom, but also of the movement of the body and limbs, and of contact with the environment (Feix et al., 2016). The many movements the hand can perform can be divided into two main groups: prehensile movements, in which an object is seized and held partly or wholly within the compass of the hand; and non-prehensile movements, in which no grasping or seizing is involved but objects can be manipulated by pushing or lifting motions of the hand as a whole or of the digits individually (Napier, 1956).

Given the complexity and physiology of the human hand, research has focused on observing and classifying grasping movements (Cutkosky, 1989) with the aim of supporting a greater understanding of human grasping capabilities (MacKenzie & Iberall, 1994). To achieve this, several analytical grasp quality measures describing a successful grasp have been defined, which support analysis of grasp accuracy and therefore underpin grasping taxonomies. M. Cutkosky and Kao (1989) proposed compliance as an important measure for describing a grasp, focusing on the effective compliance (inverse of stiffness) of the grasped object with respect to the hand. Mason and Salisbury (1985) proposed connectivity, which refers to the number of independent parameters needed to completely specify the position and orientation of the object with respect to the palm. Complementary to this, Ohwovoriole and Roth (1981) defined force closure as another metric for measuring grasp performance, which considers the ability of the object to move without slipping when the finger joints are locked. From this, the idea of assessing grasp form closure emerged, with the work of Mason and Salisbury (1985) assessing the ability of the grasp to hold an object when external forces are applied from any direction. Kerr and Roth (1986) complemented this work by analysing grasp isotropy, which measures whether the grasp configuration allows the finger joints to accurately apply forces and movements to the object. For example, if one of the fingers is nearly in a singular configuration, it will be impossible to accurately control force and motion in a particular direction.
Other measures have been proposed to assess grasp quality, notably manipulability and resistance to slipping in the work of Cutkosky and Wright (1986) and Kerr and Roth (1986). However, the metrics that encompass the main grasp measures ensuring a successful grasp are grasp stability (Pollard & Lozano-Perez, 1990) and dexterity (MacKenzie & Iberall, 1994). Stability refers to the ability of the grasp to return to its initial configuration after being disturbed by an external force (MacKenzie & Iberall, 1994; Cutkosky, 1989), while dexterity refers to how accurately the fingers can impart larger motions or forces, together with sensitivity, i.e., how accurately the fingers can sense small changes in force and position (MacKenzie & Iberall, 1994).

While some of these metrics could be applicable to VR systems, many consumer VR devices still cannot accurately replicate object force, connectivity (Mason & Salisbury, 1985), isotropy (Kerr & Roth, 1986) and stability (Pollard & Lozano-Perez, 1990) in user grasping, leading to differences in experience between real and virtual object grasping (Blaga et al., 2021b).

2.2. Grasping virtual objects

Limited work has explored the link between real and virtual grasping, and therefore the connection between real grasping theories and user responses in VR. Initial work, notably Wan et al. (2004), sought to develop grasp poses for virtual cubes, cylinders and spheres based on assumptions drawn from the real grasping literature, without evaluating the differences or acknowledging the grasp quality measures. Similar approaches were applied by Jacobs and Froehlich (2011) and Valentini (2018), who developed grasping systems that applied learning from real environments but again did not account for potential differences in VR systems. As an alternative, intuitive virtual grasping should be explored directly in VR to understand user behaviour when grasping virtual objects and which parameters influence their approach, allowing improvement of current systems that aim to provide intuitive virtual grasping interaction.

Following this approach, seminal work has explored virtual object grasping using elicitation-based methodologies, presenting a pathway towards potential VR grasping taxonomies, most notably the work of Al-Kalbani et al. (2016a, 2016b, 2017, 2019). More recently, Blaga et al. (2021a) furthered this approach and highlighted key differences in grasping patterns via initial grasping taxonomies. Compared with the real grasping work of Feix et al. (2014a, 2014b), the work of Blaga et al. (2021b) highlights key differences between real and virtual grasping when considering interactive tasks (Blaga et al., 2021a), object thermal properties (Blaga et al., 2020) and object structural properties (Blaga et al., 2021c). Structural properties were found to be the most influential factor in the differences between real and virtual grasping, highlighting that more work on object properties is required to determine the nuanced differences between real and virtual object grasping.

2.3. Elicitation studies in AR/VR

In real object grasping, the physical object and our prior experience both act as referents that elicit a structured grasping response, allowing us to interact naturally. Within Human-Computer Interaction (HCI), interaction elicitation is a technique that emerged from the field of participatory design (Morris et al., 2014) and aims to give users a voice in system design without needing to speak the language of professional technology design (Simonsen & Robertson, 2013). Elicitation studies are a formal methodology in which the experimenter provides a referent (e.g., a task or object) and asks participants to perform the reaction or interaction that would produce that effect (Villarreal-Narvaez et al., 2020). Seminal work by Wobbrock et al. (2005, 2009) developed the end-user elicitation study method, aiming to make interactive systems more guessable; they elicited gestures from non-technical users and synthesised the results into a user-defined taxonomy of surface gestures.

Elicitation studies have since been widely used to develop user-defined taxonomies, with most research on freehand input justifying its chosen interaction paradigms through elicitation studies (Piumsomboon et al., 2013). Grasping elicitation for virtual objects has also been conducted by Yan et al. (2018) and synthesised into a gesture-based taxonomy, illustrating the portability of elicitation to studies outside classical interfaces.

In the context of real grasping studies, elicitation can be seen as analogous to, or a substitute for, the real-world observation experiments performed for the real grasping taxonomies of Feix et al. (2014a, 2014b). These observations present users with real objects and tasks (referents) and ask them to grasp and interact; the observed grasps are then categorised, classified and structured into taxonomies. Blaga et al. (2021a, 2021b) further deployed this approach in the VR domain by immersing users in a VR environment where they were asked to initiate grasps for a variety of referent virtual objects (Blaga et al., 2021b) or tasks (Blaga et al., 2021a), which were then classified and structured accordingly.

2.4. Taxonomies

Taxonomies are defined as the “science of classification” (Bowman & Hodges, 1999) and have been widely used to classify grasping patterns, providing a deep understanding of the way humans grasp real objects. Results from taxonomies have made important contributions in many domains, including anthropology, medical literature, rehabilitation, psychology and robotic arm design (Feix et al., 2016). Taxonomies have also been widely used in interactive computing systems where, given the growing number of emerging technologies, they help to reason about, compare, elicit and create appropriate systems in domains such as gesture-based systems (Scoditti et al., 2011), User Interfaces (UI) (Seneler et al., 2008), voice commands (Pérez-Quiñones et al., 2003), data visualisation (Kleinman et al., 2021), VR (Muhanna, 2015) and AR (Hertel et al., 2021).

While the complexity and variety of uses of the human hand make the categorisation and classification of grasps a challenging task, researchers have attempted to simplify this process by identifying grouping mechanisms based on independent parameters that might influence the grasping approach. Many classical taxonomies have been proposed, notably by MacKenzie and Iberall (1994), Keller and Zahm (1947), Lyons (1985) and Schlesinger (1919), each structuring grasps into specific functional categories based on hand and finger pose.

These approaches laid the foundations of grasping taxonomies, which have since been developed with the goal of understanding the types of grasps humans commonly use in everyday tasks. However, because classifying and categorising hand function is challenging (Feix et al., 2016), there has been a lack of consensus on the terminology for the range of grasp types that humans commonly use. To allow a better understanding of the human hand and to create a framework for investigating human hand use across tasks and objects, Feix et al. (2009) emphasised the need for a common terminology of grasp types. This common terminology supports insightful recommendations for system design and could offer VR a framework for improving the value and usefulness of immersive systems (Blaga et al., 2021a). As detailed in , prior work has led to the development of real object grasping taxonomies, and initial work has sought to apply this knowledge to VR. This work builds on this body of literature by presenting the first complete taxonomy for VR grasping, which applies the methodologies of both real and virtual object grasping studies and synthesises the most common grasps for VR.

Table 1. A summary of key literature leading towards the contribution proposed by this article, showing current knowledge in real (blue), virtual (green) and combined real and virtual (yellow) object grasping taxonomies.

3. Methodology

To define the VR grasping taxonomy and build on prior published work in real (Feix et al., 2014a, 2014b) and virtual (Blaga et al., 2020, 2021a, 2021b) object grasping, as detailed in , a user elicitation study (N = 50) was conducted following a Wizard of Oz methodology with 16 virtual objects from the “Yale-Carnegie Mellon University-Berkeley Object and Model Set” (Calli et al., 2015). Objects were chosen following the methodology detailed by Blaga et al. (2021a) and categorised using the approach of Zingg (1935), following the protocols of the real grasping research of Feix et al. (2014b), as described in detail in Section 3.2. All elements of this study underwent a formal university ethics review and were granted full approval.

3.1. Virtual objects

Previous user elicitation studies have used pictorial (Wittorf & Jakobsen, 2016; Wobbrock et al., 2009) or animated (Piumsomboon et al., 2013) referents, encouraging participants to develop their own set of gestures by showing the effects these will have on the system. This study aims to explore object shape, which Blaga et al. (2021a) showed to be a primary influence on grasping choice. Therefore, the referents used in these experiments were 3D virtual representations of real objects, as shown in and , which have also been used in previous VR grasping research (Blaga et al., 2021a). These were the 16 objects selected from the Yale-Carnegie Mellon University-Berkeley Object and Model Set (Calli et al., 2015) following the methodology detailed by Blaga et al. (2021a).

Figure 1. Virtual objects used within this study are 3D virtual representations of real objects used in prior VR grasping research.

Table 2. Virtual objects used in this experiment, categorised into Zingg’s shape categories (Zingg, 1935): Equant, Prolate, Oblate and Bladed, based on their dimensions (A, B, C).

3.2. Virtual object categorisation

Categorisations applied to physical objects have largely followed Zingg’s (1935) methodology, which categorises objects based on their shape and the three dimensions that indicate the volume of their geometric bodies. Zingg (1935) defined A as the longest dimension of an object, C as the shortest and B as the remaining (intermediate) dimension. He defined a constant R to describe the relationship between dimensions and categorise the object, determining that the ratio at which two axes are typically regarded as different is R = 3/2 (Zingg, 1935). Based on these parameters, four shape categories were defined as part of Zingg’s categorisation framework: Equant, Prolate, Oblate and Bladed. Each category is defined by two mathematical expressions (see ).
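Because the two expressions per category are not reproduced in this section, the following Python sketch shows one way to apply Zingg's scheme, assuming the standard ratio tests of A/B and B/C against R = 3/2 (equivalently B/A and C/B against 2/3). The function names, the treatment of ratios exactly equal to R and the example dimensions are illustrative assumptions, not the article's implementation or object data.

```python
from typing import Tuple

# Zingg's threshold: two axes are regarded as different when the ratio of
# the longer to the shorter is at least R = 3/2.
R = 3 / 2


def sort_dimensions(d1: float, d2: float, d3: float) -> Tuple[float, float, float]:
    """Return (A, B, C): the longest, intermediate and shortest dimension."""
    a, b, c = sorted((d1, d2, d3), reverse=True)
    return a, b, c


def zingg_category(d1: float, d2: float, d3: float) -> str:
    """Classify an object into one of Zingg's four shape categories.

    Ratios exactly equal to R are treated as 'different' (an assumption).
    """
    a, b, c = sort_dimensions(d1, d2, d3)
    if c <= 0:
        raise ValueError("all dimensions must be positive")
    a_differs_from_b = a / b >= R  # long axis clearly longer than B
    b_differs_from_c = b / c >= R  # short axis clearly shorter than B
    if not a_differs_from_b and not b_differs_from_c:
        return "Equant"   # all three axes similar (cube or sphere-like)
    if a_differs_from_b and not b_differs_from_c:
        return "Prolate"  # one long axis, two similar short ones (rod-like)
    if not a_differs_from_b and b_differs_from_c:
        return "Oblate"   # two similar long axes, one short (disc-like)
    return "Bladed"       # all three axes differ


# Hypothetical dimensions in mm, passed in any order.
print(zingg_category(200, 60, 60))  # Prolate
```

Sorting inside the function means dimensions can be supplied in any order, so A, B and C always follow Zingg's definition of longest, intermediate and shortest.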

3.3. Task

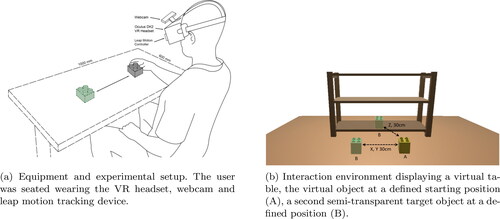

The tasks selected for this study were a set of translate tasks, which are the most common interaction tasks in virtual environments (Chen et al., 2018; Hartney et al., 2019). The tasks were defined along the three axes of the Cartesian coordinate system (X, Y, Z), in both positive and negative directions. Participants were asked to move each randomised virtual object to a target position ±30 cm away along each axis, as shown in . This aligns with previous translate tasks presented in VR (Blaga et al., 2020, 2021a).
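The six translate targets and the resulting object-by-task trial set can be sketched as follows. The start position, the millimetre units and task labels such as "+X" are hypothetical choices for illustration; only the ±30 cm offset, the three axes and the 16 objects × 6 tasks design come from the text.

```python
from itertools import product
from typing import Dict, Iterable, List, Sequence, Tuple

OFFSET_MM = 300.0  # ±30 cm translation per task
AXES = ("X", "Y", "Z")
TASKS = [sign + axis for axis in AXES for sign in ("+", "-")]  # +X, -X, +Y, ...


def target_positions(start: Sequence[float],
                     offset: float = OFFSET_MM) -> Dict[str, Tuple[float, ...]]:
    """Target position for each of the six translate tasks from a start point."""
    targets = {}
    for task in TASKS:
        sign = 1.0 if task[0] == "+" else -1.0
        axis_index = AXES.index(task[1])
        target = list(start)
        target[axis_index] += sign * offset
        targets[task] = tuple(target)
    return targets


def trial_list(object_ids: Iterable[int]) -> List[Tuple[int, str]]:
    """Every (object, task) pair: 16 objects x 6 tasks = 96 trials."""
    return list(product(object_ids, TASKS))


start = (0.0, 800.0, 400.0)  # hypothetical start position on the table, in mm
print(target_positions(start)["+Y"])  # (0.0, 1100.0, 400.0)
print(len(trial_list(range(16))))     # 96
```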

Figure 2. Experiment setup with 2a showcasing the equipment and experimental setup and 2b depicting the interaction environment in VR.

3.4. Apparatus

To support comparison of this work with prior published work, we directly implemented a comparable experimental framework from the work of Blaga et al. (2021a), covering hardware, software and environment conditions. An Oculus DK2 VR headset, a Leap Motion controller and a Logitech Pro 1080p HD webcam were therefore configured as represented in . The webcam was used to capture participants’ hands at all times during the experiment to support grasp categorisation. The VR environment was developed using the Unity game engine, the Oculus Integration Package, the Leap Motion 4.0 SDK and Autodesk Maya.

3.5. Environment

The user experiment was conducted in a controlled laboratory environment. The test room was lit by a 2700 K (warm white) fluorescent tube with no external light source. The virtual environment was composed of a virtual table and a virtual shelf (see ). For each task, a virtual object appeared on the virtual table in a randomised order, together with a marker for the target position, following the environment methodology described in Blaga et al. (2021a). The shelf was used to create a realistic context for the translate tasks.

3.6. Participants

A total of 50 right-handed participants (23 females, 27 males) from a population of university students and staff volunteered to take part in this study. Participants ranged in age from 19 to 65 (M = 29.4, SD = 12.45). All participants performed the 6 translate tasks (one per axis direction) with each of the 16 virtual objects. In alignment with prior work, only right-handed participants were recruited, to ensure that all results are comparable with those of Blaga et al. (2021a) and Feix et al. (2014b). Participants completed a standardised consent form and were not compensated. The visual acuity of participants was measured using a Snellen chart (Hetherington, 1954), and each participant was also required to pass an Ishihara test (Pickford, 1944) to check for colour blindness. No participants were excluded from this study: none had colour blindness or an uncorrected visual acuity below 0.80 (where 20/20 is 1.0).

3.7. Grasp data

Feix et al. (2014a, 2014b) used a camera attached to users’ heads to record the hands during grasping interactions with real objects, capturing hand posture and object contact area information. To follow this methodology and support comparison, we captured a virtual view of the grasp using a camera in the virtual environment, and the real hand using the head-mounted webcam. We also collected the centre positions (X, Y, Z) of the palm and of the individual fingers, in millimetres, from the 19 points provided by the hand tracking device.

3.8. Grasp metrics

Using the grasp data from Section 3.7, grasps are classified by category, type and grasped object dimension using the real and virtual camera views. Additionally, following the methodology of Al-Kalbani et al. (2016a), Grasp Aperture (GAp) is calculated from the X, Y, Z hand and finger positions.

GAp is defined in Equation (1) as the distance between the thumb tip and the index fingertip in the X, Y and Z axes, with Px, Py and Pz being the co-ordinates of the index fingertip, and Bx, By and Bz being the co-ordinates of the thumb tip:

$$\mathrm{GAp} = \sqrt{(P_x - B_x)^2 + (P_y - B_y)^2 + (P_z - B_z)^2} \tag{1}$$
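GAp can be computed directly from the tracked fingertip positions. The sketch below assumes GAp is the Euclidean distance between the two tips, with positions given as (x, y, z) tuples in millimetres; the function name and sample values are hypothetical.

```python
import math
from typing import Sequence


def grasp_aperture(index_tip: Sequence[float], thumb_tip: Sequence[float]) -> float:
    """GAp: Euclidean distance between index fingertip P and thumb tip B (mm)."""
    px, py, pz = index_tip
    bx, by, bz = thumb_tip
    return math.sqrt((px - bx) ** 2 + (py - by) ** 2 + (pz - bz) ** 2)


# Hypothetical fingertip positions in millimetres (not study data).
print(grasp_aperture((30.0, 40.0, 0.0), (0.0, 0.0, 0.0)))  # 50.0
```

On Python 3.8 and later, `math.dist(index_tip, thumb_tip)` returns the same value.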

3.9. Labelling

Grasp categorisation within this work was conducted using the labels and method of Blaga et al. (2021a), following the process applied in Feix et al. (2014a, 2014b).

3.9.1. Grasp labels

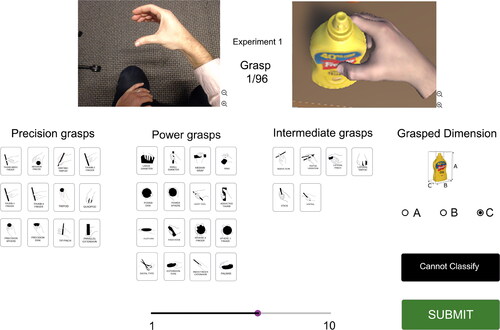

Following the work of Feix et al. (2009) and the methodology described in Blaga et al. (2021a), this study uses the Human GRASP Taxonomy terminology. The taxonomy is the most complete taxonomy of grasp types to date and presents three main grasp categories (Power, Intermediate and Precision). For ease of reporting, each grasp type was assigned a grasp code (e.g., [P1]), as shown in .

3.9.2. Process

Two academic raters experienced in the grasping literature were trained to annotate the full set of collected grasps with grasp types and categories, using the common terminology of the Human GRASP Taxonomy (Feix et al., 2009). A custom-made labelling application displayed the virtual camera and real camera views of a grasp instance side by side (see ). Raters selected a grasp type from the Human GRASP Taxonomy, organised into the corresponding grasp classes (Power, Precision and Intermediate). They also recorded the grasped object dimension alongside a confidence level on a slider from 1 to 10, where 5 represents 50% confidence in their choice for a specific grasp instance. The application also provides a “Cannot Classify” button, used when the virtual and real views do not give a clear overview of the grasp performed (e.g., due to occlusion).

Figure 3. An example of the custom-designed labelling application. Real (left) and virtual (right) views are presented to raters to support them in selecting one of the potential grasps from the three categories: Precision, Power and Intermediate. The grasped object dimension (A, B, C) can also be recorded alongside the rater’s confidence on a scale of 1 to 10, where 5 indicates a 50% level of confidence in the grasp choice.

Figure 4. Grasp categories: (a) Power grasps, (b) Intermediate grasps and (c) Precision grasps.

Following the current literature on grasp labelling, the methodology of Feix et al. (2014a, 2014b) was used for cleaning and processing the grasp data. Therefore, the following considerations were applied:

If the virtual and real views of a grasp were labelled differently, the grasp was excluded from the data set.

If the confidence level was below five for at least one of the raters, that grasp instance was removed from the data set. Five was selected as it represents an equal chance (50%) that the grasp could be classified as another grasp within the set.

If the grasp was labelled as “Cannot Classify” by at least one of the raters, the grasp was excluded from the data set.

This resulted in 83 grasps being removed from the study.
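The three exclusion criteria can be expressed as a single filter over rater annotations. This sketch assumes a hypothetical record in which each rater gives a label for the real view, a label for the virtual view, a 1 to 10 confidence value and a "Cannot Classify" flag; the article's actual data format is not described here, and the grasp codes in the example are illustrative only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Rating:
    """One rater's annotation of one grasp instance (hypothetical schema)."""

    real_view_label: Optional[str]     # grasp code chosen from the real view
    virtual_view_label: Optional[str]  # grasp code chosen from the virtual view
    confidence: int                    # slider value from 1 to 10
    cannot_classify: bool = False      # "Cannot Classify" button pressed


MIN_CONFIDENCE = 5  # 5 on the slider corresponds to 50% confidence


def keep_grasp(ratings: List[Rating]) -> bool:
    """Return True if a grasp instance passes all three exclusion criteria."""
    for rating in ratings:
        if rating.cannot_classify:
            return False  # labelled "Cannot Classify" by at least one rater
        if rating.confidence < MIN_CONFIDENCE:
            return False  # confidence below five for at least one rater
        if rating.real_view_label != rating.virtual_view_label:
            return False  # real and virtual views labelled differently
    return True


# Hypothetical instances, each rated by two raters.
grasps = {
    "g1": [Rating("P1", "P1", 8), Rating("P1", "P1", 6)],
    "g2": [Rating("P1", "P1", 9), Rating("P1", "P1", 4)],
    "g3": [Rating("P1", "P2", 9), Rating("P1", "P1", 9)],
}
kept = [gid for gid, ratings in grasps.items() if keep_grasp(ratings)]
print(kept)  # ['g1']
```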

3.10. Protocol

3.10.1. Pre-test

Prior to the study, participants were given a written informed consent form describing the test protocol and the main aim of the study. Participants also completed a pre-test questionnaire about their background level of experience with VR.

3.10.2. Training

Participants underwent initial hand interaction and task training to familiarise themselves with the VR environment and hand interaction space. The training task was a representative translate task on a basic cuboid object. Once training was complete, participants were presented with the main experimental task.

3.10.3. Test

Participants were seated during the experiment and each completed 96 grasps (16 objects × 6 tasks), giving a total of 4800 grasps recorded during the study (96 grasps × 50 participants). Objects and tasks were presented in a randomised Latin square order across the conditions. A Wizard of Oz (WoZ) protocol was employed, in which participants were instructed to grasp the virtual objects in the way they felt was most intuitive. Once participants were happy with their grasp, they notified the test coordinator (the wizard), who then managed the translation interaction. Employing a WoZ methodology in this context mirrors the protocol employed by Blaga et al. (2021a, 2021b) and allows users to choose the grasps they feel are most suitable for each object without the constraints of a system-defined grasping interaction trigger.
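The article does not specify how its Latin square was constructed. One common option, shown here as an assumption, is a Williams-style balanced Latin square, in which each condition appears once per row and column and, for an even number of conditions, immediately follows every other condition exactly once. Mapping rows to participants (row p mod 16 for participant p) is likewise a hypothetical choice.

```python
from typing import List


def balanced_latin_square(n: int) -> List[List[int]]:
    """Williams-style balanced Latin square for an even number n of conditions.

    Row i is one presentation order of conditions 0..n-1. Each condition
    appears once in every row and column, and each ordered pair of
    conditions occurs as neighbours exactly once across the square.
    """
    if n < 2 or n % 2:
        raise ValueError("this construction assumes an even n >= 2")
    # First row: 0, 1, n-1, 2, n-2, 3, ...
    first = [0]
    for j in range(1, n):
        first.append((j + 1) // 2 if j % 2 else n - j // 2)
    # Each later row shifts every condition index by one (mod n).
    return [[(c + i) % n for c in first] for i in range(n)]


square = balanced_latin_square(16)  # e.g. one ordering of the 16 objects per row
# Hypothetical assignment: participant p uses row p mod 16 (50 participants).
orders = [square[p % 16] for p in range(50)]
print(square[0][:6])  # [0, 1, 15, 2, 14, 3]
```

For an odd number of conditions this construction is not balanced on its own; the usual remedy is to add the mirror image of each row, doubling the square.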

3.10.4. Post-test

After all tasks were completed, participants were asked to complete a post-test questionnaire comprising the NASA-TLX questionnaire (Hart & Staveland, 1988) and the Motion Sickness Assessment Questionnaire (MSAQ) (Gianaros et al., 2001).

3.11. Metrics

3.11.1. Questionnaire

Previous literature on VR grasping (Blaga et al., 2021b) showed that users take more time to grasp virtual objects than real objects, which might have been due to differences in cognitive load or to motion sickness. To ensure that there are no undue biases in the methodology and that the experimental system does not introduce excessive physiological or cognitive effects on the user, NASA-TLX (Hart & Staveland, 1988) was used to evaluate perceived user workload during the task. Additionally, the Motion Sickness Assessment Questionnaire (MSAQ) was used to assess motion sickness (Gianaros et al., 2001) on a scale from 0 to 100.
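The section does not state which scoring variants were used. As an illustration only, the sketch below computes the unweighted (Raw TLX) NASA-TLX score as the mean of six 0 to 100 subscale ratings, and an MSAQ overall score as the percentage of the maximum points, assuming 16 items rated from 1 to 9 as described by Gianaros et al. (2001). The subscale keys are hypothetical shorthand.

```python
from typing import Mapping, Sequence

# Hypothetical shorthand keys for the six NASA-TLX subscales.
TLX_SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Unweighted (Raw TLX) workload: mean of the six 0-100 subscale ratings."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise KeyError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in TLX_SUBSCALES) / len(TLX_SUBSCALES)


def msaq_overall(item_scores: Sequence[int]) -> float:
    """MSAQ overall score: total points as a percentage of the maximum (16 x 9)."""
    if len(item_scores) != 16 or not all(1 <= s <= 9 for s in item_scores):
        raise ValueError("expected 16 item scores in the range 1-9")
    return sum(item_scores) / (16 * 9) * 100


print(raw_tlx({"mental": 60, "physical": 20, "temporal": 40,
               "performance": 30, "effort": 50, "frustration": 10}))  # 35.0
print(msaq_overall([9] * 16))  # 100.0
```

Under these assumptions the lowest attainable MSAQ score is 16/144 × 100 ≈ 11.1.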

3.11.2. Grasp size

As defined in Section 3.8, GAp, the hand opening between the thumb tip and the index fingertip, is used to observe how users estimate the size of a virtual object.

3.11.3. Grasp labels

Grasp Labels refers to the labels assigned to grasp instances (grasp category and grasp type) during the labelling process. This was conducted following the methodology proposed by Feix et al. (Citation2014a, Citation2014b) and the protocol described in section 3.9.2, which strictly followed the considerations outlined in Blaga et al. (Citation2021a).

3.11.4. User grasp choice agreement

User grasp choice agreement is analysed for each object to understand if there is a link between object shape and grasp variability. The grasp agreement score was defined as the agreement among the grasp types proposed by participants per object, following the definition of Wobbrock et al. (Citation2005) and was computed using the equation:

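$$A = \frac{1}{|R|} \sum_{r \in R} \sum_{P_i \subseteq P_r} \left( \frac{|P_i|}{|P_r|} \right)^{2} \tag{2}$$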

where R is the set of all referents available for each object or task (segmented by object category) and r is a referent in R; P_r is the set of grasp proposals for referent r; and P_i is a subset of identical grasp labels within P_r, as in Wobbrock et al. (Citation2005) and Wobbrock et al. (Citation2009).
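The agreement score can be computed directly from the grasp labels proposed for each referent. The sketch below follows the Wobbrock et al. (2005) formulation described above: for each referent it sums the squared proportions of groups of identical labels, then averages over referents. The function names and example labels are illustrative only.

```python
from __future__ import annotations

from collections import Counter


def referent_agreement(proposals: list[str]) -> float:
    """Sum over groups of identical labels P_i of (|P_i| / |P_r|)^2."""
    n = len(proposals)
    return sum((count / n) ** 2 for count in Counter(proposals).values())


def overall_agreement(proposals_by_referent: dict[str, list[str]]) -> float:
    """Average the per-referent agreement over all referents in R."""
    scores = [referent_agreement(p) for p in proposals_by_referent.values()]
    return sum(scores) / len(scores)


# Illustrative labels only: two referents with four proposals each.
example = {
    "object_a": ["P1", "P1", "P1", "P1"],   # full agreement -> 1.0
    "object_b": ["P1", "P1", "P3", "PC10"],  # 0.5^2 + 0.25^2 + 0.25^2 = 0.375
}
print(overall_agreement(example))  # 0.6875
```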

3.12. Hypotheses

Prior work suggests there is a link between object characteristics and grasping patterns, notably Blaga et al. (Citation2021a). Therefore, the following hypothesis is proposed:

H1: Virtual object shape has an effect on grasping patterns in VR.

Furthermore, to understand whether grasping patterns change for different translate tasks, and building on the work of Blaga et al. (Citation2021a), the following additional hypothesis is proposed:

H2: Translate tasks do not have an effect on grasping patterns in VR.

3.13. Data analysis

The Shapiro-Wilk (Shapiro & Wilk, Citation1965) normality test found the data not to be normally distributed. As the GAp data were not normally distributed, statistical significance between the four dependent groups (Equant, Prolate, Oblate and Bladed), where the variable of interest is continuous (GAp in mm), was tested using the non-parametric Friedman test (Friedman, Citation1940) with an alpha of 5%. For testing statistical significance between grasp patterns for object categories, where the dependent variable (Grasp Category) is nominal categorical (Power, Intermediate and Precision), contingency tables were created and analysed using a Chi-Squared Test of Independence at a 95% confidence level; a p-value below 0.05 therefore indicates statistical significance. Cramér's V was computed as the effect size (recommended for 3 × 4 contingency tables) after verifying the assumption that the variables are categorical. Effect sizes were interpreted following existing guidelines based on degrees of freedom.
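For illustration, the Friedman statistic can be computed by ranking the four category values within each block (e.g. each participant) and comparing rank sums across categories. The sketch below assumes one value per participant per category (for instance a per-participant mean GAp; how the data were aggregated is an assumption), uses average ranks for ties without applying the tie correction, and obtains the p-value from the closed-form chi-squared survival function for 3 degrees of freedom, which applies only to k = 4 groups. The function names are hypothetical.

```python
from __future__ import annotations

import math


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the first in the run.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1  # 1-based mean rank of the run
        for pos in range(start, end + 1):
            ranks[order[pos]] = tied_rank
        start = end + 1
    return ranks


def friedman_statistic(blocks: list[list[float]]) -> float:
    """Friedman chi-squared statistic (no tie correction).

    blocks: one row per block (e.g. participant), one value per condition.
    """
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        if len(row) != k:
            raise ValueError("every block needs one value per condition")
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
    return 12 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)


def chi2_sf_df3(x: float) -> float:
    """P(X > x) for a chi-squared variable with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


# Toy data: 3 blocks x 4 categories, identical ordering in every block.
toy = [[1.0, 2.0, 3.0, 4.0]] * 3
q = friedman_statistic(toy)
print(q, round(chi2_sf_df3(q), 4))  # 9.0 0.0293
```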

4. Results

4.1. NASA-TLX and MSAQ

No participant reported motion sickness before, during or after the experiment. The mean MSAQ score was 24.69 (SD = 14.98) out of a maximum of 100. The mean score for gastrointestinal items was 21.88 (SD = 18.82), for central items 28.13 (SD = 20.43), for peripheral items 22.07 (SD = 15.97) and for sopite-related items 25.16 (SD = 17.55). These scores indicate a negligible effect on motion sickness.

The mean NASA-TLX score was 28.89 (SD = 16.78), which based on existing guidelines is considered a medium workload. These results show that the tasks did not pose an undue challenge for participants and that they did not feel overloaded.

4.2. Grasp Aperture: GAp

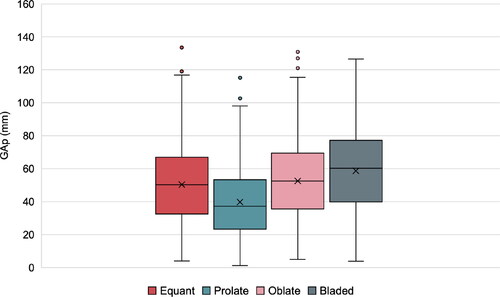

Figure 5 shows an overview of GAp for each object category presented in this experiment (Equant, Prolate, Oblate and Bladed). Differences in GAp between categories can be observed: Equant objects were grasped with a mean GAp of 50.29 mm (SD = 23.66), Prolate objects with a mean GAp of 39.73 mm (SD = 20.55), Oblate objects with a mean GAp of 52.52 mm (SD = 24.09) and Bladed objects with a mean GAp of 58.65 mm (SD = 25.35). However, a non-parametric Friedman test showed that these differences in GAp between object categories were not significant (χ²(3, N = 4717) = 146.21, p = 1.726).

Figure 5. GAp in mm for virtual objects categorised using the Zingg (Citation1935) method. X marks on boxplots indicate the mean GAp across all participants for Equant, Prolate, Oblate and Bladed. Whiskers represent the highest and lowest values within 1.5 times the interquartile range. Outliers are shown as coloured circles.
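The whisker rule in the caption can be sketched as follows: values more than 1.5 times the interquartile range beyond the quartiles are treated as outliers, and each whisker ends at the most extreme remaining value. Quartile conventions differ between plotting tools; this sketch assumes linear interpolation (`statistics.quantiles` with `method="inclusive"`), which may not match the software used to draw the figures, and `tukey_whiskers` is a hypothetical name.

```python
from __future__ import annotations

from statistics import quantiles


def tukey_whiskers(values: list[float]) -> tuple[float, float, list[float]]:
    """Return (lower whisker, upper whisker, outliers) using the 1.5 x IQR rule."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low_fence <= v <= high_fence]
    outliers = sorted(v for v in values if v < low_fence or v > high_fence)
    # Whiskers stop at the most extreme observations inside the fences.
    return min(inside), max(inside), outliers


print(tukey_whiskers([1, 2, 3, 4, 100]))  # (1, 4, [100])
```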

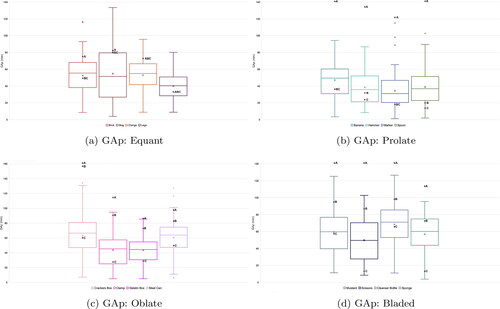

Figure 6 shows GAp for individual objects within the same category, with points A, B and C plotted to represent object size on each dimension in mm (see the object dimensions table for A, B, C values): Figure 6a shows GAp for objects in the Equant category, 6b for the Prolate category, 6c for the Oblate category and 6d for the Bladed category. Although results show no statistically significant difference in GAp between object categories, Figure 6 illustrates that users changed their GAp based on the individual object presented, indicating a potential relationship to the object dimensions (A, B, C).

Figure 6. GAp (mm) for objects within (a) Equant, (b) Prolate, (c) Oblate and (d) Bladed categories. X marks indicate the mean GAp across all participants. Whiskers represent the highest and lowest values within 1.5 times the interquartile range. Outliers are shown as circles. A, B and C represent the virtual object dimensions (see the object dimensions table), plotted as a reference for how GAp relates to individual object sizes.

4.3. Grasp labels

A total of 4800 grasps were recorded during the experiments (50 participants × 16 objects × 6 tasks) and labelled following the methodology presented in section 3.9.2. Of these 4800 grasps, 42 were removed because at least one of the raters rated them as “Cannot Classify”, and 41 were removed due to disagreement between the virtual and real views caused by sensor errors. The remaining 4717 grasps (1159 for Equant objects, 1183 for Prolate objects, 1187 for Oblate objects and 1188 for Bladed objects) were analysed further to develop the first VR grasping taxonomy.

Cohen’s Kappa was used to measure inter-rater reliability for labelling the grasps. Raters agreed in 86% of instances (Cohen’s Kappa = 0.4), which based on existing guidelines represents moderate agreement; this level is often achieved when subjectivity is involved in the process (Sun, Citation2011) and is a common agreement score for classification tasks (Feix et al., Citation2014b).
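Cohen's Kappa corrects raw percentage agreement for the agreement expected by chance given each rater's label frequencies, which is why it is lower than the 86% raw agreement. A minimal sketch of the two-rater computation follows; the labels are made up and the function name is illustrative.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:  # both raters used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up labels for four grasps.
a = ["P1", "P1", "PC10", "P3"]
b = ["P1", "P3", "PC10", "P3"]
print(round(cohens_kappa(a, b), 3))  # 0.636
```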

4.3.1. Grasp category

Power grasps were the most used grasps in this experiment, accounting for 67.39% (N = 3179) of the total dataset, with the remaining instances being Precision grasps (14.54%, N = 686) and Intermediate grasps (3.24%, N = 153), as shown in Figure 7.

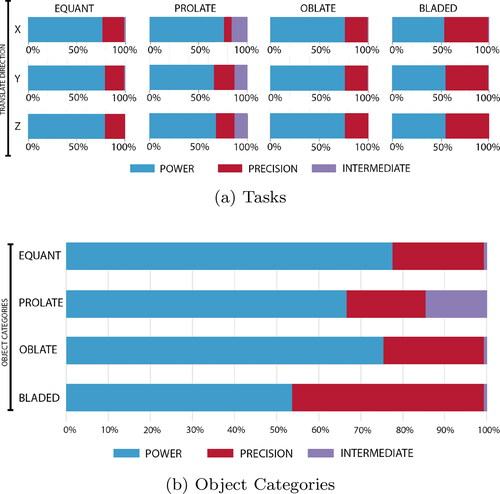

4.3.1.1. Task:

To understand whether task influenced grasp choice, grasp patterns for every translate task (X, Y, Z) were compared for every category of virtual objects (Equant, Prolate, Oblate and Bladed), and grasp choice categories (Power, Precision and Intermediate) were tabulated for each task and object group. A Chi-Squared Test of Independence showed that the differences were not statistically significant for any of the object categories: Equant (χ²(2, N = 1182) = 668.91, p = 0.261), Prolate (χ²(2, N = 1161) = 3.81, p = 0.432), Oblate (χ²(2, N = 1185) = 1.08, p = 0.896) and Bladed (χ²(2, N = 1188) = 5.66, p = 0.225).

4.3.1.2. Object category:

When comparing grasp labels between object categories (Equant, Prolate, Oblate and Bladed), there were differences in grasp category (Power, Intermediate and Precision). A Chi-Squared Test of Independence showed that this difference was statistically significant (χ²(3, N = 4717) = 668.91, p < 0.001) with a medium effect size (Cramér's V = 0.26). A visual representation of these results is provided in the accompanying figure.
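The chi-squared statistic and Cramér's V can be sketched as below. As a consistency check, plugging the reported χ² = 668.91 and N = 4717 into Cramér's V for a 3 × 4 table (min(rows, columns) − 1 = 2) gives V ≈ 0.266, in line with the reported 0.26. The function names are illustrative; a library routine such as SciPy's `chi2_contingency` would normally also supply the p-value.

```python
from __future__ import annotations

import math


def chi_square_independence(table: list[list[int]]) -> tuple[float, int]:
    """Pearson chi-squared statistic and degrees of freedom for an r x c table.

    Assumes every row and column total is non-zero (otherwise an expected
    count would be zero and the statistic undefined).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, dof


def cramers_v(chi2: float, n: int, n_rows: int, n_cols: int) -> float:
    """Cramér's V effect size: sqrt(chi2 / (n * (min(r, c) - 1)))."""
    return math.sqrt(chi2 / (n * (min(n_rows, n_cols) - 1)))


# Check against the reported values for the 3 x 4 grasp category table.
print(round(cramers_v(668.91, 4717, 3, 4), 3))  # 0.266
```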

4.3.2. Most common grasps

Figure 8 shows the most common grasp types and their usage percentages. Large Diameter [P1] from the Power grasp category was the most prevalent grasp type, accounting for 38.75% of the labels in the dataset (N = 1828). This grasp was followed by the Precision Disk [PC10] grasp from the Precision grasp category with 14.54% of the labels (N = 686) and the Medium Wrap [P3] from the Power grasp category with 13.44% of the labels (N = 634). In total, the six most used grasps accounted for 85.18% (N = 4018) of the labelled data.

4.3.3. User grasp choice agreement

Objects with an agreement score above 90% were Hammer (97.98%), Crackers Box (96.01%), Mustard bottle (92.22%) and Meat can (90.23%). Objects presenting an agreement below 50% were Mug (36.53%), Clamp (35.52%), Spoon (30.24%), Marker (25.64%) and Lego brick (20.58%).

When looking at object shape categories, Equant objects showed an overall agreement of 55.56%, Prolate objects showed an agreement of 59.92%, Oblate objects showed an agreement of 71.65% and Bladed objects showed an agreement of 79.50%.

4.4. Taxonomy of grasp types

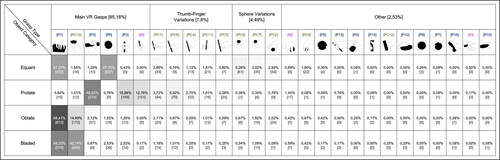

The Real Grasp Taxonomy by Feix et al. (Citation2014a, Citation2014b, Citation2009) is structured on findings from the study of human grasping, grouping grasp types into Power, Precision and Intermediate, as well as Thumb Abducted and Thumb Adducted; these groupings are known as taxonomy dimensions. To define the dimensions of the VR grasping taxonomy, the 27 grasp types used in this experiment were grouped into meaningful categories by frequency of usage. The first subcategory is Main VR grasps, which represents the six most used grasps.

Figure 8. Most commonly used grasp types in this experiment. The six most used grasp types accounted for more than 85% of the labelled data (a), with the most used grasp type being Large Diameter [P1] (b).

![Figure 8. Most common used grasp types in this experiment. The six most used grasp types accounted for more than 85% of the labelled data (a), with the most used grasp type being large diameter [P1] (b).](/cms/asset/5ca4c734-9b5d-4b06-b21e-65bd129897f6/hihc_a_2351719_f0008_c.jpg)

Results from this study also showed that much of the variation in grasp choice arose from participants using a different number of fingers to perform similar grasps on the same objects. These variations were therefore grouped under the Thumb-Finger Variations category, which accounted for 7.80% (368 instances) of the total dataset and contains Precision grasp types in which the number of fingers used together with the thumb varies: Thumb-Index Finger [PC1], Thumb 2-Finger [PC4], Thumb 3-Finger [PC5], Thumb 4-Finger [P] and Tip Pinch [PC11], which were used in 7.8% of instances (N = 367).

The next category by frequency of use was Sphere Variations, which accounted for 4.49% (212 instances) of the total dataset. This category contains Precision grasp types in which the hand is shaped as if grasping a spherical object: Precision Sphere [PC9], Tripod [PC7] and Inferior Pincer [PC2], which were used in 4.49% of instances (N = 211). The remaining grasp types were grouped in the category Other, which was used in only 2.53% of instances (N = 119).
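The grouping into taxonomy subcategories can be expressed as a mapping from grasp label to subcategory. In the sketch below, the Thumb-Finger and Sphere Variation sets use the labels listed above (Thumb 4-Finger is left out because its label is incomplete in the text), while the set of six Main VR grasps is passed in by the caller; all names are illustrative. As a check, the reported subtotals (4018, 368, 212 and 119 instances) sum to the 4717 analysed grasps.

```python
from __future__ import annotations

from collections import Counter

# Labels as listed in the text; Thumb 4-Finger is omitted because its
# label is incomplete ("[P]") and would need to be added.
THUMB_FINGER_VARIATIONS = {"PC1", "PC4", "PC5", "PC11"}
SPHERE_VARIATIONS = {"PC9", "PC7", "PC2"}


def subcategory(label: str, main_grasps: set[str]) -> str:
    """Assign a grasp label to a VR taxonomy subcategory."""
    if label in main_grasps:
        return "Main VR grasps"
    if label in THUMB_FINGER_VARIATIONS:
        return "Thumb-Finger Variations"
    if label in SPHERE_VARIATIONS:
        return "Sphere Variations"
    return "Other"


def subcategory_shares(label_counts: dict[str, int], main_grasps: set[str]) -> dict[str, float]:
    """Percentage of all grasp instances falling in each subcategory."""
    totals: Counter[str] = Counter()
    for label, count in label_counts.items():
        totals[subcategory(label, main_grasps)] += count
    n = sum(totals.values())
    return {group: 100 * count / n for group, count in totals.items()}


# Made-up counts; in practice main_grasps would hold the six most used labels.
print(subcategory_shares({"P1": 6, "PC1": 2, "PC9": 1, "other_label": 1}, {"P1"}))
# {'Main VR grasps': 60.0, 'Thumb-Finger Variations': 20.0, 'Sphere Variations': 10.0, 'Other': 10.0}
```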

The VR grasping taxonomy figure shows the relationship between object category and grasp type, with percentages and numbers of instances for each object category (Equant, Prolate, Oblate and Bladed).

5. Discussion

Results in this article showed that virtual object shape influences grasp patterns in VR in terms of grasp labels. GAp did not differ significantly between object shape categories; however, it did vary for individual objects, suggesting a correlation between the size of the grasped location and GAp when grasping in VR, which is consistent with real grasping literature (Feix et al., Citation2014b). In alignment with previously reported results in VR grasping (Blaga et al., Citation2021a), participants grasped virtual objects with apertures smaller or larger than the object size, with the lack of haptic feedback potentially introducing errors in object size estimation.

5.1. Grasp Aperture: GAp

Results of this experiment showed that differences in GAp between object shape categories were not significant, suggesting that in VR, GAp is not directly influenced by object shape when objects are categorised using the dimension representation of the Zingg (Citation1935) method. This contrasts with real grasping literature, where grasp aperture has been shown to be influenced by object size (Feix et al., Citation2009).

Equant objects presented the highest variability in terms of GAp patterns, with spherical/cylindrical objects such as the Mug and Orange being grasped smaller than all object dimensions, while cuboid objects (Brick and Lego) were grasped both smaller and larger than object dimensions, as shown in Figure 6a. This is consistent with MR grasping research, where it has been shown that participants grasped spherical objects smaller than cuboid objects (Al-Kalbani et al., Citation2016a). Additionally, some objects in this category present unique grasping challenges; for example, the Mug offers multiple graspable locations (body, top and handle), as explored in more detail in Blaga et al. (Citation2020).

Objects in the Prolate category showed more similarities in terms of GAp, with all objects predominantly grasped larger than dimensions B and C and always smaller than dimension A, as shown in Figure 6b. This may have been due to the nature of Prolate objects, which are characterised by long and narrow bodies where dimension A is considerably larger than dimensions B and C. For example, objects used in this experiment have A greater than 120 mm, whereas in real environments comfortable grasps are typically less than 70 mm (Feix et al., Citation2014b) and MR research has shown that the most comfortable grasp size is 80 mm (Al-Kalbani et al., Citation2016a), suggesting that grasping along this longer dimension would not be intuitive for users. A similar pattern was found for objects in the Oblate and Bladed categories (Figures 6c and 6d), which were predominantly grasped smaller than dimensions A and B, but larger than dimension C.

Real object grasping research has shown that the human hand tends to grasp the smallest dimension of an object (C) (Feix et al., Citation2014b). This has also been shown in previous virtual grasping literature (Blaga et al., Citation2021a). In our results, users overestimated object size for all object categories, with some exceptions in the Equant category.

While a clear pattern in GAp was not identified for the Zingg (Citation1935) object categories, a strong connection between GAp and grasp type can be observed in this work. Equant, Oblate and Bladed objects were predominantly grasped with a mean GAp ranging from 50 to 58 mm and the Large Diameter [P1] grasp type, which in real environments is linked to a grasp size of 70 mm, while Prolate objects were grasped with a mean GAp of 39 mm and the Medium Wrap [P3] grasp type, which in real environments is linked to a grasp size of 45 mm. Moreover, objects with lower user grasp choice agreement also showed higher variability in GAp, indicating that finger placement (grasp type) changes together with hand opening (aperture), as is known from real grasping. Therefore, hypothesis H1 (virtual object shape has an effect on grasping patterns in VR) is accepted, as differences in grasp labels were found between object shape categories. When comparing grasp labels for different translate tasks, no significant differences were found, so we fail to reject hypothesis H2 (translate tasks do not have an effect on grasping patterns in VR).

5.1.1. Grasp labels

Results showed that grasp choice was not influenced by simple translate tasks but was primarily influenced by virtual object shape. This is in alignment with previous literature in VR, where grasp metrics did not change with the orientation or repositioning of virtual objects (Blaga et al., Citation2021a). These results, however, contrast with real grasping literature, where grasp choice is highly influenced by the intended task (Napier, Citation1956).

In real grasping literature, it has been shown that objects with irregular shapes present the largest variation in grasping approach (Feix et al., Citation2014b). A similar result was found in this study, where Mug, Clamp, Spoon, Marker and Lego showed user grasp choice agreements below 40%. These objects not only have irregular shapes but also present multiple graspable locations such as handles (Mug, Clamp), multiple intuitive graspable possibilities (Spoon, which could be grasped as a regular cylindrical object, or with more precision for eating; Marker, which could be grasped as a regular cylindrical or with more precision for writing) or very small objects (Lego) which are linked to higher variations of grasp types in real environments (Feix et al., Citation2014b).

5.2. Taxonomy of grasp types

5.2.1. Complete taxonomy

Strictly following the user elicitation and labelling methodologies presented by Blaga et al. (Citation2021a), and to deliver a taxonomy comparable to the work of Feix et al. (Citation2009, Citation2014a), we have presented the first complete Taxonomy of Grasp Types in VR. The taxonomy is structured following the methodology for defining taxonomy dimensions in real grasping literature (Feix et al., Citation2009) and illustrates that, of the potential grasps from real grasping, only 27 were present in VR in this study.

5.2.2. Main VR grasps

A key finding in this work is that 6 grasps in VR account for more than 85% of the grasping instances, in contrast to the 13 different grasp types that accounted for 82.80% of the data in the most complete real grasping taxonomy to date (Feix et al., Citation2009). Similar results in VR grasping were reported by Blaga et al. (Citation2021a), suggesting an overall lower variability when grasping objects of different shapes in VR. This lower variability in grasp approach has also been reported in prior immersive technology grasping literature (Al-Kalbani et al., Citation2016a) and in gesture elicitation studies (Billinghurst et al., Citation2014), where subjects used a small variety of hand poses across tasks.

5.2.3. Most common grasp type

In real environments, the Medium Wrap [P3] grasp type from the Power grasp category is the most common grasp used when manipulating real objects (Feix et al., Citation2014b); yet the VR taxonomy presented in this study showed Large Diameter [P1] to be the most common grasp type across object categories, accounting for 38.75% of the dataset. The main difference between these two grasps is that Large Diameter [P1] involves a larger hand opening (GAp) and the hand is not wrapped around the object as in the Medium Wrap [P3]. Blaga et al. (Citation2021a) reported similar results, with Large Diameter accounting for just below 40% of their dataset. This suggests that even though the virtual objects used in this study were of different shapes and sizes, subjects did not focus on performing a grasp around the boundaries of the virtual object, which might have been influenced by the lack of haptic feedback.

5.2.4. Influence of object shape

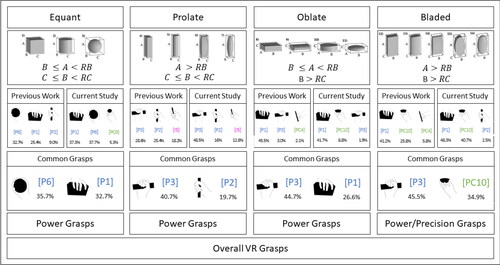

Considering how grasp type changes between object shape categories, unique patterns were identified for different shapes; full details are given in the VR grasping taxonomy and the figure above. Equant objects were predominantly grasped using a Power Sphere [P6] and a Large Diameter [P1], followed by Sphere Variations. This shows that subjects adjusted their grasp to object shape, as Equant objects are variations of cuboid or spherical objects. A similar pattern was found for Prolate objects, which have long tubular bodies and were predominantly grasped using Medium Wrap [P3] and Small Diameter [P2]; these grasps differ in terms of hand opening, and both are predominantly used to create a stable grasp for heavy objects (Feix et al., Citation2014b). While object weight was not a factor in this case, the tubular shape and the object sizes at the graspable locations (less than 36 mm for every object in this category) might have influenced the grasping pattern for this category, showing a link between virtual object characteristics and grasp type even when hand occlusion and lack of haptic feedback introduce errors in object size estimation (Murcia-López & Steed, Citation2018).

Subjects used more Thumb-Finger Variation grasps for the Prolate category than for other categories. This finding could be linked to the lack of weight feedback in VR interactions, which allows users to grasp objects in a comfortable manner instead of prioritising stability (e.g., a hammer can be grasped using a pinch grasp or other finger variations in VR, which would not be possible in real environments). A lack of awareness of the number of fingers involved in grasping was observed for all virtual objects used in this experiment. Variations of the same grasp using a different number of digits to perform precision grasps were used instinctively for the same object, as shown in the VR Taxonomy ([PC1], [P], [PC4], [PC1] and [PC5]). This finding aligns with prior user elicitation studies defining mid-air gesture interactions for augmented reality (Piumsomboon et al., Citation2013) and wall display interactions (Wittorf & Jakobsen, Citation2016), where users did not show awareness of the number of digits they were using while interacting. This contrasts with grasping and manipulating real objects, where the number of digits involved is influenced by object size (Bullock et al., Citation2015), increasing with size and mass (Cesari & Newell, Citation1999, Citation2000).

Oblate objects were predominantly grasped using Large Diameter [P1] from the Power category, followed by Precision Disk [PC10] from the Precision category. A similar pattern was found for Bladed objects; however, the distribution of Large Diameter [P1] and Precision Disk [PC10] is more balanced for Bladed objects. While high variability among grasp types of the same category was expected, the prevalence of grasp types from different categories, which are fundamentally different from each other, raises the question of whether virtual objects that offer multiple grasping possibilities (e.g., Clamp, Scissors, Sponge) are in fact outliers that skew the taxonomy results for these categories. A post-analysis revealed that more than 98% of the Precision Disk [PC10] grasp instances in the Oblate category were for grasping the Clamp, while more than 90% of the Precision Disk [PC10] grasp instances in the Bladed category were for the Scissors and Sponge.

5.2.5. Overall VR grasps

Building on prior work, for each of Zingg’s object categories we have defined the two most common VR grasps from this study and from the work of Blaga et al. (Citation2021a, Citation2021c). Considering the difference in dataset size between previous literature (N = 1872) in Blaga et al. (Citation2021a, Citation2021c) and our study (N = 4800), we compute the common grasps as percentages weighted in proportion to the dataset sizes. We therefore define the following 5 grasps as the most common in VR: Power Sphere [P6], Large Diameter [P1], Medium Wrap [P3], Small Diameter [P2] and Precision Disk [PC10], with 4 of these 5 coming from the Power grasp category (Figure 10). This prevalence of power grasps in VR aligns with prior work on real object grasping, where power grasps have proven useful and practical for large, small and lightweight objects (Feix et al., Citation2014b). Furthermore, prior VR studies have also illustrated how users employ a common grasping dimension for virtual objects, which is often larger than the virtual object dimensions (Al-Kalbani et al., Citation2016b). This tendency of users to overestimate object size and perform larger grasps than would be needed in reality could explain the prevalence of power grasps in VR and could account for power grasps being used in 80% of grasps in VR.
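The text does not give the exact weighting formula; one plausible reading, sketched below, pools each grasp's share across datasets as a mean weighted by dataset size. The prior-work share used in the example (0.39) is a hypothetical placeholder rather than a reported value, and `pooled_share` is an illustrative name.

```python
from __future__ import annotations


def pooled_share(studies: list[tuple[float, int]]) -> float:
    """Size-weighted mean of a grasp's share across datasets.

    studies: (share of the grasp in a dataset, number of grasps in it).
    """
    total = sum(n for _, n in studies)
    return sum(share * n for share, n in studies) / total


# Hypothetical prior share of 0.39 (N = 1872) pooled with 0.3875 (N = 4800).
print(round(pooled_share([(0.39, 1872), (0.3875, 4800)]), 4))  # 0.3882
```

Weighting by dataset size means the larger study dominates the pooled value, which matches the stated aim of making percentages proportionate to the data sizes.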

Figure 10. Most common grasps per Zingg’s object category, based on the results of this study and previous results reported in the literature (Blaga et al., Citation2021a, Citation2021c), showing the proposed common grasps and the categories they belong to in the human GRASP taxonomy (Feix et al., Citation2009) and in the VR taxonomy of grasp types.

5.3. Limitations

While this work has presented the first complete taxonomy for VR grasping, several constraints should be highlighted. Primarily, by building on existing studies in VR (Blaga et al., Citation2021a) and real object grasping (Feix et al., Citation2014a, Citation2014b), the methodologies employed emulate the prior research and thus share its inherent limitations. One limitation is the diversity of participant selection and the reach-to-grasp task. To extend the taxonomy, future work should determine the influence that participant specifics (e.g., hand dominance) have on grasping patterns. Likewise, to support more inclusive VR experiences, taxonomies for both real and VR object grasping should sample a more diverse participant group, responding to the research agenda of Creed et al. (Citation2023) and the call to action of Peck et al. (Citation2021). Additionally, while every effort was made to support a direct comparison with the work of Blaga et al. (Citation2021a), future work should also explore a more unconstrained grasping task similar to the work of Bullock et al. (Citation2014). If completed, this would enable a richer consideration of how the task can influence users' grasp choice and enable future task-based categorisation in VR against the work in real grasping. Finally, to match the methodology of Blaga et al. (Citation2021a), haptic feedback was not provided within this work, and it could therefore be considered as a future route for exploration. Haptic feedback, although not standard in consumer VR hardware, has been shown to improve presence and embodiment in VR, though often with increased discomfort for users. While still in its infancy, mid-air haptics has shown some promise for grasping smaller objects in VR (Frutos-Pascual et al., Citation2019) and could therefore be considered for a future extension of this work.

6. Conclusions and recommendations

This article presented VR-Grasp, a taxonomy of grasp types for VR objects. The taxonomy is structured on the frequency and commonality of grasping instances in a translate interaction task and employed the methodologies of Feix et al. (Citation2009), Feix et al. (Citation2014a) and Blaga et al. (Citation2021a). The results provide the first fully comparable grasping taxonomy between real and virtual objects covering 27 different VR grasps from the power, intermediate and precision grasp categories. This taxonomy bridges a research gap for immersive technology which now supports complementary comparisons to be drawn with real object grasping.

Complementing the taxonomy, commonalities between our results and the work of Blaga et al. (Citation2021a) illustrate that in VR the grasping patterns can be reduced further, from the potential 27 grasps to the 5 most common VR grasps. We recommend that interaction designers and researchers in VR consider these findings when creating computationally efficient, yet highly immersive, virtual environments. Additionally, as a prevalence of power grasps was reported in this work, a generalised power grasp model could be applied to further improve computational efficiency in VR, removing the need to focus on the nuances of fingertip placement and virtual object bounds.

We hope this work will lead towards the development of further guidelines for understanding virtual grasping patterns and lead to more usable VR experiences. Moreover, we wish for this taxonomy to be used as a framework for comparative analysis of freehand grasping-based interaction techniques, providing researchers with both a methodology and the main grasp categories for future VR research and development.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Andreea Dalia Blaga

Andreea Dalia Blaga received the PhD degree in Human-Computer Interaction from Birmingham City University, United Kingdom in 2023. She is currently working as a Senior Researcher for Mosaic Group (NASDAQ:IAC). Her research includes human-computer interaction and user experience in digital environments such as mobile, desktop, VR/AR.

Maite Frutos-Pascual

Maite Frutos-Pascual, Senior Lecturer at Birmingham City University, U.K., specializes in immersive technologies, HCI, interactive systems, and sensor data analysis. She holds a BEng in Telecommunications Engineering, an MSc in Software Development with distinction, and a PhD in Computer Science from the University of Deusto, Spain.

Chris Creed

Chris Creed is a Professor of Human-Computer Interaction (HCI) at Birmingham City University where he leads HCI research around the design and development of inclusive technologies for disabled people across a range of impairments.

Ian Williams

Ian Williams is a full Professor of Visual Computing at Birmingham City University. He leads research into 3D UI, Computer Vision and XR systems. Having both an industry and academic background Prof Williams has received national and international acclaim for the impact and value his research has for industry.

References

- Al-Kalbani, M., Frutos-Pascual, M., & Williams, I. (2017). Freehand grasping in mixed reality: Analysing variation during transition phase of interaction. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, New York, NY, USA (pp. 110–114). Association for Computing Machinery. https://doi.org/10.1145/3136755.3136776

- Al-Kalbani, M., Frutos-Pascual, M., & Williams, I. (2019). Virtual object grasping in augmented reality: Drop shadows for improved interaction. In 2019 11th International Conference on Virtual Worlds and Games for Serious Applications (VS-Games) (pp. 1–8). https://doi.org/10.1109/VS-Games.2019.8864596

- Al-Kalbani, M., Williams, I., & Frutos-Pascual, M. (2016a). Analysis of medium wrap freehand virtual object grasping in exocentric mixed reality. In 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 84–93). https://doi.org/10.1109/ISMAR.2016.14

- Al-Kalbani, M., Williams, I., & Frutos-Pascual, M. (2016b). Improving freehand placement for grasping virtual objects via dual view visual feedback in mixed reality. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, New York, NY, USA (pp. 279–282). Association for Computing Machinery. https://doi.org/10.1145/2993369.2993401

- Billinghurst, M., Piumsomboon, T., & Bai, H. (2014). Hands in space: Gesture interaction with augmented-reality interfaces. IEEE Computer Graphics and Applications, 34(1), 77–80. https://doi.org/10.1109/MCG.2014.8

- Blaga, A. D., Frutos-Pascual, M., Creed, C., & Williams, I. (2020). Too hot to handle: An evaluation of the effect of thermal visual representation on user grasping interaction in virtual reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, New York, NY, USA (pp. 1–16). Association for Computing Machinery. https://doi.org/10.1145/3313831.3376554

- Blaga, A. D., Frutos-Pascual, M., Creed, C., & Williams, I. (2021a). Freehand grasping: An analysis of grasping for docking tasks in virtual reality. In 2021 IEEE Virtual Reality and 3D User Interfaces (VR) (pp. 749–758). https://doi.org/10.1109/VR50410.2021.00102

- Blaga, A. D., Frutos-Pascual, M., Creed, C., & Williams, I. (2021b). A grasp on reality: Understanding grasping patterns for object interaction in real and virtual environments. In 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct) (pp. 391–396). https://doi.org/10.1109/ISMAR-Adjunct54149.2021.00090

- Blaga, A. D., Frutos-Pascual, M., Creed, C., & Williams, I. (2021c). Virtual object categorisation methods: Towards a richer understanding of object grasping for virtual reality. In Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology. Association for Computing Machinery. https://doi.org/10.1145/3489849.3489875

- Bowman, D. A., & Hodges, L. F. (1999). Formalizing the design, evaluation, and application of interaction techniques for immersive virtual environments. Journal of Visual Languages and Computing, 10(1), 37–53. https://doi.org/10.1006/jvlc.1998.0111

- Bullock, I., Feix, T., & Dollar, A. (2014). The Yale human grasping dataset: Grasp, object, and task data in household and machine shop environments. The International Journal of Robotics Research, 34(3), 251–255. https://doi.org/10.1177/0278364914555720

- Bullock, I., Feix, T., & Dollar, A. (2015). Human precision manipulation workspace: Effects of object size and number of fingers used. In 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (pp. 5768–5772). IEEE. https://doi.org/10.1109/EMBC.2015.7319703

- Bullock, I., Zheng, J., Rosa, S., Guertler, C., & Dollar, A. (2013). Grasp frequency and usage in daily household and machine shop tasks. IEEE Transactions on Haptics, 6(3), 296–308. https://doi.org/10.1109/TOH.2013.6

- Calli, B., Walsman, A., Singh, A., Srinivasa, S., Abbeel, P., & Dollar, A. M. (2015). Benchmarking in manipulation research: Using the Yale-CMU-Berkeley object and model set. IEEE Robotics & Automation Magazine, 22(3), 36–52. https://doi.org/10.1109/MRA.2015.2448951

- Cesari, P., & Newell, K. (1999). The scaling of human grip configurations. Journal of Experimental Psychology: Human Perception and Performance, 25(4), 927–935. https://doi.org/10.1037//0096-1523.25.4.927

- Cesari, P., & Newell, K. (2000). Body-scaled transitions in human grip configurations. Journal of Experimental Psychology: Human Perception and Performance, 26(5), 1657–1668. https://doi.org/10.1037/0096-1523.26.5.1657

- Chen, Y.-S., Han, P.-H., Lee, K.-C., Hsieh, C.-E., Hsiao, J.-C., Hsu, C.-J., Chen, K.-W., Chou, C.-H., & Hung, Y.-P. (2018). Lotus: Enhancing the immersive experience in virtual environment with mist-based olfactory display. In SIGGRAPH Asia 2018 Virtual and Augmented Reality. Association for Computing Machinery. https://doi.org/10.1145/3275495.3275503

- Creed, C., Al-Kalbani, M., Theil, A., Sarcar, S., & Williams, I. (2023). Inclusive augmented and virtual reality: A research agenda. International Journal of Human–Computer Interaction. Advance online publication. https://doi.org/10.1080/10447318.2023.2247614

- Cutkosky, M., & Kao, I. (1989). Computing and controlling compliance of a robotic hand. IEEE Transactions on Robotics and Automation, 5(2), 151–165. https://doi.org/10.1109/70.88036

- Cutkosky, M. R. (1989). On grasp choice, grasp models, and the design of hands for manufacturing tasks. IEEE Transactions on Robotics and Automation, 5(3), 269–279. https://doi.org/10.1109/70.34763

- Cutkosky, M. R., & Wright, P. K. (1986). Friction, stability and the design of robotic fingers. The International Journal of Robotics Research, 5(4), 20–37. https://doi.org/10.1177/027836498600500402

- Feix, T., Bullock, I., & Dollar, A. (2014a). Analysis of human grasping behavior: Correlating tasks, objects and grasps. IEEE Transactions on Haptics, 7(4), 430–441. https://doi.org/10.1109/TOH.2014.2326867

- Feix, T., Bullock, I., & Dollar, A. (2014b). Analysis of human grasping behavior: Object characteristics and grasp type. IEEE Transactions on Haptics, 7(3), 311–323. https://doi.org/10.1109/TOH.2014.2326871

- Feix, T., Pawlik, R., & Schmiedmayer, H.-B. (2009). A comprehensive grasp taxonomy. In Robotics, Science and Systems Conference: Workshop on Understanding the Human Hand for Advancing Robotic Manipulation. https://api.semanticscholar.org/CorpusID:1676242

- Feix, T., Romero, J., Schmiedmayer, H.-B., Dollar, A. M., & Kragic, D. (2016). The grasp taxonomy of human grasp types. IEEE Transactions on Human-Machine Systems, 46(1), 66–77. https://doi.org/10.1109/THMS.2015.2470657

- Friedman, M. (1940). A comparison of alternative tests of significance for the problem of m rankings. The Annals of Mathematical Statistics, 11(1), 86–92. https://doi.org/10.1214/aoms/1177731944

- Frutos-Pascual, M., Creed, C., Harrison, J. M., & Williams, I. (2019). Evaluation of ultrasound haptics as a supplementary feedback cue for grasping in virtual environments. In International Conference on Multimodal Interaction (ICMI ’19). https://doi.org/10.1145/3340555.3353720

- Gianaros, P. J., Muth, E., Mordkoff, J., Levine, M., & Stern, R. M. (2001). A questionnaire for the assessment of the multiple dimensions of motion sickness. Aviation, Space, and Environmental Medicine, 72(2), 115–119. https://api.semanticscholar.org/CorpusID:42535853

- Hart, S. G., & Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In P. A. Hancock & N. Meshkati (Eds.), Human mental workload (Vol. 52, pp. 139–183). North-Holland. https://doi.org/10.1016/S0166-4115(08)62386-9

- Hartney, J. H., Rosenthal, S. N., Kirkpatrick, A. M., Skinner, J. M., Hughes, J., & Orlosky, J. (2019). Revisiting virtual reality for practical use in therapy: Patient satisfaction in outpatient rehabilitation. In 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (pp. 960–961). https://doi.org/10.1109/VR.2019.8797857

- Heim, M. (2000). Virtual realism. Oxford University Press USA. https://doi.org/10.1093/oso/9780195104264.001.0001

- Hertel, J., Karaosmanoglu, S., Schmidt, S., Braeker, J., Semmann, M., & Steinicke, F. (2021). A taxonomy of interaction techniques for immersive augmented reality based on an iterative literature review. In 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) (pp. 431–440). https://doi.org/10.1109/ISMAR52148.2021.00060

- Hetherington, R. (1954). The Snellen chart as a test of visual acuity. Psychologische Forschung, 24(4), 349–357. https://doi.org/10.1007/BF00422033

- Holz, D., Ullrich, S., Wolter, M., & Kuhlen, T. (2008). Multi-contact grasp interaction for virtual environments. JVRB – Journal of Virtual Reality and Broadcasting, 5(2008), 7. https://doi.org/10.20385/1860-2037/5.2008.7

- Hudson, S., Matson-Barkat, S., Pallamin, N., & Jegou, G. (2019). With or without you? Interaction and immersion in a virtual reality experience. Journal of Business Research, 100(1), 459–468. https://doi.org/10.1016/j.jbusres.2018.10.062

- Islam, M. S., & Lim, S. (2022). Vibrotactile feedback in virtual motor learning: A systematic review. Applied Ergonomics, 101, 103694. https://doi.org/10.1016/j.apergo.2022.103694

- Jacobs, J., & Froehlich, B. (2011). A soft hand model for physically-based manipulation of virtual objects. In 2011 IEEE Virtual Reality Conference (pp. 11–18). https://doi.org/10.1109/VR.2011.5759430

- Keller, A. D., Taylor, C. L., & Zahm, V. (1947). Studies to determine the functional requirements for hand and arm prosthesis. Department of Engineering, University of California at Los Angeles.

- Kerr, J., & Roth, B. (1986). Analysis of multifingered hands. The International Journal of Robotics Research, 4(4), 3–17. https://doi.org/10.1177/027836498600400401

- Kleinman, E., Preetham, N., Teng, Z., Bryant, A., & Seif El-Nasr, M. (2021). What happened here!? A taxonomy for user interaction with spatio-temporal game data visualization. Proceedings of the ACM on Human-Computer Interaction, 5(CHI PLAY), 1–27. https://doi.org/10.1145/3474687

- Lukos, J., Snider, J., Hernandez, M., Tunik, E., Hillyard, S., & Poizner, H. (2013). Parkinson’s disease patients show impaired corrective grasp control and eye-hand coupling when reaching to grasp virtual objects. Neuroscience, 254, 205–221. https://doi.org/10.1016/j.neuroscience.2013.09.026

- Lyons, D. (1985). A simple set of grasps for a dextrous hand. In Proceedings of the 1985 IEEE International Conference on Robotics and Automation (Vol. 2, pp. 588–593). https://doi.org/10.1109/ROBOT.1985.1087226

- MacKenzie, C. L., & Iberall, T. (1994). Chapter 2: Prehension. In C. L. MacKenzie & T. Iberall (Eds.), The grasping hand (Vol. 104, pp. 15–46). North-Holland. https://doi.org/10.1016/S0166-4115(08)61574-5

- Mason, M. T., & Salisbury, J. K. (1985). Robot hands and the mechanics of manipulation. MIT Press. https://doi.org/10.1109/PROC.1987.13861

- Monaco, S., Sedda, A., Cavina-Pratesi, C., & Culham, J. (2014). Neural correlates of object size and object location during grasping actions. The European Journal of Neuroscience, 41(4), 454–465. https://doi.org/10.1111/ejn.12786

- Morris, M. R., Danielescu, A., Drucker, S., Fisher, D., Lee, B., Schraefel, M. C., & Wobbrock, J. O. (2014). Reducing legacy bias in gesture elicitation studies. Interactions, 21(3), 40–45. https://doi.org/10.1145/2591689

- Muhanna, M. A. (2015). Virtual reality and the cave: Taxonomy, interaction challenges and research directions. Journal of King Saud University – Computer and Information Sciences, 27(3), 344–361. https://doi.org/10.1016/j.jksuci.2014.03.023

- Murcia-López, M., & Steed, A. (2018). A comparison of virtual and physical training transfer of bimanual assembly tasks. IEEE Transactions on Visualization and Computer Graphics, 24(4), 1574–1583. https://doi.org/10.1109/TVCG.2018.2793638

- Napier, J. R. (1956). The prehensile movements of the human hand. The Journal of Bone and Joint Surgery. British Volume, 38-B(4), 902–913. https://doi.org/10.1302/0301-620X.38B4.902

- Nowak, D. A. (2009). Sensorimotor control of grasping: Physiology and pathophysiology. Cambridge University Press. https://doi.org/10.1017/CBO9780511581267

- Ohwovoriole, M. S., & Roth, B. (1981). An extension of screw theory. Journal of Mechanical Design, 103(4), 725–735. https://doi.org/10.1115/1.3254979

- Peck, T. C., McMullen, K. A., & Quarles, J. (2021). DiVRsify: Break the cycle and develop VR for everyone. IEEE Computer Graphics and Applications, 41(6), 133–142. https://doi.org/10.1109/MCG.2021.3113455

- Pérez-Quiñones, M. A., Capra, R. G., & Shao, Z. (2003). The ears have it: A task by information structure taxonomy for voice access to web pages. In INTERACT (pp. 856–859). https://www.interaction-design.org/literature/conference/proceedings-of-the-tenth-international-conference-on-human-computer-interaction

- Pickford, R. (1944). The Ishihara test for color blindness. Nature, 153(3891), 656–657. https://doi.org/10.1038/153656b0

- Piumsomboon, T., Clark, A., Billinghurst, M., & Cockburn, A. (2013). User-defined gestures for augmented reality. In CHI ’13 Extended Abstracts on Human Factors in Computing Systems (pp. 955–960). https://doi.org/10.1007/978-3-642-40480-1_18

- Pollard, N., & Lozano-Perez, T. (1990). Grasp stability and feasibility for an arm with an articulated hand. In Proceedings of the 1990 IEEE International Conference on Robotics and Automation (Vol. 3, pp. 1581–1585). https://doi.org/10.1109/ROBOT.1990.126234

- Redmond, B., Aina, R., Gorti, T., & Hannaford, B. (2010). Haptic characteristics of some activities of daily living. In Proceedings of the 2010 IEEE Haptics Symposium (pp. 71–76). IEEE Computer Society. https://doi.org/10.1109/HAPTIC.2010.5444674

- Schlesinger, G. (1919). Der mechanische Aufbau der künstlichen Glieder [The mechanical construction of artificial limbs]. In Ersatzglieder und Arbeitshilfen: Für Kriegsbeschädigte und Unfallverletzte. Springer Berlin Heidelberg. https://doi.org/10.1007/978-3-662-33009-8_13

- Scoditti, A., Blanch, R., & Coutaz, J. (2011). A novel taxonomy for gestural interaction techniques based on accelerometers. In Proceedings of the 16th International Conference on Intelligent User Interfaces (pp. 63–72). Association for Computing Machinery. https://doi.org/10.1145/1943403.1943414

- Seneler, C. O., Basoglu, N., & Daim, T. U. (2008). A taxonomy for technology adoption: A human computer interaction perspective. In PICMET ’08 – 2008 Portland International Conference on Management of Engineering & Technology (pp. 2208–2219). https://doi.org/10.1109/PICMET.2008.4599843

- Shapiro, S. S., & Wilk, M. B. (1965). An analysis of variance test for normality (complete samples). Biometrika, 52(3-4), 591–611. https://doi.org/10.2307/2333709

- Simonsen, J., & Robertson, T. (Eds.). (2013). Routledge international handbook of participatory design. Routledge. https://doi.org/10.4324/9780203108543

- Sollerman, C., & Ejeskär, A. (1995). Sollerman hand function test: A standardised method and its use in tetraplegic patients. Scandinavian Journal of Plastic and Reconstructive Surgery and Hand Surgery, 29(2), 167–176. https://doi.org/10.3109/02844319509034334

- Sun, S. (2011). Meta-analysis of Cohen’s kappa. Health Services and Outcomes Research Methodology, 11(3–4), 145–163. https://doi.org/10.1007/s10742-011-0077-3

- Supuk, T., Bajd, T., & Kurillo, G. (2011). Assessment of reach-to-grasp trajectories toward stationary objects. Clinical Biomechanics, 26(8), 811–818. https://doi.org/10.1016/j.clinbiomech.2011.04.007

- Valentini, P. P. (2018). Natural interface for interactive virtual assembly in augmented reality using leap motion controller. International Journal on Interactive Design and Manufacturing (IJIDeM), 12(4), 1157–1165. https://doi.org/10.1007/s12008-018-0461-0

- Villarreal-Narvaez, S., Vanderdonckt, J., Vatavu, R.-D., & Wobbrock, J. O. (2020). A systematic review of gesture elicitation studies: What can we learn from 216 studies? In Proceedings of the 2020 ACM Designing Interactive Systems Conference (pp. 855–872). Association for Computing Machinery. https://doi.org/10.1145/3357236.3395511

- Wan, H., Luo, Y., Gao, S., & Peng, Q. (2004). Realistic virtual hand modeling with applications for virtual grasping. In Proceedings of the 2004 ACM SIGGRAPH International Conference on Virtual Reality Continuum and Its Applications in Industry (pp. 81–87). Association for Computing Machinery. https://doi.org/10.1145/1044588.1044603

- Wang, Y., Su, Z., Zhang, N., Xing, R., Liu, D., Luan, T. H., & Shen, X. (2023). A survey on metaverse: Fundamentals, security, and privacy. IEEE Communications Surveys & Tutorials, 25(1), 319–352. https://doi.org/10.1109/comst.2022.3202047

- Wittorf, M. L., & Jakobsen, M. R. (2016). Eliciting mid-air gestures for wall-display interaction. In Proceedings of the 9th Nordic Conference on Human-Computer Interaction (p. 3). https://doi.org/10.1145/2971485.2971503