ABSTRACT

Research has revealed that teachers find teaching and assessing socioscientific argumentation (SSA) challenging. In this study, ten pre-service science teachers (PSTs) tested a new Practical Assessment of Socioscientific Argumentation Model (PASM) that was developed to enhance skills in assessing SSA. The model’s design is based on the Teacher-oriented Assessment Framework. Here, we present the characteristics of PASM and examine how PSTs perceive that using PASM affects their competence in assessing SSA. PASM is divided into multiple phases and requires PSTs to perform three roles: arguing for and against a given socioscientific issue, and assessing other PSTs’ argumentation. It also includes group discussion and individual reflection phases. Two cycles of the model were performed, focusing on different issues (GMOs and nuclear power). Data were collected in the form of audio recordings of group discussions, field notes from whole-class discussions, and the PSTs’ individual written reflections. Thematic analysis revealed that the PSTs discussed and reflected on four main themes: the focus of the assessment, the tools in PASM, the nature of PASM, and coping strategies. The nature of PASM, with iterative cycles and repeated reflections, expanded the PSTs’ views on assessing this kind of argumentation and made them aware of quality criteria that should be included in the assessment of SSA. We conclude that training in assessing SSA should be included in teacher education and that PASM could be a valuable tool for this purpose.

Introduction

Research has shown that including socioscientific issues (SSI) in science education increases students’ interest in science and motivation to learn (Albe, 2008), and can promote learning outcomes including decision-making, critical thinking, moral development, and argumentation skills (D. L. Zeidler & Keefer, 2003). SSI are contemporary scientific topics that affect societies and people’s lives (Sadler, 2004). They are often at the scientific frontier and require decision-making at both personal and societal levels. Moreover, SSI can be controversial and have no obvious correct answers; decisions are guided by personal values (Ratcliffe & Grace, 2003; Zeidler et al., 2009). SSI decision-making often involves risk assessment (Kolstø, 2006) and always has ethical aspects (Zeidler & Sadler, 2008; Reiss, 2010). When teaching science using SSI, deliberation is central. SSI provide a context for argumentation practice, require decision-making, and ask students to formulate a standpoint (which is part of citizenship education). Argumentation is thus central in SSI-driven curricula. While formal argumentation follows strict rules derived from formal logic, SSI argumentation is less rigid because it concerns ill-structured and open-ended issues; it has been termed informal argumentation (Chang & Chiu, 2008). In this paper, informal argumentation on SSI is referred to as socioscientific argumentation (SSA).

Argumentation is central to scientific knowledge production (Jiménez-Aleixandre & Erduran, 2008). Therefore, including argumentation on SSI in science education can increase students’ understanding of the nature of science and develop their scientific literacy (Zeidler, 2014). For these reasons, SSA has been incorporated into science curricula worldwide (Lindahl et al., 2019). However, scholars have reported that assessment is a major challenge when teaching argumentation and SSI in science education (e.g., Cetin et al., 2014; Christenson et al., 2017; V. Dawson & Carson, 2017; Sampson & Blanchard, 2012; Tideman & Nielsen, 2017). Here, we present and explore the potential of the Practical Assessment of Socioscientific Argumentation Model (PASM), which was developed to train pre-service teachers (PSTs) to assess SSA. PASM is based on the Teacher-oriented Assessment Framework (TAF), which was developed from a review of the research literature on what constitutes high-quality SSA, together with assessment guidelines from curricula and national tests (Christenson & Chang Rundgren, 2015).

Socioscientific argumentation (SSA)

Two types of argumentation are important in science education: scientific argumentation, which involves scientific issues without direct societal implications (e.g., how results from a scientific experiment should be interpreted), and SSA, which places science in a social setting where political debate, personal decision-making, and ethics are central (Jiménez-Aleixandre & Erduran, 2008). Like scientific argumentation, SSA includes evaluation of evidence (Chang & Chiu, 2008), but it also allows for multiple positions because each arguer is guided by their own values. SSA therefore cannot be assessed and evaluated in the same way as scientific argumentation, because it occurs in an SSI framework where the issues’ social and ethical aspects are relevant.

Challenges of teaching SSA

Teachers play a vital role in determining how SSA is taught in school science (e.g., Lee et al., 2006). However, Lee et al. (2006) reported that low awareness of the potential of SSI teaching causes many science teachers to exclude SSA from their teaching. Research has revealed many challenges that teachers pursuing SSI-driven curricula encounter when teaching SSA. These include a lack of resources (e.g., teaching materials and preparation time) for implementing SSI (Sadler et al., 2006), as well as challenges related to teachers’ epistemology. Several authors report that teachers consider teaching content knowledge more important than addressing the ethical or societal dimensions of science, which are an inevitable part of SSI in science education; consequently, these aspects are often simply excluded (D. L. Zeidler & Keefer, 2003; Levinson & Turner, 2001). In addition, there are concerns that teachers lack skills in SSI teaching activities and knowledge of the subject matter (Lee et al., 2006; Sadler et al., 2006). Teachers may also lack confidence in handling discussions (Simonneaux, 2008) and find it hard to express their own arguments, including opinions, ethics, and values (Sadler et al., 2006). This could increase the use of teacher-centered activities, contradicting the objective of including SSA in science education. Moreover, some teachers are reluctant to teach SSI because of difficulties in assessing the quality of their students’ arguments (Tideman & Nielsen, 2017).

Assessment of SSA

The use of SSI in science education gives teachers several opportunities to assess multiple knowledge requirements (Christenson et al., 2017). However, students need opportunities to practice the skills that will be assessed before the summative assessment occurs (Jönsson, 2017). When working with SSA, there are multiple ways for students to demonstrate their abilities that can serve as the basis for teachers’ assessment. These include oral debates (V. M. Dawson & Venville, 2010), role play (Simonneaux, 2001), letters to policy makers (Åkerblom & Lindahl, 2017), and media opinion pieces (Christenson et al., 2017). Such activities also give students opportunities to use the knowledge and skills needed to imitate and experience participation in democratic processes, and they provide opportunities to practice authentic assessment (Jönsson, 2017).

A prerequisite for successful assessment is that students are aware of and understand what they are expected to know and what criteria will be used in their assessment (Black & Wiliam, 2006). This can be achieved using assessment matrices. An assessment matrix is a tool that specifies qualitative assessment criteria at multiple levels corresponding to the knowledge requirements of the syllabus (Jönsson, 2017). The matrix may be designed for a specific exercise or be more general and applicable to multiple assessment practices.

Challenges in assessing SSA

Assessing skills related to SSI in science education is challenging, especially because teachers lack effective guidance on how to assess the quality of such skills, which discourages them from including SSA in their teaching (Levinson & Turner, 2001; Tideman & Nielsen, 2017). In a study on the role of SSI in biology teaching, Tideman and Nielsen (2017) found that none of the participating teachers could present a strategy for assessing learning objectives related to SSA.

When assessing SSA, teachers tend to focus primarily on students’ ability to use biological content knowledge (Tideman & Nielsen, 2017). Teachers have a “content-centered interpretation of socioscientific issues” (p. 55) that prioritizes content knowledge over the societal contextualization of issues. This suggests that SSI teaching activities are used instrumentally to give the biological content a context or perspective; the underlying assumption is that simply acquiring biological content knowledge will allow students to make informed decisions on SSI. As a result, assessments of SSA become biased toward students’ ability to use biological content knowledge. Teachers’ current assessment practices are thus a barrier to the implementation of full-fledged SSI teaching activities. The challenges of assessing SSA are particularly prominent in oral exams (Tideman & Nielsen, 2017). McNeill and Knight (2013) likewise reported that many teachers find it difficult to evaluate the quality of oral argumentation, and concluded that much further work is needed to develop reliable and valid tools for assessing argumentation. In addition, teachers tend to focus on summative assessment rather than including formative assessment activities related to SSA (Tideman & Nielsen, 2017).

Not only is assessing the quality of SSA very complex for both researchers and teachers, but few tools are available for doing so (Evagorou, 2011). Therefore, in addition to teaching support, teachers implementing SSI-driven curricula need consistent ways of evaluating and assessing SSA (Aydeniz & Ozdilek, 2016; Evagorou, 2011).

Frameworks for assessing quality in SSA

Several scholars argue that both structure and content should be considered when assessing SSA (Christenson, 2015; Nielsen, 2012; Sampson & Clark, 2008). However, existing analytical frameworks tend to focus on one or the other (Sampson & Clark, 2008). Most published studies, including those presenting analytical frameworks for analyzing scientific and socioscientific argumentation, have used the Toulmin argumentation pattern (TAP) or one of its variants (Lazarou & Erduran, 2021). TAP is a structure-oriented framework that is domain-general, meaning that it is applicable in all argumentation contexts. According to TAP, the strength or weakness of an argument depends on the presence or absence of specific combinations of structural components (Sampson & Clark, 2008; Toulmin, 2003). Although TAP is possibly the most influential and widely used framework for assessing argumentation quality in research, many researchers have experienced problems when using it (e.g., Lazarou & Erduran, 2021; Sampson & Clark, 2008; Zohar & Nemet, 2002). For example, difficulties have been reported with assigning segments of arguments to the right categories, and some TAP components are commonly missing in less formal types of argumentation such as SSA (Christenson & Chang Rundgren, 2015). Another limitation of TAP is that it only considers the presence or absence of components; neither the accuracy or relevance of the content nor the coherence and logical structure of the argument is assessed (Sampson & Clark, 2008).

Assessments of SSA and its quality must consider domain-specific content as well as structural and domain-general aspects (Christenson, 2015; Christenson & Chang Rundgren, 2015; Sampson & Clark, 2008). Because SSI are science-related issues that may present ethical dilemmas and have significant implications for society and people’s lives (Sadler, 2004), it is important for these aspects to be included in SSA. The literature includes several examples of an analytical focus on the content of arguments, and there is an ongoing discussion about the influence of content knowledge on the quality of argumentation (e.g., Cetin et al., 2014; Sadler & Fowler, 2006; Nielsen, 2012; Tideman & Nielsen, 2017). Content-related factors commonly emphasized in the research literature on assessing SSA include the use of data and content knowledge to justify claims (e.g., Sadler & Donnelly, 2006; Zohar & Nemet, 2002). This may include evaluating the relevance of the content knowledge to the SSI, as well as its scientific correctness and its depth or superficiality. The importance of including multiple perspectives, not only from the natural sciences but also from the social sciences and the humanities, has also been highlighted (e.g., Chang & Chiu, 2008; Wu & Tsai, 2007). Finally, the importance of addressing ethical considerations in SSA has been discussed in the research literature (Sadler & Donnelly, 2006; Tal & Kedmi, 2006) and is recognized in the Swedish curricula for compulsory and upper secondary schools (The Swedish National Agency for Education, 2018a, 2018b). Unfortunately, all the analytical frameworks applied in these works are too complex to use in assessment practice (Christenson, 2015; Christenson & Chang Rundgren, 2015).

To make a sustainable shift toward SSI-driven curricula, teachers need tools for assessing SSA quality that are discriminative, simple, relevant, and fair (V. Dawson & Carson, 2017).

Teacher-oriented assessment framework—TAF

The Teacher-oriented Assessment Framework (TAF; Christenson & Chang Rundgren, 2015) is a tool for assessing SSA that was developed to align with the Swedish curricula for compulsory and upper secondary schools (The Swedish National Agency for Education, 2018a, 2018b). It is divided into a set of components corresponding to different SSA quality criteria that have been discussed in the research literature. To maximize its usefulness to science teachers, it is kept simple but addresses both structural and content-related aspects of SSA (Christenson, 2015; Christenson & Chang Rundgren, 2015).

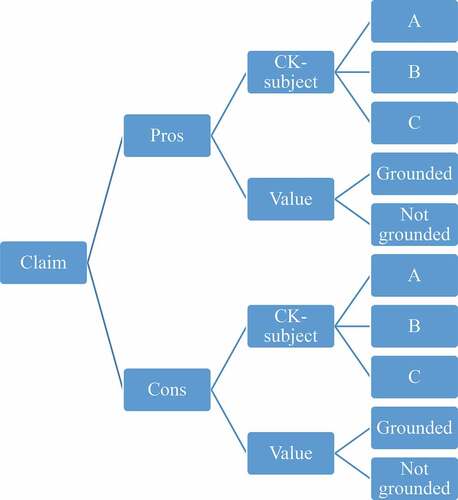

According to TAF, the structure of a socioscientific argument can be broken down into claims (decisions) that are supported by justifications (which may be either pros or cons). Justifications are evaluated in the same way as in Zohar and Nemet (2002), assessing both the use of content knowledge and value-based argumentation. The pros and cons are the justifications offered for or against the claim. Hence, the cons represent what TAP calls a rebuttal, or counter-argument. Justifications may be value-based statements or knowledge-based statements that use conceptual knowledge to support a claim.

Value-based justifications are classified as grounded or not grounded. Grounded value statements take both interspecific and intergenerational considerations into account (Reiss, 2010). Such statements show an elaborated moral judgment that goes beyond merely mirroring a person’s “gut feeling.” For example, a non-grounded value statement would be that a person opposes nuclear power on the basis that it is scary and makes that person worried (e.g., “I think nuclear power should be terminated because it scares me”). A more grounded statement, by contrast, would elaborate on the reasons for approving the use of GMOs and also consider the possible consequences in a broader sense, e.g., how GMOs could be used to feed more people in the future if access to food becomes limited on a global scale, but also how their use could affect ecosystems and other organisms.

Several studies have focused on students’ ability to use content knowledge in SSA (e.g., Sadler & Fowler, 2006; Klosterman & Sadler, 2010). The conceptual knowledge relevant to a specific issue is domain-specific, and knowledge from multiple disciplines may validly be brought to bear on a given SSI (Christenson et al., 2012), in keeping with the multidisciplinary nature of such issues. In TAF, the use of content knowledge in a justification is assigned a score of A, B, or C based on its relevance and scientific correctness:

A: Correct and relevant content knowledge is used (e.g., radiation from nuclear power is dangerous since it can harm DNA replication).

B: Nonspecific general knowledge (not directly related to the issue or focus) is used (e.g., in a discussion about nuclear power, an argument that describes the function of solar panels could be correct but not relevant).

C: Incorrect content knowledge (misconceptions or superficial scientific knowledge) is used (e.g., “one thing that is dangerous about GMO is that you will eat DNA and that is dangerous”).

Figure 1 shows how TAF breaks an argument down into hierarchical components. An assessment matrix was developed based on the TAF quality criteria (see Appendix 1).

Figure 1. TAF showing the different components of the SSA assessment framework and its hierarchical organization (modified from Christenson & Chang Rundgren, 2015).
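To make the hierarchy concrete, the components described above can be represented as a small data structure. The Python sketch below is a hypothetical encoding, not part of TAF or of the assessment matrix in Appendix 1: the names `Argument`, `Justification`, `Direction`, and `ContentScore` are illustrative assumptions, it assumes each justification is classified as either knowledge-based (scored A, B, or C) or value-based (grounded or not grounded), and its `profile` method only reports which components are present. It does not assign a grade, since TAF’s grading criteria are specified in the matrix and are not reproduced here.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional, Union

# Hypothetical encoding of the TAF hierarchy; names are illustrative only.


class Direction(Enum):
    PRO = "pro"  # justification offered for the claim
    CON = "con"  # counter-argument (a rebuttal in TAP terms)


class ContentScore(Enum):
    A = "correct and relevant content knowledge"
    B = "nonspecific general knowledge"
    C = "incorrect content knowledge"


@dataclass
class Justification:
    text: str
    direction: Direction
    # Exactly one of these is set: a content score for a knowledge-based
    # justification, or a grounded/not-grounded flag for a value-based one.
    content_score: Optional[ContentScore] = None
    grounded_value: Optional[bool] = None

    def __post_init__(self) -> None:
        if (self.content_score is None) == (self.grounded_value is None):
            raise ValueError("classify as either knowledge-based or value-based")


@dataclass
class Argument:
    claim: str  # the decision being argued for
    justifications: List[Justification] = field(default_factory=list)

    def profile(self) -> Dict[str, Union[bool, int, List[str]]]:
        """List which TAF components are present; this is not a grade."""
        values = [j for j in self.justifications if j.grounded_value is not None]
        return {
            "has_pro": any(j.direction is Direction.PRO for j in self.justifications),
            "has_con": any(j.direction is Direction.CON for j in self.justifications),
            "content_scores": sorted(
                j.content_score.name
                for j in self.justifications
                if j.content_score is not None
            ),
            "value_based": len(values),
            "grounded_values": sum(1 for j in values if j.grounded_value),
        }


# Usage with the nuclear power examples given in the text.
argument = Argument(
    claim="Nuclear power should be terminated",
    justifications=[
        Justification("Radiation can harm DNA replication", Direction.PRO,
                      content_score=ContentScore.A),
        Justification("It scares me", Direction.PRO, grounded_value=False),
    ],
)
print(argument.profile())
```

In this example the profile would show that the argument lacks a counter-argument and contains only a non-grounded value statement, two of the quality aspects the framework draws attention to.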

In the current study, TAF is used as part of PASM, a model aimed at enhancing PSTs’ skills in assessing socioscientific argumentation.

The Swedish context

Although PASM was developed and rationalized in the Swedish context, it is not intended to be valid only within this context. Rather, the ambition is that it should be useful in diverse contexts that require assessment of SSA. The Swedish curricula for both compulsory school (grades 1–9) and upper secondary school are partly SSI-driven (The Swedish National Agency for Education, 2018a, 2018b). The syllabi for all science subjects include SSI activities and argumentation practice, and there is an explicit emphasis on SSI and argumentation in the grading criteria for courses in chemistry, biology, and physics.

Aim and RQ

It has been repeatedly shown that teachers lack strategies for assessing SSA and need support in this area. One major challenge in improving the assessment of SSA is that teachers see SSI teaching in instrumental terms, which leads to a dominant focus on science content knowledge and the exclusion of other important aspects of SSA, such as societal aspects, the role of values in SSI decision-making, and argument structure. A second challenge relates to the difficulty of assessing oral argumentation. A third challenge is that teachers tend to focus on summative assessment and exclude formative practices that give students opportunities to develop their SSA skills. To address these challenges, we developed the Practicing Assessment of Socioscientific Argumentation Model (PASM), which is based on TAF (Christenson & Chang Rundgren, 2015), as a tool for training teachers in assessing SSA. Here, we evaluate PASM, focusing on two research questions:

How does a group of pre-service teachers describe their evolving perceptions of using the Practical Assessment of Socioscientific Argumentation Model (PASM)?

How does a group of pre-service teachers reflect on the characteristics of the Practical Assessment of Socioscientific Argumentation Model (PASM) for teacher use?

Method

Participants

Ten upper secondary pre-service science teachers (PSTs) volunteered to participate in this study. For all of them, it was their first term studying science. Before taking the course on SSI, which addressed different aspects of teaching SSI, they had completed a course in floristics and faunistics. Four of the PSTs had previously studied another subject (English or physical education and health), and the rest were in their first term of the teacher program. Those studying science as their second subject had previously completed five weeks of teacher training in schools. We have no information about any other prior teaching experience among the PSTs. See Table 1 for more information about the participants. The study was conducted in accordance with the ethical standards of the Swedish Research Council, and informed consent was obtained from all participants. In the excerpts presented, the participating PSTs are referred to as PST1-PST10. The sources of the comments are indicated by the labels AR (audio recording of group discussion), IR (individual written reflection), and F (field notes taken by the authors during joint discussions).

Table 1. Information about the participants.

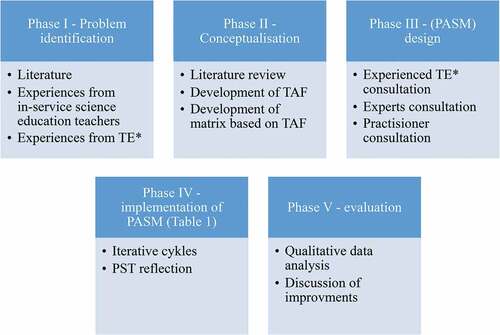

Study context—development of PASM

The development of PASM proceeded through several distinct phases (Figure 2). First, a literature review was conducted to identify challenges relating to SSA assessment experienced by in-service science teachers and during teacher education, as well as quality criteria and standards for assessing SSA. Because all of the frameworks used to assess SSA in the identified literature were designed for research purposes rather than for use by teachers, the Teacher-oriented Assessment Framework (TAF) was developed (Christenson & Chang Rundgren, 2015) and used as a conceptual model of SSA in PASM. For this purpose, a matrix for SSA assessment was developed based on TAF. Hence, TAF served both as a theoretical model of high-quality SSA and as a practical tool for assessing SSA within PASM. After consulting experienced teacher educators, including some with knowledge of SSA assessment, we designed PASM as an intervention for improving PSTs’ skills in assessing SSA (for details of the model’s structure, see the section below and Table 2). The PASM intervention was then tested on a group of PSTs. Finally, data collected during the PSTs’ discussions and from their written individual reflections were analyzed thematically to determine whether and how the PSTs perceived that they had developed their skills in assessing SSA by participating in the PASM intervention. None of the authors were involved in examining the PSTs in the course.

Table 2. The PASM. *Data collection.

Figure 2. Outline of the study for development and evaluation of PASM for practicing assessment of SSA in science education. *TE = Teacher educators.

Figure 3. The iterative cycles of practical assessment of SSA. It shows how the PSTs (three in each group, named 1, 2 and 3) changed their role in each of the cycles, being the one arguing for (PSTF) or against (PSTA) the SSI, or acting as examiner (PSTE) assessing the argumentation. This process is repeated with two different SSI.

The practicing assessment of socioscientific argumentation model (PASM)

The PASM intervention is divided into five phases. The first phase (A) is preparatory because it is assumed that the targets of the intervention (in this case, PSTs) are unfamiliar with assessing SSA. The PSTs were therefore given a video-recorded lecture (prepared by the first author) and reading material to ensure that they had some common ground and foundational knowledge based on research into SSA assessment. The content presented included a definition of SSI, how SSI can be included in teaching, the connection between SSI and argumentation, and challenges in teaching SSI, with a special emphasis on the assessment of SSA. TAF was then introduced as a conceptual model outlining aspects of quality in SSA and how SSA can be assessed using it. Additionally, to create an authentic setting for the subsequent argumentation practice, the PSTs needed some basic knowledge of the issues to be discussed. Therefore, two SSI (the use of nuclear power and genetically modified organisms, GMO) were presented to the PSTs in advance, and they were assigned to study the cases for and against these issues in their own time. The PSTs were provided with digital resources to find information on these issues; however, it was the PSTs’ own responsibility to inform themselves about the content knowledge and alternative views on nuclear power and GMO. Finally, on the day the PSTs were to practice SSA in phase B, a short recapitulation of TAF (Christenson & Chang Rundgren, 2015) and of how to conduct assessments and use the matrix (see Appendix 1) was given to ensure a common understanding.

The second phase (B) of PASM deals with the actual assessment of SSA. In this phase, participants engage in a series of iterative activities during which they alternately argue for (PSTF) and against (PSTA) the SSI under consideration (nuclear power) and also act as examiners (PSTE) of their fellow participants’ SSA using the matrix (Appendix 1). The PSTs were divided into small groups of three or four members each during this phase. After each round of discussion, the examiner provided brief formative feedback to each arguer, after which the participants swapped roles: the PST who had argued for the issue became the examiner, the examiner argued against the issue, and the PST who had argued against it now argued for it (see Figure 3).
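The role rotation in phase B can be sketched as a cyclic permutation of the three roles. The Python code below is a hypothetical illustration, not software used in the study. It assumes a group of three PSTs (as in Figure 3) and that after each round the PST arguing for (PSTF) becomes the examiner (PSTE), the examiner argues against (PSTA), and the PST arguing against then argues for. It does not model groups of four, since the text does not specify how roles were distributed in those groups. The function name `rotation_schedule` is illustrative.

```python
from typing import Dict, List

# Hypothetical encoding of the phase B rotation: after each round,
# PSTF -> PSTE, PSTE -> PSTA, and PSTA -> PSTF.
NEXT_ROLE: Dict[str, str] = {"PSTF": "PSTE", "PSTE": "PSTA", "PSTA": "PSTF"}


def rotation_schedule(first_round: Dict[str, str]) -> List[Dict[str, str]]:
    """Return one role assignment per round until every PST has held every role."""
    roles = set(NEXT_ROLE)
    if set(first_round.values()) != roles or len(first_round) != len(roles):
        raise ValueError("first round must assign PSTF, PSTA and PSTE exactly once")
    schedule = [dict(first_round)]
    for _ in range(len(roles) - 1):
        previous = schedule[-1]
        # Every PST moves to the next role in the cycle.
        schedule.append({pst: NEXT_ROLE[role] for pst, role in previous.items()})
    return schedule


if __name__ == "__main__":
    # One group of three; the same rotation is repeated for the second SSI.
    for ssi in ("nuclear power", "GMO"):
        first = {"1": "PSTF", "2": "PSTA", "3": "PSTE"}
        for n, assignment in enumerate(rotation_schedule(first), 1):
            print(ssi, n, assignment)
```

Because the rotation is a three-cycle, three rounds suffice in this sketch for each PST to hold every role once per SSI.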

The third phase (C) focuses on evaluation. To this end, each group discussed the exercise based on a set of questions provided by the authors. Examples of questions posed were: How did you experience this exercise? How did you experience having the different roles and in particular being the examiner assessing the argumentation? The complete list of questions can be found in Appendix II. After the group discussions, a joint discussion was held with all PSTs and the two authors so that reflections could be shared.

In the fourth phase (D), phases B and C were repeated, this time focusing on a new SSI (in this case, GMO).

The fifth and final phase (E) in PASM was the meta-evaluation phase. A few days after the workshop, the PSTs wrote individual reflections about the process, evaluating the PASM intervention. The five phases are summarized in Table 2.

Data collection and analysis

Data were collected in the form of audio recordings (AR) of group discussions and field notes (F) written by both authors during joint discussions with the participating PSTs. All of these data were collected on the same day in December 2019. Meta-evaluation reports written individually (IR) by the participants were collected two weeks later. The group discussions were guided by a set of questions (Appendix II) that were also used in the joint discussions, where PSTs from different groups could share their reflections. All of the questions posed to the PSTs were phrased in general terms in order to avoid biasing the results. The individual written reflections from the evaluation phase were also guided by questions provided to the PSTs (Appendix II).

All data were analyzed using thematic analysis (Braun & Clarke, 2006), which was performed by the two authors independently. Initially, the transcripts were read repeatedly and preliminary codes were identified. In particular, we searched for themes relating to the issues reported to be especially challenging in assessing SSA. We were also interested in the PSTs’ reflections on whether and how the PASM exercise had helped them improve their assessment of SSA. Preliminary codes were generated across the whole data set. In the next step, the codes and their associated excerpts were collated into potential themes. At this stage, a thematic map of the analysis was generated to check whether the themes were consistent with the coded excerpts. Up to this point, the authors worked independently. To ensure reliability, the authors then compared and discussed their analyses. As a result of these discussions, minor changes were made to the themes to reach consensus; these changes were mainly editorial, and the hierarchy of one theme was altered. To further support reliability, we present a rich collection of data for each identified theme in the Results section.

Triangulation of multiple data sources was performed to enhance the trustworthiness of the findings (Robson, 2011). Specifically, several different types of data (recordings of the group discussions from the assessment exercise, field notes, and final written reflections) were compared to enable cross-checking and interpretation (Robson, 2011). Because this study was small in scale, we do not aspire to broader generalization.
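Table 3 reports frequencies of themes across the data sets. One way to tabulate such frequencies is sketched below in Python. This is a hypothetical illustration, not the authors’ procedure: it assumes that a frequency is the number of distinct coded excerpts per theme in each data source (AR, IR, F), which the text does not state explicitly. The function name `tally_themes` and the excerpt identifiers are illustrative, and the sample input is not the study’s data.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple

# Source labels used in the paper: AR = audio-recorded group discussion,
# IR = individual written reflection, F = field notes from joint discussions.
SOURCES = ("AR", "IR", "F")


def tally_themes(coded: Iterable[Tuple[str, str, str]]) -> Dict[str, Counter]:
    """Count coded excerpts per theme and data source (hypothetical helper).

    Each item is (theme, source, excerpt_id). An excerpt coded twice under
    the same theme is counted once; it may still count toward other themes.
    """
    table: Dict[str, Counter] = defaultdict(Counter)
    seen: Set[Tuple[str, str]] = set()
    for theme, source, excerpt_id in coded:
        if source not in SOURCES:
            raise ValueError(f"unknown data source: {source}")
        if (theme, excerpt_id) in seen:
            continue  # avoid double-counting the same excerpt within a theme
        seen.add((theme, excerpt_id))
        table[theme][source] += 1
    return dict(table)


# Illustrative input only: four excerpts quoted in the Results section, with
# made-up excerpt IDs; these counts are not the frequencies in Table 3.
codes = [
    ("Focus of assessment", "AR", "PST9-1"),
    ("Focus of assessment", "IR", "PST6-1"),
    ("The tools in PASM", "IR", "PST10-1"),
    ("The tools in PASM", "AR", "PST5-1"),
    ("The tools in PASM", "AR", "PST5-1"),  # duplicate coding, counted once
]
for theme, counts in tally_themes(codes).items():
    print(theme, dict(counts))
```

Keeping the source label on every coded excerpt also supports the triangulation described above, since the same theme can be compared across recordings, field notes, and written reflections.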

Results

We identified four main themes and several subthemes during the data analysis. The four main themes are: focus of assessment, the tools in PASM, the nature of PASM, and coping strategies. For a more operational definition of the themes and their frequencies across the different data sets, see Table 3. The following section discusses these themes and their subthemes, presenting illustrative excerpts from the audio recordings of the PSTs’ evaluations directly after the assessment exercise and from the PSTs’ written reflections. The excerpts have been translated from Swedish.

Table 3. Main themes and subthemes, with frequencies of themes in the data sets.

Focus of assessment

The first theme related to how the PSTs discussed the focus of assessment when acting as an examiner and the aspects they considered important during assessment. They mostly talked about the importance of assessing their future students’ science content knowledge and the need for this knowledge to be scientifically correct. For example, they discussed the level of content knowledge one could expect their future students to display when making arguments about a SSI, concluding that it will be more on a general level than detailed knowledge.

AR:PST9: I think content knowledge is often poorly defined. Arguments often rely on rather non-specific content knowledge. For example, when someone says “the waste is radioactive”, what does that mean? It is rather vague and not detailed.

AR:PST3: No, it’s only general knowledge.

AR:PST9: So, what kind of content knowledge are we searching for? If someone says “well, it’s about radiation”, what does that mean? Is it harmful for the body? We say it is harmful, but how? What can happen? How does it destroy the environment and nature? You need to know what you want them to talk about.

One challenge identified was that the PSTs believed that their own lack of content knowledge influenced their ability to assess SSA [F]. This was also mentioned by the PSTs in the group discussions and individual reflections, as shown below:

I had too little content knowledge myself about these issues; even though there is no right or wrong in SSI, as a teacher I need factual knowledge if I am going to assess students. [IR:PST6]

As a teacher you need good content knowledge about the issue to be discussed, because if a student uses some arguments and you don’t have enough knowledge yourself, maybe you think it sounds good and give the student a high grade, but if you don’t know whether it’s true, what kind of assessment will that be? [AR:PST7]

All PST groups reported that the focus of assessment shifted between the first and second cycles. In one group’s first discussion, the focus was solely on the ability to use content knowledge; the use of values was considered undesirable. For example, one comment from a group discussion suggested that teachers should possess all of the available content knowledge on the SSI at hand:

AR:PST5: No, it could be that you don’t know if they are using content knowledge, or if they are only talking about values and nonsense.

However, after the second cycle, there was a greater recognition of the role of values in SSA; participants in the same group now acknowledged that value-based arguments should be included, in accordance with the matrix and TAF.

AR:PST8: It is good to know what needs to be included in the argumentation, that you need counter arguments and value-based arguments. It’s good to have a template from the beginning.

There were also discussions (mostly indirect) about assessing the structural aspects of the arguments and the need to include a counter-argument. One PST found it hard to include value-laden justifications in a counter-argument.

AR:PST9: We mentioned before that it’s more difficult to use values in counter-arguments. You have your own arguments for, and the corresponding values.

AR:PST2: It requires another level of knowledge or reflection.

The tools in PASM

The second theme related to the PSTs’ reflections on the tools in PASM (the matrix and TAF). The PSTs mostly found the matrix helpful but initially found it hard to understand. This improved after the first round, when the joint discussion among all PSTs revealed some ways in which the matrix could be used.

In our group, we had some difficulties understanding how to use the matrix during the first debate about nuclear power, but then we discussed and found a strategy for the second discussion about GMO. [IR:PST10]

I think the boxes [in the matrix] were good because they made it easy to structure each argument and to identify what kinds of arguments were used, showing whether they were based on content knowledge or values. [AR:PST5]

In addition to discussing the need for clearer instructions, the PSTs suggested that their future students could use the matrix in formative exercises because it shows what should be included in high quality SSA. Moreover, they felt that using the matrix made the assessment more objective and fairer by reducing the influence of the teacher’s own opinions on the issue.

AR:PST7: I also believe that it’s important that the notes in the assessment matrix decide the grade the students will get. This cannot be influenced by the teacher’s opinion on the issue. Like, if you are for nuclear power you give the students with the same opinion a higher grade.

The most common comment about the matrix was that the PSTs wanted to modify it, both to reflect the content they wanted their future students to use for a specific SSI and to suit their own way of working. The general feeling was that teachers (in this case the PSTs) should adapt the matrix themselves to include the key concepts that future students were expected to use in their arguments; during the assessment, they would then only need to tick the boxes for the aspects their future students covered [F]. There were also some suggestions to redesign the boxes to leave more room for teachers’ comments.

AR:PST7: Maybe create your own matrix for assessment? Make one you feel that you are familiar with …

AR:PST6: Exactly.

AR:PST7: It may support the actual assessment.

AR:PST6: I think if you know that you are going to have a debate about nuclear power, you could include some words that the students should use, we talked about this before.

AR:PST5: Like a checklist.

AR:PST6: Yes, exactly.

AR:PST8: Yes, I also think it’s a good idea to have a checklist with supporting arguments, counter-arguments and values.

Throughout the exercise, the PSTs had TAF at hand, showing the components of the SSA framework and their heuristic organization. They commented that this was helpful because it showed the logic of SSA and the interconnections between its different aspects, such as content, structure, and values [F].

The nature of PASM

A third theme was how the PSTs related to the nature of PASM. Related sub-themes included the roles in SSA and its assessment, iterative cycles, and systematic reflections. In general, the PSTs appreciated trying all the roles in the exercise, indicating that arguing both for and against an issue expanded their insight into the complexity of SSA and that having to argue from both sides improved the quality of their SSA:

AR:PST1: You become better at arguing if you have prepared to defend both positions … It was much more fun to argue than to assess.

AR:PST2: I think, like you said about being well prepared, knowing both sides, your own and the other, it makes it possible to respond – “well, this is great”, but you can give a counter argument. It seems as if you really know the issue, like an expert.

The PSTs felt that the examiner role was the hardest. They also found it difficult to attend to their opponents’ arguments while arguing for or against a position: because they were focused on making their own arguments, they missed some of their opponents’ statements and justifications [F].

The nature of PASM, including the sub-themes of iterative cycles and systematic reflections, supported the PSTs and helped them develop in their roles. They reported that repeating the exercise improved their skills at arguing for and against issues and at assessing others’ arguments. After the second round, the participants reported greater confidence in, and understanding of, the nature and skill of assessing SSA.

AR:PST5: I think it makes it clear how inexperienced you actually are at assessing arguments based on different criteria. You really need to practice.

The PSTs enjoyed the change of SSI from nuclear power to GMO and suggested that the exercise should be repeated to further increase confidence and proficiency in assessing SSA. However, they also felt that two rounds per intervention were sufficient; they all felt tired after completing the second cycle.

AR:PST5: I felt calmer [during the second cycle], it was easier to listen. I didn’t just focus on my own arguments, I was able to think and listen.

Finally, some PSTs found the systematic reflections included in PASM helpful:

I think it was really good to have a joint discussion between the two sessions arguing on the different issues – it helped me understand how others think about assessment. After the first joint discussion, I used some ideas that were shared when we worked on the second issue. [IR:PST7]

This was a really good exercise because it was realistic – we worked on situations we could encounter in our future work as teachers. It was good because of all the components, the change of roles, the feedback, and being able to reflect on the whole process. [IR:PST3]

The PSTs also stated that their reflections were supported by the feedback they got from other PSTs during the argumentation cycles. They uniformly stated that this was helpful; a typical comment was:

It was so useful to listen to you [the others in the group] when you gave feedback and explained how you assessed the argumentation, what kind of notes you made, it was good to learn from each other. [AR:PST3]

Coping strategies

The fourth main theme we identified related to the PSTs’ discussions about coping strategies. Much of the data concerned how the PSTs could manage the complex task of assessing SSA in the future. The PSTs felt that they needed more training and found PASM helpful. They also considered their future students, recognizing that these students would need to participate in formative assessment activities to improve their ability to construct strong and valid SSA [F].

The PSTs also discussed practical strategies. They recognized that assessing oral argumentation is particularly complex and suggested that it would be useful to work with small groups of (future) students when doing so. They also suggested that if the aim of the exercise is to focus on the content aspects of the SSI, it would be beneficial to decide on the SSI in advance, allowing the teacher to prepare by researching the issue. On the other hand, if the focus is on the structural aspects of SSA, the (future) students could choose the SSI, because this would require less preparation by the teacher.

AR:PST3: I was thinking about what we talked about earlier, that you are supposed to assess both the structure and the content, and that a challenge of SSI is that as a teacher you cannot be an expert on everything. Therefore, if the focus is on the content, it is important for all students to discuss the same issue and for you as a teacher to know it well. However, if the focus is on assessing structure, you can let the students choose the issues themselves. If you do that, it will be difficult as a teacher to know all the content and be able to assess the correctness of the arguments.

The PSTs also noted that cooperation with other teachers could be helpful when assessing SSA. Examples mentioned included language teachers (who were recognized as experts in argumentation because it is part of the curriculum for Swedish language studies) and teachers of other subjects who could provide useful content knowledge pertaining to SSI.

AR:PST6: It is possible to do interdisciplinary work – for instance, to cooperate with social science teachers when debating the environment. In that way you could conduct the assessment together.

Finally, the PSTs proposed some future strategies for assessing SSA that related to practical aspects of organizing argumentative practices in class, which were not included in PASM. They emphasized the importance of giving their future students a fixed amount of time to argue, to prevent some students from dominating the discussion. The PSTs also felt that their future students should be given roles with predefined opinions rather than being required to state their personal views. On the other hand, the PSTs also thought that students should sometimes be free to choose their own SSI and to discuss it based on their own interests and opinions [F].

Discussion

We have developed a model for improving skills in assessing SSA, a task that previous studies have shown to be challenging. The model was used and evaluated by PSTs to determine whether it can help future teachers develop strategies to use in their own assessment practices. In particular, we investigated whether and how the model can encourage PSTs to expand their views of what constitutes high quality SSA and what factors should be considered when assessing oral SSA. We conclude that the PSTs perceived that PASM had a substantial positive effect on their competence in assessing SSA. First, we discuss the PSTs’ perceived competence to assess SSA through PASM. Second, we discuss factors that can enable this process.

PSTs’ perceived competence to assess SSA through PASM

The participating PSTs focused primarily on content knowledge in their assessment practices, especially in the first round of the PASM iterative cycles. They initially felt that content knowledge should be the sole focus of assessment, even though, when preparing for the exercise, they had read papers and watched lectures on assessment frameworks (Christenson & Chang Rundgren, 2015) that highlighted the importance of multidisciplinary aspects, values, and structural aspects. This reflects a challenge relating to teachers’ epistemological views: teachers prioritize science content knowledge to the exclusion of other aspects of SSA (D. L. Zeidler & Keefer, 2003; Levinson & Turner, 2001; Tideman & Nielsen, 2017). However, during the first whole-class joint discussion, the PSTs acknowledged the importance of aspects other than content knowledge and the need to include value-based justifications and counter-arguments in their argumentation and assessment. It is clear that the exercise made them aware of quality criteria that should be included when assessing SSA, even though all participants acknowledged a need for further training. The scope of PASM could be further expanded by including explicit reflection on the various purposes of science education and scientific literacy, which extend beyond a narrow focus on content knowledge.

The written individual reports submitted by the PSTs one week after the intervention suggested that their views on what factors should be considered when assessing SSA had continued to evolve and had become more comprehensive after the exercise was finished. Consequently, if PASM is used in teacher education, we recommend including this extra individual reflection phase, which we call meta-evaluation.

Much of the discussion among the PSTs concerned the importance of teachers’ content knowledge of the SSI being debated, which is consistent with previous findings on the challenges of teaching SSI (Lee et al., 2006; Sadler et al., 2006). In the discussions, the PSTs proposed strategies for dealing with this; most suggested that the SSI to be used in class should be chosen in advance, giving the teacher time to prepare thoroughly and become well informed on the issue. Another proposed strategy was to cooperate with teachers of other subjects, including language teachers (who were seen as experts on argumentation) and teachers of subjects relevant to the chosen SSI. Previous studies have identified this as a useful strategy for improving the assessment of SSA (Christenson et al., 2017; Lin & Mintzes, 2010). These insights were triggered by the exercise and the systematic reflections built into the PASM model. However, this discussion also demonstrates a continuing focus on content knowledge among the PSTs, and arguably indicates that they had not fully embraced the nature of SSI as a complex part of real life. The purpose of including SSI in science teaching is to give students ownership of their knowledge development and to let them become experts, in contrast to more traditional teacher-centered science education (Zeidler, 2014). On the other hand, in an exercise designed for practicing assessment, the PSTs’ pragmatic view and their desire to complete the task in a way that they found manageable are both understandable. Content knowledge is something they have some control over, and they can increase this control by preparing and researching the issues themselves.

The PSTs discussed strategies for handling the assessment of oral SSA, which is considered particularly challenging (Tideman & Nielsen, 2017). They proposed letting their future students work in small groups during the exercise so that they could better keep track of students’ claims and justifications. Our results partly contradict previous findings that teachers focus more on summative than formative assessment (Tideman & Nielsen, 2017): the PSTs discussed ways of including formative exercises for their students and letting them use the matrix directly when practicing SSA. However, this was not the main focus or a major part of the discussions.

Factors that enable development of SSA assessment competence

PASM gave the PSTs strategies and tools for assessing learning objectives related to SSA, both of which teachers have previously been shown to lack (Evagorou, 2011; Tideman & Nielsen, 2017). PASM components such as TAF and the matrix had clear effects on the PSTs’ understanding of high quality SSA and its assessment. However, there was some initial confusion about how to use these tools, indicating a need for more thorough and explicit instructions, perhaps including illustrative examples.

The positive outcome can be attributed to several aspects of PASM: the requirement for participants to try all of the SSA roles (arguing for and against an issue and acting as an examiner), the use of two rounds, the multiple sets of discussions and reflections among peers, and the final individual written reflections. The opportunity to perform multiple roles during argumentation gave the PSTs a good understanding of what constitutes high quality SSA, and being required to take both sides when discussing issues also expanded their views on the SSI in focus. Repeating the task seems to have markedly enhanced the PSTs’ SSA assessment skills, and even better results might have been obtained with more repetitions; this is worth bearing in mind when using the model in teacher training. As noted by Kinskey and Zeidler (2021), time is important when training PSTs to design and implement SSI-based lessons. The main factor that expanded the PSTs’ understanding of SSA assessment and their skills in this area appeared to be the repeated systematic reflections included in PASM. Reflection was conducted in groups of varying size, ranging from individual reflection to groups of three PSTs and the full cohort of ten PSTs. Repeated reflection in groups of varying size thus appears to be important when training PSTs to assess SSA.

As mentioned earlier, research has revealed that assessment of SSA is a major challenge in science education (e.g., Cetin et al., 2014; Christenson et al., 2017; V. Dawson & Carson, 2017; Sampson & Blanchard, 2012; Tideman & Nielsen, 2017). Moreover, in our experience as teacher educators, the training on this topic offered in teacher education programs is insufficient. This is a small-scale study, so we do not claim that its conclusions are generalizable. However, we strongly argue that teacher education should incorporate training on assessing SSA, and that the PASM model could be useful for this purpose.

Further research

As noted by the participants in this study, PASM could be used directly by students to practice their SSA skills and also to understand the nature of high quality SSA and the factors involved in its assessment. This should be tested on a larger scale.

As noted by Lazarou and Erduran (2021), it is important to determine how teachers (or in our case, PSTs) interpret new frameworks (such as PASM) presented during professional development and how they implement those frameworks in their assessment practice, both summative and formative. A follow-up study focusing on how teachers trained using PASM perform assessments in authentic settings is therefore needed.

Acknowledgments

We thank the pre-service teachers who volunteered to participate in this study.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Åkerblom, D., & Lindahl, M. (2017). Authenticity and the relevance of discourse and figured worlds in secondary students’ discussions of socioscientific issues. Teaching and Teacher Education, 65, 205–214. https://doi.org/10.1016/j.tate.2017.03.025

- Albe, V. (2008). When scientific knowledge, daily life experience, epistemological and social considerations intersect: Students’ argumentation in group discussions on a socio-scientific issue. Research in Science Education, 38(1), 67–90. https://doi.org/10.1007/s11165-007-9040-2

- Aydeniz, M., & Ozdilek, Z. (2016). Assessing and enhancing pre-service science teachers’ self-efficacy to teach science through argumentation: Challenges and possible solutions. International Journal of Science and Mathematics Education, 14(7), 1255–1273. https://doi.org/10.1007/s10763-015-9649-y

- Black, P., & Wiliam, D. (2006). Developing a theory of formative assessment. In J. Gardner (Ed.), Assessment and learning (pp. 206–230). Sage.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Cetin, P. S., Dogan, N., & Kutluca, A. Y. (2014). The quality of pre-service science teachers’ argumentation: Influence of content knowledge. Journal of Science Teacher Education, 25(3), 309–331. https://doi.org/10.1007/s10972-014-9378-z

- Chang, S.-N., & Chiu, M.-H. (2008). Lakatos’ scientific research programmes as a framework for analysing informal argumentation about socio-scientific issues. International Journal of Science Education, 30(13), 1753–1773. https://doi.org/10.1080/09500690701534582

- Christenson, N. (2015). Socioscientific argumentation: Aspects of content and structure [Doctoral thesis, Karlstad University]. Karlstad University Studies.

- Christenson, N., Chang Rundgren, S.-N., & Höglund, H.-O. (2012). Using the SEE-SEP model to analyze upper secondary students’ use of supporting reasons in arguing socioscientific issues. Journal of Science Education and Technology, 21, 342–352. https://doi.org/10.1007/s10956-011-9328-x

- Christenson, N., & Chang Rundgren, S.-N. (2015). A framework for teachers' assessment of socio-scientific argumentation: An example using the GMO issue. Journal of Biological Education, 49(2), 204–212. https://doi.org/10.1080/00219266.2014.923486

- Christenson, N., Gericke, N., & Chang Rundgren, S.-N. (2017). Science and language teachers' assessment of upper secondary students' socioscientific argumentation. International Journal of Science and Mathematics Education, 15, 1403–1422. https://doi.org/10.1007/s10763-016-9746-6

- Dawson, V., & Carson, K. (2017). Using climate change scenarios to assess high school students’ argumentation skills. Research in Science & Technological Education, 35(1), 1–16. https://doi.org/10.1080/02635143.2016.1174932

- Dawson, V. M., & Venville, G. (2010). Teaching strategies for developing students’ argumentation skills about socioscientific issues in high school genetics. Research in Science Education, 40(2), 133–148. https://doi.org/10.1007/s11165-008-9104-y

- Evagorou, M. (2011). Discussing a socioscientific issue in a primary school classroom: The case of using a technology-supported environment in formal and nonformal settings. In T. D. Sadler (Ed.), Socio-scientific issues in the classroom: Teaching, learning and research (pp. 133–159). Springer.

- Jiménez-Aleixandre, M. P., & Erduran, S. (2008). Argumentation in science education: An overview. In S. Erduran & M. P. Jiménez-Aleixandre (Eds.), Argumentation in science education (pp. 3–28). Springer Netherlands.

- Jönsson, A. (2017). Lärande bedömning [Formative assessment]. MTM.

- Kinskey, M., & Zeidler, D. (2021). Elementary preservice teachers’ challenges in designing and implementing socioscientific issues-based lessons. Journal of Science Teacher Education, 32(3), 350–372. https://doi.org/10.1080/1046560X.2020.1826079

- Klosterman, M. L., & Sadler, T. D. (2010). Multi-level assessment of scientific content knowledge gains associated with socioscientific issues-based instruction. International Journal of Science Education, 32(8), 1017–1043. https://doi.org/10.1080/09500690902894512

- Kolstø, S. D. (2006). Patterns in students’ argumentation confronted with a risk-focused socio-scientific issue. International Journal of Science Education, 28(14), 1689–1716. https://doi.org/10.1080/09500690600560878

- Lazarou, D., & Erduran, S. (2021). “Evaluate what I was taught, not what you expected me to know”: Evaluating students’ arguments based on science teachers’ adaptations to Toulmin’s argument pattern. Journal of Science Teacher Education, 32(3), 306–324. https://doi.org/10.1080/1046560X.2020.1820663

- Lee, H., Abd‐El‐Khalick, F., & Choi, K. (2006). Korean science teachers’ perceptions of the introduction of socio-scientific issues into the science curriculum. Canadian Journal of Science, Mathematics and Technology Education, 6(2), 97–117. https://doi.org/10.1080/14926150609556691

- Levinson, R., & Turner, S. (2001). The teaching of social and ethical issues in the school curriculum, arising from developments in biomedical research: A research study of teachers. Institute of Education, University of London.

- Lin, S.-S., & Mintzes, J. J. (2010). Learning argumentation skills through instruction in socioscientific issues: The effect of ability level. International Journal of Science and Mathematics Education, 8(6), 998–1017. https://doi.org/10.1007/s10763-010-9215-6

- Lindahl, M. G., Folkesson, A.-M., & Zeidler, D. L. (2019). Students’ recognition of educational demands in the context of a socioscientific issues curriculum. Journal of Research in Science Teaching, 56(9), 1155–1182. https://doi.org/10.1002/tea.21548

- McNeill, K. L., & Knight, A. M. (2013). Teachers’ pedagogical content knowledge of scientific argumentation: The impact of professional development on K-12 teachers. Science Education, 97(6), 936–972. https://doi.org/10.1002/sce.21081

- Nielsen, J. A. (2012). Science in discussions: An analysis of the use of science content in socioscientific discussions. Science Education, 96(3), 428–456. https://doi.org/10.1002/sce.21001

- Ratcliffe, M., & Grace, M. (2003). Science education for citizenship: Teaching socio-scientific issues. McGraw Hill Education.

- Reiss, M. J. (2010). Ethical thinking. In A. Jones, A. McKim, & M. Reiss (Eds.), Ethics in the science and technology classroom: A new approach to teaching and learning (pp. 7–17). Sense.

- Robson, C. (2011). Real world research. Wiley & Sons Ltd.

- Sadler, T. D. (2004). Informal reasoning regarding socioscientific issues: A critical review of research. Journal of Research in Science Teaching, 41(5), 513–536. https://doi.org/10.1002/tea.20009

- Sadler, T. D., Amirshokoohi, A., Kazempour, M., & Allspaw, K. M. (2006). Socioscience and ethics in science classrooms: Teacher perspectives and strategies. Journal of Research in Science Teaching, 43(4), 353–376. https://doi.org/10.1002/tea.20142

- Sadler, T. D., & Donnelly, L. A. (2006). Socioscientific argumentation: The effects of content knowledge and morality. International Journal of Science Education, 28(12), 1463–1488. https://doi.org/10.1080/09500690600708717

- Sadler, T. D., & Fowler, S. R. (2006). A threshold model of content knowledge transfer for socioscientific argumentation. Science Education, 90(6), 986–1004. https://doi.org/10.1002/sce.20165

- Sampson, V., & Blanchard, M. R. (2012). Science teachers and scientific argumentation: Trends in views and practice. Journal of Research in Science Teaching, 49(9), 1122–1148. https://doi.org/10.1002/tea.21037

- Sampson, V., & Clark, D. B. (2008). Assessment of the ways students generate arguments in science education: Current perspectives and recommendations for future directions. Science Education, 92(3), 447–472. https://doi.org/10.1002/sce.20276

- Simonneaux, L. (2001). Role-play or debate to promote students’ argumentation and justification on an issue in animal transgenesis. International Journal of Science Education, 23(9), 903–927. https://doi.org/10.1080/09500690010016076

- Simonneaux, L. (2008). Argumentation in socio-scientific contexts. In S. Erduran & M. P. Jiménez-Aleixandre (Eds.), Argumentation in science education (pp. 179–199). Springer.

- The Swedish National Agency for Education. (2018a). Curriculum for the compulsory school, preschool class and school-age educare. Retrieved January 18, 2021, from https://www.skolverket.se/getFile?file=3984

- The Swedish National Agency for Education. (2018b). Curriculum for the upper secondary school. Retrieved January 18, 2021, from https://www.skolverket.se/publikationer?id=2975

- Tal, T., & Kedmi, Y. (2006). Teaching socioscientific issues: Classroom culture and students’ performances. Cultural Studies of Science Education, 1, 615–644. https://doi.org/10.1007/s11422-006-9026-9

- Tideman, S., & Nielsen, J. A. (2017). The role of socioscientific issues in biology teaching: From the perspective of teachers. International Journal of Science Education, 39(1), 44–61. https://doi.org/10.1080/09500693.2016.1264644

- Toulmin, S. E. (2003). The uses of argument (Originally published 1958). Cambridge University Press.

- Wu, Y.-T., & Tsai, C.-C. (2007). High school students’ informal reasoning on a socio-scientific issue: Qualitative and quantitative analyses. International Journal of Science Education, 29(9), 1163–1187. https://doi.org/10.1080/09500690601083375

- Zeidler, D. L. (2014). Socioscientific issues as a curriculum emphasis: Theory, research, and practice. In N. G. Lederman & S. K. Abell (Eds.), Handbook of research on science education: Volume II (pp. 697–726). Routledge.

- Zeidler, D. L., & Keefer, M. (2003). The role of moral reasoning and the status of socioscientific issues in science education: Philosophical, psychological and pedagogical considerations. In D. L. Zeidler (Ed.), The role of moral reasoning on socioscientific issues and discourse in science education (pp. 7–33). Kluwer Academic Publishers.

- Zeidler, D. L., & Sadler, T. D. (2008). The role of moral reasoning in argumentation: Conscience, character, and care. In S. Erduran & M. P. Jiménez-Aleixandre (Eds.), Argumentation in science education: Perspectives from classroom-based research (pp. 201–216). Springer.

- Zeidler, D. L., Sadler, T. D., Applebaum, S., & Callahan, B. E. (2009). Advancing reflective judgment through socioscientific issues. Journal of Research in Science Teaching, 46(1), 74–101. https://doi.org/10.1002/tea.20281

- Zohar, A., & Nemet, F. (2002). Fostering students’ knowledge and argumentation skills through dilemmas in human genetics. Journal of Research in Science Teaching, 39(1), 35–62. https://doi.org/10.1002/tea.10008

Appendix I

Assessment matrix for SSA

SSI:

Debater for:

Debater against:

Subject content knowledge:

Correct and relevant content knowledge included.

Nonspecific general knowledge (not directly related to the issue/focus).

Incorrect content knowledge included (misconception or superficial scientific knowledge).

Appendix II

Questions for group discussions after the first round

How did you experience this exercise?

How did you experience having the different roles and in particular being the examiner assessing the argumentation?

Was the matrix helpful when assessing? If so, how? If not, why not?

Could the exercise be improved, if so, how?

Questions for the first joint discussion

What was positive in this exercise?

What was less positive?

What kind of improvements could/should be made?

Questions for group discussions after the second round

How did you experience this exercise in the second round?

Were there any differences in your experiences because of the change of SSI? If so, what kind of differences?

What changes, if any, did you make in this second round compared with the first one?

Questions for the second joint discussion

Were there any differences in conducting the second round?

What was positive this time?

What still needs to be improved, and how could this be done?

Questions for the evaluation

How did you experience the exercises?

Was there any kind of progression when the exercises were repeated?

What difficulties and opportunities do you see in using the assessment model in your upcoming work as a teacher of science?

How could one possibly improve the assessment practice?