ABSTRACT

Background: Previous research shows that critical constructive feedback that scaffolds students to improve on tasks often remains untapped. This paper aims to illuminate the stages at which students provided with such feedback drop out of feedback processing.

Methods: In our model, students can drop out at any of five stages of feedback processing: (1) noticing, (2) decoding, (3) making sense, (4) acting upon, and (5) using feedback to make progress. Eye-tracking was used to measure noticing and decoding of feedback. Behavioral data-logging tracked students’ use of feedback and potential progress. Three feedback signaling conditions were experimentally compared: a pedagogical agent, an animated arrow, and no signaling (control condition).

Findings: Students dropped out at each stage, and few made it through the final stage. The agent condition led to significantly less feedback neglect at the first two stages, suggesting that students who are not initially inclined to notice and read feedback text can be influenced to do so.

Contribution: The study provides a model and a method for building more fine-grained knowledge of students’ (non)processing of feedback. More knowledge of the stages at which students drop out, and why, can inform methods to counteract dropout and scaffold more productive responses.

Introduction

It is well established that feedback plays a central role in nearly any educational context. Review studies by Hattie and Timperley (2007) and Shute (2008) show that feedback can help students achieve their learning goals, especially when the feedback is critical (helping students identify their mistakes and other shortcomings) and constructive (helping them improve and make progress), hence the term critical constructive feedback (CCF).

Black and Wiliam (1998) conducted a widely cited meta-study of 250 studies that, taken together, point to a dramatic impact of feedback on learning. Not all of these studies address CCF, but some of the highlighted ones do. For instance, Elawar and Corno (1985) studied teachers trained in giving students written CCF in the form of comments and suggestions on how to improve, with at least one positive remark. Students receiving CCF improved their performance more than students who did not receive this type of feedback. In another study, Butler (1987) compared four feedback conditions (individualized constructive comments, individualized praise, grade only, and no feedback). The students who received the individualized comments showed the best performance.

To be of potential benefit, feedback needs to meet three design requirements: it must be of reasonable quantity and scope (Brockbank & McGill, 1998), understandable (Higgins et al., 2001; Lea & Street, 1998; Orsmond et al., 2005), and connected to a task in a way that makes it potentially useful for improving on that task (Wiliam & Leahy, 2015).

However, several studies indicate that even when students are provided with CCF that reasonably fulfills these three design requirements, many students still do not make use of it. An illustrative case is presented in Segedy et al. (2012). First, the authors report on an earlier study in which 77% of the CCF delivered in an educational science learning game for 10- to 11-year-olds seemed to be ignored by the students. Building on that earlier study, the authors refined the CCF so that it aligned more clearly with the students’ current goals within the game and provided useful explanations and examples well adapted to the target group. Despite this, almost half of the 42 participating 12- to 13-year-olds seemed to ignore the feedback, as measured by low progress on the assignment to create a causal map of climate issues and thermoregulation. The bottom 18 students had a mean score as low as 2.8 (SD 1.59) out of 15. By comparison, another 18 students in the group obtained a mean score of 14.6 (SD 0.77). The authors conclude that even though the feedback was refined to be context-relevant and to align clearly with students’ current goals, a large proportion of students (18 of 42) were either unable or unwilling to use the feedback. Other studies have shown a similarly large proportion of students not using CCF (Clarebout & Elen, 2008; Conati et al., 2013; Hounsell, 1987; Perrenoud, 1998; Wotjas, 1998).

Common to all the abovementioned studies reporting that CCF seems untapped by a large proportion of students is that they measure response to CCF solely by examining students’ subsequent performance: progress on the task or not. This leaves everything that happens between the presentation of CCF and the final measurement of performance inside a black box. The central aim driving our study is to shed more light on what goes on in that black box with respect to learners’ (non)processing of CCF. Timms et al. (2016) pursued a partly related aim by calling for more knowledge of how learners process the feedback that a digital system provides on the errors they make. They carried out a pilot study to see if they could capture how “students notice, decode and make sense of the various types of feedback provided in CI [the digital environment called Crystal Island]” (Timms et al., 2016, p. 9). However, that study was an experimental laboratory study whose design, in several ways, made it more or less impossible for students not to process the feedback they received. When a student had made an error, the system displayed a feedback text box that covered the entire screen and remained there until the student clicked “okay” to remove it. Thus, it was, in principle, impossible to drop out of feedback processing at the stage of “noticing”. In addition, students did not work with the educational software in an ordinary classroom but participated individually, one at a time, accompanied by a researcher who sat behind them and observed what they were doing. The likelihood that a student in this situation would skip reading a feedback text is quite low. Furthermore, if this kind of CCF neglect did occur, the measure of “reading” used in the pilot study of Timms et al. would not necessarily identify it. They measured (logged) the time the feedback text was present on screen, which is not in effect a measure of whether the text was read.

With respect to feedback that students receive after making an error, the central difference is that our work, unlike that of Timms et al. (2016), focuses on (i) how and why students do not notice, decode, make sense of, and act upon feedback (particularly CCF), and (ii) how this occurs in an everyday learning context as close to ordinary classroom practice as possible.

In sum, our approach and the approach of Timms and colleagues both attempt to explore what happens in the black box of feedback processing. The novelty of our work lies in the focus on when and why students do not process CCF, on how this phenomenon may be studied, and on how learners’ behavior could be influenced in order to decrease the prevalence of non-processing of CCF.

A model for critical constructive feedback-processing

We suggest a model of five stages that are arguably involved in a student’s potential processing of CCF and in her succeeding (or not) in exploiting the CCF to make progress on a task. For a student to profit from an instance of CCF, it must first be noticed by the student, then decoded in some way (e.g., a text must be read), and then made sense of. If made sense of, the CCF can then be acted upon, which may finally, potentially, lead to progress on the task (see Figure 1). This model corresponds to well-established cognitive science and human-computer interaction models of error handling and the processing of error messages (Nielsen, 1994; Norman, 1988; Shneiderman, 1986), as summarized on the Nielsen Norman Group website (2019): “in order for the error messages to be effective, people need to see them, understand them, and be able to act upon them”. In error-handling analysis, “understanding” is generally separated into (i) deciphering the signs and symbols in an error message (what we call “decode”) and (ii) grasping the meaning of an error message (what we call “make sense”). This division also corresponds to the distinction between lexical and semantic processing in linguistics and semiotics, which address different levels of information processing.

Figure 1. The feedback model used to assess processing of CCF (Critical constructive feedback)

Given our focus on potential non-processing, the dual outcome of “yes” or “no” at every stage is a central aspect of the model. A student may notice a piece of CCF or not (stage 1). If noticed, she may or may not decode it (stage 2). If decoded, she may or may not understand it (stage 3). Then, if a student has understood an instruction or a suggestion, she can make use of it or refrain from making use of it (stage 4). “Acting upon feedback”, i.e., following the instruction or using the hints provided, does not follow automatically from having understood the instructions or hints.

A student who does not act upon the hints and instructions provided in a piece of CCF will not make progress on the basis of the CCF (even though she may, potentially, make progress some other way). If the student does act upon the hints and instructions provided to her, this increases the chances of making progress. However, the final stage, that of making progress on the task (stage 5), also has a dual outcome of “yes” or “no”. Even if a student understands the instructions provided and goes on to act upon them, this will not automatically lead to her making progress with the task. If a task is sufficiently complex and the feedback consists of something other than a single simple directive or a simple answer, it still takes effort from the student to actually make progress on the task.

With very simple tasks, the coupling from “acting upon a suggestion on how to improve an answer” to “actually improving the answer” may be tight, but a more general model of CCF processing has to allow things to go wrong also in the very act of revising the task. Otherwise, we cannot analyze instances of learning and tasks that, from the students’ perspective, are more challenging and complex.
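The sequential, dual-outcome structure of the model can be read as a chain of gates: the stage at which a student drops out is the first stage with a “no” outcome. The Python sketch below is our own illustration, not part of the study’s tooling; the stage labels and the function `dropout_stage` are hypothetical, and stages missing from the input are assumed to be unmeasured and are skipped rather than counted as failures.

```python
from typing import Mapping, Optional

# The five stages of CCF processing, in the order a student passes through them.
# Stage labels are hypothetical names chosen for this illustration.
STAGES = ("notice", "decode", "make_sense", "act_upon", "make_progress")


def dropout_stage(outcomes: Mapping[str, bool]) -> Optional[str]:
    """Return the first stage with a "no" outcome, or None if every
    recorded stage had a "yes" outcome.

    Stages missing from `outcomes` are treated as unmeasured and skipped.
    Because a "no" ends processing, later stages are never consulted.
    """
    for stage in STAGES:
        if stage not in outcomes:
            continue  # unmeasured stage: no evidence either way
        if not outcomes[stage]:
            return stage  # the student drops out at this stage
    return None  # the CCF was processed all the way to progress


# Hypothetical student: noticed and read the CCF text but did not act on it.
example = {"notice": True, "decode": True, "act_upon": False, "make_progress": False}
print(dropout_stage(example))  # prints: act_upon
```

Skipping unmeasured stages means a student is only classified as dropping out at a stage for which there is evidence.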

There are actually two kinds of objectives that must be met in order for a student to make progress on a task on the basis of CCF: First, objectives that concern the design properties of CCF as such. Second, objectives that concern what takes place in and with students after they have been presented with CCF. This study focuses on the second, hitherto under-researched, kind of objectives by going inside the black box to examine stages of CCF-processing. When students drop out from feedback processing, at what stages does this happen?

Students’ neglect of critical constructive feedback

As a technical way of talking about students dropping out of CCF-processing, we will use the term “CCF-neglect”. “Neglect”, as we intend it, should not be taken to imply conscious intentionality exclusively, e.g., a deliberate decision not to read a CCF-text displayed on the screen. The very presence of CCF indicates that the student has failed, in part or in whole, at an attempt to solve a task, or at least could have performed it better. If a student feels uneasy when confronted with failure, she may intuitively (or consciously) avoid CCF (Chase et al., 2009). Indeed, it has been shown that students may avoid CCF because they interpret it as evaluative punishment (Hattie & Timperley, 2007). Unfortunately, such avoidance has been identified solely through end performance, leaving open whether the neglect or avoidance occurs already at the stage of noticing the CCF, or when decoding, making sense of, or acting upon it.

Research goals

The first goal of our study was methodological: to explore, in an example study, to what extent it is possible to identify CCF-neglect throughout a chain of noticing, decoding, making sense of, and acting upon CCF, and importantly, to do this in a situation as close to an ordinary learning situation in school as possible.

The second goal was intervention-oriented: to examine whether CCF-neglect as identified at different stages could be influenced. On the basis of previous studies, we chose to explore two forms of visual signaling of the CCF: an animated arrow and a pointing and gazing digital agent.

Related studies on the effects of visual signaling

In approaching the neglect vs. uptake of critical constructive feedback from an information processing perspective (Figure 1), the present study is, to our knowledge, a pioneering study. Indeed, empirical data in this area are remarkably rare, especially in ecologically valid contexts. Yet, with regard to potential methods for influencing (increasing) the uptake of CCF, we could base our choices on previous research from two areas that differ from ours but are partly related. In brief, this previous research has shown that signaling by means of arrows, as well as gaze and pointing, can have an impact on attention and learning.

Some studies of visual signaling in educational contexts have compared the effects of (i) signaling with a pointing arrow vs. (ii) signaling with a pedagogical agent who points and gazes toward what the student is meant to attend to vs. (iii) no signaling at all (control condition). Other studies (outside an educational context) have addressed “gaze cuing” and “social attention”. These studies investigate the effects of human gaze compared to pointing arrows on observers’ attention and gaze direction.

Below, we discuss previous results from these two research areas and reason about how the studies differ from our study.

Signaling by means of a pedagogical agent vs. an arrow

Although some research on students using digital learning environments has indeed addressed the two visual signaling conditions examined in this study, none has examined how students, depending on those signaling conditions, handle feedback. Instead, these studies have investigated how the signaling conditions influence students’ handling of task-relevant information in general, as measured by performance.

The results are mixed. Some studies have shown no effect on learning outcomes from either pedagogical agents or arrows compared to control conditions (Ozogul et al., 2011, experiment 2; Van Mulken et al., 1998). Other studies have found a positive effect from both pedagogical agents and arrows compared to control conditions (Moreno et al., 2010; Ozogul et al., 2011, experiment 1). Still other studies report a positive effect only (or primarily) from pedagogical agents and only for students with low prior knowledge of the learning domain (Choi & Clark, 2006; Johnson et al., 2013, 2015).

Addressing the diverging results on learning effects from signaling, Veletsianos (2007, 2010) suggests that a pedagogical agent’s contextual relevance is key to its potential effects on students’ learning outcomes, i.e., the agent must be relevant in the context. If an agent points at something, its presence and its gesture must make sense to the students for a positive learning effect to occur.

Regarding the question of why a pointing arrow in some studies turns out to have less positive effects on learning outcomes than a pointing agent, a number of answers have been proposed. A first answer (especially relevant for the second stage of our CCF-processing model) is that an agent makes the purpose of visual signaling more explicit than an arrow does. Students are accustomed to teachers’ pointing gestures in a learning situation, and they might find it easier to comprehend an agent’s pointing gestures, which evoke social interaction, than a dynamic arrow, which depends on a learned convention and may seem more ambiguous (De Koning & Tabbers, 2013).

A second answer (relevant to both the first and second stages of our CCF-processing model), grounded in developmental psychology and neuropsychology, is that humans give priority to social stimuli (Gamé et al., 2003; Pinsk et al., 2009; Taylor et al., 2007). Therefore, they may follow a social actor in the form of an agent more attentively than an inanimate object in the form of an arrow, and may prioritize what an agent points and looks at over what an arrow points at. Relatedly, Mayer and DaPra (2012) showed that a more richly embodied agent, which used gestures, facial expressions, and eye gaze, led to better learning outcomes than the same agent without such embodied actions. The authors propose a theory of social agency to explain their results. They suggest that social cues in the form of gestures, gaze, etc., “prime a feeling of social partnership in the learner, which leads to deeper cognitive processing during learning, and results in a more meaningful learning outcome as reflected in transfer test performance” (Mayer & DaPra, 2012, p. 239).

A third answer (relevant for both the first and second stages of the CCF process) is that the persona effect (Lester et al., 1997), defined as the visual presence of an agent regardless of what it is doing, makes the difference by motivating the student to stay focused on tasks, resulting in increased learning outcomes. Johnson et al. (2013) observed a significant benefit to students with low prior knowledge when visual signaling was done by an agent but not by an arrow. They proposed that a combination of an agent’s action (pointing) and its social presence enhanced motivation toward the learning task.

Taken together, all three answers point toward different aspects of possible social cueing effects in embodied agents. The first answer concerns cueing grounded in experiences of social interaction. The second answer concerns cueing grounded in social actions. And the third answer concerns cueing grounded in social presence.

Effects of gaze-cuing vs. arrow-cuing

Birmingham and Kingstone (2009) reviewed the gaze-cuing literature and concluded that a growing collection of studies suggests that attention to any cue with a directional component, be it an arrow, a pointing hand, or gazing eyes, readily produces a reflexive orienting of gaze in the direction of the cue. However, when subjects are free to select what they attend to, they tend to focus on people and their eyes. Eyes and eye gaze are therefore more likely than arrows to be attended to in the first place. Once attended to, however, both are equally powerful at directing attention in the intended direction (to the information or object gazed or pointed at).

Becchio et al. (2008) explored how the cognitive processing of an object in one’s environment can be influenced by someone else looking at the object. They conclude that such influence is very real. In particular, if one observes another person gazing at an object, that object gets loaded with additional meaning. An arrow pointing at the object does not have the same effect, perhaps because a person, unlike an arrow, can act (express agency) with respect to the object (Risko et al., 2016).

So-called joint attention, a more developed form of simple gaze following, creates a shared psychological space that enables collaborative activities with shared goals, possibly unique to human cognition (Moll et al., 2006; Tomasello & Carpenter, 2007).

Differences between previous studies on signaling and visual cues and the present study

Most studies on gaze cuing are lab-based, introducing single, isolated cues into participants’ visual field. Likewise, most studies on signaling via pedagogical agents in digital educational environments have used relatively tightly constrained setups. All these studies are low in ecological validity. By contrast, the tasks provided to students in the present study, and the possible strategies for solving them, come with a large degree of freedom. At each point in the game, the student has multiple choices for action. What choice she makes affects what happens next and what information appears. In our study, high ecological validity is achieved at the cost of decreased experimental control.

Furthermore, the present study directly addresses students’ processing of CCF, which none of the aforementioned studies on signaling or visual cues have done. And while previous studies used a mix of human and digital agents that were sometimes gazing, sometimes pointing, and sometimes doing both, this study used a pedagogical agent that both gazes and points toward the feedback text.

Finally, and most importantly, our study addresses an entire information process from detecting the presence of CCF to decoding it, understanding it, attempting to make use of it, and finally using it to make progress with a task, whereas the studies reported above all deal with particular aspects of the information process. The studies involving signaling by pedagogical agents or arrows have primarily measured learning outcomes (our stage 5) whereas the studies focusing on social gaze primarily measured information detection (our stage 1).

Formulating the research questions

To reiterate, the primary aim of this study was to open the black box of feedback processing. In particular, we wanted to identify occurrences of CCF-neglect at different stages of the process and do so in an ecologically valid educational context of students working with a digital learning environment as part of their regular lessons. We also wanted to explore whether the amount of neglect at different stages would vary under the three different framing conditions, two with signaling and one without.

An important aspect of the planning process was to reason about how we would measure the outcome at each of the five stages. Given previous experience with eye tracking, and this method’s suitability for measuring the allocation of attention on a screen, we chose this method for stage 1 (noticing). We likewise chose eye tracking to measure stage 2 (decoding), given the development of algorithms that can, to a sufficient degree, distinguish actual reading from mere scanning or glancing. For measuring stage 4 (acting upon) and stage 5 (performance or “progress”), we chose to use behavioral data logging.
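As an illustration of how noticing and decoding might be separated in eye-tracking data, the Python sketch below classifies the fixations recorded while a CCF-text is on screen against a rectangular area of interest (AOI) around the text. It is a deliberately simple heuristic of our own and not the reading-detection algorithm used in the study: fixation coordinates in pixels, a left-to-right script, and the thresholds `min_fixations`, `min_forward_ratio`, and `line_height` are all assumptions. Any fixation inside the AOI counts as noticing; reading additionally requires several AOI fixations whose same-line moves mostly go rightward.

```python
from dataclasses import dataclass
from typing import List, Tuple

Fixation = Tuple[float, float]  # (x, y) fixation position in screen pixels


@dataclass
class AOI:
    """Rectangular area of interest (AOI) around a CCF text box."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fix: Fixation) -> bool:
        x, y = fix
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def classify_ccf_gaze(fixations: List[Fixation], aoi: AOI,
                      min_fixations: int = 4,
                      min_forward_ratio: float = 0.6,
                      line_height: float = 20.0) -> str:
    """Classify one CCF presentation as "not_noticed", "noticed_only" or "read".

    Hypothetical heuristic: any fixation in the AOI counts as noticing; reading
    additionally requires several AOI fixations in which most same-line moves
    go rightward (progressive saccades in a left-to-right script).
    """
    inside = [f for f in fixations if aoi.contains(f)]
    if not inside:
        return "not_noticed"          # stage 1 outcome: "no"
    if len(inside) < min_fixations:
        return "noticed_only"         # a brief glance does not count as reading
    # Consecutive pairs of AOI fixations that stay on roughly the same text line.
    same_line = [(a, b) for a, b in zip(inside, inside[1:])
                 if abs(b[1] - a[1]) < line_height / 2]
    if not same_line:
        return "noticed_only"         # only jumps between lines: scanning
    forward = sum(1 for a, b in same_line if b[0] > a[0])
    return "read" if forward / len(same_line) >= min_forward_ratio else "noticed_only"


aoi = AOI(left=100, top=100, right=500, bottom=160)
reading = [(110, 110), (160, 112), (220, 111), (280, 113), (340, 110), (120, 135), (180, 136)]
print(classify_ccf_gaze(reading, aoi))        # prints: read
print(classify_ccf_gaze([(300, 130)], aoi))   # prints: noticed_only
print(classify_ccf_gaze([(600, 400)], aoi))   # prints: not_noticed
```

Practical reading detectors usually also draw on fixation durations, saccade lengths, and return sweeps; those are omitted here to keep the example short.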

That left us with stage 3 (making sense). As explained above, a student may refrain from acting upon CCF (stage 4) because she has not made sense of it, while another student may refrain from acting upon CCF even though she has made sense of it. Making sense (or not) of CCF does not equal acting (or not) upon it, and should thus be regarded as a relevant stage in its own right. With the goal of clarifying in full detail what goes on in terms of CCF-neglect from the moment a student is presented with CCF until she potentially makes progress on a task on the basis of it, this stage needs to be measured independently and therefore has a place in the model. However, measuring what goes on internally in someone’s mind, such as whether (and how) a person makes sense of something, is intrinsically challenging. One option for our study would have been to ask questions probing participants’ understanding of the feedback texts, using, for example, in-game literacy tests and short content tests directly following stage 2. However, such probing in connection with every feedback text would likely irritate students. Students who already struggle to make sense of what they read would get more text to deal with, and students who do make sense of the CCF-text would likely be demotivated by being forced into a procedure that is redundant and, from their perspective, meaningless. Furthermore, such probing would introduce an explicit test event, in contrast to the otherwise implicit measures, with the risk of confounding the whole test procedure and undermining the aim of ecological validity.

Another possibility would be EEG- or ERP-based measurement, but at present these methods are not applicable in an ecologically valid classroom-like context. Using EEG and ERP would require repeatedly exposing students to a set of well-controlled stimuli while monitoring them, in contrast to the visually rich, dynamic, and partly uncontrollable stimuli experienced in an educational game context.

In the end, we did not manage to identify a method to independently measure stage 3 (make sense) and as a result we chose to leave stage 3 out of the study. We discuss this further in the “Measurements” section and in the “Discussion” section where we consider future possibilities for independent measurements of stage 3.

Thus, our primary research question, RQ1, became: At what stages during feedback processing do students neglect CCF? Specifically, we ask the following: (i) Do they neglect CCF in the sense of not noticing it? (ii) Do they notice CCF but fail to decode it (in our case, not read the CCF-text they have noticed)? (iii) Do they decode CCF but fail to act on it? (iv) Do they act on CCF but fail to make progress in their attempt to revise the task? Our secondary research question, RQ2, became: What differences can be found at each of these stages depending on how the CCF is signaled: via a pedagogical agent, an arrow, or not at all?
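Answering RQ1 and RQ2 amounts to asking, for each signaling condition, what share of the episodes that reach a stage end there. The Python sketch below computes this conditional neglect rate per stage, assuming each student-CCF episode has already been reduced to its condition and the stage at which processing stopped (`None` if the student made progress). The record format, the function name `stage_neglect_rates`, and the example episodes are hypothetical and are not data from the study; stage 3 is left out, as in the study design.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Stages measured in the study design; "make sense" (stage 3) is left out.
MEASURED = ("notice", "decode", "act_upon", "make_progress")


def stage_neglect_rates(
        episodes: Iterable[Tuple[str, Optional[str]]]) -> Dict[str, Dict[str, float]]:
    """Per condition, the share of episodes reaching each stage that drop out there.

    Each episode is (condition, dropout_stage), where dropout_stage is None
    if the student made progress on the basis of the CCF.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for condition, stage in episodes:
        counts[condition][stage] += 1
    rates: Dict[str, Dict[str, float]] = {}
    for condition, c in counts.items():
        remaining = sum(c.values())  # every episode starts at stage 1
        rates[condition] = {}
        for stage in MEASURED:
            dropped = c[stage]
            rates[condition][stage] = dropped / remaining if remaining else float("nan")
            remaining -= dropped  # only episodes that did not drop out move on
    return rates


# Made-up episodes for illustration only (not data from the study).
demo = [("agent", "notice"), ("agent", None), ("agent", "act_upon"), ("agent", "decode"),
        ("control", "notice"), ("control", "notice"), ("control", "decode"), ("control", None)]
for condition, by_stage in stage_neglect_rates(demo).items():
    print(condition, {s: round(r, 2) for s, r in by_stage.items()})
```

Conditioning on the episodes that reach each stage keeps drop-out at early stages from inflating or masking the neglect rates at later stages.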

Predictions

Prediction RQ1: Large cumulative CCF-neglect by stage 5 (make progress)

As discussed, previous studies have shown that CCF-neglect, measured by final performance, is common if not ubiquitous. In line with these studies we predicted a large cumulative CCF-neglect by the point students reach stage 5 in our model. In other words, only a small proportion would make it through all the way without dropping out at some point. How many would drop out at each stage along the way, however, we could not say, since there were no previous studies to guide us. This necessarily remained an open question.

Prediction RQ2(A): Greatest inclination to notice CCF in the agent condition, less in the arrow condition, least in the control condition

Based on their review, Birmingham and Kingstone (2009) concluded that any cue with a directional component (an arrow or hand pointing, or eyes gazing toward something) elicits a reflex-based gaze orientation. However, they also found that if the visual environment is sufficiently complex and affords significant freedom of action, eyes are more likely than other directional cues to attract attention in the first place. The digital learning environment used in our study was indeed visually rich and afforded significant freedom of action. Therefore, we predicted that the pointing agent would have the greatest impact on students’ detection of the CCF-texts.

Prediction RQ2(B): Greatest inclination to read CCF in the agent condition

As noted above, neuropsychologists and developmental psychologists agree that humans prioritize social stimuli once detected (Gamé et al., 2003; Pinsk et al., 2009; Taylor et al., 2007). An agent should be more likely than an arrow to prime a social schema. Therefore, students ought to be more inclined to read the detected text in the agent condition than in the arrow condition. Relatedly, the purpose of an agent’s gaze and pointing may be less ambiguous than that of an arrow and may more powerfully indicate: “You should bother to read this; this is relevant!” (cf. De Koning & Tabbers, 2013). The conclusion of Becchio et al. (2008) that humans process stimuli differently in the presence of an agent gazing toward something points in the same direction. In our case, the agent’s pointing and gazing at the CCF-text may load the text with more meaning than the arrow pointing at the same CCF-text. Likewise, Veletsianos’s claim (2007, 2010) that an agent’s contextual relevance is important to achieving learning supports our prediction. It may well make a difference that the agent used to gaze and point at the CCF otherwise has a central role in the digital learning environment.

Study

Participants

A total of 46 students (22 boys and 24 girls) from two fifth-grade classes at a Swedish municipal school participated in the study. The students were 11- to 12-year-olds with a mean age of 11.6 years. All had middle-class socioeconomic and sociocultural backgrounds.

The digital learning environment and its use in the study

The Guardian of History (GoH) is a history-oriented educational game. In the study students used the game during regular history lessons, with teachers as well as researchers supervising the class. Like many other educational games currently used in schools, the game is rich both visually and in terms of content, with many degrees of freedom to navigate.

The game had been developed previously by our research group (Kirkegaard et al., 2014; Silvervarg et al., 2018), and the reason for choosing our own educational platform was twofold. First, the game had previously been used in several classroom settings in other studies (Silvervarg et al., 2014, 2018). Thus, we knew it could be meaningfully used for regular history lessons, which was crucial for our aim of ecological validity. Second, using our own game allowed us to design and manipulate the tasks, the CCF-texts, and the visual signaling for the study.

For the study, we selected a GoH module that addresses discoveries and inventions during the 15th to 18th centuries and their consequences, focusing in particular on the work of female scientists of this period. GoH is based on the pedagogical approach of “learning by teaching” (Bargh & Schul, 1980; Chase et al., 2009), whereby the student takes the role of a teacher and potentially learns by doing so. GoH features a digital tutee, or what we will prefer to call a teachable agent (Blair et al., 2007; Chase et al., 2009). Students learn about discoveries, inventions, and their consequences by teaching the material to the teachable agent. Professor Chronos, the Guardian of History watching over the passage of time, is about to retire. When the student enters the learning environment for the first time, she meets Timy, a teachable agent who tells the student that s/he would very much like to become Professor Chronos’ successor. For this, s/he must pass a series of history exams given by Professor Chronos himself. Unfortunately, Timy suffers from time-travel sickness and so cannot travel through time her- or himself to learn about the past. But, Timy suggests, the student could do the time traveling for her/him and then return to teach Timy so that s/he can pass the exams. The goal of the game is to have Timy pass the exams, and the student’s role is to make this happen by helping (teaching) Timy.

The student travels to different historical scenarios where she explores the surroundings via interactive objects and engages in text conversations with important historical figures. In the customized version of GoH used for this study, students were given six different historical missions to complete and teach Timy about.

Teaching activities

For this study, we used three different kinds of teaching activities: constructing a conceptual map, completing a sorting task, and pairing historical figures and events and placing them along a timeline. To complete a teaching activity successfully, students needed to make correct use of the information they had gained from their time travels. This ranged from matters of historical fact (e.g., in 17th Century Europe, only boys—not girls—were allowed to go to school) to conceptual knowledge requiring active comparisons and drawing of conclusions (e.g., the invention of the printing press led to increased literacy by enabling the cheap mass production of books, including the Bible, which made books available also to people who were not well off).

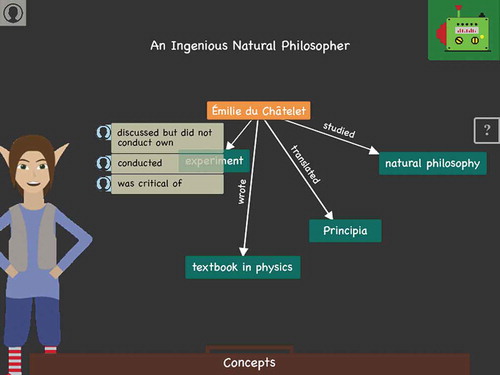

For the conceptual map, students were provided with a starting node, e.g., “Émilie du Châtelet”. They then had to choose, from a concept box, the concepts they found relevant to du Châtelet and, for each chosen concept, select the appropriate relation between du Châtelet and that concept from three alternatives.

For the sorting task, students were presented with cards bearing statements that applied to one or more of the female scientists from the 17th or 18th century whom they had visited and engaged in conversation with. Some statements applied to women in general during that period. The students had to assign each card to the appropriate scientist(s) and/or to women in general.

The timeline required students to match two puzzle pieces (a person and a statement about that person), then drag them to the appropriate time slot on the timeline. One time-travel mission concerned the scientific work and inventions of Galileo Galilei, Isaac Newton, and Émilie du Châtelet. Another targeted Johannes Gutenberg and the printing revolution with its preconditions and consequences.

Feedback provided within the digital learning environment

Upon completing a teaching activity, students clicked on a “correction machine” to see their results, with incorrect items shown in red and correct items in green. At the same time, a CCF-textbox appeared for one randomly chosen incorrect item (if any). Note that this CCF-text was coded to appear automatically when the correction machine presented the results. That is, every student who made at least one error was presented with one automatically generated CCF-text. After this automatic presentation, students could request additional CCF-texts by clicking any of the remaining red-marked items.

The design rationale for having the first CCF-text appear automatically was as follows. Had all CCF-texts been optional (that is, appearing only when a student clicked a red-marked item), there was a risk that students would read every CCF-text they opened: having made an active choice by clicking a red-marked item, students might be primed, we reasoned, into also looking at the resulting CCF-text. Such an effect would have interfered with our comparison of the three experimental conditions (agent, arrow, and control) with respect to how well they induced students to notice and read the CCF-text. In the end, we could not identify any priming effect from clicking on an error; doing so did not automatically lead students to look at or read the CCF-text. This equivalence in students’ inclination to notice and read CCF-texts, whether automatically presented or chosen, allowed us to include all occurrences of CCF (automatically presented or chosen by the students) in our analysis.

All of the CCF-content was contextualized, given the known importance of contextualization to students finding feedback meaningful (Segedy et al., Citation2013). Each CCF-text was composed of two parts, one “critical” (pointing out what was incorrect), and one “constructive” (suggesting ways to revise the answer), in line with what Black and Wiliam (Citation1998) view as the main functions that feedback should fulfil. For example, if a student erroneously paired “Gutenberg” with “Typewriter” in the timeline activity, she would receive the CCF: “‘Gutenberg’ and ‘typewriter’ do not belong together.” followed by “Find information to solve this by visiting Gutenberg and looking more closely at the Bible in his office.”

For each error, three differently phrased versions with the same central information were prepared, and one of them was provided at random, to prevent students from getting bored by seeing the same phrases over and over. Depending on content, the texts ranged from 12 to 33 words in length.
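The correction step described above (colour-coding, one automatic CCF-text for a randomly chosen incorrect item, a randomly chosen phrasing, and the remaining red items left clickable) can be sketched as follows. This is a minimal illustration, not the game's actual code; the function name, the dictionary layout, and the item identifiers are all hypothetical.

```python
import random


def correct_activity(answers, key, variants, rng=random):
    """Hypothetical sketch of the correction step (names are illustrative).

    answers, key: dicts mapping item id -> chosen / correct value
    variants: dict mapping item id -> alternative CCF phrasings for that error
    """
    # Mark each item green (correct) or red (incorrect).
    colours = {item: "green" if answers.get(item) == correct else "red"
               for item, correct in key.items()}
    red_items = sorted(item for item, c in colours.items() if c == "red")

    # One randomly chosen incorrect item (if any) gets its CCF-text shown
    # automatically, in one randomly chosen phrasing.
    auto_ccf = None
    if red_items:
        item = rng.choice(red_items)
        auto_ccf = (item, rng.choice(variants[item]))

    # Any remaining red items can be clicked to request further CCF-texts.
    clickable = [i for i in red_items if auto_ccf and i != auto_ccf[0]]
    return colours, auto_ccf, clickable
```

With a single error, that item always receives the automatic CCF-text and nothing is left to click; with no errors, no CCF-text is shown at all.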

It should be pointed out that our design of the CCF-texts in this digital learning environment was intended to enable a methodological study of how CCF is handled (or not handled) by students, not to create pedagogically flawless CCF-texts. In keeping with this objective, the CCF-texts used in the study were designed in a standard manner, comparable to other kinds of critical constructive feedback in text format.

Design of the three experimental conditions (CCF-framings)

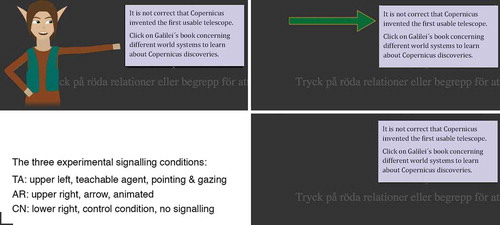

Students were presented with CCF-texts framed in three different ways: (i) the teachable agent pointing to and gazing toward the CCF-text (condition TA); (ii) an animated arrow pointing to the CCF-text (condition AR); (iii) no signaling of the CCF-text (condition CN).

The agent and the arrow were designed to be of similar size and to appear at the same position on the screen. The agent’s hand and the arrow were animated as similarly as possible, given the powerful effect of motion in attracting attention. The goal was for the two forms of signaling to be equally salient, and a small pilot study verified that this was the case. Finally, the correction machine was placed on the opposite side of the screen from the agent or arrow to avoid confounding the eye-gaze data and creating potential ambiguity.

Figure 6. The three experimental signaling conditions: TA (Teachable agent), AR (Arrow), and CN (Control)

The study followed a within-subjects design whereby all participants encountered all three CCF-framings according to a randomized scheme. The same scheme was used in the two sessions held in the students’ classrooms and in the third session at the university. For each set of three CCFs, each of the three framings appeared exactly once, i.e., we used randomized sampling without replacement. This ensured that each framing occurred equally often for all participants.
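A minimal sketch of this randomization, assuming (our reading of the text) that framings were drawn without replacement within consecutive blocks of three CCF occurrences. The function name and condition labels are illustrative, not the game's code.

```python
import random

CONDITIONS = ("TA", "AR", "CN")  # teachable agent, arrow, control


def assign_framings(n_ccfs, rng=random):
    """Assign a signaling condition to each of n_ccfs CCF occurrences.

    Assumption: each consecutive block of three CCFs is a random
    permutation of the three conditions (sampling without replacement).
    """
    framings = []
    while len(framings) < n_ccfs:
        # random.sample without replacement yields one shuffled block.
        framings.extend(rng.sample(CONDITIONS, k=len(CONDITIONS)))
    return framings[:n_ccfs]
```

Because every complete block contains each framing once, the three conditions can differ in frequency by at most one for any participant.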

Procedure

Each session lasted approximately one hour. Students played the game using an individual login and were instructed to focus on their own game play. The first two sessions took place during scheduled history lessons at the students’ school using the school’s iPads. In these sessions, students familiarized themselves with the game and met the researchers. Researchers were present to assist with technical and interaction-related issues but did not help in solving any game tasks. The third session took place in a lab classroom at Lund University, where each student was seated in front of a desktop computer with an integrated eye tracker (i.e., with the sensors embedded directly below the computer screen). After a short presentation of the task ahead, the students were instructed to go through a quick individualized calibration procedure for the eye trackers. They then logged into the game. To secure optimal eye tracking data, researchers occasionally and gently encouraged students to sit up straight whenever they started to slump.

All three sessions were scheduled as part of the students’ regular school activities and under the direction of the usual class teacher. The session at the university, with the history lesson taking place in the classroom equipped with eye trackers, was also accompanied by additional visits to a set of university labs.Footnote2 Even though it took place outside the context of the students’ regular classrooms, we believe that high ecological validity was maintained, given the students’ previous exposure to the game and the researchers, and the presence of their usual teachers. It is reasonable to conclude that the students’ goals, strategies, and behavior when using the history game were not substantially different from other school activities. As noted by the teachers and confirmed by the researchers’ own observations, a majority of the students had a positive attitude toward school work, were inclined to follow instructions from teachers and other adults, and made appropriate effort to complete tasks assigned to them.

The first four of the six missions were available during the first two sessions. All students started on the fifth mission in the third session, regardless of how far they had progressed in the first two sessions, to better control for content and tasks. Eye tracking, environment, and choice of missions aside, the three sessions were very similar, including in the use of the three signaling conditions (and the scheme of randomized sampling without replacement).

Data collection

All data for the first two stages in our model, noticing CCF (stage 1) and decoding/reading (stage 2), were collected via eye tracking. The final two stages, acting on the CCF (stage 4) and making progress (stage 5), were measured via logging of students’ interactions with the game. In addition, contextual data were gathered via a questionnaire that the students were asked to complete at the end of the third session.

Eye tracking data collection

The eye tracking equipment comprised an integrated SMI REDm eye tracking camera with SMI iViewX and SMI BeGaze 3.6 software. This system was chosen to provide a less intrusive, more comfortable environment, since the students would be playing the game for around fifty minutes.

This type of remote eye tracking system captures eye movement data with less accuracy and precision than head-stabilized systems (the sampling frequency in this study was 120 Hz), but it allows multiple students to be recorded simultaneously, making it possible to emulate a classroom environment. Given the relatively low resolution, the analysis focused not on individual words but on general elements and overall reading activity. Prior to the recordings, the participants went through a nine-point animated calibration procedure using SMI iViewX. SMI BeGaze 3.6 was used to record eye movements such as fixations, saccades, and blinks.

Behavioral log data collection

Behavioral and associated data were collected via logging integrated into the game code. This included:

whether the CCF was provided automatically or chosen by the student,

how the CCF was framed (agent, arrow, or control),

the content of the CCF-text,

the student’s navigations and interactions—in particular whether she tried to make use of the CCF—after the CCF-textbox had been closed,

how the student scored on each teaching activity before and after the CCF.

Measurements

Noticing CCF

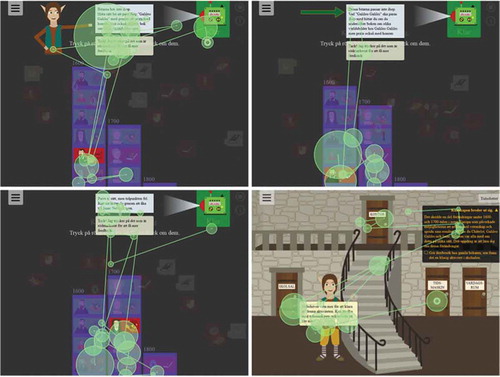

Noticing, in the sense we intend, involves very low-level, sub-personal awareness: the allocation of attention resources to features in the visual input. We took noticing the CCF to mean looking at the text box, as determined by the presence of any fixation-based area-of-interest (AOI) hits within the coordinates of the text box (Holmqvist et al., Citation2011). The absence of any such hits was counted as CCF-neglect. See Figure 7 for an example of typical eye movement patterns.
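The noticing criterion amounts to a point-in-rectangle test over the fixations recorded while a CCF-textbox was on screen. The sketch below assumes fixations are already available as screen coordinates in pixels and that the text box is an axis-aligned rectangle; the class and function names are hypothetical, and the actual analysis was carried out in SMI BeGaze.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float            # screen coordinates in pixels (assumed)
    y: float
    duration_ms: float


@dataclass
class AOI:
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fx: Fixation) -> bool:
        # A fixation "hits" the AOI if it lies inside the rectangle.
        return self.left <= fx.x <= self.right and self.top <= fx.y <= self.bottom


def noticed(fixations, textbox: AOI) -> bool:
    """True if any fixation hits the CCF text box; no hits = stage-1 neglect."""
    return any(textbox.contains(f) for f in fixations)
```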

Figure 7. Examples of a participant’s eye movements while playing the game

Reading CCF

Reading (as opposed to mere noticing) was determined using a support vector machine (SVM). In light of the large differences in reading behavior between individuals, a separate SVM was created for each individual, using three eye movement measures relevant to reading: fixation duration, saccadic amplitude, and regressions. Every CCF-text was labeled with the binary value “read” or “not-read” (i.e., CCF-neglect) based on an intrinsic threshold determined from a pilot study (for details, see Lee, Citation2017).
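The per-participant SVM itself is not reproduced here (see Lee, 2017, for the actual procedure). As a rough illustration of its inputs only, the sketch below derives the three measures from a sequence of fixations on one CCF-text. The operationalizations are our assumptions: amplitude as Euclidean distance in pixels between consecutive fixations, and a regression as a leftward saccade whose vertical change stays below a hypothetical line-height threshold, so that return sweeps to the next line are not counted.

```python
import math


def reading_features(fixations, line_height_px=20.0):
    """Compute three reading-related measures for one CCF-text.

    fixations: list of (x, y, duration_ms) tuples in temporal order.
    line_height_px: hypothetical threshold; a leftward saccade with a
        smaller vertical change is treated as a within-line regression.
    Returns (mean fixation duration, mean saccadic amplitude, regressions).
    """
    if not fixations:
        return 0.0, 0.0, 0
    mean_duration = sum(d for _, _, d in fixations) / len(fixations)
    amplitudes, regressions = [], 0
    for (x0, y0, _), (x1, y1, _) in zip(fixations, fixations[1:]):
        amplitudes.append(math.hypot(x1 - x0, y1 - y0))  # saccade length in px
        if x1 < x0 and abs(y1 - y0) < line_height_px:
            regressions += 1  # backward movement within the same line
    mean_amplitude = sum(amplitudes) / len(amplitudes) if amplitudes else 0.0
    return mean_duration, mean_amplitude, regressions
```

Vectors like these, computed per CCF-text, would then be fed to a classifier trained separately for each participant.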

Making sense of CCF

As noted earlier, we identified no direct means to determine whether a student made sense of a text she had read (stage 3) while simultaneously holding true to our goal of ecological validity.

We were, however, able to reason about this stage on the basis of contextual information and of measurements of the stages before and after it. The teachers had vetted all of the CCF-texts and deemed them comprehensible for the age group and the specific cohort. They further judged most students to be good readers. This suggests that in most cases where students read a CCF-text, they also made sense of what they read. Further, it is highly unlikely that students would act on a CCF-text without having made appropriate sense of it. We therefore estimate that (almost) all cases of acting on CCF were preceded by sense-making of that CCF. Much harder to estimate is the extent to which students who did not act on the CCF might nevertheless have made sense of it.

In the “Discussion” section, we discuss a potential future method for distinguishing whether neglect, or drop-out, occurs at the make-sense stage or at the act-upon stage.

Acting on CCF

Acting on CCF was determined from game logs, from which an interaction history for each CCF occurrence was extracted. The interaction data was then evaluated with respect to the instructions and hints provided in the CCF-text.

Depending on the extent to which the student followed the instructions and hints provided in the CCF, she received a score from 0 to 3. A score of 0 signified that the student traveled to a historical scenario unrelated to the hints and instructions in the CCF-text. A score of 1 signified that the student traveled to the correct historical scenario but clicked on the wrong informational objects or on nothing at all (for example, a student told to visit Newton and investigate his Principia manuscript did indeed visit Newton but only clicked on the diploma on the wall). A score of 2 signified that the student traveled to the right historical scenario and clicked on the suggested object, but also on other (non-suggested) objects. A score of 3 meant that the student traveled to the right historical scenario and clicked only on the person or object suggested in the CCF-text. For the analysis, we applied a relatively conservative approach: only scores of 2 or 3 were counted as acting on CCF, whereas scores of 0 and 1 counted as neglect.
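The scoring rubric and the conservative cut-off can be written as a small function over one logged interaction history. This is a sketch under assumptions: the log is reduced to the scenario the student traveled to and the set of objects clicked there, and the case of no travel at all (not specified in the text) is treated as a 0. All names are hypothetical.

```python
def act_on_score(visited_scenario, clicked, target_scenario, target_object):
    """Score 0-3 for how closely logged actions follow one CCF-text.

    visited_scenario: scenario the student traveled to (None = no travel,
        scored 0 here as an assumption)
    clicked: set of objects/persons clicked in that scenario
    target_scenario, target_object: what the CCF-text suggested
    """
    if visited_scenario != target_scenario:
        return 0  # unrelated scenario (or no travel)
    if target_object not in clicked:
        return 1  # right scenario, but wrong objects or nothing clicked
    if clicked - {target_object}:
        return 2  # suggested object plus other, non-suggested objects
    return 3      # only the suggested object


def acted_on(score):
    # Conservative cut-off: only scores of 2 or 3 count as acting on CCF.
    return score >= 2
```

The Newton example from the text (right scenario, only the diploma clicked) scores 1 and therefore counts as neglect.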

Progress following acting upon CCF

Finally, progress was determined by whether students, in their next attempt to complete the teaching activity, made progress in relation to the mistakes for which they had received CCF. The progress score could be 33%, 50%, or 100%, depending on how many parts the feedback was composed of. Suppose, for example, that two puzzle pieces, each representing the printing press, should be paired with two other pieces in the timeline activity: “led to mass production of brochures” and “led to mass production of books”. A student has paired them with the wrong pieces and received this feedback: “These pieces do not belong together. To learn what consequences the printing press had, return to Gutenberg’s workshop and look at the Bible.” The student visits Gutenberg’s workshop and examines his Bible. In her next round with the teaching activity, she correctly pairs one of the pieces with “mass production of books” but mis-pairs the other. Her progress would then be 50%.
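Read as a proportion, the progress score is the share of the mistakes targeted by a given CCF that are no longer present on the next attempt. A minimal sketch, assuming each targeted mistake can be identified by a label (the labels below are hypothetical):

```python
def progress_score(before_errors, after_errors):
    """Share of the mistakes targeted by one CCF that are fixed next time.

    before_errors: parts flagged as wrong when the CCF was given
    after_errors: parts still wrong on the next attempt
    """
    targeted = set(before_errors)
    if not targeted:
        raise ValueError("a CCF must target at least one mistake")
    fixed = targeted - set(after_errors)
    return len(fixed) / len(targeted)
```

In the printing-press example, two pieces are targeted and one is still wrong afterwards, so `progress_score({"press-books", "press-brochures"}, {"press-brochures"})` returns 0.5, i.e., 50%.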

Students’ answers to explicit questionnaire questions about the feedback texts

At the end of the third session, all students were asked to complete a questionnaire with four Likert-scale questions probing their experiences of the feedback they had received: To what extent did you read the feedback texts? To what extent did you click to receive more feedback (more than the first, automated one)? To what extent did you find the feedback helpful? How did you feel about the amount of feedback?

The questionnaire also contained additional questions not relevant to the present article.

Results

All statistical analyses were performed with the statistical software package R (R Core Team, Citation2016/2017). The logistic mixed-effects regression made use of the R package lme4 (see Bates et al., Citation2012).

Of the 46 students participating in the study, four did not take part in the third session. In addition, six students were excluded from the analyses due to technical problems with the eye tracking.

The remaining data set consisted of 36 students: 20 girls and 16 boys. A total of 451 CCF-instances, where a text box with critical constructive feedback was presented on the computer screen, were recorded using eye tracking (stages 1 and 2). Of these, 27 lacked corresponding behavioral data for stages 4 and 5. Of the final set of 424, 218 were presented automatically and 206 were opted for. These were evenly spread between the three signaling conditions, both with regard to the total count and with regard to automatic CCFs vs. CCFs opted for: 142 instances (72 automatic, 70 opted for) occurred with agent signaling, 143 (74 automatic, 69 opted for) with arrow signaling, and 139 (72 automatic, 67 opted for) with no signaling (control condition).

RQ1: At what stages during feedback-processing do students neglect CCF?

Of the 424 instances of CCF presented to students, only 19 (4.5%) made it through to the final stage without being neglected at any of stages 1, 2, 4, or 5 (Figure 8). This aligns with our prediction (Prediction RQ1) that there would be a large cumulative CCF-neglect by the final stage of our model.

Figure 8. Remaining CCF-instances after stage 1, 2, 4, & 5 of the CCF-processing model

The percentages of CCF-neglect at each of stages 1, 2, 4, and 5 were as follows. A third of CCFs were never noticed. Of those that were noticed, 39% were never read. Of those that were read, a remarkable 77% did not lead to acting on the CCF in our sense.

Of those that were acted upon, 52% did not lead to any progress. It is however relevant to compare the 48% (n = 19) of 40 CCFs-noticed-read-and-acted-upon that did lead to progress to the 9.0% (n = 12) of 133 CCFs-noticed-read-but-not-acted-upon, 0.9% (n = 1) of 111 CCFs-noticed-but-not-read, and 0% (n = 0) of 140 CCFs-not-noticed, that, as well, made it through all the way to progress.
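The stage-wise percentages follow directly from the four mutually exclusive outcome groups reported above (140 not noticed, 111 noticed but not read, 133 read but not acted on, 40 acted on, of which 19 led to progress). The short check below uses only these reported counts; the variable and function names are ours.

```python
# Counts taken from the text: each of the 424 CCF-instances ends up in
# exactly one of the first four groups.
NOT_NOTICED = 140
NOTICED_NOT_READ = 111
READ_NOT_ACTED = 133
ACTED = 40
ACTED_WITH_PROGRESS = 19


def neglect_funnel():
    total = NOT_NOTICED + NOTICED_NOT_READ + READ_NOT_ACTED + ACTED
    noticed = total - NOT_NOTICED          # passed stage 1
    read = noticed - NOTICED_NOT_READ      # passed stage 2
    acted = read - READ_NOT_ACTED          # passed stage 4
    return {
        "total": total,
        "stage1_neglect": NOT_NOTICED / total,         # never noticed
        "stage2_neglect": NOTICED_NOT_READ / noticed,  # noticed, not read
        "stage4_neglect": READ_NOT_ACTED / read,       # read, not acted on
        "stage5_neglect": (acted - ACTED_WITH_PROGRESS) / acted,
        "all_stages_passed": ACTED_WITH_PROGRESS / total,
    }
```

This gives conditional neglect rates of about 33%, 39%, 77%, and 52.5% (reported as 52%), and 19 of 424 (about 4.5%) instances passing every stage.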

Clearly, the largest amount of neglect happened at the fourth stage (acting on the CCF). Pairwise Chi-square tests between stages comparing the pass/neglect ratios confirm this (Table 1).

Table 1. Pairwise Chi-square tests comparing the pass/neglect ratios for stages 1, 2, 4, and 5 of the CCF-processing model

RQ2: Does the extent of CCF-neglect differ with the signaling conditions?

We predicted (prediction RQ2(A)) that students would be more inclined to notice the CCF-texts in the agent compared to the arrow condition—and more inclined to notice them in the arrow compared to the control condition (no signaling). We also predicted (prediction RQ2(B)) that, in the agent condition, students would be more inclined to read the CCF-texts they had previously noticed compared to the two other conditions.

The overall result is presented in Figure 9, which shows the consecutive dropping out with regard to CCF-neglect. The figure suggests that the “agent” signaling condition, compared to the other conditions, led to less neglect at both the noticing and the reading stages. After that, the benefit diminished. Figure 9 also confirms the dramatic increase in neglect at the stage of acting on the CCF observed in Figure 8, which effectively overrides the differences between the three framing conditions. The section that follows presents a more detailed analysis of the differences between the three signaling conditions for the first two and last two stages of our model.

Figure 9. Remaining CCF-instances for each of the three signaling conditions

Effects of the three signaling conditions on noticing and reading CCF

To compare the effects of the three signaling conditions on noticing and reading, we used the full dataset of 451 CCFs, since the data loss at subsequent stages was irrelevant for this analysis. Table 2 presents means and standard deviations by experimental condition, calculated from the means of the individual participants (calculated this way to prevent a few outliers from having undue influence). On average, students noticed 67% (SD = 26%) and read 43% (SD = 28%) of all CCFs presented to them, which means that on average 64% of previously noticed CCFs were actually read.Footnote3
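Computing descriptive statistics "from the means of the individual participants" means aggregating in two steps: first each participant's proportion, then the mean and SD across participants. The sketch below illustrates this with toy data; it assumes binary outcomes per CCF-instance and the sample SD (n − 1 denominator), which the text does not specify.

```python
from collections import defaultdict
from statistics import mean, stdev


def mean_of_participant_means(records):
    """records: iterable of (participant_id, outcome) pairs, outcome in {0, 1}.

    Each participant's proportion is computed first, so participants with
    many CCF-instances do not dominate the group mean and SD.
    """
    by_participant = defaultdict(list)
    for pid, outcome in records:
        by_participant[pid].append(outcome)
    proportions = [mean(v) for v in by_participant.values()]
    return mean(proportions), stdev(proportions)  # sample SD (assumption)
```

With one participant noticing 8 of 8 CCFs, another 0 of 2, and a third 1 of 2, this yields a mean of 0.5 (SD 0.5), whereas pooling all 12 instances would give 0.75.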

Table 2. Means and standard deviations for the amount of noticed and read CCF-texts separated on the different experimental conditions

We used mixed-effects logistic regression to account for the within-subject factor and to compare the effects of the three signaling conditions on noticing and reading. In the two regression models, noticing and reading were designated as (binomial) outcome variables, signaling condition as a predictor (group) variable, and participant as the random factor:

notice/read[YES, NO] ~ signaling[TA, AR, CN] + participant(random factor).

TA = teachable agent signaling; AR = arrow signaling; and CN = control (no signaling)
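Read as a standard random-intercept logistic model (our interpretation; the text does not state the random-effects structure explicitly), the formula above corresponds to the equation below. Here $y_{ij} = 1$ if the $i$-th CCF-instance shown to participant $j$ was noticed (or, in the second model, read), $\mathrm{TA}_{ij}$ and $\mathrm{AR}_{ij}$ are 0/1 indicators for the agent and arrow conditions with CN as the reference level, and $u_j$ is the participant-specific random intercept. In lme4 such a model would typically be specified as `glmer(notice ~ signaling + (1 | participant), family = binomial)`; that exact call is an assumption on our part.

```latex
\operatorname{logit} \Pr(y_{ij} = 1)
  = \beta_0 + \beta_{\mathrm{TA}}\,\mathrm{TA}_{ij} + \beta_{\mathrm{AR}}\,\mathrm{AR}_{ij} + u_j,
  \qquad u_j \sim \mathcal{N}(0, \sigma_u^2)
```

Under this reading, the Z values in Table 3 are Wald statistics for $\beta_{\mathrm{TA}}$ and $\beta_{\mathrm{AR}}$, i.e., each estimate divided by its standard error.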

The results (Table 3) support the prediction that the agent condition would have the greatest positive effect on both noticing and reading, revealing a statistically significant effect for each (TA(notice): Z = 3.38, p < .001; TA(read): Z = 3.69, p < .001). As for the arrow condition, the results did not support the prediction that it would have a better effect than the control condition (AR(notice): Z = −0.531, p = .60; AR(read): Z = 0.487, p = .626).
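Assuming the reported Z values are Wald statistics (as lme4's `glmer` reports them), the two-sided p-values follow from the standard normal distribution. The helper below is a standard-library sketch (the function name is ours), using the identity p = erfc(|z| / √2) = 2(1 − Φ(|z|)).

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a Wald z statistic under a standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))
```

For the arrow condition, `two_sided_p(0.487)` is about .626 and `two_sided_p(-0.531)` about .595 (reported as .60); both agent values (Z = 3.38 and Z = 3.69) give p < .001.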

Table 3. Logistic mixed-effects linear analysis for the TA (Teachable agent) and AR (Arrow) conditions against the CN (Control) condition on effects of visual signaling on CCF-neglect in the stages of “Noticing” and “Reading”

Effects of the three signaling conditions on stages 4 and 5 (“Act upon CCF” and “Make progress with task”)

As the reader will have observed, we made no predictions for the last two stages: acting on the CCF and making progress. After the reading stage, the dataset became critically unbalanced for the mixed-effects analysis, since the means (for each participant and experimental condition) were subject to an increasing number of missing values, reflecting the neglect occurring at the first two stages. Moreover, the large amount of neglect that occurred at the fourth stage (acting on the CCF) left us with a heavily reduced dataset of only 40 CCFs to consider at the progress stage. That said, the counts for pass/neglect (or success/failure) at the last two stages were as follows. Acting on: TA = 14/60, AR = 12/36, CN = 14/37; Progress: TA = 7/7, AR = 7/5, CN = 5/9.

Since the number and distribution of CCF-neglects deteriorated for the last two stages, we turned to Chi-square analyses of these figures: Acting on: χ²(2, N = 173) = 1.37, p = .50; Progress: χ²(2, N = 40) = 1.38, p = .50. This suggests that the signaling conditions had no significant effect by this point. However, it is possible that the large amount of neglect during the fourth stage masked any such effect; note that only a small percentage (23%) of the CCFs that had been noticed and read were acted on: 19% with the agent, 25% with the arrow, and 27% in the control condition. Of those that were acted on, 48% led to progress: 50% with the agent, 58% with the arrow, and 36% in the control condition.
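The two Chi-square statistics can be reproduced from the pass/neglect counts in the text with a plain Pearson test of independence on each 3 × 2 table (conditions × outcome). This is a standard-library sketch, not the authors' R code. For 2 degrees of freedom the chi-square survival function has the closed form exp(−x/2), so no statistics library is needed; `scipy.stats.chi2_contingency` would give the same statistic here, since its continuity correction applies only when there is 1 degree of freedom.

```python
import math


def chi_square(table):
    """Pearson chi-square test of independence for an r x c table of counts."""
    rows = [sum(r) for r in table]
    cols = [sum(c) for c in zip(*table)]
    n = sum(rows)
    stat = sum((obs - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
               for i, row in enumerate(table) for j, obs in enumerate(row))
    df = (len(rows) - 1) * (len(cols) - 1)
    return stat, df, n


def p_value_df2(stat):
    # For 2 degrees of freedom the chi-square survival function is exp(-x/2).
    return math.exp(-stat / 2)


# Rows: TA, AR, CN; columns: pass, neglect (counts from the text).
acting = [[14, 60], [12, 36], [14, 37]]
progress = [[7, 7], [7, 5], [5, 9]]
```

`chi_square(acting)` gives a statistic of about 1.37 with 2 degrees of freedom and N = 173, with `p_value_df2` of about .50; the progress table gives about 1.38 with N = 40 and p of about .50.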

Questionnaire data

The questionnaire data were used to gain contextual knowledge and to probe the basic design conditions (as discussed in the introduction) that must be fulfilled for students to be able to profit from CCF. We collected questionnaire data from all 42 students present for the third session, including the six students excluded from the rest of the analysis due to technical problems with the eye tracking.

Again, for CCF to be potentially useful it must be of reasonable quantity and it must be comprehensible. Regarding comprehensibility, the class teachers involved in the study had been asked to evaluate the text and had affirmed that the text was on an adequate level of difficulty. As for the amount of feedback, the students’ opinion was explored with a 3-level questionnaire item asking: “How did you feel about the quantity of feedback? [too much text, adequate amount of text, too little text]”. A clear majority, 30 (71%) of 42 students, answered that they found the amount of text in the text boxes adequate, 7 (17%) answered “too much text”, and 4 (10%) “too little text”. One student did not answer the question.

Second, students need to be able to understand the point of the feedback. This was addressed with a 5-level questionnaire item asking: “How often did you read the feedback texts? [always, often, sometimes, seldom, never]”. A majority of students (23 out of 42, or 55%) answered either “always” or “often”, 12 (29%) answered “sometimes”, and 7 (17%) “seldom” or “never”. A follow-up open-response question asked “Why?” and received 39 answers. Eighteen students (46%) said it was because they found the feedback helpful, 6 (15%) said it was because they found the feedback unhelpful, and 3 (8%) said they did not know.

In response to the 5-level questionnaire item “To what extent did you find the feedback helpful? [1 = not at all, 3 = somewhat, 5 = a lot]”, one third of the students (14 out of 42, or 33%) answered in the affirmative (4 or 5), another third (14, or 33%) answered “somewhat” (3), and the remaining third (14, or 33%) answered in the negative (1 or 2).

In sum, the questionnaire data indicated that it was possible for most of the students to understand and decode the CCF and that most of them found that the amount of feedback was reasonable. However, and importantly, the questionnaire data also indicated that one third of the students reported that the feedback was not helpful and that another third of the students found the feedback only somewhat helpful.

Discussion

The paper describes a five-stage model for studying the processing a student ideally goes through after having received critical constructive feedback (CCF), from noticing it to decoding it, understanding it, acting on it, and finally using it successfully to improve performance. The model was applied to elementary school students working with a digital learning environment and receiving CCF within that environment. In contrast to the study of Timms et al. (Citation2016), the study focused on (i) how and why students do not notice, decode, make sense of, and act upon feedback, and (ii) a context as close to ordinary classroom-based practice as possible.

In methodological terms, the study contributes evidence that CCF-neglect can be studied in a more fine-grained way than has been done to date. It demonstrated that it was possible to measure levels of neglect at intermediate stages of CCF-processing, namely noticing, decoding, and acting on the CCF. The study further shows that it was possible to do so in an ecologically valid setting close to the students’ ordinary classroom learning environment and with a game that, like many present-day educational games, is rich in content and visual dynamics and offers students large degrees of freedom of choice and navigation. In terms of intervention, the study confirms the value of having an agent gaze and point toward the CCF that students are intended to read: students were likelier both to notice and to decode (read) the text.

CCF-neglect by stage and cumulative

As predicted (prediction RQ1), the cumulative CCF-neglect by the final stage of the model was quite high. This aligns with previous reports of high CCF-neglect measured via performance outcome in both non-digital (Hounsell, Citation1987; Perrenoud, Citation1998; Wojtas, Citation1998) and digital (Clarebout & Elen, Citation2008; Conati et al., Citation2013) contexts.

What our study adds is evidence about where along the way feedback neglect can take its toll. In our example study, fully a third of the CCF provided in the digital learning environment was never even noticed. More than a third of the CCF that was noticed was never read (39%). More than three quarters of the CCF that was read was never acted on (77%). More than half of the CCF acted on (52%) failed to lead to progress (i.e., improvement in solving the actual task). Charting out neglect at different stages opens the way for more detailed analyses of the phenomenon of CCF-neglect and for new possibilities to counteract CCF-neglect in software design.

Care should be taken with any attempt to generalize the specific numbers or proportions of neglect at the different stages to other digital learning environments that include other kinds of tasks and other formats of feedback (e.g., oral or pictorial as opposed to textual).

That said, the game that we used is not so extraordinary either in its general design or the way it presents CCF. Thus, there is validity, we hold, in the overall indication—in line with other studies—that CCF-neglect is prevalent. A recommendation for educational software designers is therefore: If you make design efforts in providing constructive feedback in an educational game, make sure to examine to what extent the investment pays off in terms of usability in practice for students.

The overall picture thus shows that, in spite of efforts from designers of educational software to provide CCF to students (and in spite of the amount of time teachers spend on providing CCF to their students), the potential gains to be had from the feedback seem to remain untapped by a large proportion of students.

As this paper shows, it is methodologically possible to build more fine-grained knowledge of how students process (or fail to process) CCF. On the basis of such knowledge, and of a charting-out of where neglect occurs, one may also start to build hypotheses on how to counteract neglect at different stages. In the end, there is no real gain for a student unless she goes through all stages without dropping out, but this does not mean that earlier stages in the chain are unimportant. Noticing (or reading) a CCF-text is not sufficient for improving on a task on the basis of the CCF in question, but it is necessary. Thus, improving the design means increasing the proportion of students who do not drop out at each given stage.

The effect of agent framing on whether students notice CCF

We predicted (prediction RQ2(A)) that more students would notice CCF in the agent than in the arrow condition, and more in the arrow than in the control condition. As it happened, only the first part was borne out: the agent condition alone had a significant positive effect in reducing CCF-neglect; the animated arrow did not.

The outcome may be explained in two alternative, yet related, ways. One [...] possibility, in line with the emphasis on eyes as a principal social cue (Birmingham & Kingstone, Citation2009), is that [...] students were more likely in the first place to notice the agent because of its eyes. Once noticed, however, the agent and the arrow were equally powerful in directing students’ gaze to the CCF-texts and leading to fixations on them. The other possibility, based on Becchio et al. (Citation2008), is that more texts, once looked toward, would be fixated in the agent condition because visual stimuli looked at by another gazing agent get loaded with more meaning and are therefore processed differently. Both explanations suggest that social stimuli are prioritized over nonsocial stimuli. Either eyes (being socially loaded visual stimuli) attract attention more than other visual stimuli (e.g., an arrow), or another social agent pointing and gazing at an object increases the likelihood that an observer cognitively processes the object in question compared to when the same object is signaled by a nonsocial entity (e.g., an arrow). In different ways, both explanations point to a social reason why the TA had a larger influence on learners than the arrow, as demonstrated in a significantly higher proportion of fixations on CCF-texts when signaled by the agent than when signaled by the arrow.

In practical-pedagogical terms, the results indicate that the extent to which students notice CCF in an educational game can be increased through framing. For those who work with educational software games that include pedagogical agents, the result more specifically suggests that such agents may be useful for increasing students’ inclination to notice CCF.

The effect of agent framing on whether students read the CCF

We predicted (prediction RQ2(B)) that more students would read CCF in the agent condition than in the two other conditions, and this is indeed what we found. This result also aligns with the theory that humans give priority to social over nonsocial stimuli even after those stimuli have been detected (Gamé et al., Citation2003; Pinsk et al., Citation2009; Taylor et al., Citation2007). Furthermore, the agent’s pointing and gazing toward the CCF-texts may have loaded these texts with more meaning than the arrow pointing to the same CCF-texts did (De Koning & Tabbers, Citation2013), whether from a general communicative perspective or because students are primed to expect that teachers’ or peers’ pointing gestures signal something of importance in a learning situation (“this you should bother to read”).

In addition, the positive effect of signaling may arise from the contextual relevance of the teachable agent (Veletsianos, Citation2007, Citation2010). The time elf Timy in effect does far more than point and gaze. S/he asks the student for help, encouraging her to take on the responsibility for guiding her/his studies. The specific effects of teachable agents merit further elaboration. Chase et al. (Citation2009) found that students who taught TAs were more likely to acknowledge errors than students who learned for themselves, suggesting that teaching a TA protects students’ egos from the psychological ramifications of failure; fear of failure, in turn, can be a reason for feedback neglect (Nicholls et al., Citation1990). In the present study, the reduced neglect at the stage of reading CCF-texts in the agent condition may relate to the fact that it is the teachable agent who needs help to pass the tests in the game, and thus the one at risk of failing them.

Yet another mechanism that may have been in play is that of shared gaze. We remind the reader of the suggestion by Becchio et al. (Citation2008) that if another person gazes at an object and you observe that the person does so, the object in question becomes loaded with more meaning than if no one had gazed at it. A pointing arrow does not have this effect, possibly because a person, in contrast to an arrow, can potentially act with respect to the object (Risko et al., Citation2016). In the present study, the participants probably constructed a social relationship with the time elf (their TA), which may then have prompted or motivated them both to look at what the time elf looked at (the CCF-text) and then also to read the text.

The practical-pedagogical take-home messages parallel those presented with respect to noticing CCF. The proportion of students reading a CCF-text in an educational game can, it turns out, be increased by means of framing. The result more specifically suggests that pointing and gazing agents may be useful for increasing students’ inclination to read CCF.

Signaling is, of course, not the only way one might influence feedback neglect, and signaling itself can be done in a variety of ways. Thus our study, which involves two particular forms of signaling, cannot provide general results on how neglect at different stages of CCF-processing can be influenced. However, it adds a piece of evidence to the larger picture by showing that CCF-neglect at the stages of noticing and decoding/reading can be influenced by one of the forms of signaling exploited in our study.

Noticing and reading CCF-texts boosted by the agent in spite of it being an additional and competing visual element

One could argue that, based on theories of cognitive load, the presence of a pointing and gazing agent should hinder rather than help students notice and read the CCF. The agent is, after all, an additional visual element: yet one more thing to attend to, competing with everything else on the screen for attention (Mayer & Moreno, Citation1998; Sweller, Citation2005).

However, we hold that the notion of cognitive load has its relevance and explanatory power largely in contexts that differ in crucial ways from that of a student using an educational game, which is also why we did not present the notion in the section on background studies. One prototypical context where cognitive load has bearing is a control room, where operators must continuously supervise visual displays showing a flow of dynamic information that is only partly (if at all) under the operator’s control.

In contrast, the students in our study were themselves in control of the visual dynamics of the game. No information and no visual elements would change or disappear unless the student herself chose to move forward, and most textual information could be “clicked away” by the student. In addition, the students were provided with paper and pencil that could be used to off-load information externally by taking notes. It is true that human beings have limited capacities with respect to attention and working memory. However, in a case such as ours the risks of cognitive overload are minimal, since the students are in control of the visual dynamics, are not bound to a time-critical framework, and can redistribute and off-load information to the external world.

De Jong (Citation2010) reasons along similar lines. He discusses how learners in realistic situations generally have means to off-load memory as well as to revisit information, whereas nearly all studies on learning in the cognitive load tradition use situations that are artificially time-critical, in the sense that they prevent participants from off-loading memory, revisiting information, and so on.

In effect, our take-home message for designers of educational software is not to accept uncritically warnings or recommendations concerning visual design from other domains, such as Human Factors with its tradition of industrial risk environments.

Why did so much neglect happen at the stage of acting on CCF?

Recall that the largest amount of neglect in our example study occurred at the fourth stage of our model, when students should have been acting on the CCF they had received. Even when students had read the CCF (and presumably, in many cases, understood it), this often did not translate into action. For software designers, in this case ourselves, such a large number of drop-outs is a call to examine what lies behind them, to seek possible explanations, and to probe systematically how the software might be redesigned.
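To make stage-wise neglect concrete, the following is a minimal Python sketch of how a conditional drop-out rate per stage might be computed. It is an illustration under stated assumptions, not the analysis code used in this study: it assumes each CCF instance is coded by the number of consecutive stages it passed, and the function name `stage_neglect` and the eight example codings are hypothetical, not data from this study. Because making sense was not independently measured, the example lists only the measured stages.

```python
from collections import Counter
from typing import Dict, Sequence


def stage_neglect(passed: Sequence[int], stages: Sequence[str]) -> Dict[str, float]:
    """Conditional neglect rate at each stage of CCF-processing (hypothetical helper).

    Each element of `passed` is the number of consecutive stages one CCF
    instance got through: 0 means it was not even noticed, len(stages)
    means it passed every stage. The rate for a stage is the share of
    instances that reached that stage but dropped out there.
    """
    if any(p < 0 or p > len(stages) for p in passed):
        raise ValueError("each value must lie between 0 and len(stages)")
    counts = Counter(passed)
    reached = len(passed)  # every instance reaches the first stage
    rates: Dict[str, float] = {}
    for k, name in enumerate(stages):
        dropped = counts[k]  # passed k stages, so failed stage k + 1
        rates[name] = dropped / reached if reached else float("nan")
        reached -= dropped  # only the remaining instances go on to the next stage
    return rates


# Hypothetical coding of eight CCF instances (illustrative, not study data).
measured = ["noticing", "reading", "acting upon", "making progress"]
example = [0, 0, 1, 2, 2, 2, 3, 4]
for stage, rate in stage_neglect(example, measured).items():
    print(f"{stage:16s} {rate:.2f}")
```

Expressing each rate relative to the instances that reached that stage, rather than relative to all instances, keeps heavy neglect at an early stage from masking neglect later on. It also shows why a stage reached by only a few instances yields an unstable rate.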

For the present game this is, in effect, ongoing work based on input from our study. One possibility is that, for many of the students, the CCF was simply not useful enough. Fully a third of the questionnaire respondents said that the feedback they received either did not help them much or did not help them at all, and another third found the CCF only somewhat helpful. As noted earlier, our aim was not to produce the best possible CCF but rather to create CCF the way it is usually done, in line with keeping our study as ecologically valid as possible. We wrote the texts ourselves and had the teachers evaluate them, focusing on comprehensibility. Doing more than this (e.g., testing and retesting the texts with target groups of students) is not, in our judgment, feasible in a real-world educational context, regardless of who the educational software developer is.

A substantial number of our observations pointed in a different direction: rather than finding the CCF unhelpful, students were put off by the time and effort required to act on it. Traveling in time, which all of the CCF-texts suggested in one way or another, required a fair number of repetitive actions. The student had to exit the virtual classroom where she was teaching the TA, enter the room with the time machine, enter the time machine, and adjust the controls; only then could she enter the historical period suggested by the CCF. Observations and subsequent interviews showed that students clearly got frustrated by this process. The game’s navigational features were not well designed.

An updated version of the game allows the student to make an entry for each historical site she visits in a magic book that is available at all times in the upper corner of her screen; re-entering a historical period then requires only one click in the book, which acts as a teleporting shortcut. Whether this makes a difference and sharply reduces neglect at this stage of CCF-processing must be evaluated in future studies. Even so, this is a clear example of how measuring CCF-neglect at different stages of processing can lead to important software redesigns.

Notably, if a redesign such as the one presented above actually leads to a higher proportion of students acting upon CCF-texts, it is also likely that the larger number of CCF-texts read in the TA condition will translate into a larger number of CCF-texts acted upon in that condition.

Neither agent nor arrow signaling affected the stages of acting on or making progress

We found no significant differences between the three conditions on students’ tendency to act on CCF. There appears to be no difference at the final stage of making progress either, although the large amount of neglect at the previous stage left too few cases for conclusions to be safely drawn.

That the choice of CCF-framing does not seem to play a role at this point in the process, where the visual framings and the text itself are no longer present, comes as no surprise. In particular, it is very unlikely that an earlier signaling format will affect whether the student succeeds in revising the task. At this point, individual factors such as problem-solving skills and level of knowledge likely play far more important roles.

Limitations of the study and future studies

A key limitation of the present study is that one stage in our CCF-processing model, making sense of the CCF, was not independently evaluated. Future studies need to address this to obtain a fuller picture of how students process (and do not process) CCF. One can hope that technological developments will make appropriate (presumably EEG-based) measurements feasible in ecologically valid contexts, such as a regular lesson in a classroom-like setting. For now, we suggest investigating more indirect measures. One that we are about to test with the history game is encouraging students to store key words or phrases in a “magic book” (with strict limits on how much they can store). When students return to historical periods they have visited before, they can use the notes as reminders and guidance. Their choice of words and phrases can then be used to infer the extent to which they have understood the CCF. However, this method only works for students who actually use the magic book.

Another possibility involves an added study phase. For every instance of a specific student not acting upon a specific CCF-text, one could, in a follow-up session after the regular study, walk through the text to probe the student’s understanding of it. This might reveal how often students had difficulties making sense of a text. In our study, class teachers had verified that the texts were adequate and would be understood (made sense of) by the target group, but this is a group-level estimate. A full analysis of potential neglect at the stage of making sense needs to identify individual cases of drop-out at this stage. If used in such a follow-up session, the proposed method might distinguish between (i) a drop-out where a student reads the text but has difficulty understanding what it means, and therefore fails to act upon it, and (ii) a drop-out where a student reads and understands the text but chooses not to act upon it.

Another key limitation is that the study was only an example study, intended to show that intermediate stages of CCF-processing and neglect could be measured. We anticipate that some of our findings will generalize, but further studies clearly need to be done with different digital learning environments, signaling conditions, forms of feedback, and so on. A full body of knowledge will require many studies investigating CCF-neglect across different kinds of educational software, tasks, and CCF formats. In particular, we hold that future studies on CCF-neglect should consider CCF formats other than text alone, such as graphics, animations, and audio, as well as combinations of different formats. This will be necessary if the goal is to scaffold as many students as possible into productive use of critical constructive feedback (i.e., “non-neglect”).

The specific results from our study on the benefits of signaling by pedagogical agents could be pursued further. One variable of interest is students’ prior knowledge. Several studies (Choi & Clark, Citation2006; Johnson et al., Citation2013) have suggested that signaling by pedagogical agents may show limited benefit when averaged across all learners, while having a very large benefit for learners with low prior knowledge. Another line of future investigation could compare the signaling of a teachable agent with that of other kinds of pedagogical agents. Then there is the choice of social cues. We used directed gaze accompanied by pointing, but one can imagine other possibilities: eyes without directed gaze, an anthropomorphized arrow, directed sound (a voice calling from a particular direction), bodily movement (the pedagogical agent moves in a direction, prompting the student to follow), and so on.

Another limitation relates to the digital context. Our study investigated students’ neglect of CCF provided in a software-based learning environment. This, of course, differs from situations where students receive verbal or written CCF from a teacher or a peer, which involve issues of interpersonal relations not addressed in the present work. However, findings from a computer environment about factors involved when students handle (or do not handle) critical constructive feedback may later be explored in a classroom environment to determine their influence there. In the meantime, the increasing deployment of computer-based learning environments means that a better understanding of how the uptake of critical constructive feedback can be increased in that context is valuable in its own right.