Abstract

Technical approaches for surfacing, reviewing, and removing terrorist and violent extremist content online have evolved in recent years. Larger companies have added metrics and insights to their transparency reports, disclosing that most content removed for terrorist and violent extremist offences is detected proactively, using hybrid models that deploy tooling in combination with human oversight. However, less is known about the algorithmic tools or hybrid models that tech platforms deploy to surface terrorist threats more accurately. This paper reviews existing tools deployed by platforms to counter terrorism and violent extremism online, including the ethical concerns and oversight needed for algorithmic deployment, before analysing initial results from a GIFCT technical trial. The Global Internet Forum to Counter Terrorism (GIFCT) Technical Trial discussed in this paper tested a methodology using behavioural and linguistic signals to surface terrorist and violent extremist content relating to potential real-world attacks more accurately and proactively. As governments, tech companies, and networks like GIFCT develop crisis and incident response protocols, the ability to quickly identify perpetrator content associated with attacks is crucial, whether that content is the livestream of an attack or an attacker manifesto released in parallel to the real-world violence. Building on previous academic research, the trial suggests that while the deployment of layered signals shows promise in proactive detection and the reduction of false positives, it also highlights the complexities of user speech, online behaviours, and cultural nuances.

The internet and its user-facing platforms are among the modern frontlines of both terrorist activity and counterterrorism efforts. Governments, private technology companies, counterterrorism practitioners, and academics have all increased their focus on the many digital platforms and tools that terrorists exploit and employ. With the scale of online content produced and uploaded every minute of every day, safety and security efforts by larger tech companies rely on deploying algorithmic solutions to proactively identify threats, triage moderation efforts based on severity, and make decisions based on hybrid models of human and machine oversight.Footnote1 This is particularly pertinent in crisis response work, which aims to proactively mitigate the risk of viral spread of perpetrator- or accomplice-produced content related to ongoing, real-world threats. While low in prevalence, these situations are high in risk, as evidenced by attacks that have taken place in Christchurch, New Zealand; Halle, Germany; Glendale, Arizona; Buffalo, New York; and Memphis, Tennessee. These lone-actor attackers developed and launched online assets, such as livestreams of their attacks, manifestos, and explainer documents on social media evasion strategies and weapon development. The distribution of these online assets was choreographed as part of the attack strategies, to increase the virality of the attack and draw further attention to the ideology the attackers used to justify their violence.

As platforms have grown to reach billions of users globally, so too have technical approaches to surfacing, reviewing, and removing terrorist and violent extremist content (TVEC). In the best-case scenario, tooling facilitates the proactive surfacing and review of dangerous or policy-violating content at scale, while human oversight adds nuance and quality control to the process, identifying new threats as moderators track adversarial shifts.Footnote2 In the worst-case scenario, processes are built on algorithms that yield high rates of false positives or false negatives, leading to potential over-censorship or negligence. Without internal expertise, moderators are ill-equipped to understand the threat well enough to recognize errors or identify TVEC.Footnote3 Many processes deployed by platforms remain opaque, although some companies have tried to increase transparency in recent years.

The Global Internet Forum to Counter Terrorism (GIFCT) is uniquely placed to work with companies on these processes. This paper aims to: (1) provide an overview of the known algorithmic tools being deployed by tech companies in counterterrorism and counter-extremism efforts, (2) outline some of the ethical and human rights considerations around the deployment of proactive tooling, and (3) analyse the results from a GIFCT Tech Trial testing a layered signal methodology for proactively surfacing TVEC related to ongoing credible threats. The research tests the efficacy of this methodology on both violent Islamist extremist and white supremacy ideologies.

These two ideologies were chosen based on international threat assessments associated with government designation trendsFootnote4 and trend analyses by the Global Network on Extremism and Technology (GNET) highlighting insights from global experts about white supremacy lone actor threats and continued threats from Islamist extremist terrorist networks.Footnote5 These two violent extremist ideologies have also been studied extensively by organizations providing datasets and analyses about phraseology, group names, and dog whistle terms deployed by particular groups within these ideologies that GIFCT could use in its layered signal work. For this research, GIFCT specifically looked at groups or individuals that identify with either Islamist extremist or white supremacy ideologies and are linked to real world violence. This paper explores how mixed-signal tactics might be deployed in instances of crisis response, where specific location and language understanding can increase efficacy.

The Scale of the Problem and Previous Research on Algorithms to Surface TVEC

The need for algorithmic tools to be deployed as part of online counterterrorism and counter-extremism processes is paramount. The volume of content shared daily by users on larger platforms is too great to rely solely on reactive user flagging and piece-by-piece human review. Statistics on global data from 2022 show that internet users send about 650 million tweets, 41.6 million WhatsApp messages, and 333.2 billion emails daily.Footnote6 While many companies do not publish regular transparency reports,Footnote7 the transparency reports of larger tech companies show how widely proactive measures for removing terrorist and violent extremist content are used, and that these measures account for most of the content removed under terrorist or violent extremist policies.Footnote8 For example, data from Twitter, YouTube, Facebook, and Instagram show the amount of content actioned in the 2021 calendar year for terrorism and/or violent extremism. Twitter reported actions taken on 78,668 accounts for terrorism violations in 2021.Footnote9 YouTube removed 836,659 videos for “violent extremism” in 2021, and within that period 90–95% of the content was removed before it had more than ten views.Footnote10 Instagram removed 2.357 million pieces of content for terrorism and 1.33 million pieces of content for Organized Hate, with 79.5% proactively identified without user flagging.Footnote11 Facebook had the highest removal numbers, with 34.4 million pieces of content removed for terrorism and 20.2 million pieces of content removed for “organized hate,” 98–99% of which was identified proactively without user reporting.Footnote12

More tools do not mean fewer humans are needed in moderation processes. Previous research has shown that “hybrid models,” combining layered algorithmic tools with human oversight, are likely best placed for content moderation at scale.Footnote13 Chen et al. (2008) developed a methodology allowing for the automated collection and analysis of terrorist content on the Dark Web through a combination of domain spidering, back-link searches, keywords, and more qualitative group or profile searches.Footnote14 This method succeeded in taking a limited number of verified jihadist URL domains and using them to source further content and domains linked to terrorist propaganda. Some limited research has also worked towards developing hybrid and mixed-method models to assist law enforcement in tracking potential attackers through online signals. Brynielsson et al. (2012) developed a method for analysing terrorist forums and profiles to identify potential lone actor terrorists, combining natural language processing and hyperlink analysis to compile a list of potential future attackers that law enforcement could investigate.Footnote15 As in many cases, results were mixed and raised ethical questions around identifying users from online data and surfacing individuals who have not yet committed a violent act, reaffirming the longstanding conclusion that there is no single profile for attackers. Reid et al. (2004) also developed a methodology to facilitate law enforcement and practitioner efforts by designing custom-built knowledge portals to better triangulate big data.Footnote16 The Terrorism Knowledge Discovery Portal and its layered data-collection work focused on discovering and presenting patterns in terrorist networks to identify how relationships among terrorists are formed and dissolved.

These studies reiterate what many internal moderation and engineering teams at tech companies confront daily: human moderators are best placed to assess nuance in normative speech, humour, political satire, or even “lawful but awful” content online, while tools can help proactively surface potentially violating or illegal content, most often through layered signal approaches. As pressure mounts on tech companies to remove violating content, so do concerns from human rights organizations about potential over-censorship resulting from false positives produced by algorithmic tooling.Footnote17 The original research and analysis in this paper aim to contribute to the counterterrorism and counter-extremism community by focusing on crisis response moments, when potential perpetrator content has the highest risk of virality, adding to the wider impact of a real-world violent attack.

Part 1: Algorithmic Tooling and AI Assisting in CT and CVE Efforts Online

There is increasing interest from governments and the wider public in understanding algorithmic processes, both in terms of how they can be used for positive moderation and out of concern that they may increase user exposure to violent extremist and terrorist content.Footnote18 This section reviews some of the algorithmic tools known to be deployed by larger tech companies in counterterrorism efforts, focusing on photo and video hashing, Strategic Network Disruptions, logo detection, linguistic processing, and recidivism. The nexus between algorithmic amplification and extremism is one of the four topical work streams of the Christchurch Call to ActionFootnote19 as well as a subtopic within three of the five 2021–2022 GIFCT Working Groups.Footnote20 In layman’s terms, an algorithm is any set of instructions to a computer or online system dictating how to transform a set of facts (or data) into useful information (or an output). Algorithms, in this sense, are the instructions that dictate how a particular output is processed and surfaced online.Footnote21 When tech companies discuss online tools used for identifying, surfacing, and potentially removing content, they are referring to different algorithms set to perform specific tasks. In most cases, surfacing denotes bringing specific content or online information forward for human review.

More complex tooling can layer algorithmic processes, and in the most advanced cases platforms sometimes use artificial intelligence (AI) in these efforts. AI is the broader science of computer systems mimicking human abilities and decision-making processes.Footnote22 Within AI, machine learning is the process of training a system to learn from data: machine learning tools look for patterns in data to draw conclusions. In the machine learning lifecycle, a system learns from a large dataset until it reaches an acceptable level of precision. It can then be applied to new datasets, with its outputs and corrections fed back into training to create a feedback loop that increases precision and supports predictive models.

Larger social media companies have openly discussed some of the tools deployed on their platforms to assist in proactively surfacing and actioning terrorist and violent extremist content online.Footnote23 This section explains what these tools do and how they function on social media platforms. Note that there is a significant difference in both the human moderation staff and the layered technical approaches available to larger companies compared with smaller, less-resourced companies. While larger companies might deploy some or all of the tools below, small and medium-sized companies might only have one or two of them deployed for counterterrorism and counter-extremism efforts.

Image and Video Hashing and Matching

Image and video hashing and matching technology has long been discussed publicly, both for online safety efforts countering child abuse imagery and for counterterrorism efforts. Perceptual hashes are numerical representations of original content that can be used in a similar fashion to how a fingerprint identifies a bad actor in a criminal database.Footnote24 A hash value can be shared between platforms without sharing any Personally Identifiable Information (PII) of users. When content is hashed, the numeric value produced can be used to find further matches related to that content within a database, on a platform, or across multiple platforms that agree to parameters for sharing hashes.Footnote25 This is the primary algorithmic technology underpinning the databases run by the National Center for Missing and Exploited Children (NCMEC) and GIFCT.
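To make the fingerprint analogy concrete, the sketch below implements a simple average hash (aHash) over a grayscale pixel grid and compares hashes by Hamming distance. It is an illustrative stand-in, not PhotoDNA or PDQ, which use more robust transforms; the 8x8 grid, the 5-bit match threshold, and the toy images are assumptions chosen for demonstration.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255


def downscale(pixels: Grid, size: int = 8) -> Grid:
    """Box-average an image down to size x size.

    Assumes the image height and width are exact multiples of `size`.
    """
    bh, bw = len(pixels) // size, len(pixels[0]) // size
    small = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [pixels[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Return a size*size-bit hash: bit is 1 where a cell is brighter than the mean."""
    flat = [v for row in downscale(pixels, size) for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | int(v > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def is_match(a: int, b: int, threshold: int = 5) -> bool:
    """Treat hashes within `threshold` bits of each other as visually similar."""
    return hamming(a, b) <= threshold


# Toy 16x16 images (hypothetical): a horizontal gradient, a brightened copy,
# and an unrelated vertical gradient.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brightened = [[min(255, v + 10) for v in row] for row in original]
unrelated = [[y * 16 for _ in range(16)] for y in range(16)]

h_orig = average_hash(original)
print(hamming(h_orig, average_hash(brightened)))  # 0: survives the brightness change
print(is_match(h_orig, average_hash(unrelated)))  # False: 32 bits differ
```

Only the integer hash, never the image or any user data, needs to be exchanged for matching, which is the property hash-sharing relies on.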

Most tech companies use Artificial Intelligence (AI) as well as Locality Sensitive Hashing (LSH) algorithms such as PhotoDNA and VideoDNA or PDQ and TMK+PDQF to prevent users from uploading photos or videos that match content previously identified as TVEC. These technologies are designed to operate at a high scale and allow for the automation of processes that could otherwise require tens of thousands of highly specialized human moderators. Alterations to content (such as cropping or colour enhancement) alter the hash value,Footnote26 but because visually similar images produce mathematically proximate hash values, hashing a query item and retrieving the elements stored in the same buckets allows such near-duplicates to be surfaced.Footnote27 When a member company of the GIFCT hash-sharing database identifies image or video content on its platform that has violated its terms of service and falls within the agreed GIFCT Taxonomy for terrorist and violent extremist content, it can produce a hash of the content and upload it to the database,Footnote28 including relevant content related to incident response workFootnote29 and attacker manifestos.Footnote30 Hashes allow GIFCT member companies to quickly identify visually similar content on their own platforms that has been removed by another member, enabling them to review such content to see if it breaches their own terms and conditions.
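The bucket retrieval described above can be sketched with a simple banding scheme, a basic form of LSH for Hamming distance (sometimes called multi-index hashing): each 64-bit hash is split into four 16-bit bands, items are stored in one bucket per band, and a query is compared only against items sharing at least one band. This is a hypothetical illustration, not how PDQ, TMK+PDQF, or the GIFCT database actually index hashes; the band count and item ID are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

HASH_BITS = 64
BANDS = 4
BAND_BITS = HASH_BITS // BANDS  # 16 bits per band


def split_bands(h: int) -> List[int]:
    """Split a 64-bit hash into BANDS fixed-width chunks."""
    mask = (1 << BAND_BITS) - 1
    return [(h >> (i * BAND_BITS)) & mask for i in range(BANDS)]


class BandedHashIndex:
    """Bucket hashes by band so near-duplicates share at least one bucket."""

    def __init__(self) -> None:
        self.tables: List[Dict[int, Set[str]]] = [defaultdict(set) for _ in range(BANDS)]
        self.hashes: Dict[str, int] = {}

    def add(self, item_id: str, h: int) -> None:
        self.hashes[item_id] = h
        for table, band in zip(self.tables, split_bands(h)):
            table[band].add(item_id)

    def query(self, h: int, max_distance: int = BANDS - 1) -> List[Tuple[str, int]]:
        # Pigeonhole: if two hashes differ in at most BANDS - 1 bits,
        # at least one band is identical, so a true match is a candidate.
        candidates: Set[str] = set()
        for table, band in zip(self.tables, split_bands(h)):
            candidates |= table.get(band, set())
        # Verify candidates with the exact Hamming distance.
        results = []
        for item_id in candidates:
            distance = bin(self.hashes[item_id] ^ h).count("1")
            if distance <= max_distance:
                results.append((item_id, distance))
        return sorted(results, key=lambda r: r[1])


index = BandedHashIndex()
index.add("shared-hash-001", 0x0F0F0F0F0F0F0F0F)  # hypothetical database entry
print(index.query(0x0F0F0F0F0F0F0F0E))  # [('shared-hash-001', 1)]
print(index.query(0xF0F0F0F0F0F0F0F0))  # []: no band in common
```

The design trades recall for speed: with four bands, any hash within three bits is guaranteed to be found, while more distant near-duplicates may be missed unless more, narrower bands are used.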

Strategic Network Disruptions

“Strategic Network Disruptions” (SNDs) are a method used to disrupt terrorists’ and violent extremists’ abilities to operate online.Footnote31 These take the form of significant, targeted action against dangerous organizations, groups, or individuals operating online.Footnote32 Terrorist and violent extremist networks are governed by information exchanges that give rise to a network structure that can be tracked through interrelated signals; an SND is a methodology for mapping that network and removing all defined elements and accounts at once.Footnote33 By removing a network at once, instead of removing content piecemeal, SNDs disrupt the flow of communications between terrorists and violent extremists and force the network to compartmentalize, significantly impeding its ability to function effectively on that platform.Footnote34 This process is generally approached through Social Network Analysis (SNA), a collection of tools to study relationships, interactions, and communications between people online,Footnote35 which has been used to map diverse connections between terrorists and violent extremists, enhancing our understanding of the emergent social structures that shape criminal acts and potential processes of radicalization.Footnote36
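The network-mapping step of an SND can be approximated as a breadth-first search over an interaction graph, starting from accounts already confirmed as violating. The sketch below assumes hypothetical account IDs and edges and a two-hop limit, which keeps the mapped set narrow; real SNA work combines many more signals and keeps human review before any simultaneous takedown.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple


def build_graph(edges: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Undirected interaction graph: an edge means two accounts interacted."""
    graph: Dict[str, Set[str]] = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def map_network(graph: Dict[str, Set[str]], seeds: List[str],
                max_hops: int = 2) -> Dict[str, int]:
    """Breadth-first search from confirmed seed accounts.

    Returns {account: hops from the nearest seed} for every account within
    max_hops. The result is a candidate map for human review, not a removal list.
    """
    hops = {s: 0 for s in seeds if s in graph}
    queue = deque(hops)
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph[node]:
            if neighbour not in hops:
                hops[neighbour] = hops[node] + 1
                queue.append(neighbour)
    return hops


edges = [("seed_acct", "acct_a"), ("acct_a", "acct_b"),
         ("acct_b", "acct_c"), ("acct_x", "acct_y")]
graph = build_graph(edges)
print(map_network(graph, ["seed_acct"], max_hops=2))
# {'seed_acct': 0, 'acct_a': 1, 'acct_b': 2}
```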

To exemplify the results of SND tactics: in June 2020, Facebook designated a network of individuals tied to the US-based, anti-government “boogaloo” movement. Through a targeted SND, Facebook reportedly removed 220 Facebook accounts, 95 Instagram accounts, 106 groups, and 28 pages connected with the movement in a simultaneous takedown.Footnote37 Similar uses of AI tools to take down larger networks of known violating groups have also taken place on Twitter, in its efforts to counter Islamic State accounts.Footnote38

Logo Detection

For more centralized terrorist and violent extremist groups with membership structures and clear iconographic identifying markers, logo detection can be a powerful image recognition technology for localizing and identifying specific objects in each image.Footnote39 Logo detection is a subcategory of computer vision, a field of artificial intelligence that uses deep learning algorithms to train computers to interpret and understand the visual world.Footnote40 Training a logo detection algorithm involves feeding it a series of annotated images indicating which objects you want it to identify,Footnote41 for example, ingesting many images with the logos of a designated terrorist organization from a wide array of propaganda. The model then leverages machine learning to detect logos at different scales, in varying quality and colours, and with various alterations.Footnote42

While object detection algorithms have opened new ways of understanding and analysing visual data, and have outperformed humans in certain tasks, this approach has limitations in the detection of terrorist and violent extremist images. For example, many detection models are trained and tested using “ideal” images, meaning the object being detected is clearly identifiable. However, objects in online content are not always positioned in ideal scenarios: the background may be cluttered, an object may be rotated or occluded, or a logo might be embedded in a textured flag.Footnote43 Terrorist and violent extremist logos are not always clearly identifiable or displayed in the highest resolution, which necessitates a wide variety of training data to ensure high-quality matching of logos within images or videos.
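One common way to obtain the variation described above is data augmentation: generating rotated, mirrored, re-lit, and partially occluded copies of each annotated training image before training a detector. The sketch below shows only that augmentation step on a toy grayscale grid, with hypothetical parameters; it is not a logo detector, and a real pipeline would also transform the bounding-box annotations alongside each image.

```python
import random
from typing import Callable, List

Grid = List[List[int]]  # grayscale pixel values, 0-255


def rotate90(img: Grid) -> Grid:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img: Grid) -> Grid:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Grid, delta: int) -> Grid:
    """Shift every pixel by delta, clipped to the 0-255 range."""
    return [[max(0, min(255, v + delta)) for v in row] for row in img]


def occlude(img: Grid, top: int, left: int, size: int, fill: int = 0) -> Grid:
    """Paint a square patch to simulate a partially hidden logo."""
    out = [list(row) for row in img]
    for y in range(top, min(top + size, len(out))):
        for x in range(left, min(left + size, len(out[0]))):
            out[y][x] = fill
    return out


def augment(img: Grid, n: int, rng: random.Random) -> List[Grid]:
    """Return n randomly transformed copies of one annotated training image."""
    h, w = len(img), len(img[0])
    transforms: List[Callable[[Grid], Grid]] = [
        rotate90,
        flip_horizontal,
        lambda im: adjust_brightness(im, rng.randint(-40, 40)),
        lambda im: occlude(im, rng.randrange(h), rng.randrange(w), size=max(1, h // 4)),
    ]
    return [rng.choice(transforms)(img) for _ in range(n)]


logo = [[0, 255, 0], [255, 255, 255], [0, 255, 0]]  # hypothetical toy "logo"
variants = augment(logo, n=5, rng=random.Random(42))
print(len(variants))  # 5
```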

Linguistic Processing

While most discourse around terrorist and violent extremist content focusses on image and video propaganda, most user-generated content online is in text format. For this reason, the detection and analysis of terrorist and violent extremist activities online requires support from computerized linguistic tools.Footnote44 Terrorist and violent extremist activity can be detected by identifying patterns of behaviour in text known as “linguistic markers.” Natural Language Processing (NLP) algorithms enable machines to read, understand, and derive meaning from human languages, and have been instrumental in the study of extremist discourse.Footnote45 NLP algorithms can be applied to data to detect warning behaviours in digital traces that could indicate signs of violent extremism. As an example, Facebook uses NLP models to analyse text that has previously been removed for praising or supporting terrorist organisations in order to develop text-based signals that flag such content as propaganda. These machine learning algorithms work on a “feedback loop” and improve over time.Footnote46
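The feedback loop can be illustrated with a deliberately simple text classifier. The sketch below uses multinomial naive Bayes with add-one smoothing, swapped in for the far larger models platforms actually use, and treats each confirmed moderator decision as a new training example passed to `learn()`. The training sentences, labels, and the placeholder organization name `groupx` are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


class FeedbackNaiveBayes:
    """Multinomial naive Bayes with add-one smoothing.

    learn() is the feedback hook: each confirmed moderator decision is fed
    back in, so the model's word statistics update over time.
    """

    def __init__(self) -> None:
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.doc_counts: Counter = Counter()
        self.vocab: set = set()

    def learn(self, text: str, label: str) -> None:
        tokens = tokenize(text)
        self.word_counts[label].update(tokens)
        self.doc_counts[label] += 1
        self.vocab.update(tokens)

    def log_score(self, text: str, label: str) -> float:
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)  # class prior
        label_total = sum(self.word_counts[label].values())
        vocab_size = len(self.vocab)
        for token in tokenize(text):
            count = self.word_counts[label][token]
            score += math.log((count + 1) / (label_total + vocab_size))
        return score

    def predict(self, text: str) -> str:
        return max(self.doc_counts, key=lambda label: self.log_score(text, label))


# Hypothetical training data; "groupx" stands in for a designated organization.
clf = FeedbackNaiveBayes()
for text in ["long live groupx", "join groupx now"]:
    clf.learn(text, "violating")
for text in ["report on groupx arrests", "court sentences groupx member"]:
    clf.learn(text, "benign")

print(clf.predict("join groupx"))     # violating
print(clf.predict("groupx arrests"))  # benign
```

Each call to `learn()` updates the word counts immediately, so later predictions reflect reviewer corrections.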

While useful, these techniques will likely never fully replace human analysts, because user language online is dynamic, noisy, and highly multilingual.Footnote47 In addition, although multilingual models continue to improve rapidly, challenges remain with rare languages, dialects, and transliteration. Terrorist content is shared in a wide array of languages, and while larger tech platforms have the capacity to employ specialist teams with subject matter and language expertise, most companies have comparatively small moderation teams and very few linguists covering the appropriate mix of global dialects.Footnote48 As an example, white supremacist phrases and numeric dog whistle slogans are often used to evade detection. “1488” is used in text to signal the “14 words” of white supremacy and “Heil Hitler,” since H is the eighth letter of the alphabet (88 = HH). While “1488” is commonly used to signal white supremacist sympathies within white supremacist communities online, it can also appear in dates, addresses, and a myriad of other contexts that produce false positives. However, NLP can be combined with another behavioural signal, such as the mention of a known extremist web domain, and early research has shown that a combination of word embeddings and deep learning performed well in accurately surfacing white supremacist hate speech.Footnote49
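The false-positive problem with a single keyword, and the benefit of adding a second signal, can be shown in a few lines. The sketch below compares a keyword-only rule with a layered rule that also requires a link to a flagged domain. The domain list is a hypothetical placeholder under the reserved `.example` top-level domain, and the sketch uses simple pattern matching rather than the word-embedding and deep learning approach cited above.

```python
import re
from typing import Set
from urllib.parse import urlparse

# Matches "1488" only as a standalone number (not inside e.g. "21488").
NUMERIC_CODE = re.compile(r"(?<!\d)1488(?!\d)")
URL_PATTERN = re.compile(r"https?://\S+")
# Hypothetical placeholder; a real list would come from vetted research sources.
FLAGGED_DOMAINS = {"extremist-forum.example"}


def linked_domains(text: str) -> Set[str]:
    """Hostnames of any URLs in the text (lowercased by urlparse)."""
    hosts = (urlparse(url).hostname for url in URL_PATTERN.findall(text))
    return {h for h in hosts if h}


def single_signal(text: str) -> bool:
    """Keyword-only rule: fires on any standalone '1488'."""
    return bool(NUMERIC_CODE.search(text))


def layered_signal(text: str) -> bool:
    """Require the numeric code AND a link to a flagged domain."""
    return single_signal(text) and bool(linked_domains(text) & FLAGGED_DOMAINS)


posts = [
    "Parcel delivered to 1488 Main Street",
    "1488 https://extremist-forum.example/thread/1",
    "Invoice 21488 is overdue",
]
for post in posts:
    print(single_signal(post), layered_signal(post))
# True False
# True True
# False False
```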

Recidivism

Harmful actors continually try to circumvent the systems put in place to identify and remove content, accounts, and activity connected to terrorism or violent extremism. Repeat offenders are often found creating new accounts after being removed from a platform for violating its terms of service or community guidelines. This behaviour is known as recidivism, and it requires tech platforms to quickly detect similar accounts or aliases created by previously banned harmful actors. The work of tackling recidivism is highly adversarial, as harmful actors continually evolve their methods.Footnote50 Many platforms have developed algorithms to identify and remove recidivist accounts, even if the new account is not sharing any overtly violating TVEC. Through this work, platforms have been able to reduce the time that terrorist recidivist accounts stay online.Footnote51
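Recidivism detection can be framed as a similarity search between a new account and previously banned accounts. The sketch below is a hypothetical illustration: it combines username character trigrams, linked domains, and a profile-image hash into one feature set and ranks banned accounts by Jaccard similarity. The features, the 0.4 threshold, and the account data are assumptions, not any platform’s system, and matches are treated as candidates for review rather than automatic bans.

```python
from typing import Dict, List, Set, Tuple


def char_ngrams(s: str, n: int = 3) -> Set[str]:
    """Overlapping character n-grams, so near-identical usernames overlap."""
    s = s.lower()
    return {s[i:i + n] for i in range(len(s) - n + 1)}


def account_features(username: str, domains: Set[str], avatar_hash: str) -> Set[str]:
    """Combine several weak signals into one feature set (all hypothetical)."""
    features = {f"ng:{g}" for g in char_ngrams(username)}
    features |= {f"dom:{d}" for d in domains}
    features.add(f"avatar:{avatar_hash}")
    return features


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recidivism_candidates(new: Set[str], banned: Dict[str, Set[str]],
                          threshold: float = 0.4) -> List[Tuple[str, float]]:
    """Rank previously banned accounts by similarity to a new account."""
    scored = [(acct, jaccard(new, feats)) for acct, feats in banned.items()]
    return sorted((s for s in scored if s[1] >= threshold), key=lambda s: -s[1])


banned = {
    "banned-001": account_features("watcher_01", {"site.example"}, "a1b2"),
    "banned-002": account_features("gardenlover", {"recipes.example"}, "ffff"),
}
new_account = account_features("watcher_02", {"site.example"}, "a1b2")
result = recidivism_candidates(new_account, banned)
print([(acct, round(score, 2)) for acct, score in result])  # [('banned-001', 0.82)]
```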

Some researchers have argued that suspending social media users is a near-futile endeavour because users will always create new accounts, negating the benefit of suspension. However, a study of an ISIS network active on Twitter in 2015 demonstrated that account suspensions combined with recidivist detection significantly decreased both the overall size of the network and its activity on the platform.Footnote52

Part 2: Ethical and Human Rights Considerations in Deploying Algorithmic Solutions

The previous section shows the potential benefits of deploying algorithmic tools, singly and in layers, to counter terrorism and violent extremism online. However, these tools should always be used with care and with oversight of potential unintended consequences.Footnote53 Tech companies that GIFCT works with often have engineering teams and data scientists that work with product policy, legal oversight, and ethical review teams when developing and deploying algorithms that influence user-facing aspects of a platform or the collection of data. Tools can help companies reach the scale and speed necessary for global content review and moderation; however, this should not come at the cost of significant false positives or false negatives that impinge on legitimate content or affect marginalized, vulnerable, or persecuted populations.

Ethical and human rights considerations are important in defining parameters for the deployment of new or advanced tooling that assists moderation processes. The United Nations’ Universal Declaration of Human Rights lends some guidance to the online space in Article 19, which states that, “[e]veryone has the right to freedom of opinion and expression; this right includes freedom to hold opinions without interference and to seek, receive and impart information and ideas through any media and regardless of frontiers”.Footnote54 Much of the concern about restrictions on human rights in online spaces relates to a government’s ability to force content removal or require access to user data in a way that unlawfully infringes on a person’s rights. There are increasing conflicts between states applying different obligations on tech companies, justified through legislation around safety, privacy, or free speech protections.Footnote55 Relatedly, in 2004 the UN Human Rights Committee declared that states must demonstrate the necessity of actions that restrain citizens’ activities, taking only measures that are proportionate to the pursuit of legitimate aims in order to protect rights.Footnote56

While tech companies look to adhere to legal frameworks while innovating, there are few globally recognized parameters to guide them in practical terms on how to prioritize rights when national or regional legislation begins to clash. There are no clearly agreed practical steps that all platforms should take when assessing an algorithmic tool or moderation process, though some international bodies have made progress on guidance in recent years. Focused on companies’ application of policies, the UN Guiding Principles on Business and Human Rights are a bedrock for tech companies.Footnote57 Guiding principles for tech companies’ moderation practices and data collection hold that actions taken under a policy should be necessary, lawful, legitimate, and proportionate, and that any restriction should be tied to a defined and defensible threat. Looking specifically at guidance for the deployment of artificial intelligence tooling, the Council of Europe has also developed a series of recommendations for tech companies, including lists of Do’s and Don’ts covering human rights impact assessments, public consultations, transparency reporting, independent oversight, equality, freedom of expression, and remediation.Footnote58 On the deployment of technical solutions for countering terrorism and violent extremism on platforms, the GIFCT Technical Approaches Working Group (2020–2021) produced a report, led by Tech Against Terrorism, that included a section on protecting human rights in the deployment of technical solutions.Footnote59 The report noted four primary areas of concern:

Negative impacts on freedom of speech through the accidental removal of legitimate content, particularly affecting minority groups.

Unwarranted or unjustified surveillance.

The lack of transparency and accountability in the development and deployment of tools.

Accidental deletion of digital evidence potentially needed in terrorism and war crimes trials.

Existing frameworks provide broad guidance that does not always give nuanced answers on how to approach the adversarial threats deployed by terrorists online. This is particularly difficult when approaching wider violent extremist trends from groups and individuals beyond government designation lists and legal frameworks. There will continue to be no single approach or process for countering terrorism and violent extremism online while considering human rights. However, as previous research has highlighted, single-signal detection models yield higher rates of false positives, raising greater human rights concerns.Footnote60
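Comparing error rates in this way requires measuring them on a reviewer-labelled sample. The sketch below computes precision, recall, and false positive rate for a single-signal detector and a layered detector that requires two signals. The six items are invented purely to show the arithmetic and are not results from any study; `signal_a` and `signal_b` are hypothetical boolean signals.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Item = Dict[str, bool]


@dataclass
class Metrics:
    precision: float
    recall: float
    false_positive_rate: float


def evaluate(detector: Callable[[Item], bool], items: List[Item]) -> Metrics:
    """Compare detector output with reviewer-confirmed labels."""
    tp = fp = fn = tn = 0
    for item in items:
        predicted, actual = detector(item), item["violating"]
        if predicted and actual:
            tp += 1
        elif predicted:
            fp += 1  # flagged, but legitimate content
        elif actual:
            fn += 1  # missed violating content
        else:
            tn += 1
    return Metrics(
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
        false_positive_rate=fp / (fp + tn) if fp + tn else 0.0,
    )


# Invented toy sample, only to show the arithmetic (not trial data).
sample = [
    {"signal_a": True, "signal_b": True, "violating": True},
    {"signal_a": True, "signal_b": True, "violating": True},
    {"signal_a": True, "signal_b": False, "violating": False},
    {"signal_a": True, "signal_b": False, "violating": False},
    {"signal_a": True, "signal_b": False, "violating": True},
    {"signal_a": False, "signal_b": False, "violating": False},
]
single = evaluate(lambda i: i["signal_a"], sample)
layered = evaluate(lambda i: i["signal_a"] and i["signal_b"], sample)
for m in (single, layered):
    print(f"{m.precision:.2f} {m.recall:.2f} {m.false_positive_rate:.2f}")
# 0.60 1.00 0.67
# 1.00 0.67 0.00
```

In this toy sample the layered rule removes both false positives but also misses one violating item (recall falls from 1.0 to 2/3), which illustrates why layered tooling is paired with human review rather than replacing it.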

Taking existing guidance into consideration, this paper argues that using multi-signal or layered-signal approaches for surfacing and reviewing online material and activities related to terrorism and violent extremism can drive down error rates and better address human rights concerns about potential over-censorship. The best way to implement layered-signal approaches within a human rights framework is to ensure an adequate level of transparency about the types of algorithmic tools a platform deploys, as well as high-level data on subsequent user content removals, profile restrictions, and remediation or appeals processes. This algorithmic transparency need not extend to source code, nor to a level of granularity that could spark adversarial shifts; it should instead provide a baseline understanding of what tools do, such as the photo and video matching or logo detection that might be at play.

Multistakeholder guidance to develop principles for tech company transparency that alleviate human rights concerns is crucial, bringing government, tech, and civil society together to share knowledge and concerns. GIFCT has an ongoing cross-sector Transparency Working Group that produced an overview of concerns, perspectives, and knowledge gaps in approaches to transparency reporting, drawing on the different viewpoints of governments, tech companies, and civil society.Footnote61 One concern remains the disjuncture between appropriate expectations of larger, well-resourced tech companies and those of smaller, less-resourced companies. A first transparency report can be a heavy lift for smaller companies or companies that have never produced one before. To address this, Global Partners Digital and the Open Technology Institute released a report in 2020 guiding transparency efforts specifically for small and medium-sized technology companies.Footnote62 Other guiding documents facilitating greater transparency from tech companies are the Santa Clara Principles on Transparency and Accountability in Content Moderation,Footnote63 and New America’s Transparency Reporting Toolkit on Content Takedowns.Footnote64

Part 3: GIFCT Tech Trial Methodology for Employing Layered Signals in Incident Response Work

Despite significant advancements in algorithmic tools used to surface, review, and remove terrorist content or activity, little is known about how platforms use or combine these signals in practice. As discussed, previous research in machine learning suggests that layering signals or indicators of a specific behaviour can improve accuracy in review and removal processes, and that such signals can also be used for counter-narrative targeting online and custom audience segment targeting.Footnote65 For example, ensemble learning is based on the idea that two minds are better than one: just as humans making strategic decisions solicit input from multiple sources and combine or rank them, multiple machine learning models (or base learners) can, in principle, be combined into one ensemble learner to improve overall performance. Ensemble learning aims to integrate data fusion, data modelling, and data mining into a unified framework.Footnote66
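The combination step of an ensemble can be sketched with two standard aggregation rules, soft (weighted-average) voting and hard (majority) voting. The base learners below are hypothetical stand-ins that return fixed scores; in practice each would be a separately trained model, and the weights and 0.5 threshold are assumptions.

```python
from typing import Callable, Sequence

Scorer = Callable[[str], float]  # a base learner returning a score in [0, 1]


def soft_vote(scorers: Sequence[Scorer], weights: Sequence[float], item: str) -> float:
    """Weighted average of base-learner scores."""
    return sum(w * s(item) for s, w in zip(scorers, weights)) / sum(weights)


def hard_vote(scorers: Sequence[Scorer], item: str, threshold: float = 0.5) -> bool:
    """Majority vote: each base learner casts one yes/no vote."""
    votes = sum(1 for s in scorers if s(item) >= threshold)
    return votes > len(scorers) / 2


# Hypothetical base learners with fixed outputs, standing in for trained models
# such as a text classifier, an image classifier, and a behavioural model.
def text_model(item: str) -> float:
    return 0.9


def image_model(item: str) -> float:
    return 0.6


def behaviour_model(item: str) -> float:
    return 0.2


learners = [text_model, image_model, behaviour_model]
print(round(soft_vote(learners, [1, 1, 1], "post"), 3))  # 0.567
print(hard_vote(learners, "post"))                        # True (2 of 3 votes)
```

Soft voting keeps each model's confidence, which allows a weak but well-calibrated signal to be down-weighted rather than ignored; hard voting is simpler to audit.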

The research presented in this paper is based on a trial capability that uses combinations of different keyword lists as signals to identify, as close to real time as possible, whether a potential terrorist or violent extremist incident has occurred. Tech companies, law enforcement units, and cross-platform efforts like GIFCT are highly invested in improving proactive detection to strengthen crisis response online. This has particularly been the case since the 2019 Christchurch terrorist attacks in New Zealand, in which the attacker livestreamed the attack, followed by intense international viral spread of the online content. The livestream was also paired with the digital launch of a manifesto justifying the attack with white supremacy ideologies. Together, the livestream and manifesto amplified the harm of the incident to a global audience. Being able to detect these types of incidents and associated perpetrator content quickly and proactively is crucial to mitigating the risk of viral spread of perpetrator-produced content across platforms during and directly after attacks.

Identifying violent images or violent language on the internet is not particularly challenging. The task becomes difficult, however, when the goal is to find perpetrator- or accomplice-related incidents in near real time, under specific requirements and parameters that guide the algorithmic processes, without infringing on privacy or human rights. In developing a set of criteria for deploying online tools, the aim is to:

identify specific current mass violence, or attempted mass violence,

with a significant online aspect,

that is not bystander footage or journalistic in nature,

while using only necessary, proportionate, and justified approaches, to minimize intrusion on privacy,

and using openly accessible data (not private data).

Viewed in near real time, this type of content is very low in prevalence but high in risk, and it sits within much wider online discussion and content sharing around crisis moments, let alone the totality of online content. Note that this is not intended to be an authoritative study of wider linguistic tooling for surfacing violent extremist activity more broadly. Rather, this research adds to previous work by testing, and advocating for, the layering of algorithmic tools based on behavioural and linguistic signals to address this low-prevalence, high-risk crisis scenario.

Identifying and Layering Keyword Lists

The first step in developing this methodology was to create concise groups of keywords and phrases that could identify the behavioural signals making up a scenario around a violent extremist attack or potential threat of violence. Some of the terms are discussed as examples; however, we have not shared the exact terms list in this paper, as we do not want to inadvertently lead bad actors or sympathizers to use these lists in ways we cannot predict. Researchers who would like to know more about these lists for their own work are welcome to contact GIFCT, and the authors are open to wider transparency and sharing.

It was important to keep the keyword lists concise and to focus on quality over quantity for testing purposes: scraping content can return huge volumes of data, and our aim was, in essence, to find a “needle in a haystack” rather than just add more hay. Our keyword taxonomy and the number of keywords associated with each group are shown in the following table:

To create an initial set of keywords representing language that indicates mass violence and language that indicates immediacy, we started with papers analysing the portrayal of terrorist events in the media and the related language.Footnote67 We selected words within these texts that were commonly used to reference ongoing events (e.g. “breaking”, “now”, “active”). These were supplemented with words and phrases relating to tactical positioning, armament, and weaponry during incidents, based on data from GIFCT’s Incident Response Framework. This framework, established after the 2019 terrorist attacks in Christchurch, New Zealand, gives GIFCT member companies centralized communications for sharing news of ongoing incidents that might result in the spread of violent or inciting content tied to the specific incident unfolding. These communications allow for widespread situational awareness and a more agile response among member companies.Footnote68

We built a list of organizational and entity-related keywords covering both Islamist extremist terrorist groups on the United Nations Security Council Consolidated ListFootnote69 and a limited set of white supremacy linked groups, such as Combat 18, designated by certain democratic countries and supranational institutions as referenced in the Terrorist Content Analytics Platform.Footnote70 Both lists include some slogans and phrases that are specific to violent extremist and terrorist groups in these two categories. In some cases, particularly in the white supremacy related keyword list, these phrases might also be associated with wider targeted racial slurs and be relevant to neo-Nazi related groups. To note, designated entity names and titles come up often in wider public conversations online, in mainstream and alternative media outlets, and among academics. As such, they are an important signal but by no means a single source of true positives for incident response purposes, and they do not appear in all the relevant content we were aiming to surface.

Finally, we built initial keyword lists related to language indicating support for a violent extremist ideology. This was done through consultations with subject matter experts and by consulting research by the Centre for Analysis of the Radical Right on Symbols, Slogans, SlursFootnote71 related to white supremacy groups, as well as a subset of terms related to Islamist extremist groups developed by the World Islamic Science and Education University.Footnote72 The terms lists focused specifically on keywords likely to be used by individuals linked to the violent extremist and terrorist groups covered by the previously described parameters. For analytical purposes, we kept Islamist extremist linked terms separate from white supremacy linked terms throughout the research. On their own, these terms are potentially weak signals for identifying terrorist or violent extremist incidents, as they are widely used in hateful speech more generally. However, by layering these four types of linguistic signal, our aim was to test whether combining many weak indicators could produce a stronger, more accurate, and more proportionate approach to surfacing violating perpetrator activity.

For the various keyword lists we tried to focus on the native languages spoken by the target groups under investigation, but limited internal linguistic capacity meant that our primary analysis took place in English. While this is a limitation of this study, it highlights one of the key challenges many companies face in moderating content, especially small and medium-sized companies with limited capacity to moderate across global languages full-time. Machine translation can help, and was used to some extent in this research, but the context and nuance of the language remain very important given that violent extremist groups also use slang and coded language.

To refine our keyword lists further, we tested them to see what results they yielded and adjusted them to give more accurate indicators for our parameters, namely real-time indicators of terrorist or violent extremist violence. We searched for each keyword in a collection of data provided to us by Media Sonar (an open-source intelligence threat monitoring organization) to assess how frequently terms and phrases occurred and how relevant the results were to our parameters. As expected, many keywords or phrases yielded results that were not relevant to our aims, producing high volumes of false positives.

As an example, the word “shoot” or “shooting” is often used in discussions linked to violent extremist events; however, it is also used in the entertainment industry (e.g. “shooting a movie”) and in sport (e.g. “a shooting guard”). Where a word or phrase returned a very high frequency of results with no relevance to our parameters, we either removed it entirely or, where possible, added an “excluding statement” to the algorithm to remove a specific context. Continuing the “shooting” example, an exclusion statement such as “shooting guard” removes the basketball context. Although this refinement process was data driven, subject matter expertise and context also played a significant part in decisions about what to include or exclude. This could introduce bias into the process, again highlighting the challenges faced by companies trying to do similar work at scale with limited contextual subject matter expertise across different languages and geographies.
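The exclusion-statement idea can be sketched in Python as follows. This is a minimal illustration that assumes exclusions work by blanking out the excluded phrase before matching, so a post that mentions both a “shooting guard” and a separate shooting is still caught; the paper does not specify how its exclusions were implemented, and the function names are hypothetical.

```python
import re
from typing import Iterable


def phrase_pattern(phrase: str) -> re.Pattern:
    """Case-insensitive match of a phrase on word boundaries."""
    return re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)


def matches_keyword(post: str, keyword: str, exclusions: Iterable[str] = ()) -> bool:
    """True if `keyword` occurs in `post` outside every excluded context.

    Excluded phrases are blanked out first, so "shooting guard" no longer
    counts as "shooting", but a separate mention of "shooting" in the same
    post still does.
    """
    cleaned = post
    for phrase in exclusions:
        cleaned = phrase_pattern(phrase).sub(" ", cleaned)
    return phrase_pattern(keyword).search(cleaned) is not None


EXCLUSIONS = ["shooting guard", "shooting a movie"]
print(matches_keyword("Shooting guard scores 30 points", "shooting", EXCLUSIONS))  # False
print(matches_keyword("Shooting reported near the mall", "shooting", EXCLUSIONS))  # True
```

An alternative design would discard any post containing an excluded phrase; that is simpler, but it would also drop a post that mentions both a shooting guard and a real shooting, trading recall for precision.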

At this point, each keyword group on its own is a behavioural indicator, but likely a weak signal that yields overly broad results. The specific behaviour we were trying to find was an early indication that a terrorist or mass violence event with an online aspect had occurred. Any one keyword group might indicate that, but even after refinement each group produces many false/positives. The “language indicating mass violence” keyword group, for example, finds violent incidents related to a wide variety of events, from crime to natural disasters. The test therefore layers the four groups of linguistic signals to produce a corpus of data small enough to be useful for indicating a terrorist or mass violence incident.

Because layered testing models can still surface a large corpus of data, other algorithmic tools are used to make sense of it, find patterns, visualize results, and support analysis. We applied term frequency inverse document frequency (TFIDF)Footnote73 to identify terms that occurred frequently in our corpus but infrequently across a generic set of social media posts (research datasets). We also clustered posts within the corpus using several approaches, including TFIDF and Latent Dirichlet Allocation (LDA)Footnote74 combined with Density-Based Spatial Clustering of Applications with Noise (DBSCAN)Footnote75 and K-Means (a method of vector quantization). Together these tools allow for visualization and turn big data into a dataset that is easier for humans to review. Clusters were then coded according to their relevance to the goal, and we identified further potential keywords to continue refining the keyword groups. This methodology for topic modelling and clustering was then iteratively refined, first using Gibbs Sampling Dirichlet Multinomial Mixture (GSDMM) and then Bidirectional Encoder Representations from Transformers (BERT), to find the most effective way to form clusters of topics that make sense to a human reviewer.
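The TFIDF step can be sketched as follows: term frequency is taken from the collected corpus and inverse document frequency from a generic background corpus, so words common in everyday posts score near zero. This is a minimal sketch assuming a smoothed idf of log((1 + N) / (1 + df)); the paper does not state which TFIDF variant it used, and the toy posts are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def distinctive_terms(target_posts, background_posts, top_n=5):
    """Rank terms frequent in the target corpus but rare in a background corpus.

    Term frequency (tf) is counted across all target posts; inverse document
    frequency (idf) is smoothed and computed over the background posts only,
    so terms common in everyday posts score close to zero.
    """
    tf = Counter(tok for post in target_posts for tok in tokenize(post))
    n_bg = len(background_posts)
    # Document frequency: number of background posts containing each term.
    df = Counter(tok for post in background_posts for tok in set(tokenize(post)))
    scores = {
        term: count * math.log((1 + n_bg) / (1 + df[term]))
        for term, count in tf.items()
    }
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda term: (-scores[term], term))[:top_n]


target = ["Breaking: explosion downtown now", "Explosion reported downtown"]
background = ["the weather is nice now", "breaking news about the game now", "traffic is slow"]
print(distinctive_terms(target, background, top_n=3))  # ['downtown', 'explosion', 'reported']
```

Here “now” and “breaking” fall to the bottom of the ranking because they also appear in the background posts, which mirrors why immediacy terms alone are weak signals.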

Results and Findings

Pulling content based on our four keyword groups, we collected 82,440 posts in total from open sources across Twitter, Instagram, Telegram, news media sites, publicly available blogs (not behind a subscription or paywall), and Dark Web pages. Dark Web pages are sites accessible only through a specialized web browser, often used to keep internet activity anonymous and private. To note, the outlets used to collect data reflect less where GIFCT felt the most likely terrorist threat was and more which open-source outlets posed fewer ethical challenges. While this is a limitation, it follows from the previous human rights discussion and the parameters we set to ensure that our research methodology did not intrude on user privacy.

Data collection took place throughout March and April 2022; however, some of the posts found had been published as far back as November 2018. This is relevant because even a layered test for “immediacy” can yield dated content or incidents. The keywords were broken into groups based on the behavioural signals that make up the scenario around a violent extremist attack or potential threat of violence: ideological affiliation or sympathy with a terrorist or violent extremist group, indication of mass violence, and immediacy. The number of posts per term is much greater for the mass violence and immediacy terms than for the other keyword groups. This likely indicates that the words in these groups occur at a much higher frequency and are therefore more likely to produce false/positives, generally because they are used broadly in everyday language to express concepts unrelated to extremism linked to violence, while the other terms have more context-specific meanings.
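The posts-per-term comparison is simple arithmetic: for each group, count the posts that match any of its terms and divide by the number of terms in the group. The sketch below uses hypothetical toy posts and placeholder term names (the real lists were not published) to show how broad everyday words such as “now” push that ratio up.

```python
def posts_per_term(posts, keyword_groups):
    """Posts matched by each keyword group, divided by the number of terms in it.

    Uses a simple case-insensitive substring test; a production pipeline would
    match on word boundaries instead.
    """
    ratios = {}
    for group, terms in keyword_groups.items():
        hits = sum(1 for post in posts if any(term in post.lower() for term in terms))
        ratios[group] = hits / len(terms)
    return ratios


# Hypothetical toy data; the group and term names are placeholders.
groups = {
    "immediacy": ["breaking", "now"],
    "entity": ["example_group_a", "example_group_b", "example_group_c", "example_group_d"],
}
posts = ["Breaking: storm warning now", "Now playing", "example_group_a statement", "Breaking news"]
print(posts_per_term(posts, groups))  # {'immediacy': 1.5, 'entity': 0.25}
```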

Language Variations

Although our keywords were built largely from English and Arabic lists, the content surfaced included posts in more than 54 different languages linked to approximately 100 countries.Footnote76 This was an unexpectedly high volume of languages in which we had no internal fluency, and it highlights the difficulties tech companies face in moderation when deploying proactive tools to surface potentially violating content or content related to real-world harm. Even terms focused on two specific languages, English and Arabic, yielded a high degree of linguistic variation, including some languages we could not decipher. For tech companies, this implies that without moderators covering a wide range of global languages, the context for why a certain keyword was used, or why a piece of potentially violating content was shared, might not be understood. Most US-based platforms default to leaving user content up if a clear violation is not determined. While this approach protects free expression, the lack of global coverage for many languages, particularly at smaller companies, means that potentially violating content might remain online even when flagged and reviewed.

Single Keyword Signal Results

Taking the dataset, we wanted to illustrate potential false/positive rates if keyword groups were used as single signals, compared with refining them by layering keyword group signals. We therefore used topic modelling to visualize and highlight false positives. In this modelling, posts that are closer together in the visualization should be discussing the same topic, and posts farther apart should be discussing different topics, allowing false/positives and true/positives to be identified. As an example, the keyword “AWD” should relate to “Atomwaffen Division” in a violent extremist context, but more commonly surfaces content relating to “All Wheel Drive”. While we should be able to cluster and set aside car-related content while keeping Atomwaffen-related content, this can be difficult depending on the topic modelling algorithm used.

In our first attempts at topic model clustering, we used Latent Dirichlet Allocation (LDA) because it is fast, simple, and well known. However, LDA performed poorly on our dataset. While it did produce distinct groupings, they were not meaningful mathematically or linguistically, and it could not differentiate core concepts. One reason is that LDA performs better on longer texts, and our posts averaged 248 characters in length (partly due to the Twitter data). We therefore added another algorithm to the process, Gibbs Sampling Dirichlet Multinomial Mixture (GSDMM), which was designed specifically to handle short texts.Footnote77

In both LDA and GSDMM processing you must estimate the number of key topics a query might yield, which is very difficult to do with violent extremist and crisis response keywords when the totality of related topics and potential false/positives is unknown. The best approach is to therefore overestimate the number of topics so that the algorithm naturally collapses empty topics as it optimizes for completeness and homogeneity.Footnote78 GSDMM worked slightly better in that we could at least see a pattern of clusters; however, the pattern was not related to semantics or themes of topics. Rather, the clustering was based more on style and sources of the posts. While this is interesting, it is not useful for our overall goal.
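For readers unfamiliar with GSDMM, the sketch below is a compact collapsed Gibbs sampler following the Dirichlet Multinomial Mixture formulation usually attributed to Yin and Wang (2014): each short document is assigned to exactly one cluster, and `k` is only an upper bound, so some clusters typically empty out during sampling. It is a minimal illustration, not the implementation used in this trial; the values of `k`, `alpha`, `beta`, and the toy documents are assumptions.

```python
import math
import random
from collections import Counter


def gsdmm(docs, k=8, alpha=0.1, beta=0.1, n_iters=20, seed=0):
    """Cluster short tokenized documents with a collapsed Gibbs sampler (GSDMM).

    `k` is an upper bound on the number of clusters; clusters can empty out
    during sampling, so the number of non-empty clusters is often below `k`.
    Returns one cluster label per document.
    """
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    n_docs = len(docs)
    word_counts = [Counter(doc) for doc in docs]
    m_z = [0] * k                         # documents per cluster
    n_z = [0] * k                         # words per cluster
    n_zw = [Counter() for _ in range(k)]  # word counts per cluster

    def move(i, z, sign):
        # Add (sign=+1) or remove (sign=-1) document i from cluster z.
        m_z[z] += sign
        n_z[z] += sign * len(docs[i])
        for w, c in word_counts[i].items():
            n_zw[z][w] += sign * c

    labels = [rng.randrange(k) for _ in range(n_docs)]
    for i, z in enumerate(labels):
        move(i, z, 1)

    for _ in range(n_iters):
        for i in range(n_docs):
            move(i, labels[i], -1)
            log_p = []
            for z in range(k):
                # Cluster popularity term.
                lp = math.log(m_z[z] + alpha) - math.log(n_docs - 1 + k * alpha)
                # Word-fit term: how well the document's words match the cluster.
                for w, c in word_counts[i].items():
                    for j in range(c):
                        lp += math.log(n_zw[z][w] + beta + j)
                for j in range(len(docs[i])):
                    lp -= math.log(n_z[z] + vocab_size * beta + j)
                log_p.append(lp)
            top = max(log_p)
            weights = [math.exp(lp - top) for lp in log_p]
            labels[i] = rng.choices(range(k), weights=weights)[0]
            move(i, labels[i], 1)
    return labels


docs = [
    "basketball guard shooting game".split(),
    "guard game points basketball".split(),
    "film shooting director scene".split(),
    "director film scene camera".split(),
]
labels = gsdmm(docs, k=8, seed=1)
print(labels, "non-empty clusters:", len(set(labels)))
```

With `k` deliberately set above the expected number of topics, `len(set(labels))` gives the number of clusters that survived, which is the collapsing behaviour described above.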

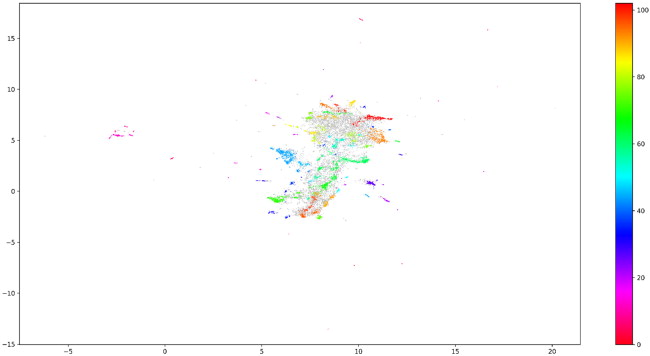

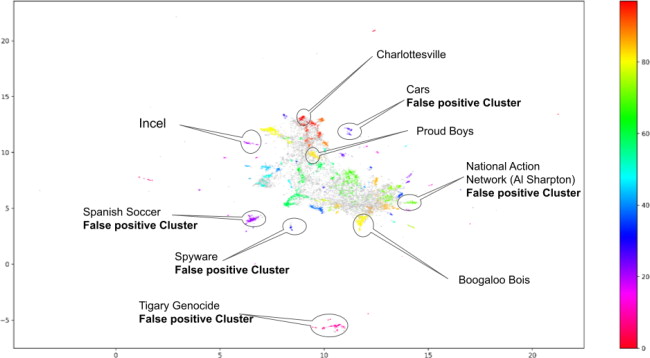

Our third approach was to use a more complex machine learning model, Bidirectional Encoder Representations from Transformers (BERT), developed in 2018.Footnote79 This contextual model represents each word based on the other words in its sentence and allows for fast and scalable implementation. BERT did a good job of modelling the posts and relevant language, though the topical clusters were still somewhat difficult for a human to see. To make themed clusters clearer, we applied dimension reduction using UMAP to move from the initial high-dimensional vectors to 2-dimensional vectors that can be graphed. The 2-D points were then grouped into topics using the HDBSCAN algorithm. The diagram below is an example of a topic cluster diagram mapping topics from posts containing keywords from our white supremacy related keywords list.

Structure in the clustering is visible; however, the initial clusters are labelled with numbers rather than themes, so we used Term Frequency Inverse Document Frequency (TFIDF) to characterize each cluster by terms that are common within it but rarer across the whole corpus. We can then take the ten most relevant terms as a representation of the topic a cluster represents. This surfaces terms outside our initial keyword lists that show what a keyword is actually relating to. As an example, a true/positive cluster surfaced that represented posts related to the Charlottesville “Unite the Right Rally”. To note, “Charlottesville” was not a keyword in our original lists. Meanwhile, two false/positive clusters included mentions of the “National Action Network”, the civil rights organization founded by Reverend Al Sharpton, rather than the designated terrorist organization with a similar name, and posts containing the term “NSO”, many of which related to the Israeli spyware company rather than the “National Socialist Order”.
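Labelling clusters with their top terms can be sketched by treating every cluster as one merged document and scoring terms with TFIDF across clusters. This is a simplified variant chosen for illustration (it is not BERTopic's exact class-based TF-IDF formula, and the paper does not specify its own); the posts and cluster labels are hypothetical, standing in for the output of UMAP and HDBSCAN.

```python
import math
import re
from collections import Counter, defaultdict


def top_terms_per_cluster(posts, labels, top_n=10):
    """Label each cluster with its highest-scoring TFIDF terms.

    All posts in a cluster are merged into one pseudo-document; tf is a term's
    share of that cluster's tokens and idf is log(n_clusters / clusters
    containing the term), so terms present in every cluster score zero.
    """
    cluster_counts = defaultdict(Counter)
    for post, label in zip(posts, labels):
        cluster_counts[label].update(re.findall(r"[a-z0-9]+", post.lower()))
    n_clusters = len(cluster_counts)
    # Number of clusters in which each term appears at least once.
    cluster_df = Counter(term for counts in cluster_counts.values() for term in counts)
    result = {}
    for label, counts in cluster_counts.items():
        total = sum(counts.values())
        scores = {
            term: (count / total) * math.log(n_clusters / cluster_df[term])
            for term, count in counts.items()
        }
        # Highest score first; ties broken alphabetically.
        result[label] = sorted(scores, key=lambda t: (-scores[t], t))[:top_n]
    return result


# Hypothetical posts already assigned to two clusters.
posts = ["AWD car review", "AWD SUV price", "NSO spyware report", "NSO spyware update"]
labels = [0, 0, 1, 1]
print(top_terms_per_cluster(posts, labels, top_n=3))
# {0: ['awd', 'car', 'price'], 1: ['nso', 'spyware', 'report']}
```

A reviewer reading these labels can see at a glance that cluster 0 is about cars and cluster 1 about spyware, i.e. both are false/positives for the “AWD” and “NSO” keywords.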

The diagram becomes much more human friendly when labelled with distinct topics to differentiate between posts that are relevant versus clear false/positives:Footnote80

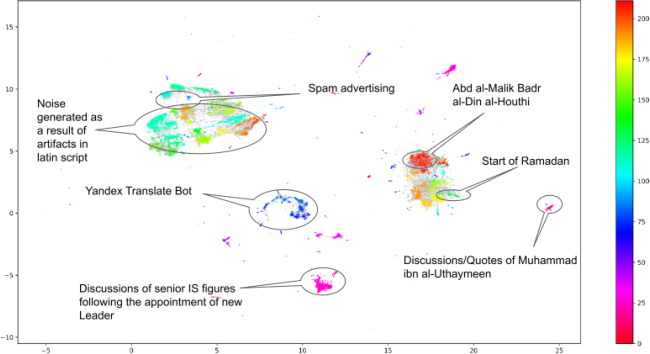

While single thematic keyword sets worked reasonably well on white supremacy related topics, the results were less clear for the Islamist extremist keyword list. There was a much higher proportion of spam posts, with Latin-script words mixed into Arabic text. There were fewer clear clusters of true/positive topics related to violent extremism, meaning there was more noise in the results. This can potentially be attributed to the terms list itself: it suggests that the keywords related to Islamist extremism used in this study are often used in mainstream discussions in Arabic and are not strong indicators of extremism. Many terms and phrases are also linked to religious interpretation, making it hard to link a cluster clearly to Islamist extremist violent groups or incidents. Analysing and interpreting the results was also difficult given the high degree of language skills and subject matter expertise needed internally to fully understand some of the clusters.

Combined Layered Keyword Signal Results

We then combined keyword groups to try to decrease levels of false positives and triangulate the information, to see whether we could more accurately find posts indicating a present terrorist or violent extremist incident. This meant that posts had to contain at least one keyword from either the Islamist extremist or white supremacy lists, combined with at least one mass violence keyword and at least one immediacy keyword. This reduced the total of 82,440 posts to 1,078 posts, primarily from news and blog sites (813 posts), with the remainder from other websites and apps, including the dark web (265 posts). There were no significant combined results relating to contemporaneous posts indicating terrorist or mass violence events on Twitter or Instagram.
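The combination rule, together with the reported reduction, can be expressed as a short filter. The ideology terms here are placeholders because the real lists were not published, word-boundary regex matching is an assumption about how keywords were matched, and the function names are hypothetical.

```python
import re

# Hypothetical keyword groups: the two ideology groups use placeholder terms.
KEYWORD_GROUPS = {
    "islamist_extremist": ["example_ie_term"],
    "white_supremacy": ["example_ws_term"],
    "mass_violence": ["explosion", "shooting"],
    "immediacy": ["breaking", "now", "active"],
}


def groups_hit(post, keyword_groups=KEYWORD_GROUPS):
    """Return the names of keyword groups with at least one match in the post."""
    return {
        group
        for group, terms in keyword_groups.items()
        if any(re.search(r"\b" + re.escape(t) + r"\b", post, re.IGNORECASE) for t in terms)
    }


def passes_layered_rule(hit):
    """Ideology keyword (either list) AND mass violence AND immediacy."""
    has_ideology = bool(hit & {"islamist_extremist", "white_supremacy"})
    return has_ideology and {"mass_violence", "immediacy"} <= hit


print(passes_layered_rule(groups_hit("Breaking: explosion near example_ws_term rally")))  # True
print(passes_layered_rule(groups_hit("Breaking: explosion downtown")))  # False

# Reduction reported in the trial: 82,440 posts down to 1,078 (813 + 265).
print(f"{100 * 1078 / 82440:.2f}% of posts retained")  # 1.31% of posts retained
```

Requiring all three signal types is what cuts the corpus to roughly 1.3% of its original size; the same strictness is also why recall can fall, since a relevant post missing any one signal is dropped.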

Of these, 511 posts were news reports containing a white supremacy related keyword. Approximately 18% of this subset were considered true/positives, meaning they related to a white supremacy related violent extremist incident or threat. Most of these true/positives related to the documentation and media coverage that traditionally follows an incident, such as details of arrests, court proceedings, or law enforcement activity. A small number of violent threats in the set were linked to individuals using white supremacy dog whistle terms. None of these was specific enough to be considered a “credible threat”, but they were of relevance. To note, GIFCT has a “Threat to Life” policy for escalating any content that might be deemed a credible and imminent threat.

The 82% of posts considered false/positives in the combined keyword clustering also yielded some interesting learnings. We learned more about car advertising than expected! As noted, AWD is more often associated with “all-wheel drive” than with Atomwaffen, and even with the combined lists we found articles describing a car as “bombing over coarser-chip freeways” and another as having a “stealth bomber appearance”, which matched AWD with our mass violence and immediacy terms when combined with “breaking news”. False positives also often surfaced sports reporting that described players as “explosive”, with statistics that sometimes aligned with white supremacy dog whistle numerology, such as “1488”.

False/positives also included reporting on police brutality, government counterterrorism strategies, and other terrorist attacks unrelated to our dataset. These unrelated attacks usually surfaced because media sites place headlines of “related articles” within or at the bottom of current articles. For example, a news article about recent shootings in Israel included a “Countries Breaking News” section at the bottom listing further articles, some of which were about Proud Boys court hearings. These examples accounted for nearly half of the false/positives yielded from the combined search term lists.

Only 7% of the posts that surfaced on other websites and apps, including the dark web, were relevant to violent extremist mass violence incidents, and most of these reported on arrests. There was a small number of contemporaneous reports of actual incidents, including incidents of white supremacy and xenophobic violence posted about on platforms such as Telegram, Kohlchan and Bitchute. In all the cases related to white supremacy incidents, none of the content came from a perpetrator or accomplice, but the wider threads around the initial posts showed clear divides between concerned users and sympathizers of the violence.

Two posts were true/positives for terrorist incidents related to Islamist extremism, posted shortly after the attacks. These posts were “battlefield reports” from ISIL media channels on terrorist-operated platforms, documenting ISIL activity in West Africa, Afghanistan, Pakistan, and Northern Iraq.

Accuracy and Recall

From the results we can see that we had many false/positives to learn from, but we also wanted to know whether there was a way of testing for false/negatives. As mentioned, GIFCT runs an Incident Response Framework to communicate international terrorist and violent extremist incidents to its tech company members, as a way of ensuring awareness and escalating to a Content Incident Protocol if necessary. During the data collection period for this research, GIFCT shared awareness of 19 mass violence events targeting civilians outside of active war zones. Of these, 10 were related to Islamist extremist groups of various kinds, six were US-based gun crime incidents, one had unclear motives, and one was related to an “Anti-Vax” protest against Covid-19 vaccination. While this isn’t a perfect list of terrorist or mass violent activity during the period, it is a relatively good proxy for the kind of information we were trying to find with this proactive technical approach.

Of the 10 Islamist extremist incidents we were alerted to, four appeared in the dataset while six did not (making up the false/negative proxy). While the six false/negatives outweigh the four true/positives, we are also looking at an extremely low-prevalence, high-risk scenario compared with the magnitude of everything discussed online, and the results indicate that the dataset was catching incidents that GIFCT would want to flag to member companies for terrorist and violent extremist awareness. In general, accuracy and recall using individual keyword groups in this study are very low. Combining the keyword groups improves accuracy somewhat by reducing false/positives, but it does not improve recall. In its current form, the combined keyword list approach is far from ideal, but we can see where increased capacity, subject matter expertise, language fluency, and layering with other forms of behavioural algorithmic tooling could improve these techniques.
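Treating the incident alerts as a ground-truth proxy, recall is the share of alerted incidents that appear in the dataset, so the Islamist extremist subset gives 4 / (4 + 6) = 0.4. The sketch below computes this with hypothetical incident identifiers and adds the matching precision calculation; it assumes the alert list is complete, which the paper notes is only a proxy.

```python
def recall(found, reference):
    """Share of reference incidents that also appear in the found set."""
    return len(found & reference) / len(reference)


def precision(flagged, relevant):
    """Share of flagged items that are actually relevant."""
    return len(flagged & relevant) / len(flagged)


# Hypothetical identifiers for the 10 Islamist extremist incidents GIFCT alerted on,
# four of which appeared in the collected dataset.
alerted = {f"incident_{i}" for i in range(10)}
found_in_dataset = {f"incident_{i}" for i in range(4)}
print(recall(found_in_dataset, alerted))  # 0.4

# Hypothetical precision example: five flagged posts, one of them relevant.
print(precision({"post_1", "post_2", "post_3", "post_4", "post_5"}, {"post_1"}))  # 0.2
```

Layering raises precision by shrinking the flagged set, but it cannot add incidents that no keyword combination matched, which is why recall stays flat.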

Conclusion and Recommendations

The GIFCT Technical Trial presented in this paper tested a methodology using behavioural and linguistic signals to more accurately and proactively surface terrorist and violent extremist content relating to potential real-world attacks. As governments, tech companies, and networks like GIFCT develop crisis and incident response protocols, the ability to quickly identify perpetrator content associated with attacks is crucial, whether that relates to the live streaming of an attack, or an attacker manifesto launched in parallel to the real-world violence. The analysis of results builds on previous academic literature on the use of algorithmic tools in global counterterrorism and counter-extremism, with specific takeaways:

Singular Behavioural Indicators Are Unhelpful in Surfacing Terrorist or Violent Extremist Activity or Content

Our results build upon previous online counterterrorism research, which has found that singular classifiers or behavioural indicators return overly broad results (high recall but many false/positives) and that layered signals can significantly improve accuracy.Footnote81 Using keyword lists focused on specific terrorist and violent extremist threats largely surfaced false/positive content associated with a wide range of topics that were either unrelated to violent extremism entirely or too tangential to be useful in finding threats. If a large tech company used this approach, the content surfaced by a single behavioural indicator would not help find “the needle in the haystack”. Rather, it would add a potentially high degree of noise into the moderation pipeline, hindering efficacy.

Combining Keywords and Layering Signals Can Improve Accuracy

When we combined keywords related to white supremacist and Islamist extremist groups with signals around mass violence incidents and immediacy, we were able to filter out much of the false/positive content previously surfaced. A caveat is that this can also reduce recall. However, we could see where the algorithmic layering surfaced incidents that were relevant to GIFCT’s Incident Response Framework. Significant noise remained, but this has the potential to be refined in future testing.

There is Great Potential in Combining and Layering Behavioural Signals for Proactive Hybrid Models of Threat Detection

To improve recall and accuracy, several approaches could be tested in the future, and have likely already been tested by larger tech companies. Keyword lists can always be improved by working with partners and homing in on the keywords that lead to higher rates of true/positives. This is an adversarial space, and human expertise is always key. Topic clustering can also be added to filter out false/positives, and GIFCT’s existing corpus of incidents could be used to train a classifier to identify incidents that exist online for only a short time. The layered keywords approach can also be combined with other algorithmic tools mentioned earlier in this paper to further weed out false/positives and decrease false/negatives. In crisis response work, for example, tactical deployment of location detection and language specificities could be helpful. However, the point of this research was not to build a robust and perfected approach to identifying terrorist incidents. GIFCT is a non-profit organization that works with a range of tech companies but does not have access to the back-end data from those platforms or the 24/7 engineering resources that our members have. Rather, our aim was to show how these methodologies can be approached, the complexity involved in proactive technical processes for addressing terrorist and violent extremist harms, and the extent to which human resources and expertise should be considered in designing these systems and interpreting their results.

Language and Subject Matter Expertise Remains Important in Ensuring Quality Oversight in Algorithmic Input as Well as in Interpreting Results

As the results showed, even when querying in two languages, content in over 50 languages surfaced. Language skills are critical both for ensuring high-quality input in each context and for interpreting results. Violent extremist and terrorist groups often use slang and coded language that only native speakers with some subject matter expertise will understand. Human oversight is also needed when setting up algorithmic layering around behavioural signals. Biases can be introduced at every stage, from modelling inputs to algorithm selection, and from tuning to results interpretation. Evaluation relies on knowing what the answer is (having ground-truth data) in a very subjective field with a wide range of definitions.

This research aimed to (1) build wider understanding of the algorithmic tools and approaches currently available to the counterterrorism and counter-extremism sector online, (2) highlight the human rights considerations that should dictate methodologies for proactive tooling, and (3) analyse a possible methodology for proactive crisis and incident response work by tech companies and law enforcement. It has shown where layered signal methodologies can decrease false/positive rates for surfacing terrorist and violent extremist content, but it has also highlighted how difficult accurate proactive work in near real time for crisis and incident response can be online. It also shows where third-party researchers might be limited in testing methods without access to the fuller datasets that platforms have. Our methodology ensured ethical and human rights oversight by limiting where data could be surfaced from and respecting existing privacy and data laws. Lastly, this research advocates for hybrid models of developing online methodologies, in which human oversight works with algorithmic advancements to build, refine, and innovate systems for countering terrorism and violent extremism online. As terrorists and violent extremists evolve their tactics online, so too must practitioners, platforms, and law enforcement. For example, better platform deployment tools for Natural Language Processing, audio detection, and 3-D detection are all areas in which counterterrorism research could be developed further. GIFCT looks forward to continuing its Tech Trials and working with the technology sector and relevant stakeholders to advance efforts to prevent terrorists and violent extremists from exploiting digital platforms.

Acknowledgments

The authors would like to thank our colleagues for their feedback and support throughout the research process. In particular, we would like to thank Madeleine Cannon, Jonathon Deedman, and Eviane Leidig for their valuable insights and contributions to this research.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Notes

1 Erin Saltman, “Identifying and Removing Terrorist Content Online: Cross-Platform Solutions,” Observer Research Foundation (2022). https://www.orfonline.org/expert-speak/identifying-and-removing-terrorist-content-online/.

2 Isabelle van der Vegt, Paul Gill, Stuart MacDonald, and Bennett Kleinberg, “Shedding Light on Terrorist and Extremist Content Removal,” Royal United Services Institute (2019). https://rusi.org/explore-our-research/publications/special-resources/shedding-light-on-terrorist-and-extremist-content-removal.

3 Peter Burnap and Matthew L. Williams, “Cyber Hate Speech on Twitter: An Application of Machine Classification and Statistical Modelling for Policy and Decision Making,” Policy & Internet 7, no. 2 (2015): 223–42, https://doi.org/10.1002/poi3.85

4 While Islamist extremist terrorism has been the primary focus of government designation lists since the 9/11 attacks, Western governments have increasingly had to acknowledge the rise in white supremacy-related lone actor attacks. In recent years, governments of the Five Eyes (FVEY) alliance have designated at least 13 white supremacy-related organizations, and in March 2019 a bill introduced in the US Senate during the 116th Congress (S.894) included the finding that “white supremacists and other far-right-wing extremists are the most significant domestic terrorism threat facing the United States.” See: “116th Congress, 1st Session, S.894,” (2019), Congress.gov. https://www.congress.gov/116/bills/s894/BILLS-116s894is.xml.

5 A review of the international Insights published by GNET shows that most expert attention has focused on white supremacy-related and Islamist extremist terrorism. See: https://gnet-research.org/resources/insights/

6 Jacquelyn Bulao, “How Much Data is Created Every Day in 2022?” (Tech Jury, 2022). https://techjury.net/blog/how-much-data-is-created-every-day/#gref (accessed November 25, 2022).

7 OECD, “Transparency reporting on terrorist and violent extremist content online: An update on the global top 50 content sharing services” (OECD Digital Economy Papers, No. 313, OECD Publishing, Paris, 2021). https://doi.org/10.1787/8af4ab29-en (accessed May 28, 2022).

8 Note that to join GIFCT, a company must, at a minimum, publish an annual transparency report. Member company transparency reports can be found on the GIFCT Member Resource Guide, which can be accessed here: https://gifct.org/resource-guide/

9 Twitter, “Reports Overview - Twitter Transparency Center,” Twitter Transparency Report, 2022. https://transparency.twitter.com/en/reports.html

10 Google, “Google Transparency Report,” Google Transparency Report, 2022. https://transparencyreport.google.com/youtube-policy/featured-policies/violent-extremism?hl=en

11 Meta, “Community Standards Enforcement | Transparency Center,” Transparency Center, 2022. https://transparency.fb.com/data/community-standards-enforcement/dangerous-organizations/facebook/

12 Ibid.

13 Joel Brynielsson, Andreas Horndahl, Fredrik Johansson, Lisa Kaati, Christian Mårtenson and Pontus Svenson, “Analysis of Weak Signals for Detecting Lone Wolf Terrorists” (2012 European Intelligence and Security Informatics Conference, 2012), 197–204, https://doi.org/10.1109/EISIC.2012.20; Katie Cohen, Fredrik Johansson, Lisa Kaati and Jonas Clausen Mork, “Detecting Linguistic Markers for Radical Violence in Social Media,” Terrorism and Political Violence 26, no. 1 (2014): 246–56, https://doi.org/10.1080/09546553.2014.849948; Ryan Scrivens, Garth Davies, and Richard Frank, “Searching for Signs of Extremism on the Web: An Introduction to Sentiment-based Identification of Radical Authors,” Behavioral Sciences of Terrorism and Political Aggression 10 (2017): 1–21, https://doi.org/10.1080/19434472.2016.1276612; van der Vegt et al., “Shedding Light.”

14 Hsinchun Chen, Wingyan Chung, Jialun Qin, Edna Reid, Marc Sageman, and Gabriel Weimann, “Uncovering the Dark Web: A Case Study of Jihad on the Web,” Journal of the American Society for Information Science and Technology 59, no. 6 (2008): 1347–59, https://doi.org/10.1002/asi.20838.

15 Brynielsson et al., “Analysis of Weak Signals.”

16 Edna Reid, Jialun Qin, Wingyan Chung, Jennifer Xu, Yilu Zhou, Rob Schumaker, Marc Sageman, and Hsinchun Chen, “Terrorism Knowledge Discovery Project: A Knowledge Discovery Approach to Addressing the Threats of Terrorism,” in Intelligence and Security Informatics, Lecture Notes in Computer Science 3073 (2004): 125–45. https://doi.org/10.1007/978-3-540-25952-7_10.

17 Emma Llansó, “Human Rights NGOs in Coalition Letter to GIFCT” (Center for Democracy and Technology, 2020). https://cdt.org/insights/human-rights-ngos-in-coalition-letter-to-gifct/

18 GIFCT Content-Sharing Algorithms, Processes, and Positive Interventions Working Group, “Part 1: Content-Sharing Algorithms & Processes” (Global Internet Forum to Counter Terrorism, 2021). https://gifct.org/wp-content/uploads/2021/07/GIFCT-CAPI1-2021.pdf

19 Christchurch Call to Action, “Second Anniversary Summit” (Christchurch Call, 2021). https://www.christchurchcall.com/second-anniversary-summit-en.pdf.

20 GIFCT, “Working Groups | GIFCT” (Global Internet Forum to Counter Terrorism). https://gifct.org/working-groups/ (accessed May 28, 2022).

21 Jory Denny, “What is an Algorithm? How Computers Know What to Do with Data,” The Conversation, 2020. https://theconversation.com/what-is-an-algorithm-how-computers-know-what-to-do-with-data-146665.

22 Wayne Thompson, Hui Li, and Alison Bolen, “Artificial Intelligence, Machine Learning, Deep Learning and More,” SAS, 2022. https://www.sas.com/en_us/insights/articles/big-data/artificial-intelligence-machine-learning-deep-learning-and-beyond.html.

23 Monika Bickert and Brian Fishman, “Hard Questions: How We Counter Terrorism | Meta,” Meta, 2017. https://about.fb.com/news/2017/06/how-we-counter-terrorism/.

24 The hash-matching process converts a picture to grayscale and resizes it so that all images are identically formatted before being hashed. To generate the hash, a mathematical procedure known as the Discrete Cosine Transform is applied to the small grayscale image. The result is a 256-bit hash, whose distance from other image hashes indicates similarity (or closeness), together with a quality metric that describes the level of detail in the image.

25 Hany Farid, “An Overview of Perceptual Hashing,” Journal of Online Trust and Safety 1, no. 1 (2021). https://doi.org/10.54501/jots.v1i1.24

26 Rohit Verma and Aman Kumar Sharma, “Cryptography: Avalanche Effect of AES and RSA,” International Journal of Scientific and Research Publications 10, no. 4 (2020): 10013. https://doi.org/10.29322/IJSRP.10.04.2020.P10013

27 Sariel Har-Peled, Piotr Indyk, and Rajeev Motwani, “Approximate Nearest Neighbor: Towards Removing the Curse of Dimensionality,” Theory of Computing 8, no. 1 (2012): 321–50, https://doi.org/10.4086/toc.2012.v008a014.

28 For more on GIFCT’s technical innovations and how the Hash Sharing Database operates see: https://gifct.org/tech-innovation/

29 For more on the GIFCT’s Content Incident Protocol and wider Incident Response Framework see: https://gifct.org/content-incident-protocol/.

30 GIFCT, “Broadening the GIFCT Hash-Sharing Database Taxonomy: An Assessment and Recommended Next Steps,” Global Internet Forum to Counter Terrorism, 2021. https://gifct.org/wp-content/uploads/2021/07/GIFCT-TaxonomyReport-2021.pdf

31 Erin Saltman, “Countering Terrorism and Violent Extremism at Facebook: Technology, Expertise and Partnerships,” ORF, 2020. https://www.orfonline.org/expert-speak/countering-terrorism-and-violent-extremism-at-facebook/#_edn2

32 Jonathan Lewis, “Facebook’s Disruption of the Boogaloo Network,” GNET (blog), 2020. https://gnet-research.org/2020/08/05/facebooks-disruption-of-the-boogaloo-network/.

33 Jared P. Keller, Kevin C. Desouza, and Yuan Lin, “Dismantling Terrorist Networks: Evaluating Strategic Options Using Agent-Based Modeling,” Technological Forecasting and Social Change 77, no. 7 (2010): 1014–36, https://doi.org/10.1016/j.techfore.2010.02.007.

34 Philip V. Fellman, Jonathan P. Clemens, Roxana Wright, Jonathan Vos Post, and Matthew Dadmun, “Disrupting Terrorist Networks, a Dynamic Fitness Landscape Approach,” ArXiv:0707.4036 [Nlin], 2008, http://arxiv.org/abs/0707.4036.

35 Mohammed Saqr and Ahmad Alamro, “The Role of Social Network Analysis as a Learning Analytics Tool in Online Problem Based Learning,” BMC Medical Education 19, no. 1 (2019): 160. https://doi.org/10.1186/s12909-019-1599-6.

36 Xiaoping Zhou, Xun Liang, Haiyan Zhang, and Yuefeng Ma, “Cross-Platform Identification of Anonymous Identical Users in Multiple Social Media Networks,” IEEE Transactions on Knowledge and Data Engineering 28, no. 2 (2016): 411–24. https://doi.org/10.1109/TKDE.2015.2485222; David Bright, Russell Brewer, and Carlo Morselli, “Reprint of: Using Social Network Analysis to Study Crime: Navigating the Challenges of Criminal Justice Records,” Social Networks 69 (2022): 235–50. https://doi.org/10.1016/j.socnet.2022.01.008; Katrin Höffler, Miriam Meyer, and Veronika Möller, “Risk Assessment—the Key to More Security? Factors, Tools, and Practices in Dealing with Extremist Individuals,” European Journal on Criminal Policy and Research 28 (2022): 269–95. https://doi.org/10.1007/s10610-021-09502-6.

37 Lewis, “Facebook’s Disruption.”

38 Twitter, “New data, new insights: Twitter's latest #Transparency Report,” Twitter Blog, 2017. https://blog.twitter.com/en_us/topics/company/2017/new-data-insights-twitters-latest-transparency-report.

39 Jacob Solawetz, “The Ultimate Guide to Object Detection,” Roboflow (blog), 2021. https://blog.roboflow.com/object-detection/

40 See “Computer Vision: What It Is and Why It Matters,” SAS. https://www.sas.com/en_us/insights/analytics/computer-vision.html.

41 Solawetz, “The Ultimate Guide.”

42 See “What Is Logo Detection and What Is It Used For?,” VISUA (blog). https://visua.com/what-is-logo-detection/

43 Sabina Pokhrel, “6 Obstacles to Robust Object Detection,” Medium, 2020. https://towardsdatascience.com/6-obstacles-to-robust-object-detection-6802140302ef

44 Fredrik Johansson and Lisa Kaati, “Detecting Linguistic Markers of Violent Extremism in Online Environments,” in Combating Violent Extremism and Radicalization in the Digital Era, eds. Majeed Khader, Neo Loo Seng, Gabriel Ong, Eunice Tan, and Jeffrey Chin (IGI Global, 2016).

45 UNOCT and UNICRI, “Countering Terrorism Online with Artificial Intelligence: An Overview for Law Enforcement and Counter-Terrorism Agencies in South Asia and South-East Asia,” 2021, https://www.un.org/counterterrorism/sites/www.un.org.counterterrorism/files/countering-terrorism-online-with-ai-uncct-unicri-report-web.pdf

46 Bickert and Fishman, “Hard Questions.”

47 UNOCT and UNICRI, “Countering Terrorism Online.”

48 See GIFCT, “Broadening the GIFCT Hash-Sharing Database Taxonomy.”

49 Hind S. Alatawi, Areej M. Alhothali and Kawthar M. Moria, “Detecting White Supremacist Hate Speech Using Domain Specific Word Embedding With Deep Learning and BERT,” in IEEE Access 9 (2021): 106363–106374. doi: 10.1109/ACCESS.2021.3100435.

50 Bickert and Fishman, “Hard Questions.”

51 Paul Cruickshank, “A View from the CT Foxhole: An Interview with Brian Fishman, Counterterrorism Policy Manager, Facebook,” CTC Sentinel 10, no. 8 (Combating Terrorism Center, 2017). https://ctc.usma.edu/wp-content/uploads/2017/09/CTC-Sentinel_Vol10Iss8-13.pdf.

52 J. M. Berger and Heather Perez, “The Islamic State’s Diminishing Returns on Twitter: How Suspensions Are Limiting the Social Networks of English-Speaking ISIS Supporters” (GW Program on Extremism, George Washington University, 2016). https://extremism.gwu.edu/sites/g/files/zaxdzs2191/f/downloads/JMB%20Diminishing%20Returns.pdf

53 Llansó, “Human Rights NGOs.”

54 United Nations, “Universal Declaration of Human Rights | United Nations,” 1948. https://www.un.org/en/about-us/universal-declaration-of-human-rights.

55 Kenneth Roth, “The Internet Is Not the Enemy: As Rights Move Online, Human Rights Standards Move with Them,” Human Rights Watch, 2017. https://www.hrw.org/world-report/2017/country-chapters/global-5.

56 UN Human Rights Committee, “Human Rights Committee, General Comment 31, Nature of the General Legal Obligation on States Parties to the Covenant, U.N. Doc. CCPR/C/21/Rev.1/Add.13 (2004),” University of Minnesota Human Rights Library, 2004. http://hrlibrary.umn.edu/gencomm/hrcom31.html.

57 United Nations Human Rights, Office of the High Commissioner, “Guiding Principles on Business and Human Rights,” OHCHR, 2011. https://www.ohchr.org/sites/default/files/Documents/Publications/GuidingPrinciplesBusinessHR_EN.pdf.

58 Council of Europe Commissioner for Human Rights, “Unboxing Artificial Intelligence: 10 steps to protect Human Rights,” Council of Europe, 2019. https://rm.coe.int/unboxing-artificial-intelligence-10-steps-to-protect-human-rights-reco/1680946e64.

59 Tech Against Terrorism, “GIFCT Technical Approaches Working Group: Gap Analysis and Recommendations for Deploying Technical Solutions to Tackle the Terrorist Use of the Internet,” Global Internet Forum to Counter Terrorism, 2021. https://gifct.org/wp-content/uploads/2021/07/GIFCT-TAWG-2021.pdf.

60 Cohen et al., “Detecting Linguistic”; Burnap and Williams, “Cyber Hate Speech.”

61 GIFCT Transparency Working Group, “GIFCT Transparency Working Group: One-Year Review of Discussions,” Global Internet Forum to Counter Terrorism, 2021. https://gifct.org/wp-content/uploads/2021/07/GIFCT-WorkingGroup21-OneYearReview.pdf

62 Global Partners Digital and Open Technology Institute, “Human Rights for Small and Medium Sized Technology Companies: Transparency Reporting,” 2020. https://www.gp-digital.org/wp-content/uploads/2020/08/BHRMN-Transparency-Reporting-Tool.pdf.

63 Santa Clara Principles on Transparency and Accountability in Content Moderation, 2018. https://santaclaraprinciples.org/; Alatawi et al., “Detecting White Supremacist.”

64 Spandana Singh and Kevin Bankston, “The Transparency Reporting Toolkit: Content Takedown Reporting,” New America, 2018. https://www.newamerica.org/oti/reports/transparency-reporting-toolkit-content-takedown-reporting/.

65 Venu Govindaraju, C.R. Rao, and Vijay V. Raghavan, “Cognitive Computing: Theory and Applications,” ed. Vijay V. Raghavan, Venu Govindaraju, Venkat N. Gudivada, and C.R. Rao, vol. 35 (Elsevier Science, 2016); Scrivens et al., “Searching for Signs”; Erin Saltman, Farshad Kooti, and Karly Vockery, “New Models for Deploying Counterspeech: Measuring Behavioral Change and Sentiment Analysis,” Studies in Conflict & Terrorism (2021). https://doi.org/10.1080/1057610X.2021.1888404.

66 Xibin Dong, Zhiwen Yu, Wenming Cao, Yifan Shi, and Qianli Ma, “A survey on ensemble learning,” Frontiers of Computer Science 14 (2020): 241–58. https://doi.org/10.1007/s11704-019-8208-z

67 Dorottya Demszky, Nikhil Garg, Rob Voigt, James Zou, Jesse Shapiro, Matthew Gentzkow, and Dan Jurafsky, “Analyzing Polarization in Social Media: Method and Application to Tweets on 21 Mass Shootings,” in Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers) (Minneapolis, Minnesota: Association for Computational Linguistics, 2019), 2970–3005. https://aclanthology.org/N19-1304/.

68 See GIFCT, “Content Incident Protocol | GIFCT,” Global Internet Forum to Counter Terrorism, 2021. https://gifct.org/content-incident-protocol/
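Note 24 describes the hash-matching pipeline only in prose. The Python sketch below is a simplified, hypothetical illustration of that general approach: grayscale conversion, resizing every image to one fixed shape, a Discrete Cosine Transform, and median thresholding of the 16 × 16 lowest-frequency coefficients into a 256-bit hash. It is not PDQ, the GIFCT Hash Sharing Database implementation, or any platform's production code. The Rec. 601 grayscale weights, the 32 × 32 box-filter resize, the median threshold, Hamming distance as the closeness measure, and all function names are assumptions for illustration, and the quality metric mentioned in the note is omitted.

```python
import math
import random
from typing import List, Sequence, Tuple

Gray = List[List[float]]  # grayscale intensities, one inner list per row


def to_grayscale(rgb: Sequence[Sequence[Tuple[int, int, int]]]) -> Gray:
    """Convert RGB pixels to grayscale with Rec. 601 luma weights (an assumption)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def box_resize(img: Gray, size: int) -> Gray:
    """Downsample to size x size by averaging pixel blocks, so all inputs share one shape."""
    h, w = len(img), len(img[0])
    out: Gray = []
    for r in range(size):
        y0 = r * h // size
        y1 = max((r + 1) * h // size, y0 + 1)  # always cover at least one source row
        row = []
        for c in range(size):
            x0 = c * w // size
            x1 = max((c + 1) * w // size, x0 + 1)  # always cover at least one source column
            block = [img[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def low_freq_dct(img: Gray, k: int) -> List[float]:
    """Return the k x k lowest-frequency (unnormalised) 2-D DCT-II coefficients, row-major."""
    n = len(img)
    basis = [[math.cos(math.pi * (2 * x + 1) * u / (2 * n)) for x in range(n)]
             for u in range(k)]
    # Separable transform: first down each column (over y), then along each row (over x).
    cols = [[sum(basis[u][y] * img[y][x] for y in range(n)) for x in range(n)]
            for u in range(k)]
    return [sum(basis[v][x] * cols[u][x] for x in range(n))
            for u in range(k) for v in range(k)]


def perceptual_hash(img: Gray, size: int = 32, k: int = 16) -> int:
    """Hash to k*k bits (256 by default): bit is 1 where a coefficient exceeds the median."""
    coeffs = low_freq_dct(box_resize(img, size), k)
    median = sorted(coeffs)[len(coeffs) // 2]
    bits = 0
    for c in coeffs:
        bits = (bits << 1) | (1 if c > median else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count differing bits; a smaller distance suggests more visually similar images."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    rng = random.Random(0)
    original = [[rng.uniform(0, 255) for _ in range(64)] for _ in range(64)]
    brighter = [[p + 10 for p in row] for row in original]  # uniform brightness offset
    other = [[rng.uniform(0, 255) for _ in range(64)] for _ in range(64)]
    h0, h1, h2 = (perceptual_hash(im) for im in (original, brighter, other))
    print("brightened copy:", hamming_distance(h0, h1))
    print("unrelated image:", hamming_distance(h0, h2))
```

The design choice worth noting is that a uniform brightness change only shifts the zero-frequency coefficient, so the brightened copy should land at or near distance 0, while an unrelated image should land near half of the 256 bits. This contrast is what lets a perceptual hash tolerate minor edits, unlike a cryptographic hash, in which any change alters the output completely.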