ABSTRACT

The rapid rise of digital media use for political participation has coincided with an increase in concerns about citizens’ sense of their capacity to impact political processes. These dual trends raise the important question of how people’s online political participation is connected to perceptions of their own capacity to participate in and influence politics. The current study addresses the limitation of scarce high-quality cross-national and over-time data on these topics by conducting a meta-analysis of all extant studies that analyze how political efficacy relates to both online and offline political participation using data sources in which all variables were measured simultaneously. We identified and coded 48 relevant studies (with 184 effects) representing 51,860 respondents from 28 countries based on surveys conducted between 2000 and 2016. We conducted a multilevel random effects meta-analysis to test the main hypothesis that political efficacy has a weaker relationship with online political participation than with offline political participation. The findings show positive relationships between efficacy and both forms of participation, with no significant difference in the magnitude of the two associations. In addition, we tested hypotheses about the expected variation across time and democratic contexts, and the results suggest contextual variation for offline participation but cross-national stability for online participation. The findings provide the most comprehensive evidence to date that online participation is as strongly associated with political efficacy as offline participation, and that the strength of this association for online political participation is stable over time and across diverse country contexts.

The use of digital media for political participation has increased steadily during the past twenty years (Boulianne, 2020). This same time period has also been characterized by concerns about changing relationships between citizens and the state, including the phenomena of democratic backsliding and decreased democratic legitimacy (Kriesi, 2013, 2020; Lührmann & Lindberg, 2019; Waldner & Lust, 2018). These contemporaneous trends raise the question of whether people perceive that their online political actions are effective ways to engage in political processes.

Astute observers of recent trends in global political participation such as Erica Chenoweth (2020) have clearly articulated both the promise and the potential pitfalls of online and digital political participation. Discussing the challenge of contemporary movements’ tactical reliance on online political participation, Chenoweth (2020, p. 78) notes the advantages of swift recruitment and the capacity to communicate grievances to broad audiences, as well as the disadvantage of a diminished capacity to recruit people into effective organizations that are able to strategize to achieve collective goals. Related scholarship on “slacktivism” or “clicktivism,” which Freelon et al. (2020, p. 1197) described as prominent in the early online participation literature, expressed concern that the low-cost and symbolic nature of activities such as posting on social media may serve to “project an impression of efficacy without actually being effective.”

Early research on online forms of participation noted by Freelon et al. (2020, p. 1197) focused on two main concerns: first, that this type of activity may not be politically consequential; and second, that it may also act as a substitute for more effective and impactful types of participation. While empirical evidence has repeatedly shown that digital activities do not replace offline activities and instead complement them (e.g., Boulianne & Theocharis, 2020; Gil de Zúñiga et al., 2017; Lane et al., 2017), little is known regarding whether political participants themselves consider their online political activities to be (in)consequential or (in)effective in comparison to their offline engagement. This question is increasingly important as the range and prevalence of online political activities have expanded over time, including activities such as signing petitions online, contacting officials through social media, and expressing opinions online (Boulianne, 2020; Theocharis, 2015; Theocharis & de Moor, 2021; Theocharis & van Deth, 2018). Further, research shows high levels of online political engagement among young people (Boulianne & Theocharis, 2020), suggesting a generational shift in political communication patterns. These trends highlight the importance of assessing whether political participants themselves believe that their online political actions are meaningful.

The current study investigates this topic by assessing whether the relationship between individuals’ perceptions of their political efficacy (PE) and their political participation is weaker for online political participation (OnPP) than offline political participation (OffPP). We test the main hypothesis (H1) regarding efficacy by conducting a multilevel random effects meta-analysis using the most comprehensive evidence available. In addition, we investigate two hypotheses about the expected variation in the relationships between PE, OnPP and OffPP: over time (H2) and across varying national contexts (H3). The concluding discussion reviews the implications of the findings as digital activism continues to expand, and outlines additional avenues for investigating the causal relations between political efficacy and different types of political participation in the digital era.

Political Participation and Political Efficacy

Do people perceive that their political actions make a difference? This question is particularly important in an era marked by concerns about both democratic legitimacy (Dahlberg et al., 2015; Kriesi, 2014, 2020) and the potentially negative effects of online activity on democratic society (Anduiza et al., 2019; Vraga, 2019). Studies investigating the OnPP-OffPP distinction in terms of sociodemographic correlates using high-quality representative survey data (e.g., Gibson & Cantijoch, 2013; Oser et al., 2013; Schradie, 2018; Vaccari, 2017) have found that online participation has a stronger association with socio-economic status than offline participation does. Consensus has not yet emerged in the literature, however, regarding the question of how people’s self-perceived efficacy relates to their involvement in online versus offline political participation.

In efforts to understand the connection between political participation and democratic governance, political efficacy has been viewed as a key attitudinal measure, dating back to Campbell et al.’s (1954) study of how voters make decisions. The classic definition of political efficacy articulated by Campbell et al. (1954, p. 187), “the feeling that individual political action does have, or can have, an impact upon the political process,” is still widely cited in contemporary scholarship. By the early 1990s, studies drawing on the American National Election Studies (ANES) had clarified the conceptual distinction between internal and external efficacy. Niemi et al.’s (1991, pp. 84–85) description of these concepts has informed subsequent research on internal efficacy, defined as “beliefs about one’s own competence to understand, and to participate effectively in, politics,” and external efficacy, defined as “beliefs about the responsiveness of governmental authorities and institutions to citizen demand.” Decades of research on offline participation have shown that both types of political efficacy tend to have a positive association with offline political participation, with internal efficacy having a stronger association with OffPP than external efficacy (e.g., Chamberlain, 2012; Morrell, 2003).

In contrast to the relatively limited available survey data on the PE-OnPP connection, extensive high-quality cross-sectional and longitudinal data on the PE-OffPP connection have been analyzed in numerous prominent studies that use data from the American National Election Studies (e.g., Niemi et al., 1991; Robison et al., 2018), the International Social Survey Programme (e.g., Vráblíková, 2014), and the Comparative Study of Electoral Systems (e.g., Karp & Banducci, 2008). The challenge of relatively limited large-scale comparative survey data about online phenomena was articulated in Theocharis’s 2015 observation (p. 2) that, at the time, prominent surveys such as the European Social Survey, the European Values Study, the European Election Study, and the World Values Survey did not include any questions on these topics. This important task was therefore left to country-specific academic projects. While select questions about online political participation have been added to the survey instruments of some of the large-scale comparative surveys in recent years, the lack of longitudinal data from these sources precludes analysis of longer-term trends.

Thus, due to the limited availability of high-quality survey data on online political participation, most of the research on the PE-OnPP relationship is based on country-specific cross-sectional studies. A series of country-specific studies have produced robust empirical evidence on other related topics, such as showing that online political participation is effective at mobilizing people to be politically active offline as well (Bode, 2017; Boulianne & Theocharis, 2020; Cantijoch et al., 2016; Kahne & Bowyer, 2018; Karpf, 2010; Kwak et al., 2018). Yet, the question remains open as to whether the PE-OnPP association is positive and of similar strength to the PE-OffPP association, as country-specific studies that analyze data from varied contexts and time periods have thus far yielded conflicting results.

For example, consistent with research that conceptualizes political efficacy as more of an inherent “trait” than a context-dependent “state” (Schneider et al., 2014), some digital media research indicates similarly large positive associations between political efficacy and both types of participation (online and offline). This pattern of a strong positive PE-OnPP and PE-OffPP connection is evident in studies such as Jung et al.’s (2011) analysis of data from a 2008 survey weighted to be representative of the national US population, and Park’s (2015) representative survey in South Korea conducted in 2012. Consistent with the theoretical rationale offered by social-cognitive theory (e.g., Bandura, 2001), these findings indicate that new media affordances may serve to provide those who are politically interested and efficacious with opportunities to engage in politics, regardless of the online or offline political context. In contrast, some studies report negative associations for PE-OnPP along with a positive connection for PE-OffPP, indicating a weaker relationship between efficacy and political participation in online contexts. This pattern of a weaker PE-OnPP than PE-OffPP connection is reported in studies such as Stromer-Galley’s (2002) analysis of a 2000 survey of US citizens with and without Internet access, and Zhu et al.’s (2017) survey of students in Hong Kong. In addition to emphasizing the need to synthesize conflicting extant findings to assess the generalizable relationship between PE and OnPP/OffPP, recent scholarship on contextualizing evidence in political communication research (Esser, 2019; Rojas & Valenzuela, 2019) clarifies the importance of investigating whether these relationships change in systematic ways over time and across country contexts.

To synthesize these heterogeneous findings on the relationship between political efficacy and different types of political participation, we focus on OnPP and OffPP in the context of both classic and more recent literature on the expression of the will of the people as a crucial component of democratic governance (Bennett, 2012; Bennett & Segerberg, 2012; Dahl, 1961; Mill, [1861] 1962). Most research on the connection between political behavior and representation has focused on electoral-oriented participation (Dassonneville et al., 2021; Powell, 2004). Yet some researchers have proposed that the underlying mechanism that links voting and electoral-oriented participation to responsiveness is that individuals who vote are also more likely to participate in additional ways that influence public opinion and decision-makers (Bartels, 2016; Giugni & Grasso, 2019a; Griffin & Newman, 2005). Although the logic of this communication mechanism in the literature is also relevant for online political participation (Bennett, 2012; Bennett & Segerberg, 2012), empirical studies that have investigated how participation beyond voting may enhance representation have focused primarily on offline political participation behaviors such as civic activism and protest activity (Htun & Weldon, 2012; Leighley & Oser, 2018; Rasmussen & Reher, 2019).

A related line of research that anticipates empirical support for a weaker PE-OnPP association compared to the PE-OffPP association has focused on the degree to which online political activities described as “clicktivism” or “slacktivism” may constitute token displays of political support (Chou et al., 2020; Kristofferson et al., 2014). Both normative and empirical studies have discussed concerns that this type of activity requires little effort and has minimal impact (Christensen, 2011; Freelon et al., 2020; Halupka, 2014; Karpf, 2012), and may even be primarily focused on entertainment (Theocharis & Lowe, 2016; Theocharis & Quintelier, 2016). An even more concerning possibility discussed in the scholarly literature and public discourse is that online participation may serve as a channel to express frustration with no expectation of political change, and thus can potentially demobilize citizens who would otherwise have been politically engaged (Anduiza et al., 2012; Gladwell, 2010).

Beyond discussions of clicktivism and slacktivism, the literature includes two main arguments for why political efficacy may have a weaker relationship with OnPP than OffPP. First, OffPP is expected to require more intentional effort and investment than OnPP (Boulianne, 2020; Karpf, 2010; Schlozman et al., 2010). The potentially smaller effort and investment required to participate online versus offline may impact participants’ sense of their own political efficacy, prompting a perception that OnPP is a less meaningful form of participation than OffPP. Second, participants may perceive a clearer connection between offline political participation and political outcomes, as political institutions and processes are often considered to be better designed to respond to offline and more traditional modes of participation (Christensen, 2011; Matthews, 2021). These arguments relate to the expected role of online versus offline participation in mobilizing citizens into political action and facilitating their sense of efficacy through a meaningful connection between political participation and the achievement of political representation.

While innovative research designs aimed at studying the contemporary mechanisms linking online participation and representational outcomes are just beginning to emerge (Blumenau, 2021; Ennser-Jedenastik et al., 2022; Matthews, 2021), conducting research on longer-term structural trends in the relationship between online participation and individual attitudes is more challenging due to the lack of high-quality long-term comparative data on online participation (Theocharis, 2015; Vaccari & Valeriani, 2018). In light of these data constraints, an important avenue for investigating the relationship between online political participation and representation is to synthesize extant studies on this topic, which are mostly cross-sectional, single-country studies, in order to assess the relation between citizens’ sense of their own political efficacy and the types of participation in which they are active.

If participants themselves view online participation as ineffective, or even akin to a frustration valve, then empirical evidence would show a low or perhaps even negative association between this type of political behavior and individuals’ sense of their capacity to engage in and affect political processes and outcomes. Thus, the current study investigates the relationship between political efficacy and two main categories of political behavior: online and offline political participation. The classic definition of the distinction between these two types of behaviors as described in the literature is that online participation is activity that takes place through digital means, whereas offline participation consists of more traditional in-person activities that involve physical presence (Gibson & Cantijoch, 2013). We follow the common approach of investigating differences between offline and online participation (Matthes et al., 2019; Strömbäck et al., 2018), while acknowledging the theoretical and empirical challenge discussed in recent literature of establishing definitive long-term categories of different types of political participation (Ohme et al., 2018; Ruess et al., forthcoming; Theocharis, 2015; Theocharis & van Deth, 2018). The research design therefore allows an empirical investigation of whether there are differences in the strength of the association between political efficacy and these two types of participation over time.

Research Question and HypothesesFootnote1

Building on this literature, the current study investigates the main research question of how political efficacy relates to OnPP compared to OffPP. The main hypothesis of this study is that individuals’ perceptions of their political efficacy are more weakly related to OnPP than to OffPP. Specifically, the first hypothesis is as follows: Political efficacy is less strongly connected to online than to offline participation (H1: Efficacy).

In addition to this main hypothesis, the literature suggests the importance of testing for two moderating effects on the relationship between political efficacy and OnPP versus OffPP. An important line of research indicates that these two types of behaviors have become less distinct over time, as online activity and digital media have become an ongoing feature of daily life (Farrell, 2012; Ohme et al., 2018). The increased integration of digital activities as essential aspects of everyday life would mean that any difference in the relationship between political efficacy and OnPP versus OffPP identified in the early years of the Internet may have decreased over time. The second hypothesis is based on this over-time expectation: The relationship between PE and OnPP and the relationship between PE and OffPP have converged in recent years (H2: Over-time convergence).

Finally, drawing on the global reach of the focal studies, we test the expectation that political efficacy and OnPP are more strongly related in established democratic contexts characterized by institutions and representatives that favor responsiveness to the will of the public (Giugni & Grasso, 2019a; Wagner et al., 2017). The rationale behind this hypothesis emerges from literature on democratic governance and responsiveness. Research on the effect of political participation on representational outcomes discusses a communication mechanism that may enhance the representation of those who participate beyond the electoral arena, particularly in strong democratic contexts (Bartels, 2016; Giugni & Grasso, 2019b; Griffin & Newman, 2005). Therefore, the third hypothesis states: The relationship between PE and OnPP is stronger in stronger electoral democracies (H3: Democratic context).

Data and MethodsFootnote2

Study Search and Selection Procedures

For research questions and hypotheses about which there is a diverse set of findings in the political communication literature, meta-analysis is an increasingly common approach for synthesizing research results and establishing definitive findings (e.g., Boulianne, 2020; Matthes et al., 2018; Munzert & Ramirez-Ruiz, 2021; Walter et al., 2020; Zoizner, 2021). To identify relevant studies, we conducted a series of systematic search and selection procedures between March 2019 and December 2020 that we documented according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (Liberati et al., 2009; see Supplementary Material for PRISMA figure).

The main selection criterion used to identify relevant studies was that all three relevant variables (PE, OnPP and OffPP) were collected in the same survey sample and analyzed to assess the association between political efficacy and the separate dependent variables of the two key forms of political participation (OnPP and OffPP). We first searched the academic database Scopus to identify studies that met the key search criteria.Footnote3 We followed the standard search practice of reviewing the works cited in systematic reviews of related topics (Lutz et al., 2014; Robison et al., 2018; Ruess et al., forthcoming), scanning the reference lists of the studies that met the selection criteria to identify additional relevant studies, and reviewing additional research conducted by authors of the studies that met the inclusion criteria. Further, we presented preliminary findings of the study in a series of conference and seminar talks between March and September of 2020, during which we asked content experts to identify any additional studies that met the search criteria.Footnote4 Finally, we emailed the authors of the studies that met the inclusion criteria to inquire whether they had authored or were aware of any additional unpublished studies that met the inclusion criteria.

These search procedures identified 1,356 studies for potential inclusion. A first set of selection steps excluded duplicate studies, studies that lacked relevant quantitative analysis of survey data, and studies in a language other than English. The remaining selection steps focused on retaining studies that properly measured the key constructs: they excluded indices of political participation that lacked a clear distinction between offline and online participation; limited the sample to studies that used self-reported measures of actual political behavior (rather than likelihood of participating); and omitted effects from models that included an interaction effect but did not also report on the constituent variables’ main effects. For unpublished studies, we conducted a search to determine whether a more recent version of the study had been published, and we included unpublished studies if we did not identify a published version and there were no clear flaws in the study.

Data Coding and Analysis

This search and selection process yielded a final set of 48 studies. We coded these studies according to a coding protocol designed to test our hypotheses and then conducted intercoder checks of the coded data (see Supplementary Material for the coding protocol). The full dataset of 184 coded effects represents a total sample of n = 51,860 respondents from 28 countries. As expected, due to the lack of high-quality cross-national surveys on these topics by large-scale survey programs, most of the studies that met our selection criteria analyze country-specific cross-sectional datasets. Since a select number of these studies analyze the same or similar data samples, we assigned a unique dataset number to each of the 41 distinct datasets.

The main coding distinction relevant for the analysis of political participation is between online and offline political participation. For political efficacy, we included studies that analyzed all forms of political efficacy measures, and we coded the type of political efficacy (internal, external, general, and other) used in each study, following established research on political efficacy types (Kenski & Stroud, 2006; Morrell, 2003; Niemi et al., 1991). We follow the classic definitional distinctions in the political efficacy literature (e.g., Morrell, 2003; Niemi et al., 1991) for coding internal efficacy as measures that focus on individuals’ beliefs about their own capacity to understand and engage in politics; external efficacy as beliefs about the political system’s responsiveness to citizens; and general efficacy as all measures that reference the broad concept of political efficacy with no internal/external distinction. In addition, a number of specific political efficacy measures have emerged in the more recent literature that are not fully captured by these categories (Pingree et al., 2014). These measures are coded in our data as other efficacy, including measures of “collective efficacy,” “self-efficacy,” and “internet efficacy” (see Supplementary Material for documentation of the survey questions used for these “other efficacy” measures).

As noted, the theoretical focus of our contribution is to assess whether political efficacy is more weakly associated with OnPP than OffPP. Thus, the target quantity that we aim to analyze is the magnitude of the PE-OnPP association in comparison to the PE-OffPP association. Informed by recent research on causal interpretation and regression models (e.g., Keele et al., 2020), we emphasize that the analytical goal of the current study is not causal inference, but rather to produce a rigorous estimate of the magnitude of the association between political efficacy and these two forms of participation. A long-standing analytical approach in meta-analytic research is to code and analyze the beta coefficients of control variables in multivariate models, motivated in part by this approach’s facilitation of meta-analyses that contribute to cumulative research (see Hünermund & Louw, 2020, p. 6, for a review of this argument). Yet, recent research highlights the problem of biased estimates, and emphasizes the importance of clearly identifying and analyzing the study’s intended estimand, i.e., the target quantity that the study aims to assess and analyze (Hünermund & Louw, 2020; Lundberg et al., 2021).

With an analytical focus on both contributing to cumulative research and analyzing our intended estimand as clearly as possible, we use two meta-analytic datasets to test each of our hypotheses. First, following the standard practice in contemporary high-impact meta-analyses of transforming different types of effect estimates from multivariate analyses into a common metric (see, for example, Amsalem & Zoizner, 2022; Dinesen et al., 2020; Matthes et al., 2019; Walter et al., 2020), we converted the k = 184 coded effects from multivariate models into k = 183 common effect estimates.Footnote5 These common effect estimates are converted from three main types of coded effects from multivariate models: standardized regression coefficients, unstandardized regression coefficients, and odds ratios (see Supplementary Material for a summary of the number of samples and effects derived from distinct types of regression models). We used Peterson and Brown’s (2005) approach to convert the standardized coefficients to Pearson’s correlation coefficients (r), and then to Fisher’s z scale with a corresponding standard error (Borenstein et al., 2009; Gurevitch et al., 2018; Lipsey & Wilson, 2001). For unstandardized regression coefficients and odds ratios, we transformed the effects into standardized coefficients and then applied the same conversion procedure.Footnote6 The full coded dataset of 183 common effect estimates is more than sufficient to conduct a valid meta-analysis and related moderator tests (Jackson & Turner, 2017; Matthes et al., 2019; Rains et al., 2018).Footnote7
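The two-step conversion for standardized coefficients can be sketched as follows. This is a minimal illustration, not the authors' code: it applies Peterson and Brown's (2005) imputation r ≈ β + 0.05λ (λ = 1 when β ≥ 0, λ = 0 otherwise), which they proposed for |β| ≤ 0.50, and then Fisher's z = atanh(r) with standard error 1/√(n − 3). The function names and the example coefficient and sample size are hypothetical, and the preliminary step for unstandardized coefficients and odds ratios is not shown.

```python
import math


def beta_to_r(beta: float) -> float:
    """Peterson & Brown (2005) imputation: r ~= beta + 0.05 * lambda,
    with lambda = 1 for beta >= 0 and 0 for beta < 0.
    Hypothetical helper; the approximation is intended for |beta| <= 0.5."""
    if abs(beta) > 0.5:
        raise ValueError("approximation intended for |beta| <= 0.5")
    lam = 1.0 if beta >= 0 else 0.0
    return beta + 0.05 * lam


def r_to_fisher_z(r: float, n: int) -> tuple[float, float]:
    """Fisher's z transformation of r and its standard error 1 / sqrt(n - 3)."""
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must exceed 3")
    z = math.atanh(r)  # identical to 0.5 * ln((1 + r) / (1 - r))
    se = 1.0 / math.sqrt(n - 3)
    return z, se


# Hypothetical example: a standardized coefficient of 0.10 from n = 1,003
r = beta_to_r(0.10)             # about 0.15
z, se = r_to_fisher_z(r, 1003)  # se = 1 / sqrt(1000)
```

Working on the Fisher's z scale gives every effect an approximately normal sampling distribution whose variance depends only on sample size, which is what lets effects from differently specified models be pooled together.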

Second, to complement our hypothesis-testing results using this standard approach of analyzing common effect estimates coded from multivariate analyses, we also analyze a more limited subset of zero-order correlations for PE-OnPP and PE-OffPP (k = 45) that were also reported in the studies that met our selection criteria (often in correlation matrices in appendices). An advantage of analyses based on this smaller subset of zero-order correlation estimates is a more direct measurement of the PE-OnPP and PE-OffPP relationships, since these estimates require no conversion from multivariate coefficients and do not depend on each study’s choice of control variables. A disadvantage is that the much smaller sample size increases the likelihood that the moderator analyses used to test H2 (Over-time convergence) and H3 (Democratic context) yield non-significant findings due to insufficient sample size rather than the absence of an actual moderator effect. We therefore report hypothesis-testing results based on separate analyses of these two samples (i.e., k = 183 for the common effect estimate dataset, and k = 45 for the zero-order correlation dataset), and synthesize findings from both sets of analyses to interpret the implications of the findings.

To analyze the data, we conduct a multilevel random effects meta-analysis of the coded effect sizes. Conventional random effects meta-analyses are based on a two-level model of data samples (level 1) from which researchers obtain coded effects (level 2), and effect sizes at level 2 are assumed to be independent (Assink & Wibbelink, 2016; Borenstein et al., 2009). Multilevel random effects meta-analyses add a third level in order to account for known dependencies among multiple effects, such as effects coded from the same study or dataset (see, for example, Dinesen et al., 2020; Matthes et al., 2019). The multilevel structure of our coded dataset is produced by the main criterion for inclusion, namely that the study used data from the same dataset to analyze at least two relationships: the association between PE and OnPP, and the association between PE and OffPP. A multilevel meta-analysis approach is therefore necessary to properly account for the nested structure of the data. Specifically, we use the “meta” package in R (Schwarzer, 2022; Schwarzer et al., 2015) to first fit a random effects model using the metagen() function, specifying the Hartung-Knapp-Sidik-Jonkman (HKSJ) method for estimating the confidence interval of the pooled effect. We then fit a multilevel random effects model using the rma.mv() function from the “metafor” package (Viechtbauer, 2010, 2021), specifying that the analysis is clustered by dataset. In addition to our use of multilevel random effects meta-analysis to analyze whether the PE-OnPP relationship is weaker than the PE-OffPP relationship (H1), we test the two additional hypotheses related to over-time convergence (H2) and democratic context (H3) via moderator analyses in separate multilevel models for online and offline political participation. We conclude the analysis by conducting a series of robustness tests and sensitivity analyses that confirm the reported findings.
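To make the pooling step concrete, the sketch below computes a conventional two-level random effects estimate with an HKSJ standard error. It is a simplified illustration under stated assumptions, not a reimplementation of the authors' R models: the between-study variance is estimated here with the DerSimonian-Laird estimator (an assumption, since the τ² estimator is not stated above), and the third level that metafor's rma.mv() adds for clustering by dataset is omitted. Function names and input values are hypothetical.

```python
import math
from typing import Sequence, Tuple


def dl_tau2(y: Sequence[float], v: Sequence[float]) -> float:
    """DerSimonian-Laird estimate of the between-study variance tau^2
    (assumed estimator for this sketch)."""
    w = [1.0 / vi for vi in v]  # inverse-variance (fixed-effect) weights
    sw = sum(w)
    mu_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - mu_fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    return max(0.0, (q - (len(y) - 1)) / c)


def random_effects_hksj(
    y: Sequence[float], v: Sequence[float]
) -> Tuple[float, float, float]:
    """Two-level random effects pooling with a Hartung-Knapp-Sidik-Jonkman SE.

    y: effect sizes (e.g., Fisher's z); v: their sampling variances.
    Returns (pooled estimate, tau^2, HKSJ standard error). A 95% CI would be
    estimate +/- t(k - 1, 0.975) * SE, using a t quantile with k - 1 df.
    """
    k = len(y)
    if k < 2 or k != len(v):
        raise ValueError("need at least two effects with matching variances")
    tau2 = dl_tau2(y, v)
    w = [1.0 / (vi + tau2) for vi in v]  # random effects weights
    sw = sum(w)
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sw
    # HKSJ variance: weighted residual sum of squares over (k - 1) * sum(w)
    var_hksj = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y)) / ((k - 1) * sw)
    return mu, tau2, math.sqrt(var_hksj)


# Hypothetical Fisher's z effects and sampling variances (not the study's data)
est, tau2, se = random_effects_hksj([0.0, 0.3, 0.6], [0.01, 0.01, 0.01])
```

The HKSJ adjustment replaces the usual 1/Σw variance with one scaled by the observed spread of the effects and pairs it with a t rather than a normal quantile, which typically widens intervals when only a few effects are pooled.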

Meta-analytic Sample and Descriptive Statistics

The 48 studies that met our selection criteria were published between 2002 and 2019, and analyzed 41 distinct datasets based on surveys conducted between 2000 and 2016. Based on the selection criteria, each study contained at least two relevant effects: the relationship between political efficacy (as an independent variable) and OnPP, and the relationship between political efficacy and OffPP, with each form of participation modeled as a separate dependent variable. Most of the studies analyzed cross-sectional data; only eight of the identified studies included repeated-wave panel data, and six employed a lagged model.

To assess whether systematic differences in measurement can be identified in the studies’ measures of OnPP and OffPP, we tested whether there was a statistically significant difference between the reported Cronbach’s alpha values for the two forms of participation. The t-test is not statistically significant for the common effect estimate dataset (k = 183) or for the zero-order correlation dataset (k = 45), thus indicating no systematic difference in measurement error between the OnPP and OffPP measures (see Supplementary Material for tabular output).

Table 1 summarizes additional characteristics of the studies that met our selection criteria. Consistent with findings in the digital participation literature (e.g., Boulianne, 2019), about half of the studies (n = 22) analyzed data from US samples, while the remaining studies used data from countries across the globe. Of the studies using data from outside the United States, eight samples were from China, six from South Korea, two from Germany, and two from Singapore. Samples from nine other countries (Czech Republic, Denmark, Finland, Hong Kong, Israel, Italy, Kuwait, Taiwan, and the United Kingdom) were each used in only one study. Two studies compared data from more than one country (Chan et al., 2017; Saldaña et al., 2015),⁸ and two studies analyzed pooled data from more than one country (Adugu & Broome, 2018; Huber et al., 2019).⁹

Table 1. Sample characteristics.

Results

Testing H1: Efficacy Hypothesis

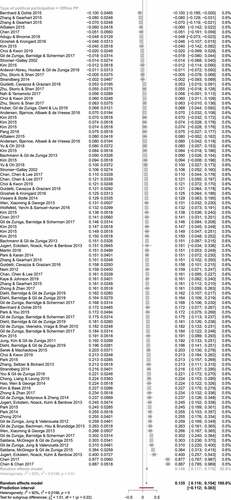

The results of the random effects meta-analysis are depicted in the forest plot in Figure 1, which shows each study’s common effect estimates in ascending order of effect size. The effect size for OnPP is 0.123, which is significantly different from zero (95% CI: 0.099, 0.147). The effect size for OffPP is 0.146, which is also significantly different from zero (95% CI: 0.117, 0.175). As noted at the bottom of the forest plot, the overall estimated effect size for the model as a whole is 0.135 (95% CI: 0.116, 0.154). Taken together, the forest plots of the random effects meta-analysis show that political efficacy is positively associated with both online and offline participation, and that the point estimate of the effect size for offline participation is larger than that for online participation. However, the confidence intervals of the two estimates overlap substantially, and the findings in Figure 1 suggest that there is no statistically significant difference in the magnitude of the association of PE with OnPP versus OffPP.
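As a rough back-of-the-envelope check on this comparison, the standard errors implied by the two reported 95% confidence intervals can be used in a z-test for the difference between the OnPP and OffPP estimates. This sketch assumes the two subgroup estimates are independent and normally distributed, an assumption the nested data violate (which is why the multilevel test of subgroup differences is the appropriate test); the function names are illustrative.

```python
import math
from statistics import NormalDist


def se_from_ci(lower: float, upper: float, level: float = 0.95) -> float:
    """Back out a standard error from a symmetric normal-theory confidence interval."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    return (upper - lower) / (2 * z_crit)


def difference_z_test(est_a, ci_a, est_b, ci_b):
    """Two-sided z-test for the difference between two estimates assumed independent."""
    se_a = se_from_ci(*ci_a)
    se_b = se_from_ci(*ci_b)
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = (est_b - est_a) / se_diff
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# OnPP and OffPP estimates with the 95% CIs reported for Figure 1.
z, p = difference_z_test(0.123, (0.099, 0.147), 0.146, (0.117, 0.175))
print(f"z = {z:.2f}, p = {p:.2f}")
```

This prints z = 1.20, p = 0.23: even under this simplified calculation, the 0.023 gap between the point estimates does not reach conventional significance.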

The random effects results presented in the forest plots in Figure 1 do not yet account for the nested structure of our coded meta-analytic dataset. As noted, to properly account for the dependence among the coded effects, we estimated a multilevel (i.e., three-level) random effects meta-analysis, which allows for the valid inclusion of multiple coded effects from the same dataset. The results of the multilevel model that tests for subgroup differences between OnPP and OffPP, reported in Table 2, are consistent with the forest plot results in Figure 1. Both online and offline participation have a positive association with political efficacy that is significantly different from zero. The test of subgroup differences shows no statistically significant difference in the associations between political efficacy and online versus offline participation, for either the common effect estimate dataset (k = 183) or the zero-order correlation dataset (k = 45). Finally, a comparison of model fit confirms that the three-level random effects model, which clusters effects by dataset at the third level, fits significantly better than the standard two-level random effects model (p < .001).
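The model-fit comparison can be read as a likelihood ratio test of nested models: the two-level model is the three-level model with the dataset-level variance component fixed at zero. A minimal sketch of the test statistic follows, using hypothetical log-likelihood values (the actual values are in the replication files). Because the constrained variance lies on the boundary of its parameter space, the naive chi-square(1) p-value is conservative; halving it, per the 50:50 chi-bar-squared mixture, is a common refinement.

```python
import math


def lrt_one_variance_component(loglik_two_level: float,
                               loglik_three_level: float) -> tuple:
    """Likelihood ratio test for adding one variance component (df = 1).

    The upper-tail chi-square(1) probability equals erfc(sqrt(stat / 2)).
    The naive p-value is conservative because the tested variance sits on
    the boundary (zero); the halved value follows the 50:50 mixture.
    """
    stat = 2.0 * (loglik_three_level - loglik_two_level)
    stat = max(stat, 0.0)  # numerical noise can make the statistic slightly negative
    p_naive = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_naive, p_naive / 2.0


# Hypothetical (restricted) log-likelihoods, for illustration only.
stat, p_naive, p_mixture = lrt_one_variance_component(-120.4, -95.1)
```

Both models must share the same fixed effects for a REML-based comparison like this one to be valid.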

Table 2. Test for subgroup differences in PE-OnPP versus PE-OffPP (H1, Efficacy hypothesis).

Taken together, the results do not support the efficacy hypothesis (H1). Based on the best available evidence analyzed by the most robust analytical methods, there is no statistically significant difference in the size of the positive association between efficacy and online participation and the size of the positive association between efficacy and offline participation.

Testing H2: Over-time Convergence

The findings for H1 indicate that there is no statistically significant difference between the modestly positive PE-OnPP and PE-OffPP associations when the analysis does not account for the year in which each study’s survey was conducted. The over-time convergence hypothesis would therefore be supported if a statistically significant difference in these effect sizes were evident only in the early years of the observation period. As noted, the expectations discussed in the literature (e.g., Farrell, 2012; Ohme et al., 2018) suggest that if such a difference were apparent in the early years of the sample, it would reflect a weaker relationship between PE and OnPP than between PE and OffPP, and that the associations between PE and the two types of participation would become more similar in recent years.

To test the over-time convergence hypothesis (H2), we assessed whether there is an interaction between survey year and dependent variable type in the multilevel random effects meta-analysis framework. The findings reported in Table 3 for both the common effect estimate dataset (k = 183) and the zero-order correlation dataset (k = 45) provide no evidence that year moderates the difference between the dependent variable types (i.e., OnPP and OffPP), as the interaction between dependent variable type and year is not significant. This lack of a systematic shift in the effect sizes for OnPP and OffPP over time is also supported by forest plots with effects ordered by the year in which the relevant survey was conducted, which show no clear over-time pattern (see Supplementary Material). Thus, we find no evidence of over-time convergence in the magnitude of the associations between political efficacy and the two types of political participation (OnPP and OffPP). The results therefore reaffirm the main conclusion regarding H1: there is no significant difference in the magnitude of the positive associations between political efficacy and OnPP versus OffPP, and these relationships are stable over time.
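The year-by-type moderator test can be illustrated as a weighted meta-regression with an interaction term. The sketch below is a simplification under stated assumptions: it uses fixed inverse-variance weights, ignores residual heterogeneity and the nesting of effects within datasets (both handled by rma.mv in the reported models), and centers survey year at a hypothetical reference year of 2008, the midpoint of the 2000–2016 survey window. The coefficient on the online × year term captures whether the OnPP-OffPP gap changes over time.

```python
from typing import List, Sequence


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def year_by_type_metaregression(effects: Sequence[float], variances: Sequence[float],
                                years: Sequence[int], is_online: Sequence[bool],
                                center: int = 2008) -> List[float]:
    """Weighted least squares fit of effect ~ online + year_c + online:year_c.

    Returns [intercept, online, year, online x year] coefficients.
    """
    rows = [[1.0, float(on), yr - center, float(on) * (yr - center)]
            for yr, on in zip(years, is_online)]
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    p = len(rows[0])
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, effects)) for i in range(p)]
    return _solve(xtwx, xtwy)
```

Under this setup, convergence would appear as an interaction coefficient whose sign shrinks the online coefficient’s gap as years increase; a coefficient indistinguishable from zero matches the pattern reported in Table 3.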

Table 3. Test of the effect of year as moderator (H2, Over-time convergence).

Testing H3: Democratic Context

To test the democratic context hypothesis, we used data from the Varieties of Democracy (V-Dem) electoral democracy index (v2x_polyarchy; Coppedge et al., 2020a, pp. 42–43, 2020b; Teorell et al., 2019), which measures the extent to which the ideal of electoral democracy in its fullest sense is achieved. This macro-level index assesses the degree to which governing systems are responsive to their citizens by aggregating lower-level indices that assess factors such as electoral competition and freedom of expression (Coppedge et al., 2020a, p. 288). Each coded estimate in the dataset is assigned the country’s electoral democracy index score for the year in which the survey was conducted.¹⁰
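Assigning this moderator amounts to a lookup keyed on country and survey year. A minimal sketch follows; the record fields (`country`, `survey_year`), the placeholder country codes, and the scores are all hypothetical, not V-Dem data. Estimates from pooled multi-country samples have no single country-year and are skipped, mirroring the exclusion described in note 10.

```python
from typing import Dict, List, Tuple

Estimate = Dict[str, object]


def attach_polyarchy(estimates: List[Estimate],
                     scores: Dict[Tuple[str, int], float]) -> List[Estimate]:
    """Return copies of estimates with a country-year democracy score attached.

    Estimates whose country is None (pooled multi-country samples) are dropped,
    because no single country-year score applies to them.
    """
    matched: List[Estimate] = []
    for est in estimates:
        country = est.get("country")
        if country is None:
            continue
        key = (str(country), int(est["survey_year"]))
        if key not in scores:
            raise KeyError(f"no score for {key}")
        matched.append({**est, "polyarchy": scores[key]})
    return matched


# Placeholder codes and values, for illustration only.
scores = {("AAA", 2012): 0.80, ("BBB", 2014): 0.45}
estimates = [
    {"id": 1, "country": "AAA", "survey_year": 2012, "effect": 0.12},
    {"id": 2, "country": None, "survey_year": 2013, "effect": 0.10},  # pooled sample
    {"id": 3, "country": "BBB", "survey_year": 2014, "effect": 0.18},
]
out = attach_polyarchy(estimates, scores)
```

Raising on a missing key, rather than silently dropping the estimate, makes gaps in the country-year coverage visible.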

The findings presented in Table 4 show that this summary polyarchy measure of electoral democracy is not a statistically significant moderator of either the relationship between efficacy and OnPP or the relationship between efficacy and OffPP, for either the common effect estimate dataset or the zero-order correlation dataset. We also analyzed the more specific lower-level indices that inform the overall V-Dem electoral democracy index, including measures of freedom of association, clean elections, freedom of expression, and elected officials. In addition, we tested other contextual measures that may act as moderators, namely civil society strength (Coppedge et al., 2020a, p. 288), Internet penetration (Robinson et al., 2015), and globalization as measured by trade (World Bank, 2021).

Table 4. Test of democratic contextual features as moderators (H3, Democratic context).

The findings on the relationship between political efficacy and OnPP reveal that none of these more specific country-level measures is a significant moderator for either the common effect estimate dataset or the zero-order correlation dataset. The same pattern of null findings holds for PE-OffPP in the zero-order correlation dataset, which is the smaller of the two (k = 45). However, for the larger common effect estimate dataset (k = 183), three of these country-level features moderate the relationship between political efficacy and OffPP: countries with cleaner elections, greater freedom of expression, and stronger civil society have, on average, a weaker relationship between political efficacy and OffPP. According to the common effect estimate dataset, then, the strength of these democratic features explains some of the variation in the association between political efficacy and participation for OffPP but not for OnPP. For offline participation, these findings suggest that in contexts with stronger democratic institutions, individual-level political efficacy may play a less important role in motivating individuals to engage in offline participation, though further research is needed to establish the causal mechanisms at play.

For online participation, however, the implications of the country-level moderator tests for both the common effect estimate dataset (k = 183) and the zero-order correlation dataset (k = 45) are clear: the positive association between political efficacy and OnPP is of similar magnitude to the main effect for OffPP and is unaffected by all tested measures of country context. This indicates that the positive association between online participation and people’s sense of their ability to engage in politics and impact political processes is stable across diverse political contexts around the globe.

Robustness Tests

This section summarizes a series of robustness tests, including sensitivity analyses, study-level robustness tests, and publication bias tests. All results summarized in this section are documented in further detail in the Supplementary Material and replication files.

Sensitivity Analyses

We conducted several sensitivity tests to assess whether the findings are robust to the varying specifications of the original studies. These sensitivity analyses, which test for potential effects of study quality, sample features, and outliers, all support our main findings. For study quality, we tested whether an objective measure of study quality affects the results by comparing findings from studies published in high-ranked journals, low-ranked journals, and unranked sources; the results showed no statistically significant difference.¹¹ Regarding features of the study sample, sample size is a significant moderator in some models (i.e., OnPP k = 45 and OffPP k = 183), but the coefficient is modest in size, and the main findings hold. Sample representativeness is a significant moderator in only one model (OffPP k = 183), with a modest coefficient, and the main findings hold. As a final sensitivity test, standard outlier detection tests identified no effects that met established criteria for outliers in either the common effect estimate dataset or the zero-order correlation dataset (Cook & Weisberg, 1982; Viechtbauer & Cheung, 2010).
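One common diagnostic in this family (Viechtbauer & Cheung, 2010) is the studentized deleted residual: each effect is compared with the pooled estimate from a model fitted without it. The sketch below is a simplified two-level version under stated assumptions: it refits with the DerSimonian-Laird estimator rather than the three-level model, uses a conventional |r| > 1.96 cutoff as a hypothetical flagging rule, and omits Cook’s distance.

```python
import math
from typing import List, Sequence, Tuple


def _dl_fit(effects: Sequence[float],
            variances: Sequence[float]) -> Tuple[float, float, float]:
    """Return (pooled estimate, its variance, tau^2) from a DerSimonian-Laird fit."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(effects) - 1)) / c)
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled, 1.0 / sum(w_re), tau2


def studentized_deleted_residuals(effects: Sequence[float],
                                  variances: Sequence[float]) -> List[float]:
    """Leave each effect out, refit, and standardize its deviation from the refit."""
    if len(effects) < 3:
        raise ValueError("need at least three effects")
    residuals = []
    for i in range(len(effects)):
        rest_y = [y for j, y in enumerate(effects) if j != i]
        rest_v = [v for j, v in enumerate(variances) if j != i]
        mu, var_mu, tau2 = _dl_fit(rest_y, rest_v)
        # Variance of (y_i - mu) combines sampling, between-study, and estimate variance.
        residuals.append((effects[i] - mu) / math.sqrt(variances[i] + tau2 + var_mu))
    return residuals


def flag_outliers(effects: Sequence[float], variances: Sequence[float],
                  cutoff: float = 1.96) -> List[int]:
    """Indices of effects whose studentized deleted residual exceeds the cutoff."""
    res = studentized_deleted_residuals(effects, variances)
    return [i for i, r in enumerate(res) if abs(r) > cutoff]
```

For example, `flag_outliers([0.10, 0.12, 0.11, 0.09, 0.90], [0.001] * 5)` flags only the last effect, because the other four are tightly clustered once it is removed.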

Study-Level Robustness Tests

For the common effect estimate dataset (k = 183), we examined whether the main findings are affected by attitudinal control variables in the multivariate models from which the effects were coded, namely political knowledge and political interest, the key attitudinal controls in the literature. Political interest was not a significant moderator for either type of participation. Political knowledge was not a significant moderator for OffPP, but it showed a marginally significant association with OnPP (b = −0.044, p = .049), indicating a weaker relationship between political efficacy and OnPP when political knowledge is controlled. Even with political knowledge included in the models, however, the main finding holds: there is no significant difference in the magnitude of the positive association between political efficacy and the two types of political participation. We conducted several additional study-level robustness tests for both the common effect estimate dataset and the zero-order correlation dataset, including assessments of distinct types of efficacy, findings for the United States compared to all other countries, and whether OnPP is defined as social media activities. The main findings hold when accounting for these study-level factors, and the results are reported in full in the Supplementary Material.

Publication Bias

We conducted standard publication bias tests (Egger et al., 1997; Sterne & Egger, 2005). The results provide evidence of modest publication bias for OffPP but no evidence of such bias for OnPP, although there is not yet consensus regarding thresholds for effect-level publication bias in multilevel meta-analysis (Fernández-Castilla et al., 2021; Rodgers & Pustejovsky, 2021). We also tested for publication bias with a p-curve analysis (Carter et al., 2019; Simonsohn et al., 2014; Sun & Pan, 2020), and the results show no indication of publication bias.
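Egger’s regression test regresses each standardized effect (the effect divided by its standard error) on its precision (the inverse of the standard error); an intercept far from zero signals funnel-plot asymmetry. The following is a minimal sketch of the classic two-level version, which treats effects as independent and compares the intercept’s t statistic with a t distribution on n − 2 degrees of freedom. It does not address the dependence among effects that leaves thresholds for multilevel publication bias tests unsettled; the function name is illustrative.

```python
import math
from typing import Sequence, Tuple


def egger_test(effects: Sequence[float],
               std_errors: Sequence[float]) -> Tuple[float, float, float, float]:
    """Classic Egger regression: standardized effect regressed on precision (OLS).

    Returns (intercept, slope, SE of intercept, t statistic for the intercept).
    """
    n = len(effects)
    if n < 3 or n != len(std_errors):
        raise ValueError("need at least three effects with matching standard errors")
    snd = [y / s for y, s in zip(effects, std_errors)]  # standardized effects
    prec = [1.0 / s for s in std_errors]                 # precision
    mean_x = sum(prec) / n
    mean_y = sum(snd) / n
    sxx = sum((x - mean_x) ** 2 for x in prec)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(prec, snd))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    resid = [y - (intercept + slope * x) for x, y in zip(prec, snd)]
    s2 = sum(e * e for e in resid) / (n - 2)             # residual variance
    se_int = math.sqrt(s2 * (1.0 / n + mean_x ** 2 / sxx))
    t = intercept / se_int if se_int > 0 else math.nan
    return intercept, slope, se_int, t


# Constructed example: effects grow with their standard errors (a small-study effect).
ses = [0.05, 0.10, 0.15, 0.20, 0.25]
intercept, slope, se_int, t = egger_test([0.1 + 0.5 * s for s in ses], ses)
```

In this constructed example the standardized effects equal 0.1 × precision + 0.5, so the intercept is 0.5 and the slope 0.1: a positive intercept of the kind Egger’s test is designed to detect.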

Robustness Tests Summary

In sum, the results of these robustness tests confirmed the main findings: results based on both the common effect estimate dataset (k = 183) and the zero-order correlation dataset (k = 45) show that political efficacy is positively related to both online and offline political participation; these two positive relationships are similar in magnitude; there is no statistically significant difference in the strength of the associations between PE and OnPP versus OffPP across time periods; and the positive association between efficacy and OnPP is unaffected by country contextual features. Finally, according to the larger common effect estimate dataset (k = 183), the findings suggest that several country context features may moderate the relationship between efficacy and OffPP, but the smaller zero-order correlation dataset (k = 45) does not yield significant findings for any of the democratic context moderators.

Discussion

This study provides the most comprehensive investigation to date of the important question of how online and offline political participation relate to people’s sense of their capacity to engage in and impact political processes. Both normative and empirical studies have raised the question of whether online political participation is considered a meaningful form of political activity (Christensen, 2011; Freelon et al., 2020; Halupka, 2014; Karpf, 2012; Matthews, 2021). In some research and public discourse, this type of activity has been considered a low-cost way to participate that may also be used to express frustration with no expectation of political change, and that may thus even demobilize citizens who would otherwise have been politically engaged (Anduiza et al., 2012; Gladwell, 2010). Despite these concerns, the current study shows that believing in one’s ability to participate in and impact political processes is as strongly related to online as to offline forms of political participation. The meta-analysis findings do not support the main efficacy hypothesis (H1): political efficacy has a modestly positive association with both online and offline participation, with no statistically significant difference in the magnitude of these associations.

Addressing this question poses considerable empirical challenges due to the limited availability of high-quality cross-national and longitudinal data on online participation, which constrains researchers’ capacity to make valid inferences about the relationship between key variables over time. The current study demonstrates how a multilevel random effects meta-analysis of all relevant empirical studies can yield new evidence to inform this important debate. Focusing on the online-offline distinction entails the theoretically and empirically challenging task of definitively distinguishing between these two categories of participation. The research design therefore used the common distinction in the literature, which allowed the most robust test possible of the difference between the strength of the PE-OnPP association and the PE-OffPP association, and of whether this difference varied over time and across diverse country contexts. The results showed that the positive and substantively similar relationships between political efficacy and OnPP versus OffPP remained stable over time, and that the strength of the association between PE and OnPP is stable across diverse country contexts. As noted, the moderator results for several features of democratic context (i.e., clean elections, freedom of expression, and civil society strength) were statistically significant in analyses of the common effect estimate dataset (k = 183) but not in analyses of the zero-order correlation dataset (k = 45), underscoring the importance of future research on these topics as more zero-order correlation data become available. To this end, we support Amsalem and Nir’s (2021, p. 636) strong recommendation that documenting correlation matrices of all study variables become standard practice in the appendices of multivariate quantitative studies.
Particularly for topics such as online political participation for which large-scale comparative surveys will continue to include a limited number of indicators for the foreseeable future, the accumulation of scientific knowledge will be greatly facilitated through meta-analyses of the growing body of country-specific research.

As noted in the descriptive statistics for the sample, the current analysis includes few studies that analyzed repeated-wave panel data. An important limitation of the current study is therefore the lack of data that can be used to assess the causal direction of the relationship between political efficacy and political participation. Although conventional wisdom in this field tends to presume that political efficacy has a causal effect on political participation, research on offline participation suggests that the causal arrow may also run in the reverse direction, such that political participation enhances efficacy (Finkel, 1985; Quintelier & van Deth, 2014).

Given this possibility, we conclude by situating the current study within a broader stream of research that investigates these core questions of causal inference from several angles. The meta-analytic sample analyzed in the current study includes eight studies that analyzed repeated-wave data (six of which conducted lagged analyses), and additional repeated-wave studies on these topics are emerging rapidly, which will enable future meta-analytic study of causal direction. While the comprehensive meta-analytic findings of the current study definitively establish that political efficacy has a similar positive association with both online and offline political participation, more fine-grained research is needed to assess the underlying mechanisms that may enhance these relationships through different activities and in different contexts. For example, future research is needed to assess whether specific types of political acts within the broad OnPP/OffPP distinction of the current meta-analysis (e.g., engagement with the news, connecting with politicians online, or joining online groups) are more strongly associated with individuals’ sense of efficacy.

To this end, multi-method research is needed on the relationship between political efficacy and political participation in the digital era, including studies based on cross-national repeated-wave surveys, qualitative fieldwork, and experimental data. For example, Shuman et al.’s (2021) experimental study, which shows that collective action that is non-normative and nonviolent succeeds in motivating support for policy goals, could be adapted to test whether these findings hold for online forms of participation and in contexts characterized by varying levels of political efficacy. Further, a high-quality, large-scale cross-national survey specifically designed to test the effects of macro-level context would be useful for precisely assessing contextual effects through multilevel modeling and cross-level interaction tests. Building on the current study’s clear affirmation of the positive association between political efficacy and online participation, these future lines of research on the mechanisms underlying this association can shed new light on the important question of how different types of online political participation may affect the connection between citizens and democratic processes in the digital era.

Open Scholarship

This article has earned the Center for Open Science badges for Open Data, Open Materials and Preregistered. The data and materials are openly accessible at http://doi.org/10.17605/OSF.IO/AF5DR. The preregistration is available at http://doi.org/10.17605/OSF.IO/MHXA8.

Acknowledgments

We are grateful for expert input on the development of this study to (in alphabetical order) Jeremy Albright, Johanna Dunaway, Caleb Scheidel, Wolfgang Viechtbauer, and Alon Zoizner. We are solely responsible for the research.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Supplementary Material

Supplemental data for this article can be accessed on the publisher’s website at https://doi.org/10.1080/10584609.2022.2086329

Data Availability Statement

The data described in this article are openly available in the Open Science Framework at http://doi.org/10.17605/OSF.IO/AF5DR.

Additional information

Funding

Notes on contributors

Jennifer Oser

Jennifer Oser is an Associate Professor in the Department of Politics and Government at Ben-Gurion University in Israel. Her research focuses on the relationships between public opinion, political participation, and policy outcomes.

Amit Grinson

Amit Grinson completed his M.A. in Sociology and Anthropology at Ben-Gurion University in Israel. He has researched topics of trust and online participation, and his methodological research interests include data visualization and data analytics.

Shelley Boulianne

Shelley Boulianne is an Associate Professor in Sociology at MacEwan University in Canada. She researches citizens’ engagement in civic and political life, including topics related to digital media, climate change, environmental policies, and misinformation.

Eran Halperin

Eran Halperin is a Full Professor of Psychology at the Hebrew University of Jerusalem in Israel. His research uses psychological and political theories and methods to investigate different aspects of inter-group conflicts and emotion regulation.

Notes

1. See registered hypotheses and research design in the Open Science Framework (OSF) http://doi.org/10.17605/OSF.IO/MHXA8.

2. All analyses were conducted in R version 4.1.1. Open Science Framework: http://doi.org/10.17605/OSF.IO/AF5DR.

3. The search string consisted of the following Boolean string: ALL (“political efficacy” OR “general efficacy” OR “internal efficacy” OR “external efficacy” AND part* AND online OR e-participation OR “digital media” AND offline).

4. The seminars and conference talks were all conducted virtually: University of Montreal Seminar, Canada Research Chair in Electoral Democracy and the Research Chair in Electoral Studies on April 21, 2020; European University Institute’s Political Behavior Colloquium, May 19, 2020; American Political Science Association Political Communication Pre-Conference, September 1, 2020; International Journal of Press/Politics, September 8, 2020.

5. One of the effects reported in the descriptive statistics (from Kim & Baek, 2018) was an outlier that was too large to transform to the common effect estimate measure of Fisher’s z. The sample size for the common effect estimate dataset is therefore k = 183 for all analyses.

6. To transform unstandardized regression coefficients, we multiplied each unstandardized coefficient by the standard deviation of the predictor variable and divided by the standard deviation of the outcome variable. For odds ratios, we used Menard’s second formula (Menard, 2004, p. 219).

7. As clarified by Jackson and Turner (2017), five or more studies are all that is needed to achieve sufficient power in a random effects meta-analysis. In a meta-analysis of meta-analyses conducted over 60 years in the field of communication, Rains et al. (2018) find that the average effect estimate of published studies is 49.

8. Specifically, Chan et al. (2017) compared data from three countries (China, Hong Kong, and Taiwan); and Saldaña et al. (2015) compared data from two countries (the United Kingdom and the United States).

9. Specifically, Adugu and Broome (2018) analyzed data from five countries (Jamaica, Trinidad and Tobago, Guyana, Surinam, and Haiti); Huber et al. (2019) analyzed data from 19 countries (Argentina, Brazil, China, Estonia, Germany, Indonesia, Italy, Japan, South Korea, New Zealand, the Philippines, Poland, Russia, Spain, Taiwan, Turkey, Ukraine, United Kingdom, and the United States).

10. As noted in the description of sample characteristics, two studies aggregate data from several countries (Adugu & Broome, 2018; Huber et al., 2019) and therefore cannot be included in analyses that test for country-level moderators.

11. Journal ranking was coded according to the four quartiles in Web of Science’s Journal Citation Reports (JCR), and a “not ranked” category, which includes both peer reviewed sources not ranked in JCR, and unpublished sources (e.g., conference papers, dissertations). JCR rankings were accessed through Clarivate Analytics (jcr.clarivate.com) and were coded according to the year in which the study was published.
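Notes 5 and 6 describe the conversion of coded coefficients to the common effect metric. A minimal sketch of the two steps follows, assuming correlation-type inputs and the usual large-sample variance 1/(n − 3) for Fisher’s z; the function names and example numbers are illustrative, and the odds-ratio conversion (Menard’s formula) is deliberately not reproduced. Since atanh is undefined for |r| ≥ 1, an effect at or beyond that bound has no finite Fisher’s z; we assume this is the sense in which note 5’s outlier was too large to transform.

```python
import math
from typing import Tuple


def standardize_coefficient(b: float, sd_x: float, sd_y: float) -> float:
    """Convert an unstandardized slope b to a standardized beta (note 6)."""
    if sd_y <= 0:
        raise ValueError("outcome standard deviation must be positive")
    return b * sd_x / sd_y


def fisher_z(r: float, n: int) -> Tuple[float, float]:
    """Fisher's z for a correlation-type effect and its approximate variance (note 5).

    atanh is undefined at |r| >= 1, so such effects cannot be transformed.
    """
    if not -1.0 < r < 1.0:
        raise ValueError(f"cannot transform effect with |r| >= 1: {r}")
    if n <= 3:
        raise ValueError("sample size must exceed 3")
    return math.atanh(r), 1.0 / (n - 3)


def z_to_r(z: float) -> float:
    """Back-transform a (pooled) Fisher's z to the correlation metric."""
    return math.tanh(z)


# Hypothetical example: b = 0.30 with sd_x = 1.2 and sd_y = 2.4 gives beta = 0.15.
beta = standardize_coefficient(0.30, 1.2, 2.4)
z, var_z = fisher_z(beta, n=503)
```

Pooling happens on the z scale, and `z_to_r` converts a pooled estimate back to the correlation metric for interpretation.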

References

- Adugu, E., & Broome, P. A. (2018). Exploring factors associated with digital and conventional political participation in the Caribbean. International Journal of E-Politics (IJEP), 9(2), 35–52. http://doi.org/10.4018/IJEP.2018040103

- AlSalem, F. (2015). Digital media and women’s political participation in Kuwait [Unpublished doctoral dissertation]. Indiana University.

- Amsalem, E., & Nir, L. (2021). Does interpersonal discussion increase political knowledge? A meta-analysis. Communication Research, 48(5), 619–641. http://doi.org/10.1177/0093650219866357

- Amsalem, E., & Zoizner, A. (2022). Real, but limited: A meta-analytic assessment of framing effects in the political domain. British Journal of Political Science, 52(1), 221–237. http://doi.org/10.1017/s0007123420000253

- Anduiza, E., Guinjoan, M., & Rico, G. (2019). Populism, participation, and political equality. European Political Science Review, 11(1), 109–124. http://doi.org/10.1017/s1755773918000243

- Anduiza, E., Jensen, M. J., & Jorba, L. (Eds.). (2012). Digital media and political engagement worldwide. Cambridge University Press.

- Assink, M., & Wibbelink, C. J. M. (2016). Fitting three-level meta-analytic models in R: A step-by-step tutorial. The Quantitative Methods for Psychology, 12(3), 154–174. http://doi.org/10.20982/tqmp.12.3.p154

- Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52(1), 1–26. http://doi.org/10.1146/annurev.psych.52.1.1

- Bartels, L. M. (2016). Unequal democracy: The political economy of the new gilded age (2nd ed.). Princeton University Press.

- Bennett, W. L. (2012). The personalization of politics: Political identity, social media, and changing patterns of participation. The ANNALS of the American Academy of Political and Social Science, 644(1), 20–39. http://doi.org/10.1177/0002716212451428

- Bennett, W. L., & Segerberg, A. (2012). The logic of connective action: Digital media and the personalization of contentious politics. Information, Communication & Society, 15(5), 739–768. http://doi.org/10.1080/1369118X.2012.670661

- Blumenau, J. (2021). Online activism and dyadic representation: Evidence from the UK e-petition system. Legislative Studies Quarterly, 46(4), 889–920. http://doi.org/10.1111/lsq.12291

- Bode, L. (2017). Gateway political behaviors: The frequency and consequences of low-cost political engagement on social media. Social Media + Society, 3(4), 205630511774334. http://doi.org/10.1177/2056305117743349

- Borenstein, M., Hedges, L. V., Higgins, J. P. T., & Rothstein, H. R. (2009). Introduction to meta-analysis. John Wiley & Sons.

- Boulianne, S. (2019). US dominance of research on political communication: A meta-view. Political Communication, 36(4), 660–665. http://doi.org/10.1080/10584609.2019.1670899

- Boulianne, S. (2020). Twenty years of digital media effects on civic and political participation. Communication Research, 47(7), 947–966. http://doi.org/10.1177/0093650218808186

- Boulianne, S., & Theocharis, Y. (2020). Young people, digital media, and engagement: A meta-analysis of research. Social Science Computer Review, 38(2), 111–127. http://doi.org/10.1177/0894439318814190

- Campbell, A., Gurin, G., & Miller, W. E. (1954). The voter decides. Row, Peterson.

- Cantijoch, M., Cutts, D., & Gibson, R. (2016). Moving slowly up the ladder of political engagement: A ‘spill-over’ model of internet participation. The British Journal of Politics & International Relations, 18(1), 26–48. http://doi.org/10.1111/1467-856x.12067

- Carter, E. C., Schönbrodt, F. D., Gervais, W. M., & Hilgard, J. (2019). Correcting for bias in psychology: A comparison of meta-analytic methods. Advances in Methods and Practices in Psychological Science, 2(2), 115–144. http://doi.org/10.1177/2515245919847196

- Chamberlain, A. (2012). A time-series analysis of external efficacy. Public Opinion Quarterly, 76(1), 117–130. http://doi.org/10.1093/poq/nfr064

- Chan, M., Chen, H.-T., & Lee, F. L. F. (2017). Examining the roles of mobile and social media in political participation: A cross-national analysis of three Asian societies using a communication mediation approach. New Media & Society, 19(12), 2003–2021. http://doi.org/10.1177/1461444816653190

- Chenoweth, E. (2020). The future of nonviolent resistance. Journal of Democracy, 31(3), 69–84. http://doi.org/10.1353/jod.2020.0046

- Chou, E. Y., Hsu, D. Y., & Hernon, E. (2020). From slacktivism to activism: Improving the commitment power of e-pledges for prosocial causes. PLoS ONE, 15(4), e0231314. http://doi.org/10.1371/journal.pone.0231314

- Christensen, H. S. (2011). Political activities on the internet: Slacktivism or political participation by other means? First Monday, 16(2). http://doi.org/10.5210/fm.v16i2.3336

- Cook, R. D., & Weisberg, S. (1982). Residuals and influence in regression. Chapman and Hall.

- Coppedge, M., Gerring, J., Knutsen, C., Lindberg, S., Teorell, J., Altman, D., Bernhard, M., Fish, M., Glynn, A., Hicken, A., Lührmann, A., Marquardt, K., McMann, K., Paxton, P., Pemstein, D., Seim, B., Sigman, R., Skaaning, S., Staton, J., … Ziblatt, D. (2020a). V-Dem Codebook v10. Varieties of Democracy (V-Dem) Project. http://doi.org/10.2139/ssrn.3557877

- Coppedge, M., Gerring, J., Knutsen, C., Lindberg, S., Teorell, J., Altman, D., Bernhard, M., Fish, M., Glynn, A., Hicken, A., Lührmann, A., Marquardt, K., McMann, K., Paxton, P., Pemstein, D., Seim, B., Sigman, R., Skaaning, S., Staton, J., … Ziblatt, D. (2020b). V-Dem [Country–Year/Country–Date] Dataset v10. Varieties of Democracy (V-Dem) Project. http://doi.org/10.23696/vdemds20

- Dahl, R. A. (1961). Who governs? Democracy and power in an American city. Yale University Press.

- Dahlberg, S., Linde, J., & Holmberg, S. (2015). Democratic discontent in old and new democracies: Assessing the importance of democratic input and governmental output. Political Studies, 63(1), 18–37. http://doi.org/10.1111/1467-9248.12170

- Dassonneville, R., Feitosa, F., Hooghe, M., & Oser, J. (2021). Policy responsiveness to all citizens or only to voters? A longitudinal analysis of policy responsiveness in OECD countries. European Journal of Political Research, 60(3), 583–602. http://doi.org/10.1111/1475-6765.12417

- Dinesen, P. T., Schaeffer, M., & Sønderskov, K. M. (2020). Ethnic diversity and social trust: A narrative and meta-analytical review. Annual Review of Political Science, 23(1), 441–465. http://doi.org/10.1146/annurev-polisci-052918-020708

- Egger, M., Smith, G. D., Schneider, M., & Minder, C. (1997). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315(7109), 629–634. http://doi.org/10.1136/bmj.315.7109.629

- Ennser-Jedenastik, L., Gahn, C., Bodlos, A., & Haselmayer, M. (2022). Does social media enhance party responsiveness? Party Politics, 28(3), 468–481. http://doi.org/10.1177/1354068820985334

- Esser, F. (2019). Advances in comparative political communication research through contextualization and cumulation of evidence. Political Communication, 36(4), 680–686. http://doi.org/10.1080/10584609.2019.1670904

- Farrell, H. (2012). The consequences of the Internet for politics. Annual Review of Political Science, 15(1), 35–52. http://doi.org/10.1146/annurev-polisci-030810-110815

- Fernández-Castilla, B., Declercq, L., Jamshidi, L., Beretvas, S. N., Onghena, P., & Van den Noortgate, W. (2021). Detecting selection bias in meta-analyses with multiple outcomes: A simulation study. The Journal of Experimental Education, 89(1), 125–144. http://doi.org/10.1080/00220973.2019.1582470

- Finkel, S. E. (1985). Reciprocal effects of participation and political efficacy: A panel analysis. American Journal of Political Science, 29(4), 891–913. http://doi.org/10.2307/2111186

- Freelon, D., Marwick, A., & Kreiss, D. (2020). False equivalencies: Online activism from left to right. Science, 369(6508), 1197–1201. http://doi.org/10.1126/science.abb2428

- Gibson, R., & Cantijoch, M. (2013). Conceptualizing and measuring participation in the age of the internet: Is online political engagement really different to offline? The Journal of Politics, 75(3), 701–716. http://doi.org/10.1017/S0022381613000431

- Gil de Zúñiga, H., Barnidge, M., & Scherman, A. (2017). Social media social capital, offline social capital, and citizenship: Exploring symmetrical social capital effects. Political Communication, 34(1), 44–68. http://doi.org/10.1080/10584609.2016.1227000

- Giugni, M., & Grasso, M. (2019a). Mechanisms of responsiveness: What MPs think of interest organizations and how they deal with them. Political Studies, 67(3), 557–575. http://doi.org/10.1177/0032321718784156

- Giugni, M., & Grasso, M. (2019b). Street citizens: Protest politics and social movement activism in the age of globalization. Cambridge University Press.

- Gladwell, M. (2010). Why the revolution will not be tweeted. The New Yorker. Condé Nast. http://www.newyorker.com/magazine/2010/10/04/small-change-malcolm-gladwell

- Griffin, J. D., & Newman, B. (2005). Are voters better represented? The Journal of Politics, 67(4), 1206–1227. http://doi.org/10.1111/j.1468-2508.2005.00357.x

- Guidetti, M., Cavazza, N., & Graziani, A. R. (2016). Perceived disagreement and heterogeneity in social networks: Distinct effects on political participation. The Journal of Social Psychology, 156(2), 222–242. http://doi.org/10.1080/00224545.2015.1095707

- Gurevitch, J., Koricheva, J., Nakagawa, S., & Stewart, G. (2018). Meta-analysis and the science of research synthesis. Nature, 555(7695), 175–182. http://doi.org/10.1038/nature25753

- Halupka, M. (2014). Clicktivism: A systematic heuristic. Policy & Internet, 6(2), 115–132. http://doi.org/10.1002/1944-2866.POI355

- Htun, M., & Weldon, S. L. (2012). The civic origins of progressive policy change: Combating violence against women in global perspective, 1975–2005. American Political Science Review, 106(3), 548–569. http://doi.org/10.1017/S0003055412000226

- Huber, B., Gil de Zúñiga, H., Diehl, T., & Liu, J. H. (2019). The citizen communication mediation model across countries. Journal of Communication, 69(2), 144–167. http://doi.org/10.1093/joc/jqz002

- Hünermund, P., & Louw, B. (2020, October 1). On the nuisance of control variables in regression analysis. arXiv preprint arXiv:2005.10314. http://arxiv.org/abs/2005.10314

- Jackson, D., & Turner, R. (2017). Power analysis for random-effects meta-analysis. Research Synthesis Methods, 8(3), 290–302. http://doi.org/10.1002/jrsm.1240

- Jugert, P., Eckstein, K., Noack, P., Kuhn, A., & Benbow, A. (2013). Offline and online civic engagement among adolescents and young adults from three ethnic groups. Journal of Youth and Adolescence, 42(1), 123–135. http://doi.org/10.1007/s10964-012-9805-4

- Jung, N., Kim, Y., & Gil de Zúñiga, H. (2011). The mediating role of knowledge and efficacy in the effects of communication on political participation. Mass Communication and Society, 14(4), 407–430. http://doi.org/10.1080/15205436.2010.496135

- Kahne, J., & Bowyer, B. (2018). The political significance of social media activity and social networks. Political Communication, 35(3), 470–493. http://doi.org/10.1080/10584609.2018.1426662

- Karp, J. A., & Banducci, S. A. (2008). Political efficacy and participation in twenty-seven democracies: How electoral systems shape political behaviour. British Journal of Political Science, 38(2), 311–334. http://doi.org/10.1017/S0007123408000161

- Karpf, D. (2010). Online political mobilization from the advocacy group’s perspective. Policy & Internet, 2(4), 7–41. http://doi.org/10.2202/1944-2866.1098

- Karpf, D. (2012). The MoveOn effect: The unexpected transformation of American political advocacy. Oxford University Press.

- Keele, L., Stevenson, R. T., & Elwert, F. (2020). The causal interpretation of estimated associations in regression models. Political Science Research and Methods, 8(1), 1–13. http://doi.org/10.1017/psrm.2019.31

- Kenski, K., & Stroud, N. J. (2006). Connections between Internet use and political efficacy, knowledge, and participation. Journal of Broadcasting & Electronic Media, 50(2), 173–192. http://doi.org/10.1207/s15506878jobem5002_1

- Kim, H. M., & Baek, Y. M. (2018). The power of political talk: How and when it mobilizes politically efficacious citizens’ campaign activity during elections. Asian Journal of Communication, 28(3), 264–280. http://doi.org/10.1080/01292986.2018.1431295

- Kriesi, H. (2013). Democratic legitimacy: Is there a legitimacy crisis in contemporary politics? Politische Vierteljahresschrift, 54(4), 609–638. http://doi.org/10.5771/0032-3470-2013-4

- Kriesi, H. (2014). The populist challenge. West European Politics, 37(2), 361–378. http://doi.org/10.1080/01402382.2014.887879

- Kriesi, H. (2020). Is there a crisis of democracy in Europe? Politische Vierteljahresschrift, 61(2), 237–260.

- Kristofferson, K., White, K., & Peloza, J. (2014). The nature of slacktivism: How the social observability of an initial act of token support affects subsequent prosocial action. Journal of Consumer Research, 40(6), 1149–1166. http://doi.org/10.1086/674137

- Kwak, N., Lane, D. S., Weeks, B. E., Kim, D. H., Lee, S. S., & Bachleda, S. (2018). Perceptions of social media for politics: Testing the slacktivism hypothesis. Human Communication Research, 44(2), 197–221. http://doi.org/10.1093/hcr/hqx008

- Lane, D. S., Kim, D. H., Lee, S. S., Weeks, B. E., & Kwak, N. (2017). From online disagreement to offline action: How diverse motivations for using social media can increase political information sharing and catalyze offline political participation. Social Media + Society, 3(3), 2056305117716274. http://doi.org/10.1177/2056305117716274

- Leighley, J. E., & Oser, J. (2018). Representation in an era of political and economic inequality: How and when citizen engagement matters. Perspectives on Politics, 16(2), 328–344. http://doi.org/10.1017/S1537592717003073

- Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P. A., Clarke, M., Devereaux, P. J., Kleijnen, J., & Moher, D. (2009). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. Journal of Clinical Epidemiology, 62(10), e1–e34. http://doi.org/10.1016/j.jclinepi.2009.06.006

- Lipsey, M. W., & Wilson, D. B. (2001). Practical meta-analysis. Sage.

- Lührmann, A., & Lindberg, S. I. (2019). A third wave of autocratization is here: What is new about it? Democratization, 26(7), 1095–1113. http://doi.org/10.1080/13510347.2019.1582029

- Lundberg, I., Johnson, R., & Stewart, B. M. (2021). What is your estimand? Defining the target quantity connects statistical evidence to theory. American Sociological Review, 86(3), 532–565. http://doi.org/10.1177/00031224211004187

- Lutz, C., Hoffmann, C. P., & Meckel, M. (2014). Beyond just politics: A systematic literature review of online participation. First Monday, 19(7). http://doi.org/10.5210/fm.v19i7.5260

- Matthes, J., Knoll, J., & von Sikorski, C. (2018). The “spiral of silence” revisited: A meta-analysis on the relationship between perceptions of opinion support and political opinion expression. Communication Research, 45(1), 3–33. http://doi.org/10.1177/0093650217745429

- Matthes, J., Knoll, J., Valenzuela, S., Hopmann, D. N., & von Sikorski, C. (2019). A meta-analysis of the effects of cross-cutting exposure on political participation. Political Communication, 36(4), 523–542. http://doi.org/10.1080/10584609.2019.1619638

- Matthews, F. (2021). The value of “between-election” political participation. The British Journal of Politics and International Relations, 23(3), 410–429. http://doi.org/10.1177/1369148120959041

- Menard, S. (2004). Six approaches to calculating standardized logistic regression coefficients. The American Statistician, 58(3), 218–223. http://doi.org/10.1198/000313004X946

- Mill, J. S. ([1861] 1962). Considerations on representative government. Henry Regnery.

- Morrell, M. E. (2003). Survey and experimental evidence for a reliable and valid measure of internal political efficacy. The Public Opinion Quarterly, 67(4), 589–602. http://doi.org/10.1086/378965

- Munzert, S., & Ramirez-Ruiz, S. (2021). Meta-analysis of the effects of voting advice applications. Political Communication, 38(6), 691–706. http://doi.org/10.1080/10584609.2020.1843572

- Niemi, R. G., Craig, S. C., & Mattei, F. (1991). Measuring internal political efficacy in the 1988 National Election Study. American Political Science Review, 85(4), 1407–1413. http://doi.org/10.2307/1963953

- Ohme, J., de Vreese, C. H., & Albæk, E. (2018). From theory to practice: How to apply van Deth’s conceptual map in empirical political participation research. Acta Politica, 53(3), 367–390. http://doi.org/10.1057/s41269-017-0056-y

- Oser, J., Hooghe, M., & Marien, S. (2013). Is online participation distinct from offline participation? A latent class analysis of participation types and their stratification. Political Research Quarterly, 66(1), 91–101. http://doi.org/10.1177/1065912912436695

- Park, C. S. (2015). Pathways to expressive and collective participation: Usage patterns, political efficacy, and political participation in social networking sites. Journal of Broadcasting & Electronic Media, 59(4), 698–716. http://doi.org/10.1080/08838151.2015.1093480

- Peterson, R. A., & Brown, S. P. (2005). On the use of beta coefficients in meta-analysis. Journal of Applied Psychology, 90(1), 175–181. http://doi.org/10.1037/0021-9010.90.1.175

- Pingree, R. J., Brossard, D., & McLeod, D. M. (2014). Effects of journalistic adjudication on factual beliefs, news evaluations, information seeking, and epistemic political efficacy. Mass Communication and Society, 17(5), 615–638. http://doi.org/10.1080/15205436.2013.821491

- Powell, G. B. (2004). The chain of responsiveness. Journal of Democracy, 15(4), 91–105. http://doi.org/10.1353/jod.2004.0070

- Quintelier, E., & van Deth, J. W. (2014). Supporting democracy: Political participation and political attitudes. Exploring causality using panel data. Political Studies, 62(S1), 153–171. http://doi.org/10.1111/1467-9248.12097

- Rains, S. A., Levine, T. R., & Weber, R. (2018). Sixty years of quantitative communication research summarized: Lessons from 149 meta-analyses. Annals of the International Communication Association, 42(2), 105–124. http://doi.org/10.1080/23808985.2018.1446350

- Rasmussen, A., & Reher, S. (2019). Civil society engagement and policy representation in Europe. Comparative Political Studies, 52(11), 1648–1676. http://doi.org/10.1177/0010414019830724

- Robinson, L., Cotten, S. R., Ono, H., Quan-Haase, A., Mesch, G., Chen, W., Schulz, J., Hale, T. M., & Stern, M. J. (2015). Digital inequalities and why they matter. Information, Communication & Society, 18(5), 569–582. http://doi.org/10.1080/1369118X.2015.1012532

- Robison, J., Stevenson, R. T., Druckman, J. N., Jackman, S., Katz, J. N., & Vavreck, L. (2018). An audit of political behavior research. SAGE Open, 8(3), 1–14. http://doi.org/10.1177/2158244018794769

- Rodgers, M. A., & Pustejovsky, J. E. (2021). Evaluating meta-analytic methods to detect selective reporting in the presence of dependent effect sizes. Psychological Methods, 26(2), 141–160. http://doi.org/10.1037/met0000300

- Rojas, H., & Valenzuela, S. (2019). A call to contextualize public opinion-based research in political communication. Political Communication, 36(4), 652–659. http://doi.org/10.1080/10584609.2019.1670897

- Ruess, C., Hoffmann, C., Heger, K., & Boulianne, S. (Forthcoming). Online political participation: The evolution of a concept. Information, Communication & Society, 1–18. http://doi.org/10.1080/1369118X.2021.2013919