?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Approximate Bayesian computation (ABC) is now an established technique for statistical inference used in cases where the likelihood function is computationally expensive or not available. It relies on the use of a model that is specified in the form of a simulator, and approximates the likelihood at a parameter value θ by simulating auxiliary data sets x and evaluating the distance of x from the true data y. However, ABC is not computationally feasible in cases where using the simulator for each θ is very expensive. This article investigates this situation in cases where a cheap, but approximate, simulator is available. The approach is to employ delayed acceptance Markov chain Monte Carlo within an ABC sequential Monte Carlo sampler to, in a first stage of the kernel, use the cheap simulator to rule out parts of the parameter space that are not worth exploring, so that the “true” simulator is only run (in the second stage of the kernel) where there is a reasonable chance of accepting proposed values of θ. We show that this approach can be used quite automatically, with few tuning parameters. Applications to stochastic differential equation models and latent doubly intractable distributions are presented. Supplementary materials for this article are available online.

1 Introduction

1.1 Motivation

Approximate Bayesian computation (ABC) is a technique for approximate Bayesian inference originally introduced in the population genetics literature (Pritchard et al. Citation1999; Beaumont, Zhang, and Balding Citation2002), but which is now used for a wide range of applications. It is suitable for situations in which a model with parameters θ for data y is readily available in the form of a simulator, but where l as a function of θ (known as the likelihood) is intractable in that it cannot be evaluated pointwise. If θ is assigned prior distribution p, the object of inference is the posterior distribution

. ABC yields approximations to the posterior distribution through approximating the likelihood, in the simplest case using

(1)

(1) where

is a kernel centered around

and M points

are sampled from

. We may see Monte Carlo ABC algorithms as sampling from a joint distribution on θ and x proportional to

, where l is used as a proposal distribution for x.

In this article, we describe methods that are designed to be applicable in cases where the likelihood estimation is computationally costly because the model l is expensive to simulate from, for example when studying an ordinary differential equation (ODE) or stochastic differential equation (SDE) model (Picchini Citation2014) that requires a solver with a small step size, or when using an individual based model (van der Vaart et al. Citation2015) with a large number of individuals. This situation is not uncommon, since even if the model takes only a matter of seconds to simulate, this cost is prohibitive when it needs to be simulated a number of times due to estimating the likelihood at a large number of Monte Carlo points in θ-space. We note that this situation is exacerbated when θ is of moderate to high dimension, since in these cases (as in any Monte Carlo method) it is difficult to design a proposal that can efficiently make moves on all dimensions of θ simultaneously. Most commonly, each dimension of θ is updated using a single component move, and in the ABC context this means using M simulations from the likelihood when updating each dimension of θ.

1.2 Previous Work and Contributions

This article describes an ABC-sequential Monte Carlo (SMC) algorithm that uses a delayed acceptance (DA) ABC-Markov chain Monte Carlo (MCMC) move that aims to limit the number of θ points at which we need to perform an expensive simulation of the likelihood, while also attempting to maintain a good exploration of the target distribution. We begin in Section 2 with a review of the literature on ABC-SMC, focusing particularly on the case where a uniform ABC kernel is used. Then in Section 3.1.1, we describe DA ABC-MCMC for exploring θ-space, and use it as a method for discarding θ points that are unlikely to be in regions of high posterior density. DA decomposes an MCMC move into two stages. At the first stage a point is proposed and is accepted or rejected according to a posterior that is usually chosen to approximate the desired posterior. If accepted at the first stage, at the second stage an acceptance probability is used that “corrects” for the discrepancy between the approximate and the desired target. Thus, we may think of the first stage as screening for points to take through to the second stage. Compared to the previous work, this approach is most similar to the “early rejection” of Picchini and Forman (Citation2016) who use the prior for this screening step. DA ABC-MCMC at the first stage makes use of an approximate, but computationally cheap, alternative to the full simulator. We will see that our approach is applicable in cases in which there is a cheap, approximate simulator is used independently of the full simulator, and also where the full simulator is a continuation of the initial cheap simulation. This latter situation is the same as that considered in “Lazy” ABC (Prangle Citation2016), in which is it possible to monitor a simulation as it is progressing, and to be able to assess before the full simulation whether the current θ point is likely to be in a region of high posterior density. We will see that using DA ABC-MCMC introduces an additional tuning parameter: the tolerance ϵ used for the cheap simulator. When using DA ABC-MCMC directly, it is not always straightforward to set this parameter. In Section 3.2.1, we embed DA ABC-MCMC in the ABC-SMC framework, since this is established as an effective method for guiding Monte Carlo points in θ-space to regions of high posterior density, and also since we see that it allows for automating the choice of the additional tuning parameter. The resultant algorithm is particularly useful for distributions for which it is difficult to design a useful proposal distribution. When our proposal has a poor acceptance rate, we may need to run the expensive simulator many times for a single acceptance. However, with delayed acceptance we may propose a very large number of points without the need to run the expensive simulator for a large number of them. The procedure is to evaluate whether the proposed points are in a high density region according to the cheap simulator—in the cases where they are, we run the expensive simulator. For points that lie in this region (i.e., that pass the first stage of DA), we must run the expensive simulator, and expect to accept a reasonable proportion of these (as long as the cheap simulator is close to the expensive simulator). Essentially, the first stage of the DA acts to give us a well-targeted proposal. Section 4 considers an application to SDEs, where we examine using cheap simulators with a large step size in the numerical solver. Section 5 considers applications to doubly intractable distributions: applying ABC to these models (and other Markov random fields) is complicated because no computationally feasible exact simulator is available. Instead an MCMC algorithm is commonly used as an approximate approach (Grelaud, Robert, and Marin Citation2009; Everitt Citation2012). In such approaches the burn in of this MCMC method determines the accuracy of the approach: for a small burn in the method is biased, with this bias being eliminated by a large burn in which may be computationally expensive. In the main article, we examine the case of latent Ising models previously studied in Everitt (Citation2012), noting the potential advantage of ABC in these cases compared to methods such as the exchange algorithm. In the supplementary appendix, we also examine an application to a latent exponential random graph model (ERGM). We then conclude with a discussion in Section 6.

2 Background on ABC-SMC

2.1 SMC Samplers

An SMC sampler (Del Moral, Doucet, and Jasra Citation2006) is an importance sampling based Monte Carlo method for generating weighted points from a target distribution π through making use of a sequence of T target distributions that bridge between some initial proposal distribution π0 from which we can simulate, and the final target . Here we focus on the variant of the algorithm that uses an MCMC kernel, and provide a sketch of the main steps of the algorithm (further details may be found in Del Moral, Doucet, and Jasra (Citation2006)). The algorithm begins by simulating N weighted “particles” from π0 (initially with equal weights). Then the particles are moved from target πt to

through an importance sampling reweighting step, followed by a “move” step in which each particle is simulated from an MCMC kernel starting at its current value, and with target

. Let

be the value taken by the ith particle after iteration t and

be its (normalized) weight. The reweighting step then computes the (unnormalized) weight

of the nth particle

using

(2)

(2) followed by a normalization step to find

. This algorithm yields an empirical approximation of πt

(3)

(3)

where is a Dirac mass at θ, and an estimate of its normalizing constant

(4)

(4)

Usually the variance of the weights is monitored during the algorithm, and if this becomes too large (which would lead to high variance estimators if no intervention was made) a resampling step is performed after reweighting (in this article stratified resampling is used). The resampling step at iteration t + 1 simulates N particles from the empirical distribution .

SMC outperforms importance sampling in cases where one needs many points to obtain low variance importance sampling estimates when directly using π0 as a proposal for π, that is, when the distance between π0 and π is too large. To ensure that SMC estimates have low variance, we require the distance between πt and to be small for all t. a proxy for the distance between subsequent targets that may be estimated as the algorithm runs is given by the effective sample size (ESS) (Kong, Liu, and Wong Citation1994)

(5)

(5)

A large ESS corresponds to a small distance between targets although, as referred to in the following section, a high ESS is necessary but not sufficient for achieving a low Monte Carlo variance.

2.2 ABC-SMC

Sisson, Fan, and Tanaka (Citation2007, Citation2009) remarked that the ABC posterior is a natural candidate for simulating from using SMC, in that a sequence of posteriors with a decreasing sequence of tolerances ϵ1 to

is a natural and useful choice as a sequence of distributions to use in SMC. We follow Del Moral, Doucet, and Jasra (Citation2012a) in choosing the sequence of distributions

(6)

(6) such that

, with xt being the corresponding auxiliary variable (as in EquationEquation (1)

(1)

(1) ) at iteration t. When an ABC-MCMC move is used for the “move” step, this leads to the following weight update when moving from target πt to

(7)

(7)

(a derivation may be found in the supplementary appendix). This weight update is computationally cheap and requires no simulation to produce , which has been generated either in the initial step of the algorithm or as a part of a previous MCMC move. Additionally, it is cheap to compute for any choice of

. This fact is used by Del Moral, Doucet, and Jasra (Citation2012a) to devise an adaptive ABC-SMC algorithm that automatically determines the sequence

. At every SMC iteration a bisection method is used to determine the

that will result in an ESS that is some proportion (e.g., 90%) of N.

It is common practice in SMC algorithms to use the current set of particles to adaptively set the proposal for the MCMC kernels used in the “move” step (note that this introduces a small bias into estimates based on the SMC for the methods used in this article). The simplest scheme, from Robert et al. (Citation2011), sets the proposal covariance to be some multiple of the empirical covariance of the particles (this choice being rooted in results on optimal proposals for some MCMC algorithms).

2.2.1 ABC-SMC With Indicator Potentials

In ABC a common choice for the kernel is

, where d is a distance metric. This kernel is known to be sub-optimal (Li and Fearnhead Citation2018), but we restrict our attention to this case for the remainder of the article since it simplifies the presentation and interpretation of the algorithms we introduce. The first example of this simplification is that when used in the ABC-SMC method of Del Moral, Doucet, and Jasra (Citation2012a), the particle weights are either zero (“dead”) or nonzero (“alive”) (this following from EquationEquation (7)

(7)

(7) ). This type of SMC algorithm is also studied in Cérou et al. (Citation2012), Del Moral et al. (Citation2015), and Prangle, Everitt, and Kypraios (Citation2017). A further consequence that the ESS is equal to the number of “alive” particles with nonzero weight after the update, simplifying the interpretation of the adaptive approach to choosing

described above.

In this article, we use the revised scheme of Bernton et al. (Citation2017). The revision addresses the issue that the acceptance rate of ABC-MCMC moves decreases as the ABC-SMC progresses (since the ABC tolerance decreases). When the MCMC moves have a poor acceptance rate, the ESS is not a good criterion for deciding on a new tolerance, since it is unaffected by the values taken by each particle: even if all particles take the same value, the ESS may be high. Therefore, Bernton et al. (Citation2017) suggested instead to choose such that some number U (with

) of the N particles will be unique after resampling has been performed. Both this new scheme, and the original method that uses the ESS, introduce a small bias into estimates from the SMC (Cérou et al. Citation2012; Prangle, Everitt, and Kypraios Citation2017).

To simplify the presentation of our method, we perform resampling at every step of the algorithm. As a consequence, the denominator in EquationEquation (7)(7)

(7) is positive and the same for every particle, thus in practice the reweighting step becomes

where for simplicity we have omitted the additional normalizing term for

, required to provide a correct marginal likelihood estimator—see Didelot et al. (Citation2011) for details. Resampling at every step avoids propagating “dead” particles with zero weight, but is not an optimal strategy since it is possible that not all alive particles will be part of the resample (unless systematic resampling is used). A standard approach to deciding when to resample is to only do so when the ESS fall below a prespecified threshold (Del Moral, Doucet, and Jasra Citation2012b), but we do not consider such approaches in this article.

The ABC-SMC algorithm with the configuration described in this section is given in Algorithm 1. Note that although a final tolerance is specified in this algorithm, in practice the method is run for as long as computational resources will allow; a standard approach would be to monitor the acceptance probability of the MCMC moves and to terminate when this is zero for a number of iterations. The early rejection method of Picchini and Forman (Citation2016) may be employed for the ABC-MCMC moves when using an indicator kernel: this approach is precisely the same as standard ABC-MCMC, with the calculation reorganized to avoid unnecessary simulations from the likelihood (see the supplementary appendix for details).

Algorithm 1 ABC-SMC using early rejection.

Inputs: Number of particles N, desired number of unique particles U, prior p, simulator l, final tolerance .

Outputs: Particles and weights

for all t.

for do

end for

, t = 0.while

do

Simulate , to be used in resampling.

Use bisection to choose s.t. there will be U unique particles after reweighting and resampling (using random numbers v).for

do

end for

Normalize to give normalized weights

.

Perform resampling using random draws v.for do

if

then

if

then

end if

end if

end for

end while

3 Delayed Acceptance ABC-SMC

This section describes the algorithm that is introduced by this article: an ABC-SMC sampler that uses a DA ABC-MCMC kernel as its “move” step (instead of standard ABC-MCMC), with the DA move being tuned automatically using the population of particles. We begin by describing DA, then outline how it may be used in the ABC context, before describing an ABC-SMC sampler that makes use of it. Where they are omitted from the main article, full derivations are given in the supplementary appendix.

3.1 Delayed Acceptance

This section introduces DA (Christen and Fox Citation2005) as the algorithm that results when one Metropolis–Hastings kernel is used as a proposal within another. We note here the link with pseudo-marginal type algorithms that, as a special case, show how to use importance sampling or SMC within a Metropolis–Hastings algorithm. Let Metropolis–Hastings transition kernel K1 have invariant distribution π1 and suppose our aim is to use the distribution π1 as the proposal distribution in another Metropolis–Hastings kernel K2 with an invariant distribution π2. It turns out that we can use a draw from K1 as if it was a point drawn from π1. That is, we apply K1 to θ to obtain , then accept

with probability

(8)

(8)

A computational saving arises when a rejection occurs under K1, since in this case , giving

without needing to evaluate π2. Verifying this acceptance probability is simple. Since

is simulated from K1, we have an acceptance probability for K2 of

(9)

(9)

Now, K1 satisfies the detailed balance equation with respect to π1

(10)

(10) and substituting this into (9) we obtain the desired result of EquationEquation (8)

(8)

(8) .

In the literature on DA, π2 is chosen to be a desired target distribution that may be computationally expensive to evaluate, and π1 is chosen to be a cheaper, approximate target distribution. Used in this way, the first stage of delayed acceptance (i.e., the result of applying K1) provides a proposal that should be well suited for an MH algorithm with a target π2, for example, Banterle, Grazian, and Robert (Citation2014) used approximate likelihoods based on subsets of the data as the approximate targets. Strens (Citation2004) used a very similar idea, that is, a hierarchical decomposition of the likelihood. Banterle, Grazian, and Robert (Citation2014) noted that while a standard MH algorithm dominates DA in terms of the Peskun ordering, it is possible that a reduced computational cost in the first stage of DA can lead to more efficient algorithms in terms of the Monte Carlo error per computational time.

3.1.1 Delayed Acceptance With ABC-MCMC

This article makes use of DA in the ABC setting. Suppose that there exists a computationally cheap alternative l1 to our “true” simulator . We wish to perform the first stage of DA using points

simulated from l1, which are compared to data y1 (which need not necessarily be the same as y) to determine the parameters at which we simulate points

from l2, from which we estimate the standard ABC likelihood. At the first stage of DA, we use an ABC-MCMC move with the simulator l1, using a tolerance ϵ1, giving an acceptance probability of

(11)

(11)

The acceptance probability at the second stage iswhere the marginal distribution of the target we have used is the ABC posterior with l2, ϵ2, and y. Note that we have included the conditioning on x1 in

and l2, which allows for the possibility that the “true” simulator is a composition of the simulators l1 and l2, with

being a partial simulation. If the true simulator is simply l2, we may drop this conditioning on x1 from

and l2.

3.2 Delayed Acceptance ABC-SMC

3.2.1 Use of DA-ABC-MCMC in ABC-SMC

We now examine the use of the DA-ABC-MCMC approach from the preceding section for the “move” step in the ABC-SMC algorithm of Del Moral, Doucet, and Jasra (Citation2012a). The new SMC sampler operates on a sequence of target distributions where ϵ2 decreases at each iteration, thus we use to denote its value at the tth iteration. ϵ1 and y1 may also change between SMC iterations, and we denote their values at the tth iteration by

and

, respectively. In this case the weight update for each particle is given by

(12)

(12)

3.2.2 Adaptive DA Within ABC-SMC When Using the Indicator Kernel

In this section, we consider the implementation of the DA-ABC-MCMC move when is chosen to be an indicator function; that is,

, where d is a distance metric. Following Section 3.1.1 we obtain, at the tth iteration of the SMC, an acceptance probabilities of

(13)

(13)

andat the first and second stages of DA, respectively. The dependence in EquationEquation (13)

(13)

(13) on the condition

is maybe surprising, but is required since if it is not satisfied the denominator in the acceptance ratio is zero, and in this case the proposal should be rejected (Tierney Citation1998). The presence of this condition is due to the possibility that the sequence

is not always decreasing in t. Full details may be found in the supplementary appendix, along with details of how early rejection may be used in the first stage of DA.

Del Moral, Doucet, and Jasra (Citation2012a) made use of a useful property of ABC to create an adaptive algorithm: once simulation of x has been performed for any θ, it is computationally cheap to estimate the ABC likelihood for any tolerance ϵ. Here we use this property, together with the fact that we have a population of particles available in the SMC algorithm, to automatically determine an appropriate value for at every iteration. We choose

using the criterion that we desire to perform the second stage of the DA for a fixed proportion of the particles; we choose

such that A particles are accepted to move forward to the second stage, where

is chosen prior to running the SMC. This is achieved by choosing

such that A particles satisfy

: a bisection method may be used to find such an

. As with the bisection used in the adaptive approach of Del Moral, Doucet, and Jasra (Citation2012a), in practice the bisection will not always give precisely A particles at the second stage of DA; if fewer than A particles pass early rejection due to the prior,

is chosen to let all these particles through to the second stage.

3.2.3 Discussion

This ABC-SMC algorithm, which we call DA-ABC-SMC, is described in full in Algorithm 2. In brief, we use this algorithm where we: adaptively choose the sequence such that there are U unique particles after reweighting and resampling (Bernton et al. Citation2017); adaptively choose the variance of the (Gaussian) MCMC proposals to be the sample variance of the current particle set. The method requires us to specify: the number of particles N, the number of particles

that are allowed past the first stage of the DA and the number of unique particles

. We now discuss how to choose each of these parameters.

Number of unique particles: On terminating the algorithm, we effectively have a sample of size U from the final ABC posterior, thus U should be chosen to be the number of Monte Carlo points we wish to generate.

Number of particles allowed past the first stage: A dictates the computational cost of each iteration, since it is the number of expensive simulations we will perform per SMC iteration.

Total number of particles: To choose N we need to consider the factor F by which the cheap simulator is faster than the expensive simulator. For DA to be most effective, we require that the cost of running the cheap simulator is small compared to running the expensive simulator, that is,

.

If N is chosen to be much larger than A, we require a slightly nonstandard initial step in our SMC algorithm so that the computational expense of this step does not dominate the subsequent iterations. In a standard SMC sampler, the initial step would require initial values of x1 and x2 to be simulated for each of the N particles, which requires running the expensive simulator N times. Instead we simulate times from the initial distribution, and repeat these points N/A times (i.e., the vector of particles consists of stacked copies of these values) so that we have a sample of size N from the initial distribution. This sample contains only A unique points but, importantly, will result in N proposed points at every MCMC move in the SMC. The parameter N plays slightly different role to a standard SMC sampler, in which we might informally think of it as roughly the size of the Monte Carlo sample (in DA-ABC-SMC, U plays this role instead). In DA-ABC-SMC, N is one of four factors determining the “DA proposal” (i.e., the distribution of points that arrive at the second stage of DA), the remaining factors being: the choices of A and l1, and the distribution of the particles from the previous iteration of the SMC. A useful DA proposal has similar characteristics to a good independent MCMC or importance sampling proposal: we wish it to be close to the target distribution, with wider tails. Since the DA proposal depends on the previous distribution of the particles, we observe empirically that it can to some extent automatically “track” the target distribution over SMC iterations, and provide a useful proposal distribution at each SMC iteration. The extent to which this is the case is investigated in Sections 4 and 5, where we illustrate the performance of our algorithm for different choices of the tuning parameters, which result in different sequences of DA proposals.

Algorithm 2 DA-ABC-SMC using early rejection.

Inputs: As algorithm 1, and the number of particles A to pass the first stage of DA.

Outputs: Particles and weights

for all t.

for do

end for

, t = 0.while

do

Simulate , to be used in resampling and use bisection to choose

s.t. there will be U unique particles after reweighting and resampling (using v).for

do

end for

Normalize to give normalized weights

, perform resampling using random numbers v, and let

.for

do

if

then

end if

end for

Use bisection to choose s.t. A particles satisfy

.

Let I be the set of indices of the particles that pass the first stage of DA.for do

if

then

end if

end for

end while

4 Application to SDEs

4.1 Lotka–Volterra Model

The Lotka–Volterra model is a stochastic Markov jump process that models the number of individuals in two populations of animals: predator and prey. It is a commonly used example in ABC since it is possible to simulate exactly from the model using the Gillespie algorithm (Gillespie Citation1977), but the likelihood is not available pointwise. We follow the model described in Wilkinson (Citation2011), in which X represents the number of predators and Y the number of prey. The following reactions may take place: a prey may be born, with rate , increasing Y by one; predator and prey may interact, with rate

, increasing X by one and decreasing Y by one; a predator may die, with rate

, decreasing X by one. Papamakarios and Murray (2016) noted that for most parameters the size of one population quickly decreases to zero (in the case of the predators dying out, this results in the prey population growing exponentially). The relatively small region of parameter space that contains parameter values resulting in oscillating population sizes makes this a relatively challenging inference problem.

In this section, we use this example to demonstrate the use of DA-ABC-SMC for SDE models that need to be simulated numerically. To do this, we use the chemical Langevin equation approximation to the Markov jump process, as detailed in Golightly, Henderson, and Sherlock (Citation2015). This results in two coupled nonlinear SDEs, which we simulate numerically using the Euler–Maruyama method.

4.2 Results

All of our empirical results were generated using R (R Core Team Citation2019), and the R packages ggplot2 (Wickham Citation2016), matlab (Roebuck Citation2014), and mvtnorm (Genz and Bretz Citation2009) were used. We study the data “LVPerfect” in the R package smfsb (Wilkinson Citation2018) (the numerical methods for simulating from the likelihood are also taken from this package). These data, which were generated using parameter values , have been previously studied in Wilkinson (Citation2011), and we use the same priors and ABC approach as in this reference. We used DA-ABC-SMC with a variety of choices of U, A, and N, and two different choices of the Euler–Maruyama step size s in the cheap simulator s = 0.5 and s = 0.1, both of which result in very rough approximations of the dynamics. We compared these approaches with standard ABC-SMC, with N = 200 particles and a sequence of tolerances selected such that U = 100 unique particles are retained at each iteration, an SMC2-style approach (Duan and Fulop Citation2015) (that uses M particles when using a particle filter to estimate the likelihood) and also, as black horizontal lines, “ground truth” for the posterior expectation and standard deviation of the parameters found using a long run (105 iterations) of ABC-MCMC. All algorithms we run 30 times, and measure computational cost, we counted the total number of steps S (taking the median

over the 30 runs) simulated using Euler–Maruyama. The supplementary appendix contains the full details.

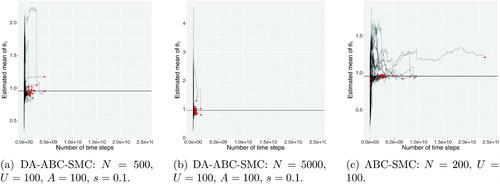

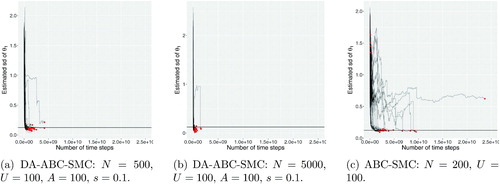

and show, for every run of three methods, the evolution of the sample mean and standard deviation of the particles against the number of time steps of the numerical solver, with the final red dot indicating the estimate from the final distribution (where was reached). The black line on each plots shows estimated ground truth values estimated using 20 runs of ABC-MCMC of length 5000 each. We only show the results for θ1 here, since the results for the other parameters are comparable in terms of how they illustrate the properties of the algorithms. The method on the left is DA-ABC-SMC with N = 500 and

; in the middle is DA-ABC-SMC with N = 5000 and

; and on the right is ABC-SMC with N = 200 and U = 100. We observe that while ABC-SMC takes significantly more steps to converge to the target distribution, the estimates of the mean and standard deviation have a relatively low variance. The DA-ABC-SMC approaches converge much faster, but result in estimates that are usually further from the ground truth. In addition, both DA-ABC-SMC approaches appear to underestimate the posterior standard deviation, with this effect becoming more pronounced when N is larger. This result suggests that choosing N too large (resulting in A/N being small) can result in a DA proposal that is too concentrated compared to the target. To further elaborate on this important point, as the ratio A/N decreases, the first stage of the DA leads to a very poor proposal—this is because the particles that make it through to the second stage of the DA step are those that have simulations that result in the very smallest distance to the observed data. The effect is to make the DA proposal too concentrated around the mode of the posterior yielded by the fast approximation.

Fig. 1 The estimated posterior mean plotted against the total number of time steps used in Euler–Maruyama.

Fig. 2 The estimated posterior standard deviation plotted against the total number of time steps used in Euler–Maruyama.

and summarize the properties of the estimates of the posterior mean and standard deviation from each method. The bias, standard deviation and root mean square error (RMSE) are reported, along with the , to help compare the errors of methods with different computational costs. The results for some configurations are heavily influenced by poor results from a single run, for example the case of DA-ABC-SMC with N = 1000, U = 100, A = 100, and s = 0.1. However, based on these tables and the plots of the estimated posterior mean and standard deviation in the supplementary appendix, there is evidence to make the following conclusions.

Table 1 Properties of estimators of the posterior mean of θ1.

Table 2 Properties of estimators of the posterior standard deviation of θ1.

Choosing N too large (resulting in A/N being small) can result in a DA proposal that is too concentrated compared to the target, and leads to underestimating the posterior standard deviation.

Even when N is not large, in this example often DA-ABC-SMC results in a sample that underestimates the standard deviation of the posterior distribution. The likely cause of this is that the DA proposal is too concentrated. This proposal easily generates unique points, thus the tolerance ϵ2 is reduced too quickly, resulting in a poor quality sample. The results from DA-ABC-SMC when N = 1000, U = 200, A = 100, and s = 0.1, where 200 instead of 100 unique points are required when reducing the tolerance, suggests that when the SMC does not reduce the tolerance as fast (and hence more MCMC moves are made), the estimates of the posterior standard deviation are more accurate.

DA-ABC-SMC can offer improved performance over ABC-SMC when measured by RMSE scaled by computational cost. This is largely due to its ability to quickly locate the region of highest posterior mass. However, it may not provide a representative sample from the posterior if the DA proposal is not tuned well. In contrast, compared to DA-ABC-SMC, standard ABC-SMC may be extremely slow in converging at all.

The SMC2 approaches tried here do not lead to an improvement over the ABC approaches, due to the low acceptance rate of the particle MCMC moves used in the move step of the SMC sampler (a consequence of the high variance likelihood estimates). In many cases no proposals were accepted at an SMC iteration, leading to a poor quality sample.

5 Applications to Doubly Intractable Distributions

5.1 Background

This section concerns the class of likelihoods that may not be evaluated pointwise due to the presence of an intractable normalizing constant, that is,where

is tractable, but it is not computationally feasible to evaluate the normalizing constant

(also known as the partition function). Such a distribution is known as doubly intractable (Murray, Ghahramani, and MacKay Citation2006) since it is not possible to directly use the Metropolis–Hastings algorithm to simulate from the posterior

, due to the presence of

in both the numerator and denominator of the acceptance ratio. The most common occurrence of these distributions occurs when l factorizes as a Markov random field. The most well-established approaches to inference for these models are the single and multiple auxiliary variable approaches (Møller et al. Citation2006; Murray, Ghahramani, and MacKay Citation2006) and the exchange algorithm (Murray, Ghahramani, and MacKay Citation2006), which, respectively, use importance sampling estimates of the likelihood and acceptance ratio to avoid the calculation of the normalizing constant. From here on we refer to these as “auxiliary variable methods.” ABC (Grelaud, Robert, and Marin Citation2009) and synthetic likelihood (Everitt, Johansen, et al. Citation2017) have also been used for inference in these models. See Stoehr (Citation2017) for a recent, thorough review of the literature.

Previous work, for example, Friel (Citation2013), suggests that auxiliary variable methods are more effective than ABC for simulating from the posterior when l has an intractable normalizing constant. In the full data ABC case, Everitt, Prangle, et al. (Citation2017) suggested that this is because the multiple auxiliary variable approach may be seen to be a carefully designed nonstandard ABC method. However, in this article we consider the situation in Everitt (Citation2012), where our data y are indirect observations of a latent (hidden) field xh, modeled with a joint distribution

with

having an intractable normalizing constant. In this case we might expect ABC to become more competitive, or even to outperform other approaches. The most obvious approach is to use data augmentation: using MCMC to simulate from the joint posterior

by alternating simulation from the full conditionals of θ and xh, with the exchange algorithm in the θ update. Everitt (Citation2012) compared this approach with “exchange marginal particle MCMC,” in which an SMC algorithm is used to integrate out the xh variables at every iteration of an MCMC and finds the particle MCMC approach to be preferable. However, to perform well, both of these approaches require efficient simulation from the conditional distribution

.

Now compare these “exact” approaches with ABC. In this case, for every θ we simulate and

, then use an ABC likelihood that compares

with

. The xh variables corresponding to a particular θ variable that are retained in the ABC sample are distributed according to an ABC approximation to

; indeed, that the ABC is at all efficient, there must be a reasonable probability that simulated xh is in a region of high probability mass under this distribution. Compared to particle MCMC:

Standard ABC has the disadvantage that the simulation of xh is, in contrast to particle MCMC, not conditioned on y (although we note recent work in Prangle, Everitt, and Kypraios (Citation2017) in which for some models we may also refine the ABC likelihood by conditioning on y).

ABC has the advantage that a posteriori xh is only conditioned on some statistic

, rather than y as in particle MCMC. This condition is often considerably less stringent. For example, consider the Ising model example described below. When conditioning on y, the posterior

is often restricted to relatively small regions of xh-space: for individual pixel of xh the posterior mass may be concentrated in a small region to match each individual data point. However, when conditioning on

, the posterior

may have nonnegligible mass in many regions of xh-space: there are many different configurations of pixels that give rise to similar summary statistics.

In this article, we revisit using ABC on these models, examining the data previously studied in Everitt (Citation2012), and show it is possible to make computational savings using DA-ABC-SMC. We now introduce the cheap simulator that makes this improvement possible. In Markov random field models, exact simulation from is either very expensive or intractable. Grelaud, Robert, and Marin (Citation2009) proposed to replace the exact simulator with taking the last point of a long MCMC run, and Everitt (Citation2012) showed that, under certain ergodicity conditions, as the length of this “internal” MCMC goes to infinity, the bias in our ABC posterior sample due to this particular approximation also goes to zero (following ideas in Andrieu and Roberts (Citation2009)). However, empirically one finds that often using only a single MCMC iteration is sufficient to yield a posterior close to the desired posterior. In this article, we propose to use an internal MCMC run of a single iteration as the cheap simulator, and to run the internal MCMC for many iterations (we use 1000) as the expensive simulator, where the final iteration of the cheap simulator is used as the first iteration of the expensive simulator. The next section presents an application to latent Ising models, with an application to ERGMs in the supplementary appendix.

5.2 Latent Ising Model

An Ising model is a pairwise Markov random field model on binary variables, each taking values in . Its distribution is given by

where

denotes the ith random variable in xh and N is a set defining pairs of nodes that are “neighbors.”We consider the case where the neighborhood structure is given by a regular two-dimensional grid. Our data y are noisy observations of this xh field: the ith variable in y has distribution

as in Everitt (Citation2012) (with the normalizing constant being intractable). We used independent Gaussian priors on θx and θy, each with mean 0 and standard deviation 5. Our DA-ABC-SMC algorithm used

, and we examined different values of N. A single site Gibbs sampler was used to simulate from the likelihood, with the expensive simulator using the final point of 1000 sweeps of the Gibbs sampler. The MCMC proposal was taken to be a Gaussian distribution centered at the current particle, with covariance given by the sample covariance of the particles from the previous iteration. We used a single statistic in the ABC: the number of equivalently valued neighbors

(noting that this is not sufficient, but that the particle MCMC results in Everitt (Citation2012) indicate that this does not have a large impact on the posterior). The distance metric used in ABC on the summary statistics was the absolute value of the difference.

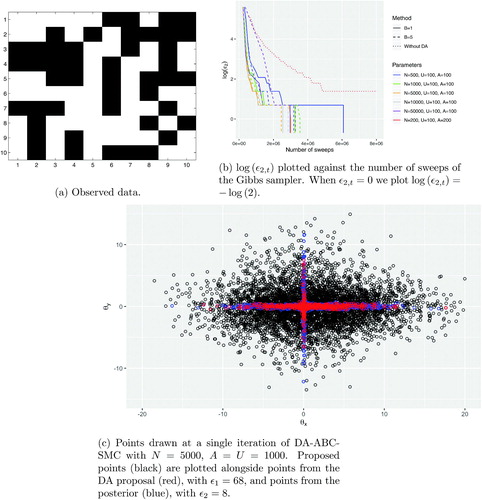

We used the same 10 × 10 grid of data as was studied in Everitt (Citation2012), shown in , which was generated from the model using . These parameter values represent a relatively weak interaction between neighboring pixels, and also quite noisy observations. On a grid of this size, there is ambiguity as to whether this data may have been generated with either one or both of θx and θy being small, giving a posterior distribution shaped like a cross, similar to that shown in . gives an example of a DA proposal, that is, points that pass the first stage of the DA-MCMC, from the early stages of a run of DA-ABC-SMC. We observe how this distribution would be a suitable independent MCMC proposal for the posterior.

All of our empirical results were generated using R (R Core Team Citation2019), and the R packages ggplot2 (Wickham Citation2016), matlab (Roebuck Citation2014), and mvtnorm (Genz and Bretz Citation2009) were used. We ran DA-ABC-SMC for several values of N, and compared the results with standard ABC-SMC using all of the same algorithmic choices, with N = 200 and U = 100. The standard ABC-SMC algorithm used the expensive simulator, that is, 1000 iterations of the internal Gibbs sampler. In algorithms we terminated the method when , or after 500 SMC iterations, whichever was sooner. We focus on studying the total computational effort expended in each algorithm, measured by the total number of sweeps S of the internal Gibbs sampler, and on the mean and standard deviation of the Euclidean distance ρ of the parameter vector

from the origin.

We ran the algorithm with several different values of N, and two different cheap simulators: the first where only a single sweep of the Gibbs sampler is used (B = 1); the second using the final point of 5 sweeps of the Gibbs sampler (B = 5). Each configuration was run 30 times. We chose N such that in most cases the computational cost was dominated by the expensive simulations in the second stage of the DA, although when and B = 5 the cost of the first stage dominated. shows gives an example showing the evolution of ϵ2 against the total number of sweeps of the Gibbs sampler for several different configurations. We see that all configurations of the DA approach appear to have a significantly lower cost than standard ABC-SMC: in all cases the DA approach reaches

whereas the ABC-SMC reaches

.

shows properties of estimates of the mean and standard deviation posterior on ρ. The estimates from ABC-SMC are based on the output of the 500th iteration of the SMC, where in all runs . we observe that we obtain lower variance estimates from ABC-SMC than from DA-ABC-SMC, but at a higher cost. This cost may be significantly higher if we wish to reduce ϵ2 to 0, as is achieved in all cases by DA-ABC-SMC. As in the previous section, we note that using a large value of N appears to lead to poor results; it appears likely that the standard deviation of the posterior is being underestimated.

Table 3 The mean and standard deviation of the estimated posterior mean (columns 2–4) and standard deviation (columns 5–7) of ρ, and the median number of sweeps of the Gibbs sampler

.

6 Conclusions

In this article, we introduced DA-ABC-SMC as a means for reducing the computational cost of ABC-SMC in the case of an expensive simulator, through using a DA-ABC-MCMC move, in which a cheap simulator is used to “screen” the candidate values of proposed parameters so that less effort needs to be used running the expensive simulator. This cheap simulator may be completely independent of the expensive simulator, but preferably the expensive simulator may be conditioned on the output of the cheap simulator. The tolerance for the cheap simulator is chosen adaptively with DA-ABC-SMC, giving an algorithm that only has three relatively easy to choose tuning parameters. The key factor in the performance of the algorithm is the DA proposal, that is, the distribution of the particles that pass the first stage of DA. For this proposal to be useful, the cheap simulator needs to produce a posterior centered around the same values as the expensive simulator, and the total number of particles N in the DA-ABC-SMC needs to be chosen appropriately. The empirical results suggest that there is a trade-off in choosing the size of N: large values result in the largest computational saving, but can produce a DA proposal that is too concentrated which can result in a poor sample from the posterior. For smaller values of N the DA proposal is usually more suitable, and can result in computational savings compared to ABC-SMC.

Section 4 illustrates that DA-ABC-SMC shows promise for using ABC for inference in ODE or SDE models, where a numerical method is used to simulate from the model. Section 5 revisits the idea of using ABC for inference in latent Markov random field models, and suggests an approach that in some cases reduces the computational cost of ABC-SMC by using short runs of MCMC to simulate from the model. Future work might extend the approach to ABC methods that do not use an indicator kernel, use the adaptive tuning of the first stage of DA outside of the ABC context (along with an investigation of any bias this adaptation may introduce), or aim to improve the automation of the design of the DA proposal, which is often too concentrated.

Supplemental Material

Download Text (218 B)Supplemental Material

Download PDF (3.8 MB)Supplemental Material

Download Zip (3.6 MB)Acknowledgments

Many thanks to Chris Drovandi for pointing out the method of Duan and Fulop (Citation2015), and for discussions on using DA within SMC outside of the ABC setting.

Supplementary Materials

Appendix: Appendix containing full derivations of ABC-SMC and DA-ABC-SMC, additional results for the Lotka–Volterra model, and an application to ERGMs. (pdf)

Data and code: Data and R code implementing DA-ABC-SMC for the three applications. (zip)

Additional information

Funding

References

- Andrieu, C., and Roberts, G. O. (2009), “The Pseudo-Marginal Approach for Efficient Monte Carlo Computations,” The Annals of Statistics, 37, 697–725. DOI: 10.1214/07-AOS574.

- Banterle, M., Grazian, C., and Robert, C. P. (2014), “Accelerating Metropolis–Hastings Algorithms: Delayed Acceptance With Prefetching,” arXiv no. 1406.2660.

- Beaumont, M. A., Zhang, W., and Balding, D. J. (2002), “Approximate Bayesian Computation in Population Genetics,” Genetics, 162, 2025–35.

- Bernton, E., Jacob, P. E., Gerber, M., and Robert, C. P. (2017), “Inference in Generative Models Using the Wasserstein Distance,” arXiv no. 1701.05146.

- Cérou, F., Del Moral, P., Furon, T., and Guyader, A. (2012), “Sequential Monte Carlo for Rare Event Estimation,” Statistics and Computing, 22, 795–908. DOI: 10.1007/s11222-011-9231-6.

- Christen, J. A., and Fox, C. (2005), “Markov Chain Monte Carlo Using an Approximation,” Journal of Computational and Graphical Statistics, 14, 795–810. DOI: 10.1198/106186005X76983.

- Del Moral, P., Doucet, A., and Jasra, A. (2006), “Sequential Monte Carlo Samplers,” Journal of the Royal Statistical Society, Series B, 68, 411–436. DOI: 10.1111/j.1467-9868.2006.00553.x.

- Del Moral, P., Doucet, A., and Jasra, A. (2012a), “An Adaptive Sequential Monte Carlo Method for Approximate Bayesian Computation,” Statistics and Computing, 22, 1009–1020.

- Del Moral, P., Doucet, A., and Jasra, A. (2012b), “On Adaptive Resampling Strategies for Sequential Monte Carlo Methods,” Bernoulli, 18, 252–278.

- Del Moral, P., Jasra, A., Lee, A., Yau, C., and Zhang, X. (2015), “The Alive Particle Filter and Its Use in Particle Markov Chain Monte Carlo,” Stochastic Analysis and Applications, 33, 943–974. DOI: 10.1080/07362994.2015.1060892.

- Didelot, X., Everitt, R. G., Johansen, A. M., and Lawson, D. J. (2011), “Likelihood-Free Estimation of Model Evidence,” Bayesian Analysis, 6, 49–76. DOI: 10.1214/11-BA602.

- Duan, J. C., and Fulop, A. (2015), “Density-Tempered Marginalized Sequential Monte Carlo Samplers,” Journal of Business and Economic Statistics, 33, 192–202. DOI: 10.1080/07350015.2014.940081.

- Everitt, R. G. (2012), “Bayesian Parameter Estimation for Latent Markov Random Fields and Social Networks,” Journal of Computational and Graphical Statistics, 21, 940–960. DOI: 10.1080/10618600.2012.687493.

- Everitt, R. G., Johansen, A. M., Rowing, E., and Evdemon-Hogan, M. (2017), “Bayesian Model Comparison With Un-Normalised Likelihoods,” Statistics and Computing, 27, 403–422. DOI: 10.1007/s11222-016-9629-2.

- Everitt, R. G., Prangle, D., Maybank, P., and Bell, M. (2017), “Marginal Sequential Monte Carlo for Doubly Intractable Models,” arXiv no. 1710.04382.

- Friel, N. (2013), “Evidence and Bayes Factor Estimation for Gibbs Random Fields,” Journal of Computational and Graphical Statistics, 22, 518–532. DOI: 10.1080/10618600.2013.778780.

- Genz, A., and Bretz, F. (2009), Computation of Multivariate Normal and t Probabilities, Lecture Notes in Statistics, Heidelberg: Springer-Verlag.

- Gillespie, D. T. (1977), “Exact Stochastic Simulation of Coupled Chemical Reactions,” Journal of Physical Chemistry, 8, 2340–2361. DOI: 10.1021/j100540a008.

- Golightly, A., Henderson, D. A., and Sherlock, C. (2015), “Delayed Acceptance Particle MCMC for Exact Inference in Stochastic Biochemical Network Models,” Statistics and Computing, 25, 1039–1055. DOI: 10.1007/s11222-014-9469-x.

- Grelaud, A., Robert, C. P., and Marin, J.-M. (2009), “ABC Likelihood-Free Methods for Model Choice in Gibbs Random Fields,” Bayesian Analysis, 4, 317–336. DOI: 10.1214/09-BA412.

- Kong, A., Liu, J. S., and Wong, W. H. (1994), “Sequential Imputations and Bayesian Missing Data Problems,” Journal of the American Statistical Association, 89, 278–288. DOI: 10.1080/01621459.1994.10476469.

- Li, W., and Fearnhead, P. (2018), “On the Asymptotic Efficiency of Approximate Bayesian Computation Estimators,” Biometrika, 105, 285–299. DOI: 10.1093/biomet/asx078.

- Møller, J., Pettitt, A. N., Reeves, R. W., and Berthelsen, K. K. (2006), “An Efficient Markov Chain Monte Carlo Method for Distributions With Intractable Normalising Constants,” Biometrika, 93, 451–458. DOI: 10.1093/biomet/93.2.451.

- Murray, I., Ghahramani, Z., and MacKay, D. J. C. (2006), “MCMC for Doubly-Intractable Distributions,” in Proceedings of the 22nd Annual Conference on Uncertainty in Artificial Intelligence (UAI), pp. 359–366.

- Papamakarios, G., and Murray, I. (2016), “Fast Epsilon-Free Inference of Simulation Models With Bayesian Conditional Density Estimation,” arXiv no. 1605.06376.

- Picchini, U. (2014), “Inference for SDE Models via Approximate Bayesian Computation,” Journal of Computational and Graphical Statistics, 23, 1080–1100. DOI: 10.1080/10618600.2013.866048.

- Picchini, U., and Forman, J. L. (2016), “Accelerating Inference for Diffusions Observed With Measurement Error and Large Sample Sizes Using Approximate Bayesian Computation: A Case Study,” Journal of Statistical Computation and Simulation, 86, 195–213. DOI: 10.1080/00949655.2014.1002101.

- Prangle, D. (2016), “Lazy ABC,” Statistics and Computing, 26, 171–185. DOI: 10.1007/s11222-014-9544-3.

- Prangle, D., Everitt, R. G., and Kypraios, T. (2017), “A Rare Event Approach to High Dimensional Approximate Bayesian Computation,” Statistics and Computing, 28, 819–834. DOI: 10.1007/s11222-017-9764-4.

- Pritchard, J. K., Seielstad, M. T., Perez-Lezaun, A., and Feldman, M. W. (1999), “Population Growth of Human Y Chromosomes: A Study of Y Chromosome Microsatellites,” Molecular Biology and Evolution, 16, 1791–1798. DOI: 10.1093/oxfordjournals.molbev.a026091.

- R Core Team (2019), R: A Language and Environment for Statistical Computing, Vienna, Austria: R Foundation for Statistical Computing, available at https://www.R-project.org/.

- Robert, C. P., Beaumont, M. A., Marin, J.-M., and Cornuet, J.-M. (2011), “Adaptivity for ABC Algorithms: The ABC-PMC Scheme,” Biometrika, 96, 983–990.

- Roebuck, P. (2014), “matlab: MATLAB Emulation Package,” R Package Version 1.0.2, available at https://CRAN.R-project.org/package=matlab.

- Sisson, S. A., Fan, Y., and Tanaka, M. M. (2007), “Sequential Monte Carlo Without Likelihoods,” Proceedings of the National Academy of Sciences of the United States of America, 104, 1760–1765. DOI: 10.1073/pnas.0607208104.

- Sisson, S. A., Fan, Y., and Tanaka, M. M. (2009), “Correction: Sequential Monte Carlo Without Likelihoods,” Proceedings of the National Academy of Sciences of the United States of America, 106, 16889–16890.

- Stoehr, J. (2017), “A Review on Statistical Inference Methods for Discrete Markov Random Fields,” arXiv no. 1704.03331.

- Strens, M. (2004), “Efficient Hierarchical MCMC for Policy Search,” in Proceedings of the 21st International Conference on Machine Learning, p. 97.

- Tierney, L. (1998), “A Note on Metropolis–Hastings Kernels for General State Spaces,” Annals of Applied Probability, 8, 1–9. DOI: 10.1214/aoap/1027961031.

- van der Vaart, E., Beaumont, M. A., Johnston, A. S. A., and Sibly, R. M. (2015), “Calibration and Evaluation of Individual-Based Models Using Approximate Bayesian Computation,” Ecological Modelling, 312, 182–190. DOI: 10.1016/j.ecolmodel.2015.05.020.

- Wickham, H. (2016), ggplot2: Elegant Graphics for Data Analysis, New York: Springer-Verlag, available at https://ggplot2.tidyverse.org.

- Wilkinson, D. J. (2018), “smfsb: Stochastic Modelling for Systems Biology,” R Package Version 1.3, available at https://CRAN.R-project.org/package=smfsb.

- Wilkinson, D. J. (2011), Stochastic Modelling for Systems Biology (2nd ed.), Boca Raton, FL: Chapman & Hall/CRC Press.