?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

This article develops a sparsity-inducing version of Bayesian Causal Forests, a recently proposed nonparametric causal regression model that employs Bayesian Additive Regression Trees and is specifically designed to estimate heterogeneous treatment effects using observational data. The sparsity-inducing component we introduce is motivated by empirical studies where not all the available covariates are relevant, leading to different degrees of sparsity underlying the surfaces of interest in the estimation of individual treatment effects. The extended version presented in this work, which we name Shrinkage Bayesian Causal Forest, is equipped with an additional pair of priors allowing the model to adjust the weight of each covariate through the corresponding number of splits in the tree ensemble. These priors improve the model’s adaptability to sparse data generating processes and allow to perform fully Bayesian feature shrinkage in a framework for treatment effects estimation, and thus to uncover the moderating factors driving heterogeneity. In addition, the method allows prior knowledge about the relevant confounding covariates and the relative magnitude of their impact on the outcome to be incorporated in the model. We illustrate the performance of our method in simulated studies, in comparison to Bayesian Causal Forest and other state-of-the-art models, to demonstrate how it scales up with an increasing number of covariates and how it handles strongly confounded scenarios. Finally, we also provide an example of application using real-world data. Supplementary materials for this article are available online.

1 Introduction

Inferring the treatment effect at an individual level in a population of interest lies at the heart of disciplines such as precision medicine or personalized advertisement, where decision making in terms of treatment administration is based on individual characteristics. The ever-increasing amount of observational data available offers a unique opportunity for drawing inferences at the resolution of each individual. However, since Individual Treatment Effects (ITEs) are never directly observable in the real world, standard supervised learning techniques cannot be directly applied. Moreover, the process of treatment allocation in large, observational datasets is usually unknown and can obscure the effect of the actual treatment through confounding (Dawid Citation2000; Pearl Citation2009a; Imbens and Rubin Citation2015).

The application of statistical learning tools (Hastie, Tibshirani, and Friedman Citation2001) for causal inference has led to significant improvements in the estimation of heterogeneous treatment effects. These improvements stem from the predictive power of advanced nonparametric regression models, that, after being appropriately adapted to the causal inference setting, can leverage large observational datasets and capture nonlinear relationships. Caron, Manolopoulou, and Baio (Citation2020) provide a review of the most recent and popular methods, together with a comparison of their performance.

Two of the early contributions that paved the way toward the use of tree-based statistical learning tools on large datasets for causal analysis purposes are Foster, Taylor, and Ruberg (Citation2011) and Hill (Citation2011), who advocate the use of tree ensemble methods for the estimation of ITE. The former focuses on randomized experiments and makes use of Random Forests (Breiman Citation2001; Lu et al. Citation2018); the latter instead addresses the problem from an observational study perspective, and employs Bayesian Additive Regression Trees (BART) (Chipman, George, and McCulloch Citation1998, Citation2010). Another early contribution that focuses instead on Average Treatment Effect (ATE) estimation in observational studies is Traskin and Small (Citation2011), where the authors propose classification trees for identifying the study population with sufficient overlap. A more recent and popular tree-based method for ITE estimation is Causal Forests (CF) (Athey and Imbens Citation2016; Wager and Athey Citation2018), a causal implementation of Random Forests. Hahn, Murray, and Carvalho (Citation2020) instead build on the work of Chipman, George, and McCulloch (Citation2010) and Hill (Citation2011) to formulate a new BART framework for causal analysis, under the name of Bayesian Causal Forests (BCF), specifically designed to address strong confounding and separate between prognostic and moderating effects of the covariates when estimating ITE. The prognostic effect (or prognostic score) is defined as the impact of the covariates on the outcome in the absence of treatment, while the moderating effect is the impact of the covariates on the response to treatment.

A different stream of contributions that do not focus on any specific regression model is that of Meta-Learners. Meta-Learners are meta-algorithms that design a procedure to estimate ITE via any suitable off-the-shelf supervised regression model (e.g., random forests, neural networks, etc.). Recent popular work on Meta-Learners include Künzel et al. (Citation2017), where the authors develop a framework to deal with unbalanced treatment groups (named X-learner), and Nie and Wager (Citation2020), where parameterization in Robinson (Citation1988) is exploited (hence, the name R-learner) to design a direct loss function on the treatment effect surface for parameter tuning. Among other notable works on ITE estimation, Alaa and van der Schaar (Citation2017, Citation2018) adopt a multi-task learning approach using Gaussian Processes, while Johansson, Shalit, and Sontag (Citation2016), Shalit, Johansson, and Sontag (Citation2017), and Yao et al. (Citation2018) employ deep neural networks to learn balanced representations that aim at minimizing a distributional distance between treatment groups. Moreover, contributions such as those of Powers et al. (Citation2018); Zhao, Small, and Ertefaie (Citation2018); Zimmert and Lechner (Citation2019), and Fan et al. (Citation2020) focus specifically on high-dimensional settings, where a large number of covariates is available. In particular, Zhao, Small, and Ertefaie (Citation2018) use Robinson (Citation1988) decomposition to estimate nuisance parameters with machine learning methods and isolate the treatment effect function, which is then fit via LASSO regression, for a more interpretable output on effect modifiers. Zimmert and Lechner (Citation2019) and Fan et al. (Citation2020) instead propose and derive properties of a cross-validated two stage estimator where nuisance parameters are fitted with ML methods in the first stage, and a nonparametric local kernel smoother is instead applied to fit treatment effect.

Regression-based methods for treatment effect estimation typically leverage large samples in observational studies. However, large observational data often also feature a large number of pretreatment covariates, many of which may not affect the response variable in question nor act as a modifier of the treatment effect. Hence, the task of estimating ITE, whose complexity inevitably depends on the smoothness and sparsity of the outcome surface (Alaa and van der Schaar Citation2018), necessitates regularization. At the same time, prior subject-matter knowledge on the relative importance of the covariates may be available, and can improve estimates if embedded in the model. In light of these considerations, none of the aforementioned approaches mentioned allow to jointly: (i) account for heterogeneous smoothness and sparsity across covariates; (ii) tease apart prognostic and moderating covariates through targeted feature shrinkage; (iii) incorporate prior knowledge on the relevant covariates and their relative impact on the outcome. Carefully designed regularization can lead to improved ITE estimates and inferences on prognostic and moderating factors, since including a large number of covariates in a fully-saturated model to adjust for confounding may lead to misspecification. In this work, we propose an extension of the Bayesian Causal Forest framework (Starling et al. Citation2019; Hahn, Murray, and Carvalho Citation2020), consisting in additional Dirichlet priors placed on the trees splitting probabilities (Linero Citation2018), that implement fully Bayesian feature shrinkage on the prognostic and moderating covariates, and allow the incorporation of prior knowledge on their relative importance. BART (and consequently BCF) was originally designed to adapt to smoothness but not to sparsity.Footnote1 Our extended version of the model can be easily fitted with a slight modification of the existing MCMC algorithm and provably results in improved performance thanks to its better adaptability to sparse DGPs, for negligible extra computational cost.

The rest of the article is organized as follows. Section 2 introduces the problem of estimating treatment effects using the Neyman–Rubin causal model framework, and formulates the necessary assumptions to recover treatment effect estimates under confounded observational data. Section 3 offers an overview on Bayesian Additive Regression Trees and their popular causal version, Bayesian Causal Forest. Section 4 introduces our shrinkage-inducing extension, under the name of “Shrinkage Bayesian Causal Forest.” Section 5 presents results from simulated studies carried out to compare Shrinkage Bayesian Causal Forest performance with other state-of-the-art models. Section 6 provides an example of analysis using data from the Infant Health and Development Program aimed at investigating the effects of early educational support on cognitive abilities in low birth weight infants. Section 7 concludes with a discussion.

2 Problem Framework

In this section we outline the problem of deriving an estimator for ITE using observational data, using the formalism of the Neyman–Rubin potential outcomes framework (Rubin Citation1978; Imbens and Rubin Citation2015).Footnote2 We consider a setup where the outcome variable is continuous and the treatment assignment is binary (of the type exposure versus nonexposure), but most of the notions in this section can be generalized to noncontinuous responses and more than two treatment arms. For each individual , the two potential outcomes are defined as

, where

is the binary treatment assignment, with Zi = 1 indicating exposure to the treatment, while Zi = 0 nonexposure. We consider continuous type of outcomes such that

. Given the potential outcomes and the binary treatment assignment, ITE is defined, for each individual i, as the difference

. The fundamental problem of causal inference is that, for each i, we get to observe only one of the two potential outcomes

, specifically the one corresponding to the realization of Zi, that is,

, so that ITE is never observable.

Given a dataset of sample size N, where

are P pretreatment covariates, the ITE is the (unobserved) difference

. In practice, the goal is often to estimate the Conditional Average Treatment Effects (CATE), defined as

(1)

(1)

CATE is the conditional mean of the ITE for the given value of the covariates, averaging across individual-level noise, so it is the best estimator for ITE in terms of mean squared error.

In order to estimate through the observational quantities

, we rely on a common set of assumptions to achieve identification. First of all, as already implied by the notation introduced above, we are assuming that Stable Unit Treatment Value Assumption (SUTVA) holds, ensuring that one unit’s outcome is not affected by other units’ assignment to treatment (no interference). The second assumption is unconfoundedness, which can be expressed through the conditional independence

, and it rules out the presence of unobserved common causes of Z and Y (i.e., no unobserved confounders). The third and final assumption is common support, which means that all units have a probability of falling into either treatment groups which is strictly between 0 and 1. More formally, after defining the propensity score (Rosenbaum and Rubin Citation1983) as the probability of unit i being selected into treatment given

,

(2)

(2) common support implies

, so that there is no deterministic assignment to one of the groups given features

. Note that unconfoundedness and common support are automatically satisfied in the case of fully randomized experiments. While the degree of overlap between the two treatment groups can be typically examined in the data, SUTVA and unconfoundedness are untestable assumptions, and their plausibility must be justified based on domain knowledge.

In this work, we focus on nonparametric regression-based approaches to CATE estimation. Imbens (Citation2004) offers a comprehensive overview on different methodologies (regression-based, matching-based, etc.) to derive different causal estimands of interest, such as sample and population ATE and CATE, Average Treatment effect on the Treated (ATT), Conditional Average Treatment effect on the Treated (CATT) etc. A nonparametric regression approach entails modeling the response surface as an unknown function of the covariates and treatment assignment indicator, and an error term. As typically done in the vast majority of the contributions on regression-based CATE estimation, we assume that the error term is additive and normally distributed with zero mean, such that Yi is modeled as(3)

(3) and where

is of unknown form, and learnt from the data. A broad variety of methods to retrieve a CATE estimator from EquationEquation (3)

(3)

(3) have been developed in the literature (see, Caron, Manolopoulou, and Baio Citation2020 for a review). Among these, we will follow in particular the one presented in Hahn, Murray, and Carvalho (Citation2020), that we introduce and discuss in the next section.

3 BART for Causal Inference

Bayesian Additive Regression Trees (BART) are a nonparametric regression model that estimates the conditional expectation of a response variable Yi via a “sum-of-trees.” Considering the regression framework in (3), one can use BART to flexibly represent as

(4)

(4) where m is the total number of trees in the model; the pair

defines the structure of the jth tree, namely Tj embeds the collection of binary split rules while

the collection of b terminal nodes in that tree;

is a tree-specific function mapping the predictors

to the set of terminal nodes Mj, following the set of binary split rules expressed by Tj. The conditional mean function

fit is computed by summing up all the terminal nodes ψij assigned to the predictors

by the tree functions

, that is,

. We refer the reader to Chipman, George, and McCulloch (Citation1998, Citation2010) and Ročková and Saha (Citation2019) for more details about BART priors and inference.

3.1 Bayesian Causal Forests

As briefly mentioned in Section 2, we will follow the representation proposed by Hahn, Murray, and Carvalho (Citation2020), that avoids imposing direct regularization on in EquationEquation (3)

(3)

(3) . Hahn et al. (Citation2018) and Hahn, Murray, and Carvalho (Citation2020) in fact show that regularization on

can generate unintended bias in the final estimation of CATE, and propose a simple reparameterization of (3) that uses a two-stage regression approach that dates back to the early contributions of Heckman (Citation1979) and Robinson (Citation1988). The two-stage representation reads:

(5)

(5)

(6)

(6)

The first stage (5) deals with propensity score estimation, for which any probabilistic classifier is suitable (e.g., logistic regression, Probit BART, neural nets, etc.). In the simulated experiments shown in later sections, we will specifically employ either default probit BART or a one-hidden-layer neural network. In general, it is advisable not to rely on aggressive regularization in the estimation of , as this could accidentally result into one or more main confounders being over-shrunk and/or left out of the model. The second stage (6) estimates the prognostic score

, defined as the effect of the covariates

on the outcome Yi in the absence of treatment

, and CATE

. Note that we use slightly different notation for the covariates in

and

. This is to highlight the fact that the set of available covariates

might consist of four different types (Herren and Hahn Citation2020): (i) confounders, that is, direct and indirect common causes of Z and Y; (ii) prognostic covariates, that is, predictors of

only; (iii) moderators, that is, predictors of

only; (iv) propensity covariates, entering only

equation. Any covariate that does not fall into one of these categories is an irrelevant/nuisance predictor.

The two-stage procedure described above belongs to a class of models known as “modularized,” as opposed to joint-models, that attempt to embed uncertainty around propensity scores in a single stage, which nonetheless can lead to poor estimates due to feedback issues in the approximation of the full posterior (Zigler et al. Citation2013; Zigler and Dominici Citation2014). See Jacob et al. (Citation2017) for a thorough discussion on the issue of modularized versus joint models.

A Bayesian Causal Forest model (Hahn, Murray, and Carvalho Citation2020) is based on the reparameterization of the second stage regression (6). The advantage of this reparameterization from a Bayesian standpoint lies in the fact that separate priors, offering targeted regularization, can be placed on the prognostic score and on CATE

directly. This approach mitigates unintended bias attributable to what the authors call Regularization Induced Confounding (RIC). The intuition behind RIC is that CATE posterior is strongly influenced by the regularization effects of the prior on

in EquationEquation (3)

(3)

(3) , such that posterior estimates of CATE are bound to be biased, even more so in presence of strong confounding, such as when treatment selection is suspected to be “targeted,” that is, when individuals are selected into treatment based on the prediction of an adverse potential outcome if left untreated. In order to alleviate confounding from targeted selection, the authors suggest to employ propensity score estimates obtained from the first stage

as an additional covariate in the estimation of

.

In practice, a BCF model assigns a default BART prior to , while a prior with stronger regularization is chosen for

, as moderating patterns are believed to be simpler. The BART prior on

, compared to the default specification, consists in the use of a smaller number of trees in the ensemble (50 trees instead of 200), and a different combination of hyperparameters that govern the depth of each tree. In particular, in the context of BART priors, the probability that a node at depth

in a tree is nonterminal is given by

, where

are the hyperparameters to set (Chipman, George, and McCulloch Citation2010). The default specification

already has a shrinkage effect that accommodates small trees. The BCF prior on

instead sets

, with the purpose of assigning higher probability mass to even smaller trees. This combination of hyperparameters in the CATE prior allows to detect weak heterogeneous patterns, and provides robustness in case of homogeneous treatment effects.

For the reasons illustrated above, BCF tends to outperform BART and other tree-based methods for CATE estimation, such as Causal Forests (Wager and Athey Citation2018). As we will illustrate in the following sections, our work extends the BCF framework by introducing explicit shrinkage of irrelevant predictors, which results into higher computational efficiency, and accommodates different levels of smoothness across covariates, while, at the same time, returning interpretable measures of feature importance in the estimation of and

, separately.

4 Shrinkage Bayesian Causal Forests

BART, and consequently BCF, are known to handle sparsity quite well, thanks to the fact that splitting variables are chosen uniformly at random. However, they do not actively implement heterogeneous sparsity, nor feature shrinkage, which inevitably implies assigning equal level of heterogeneity to every covariate in the model. We will briefly illustrate in this section the concept of feature shrinkage in the context of tree ensemble models such as BART. Let us define first as the vector of splitting probabilities of each predictor

, where each sj represents the probability for the jth predictor of being chosen as a splitting variable in one of the decision nodes of a tree. The default version of BART places a uniform distribution over the splitting variables, meaning that each predictor has equal chance of being picked as a splitting variable:

. As a consequence, predictors are virtually given equal prior importance in the model. A sparsity-inducing solution in this framework implies having a vector s of “stick-breaking” posterior splitting probabilities where ideally the entries corresponding to irrelevant predictors are near-zero, while the ones corresponding to relevant predictors are significantly higher than

. Posterior splitting probabilities in this context can be intuitively viewed as a measure of variables importance (Breiman Citation2001). A complementary, decision-theoretic interpretation of sparsity-inducing solutions in this setup is given by the posterior probabilities that a predictor j appears in a decision node at least once in the ensemble. The two interpretations above (variables importance and probability of inclusion) are interchangeable and qualitatively lead to the same conclusions. In the next section we review how a simple extension of BART proposed by Linero (Citation2018) can accommodate sparse solutions as described above, and how this modified version of BART can be put to use in the context of Bayesian Causal Forests.

4.1 Dirichlet Additive Regression Trees

Dirichlet Additive Regression Trees (Linero Citation2018), or DART, constitute an effective and practical way of inducing sparsity in BART. The proposed modification consists in placing an additional Dirichlet prior on the vector of splitting probabilities s, which triggers a consequent posterior update in the backfitting MCMC algorithm. The Dirichlet prior on s reads(7)

(7) where α is the hyperparameter governing the a priori preference for sparsity. Lower values of α correspond to sparser solutions, that is, fewer predictors included in the model. The hyperparameter α is in turn assigned a prior distribution, in order to deal with unknown degree of sparsity. This prior is chosen to be a

distribution, placed over a standardized version of the α parameter, of the following form

(8)

(8)

where the default parameter values are

. The combination of values a = 0.5 and b = 1 assigns higher probability to low values of α, thus, giving preference to sparse solutions (the combination

would instead revert back to default BART splitting probabilities, that is, uniform distribution over the splitting variables). The prior is assigned to the standardized version of α in EquationEquation (8)

(8)

(8) instead of α directly, as this allows to easily govern preference for sparsity through the parameter ρ. If one suspects that the level of sparsity is, although unknown, rather high, setting a smaller value of ρ facilitates even sparser solutions.

The modified version of DARTs MCMC implies an extra step to update s, according to the conjugate posterior(9)

(9) where the update depends on uj, defined as the number of attempted splits on the jth predictor in the current MCMC iteration. The phrase “attempted splits” refers to the fact that BART MCMC algorithm generates trees through a branching process undergoing a Metropolis–Hastings step, so that a proposed tree in the process might be rejected, but the chosen splitting variables are counted anyway in

(Chipman, George, and McCulloch Citation1998, Citation2010; Linero and Yang Citation2018).

The rationale behind the update in EquationEquation (9)(9)

(9) follows the natural Dirichlet-Multinomial conjugacy. The more frequently a variable is chosen for a splitting rule in the trees of the ensemble in a given MCMC iteration (or equivalently the higher is uj), the higher the weight given to that variable by the updated

in the next MCMC iteration. Hence, the higher sj, the higher the chance for the jth predictor of being drawn as splitting variable from the multinomial distribution described by

. This extra Gibbs step comes at negligible computational cost when compared to default BART typical running time.

4.2 Shrinkage BCF Priors

Similarly to Linero (Citation2018), symmetric Dirichlet priors can be straightforwardly embedded in the Bayesian Causal Forest framework to induce sparsity in the estimation of prognostic and moderating effects. Bearing in mind that, as described in the previous section, BCF prior consists in two different sets of independent BART priors, respectively, placed on the prognostic score and CATE

, our proposed extension implies adding an additional Dirichlet prior over the splitting probabilities to these BART priors. Throughout the rest of the work we will consider the case where

, that is, where the same set of covariates is used for the estimation of

and

(see EquationEquation (6)

(6)

(6) for reference), but the ideas easily extend to scenarios where a different set of covariates is designed, based on domain knowledge, to be used for

and

Footnote3. The additional priors are, respectively,

(10)

(10) where the

’s parameters are chosen to be

as default. The hyperparameter ρ is set equal to

in the case of the prognostic score (

) since, when estimating

, we make use of P covariates plus an estimate of the propensity score

as an additional covariate. In the case of

, we set it equal to

to give preference to even more targeted shrinkage, as the CATE is typically believed to display simple heterogeneity patterns and a higher degree of sparsity compared to the prognostic score.

Algorithm 1: Bayesian Backfitting MCMC in Shrinkage BCF

Input: Data (X, Z, Y)

Output: MCMC samples offor

do

Result: Sample for

do

Sample tree structure

Sample terminal nodes

(conjugate normal)end

Sample

Result: Sample for

do

Sample tree structure

Sample terminal nodes

(conjugate normal)end

Sample

Result: Sample

Sample end

We refer to this setup as Shrinkage Bayesian Causal Forest (Shrinkage BCF). Naturally, the two Dirichlet priors trigger two separate extra steps in the Gibbs sampler, implementing draws from the conjugate posteriors:(11)

(11)

Shrinkage BCFs setup allows first of all to adjust to different degrees of sparsity in and

, and thus to induce different levels of smoothness across the covariates. Second, it naturally outputs feature importance measures on both the prognostic score and CATE separately, given that separate draws of the posterior splitting probabilities are returned. The raw extra computational time, per MCMC iteration, is slightly greater, albeit negligible, compared to default BCF; however, Shrinkage BCF demonstrates higher computational efficiency thanks to the fact that it avoids splitting on irrelevant covariates. Thus, it necessitate far fewer MCMC iterations to converge, and improves performance under sparse DGPs. A sketch of pseudo-code illustrating the backfitting MCMC algorithm in Shrinkage BCF can be found in Algorithm 1.

The Dirichlet priors in Shrinkage BCF can be also adjusted to convey prior information about the relevant covariates and their relative impact on the outcome. This can be achieved by introducing a set of scalar prior weights , such that

(12)

(12)

The weights can take on different values for each covariate and can be set separately for prognostic score and CATE. If the jth covariate is believed to be significant in predicting , then its corresponding prior weight

can be set higher than the others, in order to generate draws from a Dirichlet distribution that allocate higher splitting probability to that covariate. In the simulated experiment of Section 5.2 we will introduce a version of Shrinkage BCF with informative priors assigning higher a priori weight to the propensity score in

, to investigate whether this helps tackling strong confounding.

4.3 Targeted Sparsity and Covariate Heterogeneity

As a result of a fully Bayesian approach to feature shrinkage, Shrinkage BCF returns nonuniform posterior splitting probabilities that assign higher weight to more predictive covariates. This automatically translates into more splits along covariates with higher predictive power, compared to default BCF. To investigate whether this more strategic allocation of splitting probabilities in Shrinkage BCF leads to better performance, we test it against a default version of BCF including all the covariates and a version of BCF that already employs the subset of relevant covariates only. Think of the latter as a sort of “oracle” BCF that knows a priori the subset of relevant covariates, but may not assign different weights to them in terms of relative importance in the estimation of and

, respectively. To this end, we run a simple simulated example with P = 10 correlated covariates, of which only 5 are relevant, meaning that they exert some effect on the prognostic score or on CATE. We compare default BCF, “oracle” BCF using only the 5 relevant covariates and Shrinkage BCF using all the covariates (5 relevant and 5 nuisance). We generate the P = 10 covariates from a multivariate Gaussian

, where the entries of the covariance matrix are such that

, indicating positive correlation between predictors. Sample size is set equal to N = 1000. We then generate treatment assignment as

, where the propensity score is

(13)

(13) and

is the cumulative distribution function of a standard normal distribution. The prognostic score, CATE and response Yi are, respectively, generated as

(14)

(14)

In this experiment only the first five predictors are relevant. shows performances of the default BCF, “oracle” BCF run on just the 5 relevant predictors (oracle BCF-5) and Shrinkage BCF (SH-BCF), averaged over H = 500 Monte Carlo simulations. Performance of the methods is measured through: , defined as

; the quadratic loss function

(15)

(15) where

is the model-specific CATE estimate, while τi is the ground-truth CATE; and finally 95% frequentist coverage, defined as

, where

are the upper and lower bounds of 95% credible interval around

, returned by the MCMC. The loss function in (15) is also known as the Precision in Estimating Heterogeneous Treatment Effects (PEHE) from Hill (Citation2011). Bias, PEHE and coverage estimates are estimated by computing, for each of the H = 500 Monte Carlo simulations, their sample equivalents

and then averaging these over all the simulations. More precisely, reports

and coverage estimates together with 95% Monte Carlo confidence intervals.

Table 1 Sample average and 95% coverage for default BCF, “oracle” BCF which uses only the five relevant predictors (Oracle BCF-5) and shrinkage BCF (SH-BCF).

Shrinkage BCF shows better performance than default BCF as well as the “oracle” BCF version in terms of bias and , while reports just marginally lower coverage, indicating that the method allocates “stick-breaking” splitting probabilities in an efficient way and necessitates fewer MCMC iterations for convergence. The intuition as to why Shrinkage BCF performs better than “oracle” BCF, is that its priors allow not only to split more along relevant covariates instead of irrelevant ones (which explains the advantage over BCF), but also to split more frequently along covariates that are more predictive of the outcome, resulting in higher computational efficiency. To illustrate this concept, suppose we have the following trivial linear DGP with two covariates on the same scale,

. Both covariates are relevant for predicting Y, but X1 has a relatively higher impact in magnitude. DART, and thus Shrinkage BCF, allocate more splits along the more predictive dimension X1, while BART produces a similar level of splits along both X1 and X2 and hence requires a larger number of MCMC iterations and provides noisier estimates.

4.4 Targeted Regularization in Confounded Studies

The parameterization in BCF, and thus in Shrinkage BCF as well, is designed to effectively disentangle prognostic and moderating effects of the covariates and to induce different levels of sparsity when estimating these effects, in contrast to other methods for CATE estimation. The purpose of this section is to briefly illustrate with a simple example how naively introducing sparsity through a model that does not explicitly guard against RIC can have a detrimental effect on CATE estimates. To this end, we simulate, for N = 1000 observations, P = 5 correlated covariates as , where the entries of the covariance matrix are

. The treatment allocation, prognostic score, CATE and response Yi are then, respectively, generated as follows:

Notice that in this simple setup the prognostic effect is determined by the first covariate , while the moderating effect by the second covariate

. We run CATE estimation via three different methods that make use of DART priors. The first is a “Single-Learner” (S-learner) that employs DART (S-DART) to fit a single surface

and computes CATE estimates as

. The second is a “Two-Learner” (T-learner) that employs DART (T-DART) to fit two separate surfaces,

and

, for the two treatment groups and derives CATE estimates as

. The last method is our Shrinkage BCF (SH-BCF). Each of these methods is able to account for sparsity when estimating CATE. However, the interpretation of covariate importance is very different across them, due to the way the CATE estimator is derived. In particular, as indicated by the posterior splitting probabilities of each method in -DART fits a single surface

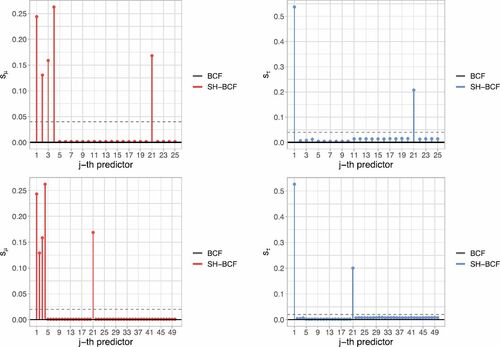

, where Z is treated as an extra covariate, so it ends up assigning most of the splitting probability to Z and then in turn to other relevant covariates. T-DART performs “group-specific” feature shrinkage, in that it fits separate surfaces for each of the treatment groups. Although both S-DART and T-DART turn out to select the relevant covariates for the final estimation of CATE, they are unable, by construction, to distinguish between prognostic and moderating ones. Shrinkage BCF instead, thanks to its parameterization, is capable of doing so, disentangling the two effects.

Table 2 Posterior splitting probabilities from S-learner DART, T-learner DART and Shrinkage BCF over the five available covariates.

In Section 5, we will show that Shrinkage BCF outperforms default BCF and other state-of-the-art methods in estimating CATE under two more challenging simulated exercises. Furthermore, in the supplementary materials we present results from few additional simulated experiments.

5 Simulated Experiments

In this section, we report results from two simulated studies carried out to demonstrate the performance of Shrinkage BCF and its informative prior version under sparse DGPs. The first simulated study is intended to evaluate Shrinkage BCF performance compared to other popular state-of-the-art methods for CATE estimation, and to show how it scales up with an increasing number of nuisance covariates. In addition, we will also illustrate how the method returns interpretable feature importance measures, as posterior splitting probabilities on and

. The second simulated setup instead mimics a strongly confounded study, and is designed to show how versions of Shrinkage BCF deal with targeted selection scenarios. In the supplementary materials, we present further results from four additional simulated exercises, designed to: (i) study what happens with perfectly known propensity scores in confounded settings; (ii) investigate computational advantage of DART priors; (iii) test Shrinkage BCFs reliability under increasingly larger P; (iv) consider different types of sparse DGPs. The R code implementing Shrinkage BCF is available at: https://github.com/albicaron/SparseBCF.

5.1 Comparison to Other Methods

The first setup consists of two parallel simulated studies, where only the total number of predictors (P = 25 and P = 50) is changed. The purpose underlying this setup is to illustrate how Shrinkage BCF relative performance scales up when nuisance predictors are added and the level of sparsity increases.

For both simulated exercises, sample size is set equal to N = 1000. In order to introduce correlation between the covariates, they are generated as correlated uniforms from a Gaussian Copula , where Θ is a covariance matrix such that

. A 40% fraction of the covariates is generated as continuous, drawn from a standard normal distribution

, while the remaining 60% as binary, drawn from a binomial

. Propensity score is generated as

(16)

(16) where

is the cumulative distribution function of a standard normal, and ηi is a noise component drawn from a uniform

. The binary treatment indicator is drawn as

. Prognostic score and CATE functions are simulated as follows:

(17)

(17)

Notice that only five predictors among , namely

, are relevant to the estimation of the prognostic score and CATE. Eventually, the response variable Yi is generated as usual:

(18)

(18)

The error term standard deviation is set equal to , where

is the sample standard deviation of the simulated prognostic score

in (17).

Performance of each method is evaluated through estimates, averaged over H = 1000 replications, reported together with 95% Monte Carlo confidence intervals. Data are randomly split in 70% train set, used to train the models, and 30% test set to evaluate the model on unseen data;

estimates are reported both for train and test data.

The models evaluated on the simulated data are summarized in . We make use of the Meta-Learners terminology described in Künzel et al. (Citation2017) and Caron, Manolopoulou, and Baio (Citation2020). The first set of models includes a S-learner and a T-learner least squares regressions (S-OLS and T-OLS), and a R-learner (Nie and Wager Citation2020) LASSO regression (R-LASSO). The second set consists just in a naive k-nearest neighbors (kNN) as a T-learner. The third set includes the following popular tree ensembles methods: Causal Forest (CF) (Wager and Athey Citation2018); a S-learner and a T-learner versions of BART (S-BART and T-BART) (Hill Citation2011; Green and Kern Citation2012; Sivaganesan, Müller, and Huang Citation2017) and DART (S-DART and T-DART); Bayesian Causal Forest (BCF) (Hahn, Murray, and Carvalho Citation2020); and finally our method, Shrinkage Bayesian Causal Forest (SH-BCF). The last set includes two causal multitask versions of Gaussian Processes, with stationary (CMGP) and nonstationary (NSGP) kernels, respectively, both implementing sparsity-inducing Automatic Relevance Determination over the covariates (Alaa and van der Schaar Citation2017, Citation2018).

Table 3 List of models tested on the simulated experiment in Section 5.1.

Performance of each method, for the two simulated scenarios with P = 25 and P = 50 covariates, respectively, is shown in . Results demonstrate the high adaptability and scalability of Shrinkage BCF, as the method displays the lowest estimated error in both simulated scenarios, and its performance is not undermined when extra nuisance covariates are added, while the other methods generally deteriorate.

Table 4 Train and test set estimates, together with 95% confidence interval, in the case of P = 25 covariates and P = 50 covariates scenarios.

shows how Shrinkage BCF correctly picks the relevant covariates behind both prognostic and moderating effects, in contrast to default BCF which assigns equal probability of being chosen as a splitting variable to each predictor. Notice also that results do not essentially vary between the P = 25 and the P = 50 scenarios (respectively, first and second row graphs in ), as Shrinkage BCF virtually selects the same relevant predictors.

Fig. 1 Shrinkage BCF posterior splitting probabilities for each single covariates, indexed on the x-axis, for (on the left) and

(on the right), in the scenarios with P = 25 predictors (first row) and P = 50 predictors (second row). Spikes indicate higher probability assigned by Shrinkage BCF to the relevant predictors. The horizontal dashed lines denote default BCF uniform splitting probabilities.

5.2 Strongly Confounded Simulated Study

This section presents results from a second simulated study, aimed at showing how Shrinkage BCF addresses scenarios characterized by strong confounding. In particular, the setup is designed around the concept of targeted selection, a common type of selection bias in observational studies, expressively tackled by the BCF framework, that implies a direct relationship between and

. We run the simulated experiment in the usual way, by first estimating the unknown propensity score; then we also rerun the same experiment assuming that propensity score is known (results in the supplementary materials), to gain insights by netting out effects due to propensity model misspecification.

We simulate N = 500 observations from P = 15 correlated covariates (the first five continuous and the remaining 10 binary), generated as correlated uniforms from the Gaussian Copula , where the covariance matrix is such that

. The relevant quantities are simulated as follows:

(19)

(19) where

is the logistic cumulative distribution function. The error’s standard deviation is set equal to half the sample standard deviation of the generated

. Targeted selection is introduced by generating the propensity score

as a function of the prognostic score

(Hahn, Murray, and Carvalho Citation2020). The BCF models tested on this simulated setup are: (i) Default BCF; (ii) agnostic prior Shrinkage BCF; (iii) agnostic prior Shrinkage BCF, without propensity score estimate as an additional covariate; (iv) Shrinkage BCF with informative prior on

only, where prior weight given to propensity score is kPS = 50; (v) Shrinkage BCF with the same prior as (iv), but kPS = 100. We test a variety of BCF versions to examine how they tackle confounding deriving from targeted selection. In particular, with (iv) and (v), we investigate whether nudging more splits on the propensity score covariate induces better handling of confounding and better CATE estimates. With (ii) and (iii) we study whether it is sensible to have propensity score as an extra covariate, once we have accounted for sparsity, in settings such as the one described in (19), where propensity

and prognostic score

are functions of the same set of covariates—more specifically

is a function of

.

We first compare the usual performance metrics (bias, , 95% coverage), averaged over H = 500 replications, which are gathered in , together with the average posterior splitting probability assigned to propensity score (

) by each model, where applicable. As for the posterior splitting probability

, we notice that in (ii) this is nearly zero, thus, not really different than not having

at all, as in (iii). This means that estimates of

do not virtually contribute a lot to the fit. Also, in (i) and (iv), the probability is more or less the same, meaning that, in this example, setting kPS = 50 implies assigning similar

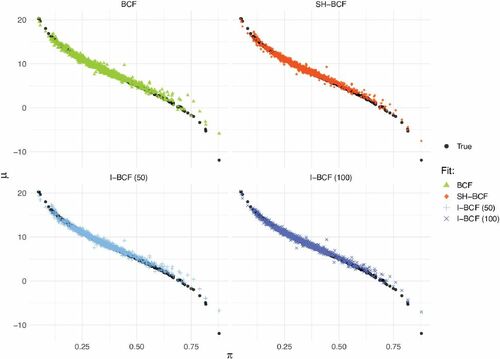

as default BCF, but allowing sparsity across the other covariates. In addition to the information in , for a better visual inspection, we plot the posterior fit of the

and

relationship for each specification of BCFFootnote4.

Table 5 , 95% coverage and posterior splitting probability on

—

— for: (i) default BCF; (ii) Shrinkage BCF; (iii) Shrinkage BCF without

; (iv) informative prior BCF with kPS = 50; (v) informative prior BCF with

.

The results corroborate those of the previous sections, as all the Shrinkage BCF versions (ii)–(v) outperform default BCF (i), thanks to their ability to adapt to sparsity (). In order to net out effects that are due to propensity model misspecification, we rerun the same example in (19) for H = 250, this time assuming that PS is known, thus, plugging in the true values in . Results can be found in the supplementary materials.

The picture emerging from this exercise is the following. Methods (ii)–(v) all have comparable performances in the realistic scenario where PS is to be estimated (see ); moreover, show that, in this case, they all effectively capture the relationship between and

. Hence, adjusting prior weights to nudge more splits on the estimated PS—methods (iv) and (v)—does not seem to improve performance. In the more abstract scenario where PS is assumed to be known (whose results are gathered in supplementary materials), and thus the relationship between

and

can be directly estimated, versions (i) and iii) perform poorly. The first because it does not induce sparsity, while iii) does not include

as extra covariate. Versions (ii), (iv), and (v) instead perform comparatively better as they virtually assign all the splitting probability to

, leaving the other covariates out of the model. This is unsurprising in a setup where

is known, as its relationship with

is straightforwardly captured. Even under this abstract scenario, specifications (iv) and (v), which assign higher weight to

, do not show improvements on performance, as also the agnostic prior version (ii) effectively allocates the entire splitting probability to the

covariate.

Fig. 2 Posterior fit of and

relationship, for default BCF, Shrinkage BCF (with

) and the two versions of informative prior BCF (kPS = 50 and

). All the specifications effectively capture the underlying relationship.

Results from the example where PS is perfectly known are in line with the findings of Hahn, Murray, and Carvalho (Citation2020) and shed light on why adding as an extra covariate is always helpful in tackling targeted selection. Naturally, the success of this practice in addressing strong confounding heavily depends on the quality of the approximation of

, that is, the quality of the propensity model that estimates

.

6 Case Study: The Effects of Early Intervention on Cognitive Abilities in Low Birth Weight Infants

In this section, we illustrate the use of Shrinkage BCF by revisiting the study in Brooks-Gunn et al. (Citation1992), which analyzes data from the Infant Health and Development Program (IHDP), found also in the more recent contribution of Hill (Citation2011). The IHDP was a randomized controlled trial aimed at investigating the efficacy of educational and family support services, with pediatric follow-ups, in improving cognitive skills of low birth weight preterm infants, who are known to have developmental problems regarding visual-motor and receptive language skills (McCormick Citation1985; McCormick, Gortmaker, and Sobol Citation1990). The study includes observations on 985 infants whose weight at birth was less than 2500 grams, across eight different sites. About one third of the infants were randomly assigned to treatment (Zi = 1), which consisted in routine pediatric follow-up (medical and developmental), in addition to frequent home visits to inform parents about child’s progress and communicate instructions about recommended activities for the child. Following Hill (Citation2011), the outcome variable (Yi) we use is the score in a Stanford Binet IQ test, whose values can range from a minimum of 40 to a maximum of 160, taken at the end of the intervention period (child’s age equal 3). The available final sample, obtained after removing 77 observations with missing IQ test score, consists of N = 908 data points, while the number of pretreatment covariates amounts to P = 31. A full list of the variables included in the analysis, together with a short description, can be found in the supplementary materials.

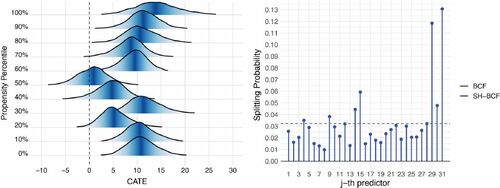

First, we estimate propensity score using a 1-hidden layer neural network classifier. Then we run Shrinkage BCF with default agnostic prior for MCMC iterations in total, but we discard the first

as burn-in. As output, we obtain the full posterior distribution on CATE estimates and splitting probabilities relative to each covariate. The left-hand pane graph of shows the estimated CATE posterior distribution for the individuals in the sample whose estimated propensity corresponds, or is closest, to the ith percentile of the estimated propensity distribution, where i is 0, 10, 20,…, 100. The represented stratified CATE posterior distribution relative to these propensity values conveys information about the uncertainty around the estimates and depicts an overall positive and rather heterogeneous treatment effects. The estimated average treatment effect is equal to

and standard deviation of CATE estimates, averaged over the post burn-in draws, is equal to 3.25, which is another sign of underlying heterogeneity patterns in the treatment response. The analysis would thus, benefit from further investigation about the heterogeneity of treatment effects, with the aim of distinguishing the impact within subgroups of individuals characterized by similar features (i.e., covariates values). Evidence on what the relevant drivers of heterogeneity behind treatment effect are is given by the posterior splitting probabilities on

(again averaged over the post burn-in draws), reported in the right-hand pane graph of , where few covariates end up being assigned relatively higher weights compared to the others. The two covariates that primarily stand out are the binary indicator on whether the mother’s ethnicity is white (29th predictor) and the ordinal variable indicating mother’s level of education (31st predictor).

Fig. 3 Left panel: Posterior distributions for the CATE estimates, obtained using Shrinkage BCF, corresponding to the approximated propensity percentiles (i.e., for individuals in the sample whose estimated propensity corresponds or is closest to the percentiles). Fill color is darker around the median. Right panel: Shrinkage BCFs posterior splitting probabilities on , averaged over the post burn-in MCMC draws.

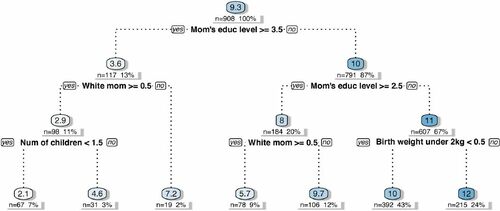

We proceed with a sensitivity analysis of treatment effect subgroups by following the suggestion of Hahn, Murray, and Carvalho (Citation2020); that is, we fit a decision tree partition algorithm using the R package rpart, by regressing mean CATE estimates obtained from Shrinkage BCF (averaged over the MCMC post burn-in draws) on the available covariates

. The purpose of this sensitivity analysis exercise is to identify the most homogeneous subgroups, namely the subgroups leading to an optimal partition, in terms of their estimated mean CATE, as a function of the covariates, and to examine how much the emerging partition agrees with the results on posterior splitting probabilities in .

Results are depicted in in the form of a decision tree, pruned at four levels. Zero splits trivially return ATE estimate (first node in ), while shallower nodes show CATE estimates averaged within the subgroup defined by the corresponding split rule. The first split is on the variable “Mother’s level of education,” specifically on whether the mother has attended college or not. The second level features a split on whether mother’s ethnicity is white in one branch, and a split on whether mother has finished high school in the other. These are exactly the same covariates selected by the posterior splitting probabilities. The last set of splits is again on mother’s ethnicity, number of children the mother has given birth to and whether child’s birth weight is less than 2kg. Within these subgroups, CATE estimates range from a minimum of +2.1 to a maximum of +12.

Fig. 4 Decision tree identifying the most homogeneous subgroups in terms of treatment response, based on splitting rules involving the available covariates. The nodes report CATE estimates averaged within the corresponding subgroup.

Both CATE’s posterior splitting probabilities as well as subgroup analysis particularly point to covariates related to mother’s education and ethnicity, in addition to birth weight (in the subgroup analysis only). Results concerning heterogeneity stemming from mother’s ethnicity and child’s birth weight are consistent with those in the original (Brooks-Gunn et al. Citation1992) and follow-up studies Brooks-Gunn et al. (Citation1994) and McCarton et al. (Citation1997), where the treatment effect is found to be lower for white mothers and for children with lower weight. The advantage of carrying out subgroup analysis through models such as Shrinkage BCF lies in the fact that subgroup identification can be done ex-post using CATE estimates, without the need of manually identifying the groups or partitioning the original sample ex-ante.

This illustrative example showed how Shrinkage BCF detects covariates which are responsible for the heterogeneity behind treatment impact in an example of real-world analysis, and how simple a posteriori partitioning of CATE estimates allows the derivation of optimal splitting rules to identify the most homogeneous subgroups in terms of treatment response. The analysis demonstrated that the estimation of individual (or subgroup) effects is a key factor for the correct evaluation and design of treatment administration policies.

7 Conclusions

In this work, we introduced a sparsity-inducing version of the popular nonparametric regression model Bayesian Causal Forest, recently developed by Hahn, Murray, and Carvalho (Citation2020), in the context of heterogeneous treatment effects estimation. The new version proposed, Shrinkage Bayesian Causal Forest, is based on the contributions by Linero (Citation2018) and Linero and Yang (Citation2018), and differs in the two additional priors that modify the way the model selects the covariates to split on. Shrinkage BCF allows targeted feature shrinkage on the prognostic score and CATE surfaces, and in addition returns posterior splitting probabilities, an interpretable measure of feature importance. In Section 5, we demonstrated its performance on simulated exercises that mimic confounded observational studies where only some covariates are relevant, while the rest of them constitutes nuisance predictors that can cause bias if included in a fully-saturated outcome model. Shrinkage BCF demonstrates competitive performance and scalability compared to the original version of BCF and to other state-of-the-art methods for CATE estimation, that tend to deteriorate with an increasing number of covariates. We also showed that it effectively tackles strong confounding from targeted selection, a property inherited from the BCF parameterization, and illustrated its use on a real-world study.

In the simulated studies of Sections 4 and 5, in addition to those in the supplementary materials, we have investigated Shrinkage BCF’s performance on different sparse DGPs. When we consider nonsparse DGPs instead, with few but all relevant covariates, default BCF might be the preferable option, even though Shrinkage BCF would not incur in much higher error.

Besides the implementation of feature shrinkage per se, the additional advantage of Shrinkage BCF specification is that the pair of Dirichlet priors placed on the splitting probabilities can be tailored to incorporate subject-matter knowledge about the importance and impact of the covariates, separately for prognostic score and CATE. Embedding of prior information in a Bayesian fashion represents a way of avoiding a completely agnostic model, that nonetheless benefits from the excellent predictive properties of a nonparametric regression algorithm such as BART. Hence, the informative version of Shrinkage BCF can be useful in applied studies with limited sample size, where a priori knowledge is possessed and can be efficiently incorporated without losing the benefits of using a powerful nonlinear model.

Finally, as highlighted in different parts of the manuscript, we stress how the main advantage of DART (and consequently Shrinkage BCF) over BART (and BCF) is very much computational and improves performance in sparse DGPs settings exclusively. The MCMC convergence in DART is faster, as the model is urged to split more and more eagerly along the most predictive features. However, DART and Shrinkage BCF do not perform variable selection explicitly. An interesting future direction would be to augment DART priors to include predictor-specific inclusion parameters, to completely select out irrelevant predictors.

Supplemental Material

Download Zip (630.6 KB)Supplementary Materials

Supplementary materials include a pdf file describing additional simulated experiments, and code and data to replicate all the simulated and real-world examples.

Additional information

Funding

Notes

1 Regularization in BART is introduced via shallow trees structures, to avoid overfitting (similarly to Gradient Boosting). Linero and Yang (2018) proposed a way to further enhance smoothness adaptation in BART through a probabilistic version of the trees, where inputs follow a probabilistic, rather than deterministic, path to the terminal nodes.

2 Note that identification of causal effects can be achieved also with other causal frameworks, such as do-calculus in Structural Causal Models (Pearl 2009a,b, 2018), or decision-theoretic approach (Dawid Citation2000, 2015), and the contribution of this work, which concerns solely estimation, still apply.

3 In certain cases, the set of pretreatment covariates might benefit from an initial screening by the researcher in the design of the study, and later undergo feature shrinkage in Shrinkage BCF, with the possibility of incorporating further a priori knowledge through the prior distributions, as described later in this section. As we will show in Section 4.3, in fact, Shrinkage BCF not only adjusts to sparse data generating processes (DGPs) per se, but allocates splitting probabilities in a more efficient way among the covariates, compared to uniformly at random splits, increasing computational efficiency.

4 We avoid plotting the fit for (iii) Shrinkage BCF without , since it yields very similar results to (ii) Shrinkage BCF with

—In Table 5, (ii) allocates nearly 0% splits to

, as in (iii).

References

- Alaa, A., and van der Schaar, M. (2018), “Limits of Estimating Heterogeneous Treatment Effects: Guidelines for Practical Algorithm Design,” in Proceedings of the 35th International Conference on Machine Learning (Vol. 80), pp. 129–138.

- Alaa, A. M., and van der Schaar, M. (2017), “Bayesian Inference of Individualized Treatment Effects Using Multi-Task Gaussian Processes,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, NIPS’17, pp. 3427–3435.

- Athey, S., and Imbens, G. (2016), “Recursive Partitioning for Heterogeneous Causal Effects,” Proceedings of the National Academy of Sciences, 113, 7353–7360. DOI: 10.1073/pnas.1510489113.

- Breiman, L. (2001), “Random Forests,” Machine Learning, 45, 5–32. DOI: 10.1023/A:1010933404324.

- Brooks-Gunn, J., Liaw, F., and Klebanov, P. K. (1992), “Effects of Early Intervention on Cognitive Function of Low Birth Weight Preterm Infants,” The Journal of Pediatrics, 120, 350–359. DOI: 10.1016/S0022-3476(05)80896-0.

- Brooks-Gunn, J., McCarton, C., Casey, P., McCormick, M., Bauer, C., Bernbaum, J., Tyson, J., Swanson, M., Bennett, F., and Scott, D. (1994), “Early Intervention in Low-Birth-Weight Premature Infants. Results through Age 5 Years from the Infant Health and Development Program,” JAMA, 272, 1257–1262. DOI: 10.1001/jama.272.16.1257.

- Caron, A., Manolopoulou, I., and Baio, G. (2020), “Estimating Individual Treatment Effects Using Non-parametric Regression Models: A Review,” arXiv:2009.06472.

- Chipman, H. A., George, E. I., and McCulloch, R. E. (1998), “Bayesian CART Model Search,” Journal of the American Statistical Association, 93, 935–948. DOI: 10.1080/01621459.1998.10473750.

- Chipman, H. A., George, E. I., and McCulloch, R. E. (2010), “BART: Bayesian Additive Regression Trees,” The Annals of Applied Statistics, 4, 266–298. DOI: 10.1214/09-AOAS285.

- Dawid, A. P. (2000), “Causal Inference Without Counterfactuals,” Journal of the American Statistical Association, 95, 407–424. DOI: 10.1080/01621459.2000.10474210.

- Dawid, A. P. (2015), “Statistical Causality from a Decision-Theoretic Perspective,” Annual Review of Statistics and Its Application, 2, 273–303.

- Fan, Q., Hsu, Y.-C., Lieli, R. P., and Zhang, Y. (2020), “Estimation of Conditional Average Treatment Effects with High-Dimensional Data,” Journal of Business & Economic Statistics, 40, 313–327.

- Foster, J. C., Taylor, J. M. G., and Ruberg, S. J. (2011), “Subgroup Identification from Randomized Clinical Trial Data,” Statistics in Medicine, 30, 2867–2880. DOI: 10.1002/sim.4322.

- Green, D. P., and Kern, H. L. (2012), “Modeling Heterogeneous Treatment Effects in Survey Experiments with Bayesian Additive Regression Trees,” Public Opinion Quarterly, 76, 491–511. DOI: 10.1093/poq/nfs036.

- Hahn, P. R., Carvalho, C. M., Puelz, D., and He, J. (2018), “Regularization and Confounding in Linear Regression for Treatment Effect Estimation,” Bayesian Analysis, 13, 163–182. DOI: 10.1214/16-BA1044.

- Hahn, P. R., Murray, J. S., and Carvalho, C. M. (2020), “Bayesian Regression Tree Models for Causal Inference: Regularization, Confounding, and Heterogeneous Effects,” Bayesian Analysis, 15, 965–1056. DOI: 10.1214/19-BA1195.

- Hastie, T., Tibshirani, R., and Friedman, J. (2001), The Elements of Statistical Learning, Springer Series in Statistics, New York: Springer.

- Heckman, J. J. (1979), “Sample Selection Bias as a Specification Error,” Econometrica, 47, 153–161. DOI: 10.2307/1912352.

- Herren, A., and Hahn, P. R. (2020), “Semi-Supervised Learning and the Question of True Versus Estimated Propensity Scores,” arXiv:2009.06183.

- Hill, J. L. (2011), “Bayesian Nonparametric Modeling for Causal Inference,” Journal of Computational and Graphical Statistics, 20, 217–240. DOI: 10.1198/jcgs.2010.08162.

- Imbens, G. W. (2004), “Nonparametric Estimation of Average Treatment Effects Under Exogeneity: A Review,” The Review of Economics and Statistics, 86, 4–29. DOI: 10.1162/003465304323023651.

- Imbens, G. W., and Rubin, D. B. (2015), Causal Inference for Statistics, Social, and Biomedical Sciences: An Introduction, New York: Cambridge University Press.

- Jacob, P. E., Murray, L. M., Holmes, C. C., and Robert, C. P. (2017), “Better Together? Statistical Learning in Models Made of Modules,” arXiv:1708.08719.

- Johansson, F. D., Shalit, U., and Sontag, D. (2016), “Learning Representations for Counterfactual Inference,” in Proceedings of the 33rd International Conference on International Conference on Machine Learning (Vo. 48), pp. 3020–3029.

- Künzel, S., Sekhon, J., Bickel, P., and Yu, B. (2017), “Meta-Learners for Estimating Heterogeneous Treatment Effects Using Machine Learning,” Proceedings of the National Academy of Sciences, 116, 4156–4165. DOI: 10.1073/pnas.1804597116.

- Linero, A. R. (2018), “Bayesian Regression Trees for High-Dimensional Prediction and Variable Selection,” Journal of the American Statistical Association, 113, 626–636. DOI: 10.1080/01621459.2016.1264957.

- Linero, A. R., and Yang, Y. (2018), “Bayesian Regression Tree Ensembles that Adapt to Smoothness and Sparsity,” Journal of the Royal Statistical Society, Series B, 80, 1087–1110. DOI: 10.1111/rssb.12293.

- Lu, M., Sadiq, S., Feaster, D. J., and Ishwaran, H. (2018), “Estimating Individual Treatment Effect in Observational Data Using Random Forest Methods,” Journal of Computational and Graphical Statistics, 27, 209–219. DOI: 10.1080/10618600.2017.1356325.

- McCarton, C., Brooks-Gunn, J., Wallace, I., Bauer, C., Bennett, F., Bernbaum, J., Broyles, R., Casey, P., McCormick, M., Scott, D., Tyson, J., Tonascia, J., and Meinert, C. (1997), “Results at Age 8 Years of Early Intervention for Low-Birth-Weight Premature Infants. The Infant Health and Development Program,” JAMA, 277, 126–132. DOI: 10.1001/jama.277.2.126.

- McCormick, M. (1985), “The Contribution of Low Birth Weight to Infant Mortality and Childhood Morbidity,” The New England Journal of Medicine, 312, 82–90. DOI: 10.1056/NEJM198501103120204.

- McCormick, M. C., Gortmaker, S. L., and Sobol, A. M. (1990), “Very Low Birth Weight Children: Behavior Problems and School Difficulty in a National Sample,” The Journal of Pediatrics, 117, 687–693. DOI: 10.1016/S0022-3476(05)83322-0.

- Nie, X., and Wager, S. (2020), “Quasi-Oracle Estimation of Heterogeneous Treatment Effects,” Biometrika, 108, 299–319. DOI: 10.1093/biomet/asaa076.

- Pearl, J. (2009a), Causality: Models, Reasoning and Inference (2nd ed.), New York: Cambridge University Press.

- Pearl, J. (2009b), “Remarks on the Method of Propensity Score,” Statistics in Medicine, 28, 1415–1416.

- Pearl, J. (2018), “Theoretical Impediments to Machine Learning with Seven Sparks from the Causal Revolution,” in Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining—WSDM ’18.

- Powers, S., Qian, J., Jung, K., Schuler, A., Shah, N. H., Hastie, T., and Tibshirani, R. (2018), “Some Methods for Heterogeneous Treatment Effect Estimation in High Dimensions,” Statistics in Medicine, 37, 1767–1787. DOI: 10.1002/sim.7623.

- Robinson, P. M. (1988), “Root-n-Consistent Semiparametric Regression,” Econometrica, 56, 931–954. DOI: 10.2307/1912705.

- Rosenbaum, P. R., and Rubin, D. B. (1983), “The Central Role of the Propensity Score in Observational Studies for Causal Effects,” Biometrika, 70, 41–55. DOI: 10.1093/biomet/70.1.41.

- Ročková, V., and Saha, E. (2019), “On Theory for BART,” in Proceedings of the Twenty-Second International Conference on Artificial Intelligence and Statistics (Vol. e 89), pp. 2839–2848.

- Rubin, D. B. (1978), “Bayesian Inference for Causal Effects: The Role of Randomization,” The Annals of Statistics, 6, 34–58. DOI: 10.1214/aos/1176344064.

- Shalit, U., Johansson, F. D., and Sontag, D. (2017), “Estimating Individual Treatment Effect: Generalization Bounds and Algorithms,” in Proceedings of the 34th International Conference on Machine Learning (Vol. 70), pp. 3076–3085.

- Sivaganesan, S., Müller, P., and Huang, B. (2017), “Subgroup Finding via Bayesian Additive Regression Trees,” Statistics in Medicine, 36, 2391–2403. DOI: 10.1002/sim.7276.

- Starling, J., Murray, J., Lohr, P., Aiken, A., Carvalho, C., and Scott, J. (2019), “Targeted Smooth Bayesian Causal Forests: An Analysis of Heterogeneous Treatment Effects for Simultaneous Versus Interval Medical Abortion Regimens Over Gestation,” The Annals of Applied Statistics, 15, 1194–1219.

- Traskin, M., and Small, D. S. (2011), “Defining the Study Population for an Observational Study to Ensure Sufficient Overlap: A Tree Approach,” Statistics in Biosciences, 3, 94–118. DOI: 10.1007/s12561-011-9036-3.

- Wager, S., and Athey, S. (2018), “Estimation and Inference of Heterogeneous Treatment Effects Using Random Forests,” Journal of the American Statistical Association, 113, 1228–1242. DOI: 10.1080/01621459.2017.1319839.

- Yao, L., Li, S., Li, Y., Huai, M., Gao, J., and Zhang, A. (2018), “Representation Learning for Treatment Effect Estimation from Observational Data,” in Advances in Neural Information Processing Systems, 31, 2633–2643.

- Zhao, Q., Small, D. S., and Ertefaie, A. (2018), “Selective Inference for Effect Modification via the Lasso,” arXiv:1705.08020.

- Zigler, C., Watts, K., Yeh, R., Wang, Y., Coull, B., and Dominici, F. (2013), “Model Feedback in Bayesian Propensity Score Estimation,” Biometrics, 69, 263–273. DOI: 10.1111/j.1541-0420.2012.01830.x.

- Zigler, C. M., and Dominici, F. (2014), “Uncertainty in Propensity Score Estimation: Bayesian Methods for Variable Selection and Model-Averaged Causal Effects,” Journal of the American Statistical Association, 109, 95–107. DOI: 10.1080/01621459.2013.869498.

- Zimmert, M., and Lechner, M. (2019), “Nonparametric Estimation of Causal Heterogeneity Under High-Dimensional Confounding,” arXiv:1908.08779.