?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Variational Bayes methods approximate the posterior density by a family of tractable distributions whose parameters are estimated by optimization. Variational approximation is useful when exact inference is intractable or very costly. Our article develops a flexible variational approximation based on a copula of a mixture, which is implemented by combining boosting, natural gradient, and a variance reduction method. The efficacy of the approach is illustrated by using simulated and real datasets to approximate multimodal, skewed and heavy-tailed posterior distributions, including an application to Bayesian deep feedforward neural network regression models. Supplementary materials, including appendices and computer code for this article, are available online.

1 Introduction

Variational Bayes (VB) methods are increasingly used for Bayesian inference in a wide range of challenging statistical models (Ormerod and Wand Citation2010; Blei, Kucukelbir, and McAuliffe Citation2017). VB approximates the target posterior density as the solution of an optimization problem over a simpler and more tractable family of distributions; this family is usually selected to balance accuracy and computational cost. VB methods are particularly useful in estimating the posterior densities of the parameters of complex statistical models when exact inference is impossible or computationally expensive. They are usually computationally much cheaper than methods such as Markov chain Monte Carlo (MCMC) which produce exact draws from the posterior as the number of simulated draws goes to infinity. We call this property of MCMC estimators “simulation consistent,” and for the rest of the article, we refer to MCMC-type algorithms as “exact” methods.

Much of the current literature focuses on Gaussian variational approximation (GVA) for approximating the target posterior density (Challis and Barber Citation2013; Titsias and Lázaro-Gredilla Citation2014; Kucukelbir et al. Citation2017; Tan and Nott Citation2018; Ong, Nott, and Smith Citation2018). A major problem with Gaussian approximations is that many posterior distributions are skewed, multimodal, and heavy-tailed. This is true in particular for complex statistical models such as Bayesian deep feedforward neural network (DFNN) regression models (Izmailov et al. Citation2021; Jospin et al. Citation2022). Gaussian variational approximations for such models poorly approximate their posterior distributions; see Section 5.

There are a number of attempts to overcome the issue with Gaussian variational approximation including Smith, Loaiza-Maya, and Nott (Citation2020) who propose Gaussian copula and skew Gaussian copula-based variational approximations; Guo et al. (Citation2017) and Miller, Foti, and Adams (Citation2017) who propose a mixture variational approximation; Rezende and Mohamed (Citation2015) who propose normalizing planar and radial flows. Other types of normalizing flows for variational inference are also proposed in the literature and are reviewed by Papamakarios et al. (Citation2021).

Our article makes a number of contributions. The first is to propose a flexible copula-based variational approximation by a mixture of Gaussians that builds on the Gaussian and skew-Gaussian copula approximations (Smith, Loaiza-Maya, and Nott Citation2020) and on the mixture of Gaussians variational approximations (Guo et al. Citation2017; Miller, Foti, and Adams Citation2017). We do not believe that such a variational mixture has been used before in the literature. The main idea of this part of our approach is to start by using a Gaussian or skew Gaussian copula-based approximation as the first component. This leads to a possible transformation of each parameter (marginal of the posterior) which may then make it easier to fit a joint distribution to the posterior of the transformed parameters. In our approach simpler Gaussian distributions are then used as the additional mixture components to make the optimization tractable while still improving the inference and prediction. Section 3 gives further details. The key insight is that of fitting a mixture after transforming each parameter, rather than using a simple multivariate family like the normal distribution. The proposed variational approximation allows fitting of multimodal, skewed, heavy tailed, and high-dimensional posterior distributions with complex dependence structures. We show in a number of examples that our approach gives more accurate inference and forecasts than the corresponding Gaussian copula and mixture of normals variational approximation. Although not proved in the article, it is not difficult to see that a version of an estimator based on a copula of a mixture will be a universal approximator of a multivariate distribution under reasonable assumptions because a mixture of normals is a universal approximator. It is interesting to note that a mixture of Gaussian copulas does not give a universal approximator of a multivariate distribution (Khaled and Kohn Citation2023).

Variational optimization of a copula-based mixture approximation is challenging in complex models with a large number of parameters because of the large number of variational parameters that need to be optimized. The boosting variational inference method in Guo et al. (Citation2017), and Miller, Foti, and Adams (Citation2017) is a promising recent approach to fit mixture type variational approximations. By adding a single mixture component at a time, the posterior approximation of the model parameters is refined iteratively. Miller, Foti, and Adams (Citation2017) uses stochastic gradient ascent (SGA) optimization with the reparameterization trick to fit a mixture of Gaussian densities. They find that the use of the reparameterization trick in the boosting variational method still results in a large variance and it is necessary to use many samples to estimate the gradient of the variational lower bound. Guo et al. (Citation2017), Locatello et al. (Citation2018), and Campbell and Li (Citation2019) consider similar variational boosting mixture approximations, although they use different approaches to optimize and specify the mixture components. Jerfel et al. (Citation2021) consider boosting using the forward KL-divergence and combines variational inference and importance sampling.

Our second contribution is to build (see Section 4) on the variational boosting method by efficiently adding a single mixture component at a time using the natural gradient (Amari Citation1998) and the variance reduction method of Ranganath, Gerrish, and Blei (Citation2014) to fit the flexible copula of the mixture. Previous literature on variational boosting optimization does not use the natural gradient. Many natural-gradient variational methods are available, for example, Hoffman et al. (Citation2013), Khan and Lin (Citation2017), and Lin, Khan, and Schmidt (Citation2019). This literature shows that using natural gradients enables faster convergence than the traditional gradient-based methods because they exploit the information geometry of the variational approximation; Section 5.5 suggests that this is also true for our estimator.

Our third contribution (see Section 4.3) is to provide methods to initialize the variational parameters of the additional component in the mixtures.

The rest of the article is organized as follows. Section 2 gives the necessary background to the article; Section 3 discusses the copula of the mixture variational approximation; Section 4 discusses the variational optimization algorithm that fits the copula of a mixture variational approximation; Section 5 presents results from both simulated and real datasets; Section 6 concludes with a discussion of our approach and results. This article has an online supplement containing additional technical details and empirical results.

2 Variational Inference

Let be the vector of model parameters, and

the vector of observations. Bayesian inference about θ is based on the posterior distribution

is the prior,

is the likelihood function, and

is the marginal likelihood. The posterior distribution

is unknown for most statistical models, making it challenging to carry out Bayesian inference. We consider variational inference methods, where a member

of some family of tractable densities, indexed by the variational parameter

, is used to approximate the posterior

. The optimal variational parameter λ is chosen by minimizing the Kullback-Leibler divergence between

and

,

where

(1)

(1)

is a lower bound on the log of the marginal likelihood

. Therefore, minimizing the KL divergence between

and

is equivalent to maximizing the Evidence Lower Bound (ELBO) given by (1). The ELBO can be used as a tool for model selection (Ong, Nott, and Smith Citation2018; Smith, Loaiza-Maya, and Nott Citation2020; Tran et al. Citation2020) although care is needed if the tightness of the lower bound varies significantly between the candidate models.

Although is often an intractable integral with no closed form solution, we can write it as an expectation with respect to

,

(2)

(2) where

. This interpretation allows the use of stochastic gradient ascent (SGA) methods to maximize the variational lower bound

. See, for example, Nott et al. (Citation2012), Paisley, Blei, and Jordan (Citation2012), Hoffman et al. (Citation2013), Salimans and Knowles (Citation2013), Kingma and Welling (Citation2014), Titsias and Lázaro-Gredilla (Citation2014), and Rezende, Mohamed, and Wierstra (Citation2014). In SGA, an initial value

is updated according to the iterative scheme

(3)

(3)

denotes the Hadamard (element by element) product of two random vectors;

is a vector of step sizes, where m is the dimension of the variational parameters λ, and

is an unbiased estimate of the gradient of the lower bound

at

. The learning rate sequence satisfies the Robbins-Monro conditions

and

(Robbins and Monro Citation1951), which ensures that the iterates

converge to a local optimum as

under suitable regularity conditions (Bottou Citation2010). Adaptive step sizes are often used in practice, and we employ the ADAM method of Kingma and Ba (Citation2015), which uses bias-corrected estimates of the first and second moments of the stochastic gradients to compute adaptive learning rates. The update in (3) continues until a stopping criterion is satisfied.

To estimate the gradient, SGA methods often use the “log-derivative trick” (Kleijnen and Rubinstein Citation1996),

, and it is straightforward to show that

(4)

(4)

is the expectation with respect to

in (4). Let

Then, is an unbiased estimate of

. However, this approach usually results in large fluctuations in the stochastic gradients (Ranganath, Gerrish, and Blei Citation2014). Section 4 discusses variance reduction and natural gradient methods, which are very important for fast convergence and stability.

3 Flexible Variational Approximation Based on a Copula of a Mixture

Smith, Loaiza-Maya, and Nott (Citation2020) propose Gaussian and skew Gaussian copula based variational approximations, which are constructed using Gaussian or skew Gaussian distributions after element-wise transformations of the parameters. They consider the Yeo-Johnson (Yeo and Johnson Citation2000) and G&H families (Tukey Citation1977) for the element-wise transformations and use the sparse factor structure proposed by Ong, Nott, and Smith (Citation2018) as the covariance matrix of the Gaussian distributions. This section discusses the flexible copula based mixture of Gaussians variational approximation that builds on Smith, Loaiza-Maya, and Nott (Citation2020). The main idea is to use a flexible variational approximation, such as the Gaussian or skew Gaussian copulas of Smith, Loaiza-Maya, and Nott (Citation2020) as the first component. This step also produces the transformed parameters we work with for the rest of the components. The rest of the components (second, third, etc.) are then chosen to be Gaussian distributions with a simple covariance structure making the optimization tractable, while still improving the inference and prediction.

Let be a family of one-to-one transformations with parameter vector γ. Each parameter θi is first transformed as

; the density of

is then modeled as a K component multivariate mixture of Gaussians. The variational approximation density for θ is then obtained by computing the Jacobian of the element-wise transformation from θi to

, for

, so that

(5)

(5) the variational parameters are

Footnote1

and

. The marginal densities of the approximation are

(6)

(6) for

, with

,

, a sub-vector of λ. As in Smith, Loaiza-Maya, and Nott (Citation2020), the variational parameters λ are all identified in

without additional constraints because they are also parameters of the margins given in (6). The variational approximation in (5) is called a copula of a mixture of Gaussians variational approximation (CMGVA). It can fit multimodal, skewed, heavy tailed, and high-dimensional posterior distributions with complex dependence structures; see Section 5.

When θ is high dimensional, we follow Ong, Nott, and Smith (Citation2018) and adopt a factor structure for each Σk, . Let βk be an

matrix, with

. For identifiability, we set the strict upper triangle of βk to zero. Let

be a parameter vector with

, and denote by Dk the m × m diagonal matrix with entries dk. We assume that

so that the number of parameters in Σk grows linearly with m if

is kept fixed. Note that the number of factors rk can be different for each component of the mixture for

. We set the number of factors for the first component higher than for the other components in the mixture to make the variational approximation scalable. Section 5 discusses this further.

To draw S samples from the variational approximation given in (5), the indicator variables Gs, for , are first generated; each Gs selects the component of the mixture from which the sample is to be drawn, with

with probability πk. Then,

are generated, where

is

-dimensional and

is m-dimensional;

are then calculated, where

denotes the Hadamard product defined above. This representation shows that the latent variables z, which are low-dimensional, explain all the correlation between the transformed parameters

, and the parameter-specific idiosyncratic variance is captured by η. Finally,

is generated for

and

. The Yeo-Johnson (YJ) transformation (Yeo and Johnson Citation2000) is used as

for all

. If a parameter θi is constrained, it is first transformed to the real line; for example, a variance parameter is transformed to its logarithm. Smith, Loaiza-Maya, and Nott (Citation2020) gives the inverses and derivatives of the Yeo-Johnson transformation. Both the Gaussian copula and the mixture of Gaussians variational approximations are special cases of the CMGVA. The mixture of Gaussians variational approximation is a special case of the CMGVA when the variational parameters γi, are set to 1 for

. The Gaussian copula is a special case of the CMGVA when the number of components in the mixture is K = 1.

The variational approximation in (5) can be extended by including a skew Gaussian component as the first mixture component. It then becomes

(7)

(7)

where is a multivariate skew Gaussian distribution of Azzalini (Citation1985) with density

,

is the ith diagonal element of

, and

. The parameter

determines the level of skewness of the ith marginal of

. When

for

, the distribution reduces to a multivariate Gaussian. The skew Gaussian copula is a special case of the variational approximation in (7) when the number of components in the mixture is K = 1.

4 Variational Methods

This section discusses the estimation method for the copula based mixture of Gaussians variational approximation in (5). We first describe how the first component of the mixture is fitted and then the process for adding an additional component to the existing mixture approximation. Extension to the variational approximation in (7) is straightforward.

4.1 Optimization Methods

The method starts by fitting an approximation to the posterior distribution with a single mixture distribution, K = 1, using the variational optimization algorithm given in Smith, Loaiza-Maya, and Nott (Citation2020); the optimal first component variational parameters are denoted as

, with the mixture weight π1 set to 1. We do this by maximizing the first lower bound objective function

where

. After the optimization algorithm converges, λ1 is fixed as

.

After iteration K, the current approximation to the posterior distribution is a mixture distribution with K components

We can introduce a new mixture component with new component parameters, , and a new mixing weight

. The weight

mixes between the new component and the existing approximation. The new approximating distribution is

The new lower bound objective function is

Note that with the copula transformation fixed, updating the mixture approximation parameters is the same as updating an ordinary mixture approximation in the transformed space of . Since the existing variational approximation is also fixed, it is only necessary to optimize the new component parameters

, and the new mixing weight

, which reduces the dimension of the variational parameters to be optimized. Although the existing mixture components are fixed, their mixing weights can vary. It is possible to reoptimize the variational parameters γi for all

at each iteration of the algorithm. However, we obtained no substantial improvement with the increased computational cost. The γi, for all

are kept fixed for iterations k > 1.

4.2 Updating the Variational Parameters

This section outlines the updating scheme for the variational parameters of the new component parameters

and the new mixing weight

based on natural-gradient methods and control-variates for reducing the variance of the unbiased estimates of the gradient of the lower bound. Many natural-gradient methods for variational inference are available (Hoffman et al. Citation2013; Khan and Lin Citation2017); these show that natural-gradients produce faster convergence than traditional gradient-based methods.

The natural-gradient approach exploits the information geometry of the variational approximation to speed-up the convergence of the optimization. If the Fisher information matrix (FIM), denoted by

, of the

is positive-definite for all

, the natural-gradient update is

(8)

(8)

Multiplying the estimated gradient of the lower bound by the inverse of the Fisher information matrix leads to a proper scaling of the gradient in each dimension and takes into account dependence between the variational parameters λ. In general, the natural-gradient update in (8) requires computing and inverting the FIM, which can be computationally expensive in high-dimensional problems. However, Khan and Nielsen (Citation2018) shows that for exponential families (EF), the natural-gradient update can be much simpler than the traditional gradient-based methods. The standard EF variational approximation is

where

is the sufficient statistic,

is the base measure,

is the log-partition, and

denotes an inner product. For such approximations, it is unnecessary to compute the Fisher information matrix (FIM) explicitly and the expectation parameter

can be used to compute natural-gradients, provided the FIM is invertible for all λ. The update for the natural-gradient method is now

(9)

(9)

where

denotes the gradient with respect to the expectation parameter

. The following is used to obtain (9):

the first equality is obtained by using the chain rule and the second equality is obtained by noting

; see Lin, Khan, and Schmidt (Citation2019) for details.

Lin, Khan, and Schmidt (Citation2019) derive natural gradient methods for a mixture of EF distributions in the conditional exponential family form

(10)

(10) with

as the component and

as the mixing distribution. A special case is the finite mixture of Gaussians, where the components in EF form are mixed using a multinomial distribution. They show that if the FIM,

, of the joint distribution of θ and

, is invertible, then it is possible to derive natural-gradient updates without explicitly computing the FIM. They use the update

with the expectation parameters

, where

, with

, and

with

, and

denotes the indicator function which is 1 if

, and 0 otherwise. This results in simple natural-gradient updates for the mixture components and weights. Lin, Khan, and Schmidt (Citation2019) apply their natural gradient methods for fitting a mixture of Gaussians variational approximation with a full covariance matrix for each component, which makes it less scalable for a large number of parameters. They also do not use the boosting approach for adding a mixture component one at a time. In this case, choosing good initial values for all variational parameters can be difficult.

As a factor structure is used for the covariance matrix, we adopt the natural-gradient updates of Lin, Khan, and Schmidt (Citation2019) only for the new weight and the mixture means

. Denote

and

. The natural-gradient update for the new mixture weight,

is

(11)

(11) where

is given in (5) and the natural-gradient update for the new means

is

(12)

(12)

where

Updating the variational parameters and

is now discussed. There are two reasons why the reparameterization trick is not used to update the variational parameters

and

. The first is that Miller, Foti, and Adams (Citation2017) find that using the reparameterization trick in the boosting variational method still results in a large variance and it is necessary to use many samples to estimate the gradient of the variational lower bound. The second is that it may be impossible to use the reparameterization trick because of the copula transformation. An alternative method to update the variational parameters

and

is now discussed.

The gradients of the lower bound in (4) require the gradient . The gradient of

with respect to

is

and the gradient of

with respect to

is

We also employ control variates as in Ranganath, Gerrish, and Blei (Citation2014) to reduce the variance of an unbiased estimate of gradient of the and

and the efficient natural-gradient updates using the conjugate gradient methods given in Tran et al. (Citation2020). To use a conjugate gradient linear solver to compute

it is only necessary to be able to quickly compute matrix vector products of the form

for any vector x, without needing to store the elements of

. When

is a vector, the natural gradient can be computed efficiently as outlined in algorithm S1 in section S2 of the online supplement. Our updates for

and

are pre-conditioned gradient steps based on the natural gradient update for a Gaussian approximation in the added component, not the natural gradient in the mixture approximation.

Algorithm 1

Variational Algorithm

Initialize

, set t = 0, and generate

for

. Let

, md, be the number of elements in

, and

.

(b) Evaluate the control variates

,

, with

(13)

for

, where

and

are the sample estimates of covariance and variance based on S samples from step (1a). The

are estimated similarly.

Repeat until the stopping rule is satisfied

Update

:

Generate

for

.

Compute

, with

(14)

The gradient of the lower bound

can be computed similarly as in (14).

Compute the control variate

and

as in (13) and compute

and

using algorithm S1 in section S2 of the online supplement.

Compute

using ADAM as described in section S1 of the online supplement. Then, set

,

.

Update

and

Generate

for

.

Use (11) to update

and (12) to update

, respectively. Set

However, we find this pre-conditioned gradient step improves efficiency compared to the ordinary gradient. Section 5.5 gives further details. Algorithm 1 presents the full variational algorithm.

4.3 Initializing a New Mixture Component

Introducing a new component requires setting the initial values for the new component parameters and the new mixing weight

. A good initial value for the new mixture component should be located in the region of the target posterior distribution

that is not well represented by the existing mixture approximation

. There are many ways to set the initial value. We now discuss some that work well in our examples. The elements in

are initialized randomly by drawing from

, the diagonal elements in

are initialized by 0.001. These values ensure that the optimization algorithm is stable because generated values will be close to

in the first few initial iterations. The mixture weight

is initialized by 0.5. Algorithm 2 gives the initial value for

.

Algorithm 2

Initial values for .

Input: and γ

Output: initial values for

For i = 1 to m

Construct a grid of S values of the ith transformed parameter

. One way to construct the grid of S values is to draw samples from the current approximation, and compute the minimum and maximum values

for

. Let

be a vector containing

and other transformed parameters fixed at their means or some other reasonable values.

Compute

for

and

.

Compute the

for

.

Set

with a probability proportional to the weight

.

Alternatively, when the dimension of the parameters is large,

Draw S samples from the current variational approximation

, and compute the weights

for

. Then, set

with a probability proportional to the weight

.

5 Examples

To illustrate the performance of the proposed variational approximations described in Section 3, we employ them to approximate complex and high-dimensional distributions, where their greater flexibility may increase the accuracy of inference and prediction compared to simpler approximations.

The section has four examples. The first approximates a high dimensional, skewed, and heavy tailed distribution. The second example approximates a high dimensional multimodal distribution. The third example approximates the posterior distributions of the model parameters of a logistic regression model with a complex prior distribution. The fourth example fits a Bayesian deep neural network regression model. In all the examples, the following variational approximations are considered:

(A1) A mixture of Gaussians variational approximation (MGVA). The Gaussian variational approximation is a special case with K = 1.

(A2) The copula-based mixture of Gaussians variational approximation (CMGVA). The Gaussian copula variational approximation is a special case where K = 1.

(A3) The mean-field mixture of Gaussians variational approximation (MGVA-MF). We use the terms mean-field variational approximation to refer to the case where the covariance matrix for each component in the mixture is diagonal.

(A4) The mean-field copula-based mixture of Gaussians variational approximation (CMGVA-MF).

(A5) The mixture of skew Gaussian variational approximation (MSGVA). This is a special case of the variational approximation described in (7) when the variational parameters

for all

.

(A6) The mixture of skew Gaussian copula-based variational approximation (CMSGVA) given in (7). For MSGVA and CMSGVA, only the first component is the skew Gaussian distribution. The other K – 1 components are Gaussian distributions.

All the examples are implemented in Matlab. The first three examples were run on a standard desktop computer. The fourth example was run on a 28 CPU-cores of a high performance computer cluster. Unless otherwise stated, we use the estimates of the variational lower bound to select the best variational approximations. In principle, making the variational family more flexible by adding new components should not reduce the variational lower bound; in practice, the difficulty of the optimization means that adding a new component may worsen the approximation. The variational approximation is useful when the exact MCMC method is impossible or computationally expensive. The boosting approach, where an existing approximation is improved by adding one new component at a time allows us to tune the accuracy/computational effort tradeoff, where we start with a rough fast approximation and keep improving until the computational budget is exhausted. All the variational parameters are initialized using the approach in Section 4.3.

5.1 Skewed and Heavy-Tailed High-Dimensional Distributions

This section investigates the ability of variational approximations (A1)–(A6) to approximate skewed, heavy-tailed, and high-dimensional target distributions. The true target distributions are assumed to follow a multivariate t-copula,

(15)

(15)

The density is a multivariate t-distribution with zero mean, full-covariance matrix (ones on the diagonal and 0.8 on the off-diagonals), and degrees of freedom df = 4. The Yeo-Johnson (YJ) transformation with parameters set to 0.5 (Yeo and Johnson Citation2000) is used. The dimension of the parameters θ is set to m = 100. The number of factors r1 is set to 4 for the first component and rk = 1, for each additional mixture component for

for MGVA, CMGVA, MSGVA, and CMSGVA. We use S = 100 samples to estimate the lower bound and the gradients of the lower bound. The algorithm in Smith, Loaiza-Maya, and Nott (Citation2020) is performed for 5000 iterations to obtain the optimal variational parameters for the first component of the mixture, and then Algorithm 1 is performed for 5000 iterations to obtain the optimal variational parameters for each additional component of the mixture for variational approximations (A1)–(A6).

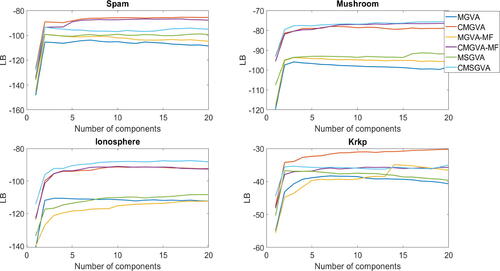

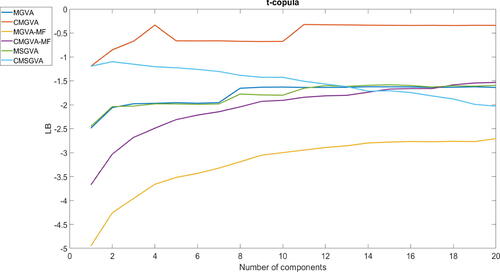

shows the average lower bound value over the last 500 steps of the optimization algorithm for variational approximations (A1)–(A6). The figure shows that the Gaussian and skew Gaussian copula-based estimators have similar lower bounds that are larger than the Gaussian, skew Gaussian, mean-field Gaussian copula-based approximations, and the mean-field Gaussian variational approximation. As expected, the mean-field Gaussian variational approximation has the lowest lower bound value because it does not capture the dependence structure of the θ posterior. The figure also shows that there is substantial improvement obtained by going from k = 2 to 8 components for most variational approximations, and there are no significant improvements thereafter. Interestingly, the lower bound values of the CMSGVA decrease when more components are added in the mixture. The Gaussian copula has greater lower bound than MGVA for any number of components for this example. The CMGVA with k = 4 components has a significantly higher lower bound compared to other variational approximations for this example.

Fig. 1 Plot of the average lower bound values over the last 500 steps for variational approximations (A1)–(A6) for the 100-dimensional multivariate t-copula example.

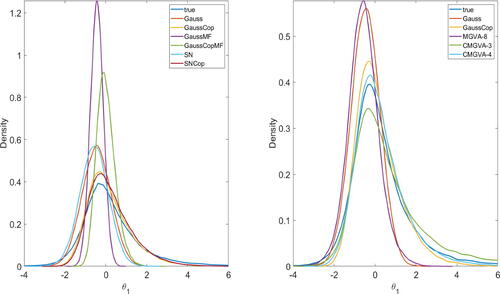

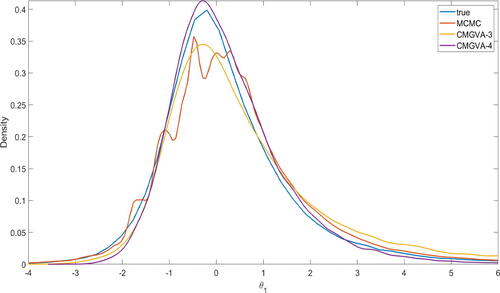

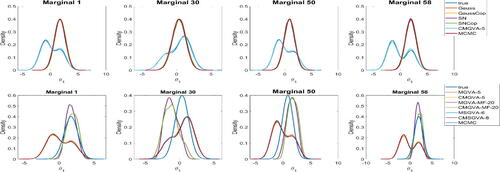

shows the kernel density estimates of the marginal densities of the parameter θ1 estimated using the different variational approximations. The left panel of compares the performance of mean-field Gaussian, mean-field Gaussian copula, Gaussian, Gaussian copula, skew Gaussian, and skew Gaussian copula variational approximations. It shows that the mean-field Gaussian and mean-field Gaussian copula variational approximations significantly underestimate the posterior variances of the parameter θ1. The Gaussian copula and skew Gaussian copula perform better than the Gaussian and skew Gaussian variational approximations. The right panel of shows that the CMGVA with k = 3 and k = 4 components captures both the skewness and the heavy tails of the marginal posteriors better than the Gaussian copula and the mixture of the Gaussians variational approximations with k = 8 components.

Fig. 2 Left: Kernel density estimates of the marginal parameter θ1 approximated by the mean-field Gaussian, Gaussian, skew Gaussian, Gaussian copula, mean-field Gaussian copula, skew Gaussian copula variational approximations. Right: Kernel density estimates of the marginal parameter θ1 approximated by the Gaussian, Gaussian copula, 3-component CMGVA, 4-component CMGVA, and 8-component MGVA.

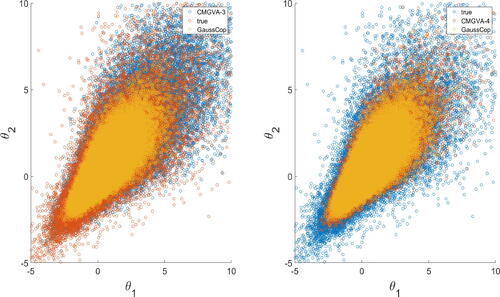

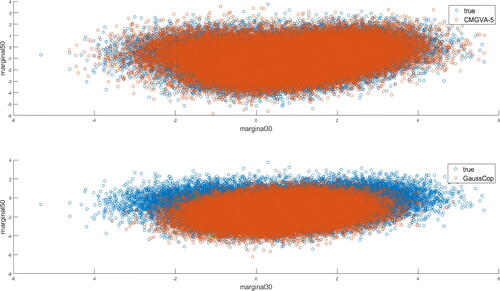

shows the scatterplot of the observations from the first and second margins generated from the true target densities, Gaussian copula variational approximation, the 3-component CMGVA, and the 4-component CMGVA. This suggests that the CMGVA is better at capturing the skewness and heavy-tailed properties of the true target densities compared to the Gaussian copula-based variational approximations.

Fig. 3 Left: Scatterplot of the observations from the first and second margins generated from true target density (red), Gaussian copula variational approximation (yellow), and 3-component CMGVA (blue). Right: Scatterplot of the observations from the first and second margins generated from true target density (blue), Gaussian copula variational approximation (yellow), and 4-component CMGVA (red).

We now compare the accuracy of the CMGVA to the densities estimated using the Hamiltonian Monte Carlo (HMC) method of Hoffman and Gelman (Citation2014), called the No U-Turn Sampler (NUTS); this method is a popular MCMC algorithm for sampling high dimensional posterior distributions. For all examples, the NUTS tuning parameters, such as the number of leapfrog steps and the step size, are set to the default values as in the STAN reference manual Footnote2. We ran the HMC method for 1,005,000 iterations, discarding the initial 5000 iterations as warm-up. The remaining 1,000,000 MCMC samples are stored for further analysis. The inefficiency of the HMC method is measured using the integrated autocorrelation time (IACT) defined in section S3 of the online supplement.

Figure S2 in section S4 of the online supplement shows the IACT of the parameters θ estimated using the HMC method. The figure shows that the average IACT of the parameters is 391 with the average effective sample size of 2556.70. Figure S1 also shows that the Markov chain sometimes gets stuck, indicating that the HMC method performs quite inefficiently in this example. shows that the 3-component and 4-component CMGVA are more accurate than the HMC method for estimating the marginal density of the parameter θ1. The same applies to other marginal densities. Again, this suggests that the CMGVA can capture the skewness and heavy-tailed properties of the true target densities.

Fig. 4 Kernel density estimates of the marginal parameter θ1 estimated using the HMC method, 3-component CMGVA, and 4-component CMGVA for m = 100.

The CPU time for the HMC method is 167.5 min. The time taken for estimating the 3- and 4-component CMGVA are 36.15 min and 50.10 min.Footnote3 Therefore, the total CPU time for estimating the 3- and 4-component CMGVA are 4.63 and 3.34 times faster than the HMC method.

5.2 Multimodal High-Dimensional Distributions

This section investigates the ability of the proposed variational approximations (A1)–(A6) to approximate multimodal high-dimensional target distributions. The true target distribution is the multivariate mixture of normals, The dimension of the parameters θ is set to 100. Each element

is uniformly drawn from the interval

for

and

. We set the full covariance matrix Σc with ones on the diagonal and the correlation coefficients

and 0.8 in the off-diagonals for all components. The number of factors

for the first component and rk = 1, for each additional mixture component for

for variational approximations A1, A2, A5, and A6. Similarly to the previous example, we use S = 100 samples to estimate the lower bound values and the gradients of the lower bound. The algorithm in Smith, Loaiza-Maya, and Nott (Citation2020) is performed for 5000 iterations to obtain the optimal variational parameters for the first component of the mixture and then Algorithm 1 is performed for 5000 iterations to obtain the optimal variational parameters for each additional component of the mixture. The step sizes are set to the values given in section S1 of the online supplement.

Figure S3 in section S5 of the online supplement shows the average lower bound values over the last 500 steps for the variational approximations (A1)–(A6) for the 100-dimensional mixture of normals example. The figure shows that the performances of the CMGVA, MGVA, MSGVA, and CMSGVA are comparable for the cases and

. Figure S4 in section S5 of the online supplement confirms that by showing that all the YJ-parameters are close to 1 for CMGVA and CMSGVA and all the

parameters in (7) are close to 0 for MSGVA and CMSGVA for the case

. Similar conclusions hold for the case

. The mixture of normals is a special case of CMGVA when all the YJ-parameters are equal to 1, are special cases of MSGVA when all the

parameters are close to 0, and are special cases of CMSGVA when all the YJ-parameters are close to 1 and all the

are close to 0. The MGVA, CMGVA, MSGVA, and CMSGVA are clearly much better than MGVA-MF and CMGVA-MF in this example.

The top panel of shows the kernel density estimates of some of the posterior densities of the marginal parameters of θ approximated with several of the variational approximations, together with the true marginal distributions and the marginal distributions estimated using the HMC method of Hoffman and Gelman (Citation2014) for the case . The HMC method ran for 1,005,000 iterations, with the initial 5000 iterations discarded as warm up. The remaining 1,000,000 iterations are used for further analysis. Figure S9 in section S5 of the online supplement shows the IACT of the parameters θ estimated using HMC. The figure shows that the average IACT of the parameters is 226.32 with the average effective sample size of 4418.52, indicating that the HMC method is also quite inefficient for this example.

Fig. 5 Kernel density estimates of some of the marginal parameters θ approximated with Gaussian, Gaussian Copula, skew Gaussian, skew Gaussian copula, and the optimal variational approximations (A1)–(A6) together with the true marginal distributions and the marginal distributions estimated using the HMC method for the mixture of normals example with .

The bottom panel of shows the variational approximations (A1)–(A6), together with the true marginal distributions and the marginal distributions estimated using HMC for . The top panel shows that the Gaussian, Gaussian copula, skew Gaussian, skew Gaussian copula, and HMC approaches are unable to approximate multimodal distributions. The bottom panel shows that the optimal MGVA, CMGVA, MSGVA, and CMSGVA perform much better than the optimal MGVA-MF and CMGVA-MF. Similar conclusions can be made from figure S5 in section S5 of the online supplement for the case

. The CPU time for the HMC method for this example is 83.75 min. The time taken to estimate the 5-component CMGVA is 37.25 min which is 2 and a quarter times faster than HMC.

Finally, and S6 in section S5 of the online supplement show the scatterplots of the observations generated from the true density, the Gaussian copula variational approximation, and the optimal CMGVA for and 0.2, respectively. The figures confirm that the CMGVA can capture the bimodality and complex-shaped of the two dimensional distribution of the parameters.

Fig. 6 Top: Scatterplot of the observations generated from true target distribution (blue), and a 5-component CMGVA (orange) for the mixture of normals example with ; Bottom: Scatterplot of the observations generated from true target distribution (blue), and the Gaussian copula (orange) for the mixture of normals example with

.

We now show that the CMGVA does not overfit a Gaussian target distribution, which we take as a q-variate normal with zero mean and full covariance matrix with ones on the diagonal and correlation coefficients in the off-diagonals. Figure S7 in section S5 of the online supplement plots the average lower bound values over the last 500 steps for the CMGVA for this example and shows that no improvement is obtained by adding additional components in the mixture.

The two examples in Sections 5.1 and 5.2 suggest that: (a) The CMGVA can approximate heavy tails, multimodality, skewness and other complex properties of the high dimensional target distributions, outperforming the Gaussian copula and other variational approximations. Section 5.1 shows that the Gaussian copula outperforms MGVA, MGVA-MF, CMGVA-MF, MSGVA, and CMSGVA at approximating a skewed target distribution. The optimal variational approximations (A1)–(A6) are better than a Gaussian copula at approximating multimodal target distributions. (b) Adding a few components to the MGVA, CMGVA, MSGVA, and CMSGVA generally improves their ability to approximate complex target distributions. Therefore, the proposed approach can be considered as a refinement of the Gaussian copula and skew Gaussian copula variational approximations. (c) Adding additional components one at a time provides a practical method for constructing an increasingly complicated approximation and applies to a variety of multivariate target distributions. (d) The HMC method of Hoffman and Gelman (Citation2014) fails to estimate the high dimensional, heavy tailed, and multimodal target distribution.

5.3 Bayesian Logistic Regression Models with Complex Prior Distributions

This section considers a logistic regression model

with the response

, and with a complex prior distribution for the regression parameters

. The prior for each regression parameter, except the intercept, is the two-component mixture of skew normals

(16)

(16)

is the skew-normal distribution of Azzalini (Citation1985) with density

. We set w = 0.5,

, and

. This prior is motivated by a variable selection scenario, where some coefficients may be 0 and we would like to set these close to zero. The prior for the intercept term b0 is N(0, 1)

We consider the spam, krkp, ionosphere, and mushroom data for the logistic regression model; they have sample sizes n = 4601, 351, 3196, and 8124, with 104, 111, 37, and 95 covariates, respectively and are also considered by Ong, Nott, and Smith (Citation2018) and Smith, Loaiza-Maya, and Nott (Citation2020); the data are available from the UCI Machine Learning Repository (Lichman Citation2013)Footnote4. In the results reported below, we include all covariates but only use the first 50 observations of each dataset. The small dataset size and the complex prior distribution for each regression parameter are chosen to create a complex posterior structure to evaluate the performance of variational approximations (A1)-(A6) when the posteriors are non-Gaussian.

Similarly to the previous example, we set the number of factors r1 to 4 for the first component and rk = 1, for each additional mixture component for for variational approximations A1, A2, A5, and A6. We use S = 100 samples to estimate the lower bound values and the gradients of the lower bound. The algorithm in Smith, Loaiza-Maya, and Nott (Citation2020) is performed for 5000 iterations to obtain the optimal variational parameters for the first component of the mixture and then Algorithm 1 is performed for 5000 iterations to obtain the optimal variational parameters for each additional component of the mixture.

shows the average lower bound values over the last 500 steps of the optimization algorithm for the variational approximations (A1)–(A6) for the Bayesian logistic regression model for the four datasets. The Gaussian and skew Gaussian copula variational approximations outperform the Gaussian and skew Gaussian variational approximations. Interestingly, the mean-field Gaussian copula variational approximation is better than the skew Gaussian and the Gaussian variational approximation for the spam, mushroom, and ionosphere datasets. The figure also shows that adding a few components in the mixture for variational approximations (A1)–(A6) improves the lower bound values for all datasets. The optimal CMGVA performs the best for the spam and krkp datasets. The CMSGVA performs slightly better than CMGVA for the mushroom and ionosphere datasets.

5.4 Flexible Bayesian Regression with a Deep Neural Network

Deep feedforward neural network (DFNN) models with binary and continuous response variables are widely used for classification and regression in the machine learning literature. The DFNN method can be viewed as a way to efficiently transform a vector of p raw covariates into a new vector Z having the form

(17)

(17)

Each , is called a hidden layer, L is the number of hidden layers in the network,

is the set of weights and

by construction. The function

is assumed to be of the form

, where Wl is a matrix of weights that connect layer l – 1 to layer l, which includes weight coefficients attached to the input

and the constant terms, and

is a scalar activation function. Estimation in complex high dimensional models like DFNN regression models is challenging. This section studies the accuracy of posterior densities and the predictive performance of the variational approximations (A1)-(A6) for a DFNN regression model with continuous responses; see Goodfellow, Bengio, and Courville (Citation2016, chap. 6, 9, and 10) for a comprehensive recent discussion of DFNNs and other types of neural networks.

Consider a dataset D with n observations, with yi the scalar response and the vector of p covariates. We consider a neural network structure with the input vector x and a scalar output. Denote

, the units in the last hidden layer, w is the vector of weights up to the last hidden layer, and

are the weights that connect the variable zl,

, to the output y. The model, with a continuous response y, can be written as

(18)

(18)

(19)

(19)

(20)

(20)

(21)

(21) where

,

, Mw is the number of weight parameters;

is a skew-normal density of Azzalini (Citation1985) defined in Section 5.3, and

is the gamma distribution with shape parameter 1 and scale parameter 10. We set

, and

. Miller, Foti, and Adams (Citation2017) uses similar priors for

. The priors for bl for

and wi for

will shrink some of the coefficients that may be 0 or very close to zero.

All the examples use the rectified linear unit (ReLU) as an activation function, unless otherwise stated; ReLU is widely applied in the deep learning literature (Goodfellow, Bengio, and Courville Citation2016) because it is easy to use within optimization as it is quite similar to a linear function, except that it outputs zero for negative values of x.

We consider the auto and abalone datasets. The auto dataset, available from James et al. (Citation2021), consists of 392 observations for different makes of cars, with the response being gas mileage in miles per gallon, with 7 additional covariates used here to predict the mileage. The abalone dataset, available on the UCI Machine Learning Repository, has 4177 observations. The response variable is the number of rings used to determine the age of the abalone. There are 9 covariates including sex, length, diameter, as well as other measurements of the abalone. We use 90% of the data for training and the rest for computing the log of the approximate predictive score in (24). Both datasets have continuous responses

Neural nets with (8,5,5,1), (8,10,10,1), and (8,20,20,1) structures are used for the auto dataset. For the (8,5,5,1) structure, the input layer has 8 variables, there are two hidden layers each having 5 units and there is a scalar output. The first layer has w parameters, the second layer has 6 × 5 = 30 w parameters (including the intercept term), and 6 b parameters (including the intercept term); this gives a total of 76 parameters. Similar calculations can be made for the (8,10,10,1) and (8,20,20,1) structures to give a total of 201 and 601 parameters, respectively. Neural nets with (9,5,5,1), (9,10,10,1), and (9,20,20,1) structures are used for the abalone dataset. Similar calculations can be done for them to give a total of 75, 211, and 621 parameters, respectively.

This section studies the accuracy of the inference and the predictive performance for variational approximations A1 and A2. For this example, variational approximations A5 and A6 are not implemented due to the numerical issues in estimating the skew normal and skew normal copula variational approximations. Sections 5.1–5.3 show that CMGVA is better than MSGVA and CMSGVA. We do not compare the posterior density of the parameters obtained from the variational approximations A1 and A2 to HMC, as it is difficult to obtain the exact posterior distribution for the parameters of the Bayesian neural network. Papamarkou et al. (Citation2021) shows that the Markov chains generated by the Metropolis-Hastings and Hamiltonian Monte Carlo methods fail to converge for estimating the parameters of the Bayesian neural network model. To show the lack of convergence of the HMC method, we ran it for 1,005,000 iterations discarding the initial 5000 iterations as warm up for estimating neural nets with a (9,10,10,1) structure for the auto dataset. Figure S14 in section S8 of the online supplement shows the IACT of the parameters θ estimated using the HMC method. The figure shows that the average IACT of the parameters is 1904.08 with an average effective sample size of 525.19, indicating that HMC is very inefficient for this example. In addition, figure S13 in section S8 of the online supplement shows the trace plots of the parameters of the neural net with the (9,10,10,1) structure for the auto dataset. The figure shows that the parameters mix poorly. Computing time for a 10-component CMGVA is an order of magnitude less than for the HMC.

To evaluate the predictive accuracy of a DFNN regression model estimated by the variational approximations A1 and A2, we consider the posterior predictive density defined as

(22)

(22)

Given that we have the variational approximation of the posterior distribution, we can define the approximate predictive density

(23)

(23)

Computing the approximate posterior predictive density in (23) is challenging because it involves high dimensional integrals that cannot be solved analytically. However, it can be estimated using Monte Carlo integration. The estimate of the log of the approximate posterior predictive score is

(24)

(24)

The higher the log of the approximate posterior predictive score, the more accurate the prediction.

In this example, the number of factors is set to 1 for all 20 components in the mixture; S = 200 samples are used to estimate the gradients of the lower bound; samples are used to estimate the log of the approximate posterior predictive score in (24); section S7 of the online supplement discusses the stopping criterion for the optimization algorithm.

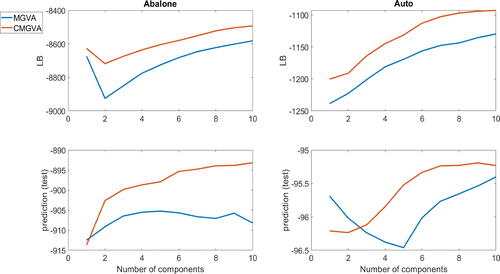

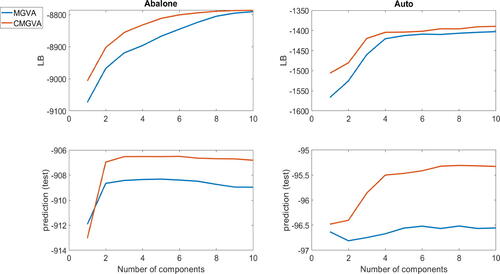

The top panels of figure S15 in section S8 of the online supplement show the average lower bound values over the last 100 steps of the optimization algorithm for the (8,5,5,1) neural net structure for the auto dataset and the (9,5,5,1) structure for the abalone dataset. The figure shows that adding components to the variational approximations A1 and A2 increases the lower bound significantly for both datasets. Clearly, CMGVA performs best for both datasets. The lower panels of figure S15 show the log of the estimated approximate posterior predictive scores evaluated for the test data. The figure also shows that adding more components to the variational approximations can improve the prediction accuracy significantly. Similar conclusions can be drawn from for the (8,10,10,1) neural net structure for the auto dataset and the (9,10,10,1) neural net structure for the abalone dataset and for the (8,20,20,1) neural net structure for the auto dataset and the (9,20,20,1) neural net structure for the abalone dataset. This suggests the usefulness of the proposed variational approximations for complex and high-dimensional Bayesian deep neural network regression models.

Fig. 8 Top panels: The plots of the average lower bound values over the last 100 steps for the variational approximations A1 and A2 for the (8,10,10,1) neural net structure for the auto dataset and the (9,10,10,1) neural net structure for the abalone dataset. Bottom panels: The plots of the log of the estimated approximate posterior predictive scores for the variational approximations A1 and A2 for the (8,10,10,1) neural net structure for the auto dataset and the (9,10,10,1) neural net structure for the abalone dataset.

Fig. 9 Top panels: The plots of the average lower bound values over the last 100 steps for the variational approximations A1 and A2 for the (8,20,20,1) neural net structure for the auto dataset and the (9,20,20,1) neural net structure for the abalone dataset. Bottom panels: The plots of the log of the estimated approximate posterior predictive scores for the variational approximations A1 and A2 for the (8,20,20,1) neural net structure for the auto dataset and the (9,20,20,1) neural net structure for the abalone dataset.

We now compare the optimal CMGVA to the planar flows of Rezende and Mohamed (Citation2015) with flow lengths of 10 transformations. Tanh is the non-linearity function with the initial distribution being Gaussian with mean μ and a diagonal covariance matrix; S = 1000 samples are used to accurately estimate the gradients of the lower bound. A similar stopping rule to that described in section S7 of the online supplement is used. Figures S11 and S12 in section S6 of the online supplement show that the lower bound of the planar flows for the two datasets for neural nets with different structures increase at the start and then converge. Table S1 in section S8 of the online supplement shows that the CMGVA has a higher lower bound and higher log of the approximate posterior predictive scores compared to the planar flows.

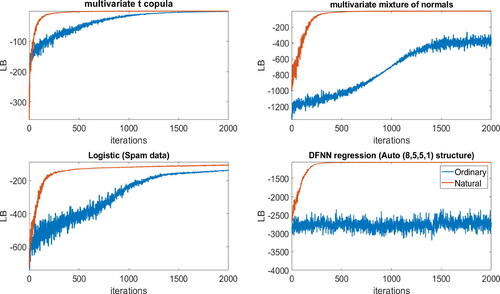

5.5 Efficiency of the Natural Gradient

This section compares the performance of the natural gradient and the ordinary gradient methods using the same initial values for the variational parameters for both methods. shows the lower bound values over iterations for both methods for the 2-component CMGVA for the multivariate t-copula, multivariate mixture of normals, logistic regression (spam dataset), and Bayesian DFNN regression (abalone dataset with neural net (9, 5, 5, 1) structure). The figure shows that the natural gradient is much less noisy and converges much faster than the ordinary gradient.

Fig. 10 The plot of the lower bound values over iterations for the ordinary and natural gradient methods for the 2-components CMGVA for the 100-dimensional multivariate t-copula, multivariate mixture of normals, Bayesian logistic regression model (spam data), and the DFNN regression model for the Abalone dataset with a (9,5,5,1) neural net structure examples.

6 Conclusion

The article proposes flexible variational approximations based on a copula of a mixture of normals and constructs the computational algorithms for estimating the approximation. An important part of the approach is the construction of appropriate transformations of the parameters to try and simplify the joint posterior which is then estimated by a mixture of normals.

The VB method is made efficient by using the natural gradient and control variates. Our approach of adding one component at a time provides a practical variational inference method that constructs an increasingly complicated posterior approximation and is an extension and refinement of state-of-the-art Gaussian and skew Gaussian copula variational approximations in Smith, Loaiza-Maya, and Nott (Citation2020). The proposed variational approximations apply to a wide range of Bayesian models; we apply it to four complex examples, including Bayesian deep learning regression models, and show that it improves upon the Gaussian copula and mixture of normals variational approximations in terms of both inference and prediction. Our article uses a factor structure for the covariance matrix in our variational approximation, but it is straightforward to extend the variational approach to consider other sparse forms of the covariance structure, such as a sparse Cholesky factorization as in Tan, Bhaksaran, and Nott (Citation2020).

We note that it is straightforward to use any other copula-based approximation as a first component in our approach, such as a t-copula. This may result in a simpler mixture approximation than using the Gaussian copula as a first component.

Supplementary Materials

CopMixJCGSSupp.pdf (pdf file) provides additional examples, results and technical details. Computer code to implement the methods for the examples of this article is available online.

code_SI.zip

Download Zip (68.9 MB)OnlineSupplementFinal_9Sept2023.pdf

Download PDF (1.5 MB)Disclosure Statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes

1 For an m × m matrix A, is the vector of length m2 obtained by stacking the columns of A under each other from left to right;

is the vector of length

obtained from

by removing all the superdiagonal elements of the matrix A.

3 The CPU time for estimating the 3-component CMGVA is the total time taken for estimating 1 to 3-component CMGVA. Similar calculations are used for calculating the CPU time for the 4-component CMGVA.

References

- Amari, S. (1998), “Natural Gradient Works Efficiently in Learning,” Neural Computation, 10, 251–276. DOI: 10.1162/089976698300017746.

- Azzalini, A. (1985), “A Class of Distributions Which Includes the Normal Ones,” Scandinavian Journal of Statistics, 12, 171–178.

- Blei, D. M., Kucukelbir, A., and McAuliffe, J. D. (2017), “Variational Inference: A Review for Statisticians,” Journal of American Statistical Association, 112, 859–877. DOI: 10.1080/01621459.2017.1285773.

- Bottou, L. (2010), “Large-Scale Machine Learning with Stochastic Gradient Descent,” in Proceedings of the 19th International Conference on Computational Statistics (COMPSTAT2010), pp. 177–187, Springer.

- Campbell, T., and Li, X. (2019), “Universal Boosting Variational Inference,” in Advances in Neural Information Processing Systems (Vol. 32).

- Challis, E., and Barber, D. (2013), “Gaussian Kullback-Leibler Approximate Inference,” Journal of Machine Learning Research, 14, 2239–2286.

- Goodfellow, I., Bengio, Y., and Courville, A. (2016), Deep Learning, Cambridge, MA: MIT Press.

- Guo, F., Wang, X., Broderick, T., and Dunson, D. B. (2017), “Boosting Variational Inference,” ArXiv: 1611.05559v2.

- Hoffman, M. D., Blei, D. M., Wang, C., and Paisley, J. (2013), “Stochastic Variational Inference,” Journal of Machine Learning Research, 14, 1303–1347.

- Hoffman, M. D., and Gelman, A. (2014), “The no-u-turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo,” Journal of Machine Learning Research, 15, 1593–1623.

- Izmailov, P., Vikram, S., Hoffman, M. D., and Wilson, A. G. (2021), “What are Bayesian Neural Network Posteriors Really Like?” in International Conference on Machine Learning, pp. 4629–4640, PMLR.

- James, G., Witten, D., Hastie, T., and Tibshirani, R. (2021), An Introduction to Statistical Learning: With Applications in R (2nd ed.), New York: Springer.

- Jerfel, G., Wang, S. L., Fannjiang, C., Heller, K. A., Ma, Y., and Jordan, M. (2021), “Variational Refinement for Importance Sampling Using the Forward Kullback-Leibler Divergence,” in Uncertainty in Artificial Intelligence (UAI), Proceedings of the Thirty-Seventh Conference, eds. C. de Campos and M. Maathuis.

- Jospin, L. V., Laga, H., Boussaid, F., Buntine, W., and Bennamoun, M. (2022), “Hands-on Bayesian Neural Networks – A Tutorial for Deep Learning Users,” IEEE Computational Intelligence Magazine, 17, 29–48. DOI: 10.1109/MCI.2022.3155327.

- Khaled, M., and Kohn, R. (2023), “On Approximating Copulas by Finite Mixtures,” https://arxiv.org/pdf/1705.10440.pdf.

- Khan, M. E., and Lin, W. (2017), “Conjugate-Computation Variational Inference: Converting Variational Inference in Non-Conjugate Models to Inferences in Conjugate Models,” in Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, AISTATS 2017, 20-22 April 2017, Fort Lauderdale, FL, USA (Vol. 54), eds. A. Singh and X. J. Zhu, pp. 878–887, PMLR.

- Khan, M. E., and Nielsen, D. (2018), “Fast Yet Simple Natural-Gradient Descent for Variational Inference in Complex Models,” in International Symposium on Information Theory and Its Applications, ISITA 2018, Singapore, October 28–31, 2018, pp. 31–35, IEEE.

- Kingma, D. P., and Ba, J. (2015), “Adam: A Method for Stochastic Optimization,” in 3rd International Conference on Learning Representations, eds. Y. Bengio and Y. LeCun, ICLR 2015, San Diego, CA, USA, May 7–9, 2015, Conference Track Proceedings.

- Kingma, D. P., and Welling, M. (2014), “Auto-Encoding Variational Bayes,” in 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, April 14–16, 2014, Conference Track Proceedings.

- Kleijnen, J. P. C., and Rubinstein, R. Y. (1996), “Optimisation and Sensitivity Analysis of Computer Simulation Models by the Score Function Method,” European Journal of Operational Research, 88, 413–427. DOI: 10.1016/0377-2217(95)00107-7.

- Kucukelbir, A., Tran, D., Ranganath, R., Gelman, A., and Blei, D. M. (2017), “Automatic Differentiation Variational Inference,” Journal of Machine Learning Research, 18, 1–45.

- Lichman, M. (2013), “UCI Machine Learning Repository,” University of California, Irvine, School of Information and Computer Sciences.

- Lin, W., Khan, M. E., and Schmidt, M. (2019), “Fast and Simple Natural-Gradient Variational Inference with Mixture of Exponential-Family Approximations,” in Proceedings of the 36th International Conference on Machine Learning, Volume 97 of Proceedings of Machine Learning Research, eds. K. Chaudhuri and R. Salakhutdinov, pp. 3992–4002, PMLR.

- Locatello, F., Khanna, R., Ghosh, J., and Ratsch, G. (2018), “Boosting Variational Inference: An Optimization Perspective,” in Proceedings of the Twenty-First International Conference on Artificial Intelligence and Statistics, Volume 84 of Proceedings of Machine Learning Research, eds. A. Storkey and F. Perez-Cruz, pp. 464–472. PMLR.

- Miller, A. C., Foti, N. J., and Adams, R. P. (2017), “Variational Boosting: Iteratively Refining Posterior Approximations,” in Proceedings of the 34th International Conference on Machine Learning, Volume 70 of Proceedings of Machine Learning Research, pp. 2420–2429. PMLR.

- Nott, D. J., Tan, S., Villani, M., and Kohn, R. (2012), “Regression Density Estimation with Variational Methods and Stochastic Approximation,” Journal of Computational and Graphical Statistics, 21, 797–820. DOI: 10.1080/10618600.2012.679897.

- Ong, M. H. V., Nott, D. J., and Smith, M. S. (2018), “Gaussian Variational Approximation with a Factor Covariance Structure,” Journal of Computational and Graphical Statistics, 27, 465–478. DOI: 10.1080/10618600.2017.1390472.

- Ormerod, J. T., and Wand, M. P. (2010), “Explaining Variational Approximations,” American Statistician, 64, 140–153. DOI: 10.1198/tast.2010.09058.

- Paisley, J., Blei, D. M., and Jordan, M. I. (2012), “Variational Bayesian Inference with Stochastic Search,” in Proceedings of the 29th International Conference on Machine Learning, ICML’12, pp. 1363–1370, Madison, WI, USA. Omnipress.

- Papamakarios, G., Nalisnick, E., Rezende, D. J., Mohamed, S., and Lakshminarayanan, B. (2021), “Normalizing Flows for Probabilistic Modeling and Inference,” The Journal of Machine Learning Research, 22, 2617–2680.

- Papamarkou, T., Hinkle, J., Young, M., and Womble, D. (2021), “Challenges in Markov Chain Monte Carlo for Bayesian Neural Networks,” arXiv:1910.06539v6.

- Ranganath, R., Gerrish, S., and Blei, D. M. (2014), “Black Box Variational Inference,” in Proceedings of the Seventeenth International Conference on Artificial Intelligence and Statistics, Volume 33 of Proceedings of Machine Learning Research, eds. S. Kaski and J. Corander, pp. 814–822, Reykjavik, Iceland. PMLR.

- Rezende, D., and Mohamed, S. (2015), “Variational Inference with Normalizing Flows,” in International Conference on Machine Learning, pp. 1530–1538. PMLR.

- Rezende, D. J., Mohamed, S., and Wierstra, D. (2014), “Stochastic Backpropagation and Approximate Inference in Deep Generative Models,” in Proceedings of the 31st International Conference on Machine Learning, Volume 32 of Proceedings of Machine Learning Research, eds. E. P. Xing and T. Jebara, pp. 1278–1286, Beijing, China. PMLR.

- Robbins, H., and Monro, S. (1951), “A Stochastic Approximation Method,” The Annals of Mathematical Statistics, 22, 400–407. DOI: 10.1214/aoms/1177729586.

- Salimans, T., and Knowles, D. A. (2013), “Fixed-Form Variational Posterior Approximation through Stochastic Linear Regression,” Bayesian Analysis, 8, 741–908. DOI: 10.1214/13-BA858.

- Smith, M., Loaiza-Maya, R., and Nott, D. J. (2020), “High-Dimensional Copula Variational Approximation through Transformation,” Journal of Computational and Graphical Statistics, 29, 729–743. DOI: 10.1080/10618600.2020.1740097.

- Tan, L., Bhaksaran, A., and Nott, D. (2020), “Conditionally Structured Variational Gaussian Approximation with Importance Weights,” Statistics and Computing, 30, 1225–1272. DOI: 10.1007/s11222-020-09944-8.

- Tan, L. S. L., and Nott, D. J. (2018), “Gaussian Variational Approximation with Sparse Precision Matrices,” Statistics and Computing, 28, 259–275. DOI: 10.1007/s11222-017-9729-7.

- Titsias, M., and Lázaro-Gredilla, M. (2014), “Doubly Stochastic Variational Bayes for Non-conjugate Inference,” in Proceedings of the 31st International Conference on Machine Learning, volume 32 of Proceedings of Machine Learning Research, eds. E. P. Xing and T. Jebara, pp. 1971–1979, Beijing, China, PMLR.

- Tran, M. N., Nguyen, N., Nott, D., and Kohn, R. (2020), “Bayesian Deep Net GLM and GLMM,” Journal of Computational and Graphical Statistics, 29, 97–113. DOI: 10.1080/10618600.2019.1637747.

- Tukey, T. W. (1977), “Modern Techniques in Data Analysis,” NSP-sponsored Regional Research Conference at Southeastern Massachesetts University, North Dartmount, Massachesetts.

- Yeo, I. K., and Johnson, R. A. (2000), “A New Family of Power Transformations to Improve Normality or Symmetry,” Biometrika, 87, 954–959. DOI: 10.1093/biomet/87.4.954.