Abstract

We provide a computational exercise suitable for early introduction in an undergraduate statistics or data science course that allows students to “play the whole game” of data science: performing both data collection and data analysis. While many teaching resources exist for data analysis, such resources are not as abundant for data collection given the inherent difficulty of the task. Our proposed exercise centers around student use of Google Calendar to collect data with the goal of answering the question “How do I spend my time?” On the one hand, the exercise involves answering a question with near universal appeal, but on the other hand, the data collection mechanism is not beyond the reach of a typical undergraduate student. A further benefit of the exercise is that it provides an opportunity for discussions on ethical questions and considerations that data providers and data analysts face in today’s age of large-scale internet-based data collection.

1 Introduction

The title of our article refers to the reality that to master a subject, one needs to do more than practice the individual, elemental, and necessary parts. For example, no matter how good you are at running, dribbling the ball, or shooting the ball into the upper corner of the net, you cannot excel at soccer unless you practice the whole game (Perkins 2010). While Wickham and Bryan (2019) used the phrase to describe creating an entire R package from the beginning all the way to distribution on GitHub, we use the term to describe the entire process by which data science is performed.

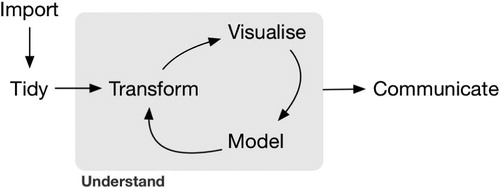

Many statistics and data science educators are likely quite familiar with Wickham and Grolemund’s (2017) model of the tools needed in a typical data science project seen in . While one should not interpret any simplifying diagram in an overly literal fashion, we appreciate two aspects of this diagram. First, the cyclical nature of the “Understand” portion emphasizes that in many substantive data analysis projects, original models and visualizations need updating, necessitating many iterations through this cycle until a desired outcome can be communicated. Second, it encourages a holistic view of the elements of a typical data science project.

Previously, many undergraduate statistics courses have not taught such a holistic approach, instead focusing only on individual components at the expense of others, thereby leaving gaps in the process. In this article, we view these gaps in terms of what Not So Standard Deviations podcast hosts Roger Peng and Hilary Parker call differences between “what data analysis is” and “what data analysts do” (Peng and Parker 2018b). In other words, there are many differences between the idealized view of the data analysis process and what is actually done in practice.

We argue that it is important to adjust curricula to “bridge the gaps” between “what data analysis is” and “what data analysts do” (McNamara 2015). For example, a substantial bridging of the gap has come through great pedagogical strides to expose students in statistics and data science courses to the entirety of the process (Baumer 2015; Hardin et al. 2015; Loy, Kuiper, and Chihara 2019; Yan and Davis 2019).

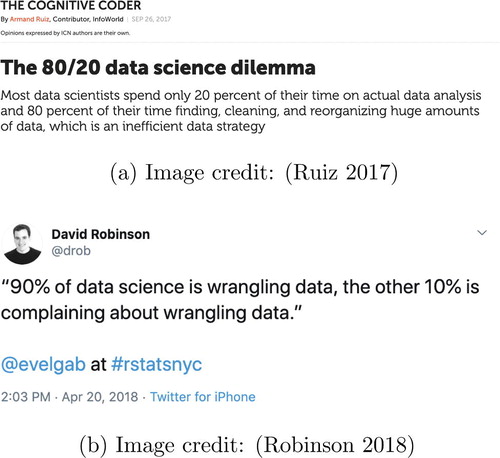

Another example of an existing gap in some statistics and data science courses is sparse treatment of data wrangling. A familiar refrain from working data scientists is that 80% of their time is spent wrangling data, leaving only 20% for actual analysis (see ). Given the prospect of the 80–20 rule, Kim, Ismay, and Chunn (2018) argued that to completely shield students in statistics courses from performing meaningful data wrangling is to do them a disservice. Horton, Baumer, and Wickham (2015) proposed five key data wrangling elements that deserve greater emphasis in the undergraduate curriculum: creative and constructive thinking, facility with different types of data, statistical computing skills, experience wrangling, and an ethos of responsibility. Two of the most seminal contributions to modernizing the statistics and data science curriculum, National Academies of Sciences, Engineering, and Medicine (2018) and Nolan and Temple Lang (2010), both emphasize the importance of teaching and practicing data wrangling.

In this article, we argue that another such gap in curricula is inadequate treatment of data collection. Many working data scientists do not spend a large proportion of their time working on one static dataset as suggested by the single “Import” step in (Robinson 2018; Ruiz 2017). Instead, the data used to address questions are often dynamic; the data change based on differences in time, sampling strategies, questions asked, variables collected, etc. Furthermore, only after a first iteration of data has been collected and analyzed can research questions be updated and fine-tuned, often necessitating another round (or more) of data collection.

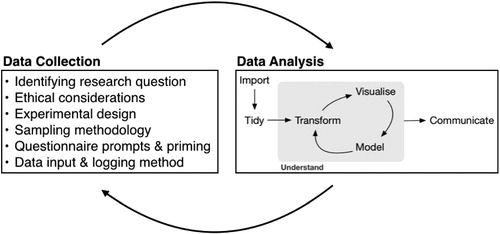

While we are not the first to argue that data collection and acquisition is an important topic for the statistics classroom (Zhu et al. 2013) or the computer science classroom (Blitzstein 2013; Protopapas et al. 2020), we point out that Wickham and Grolemund’s (2017) model of data analysis from is not a complete representation of a typical “data science project” as it neglects a critical phase: (repeated) data collection. We present what we term “playing the whole game” in , which augments the earlier “data analysis” diagram in with an additional “data collection” block. The new block consists of key elements to consider when collecting data, including ethical considerations, the experimental design (if any), the sampling methodology, questionnaire design in the case of surveys, the data input and logging methods used, and most critically, identifying the research question. While the new block is by no means exhaustive and thus should not be interpreted in an overly literal fashion either, we again emphasize the cyclical and iterative nature of data collection and data analysis. Our diagram and suggested activity are similar to the PPDAC cycle promoted by the New Zealand mathematics curriculum (Statistical Enquiry Cycle 2012).

Note that some of the tasks in the “Data Collection” box may not be seen immediately as collecting data. However, we believe that they are important considerations at the data collection step. For example, as it relates to “Ethical considerations,” questions like “Should you collect someone’s location data?” or “Should you require explicit permission to collect a particular variable?” would be considered. As it relates to “Question prompts,” studies on the psychological effect of “priming” have shown that you can steer survey responses in a particular direction depending on how and when questions are asked (Hjortskov 2017).

In the context of undergraduate statistics and data science classrooms, a large amount of data analysis conducted by students uses data that they themselves or the class as a whole had no part in collecting. For example, many students seek data for assignments and projects from repositories like Kaggle.com or data.gov. Thus, in many cases students get no exposure to the “data collection” component of and take the data for granted. Lack of exposure to data collection has potential pitfalls including potentially erroneous conclusions based on mistaken assumptions of the data collection method, a lack of a sense of ownership of the work, and most importantly, no experience going through the iterative process of “playing the whole game.”

Previous literature has focused on slightly different ways of incorporating data collection into the classroom: research projects (e.g., Halvorsen and Moore 2000; Halvorsen 2010; Sole and Weinberg 2017); simulating realistic data through games (e.g., Kuiper and Sturdivant 2015); and collecting classroom activity data (e.g., Scheaffer et al. 2004).

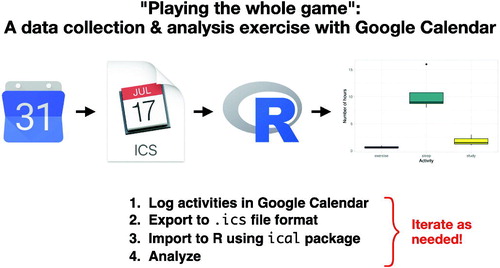

Building toward the goal of having students experience the entire data analysis process, we propose a data collection activity that allows students to mimic the real-life data collection conducted by numerous internet-age organizations in industry, media, government, and academia. At the same time the technological background and sophistication necessary for the activity is kept at a level suitable for undergraduate classroom settings. The classroom activity touches many components of playing the whole game, demonstrates to the students the difficulty of data science, and provides an interesting research question with which the students can engage. A visual summary of the activity is presented in .

1.1 Muse

The idea for our “data collection” exercise for students came out of an episode of the earlier mentioned Not So Standard Deviations podcast titled “Compromised Shoe Situation” (Peng and Parker 2018a), which is also described in a corresponding blog post by Roger Peng titled “How Data Scientists Think—A Mini Case Study” (Peng 2019).

Hosts Hilary Parker and Roger Peng gave each other a data science challenge whereby they had to solve a problem using data science. They contrasted it to other common data science challenges (such as the American Statistical Association’s DataFest (Bialik 2014) or prediction and classification competitions available from Kaggle.com) where the data have already been collected and cleaned.

The challenge that Parker and Peng proposed centers on identifying what factors influence the time it takes each of them to get to work. In one of our favorite discussions of their podcast, they break down the iterative process of gathering data, learning what information it provides, and gathering more data. They also discuss the difficulty of gathering precise information and the balance of “low-touch” and “high-touch” data collection (collection methods that require few active user actions versus many, respectively). In Parker’s data collection, she implemented a low-touch system of recording when her commute started by automatically recording when her phone disconnects from her home WiFi; a higher-touch part of her analysis was when she manually logged the route she took to work.

In the rest of the article, we describe how we have taken the ideas from Parker and Peng’s podcast and infused them into a class assignment previewed in that allows students to practice “playing the whole game.” In Section 2 we provide the details of the assignment and the considerations behind the choices we made. Section 3 provides a reflection on what we learned and how the assignment succeeded in accomplishing the goals for “playing the whole game.” In Section 3.3 we include quotes from reflection pieces written by students summarizing their experiences. As a vital aspect of our work, in Section 4 we discuss the ethical considerations of the assignment and how they can be generalized to the larger scale data collection performed by internet-age organizations in industry, media, government, and academia. As part of our ethics discussion, we emphasize the importance of bringing up data ethics throughout the semester with every data analysis, and not just in a data ethics course.

2 Materials and Methods

2.1 Context

In Fall 2019, both authors were teaching classes in which the whole game would be important to the learning outcomes of the course. Albert’s class was “Introduction to Data Science” at Smith College, a no-prerequisite course designed to appeal to a broad audience and act as an entry point to the Statistical and Data Sciences major. He gave the assignment in the fifth week of the semester as the first “mini-project.” Jo’s class was “Computational Statistics” at Pomona College with primarily junior and senior math/statistics majors; she used the assignment as the first homework of the semester.

In what follows, we provide the details of the assignment. To engage the students maximally, we tried to find a question with universal appeal and landed on “How do I spend my time?” In the assignment, the student is required to collect, wrangle, visualize, and analyze data based on entries they make in their own electronic calendar/planner application, such as Google Calendar, macOS Calendar, or Microsoft Outlook.

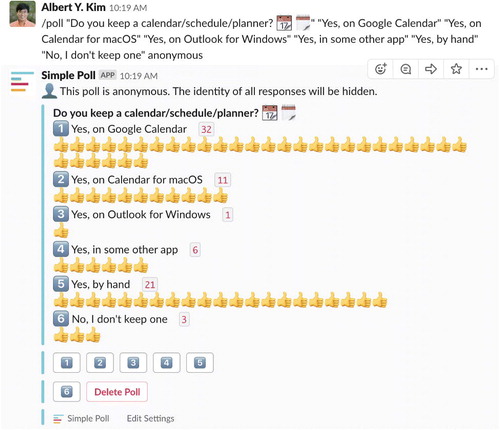

To assess prior student use of a calendar/planner, Albert polled his class at Smith College on their preferred method of keeping track of their schedule (see ). In Albert’s case, most students were already keeping an electronic calendar (44 of 74), while some were keeping a hand-written calendar (21 of 74).

2.2 Learning Outcomes

The learning outcomes for the exercise can be broken down into three categories. The first category is “Data collection,” which focuses on the technical aspects of the activity. The second category is “Data ethics,” which focuses on the larger context and conclusions resulting from the exercise. The last category encompasses the learning goals related to “Playing the whole game.”

Data collection

1. Experience creating measurable data observations (e.g., how is “one day” measured, or what defines “studying”).

2. Address data collection constraints due to limits in technological capacity and human behavior.

Data ethics

3. Practice the ethical and legal responsibilities of those collecting, storing, and analyzing data.

4. Decide limits for personal privacy.

5. Deliberate on the trade-offs between research results and privacy.

“Playing the whole game”

6. Tie together data collection, analysis, ethics, and communication components.

7. Iterate between and within the components of the “whole game.”

Throughout the article, we touch on all of the learning outcomes. Section 3.1 describes many of the benefits and learning outcomes related to “Data collection”; Section 3.2 describes many of the benefits and learning outcomes related to “Playing the whole game.”

As a specific example of learning outcome 5, consider ethics training for animal studies where there is typically a discussion on the minimum number of samples needed for “ethical research.” The idea is that an under-powered study would indicate that the animals had been needlessly sacrificed with no possibility of moving scientific research forward. As with minimum number of samples, we ask the students what types of research connect with which types of privacy violations, and where is the correct balance for pushing forward knowledge. A more complete examination of data ethics in the classroom is given in Section 4.

2.3 The Assignment

We have provided the complete assignments used by both authors at the following website https://smithcollege-sds.github.io/sds-www/JSE_calendar.html/. While the two versions of the assignment vary slightly in format and reflection, both still require the students to go through the entire process of collecting, wrangling, visualizing, analyzing, and communicating about the data, with an important additional step of reflecting on the information gathered. The assignment was scaffolded so that a student with minimal computational and coding experience could still directly work with calendar data.

Before we go into the details of working with calendar data, we first point out some differences in approach between the two assignments. One unique aspect of Albert’s class is that he had the students work in pairs. Each student would make their calendar entries and then export the data. However, instead of analyzing their own calendar, they would send their data to their partner, who then wrangled, visualized, and analyzed the data (and vice versa). The motivation was to encourage students to think about what data they could or should share with their partner, what data they couldn’t or shouldn’t, and any particular responsibilities that the individual analyzing the data had.

In Jo’s class, she spent half of one class period discussing the podcast in great detail. Because it was early in the semester, there were many unknowns about what data science is and the possible ways one can use data to make decisions. Additionally, the assignment and podcast continued to come up in class sessions throughout the semester as a way to ground conversations with respect to data collection and analysis: what we can and can’t do, as well as what we should and shouldn’t do.

2.4 Details of Working With Calendar Data

Students were instructed to track how they spent their time on their calendar application for approximately 10–14 days. More specifically, students were instructed to fill in blocks of time and mark the entry with the activity they were performing: sleeping, studying, eating, exercising, socializing, etc. The students chose their own categories to fit their own schedules. Students were able to have overlapping blocks of activities in situations where two activities were done simultaneously. Students were also informed that they should feel comfortable leaving out any details they did not want to share; indeed, if they wanted to, they were free to make up all the information in the calendar.

Note that our example centers around the use of Google Calendar. To ensure as much consistency as possible we encouraged students who did not have an electronic calendar to use Google Calendar to record their activities. However, the assignment can equally be done using macOS Calendar or Microsoft Outlook.

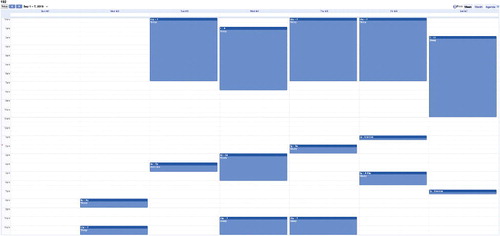

As an example, we filled a sample Google Calendar with entries between September 2nd and 7th, 2019, which you can view using the Google Calendar interface at http://bit.ly/dummy_calendar. We suggest that after scrolling to the week of September 1st, 2019, you click the “Week” tab on the top right for a week-based overview of the calendar entries.

After looking at the sample calendar, students exported their own calendar data to the .ics file format, a universal calendar format used by several email and calendar programs, including Google Calendar, macOS Calendar, and Microsoft Outlook. They then imported their file into R as a data frame using the ical_parse_df() function from the ical package (Meissner 2019).

To help the students focus on the larger data science paradigm, the instructors scaffolded the assignment in template R Markdown files (Allaire et al. 2019) which served as the foundation of the students’ submissions; we provide these scaffolded assignments at https://smithcollege-sds.github.io/sds-www/JSE_calendar.html/. Continuing our earlier example involving a sample Google Calendar, we exported its contents to a 192.ics file. Subsequently, we imported the .ics file into R as a tibble data frame (Müller and Wickham 2019), and then performed some data wrangling using dplyr (Wickham, François, Henry, and Müller 2019) and the lubridate package for parsing and wrangling dates and times (Grolemund and Wickham 2011).

Below, we give an extract of the code representing the crux of the process previously described. (Note that the code below sets the timezone of all entries to be “America/New_York”. For a list of other timezones that can be used instead, run the OlsonNames() function in R.)

library(ggplot2)
library(dplyr)
library(lubridate)
library(ical)

calendar_data <- "192.ics" %>%
  # Use ical package to import into R:
  ical_parse_df() %>%
  # Convert to "tibble" data frame format:
  as_tibble() %>%
  # Use lubridate package to wrangle dates and times:
  mutate(
    start_datetime = with_tz(start, tzone = "America/New_York"),
    end_datetime = with_tz(end, tzone = "America/New_York"),
    duration = end_datetime - start_datetime,
    date = floor_date(start_datetime, unit = "day")
  ) %>%
  # Convert calendar entry to all lowercase and rename:
  mutate(activity = tolower(summary)) %>%
  # Compute total duration of time for each day & activity:
  group_by(date, activity) %>%
  summarize(duration = sum(duration)) %>%
  # Convert duration to numerical variable and set units. Here hours
  # (dividing by 60 assumes the computed durations are in minutes):
  mutate(
    duration = as.numeric(duration),
    hours = duration / 60
  ) %>%
  # Filter out only rows where date is later than 2019-09-01:
  filter(date > "2019-09-01")
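The division by 60 in the extract above only yields hours when the subtraction of date-times happens to be stored in minutes. As a minimal sketch of one way to make the units explicit, using base R only and two made-up calendar entries (the times below are illustrative assumptions, not data from the assignment):

```r
# Hypothetical start and end times for two calendar entries (illustrative only).
start <- as.POSIXct(c("2019-09-02 09:00:00", "2019-09-02 22:00:00"),
                    tz = "America/New_York")
end   <- as.POSIXct(c("2019-09-02 10:30:00", "2019-09-03 06:00:00"),
                    tz = "America/New_York")

# Subtracting date-times returns a "difftime" whose units R chooses automatically.
duration <- end - start
print(units(duration))

# Requesting the units explicitly removes the need to guess a conversion factor.
hours <- as.numeric(duration, units = "hours")
print(hours)
```

Asking for `units = "hours"` directly is one possible design choice; it avoids relying on whichever default unit a given entry range produces.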

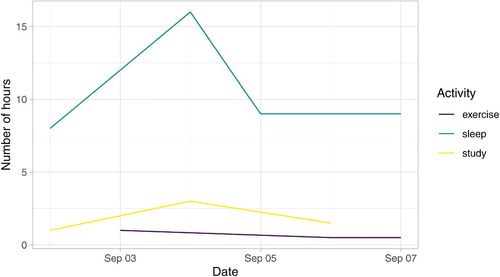

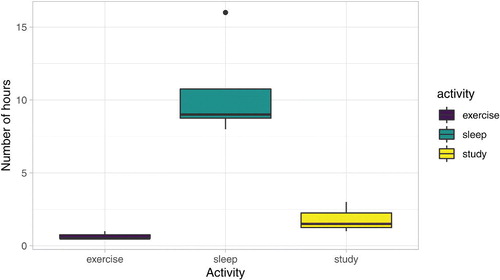

The resulting calendar_data data frame output is presented in . The calendar_data data frame can then be used to make plots like the time-series linegraph in and the side-by-side boxplots in .
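The two plot types mentioned here could be produced along the following lines. This is only a sketch: the small data frame is invented for illustration, it stores `date` as a plain `Date` rather than the date-time produced by the extract above, and the aesthetic choices are assumptions rather than the exact code behind the published figures.

```r
library(ggplot2)
library(dplyr)

# Hypothetical summary with one row per day and activity (made-up values).
calendar_data <- tibble(
  date = rep(as.Date("2019-09-02") + 0:3, each = 2),
  activity = rep(c("sleep", "study"), times = 4),
  hours = c(7.5, 3, 8, 4.5, 6, 5, 7, 2.5)
)

# Time-series linegraph: hours per day, one line per activity.
line_plot <- ggplot(calendar_data, aes(x = date, y = hours, color = activity)) +
  geom_line() +
  labs(x = "Date", y = "Hours", color = "Activity")

# Side-by-side boxplots: distribution of daily hours for each activity.
box_plot <- ggplot(calendar_data, aes(x = activity, y = hours)) +
  geom_boxplot() +
  labs(x = "Activity", y = "Hours")

print(line_plot)
print(box_plot)
```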

Table 1 Example calendar data frame.

3 Results

The assignments we used in our classes did not take a substantial amount of time to create, execute, or discuss. Albert engaged with the assignment for about half a class period at three different times: (1) to motivate and introduce the assignment, including an end-to-end demonstration; (2) a check-in to make sure everyone was on track; and (3) an in-class discussion after the assignment had been submitted. Jo spent only a few minutes discussing the technical aspects of the assignment and 30 minutes during one class period discussing Peng and Parker (2018a). We believe that including the “play the whole game” activity in the course allowed for a much deeper understanding and reflection on the entire data science pipeline, without needing to substitute any of our previous course learning goals. That is to say, the assignment we have shared is in keeping with the current structure of many statistics and data science courses. In fact, while our article was under review, Prof. Katharine F. Correia at Amherst College extended our original ideas and modified them so that her students could create “data diaries” during the social isolation period of Spring 2020. Some examples of her students’ work can be found here: https://stat231-01-s20.github.io/data-science-diaries/.

We now discuss what we view as the successes and lessons of the activity (for student perspectives on these successes and lessons see Section 3.3).

3.1 Benefits Inherent to the Activity

First, the focus on “self” and an engaging question (“How do I spend my time?”) easily captured our students’ interest. Universally, they found the assignment compelling, with one student remarking informally afterward that they planned on pursuing further analyses on their own private Google Calendar. Another student came up with a clever idea of documenting their intended time studying versus their actual time studying. Some students seemed concerned that their question on how they used their time wasn’t interesting enough, but we emphasized that the project should be more about process than outcome. Additionally, we prompted students with follow-up queries such as: “Do the amounts of time spent on certain activities have an inverse relationship with each other?” or “Do you spend as much time on a particular activity as you expect to?” (where the latter question would necessitate recording both expected and actual time for each event).

Second, having the data collection involve time intervals standardizes the scope and type of data collected. As can be seen in Table 1 for the example calendar_data data frame, all resulting data frames consist of three variables: date (numerical), length of time spent (numerical), and activity type (categorical). Having such a combination of variables is an excellent starting point for data science courses. Furthermore, many students encountered very interesting computational and data wrangling questions quite organically, some along the lines of:

How do I combine two types of activities in R?

How do I only select events from days past 2019-09-02?

My “hours spent” variable is actually in minutes rather than hours. How do I convert it to hours? (We noticed operating system level variation in the default units of the recorded length variable in the calendar_data dataframe.)

How would I make a scatterplot of daily time spent studying versus time spent sleeping?

The last question is particularly interesting as it necessitates converting data frames from tall/long format (like that of the calendar_data example in Table 1) to wide format using the pivot_wider() function from the tidyr package (Wickham and Henry 2019). In our opinion, this question serves as an ideal method to teach the subtle yet important concept of “tidy” data (Wickham 2014). Rather than starting with the somewhat technical definition of “tidy” data, there is a benefit to having students gently “hit the wall” whereby they cannot create a certain visualization or wrangle data in a certain way unless the data are in the correct format.
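To make the long-to-wide step concrete, here is a minimal sketch on invented data. The column names mirror the calendar_data example, but the values and the choice of the two activities are assumptions for illustration.

```r
library(dplyr)
library(tidyr)
library(ggplot2)

# Hypothetical long-format data: one row per day and activity (made-up values).
calendar_data <- tibble(
  date = rep(as.Date("2019-09-02") + 0:2, each = 2),
  activity = rep(c("sleep", "study"), times = 3),
  hours = c(7.5, 3, 8, 4.5, 6, 5)
)

# Wide format: one row per day, one column of hours per activity.
calendar_wide <- calendar_data %>%
  pivot_wider(names_from = activity, values_from = hours)

# With sleep and study in separate columns, a scatterplot becomes possible.
study_vs_sleep <- ggplot(calendar_wide, aes(x = sleep, y = study)) +
  geom_point() +
  labs(x = "Hours sleeping", y = "Hours studying")

print(calendar_wide)
```

In the long format there is no single row that holds both a day's sleep and its study time, which is why the scatterplot cannot be specified until the data are reshaped.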

Finally, both instructors found that the exercise served as an excellent opportunity to teach students about file paths and including the dataset (in this case the .ics file) in an appropriate directory. Students were required to compile .Rmd files that connected to a unique .ics file, and a second individual (either a partner or a student grader) also needed to be able to compile the .Rmd file. Because a typical homework assignment has the professor provide a link to a dataset, our experience is that working with file paths is both difficult for novice data science students and a difficult topic for which to provide practice examples.
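As one possible illustration of the file path issue, the sketch below builds a relative path and checks it before any import is attempted. The folder name `data` and the project layout are hypothetical assumptions; only the file name 192.ics comes from the example above.

```r
# Hypothetical layout: the .Rmd file sits next to a "data" folder that holds
# the exported calendar. The folder name is an assumption for illustration.
ics_path <- file.path("data", "192.ics")

# A relative path resolves against the working directory, which for a knitted
# .Rmd file is by default the folder containing the .Rmd file.
if (file.exists(ics_path)) {
  message("Found calendar export at: ", normalizePath(ics_path))
} else {
  message("No file at '", ics_path, "'; working directory is ", getwd())
}
```

A relative path of this kind keeps working when a partner or grader copies the whole project folder, whereas an absolute path to one student's machine would not.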

3.2 Benefits From “Playing Whole Game”

Importantly, there were benefits engendered by having students “play the whole game,” both (1) of a technical, coding, and computational nature and (2) of a scientific and research nature. Many lessons were only learned by students after having gone through an entire iteration of the “data collection” and “data analysis” cycle as seen in .

For example, one student made calendar entries recording only the start times of events, whereas the assignment assumed time intervals were being recorded: “I went to sleep at time X” versus “I slept between times X and Y, and thus slept for Z hours.” The student thus needed to revise their data collection method.

Other students found that unless their calendar entry titles matched exactly, they were recorded as two different activity types. Consider the following difference due to a trailing space: “Sleep” versus “Sleep ”. Such data collection methods were also revised to ensure entries were standardized. These students were reminded about the differences in how humans and computers process text data.
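The students in our classes addressed this by revising how they entered data. As a complementary sketch, entry titles can also be standardized after import; the titles below are invented for illustration and the cleaning rule (trim whitespace, then lowercase) is one possible choice among many.

```r
# Hypothetical calendar entry titles as a student might have typed them.
entry_titles <- c("Sleep", "Sleep ", " sleep", "Study")

# To the computer these are four different strings, hence four "activities".
n_raw <- length(unique(entry_titles))

# Trimming surrounding whitespace and lowercasing collapses the variants.
activity <- tolower(trimws(entry_titles))
n_clean <- length(unique(activity))

print(unique(activity))
```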

Another issue that surprised the instructors was that calendar entries based on a “repeat” schedule only appeared once in their resulting data in R: the entry for the first day. The instructors thus had to consider whether to update the assignment instructions for students in future classes, or to let them encounter this issue on their own.

On top of more technical, coding, and computational issues, sometimes students realized mid-way that their research question itself needed revising and thus had to retroactively fill in their calendars using their best guesses. These students thus realized the importance of iterating through the entire process sooner rather than later to prevent errors from accumulating and snowballing.

An unforeseen issue that combined both technical and scientific queries was how to allocate sleep hours. For example, if a student went to sleep at 10 p.m. and woke up at 6 a.m., then 2 hr of sleep would be logged for one day and 6 hr for the next, whereas most people would call this a single night of sleep.
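The two ways of counting this night of sleep can be sketched as follows. The specific dates are made up, and crediting the whole night to the wake-up date is just one possible convention, not a rule used in the assignment.

```r
library(lubridate)

# Hypothetical sleep entry from 10 p.m. to 6 a.m. (dates are illustrative).
sleep_start <- ymd_hm("2019-09-02 22:00", tz = "America/New_York")
sleep_end   <- ymd_hm("2019-09-03 06:00", tz = "America/New_York")

# Option 1: split the entry at midnight, as a per-day summary implicitly does.
midnight <- ceiling_date(sleep_start, unit = "day")
hours_first_day  <- as.numeric(midnight - sleep_start, units = "hours")
hours_second_day <- as.numeric(sleep_end - midnight, units = "hours")

# Option 2: treat it as one night and credit it to the wake-up date.
night_of    <- as_date(sleep_end)
total_hours <- as.numeric(sleep_end - sleep_start, units = "hours")

print(c(hours_first_day, hours_second_day, total_hours))
```

Which option is appropriate depends on the research question, which is part of what makes the issue both technical and scientific.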

3.3 Student Quotes

On top of the earlier mentioned student “data diaries” from Prof. Katharine F. Correia’s course available at https://stat231-01-s20.github.io/data-science-diaries/, we now provide excerpts from the reflection pieces students wrote in Albert’s course at Smith College. Students were asked to write a joint reflection piece on their experiences, keeping the podcast in mind (Peng and Parker 2018a). In particular, students were given the following prompts:

As someone who provides data: What expectations do you have when you give your data?

As someone who analyzes other’s data: What legal and ethical responsibilities do you have?

The student quotes have been grouped into categories that relate back (roughly) to the previously described learning outcomes (LO) in Section 2.2.

Relating to iterating between “data collection” and “data analysis” (LO1):

“I fell ill and was unable to log in more data points for her to visualize. Student X was still able to visualize my activity, incorporating my sick days as another variable.”

“One of the technical issues we ran into, and probably the defining experience of this project, was the difficulty in creating consistent error free information to export to our partner. It’s kind of ridiculous how small manual entry errors, like whether or not I used spaces (in the calendar entries), made such a difference at the end of the line when mystery variables started showing up.”

“But that was not the biggest issue we encountered, our issue was the fact that we inputted repeated events on our calendar which affected how many observations came up onto the dataset (since only the first of the multiple events would show up).”

“I, for example, learned that data is fickle and needs consideration of how variables interact with each other within a data visualization before unnecessary data collection. Her original question was inadequate as one of her variables wasn’t a function of time; thus, she had to retroactively enter the data for her new question using her best estimate, meaning her data should be recognized as imprecise and that it could affect the outcome of any analysis.”

Relating to “low” and “high touch” data collection methods (LO2):

“Specifically for Student X’s data, it was interesting to work with real-time data recorded by her phone. Because it was automatically collected by her mobile device, there was less room for human errors when calculating the time spent on her phone screen.”

“The time is hard to pin down because sometimes there will be interruptions or potentially interleaving studying to do other things. We think that the study time could be off between an hour and a half an hour for regular weekdays and maybe two during weekends because sometimes it is harder to take down the exact start and stop time on the spot. Comparatively, recording sleeping time is much easier because we could always check the time when the alarm goes off and take a screenshot when setting the alarm for the other day.”

Relating to analyst ethical responsibilities (LO3):

“For example, in our group, one member accidentally shared their entire calendar data with the other member when only a small portion of this data was needed for analysis. The other member realized the first member’s mistake, deleted the data, and showed the first member how to separate the events she wanted to be analyzed from the rest of her calendar.”

Relating to prior analyst biases (LO3):

“It might be worthwhile as the analyzer to be completely transparent and address any previous biases that may affect the reliability of a given data analysis.”

“Keep to the original data, don’t change the data to get a favorable result.”

Relating to data provider expectations (LO4):

“Additionally, the individual should have the right to ask about the research project that the data is going to and how their data may be used.”

Relating to analyst empathy toward data providers (LO5):

“The question was straightforward but it also made us uneasy as we had to consider both what we were comfortable sharing and how to handle our partner’s data in an ethical manner.”

“On the other hand, because of my discomfort, I was also able to handle Student X’s data with the same caution that I would expect her to do with my dataset.”

“This in turn motivated me to handle her data with care and sensitivity. For this reason, I appreciated this project as it forced us to simultaneously consider our roles both as people who share our data in numerous scenarios throughout our daily lives and as data science students with a new responsibility to handle another person’s data ethically.”

“If we oppose Facebook tracking our personal information and profiting off it without our consent, we owe it to our customers/providers of data to not do the same.”

“Personally I did question how comfortable I was sharing information about XXX, but decided to push my boundaries, because though it made me nervous to share information about XXX I was also very curious about how it affected YYY, and the actual risk of sharing this information was little to none. I wanted to gain some insight from this project that would help me lead a healthier, more stable life.”

Relating to the value of data (LO5):

“There would be no incentives for us to share our data if we are not getting anything back from it.”

“A gray area of data analysis would be targeted ads. On the one hand, one could argue that getting targeted ads bring convenience to only getting advertised products we would enjoy. On the other hand, one could also argue it pushes capitalism on us and thus only disadvantages the public by heavily influencing us to buy products we might not need.”

Relating to the balancing act many analysts face (LO5):

“Information that puts our security or identity at risk should be left out. However, if too little information is shared, it may affect the ability to accurately represent any phenomena occurring within the data.”

“In the podcast we listened to, Hilary Parker specifically mentioned how in collecting employees’ commute time, she wanted to protect privacy by withholding the exact minute they left work. An alternative was releasing the general length of time employees spend commuting to work, but this would remove the impact of confounding variables and externalities. The exact times an employee commuted were important because part of the data collection included factoring in traffic patterns. Because of this consideration, Parker decided it would be more effective to round the times an employee arrived or left work to the nearest hour to maximize privacy and accuracy of the data.”
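The coarsening strategy described in the preceding quote can be illustrated with a minimal base R sketch that rounds timestamps to the nearest hour before they are shared. The timestamps below are invented for illustration and the helper name `round_to_hour` is hypothetical; this is a sketch of the general idea, not the procedure used in the podcast.

```r
# Toy departure times (hypothetical values, not real commute data).
departures <- as.POSIXct(
  c("2018-11-05 17:12:00", "2018-11-05 17:48:00", "2018-11-06 08:29:59"),
  tz = "UTC"
)

# Round each timestamp to the nearest hour so the exact minute is not shared.
# round() on a date-time returns a POSIXlt object, so convert back to POSIXct.
round_to_hour <- function(x) {
  as.POSIXct(round(x, "hours"))
}

rounded <- round_to_hour(departures)

# Show the original and the coarsened times side by side.
data.frame(
  original = format(departures, "%Y-%m-%d %H:%M"),
  shared   = format(rounded, "%Y-%m-%d %H:%M")
)
```

Rounding trades precision for privacy: the coarser the unit, the less an analyst can say about minute-level patterns such as traffic, which is the balancing act the quote describes.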

Relating to actionable insight (LO6):

“Since then I have been more cautious about granting apps access to my information.”

“Visualization 1 made me realize that I should also spend more time during the weekdays sleeping and hanging out with friends to take care of myself.”

Relating to revised research questions (LO7):

“For example, while analyzing Student X’s data, we had to decide whether or not Friday would be considered a weekday or a weekend.”

“As for improvements in our next projects, for consistency and accuracy, it might be important to keep the data collection dates fixed so that there are the same number of the week days considered in the analysis.”
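The two revised research questions above (how to classify Friday, and whether every day of the week is equally represented) can be made explicit in code. The following base R sketch assumes a hypothetical two-week collection window; the dates, the helper name `label_day_type`, and the `weekend_days` argument are illustrative choices, not part of the original assignment.

```r
# Hypothetical collection window: two full weeks, Monday through Sunday.
start_date <- as.Date("2020-02-03")
end_date   <- as.Date("2020-02-16")
days <- seq(start_date, end_date, by = "day")

# Day of week as a number, 0 = Sunday through 6 = Saturday.
# Using "%w" avoids depending on locale-specific day names.
dow <- as.integer(format(days, "%w"))

# Label each day; `weekend_days` records the analyst's definition of a weekend.
label_day_type <- function(dow, weekend_days = c(0, 6)) {
  ifelse(dow %in% weekend_days, "weekend", "weekday")
}

strict  <- label_day_type(dow)                             # Saturday, Sunday
relaxed <- label_day_type(dow, weekend_days = c(0, 5, 6))  # Friday to Sunday

table(strict)
table(relaxed)

# Count how often each day of the week appears in the window.
# The counts are all equal only when the window covers whole weeks.
counts <- table(factor(dow, levels = 0:6))
balanced <- length(unique(as.integer(counts))) == 1
balanced
```

Making the weekend definition an argument keeps the decision visible and easy to revisit, and the balance check flags collection windows in which some days of the week are counted more often than others.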

4 Discussion

As noted by Baumer et al. (2020), many major professional societies, including the American Statistical Association (ASA) (Committee on Professional Ethics 2018b), the Association for Computing Machinery (ACM) (Committee on Professional Ethics 2018a), and the National Academy of Sciences (NAS) (Committee on Science, Engineering, and Public Policy 2009), have long published guidelines for conducting ethical research. Only more recently, however, has a literature emerged on weaving data ethics topics early and often throughout undergraduate curricula in data science, statistics, and computer science (Burton, Goldsmith, and Mattei 2018; Elliott, Stokes, and Cao 2018; Heggeseth 2019; Ott 2019; Baumer et al. 2020). The National Academies of Sciences, Engineering, and Medicine (2018, pp. 30–33) provided specific recommendations for teaching ethics in an undergraduate classroom (e.g., use case studies, practice ethical discussions throughout, adopt a code of ethics).

The class activity we present provides myriad ways to start a classroom conversation about data ethics. Albert motivated the idea of the project by discussing the pros and cons of how he logs his daily calorie consumption using the MyFitnessPal app. On the one hand, the students could appreciate the convenience of logging calories using the barcode scanner; on the other hand, they could also understand that Albert is essentially telling the Under Armour corporation everything he eats and when. In the age of Fitbit, 23andMe, and innumerable health and fitness apps, the example presents the students with an opportunity to think about what information they are sharing with large corporations.

Some of the discussion questions that led to good classroom conversations included:

- What were Hilary’s main hurdles in gathering accurate data? What were your main hurdles? Are there ethical reasons to need “accurate” data?

- What are the trade-offs between collecting data which is “high-touch” (manual) versus “low-touch” (automatic)? Do any of the trade-offs bring up ethical dilemmas?

- What expectations do you have surrounding the data you have provided as part of the class assignment? What expectations do you have surrounding the data you provide to online apps?

- (For Albert’s class:) What ethical responsibilities do you have when analyzing someone else’s data?

Instructors may choose a different type of data collection, or let each student decide on their own collection method. Some possible extensions include the above-mentioned fitness apps (outputting all entries in a .csv file); every interaction on Instagram (outputting a .json file); Google Trends or Google Search terms (output as a function of time); or personal Facebook information (as .html or .json). Each data type will present a unique setting in which to discuss privacy and data ethics considerations.

In Jo’s class conversation about the podcast, one student appreciated that Hilary and Roger discussed whether they should do the data collection at all. The student suggested that maybe Hilary should just leave earlier for work so as to not make anyone wait, which would be the kinder thing to do. Indeed, as Hilary herself says, “… it’s probably better to just give yourself a cushion so you don’t have to worry.” The student comment led to a larger conversation about what types of questions we should answer with data science and what types of questions should not be data driven.

In the last few minutes of the podcast, Parker says (Peng and Parker 2018a):

… setting up, thinking through what could you collect, what makes sense to collect? What are the assumptions in the data that you’re collecting? Like going through that, I would encourage people to go through that process, even if you end up like not doing it long-term or something, but it’s still, I think that’s the skill set that’s important to develop if you want to work in data science.

Why does Hilary Parker implore people to “play the whole game”? We believe that there is a disconnect in the narrative surrounding “what is data analysis” when instead it should be more closely connected to “what do data analysts do.” The only way to inculcate lessons pertaining to “what data analysts do” is by having students “play the whole game.” Furthermore, just as in real life, it is critical that students go through the cycle several times. In other words, “iterate early and iterate often.”

Acknowledgments

Most importantly, we would like to acknowledge the Smith College and Pomona College students with whom we work. It is from their ideas and energy that we discovered the fun and beauty of working in statistics and data science. Hilary Parker and Roger Peng provided not only the inspiration for the activity but also helpful feedback on the preprint. We appreciate the thoughtful suggestions of three anonymous referees and the associate editor. On top of the packages cited in the article, the authors also used the ggplot2 (Wickham, Chang, Henry, Pedersen, Takahashi, Wilke, Woo, and Yutani 2019), kableExtra (Zhu 2019), knitr (Xie 2019), and viridis (Garnier 2018) packages. We also thank Hadley Wickham for providing the direct inspiration for the “playing the whole game” wording in our article. The authors thank numerous colleagues and students for their support.

Notes

1 The work has been verified by the Smith College Institutional Review Board as “Exempt” according to 45 CFR 46.101(b)(1): (1) Educational Practices.

2 Both authors were surprised to see that any college student would be able to make it through the semester without any type of calendar at all (3 of 74)!

3 Per Smith College Institutional Review Board guidelines, explicit consent was obtained directly from all students who are confidentially quoted in the article.

References

- Allaire, J., Xie, Y., McPherson, J., Luraschi, J., Ushey, K., Atkins, A., Wickham, H., Cheng, J., Chang, W., and Iannone, R. (2019), “rmarkdown: Dynamic Documents for R,” R Package Version 1.15, available at https://CRAN.R-project.org/package=rmarkdown.

- Baumer, B. S. (2015), “A Data Science Course for Undergraduates: Thinking With Data,” The American Statistician, 69, 334–342, DOI: 10.1080/00031305.2015.1081105.

- Baumer, B. S., Garcia, R. L., Kim, A. Y., Kinnaird, K. M., and Ott, M. Q. (2020), “Integrating Data Science Ethics Into an Undergraduate Major,” arXiv no. 2001.07649.

- Bialik, C. (2014), “The Students Most Likely to Take Our Jobs,” FiveThirtyEight.com, available at https://fivethirtyeight.com/features/the-students-most-likely-to-take-our-jobs/.

- Blitzstein, J. (2013), “What Is It Like to Design a Data Science Class? In Particular, What Was It Like to Design Harvard’s New Data Science Class, Taught by Professors Joe Blitzstein and Hanspeter Pfister?,” available at https://www.quora.com/Data-Science/What-is-it-like-to-design-a-data-science-class-In-particular-what-was-it-like-to-design-Harvards-new-data-science-class-taught-by-professors-Joe-Blitzstein-and-Hanspeter-Pfister/answer/Joe-Blitzstein.

- Burton, E., Goldsmith, J., and Mattei, N. (2018), “How to Teach Computer Ethics Through Science Fiction,” Communications of the ACM, 61, 54–64, DOI: 10.1145/3154485.

- Committee on Professional Ethics (2018a), ACM Code of Ethics and Professional Conduct, New York, NY: Association for Computing Machinery, Inc., available at https://www.acm.org/binaries/content/assets/about/acm-code-of-ethics-booklet.pdf.

- Committee on Professional Ethics (2018b), “Ethical Guidelines for Statistical Practice,” Technical Report, American Statistical Association, available at http://www.amstat.org/asa/files/pdfs/EthicalGuidelines.pdf.

- Committee on Science, Engineering, and Public Policy (2009), On Being a Scientist: A Guide to Responsible Conduct in Research (3rd ed.), Washington, DC: National Academies Press.

- Elliott, A. C., Stokes, S. L., and Cao, J. (2018), “Teaching Ethics in a Statistics Curriculum With a Cross-Cultural Emphasis,” The American Statistician, 72, 359–367, DOI: 10.1080/00031305.2017.1307140.

- Garnier, S. (2018), “viridis: Default Color Maps From ‘matplotlib’,” R Package Version 0.5.1, available at https://CRAN.R-project.org/package=viridis.

- Grolemund, G., and Wickham, H. (2011), “Dates and Times Made Easy With lubridate,” Journal of Statistical Software, 40, 1–25, DOI: 10.18637/jss.v040.i03.

- Halvorsen, K. (2010), “Formulating Statistical Questions and Implementing Statistics Projects in an Introductory Applied Statistics Course,” in Proceedings of the International Conference on Teaching Statistics (Vol. 8), available at https://iase-web.org/documents/papers/icots8/ICOTS8_4G3_HALVORSEN.pdf.

- Halvorsen, K., and Moore, T. (2000), “Section 2: Motivating, Monitoring, and Evaluating Student Projects,” in Teaching Statistics: Resources for Undergraduate Instructors (Vol. 52), ed. T. Moore, Washington, DC: The Mathematical Association of America, pp. 27–32.

- Hardin, J., Hoerl, R., Horton, N. J., Nolan, D., Baumer, B. S., Hall-Holt, O., Murrell, P., Peng, R., Roback, P., Temple Lang, D., and Ward, M. D. (2015), “Data Science in Statistics Curricula: Preparing Students to ‘Think With Data’,” The American Statistician, 69, 343–353, DOI: 10.1080/00031305.2015.1077729.

- Heggeseth, B. (2019), “Intertwining Data Ethics In Intro Stats,” in Symposium on Data Science and Statistics, available at https://drive.google.com/file/d/1GXzVMpb6GVNfWPS6bd9jggtqq1C77Wsc/view.

- Hjortskov, M. (2017), “Priming and Context Effects in Citizen Satisfaction Surveys,” Public Administration, 95, 912–926, DOI: 10.1111/padm.12346.

- Horton, N. J., Baumer, B. S., and Wickham, H. (2015), “Taking a Chance in the Classroom: Setting the Stage for Data Science: Integration of Data Management Skills in Introductory and Second Courses in Statistics,” CHANCE, 28, 40–50, DOI: 10.1080/09332480.2015.1042739.

- Kim, A. Y., Ismay, C., and Chunn, J. (2018), “The fivethirtyeight R Package: ‘Tame Data’ Principles for Introductory Statistics and Data Science Courses,” Technology Innovations in Statistics Education, 11, available at https://escholarship.org/uc/item/0rx1231m.

- Kuiper, S., and Sturdivant, R. X. (2015), “Using Online Game-Based Simulations to Strengthen Students’ Understanding of Practical Statistical Issues in Real-World Data Analysis,” The American Statistician, 69, 354–361, DOI: 10.1080/00031305.2015.1075421.

- Loy, A., Kuiper, S., and Chihara, L. (2019), “Supporting Data Science in the Statistics Curriculum,” Journal of Statistics Education, 27, 2–11, DOI: 10.1080/10691898.2018.1564638.

- McNamara, A. A. (2015), “Bridging the Gap Between Tools for Learning and for Doing Statistics,” PhD thesis, UCLA, available at https://escholarship.org/uc/item/1mm9303x.

- Meissner, P. (2019), “ical: ‘iCalendar’ Parsing,” R Package Version 0.1.6, available at https://CRAN.R-project.org/package=ical.

- Müller, K., and Wickham, H. (2019), “tibble: Simple Data Frames,” R Package Version 2.1.3, available at https://CRAN.R-project.org/package=tibble.

- National Academies of Sciences, Engineering, and Medicine (2018), Data Science for Undergraduates: Opportunities and Options, Washington, DC: The National Academies Press.

- Nolan, D., and Temple Lang, D. (2010), “Computing in the Statistics Curricula,” The American Statistician, 64, 97–107, DOI: 10.1198/tast.2010.09132.

- Ott, M. (2019), “Symposium on Data Science & Statistics, Seattle, WA,” available at http://www.science.smith.edu/~mott/SDSS2019.html.

- Peng, R. (2019), “How Data Scientists Think—A Mini Case Study,” Simply Stats, available at https://simplystatistics.org/2019/01/09/how-data-scientists-think-a-mini-case-study/.

- Peng, R., and Parker, H. (2018a), “‘Compromised Shoe Situation’, Not So Standard Deviations,” available at http://nssdeviations.com/71-compromised-shoe-situation.

- Peng, R., and Parker, H. (2018b), “‘The Smoothie Happens Everyday’, Not So Standard Deviations,” available at http://nssdeviations.com/70-the-smoothie-happens-everyday.

- Perkins, D. (2010), Making Learning Whole: How Seven Principles of Teaching Can Transform Education, San Francisco, CA: Jossey-Bass.

- Protopapas, P., Rader, K., Glickman, M., Tanner, C., Blitzstein, J., Pfister, H., and Kaynig-Fittkau, V. (2020), “CS109 Data Science,” available at http://cs109.github.io/2015/.

- Robinson, D. (2018), “Twitter,” available at https://twitter.com/drob/status/987436677026254848.

- Ruiz, A. (2017), “The 80/20 Data Science Dilemma,” InfoWorld, available at https://www.infoworld.com/article/3228245/the-80-20-data-science-dilemma.html.

- Scheaffer, R., Erickson, T., Watkins, A., Witmer, J., and Gnanadesikan, M. (2004), Activity-Based Statistics, Emeryville, CA: Key College.

- Sole, M. A., and Weinberg, S. L. (2017), “What’s Brewing? A Statistics Education Discovery Project,” Journal of Statistics Education, 25, 137–144, DOI: 10.1080/10691898.2017.1395302.

- Statistical Enquiry Cycle (2012), https://nzmaths.co.nz/category/glossary/statistical-enquiry-cycle.

- Wickham, H. (2014), “Tidy Data,” Journal of Statistical Software, 59, 1–23, DOI: 10.18637/jss.v059.i10.

- Wickham, H., and Bryan, J. (2019), R Packages, Sebastopol, CA: O’Reilly Media.

- Wickham, H., Chang, W., Henry, L., Pedersen, T. L., Takahashi, K., Wilke, C., Woo, K., and Yutani, H. (2019), “ggplot2: Create Elegant Data Visualisations Using the Grammar of Graphics,” R Package Version 3.2.1, available at https://CRAN.R-project.org/package=ggplot2.

- Wickham, H., François, R., Henry, L., and Müller, K. (2019), “dplyr: A Grammar of Data Manipulation,” R Package Version 0.8.3, available at https://CRAN.R-project.org/package=dplyr.

- Wickham, H., and Grolemund, G. (2017), R for Data Science, Sebastopol, CA: O’Reilly Media.

- Wickham, H., and Henry, L. (2019), “tidyr: Tidy Messy Data,” R Package Version 1.0.0, available at https://CRAN.R-project.org/package=tidyr.

- Xie, Y. (2019), “knitr: A General-Purpose Package for Dynamic Report Generation in R,” R Package Version 1.26, available at https://CRAN.R-project.org/package=knitr.

- Yan, D., and Davis, G. (2019), “A First Course in Data Science,” Journal of Statistics Education, 27, 99–109, DOI: 10.1080/10691898.2019.1623136.

- Zhu, H. (2019), “kableExtra: Construct Complex Table With ‘kable’ and Pipe Syntax,” R Package Version 1.1.0, available at https://CRAN.R-project.org/package=kableExtra.

- Zhu, Y., Hernandez, L. M., Mueller, P., Dong, Y., and Forman, M. R. (2013), “Data Acquisition and Preprocessing in Studies on Humans: What Is Not Taught in Statistics Classes?,” The American Statistician, 67, 235–241, DOI: 10.1080/00031305.2013.842498.