Abstract

The goal of identifying hazardous chemicals registered under the Registration, Evaluation, Authorization and restriction of CHemicals (REACH) Regulation and taking appropriate risk management measures relies on robust data registrations. However, the current procedures for European chemical manufacturers and importers to evaluate data under REACH neither support systematic evaluations of data nor transparently communicate these assessments. The aim of this study was to explore how using a data evaluation method with predefined criteria for reliability and establishing principles for assigning reliability categories could contribute to more structured and transparent evaluations under REACH. In total, 20 peer-reviewed studies for 15 substances registered under REACH were selected for an in-depth evaluation of reliability with the SciRAP tool. The results show that using a method for study evaluation, with clear criteria for assessing reliability and assigning studies to reliability categories, contributes to more structured and transparent reliability evaluations. Consequently, it is recommended to implement a method for evaluating data under REACH with predefined criteria and fields for documenting and justifying the assessments to increase consistency of data evaluations and transparency.

Introduction

Chemical hazard and risk assessments form the basis for any regulatory risk management measures and therefore must be scientifically robust to address potential hazards and risks adequately. However, the process of conducting chemical risk assessments is not currently standardized across different chemicals legislations or jurisdictions. In the wake of the controversies regarding potential harm of widely used chemicals, the need for a more systematic and transparent approach in identifying, selecting, evaluating and summarizing data for risk assessment has been recognized (SCENIHR 2012; WHO/UNEP 2013; Vandenberg et al. 2016; Whaley et al. 2016; Hoffmann et al. 2017). For example, expert judgment, which is an inherent part of risk assessment, brings subjectivity into the process and should be consistently and transparently applied (Rudén 2002; Wandall 2004; Wandall et al. 2007).

A systematic and transparent approach to risk assessment is particularly important for regulations such as the Registration, Evaluation, Authorization and restriction of CHemicals (REACH 2006), where the responsibility to gather data and assess the hazards and risks of chemicals lies with the many European chemical manufacturers and importers, i.e. registrants, whose experience and expertise vary. The REACH Regulation applies to industrial chemicals at or above one tonne per year and the data requirements are tonnage dependent, i.e. the requirements are more extensive for high tonnage substances. The registrants' assessments are electronically submitted in a registration dossier to the European Chemicals Agency (ECHA) through the software program International Uniform Chemical Information Database (IUCLID) (ECHA 2012a). IUCLID has been specifically developed for compiling and exchanging information on chemicals between industry and regulatory authorities. As of June 2018, ∼83,300 registration dossiers for ∼20,500 unique substances had been registered (ECHA 2018). Regulatory authorities use the information to identify chemicals of concern and take suitable regulatory action to manage risks if needed. Thus, it is crucial that these assessments are consistently conducted and transparent to ensure they can be scrutinized by ECHA as well as other stakeholders.

Evaluating the adequacy, or usefulness, of available data is an important step in the risk assessment process. Under REACH, adequacy is determined by evaluating the reliability as well as relevance of data. Reliability is the inherent scientific quality of the study, i.e. reproducibility and accuracy of the results. Relevance is the appropriateness of the study for identifying and characterizing a specific hazard and/or risk (ECHA 2011). Several methods have been developed to structure the evaluation of data (Roth and Ciffroy 2016; Samuel et al. 2016). However, the design of the method has been shown to influence the outcome of the assessment (Ågerstrand et al. 2011; Kase et al. 2016). Methods that provide little guidance and few evaluation criteria inevitably rely more on expert judgment (Rudén et al. 2017).

The Klimisch approach was one of the first methods for structuring data evaluations in the 1990s and has since been accepted practice in regulatory settings, including REACH (ECHA 2011). The Klimisch approach focuses mainly on evaluating reliability of data and studies are assigned a reliability category (Klimisch category) 1–4 corresponding to (1) “Reliable without restriction”, (2) “Reliable with restriction”, (3) “Not reliable” and (4) “Not assignable” (Klimisch et al. 1997). Registrants report their reliability assessment for each study within one of the four reliability categories together with a justification for the choice of category in the field “Rationale for reliability incl. deficiencies” (ECHA 2013).

Although the Klimisch method is commonly used, it has been criticized for lacking criteria and guidance for evaluating data, as well as for giving more weight to studies conducted according to Good Laboratory Practice (GLP) and standardized test guidelines (Ågerstrand et al. 2011; Kase et al. 2016; Ingre-Khans et al. 2018, manuscript). Thus, there is a risk that studies stated to be compliant with GLP and/or test guideline studies are considered reliable by default without an evaluation of studies for their inherent scientific quality. There is also a risk that reliable and relevant studies not performed according to GLP and test guidelines are assigned less weight or even excluded from the assessment (Molander et al. 2015), despite the requirement under REACH to include all relevant information (Art 12(1), REACH).

The research project Science in Risk Assessment and Policy (SciRAP) was initiated with the main purpose of ensuring that (eco)toxicity data used for chemical hazard and risk assessments are evaluated based on their inherent scientific quality and relevance rather than GLP and test guideline compliance, as in the Klimisch method. Another important aim was to increase structure and transparency in the evaluation process. SciRAP provides specific criteria and guidance for evaluating study reliability and relevance, as well as a web-based tool to facilitate the application of the criteria and interpretation of the evaluation (SciRAP 2018). The aim of this study was to apply the SciRAP tool for evaluating reliability of studies submitted by registrants under REACH, and to develop principles for categorizing studies based on reliability, in order to explore how to improve structure and transparency in this process.

Materials and methods

Scope and data selection

In total, 20 in vivo studies and abstracts published in the literature were selected from registration dossiers in the ECHA database before 1 Sep 2015 (Table 1). The studies were selected according to the criteria listed below from a batch of 435 study summaries for 60 substances registered for the endpoint repeated dose toxicity (RDT). The 60 substances comprised 51 substances selected from a list of substances provided by the German Federal Institute for Risk Assessment (BfR) investigating data availability in REACH dossiers (BfR 2015), as well as another nine substances undergoing the REACH authorization and/or restriction processes. The selection of substances in the original data set has been described in detail elsewhere (Ingre-Khans et al. 2016). Data from 60 substances were considered a feasible and sufficient basis for carrying out case studies, and the endpoint RDT was selected since this was a standard information requirement for all the included substances.

Table 1. Peer-reviewed studies included in this investigation. Studies have been listed according to the assigned reliability category by the registrant: category 1 = Reliable without restriction, category 2 = Reliable with restriction, category 3 = Not reliable and category 4 = Not assignable.

Studies were selected based on the following criteria:

the bibliographic reference of the study summary was stated as “publication” in IUCLID to ensure access to the same information as the registrant for evaluating reliability;

the bibliographic reference could be identified, i.e. author, title and/or bibliographic source of the published paper was stated in the study summary, and accessible;

the study summary referred to only one bibliographic reference to avoid a joint reliability evaluation of several studies;

the published study was written in English; and

the adequacy of the study was reported in the study summary, which refers to whether the study is used as key, supporting, weight of evidence or disregarded study under REACH.
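The five selection criteria above amount to a simple filter over study summaries, which can be sketched in Python as follows. This is only an illustration: the `StudySummary` class and all of its field names are hypothetical and do not correspond to IUCLID field identifiers.

```python
from dataclasses import dataclass
from typing import List, Optional


# Hypothetical record of one study summary; field names are illustrative only.
@dataclass
class StudySummary:
    reference_type: str          # e.g. "publication" or "study report"
    references: List[str]        # bibliographic references cited in the summary
    reference_accessible: bool   # the cited paper could be identified and obtained
    language: str                # language of the published paper
    adequacy: Optional[str]      # "key", "supporting", "weight of evidence",
                                 # "disregarded", or None if not reported


def meets_inclusion_criteria(summary: StudySummary) -> bool:
    """Return True only if all five selection criteria are satisfied."""
    return (
        summary.reference_type == "publication"   # criterion 1
        and summary.reference_accessible          # criterion 2
        and len(summary.references) == 1          # criterion 3
        and summary.language == "English"         # criterion 4
        and summary.adequacy is not None          # criterion 5
    )
```

The sketch does not model the one exception made in this investigation, where a category 3 study without an assigned adequacy was included as a replacement.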

Studies were selected as far as possible from all four reliability categories and assigned adequacies, i.e. key, supporting, weight of evidence or disregarded study. According to ECHA guidance, studies that are flawed but show critical results should be assigned as disregarded studies (ECHA 2012b). All studies assigned the Klimisch categories 1 (n = 1), 3 (n = 3) and 4 (n = 2) by the registrants were included since few studies fulfilled the inclusion criteria in those categories. For one of the studies assigned to reliability category 3, the information reported in the study summary did not correspond to the bibliographic reference. This study was therefore replaced by another study assigned to category 3, but with no assigned adequacy.

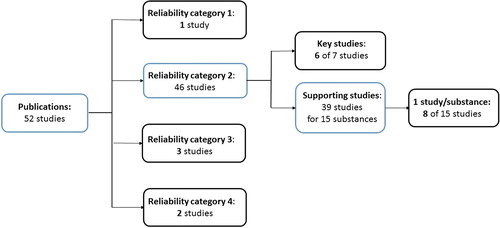

The number of studies in Klimisch category 2 was higher than in the other categories, comprising 46 studies in total, of which 7 were assigned as key studies and 39 as supporting studies (Figure 1). Only six of the seven key studies were included for analysis as two of the study summaries referred to the same bibliographic reference. The 39 studies assigned to reliability category 2 and used as supporting information represented 15 different substances, with as many as 22 studies reported for a single substance. A pool of 15 studies representing one study per substance was assembled to ensure variation in the data set. For substances where more than one study had been reported, one study was randomly selected for inclusion. From the resulting set of 15 studies assigned reliability category 2 and used as supporting information, eight studies were randomly selected for evaluation, bringing the total to 20 studies. Overall, 14 studies assigned Klimisch category 2 (6 key and 8 supporting studies) were selected (Figure 1).
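The two-stage random selection of supporting studies described above can be illustrated with a short Python sketch. The function name, the `(study_id, substance)` input format and the fixed seed are assumptions made for the example; the source does not state how the random draws were actually performed.

```python
import random
from collections import defaultdict


def select_supporting_studies(studies, n_final=8, seed=0):
    """Two-stage random selection.

    studies: iterable of (study_id, substance) pairs.
    Stage 1 draws one study at random per substance.
    Stage 2 draws n_final studies at random from that pool.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    by_substance = defaultdict(list)
    for study_id, substance in studies:
        by_substance[substance].append(study_id)
    # Stage 1: one study per substance (sorted for a deterministic order).
    pool = [rng.choice(ids) for _, ids in sorted(by_substance.items())]
    # Stage 2: sample without replacement from the per-substance pool.
    return rng.sample(pool, n_final)
```

Because stage 1 leaves exactly one study per substance, the final sample can never contain two studies for the same substance, which is what guards against the substance with 22 summaries dominating the data set.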

Figure 1. Overview of selected studies from each reliability category fulfilling the criteria: (1) the bibliographic reference was stated as “publication”, (2) the reference could be identified, (3) the study summary only referred to one bibliographic reference, (4) the published study was written in English and (5) the adequacy was stated. One of the three studies in reliability category 3 was exchanged as the information in the summary did not correspond to the study stated as reference. Instead, another study assigned reliability category 3, but with no assigned adequacy, was included. The numbers in bold indicate the number of included studies in each category (in total 20).

Tools used for data evaluation and synthesis

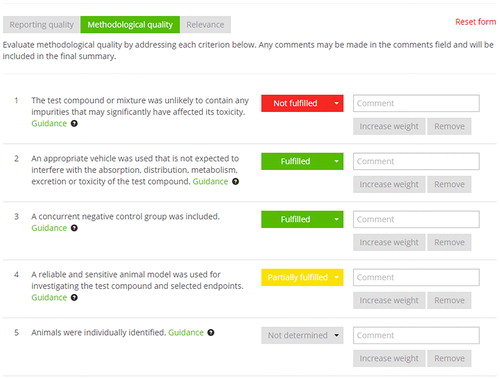

The freely accessible web-based SciRAP tool (www.scirap.org) was used for evaluating the reliability of the included studies. SciRAP provides criteria for evaluating reliability of in vivo studies, which are divided into evaluating reporting quality and methodological quality (SciRAP 2017a; Beronius et al. 2018). The criteria cover aspects regarding the test compound and controls, animal model and housing conditions, dosing and administration of the test compound as well as data collection and analysis. Each criterion can be judged as “fulfilled” (F), “partially fulfilled” (PF), “not fulfilled” (NF) or “not determined” (ND). Criteria for methodological quality may, for example, be set to “not determined” if information to evaluate that aspect of the study has not been sufficiently reported. Individual criteria may also be removed if considered not applicable for the specific case or type of study being evaluated. Since this investigation comprised 20 studies registered for the RDT endpoint for 15 different substances, certain criteria were not applicable to all studies. For example, the criterion “The allocation of animals to different tests and measurements was randomized” was removed for studies where the animals had been subjected to the same tests and measurements. The evaluation was conducted using the online tool on the website (Figure 2) and the evaluation result was exported to an Excel file (Beronius et al. 2018).

SciRAP provides guidance to support the evaluation of methodological quality (SciRAP 2017b). Also, OECD test guidelines (OECD 1981a, b; 1998a, b; 2002; 2008; 2009a, b, c, d) were used as guidance when evaluating methodological aspects of the studies. The OECD test guidelines No. 408, 412 and 452 (OECD 1998a, 2009b, c) were updated in 2017 and 2018 after the assessments were carried out and therefore the older versions of the test guidelines were used. Justifications for judging a criterion as fulfilled, partially fulfilled, not fulfilled or not determined in this investigation were recorded to ensure that criteria were consistently evaluated. The evaluation was based on the information available in the published studies, so that it rested on the same information that was accessible to the registrant. Information that could potentially be obtained by contacting the authors of the study was therefore not considered.

Figure 2. Screenshot of the online SciRAP tool, showing part of the form for evaluating methodological quality. The evaluation is recorded by selecting a pre-defined evaluation statement (fulfilled, partially fulfilled, not fulfilled or not determined) and justifying the assessment with a comment.

Some of the studies summarized several toxicity tests or included tests on more than one chemical. However, only the test which had been summarized and referred to in the dossier by the registrant was considered for the evaluation of reliability. All evaluations of the studies with SciRAP were performed by the same evaluator and checked by another evaluator.

The in vivo criteria in SciRAP are intended to be applicable for various regulatory settings in which studies are assigned reliability categories, as well as for other purposes, such as weight of evidence evaluations and systematic reviews. Consequently, there are currently no set principles for assigning studies into reliability categories based on the results from the reliability evaluation. Specific principles for categorization need to be established and described by the user on a case-by-case basis. Since the evaluation of data quality under REACH is based on assigning studies reliability categories, key criteria (Table 2) for categorizing studies were established for this particular investigation. The selection of key reporting and methodology criteria was based on expert judgment as well as cross-checking against criteria considered specifically important (“red criteria”) in the evaluation method ToxRTool (EC 2018). ToxRTool was developed for evaluating reliability of toxicological data used in regulatory contexts and categorizing studies according to Klimisch (Schneider et al. 2009). The principles for assigning studies into categories corresponding to Klimisch categories 1–4 have been summarized in Table 3.

Table 2. SciRAP reporting and methodology criteria considered key for evaluating reliability based on expert judgment and “red criteria” in ToxRTool.

Table 3. Principles for categorizing studies into reliability categories 1–4 based on the SciRAP evaluation.

Generally, data for specific endpoints are evaluated and recorded separately under each endpoint section in IUCLID. However, in tests, such as repeated dose toxicity where several endpoints have been investigated (e.g. body weight, organ and tissue weights, alterations in clinical chemistry, urinalysis, hematological parameters, and pathological changes in organs and tissues) the reliability of the various test methods and measurements of specific endpoints may vary. Nevertheless, each study in this investigation was assigned one reliability category, based on an overall evaluation of the reporting and methodological aspects of the study, since this is the procedure under REACH for RDT-studies. Consequently, the assigned reliability category for the whole study may not reflect the reliability of a specific measured endpoint in the test.

Results

Description of included studies

The 20 studies selected for this investigation originated from 15 registration dossiers, i.e. represented 15 unique substances (Table 1). The studies included 11 oral, 5 dermal and 4 inhalation toxicity studies and covered acute, sub-chronic as well as chronic toxicity test designs. Although only repeated dose tests should be registered under the RDT endpoint, the inhalation study by Detwiler-Okabayashi and Shaper (1996) was an acute test where mice were exposed for 180 min and then observed for a week. Most studies were performed in rodents (rats or mice), but four of the included studies were performed in non-rodents (rabbit and dog). The oldest study was published in 1942 and the most recent in 2006. Over half of the 20 studies were published in the 1980s (n = 7) and the 1990s (n = 5).

Registrants’ reliability evaluations

Of the 20 studies included in this investigation, one study (study 1) was assigned reliability category 1 by the registrant and used as supporting evidence. The categorization was justified with the statements “well documented” and “scientifically acceptable” (Table 4).

The majority of the studies (study 2–15) were assigned reliability category 2 by the registrant and used as either key (n = 6) or supporting (n = 8) evidence. Typical statements for justifying category 2 included “well-documented”, “scientifically accepted”, “meets basic scientific principles” or similar (n = 10 studies) (Table 4). GLP and standardized test guidelines were mentioned in the rationales by the registrant for six of these 14 studies. Three of these six studies reported that neither GLP nor test guidelines were followed. The fourth study was reported to predate GLP, and the last two studies were reported to be near or similar to the guideline study.

For four of the studies (study 6, 7, 13 and 14), the registrants reported deficiencies or limitations in reporting and/or methodology. For study 6 and 13, the deficiencies were specified by the registrants in the rationales. For study 7 and 14, the deficiencies were not further specified but expressed as “possibly with incomplete reporting or methodology, which do not affect the quality of the relevant results” and “limitations in design and/or reporting”.

Three studies (study 16–18) were assigned reliability category 3. Two studies were reported as disregarded studies and one had no assigned adequacy. For two of the studies (study 16 and 17), the reliability category was justified by either insufficient or unclear reporting, such as uncertainties regarding negative controls or no data on substance purity (Table 4). For study 16, the registrant also stated methodological deficiencies without providing any further details. In the third study (study 18), the registrant justified the category by stating “significant methodological deficiencies”, which were then further specified. However, some of the reported deficiencies related to reporting rather than methodology, such as no data on substance purity and experimental conditions.

Two of the 20 studies (study 19 and 20) were assigned reliability category 4 and used as supporting information in the dossier. The studies were assigned reliability category 4 due to insufficient information for evaluating the reliability of the study (Table 4). Study 19 was available as an abstract only.

Table 4. Reliability category and rationale for reliability as registered in the REACH registration dossier for the 20 selected studies and resulting reliability evaluation with the SciRAP tool.

Reliability evaluation of studies with SciRAP

The results from the evaluation of the reporting and methodological aspects of the studies with the SciRAP tool have been summarized in Tables 5 and 6, respectively. Information on housing conditions, individual identification of animals and the method for allocating animals to different treatments was lacking for many of the studies (Table 5). Insufficient reporting of study details generally resulted in some methodology criteria being set to not determined since they could not be evaluated (Table 6).

Table 5. SciRAP evaluation of reporting quality criteria for the 20 studies included in this investigation listed according to the assigned reliability category with the SciRAP tool. Each criterion was evaluated as either F = fulfilled, PF = partially fulfilled, NF = not fulfilled, ND = not determined or NA = not applicable.

Table 6. SciRAP evaluation of methodology quality criteria for the 20 studies included in this investigation listed according to the assigned reliability category with the SciRAP tool. Each criterion was evaluated as either F = fulfilled, PF = partially fulfilled, NF = not fulfilled, ND = not determined or NA = not applicable.

In certain cases, criteria were set to not determined because we lacked specific expertise to assess the criteria. For example, the reporting criterion for statistical methods was set to not determined for study 2 and 18 (Table 5). No statistics were used for analyzing the results in these studies and it could not be determined whether it would have been appropriate to use statistics in these cases. The methodology criterion concerning contamination of the test system that could affect test results was set to not determined for all studies, since evaluating this criterion would require specific expertise for all included substances (Table 6).

According to the scheme based on the SciRAP evaluation and the principles for assigning studies reliability categories used in this investigation (Tables 2 and 3), the included studies were categorized as follows: category 1 “Reliable without restriction” (n = 1), category 2 “Reliable with restriction” (n = 8), category 3 “Not reliable” (n = 5) and category 4 “Not assignable” (n = 6) (Table 4).

The assigned reliability categories based on the evaluation with SciRAP differed from the registrants’ categorization for 11 studies (Table 4). Only studies 14 and 18 were assigned a higher reliability category in the SciRAP evaluation compared to the registrants’ categorization. In contrast, 9 out of 20 studies (45%) were assigned a lower category with SciRAP. The studies were mostly changed from reliability category 2 to 3 (four studies) and from 2 to 4 (three studies). Nine of 20 studies (45%) were assigned the same reliability category with SciRAP as assigned by the registrants.
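The counts above can be checked with a short tally. The per-study categories in this Python sketch are an assumption: they are reconstructed from the prose of the Results section rather than taken from Table 4. The six studies that remained in category 2 are inferred by elimination, and study 16 is placed in category 3 as described under “Reliability category 3”. The function and variable names are illustrative.

```python
# Registrants' categories as described under "Registrants' reliability evaluations".
registrant = {1: 1, **{s: 2 for s in range(2, 16)}, 16: 3, 17: 3, 18: 3, 19: 4, 20: 4}

# SciRAP-based categories reconstructed from the text (assumption, see lead-in).
scirap = {
    14: 1,
    **{s: 2 for s in (1, 3, 7, 8, 11, 13, 15, 18)},
    **{s: 3 for s in (2, 5, 6, 9, 16)},
    **{s: 4 for s in (4, 10, 12, 17, 19, 20)},
}


def tally(before, after):
    """Count studies moved to a higher, lower or the same category.

    A smaller category number is treated as a higher reliability category,
    so a move from 3 to 4 counts as "lower", following the counts in the text.
    """
    counts = {"higher": 0, "lower": 0, "same": 0}
    for study, old in before.items():
        new = after[study]
        if new < old:
            counts["higher"] += 1
        elif new > old:
            counts["lower"] += 1
        else:
            counts["same"] += 1
    return counts


print(tally(registrant, scirap))  # {'higher': 2, 'lower': 9, 'same': 9}
```

With this reconstruction, the two higher and nine lower assignments add up to the 11 studies for which the categorization differed.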

Reliability category 1

Only one of the 20 studies (study 14) was assigned to reliability category 1 based on the SciRAP evaluation and the principles for categorization. This study fulfilled all key reporting and methodology criteria and was generally well reported and performed. The same study had been assigned to reliability category 2 by the registrant with the rationale that the study was performed according to “generally accepted principles”. The registrant also stated that the study had possible reporting or methodological deficiencies without further specifying which, but concluded that these deficiencies would “not affect the quality of the relevant results”.

Reliability category 2

In total, eight studies were assigned reliability category 2 according to the principles applied here. The key reporting and methodology criteria were evaluated either as fulfilled or partially fulfilled and no other major issues were identified that would make the studies unreliable or not possible to evaluate.

Six of the eight studies had also been assigned reliability category 2 by the registrant. For two studies (study 1 and 18), the categorization differed from that of the registrant. Study 1 had been assigned to reliability category 1 by the registrant with the rationale “well documented and scientifically acceptable”. The study was assigned reliability category 2 based on the SciRAP evaluation as the key methodological criterion regarding sufficient number of animals per dose group was judged as partially fulfilled (Table 6). The number of animals killed in each interim killing was reported as a range for certain tests and measurements. It was consequently unclear how many animals had in fact been used. In addition, the lower limit of the range suggested that fewer animals than the number recommended in the OECD guidelines (OECD 2009c) had been used in some of the tests.

Study 18 had been assigned to reliability category 3 by the registrant for the following reasons: no reporting on the purity of the substance or the experimental conditions, only four animals per dose group, administration via gavage only three times a week and a focus on adverse effects on the kidney but no other organs (Table 4). The study was assigned reliability category 2 with SciRAP since all key reporting and methodological quality criteria were judged as either fulfilled or partially fulfilled (Tables 5 and 6).

Reliability category 3

In total, five studies were assigned reliability category 3 with SciRAP as one of the key methodology criteria was assessed as not fulfilled. Only one of these studies (study 16) had also been assigned category 3 by the registrant. The registrant did not clarify the “methodological deficiencies” stated in the rationale other than specifying reporting issues (Table 4). The study was assigned reliability category 3 in the SciRAP evaluation because too few replicates per dose group were used.

Two inhalation studies (study 2 and 9) were assigned reliability category 3 because an inappropriate route of administration was used. The preferred inhalation exposure to substances prepared from liquids or having low vapor pressure is nose-only (OECD 2009b). However, in both cases, the animals were exposed whole-body. In study 9, the authors of the study repeated the experiment with nose-only exposure to obtain a more accurate result, since they suspected the substance to cause wetting of the fur and thus contribute to the observed toxicity by ingestion during preening (Ballantyne et al. 2006). The results from the nose-only test were also reported in the peer-reviewed study. However, the registrant neither discussed the appropriateness of the exposure mode in the study summary for the whole-body test nor included the results from the nose-only test in the registration dossier.

Study 5 was assigned reliability category 3 because an insufficient number of animals per dose group was used. For study 6, the exposure time was too short for the investigated effect to develop.

Reliability category 4

Six of the 20 studies were assigned reliability category 4, due to at least one of the critical reporting criteria being judged as not fulfilled. Criteria that were not fulfilled included number of animals per dose group, description of test and/or analytical methods, and statistical methods. Three of these studies (study 4, 10 and 12) had been assigned reliability category 2 by the registrant and were reported to be “well documented”. Two of the studies (study 16 and 17) had been assigned reliability category 3 by the registrant stating deficiencies related to reporting. In the rationale for study 16, the registrant also stated “methodological deficiencies” without further clarification.

Study 10 was assigned reliability category 4 as one of the key reporting criteria on statistics was evaluated as not fulfilled (Table 5). In the paper, it was stated that the original report could be accessed on request, which we did out of interest (ACC 2017). If the information on statistics in the original report had been taken into consideration, the criterion would have been evaluated as fulfilled. This would have changed the assigned reliability category to 2.

The registrant had assigned study 17 reliability category 3 because of unclear reporting on the use of concurrent positive and negative controls (Table 4). The criterion for negative control was evaluated as fulfilled in the SciRAP evaluation since the study reported that a negative control was used. However, the use of positive control was not described and thus the criterion for positive control was evaluated as not fulfilled. Since unclear reporting was a reason for the registrant to assign the study as not reliable, this is a case where the registrant should attempt to contact the authors of the study for clarification. Out of interest, we contacted one of the authors of the study who explained the use of negative and positive controls. If this information had been taken into consideration in the evaluation, the methodology quality criterion for positive control would have been judged as fulfilled (Roy 2017). The study was nevertheless assigned reliability category 4 with the SciRAP tool due to insufficient reporting of the number of animals per dose group, which could not be clarified by the author (Table 5).

For study 19, several of the key reporting criteria were evaluated as not fulfilled since it was only available as an abstract (Table 5). One of the key methodological criteria (sufficient number of animals per dose group) was also evaluated as not fulfilled (Table 6) and consequently the study should have been assigned reliability category 3 according to the principles for categorizing studies. However, considering that too little information was available to make an overall assessment of the study’s reliability, the study was assigned to reliability category 4.
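The categorization logic applied in the four subsections above can be summarized in a short Python sketch. It is an illustration under stated assumptions, not a reproduction of Table 3: the function name `assign_category` and the dictionary inputs are hypothetical, key criteria judged “not determined” are deliberately not handled, and the requirement that no other major issues be identified for categories 1 and 2 is left to the evaluator.

```python
from typing import Mapping

F, PF, NF = "F", "PF", "NF"  # fulfilled, partially fulfilled, not fulfilled


def assign_category(key_reporting: Mapping[str, str],
                    key_methodology: Mapping[str, str]) -> int:
    """Map judgments of key criteria to a reliability category 1-4.

    Each mapping goes from a criterion name to "F", "PF" or "NF".
    """
    judgments = list(key_reporting.values()) + list(key_methodology.values())
    if any(j not in (F, PF, NF) for j in judgments):
        # Assumption: how "not determined" key criteria affect the category
        # is not modelled in this sketch.
        raise ValueError("only F, PF and NF judgments are handled")
    if NF in key_methodology.values():
        return 3  # not reliable: a key methodology criterion is not fulfilled
    if NF in key_reporting.values():
        return 4  # not assignable: a key reporting criterion is not fulfilled
    if all(j == F for j in judgments):
        return 1  # reliable without restriction
    return 2      # reliable with restriction: key criteria are F or PF
```

Under these rules a study with unfulfilled key criteria of both kinds returns category 3. Assigning study 19 to category 4 was an expert-judgment override that the sketch does not attempt to encode.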

Discussion

The aim of this study was to examine how using the SciRAP tool and developing corresponding principles for assigning studies reliability categories could contribute to structure and transparency in the process of evaluating reliability of data under REACH. As previously shown, the current system in REACH lacks clear criteria and guidance for registrants to evaluate data as well as procedures for transparently reporting their evaluations (Ingre-Khans et al. 2018 manuscript). Moreover, little guidance is given on how to assign studies to reliability categories, except for relying on the contested criteria of assigning high reliability to GLP and/or test guideline studies. In contrast, using the SciRAP tool added structure and transparency to the evaluation process by providing (1) a set of clearly defined criteria, which ensures that important aspects regarding the validity of a study are considered for all studies, standard as well as nonstandard studies; (2) guidance on how to evaluate the criteria; (3) a color-coded recording of the evaluation of each criterion, which gives an overview of the study’s strengths and weaknesses; and (4) an output from the evaluation, which was used for assigning reliability categories.

Evaluation of study reliability

Registrants under REACH are required to evaluate reliability of data and report their evaluation in the study summary. However, there is currently no structured method under REACH for doing so. ECHA provides limited guidance for evaluating reliability of studies except for compliance with GLP and test guidelines. The guidance broadly states that reliability should be evaluated by using “formal criteria using international standards as references” and lists a few key points that should be considered including adequately describing the study and compliance with “generally accepted scientific standards” (ECHA 2011). If the study differs from recognized test methods or accepted scientific standards, the registrant must decide to what extent the study can be used and how. The guidance for reporting robust study summaries lists some further aspects that are important to report for evaluating adequacy of data, but does not state how to evaluate this other than referring to corresponding test guidelines (ECHA 2012b). This guidance also states that registrants should clearly state in their conclusion in the study summary whether specific “validity, quality or repeatability criteria” of the test method have been fulfilled. Whereas validity criteria are generally clearly stated in OECD guidelines for ecotoxicity studies (e.g. OECD 2012, 2013), this is not the case in guidance for toxicity studies. Thus, the guidance provided by ECHA does not support registrants in evaluating toxicity data in a structured and consistent way, in particular for nonstandard studies, and may contribute to overlooking important aspects.

Additionally, the resulting reliability evaluations under REACH are not transparently communicated. The evaluations are reported in IUCLID by assigning a reliability category 1–4 and providing a justification in the field “Rationale for reliability incl. deficiencies” (ECHA Citation2013). The field for justifying the reliability evaluation consists of a pick-list with standard phrases as well as a free-text field for providing further details. Standard phrases include broad statements such as “guideline study”, “guideline study with acceptable restrictions”, “study well documented, meets generally accepted scientific principles, acceptable for assessment” (OECD Citation2016). Thus, understanding the registrant’s underlying reason for judging a study as reliable or unreliable depends on how much detail the registrant has reported in the free-text field or elsewhere in the study summary. As seen in a previous study, the rationales do not necessarily bring clarity as to how reliability has been assessed by the registrant (Ingre-Khans et al. Citation2018 manuscript). For example, for studies that were assigned reliability category 2 by registrants, “reporting and/or methodological deficiencies” were commonly stated in the rationales without further specifying what type of deficiencies had been observed, as exemplified in the rationales for studies 7 and 14 ().

In contrast, the SciRAP tool provided a more structured and transparent method for evaluating the reliability of the studies included in this investigation. The detailed criteria for evaluating reliability of in vivo studies in SciRAP help to ensure that important aspects for evaluating the inherent scientific quality of the study are considered across all studies, irrespective of adherence to GLP and/or standardized test guidelines. This facilitates structured evaluation and integration of all available relevant data, including nonstandard data, as required under REACH. Clear criteria also increase the chances of identifying, as well as distinguishing between, reporting and methodological flaws in studies (Kase et al. Citation2016). For example, serious reporting and methodological flaws were identified for studies 4 and 5 that had not been commented upon by the registrant. No statistics had been used for most of the measured parameters and no description of variance had been reported in either of the studies. In addition, too few animals per sex and dose group had been used in study 5. This resulted in studies 4 and 5 being assigned reliability category 4 and 3, respectively. Despite these flaws, the studies had been assigned reliability category 2 by the registrant and stated to be well documented (although not according to GLP method and test guidelines). Thus, providing clear criteria will at least ensure that certain aspects have been considered in the evaluation. The guidance provided in SciRAP for interpreting and judging criteria for in vivo studies assists in evaluating and consistently applying the criteria. Guidance is also helpful for risk assessors who have less expertise and experience in evaluating data for risk assessment and provides support for making evaluations more consistent.

Furthermore, using a set of clear criteria for which the evaluation of each criterion is recorded (fulfilled, partially fulfilled and not fulfilled) and the possibility to comment and justify the evaluation of each criterion, transparently communicates how the risk assessor evaluates various aspects of the study. The output from the evaluation, as summarized in and , allows for easy comparison of strengths and weaknesses between studies. Such an overview can help registrants to judge which studies are eligible to use in the risk assessment and also be useful for identifying aspects where experts disagree (Beronius and Ågerstrand Citation2017). This would also be useful for transparently communicating registrants’ evaluations to regulatory authorities. Thus, the evaluations could become more structured and transparent by incorporating fields in the IUCLID template for study summaries that not only report the data from the original report but also show how the registrant has evaluated each aspect and a comment field for justifying the evaluation.
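To illustrate what such per-criterion recording could look like in practice, the following is a minimal sketch of a record structure with a rating and a justification for each criterion. It is not the SciRAP tool or an IUCLID template; the class name `CriterionEvaluation`, the helper functions, and the field names are all hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# The three ratings named in the text.
RATINGS = ("fulfilled", "partially fulfilled", "not fulfilled")


@dataclass
class CriterionEvaluation:
    """One assessor judgment for one criterion (hypothetical structure)."""
    criterion: str     # free-text name of the criterion
    aspect: str        # "reporting" or "methodology"
    rating: str        # one of RATINGS
    comment: str = ""  # assessor's justification for the rating

    def __post_init__(self):
        if self.rating not in RATINGS:
            raise ValueError(f"unknown rating: {self.rating!r}")


def overview(evaluations):
    """Count how many criteria received each rating, per aspect."""
    return Counter((e.aspect, e.rating) for e in evaluations)


def weaknesses(evaluations):
    """List every criterion that was not fully fulfilled, with its comment."""
    return [
        (e.criterion, e.rating, e.comment)
        for e in evaluations
        if e.rating != "fulfilled"
    ]
```

Keeping the comment next to each rating means that the overview and the list of weaknesses can both be traced back to the assessor's stated reasons.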

Assigning studies to reliability categories

The guidance developed by ECHA does not describe any systematic approaches for assigning studies a certain reliability category, except for assigning GLP and test guideline studies to category 1 (ECHA Citation2011). The practice of assigning GLP and test guideline studies high reliability has been criticized (Ågerstrand et al. Citation2011; Kase et al. Citation2016), and any other guidance for categorizing studies is vague. Consequently, it was not always transparent how or why the registrant had assigned the studies in this investigation a certain reliability category. For example, the rationale for the study assigned reliability category 1 (“well documented and scientifically acceptable”) did not essentially differ from the rationales for several studies assigned reliability category 2 (e.g. study 2, 9, 11 and 12, ). Moreover, methodological deficiencies were seemingly not distinguished from limitations in reporting. This was demonstrated for study 17, where the registrant assigned the study to reliability category 3 due to ambiguous reporting regarding concurrent positive and vehicle control as stated in the rationale for reliability. However, incomplete or ambiguous reporting does not imply poor design or conduct of the study as the issue was clarified by contacting one of the authors of the study (Roy Citation2017). An attempt to contact the authors of the study should generally be made if the missing information affects how the study is assessed and used in the risk assessment.

Since registrants under REACH are required to assign studies to the Klimisch reliability categories, principles for categorizing studies based on the output from the SciRAP evaluation were specifically developed. These principles were based on identifying key criteria that were considered critical, similar to the method for risk of bias evaluation proposed in the National Toxicology Program Office of Health Assessment and Translation (OHAT Citation2015) and the “red criteria” in ToxRTool (Schneider et al. Citation2009). Whereas the ToxRTool calculates a score and uses cutoff values for categorizing studies (Schneider et al. Citation2009), a similar approach to the OHAT method based on the SciRAP evaluation was used here. The SciRAP tool also calculates a numerical score for reporting quality and methodological quality, respectively. However, the scores were not used for this study, as they were not considered useful for categorization. A single numerical score loses too much information concerning the specific strengths and weaknesses of the studies and their impact on overall reliability. Importantly, the SciRAP scores are only comparable between studies for which the same criteria have been retained or removed (SciRAP Citation2017a; Beronius et al. Citation2018), which was not the case in this study.

The reliability categorization of studies based on the SciRAP evaluation differed from the registrants’ categorization for 11 of the included studies. In general, studies were assigned a lower reliability category (category 3 and 4) based on the SciRAP evaluation than what had been assigned by the registrants. The detailed criteria facilitated the identification of methodological as well as reporting flaws in the studies that the registrant had not commented on. To what extent the registrant had overlooked these aspects or simply evaluated these aspects differently is not known, since the rationale for categorization is often not clearly stated in IUCLID. Furthermore, by using different sets of criteria for evaluating reporting and methodological aspects, methodological flaws that make a study unreliable could be more easily distinguished from reporting that is insufficient for evaluating the reliability of the study. Generally, using a more systematic method for evaluating data seems to lead to stricter evaluations, as more detailed criteria help to identify flaws in the studies (Kase et al. Citation2016).

Using key criteria for categorization may, however, result in studies being categorized too strictly. For example, to distinguish between reliability category 1 and 2, it was suggested that all key reporting and methodology criteria should be fulfilled for a study to be assigned category 1. Accordingly, a study could be well documented and performed overall but, because it only partially fulfills one key criterion, be assigned to reliability category 2. Similarly, if a key methodology or reporting criterion is evaluated as not fulfilled, the study is assigned to reliability category 3 or 4, respectively. This makes it important not only how criteria are evaluated, particularly in borderline cases, but also which criteria are selected as key if an approach such as this is applied, which experts may not necessarily agree on. Critical aspects may differ for various studies depending on the type of substance, type of test and study design (Kase et al. Citation2016). Although this was accounted for by stating that non-key criteria should also be considered when assigning reliability category, we nevertheless assumed that the selected key criteria were equally critical for all studies in this investigation.
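The categorization principle described above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the SciRAP tool or the procedure as actually applied in this study: the names `Rating` and `assign_reliability_category` are hypothetical, non-key criteria (which should also be considered) are ignored, and the precedence given to a methodological flaw when both a key methodology and a key reporting criterion are not fulfilled is an assumption that the text does not specify.

```python
from enum import Enum


class Rating(Enum):
    FULFILLED = "fulfilled"
    PARTIALLY_FULFILLED = "partially fulfilled"
    NOT_FULFILLED = "not fulfilled"


def assign_reliability_category(key_reporting, key_methodology):
    """Map ratings of key criteria to a reliability category (1-4).

    key_reporting / key_methodology: lists of Rating values for the
    key reporting and key methodology criteria of one study.
    """
    key_reporting = list(key_reporting)
    key_methodology = list(key_methodology)
    if not key_reporting and not key_methodology:
        raise ValueError("at least one key criterion must be rated")

    # A key methodology criterion not fulfilled -> category 3.
    # ASSUMPTION: this check takes precedence over the reporting check.
    if Rating.NOT_FULFILLED in key_methodology:
        return 3
    # A key reporting criterion not fulfilled -> category 4.
    if Rating.NOT_FULFILLED in key_reporting:
        return 4
    # All key criteria fulfilled -> category 1.
    if all(r is Rating.FULFILLED for r in key_reporting + key_methodology):
        return 1
    # Otherwise at least one key criterion is only partially fulfilled.
    return 2
```

The sketch makes the strictness of the rule visible: a single partially fulfilled key criterion is enough to move an otherwise well-performed study from category 1 to category 2.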

In the SciRAP evaluation method for ecotoxicity studies, Criteria for Reporting and Evaluating ecotoxicity Data (CRED), the use of key criteria for categorization was not considered useful after conducting a ring test. Participants, in general, did not rely on the identified key criteria for categorization as they emphasized that key criteria needed to be decided on a case-by-case basis depending on the test design (Kase et al. Citation2016). Moreover, when participants did use the key criteria, they applied them very strictly despite explaining there could be potential exceptions (Moermond et al. Citation2016). Also, more experienced risk assessors seem to be more lenient in their evaluations than less experienced participants, who were stricter, as seen in workshops where participants from different sectors and with different levels of experience have evaluated the same studies (Ingre-Khans et al. Citation2018; Beronius et al. Citation2018).

Thus, when key criteria are used for categorizing studies, they should be carefully selected and their impact on the categorization of studies should be evaluated. It should be emphasized that the purpose of SciRAP is to promote use of all sufficiently relevant and reliable information for hazard and risk assessment. Developing criteria for categorizing studies that are clear and consistent and at the same time allow for flexibility and expert judgment is certainly challenging. Criteria risk becoming either very strict, resulting in assigning studies a low(er) reliability category, or very flexible, resulting in reduced ability to distinguish reliable studies from less reliable studies. Regardless of how a system for categorizing studies is designed, the reasons for assigning a study a certain category need to be transparent and any deviations from the set criteria should be fully explained.

Limitations to applying the SciRAP tool under REACH

The in vivo SciRAP criteria are currently easier to apply to oral toxicity studies than inhalation and dermal studies and should be further developed to be applicable to the evaluation of all exposure routes. For example, for inhalation studies, conditions in the exposure chamber need to be considered, such as chamber air flow, nominal and actual concentrations, and particle size distributions. Since there are no specific criteria for evaluating these aspects in the current version of SciRAP, conditions in the exposure chamber were considered in the reporting criterion regarding the administered dose and the methodology criterion on the route of administration.

The guidance for evaluating the methodology criteria provided in SciRAP could also be further refined. Developing guidance for evaluating the reporting criteria would also help in interpreting the criteria and would increase the likelihood that they are applied and evaluated consistently by different assessors.

Although a method that introduces a systematic and transparent approach for evaluating and categorizing data is used, this does not guarantee that the evaluations will be consistent (Schneider et al. Citation2009; Kase et al. Citation2016; Beronius and Ågerstrand Citation2017). For example, instructions may still not be followed, or mistakes can be made, relevance aspects may be evaluated rather than reliability, and there may be differences in how assessors interpret the application of criteria (Schneider et al. Citation2009; Beronius and Ågerstrand Citation2017). Also, implementing a structured method may improve consistency in how data are evaluated, but risk assessors may still come to different conclusions (Beronius et al. Citation2017).

Conclusions and recommendations

The current system for evaluating information under REACH could be improved to achieve more systematic and transparent hazard and risk assessments. Ensuring that data are uniformly evaluated is particularly important under REACH considering that registrants’ expertise and experience will inevitably vary. REACH registrations form the basis for regulatory authorities to identify chemicals of potential concern and take action. Consequently, the assessments need to be scientifically robust and transparent. As of May 2018, all chemicals that are currently on the European market at or above one tonne per year should be risk assessed and registered with ECHA. Nevertheless, we recommend that ECHA, in collaboration with OECD and other relevant agencies and stakeholders, develop or build on existing data evaluation frameworks, such as SciRAP, for implementation in IUCLID. This would benefit registrations of new chemicals as well as already existing registration dossiers when they are updated with new data. A more structured and transparent framework for evaluating and reporting data would also improve the robustness of evaluations in other chemicals legislations that use IUCLID for regulatory purposes, such as the Biocidal Products Regulation. We acknowledge that implementing a new system for evaluating data requires considerable resources in developing the method as well as providing adequate training for the users. However, the benefit of more systematic and transparent assessments may well outweigh the work required for introducing such a system. If implemented, the method should include the following aspects:

- Clear criteria and guidance for evaluating reliability and relevance of data for (eco)toxicity studies as well as other types of data required under REACH that do not require compliance with GLP and/or standardized test guidelines.

- A template for transparently reporting the evaluation of each criterion with a justification and visualization of the output.

- A function to ensure that insufficient reporting is distinguished from methodological flaws when assigning studies to reliability categories.

- Guidance for categorizing reliability and relevance evaluations while allowing for flexibility and the use of expert judgment.

- An approach for combining reliability and relevance to assign adequacy to studies as well as integrating different types of data in the hazard and risk assessment.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| BfR | German Federal Institute for Risk Assessment (Bundesinstitut für Risikobewertung) |
| DEHP | Di-EthylHexyl Phthalate |
| ECHA | European CHemicals Agency |
| EU RAR | European Union Risk Assessment Report |
| GLP | Good Laboratory Practice |
| IUCLID | International Uniform Chemical Information Database |
| OECD | Organization for Economic Co-operation and Development |
| RDT | Repeated Dose Toxicity |
| REACH | Registration, Evaluation, Authorization and restriction of Chemicals |
| SciRAP | Science in Risk Assessment and Policy |
| SI | |
| SIDS | Screening Information Dataset |

Disclosure statement

Christina Rudén was a member of ECHA’s management board between 2012 and 2017. However, ECHA has not been involved in funding or in any other part of this research project.

Marlene Ågerstrand, Anna Beronius and Christina Rudén have developed the web-based reporting and evaluation resource SciRAP in a collaboration between the Department of Environmental Science and Analytical Chemistry (ACES), Stockholm University, and the Institute of Environmental Medicine (IMM), Karolinska Institutet. The specific method for evaluating in vivo studies has been developed by Anna Beronius and Christina Rudén. SciRAP is available free of charge and does not generate any revenue.

Notes

1 Art 12(1), REACH: “The technical dossier referred to in Article 10(a) shall include under points (vi) and (vii) of that provision all physicochemical, toxicological and ecotoxicological information that is relevant and available to the registrant […].” (www.eur-lex.europa.eu).

References

- ACC (American Chemistry Council). 2017. Personal Communication. February 2, 2017

- Ågerstrand M, Breitholtz M, and Rudén C. 2011. Comparison of four different methods for reliability evaluation of ecotoxicity data: a case study of non-standard test data used in environmental risk assessments of pharmaceutical substances. Environ Sci Europe 23:17

- Ballantyne B, Snellings WM, and Norris JC. 2006. Respiratory peripheral chemosensory irritation, acute and repeated exposure toxicity studies with aerosols of triethylene glycol. J Appl Toxicol 26:387–96

- Barnes JM and Stoner HB. 1958. Toxic properties of some dialkyl and trialkyl tin salts. Brit J Industr Med 15:15–22

- Beronius A and Ågerstrand M. 2017. Making the most of expert judgment in hazard and risk assessment of chemicals. Toxicol Res 6:571–7

- Beronius A, Ågerstrand M, Rudén C, et al. 2017. SciRAP workshop report: Bridging the gap between academic research and chemicals regulation – the SciRAP tool for evaluating toxicity and ecotoxicity data for risk assessment of chemicals. Nordic Working Papers. Nordic Council of Ministers

- Beronius A, Molander L, Zilliacus J, et al. 2018. Testing and refining the Science in Risk Assessment and Policy (SciRAP) web‐based platform for evaluating the reliability and relevance of in vivo toxicity studies. J Appl Toxicol. DOI: 10.1002/jat.3648

- BfR (Bundesinstitut für Risikobewertung). 2015. REACH Compliance: data availability of REACH registrations. Part 1: Screening of chemicals >1000 tpa. Texte 43/2015. Environmental Research of the Federal Ministry for the Environment, Nature Conservation, Building and Nuclear Safety.

- Colerangle JB and Roy D. 1996. Exposure of environmental estrogenic compound nonylphenol to Noble rats alters cell-cycle kinetics in the mammary gland. Endocrine 4:115–22

- Condie LW, Hill JR, and Borzelleca JF. 1988. Oral toxicology studies with xylene isomers and mixed xylenes. Drug Chem Toxicol 11:329–54

- Cranch AG, Smyth HF, and Carpenter CP. 1942. External contact with monoethyl ether of diethylene glycol (carbitol solvent). Arch Dermatol Syph 45:553–9

- Crocker JFS, Safe SH, and Acott P. 1988. Effects of chronic phthalate exposure on the kidney. J Toxicol Environ Health 23:433–44

- Detwiler-Okabayashi KA and Schaper MM. 1996. Respiratory effects of a synthetic metalworking fluid and its components. Arch Toxicol 70:195–201

- EC (European Commission). 2018. European Commission > EU Science Hub > EURL ECVAM > About EURL ECVAM > Archive of Publications > ToxRTool: ToxRTool – Toxicological data Reliability Assessment Tool. https://eurl-ecvam.jrc.ec.europa.eu/about-ecvam/archive-publications/toxrtool. February 13, 2018

- ECHA (European Chemicals Agency). 2011. Guidance on Information Requirements and Chemical Safety Assessment. Chapter R.4: Evaluation of Available Information. Version 1.1. https://echa.europa.eu/guidance-documents/guidance-on-reach

- ECHA (European Chemicals Agency). 2012a. Guidance on Registration. Guidance for the Implementation of REACH. Version 2.0. https://echa.europa.eu/guidance-documents/guidance-on-reach

- ECHA (European Chemicals Agency). 2012b. Practical Guide 3: How to Report Robust Study Summaries. Version 2.0. https://echa.europa.eu/practical-guides

- ECHA (European Chemicals Agency). 2013. IUCLID 5. End-user Manual. https://iuclid6.echa.europa.eu/archive-iuclid-5

- ECHA (European Chemicals Agency). 2018. REACH Registration Database. https://echa.europa.eu/sv/information-on-chemicals/registered-substances. Accessed: June 7, 2018

- Fairhurst S, Knight R, Marrs TC, et al. 1989. Percutaneous toxicity of ethylene-glycol monomethyl ether and of dipropylene glycol monomethyl ether in the rat. Toxicology 57:209–15

- Gaunt IF, Colley J, Grasso P, et al. 1968. Acute and short-term toxicity studies on di-n-butyltin dichloride in rats. Food Cosmet Toxicol 6:599–608

- Goldberg ME, Johnson HE, Pozzani UC, et al. 1964. Effect of repeated inhalation of vapors of industrial solvents on animal behavior. I. Evaluation of nine solvent vapors on pole-climb performance in rats. Am Indust Hygiene Assoc J 25:369–75

- Hoffmann S, de Vries RBM, Stephens ML, et al. 2017. A primer on systematic reviews in toxicology. Arch Toxicol 91:2551–75

- Ingre-Khans E, Ågerstrand M, Beronius A, et al. 2016. Transparency of chemical risk assessment data under REACH. Environ Sci-Process Impacts 18:1508–18

- Ingre-Khans E, Ågerstrand M, Beronius A, Rudén C. 2018. Reliability and relevance evaluations of REACH data. Unpublished manuscript.

- Innis JD and Nixon GA. 1988. No evidence of toxicity associated with subchronic dermal exposure of rabbits to butoxypropanol. Toxicologist 8:213

- Kase R, Korkaric M, Werner I, et al. 2016. Criteria for Reporting and Evaluating ecotoxicity Data (CRED): comparison and perception of the Klimisch and CRED methods for evaluating reliability and relevance of ecotoxicity studies. Environ Sci Europe 28:7

- Klimisch HJ, Andreae M, and Tillmann U. 1997. A systematic approach for evaluating the quality of experimental toxicological and ecotoxicological data. Regul Toxicol Pharmacol 25:1–5

- Lake BG, Gray TJB, Foster JR, et al. 1983. Comparative studies on di-(2-ethylhexyl) phthalate-induced hepatic peroxisome proliferation in the rat and hamster. Toxicol Appl Pharmacol 72:46–60

- Leber AP, Scott RC, Hodge MCE, et al. 1990. Triethylene glycol ethers: evaluations of in vitro absorption through human epidermis, 21-day dermal toxicity in rabbits, and a developmental toxicity screen in rats. J Am College Toxicol 9:507–15

- Loeser E and Lorke D. 1976a. Semichronic oral toxicity of cadmium. I. Studies on rats. Toxicology 7:215–24

- Loeser E and Lorke D. 1976b. Semichronic oral toxicity of cadmium. 2. Studies on dogs. Toxicology 7:225–32

- Maltoni C, Lefemine G, and Cotti G. 1986. Experimental research on trichloroethylene carcinogenesis. In: Maltoni C and Mehlman M (eds), Archives of Research on Industrial Carcinogenesis, Vol 5, pp 1–393. Princeton Scientific, Princeton, NJ

- Moermond CTA, Kase R, Korkaric M, et al. 2016. CRED: criteria for reporting and evaluating ecotoxicity data. Environ Toxicol Chem 35:1297–309

- Molander L, Ågerstrand M, Beronius A, et al. 2015. Science in Risk Assessment and Policy (SciRAP) – an online resource for evaluating and reporting in vivo (eco)toxicity studies. Hum Ecol Risk Assess 21:753–62

- OECD (Organization for Economic Co-operation and Development). 1981a. OECD Test Guideline No. 410. Repeated Dose Dermal Toxicity: 21/28-Day Study. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 1981b. OECD Test Guideline No. 411. Subchronic Dermal Toxicity: 90-Day Study. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 1998a. OECD Test Guideline No. 408. Repeated Dose 90-Day Oral Toxicity Study in Rodents. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 1998b. OECD Test Guideline No. 409. Repeated Dose 90-Day Oral Toxicity Study in Non-Rodents. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2002. OECD Series on Testing and Assessment Number 32 and OECD Series on Pesticides Number 10. Guidance Notes for Analysis and Evaluation of Repeat-Dose-Toxicity Studies. ENV/JM/MONO(2000)18. http://www.oecd.org/chemicalsafety/pesticides-biocides/pesticides-publications-chronological-order.htm

- OECD (Organization for Economic Co-operation and Development). 2008. OECD Test Guideline No. 407. Repeated Dose 28-Day Oral Toxicity Study in Rodents. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2009a. OECD Test Guideline No. 403. Acute Inhalation Toxicity. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2009b. OECD Test Guideline No. 412. Subacute Inhalation Toxicity: 28-Day Study. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2009c. OECD Test Guideline No. 452. Chronic Toxicity Studies. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2009d. Series on Testing and Assessment. Number 39. Guidance Document on Acute Inhalation Toxicity Testing. ENV/JM/MONO(2009)28. http://www.oecd.org/chemicalsafety/testing/seriesontestingandassessmenttestingforhumanhealth.htm

- OECD (Organization for Economic Co-operation and Development). 2012. OECD Test Guideline No. 211. Daphnia magna Reproduction Test. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2013. OECD Test Guideline No. 236. Fish Embryo Acute Toxicity (FET) Test. http://www.oecd.org/chemicalsafety/testing/oecdguidelinesforthetestingofchemicals.htm

- OECD (Organization for Economic Co-operation and Development). 2016. OECD Template #67: Repeated dose Toxicity: Oral (Version 5.17-April 2016). https://www.oecd.org/ehs/templates/

- OHAT (Office of Health Assessment and Translation). 2015. Handbook for Conducting a Literature-Based Health Assessment Using OHAT Approach for Systematic Review and Evidence Integration. Office of Health Assessment and Translation (OHAT). Division of the National Toxicology Program. National Institute of Environmental Health Sciences

- Penninks AH and Seinen W. 1982. Comparative toxicity of alkyltin and estertin stabilizers. Food Chem Toxicol 20:909–16

- REACH. 2006. Regulation (EC) No 1907/2006 of the European Parliament and of the Council of 18 Dec 2006 concerning the Registration, Evaluation, Authorisation and Restriction of Chemicals (REACH), establishing a European Chemicals Agency, amending Directive 1999/45/EC and repealing Council Regulation (EEC) No 793/93 and Commission Regulation (EC) No 1488/94 as well as Council Directive 76/769/EEC and Commission Directives 91/155/EEC, 93/67/EEC, 93/105/EC and 2000/21/EC, 2006 OJ L 396/1

- Roth N and Ciffroy P. 2016. A critical review of frameworks used for evaluating reliability and relevance of (eco)toxicity data: perspectives for an integrated eco-human decision-making framework. Environ Int 95:16–29

- Roy D. 2017. Personal communication. September 19, 2017

- Rudén C. 2002. From Data to Decision. A Case Study of Controversies in Cancer Risk Assessment. Karolinska Institute, Karolinska University Press, Stockholm, Sweden

- Rudén C, Adams J, Ågerstrand M, et al. 2017. Assessing the relevance of ecotoxicological studies for regulatory decision making. Integr Environ Assess Manag 13:652–63

- Samuel GO, Hoffmann S, Wright RA, et al. 2016. Guidance on assessing the methodological and reporting quality of toxicologically relevant studies: a scoping review. Environ Int 92–93:630–46

- SCENIHR (Scientific Committee on Emerging and Newly Identified Health Risks). 2012. Memorandum on the use of the scientific literature for human health risk assessment purposes – weighing of evidence and expression of uncertainty

- Schneider K, Schwarz M, Burkholder I, et al. 2009. "ToxRTool", a new tool to assess the reliability of toxicological data. Toxicol Lett 189:138–44

- SciRAP (Science in Risk Assessment and Policy). 2017a. Evaluation of in vivo toxicity studies. http://www.scirap.org/Page/Index/a0130706-adce-45e0-83aa-64516c855fda/evaluate-reliability-relevance. October 31, 2017

- SciRAP (Science in Risk Assessment and Policy). 2017b. SciRAP criteria and guidance for assessing methodological quality of in vivo toxicity studies. http://www.scirap.org/Upload/Documents/SciRAP%20critera%20for%20methodologial%20quality%20-%20with%20guidance_ver170428.pdf. October 31, 2017

- SciRAP (Science in Risk Assessment and Policy). 2018. Development of SciRAP. http://www.scirap.org/Page/Index/ef39c65b-815d-4ba9-8e63-e85748e720ae/development-of-scirap. March 21, 2018

- van der Ven LTM, Verhoef A, van de Kuil T, et al. 2006. A 28-day oral dose toxicity study enhanced to detect endocrine effects of hexabromocyclododecane in Wistar rats. Toxicol Sci 94:281–92

- Vandenberg LN, Ågerstrand M, Beronius A, et al. 2016. A proposed framework for the systematic review and integrated assessment (SYRINA) of endocrine disrupting chemicals. Environ Health 15:1–19

- Wandall B. 2004. Values in science and risk assessment. Toxicol Lett 152:265–72

- Wandall B, Hansson SO, and Rudén C. 2007. Bias in toxicology. Arch Toxicol 81:605–17

- Whaley P, Halsall C, Ågerstrand M, et al. 2016. Implementing systematic review techniques in chemical risk assessment: challenges, opportunities and recommendations. Environ Int 92:556–64

- Whelton BD, Peterson DP, Moretti ES, et al. 1997. Skeletal changes in multiparous, nulliparous and ovariectomized mice fed either a nutrient-sufficient or -deficient diet containing cadmium. Toxicology 119:103–21

- WHO/UNEP. 2013. State of the Science of Endocrine Disrupting Chemicals – 2012. http://www.who.int/ceh/publications/endocrine/en/

- Zissu D. 1995. Histopathological changes in the respiratory tract of mice exposed to ten families of airborne chemicals. J Appl Toxicol 15:207–13