ABSTRACT

Background: Ensuring that learners acquire diagnostic competence in a timely fashion is critical to providing high-quality and safe patient care. Resident trainees typically gain experience by undertaking repetitive clinical encounters and receiving feedback from supervising faculty. By critically engaging with the diagnostic process, learners encapsulate medical knowledge into discrete memories that can be recalled and refined in subsequent clinical encounters. In the setting of exponentially increasing medical complexity and current duty hour limitations, the opportunities for successful practice in the clinical arena have become limited. Novel educational methods are needed to bridge the gap from novice to expert diagnostician more efficiently.

Objective: Using a conceptual framework that incorporates deliberate practice, script theory, and learning curves, we developed an educational module prototype to coach novice learners to formulate organized knowledge (i.e. a repertoire of illness scripts) in an accelerated fashion, thereby simulating the ideal experiential learning of a clinical rotation.

Design: We developed the Diagnostic Expertise Acceleration Module (DEAM), a web-based module for learning illness scripts of diseases causing pediatric respiratory distress. For each case, the learner selects a diagnosis, receives structured feedback, creates an illness script, and then receives an expert script for comparison.

Results: We validated the DEAM with seven experts, seven experienced learners and five novice learners. The module data generated meaningful learning curves of diagnostic accuracy. Case performance analysis and self-reported feedback demonstrated that the module improved a learner’s ability to diagnose respiratory distress and create high-quality illness scripts.

Conclusions: The DEAM allowed novice learners to engage in deliberate practice to diagnose clinical problems without a clinical encounter. The module generated learning curves to visually assess progress towards expertise. Learners acquired organized knowledge through formulation of a comprehensive list of illness scripts.

Background

How to bridge the chasm from novice to expert diagnostician safely and efficiently has always been the fundamental challenge of medical education. Traditionally, resident trainees have engaged in experiential learning by participating in repetitive clinical encounters with subsequent feedback from supervising faculty. Ideally, this experiential learning should allow the learner to engage critically in the diagnostic process and establish relationships that encapsulate medical knowledge into accessible memories within an individual’s mind. This form of organized knowledge with deep meaning is what distinguishes experts from novices[Citation1]. In the setting of exponentially increasing medical complexity, a public outcry to minimize diagnostic errors while maintaining timely patient care[Citation2], and current duty hour limitations, the opportunities to successfully practice diagnostic skills in the clinical setting (i.e. experiential learning) have become limited. For example, at our institution, in response to the duty hour changes and logistics related to clinical service complexity, our intern rotation in the ICU has lost ~25–33% of the time available for clinical learning. The lack of continuity of care created by shift work amplifies this problem by fragmenting the feedback loop inherent to the diagnostic process. This fragmentation creates a loss of opportunity which further limits the possibility of learning[Citation2]. In order to bridge this chasm more efficiently, novel modes of learning must be implemented to augment traditional educational strategies.

A considerable amount of research in diagnostic thinking has shown that the organization and availability of medical knowledge within clinicians' memories are what make experts superior to novice diagnosticians [Citation3,Citation4]. Many models or constructs have been studied to understand how knowledge is organized and how this organized knowledge interacts with a clinician's diagnostic thinking [Citation5–Citation7]. The use of illness scripts as a framework to help learners organize medical knowledge is among the most widely adopted approaches for successfully teaching diagnostic reasoning [Citation8–Citation12].

We aimed to develop an educational module prototype to coach novice learners to formulate organized knowledge (i.e. a repertoire of illness scripts) in an accelerated fashion, thereby simulating the ideal experiential learning of a clinical rotation.

Methods

Conceptual framework

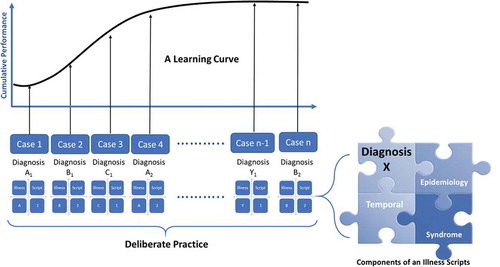

To represent the cognitive activity of organizing knowledge, we developed a conceptual framework (Figure 1) that incorporated deliberate practice, script theory, and learning curves to accelerate learning.

Figure 1. Conceptual Framework utilizing deliberate practice of diagnosing cases and creating illness scripts to accelerate learning as visually represented by a learning curve.

Successful deliberate practice is effortful, repetitive training of key skills with immediate and informative feedback from an expert instructor [Citation13]. Illness scripts are cognitive structures, based on script theory, that encapsulate organized and accessible clinical knowledge [Citation14]. An illness script contains three major components: epidemiology (age, underlying characteristics or health conditions), temporal relationship (onset, duration, progression), and syndrome (key signs/symptoms) [Citation15,Citation16]. Importantly, an illness script must be constructed by the individual learner and cannot be learned by memorization from an external source [Citation17]. Learning curves are graphical relationships between effort (e.g. number of practice sessions) and learning achievement (e.g. score, pass rate) and can be used to quantify and analyze the effort, rate, direction, and maximal potential of learning [Citation18]. These curves have been used to represent deliberate practice in clinical contexts [Citation19].

Module and case development

Using the proposed conceptual framework to guide the design, we developed the Diagnostic Expertise Acceleration Module (DEAM) prototype, using respiratory distress cases in our Progressive Care Unit (PCU) as an exemplar. The DEAM is a computer-assisted, case-based learning module. The PCU is a 36-bed intermediate care (step-down ICU) unit and is considered the most challenging rotation for our interns given the breadth and complexity of its patient population. In collaboration with a panel of five critical care experts, we identified the most common respiratory-based admission diagnoses to our PCU. We initially divided these diagnoses into broad categories based on a traditional 'systems-based' approach (respiratory, cardiac, neurologic, toxic/metabolic, etc.). Believing this system to be too simplistic and too similar to other educational approaches, we developed a 'physiology-based' system that grouped illnesses by their primary respiratory signs. The major categories were wheezing, stridor, crackles, tachypnea/bradypnea with normal breath sounds, and absent breath sounds. We believed this added layer of organization would help learners establish the patterns of the illness scripts and would more closely mimic expert thinking. Ultimately, we derived a consensus total of 35 illness scripts, of which 29 were included in the final module (Table 1). For example, the expert illness script for laryngomalacia is epidemiology: infant; temporal: intermittent but recurrent; syndrome: inspiratory stridor, exacerbated by crying or agitation, improves with prone positioning.

Table 1. Diagnoses resulting in respiratory distress included as illness scripts in the DEAM. Number in parentheses indicates number of cases included in the final module.
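The three-component structure of an illness script can be sketched as a simple record type. The following is a hypothetical Python representation, not the DEAM's data model (which is not described); the class name `IllnessScript`, its `render` method, and the `category` value are assumptions, with laryngomalacia placed in the stridor group only because inspiratory stridor is its key sign. It encodes the expert laryngomalacia script quoted above.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class IllnessScript:
    """Hypothetical record for one illness script (not the DEAM's actual schema)."""
    diagnosis: str
    category: str             # assumed primary-respiratory-sign group, e.g. "stridor"
    epidemiology: str         # E: age, underlying characteristics or conditions
    temporal: str             # T: onset, duration, progression
    syndrome: Tuple[str, ...]  # S: key signs/symptoms, one entry per finding

    def render(self) -> str:
        """Format the script in the E/T/S shorthand used for learner entries."""
        return (f"{self.diagnosis}: E: {self.epidemiology}; "
                f"T: {self.temporal}; S: {', '.join(self.syndrome)}")


# The expert laryngomalacia script from the text; the category is an assumption.
LARYNGOMALACIA = IllnessScript(
    diagnosis="Laryngomalacia",
    category="stridor",
    epidemiology="infant",
    temporal="intermittent but recurrent",
    syndrome=(
        "inspiratory stridor",
        "exacerbated by crying or agitation",
        "improves with prone positioning",
    ),
)

print(LARYNGOMALACIA.render())
```

Storing the syndrome as a tuple of discrete findings, rather than a single string, would make a finding-by-finding comparison with a learner's entry straightforward, although the module as described compares scripts only by showing the expert version to the learner.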

A Monte Carlo simulation was used to estimate the number of cases required to demonstrate an acceptable level of performance using cumulative sum (CUSUM) methods [Citation20]. About 90% of 10,000 simulated trials reached an acceptable level of performance within 50 cases, assuming an acceptable failure rate ≤5%, an unacceptable failure rate ≥30%, a 10% decrease in the odds of failure with each case, and type I and type II error rates of 10%. While there are no established standards for these statistical measures, these assumptions target an educational intervention in which the average learner answers correctly 70–95% of the time and learns conservatively, by ~10% with each question. The expert panel then authored 50 cases for the module, each including a short history, physical exam, and diagnostic data, and specifically designed each case to include the essential aspects of the illness scripts. The panel intended each case to be of equivalent difficulty.
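The simulation described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the common Bernoulli CUSUM built from Wald's sequential probability ratio test, in which each correct answer lowers the score by s and each incorrect answer raises it by 1 - s, and it declares acceptable performance when the score first falls to the lower decision limit h0. The starting failure rate of 30% (`p_start`), the seed, and all function names are assumptions; the text does not state the starting rate or the exact CUSUM variant, so the printed proportion need not match the reported ~90%.

```python
import math
import random


def cusum_parameters(p0, p1, alpha, beta):
    """Return (s, h0) for an SPRT-based Bernoulli CUSUM.

    p0: acceptable failure rate; p1: unacceptable failure rate;
    alpha, beta: type I and type II error rates.
    A correct answer lowers the score by s; an error raises it by 1 - s.
    """
    P = math.log(p1 / p0)
    Q = math.log((1 - p0) / (1 - p1))
    s = Q / (P + Q)
    h0 = -math.log((1 - alpha) / beta) / (P + Q)  # lower limit: acceptable performance
    return s, h0


def cases_to_acceptable(failures, s, h0):
    """Return the 1-based case at which the score first reaches h0, or None."""
    score = 0.0
    for case, failed in enumerate(failures, start=1):
        score += (1 - s) if failed else -s
        if score <= h0:
            return case
    return None


def simulate(n_trials=10_000, n_cases=50, p_start=0.30, odds_ratio=0.90,
             p0=0.05, p1=0.30, alpha=0.10, beta=0.10, seed=1):
    """Fraction of simulated learners whose CUSUM signals acceptable
    performance within n_cases. The odds of failure are multiplied by
    odds_ratio after every case (0.90 = a 10% decrease); p_start is an
    assumed initial failure rate, not a reported value."""
    s, h0 = cusum_parameters(p0, p1, alpha, beta)
    odds0 = p_start / (1 - p_start)
    p_fail = [o / (1 + o) for o in (odds0 * odds_ratio ** k for k in range(n_cases))]
    rng = random.Random(seed)
    reached = 0
    for _ in range(n_trials):
        failures = [rng.random() < p for p in p_fail]
        if cases_to_acceptable(failures, s, h0) is not None:
            reached += 1
    return reached / n_trials


if __name__ == "__main__":
    print(f"Proportion reaching acceptable performance: {simulate():.2f}")
```

With the stated rates (p0 = 0.05, p1 = 0.30, alpha = beta = 0.10) this parameterization gives s ≈ 0.146 and h0 ≈ -1.05, so a learner starting from a score of zero needs at least 8 consecutive correct answers before the CUSUM can signal acceptable performance.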

Initial module revision

The module was initially tested by four individuals (2 attendings, 1 fellow, 1 nurse practitioner) using Select Survey® software. This initial test resulted in three major revisions. First, the module was transitioned to an external website with a Moodle®-based software platform to allow learners to access it from any computer. Second, the number of answer choices from which the learner selected the diagnosis was reduced from 15–17 to 10 in the final module to eliminate redundancy and reduce distraction; for example, heart failure, myocarditis, and congenital heart disease no longer all appeared as answer choices. Third, the illness scripts were refined to eliminate ambiguity: for example, community-acquired pneumonia was changed to pneumonia, and the scripts for vascular ring (too rare and lacking distinctive history or physical exam findings) and cystic fibrosis (too specific to be diagnosed within a case) were removed.

Validation with experts, experienced and novice learners

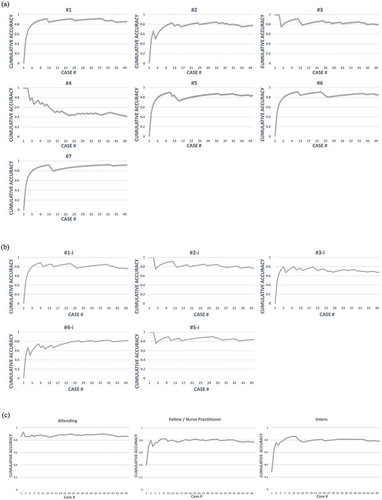

To assess our module prototype, we sought to sample the continuum of experience: experts, experienced learners, and novices. The experts were critical care attendings, and the experienced learners were critical care fellows and nurse practitioners. The novices were pediatric interns at the start of their residency. All participants performed the same four steps for the deliberate practice of making a diagnosis and formulating an illness script. Each participant 1) read a scenario and diagnosed the disease process from a selection of answers, 2) received the correct answer without further feedback or explanation, 3) created an illness script, and 4) received a consensus expert illness script for comparison (see Appendix A for an example case). Using the answers from the first step, cumulative percent accuracy was plotted against the number of cases completed to generate individual and group learning curves (Figure 2), which provided feedback on each individual's progressive learning and performance. The Institutional Review Board of Baylor College of Medicine approved this project (H-35103).
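The learning-curve computation described above, cumulative percent accuracy after each case, can be sketched in a few lines. This is an illustrative Python sketch, not the module's Moodle-based implementation; the function names are hypothetical, the group curve is assumed to be the case-by-case mean of individual curves, and the sample answers are invented.

```python
from statistics import mean


def cumulative_accuracy(correct):
    """Percent correct after each case, given one bool per case in module order."""
    curve = []
    n_correct = 0
    for n_cases, is_correct in enumerate(correct, start=1):
        n_correct += int(is_correct)
        curve.append(100.0 * n_correct / n_cases)
    return curve


def group_curve(curves):
    """Case-by-case mean of individual cumulative-accuracy curves.

    Curves are truncated to the shortest one (an assumption; the text does not
    say how learners with different numbers of completed cases were pooled).
    """
    length = min(len(curve) for curve in curves)
    return [mean(curve[i] for curve in curves) for i in range(length)]


# Hypothetical step-1 answers from two learners (True = correct diagnosis).
learner_a = cumulative_accuracy([True, False, True, True])
learner_b = cumulative_accuracy([False, True, True, True])
print(learner_a)  # [100.0, 50.0, 66.66666666666667, 75.0]
print(group_curve([learner_a, learner_b]))
```

Because each point averages over every case answered so far, early answers dominate the start of the curve and later answers move it less and less, which is one reason such curves tend to flatten into a visible plateau.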

We evaluated how the various experts and experienced learners interacted with the module. In this way, we could verify the content of the cases while testing the ability of the module to collect data that generated learning curves. Using a survey as well as module analytics, we assessed the feasibility of the user interface, specifically the time to complete the module and each learner’s evaluation of the module.

Next, we piloted the module with the incoming interns in May/June 2016. The participants received an email introducing the study, which included a 1-minute video introducing the module interface and the basics of an illness script as a package of organized knowledge with semantic qualifiers in three domains (epidemiology, temporal relationship, and syndrome). Participants had access to the DEAM for 30 days. Each participant was required to work through the cases in the prescribed order (1–50) but could complete them at their own pace. We collected the same analytic data for comparison with the experts and experienced learners.

Results

Seven expert attendings and seven experienced participants (four nurse practitioners with more than 2 years of critical care experience and three critical care fellows, one in the first year and two in the second year of training) completed the module. Of the 50 interns invited to participate in the pilot, 19 started and 5 completed all 50 cases. An additional 5 completed more than 8 cases, and the remaining 9 completed 5 or fewer cases.

Learning curve generation

The DEAM pilot data demonstrated meaningful learning curves of diagnostic accuracy for individual learners as well as for groups of learners (Figure 2). Across all participants, the mean expert attending curve reached a sustained plateau at approximately 90% accuracy, representing the maximal learning potential for the module. The fellow/nurse practitioner curve and the intern curve both reached a plateau at ~80% (Figure 2(c)). For the 5 interns who completed all 50 cases, the DEAM yielded learning curves similar in shape to the experienced learners' curves (Figure 2(b)). All of the learning curves reached a plateau within about 14 cases, which we believe indicates rapid acclimation to the testing environment. One individual in each of the experienced learner (Figure 2(a), #4) and novice (Figure 2(b), #3-i) groups had a sustained plateau that did not approach the maximal learning potential for their level of experience. Of the 3 novice learners who completed at least 14 cases but not the entire module, one answered the first 13 cases correctly before reaching a plateau of ~85% accuracy over 24 cases. A second individual's curve was similar to Figure 2(b), #5-i, with a plateau at ~75% over 19 cases. The final individual's curve was similar in shape to Figure 2(b), #4-i, and was approaching 80% over 14 cases.

Figure 2. DEAM Learning Curves. For each case, the learner reads the case and selects a diagnosis. The module calculates a cumulative accuracy (percent correct) after each case. At the conclusion of all 50 cases, the module plots the individual’s learning curve as well as a group curve. a) Individual curves for the 7 Fellows/Nurse Practitioners, b) Individual curves for the 5 Interns who completed all 50 cases, c) collective group curves distributed by level of training.

Time to complete module

For the experts and experienced learners, the median time spent per case was 2.3 min (interquartile range [IQR]: 1.4–4.2), or approximately 3 cumulative hours to complete the entire module over multiple sittings. The novices spent slightly longer per case (median 2.5 min, IQR: 1.9–4.3), and their average cumulative time to complete all cases was comparable at 2.8 h.

Learner evaluation

Overall, feedback for the module was positive. On a 5-point Likert scale (1 – strongly disagree to 5 – strongly agree), the learners rated the module (mean ± SD) at 3.9 ± 0.5 for improving the ability to diagnose a cause for respiratory distress and at 3.8 ± 0.9 for improving the ability to create an illness script. The experts and experienced participants commented that they were challenged to ‘think critically’ and that ‘practice of making illness scripts is a good one, forces you to take [making] diagnosis to another level.’ No novice reported dissatisfaction with the time to complete the module. All of the comments from the novices are reported below:

‘Nice variety. Practice of making illness script is a good one’

‘I should be able to make [diagnosis] faster and make the correct diagnosis with more [confidence].’

‘Would be helpful to choose choices for illness scripts as an example of what is expected.’

'Really enjoyed this set of modules. Highly recommend before starting a [PICU] rotation.'

Case performance analysis

When analyzing the learners' performance on diagnoses with multiple cases within the module, we identified five representative patterns. Table 2 summarizes these five diagnoses, including their case numbers within the module and the mean expert and novice scores. The first pattern is demonstrated by the asthma cases, which had relatively high but flat performance, ideally indicative of deliberate practice on an appropriately difficult case. The bronchiolitis cases were universally answered correctly by both expert and novice learners. While this would be expected even of novice pediatricians, this pattern may also indicate that these cases are too easy. A subset of cases, such as fever manifesting as acute respiratory distress, had universally poor performance, which likely indicates an unclear question or diagnosis. The myocarditis cases demonstrated an optimal pattern for successful deliberate practice: novice learners improved from the first to the second case, and experts and experienced learners performed well. Finally, the pneumonia cases demonstrated an unexpected decline in performance with each subsequent case. This pattern suggests a set of cases that may be unclear (similar to the fever cases) or that contain confounding information in the later cases. For example, the third case in this set involved a patient with a tracheostomy, which may have contributed to the decline in learner performance.

Table 2. Mean accuracy scores for representative diagnoses and cases (see for all cases). Bold scores are less than 0.70.
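The case performance analysis above (averaging accuracy per case, grouping cases that share a diagnosis, and flagging means below 0.70 as Table 2 does) can be sketched as follows. The function names, case numbers, and responses are hypothetical and are not drawn from the study data; the sketch only shows how a declining pattern such as the pneumonia cases would surface as negative case-to-case changes.

```python
from collections import defaultdict
from statistics import mean

FLAG_BELOW = 0.70  # Table 2 shows mean scores below this value in bold


def case_means(responses):
    """Map each case number to the mean accuracy across learners.

    responses: {case_number: [1 if correct else 0, one entry per learner]}
    """
    return {case: mean(scores) for case, scores in responses.items()}


def diagnosis_trends(case_diagnosis, means):
    """Group cases sharing a diagnosis in module order and flag low scores.

    Returns {diagnosis: [(case_number, mean_accuracy, flagged), ...]}.
    """
    trends = defaultdict(list)
    for case in sorted(case_diagnosis):
        score = means[case]
        trends[case_diagnosis[case]].append((case, score, score < FLAG_BELOW))
    return dict(trends)


def changes(trend):
    """Change in mean accuracy between consecutive cases of one diagnosis."""
    return [later[1] - earlier[1] for earlier, later in zip(trend, trend[1:])]


# Hypothetical case numbers and responses (not study data).
means = case_means({4: [1, 1, 0, 1], 21: [1, 0, 0, 0]})
trends = diagnosis_trends({4: "pneumonia", 21: "pneumonia"}, means)
print(trends)
print(changes(trends["pneumonia"]))
```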

Illness script analysis

After reviewing the illness scripts entered by learners in the DEAM, we found that the vast majority of both experienced and novice learners completed the free-text illness script questions for all 50 cases. We generally observed that, for cases with repeated diagnoses, the quality of the illness script improved with each attempt. For each of the diagnoses listed in Table 2, Tables 3a and 3b list the illness scripts entered by a single representative novice and expert, respectively. Each table includes the diagnosis, whether the learner answered the question correctly or incorrectly, and the full, unedited illness script, including epidemiology (E), temporal relationship (T), and syndrome (S), entered by the representative user. For each set of cases, there was a progressive improvement in the quality of the illness scripts developed by both the novice and expert learners. Our commentary on this improvement is summarized in the final column of Tables 3a and 3b.

Table 3a. Representative novice learner illness scripts as entered by the learner, with author commentary. Bold text indicates that the diagnosis was incorrect. E: epidemiology; T: temporal relationship; S: syndrome.

Table 3b. Representative expert and experienced learner illness scripts as entered by the learner, with author commentary. Bold text indicates that the diagnosis was incorrect. E: epidemiology; T: temporal relationship; S: syndrome.

Discussion

Conceptual frameworks grounded in educational theories and best practices can guide the development and evaluation of an educational innovation. This study describes a learning module that uses deliberate practice principles to teach learners to formulate an illness script, a surrogate for organized knowledge, with learning curves as a quantitative outcome. Our proposed approach appears to be a feasible and effective method for learners to create and refine a variety of illness scripts even in the absence of a clinical encounter. The clinical reasoning literature holds that elaborated illness scripts are the end products of years of clinical learning experience [Citation8,Citation21] and, therefore, that teaching learners about illness scripts by other means is difficult, if not impossible [Citation17]. Our preliminary evidence, that the novice interns in our study created increasingly robust scripts as they progressed through the module, challenges this notion by expanding the definition of experiential clinical practice.

Our study adds another chapter to the literature on the utility of learning curves in medical education. Pusic has published extensively on the use of learning curves in the interpretation of radiographs by emergency medicine physicians, as well as on the generation and analysis of learning curves in general [Citation18,Citation22,Citation23]. Similar to Pusic's results, the learning curves in our study provide valuable information about a learner's initial and peak performance. Most importantly, they can quickly and effectively identify the struggling learner and thus allow for timely assessment and intervention. This capability opens the possibility of using our module as an on-boarding tool to accelerate learning for residents prior to a scheduled rotation. With the proper content, a DEAM could assess a novice learner on a standard set of cases (content knowledge) and assess the ability to formulate an illness script (knowledge organization). By comparing individuals with their current peers (or with a historical data set), the learning curve could motivate learners to improve during their rotation. The educator could also gain insight into which learners may need extra preparation or remediation upon starting a rotation. Additionally, our data demonstrate that even experienced practitioners (Figure 2(a), #4) may have deficits, highlighting the potential utility of our module not only for on-boarding novices but also for ensuring the competency of experienced providers as a form of continuing education. Learner #4 completed the free-text questions for every illness script with appropriate, case-specific information, which decreases the likelihood that random guessing explains the below-average performance. We would expect the learning curve of someone randomly selecting answers never to reach a plateau, which would prompt the educator to investigate the learner's ability or willingness to engage with the module.

As with any educational innovation, the content of what we are trying to teach impacts both the perceived and actual learning. Cases that seemed obvious to the authors may be misinterpreted by the learners (e.g. the fever cases). Our proof of concept study offers encouraging evidence that cases can be designed with the proper balance of desirable difficulty and deliberate practice (e.g. myocarditis and asthma cases) to challenge learners to encode the knowledge without leading to frustration and abandonment of the module. We are encouraged that those who completed our entire module described an appropriate balance in this regard. Given the low response rate from our interns, we surmise that the time we offered the module, just prior to starting residency, may not be practical. In future studies, we plan to assess the optimal timing for this DEAM.

To our knowledge, this is the first attempt to teach the challenging concept of illness script formation via an online module, and our preliminary data indicate that our DEAM allows for successful deliberate practice of creating an illness script. Further work remains to understand how best to assess the quality of the illness scripts created and to provide impactful feedback to each learner about their illness scripts.

During this pilot study, the process of creating the conceptual framework, the cases, and the DEAM taught us many lessons. Table 4 summarizes the lessons learned regarding deliberate practice, teaching illness scripts, and using computer-assisted modules in graduate medical education.

Table 4. Tips and lessons learned.

This study has some limitations. We assumed that learning from a written case presentation can mimic experiential learning from a clinical encounter. It remains to be seen whether using a case presentation to prompt learners to formulate their own scripts approximates the cognitive effort required to create and encode an illness script. Because the module was completed asynchronously online, we had no control over potential contamination from learning within participants or from others outside the study. Our pilot study was neither designed nor powered to interrogate these possibilities.

Through an iterative process, we attempted to create cases that would provide enough difficulty to distinguish performance between novice and experienced learners. Since the learning curves for our novice and experienced learners were similar, our set of pilot cases may not differentiate between these groups. We will need to continue to assess the balance between the difficulty of the cases and our learners' level of experience to determine whether we can (and should) distinguish these subgroups. Although the DEAM is presented as a series of test questions, it is intended to serve as an assessment for learning.

Finally, our DEAM presented the cases in a predefined order for all learners. While this allowed us to compare cases with the same diagnosis, it limits our analysis, because we cannot determine whether learner performance depends solely on the case itself or also on the case sequence. Additionally, as can be seen in , approximately as many diagnoses had a decrease in performance between cases as had an increase. Given the small sample size in this pilot phase, we cannot conclude whether this finding was due to suboptimal learning from the illness script, the content of our cases, or confounding information in the case stems. It is possible that more repetition of a case (i.e. more deliberate practice) is required to achieve stable diagnostic performance. As more learners use our DEAM in the next phase, we will be able to adjust both the case content and the sequence to ensure optimal learning.

Future/next steps

We plan to continue to refine this prototype with a larger population of learners in order to generalize the findings from the pilot study. As more data are collected, we will be able to gain deeper insight into the information provided by learning curves and to better understand how our content affects the learning process.

We aim to create a comprehensive educational program using a DEAM platform to allow accelerated learning prior to or early in a clinical rotation. In this way, residents will be primed with a core set of illness scripts that they can further recalibrate and optimize during their clinical rotation with actual patient encounters. We also hope to optimize the feedback on learner-generated illness scripts in order to enhance retention of the acquired knowledge. Finally, we hope to develop a post-test to determine whether the learner has achieved and retained mastery of the basic principles required to create an illness script.

Acknowledgments

This work was generously supported by the Center for Research, Innovation and Scholarship in Medical Education, Texas Children’s Hospital, Baylor College of Medicine.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Mylopoulos M, Lohfeld L, Norman G, et al. Renowned physician’s perceptions of expert diagnostic practice. Acad Med. 2012;87(10):1413–12.

- Institute of Medicine. Improving diagnosis in healthcare. Washington DC: National Academy Press; 2015.

- Bordage G, Lemieux M. Semantic structures and diagnostic thinking of experts and novices. Acad Med. 1991;66:S70–S72.

- Bordage G, Zacks R. The structure of medical knowledge in the memories of medical students and general practitioners: categories and prototypes. Med Educ. 1984;18:406–416.

- Feltovich PJ. Knowledge based components of expertise in medical diagnosis [PhD dissertation]. University of Minnesota; 1981. p. 275.

- Patel VL, Groen GJ. Knowledge based solution strategies in medical reasoning. Cogn Sci. 1986;10:91–116.

- Norman GR, Brooks LR, Allen SW, et al. Expertise in visual diagnosis: a review of the literature. Acad Med. 1992;67(S10):S78–83.

- Custers EJ. Thirty years of illness scripts: theoretical origins and practical applications. Med Teach. 2015;37(5):457–462.

- Lubarsky S, Dory V, Audétat MC, et al. Using script theory to cultivate illness script formation and clinical reasoning in health professions education. Can Med Educ J. 2015;6(2):e61–70. Epub 2015 Dec 11.

- Schmidt HG, Rikers RM. How expertise develops in medicine: knowledge encapsulation and illness script formation. Med Educ. 2007;41(12):1133–1139.

- Charlin B, Boshuizen HP, Custers EJ, et al. Scripts and clinical reasoning. Med Educ. 2007;41(12):1178–1184.

- Charlin B, Tardif J, Boshuizen HP. Scripts and medical diagnostic knowledge: theory and applications for clinical reasoning instruction and research. Acad Med. 2000;75(2):182–190.

- Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychol Rev. 1993;100(3):363–406.

- Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implications. Acad Med. 1990;65(10):611–621.

- Schmidt HG, Rikers R. How expertise develops in medicine: knowledge encapsulation and illness script formation. Med Educ. 2007;41:1133–1139.

- Lee A, Joynt G, Lee A, et al. Using illness scripts to teach clinical reasoning skills to medical students. Fam Med. 2010;42(4):255–261.

- Charlin B, Tardif J, Boshuizen H. Scripts and medical diagnostic knowledge: theory and applications for clinical reasoning instruction and research. Acad Med. 2000;75(2):182–190.

- Pusic M, Boutis K, Hatala R, et al. Learning curves in health professions education. Acad Med. 2015;90(8):1034–1042.

- Pusic M, Pecaric M, Boutis K. How much practice is enough? Using learning curves to assess the deliberate practice of radiograph interpretation. Acad Med. 2011;86:731–736.

- Yap CH, Colson M, Watters D. Cumulative sum techniques for surgeons: A brief review. ANZ J Surg. 2007;77:583–586.

- Norman G. Building on experience—the development of clinical reasoning. N Engl J Med. 2006;355(21):2251–2252.

- Pusic M, Kessler D, Szyld D, et al. Experience curves as an organizing framework for deliberate practice in emergency medicine learning. Acad Emerg Med. 2012;19:1476–1480.

- Pusic M, Boutis K, Pecaric M, et al. A primer on the statistical modelling of learning curves in health professions education. Adv Health Sci Educ. 2017;22:741–759.

Appendix A: Representative case in DEAM utilizing 4 steps to emphasize deliberate practice

Step 1)

Step 2)

Step 3)

Step 4)