?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Our research aimed to improve survival prediction by combining gene expression datasets, and to apply molecular signatures across different datasets. Many methods have previously been developed to remove unwanted variations among datasets and maintain the wanted factor variations. However, for inter-study validation (ISV) research, a whole dataset is set aside for testing, and the statuses of wanted factors are assumed unknown for the whole dataset; thus, regression cannot be used to determine the unwanted variations for this dataset. In this study, quantile normalization (QN) was utilized to remove the unwanted dataset variations, after which the adjusted datasets were used for classification. It was observed that the datasets formed by QN combination in the study of ISV had superior prediction performance compared to the datasets combined by other methods. Combining datasets using QN could improve the prediction performance for the study of ISV.

Introduction

Microarray gene expression technology provides a systematic approach to cancer classification [Citation1,Citation2]. Many studies have aimed to improve survival prediction by combining gene expression datasets, and to apply molecular signatures across different datasets [Citation3–8]. However, inter-dataset variation hindered these attempts [Citation9–11].

Some methods have been developed to remove unwanted dataset variations (or batch variations) and maintain the wanted factor variations [Citation12–15]. For example, in the removal of unwanted variation (RUV) model, the gene expression values are assumed as Y = Xβ + Wα + ϵ, where X represents the factors of interest (e.g. the predictive factors), W, the unwanted factors (e.g. the dataset factors or batch factors), and ϵ, an arbitrary amount of noise (see details in section Materials and methods) [Citation15].

The RUV model is effective for the study of intra-dataset validation, in which some samples or one sample of the datasets is set aside for testing, and the remaining part (each dataset has samples in this part) is utilized for training. In the training process (both wanted and unwanted factors, X and W, are assumed known for the training samples), the unwanted variations, α, can be determined for every dataset by regression, and then be removed.

However, for the study of inter-study validation (ISV), a whole dataset is set aside for testing, and the record of X is assumed unknown for the whole dataset; thus, the unwanted variations cannot be determined for this dataset in the regression.

In this regard, quantile normalization (QN), a global adjustment method that assumes the statistical distribution of each sample is the same [Citation16], is used, as it does not require the record of X. The assumption is justified in many biomedical applications in which only a minority of genes are expected to be differentially expressed (not including differential mythylation). QN has been shown to be effective for normalizing GeneChip arrays, and was integrated into RMA (robust multi-array analysis), the software for raw data processing [Citation17].

In this study, QN was utilized to remove unwanted dataset variations. It was utilized to combine simulated or real datasets, after which the datasets were used for classification. The classification performance was evaluated and compared with those of other methods.

Materials and methods

Simulated datasets

A Monte Carlo setup similar to reference [Citation18] was used. In each simulation, five datasets that contain 40, 60, 80, 100 and 120 samples of class 0, and 200, 180, 160, 140 and 120 samples of class 1 were generated using multivariate normal distributions with class-specific mean vectors and common covariance matrices. The simulations were performed with n = 1000 genes (variables), and the first n1 = 50 were differentially expressed between classes with a mean difference of 1. The remaining 950 genes had the same mean for each class. For the common covariance matrix, Structures 1–3 as defined in reference [Citation18] were used:where

and

are

and

intra-class correlation matrices for

informative genes and for

noninformative genes, respectively.

is the

correlation matrix between informative and noninformative genes and

is the

identity matrix. The gene–gene correlations were set as 0.5. One hundred simulation replicates were performed for each structure.

Real datasets

Gene-expression datasets of breast cancer with a record of endocrine responsiveness (ER) status were pre-selected from the Gene Expression Omnibus (http://www.ncbi.nlm.nih.gov/geo [Citation19]). The following criteria were also applied: (1) datasets were in the same platform (GPL96, which contains 22215 probe-sets); (2) probe intensities were quantified with the same method (MAS 5.0); and (3) sample numbers were greater than 100; (4) datasets have both ER+ and ER− samples. In addition, datasets with overlapped samples were excluded. Finally, six datasets [Citation20–25] were included (see ), consisting of 1640 samples (1103 ER+ and 537 ER−).

Table 1. Gene expression datasets.

QN combination

QN was chosen in this study to remove unwanted dataset variations before combining the datasets. Normalization was achieved by forcing the observed distributions to be the same and the average distribution, obtained by taking the average of each quantile across samples, was used as the reference [Citation16]. It has been successfully used to remove batch effects for microarray datasets. In this study, it was used to remove inter-dataset variations. It was conducted in MATLAB according to an algorithm previously described [Citation16].

RUV combination

RUV combination was also chosen for comparison. In the RUV model, the gene expression values are assumed as Y = Xβ + Wα + ϵ, with Y ∈ Rm×n, X ∈ Rm×p, β ∈ Rp×n, W ∈ Rm×k, α ∈ Rk×n, and ϵ ∈ Rm×n, where Y is the observed matrix of expression of n genes for m samples, X represents the p factors of interest, W, the k unwanted factors, and ϵ, an arbitrary amount of noise [Citation15].

According to the RUV model, if both the factor matrices X and W are known, the wanted variations (β) and unwanted variations (α) can be determined by regression. The adjusted gene expression values can be obtained by removing the unwanted variations, Y* = Y − Wα.

In the RUV model, the element (W)ij is set to 1 if the i-th sample belongs to the j-th dataset, or set to 0 otherwise; the element (X)ij is set to 1 or −1 if the i-th sample belongs to one of two statuses of the j-th factor. For the study of ISV, a whole dataset is set aside for testing, and the record of X is assumed unknown for the whole dataset; thus, the unwanted variation, α, as well as the adjusted value, Y*, cannot be determined for this dataset in the regression. The ISV may not be performed. Alternatively, the element (X)ij may be set to a median value, 0, if the status of the j-th factor was unknown for the i-th sample.

RUV combination was conducted in MATLAB according to the above descriptions.

Classifiers

There are correlations between genes [Citation26]. Therefore, correlation-based classifiers were chosen, and utilized to predict the status of wanted factors.

SVM (support vector machine) classification is a correlation-based binary classification that uses selected variables (features) and fits an optimal hyperplane between two classes by maximizing the margin between the closest points [Citation27,Citation28]. This classification was implemented in MATLAB using the functions svmtrain and svmclassify.

The ensemble of random subspace (RS) Fisher linear discriminant (FLD) classifier (referred to as enRS-FLD) is another correlation-based classifier, the decision of which is taken by averaging the decisions of the base classifiers (FLD classifiers) working in the lower dimensional subspaces [Citation29]. The enRS-FLD classifier was also chosen, and was conducted in MATLAB according to the algorithm described in reference [Citation29]. The parameters employed by these authors were also used. The dimension of the subspaces, k, was set to (N − 2)/2 to optimize the classification, where N was the number of samples in the training dataset. The number of subspaces, M, was set to 100 (for simulated datasets) or 1000 (for real datasets).

Classification performance

The F1-measure and accuracy [Citation30,Citation31] were used to evaluate the classification performance.

For the study of intra-dataset validation, 5-fold cross-validation (CV) was used. For the study of inter-dataset validation, leave-dataset-out cross-validation (LDOCV) was used.

In the process of validation, one fold or one dataset was set aside in turn for testing, and the wanted factors were assumed unknown for them; the remaining part was utilized for training. Then both the testing and training set were combined and adjusted by QN combination or RUV combination, and the adjusted training set was used to train the classifier and predict the wanted factors for the testing set.

Results and discussion

Intra-dataset validation for simulated datasets

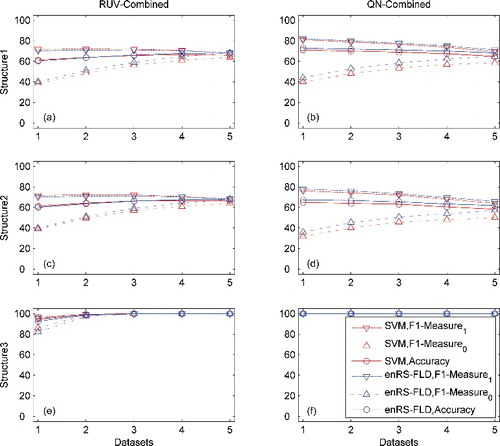

For each of the three structures, 100 replicates were generated. In each replicate, five datasets were generated. Intra-dataset validation (5-fold CV, 100 random splits) was carried out using each dataset individually or using the five datasets combined by RUV or QN combination. After the validation, further testing was carried out using 100 times larger datasets. The F1-measure and accuracy were computed for each dataset and averaged over the 100 splits and the 100 replicates. The results are shown in and (see also Figure S1, Tables S1 and S2 in the Online Supplemental Data). It was observed that the classification performance for Structure 3 was superior to those for the other two structures. The classifiers benefited from the correlations between the informative and non-informative genes [Citation32]. It was observed that the performance of RUV combination was superior to that of the non-combination approach for datasets of Structure 3; and inferior for datasets of Structures 1 and 2. This might be due to the shift of the mean in the RUV combination. The performance of QN combination was inferior to RUV combination for datasets of Structure 2, and near to RUV combination for datasets of the other two structures.

Figure 1. Classification performance in intra-dataset validation (5-fold CV, 100 splits) for simulated datasets.

Table 2. Classification performance in intra-dataset validation (5-fold CV) for simulated datasets.

Inter-dataset validation for simulated datasets

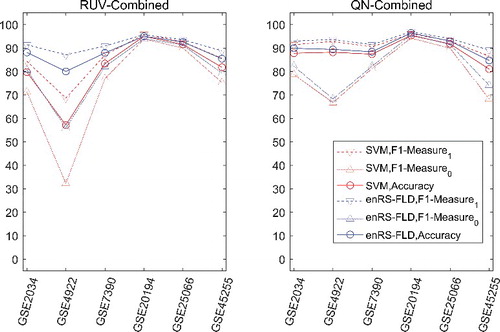

Inter-dataset validation (LDOCV) was carried out using datasets combined by RUV combination and QN combination. The F1-measure and accuracy were computed for each dataset and averaged over the 100 replicates. The results are shown in and (see also Figure S2, Tables S3 and S4 in the Online Supplemental Data). The inter-dataset validation was found to exhibit declined performance compared to the intra-dataset validation when the datasets were combined by RUV combination, especially when a dataset was unbalanced. However, the inter-dataset validation using datasets combined by QN combination outperformed the use of datasets combined by RUV combination, especially for datasets of Structures 1 and 3, owing to the improvement of the classification performance for the unbalanced datasets; and had performance similar to the intra-dataset validation using datasets combined by QN combination.

Table 3. Classification performance in inter-dataset validation (LDOCV) for simulated datasets.

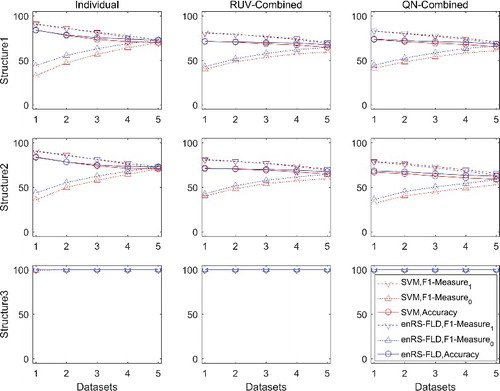

Intra-dataset validation for real datasets

For real datasets, intra-dataset validation (5-fold CV) was carried out using each dataset individually or the six datasets combined by RUV or QN combination. The F1-measure and accuracy were computed for each dataset. The results are shown in and . Overall, it was observed that the RUV combined datasets performed more effectively than the individual datasets, and the QN combined datasets performed somewhat better than the RUV combined datasets.

Figure 3. Classification performance in intra-dataset validation (5-fold CV, 100 splits) for real datasets.

Table 4. Classification performance in intra-dataset validation (5-fold CV) for real datasets.

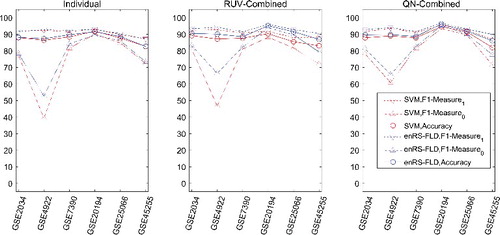

Inter-dataset validation for real datasets

Inter-dataset validation (LDOCV) was carried out using datasets combined by RUV combination and QN combination. The F1-measure and accuracy were computed for each dataset. The results are shown in and . It was also observed that the inter-dataset validation had a declined performance compared to the intra-dataset validation when the datasets were combined by RUV combination. The performance of inter-dataset validation using datasets combined by QN combination was superior to that using datasets combined by RUV combination, owing to the improvement of classification performance for the unbalanced datasets. Even if datasets having only ER+ (or ER−) or having only one sample were included, QN combination would work well, while the RUV combination would not work.

Table 5. Classification performance in inter-dataset validation (LDOCV) for real datasets.

The performance of inter-dataset validation using datasets combined by QN combination was superior to that of intra-dataset validation using individual datasets, therefore higher level of statistical significance was achieved by QN combination. Inter-dataset validation using datasets combined by QN combination had performance similar to or somewhat better than that of intra-dataset validation using datasets combined by RUV combination. This challenged the conventional view that the performance of inter-dataset validation was poorer than that of intra-dataset validation [Citation11,Citation33]. In future research, it may be need to take into account the unbalanced datasets and QN normalization.

Conclusions

In intra-dataset validation, combining datasets using the RUV model had positive impact compared to the individual datasets for simulated datasets of Structure 3 and real datasets. In inter-dataset validation, combining datasets using QN had positive impact compared to combining datasets using the RUV model. Combining datasets using QN could improve the prediction performance for the study of ISV. The prediction performance of inter-dataset validation using datasets combined by QN was higher than that of intra-dataset validation using individual datasets, for simulated datasets of Structure 3 and real datasets. The inter-dataset variations were removed by QN and higher performance was obtained.

Supplemental_Data.pdf

Download PDF (417.3 KB)Acknowledgments

The authors are grateful to the authors of the datasets and those responsible for the availability of the data.

Disclosure statement

The authors declare no conflict of interest.

References

- Golub TR, Slonim DK, Tamayo P, et al. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science. 1999;286:531–537.

- Ross DT, Scherf U, Eisen MB, et al. Systematic variation in gene expression patterns in human cancer cell lines. Nat Genet. 2000;24:227–234.

- Autio R, Kilpinen S, Saarela M, et al. Comparison of Affymetrix data normalization methods using 6,926 experiments across five array generations. BMC Bioinformatics. 2009;10(Suppl. 1):S24.

- Xu L, Tan AC, Winslow RL, et al. Merging microarray data from separate breast cancer studies provides a robust prognostic test. BMC Bioinformatics. 2008;9:125.

- van Vliet MH, Reyal F, Horlings HM, et al. Pooling breast cancer datasets has a synergetic effect on classification performance and improves signature stability. BMC Genomics. 2008;9:375.

- Xu L, Tan AC, Naiman DQ, et al. Robust prostate cancer marker genes emerge from direct integration of inter-study microarray data. Bioinformatics. 2005;21:3905–3911.

- Kim K-Y, Ki D, Jeung H-C, et al. Improving the prediction accuracy in classification using the combined data sets by ranks of gene expressions. BMC Bioinformatics. 2008;9:283.

- Kim S-Y. Effects of sample size on robustness and prediction accuracy of a prognostic gene signature. BMC Bioinformatics. 2009;10:147.

- Yasrebi H, Sperisen P, Praz V, et al. Can survival prediction be improved by merging gene expression data sets? PLoS ONE. 2009;4(10):e7431.

- Bevilacqua V, Pannarale P, Abbrescia M, et al. Comparison of data-merging methods with SVM attribute selection and classification in breast cancer gene expression. BMC Bioinformatics. 2012;13(Suppl. 7):S9.

- Ma S, Sung J, Magis AT, et al. Measuring the effect of inter-study variability on estimating prediction error. PLoS ONE. 2014;9(10):e110840.

- Johnson WE, Li C, Rabinovic A. Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics. 2007;8(1):118–127.

- Leek JT, Storey JD. Capturing heterogeneity in gene expression studies by surrogate variable analysis. PLoS Genet. 2007;3:e161.

- Gagnon-Bartsch JA, Speed TP. Using control genes to correct for unwanted variation in microarray data. Biostatistics. 2012;13:539–552.

- Jacob L, Gagnon-Bartsch JA, Speed TP. Correcting gene expression data when neither the unwanted variation nor the factor of interest are observed. Biostatistics. 2016;17(1):16–28.

- Hicks SC, Irizarry RA. Quantro: a data-driven approach to guide the choice of an appropriate normalization method. Genome Biol. 2015;16(1):117.

- Irizarry RA, Hobbs B, Collin F, et al. Exploration, normalization, and summaries of high density oligonucleotide array probe level data. Biostatistics. 2003;4:249–264.

- Kim KI, Simon R. Probabilistic classifiers with high-dimensional data. Biostatistics. 2011;12(3):399–412.

- Gene Expression Omnibus. Available from: http://www.ncbi.nlm.nih.gov/geo/browse/

- Wang Y, Klijn JG, Zhang Y, et al. Gene-expression profiles to predict distant metastasis of lymph-node-negative primary breast cancer. Lancet. 2005;365(9460):671–679.

- Ivshina AV, George J, Senko O, et al. Genetic reclassification of histologic grade delineates new clinical subtypes of breast cancer. Cancer Res. 2006;66(21):10292–10301.

- Desmedt C, Piette F, Loi S, et al. Strong time dependence of the 76-gene prognostic signature for node-negative breast cancer patients in the transbig multicenter independent validation series. Clin Cancer Res. 2007;13:3207–3214.

- Shi L, Campbell G, Jones WD, et al. The MicroArray Quality Control (MAQC)-II study of common practices for the development and validation of microarray-based predictive models. Nat Biotechnol. 2010;28(8):827–838.

- Hatzis C, Pusztai L, Valero V, et al. A genomic predictor of response and survival following taxane-anthracycline chemotherapy for invasive breast cancer. J Am Med Assoc. 2011;305:1873–1881.

- Nagalla S, Chou JW, Willingham MC, et al. Interactions between immunity, proliferation and molecular subtype in breast cancer prognosis. Genome Biol. 2013;14:R34.

- Jong VL, Novianti PW, Roes KC, et al. Exploring homogeneity of correlation structures of gene expression datasets within and between etiological disease categories. Stat Appl Genet Mol Biol. 2014;13(6):717–732.

- Novianti PW, Jong VL, Roes KC, et al. Factors affecting the accuracy of a class prediction model in gene expression data. BMC Bioinformatics. 2015;16:199.

- Statnikov A, Aliferis CF, Tsamardinos I, et al. A comprehensive evaluation of multicategory classification methods for microarray gene expression cancer diagnosis. Bioinformatics. 2005;21(5):631–643.

- Durrant R, Kabán A. Random projections as regularizers: learning a linear discriminant from fewer observations than dimensions. Mach Learn. 2015;99:257–286.

- Xie S, Li P, Jiang Y, et al. A discriminative method for protein remote homology detection based on N-Gram. Genet Mol Res. 2015;14(1):69–78.

- Tripathy A, Agrawal A, Kumar Rath SK. Classification of sentiment reviews using n-gram machine learning approach. Expert Syst Appl. 2016;57:117–126.

- Pan M, Zhang J. Correlation-based linear discriminant classification for gene expression data. Genet Mol Res. 2017;16(1): gmr16019357.

- Allahyar A, de Ridder J. FERAL: network-based classifier with application to breast cancer outcome prediction. Bioinformatics. 2015;31:i311–i319.