ABSTRACT

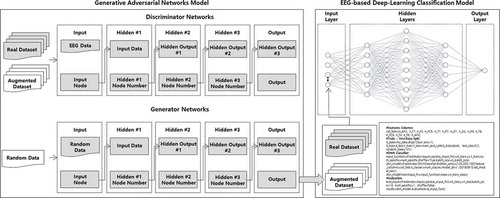

In architectural planning and initial design, it is critical for architects to recognise users’ emotional responses towards design alternatives. Since Building Information Modelling and related technologies focus on the physical elements of a building, a model that captures decision-makers’ subjective affection is strongly required. In this regard, this paper proposes an electroencephalography (EEG)-based hybrid deep-learning model to recognise the emotional responses of users towards a given architectural design. The hybrid model consists of generative adversarial networks (GANs) for EEG data augmentation and an EEG-based deep-learning classification model for EEG classification. In the field of architecture, a previous study developed an EEG-based deep-learning classification model that can recognise the emotional responses of subjects towards design alternatives. This approach suggests a possible method of evaluating design alternatives in a quantitative manner. However, because the available EEG data are limited, it is difficult to train the model, which restricts its utilisation. This study therefore constructs GANs, which consist of a generator and a discriminator, for EEG data augmentation. The proposed hybrid model may provide a method of developing supportive and evaluative environments in planning, design, and post-occupancy evaluation for decision-makers.

1. Introduction

1.1 Research background and aim

Architectural planning and initial designing involve communication among various decision-making participants including clients, designers, engineers, potential users, and local community members. In the architectural planning step, information that can be a basis for future decision making is generated. In the initial designing step, various design alternatives are suggested by the designer based on the information generated in the planning step. These design alternatives are then reviewed and revised by decision-making participants. The designing step is of considerable importance because as it proceeds, design alternatives are specified and used as the fundamental basis for future procedures (Sebastian 2007).

However, in architectural planning and initial designing, communication problems have constantly arisen between the client and designer (Siva and London 2012). There are inevitable limitations in the client gaining an accurate understanding of the suggestions of the designer and in the process of communicating requirements and aspects to be revised (Shen et al. 2013). In addition, it is difficult for the designer to grasp the client’s requirements accurately and to quantitatively track and review the responses of users towards revised design alternatives (Kiviniemi 2005). Therefore, design processes are often inefficient and the design quality is not guaranteed.

Building information modelling (BIM) technology is used to structure physical architectural elements and their relationships in the form of a database throughout the lifecycle of a building, based on a digitalised building information management platform, and to maintain the accumulated building information. BIM technology has been utilised in various fields such as building design, construction, and maintenance. It plays an important role in supporting the architectural design step, providing various types of building information that can be utilised in the decision-making process. However, BIM technology does not reflect the emotional evaluation of decision-making participants because it focuses solely on information regarding the physical attributes of buildings. This is a major limitation of BIM technology because in the planning and designing steps, the emotional evaluation of decision-making participants with regard to the design alternatives suggested by the designer can be an important basis for decision making. Meanwhile, research has been conducted actively in the fields of brain science and neuroscience on the emotional responses of users towards certain conditions by utilising electroencephalography (EEG) (Ekman and Friesen 1971). In the fields of architecture and design as well, quantitative research has been conducted actively on the emotions of users by utilising EEG. Brain waves are electric signals generated during different brain activities, and EEG is a technology that generates numerical data by recording brain waves through electrodes attached to the scalps of subjects (Schomer and Silva 2010).

Recently, in the field of architecture, some studies have presented design alternatives to subjects in the form of images while recording their EEG and have analysed the EEG data using deep-learning classification models (Chang, Dong, and Jun 2018). The models proposed in these studies show the basic possibility of estimating the emotional responses of decision-making participants towards certain spaces. However, there are limitations in training the models because the brain wave data collected from experiments are insufficient. In this regard, generative adversarial networks (GANs) may be utilised as an alternative to generate virtual data from only a small volume of input data.

Goodfellow et al. (2014) proposed GANs, which consist of a generator and a discriminator and generate virtual data through the augmentation of a small volume of input data. GANs can be utilised when the available volume of data is not sufficient for the training and evaluation of a deep-learning model. The GANs concept is drawing attention in many fields, particularly in fields where data acquisition is challenging, such as facial recognition (Yang, Zhang, and Yin 2018) and voice recognition (Han et al. 2018).

Accordingly, this paper proposes an EEG-based hybrid deep-learning model that can classify the emotional responses of potential users towards architectural design alternatives. This model is a combination of a GANs model and an EEG-based deep-learning classification model. The GANs model augments brain wave data acquired in previous studies, and the augmented brain wave data are provided to the classification model to train it.

1.2 Study method

The rest of the paper is structured as follows. Section 2 presents a theoretical investigation of previous studies that utilise brain waves in the field of architecture and establishes the theoretical background for GANs models and deep-learning classification models. Section 3 describes the development of a GANs model for brain wave data augmentation and an EEG-based deep-learning classification model for data classification based on the findings of the previous studies; a hybrid deep-learning model based on EEG is then developed by combining these two deep-learning models. Section 4 presents the implementation of the proposed GANs model for the augmentation of the brain wave data acquired under experimental settings and describes the details of the training and evaluation of the EEG-based deep-learning model using both existing data and augmented data. The GANs model and the EEG-based deep-learning classification model are built with TensorFlow, an open-source machine learning library developed by Google (TensorFlow 2018). The proposed hybrid model is built specifically for EEG data augmentation and classification using the TensorFlow libraries. The brain wave datasets were recorded using the “EMOTIV EPOC+ 14 Channel Mobile EEG” device from Emotiv and the EmotivPro software (Emotiv 2018).

2. Theoretical investigation

2.1 Utilisation of brain waves in field of architecture

Brain waves are electric signals generated during the activity of brain cells and neurons. The numerical data of these waves are recorded using EEG, for which electrodes are attached to the scalps of subjects (Schomer and Silva 2010). Brain waves have drawn attention because they can usually be detected in any person and because they yield quantitative numerical data that indicate the responses of the brain towards external stimuli. In the field of architecture, these quantitative data are utilised for research on the concentration and productivity of space occupants, the dependence of sleep patterns on environmental changes, the biological reactions of occupants or subjects in satisfaction analysis, and so on. Lan and Lian (2009), Lee, Choi, and Chun (2012), and Zhang et al. (2017) utilised brain waves to observe and verify changes in the productivity of occupants depending on the indoor temperature. Pan, Lian, and Lan (2011), Lan et al. (2013), and Lan, Lian, and Lin (2016) utilised EEG to obtain quantitative data, with which they analysed the dependence of the sleep patterns and satisfaction of subjects on the spatial environment. All such studies utilise brain waves to obtain quantitative data, which are used to examine the physiological changes in occupants depending on the physical architectural conditions.

Many previous studies relevant to architectural design have used EEG to grasp the emotional responses of users towards changes in the architectural space. One such study included a preference survey and brain wave experiments among youths, who were shown indoor images of community facilities in an apartment complex together with emotional vocabulary (Hwang, Kim, and Kim 2013). Hwang et al. (2014) used brain wave data to examine facility preferences among youths. Ryu and Lee (2015) examined the correlations between colour changes in an indoor residential space and brain waves. Such studies are important in that they utilise the quantitative data obtained from the brain to grasp the emotional responses of subjects from an architectural design perspective and to observe relevant changes. However, these studies have limitations in terms of establishing specific models for EEG data analysis. In contrast, Chang, Dong, and Jun (2018) recorded the brain waves of subjects while showing them images of a small residential space and asking them to select the most-preferred and least-preferred images. This study also proposed an EEG-based deep-learning classification model through which the recorded brain wave data could be analysed. This model is important in that it analyses the subjective emotional responses of users towards architectural spaces in a limited experimental situation, but the quantity of brain wave data obtained from experiments is limited. Therefore, it is difficult to apply this model universally, and further training and evaluation of the model are required. In addition, the process of obtaining brain wave data is complicated and requires EEG equipment, making it difficult to apply this model to various other research areas.

Thus, the present study aims to establish a generative adversarial networks model through which a small quantity of brain wave data acquired through experiments in previous studies can be augmented into virtual data, and to train the EEG-based deep-learning classification model using the resulting data. The accompanying figure illustrates how the proposed hybrid deep-learning model could be applied to an architectural design process.

2.2 Generative adversarial networks

Goodfellow et al. (2014) proposed the concept of GANs, a deep-learning algorithm based on unsupervised learning. A supervised deep-learning algorithm processes data based on the feature values of the input data, calculates the difference (loss) between the model predictions and the actual data labels given by the user, and proceeds to reduce this loss (Hinton and Salakhutdinov 2006). When the classification values of the actual data (labels) are provided in this way, the process is called supervised learning. When no classification values of the actual data are provided, it is called unsupervised learning. In the case of supervised learning, model training is limited if no label values of the actual data are provided or if the quantity of data is small. In contrast, the GANs model is based on unsupervised learning and can therefore generate virtual data from input data even if the quantity of the input data is small. This data augmentation supports the process of training the supervised deep-learning algorithm. The GANs model consists of a generator and a discriminator. The generator generates virtual data using latent data and delivers the generated data to the discriminator. The discriminator processes the data from the generator and the original data simultaneously, distinguishing the original data from the virtual data. As the training is repeated, the generator and discriminator continue to adjust the weight and bias values that indicate the extent of connection among the nodes in each hidden layer. The discriminator continues learning to distinguish the original data from the virtual data, whereas the generator proceeds to generate virtual data that are similar to the original data. Because the generator and discriminator learn in competition with each other, the algorithm is described as both adversarial and generative.

The GANs model is utilised to increase the size of datasets whose actual classification values are difficult to secure as well as to generate certain data types such as images, video, and voice. Caramihale, Popescu, and Ichim (2018) and Luo and Lu (2018) augmented key facial expression data and brain wave data by utilising GANs. Their findings indicate that when augmented data are utilised for training an emotion classification model, the model’s accuracy is enhanced.

The accompanying figure shows the structure of the GANs model, which consists of the discriminator and generator. The discriminator receives virtual data generated by the generator in addition to actual data, whereas the generator receives random data. Input datasets pass through the neural networks of both the generator and discriminator. The discriminator receives actual data and data generated by the generator simultaneously. It distinguishes the actual data from the fake data and calculates the loss. The generator and discriminator continue learning in a manner that reduces this loss.
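For reference, the competition between the two networks is usually written as the minimax objective of Goodfellow et al. (2014), shown here in its standard form. The symbols are those of that paper rather than of the present study: $x$ is a sample of actual data, $z$ is the random input to the generator $G$, and $D(\cdot)$ is the discriminator's estimated probability that its input is actual data. The text does not state which loss variant the implementation uses, so this is the reference formulation only.

$$
\min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator increases $V$ by assigning high probability to actual data and low probability to generated data, while the generator decreases $V$ by producing data that the discriminator accepts as actual.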

2.3 Deep-learning classification

Hinton and Salakhutdinov (2006) proposed a concept of deep learning that combines multiple artificial neural networks. The deep-learning model is mainly divided into three types of layers: the input layer, hidden layers, and output layer. The hidden layers consist of multiple nodes together with the weight and bias values that indicate the extent of connection between the nodes. The weighted values pass through the activation function and are then passed to the following layer. As data enter the deep-learning model, computational operations are performed on them while they pass through each layer. The output layer presents the predicted values after the model classifies the data. In the case of the supervised learning classification model, both the labels and feature values of the data are put into the model. The difference between the classification values of the actual data and the values predicted by the model is then calculated; this difference is called the loss.

The accompanying figure shows the structure of a deep neural network. Each input (xn) is multiplied by a weight value (wn), and the result serves as an input to the activation function. The activation function then computes an output value from this input and transmits it to the following node.
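The computation at a single node can be sketched in a few lines of Python. This is a minimal illustration rather than the model used in the study: the sigmoid activation, the bias term, and the example numbers are assumptions chosen for readability.

```python
import math

def sigmoid(value):
    """Logistic activation, used here only as an example activation function."""
    return 1.0 / (1.0 + math.exp(-value))

def node_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Multiply each input by its weight, sum the results, and apply the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)

# Hypothetical values for three inputs x1..x3 and their weights w1..w3.
example_inputs = [0.5, -1.0, 2.0]
example_weights = [0.4, 0.3, 0.1]
example_output = node_output(example_inputs, example_weights)
```

The value of `example_output` is what the node would transmit to the nodes of the following layer.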

If the classification values of the actual data are provided to the model in the process of entering data to the deep-learning model and training it, it is called supervised learning. If such values are not provided, it is called unsupervised learning. If the model is trained through supervised learning, the training may proceed in a manner that reduces the difference between the values predicted by the model and the classification values of the actual data. However, in this case, the learning may be limited if the quantity of data is small or if no classification values are provided. In contrast, the unsupervised learning method has limitations in that it may produce different values depending on the model’s structure or parameters although it is possible to train the model even without the classification values of the data (Jain, Duin, and Mao 2000).

Accordingly, this study aims to develop an EEG-based hybrid deep-learning model that can recognise the emotional responses of users in the initial designing step. It consists of a GANs for brain wave data augmentation and an EEG-based deep-learning classification model for brain wave data classification. Brain wave datasets of subjects from previous studies are augmented through the GANs, and then, the augmented datasets are utilised to train and evaluate the classification model (Chang, Dong, and Jun 2018).

3. Proposed hybrid deep-learning model

In this work, two distinct deep-learning-based models are suggested. The first is a generative adversarial networks (GANs) model for EEG data augmentation, and the second is an EEG-based deep-learning model for data classification. The hybrid deep-learning model consists of a combination of these two models. Both these models were built in this study using Google TensorFlow, which is a core open-source library for machine learning and deep learning.

Brain wave data collected from experiments serve as input values for the GANs model and are then augmented in a competitive environment between the generator and discriminator. The augmented data serve as input values for the deep-learning classification model along with the raw brain wave data collected through experiments, and the model then proceeds with training and evaluation based on these data. The accompanying figure shows how the GANs model used for brain wave data augmentation is combined with the EEG-based deep-learning classification model used for EEG data classification.

3.1 Generative adversarial networks for brain wave data augmentation

This section aims to establish a GANs model to augment a small quantity of brain wave data collected in previous studies. In the process of establishing this model, the open-source library Google TensorFlow was used.

The EEG data that are to be used by the established model have 14 channels, and datasets are normalised for each channel. This step is necessary to prevent data distortion in the event that the value of a certain channel is larger than that of another channel.
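The per-channel normalisation can be sketched as follows. The text does not say which normalisation method is applied, so min-max scaling to the range 0 to 1 is an assumption here, as are the function name and the example readings.

```python
def normalise_per_channel(rows):
    """Min-max scale each channel (column) of `rows` to the range [0, 1]."""
    columns = list(zip(*rows))
    scaled_columns = []
    for column in columns:
        low, high = min(column), max(column)
        span = high - low
        # A constant channel has no variation; map it to 0.0 to avoid dividing by zero.
        scaled_columns.append([(v - low) / span if span else 0.0 for v in column])
    return [list(row) for row in zip(*scaled_columns)]

# Hypothetical readings: 3 samples x 2 channels with very different magnitudes.
raw_rows = [[4100.0, 10.0], [4200.0, 30.0], [4300.0, 20.0]]
normalised_rows = normalise_per_channel(raw_rows)
```

After scaling, both channels span the same range, so a channel with large raw values no longer dominates one with small raw values.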

The generator and discriminator of the established GANs model each consist of three hidden layers with 50, 100, and 50 nodes, respectively. The number of nodes in each layer was chosen after evaluating multiple combinations of hyperparameters and selecting the one with the highest training speed. The generator receives random values between 0 and 1 and generates virtual EEG data. The discriminator receives the EEG data collected through experiments together with the virtual data and distinguishes the original data from the virtual data. Once the training is completed, the EEG data generated by the generator are presented to users and saved in CSV format. The accompanying figure shows the structure of the generator and discriminator of the established GANs model.
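The layer layout described above can be illustrated with a small forward-pass sketch in plain Python rather than TensorFlow. Only the hidden-layer sizes (50, 100, 50), the 14 channels, and the random input between 0 and 1 come from the text; the size of the random input, the activation functions, and the weight initialisation are assumptions, and the networks here are untrained.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def build_network(sizes, rng):
    """Build consecutive layers, e.g. sizes [14, 50, 100, 50, 1]."""
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

def forward(network, inputs):
    """ReLU on hidden layers, sigmoid on the output layer (both are assumptions)."""
    values = inputs
    last = len(network) - 1
    for index, (weights, biases) in enumerate(network):
        sums = [sum(w * v for w, v in zip(row, values)) + b
                for row, b in zip(weights, biases)]
        if index == last:
            values = [1.0 / (1.0 + math.exp(-s)) for s in sums]
        else:
            values = [max(0.0, s) for s in sums]
    return values

rng = random.Random(0)
N_CHANNELS = 14        # from the text: 14 EEG channels
LATENT_SIZE = 14       # assumption: the text does not give the size of the random input
HIDDEN = [50, 100, 50] # from the text: nodes in the three hidden layers

generator = build_network([LATENT_SIZE] + HIDDEN + [N_CHANNELS], rng)
discriminator = build_network([N_CHANNELS] + HIDDEN + [1], rng)

latent = [rng.random() for _ in range(LATENT_SIZE)]  # random values between 0 and 1
virtual_row = forward(generator, latent)             # one virtual 14-channel EEG row
realness = forward(discriminator, virtual_row)[0]    # estimated probability of "actual"
```

The sigmoid output keeps each generated channel value between 0 and 1, which matches data that have been scaled to that range; a different normalisation would call for a different output activation.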

3.2 EEG-based deep-learning classification model for brain wave data classification

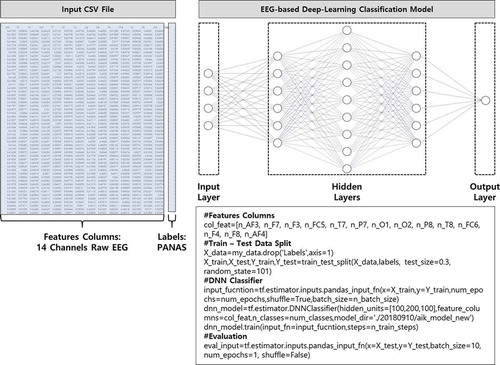

The purpose of the EEG-based deep-learning classification model is to classify the emotional states of the brain wave data as “positive” or “negative” toward architectural design alternatives. The classification model utilises TensorFlow as well as application programming libraries related to supervised learning.

The model consists of three types of layers: the input layer, hidden layers, and output layer. There are three hidden layers, which consist of 100, 200, and 100 nodes, respectively. The numbers of nodes in the hidden layers were selected after preliminary tests with various combinations of hyperparameters and were optimised for training speed and accuracy.

The classification model proceeds with learning according to the following steps: (1) data input and normalisation, (2) separation of training data from evaluation data (train-test split), (3) training, and (4) evaluation and accuracy measurement. (1) In the data input and normalisation step, the brain wave data of 14 channels are entered according to each channel’s feature data values and are normalised. The actual classification values of the corresponding brain wave data are also given to the model. (2) In the data splitting step, the entire input data are shuffled and divided: 70% for training and 30% for testing. The division between the training and test datasets was determined after conducting multiple optimisations of the model and was intended to prevent over-fitting or under-fitting problems. (3) In the training step, the classification model presents the predicted values based on the feature values of the input data, and the weight and bias values are adjusted in a manner that reduces the difference between the predicted output values and actual classification values. (4) In the evaluation and accuracy measurement step, the model accuracy is measured and reported to users. The accompanying figure shows the structure of the model.
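Steps (2) and (4) above can be sketched without any machine-learning library. The function names, the fixed random seed, and the example data are hypothetical, and the trained classifier of step (3) is deliberately left out; only the 70%/30% split and the labels 1 and 2 come from the text.

```python
import random

def train_test_split(rows, labels, train_fraction=0.7, seed=0):
    """Shuffle rows and labels together, then split them 70% / 30%."""
    paired = list(zip(rows, labels))
    random.Random(seed).shuffle(paired)
    cut = round(len(paired) * train_fraction)
    return paired[:cut], paired[cut:]

def accuracy(predicted, actual):
    """Fraction of predictions that match the actual classification values."""
    matches = sum(1 for p, a in zip(predicted, actual) if p == a)
    return matches / len(actual)

# Hypothetical data: 10 rows of 14 channel values, labelled 1 (negative) or 2 (positive).
rows = [[float(i)] * 14 for i in range(10)]
labels = [1, 2] * 5
train_set, test_set = train_test_split(rows, labels)

# Accuracy of some hypothetical predictions against the actual values.
example_accuracy = accuracy([1, 2, 2, 1], [1, 2, 1, 1])
```

Shuffling the rows and labels as pairs keeps each row attached to its own classification value, which is what makes the later accuracy measurement meaningful.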

4. Implementation of electroencephalography-based hybrid deep-learning model

4.1 Brain wave dataset

Chang, Dong, and Jun (2018) performed an experiment in which they recorded EEG data of subjects. The experiment was performed in an experiment room of Hanyang University with the help of 18 individuals (12 women and 6 men) who were healthy physically and mentally. The experiment was performed in the following order: (1) presentation of space images to subjects for selection, (2) machine calibration and meditation, (3) brain wave measurement, and (4) questionnaire completion. (1) In the step of presenting space images to subjects for selection, eight space images were presented and subjects selected images that they most preferred and the ones they least preferred. (2) In the machine calibration and meditation step, subjects were asked to wear the “EMOTIV EPOC+ 14 Channel Mobile EEG” device from Emotiv for machine calibration using the EmotivPro software. (3) In the brain wave measurement step, the brain waves of subjects were recorded for 20 s. (4) In the questionnaire completion step, subjects filled out the Positive and Negative Affect Schedule (PANAS) questionnaire. The PANAS questionnaire included 20 questions: 10 regarding “positive emotion” and 10 regarding “negative emotion.” For the answer to each question, “very much” was given 5 points and “not at all” was given 1 point. In this manner, the scores of the “positive emotion” and “negative emotion” questions were calculated (Watson, Clark, and Tellegen 1988). Chang, Dong, and Jun (2018) excluded the emotion scores that were lower than the average scores of “positive emotion” and “negative emotion” presented in the research of Watson, Clark, and Tellegen (1988). Consequently, the brain wave data of 8 individuals for the “positive emotion” questions and the data of 17 individuals for the “negative emotion” questions were included in the dataset.
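
The questionnaire scoring and the exclusion rule described above can be sketched as follows. The function names are hypothetical, the assignment of question positions to the two scales is purely illustrative, and the reference mean used in the example is a placeholder rather than the value published by Watson, Clark, and Tellegen (1988).

```python
def panas_scores(answers, positive_items, negative_items):
    """Sum the 1-5 ratings of the positive and negative items separately."""
    if len(answers) != 20 or any(a not in (1, 2, 3, 4, 5) for a in answers):
        raise ValueError("PANAS expects 20 answers, each scored 1 to 5")
    positive = sum(answers[i] for i in positive_items)
    negative = sum(answers[i] for i in negative_items)
    return positive, negative

def is_included(score, reference_mean):
    """Scores lower than the reference mean are excluded from the dataset."""
    return score >= reference_mean

# Assumption: these index lists are illustrative only; the real assignment
# follows the questionnaire of Watson, Clark, and Tellegen (1988).
POSITIVE_ITEMS = list(range(0, 10))
NEGATIVE_ITEMS = list(range(10, 20))

# Hypothetical subject who rates every positive item 4 and every negative item 2.
answers = [4] * 10 + [2] * 10
positive_score, negative_score = panas_scores(answers, POSITIVE_ITEMS, NEGATIVE_ITEMS)

PLACEHOLDER_MEAN = 30.0  # placeholder threshold, not the published mean
keep_positive = is_included(positive_score, PLACEHOLDER_MEAN)
keep_negative = is_included(negative_score, PLACEHOLDER_MEAN)
```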
From the brain wave data for 20 s, the data for the first 5 s and last 5 s were excluded to remove noise, and the remaining brain wave data for 10 s were used. The number of rows in the “positive emotion” and “negative emotion” datasets was 10,240 (8 × 128 × 10) and 21,760 (17 × 128 × 10), respectively.
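
The row counts follow directly from the trimming rule. The short sketch below reproduces them, reading the factor 128 in the expressions above as the number of samples recorded per second; that reading, and the function names, are assumptions.

```python
SAMPLES_PER_SECOND = 128  # the factor 128 in the row counts given in the text
RECORDED_SECONDS = 20     # length of each recording
TRIM_SECONDS = 5          # discarded at both the start and the end

def usable_rows(n_subjects):
    """Rows left after trimming, summed over all subjects of one class."""
    kept_seconds = RECORDED_SECONDS - 2 * TRIM_SECONDS
    return n_subjects * SAMPLES_PER_SECOND * kept_seconds

def trim_recording(samples):
    """Drop the first and last 5 s of one subject's recording."""
    edge = TRIM_SECONDS * SAMPLES_PER_SECOND
    return samples[edge:len(samples) - edge]

positive_rows = usable_rows(8)    # 8 subjects in the "positive emotion" dataset
negative_rows = usable_rows(17)   # 17 subjects in the "negative emotion" dataset
one_subject = trim_recording(list(range(RECORDED_SECONDS * SAMPLES_PER_SECOND)))
```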

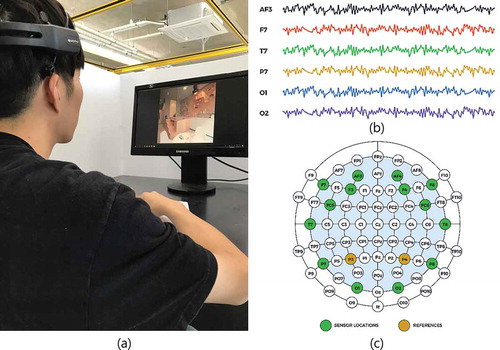

Figure 7(a) shows the result of the brain wave experiment performed on one subject, Figure 7(b) shows the brain wave recording software EmotivPro (Emotiv 2018), and Figure 7(c) shows the electrode positions of the “EMOTIV EPOC+ 14 Channel Mobile EEG” device, which is the EEG equipment used in this experiment (Emotiv 2018).

Figure 7. (a) Subject recording EEG, (b) EmotivPro software interface with recording EEG (Emotiv 2018), (c) “EMOTIV EPOC+ 14 channel mobile EEG” device technical specifications (Emotiv 2018).

The accompanying figure shows the structure of the EEG dataset. Its parts show, in turn, the raw brain wave data of 14 channels, which include feature values; the re-classified affection results of the PANAS survey; the numerically cast values of the survey results; the initials of each subject; and the frequency. Actual classification values were entered into the model as follows: 1 for “negative” items and 2 for “positive” items.

4.2 Brain wave dataset augmentation using generative adversarial networks

The learning process of the GANs model includes the following four steps: (1) data input, (2) virtual data generation by the generator, (3) discriminator training, and (4) virtual data output. (1) In the data input step, the “positive emotion EEG dataset” and “negative emotion EEG dataset” recorded in the previous study were entered into the model. (2) In the step involving virtual data generation by the generator, random values between 0 and 1 were entered into the generator and the generator generated virtual brain wave data. (3) In the discriminator training step, the actual EEG data and virtual EEG data generated by the generator were entered into the discriminator simultaneously. The discriminator distinguished the actual data from the fake data. In this phase, the loss values of the discriminator and generator were calculated. As the generated data became more similar to the actual data during training, the generator loss decreased. (4) In the virtual data output step, the virtual brain wave data generated by the generator were saved in CSV format. In total, 30,000 rows were generated by the generator for each of the “positive emotion EEG dataset” and “negative emotion EEG dataset.”
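The four steps can be made concrete with a deliberately tiny GAN in plain Python. This is a toy sketch and not the model used in the study: it works on a single channel, uses a linear generator and a logistic discriminator with hand-derived gradients, and every name, hyperparameter, and data value in it is an assumption. It shows the order of the updates and makes no claim about how well such a small model converges.

```python
import csv
import io
import math
import random

def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))

def safe_log(value):
    # Clamp away from zero so the logarithm stays finite.
    return math.log(max(value, 1e-12))

def train_toy_gan(real_values, steps=2000, learning_rate=0.05, seed=0):
    """Generator G(z) = a*z + b; discriminator D(x) = sigmoid(w*x + c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.0, 0.0   # discriminator parameters
    d_loss = g_loss = 0.0
    for _ in range(steps):
        # (1) data input and (2) virtual data generation from a random value in [0, 1)
        x_real = rng.choice(real_values)
        z = rng.random()
        x_fake = a * z + b
        # (3) discriminator update: minimise -[log D(x_real) + log(1 - D(x_fake))]
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        d_loss = -(safe_log(d_real) + safe_log(1.0 - d_fake))
        w -= learning_rate * (-(1.0 - d_real) * x_real + d_fake * x_fake)
        c -= learning_rate * (-(1.0 - d_real) + d_fake)
        # generator update: minimise -log D(G(z)), the non-saturating generator loss
        d_fake = sigmoid(w * x_fake + c)
        g_loss = -safe_log(d_fake)
        grad_x = -(1.0 - d_fake) * w
        a -= learning_rate * grad_x * z
        b -= learning_rate * grad_x
    return (a, b), d_loss, g_loss

real_values = [0.60, 0.65, 0.70, 0.75, 0.80]  # hypothetical normalised readings
(a, b), d_loss, g_loss = train_toy_gan(real_values)

# (4) virtual data output: sample the trained generator and write the rows as CSV text
sampler = random.Random(1)
virtual_rows = [[a * sampler.random() + b] for _ in range(5)]
buffer = io.StringIO()
csv.writer(buffer).writerows(virtual_rows)
csv_text = buffer.getvalue()
```

Writing to an in-memory buffer keeps the sketch free of file-system side effects; replacing `buffer` with an opened file would store the virtual rows on disk.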

Table 1 indicates that as the training was repeated, the virtual data in blue gradually became similar to the actual data in orange. Among the 14 channels of the “positive emotion” brain wave data, the two channels AF3 and T7 were visualised on the x axis and y axis, respectively. When the training was repeated 1,000 times, the generator generated data containing negative values that do not occur in the actual data. When the training was repeated 8,000 times, the overlap of the virtual data with the actual data increased. As the training was repeated, the overlapping parts of the actual data and virtual data on the coordinate axes increased to the point that the discriminator could no longer distinguish the actual data from the virtual data. Accordingly, the generator’s loss decreased gradually.

Table 1. Comparison between real data and augmented data through iterations (Positive affection).

4.3 EEG-based deep-learning classification model training using virtual brain wave data

The virtual EEG data generated using the GANs model were utilised in the process of training the EEG-based deep-learning classification model. The virtual brain wave dataset generated based on the “positive emotion EEG dataset” was added to this dataset, and the virtual data for the “negative emotion EEG dataset” were added in the same manner. Consequently, the combined “positive emotion brain wave dataset” and “negative emotion brain wave dataset” comprised 40,240 and 51,760 rows, respectively.
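The combined sizes can be checked with a few lines of arithmetic. The row counts and the classification values 1 and 2 are taken from the text; the variable and function names are hypothetical.

```python
REAL_ROWS = {"positive": 10_240, "negative": 21_760}  # recorded rows, from the text
VIRTUAL_ROWS_PER_CLASS = 30_000                       # generated rows per class, from the text
LABELS = {"negative": 1, "positive": 2}               # classification values, from the text

combined_rows = {name: count + VIRTUAL_ROWS_PER_CLASS for name, count in REAL_ROWS.items()}
total_real = sum(REAL_ROWS.values())
total_combined = sum(combined_rows.values())

def label_rows(rows, emotion):
    """Attach the numeric classification value to every row of one class."""
    return [(row, LABELS[emotion]) for row in rows]

labelled_example = label_rows([[0.1] * 14], "positive")
```

The totals come to 32,000 recorded rows and 92,000 rows once the virtual data are included.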

The expanded “positive emotion EEG dataset” and “negative emotion EEG dataset” were then entered into the classification model for training. While the previous classification model utilised 32,000 rows of data for training, the present model utilised 92,000 rows because the virtual brain wave data were added. The final accuracy was 0.984, which was higher than the accuracy of the existing model (0.979). If more data are added in the future using the GANs model, the accuracy and universality may improve further.

4.4 Implications and model utilisation scenarios

The proposed EEG-based hybrid deep-learning model enables quantitative identification of user emotions towards architectural space images. At the design stage, architects regard both the qualitative, emotional reviews of decision makers, including owners and potential users, and the quantitative reviews of the design alternatives, including legal and location-based reviews, as important. In this regard, the proposed model can be effectively used. However, at the current stage of research, because EEG recording machines are difficult to operate and the experimental procedures are complicated, applying the proposed model to actual design processes will require additional research in the fields of brain science and architecture.

At present, we can propose research scenarios that quantitatively measure specific subjects’ responses towards specific architectural spaces and build databases by obtaining measurement data and incorporating them into design knowledge using the proposed model. Previous studies on design knowledge databases mainly used surveys and in-depth interviews to measure the emotional responses of subjects to a specific type of architecture. However, if the model proposed in this study is adopted, architects can quantitatively measure the emotional responses of users under certain conditions and suitably incorporate them into the knowledge base to reflect real business processes. This scenario is illustrated in the accompanying figure.

5. Conclusion

The objective of this study was to establish an EEG-based hybrid deep-learning model through which the emotional responses of users towards an architectural space could be evaluated. To this end, brain wave data collected in previous studies were augmented using the GANs model, and an EEG-based deep-learning classification model was trained and evaluated by using both actual and virtual data. The findings of this study are as follows.

First, the GANs model showed considerable potential for augmenting atypical EEG data. It is expected that such networks can be used in the field of architecture to augment and utilise datasets whose scale and availability are limited, such as simulation data, environmental sensor data, and observation data. Second, as the quantity of data increases, the accuracy of training and evaluating the deep-learning model is expected to improve. The model has shown the potential to handle big data pertaining to architecture, traffic, and other environments that can be utilised in the field of architecture. Third, by using the brain wave data and EEG-based hybrid deep-learning model, the study has demonstrated the possibility of helping the designer in the architectural design step to grasp the emotional responses of future users or clients towards proposed design alternatives. However, in this study, it was possible to utilise brain wave data as indicators of the emotional responses or preferences of subjects only in limited environments.

In future studies, the following research activities should be considered. The proposed hybrid deep-learning model should be applied to actual design processes. The hybrid deep-learning model should be evaluated and verified. Experiments should be performed for recording additional brain wave data to facilitate the universal application of the model. The EEG-based hybrid deep-learning model should be combined with an eye tracking system for the evaluation of the emotional responses of users towards actual spatial environments.

Acknowledgments

We would like to express our sincere gratitude to GARAM Architects and Associates Research and Development Center for offering a supportive environment to complete this research.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Caramihale, T., D. Popescu, and L. Ichim. 2018. “Emotion Classification Using a TensorFlow Generative Adversarial Networks Implementation.” Symmetry 10 (9): 414. doi:10.3390/sym10090414.

- Chang, S. W., W. H. Dong, and H. J. Jun. 2018. “EEG-Based Deep Neural Networks Classification Model for Recognizing Emotion of Users in Early Phase of Design.” Journal of the Architectural Institute of Korea Planning & Design 34 (12): 85–94.

- Ekman, P., and W. V. Friesen. 1971. “Constants across Cultures in the Face and Emotion.” Journal of Personality and Social Psychology 17 (2): 124–129. doi:10.1037/h0030377.

- Emotiv. 2018. “EMOTIV EPOC+, EPOC+ Headset Details.” Accessed 27 September 2018. https://emotiv.gitbooks.io/epoc-user-manual/content/epoc+_headset_details/

- Goodfellow, I., J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. 2014. “Generative Adversarial Nets.” In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, Canada, 2 vols, 2672–2680.

- Han, J., Z. Zhang, Z. Ren, F. Ringeval, and B. Schuller. 2018. “Towards Conditional Adversarial Training for Predicting Emotions from Speech.” In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, Alberta, Canada, 6822–6826.

- Hinton, G. E., and R. R. Salakhutdinov. 2006. “Reducing the Dimensionality of Data with Neural Networks.” Science 313 (5786): 504–507. doi:10.1126/science.1127647.

- Hwang, Y. S., J. Y. Kim, A. L. Chang, E. Y. Lim, and H. W. Jung. 2014. “Planning of Apartment Community Facilities According to EEG Analysis by School Age of Youth Emotional Words.” Korean Institute of Interior Design Journal 23 (4): 181–189. doi:10.14774/JKIID.2014.23.4.181.

- Hwang, Y. S., S. Y. Kim, and J. Y. Kim. 2013. “An Analysis of Youth EEG Based on the Emotional Color Scheme Images by Different Space of Community Facilities.” Korean Institute of Interior Design Journal 22 (5): 171–178. doi:10.14774/JKIID.2013.22.5.171.

- Jain, A. K., R. P. W. Duin, and J. Mao. 2000. “Statistical Pattern Recognition: A Review.” IEEE Transactions on Pattern Analysis and Machine Intelligence 22 (1): 4–37. doi:10.1109/34.824819.

- Kiviniemi, A. 2005. “Requirements Management Interface to Building Product Models.” Thesis, Stanford University.

- Lan, L., and Z. Lian. 2009. “Use of Neurobehavioral Tests to Evaluate the Effects of Indoor Environment Quality on Productivity.” Building and Environment 44 (11): 2208–2217. doi:10.1016/j.buildenv.2009.02.001.

- Lan, L., Z. W. Lian, and Y. B. Lin. 2016. “Comfortably Cool Bedroom Environment during the Initial Phase of the Sleeping Period Delays the Onset of Sleep in Summer.” Building and Environment 103: 36–43. doi:10.1016/j.buildenv.2016.03.030.

- Lan, L., L. Pan, Z. Lian, H. Huang, and Y. Lin. 2013. “Experimental Study on Thermal Comfort of Sleeping People at Different Air Temperatures.” Building and Environment 73: 24–31. doi:10.1016/j.buildenv.2013.11.024.

- Lee, H. J., Y. L. Choi, and C. Y. Chun. 2012. “Effect of Indoor Air Temperature on the Occupants’ Attention Ability Based on Electroencephalogram Analysis.” Journal of the Architectural Institute of Korea Planning & Design 28 (3): 217–225.

- Luo, Y., and B. Lu. 2018. “EEG Data Augmentation for Emotion Recognition Using a Conditional Wasserstein GAN.” In 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, Hawaii, 2535–2538.

- Pan, L., Z. Lian, and L. Lan. 2011. “Investigation of Gender Differences in Sleeping Comfort at Different Environmental Temperatures.” Indoor and Built Environment 21 (6): 811–820. doi:10.1177/1420326X11425967.

- Ryu, J. S., and J. S. Lee. 2015. “Correlation Analysis of Emotional Adjectives and EEG to Apply Color to the Indoor Living Space.” Journal of Korea Society of Color Studies 29 (3): 25–35. doi:10.17289/jkscs.29.3.201508.25.

- Schomer, D., and F. Silva. 2010. Niedermeyer’s Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. 6th ed. Philadelphia, Pennsylvania, United States: Lippincott Williams & Wilkins.

- Sebastian, R. 2007. “Managing Collaborative Design.” Doctoral Thesis, Delft University of Technology.

- Shen, W., X. Zhang, G. Q. Shen, and T. Fernando. 2013. “The User Pre-Occupancy Evaluation Method in Designer–Client Communication in Early Design Stage: A Case Study.” Automation in Construction 32: 112–124. doi:10.1016/j.autcon.2013.01.014.

- Siva, J., and K. London. 2012. “Client Learning for Successful Architect‐Client Relationship.” Engineering, Construction and Architectural Management 19 (3): 253–268. doi:10.1108/09699981211219599.

- TensorFlow. 2018. Accessed 12 November 2018. https://www.tensorflow.org/

- Watson, D., L. A. Clark, and A. Tellegen. 1988. “Development and Validation of Brief Measures of Positive and Negative Affect: The PANAS Scales.” Journal of Personality and Social Psychology 54 (6): 1063–1070. doi:10.1037/0022-3514.54.6.1063.

- Yang, H., Z. Zhang, and L. Yin. 2018. “Identity-Adaptive Facial Expression Recognition through Expression Regeneration Using Conditional Generative Adversarial Networks.” In 13th IEEE International Conference on Automatic Face & Gesture Recognition, Xi’an, China, 294–301.

- Zhang, F., S. Haddad, B. Nakisa, M. N. Rastgoo, C. Candido, D. Tjondronegoro, and R. D. Dear. 2017. “The Effects of Higher Temperature Setpoints during Summer on Office Workers’ Cognitive Load and Thermal Comfort.” Building and Environment 123: 176–188. doi:10.1016/j.buildenv.2017.06.048.