
ABSTRACT

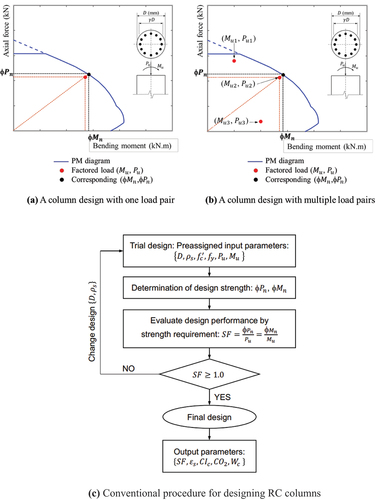

Design optimizations of round reinforced concrete columns based on artificial neural networks (ANNs) have been investigated in previous studies with only one pair of axial load ($P_u$) and bending moment ($M_u$). In this study, ANNs are generalized to multiple load pairs by reshaping their weight matrices, which avoids retraining the ANNs on large datasets. Generalized ANN-based Lagrange optimizations are proposed for the structural design of round reinforced concrete columns subject to multiple load combinations. The weight matrix of an ANN trained for one load pair is modularized so that it captures multiple factored loads. An optimal design based on the ANNs with modularized weight matrices and Lagrange optimization using the Karush–Kuhn–Tucker (KKT) conditions was performed and validated against large datasets. In the design examples, safety factors (SF) computed by the ANN-based method differ from those computed by structural mechanics by only 1%–2%, which confirms the applicability of the proposed ANNs. ANNs with modularized weight matrices can therefore aid engineers in optimizing round reinforced concrete columns subject to multiple loads.

1. Introduction

Machine learning (ML) techniques and optimization algorithms have recently seen breakthrough applications in civil engineering. An ANN is an information-processing technique inspired by biological neural systems; it aims to discover trends between the input and output parameters of large datasets. Hong et al. (2021a) implemented ANN-based design charts for doubly reinforced concrete beams in reverse design problems. Other studies use learning algorithms that allow ANNs to learn from material and structural behaviors. ANN-based models for the structural analysis and design of RC columns were addressed by Kaarthikeyan (2016) and by Charalampakis and Papanikolaou (2021). Although these studies enabled rapid designs, they did not validate the developed ML models in practical design.

In the present study, conventional designs of round concrete columns subject to multiple loads are replaced by ANNs. Round reinforced concrete columns are usually designed to sustain axial forces ($P_u$) and bending moments ($M_u$). A pair ($P_u$, $M_u$) is regarded as one load pair, or one load point on an interaction axial force–bending moment (P-M) diagram, when designing columns. In practice, however, a column must sustain multiple load pairs, a pivotal design aspect that was rarely examined in previous studies. Lagaros and Papadrakakis (2015) tested six cases for the optimization design of RC columns, but the investigated columns sustained only one pair of $P_u$ and $M_u$ in each case. Hong and Cuong Nguyen (2022a) performed design optimization of round concrete columns based on ANNs with only one pair of axial load ($P_u$) and bending moment ($M_u$). Hong et al. (2022b) and Hong and Nguyen (2021) also studied a gradient-based approach using the Lagrange multiplier method (LMM) to optimize the designs of uniaxial RC columns considering one load pair in the strength limit state. Recently, Hong et al. (2022c) implemented ANNs and the Lagrange optimization method to design round RC columns while optimizing three objectives simultaneously, and verified the method using a non-gradient method, the Nondominated Sorting Genetic Algorithm II. The suggested approach exhibited potential for structural designs. Less common structures, such as tuned mass dampers, have also been designed by Wang et al. (2019a, 2019b, 2020a, 2020b, 2022a, 2022b, 2023a, 2023b) and Wang, Zhou, and Shi (2023a, 2023b).

Besides this introduction and the research significance, the study is divided into four main parts. Section 3 describes the methodology for the ANN-based design of a round reinforced concrete column section and constructs a generalized neural network that considers multiple load pairs. Section 4 develops a hybrid optimization design method that implements Lagrange techniques on an ANN. Section 5 validates the ANN-based optimization design, and Section 6 concludes the study. In the design examples, SFs computed by the ANN-based method differ from those computed by structural mechanics by only 1%–2%, which confirms the accuracy of the proposed ANNs. The present study builds on the ANN-based structural designs presented in the books by Hong (2019, 2023b, 2023c).

2. Research significance

Design optimizations of round reinforced concrete columns based on ANNs have been investigated in previous studies with only one pair of axial load () and bending moment (

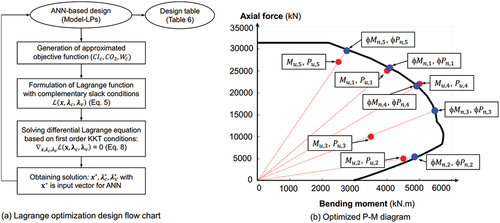

), as shown in (a). In this study, ANNs are generalized to be applicable to any number of load pairs by reshaping an architecture of ANNs without needs of retraining the ANNs. A comprehensive objective function is also extracted from ANNs, which captures multiple load combinations, as illustrated in , to allow an implementation of Lagrange Multiplier Method and Karush–Kuhn–Tucker (KKT) conditions ([Peel and Moon Citation2020)].

3. ANN-based design of round reinforced concrete column

3.1. Generation of large structural datasets

3.1.1. Forward design analysis and selection of design parameters

The conventional forward analysis used to design an RC column is shown in the corresponding figure. Design parameters, including the column dimension (diameter $D$), the rebar ratio ($\rho_s$), the strain in the rebars ($\varepsilon_s$), and material properties such as $f_c'$ and $f_y$, are used to determine the safety factor $SF$, which is the ratio of the nominal section capacity to the factored load ($P_u$, $M_u$). Design performance is evaluated against the strength requirement $SF \ge 1.0$, referring to Section 10.5 of ACI 318-19. Economy and design sustainability are estimated from the cost index ($CI_c$), the column weight ($W_c$), and $CO_2$ emissions, which are calculated from the design parameters. An interaction P-M diagram based on the nominal capacity of the column section ($P_n$, $M_n$) must be calculated when designing an RC column, and a conventional design fits all load pairs inside the P-M diagram through a trial-and-error process.
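The strength check above (every load pair must lie inside the P-M diagram, i.e., $SF \ge 1.0$ for all pairs) can be sketched in Python. This is a minimal illustration, not AutoCC: it assumes a hypothetical piecewise-linear design-strength curve in the first quadrant and takes $SF$ as the radial ratio from the origin, through the load point, to the curve. The function names and the example curve are hypothetical.

```python
def safety_factor(curve, mu, pu):
    """Radial SF: the multiplier t at which the ray t*(mu, pu) meets the curve.

    Assumption: curve is a hypothetical polyline of (M, P) vertices standing
    in for a strain-compatibility P-M diagram; (mu, pu) must not both be zero.
    """
    best = None
    for (ax, ay), (bx, by) in zip(curve, curve[1:]):
        dx, dy = bx - ax, by - ay
        det = -mu * dy + pu * dx          # determinant of [[mu, -dx], [pu, -dy]]
        if det == 0:
            continue                      # ray parallel to this segment
        t = (dx * ay - ax * dy) / det     # scale factor along the load ray
        s = (mu * ay - pu * ax) / det     # position along the segment (0..1)
        if t > 0 and 0.0 <= s <= 1.0 and (best is None or t < best):
            best = t
    if best is None:
        raise ValueError("load ray does not cross the capacity curve")
    return best


def check_load_pairs(curve, pairs):
    """Return the SF of every (Mu, Pu) pair and whether all satisfy SF >= 1.0."""
    sfs = [safety_factor(curve, mu, pu) for mu, pu in pairs]
    return sfs, all(sf >= 1.0 for sf in sfs)


# Hypothetical straight-line capacity curve M/400 + P/1000 = 1 (consistent units)
curve = [(0.0, 1000.0), (400.0, 0.0)]
sfs, ok = check_load_pairs(curve, [(100.0, 250.0), (200.0, 500.0), (300.0, 600.0)])
```

Here the first two pairs give SF = 2.0 and 1.0, while the third lies outside the curve (SF ≈ 0.74), so the trial section fails and a conventional design would be revised by trial and error.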

When generating the large datasets, $SF$ values are selected as random variables instead of the factored loads $P_u$ and $M_u$. The eccentricity of the applied axial load is calculated when constructing P-M diagrams; however, it is neither generated in the large datasets nor trained by the network. Six inputs ($D$, $\rho_s$, $f_c'$, $f_y$, $P_u$, $M_u$) are selected to represent the design requirements, as listed in Table 1, whereas other parameters, such as $SF$ and $\varepsilon_s$, are calculated on the output side. $CI_c$, $W_c$, and $CO_2$ emissions are also treated as output parameters.

Table 1. Design parameters’ nomenclature.

3.1.2. Generation of large structural datasets

A forward analysis of round RC columns was first performed to select the design input and output parameters for generating big datasets. A strain-compatibility-based structural analysis program for RC columns, called AutoCC, was written in MATLAB. AutoCC implements a conventional design following the Building Code Requirements for Structural Concrete (ACI 318-19) (ACI Committee 2019), as shown in the corresponding figure. Big structural datasets are generated by AutoCC: each input parameter is randomly selected within its defined range (Table 2), and the corresponding outputs are calculated. Each data sample therefore represents one set of randomly selected inputs and the correspondingly calculated outputs. The generated datasets are divided into training (70%), testing (15%), and validation (15%) subsets; the training subset is used to train the ANN, and the remaining two subsets are used to test and validate the trained ANN.

Table 2. Ranges used to generate the large datasets with the structural mechanics-based software AutoCC.

One hundred thousand structural datasets are generated using AutoCC. The input parameters are randomized within the ranges specified in Table 2, and the corresponding output parameters are calculated by AutoCC from the randomly selected inputs. In particular, $f_c'$ and $f_y$ are randomized within 20–70 MPa and 300–550 MPa, respectively, and $D$ varies from 400 to 2000 mm. $\rho_s$ is restricted by the minimum and maximum rebar ratios of 0.01 and 0.08 in ACI 318-19. The random values of $SF$ are controlled within 0.1–3.0, whereas the eccentricity, which is not generated in the large datasets, is kept within a prescribed range. After generation, the large datasets are normalized to the range −1 to 1 before training, testing, and validation.
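The sampling, 70/15/15 split, and normalization described in this subsection can be sketched in Python as follows. This is a minimal sketch, assuming uniform sampling within the quoted ranges; the dictionary keys and function names are hypothetical, and the AutoCC analysis that would compute $P_u$, $M_u$, and the outputs for each sample is not reproduced. The normalization uses the same linear min–max map as MATLAB's `mapminmax` with its default [−1, 1] range.

```python
import random

# Sampling ranges quoted in Section 3.1.2; the key names are hypothetical.
RANGES = {
    "D_mm": (400.0, 2000.0),
    "rho_s": (0.01, 0.08),
    "fc_MPa": (20.0, 70.0),
    "fy_MPa": (300.0, 550.0),
    "SF": (0.1, 3.0),
}


def sample_inputs(n, seed=0):
    """Draw n samples uniformly within each range (outputs would come from AutoCC)."""
    rng = random.Random(seed)
    return [{key: rng.uniform(lo, hi) for key, (lo, hi) in RANGES.items()} for _ in range(n)]


def split_70_15_15(samples, seed=0):
    """Shuffle, then split into training (70%), testing (15%), and validation (15%)."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    n_train = round(0.70 * len(idx))
    n_test = round(0.15 * len(idx))
    train_set = [samples[i] for i in idx[:n_train]]
    test_set = [samples[i] for i in idx[n_train:n_train + n_test]]
    val_set = [samples[i] for i in idx[n_train + n_test:]]
    return train_set, test_set, val_set


def normalize(values):
    """Linear min-max map of one variable to [-1, 1]."""
    lo, hi = min(values), max(values)
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]


data = sample_inputs(1000, seed=1)
train_set, test_set, val_set = split_70_15_15(data, seed=2)
fc_scaled = normalize([s["fc_MPa"] for s in data])
```

Each variable is scaled separately, so the minimum and maximum of every column map exactly to −1 and 1; the same scaling must be undone on the network outputs.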

3.2. Formulation of networks with multiple load pairs by reusing weight matrices with one load pair

3.2.1. Topology of Model-1LP and Model-LPs

An ANN-based design of round RC columns with one load pair (Model-1LP) uses design parameters including the column diameter $D$, the rebar ratio $\rho_s$, material properties (the compressive strength of concrete $f_c'$ and the yield strength of reinforcement $f_y$), and factored loads (axial load $P_u$ and bending moment $M_u$) as the inputs of an ANN. The corresponding outputs include the safety factor $SF$, the rebar strain $\varepsilon_s$, the cost index ($CI_c$), the column weight ($W_c$), and $CO_2$ emissions. When multiple load pairs are considered in an ANN (Model-LPs), the numbers of inputs and outputs used by Model-1LP increase, which changes the network topology. To handle this change, Model-1LP is first trained with one load pair; the components of its weight matrices corresponding to the load pair ($P_u$, $M_u$) are then reused to formulate the weight matrices of a generalized ANN (Model-LPs) with multiple load pairs. Because the weight matrices of Model-LPs are duplicated from Model-1LP, Model-LPs does not need to be retrained. The duplicated weight matrices also allow objective functions, such as cost and $CO_2$ emissions, to be derived and optimized through Lagrange functions with the LMM (Section 4). Because the trained networks map input parameters to output parameters, they provide well-behaved, well-defined functions that can replace explicit mathematical expressions in the Lagrange function. The corresponding figure compares the ANN-based design process with the conventional design.

3.2.2. Network duplication

The n input load pairs ($P_{u,i}$, $M_{u,i}$), i = 1, …, n, result in n outputs $SF_i$ and $\varepsilon_{s,i}$, respectively. In an ANN, output parameters are mapped from input parameters, so the mapped networks represent interconnected neural relationships between output and input parameters. When the number of input parameters increases to consider multiple load pairs in Model-LPs, the weight matrix between the input layer and Hidden Layer 1 changes. The components of the Model-1LP weight matrix that correspond to one load pair can therefore be duplicated and modularized to formulate the weight matrices of a generalized ANN (Model-LPs) with multiple load pairs. After Hidden Layer 1, however, the topology of Model-LPs is unaltered because its subsequent hidden layers and neurons are identical to those of Model-1LP. Hence, beyond Hidden Layer 1, the mapping that links each output $SF_i$ of Model-LPs to its input parameters can be treated in the same way as the mapping that links the single $SF$ of Model-1LP to its inputs.

The corresponding figure shows the topology of Model-1LP for designing round RC columns subject to one load pair. Practical designs of round RC columns, however, must simultaneously capture multiple load pairs. In the generalized Model-LPs for n load pairs ($P_{u,i}$, $M_{u,i}$, i = 1, …, n), 2n + 4 input variables are considered in the input layer instead of the six input parameters ($D$, $\rho_s$, $f_c'$, $f_y$, $P_u$, $M_u$) of Model-1LP. The 2n + 4 input parameters consist of the 2n components of the n load pairs ($P_{u,i}$, $M_{u,i}$) plus the four input parameters ($D$, $\rho_s$, $f_c'$, $f_y$), which are unaffected by the duplication. The output parameters are likewise extended to n load pairs by adding $SF_i$ and $\varepsilon_{s,i}$ (i = 1, …, n) on the output side. The enlarged set of 2n + 4 inputs directly affects the mapping from the input layer to Hidden Layer 1 and alters only the weight matrix of the first hidden layer, $W^1$; the remaining hidden layers of Model-LPs are the same as those of Model-1LP.

Equation (1) represents an ANN for one output parameter with one load pair (Villarrubia et al. 2018), in which the input vector $\mathbf{x}$ contains the normalized values of $D$, $\rho_s$, $f_c'$, $f_y$, $P_u$, and $M_u$. The neural values of the first hidden layer (Hidden Layer 1) are calculated from $\mathbf{x}$ using the first weight matrix $W^1$ and bias vector $\mathbf{b}^1$, and are activated by the tansig activation function $f_t$. The neural values of each successive hidden layer are calculated using the weight matrix $W^k$ and bias vector $\mathbf{b}^k$, where k denotes the kth hidden layer. Note that the best training of the output parameter $SF$ uses 10 hidden layers with 20 neurons each, as shown in Table 3. A single neuron value is calculated at the output layer, activated by the linear activation function $f_{lin}$, and then de-normalized to obtain the output on its original scale:

$$\mathbf{a}^{1} = f_t(W^{1}\mathbf{x} + \mathbf{b}^{1}), \quad \mathbf{a}^{k} = f_t(W^{k}\mathbf{a}^{k-1} + \mathbf{b}^{k})\ (k = 2, \dots, L), \quad SF = N^{-1}\big(f_{lin}(W^{L+1}\mathbf{a}^{L} + b^{L+1})\big) \quad (1)$$

where L is the number of hidden layers (L = 10 for $SF$) and $N^{-1}$ denotes de-normalization from [−1, 1].
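Equation (1) amounts to a chain of tansig layers followed by a single linear output neuron and de-normalization. The sketch below evaluates such a network in plain Python; it assumes the de-normalization inverts the [−1, 1] min–max scaling used for the datasets, and the function names and layer format are hypothetical (the trained weights are not published here). MATLAB's tansig is mathematically identical to tanh, so `math.tanh` is used.

```python
import math


def dense(W, b, x):
    """Affine map W x + b, with W given as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(x_norm, hidden, w_out, b_out, y_min, y_max):
    """Evaluate a single-output network of the form of Eq. (1).

    hidden       -- list of (W^k, b^k) pairs for the tansig hidden layers
    w_out, b_out -- weights and bias of the single linear output neuron
    y_min, y_max -- output range assumed for the [-1, 1] normalization
    """
    a = list(x_norm)
    for W, b in hidden:
        a = [math.tanh(z) for z in dense(W, b, a)]            # tansig == tanh
    y_norm = sum(w * ai for w, ai in zip(w_out, a)) + b_out    # linear output
    return (y_norm + 1.0) / 2.0 * (y_max - y_min) + y_min      # de-normalize


# Tiny hypothetical network: one hidden neuron with zero weights
y = forward([0.3, -0.7], [([[0.0, 0.0]], [0.0])], [1.0], 0.5, 0.0, 2.0)  # y_norm = 0.5
```

With zero hidden weights the hidden activation is tanh(0) = 0, so the normalized output equals the output bias (0.5), which de-normalizes to 1.5 on the range [0, 2].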

Table 3. Training results and accuracies from using tansig activation function.

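Equation (2) describes an ANN for the output parameters $SF_i$ with n load pairs (Villarrubia et al. 2018), using the tansig activation $f_t$, the linear output activation $f_{lin}$, and the de-normalization $N^{-1}$ as in Eq. (1). The weight matrices of Model-LPs are obtained from Model-1LP. The enlarged input vector $\mathbf{x}$ contains 2n + 4 normalized variables, which increases the size of the first-hidden-layer weight matrix from the $N_1 \times 6$ matrix $W^1$ in Eq. (1) to an $N_1 \times (2n+4)$ matrix $W^1_i$ in Eq. (2), where $N_1$ is the number of neurons in Hidden Layer 1. The other weight and bias matrices of the hidden and output layers are unchanged, as shown in Eq. (2) and in the topology of network Model-LPs:

$$\mathbf{a}^{1}_i = f_t(W^{1}_i\mathbf{x} + \mathbf{b}^{1}), \quad \mathbf{a}^{k}_i = f_t(W^{k}\mathbf{a}^{k-1}_i + \mathbf{b}^{k})\ (k = 2, \dots, L), \quad SF_i = N^{-1}\big(f_{lin}(W^{L+1}\mathbf{a}^{L}_i + b^{L+1})\big), \quad i = 1, \dots, n \quad (2)$$

The output $SF$ in Eq. (1), calculated by Model-1LP with the load pair ($P_{u,i}$, $M_{u,i}$), is therefore equivalent to $SF_i$ in Eq. (2), calculated by Model-LPs and governed by the i-th load pair ($P_{u,i}$, $M_{u,i}$).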

3.2.3. Formulation of weight matrices with multiple load pairs

An output $SF_i$ in Model-LPs should have a mapping trait similar to that of the output $SF$ in Model-1LP, because the load-pair components of the Hidden Layer 1 weight matrix $W^1_i$ of Model-LPs are duplicated from $W^1$ of Model-1LP given in Eq. (3) (Hong 2023a).

The weight matrix at the first hidden layer of Model-LPs subject to multiple load pairs is derived as follows. First, the weight matrix is derived for the case in which Load Pair 1 ($P_{u,1}$, $M_{u,1}$) is applied. The Hidden Layer 1 weight matrix for $SF_1$ of Model-LPs, $W^1_1$, given in Eq. (4) (Hong 2023a), is modified from the weight matrix of Model-1LP given in Eq. (3).

Equations (3) and (4) show that the weight components related to the first four input parameters ($D$, $\rho_s$, $f_c'$, $f_y$) are unaffected when the weight matrices of Model-LPs are formulated. Note that $SF$ is the ratio of the nominal strength to the factored load, so each $SF_i$ is governed by its corresponding load pair ($P_{u,i}$, $M_{u,i}$). The components of $W^1_1$ corresponding to the factored loads $P_{u,1}$ and $M_{u,1}$ in Eq. (4) are the same weight components associated with $P_u$ and $M_u$ in Model-1LP (Eq. (3)). The components associated with the other load pairs ($P_{u,i}$, $M_{u,i}$, i = 2, …, n) are zero because $SF_1$ is controlled only by Load Pair 1 ($P_{u,1}$, $M_{u,1}$).

Similarly, as shown in Eq. (5) (Hong 2023a), the modified weight matrix $W^1_2$ corresponding to Load Pair 2 ($P_{u,2}$, $M_{u,2}$) of Model-LPs is obtained by reusing the weight components associated with $P_u$ and $M_u$ in Model-1LP (Eq. (3)), whereas the other load-pair components are zero because Load Pair 2 ($P_{u,2}$, $M_{u,2}$) controls only $SF_2$.

The weight matrices for the remaining load pairs are obtained in the same way. As shown in Eq. (6) (Hong 2023a), the modified weight matrix $W^1_n$ corresponding to Load Pair n ($P_{u,n}$, $M_{u,n}$) of Model-LPs is obtained by reusing the weight components associated with $P_u$ and $M_u$ in Model-1LP (Eq. (3)), whereas the other load-pair components are zero because Load Pair n ($P_{u,n}$, $M_{u,n}$) controls only $SF_n$.

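At the first hidden layer of the ANN Model-1LP considering one load pair ($P_u$, $M_u$), the kth row of $W^1$ (k = 1, …, $N_1$) is:

$$W^1[k,:] = [\,w_{k,1}\ \ w_{k,2}\ \ w_{k,3}\ \ w_{k,4}\ \ w_{k,5}\ \ w_{k,6}\,] \quad (3)$$

At the first hidden layer of the ANN Model-LPs considering i = 1:

$$W^1_1[k,:] = [\,w_{k,1}\ \ w_{k,2}\ \ w_{k,3}\ \ w_{k,4}\ \ w_{k,5}\ \ w_{k,6}\ \ 0\ \ 0\ \ \cdots\ \ 0\ \ 0\,] \quad (4)$$

At the first hidden layer of the ANN Model-LPs considering i = 2:

$$W^1_2[k,:] = [\,w_{k,1}\ \ w_{k,2}\ \ w_{k,3}\ \ w_{k,4}\ \ 0\ \ 0\ \ w_{k,5}\ \ w_{k,6}\ \ \cdots\ \ 0\ \ 0\,] \quad (5)$$

At the first hidden layer of the ANN Model-LPs considering i = n:

$$W^1_n[k,:] = [\,w_{k,1}\ \ w_{k,2}\ \ w_{k,3}\ \ w_{k,4}\ \ 0\ \ 0\ \ \cdots\ \ 0\ \ 0\ \ w_{k,5}\ \ w_{k,6}\,] \quad (6)$$

Here columns 1–4 multiply $D$, $\rho_s$, $f_c'$, and $f_y$, columns 5 and 6 of Eq. (3) multiply $P_u$ and $M_u$, and in Eqs. (4)–(6) the load pair ($P_{u,j}$, $M_{u,j}$) occupies columns 2j + 3 and 2j + 4.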

Duplication of the weight matrix is performed similarly for the other load-dependent output, $\varepsilon_{s,i}$. The other outputs, $CI_c$, $W_c$, and $CO_2$, are not directly affected by multiple load pairs, so their modularized weight matrices are obtained by adding zero components. Network Model-LPs with multiple load pairs is therefore not obtained through training but by adding the components related to the applied load pairs, and its outputs inherit the characteristics of Model-1LP. For example, an output $SF_i$ in Model-LPs should have a mapping trait similar to that of the output $SF$ in Model-1LP, because the load-pair components of its Hidden Layer 1 weight matrix $W^1_i$ are reused from $W^1$ of Model-1LP given in Eq. (3).

The procedure to obtain a generalized ANN capturing multiple load pairs in the design of round RC columns is summarized in the following four steps.

Step 1: Selection of input and output parameters for ANNs based on a forward analysis of round RC columns considering one load pair.

Step 2: Large datasets are generated by structural analysis software (AutoCC) after the selection of input and output parameters in Step 1.

Step 3: The weight matrices of the ANN with one load pair, Model-1LP, are obtained by training the ANN on the large datasets.

Step 4: The weight components associated with $P_u$ and $M_u$ in Model-1LP are reused as the weight components of Model-LPs, yielding a generalized ANN with multiple load pairs.
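Steps 3 and 4 can be illustrated with a small Python sketch of the weight modularization in Eqs. (3)–(6). It assumes the input order [D, ρ_s, f_c', f_y, P_u, M_u] for Model-1LP and [D, ρ_s, f_c', f_y, P_u,1, M_u,1, …, P_u,n, M_u,n] for Model-LPs; the function names are hypothetical. The check at the end confirms that the Hidden Layer 1 activations for $SF_i$ in Model-LPs equal those of Model-1LP evaluated with the i-th load pair, so the shared later layers necessarily return the same $SF_i$.

```python
import math


def model_lps_input(D, rho_s, fc, fy, pairs):
    """Model-LPs input: 4 section/material inputs followed by n (Pu, Mu) pairs."""
    x = [D, rho_s, fc, fy]
    for pu, mu in pairs:
        x.extend([pu, mu])
    return x                                          # length 2n + 4


def modularize_first_layer(W1, n_pairs, i):
    """Hidden Layer 1 matrix of Model-LPs for output SF_i (1-based i).

    W1 -- Model-1LP first-layer weights, one row of 6 values per neuron,
          assumed ordered [D, rho_s, fc, fy, Pu, Mu].
    """
    rows = []
    for row in W1:
        new = list(row[:4]) + [0.0] * (2 * n_pairs)   # keep D, rho_s, fc, fy weights
        new[4 + 2 * (i - 1)] = row[4]                 # reuse the Pu weight for P_u,i
        new[5 + 2 * (i - 1)] = row[5]                 # reuse the Mu weight for M_u,i
        rows.append(new)
    return rows


def first_layer(W, b, x):
    """tansig(W x + b) for one hidden layer (tansig == tanh)."""
    return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi) for row, bi in zip(W, b)]


# Hypothetical trained weights for a 2-neuron Hidden Layer 1
W1 = [[0.1, -0.2, 0.3, 0.4, 0.5, -0.6], [0.7, 0.8, -0.9, 0.15, -0.25, 0.35]]
b1 = [0.05, -0.05]
pairs = [(0.2, -0.1), (0.5, 0.3), (-0.4, 0.9)]       # normalized (Pu, Mu) values
x = model_lps_input(0.1, -0.2, 0.3, 0.4, pairs)
```

Only the first layer is enlarged; because all other components are zero, no retraining is needed for any number of load pairs.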

3.3. Network training

Model-1LP, which represents an ANN with one load pair, is trained with 5 and 10 hidden layers, each containing 20, 50, or 80 neurons. The ANNs are trained using the parallel training method (Hong, Dat Pham, and Tien Nguyen 2022), in which each output parameter is mapped from all input parameters using the MATLAB training toolbox (MathWorks 2022b–f), leading to five networks, one per output parameter. A validation subset is used to avoid overfitting, and a test subset is used to assess training performance; the test mean squared error (MSE) on unseen data measures training efficiency. Tables 3 and 4 list the training results and indicate the best numbers of layers and neurons for two activation functions at the hidden-layer nodes, tansig and ReLU, each evaluated with 5 and 10 layers and 20, 50, and 80 neurons. As shown in Table 3, networks trained with the tansig activation function perform better than those trained with the ReLU activation function (Table 4). Hence, the tansig activation function is used at the hidden layers in the present study, whereas the linear activation function is used at the output layer.

Table 4. Training results and accuracies from using ReLU activation function.

4. Optimization design using Lagrange multiplier method

4.1. Formulation of Lagrange optimization function

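Equation (7) describes a general constrained optimization problem, where $f(\mathbf{x})$ is the objective function of the input vector $\mathbf{x}$, and $h_i(\mathbf{x})$ and $g_j(\mathbf{x})$ are the equality and inequality constraint equations, respectively:

$$\min_{\mathbf{x}} f(\mathbf{x}) \quad \text{subject to} \quad h_i(\mathbf{x}) = 0\ (i = 1, \dots, m), \quad g_j(\mathbf{x}) \le 0\ (j = 1, \dots, p) \quad (7)$$

Any optimal solution of Eq. (7) belongs to the feasible set $\{\mathbf{x} : h_i(\mathbf{x}) = 0,\ g_j(\mathbf{x}) \le 0\}$. The LMM associates the objective function $f(\mathbf{x})$ with the constraints by applying Lagrange multipliers $\lambda_i$ and $\mu_j$ to the equality and inequality constraints, respectively. These multipliers transform the constrained multivariate problem into an unconstrained Lagrange function (Lagrange 1804), which allows conventional optimization algorithms to be implemented:

$$\mathcal{L}(\mathbf{x}, \boldsymbol{\lambda}, \boldsymbol{\mu}) = f(\mathbf{x}) + \sum_{i=1}^{m} \lambda_i h_i(\mathbf{x}) + \sum_{j=1}^{p} \mu_j g_j(\mathbf{x}) \quad (8)$$

The solutions of the optimization problem are critical points at which the gradient of $f(\mathbf{x})$ and the gradients of the constraint functions $h_i(\mathbf{x})$ and $g_j(\mathbf{x})$ are aligned through the multiples $\lambda_i$ and $\mu_j$. The stationary points of the Lagrange function $\mathcal{L}$ must satisfy the first-order necessary conditions for optimality, known as the KKT conditions (Peel and Moon 2020).

The inequality term of the Lagrange function is written with a diagonal matrix $\Sigma$ (Eq. (9)), which takes the complementary slackness condition into account:

$$\mathcal{L}(\mathbf{x}, \boldsymbol{\lambda}, \boldsymbol{\mu}) = f(\mathbf{x}) + \boldsymbol{\lambda}^{T}\mathbf{h}(\mathbf{x}) + \boldsymbol{\mu}^{T}\Sigma\,\mathbf{g}(\mathbf{x}), \qquad \Sigma = \operatorname{diag}(\sigma_1, \dots, \sigma_p) \quad (9)$$

Each value $\sigma_j$ indicates the status of the inequality $g_j$: an active inequality is represented by $\sigma_j = 1$, whereas $\sigma_j = 0$ when the inequality is inactive.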

4.2. Solution of Lagrange function based on KKT conditions

Solutions of the optimization problem are obtained by solving the KKT system of equations and inequalities together with the complementary slackness condition. The partial derivatives of the Lagrange function with respect to $x_i$, $\lambda_i$, and $\mu_j$ are first derived in the form of a gradient matrix (Eqs. (10) and (11)) to solve the first KKT condition, which seeks the stationary points where the gradient vanishes. Because the Lagrange function is multidimensional, its first-order derivative is nonlinear and difficult to solve analytically. The gradient matrix (Eqs. (10) and (11)) is therefore linearized about the current iterate $\mathbf{z}^{(t)} = [\mathbf{x}, \boldsymbol{\lambda}, \boldsymbol{\mu}]^{(t)}$, as described in Eq. (12), where $H$ is the second-order derivative (Hessian) matrix of the Lagrange function:

$$\nabla\mathcal{L}(\mathbf{z}^{(t)}) + H(\mathbf{z}^{(t)})\,\Delta\mathbf{z} = \mathbf{0} \quad (12)$$

The Newton–Raphson method (Upton and Cook 2014) updates the vector after the tth iteration in multivariate form (Eq. (13)) until convergence is achieved:

$$\mathbf{z}^{(t+1)} = \mathbf{z}^{(t)} + \Delta\mathbf{z} = \mathbf{z}^{(t)} - H(\mathbf{z}^{(t)})^{-1}\,\nabla\mathcal{L}(\mathbf{z}^{(t)}) \quad (13)$$
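The Newton–Raphson update of Eq. (13) can be sketched in plain Python. The example problem is hypothetical and chosen only because its gradient and Hessian are easy to write by hand: minimize $e^{x} + y^{2}$ subject to $x + y = 1$, with unknowns $\mathbf{z} = [x, y, \lambda]$. In the study, the objective would come from Model-LPs instead; that part is not reproduced. A small Gaussian elimination replaces the explicit matrix inverse in Eq. (13).

```python
import math


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))   # pivot row
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):                         # back substitution
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def newton_kkt(grad, hess, z0, tol=1e-12, max_iter=50):
    """Drive grad L(z) to zero: solve H(z) dz = -grad L(z), then z <- z + dz."""
    z = list(z0)
    for _ in range(max_iter):
        g = grad(z)
        if max(abs(v) for v in g) < tol:
            break
        dz = solve(hess(z), [-v for v in g])
        z = [zi + di for zi, di in zip(z, dz)]
    return z


# Hypothetical problem: L(x, y, lam) = exp(x) + y^2 + lam * (x + y - 1)
def grad_L(z):
    x, y, lam = z
    return [math.exp(x) + lam, 2.0 * y + lam, x + y - 1.0]


def hess_L(z):
    x = z[0]
    return [[math.exp(x), 0.0, 1.0], [0.0, 2.0, 1.0], [1.0, 1.0, 0.0]]


z_star = newton_kkt(grad_L, hess_L, [0.0, 0.0, 0.0])   # converges to x ≈ 0.315
```

Each iteration solves the linearized system of Eq. (12); near the solution the residual shrinks quadratically, so only a few iterations are needed.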

Any solution of Eq. (10) is a critical point ($\mathbf{x}^*$, $\boldsymbol{\lambda}^*$, $\boldsymbol{\mu}^*$). These points can be local minima, global minima, or saddle points. Consequently, a test for optimality is required: the objective values $f(\mathbf{x}^*)$ at the critical points are compared to ensure that the targeted solution is the global extremum. Note that each possible critical point corresponds to a particular KKT condition regarding the status (active or inactive) of the inequalities.
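The optimality test can be illustrated by enumerating every active/inactive pattern of the inequalities, which plays the role of the $\sigma_j$ values in the diagonal matrix of Eq. (9). The sketch uses a hypothetical quadratic objective with two linear inequalities so that each KKT system is linear; in the study, the functions come from Model-LPs and each system would be solved by Newton–Raphson. A candidate is kept only if it is primal feasible ($g_j \le 0$) and dual feasible ($\mu_j \ge 0$), and the feasible critical point with the lowest objective is reported.

```python
from itertools import product


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


TARGET = (3.0, 2.0)                    # hypothetical f = (x - 3)^2 + (y - 2)^2
CONS = [((1.0, 1.0), 4.0),             # hypothetical g1 = x + y - 4 <= 0
        ((-1.0, 0.0), 0.0)]            # hypothetical g2 = -x <= 0


def f(z):
    return (z[0] - TARGET[0]) ** 2 + (z[1] - TARGET[1]) ** 2


def g(j, z):
    (cx, cy), d = CONS[j]
    return cx * z[0] + cy * z[1] - d


def kkt_system(sigma):
    """Linear KKT system in [x, y, mu_1, ..., mu_p] for one activity pattern."""
    p = len(CONS)
    A = [[0.0] * (2 + p) for _ in range(2 + p)]
    b = [0.0] * (2 + p)
    for k in range(2):                         # stationarity: df/dz_k + sum mu_j dg_j/dz_k = 0
        A[k][k] = 2.0
        b[k] = 2.0 * TARGET[k]
        for j, (c, _) in enumerate(CONS):
            A[k][2 + j] = c[k]
    for j, (c, d) in enumerate(CONS):
        if sigma[j]:                           # sigma_j = 1: active, g_j(z) = 0
            A[2 + j][0], A[2 + j][1] = c
            b[2 + j] = d
        else:                                  # sigma_j = 0: inactive, mu_j = 0
            A[2 + j][2 + j] = 1.0
    return A, b


def best_active_set(tol=1e-9):
    best = None
    for sigma in product((0, 1), repeat=len(CONS)):
        sol = solve(*kkt_system(sigma))
        z, mu = sol[:2], sol[2:]
        primal_ok = all(g(j, z) <= tol for j in range(len(CONS)))
        dual_ok = all(m >= -tol for m in mu)
        if primal_ok and dual_ok and (best is None or f(z) < best[0]):
            best = (f(z), z, mu, sigma)
    return best


f_best, z_best, mu_best, sigma_best = best_active_set()
```

For this toy problem only the pattern with $g_1$ active survives both checks, giving x = 2.5, y = 1.5, and $\mu_1$ = 1.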

5. Results and validations of ANN-based optimization design

5.1. Application of generalized Model-LPs to an optimization of round RC columns

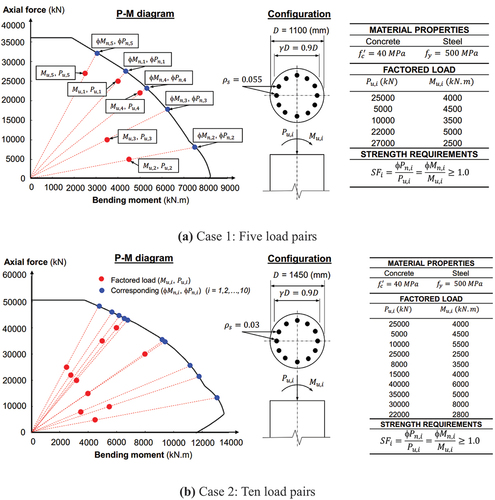

The training accuracies of the ANNs, measured by MSE, are presented in Table 3. Two forward designs are discussed, in which the designed sections shown in the corresponding figure are verified for strength requirements; each load pair is represented by one point inside the interaction P-M diagram. The input parameters $\mathbf{x}$ are the preassigned values of $D$, $\rho_s$, $f_c'$, $f_y$, and ($P_{u,i}$, $M_{u,i}$). The output parameters are determined by the generalized ANN (Model-LPs) and validated by the structural analysis software AutoCC to investigate the design accuracy. Table 5 presents the results of Design Case 1, which considers five load pairs for a column with $D$ = 1100 mm and $\rho_s$ = 0.055. Table 6 presents Design Case 2, which captures ten load pairs with $D$ = 1450 mm and $\rho_s$ = 0.03. $f_c'$ = 40 MPa and $f_y$ = 500 MPa are used in both cases. The design parameters obtained by Model-LPs agree well with those calculated by AutoCC based on structural mechanics; the calculation errors in both cases generally range from 0% to 3%.

Table 5. ANN-based design Case 1.

Table 6. ANN-based design Case 2.

5.2. Application of hybrid optimization design

The two forward design cases above, with five and ten load pairs, calculate the output parameters from preassigned input parameters without optimization. Next, an optimized design with five load pairs is presented, in which the column diameter and rebar ratio are optimized by ANN-based Lagrange optimization. The rebar ratio ranges from 0.01 to 0.08, as imposed by the minimum and maximum rebar requirements for RC columns in ACI 318-19, and $D$ is limited to 400–2000 mm for practical applicability. The given factored loads ($P_{u,i}$, $M_{u,i}$) are five load pairs similar to those in Design Case 1 (Table 5). The preassigned factored loads and material properties (steel and concrete) are imposed through equality constraint equations, whereas the limits on the rebar ratio and column diameter are formulated as inequality constraint functions (Table 7). The safety factors $SF_i$ governed by load pair i are also set as inequalities ($SF_i \ge 1.0$) to meet the design strength requirements. The optimization design can minimize $CI_c$, $W_c$, or $CO_2$ emissions; the objective in this example is to minimize cost. The objective function $CI_c$ is derived from the generalized ANN, Model-LPs. The constraint equations (Table 7) and the objective function are used to formulate the Lagrange function. Its first-order derivatives are derived, and the resulting equations are linearized before applying the Newton–Raphson method, as discussed in Section 4. Optimal values of $D$ and $\rho_s$ satisfying the constraint conditions are found in the input vector $\mathbf{x}$ reported by the Lagrange optimization. The output parameters $SF_i$, $\varepsilon_{s,i}$, $CI_c$, $W_c$, and $CO_2$ corresponding to each load pair are obtained by Model-LPs and also calculated by AutoCC to confirm the design accuracy of the ANN.

$D$ and $\rho_s$ are optimized as 1272.8 mm and 0.01, respectively, which minimizes the cost $CI_c$ to 225,772 KRW/m, as shown in Table 8. The optimized P-M diagram in the corresponding figure is plotted from the optimized design results in Table 8, and all load points (red points) lie inside it. In particular, the governing safety factors are close to 1.0, indicating an adequate, non-conservative design that is difficult to obtain through the conventional design procedure.

Table 7. Factored load and formulation of inequality and equality constraints.

Table 8. Design table for ANN-based Lagrange optimization.

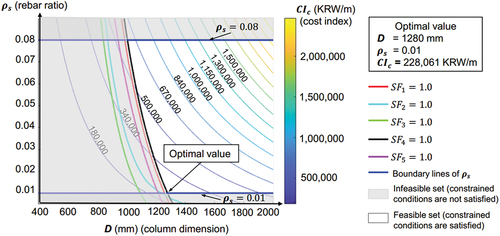

5.3. Validation of optimized design by large datasets

Five million datasets were generated to validate the efficiency of the optimized design obtained by the generalized ANN (Model-LPs). Figure 6 shows the contour lines of the objective function ($CI_c$) and of the $SF_i$ values corresponding to the five load pairs, with $D$ (constrained to 400–2000 mm) on the x-axis and $\rho_s$ on the y-axis. The optimal design identified by the brute-force method (large datasets) for cost minimization (Table 9) is 228,061 KRW/m, which differs by 1.01% from the cost calculated by ANN Model-LPs; $D$ and $\rho_s$ were optimized as 1280 mm and 0.01001, respectively, as indicated in Table 9. The optimal design lies on the black line at which the governing safety factor equals 1.0, as clearly seen in Figure 6. The accepted design is located inside the feasible set, demonstrating that all constraint conditions are satisfied. Any region below the governing $SF = 1.0$ line is infeasible, whereas the $SF = 1.0$ contours of the other four load pairs lie below that line, indicating that these four safety factors did not reach 1.0 at the optimum, as shown in Figure 6. The optimized design based on ANN-based Lagrange optimization (225,772 KRW/m, Table 8) is obtained with $\rho_s$ = 0.01, for which the KKT condition makes the inequality $\rho_s \ge 0.01$ active, and with the governing safety factor equal to 1.0, for which the corresponding inequality $SF \ge 1.0$ is active. The other inequalities related to the safety factors are inactive.
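The brute-force validation amounts to evaluating the objective on a dense grid of candidate designs and keeping the cheapest feasible one. The sketch below shows that structure with hypothetical toy surrogates in place of the trained networks (they are not the study's cost or SF models), with the rebar ratio expressed in per mille so the feasibility test stays exact. The last line recomputes the 1.01% difference from the two reported costs.

```python
def brute_force_min(cost, constraints, D_grid, k_grid):
    """Return (cost, D, k) of the cheapest grid point satisfying all constraints."""
    best = None
    for D in D_grid:
        for k in k_grid:
            if all(ok(D, k) for ok in constraints):
                value = cost(D, k)
                if best is None or value < best[0]:
                    best = (value, D, k)
    return best


def relative_difference_percent(reference, value):
    """Percentage difference of value relative to reference."""
    return abs(value - reference) / abs(reference) * 100.0


# Hypothetical toy surrogates (not the study's networks); k = rebar ratio in per mille
def toy_cost(D, k):
    return D + 20 * k


def toy_sf_at_least_one(D, k):
    return D * (100 + k) >= 120000     # stands in for "SF >= 1.0" of one load pair


best = brute_force_min(toy_cost, [toy_sf_at_least_one], range(400, 2001, 10), range(10, 81))
diff = relative_difference_percent(225772, 228061)   # ANN-based vs. brute-force cost
```

In the study the grid is replaced by five million randomly generated designs, but the selection logic (feasible first, then minimum cost) is the same.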

Figure 6. Verification of Lagrange optimization by five million datasets for a P-M diagram capturing five load pairs.

Table 9. Verification of AI-based optimization by large datasets.

Other design parameters are controlled by constraint conditions similar to those used in the Lagrange optimization with five load pairs (Table 7). The $SF_i$ values in these design examples are controlled within 1.0–1.2 to avoid conservative designs. In Table 10, the cost minimized by the ANN-based Lagrange hybrid optimization is compared with a typical cost of a probable design by engineers. This probable cost is estimated as the average of 251,496 datasets sorted from the five million datasets; each of these sorted datasets represents one design that an engineer could complete based on the P-M diagram capturing the five load points illustrated in Figure 6. Table 10 compares the probable cost (470,508 KRW/m) with the cost optimized by the Lagrange hybrid design (225,772 KRW/m), showing a saving of 51.53%.

Table 10. Cost comparison between ANN-based hybrid Lagrange optimization and probable design.

The data-generation, training, and optimization codes of the present study were developed using the MATLAB Deep Learning Toolbox (MathWorks 2022b), MATLAB Parallel Computing Toolbox (MathWorks 2022e), MATLAB Statistics and Machine Learning Toolbox (MathWorks 2022f), MATLAB Global Optimization Toolbox (MathWorks 2022c), MATLAB Global Optimization Toolbox (MathWorks 2022d), and MATLAB R2022a (MathWorks 2022a).

6. Summary and conclusions

This study presented ANNs with modularized weight matrices for designing round RC columns that capture multiple load pairs. The cost, CO2 emissions, or weight of round RC columns was optimized with acceptable accuracy by combining ANNs with Lagrange optimization techniques. The approach was demonstrated for round RC columns and can be extended to other structures, such as beams and frames. It can help engineers make rational rather than intuitive design decisions when seeking optimal designs. The conclusions drawn from this study are listed below.

A design of round concrete columns that considers only one load pair limits the applicability of ANN-based designs. Hence, a generalized ANN (Model-LPs) is proposed to consider multiple load pairs, that is, multiple factored loads ($P_{u,i}$, $M_{u,i}$) and their corresponding outputs ($SF_i$, $\varepsilon_{s,i}$). The weight matrices of the ANNs are reshaped, which avoids retraining on the large datasets. Large structural datasets were generated and the ANN was trained only once, with networks considering one load pair; the result was then applied to the design of round concrete columns subject to multiple load pairs by duplicating the weight components associated with $P_u$ and $M_u$ in Model-1LP, which yields a generalized Model-LPs. The proposed method derives networks that consider multiple load pairs without retraining, which reduces computational time in practical applications.

Lagrange functions are modified so that structural design optimization can cope with various complex constraint conditions by transforming them into an unconstrained optimization problem, which allows derivative-based methods to be implemented.

Cost is minimized by implementing the LMM with a generalized ANN (Model-LPs), from which an objective function $CI_c$ that considers multiple load points is extracted.

Minimized costs obtained by the proposed ANN-based hybrid optimization are validated against those extracted from five million data samples. In addition, the design results show negligible errors compared with those calculated by AutoCC, which is based on structural mechanics. Designs based on the hybrid optimization yield significant material savings compared with the probable costs estimated by engineers: the cost optimized by the Lagrange hybrid design (225,772 KRW/m) represents a 51.53% saving compared with the probable cost calculated by engineers (470,508 KRW/m). Conservative designs are also avoided by controlling the safety factors (SF) within 1.0–1.2 in these design examples.
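The accuracy and conservativeness checks above can be expressed as a short sketch. It assumes that one SF value per load pair is available from both the ANN-based design and the structural-mechanics calculation (for example, from AutoCC), and measures accuracy as the relative SF error; the function name and the SF values below are hypothetical and are not results of this study.

```python
import numpy as np


def check_safety_factors(sf_ann, sf_ref, lower=1.0, upper=1.2):
    """Compare ANN-predicted SFs with reference SFs for each load pair.

    Returns the largest relative error |SF_ann - SF_ref| / SF_ref and whether
    every reference SF lies in [lower, upper] (safe but not over-conservative).
    """
    sf_ann = np.asarray(sf_ann, dtype=float)
    sf_ref = np.asarray(sf_ref, dtype=float)
    rel_err = np.abs(sf_ann - sf_ref) / sf_ref
    in_band = bool(np.all((sf_ref >= lower) & (sf_ref <= upper)))
    return float(rel_err.max()), in_band


# Illustrative values for three load pairs (not results from this study).
max_err, ok = check_safety_factors([1.05, 1.18, 1.01], [1.04, 1.20, 1.00])
print(f"max relative SF error = {max_err:.2%}, within 1.0-1.2: {ok}")
```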

Some AI-based structural studies address specific structures for which analytical equations are not available; in such cases, big data must be collected through experiments. In contrast, the novel holistic ANN-based structural designs for real engineering applications introduced in this study collect big data directly from structural codes such as ACI, ASCE, AISI, AISC, and EC. The standards and code equations used to generate the big data have already been verified by experiments conducted by various researchers. For example, the strength reduction factors in ACI 318-19 were established on the basis of a study by MacGregor (1976), which reviewed test data from multiple studies to develop strength reduction factors accounting for variabilities in material strengths, overloading, and the severity of failure consequences.
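As an example of generating training data directly from code equations, the sketch below evaluates the ACI 318-19 strength reduction factor φ for moment and axial force (Table 21.2.2) as a function of the net tensile strain εt and tabulates it over a strain grid. It assumes εty = fy/Es with Es = 200,000 MPa and shows both the spiral and the other (tied) branches, because the transverse-reinforcement type is not stated in this section; the function name, default fy, and strain grid are illustrative.

```python
def phi_aci318_19(eps_t, fy=400.0, Es=200_000.0, spiral=True):
    """Strength reduction factor for moment/axial force, ACI 318-19 Table 21.2.2.

    eps_t: net tensile strain in the extreme tension reinforcement.
    fy, Es: yield strength and elastic modulus of the reinforcement (MPa).
    """
    eps_ty = fy / Es
    phi_c = 0.75 if spiral else 0.65  # compression-controlled value
    if eps_t <= eps_ty:
        return phi_c
    if eps_t >= eps_ty + 0.003:
        return 0.90  # tension-controlled
    # Transition zone: linear interpolation between phi_c and 0.90.
    return phi_c + (0.90 - phi_c) * (eps_t - eps_ty) / 0.003


# Tabulate phi over a strain grid, as one column of a generated dataset.
strains = [i * 0.0005 for i in range(13)]  # 0.0 to 0.006
table = [(e, phi_aci318_19(e), phi_aci318_19(e, spiral=False)) for e in strains]
for e, phi_spiral, phi_tied in table:
    print(f"eps_t = {e:.4f}: phi (spiral) = {phi_spiral:.3f}, phi (tied) = {phi_tied:.3f}")
```

In a full data-generation pipeline, φ would be evaluated alongside the section's nominal strengths for each sampled design, rather than on a fixed strain grid as here.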

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT 2019R1A2C2004965).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Won-Kee Hong

Dr. Won-Kee Hong is a Professor of Architectural Engineering at Kyung Hee University. Dr. Hong received his Master’s and Ph.D. degrees from UCLA, and he worked for Englekirk and Hart, Inc. (USA), Nihon Sekkei (Japan), and Samsung Engineering and Construction Company (Korea) before joining Kyung Hee University (Korea). He also holds professional engineering licenses in both Korea and the USA. Dr. Hong has more than 30 years of professional experience in structural engineering. His research interests include a new approach to construction technologies based on value engineering with hybrid composite structures. He has provided many useful solutions to issues in current structural design and construction technologies as a result of his research, which combines structural engineering with construction technologies. He is the author of numerous papers and patents in both Korea and the USA. Currently, Dr. Hong is developing new connections that can be used with various types of frames, including hybrid steel–concrete precast composite frames, precast frames, and steel frames. These connections would help enable the modular construction of heavy plant structures and buildings. He recently published a book titled “Hybrid Composite Precast Systems: Numerical Investigation to Construction” (Elsevier).

Thuc Anh Le

Thuc Anh Le is currently enrolled as a master’s candidate in the Department of Architectural Engineering at Kyung Hee University, Republic of Korea. Her research interests include AI and precast structures.

Manh Cuong Nguyen

Manh Cuong Nguyen is currently enrolled as a master’s candidate in the Department of Architectural Engineering at Kyung Hee University, Republic of Korea. His research interests include AI and precast structures.

References

- ACI Committee. 2019. Technical Documents. Farmington Hills, USA: American Concrete Institute.

- Charalampakis, A. E., and V. K. Papanikolaou. 2021. “Machine Learning Design of R/C Columns.” Engineering Structures 226: 111412. https://doi.org/10.1016/j.engstruct.2020.111412.

- Hong, W.-K. 2019. Hybrid Composite Precast Systems (Numerical Investigation to Construction). Alpharetta, USA: Elsevier.

- Hong, W.-K. 2023a. Artificial Neural Network-Based Optimized Design of Reinforced Concrete Structures. Taylor & Francis (CRC Press). https://doi.org/10.1201/9781003314684.

- Hong, W.-K. 2023b. Artificial Neural Network-Based Prestressed Concrete and Composite Structures. Milton Park, Abingdon-on-Thames, Oxfordshire United Kingdom: Taylor and Francis.

- Hong, W.-K. 2023c. “Artificial Neural Networks for Engineering Applications.” In Artificial Intelligence-Based Design of Reinforced Concrete Structures, 329–394. Elsevier. https://doi.org/10.1016/B978-0-443-15252-8.00004-2.

- Hong, W.-K., and M. C. Nguyen. 2022. “AI-Based Lagrange Optimization for Designing Reinforced Concrete Columns.” Journal of Asian Architecture and Building Engineering 21 (6): 2330–2344. https://doi.org/10.1080/13467581.2021.1971998.

- Hong, W.-K., M. C. Nguyen, and T. D. Pham. 2022. “Optimized Interaction P-M Diagram for Rectangular Reinforced Concrete Column Based on Artificial Neural Networks.” Journal of Asian Architecture and Building Engineering 22 (1). https://doi.org/10.1080/13467581.2021.2018697.

- Hong, W.-K., T. D. Pham, and V. T. Nguyen. 2022. “Feature Selection Based Reverse Design of Doubly Reinforced Concrete Beams.” Journal of Asian Architecture and Building Engineering 21 (4): 1472–1496. https://doi.org/10.1080/13467581.2021.1928510.

- Hong, W.-K., T. A. Le, M. C. Nguyen, and T. D. Pham. 2022. “ANN-Based Lagrange Optimization for RC Circular Columns Having Multiobjective Functions.” Journal of Asian Architecture and Building Engineering 22 (2): 961–976. https://doi.org/10.1080/13467581.2022.2064864.

- Hong, W.-K., and M. C. Nguyen. 2021. “AI-Based Lagrange Optimization for Designing Reinforced Concrete Columns.” Journal of Asian Architecture and Building Engineering 21 (6): 2330–2344. https://doi.org/10.1080/13467581.2021.1971998.

- Hong, W.-K., V. T. Nguyen, and M. C. Nguyen. 2021. “Artificial Intelligence-Based Noble Design Charts for Doubly Reinforced Concrete Beams.” Journal of Asian Architecture and Building Engineering 21 (4): 1497–1519. https://doi.org/10.1080/13467581.2021.1928511.

- Kaarthikeyan, K., and D. R. M. Shanthi. 2016. “Optimization of RC Columns Using Artificial Neural Network.” 7 (4): 219–230.

- Lagaros, N. D., and M. Papadrakakis. 2015. “Preface.” Computational Methods in Applied Sciences 38: ix–xii. https://doi.org/10.1007/978-3-319-18320-6.

- Lagrange, J. L. 1804. Leçons sur le calcul des fonctions. Paris: Imperiale.

- MacGregor, J. G. 1976. “Safety and Limit States Design for Reinforced Concrete.” Canadian Journal of Civil Engineering 3 (4): 484–513. https://doi.org/10.1139/l76-055.

- MathWorks. 2022a. MATLAB (R2022a).

- MathWorks. 2022b. “Deep Learning Toolbox: User’s Guide (R2022a).” Accessed July 26, 2022. https://www.mathworks.com/help/pdf_doc/deeplearning/nnet_ug.pdf.

- MathWorks. 2022c. “Global Optimization: User’s Guide (R2022a).” Accessed July 26, 2022. https://www.mathworks.com/help/pdf_doc/gads/gads.pdf.

- MathWorks. 2022d. “Optimization Toolbox: Documentation (R2022a).” Accessed July 26, 2022. https://uk.mathworks.com/help/optim/.

- MathWorks. 2022e. “Parallel Computing Toolbox: Documentation (R2022a).” Accessed July 26, 2022. https://uk.mathworks.com/help/parallel-computing/.

- MathWorks. 2022f. “Statistics and Machine Learning Toolbox: Documentation (R2022a).” Accessed July 26, 2022. https://uk.mathworks.com/help/stats/.

- Peel, C., and T. K. Moon. 2020. “Algorithms for Optimization [Bookshelf].” IEEE Control Systems 40 (2): 92–94. https://doi.org/10.1109/MCS.2019.2961589.

- Upton, G., and I. Cook. 2014. A Dictionary of Statistics. 3rd ed. Oxford: Oxford University Press.

- Villarrubia, G., J. F. De Paz, P. Chamoso, and F. De la Prieta. 2018. “Artificial Neural Networks Used in Optimization Problems.” Neurocomputing 272:10–16. https://doi.org/10.1016/j.neucom.2017.04.075.

- Wang, L., S. Nagarajaiah, W. Shi, and Y. Zhou. 2022b. “Seismic Performance Improvement of Base-Isolated Structures Using a Semi-Active Tuned Mass Damper.” Engineering Structures 271:114963. https://doi.org/10.1016/j.engstruct.2022.114963.

- Wang, L., S. Nagarajaiah, Y. Zhou, and W. Shi. 2023b. “Experimental Study on Adaptive-Passive Tuned Mass Damper with Variable Stiffness for Vertical Human-Induced Vibration Control.” Engineering Structures 280:115714. https://doi.org/10.1016/j.engstruct.2023.115714.

- Wang, L., W. Shi, X. Li, Q. Zhang, and Y. Zhou. 2019a. “An Adaptive‐Passive Retuning Device for a Pendulum Tuned Mass Damper Considering Mass Uncertainty and Optimum Frequency.” Structural Control and Health Monitoring 26 (7): e2377. https://doi.org/10.1002/stc.2377.

- Wang, L., W. Shi, Q. Zhang, and Y. Zhou. 2020a. “Study on Adaptive-Passive Multiple Tuned Mass Damper with Variable Mass for a Large-Span Floor Structure.” Engineering Structures 209:110010. https://doi.org/10.1016/j.engstruct.2019.110010.

- Wang, L., W. Shi, and Y. Zhou. 2019b. “Study on Self‐Adjustable Variable Pendulum Tuned Mass Damper.” The Structural Design of Tall & Special Buildings 28 (1): e1561. https://doi.org/10.1002/tal.1561.

- Wang, L., W. Shi, and Y. Zhou. 2022a. “Adaptive-Passive Tuned Mass Damper for Structural Aseismic Protection Including Soil–Structure Interaction.” Soil Dynamics and Earthquake Engineering 158:107298. https://doi.org/10.1016/j.soildyn.2022.107298.

- Wang, L., W. Shi, Y. Zhou, and Q. Zhang. 2020b. “Semi-Active Eddy Current Pendulum Tuned Mass Damper with Variable Frequency and Damping.” Smart Structures and Systems 25 (1): 65–80. https://doi.org/10.12989/sss.2020.25.1.065.

- Wang, L., Y. Zhou, S. Nagarajaiah, and W. Shi. 2023a. “Bi-Directional Semi-Active Tuned Mass Damper for Torsional Asymmetric Structural Seismic Response Control.” Engineering Structures 293:116744. https://doi.org/10.1016/j.engstruct.2023.116744.

- Wang, L., Y. Zhou, and W. Shi. 2023a. “Seismic Control of a Smart Structure with Semiactive Tuned Mass Damper and Adaptive Stiffness Property.” Earthquake Engineering and Resilience 2 (1): 74–93. https://doi.org/10.1002/eer2.38.

- Wang, L., Y. Zhou, and W. Shi. 2023b. “Seismic Response Control of a Nonlinear Tall Building Under Mainshock-Aftershock Sequences Using Semi-Active Tuned Mass Damper.” International Journal of Structural Stability and Dynamics. https://doi.org/10.1142/S0219455423400278.