ABSTRACT

Scholarship on evidence-based policy, a subset of the policy analysis literature, largely assumes information is produced and consumed by humans. However, due to the expansion of artificial intelligence in the public sector, debates no longer capture the full range of concerns. Here, we derive a typology of arguments on evidence-based policy that performs two functions: taken separately, the categories serve as directions in which debates may proceed, in light of advances in technology; taken together, the categories act as a set of frames through which the use of evidence in policy making might be understood. Using a case of welfare fraud detection in the Netherlands, we show how the acknowledgement of divergent frames can enable a holistic analysis of evidence use in policy making that considers the ethical issues inherent in automated data processing. We argue that such an analysis will enhance the real-world relevance of the evidence-based policy paradigm.

Introduction

Policy analysis has been defined as ‘formal and informal professional practices that organisations and actors entertain to define a problem marked for government action, as well as to prescribe the measures to solve that problem by policy action or change’ (Brans et al., Citation2017, p. 2). In simpler terms, policy analysis is what professional public servants do when they use information to create advice for political decision makers (Wilson, Citation2006).

The meaning of the term ‘information’ is contested, as are understandings of how it is used. Debates in this area often centre around the extent to which policy analysis can be rational, logical, linear, or instrumental, with some authors taking the perspective that policy analysis is a fundamental component of rational efforts at policy ‘design’ (Howlett et al., Citation2015), while others argue that policy analysis is more about setting the agenda, imagining and defining problems that might command attention, and generally pursuing ‘the construction of meaning’ in the activities of public authorities (Colebatch, Citation2006, p. 9). Since the late 1990s, a discourse has developed around a subset of policy analysis called evidence-based policy making, examining its function, merits, and existence. In this successor discourse to debates about policy analysis (Howlett, Citation2009), arguments are, again, mostly divided into positivist (also known as ‘objectivist’) and constructivist (also known as ‘subjectivist’) perspectives (Capano & Malandrino, Citation2022; Newman, Citation2017). These debates occupy the bulk of the scholarship in this area.

In a separate literature, scholars have conceptualised ethical practice in professional policy analysis, often focusing on basic principles such as integrity, competence, responsibility, respect, and concern, which together are intended to ‘promote outcomes that are good for society’ (Mintrom, Citation2010, p. 39). The literature on ethical policy analysis dovetails with scholarship on evidence-based policy, because ethical principles such as integrity and competence relate to the practical use of information in the development of public policy (Weimer & Vining, Citation2017, pp. 42–55).

While these literatures have overlapped in the past (see for example, Sanderson, Citation2003), they are now set on a virtual collision course. The advent of advanced computing technologies, captured under the umbrella term ‘artificial intelligence’, opens a vast new area of inquiry into the use of information in public policy making, and much of it is related to ethics. Automated decision making in the public sector creates a number of ethical dilemmas relating to the collection, storage, and application of information, and the concerns that are incited by these technologies will upend the previous discourse on policy analysis and evidence-based policy.

Here, we explain the potential for artificial intelligence to disrupt understandings of evidence-based policy. We then outline an approach by which scholarly arguments could productively progress knowledge in this area. The approach builds on ‘frame reflection’, a means of exploring policy controversies advanced by Donald Schön and Martin Rein (Citation1994). To demonstrate how this ‘frame reflection’ approach might be used to understand a real-world policy issue, we apply it to the case of automated welfare fraud detection in the Netherlands. That high-profile application of artificial intelligence in pursuit of greater efficiency in public service delivery had catastrophic implications for thousands of families receiving child benefits. The ensuing controversy saw the whole cabinet of the Dutch government resign in January 2021. We analyse this case with the purpose of showing how explicit use and comparison of different perspectives on artificial intelligence and evidence-based policy can improve our understanding of the issues at stake when different forms of evidence are used to guide public sector decision making. In the end, while some arguments concerning evidence-based policy making may seem to contradict each other, they are all valid – but different – frames that can be used productively together. However, we suggest that these arguments will need to be updated to incorporate the changes to information gathering and processing derived from advances in computing that will inevitably be adopted by professional policy analysts in many jurisdictions.

Policy analysis and evidence-based policy

Policy analysis is often discussed in rational-logical terms. Weimer and Vining (Citation2017, p. 31), for instance, write that the objective of policy analysis is to determine options for public sector decision makers using the formula ‘if the government does X, then Y will result’. To what extent this actually occurs in practice has been questioned (Colebatch, Citation2005), and less positivist alternative approaches have been proposed (e.g., Bacchi & Goodwin, Citation2016), but for many observers – whether supportive or critical – the term ‘policy analysis’ connotes a rational, logical approach to addressing problems relevant to public policy, and the language used to discuss policy analysis reflects this approach.

Accordingly, there is a direct link between discussions of policy analysis and the discourse on evidence-based policy, in which scholars debate whether or not public policy decision making can be, or should be, better informed by scientific research evidence. According to Head (Citation2010), the evidence-based policy paradigm has been interpreted by many – especially practitioners working in government – as a promise of better analytical techniques leading to improved societal outcomes. ‘Evidence-based policy’ might therefore be understood as a subset of the greater policy analysis discourse: it is the latest trend in debates around how to improve or reform policy analysis (Howlett, Citation2009, p. 154).

Many aspects of evidence-based policy are hotly debated, and numerous arguments have been made in support and in critique. The most common arguments align with two distinct debate streams (see Newman, Citation2022 for a broader discussion). First, there is a debate sited at the generation point of evidence, or what Capano and Malandrino (Citation2022) refer to as ‘knowledge for policymaking’. Here, supporters of evidence-based policy see the research-to-policy pathway as a pipeline, with improved policy decisions resulting from more and better evidence flowing more effectively up the pipeline from researchers to policy makers (e.g., Andrews, Citation2017; Just & Byrne, Citation2020). Critics argue that evidence is subjective, often cherry-picked, and more useful for deliberate manipulation of the policy agenda than for illumination of policy options (Packwood, Citation2002, p. 267). Arguments at this stage focus on how evidence is created, the identities and agency of actors engaged in its creation, and the communication of evidence to policy decision makers. Challenges (creating fair and meaningful knowledge) and responsibilities (communicating knowledge effectively and impartially to decision makers) are often assigned to researchers (e.g., Mead, Citation2015).

Secondly, there is a debate stream located at the usage end of the policy process, or ‘knowledge in policymaking’ (Capano & Malandrino, Citation2022). Here, more optimistic arguments assert that the problem is not with the quality or quantity of evidence available, but rather that governments lack the capacity required to process this information effectively and convert it into advice that is useful for senior decision makers (Hahn, Citation2019). Critics argue that policy decision making is inherently political and therefore evidence-based policy in any rational-logical sense is simply impossible and, in a democracy, undesirable (Cairney, Citation2016). Arguments at the usage stage treat policy analysts and decision makers as the main set of actors, and largely set aside the agency of researchers in influencing public policy (e.g., Newman & Head, Citation2015).

All of these arguments rely on a traditional understanding of information gathering and processing that centres on human abilities. In this traditional understanding, human researchers create or improve knowledge, human public servants acquire (or fail to acquire) that knowledge, and human decision makers choose what actions public authorities should or should not take. The debates mainly involve the contestation of values, such as how some information and information sources might take precedence over other information and information sources (e.g., Deaton & Cartwright, Citation2018; Doleac, Citation2019), which groups should be allowed to benefit from public interventions and which groups might be knowingly put at a disadvantage (Saltelli & Giampietro, Citation2017), or whether the policy making system can even allow for instrumental decision making in the first place (Cairney, Citation2022). All of these arguments imagine a system in which humans produce, process, and communicate knowledge, and in which humans make decisions based on this human-generated knowledge (or not based on it, as the case may be). The values that guide decision making in this context are also human values.

However, we are approaching a period of rapid change in which technology will facilitate and perhaps automate many aspects of information gathering, processing, and usage. Digitisation has already dramatically altered the way we access, store, and communicate data, but artificially intelligent systems that select and present information autonomously, or even automate decision making for service delivery, promise to transform the way that policy analysis and policy decision making are conducted (Makridakis, Citation2017). While numerous authors have noted that there is no specific definition of ‘artificial intelligence’, the term is commonly used to refer to advanced pattern recognition, analysis of extremely large datasets (often referred to as ‘big data’), and machine learning algorithms that use data inputs to refine their own programming (Calo, Citation2017, p. 405). These technologies are already in use in many jurisdictions in areas such as tax collection, criminal justice, and public health (Newman et al., Citation2022).

There are many possible applications of artificial intelligence in public service delivery, covering perhaps all areas of public policy, and the precise outcomes of each application will no doubt be unique. However, as Bullock (Citation2019) argues, artificial intelligence has the potential to fundamentally alter the way human decision-making features in public administration, and therefore there are some general principles that will apply across issue areas. First, street-level bureaucracy, as described by Lipsky (Citation1980), will likely be significantly diminished in size and authority, as frontline jobs in customer service, call centres, emergency services, public safety, and a host of other areas are replaced by technological advances like chatbots and smart cameras. Some of these systems are already in place, such as traffic cameras that automatically send out fines to motorists driving over the speed limit. This contraction of the street-level administrative workforce will, for better or for worse, significantly reduce the level of human discretion in administrative decision making experienced by citizen end-users (Bullock, Citation2019). Secondly, the limits of human cognition that Herbert Simon (Citation1947) wrote about in relation to administrative decision making, including bounded rationality and satisficing, may be surmounted by machines that have higher computational limits with respect to the quantum of information they can process at any given time. Simon himself noted this possibility – although he described the phenomenon more in terms of expanded rationality boundaries and greater thresholds for satisficing, rather than fundamentally different decision-making processes (Simon, Citation1996).

Taken together, a reduced level of human administrative discretion and a significantly expanded capacity for computational analysis will require a shift in the assumptions that underpin notions of evidence-based policy – as well as in the critiques that are directed at those assumptions. Does information collected by artificial intelligence constitute ‘evidence’ in the same way as information collected by human researchers? Or is it in some ways superior or inferior? Can algorithmic decision making, based on automated data collection and analysis of big data, be described as evidence-based policy? Does automation of aspects of the policy analysis process eliminate human bias in analysis and decision making, or conversely does it introduce new biases or entrench existing ones? More generally, how do ethical concerns related to artificial intelligence translate to improved or weakened use of information in public policy making? Current debates on evidence-based policy are not yet equipped to deal with these questions, because they mainly assume that humans are the primary agents of information generation and usage.

Since 2016, a scholarly literature has developed around concerns about the ethical use of artificial intelligence in the public sector. As in debates on evidence-based policy, the discourse on the ethics of artificial intelligence in the public sector can also be divided according to the site of application, that is, in the generation of information or in its use. Likewise, as Newman (Citation2022) has noted about the literature on evidence-based policy, much of the scholarly writing on the ethics of using artificial intelligence in the public sector is either notably supportive or overtly critical of this expanded use of technology.

At the generation end, supporters see artificial intelligence as capable of analysing big datasets dramatically faster than any human ever could, reaching conclusions quickly and with little or no error. For policy analysts, this means more rapid advice that considers bigger populations and more complex problems (Mehr, Citation2017). Critics point out that algorithms are rarely available to (or even properly understood by) the people who use them, and that the software is usually owned by third-party private developers and is therefore protected by commercial intellectual property laws (Harrison & Luna-Reyes, Citation2020). As a result, the advice provided by artificial intelligence systems is created in a kind of black box that does not allow for validation, audit, or appeal. This can be a serious shortcoming in, for example, areas of criminal justice, where decisions on remand and sentencing may be informed by algorithms but where policy analysts creating guidelines and judges using them have little or no understanding of the calculations that decisions are based on (Starr, Citation2014). Again, arguments concerning the generation of information place the responsibility for maintaining ethical standards (and correcting ethical wrongs) in the hands of those who create the knowledge, namely computer programmers and the algorithms themselves.

At the usage end, supporters of the use of artificial intelligence in the public sector argue that algorithms have the potential to boost equity and fairness and eliminate bias. Machines apply rules without discrimination, so the outputs of computerised decision-making ought to be fairer than the outputs of human decision making (Gaon & Stedman, Citation2018, p. 1146). When biases are discovered, they can be fixed easily, quickly, and comprehensively, simply by adjusting the programming. Critics argue that computer programs are vulnerable to bias because they are programmed by humans. For machine learning algorithms, for instance, in which computers are trained to recognise patterns and then make decisions or create advice based on those patterns, the data used to train the algorithm often contains inherent biases that can end up disadvantaging particular societal groups (Gulson & Webb, Citation2017, p. 21). Due to the black box nature of the algorithm, these biases would not be detected or corrected until after services have been rolled out to the public. Critics have also argued that rather than improving innovation in public service delivery, artificial intelligence will be used for racial profiling, targeted discrimination, and generally coercive activities such as increased surveillance and invasion of privacy (Desouza et al., Citation2020). Arguments at the usage stage, therefore, place responsibility for ethical operation in the hands of policy analysts and decision makers.

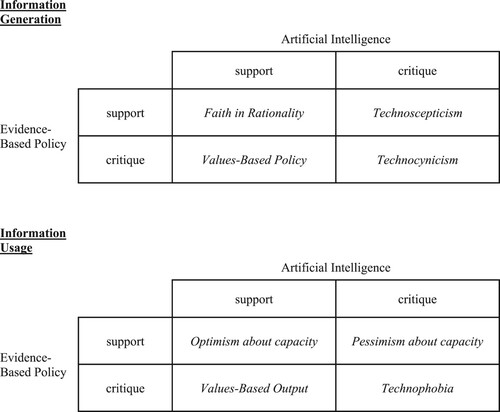

Thus, the advent of artificial intelligence, and especially the ethical considerations involved in using the technology in the public sector, has significant implications for the debates around evidence-based policy and policy analysis more generally. By treating discussions of information generation and information usage separately, it is possible to expose a variety of unique arguments that act essentially as cardinal directions of debate. In what follows, we combine the generation/usage distinction described above with support for, or critique of, both evidence-based policy and artificial intelligence to characterise eight distinct directions in which a new discourse on evidence-based policy – one that takes the ethics of artificial intelligence into consideration – can progress. These directions of debate can be productively treated as frames through which evidence-based policy can be viewed.

Framing and frame reflection

Within the social sciences, a small but important tradition of scholarship has evolved over recent decades that suggests all of us use frames – usually unconsciously – to understand and interpret the world around us. While not the first to propose the idea of frames (see for example, Goffman, Citation1974), and not significantly different in principle to other methods that use multi-dimensional analysis, such as Edward de Bono’s (Citation1985) ‘Six Thinking Hats’ approach, Donald Schön and Martin Rein's (Citation1994) frame analysis aimed specifically to help break through controversies in public policy. Schön and Rein construed frames as generic narratives that can be treated as approximations to the problem-setting stories that inevitably accompany any particular policy controversy. In arguing for the importance of ‘frame reflection’ in policymaking, Schön and Rein emphasised the capability of individuals and institutions to work out agreements on policy decisions and actions, in the face of their different and often contending interests and their divergent views of the world that underlie those interests.

The frames we introduce here are intended to demonstrate how the same issue (the effect of artificial intelligence on the role of information in the policy making process) can be understood differently, and how various arguments in this area might be conceptualised. The frames are meant to be regarded as equally valid, with no implied hierarchy of merit. Rather, the purpose of casting these divergent views as comparable frames is to unite the discourse: by considering various logical directions of inquiry, it may be possible to develop an appreciation and an understanding of alternative viewpoints. For simplicity, we develop the frames using binary categories of generation/usage and support/critique, as derived in the discussion above. However, it should be noted that these distinctions operate more as a two-dimensional spectrum. For example, arguments could be more supportive or less supportive, more towards the generation end or more towards the usage end. Below, we discuss each frame in general terms, as a prelude to applying the frames to the analysis of our case study.

Frame 1. Faith in Rationality (information generation stage: evidence-based policy support, artificial intelligence support)

Under this frame, artificial intelligence is understood to be able to create new knowledge and evidence, far beyond the abilities of human analysts. The sheer volume of information and extreme complexity of analysis that machines are capable of handling will enable a level of evidence-based policy that up until now could only be imagined, resulting in dramatically improved outcomes for society and the wellbeing of citizens.

In short: artificial intelligence represents a technological advance in evidence-based policy making. These technologies can provide greater quantities of policy-relevant information than human policy analysts could, and much more quickly.

Frame 2. Technoscepticism (information generation stage: evidence-based policy support, artificial intelligence critique)

Arguments advanced from this perspective see evidence-based policy as beneficial, but artificial intelligence as potentially eroding those benefits rather than enhancing them. While algorithms may be able to execute large and complex calculations, they are not self-sufficient and can only do what humans program them to do. However, the black box problem, in which policy analysts do not understand how a computer may have arrived at particular conclusions or outputs, coupled with a common (and self-reinforcing) perception that machines are always right and do not need to be questioned (Parikh et al., Citation2019), leads to a general reduction in human intervention in the policy analysis process and a degradation in the quality of information available for advising policy decision making. From this point of view, as policy analysis activities become more and more automated, a lack of adequate human oversight and participation in the process will ultimately undermine the evidence base in many policy areas, rather than expand it.

In short: artificial intelligence technologies undermine the quality of knowledge useful to making policy decisions, because the information cannot be independently verified.

Frame 3. Values-Based Policy (information generation stage: evidence-based policy critique, artificial intelligence support)

In this frame, policy decisions are based on the contestation of values, rather than a neutral appraisal of facts. Artificial intelligence, particularly through media and social media, will be extremely useful for sorting societal values and communicating preferences to decision makers. Policy decision making can then be enhanced by producing outcomes that better represent what citizens want.

In short: artificial intelligence is beneficial for evidence-based policy making not because it creates information on what is, but rather because it can better assess what values are prominent in society and enable the communication of values to decision makers.

Frame 4. Technocynicism (information generation stage: evidence-based policy critique, artificial intelligence critique)

Arguments in this frame are highly critical of both evidence-based policy and artificial intelligence. In this frame, artificial intelligence is just one more method for manipulating the agenda, to proclaim that a preferred policy option or ideological point of view is ‘correct’ or unimpeachable, and to suppress minority voices.

In short: artificial intelligence may or may not produce useful information, but in either case it will be used by elites to justify their ideological positions and preserve their privileged status in society.

Frame 5. Optimism about Capacity (information usage stage: evidence-based policy support, artificial intelligence support)

Here, artificial intelligence is believed to be able to resolve the policy capacity problem in the public sector. Rather than investing in human resources, it will be vastly more economical to invest in computer systems, which will boost the analytical capacity of public sector organisations and allow for a significantly improved use of evidence in public policy decision making. Human policy analysts won't be ‘replaced’, but their work will be augmented by the extensive use of computer analysis.

In short: artificial intelligence enables evidence-based policy making by amplifying the capacity of public sector policy analysts to process information and create policy advice.

Frame 6. Pessimism about Capacity (information usage stage: evidence-based policy support, artificial intelligence critique)

In this frame, the reliance on technology, especially on third-party private computer systems that are not transparent to, or well understood by, government employees, will contribute to what Rhodes (Citation1994) referred to as the ‘hollowing out’ of the public sector and will undermine policy analytical capacity. Much has already been written about the loss of analytical capacity resulting from waves of outsourcing and the hiring of consultants in many countries (e.g., Aucoin & Bakvis, Citation2005; Head, Citation2015). However, artificial intelligence arguably poses a greater threat to analytical capacity than traditional public administrative reform. For one thing, the massive computational capabilities of artificial intelligence suggest that the scale of erosion of institutional memory and expertise is vastly greater than what might be lost to human consultants. Further, human consultants can, albeit with difficulty, be supervised during their contracted work and questioned about outcomes once the work is done, but the black box nature of artificial intelligence systems and the lack of requisite expertise among public servants who will work with these systems make the same kind of oversight impossible.

In short: artificial intelligence accelerates the undermining of policy capacity by reducing analytical expertise and institutional memory among human policy analysts in a way that far exceeds the reduction in capacity due to human resource changes.

Frame 7. Values-Based Output (information usage stage: evidence-based policy critique, artificial intelligence support)

Here, again, values are understood to be more important than facts, which are never really intersubjectively agreed upon anyway. In this frame, artificial intelligence is seen as enabling better communication of ideology from policy decision makers out to citizen end-users. Policies and services can be recommended to citizens ‘based on your likes’.

In short: artificial intelligence may not support evidence-based policy but it can easily support policy-based evidence, by enabling agenda-setting and top-down communication of values and ideologies.

Frame 8. Technophobia (information usage stage: evidence-based policy critique, artificial intelligence critique)

Under this frame, artificial intelligence in public administration is believed to manipulate information, rather than supporting a fair and equitable use of information. This manipulation of information will enable an abundance of coercive practices by the government, such as ubiquitous surveillance, harassment of societal groups, unfair profiling and targeted police interventions, and invasions of privacy. These practices will generally be aimed at minorities and poor people and will increase inequality and inequity, ultimately delivering harsher outcomes for many people all while enriching and advancing wealthy elites.

In short: artificial intelligence will be further used by elites to enforce their ideologies and to deliver coercive policies that benefit themselves and harm others, especially the vulnerable and marginalised.

Figure 1 provides an illustration of this typology of frames. Again, these categories represent extreme points, and are designed to illustrate the typology more clearly. In reality, these points of view reside along a spectrum.

Applying frame reflection to the case of automated welfare fraud detection in The Netherlands

We have presented a series of eight frames on evidence-based policy and artificial intelligence that represent distinctly different ways of interpreting how advances in technology might affect the use of information in policy making. Our intention is that these contrasting frames offer starting points for discussing when and how artificial intelligence might contribute productively (and ethically) to improving public service delivery.

In what follows, we apply our frames to the case of automated welfare fraud detection in the Netherlands. The case concerns a major policy controversy of the type that Schön and Rein (Citation1994) saw as requiring frame reflection if a productive resolution were to be attained. Significantly for our purposes, this Netherlands case has parallels with recent instances in other jurisdictions where applications of artificial intelligence to support governmental processes have produced serious unintended negative effects, such as the ‘Robodebt’ scandal in Australia (Braithwaite, Citation2020) or the exam grading fiasco in the United Kingdom (Kippin & Cairney, Citation2022).

Our case concerns the Netherlands government's System Risk Indication (SyRI) program, an artificial intelligence-based system that used a self-learning algorithm to detect suspected fraudulent behaviour. The Dutch childcare benefits scheme was introduced in 2005 (Amnesty International, Citation2021). Subsequently, in the early 2010s, the Dutch Tax and Customs Administration implemented this digital welfare fraud detection system. Preliminary versions had already been in use for several years, but the final SyRI system used data from various government and public agencies to conduct risk analyses that supposedly indicated whether a person or household was likely to engage in welfare fraud (Giest & Klievink, Citation2022, p. 10). Personal details of Dutch citizens were collected without their knowledge. It was subsequently revealed in news outlets that the government did not have the proper legal foundations to do so (Associated Press, Citation2022).

The SyRI program used a risk classification model to automate fraud detection. Risk profiles of childcare benefit applicants were created using the system's self-learning components, selecting people who were deemed more likely to engage in fraudulent activities. The risk classification model used a black box system, which means that only the inputs and outputs are visible, not the internal workings of the system. This approach significantly limited the transparency and accountability of tax authorities (Amnesty International, Citation2021). As a result of this approach, around 270,000 people were falsely accused of fraud and demands were made that they return their childcare benefits to tax authorities (Boztas, Citation2022). Sometimes, the amounts involved ran to tens of thousands of euros for individual households. Those impacted were denied childcare benefits along with other benefits. Very often, those who received the repayment demands were from immigrant backgrounds, with up to 11,000 people presumably targeted simply for possessing dual nationality (BBC, Citation2021). As a result, ethnic minorities and vulnerable communities were subject to increased scrutiny from the government. Hence, despite efforts to implement more effective and efficient administrative processes through artificial intelligence systems, the resulting situation left many people in marginalised communities inadvertently facing dire consequences.

In 2018, a coalition consisting of several civil rights NGOs (including the Netherlands Committee of Jurists for Human Rights) and two famous Dutch authors was formed to sue the government for its use of the SyRI system, and an amicus brief was also submitted to the Dutch court against the use of the SyRI system from an official in the United Nations (van Bekkum & Borgesius, 2021). In 2020, the legislation that authorised the use of SyRI was finally ruled unlawful by the Dutch court, for violating privacy rights according to the European Convention on Human Rights. The court outlined that the system was ‘too opaque’ as too much data was being collected with little transparency regarding the purpose of the data collected (van Bekkum & Borgesius, 2021, p. 332). The legislation failed to inform people that their data was being processed, in addition to not making the risk model and its indicators public. Hence, the Dutch court ultimately concluded that the SyRI legislation could not justifiably isolate which demographics displayed increased risk, particularly given the fact that the system was biased against lower income and marginalised communities.

The Dutch government commissioned accountancy firm PwC to conduct an audit of the SyRI system. The 2022 report from PwC revealed that people were listed as suspected tax fraudsters on the basis of appearance and nationality (Boztas, 2022). The tax office has also been found to collect personal information on religion and beliefs (Boztas, 2022). However, the exact workings of the risk model, its relevant risk indicators and associated data have never been made public or provided to the court (van Veen, 2020).

The Dutch tax authorities stopped using SyRI and the secret blacklist in 2020 and were issued a €2.75 million fine by the national privacy watchdog, whereas the finance ministry was fined €3.7 million (Boztas, 2022). Victims of this scandal were offered a fixed amount of compensation from the Dutch government in 2021. At that time, the Cabinet formally acknowledged the government's wrongdoings and collectively resigned. A new Dutch government, elected into office in 2021, subsequently pledged to implement a new algorithm that abides by the national data protection laws (Heikkilä, 2022).

Frame reflection provides a means of analysing this case study through multiple perspectives simultaneously. The eight frames introduced above allow for consideration of how the use of artificial intelligence in this case intersected with the generation and usage of information for supporting policy analysis.

Applying Frame 1. Faith in Rationality. Artificial intelligence has tremendous potential to identify tax and benefits fraud. By scanning huge quantities of records, algorithms can detect numbers of anomalous claims in a single pass that human claims adjusters would never be able to reach in a whole career. By any standard of judgement, an automated system that (accurately) identifies fraud and initiates proceedings against those suspected of committing it is orders of magnitude more effective and efficient than a human-based system that, for instance, relies on randomly-assigned review and audit as a universal deterrent.

Under this frame, the SyRI scandal was not a problem of technology, which had the potential to collect and process information at a scale that would have radically improved fraud detection and prosecution. Rather, the scandal is understood to have resulted from the failure of human programmers and administrators who did not implement the kind of backstops, fail-safes, and appeals processes that are standard in any public program.

Applying Frame 2. Technoscepticism. Viewed through the technosceptic lens, one outcome of using SyRI to adjudicate cases of suspected benefits fraud is that, rather than being enhanced, the actual evidence base was demonstrably degraded. Thousands of legitimate beneficiaries of childcare subsidies were unfairly and unlawfully targeted, while the true number of fraudulent claims was completely obscured by the program – resulting in individuals who genuinely committed fraud escaping without punishment. Rather than automating and improving the process of identifying fraud, artificial intelligence in this case made it more difficult to identify it at all.

Applying Frame 3. Values-Based Policy. The use of SyRI exposes a level of values-based policy that is not normally so apparent. Policy designers were intent on uncovering fraud because of an existing ideological fixation by the government on the exploitation of the Dutch welfare system by ‘fraudsters’ (Giest & Klievink, 2022, p. 12), especially non-citizens migrating from other parts of Europe (Amnesty International, 2021, p. 22). In other words, the values attached to catching and punishing welfare cheats were more important in this case than evidence about the true extent of the problem or about how to effectively cut down on fraudulent claims. Likewise, the bias introduced into (and subsequently not enthusiastically controlled within) SyRI algorithms likely says more about the entrenched biases within the Dutch public sector or its tax agencies than it does about faulty or incomplete computer programming.

Applying Frame 4. Technocynicism. Under a technocynical interpretation of the SyRI case, the abuses perpetrated in the name of fraud prevention went on much longer than they needed to, because of the nature of technology-based manipulation of information. Dutch authorities had been using artificial intelligence to identify potential cases of benefits fraud for over ten years before the resignation of the Rutte government in 2021 (BBC, 2021). A general societal worship of computers, and the perception that the information they produce is infallible, would have made it difficult to interfere with the system, correct its flaws, or bring justice to the people it harmed.

Applying Frame 5. Optimism about Capacity. Automated systems reduce pressure on human resources by giving human workers expanded tools to do their jobs. Seen through this frame, the SyRI system was a tool that tax authorities had at their disposal to enhance their work. The scandal revealed that the tool was badly designed and improperly implemented, but, as in Frame 1, that is not the fault of the technology but of the people who commissioned and designed it. A more transparent algorithm, and more complete training for staff, would have allowed tax auditors to do their job more effectively, quicker, and with fewer people – freeing up human resource capital for other tasks.

Applying Frame 6. Pessimism about Capacity. Under this perspective, the SyRI system in effect reduced the ability of the Dutch tax authorities to do their job. The information produced by the system was faulty, and the reliance on computerised fraud detection for so many years impeded the ability of the authorities to correct the problem and to identify and prosecute fraud accurately and fairly.

Applying Frame 7. Values-Based Output. One interpretation generated in this frame is that the SyRI scandal was enabled by societal attitudes. With xenophobia, concerns about protection of the ‘Dutch identity’, and support for populist far right parties all on the rise (Missier, 2022), public messaging that systems could be put in place to prevent ‘foreigners’ from exploiting Dutch welfare benefits would have been politically expedient.

Applying Frame 8. Technophobia. Seen through this frame, SyRI enabled the government to persecute a sizeable cohort of residents in a highly coercive and irreparable manner. Artificial intelligence – perhaps unintentionally, but nonetheless in actual effect – directly led to the unfair and unjustified targeting and punishment of many law-abiding people.

Discussion: using frames to advance debate

The use of artificial intelligence in the public sector is disrupting the discourse around evidence-based policy. Because of the responsibility of public sector policy analysts to act ethically (Mintrom, 2010), the ethical concerns related to artificially intelligent public systems offer new challenges to the way that information is traditionally used to support policy decisions. Debates which, up to now, have centred mainly around positivist and constructivist conceptions of the intersubjectivity of evidence need to be updated to take into consideration the ethics of algorithmic data analysis and automated decision making.

Above, we elaborated eight directions in which we see an updated discourse on evidence-based policy in the time of artificial intelligence proceeding. It is tempting to interpret these perspectives as competing arguments that need to be either endorsed or debunked. However, such an interpretation would lead to an intellectual impasse. More productively, the typology presented above can be interpreted as a set of equally valid frames which, taken together, enable a more complete understanding of the benefits, drawbacks, and other issues related to the computerisation of policy analysis.

This perspective is illustrated by our case study. All eight frames are apparent here, and they are all valid – but incomplete – ways to understand the events related to the case. For example, it is true that computer programs can scan thousands or even millions of client files and extract anomalous entries for closer examination, generating information that human auditors could never produce. Used appropriately, this technology would represent a significant advance in the ability of policy analysts to inform policy decisions. However, it is also possible that, in this case especially, computer programs were not used appropriately, and that the ‘evidence’ that was generated by the system was flawed, leading to erroneous allegations of fraud and ultimately enabling coercive policies that disproportionately affected vulnerable citizens.

Therefore, by taking multiple frames into consideration, a more holistic interpretation of the case study might be possible: Advanced computing technologies, especially pattern recognition, machine learning, and the analysis of big data, offered the Dutch tax authority a way to expand fraud detection and enforcement (frame 1). However, the system was opaque to its operators, and the data it generated was of poor quality because it could not be verified (frame 2). This problem was exacerbated by the fact that the system's designers were, for whatever reason, capitalising on latent anti-immigrant rhetoric, and the software was initiated with an emphasis on files indicating foreign or dual citizenship, which may have been further reinforced by machine learning algorithms (frame 3). The outputs of the system created a feedback loop in which the identification (and subsequent punishment) of ‘fraudsters’ reinforced the belief in an underclass of recent arrivals and dual nationals abusing the welfare system, which enabled the program to continue for a decade before serious action was taken to fix it (frame 4). If it had been designed and implemented appropriately, the SyRI program could have represented a significant boost in the capacity of the tax authority to do its job, including the possibility of redistributing resources to other areas (frame 5), but the flaws in the system and its persistence for so many years likely resulted in a decrease in policy capacity in the form of lost in-house expertise and forgotten institutional memory (frame 6). In the end, the legacy of SyRI is a damaging perpetuation of xenophobic stereotypes and countless stories of vulnerable families unfairly hurt by harsh civil penalties (frames 7 and 8).

From an academic perspective, then, the advent of artificial intelligence in the public sector demands an update in the discourse on evidence-based policy. Debates about what constitutes evidence and whether or not policy can or should be based on it need to be upgraded to include consideration of the impact of automation on the ethical practice of policy analysis. Academic questions for future exploration will need to consider more precisely, for example, what kind of information is generated by artificial intelligence, and how systems might be backstopped to allow for greater oversight, transparency, and verification of data; the importance of training for public servants working with artificial intelligence to prevent over-reliance on technology and to preserve in-house expertise and policy analytical capacity; and how protections might be extended to citizens through stronger legislation, more effective complaints mechanisms, call centre support, and ombuds services.

From a practitioner perspective, frame reflection offers a more inclusive approach to the ethical practice of policy analysis. Complete faith in the instrumental use of technology can have serious downsides, such as insufficient consideration of the harms associated with inaccuracies and biases in computer programming. A frame reflection approach would enable practitioners to think beyond the immediate promises of efficiency in the workplace, to ask questions about how new technologies might strengthen the coercive powers of government, and encourage unfair profiling, targeted interventions, invasions of privacy, and covert policy instruments designed for manipulation and compliance (Hausman & Welch, 2010; Mols et al., 2015). In short, through frame reflection, policy analysts can improve their engagement with the principles of ethical practice (integrity, competence, responsibility, respect, and concern): by considering their policy through alternative lenses, they might be able to anticipate some of the possible negative outcomes of a program and better appreciate the risks, and a more ethical application of the technology could be pursued.

Conclusion

Governments are increasingly using artificial intelligence to guide decision-making around public service delivery. This appears consistent with the evidence-based policy paradigm that envisions policy decisions informed by the latest, most rigorous, and most reliable evidence on policy choices and their expected effects. In theory, artificial intelligence should be embraced by proponents of evidence-based policy. In practice, the potential is real for deployment of artificial intelligence to entrench biases, increase inequality, and impede democracy. Given this, ethical conundrums raised by artificial intelligence have the potential to significantly undermine the evidence-based policy paradigm.

In this article, we have explored the connection between ethical concerns about using artificial intelligence in public service delivery and debate on the use of evidence for purposes of policy analysis. We suggest that the increasing use of artificial intelligence has the potential to fundamentally alter the way human decision-making features in public administration. It will require a shift in the assumptions that underpin notions of evidence-based policy. Going forward, scholars will need to revisit questions of what constitutes evidence, how we address potential bias in systems, and how we confront ethical concerns. Using dimensions of generation/usage and support/critique, we have proposed eight unique directions in which we expect the discourse on evidence-based policy to advance in an era of automated data collection and processing. Furthermore, we argue that in many cases of real-life policy decision making, there is value in applying a ‘frame reflection’ approach in which multiple alternative viewpoints can be appreciated and understood simultaneously in order to generate a more holistic and inclusive narrative.

Using the SyRI automated welfare fraud detection system in the Netherlands as our case study, we have illustrated how multiple frames can be brought together to produce a more complete narrative of the use of evidence in policy making in an era of artificial intelligence. Our analysis is ex post, and our purpose here is neither to replicate nor to approximate the decision-making that happened in the Netherlands. Aspects of that will never be fully known. Rather, our purpose is to illustrate, with reference to this case, how the application of frame reflection can facilitate discussions of artificial intelligence in the generation and usage of evidence for policy-making. However, we envision that a frame reflection approach might also be used by policy workers for ex ante analysis, to guide discussion, to reveal less prominent perspectives, and to help navigate controversies.

The use of artificial intelligence will require a re-imagining of what evidence means and how it is and is not used to inform policy development. One way to do that is to explicitly acknowledge and discuss the competing frames through which individuals and institutions might view specific proposals for policy change. Actions along these lines – which could include a range of stakeholder engagement techniques – will be vital if the evidence-based policy paradigm is to contribute as anticipated to helping polities address pressing real-world problems.

Acknowledgements

Many thanks to Veronica Lay for invaluable research assistance on this project.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Joshua Newman

Joshua Newman is Associate Professor of Politics and Public Policy in the School of Social Sciences, Faculty of Arts, Monash University. His research covers policy analysis, evidence-based policy, research impact, regulation and privatisation, and addressing complex policy problems.

Michael Mintrom

Michael Mintrom is Professor of Public Policy in the School of Social Sciences, Faculty of Arts, Monash University. His research covers policy analysis, policy design, and policy advocacy.

References

- Amnesty International. (2021). Xenophobic machines: Discrimination through unregulated use of algorithms in the Dutch childcare benefits scandal.

- Andrews, L. (2017). How can we demonstrate the public value of evidence-based policy making when government ministers declare that the people ‘have had enough of experts’? Palgrave Communications, 3(1), 1–9. https://doi.org/10.1057/s41599-017-0013-4

- Associated Press. (2022, April 12). Record fine for Dutch tax office over personal data list. AP News. https://apnews.com/article/business-netherlands-europe-child-welfare-3886baef976fdc9a5963cfbc40991314.

- Aucoin, P., & Bakvis, H. (2005). Public service reform and policy capacity: Recruiting and retaining the best and the brightest. In M. Painter & J. Pierre (Eds.), Challenges to state policy capacity: Global trends and comparative perspectives (pp. 185–204). Palgrave Macmillan.

- Bacchi, C., & Goodwin, S. (2016). Poststructural policy analysis: A guide to practice. Springer.

- BBC. (2021, January 15). Dutch Rutte government resigns over child welfare fraud scandal. BBC News. https://www.bbc.com/news/world-europe-55674146.

- Boztas, S. (2022, February 11). ‘Non-Western appearance’ trigger for tax office fraud listing. DutchNews. https://www.dutchnews.nl/news/2022/02/non-western-appearance-trigger-for-tax-office-fraud-listing/.

- Braithwaite, V. (2020). Beyond the bubble that is Robodebt: How governments that lose integrity threaten democracy. Australian Journal of Social Issues, 55(3), 242–259. https://doi.org/10.1002/ajs4.122

- Brans, M., Geva-May, I., & Howlett, M. (2017). Policy analysis in comparative perspective: An introduction. In M. Brans, I. Geva-May, & M. Howlett (Eds.), Routledge handbook of comparative policy analysis (pp. 1–23). Routledge.

- Bullock, J. B. (2019). Artificial intelligence, discretion, and bureaucracy. The American Review of Public Administration, 49(7), 751–761. https://doi.org/10.1177/0275074019856123

- Cairney, P. (2016). The politics of evidence-based policy making. Palgrave Macmillan.

- Cairney, P. (2022). The myth of ‘evidence-based policymaking’ in a decentred state. Public Policy and Administration, 37(1), 46–66. https://doi.org/10.1177/0952076720905016

- Calo, R. (2017). Artificial intelligence policy: A primer and roadmap. University of California, Davis Law Review, 51(2), 399.

- Capano, G., & Malandrino, A. (2022). Mapping the use of knowledge in policymaking: Barriers and facilitators from a subjectivist perspective (1990–2020). Policy Sciences, 55(3), 399–428. https://doi.org/10.1007/s11077-022-09468-0

- Colebatch, H. K. (2005). Policy analysis, policy practice and political science. Australian Journal of Public Administration, 64(3), 14–23. https://doi.org/10.1111/j.1467-8500.2005.00448.x

- Colebatch, H. K. (2006). Mapping the work of policy. In H. K. Colebatch (Ed.), Beyond the policy cycle: The policy process in Australia (pp. 1–19). Routledge.

- Deaton, A., & Cartwright, N. (2018). Understanding and misunderstanding randomized controlled trials. Social Science & Medicine, 210, 2–21. https://doi.org/10.1016/j.socscimed.2017.12.005

- de Bono, E. (1985). Six thinking hats: An essential approach to business management. Little, Brown, & Company.

- Desouza, K. C., Dawson, G. S., & Chenok, D. (2020). Designing, developing, and deploying artificial intelligence systems: Lessons from and for the public sector. Business Horizons, 63(2), 205–213. https://doi.org/10.1016/j.bushor.2019.11.004

- Doleac, J. L. (2019). “Evidence-based policy” should reflect a hierarchy of evidence. Journal of Policy Analysis and Management, 38(2), 517–519. https://doi.org/10.1002/pam.22118

- Gaon, A., & Stedman, I. (2018). A call to action: Moving forward with the governance of artificial intelligence in Canada. Alberta Law Review, 56(4), 1137–1166. https://doi.org/10.29173/alr2547

- Giest, S. N., & Klievink, B. (2022). More than a digital system: How AI is changing the role of bureaucrats in different organizational contexts. Public Management Review (in Press). https://doi.org/10.1080/14719037.2022.2095001

- Goffman, E. (1974). Frame analysis: An essay on the organization of experience. Harvard University Press.

- Gulson, K. N., & Webb, P. T. (2017). Mapping an emergent field of ‘computational education policy’: Policy rationalities, prediction and data in the age of artificial intelligence. Research in Education, 98(1), 14–26. https://doi.org/10.1177/0034523717723385

- Hahn, R. (2019). Building upon foundations for evidence-based policy. Science, 364(6440), 534–535. https://doi.org/10.1126/science.aaw9446

- Harrison, T. M., & Luna-Reyes, L. F. (2020). Cultivating trustworthy artificial intelligence in digital government. Social Science Computer Review, 40(2), 494–511. https://doi.org/10.1177/0894439320980122

- Hausman, D. M., & Welch, B. (2010). Debate: To nudge or not to nudge. Journal of Political Philosophy, 18(1), 123–136. https://doi.org/10.1111/j.1467-9760.2009.00351.x

- Head, B. W. (2010). Reconsidering evidence-based policy: Key issues and challenges. Policy and Society, 29(2), 77–94. https://doi.org/10.1016/j.polsoc.2010.03.001

- Head, B. W. (2015). Policy analysis and public sector capacity. In B. W. Head & K. Crowley (Eds.), Policy analysis in Australia (pp. 53–68). Policy Press.

- Heikkilä, M. (2022). Dutch scandal serves as a warning for Europe over risks of using algorithms. Politico. Retrieved March 29, 2022, from https://www.politico.eu/article/dutch-scandal-serves-as-a-warning-for-europe-over-risks-of-using-algorithms/.

- Howlett, M. (2009). Policy analytical capacity and evidence-based policy-making: Lessons from Canada. Canadian Public Administration, 52(2), 153–175. https://doi.org/10.1111/j.1754-7121.2009.00070_1.x

- Howlett, M., Mukherjee, I., & Woo, J. J. (2015). From tools to toolkits in policy design studies: The new design orientation towards policy formulation research. Policy & Politics, 43(2), 291–311. https://doi.org/10.1332/147084414X13992869118596

- Just, D. R., & Byrne, A. T. (2020). Evidence-based policy and food consumer behaviour: How empirical challenges shape the evidence. European Review of Agricultural Economics, 47(1), 348–370.

- Kippin, S., & Cairney, P. (2022). The COVID-19 exams fiasco across the UK: Four nations and two windows of opportunity. British Politics, 17(1), 1–23. https://doi.org/10.1057/s41293-021-00162-y

- Lipsky, M. (1980). Street-level bureaucracy: Dilemmas of the individual in public service. Russell Sage Foundation.

- Makridakis, S. (2017). The forthcoming artificial intelligence (AI) revolution: Its impact on society and firms. Futures, 90, 46–60. https://doi.org/10.1016/j.futures.2017.03.006

- Mead, L. M. (2015). Only connect: Why government often ignores research. Policy Sciences, 48(2), 257–272. https://doi.org/10.1007/s11077-015-9216-y

- Mehr, H. (2017). Artificial intelligence for citizen services and government. Ash Center for Democratic Governance and Innovation, Harvard Kennedy School.

- Mintrom, M. (2010). Doing ethical policy analysis. In J. Boston, A. Bradstock, & D. Eng (Eds.), Public policy: Why ethics matters (pp. 37–54). Australia and New Zealand School of Government (ANZSOG), ANU E-Press.

- Missier, C. A. (2022). The making of the Licitness of right-wing rhetoric: A case study of digital media in The Netherlands. Sage Open, 12(2), 1–14. https://doi.org/10.1177/21582440221099527

- Mols, F., Haslam, S. A., Jetten, J., & Steffens, N. K. (2015). Why a nudge is not enough: A social identity critique of governance by stealth. European Journal of Political Research, 54(1), 81–98. https://doi.org/10.1111/1475-6765.12073

- Newman, J. (2017). Deconstructing the debate over evidence-based policy. Critical Policy Studies, 11(2), 211–226. https://doi.org/10.1080/19460171.2016.1224724

- Newman, J. (2022). Politics, public administration, and evidence-based policy. In A. Ladner & F. Sager (Eds.), Handbook on the politics of public administration (pp. 82–92). Edward Elgar.

- Newman, J., & Head, B. (2015). Beyond the two communities: A reply to Mead’s “why government often ignores research”. Policy Sciences, 48(3), 383–393. https://doi.org/10.1007/s11077-015-9226-9

- Newman, J., Mintrom, M., & O’Neill, D. (2022). Digital technologies, artificial intelligence, and bureaucratic transformation. Futures, 136, 102886. https://doi.org/10.1016/j.futures.2021.102886

- Packwood, A. (2002). Evidence-based policy: Rhetoric and reality. Social Policy and Society, 1(3), 267–272. https://doi.org/10.1017/S1474746402003111

- Parikh, R. B., Teeple, S., & Navathe, A. S. (2019). Addressing bias in artificial intelligence in health care. JAMA, 322(24), 2377–2378. https://doi.org/10.1001/jama.2019.18058

- Rhodes, R. A. (1994). The hollowing out of the state: The changing nature of the public service in Britain. The Political Quarterly, 65(2), 138–151. https://doi.org/10.1111/j.1467-923X.1994.tb00441.x

- Saltelli, A., & Giampietro, M. (2017). What is wrong with evidence based policy, and how can it be improved? Futures, 91, 62–71. https://doi.org/10.1016/j.futures.2016.11.012

- Sanderson, I. (2003). Is it ‘what works’ that matters? Evaluation and evidence-based policy-making. Research Papers in Education, 18(4), 331–345. https://doi.org/10.1080/0267152032000176846

- Schön, D., & Rein, M. (1994). Frame reflection: Toward the resolution of intractable policy controversies. Basic Books.

- Simon, H. A. (1947). Administrative behavior: A study of decision-making processes in administrative organization. Macmillan.

- Simon, H. A. (1996). The sciences of the artificial (3rd ed.). MIT Press.

- Starr, S. B. (2014). Evidence-based sentencing and the scientific rationalization of discrimination. Stanford Law Review, 66(4), 803–872.

- van Bekkum, M., & Borgesius, F. Z. (2021). Digital welfare fraud detection and the Dutch SyRI judgment. European Journal of Social Security, 23(4), 323–340. https://doi.org/10.1177/13882627211031257

- van Veen, C. (2020). Landmark judgment from the Netherlands on digital welfare states and human rights. OpenGlobalRights. Retrieved March 19, 2020, from https://www.openglobalrights.org/landmark-judgment-from-netherlands-on-digital-welfare-states/.

- Weimer, D. L., & Vining, A. R. (2017). Policy analysis: Concepts and practice. Routledge.

- Wilson, R. (2006). Policy analysis as policy advice. In R. Goodin, M. Moran, & M. Rein (Eds.), The Oxford handbook of public policy (pp. 152–168). Oxford University Press.