Abstract

Citizen science, community science, and related participatory approaches to scientific research and monitoring are increasingly used by environmental educators and conservation practitioners to achieve environmental education (EE) goals. However, evidence of EE learning outcomes from these approaches is typically reported on a case-by-case basis, if at all. We undertook a systematic review of empirical studies in which community and citizen science (CCS) projects led to EE outcomes. We identified 100 studies that met our inclusion criteria, which combined a broad definition of CCS with a requirement for empirical research on EE outcomes. The studies involved people in a wide variety of aspects of environmental research that included, but also went beyond, data collection. Overall, we found that CCS approaches to EE resulted in positive learning outcomes for adults and youth, particularly gains in science content knowledge (56 articles), science inquiry skills (32), positive attitudes toward science and the environment (16), and self-efficacy toward science and the environment (11). We also found evidence for positive gains in environmental behavior and stewardship (29) and in community connectedness and cooperation (30). These findings highlight how CCS programs may be uniquely impactful when they involve people in planning, data analysis, and reporting aspects of environmental research and monitoring, as well as in data collection, and we offer examples and suggestions for CCS design. However, many studies relied heavily on self-reported data, so we offer suggestions for more rigorous methods and directions for future research.

Over the past several decades, interest in community and citizen science (CCS) as a strategy for environmental education (EE) has increased dramatically among scholars and practitioners alike. In the context of the socio-ecological crises facing the planet, there is a growing body of multidisciplinary research, as well as curricula, programs, and organizations, advancing participation in CCS as a valuable way to support learning in, with, and about the environment (Dillon et al. 2016; National Academies, 2018; Reid et al. 2021). Much of this research, however, remains conceptual, often citing the potential of CCS as a tool for EE (Bela et al. 2016), with only a subset examining empirical evidence of EE outcomes for participants. Given the myriad promises made for CCS, a systematic review of empirical research in this field can provide a foundation for understanding which EE outcomes actually result from CCS, and how, and can challenge the field to seek evidence more deeply and broadly for when, how, and why participation in CCS might accomplish EE goals. Similarly, systematic reviews of related fields whose outcomes align with traditional EE outcomes can help the field of EE continue to consolidate the research evidence for learning outcomes (Ardoin et al. 2018; Clark et al. 2020).

In light of this, in 2013 the North American Association for Environmental Education (NAAEE) launched an initiative called eeWorks: From Anecdotes to Evidence (https://naaee.org/programs/eeworks), inviting teams of scholars to conduct comprehensive international research reviews of the evidence for achieving EE outcomes in several priority areas, including citizen science and related participatory approaches, in order to demonstrate the impact and value of EE. The reviews took place between 2013 and 2018; for example, the review of outcomes for K-12 students ended in 2013 (Ardoin et al., 2018), the review of climate change education in 2015 (Monroe et al. 2019), and the review of early childhood EE in 2016 (Ardoin et al. 2020). In this context, we conducted a systematic review of the empirical, peer-reviewed research literature linking CCS and EE, with the goals of: (1) characterizing the studies that met the criteria for inclusion in this review; (2) identifying the extent to which EE learning outcomes have been empirically measured as resulting from CCS participation; (3) developing recommendations for the design and implementation of CCS for EE practitioners; and (4) suggesting directions for future research. In keeping with the other eeWorks reviews, this review examined research published through 2018, to establish a foundational baseline of empirical findings that the field may build on, critique, and expand as the fields of CCS and EE evolve.

Community and citizen science (CCS)

Despite having received increased attention in recent years, CCS is not a new way of doing environmental science. Members of the public have a long history of recording their observations of the natural world, generating data that have been used to advance science in ways that would otherwise be impossible (Miller-Rushing et al. 2012). Debates continue over the definitions of citizen science, community science, and public participation in scientific research (PPSR), and over how these align with associated approaches such as volunteer monitoring, community-based participatory research, and other participatory approaches to research and monitoring (Cooper et al. 2021; Eitzel et al. 2017; Haklay, 2013; Shirk et al. 2012). Because we consider scientist-led (citizen science) and community-led (community science) endeavors to be distinct but related parts of the same spectrum of participatory approaches to research and monitoring, we use the term community and citizen science (CCS) for this review. We define CCS as the range of participatory ways of doing science that involve members of the public in some or all parts of scientific research or monitoring projects whose data or results are used for monitoring, decision-making, or basic research (Ballard et al. 2017a). This includes projects that range from ‘contributory’, where public participation is limited primarily to data collection and/or analysis, to ‘co-created’, where the public plays a role in all parts of the scientific research, from defining the research questions to acting on the results (Shirk et al. 2012; Bonney et al. 2009). While CCS occurs in a range of scientific fields, from astronomy to genetics to paleontology, and increasingly in the humanities and social sciences (Tauginienė et al. 2020), we focused our review on studies of projects related to biodiversity, conservation, and the environment, including the fields of environmental health and justice.

Because learning is intimately tied to participation in social practices, such as those within CCS projects, a key goal of this study was to examine evidence of the degree to which participation in different activities of scientific research was related to EE learning outcomes. Early on, Shirk et al. (2012) focused on the ‘stages of the scientific process’ in which people participate, ranging from contributory to collaborative to co-created as described above, and Dillon et al. (2016) drew a similar distinction between science-driven and transition-driven citizen science. As several authors have pointed out, what influences learning is not just participation but also the degree of control that participants have over the scientific process (Shirk et al. 2012; Haklay 2013).

In the end, most typologies of CCS and related approaches categorize projects by the degree or extent to which members of the public participate in the main activities of scientific research (Haklay 2013; Wiggins & Crowston 2011; Eitzel et al. 2017; Shirk et al. 2012), and studies in the review frequently cited these typologies when describing their participatory approaches. Consequently, we argue that authentic participation in one or more of these main activities of scientific research can be considered the ‘instructional approach’ that CCS projects offer the fields of environmental and science education. Therefore, for the purposes of this review, we used an aggregate of the categories offered by all of these typologies, rather than delving into the sociology and philosophy of science literature.

Identifying science and environmental education outcomes of CCS

As an educational approach, CCS intertwines science learning outcomes and environmental learning outcomes, both of which we had to consider in our review. Wals et al. (2014) argue that citizen science may serve as a nexus between science education, which focuses on teaching knowledge and skills, and environmental education, which additionally focuses on values and behaviors with respect to the environment, in order to produce a public that can respond to pressing environmental problems. Lindgren et al. (2021) further argue that the overlapping goals of science education and environmental education converge on the practices of questioning, analysis, and interpretation as key outcomes, which resonate with the key features of CCS activities described above. Hence, in examining the evidence for CCS in promoting EE learning outcomes, we drew on previous research on both science education and environmental education outcomes to develop our analytical frame.

Interest in the potential of CCS to improve public understanding of science as well as youth science education (Bonney et al. 2016; Kloetzer et al. 2021) has recently skyrocketed. Researchers and educators view CCS as a strategy to facilitate meaningful engagement in authentic scientific inquiry and investigation of place-based phenomena (Harris et al. 2020; Calabrese Barton, 2012; Mueller et al. 2012). This is in part because effective science education reflects the ways scientists actually work (National Research Council, 2009), and participation in different science practices may spur different forms of scientific reasoning and sensemaking (Hayes et al. 2020). In a comprehensive literature synthesis, Phillips et al. (2018) identified science learning outcomes that might result from CCS participation (science content knowledge, science inquiry skills, self-efficacy with science and the environment, knowledge of the nature of science, and science interest). We used these outcomes to analyze the articles included in our review, focusing on environmental science as the domain rather than on the natural sciences more broadly.

Beyond science-focused learning outcomes, CCS is a promising strategy for achieving broader environmental education goals that include but are not limited to science learning. To identify these goals, we turned to scholarship focused on defining key EE outcomes for individuals and communities, which range from content knowledge to civic engagement, nature connectedness, pro-environmental behavior, and care for the natural world (Clark et al. 2020; Jacobson et al. 2015; Krasny, 2020). Using a Delphi study of EE professionals, Clark et al. (2020) identified five core outcome areas for EE: environmentally related action and behavior change; connection to nature; improving the health of the environment; improving social and cultural aspects of the human experience; and learning the skills and competencies necessary to engage in environmentally related decision-making and behaviors. These outcome areas build on and align with the key components of environmental literacy identified by the North American Association for Environmental Education (Hollweg et al. 2011).

In addition to these outcome areas, we considered areas on which EE researchers are increasingly focused and which reflect the capacities of individuals and communities to help resolve complex social-ecological issues (Ardoin et al. 2013; Stevenson et al. 2013). First, CCS projects can provide opportunities for people to participate in and contribute to the life of their community, for example, through volunteer water quality monitoring of local streams, fostering scientific literacy that includes and extends beyond K-12 settings (Roth & Calabrese Barton, 2004); hence, we considered ‘community connectedness and cooperation’ as a possible EE outcome of CCS. We also considered ‘environmental behaviors and stewardship’ as an EE outcome, drawing on research that emphasizes the relationship between participation, sensemaking, and stewardship (Korfiatis & Petrou, 2021), and between sense of place, connection to nature, and environmental behaviors (Gosling & Williams, 2010). When participants see the scientific work they have done on a CCS project taken up in conservation efforts or policy, this can influence the degree to which some participants experience stewardship learning outcomes (Ballard et al. 2017b). Finally, CCS researchers have examined ‘sense of place’ and associated place values, including place identity and place attachment (a positive connection or emotional bond with a place; Gosling & Williams, 2010), and found that these constructs were associated with some kinds of CCS participation (Haywood et al. 2016). We view these constructs, which we cluster together as ‘place values’, as parallel to Clark et al.’s (2020) core EE outcome of connection to nature (the extent to which an individual feels part of nature; Schultz, 2001).
In summary, by encompassing key outcome areas for science and environmental education, we aimed to capture the particular learning outcomes that may be afforded by participation in environmental research and monitoring.

Reviews of research in CCS

Given the several systematic reviews of empirical research in EE and the increased attention to CCS as a strategy for EE, we conducted a systematic review of the peer-reviewed academic literature to shed light on the empirical evidence linking the two. Few reviews of this nature and scope exist. Peter et al. (2019), for instance, reviewed the literature, through 2017, on the participant outcomes of biodiversity citizen science projects, but used a narrower definition of CCS and reviewed only fourteen studies. Others have focused on climate change education outcomes (Groulx et al. 2017), on environmental monitoring (Stepenuck & Green, 2015), or on aspects of participation rather than measured learning outcomes (Vasiliades et al. 2021). In our review, we used the expansive definition of CCS described above, grounded in the historical development of the field, to better understand what learning outcomes have been documented across the broad spectrum of project types and environmental topics that exist in practice, focusing on outcomes relevant to EE (details in Methods).

Our research questions guiding this review were:

What are the characteristics of CCS projects that reported EE learning outcomes?

What evidence do the studies provide for EE learning outcomes achieved by CCS projects?

How did participation in specific scientific research activities impact EE learning outcomes?

What research methods and approaches have been used to study the EE learning outcomes of CCS, and what are the implications for future research?

Methods

Systematic review approach

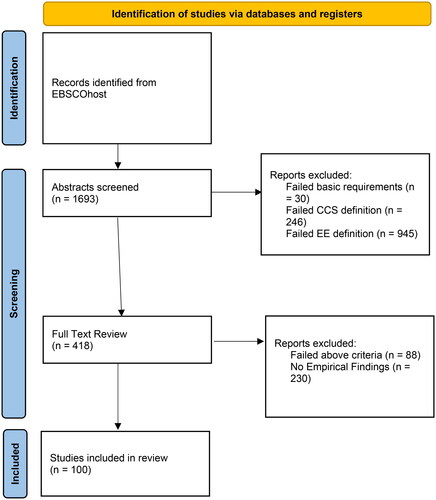

Marcinkowski (2003) describes the purposes of reviews of research: ‘(a) to identify research studies; (b) to describe or characterize research studies; (c) to critique research studies; and (d) to summarize or synthesize the results or claims of research studies’ (p. 183). As Grant & Booth’s (2009) typology suggests for all reviews, we systematically searched the literature, applied strict inclusion and exclusion criteria, and synthesized what is known about CCS as a process of EE from the body of literature. We approached this review through a systematic qualitative analysis with the primary goals of identifying, describing, and synthesizing the claims of studies across diverse methods and research paradigms. We provide a PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram of our process (Figure 1) to report transparently how the review was conducted and what was found (Page et al. 2021).

Figure 1. PRISMA flow diagram of systematic review methods based on Moher et al. (2009).

Literature search

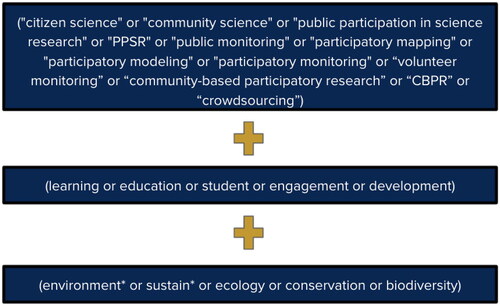

We began our review by performing pilot searches with different terms to identify articles on CCS and EE learning outcomes, reflecting the variety of terms used across multiple fields, as discussed above. For all searches we used EBSCOhost to search all the databases included in the University of California, Davis subscription, which included Academic Search Complete as a general database; education indexes such as ERIC, Education Source, and EdArXiv; and environment indexes such as Ecology Abstracts and BIOSIS. We confirmed that all the major journals of the environmental education field were included in these databases. Terms at this early stage included but were not limited to: environmental education, civic engagement, youth development, participatory, and conservation. We examined the returns of each search to determine whether we were gaining relevant articles; those results led us to the final list of keywords in Figure 2, which we used to conduct an unqualified search (meaning the database searched the title, abstract, subject, keywords, and author) with no start date and ending at our search date of September 2018 (see below for Limitations).

In Figure 2, the first nesting of terms aims to capture all articles in the area of community and citizen science. With feedback from a diverse set of colleagues in the EE and CCS fields, we intentionally included a broad array of participatory research approaches as search terms to ensure that the search captured the diverse forms of public participation in science that can be considered CCS under our earlier definition. To limit results to articles that included research on educational outcomes, we included the second nesting of terms. The final nesting of terms aims to limit included articles to papers on environmental topics and to exclude those from other scientific fields. We also restricted the search to peer-reviewed articles written in English. This produced 1639 unique articles for initial review, published before September 2018, which marked the end of the grant funding and the target timeframe for this component of the eeWorks initiative. (For a further scan of the literature from 2018–2023 to preliminarily examine whether themes persisted, please see Discussion.)

Identifying articles for inclusion

We imported citations and abstracts from the keyword search into Covidence systematic review management software (Babineau, 2014) and used a decision tree to exclude sources that did not meet our criteria. The decision tree was refined and calibrated through team member review of a pool of 20 articles. Team members then reviewed random subsets of abstracts until each abstract had been reviewed by two people. The decision tree continued to be refined during meetings held to resolve conflicts between readers. Abstracts with unclear wording about EE outcomes were categorized as ‘maybes’ and prioritized for full-text review.

Articles had to be peer-reviewed and written in English; book reviews and dissertations were excluded. Authors of articles had to identify their approach using at least one of our CCS keywords. Additionally, at this phase of screening, abstracts had to include at least one of the following participant outcomes (see our final list of EE learning outcomes defined below): knowledge of environmental science, environmental science skills, environmentally responsible behavior change, attitude toward the environment, and/or participation in environmental science, which the North American Association for Environmental Education identifies as key components of environmental literacy (Hollweg et al. 2011). Among the most commonly excluded articles were those on public health topics without an explicit environmental focus, such as food access and urban planning. Applying the decision tree criteria, we excluded 74% (n = 1221) of the initial article pool (Figure 1).

Following abstract screening, the remaining 26% of articles (n = 418) went to full-text review. This process involved a higher level of scrutiny and deeper reading of articles and led to the exclusion of an additional 88 articles (5% of the initial pool) that failed the decision tree after full-text review. We then retained only articles that reported empirical findings on participant outcomes, resulting in 100 articles (6% of the initial pool) that met the decision tree criteria and empirically investigated participant outcomes related to EE. These 100 articles were included for full coding and analysis.
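The screening counts above can be checked with a short calculation. The sketch below is our illustration rather than part of the review's published workflow: it derives the number of articles sent to full-text review, the number remaining after full-text screening, and the percentages of the initial pool, using only the counts reported in this section. The number of articles dropped for lacking empirical outcome data is derived by subtraction and is not stated directly in the text.

```python
# Counts reported in the Methods text.
INITIAL_POOL = 1639
EXCLUDED_AT_ABSTRACT = 1221
EXCLUDED_AT_FULL_TEXT = 88
INCLUDED = 100


def prisma_flow(initial, excluded_abstract, excluded_full_text, included):
    """Derive intermediate screening counts and percentages of the initial pool."""
    full_text_reviewed = initial - excluded_abstract
    passed_full_text = full_text_reviewed - excluded_full_text
    # Derived by subtraction; the text does not report this number directly.
    no_empirical_outcomes = passed_full_text - included

    def pct(n):
        # Whole-number percentage of the initial pool, as reported in the text.
        return round(100 * n / initial)

    return {
        "full_text_reviewed": full_text_reviewed,
        "passed_full_text": passed_full_text,
        "no_empirical_outcomes": no_empirical_outcomes,
        "pct_excluded_at_abstract": pct(excluded_abstract),
        "pct_full_text_reviewed": pct(full_text_reviewed),
        "pct_excluded_at_full_text": pct(excluded_full_text),
        "pct_included": pct(included),
    }


flow = prisma_flow(INITIAL_POOL, EXCLUDED_AT_ABSTRACT, EXCLUDED_AT_FULL_TEXT, INCLUDED)
for step, value in flow.items():
    print(f"{step}: {value}")
```

The derived percentages (74%, 26%, 5%, and 6%) match those reported, which indicates that the 5% and 6% figures are shares of the initial pool of 1639 rather than of the 418 articles that reached full-text review.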

Developing coding scheme and process

We developed categorical codes both deductively and inductively: we began by deriving codes from our original study objectives, then added codes and sub-codes as they emerged from article review (Table 1). Further, because CCS projects aim to produce data or results used for scientific research, monitoring, or decision-making, we see participation in those scientific research activities as the most distinctive educational approach that CCS projects employ compared with other EE initiatives. We therefore endeavored to identify the nuances of participation in the articles, using sub-codes for the major activities of scientific research as explained below (Eitzel et al. 2017; Haklay, 2013; Shirk et al. 2012). We drew on Shirk et al.’s (2012), Haklay’s (2013), and Wiggins and Crowston’s (2011) categories of ‘parts of the scientific process’ in which members of the public participate to arrive at our categories. We recognize that, in reality, there is no singular scientific method; instead, there are multiple ways in which scientists may engage in inquiry across disciplines and paradigms (Ault, 2023; NRC, 2012). While these categories can, on the one hand, be read as overly rigid and prescriptive, on the other hand we recognize that they are also iterative, fluid, and overlapping (Ault, 2023), and depend heavily on the discipline and/or paradigm at play. For the purposes of our analysis, the goal was to categorize the myriad activities and tasks that make up an environmental science research or monitoring project, rather than to discern scientific reasoning practices, such as those described in the U.S. Next Generation Science Standards (NRC, 2012), or to encompass the nuances of each discipline.
We therefore delineated what the CCS typologies commonly recognize as the ‘main scientific research activities’ in which people in CCS participate (codes in Table 1) and created four clusters based on the predominant activities for each (planning, data collection, data analysis, and reporting; see Table 3 for details).

Table 1. Example main codes for analysis of empirical articles.

Table 2. Environmental education learning outcomes and definitions.

For participant learning outcome codes, we brought together key outcome areas for science education and environmental education, taking into account the particular context of people participating in CCS. Specifically, as explained above, we included versions of EE outcomes that drew on Clark and colleagues’ (2020) five core EE outcomes and Hollweg and colleagues’ (2011) environmental literacy outcomes, tailored to the CCS context, such as environmental stewardship and behaviors (Phillips et al. 2018), community outcomes (Jordan et al. 2012), and place-based outcomes (Haywood et al. 2016; Kudryavtsev et al. 2012) (Table 2). We also included the science-focused learning outcomes of citizen science identified by Phillips et al. (2018) through a literature review of science education research, with a focus on the domain of environmental science; many of these outcomes were also used in a recent, narrower review of biodiversity citizen science projects by Peter et al. (2019) (Table 2).

Using the main categories and sub-codes, coders filled out a Google form for each article, recording each non-mutually exclusive sub-code as present or absent. We established inter-rater reliability by having two coders code the same 20 articles, discuss differences in coding, and revise and refine the codes and definitions accordingly. Both coders then independently coded another five articles and agreed on every code. One coder coded the remaining articles. In addition to examining each learning outcome across the full set of articles, we wanted to examine how different outcomes might be linked to particular CCS approaches to participation, and whether participation in more than one activity of scientific research led to particular learning outcomes. We therefore determined the number of articles reporting each learning outcome for each cluster, and examined these articles to identify how authors described the ways each type of participation may have led to those learning outcomes.
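For presence/absence coding like this, simple percent agreement is one way to summarize how closely two coders match. The sketch below is a hypothetical illustration: the article IDs, the four-code codebook, and the coded sets are invented for the example, and the review itself reports only that the two coders agreed on every code for the five calibration articles. Each (article, sub-code) pair counts as one decision, so a code that both coders left absent counts as an agreement.

```python
def percent_agreement(coder_a, coder_b, codebook):
    """Return (agreement, disagreements) for two coders' presence/absence codes.

    coder_a and coder_b map article IDs to the set of sub-codes marked present;
    every code in the codebook that is not in the set is treated as absent.
    """
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both coders must code the same set of articles")
    for coded in (*coder_a.values(), *coder_b.values()):
        unknown = coded - set(codebook)
        if unknown:
            raise ValueError(f"codes not in codebook: {sorted(unknown)}")

    decisions = 0
    disagreements = []
    for article in sorted(coder_a):
        for code in codebook:
            decisions += 1
            if (code in coder_a[article]) != (code in coder_b[article]):
                disagreements.append((article, code))
    agreement = (decisions - len(disagreements)) / decisions
    return agreement, disagreements


# Hypothetical codebook and codings, for illustration only.
CODEBOOK = ["data_collection", "data_analysis",
            "science_content_knowledge", "stewardship"]
coder_a = {
    "art01": {"data_collection", "science_content_knowledge"},
    "art02": {"data_collection", "data_analysis", "stewardship"},
}
coder_b = {
    "art01": {"data_collection", "science_content_knowledge"},
    "art02": {"data_collection", "stewardship"},
}

agreement, disagreements = percent_agreement(coder_a, coder_b, CODEBOOK)
print(f"agreement = {agreement:.3f}")  # 7 of 8 decisions match
print("to discuss:", disagreements)
```

Returning the list of disagreeing pairs, not just a score, mirrors the calibration step described above, in which coders discussed their differences and refined the code definitions.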

Results

RQ 1) What are the characteristics of CCS projects that reported EE learning outcomes?

We report here first the age groups of participants, whether projects were primarily field-based, online, or both, and the main topics and taxa that CCS projects focused on. We then report the specific scientific activities in which people participated in order to reveal the predominant ways people participated in CCS in studies with EE outcomes.

Age groups

A slim majority of the studies targeted adults (54%), with 13% of all articles focused on college students. Twenty-nine of the articles focused on young people aged 4–18 years, with middle-school-aged youth (11 articles) and high-school-aged youth (10 articles) studied most often. Over one-third (35%) of the studies did not report the age group of the participating audience. Some of these were large app-based projects in which participants did not report demographic information (e.g., iNaturalist), though many articles referred to ‘the community’ in ways that implied participants were primarily adults.

Field-based vs. online

The majority of articles (81) reported on CCS projects in which participants were involved in field-based settings, where the primary activities were outdoors and/or in person: for example, a BioBlitz (a one-day or short-term event to inventory biodiversity in a bounded location such as a park or city), bird and plant phenology projects, or water quality monitoring. Relatively few articles (5) reported involvement that was entirely online (e.g., an online wildlife camera image identification platform or other image classification platforms such as Zooniverse); this low number likely reflects the review end date. Fourteen articles reported on projects that included both field-based and online settings, such as a participatory mapping project for community bushfire preparation in Tasmania that involved field-based data collection as well as web-based digital mapping (Haworth et al. 2016). In another example, university students created walking maps of health hazards with community members in South Carolina while also using GIS to analyze spatial disparities (Wilson et al. 2012).

Environmental topics or issues

The articles reported on CCS projects that investigated a broad range of environmental issues. These ranged from more conventional scientific topics, such as particular taxa (14 articles focused on insects, and 8 articles each on birds, mammals, and plants) or biodiversity in general (8 articles on terrestrial and 4 on aquatic biodiversity), to explicitly socio-scientific topics. Fifteen studies on environmental health examined human exposure to environmental pollutants, with people collecting data about symptoms and health impacts of pollution (7 articles on air quality and 8 on water quality monitoring). Additionally, fifteen studies focused on environmental social science, in which local people gathered perspectives on natural disaster preparedness or used participatory mapping and photovoice to document habitat and natural resource loss or people’s willingness to pay for ecosystem service restoration. There were also 23 articles on broader place-based conservation topics, nine on habitat loss and deforestation, and nine each on invasive species and on food- and agriculture-related conservation issues.

Specific scientific research activities in which people participated

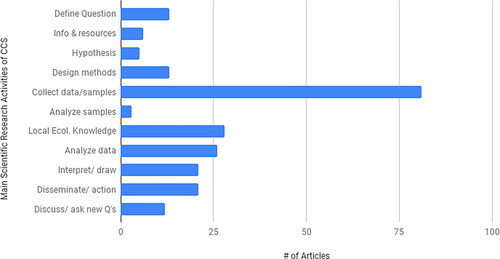

A key goal of this study was to examine the specific ways in which participants took part in CCS projects that had EE outcomes, rather than simply placing everything under the broad umbrella of ‘participation.’ Unsurprisingly, we found that the predominant way participants engaged in scientific research was by collecting data or samples (81 articles) (Figure 3). However, many studies also involved participants in stages after data collection, in which they analyzed data (26), interpreted data or drew conclusions (21), or disseminated or translated results into action (21). We provide examples of how people participated in these activities below. Relatively few articles reported on projects in which participants were involved in early stages of scientific research, such as choosing or defining the research question (13) or designing data collection methods (13) (Figure 3). Somewhat surprisingly, we also found a number of articles (28) that engaged participants in documenting and reporting their own local ecological knowledge (LEK). For this category, we define LEK as the expertise of people who have wisdom, experience, and practices associated with local ecosystems (Olsson & Folke, 2001). While anthropologists and ethnobotanists often document LEK as part of their research, which may or may not be participatory, we included articles only if participants intentionally documented their own knowledge in a participatory way to contribute to a scientific project.

Figure 3. Number of articles reporting participants taking part in each of the main scientific research activities of CCS.

We also clustered these more detailed activities into four groups to look for patterns in the ways people were involved in scientific research: planning, data collection, data analysis and interpretation, and reporting. We then determined the number of articles in each cluster (Table 3). This allowed us to examine potential relationships between the ways people participated and the EE outcomes we report below.

Table 3. Number of articles with participants engaging in clustered scientific research activities of CCS.
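Counting articles per cluster, and cross-tabulating outcomes by cluster as described in the Methods, amounts to mapping each coded activity to its cluster and tallying. The sketch below assumes a hypothetical mapping: the activity names are shorthand for activities named in this section, only seven of the 11 delineated activities are shown, and assigning LEK documentation to the data collection cluster is our assumption rather than the review's definition. The three example articles are invented. An article counts once per cluster even if it involves several activities within that cluster.

```python
from collections import Counter, defaultdict

# Hypothetical mapping from activity sub-codes to the four clusters named in
# the text. The review's full set of 11 activities is not reproduced here, and
# placing LEK documentation under data collection is an assumption.
ACTIVITY_TO_CLUSTER = {
    "define_question": "planning",
    "design_methods": "planning",
    "collect_data": "data collection",
    "document_lek": "data collection",
    "analyze_data": "data analysis and interpretation",
    "interpret_data": "data analysis and interpretation",
    "disseminate_or_act": "reporting",
}


def clusters_for(activities):
    """Set of clusters touched by an article's coded activities."""
    return {ACTIVITY_TO_CLUSTER[activity] for activity in activities}


def count_by_cluster(articles):
    """Number of articles in each cluster; an article counts once per cluster."""
    counts = Counter()
    for record in articles.values():
        counts.update(clusters_for(record["activities"]))
    return counts


def outcomes_by_cluster(articles):
    """For each cluster, the number of articles reporting each learning outcome."""
    table = defaultdict(Counter)
    for record in articles.values():
        for cluster in clusters_for(record["activities"]):
            table[cluster].update(record["outcomes"])
    return table


# Invented example articles, for illustration only.
articles = {
    "art01": {"activities": {"collect_data"},
              "outcomes": {"science content knowledge"}},
    "art02": {"activities": {"collect_data", "analyze_data", "interpret_data"},
              "outcomes": {"science content knowledge", "science inquiry skills"}},
    "art03": {"activities": {"define_question", "collect_data", "disseminate_or_act"},
              "outcomes": {"environmental behavior and stewardship"}},
}

print(count_by_cluster(articles))
for cluster, outcomes in sorted(outcomes_by_cluster(articles).items()):
    print(cluster, dict(outcomes))
```

Because clusters are collected into a set per article, an article such as art02, which both analyzed and interpreted data, adds only one to the data analysis and interpretation cluster.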

Because participation in more activities, or in a wider range of them, may lead to more or different learning outcomes from CCS, we also examined the number of articles that reported participants engaging in one, two, three, or four or more of the 11 delineated scientific research activities. The majority of articles (56) reported participants involved in only one of the main activities of scientific research, primarily data collection. However, 14 reported participants involved in two activities; nine reported people involved in three (typically adding analysis and/or interpretation to data collection); and 21 reported involvement in four or more. The latter took a range of forms but very often focused on an environmental justice issue of urgent concern to the local community. For example, Hoover (2016) reported that Mohawk (Akwesasne) community members, specifically a midwife and mothers, designed and implemented all aspects of an environmental health study of the impacts of PCBs in local water in collaboration with SUNY Albany scientists. In a completely different disciplinary context, Allen et al. (2015) reported how local residents on the steep slopes of Bogotá, Colombia, initiated a mapping project with an anthropologist to provide evidence of dwelling practices in an area demarcated for ecological preservation.
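The breadth-of-participation tally above is a simple binning of per-article activity counts. The sketch below is illustrative only: the review does not publish per-article counts, so the input list is reconstructed from the reported distribution, with 4 standing in as a placeholder for every article in the four-or-more group. It confirms that the four groups account for all 100 included articles.

```python
from collections import Counter


def breadth_bins(activity_counts):
    """Bin articles by the number of research activities participants took part in."""
    bins = Counter()
    for n in activity_counts:
        if n < 1:
            raise ValueError("every included article involves at least one activity")
        bins["4+" if n >= 4 else str(n)] += 1
    return bins


# Per-article counts consistent with the distribution reported above. The text
# gives only "four or more" for the last group, so 4 is used as a placeholder.
activity_counts = [1] * 56 + [2] * 14 + [3] * 9 + [4] * 21

bins = breadth_bins(activity_counts)
print({label: bins[label] for label in ("1", "2", "3", "4+")})
print("total articles:", sum(bins.values()))
```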

RQ 2) What evidence do the studies provide for EE learning outcomes achieved by CCS projects?

In answering our second research question, we found that nearly every study reported positive gains in EE learning outcomes for participants. Because study methods varied widely across qualitative and quantitative approaches, we cannot quantify the impacts within each outcome, but we found compelling evidence for five clusters of outcomes: science content knowledge; science inquiry skills and understanding of the nature of science; positive attitudes or interest toward science, the local place, and the environment; community connectedness and cooperation; and self-efficacy, identity, agency, and environmental behavior and stewardship. Only a few articles reported no change, and only for two outcomes: understanding of the process and nature of science (two of the 17 articles that measured it; see Table 4) and interest in science or the environment (two of the eight articles that measured it; Table 4). All other articles reported positive changes.

Table 4. Number of articles reporting learning outcomes (all outcomes positive except those marked *: knowledge of the nature of science and interest in science or the environment).

Science content knowledge

The most commonly documented learning outcome was gains in science content knowledge (56 articles, all positive; Table 4). In all of these articles, the facts and concepts learned related to the topic of the CCS project (insects, birds, biodiversity, water quality, etc.). For example, Hesley et al. (2017) reported increased knowledge of coral reef ecology through participation in a reef monitoring and restoration project; Langsdale et al. (2009) reported that participatory mapping used to inform models of a water resource system helped participants understand causal relationships across that system; and Haywood et al. (2016) reported that participants in a beached seabird monitoring project displayed increased knowledge of bird biology, behavior, and ecology, as well as of ecosystem components, structure, and processes.

Science inquiry skills and understanding the nature of science

A number of articles reported that participants increased their science inquiry skills (32 articles, all positive; Table 4). Importantly, these gains were either observed by the authors or self-reported by participants in surveys or interviews, rather than measured through assessments of actual skills. For example, some authors reported that participants gained research skills (Nicosia et al. 2014; Wilson et al. 2012) and accuracy in data collection tasks (Becker et al. 2013), while others were more specific: improved camera deployment skills, map interpretation skills, data analysis skills, and use of monitoring tools. Brannon et al. (2017) observed students learning to distinguish small-mammal skulls successfully; Sabai and Sisitka (2013) found that fishers and mangrove restorers, when involved in developing both scientific and locally derived indicators of mangrove health, gained those skills and shared them with other local actors.

Seventeen articles investigated participants’ understanding of the process and nature of science (NOS); 15 reported positive outcomes and two reported no change (Table 4). Mitchell et al. (2017) reported on students contributing to the ClimateWatch citizen science program and writing journal articles on their findings. Through this process, students learned to critique the reliability of data contributed to CCS projects and to ensure that the data they contributed were reliable. Cronje et al. (2011) found a significant increase in participants’ understanding of scientific methodology, validity, and reasoning in an invasive species program. In online settings, Jennett et al. (2016) found that CCS participants reported an increased understanding of the process and nature of science, though they did not specify in what respects. However, Scheuch et al. (2018) found that teachers’ knowledge of the nature of science did not change during their citizen science-focused professional development program, even though this was an explicit goal. Similarly, Brossard et al. (2005) found no significant difference in adult participants’ understanding of the scientific process in a bird monitoring project, and Jordan et al. (2011) found little change in this outcome in their survey of participants in an invasive species program. These null findings for both NOS and interest are consistent with previous studies of CCS projects, which also found some negative or null results for these outcomes (Druschke & Seltzer, 2012; Brossard et al. 2005).

Positive attitudes or interest toward science, the local place, and the environment

Sixteen articles found positive changes in attitudes toward science and the environment (Table 4). Because the distinction between attitudes and interest as constructs can be blurry, we included in this category only articles that explicitly framed their investigations as documenting ‘changes in attitudes.’ A common change reported by many of these articles was participants’ increased positive emotions toward the focal species of the project. For example, volunteers working alongside professional scientists to evaluate hedgehog urban habitat use reported feeling ‘closer to hedgehogs’ (Hobbs & White, 2015), and Lynch et al. (2018) reported participants gaining positive feelings toward insects through participation in an entomology citizen science project. Land-Zandstra et al. (2016) reported that participants who contributed to citizen science through their smartphones, but who had limited involvement with science in the rest of their lives, felt that ‘science can have a positive impact on our lives.’

Nine articles reported positive changes in what we defined as place values and connection to nature (Table 4; e.g., Gosling & Williams, 2010; Kudryavtsev et al. 2012). Many focused on participatory mapping projects. For example, Bergós et al. (2018) reported that local people who participated in mapping wildlife in rural Uruguay had more positive relationships with local animals and the environment afterward. Similarly, Allen et al. (2015) reported that participants learned how to make and use maps to document and plan for dwelling spaces and were able to ‘engage with complex planning decisions and ethical questions concerning the social function of the land, the contention of further sprawl and ecological carrying capacity of the territory’ (p. 269). Other projects reporting changes in place values addressed water quality monitoring and urban garden soil testing. Doyle and Krasny (2003) reported on a project in which local gardeners took part in a participatory rural appraisal of their community garden in New York and youth interviewed local residents; the youth learned how previous land use related to the community garden.

Eight articles investigated interest in science and the environment as a learning outcome (Table 4). Six found positive gains, while two reported no change in interest after participation in CCS projects. Everett and Geoghegan’s (2016) study of OPAL (Open Air Laboratories) citizen science biological surveys in the UK found that participants reported renewed interest in amateur naturalist and biological recording activities. However, Nicosia et al. (2014) found no significant change in this outcome after students investigated ‘willingness to pay’ for ecosystem services as part of their high school biology course.

Community connectedness and cooperation

Thirty articles reported positive changes in community connectedness and cooperation (Table 4). We identified two sub-themes within this category: social connectedness and stakeholder cooperation/social learning. Several articles reported impacts on social connectedness. For example, Haworth et al. (2016) reported that participatory mapping activities in a bushfire risk workshop in Australia contributed to community connectedness and knowledge sharing, both theorized to improve community resilience and disaster preparedness. Several other articles reported impacts on stakeholder cooperation and social learning. For example, Bergós et al. (2018) reported that a participatory wildlife monitoring project in Uruguay involved both youth and adults in collecting and analyzing data on local wildlife, as well as in sharing local ecological knowledge and discussing the results. The collaborative workshop process increased trust among the parties involved, which included village residents, a school, and a conservation organization. Similarly, Henly-Shepard et al. (2015) reported that participatory modeling activities undertaken by a community disaster planning committee in Hawai’i contributed to social learning. Individual reflection and group deliberation during these activities, in which committee members contributed local knowledge, facilitated social learning that helped the committee manage uncertainty and increase adaptive capacity.

Self-efficacy, identity, agency, and environmental behavior and stewardship

Studies found that participation in community and citizen science programs may contribute to participants’ development of identity and agency with science, their sense of self-efficacy to improve ecosystem health, and their ability to solve problems and take action. Eleven articles reported positive outcomes for participants’ self-efficacy toward science and the environment (Table 4). Grasser et al. (2016) reported that children participating in an ethnobotany project showed increased confidence in their ability to produce something of value, both in general and specifically for an adult audience, and Jordan et al. (20106) reported that after a collaborative planning and monitoring process, participants in the Virginia Master Naturalists program had increased confidence in their ability, and in their strategies, to address a natural resource problem. Four articles reported positive changes in either participants’ identity with science or the environment (two articles) or their agency with science or the environment (two articles) (Table 4). For example, Calabrese Barton and Tan (2010) found that young people who led a community-based research project in their after-school program enacted agency with the physics knowledge they had learned by investigating whether and how their neighborhood was an urban heat island and sharing their findings with community members. Merenlender et al. (2016) found that adults who had been trained as naturalists and subsequently volunteered for local citizen science projects increasingly identified as people who understand and do science in their daily lives, and were recognized that way by others.

Twenty-nine articles provided empirical evidence of positive environmental stewardship and behavior outcomes (Table 4). Because this is a substantial proportion of articles reporting important EE outcomes from CCS, we identified three sub-themes within this category: sharing information with others, civic action, and stewardship as site management. First, one commonly reported behavior and stewardship outcome of citizen science projects is participants sharing information with people in their networks. For example, Lewandowski and Oberhauser (2017a) reported that 95% of participants in butterfly citizen science projects reported talking to others about butterflies or conservation and recruiting others, among other conservation actions. Specifically, 73% of participants reported talking informally with others about butterflies; 69% of participants who volunteered more of their time had higher odds of reporting that they involved others in monitoring or conservation. Second, many articles described CCS participants leveraging the project for civic action to advance political goals. For example, participants used data from FracTracker, a participatory geographic information system focused on unconventional natural gas development, to take action (Malone et al. 2012): 57% of individual survey respondents used FracTracker in discussions with regulatory officials, and 67% of nonprofit respondents used it to write to state representatives and regulatory officials. Third, many articles reported that participants subsequently or concurrently improved local habitats through stewardship and restoration. For instance, participants in the East Bay Academy for Young Scientists (EBAYS), a community science program for high school students in California, found a lack of biodiversity and high levels of toxins in a local urban creek, which prompted them to remove trash and invasive species (Ballard et al. 2017b).

RQ 3) How did participation in specific scientific research activities impact EE learning outcomes?

In this section, we report the number of articles that included participation in each cluster of scientific research activities, and the learning outcomes reported for each cluster, to examine how different CCS approaches to participation might relate to particular learning outcomes (Table 5). We do not attempt to make causal claims from our data, but for each of these clustered activities, the studies that examined each of the learning outcomes reported in Table 5 found positive outcomes.

Table 5. EE learning outcomes by clustered scientific research activities of CCS.

Participation in planning

Of the 18 articles with volunteers participating in planning scientific research, two-thirds (12; Table 5) reported positive gains in science content knowledge. In the majority of these 18 studies, participants helped shape the initial research questions, mostly through collective processes. Many were participatory modeling or mapping projects in which participants helped design the mapping of vernal pools in Maine, USA (McGreavy et al. 2016) or of dwelling practices in Bogotá, Colombia (Allen et al. 2015), or helped set the agenda and select water management models in Vermont, USA (Gaddis et al. 2010). All of these reported increased participant understanding and awareness of the environmental or social systems being studied and attributed this to early meetings that educated participants in depth about the issue of concern so that they could plan appropriate mapping or modeling and action efforts.

Participation in data collection

Of the 97 articles with volunteers participating in data collection, many reported positive gains in environmental science content knowledge (55), science inquiry skills (30), and environmental behavior and stewardship (28), and two reported community-level outcomes (Table 5). Many of those that measured gains in content knowledge were projects focused on particular taxa or habitats that provided explicit support for collecting high-quality data, including taxonomic or ecological disciplinary content as well as the scientific skills required. For example, volunteers who collected samples of staghorn corals to propagate and monitor coral reefs in the Caribbean gained knowledge of coral reef ecology (Hesley et al. 2017), and participants who collected forensic data on seabirds washed up on beaches in the Pacific Northwest gained knowledge of bird biology and ecology (Haywood et al. 2016).

Participation in data analysis and interpretation

Of the 33 articles with participants involved in data analysis and interpretation, a majority (20) reported positive gains in science content knowledge, and nearly half (16) reported gains in science inquiry skills (Table 5). Several of these studies examined online CCS projects in which participation focused on classification tasks for data analysis, described by Jennett et al. (2016) as ‘volunteer thinking’ as opposed to more passive online tasks. As an example of how participation in analysis is linked to knowledge and skill gains, in their review of several online CCS projects, Jennett et al. (2016) found that, along with content knowledge, participants gained ‘pattern recognition skills’ and an enhanced understanding of how science works, including the use of rigorous protocols, the importance of learning from failures, and continued exploration. At the other end of the spectrum, in an intensely field-based setting, youth conducting a Participatory Rural Appraisal (PRA) of their own community garden analyzed their interview data and gained an understanding of their neighborhood’s environmental and social history, soil chemistry, and interviewing and mapping skills, as well as a stronger connection to place (Doyle & Krasny, 2003).

Participation in reporting

Of the 25 articles reporting participants involved in scientific reporting, 15 reported positive gains in science content knowledge, and slightly fewer than half (11 each) reported gains in science inquiry skills and in environmental behavior and stewardship (Table 5). For example, Whitman et al. (2015) reported on a participatory action research project on farm slurry pollution in the UK in which local stakeholders worked with a River Trust not only on vegetation mapping but also on developing and disseminating a toolkit to other communities and presenting at conferences. Zeegers et al. (2012) involved teacher leaders in Australia not only in conducting bird studies with their students but also in writing up and disseminating their results through professional development workshops for other teachers. Taylor and Hall (2013) reported on young people who mapped their urban neighborhood while riding bikes and collecting GPS data, shared their findings with the neighborhood, and ultimately used their data to critique their built environment and neighborhood. These findings suggest that involving people in reporting scientific findings may have substantial positive impacts on these outcomes, particularly conservation and stewardship actions, which we discuss further below.

Participation in multiple scientific research activities

We also examined whether participating in a broad range of scientific research activities, rather than in only one, appears to relate to EE learning outcomes. For the 61 articles reporting participation in just one cluster of scientific research activities (typically data collection), we found evidence of positive gains across all the EE learning outcomes. Fifteen articles reported people participating in two of our clusters (typically data collection plus data analysis or planning), 14 in three, and ten in all four main clusters of scientific research as we defined them.

We examined these ten studies in greater detail to identify how such intensive participation in nearly all aspects of scientific research resulted in environmental learning outcomes. Five of the ten found positive impacts on environmental behavior and stewardship, many using strategies that engaged people in collective planning and dissemination as well as in individual data collection. These strategies gave participants multiple opportunities to make sense of the data they were collecting and made visible how their own science production was being used to answer collective questions or solve collective problems. Examples included monitoring the extraction and health of forest resources in Yunnan, China (Van Rijsoort & Jinfeng, 2005) and studying the siting of a waste facility in Los Angeles, California, USA (Dhillon, 2017). Both resulted in collective and individual behavior changes: participants in the former project changed their own harvest practices and created village-level policies (Van Rijsoort & Jinfeng, 2005), while participants in the latter changed their own trash-related behavior and advocated collectively as an environmental justice group (Dhillon, 2017). Although these projects are only a small subset of the 100 articles in the review, their participation across the range of research activities offers strategies that resulted in important EE learning outcomes.

RQ 4) What research methods and approaches have been used to study the EE learning outcomes of CCS, and what are the implications for future research?

Areas of publication over time

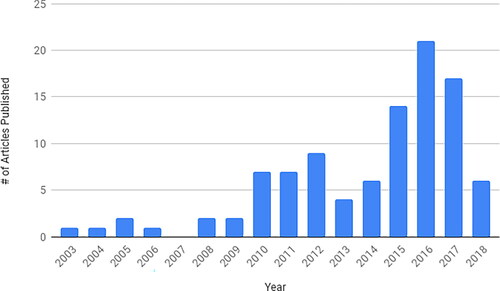

The 100 articles in our sample were published in 65 different journals across diverse fields (see full list in Appendix). The most frequent outlets were Ecology and Society (7 articles), Conservation Biology (6 articles), Biological Conservation (5 articles), and Journal of Science Communication (5 articles). The remaining 77 articles were distributed across 61 journals in many fields, reflecting the multidisciplinary nature of research on CCS and EE. We also found an increasing number of empirical journal articles exploring CCS with EE outcomes over time (Figure 4). The most articles were published in 2016, partly because several of these journals published special issues on citizen science that year (e.g., Journal of Science Communication, Conservation Biology, Biological Conservation). The trend indicates a rapid increase in research publications on CCS for EE.

Research designs

Ten different research designs were used across the studies we reviewed; over a quarter each used a case study approach (27) or a quasi-experimental approach with pre- and post-measures or a multiple-group design (26) (Table 6). Twenty-one articles used participatory approaches, which involve participants in scientific research activities; our search terms likely contributed to this large representation. Nearly all the studies, except the few noted above, reported uniformly positive findings for the outcomes they measured. This pattern may reflect the tendency of journals to publish mainly positive findings, a failing of academic publishing culture broadly as well as in the field of environmental education. It may also arise because the reported outcomes reflect what the researchers chose to study (see Discussion below), and because null or non-significant findings often go unreported outside of randomized controlled trials (Herrington & Maynard, 2019), a design none of our included studies used.

Table 6. Research designs used in studies of CCS for EE.

Research methods

The most common data collection method was structured survey instruments (n = 54), followed by interviews (n = 45) (Table 7). Surveys included open- and closed-ended questions, validated scales, self-reported assessments, and traditional knowledge assessments. Fifty-seven studies used more than one method to collect data, typically adding methods such as observations (n = 25) or focus groups (n = 21). Only six studies reported using quality control checks. Because environmental and science content knowledge was the most often studied and reported outcome, we examined the methods used to measure it in more detail to assess the robustness of the reported findings. Of the 56 studies that reported science content knowledge outcomes (all reporting positive gains), 25 used surveys to measure this outcome: 16 relied on self-reports and nine used pre/post assessments. Sixteen studies used interviews to measure science content knowledge gains: 12 relied on self-reports and four used pre/post-tests. The three studies that used focus groups to measure this outcome all relied on self-reports, as did one of the three studies using community meetings/listening sessions. Five studies used observations by researchers to determine knowledge gains. Below, we discuss the implications and concerns surrounding the predominant use of self-reporting to measure this outcome.

Table 7. Data collection methods used (n = 100).

Discussion

Characteristics of CCS projects with EE learning outcomes

The 54 articles reporting positive EE learning outcomes for adult participants in CCS provide compelling evidence that CCS can support lifelong environmental learning beyond the K-12 years (Roth & Lee, 2004) and can have the positive impacts on public engagement in science suggested in more conceptual articles by Bonney et al. (2016) and others. In addition, the 29 articles that found positive learning gains for young people ages 4–18 reinforce the growing interest in CCS in K-12 school settings as an effective approach for engaging students in science and science reasoning practices in and out of school (Shah & Martinez, 2016). Although we found relatively few empirical studies of online-only CCS projects, this is a rapidly expanding area of CCS, and research on it has likely increased since our 2018 search cut-off, including research on youth participation in online citizen science platforms like Zooniverse (https://www.zooniverse.org/), iSpot (https://www.ispotnature.org/), and iNaturalist (https://www.inaturalist.org/) (Aristeidou & Herodotou, 2020; Aristeidou et al. 2021).

While CCS approaches addressed a broad range of EE-relevant issues, a large portion of the articles focused on environmental social science issues. Although this primarily reflects what researchers chose to study, it may also reflect the CCS field’s growing interest in the concept of ‘citizen social science’ (Purdam, 2014; Kythreotis et al. 2019), particularly in Europe (Heiss & Matthes, 2017). This emerging area faces tensions arising from the differences between participatory approaches and standard social science survey and interview methods, but it is clearly a burgeoning area for the field (Albert et al. 2021).

Compelling evidence that participation in CCS results in EE learning outcomes: trends and implications for the field

Our systematic review of empirical research in CCS reveals substantial evidence that participation in environmental science can lead to important EE learning outcomes and offers a comprehensive picture of the EE learning outcomes that result across the range of CCS approaches to EE. These findings complement and support several conceptual and conjectural articles about how participation in environmental science might lead to important learning outcomes. First, the large number of articles that studied and found positive gains in science and environmental content knowledge (56 articles) is consistent with Phillips et al. (2018), who found this was one of the learning outcomes most often reported in their review of citizen science project websites. CCS programs can expect that when they train participants to identify and collect data (the most common type of participation), participants will likely gain knowledge of the target environmental science content of the project. However, it is concerning that even for this outcome, which can be assessed with a pre-post survey more easily than other, more nuanced constructs, researchers still relied heavily on self-reports rather than assessments. If CCS is to be more widely adopted as an approach to EE that addresses K-12 education standards such as the Disciplinary Core Ideas in the Next Generation Science Standards (NGSS Lead States, 2013) in the U.S., researchers should rely less on self-reports and more on directly observable gains in knowledge wherever possible.

The large number of studies (30) that documented positive community-level outcomes from CCS projects is notable, given that another recent review of civic engagement outcomes of EE programs found far fewer studies (Ardoin et al. 2022) and that these outcomes can be difficult to measure (Thomas et al. 2018). Environmental education researchers and practitioners are increasingly focused on community (Aguilar, 2018) and on the ways collective action is intertwined with EE (Ardoin et al. 2022). The large number of studies that focused on community connectedness and cooperation, often in the context of environmental health research, may reflect the growing focus on community science and other community-driven, rather than scientist-driven, forms of participatory environmental science (Cooper et al. 2021; Reid et al. 2021). Researchers in these fields may not identify their work as EE, but they are nevertheless affecting and measuring EE outcomes, as our review shows.

Promoting pro-environmental behavior change is a crucial goal in environmental education, yet achieving it remains a complex task for educators (Heimlich & Ardoin, 2008); we found evidence that CCS approaches may be particularly effective at achieving such outcomes. While Phillips et al. (2018) found that increased environmental stewardship was the third most common outcome self-reported by citizen science projects on their websites, little research evidence was available to support those claims. Outcomes involving participant behaviors like ‘sharing project findings with others’ indicate that CCS may increase volunteers’ conservation involvement by offering a sense of social connectedness (Lewandowski & Oberhauser, 2017b). Many of the CCS projects in which participants took action to improve habitat, or took other site management actions, intentionally integrated stewardship activities into the program that built on the data collection and citizen science work (e.g., Ballard et al. 2017b). Integrating participation in scientific research with stewardship activities may be particularly powerful and is consistent with recommendations to include direct environmental action, individual and collective, in EE programs (Ardoin et al. 2022; Dubois et al. 2018).

Although they are distinct outcomes, self-efficacy, identity, and agency with science and the environment are all important aspects of learning through environmental education that CCS is particularly well positioned to address (Phillips et al. 2018). We found that most articles reporting these outcomes involved participation in more than three of the scientific research activities, extending beyond data collection. We therefore suggest that CCS project designers recognize that outcomes like identity development require practicing one’s identities over time (Nasir & Hand, 2008) and do not necessarily result from every CCS project. While only 11 studies in our review focused on these constructs, research on these outcomes of CCS approaches has increased since 2018 and continues to provide evidence of positive and nuanced impacts (e.g., He et al. 2019; Williams et al. 2021).

Impacts of participation in scientific research activities on EE learning outcomes: recommendations for design

Because participation in scientific research is a distinguishing feature of CCS for EE, we sought to identify which scientific research activities, including but not limited to data collection, produced EE learning outcomes. First, in articles in which people participated only in data collection, we found ample evidence of a wide range of EE learning outcomes. This suggests that while more participation might be better, even participating only in data collection can have positive EE outcomes. Further, studies are emerging that directly compare the learning outcomes of data collection-only CCS projects with those of projects in which participants help or lead throughout the scientific process, finding important outcomes for both (Williams et al. 2021).

Second, we found that participation beyond data collection was more prevalent than might have been expected, and we found extensive evidence of positive EE outcomes from participation in other aspects of scientific research, namely planning (18 articles), data analysis (33 articles), and reporting (25 articles). Further, by closely examining the ten studies that involved people in all four main clusters of scientific research activities, we found that they reflected the more co-created (Shirk et al. Citation2012), community science (Dosemagen & Parker Citation2019), and community-based participatory research (Israel et al. Citation2013) approaches that may be particularly impactful for participant learning. We found that involving people in the planning stages of CCS projects can have substantially positive impacts on a number of important science and environmental learning outcomes, particularly community-level outcomes like social connectedness and stakeholder cooperation, as well as environmental behaviors and stewardship. This suggests that CCS projects designed for EE should involve participants in the planning stages, with a focus on interaction and deliberative dialogue (Muro & Jeffrey, Citation2008), to foster ownership of the project and research questions driven by the community. Such planning processes can also be important catalysts for social learning in collaborative resource management and conservation settings (Conrad & Hilchey, Citation2011; Jadallah & Ballard, Citation2021).

We suggest that EE practitioners designing CCS projects intentionally work to involve participants in the planning, analysis, and/or reporting stages in addition to data collection, consistent with Phillips et al. (Citation2019) and building on the numerous examples provided above. In particular:

Involving participants in research planning for a CCS project, such as through community meetings or feedback on methods, can result in positive EE outcomes, especially in community connectedness and cooperation, but also in science content knowledge and in environmental behaviors and stewardship;

Involving participants in data analysis and interpretation for a CCS project, such as group discussions around data visualizations, can result in positive EE outcomes in place values;

Involving participants in the reporting for a CCS project, such as sharing presentations, newsletters, blogs, or posters locally, can result in positive EE outcomes in science inquiry skills, environmental behaviors and stewardship, and place values.

Critiquing the research on CCS for EE and recommendations for future research

Our review brought to light that some of the empirical studies on EE learning outcomes of CCS were less methodologically rigorous. As empirical research on CCS as an approach to EE continues to expand, we identify gaps and blind spots in research methods to inform future research. We found a mix of research designs and data collection methods across the reviewed articles, which can contribute to a robust understanding of the field, with quantitative and qualitative methods contributing different types of evidence to the understanding of a construct. However, case studies were the most frequently used design (27%), and while case studies allow for deep understanding of a phenomenon, they have severe limitations when it comes to generalizability (although we note they often do not carry the goal of generalizability). Other research designs, such as quasi-experimental designs, can help establish causal relationships with more confidence. Also, very few of the articles used validated instruments, even though validated survey scales for many common learning constructs have recently been developed and are beginning to be implemented in the CCS field (Phillips et al. Citation2018).

Another blind spot lies in the heavy reliance on participants’ self-reports of learning and skill gains, which is also an important limitation in drawing conclusions about impacts on learning outcomes. Self-assessments of knowledge only moderately correlate with learning in adults and have been found to be inaccurate over half the time in studies of undergraduate students (Sitzmann et al. Citation2010). To reduce reliance on self-reporting, we suggest increasing the use of embedded assessments (Becker-Klein et al. Citation2016), observation protocols, and quality control checks to obtain reliable measures of knowledge, skills, and science process knowledge. These approaches would also spare participants time-consuming or ‘test-like’ surveys and interviews, and would increase our confidence in interpreting findings in these outcome areas. Self-reporting is not a concern for all outcomes, however. For outcomes such as self-efficacy, identity, agency, interest, and attitudes, interviews and other qualitative methods focused on self-story are valuable and even essential for reflecting participants’ own meanings in their own words, and they can supplement quantitative measures of these outcomes. We also saw some examples of mixed methods yielding nuanced results, as in Lynch et al.’s (2017) findings on nature relatedness using both quantitative and qualitative methods in an entomology citizen science project. Finally, we believe that emergent methods not used in any of the studies we reviewed, such as arts-based methods or walking interviews, could be used to assess learning outcomes in novel ways (Tuck & McKenzie, Citation2014).

Further, the numerous studies we found reporting environmental behavior and stewardship outcomes varied in whether they measured behavioral intentions, self-reports of behaviors already performed, or researchers’ observations of actual behaviors. Actual pro-environmental behaviors are very difficult to measure (Ardoin et al. Citation2018; Heimlich & Ardoin, Citation2008), and Hughes et al. (Citation2013), studying the impact of viewing wildlife on behaviors, found that positive intentions are not good indicators of long-term behavior change. These limitations do not discount the mounting evidence, seen in our review, that CCS can successfully achieve common EE outcomes, but to be more confident in our assertions as a field, we must use more rigorous research designs and data collection methods.

Limitations and continuing and future trends

We acknowledge several limitations to our analysis that constrain our ability to make causal claims. First, the predominance of articles reporting a particular learning outcome might only reflect how easy or popular that outcome is for researchers to study (e.g., studying scientific content knowledge is very common), possibly reflecting the ‘streetlight effect’ whereby we see more evidence of outcomes that researchers find easier to measure (Ardoin et al. Citation2018; Freedman, Citation2010). We can only report on the studies that were conducted and, therefore, on what the researchers chose to study. That is to say, none of these studies attempted to examine all of the learning outcomes we delineated, so comparing the outcomes directly with each other is not possible. In addition, many disciplines, including education, have a bias toward publishing positive findings and a dearth of published studies reporting null or negative findings (Fanelli, Citation2012). Moreover, absence of evidence is not evidence of absence; that is, a CCS project may have produced outcomes beyond those the researchers chose to measure and report in these articles. That said, these articles reported almost entirely positive learning outcomes from the CCS projects studied, regardless of whether the projects were originally designed as educational programs, which leads us to conclude that CCS participation has indeed led to a myriad of positive EE learning outcomes. Lastly, we do not claim that these 100 articles are proportionally representative of all CCS projects, as many projects and programs remain under-evaluated in the field (Jordan et al. Citation2012; Phillips et al. Citation2018).

We also acknowledge that our review of the literature ended in 2018, but we offer our findings as a crucial baseline on which other systematic reviews may build. Furthermore, the literature published after this systematic review (2019–2023) suggests that CCS programs continue to achieve the same types of EE outcomes for adults (He et al. Citation2019) and young people. In particular, research on learning outcomes for young people in and outside of schools has surged (Kali et al. Citation2023), finding many of the same strong positive outcomes, particularly in understanding environmental science content (Yan et al. Citation2023), positive attitudes about the environment (Aivelo, Citation2023), and self-efficacy and interest in environmental science (Clement et al. Citation2023). CCS approaches are increasingly implemented in K-12 education settings globally (Atias et al. Citation2023), not only to teach STEM content but also to engage young people in environmental problem-solving and action. We found outcomes for teachers and educators who incorporated CCS into their classroom teaching, including changes in teacher practices, such as sharing more authority with students, and enhanced pedagogical content knowledge and approaches to STEM teaching. A recent special issue of the journal Instructional Science on citizen science in schools for learning in a networked society (Kali et al. Citation2023) provides continuing evidence of the trends in positive science learning outcomes for K-12 students that we found in our review.

In addition, several potential trends warrant future investigation, including an increasing focus on the ways that CCS may or may not address equity and diversity issues in science and environmental education (Parrish et al. Citation2019; Pateman & West, Citation2023) and empower participants to address the impacts of climate change (Day et al. Citation2022). Research on learning outcomes of online-focused citizen science participation has surged recently (Aristeidou & Herodotou, Citation2020), and this research will become more complex as artificial intelligence becomes further embedded in crowdsourcing platforms such as Zooniverse and iNaturalist. A growing area of research examines evidence that CCS programs impact broader socio-ecological systems through the combined positive outcomes for participants and more robust science (Jadallah & Wise, Citation2023; Jørgensen & Jørgensen, Citation2021; Receveur et al. Citation2022). Finally, several studies examined changes in participation in CCS during the COVID-19 pandemic lockdowns (Coldren, Citation2022; Drill et al. Citation2022), a line of inquiry that may continue as broader education research examines the impacts of the pandemic shutdowns on learning for K-12 students, as may the use of CCS to study aspects of the pandemic itself (Sadiković et al. Citation2020).

A final note: challenges in defining EE and CCS

Our review raises the perennial question of what actually constitutes ‘environmental education’ and ‘community and citizen science’, and whether we can or should have strict definitions for these areas (Ardoin et al. Citation2018; Eitzel et al. Citation2017). In particular, we debated whether to require authors to self-identify their work using key terms or to rely on our own assessment of the project activities, topics of study, and/or social science research. We decided to use both, but acknowledge that this raises questions. In CCS, what actually constitutes participation in science? Many projects claim to be ‘participatory’, but how this occurs is not always evident, and it is a sticking point in defining the field of citizen science and all its permutations (Eitzel et al. Citation2017; Shirk et al. Citation2012). In our review, when we could not tell, we deferred to the authors’ use of the term. Given that the term ‘participatory’ has itself been widely debated and yet adopted throughout the education, sociology, and conservation literature for many decades (e.g., Arnstein, Citation1969), we suggest that any claims about participation, co-creation, and power-sharing in science should be transparent and critically interrogated. For EE, the field has evolved, and terminology can sometimes be limiting (Ardoin et al. Citation2018). Our inclusion criteria led us to include many articles focused on environmental health, environmental justice, and natural resource management that do meet those criteria, even though their authors and/or participants may never have considered the work ‘environmental education.’ This speaks to Clark and colleagues’ (2020) Delphi study, which consulted EE experts to determine how these and other topics fit under the larger umbrella of EE.
By delving into and including articles that do not self-identify as CCS or as EE, but clearly involve the activities, topics, and outcomes of CCS and EE as we have defined them, we were able to find evidence of participatory approaches to environmental science that result in some of the more intractable outcomes we care about in EE, like community connectedness and cooperation. We suggest that widely agreed-upon, static definitions for either field are neither likely nor, perhaps, desirable. Similarly, as environmental education becomes increasingly, and appropriately, intertwined with social justice, diversity, and equity-focused education and advocacy, the definition of what constitutes EE should also expand, and the premise of CCS to broaden participation in science can contribute to these goals. The expanding implementation of CCS projects aimed at the UN Sustainable Development Goals provides a rich opportunity for environmental educators and researchers to help shape these ongoing debates.

Conclusions