?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

We develop several deep learning algorithms for approximating families of parametric PDE solutions. The proposed algorithms approximate solutions together with their gradients, which in the context of mathematical finance means that the derivative prices and hedging strategies are computed simultaneously. Having approximated the gradient of the solution, one can combine it with a Monte Carlo simulation to remove the bias in the deep network approximation of the PDE solution (derivative price). This is achieved by leveraging the Martingale Representation Theorem and combining the Monte Carlo simulation with the neural network. The resulting algorithm is robust with respect to the quality of the neural network approximation and consequently can be used as a black box in case only limited a-priori information about the underlying problem is available. We believe this is important as neural network-based algorithms often require fair amount of tuning to produce satisfactory results. The methods are empirically shown to work for high-dimensional problems (e.g., 100 dimensions). We provide diagnostics that shed light on appropriate network architectures.

1. Introduction

Numerical algorithms that solve PDEs suffer from the so-called ‘curse of dimensionality’, making it impractical to apply known discretization algorithms such as finite difference schemes to solve high-dimensional PDEs. However, it has been recently shown that deep neural networks trained with stochastic gradient descent can overcome the curse of dimensionality (Beck, Gonon, and Jentzen Citation2020; Berner, Grohs, and Jentzen Citation2020), making them a popular choice to solve this computational challenge in the last few years.

In this work, we focus on the problem of numerically solving parametric linear PDEs arising from European option pricing in high dimensions. Let be a parameter space (for instance, in the Black–Scholes equation with fixed rate, B is the domain of the volatility parameter). Consider

satisfying

(1)

(1)

Here

,

and

and

and g are functions of

which specify the problem. The Feynman–Kac theorem provides a probabilistic representation for v so that Monte Carlo methods can be used for its unbiased approximation in one single point

. What we propose in this work is a method for harnessing the power of deep learning algorithms to numerically solve (Equation1

(1)

(1) ) in a way that is robust even in edge cases when the output of the neural network is not of the expected quality, by combining them with Monte Carlo algorithms.

From the results in this article, we observe that neural networks provide an efficient computational device for high dimensional problems. However, we observed that these algorithms are sensitive to the network architecture, parameters and distribution of training data. A fair amount of tuning is required to obtain good results. Based on this we believe that there is great potential in combining artificial neural networks with already developed and well understood probabilistic computational methods, in particular the control variate method for using potentially imperfect neural network approximations for finding unbiased solutions to a given problem, see Algorithm 1.

1.1. Main Contributions

We propose three classes of learning algorithms for simultaneously finding solutions and gradients to parametric families of PDEs.

Projection solver: See Algorithm 2. We leverage Feynman–Kac representation together with the fact that conditional expectation can be viewed as an

-projection operator. The gradient can be obtained by automatic differentiation of already obtained approximation of the PDE solution.

Martingale representation solver: See Algorithm 3. This algorithm was inspired by Cvitanic and Zhang (Citation2005) and Weinan, Han, and Jentzen (Citation2017) and Han, Jentzen, and Weinan (Citation2017) and is referred to as deep BSDE solver. Our algorithm differs from Weinan, Han, and Jentzen (Citation2017) in that we approximate solution and its gradient at all the time-steps and across the entire space and parameter domains rather than only one space-time point. Furthermore we propose to approximate the solution-map and its gradient by separate networks.

Martingale control variates solver: Algorithms 4 and 5. Here we exploit the fact that martingale representation induces control variate that can produce zero variance estimator. Obviously, such control variate is not implementable but provides a basis for a novel learning algorithm for the PDE solution.

For each of these classes of algorithms, we develop and test different implementation strategies. Indeed, one can either take one (large) network to approximate the entire family of solutions of (Equation1(1)

(1) ) or take a number of (smaller) networks, where each of them approximates the solution at a time point in a grid. The former has the advantage that one can take arbitrarily fine time discretization without increasing the overall network size. The advantage of the latter is that each learning task is simpler due to each network being smaller. One can further leverage the smoothness of the solution in time and learn the weights iteratively by initializing the network parameters to be those of the previous time step. We test both approaches numerically. At a high level all the algorithms work in path-dependent (non-Markovian) setting but there the challenge is an efficient method for encoding information in each path. This problem is solved in companion paper (Sabate-Vidales, Šiška, and Szpruch Citation2020).

To summarize the key contribution of this work are:

We derive and implement three classes of learning algorithms for approximation of parametric PDE solution map and its gradient.

We propose a novel iterative training algorithm that exploits regularity of the function we seek to approximate and allows using neural networks with smaller number of parameters.

The proposed algorithms are truly black-box in that quality of the network approximation only impacts the computation benefit of the approach and does not introduce approximation bias. This is achieved by combining the network approximation with Monte Carlo as stated in Algorithm 1.

Code for the numerical experiments presented in this paper is being made available on GitHub: https://github.com/msabvid/Deep-PDE-Solvers.

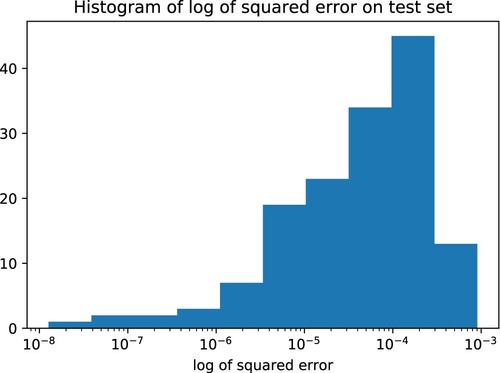

We stress the importance of point (iii) above by directing reader's attention to Figure , where we test generalization error of trained neural network for the five-dimensional family of PDEs corresponding to pricing a basket option under the Black–Scholes model. We refer reader to Example A.2 for details. We see that while the average error over test set is of order , the errors for a given input vary significantly. Indeed, it has been observed in deep learning community that for high dimensional problems one can find input data such that trained neural network that appears to generalize well (i.e., achieves small errors on the out of training data) produces poor results (Goodfellow, Shlens, and Szegedy Citation2014).

1.2. Literature Review

Deep neural networks trained with stochastic gradient descent proved to be extremely successful in number of applications such as computer vision, natural language processing, generative models or reinforcement learning (LeCun, Bengio, and Hinton Citation2015). The application to PDE solvers is relatively new and has been pioneered by Weinan, Han, and Jentzen (Citation2017), Han, Jentzen, and Weinan (Citation2017) and Sirignano and Spiliopoulos (Citation2017). See also Cvitanic and Zhang (Citation2005) for the ideas of solving PDEs with gradient methods and for direct PDE approximation algorithm. PDEs provide an excellent test bed for neural networks approximation because (a) there exists alternative solvers, e.g., Monte Carlo, (b) we have well-developed theory for PDEs, and that knowledge can be used to tune algorithms. This is contrast to mainstream neural network approximations in text or images classification.

Apart from growing body of empirical results in the literature on ‘Deep PDEs solvers’, Chan-Wai-Nam, Mikael, and Warin (Citation2019), Huré, Pham, and Warin (Citation2019), Beck et al. (Citation2018), Jacquier and Oumgari (Citation2019) and Henry-Labordere (Citation2017), there has been also some important theoretical contributions. It has been proved that deep artificial neural networks approximate solutions to parabolic PDEs to an arbitrary accuracy without suffering from the curse of dimensionality. The first mathematically rigorous proofs are given in Grohs et al. (Citation2018) and Jentzen, Salimova, and Welti (Citation2018). The high level idea is to show that neural network approximation to the PDE can be established by building on Feynman-Kac approximation and Monte Carlo approximation. By checking that Monte Carlo simulations do not suffer from the curse of dimensionality one can imply that the same is true for neural network approximation. Furthermore, it has been recently demonstrated in Hu et al. (Citation2021) and Mei, Montanari, and Nguyen (Citation2018) that noisy gradient descent algorithm used for training of neural networks of the form considered in Grohs et al. (Citation2018) and Jentzen, Salimova, and Welti (Citation2018) induces unique probability distribution function over the parameter space which minimizes learning. See Du et al. (Citation2018), Chizat and Bach (Citation2018), Rotskoff and Vanden-Eijnden (Citation2018), Sirignano and Spiliopoulos (Citation2020), Wang and Tang (Citation2021) and Han and Long (Citation2020) for related ideas on convergence of gradient algorithms for overparametrized neural networks. This means that there are theoretical guarantees for the approximation of (parabolic) PDEs with neural networks trained by noisy gradient methods alleviating the curse of dimensionality.

An important application of deep PDE solvers is that one can in fact approximate a parametric family of solutions of a PDE. To be more precise let ,

, be a parameter space. In the context of finance these, for example, might be initial volatility, volatility of volatility, interest rate and mean reversion parameters. One can approximate the parametric family of functions

for an arbitrary range of parameters. This then allows for swift calibration of models to data (e.g. option prices). This is particularly appealing for high dimensional problems when calibrating directly using noisy Monte Carlo samples might be inefficient. This line of research gained some popularity recently and the idea has been tested numerically on various models and data sets (Horvath, Muguruza, and Tomas Citation2019; Liu et al. Citation2019; Bayer and Stemper Citation2018; Stone Citation2018; Hernandez Citation2016; Itkin Citation2019; McGhee Citation2018). There are some remarks that are in order. In the context of models calibration, while the training might be expensive one can do it offline, once and for good. One can also notice that training data could be used to produce a ‘look-up table’ taking model parameters to prices. From this perspective, the neural network, essentially, becomes an interpolator and a compression tool. Indeed the number of parameters of the network is much smaller than number of training data and therefore it is more efficient to store those. The final remark is that while there are other methods out there, such as Chebyshev functions, neural networks seem robust in high dimensions which make them our method of choice.

1.3. Notation

We denote by the set of all fully connected feedforward neural networks (see Appendix 3). We also use

with

to denote a neural network with weights θ approximating the function

for some

.

1.4. Outline

This paper is organized as follows. Section 2 provides theoretical underpinning for the derivation of all the algorithms we propose to solve (Equation1(1)

(1) ). More specifically in Section 2.2 we combine the approximation of the gradient of the solution of the PDE resulting from the Deep Learning algorithms with Monte Carlo to obtain an unbiased approximation of the solution of the PDE. In Section 3, we describe the algorithms in detail.

Finally in Section 4, we provide numerical tests of the proposed algorithms. We empirically test these methods on relevant examples including a 100 dimensional option pricing problems, see Examples 4.4 and A.3. We carefully measure the training cost and report the variance reduction achieved.

Since we work in situation where the function approximated by neural network can be obtained via other methods ( Monte Carlo, PDE solution) we are able to test the how the expressiveness of fully connected artificial neural networks depends on the number of layers and neurons per layer. See Section A.2 for details.

2. PDE Martingale Control Variate

Control variate is one of the most powerful variance reduction techniques for Monte Carlo simulation. While a good control variate can reduce the computational cost of Monte Carlo computation by several orders of magnitude, it relies on judiciously chosen control variate functions that are problem specific. For example, when computing price of basket options a sound strategy is to choose control variates to be call options written on each of the stocks in the basket, since in many models these are priced by closed-form formulae. In this article, we are interested in black-box-type control variate approach by leveraging the Martingale Representation Theorem and neural networks. The idea of using Martingale Representation to obtain control variates goes back at least to Newton (Citation1994). It has been further studied in combination with regression in Milstein and Tretyakov (Citation2009) and Belomestny et al. (Citation2018).

The bias in the approximation of the solution can be completely removed by employing control variates where the deep network provides the control variate resulting in very high variance reduction factor in the corresponding Monte Carlo simulation.

Let be a probability space and consider an

-valued Wiener process

. We will use

to denote the filtration generated by W. Consider a

-valued, continuous, stochastic process defined for the parameters

,

adapted to

given as the solution to

(2)

(2)

We will use

to denote the filtration generated by

.

Let be a measurable function and we assume that there is a (stochastic) discount factor given by

for an appropriate function

. We will omit β from the discount factor notation for brevity. We now interpret

as some risk-neutral measure and so the

-price of our contingent claim is

(3)

(3)

Say we have iid r.v.s

with the same distribution as

, where for each i,

. Then the standard Monte Carlo estimator is

Convergence

in probability as

is granted by the Law of Large Numbers. Moreover the classical Central Limit Theorem tells that

where

and

is such that

with Φ being distribution function (cumulative distribution function) of the standard normal distribution. To decrease the width of the confidence intervals one can increase N, but this also increases the computational cost. A better strategy is to reduce variance by finding an alternative Monte Carlo estimator, say

, such that

(4)

(4)

and the cost of computing

is similar to

.

In the remainder of the article, we will devise and test several strategies, based on deep learning, to find a suitable approximation for , by exploring the connection of the SDE (Equation2

(2)

(2) ) and its associated PDE.

2.1. PDE Derivation of the Control Variate

It can be shown that under suitable assumptions on b, σ, c and g, and fixed that

. See, e.g., Krylov (Citation1999). Let

. Then, from Feynman–Kac formula (see, e.g., Th. 8.2.1 in Øksendal Citation2003), we get

(5)

(5)

Since

and since v satisfies the above PDE, if we apply Itô's formula then we obtain

(6)

(6)

Hence Feynman–Kac representation together with the fact that

yields

(7)

(7)

Provided that

then the stochastic integral is a martingale. Thus we can consider the Monte Carlo estimator.

(8)

(8)

To obtain a control variate, we thus need to approximate

. If one used classical approximation techniques to the PDE, such as finite difference or finite element methods, one would run into the curse of the dimensionality – the very reason one employs Monte Carlo simulations in the first place. Artificial neural networks have been shown to break the curse of dimensionality in specific situations (Grohs et al. Citation2018). To be more precise, authors in Berner, Grohs, and Jentzen (Citation2020), Elbrächter et al. (Citation2022), Jentzen, Salimova, and Welti (Citation2018), Grohs, Jentzen, and Salimova (Citation2019), Hutzenthaler et al. (Citation2020), Grohs et al. (Citation2018), Kutyniok et al. (Citation2020), Gonon et al. (Citation2021) and Reisinger and Zhang (Citation2020) have shown that there always exist a deep feed forward neural network and some parameters such that the corresponding neural network can approximate the solution of a linear PDE arbitrarily well in a suitable norm under reasonable assumptions (terminal condition and coefficients can be approximated by neural networks). Moreover, the number of parameters grows only polynomially in dimension and so there is no curse of dimensionality. However, while the papers above construct the network they do not tell us how to find the ‘good’ parameters. In practice, the parameter search still relies on gradient descent-based minimization over a non-convex landscape. The application of the deep-network approximation to the solution of the PDE as a martingale control variate is an ideal compromise.

If there is no exact solution to the PDE (Equation5(5)

(5) ), as would be the case in any reasonable application, then we will approximate

by

.

To obtain an implementable algorithm we discretize the integrals in and take a partition of

denoted

, and consider an approximation of (Equation2

(2)

(2) ) by

. For simplicity we approximate all integrals arising by Riemann sums always taking the left-hand point when approximating the value of the integrand.

The implementable control variate Monte Carlo estimator is then the form

(9)

(9)

where

and λ is a free parameter to be chosen (because we discretize and use approximation to the PDE it is expected

). Again, we point out that the only bias of the above estimator comes from the numerical scheme used to solve the forward and backward processes. Nevertheless,

does not add any additional bias independently of the choice θ. We will discuss possible approximation strategies for approximating

with

in the following section.

In this section, we have actually derived an explicit form of the Martingale representation (see, e.g., Cohen and Elliott Citation2015, Th. 14.5.1) of in terms of the solution of the PDE associated to the process

, which is given as the solution to (Equation2

(2)

(2) ). In Appendix 1, we provide a more general framework to build a low-variance Monte Carlo estimator

for any (possibly non-Markovian)

-adapted process

.

2.2. Unbiased Parametric PDE Approximation

After having trained the networks (using any of Algorithms 2–3 that we will introduce in Section 3) and

(using any of Algorithms 2 and 3) that approximate

one then has two options to approximate

Directly with

if Algorithms 2 or 3 were used, which will introduce some approximation bias.

By combining

with the Monte Carlo approximation of

using (Equation9

(9)

(9) ), which will yield an unbiased estimator of

. The complete method is stated as Algorithm 1.

3. Deep PDE Solvers

In this section, we propose two algorithms that learn the PDE solution (or its gradient) and then use it to build control variate using (Equation9(9)

(9) ). We also include in Appendix 2 an additional algorithm to solve such linear PDEs using deep neural networks.

3.1. Projection Solver

Before we proceed further we recall a well-known property of conditional expectations, for proof see, e.g., Krylov (Citation2002, Ch.3 Th. 14).

Theorem 3.1

Let . Let

be a sub σ-algebra. There exists a random variable

such that

The minimizer,

, is unique and is given by

.

The theorem tell us that conditional expectation is an orthogonal projection of a random variable X onto . Instead of working directly with (Equation5

(5)

(5) ) we work with its probabilistic representation (Equation6

(6)

(6) ). To formulate the learning task, we replace

by

so that

. Hence, by Theorem (3.1),

and we know that for a fixed t the random variable which minimizes the mean square error is a function of

. But by the Doob–Dynkin Lemma (Cohen and Elliott Citation2015, Th. 1.3.12) we know that every

can be expressed as

for some appropriate measurable

. For the practical algorithm, we restrict the search for the function

to the class that can be expressed as deep neural networks

. Hence we consider a family of functions

and set learning task as

(10)

(10)

The inner expectation in (Equation10

(10)

(10) ) is taken across all paths generated using numerical scheme on (Equation2

(2)

(2) ) for a fixed β and it allows to solve the PDE (Equation5

(5)

(5) ) for such β. The outer expectation is taken on β for which the distribution is fixed beforehand (e.g., uniform on B if it is compact), thus allowing the algorithm to find the optimal neural network weights

to solve the parametric family of PDEs (Equation5

(5)

(5) ). Automatic differentiation is used to approximate

. Algorithm 2 describes the method.

3.2. Probabilistic Representation Based on Backward SDE

Instead of working directly with (Equation5(5)

(5) ) we work with its probabilistic representation (Equation6

(6)

(6) ) and view it as a BSDE. To formulate the learning task based on this, we recall the time-grid π so that we can write it recursively as

Next consider deep network approximations for each time step in π and for both the solution of (Equation5

(5)

(5) ) and its gradient.

and

Approximation depends on weights

,

. We then set the learning task as

(11)

(11)

where

The complete learning method is stated as Algorithm 3, where we split the optimization (Equation11

(11)

(11) ) in several optimization problems, one per time step: learning the weights

or

at a certain time step

only requires knowing the weights

. At

, learning the weights

only requires the terminal condition g. Note that the algorithm assumes that adjacent networks in time will be similar, and therefore we initialize

and

by

and

.

3.3. Martingale Control Variate Deep Solvers

So far, the presented methodology to obtain the control variate consists on first learning the solution of the PDE and more importantly its gradient (Algorithms 2, 3) which is then plugged in (Equation9(9)

(9) ). Alternatively, one can directly use the variance of (Equation9

(9)

(9) ) as the loss function to be optimized in order to learn the control variate. We expand this idea and design two additional algorithms.

Recall definition of given by (Equation9

(9)

(9) ). From (Equation8

(8)

(8) ) we know that the theoretical control variate Monte Carlo estimator has zero variance and so it is natural to set-up a learning task which aims to learn the network weights θ in a way which minimizes said variance:

Setting

, the learning task is stated as Algorithm 4.

We include a second similar Algorithm in Appendix 2.

4. Examples and Experiments

4.1. Options in Black–Scholes Model on d>1 Assets

Take a d-dimensional Wiener process W. We assume that we are given a symmetric, positive-definite matrix (covariance matrix) Σ and a lower triangular matrix C s.t. . For such a positive-definite Σ we can always use Cholesky decomposition to find C. The risky assets will have volatilities given by

. We will (abusing notation) write

, when we don't need to separate the volatility of a single asset from correlations. The risky assets under the risk-neutral measure are then given by

(12)

(12)

All sums will be from 1 to d unless indicated otherwise. Note that the SDE can be simulated exactly since

The associated PDE is (with

)

for

together with the terminal condition

for

.

4.2. Deep Learning Setting

In this section, we describe the neural networks used in the four proposed algorithms as well as the training setting, in the specific situation where we have an options problem in Black–Scholes model on d>1 assets.

Learning Algorithms 3–5 share the same underlying fully connected artificial network which will be different for different ,

. At each time step, we use a fully connected artificial neural network denoted

. The choice of the number of layers and network width is motivated by empirical results on different possible architectures applied on a short-lived options problem. We present the results of this study in Appendix A.2. The architecture is similar to that proposed in Beck et al. (Citation2018).

At each time step, the network consists of four layers: one d-dimensional input layer, two -dimensional hidden layers and one output layer. The output layer is one dimensional if the network is approximation for v and d-dimensional if the network is an approximation for

. The non-linear activation function used on the hidden layers is the linear rectifier relu. In all experiments except for Algorithm 3 for the basket options problem, we used batch normalization (Sergey Ioffe Citation2015) on the input of each network, just before the two nonlinear activation functions in front of the hidden layers and also after the last linear transformation.

The networks' optimal parameters are approximated by the Adam optimizer (Diederik and Kingma Citation2017) on the loss function specific for each method. Each parameter update (i.e. ,one step of the optimizer) is calculated on a batch of paths

obtained by simulating the SDE. We take the necessary number of training steps until the stopping criteria defined below is met, with a learning rate of

during the first

iterations, decreased to

afterwards.

During training of any of the algorithms, the loss value at each iteration is kept. A model is assumed to be trained if the difference between the loss averages of the two last consecutive windows of length 100 is less than a certain ϵ.

4.3. Evaluating Variance Reduction

We use the specified network architectures to assess the variance reduction in several examples below. After training the models in each particular example, they are evaluated as follows:

We calculate

times the Monte Carlo estimate

and the Monte Carlo with control variate estimate

using

Monte Carlo samples.

From Central Limit Theorem, as

increases the standardized estimators converge in distribution to the Normal. Therefore, a 95% confidence interval of the variance of the estimator is given by

where S is the sample variance of the

controlled estimators

, and

. These are calculated for both the Monte Carlo estimate and the Monte Carlo with control variate estimate.

We use the

generated samples

and

to calculate and compare the empirical variances

and

.

The number of optimizer steps and equivalently number of random paths generated for training provide a cost measure of the proposed algorithms.

We evaluate the variance reduction if we use the trained models to create control variates for options in Black–Scholes models with different volatilities than the one used to train our models.

Example 4.1

Low dimensional problem with explicit solution

We consider exchange option on two assets. In this case, the exact price is given by the Margrabe formula. We take d = 2, ,

,

,

,

for

. The payoff is

From Margrabe's formula, we know that

where

.

We organize the experiment as follows: We train our models with batches of 5000 random paths sampled from the SDE (Equation12

(12)

(12) ), where

. The assets' initial values

are sampled from a lognormal distribution

where

. The existence of an explicit solution allows to build a control variate of the form (Equation9

(9)

(9) ) using the known exact solution to obtain

. For a fixed number of time steps

this provides an upper bound on the variance reduction an artificial neural network approximation of

can achieve.

We follow the evaluation framework to evaluate the model, simulating paths by simulating (Equation12

(12)

(12) ) with constant

. We report the following results:

Table provides the empirical variances calculated over

generated Monte Carlo samples and their corresponding control variates. The variance reduction measure indicates the quality of each control variate method. The variance reduction using the control variate given by Margrabe's formula provides a benchmark for our methods. Table also provides the cost of training for each method, given by the number of optimizer iterations performed before hitting the stopping criteria, defined defined before with

. We add an additional row with the control variate built using automatic differentiation on the network parametrized using the Deep Galerkin Method (Sirignano and Spiliopoulos Citation2017). The DGM attempts to find the optimal parameters of the network satisfying the PDE on a pre-determined time and space domain. In contrast to our algorithms, the DGM method is not restricted to learn the solution of the PDE on the paths built from the probabilistic representation of the PDE. However, this is what is precisely enhancing the performance of our methods in terms of variance reduction, since they are specifically learning an approximation to the solution of the PDE and its gradient such that the resulting control variate will yield a low-variance Monte Carlo estimator.

Table provides the confidence intervals for the variances and of the Monte Carlo estimator, and the Monte Carlo estimator with control variate assuming these are calculated on

random paths. Moreover, we add the confidence interval of the variance of the Monte Carlo estimator calculated over

antithetic paths where the first

Brownian paths generated using

samples from a normal and the second half of the Brownian paths are generated using the antithetic samples

. See Belomestny, Iosipoi, and Zhivotovskiy (Citation2017, Section 4.2) for more details. All the proposed algorithms in this paper outperform the Monte Carlo estimator and the Monte Carlo estimator with antithetic paths; compared to the latter, our algorithms produce unbiased estimators with variances that 2 orders of magnitude less.

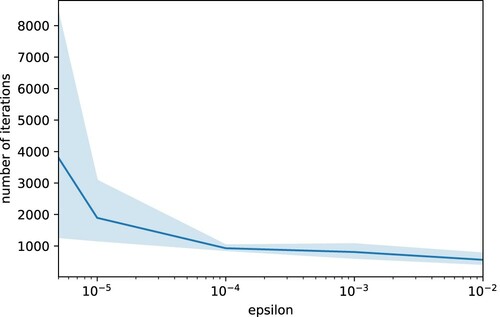

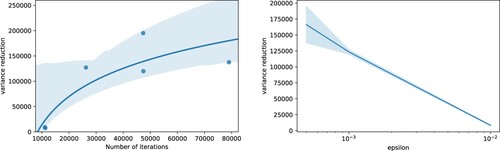

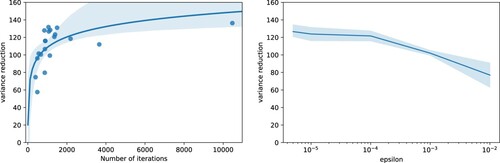

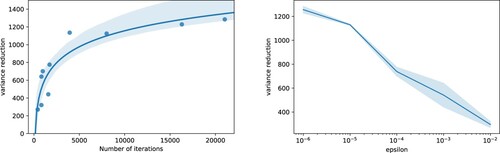

Figure studies the iterative training for the BSDE solver. As it has been observed before, this type of training does not allow us to study the overall loss function as the number of training steps increases. Therefore we train the same model four times for different values of ϵ between 0.01 and

and we study the number of iterations necessary to meet the stopping criteria defined by ϵ, the variance reduction once the stopping criteria is met, and the relationship between the number of iterations and the variance reduction. Note that the variance reduction stabilizes for

. Moreover, the number of iterations necessary to meet the stopping criteria increases exponentially as ϵ decreases, and therefore for our results printed in Tables and we employ

(Figure ).

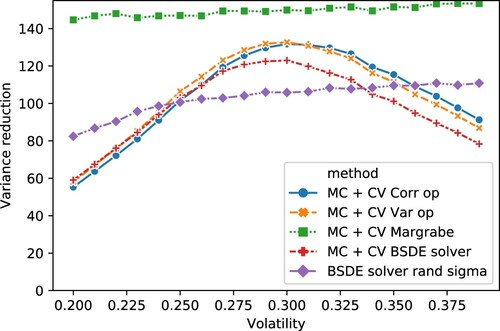

Figure displays the variance reduction after using the trained models on several Black Scholes problem with exchange options but with values of σ other than 0.3 which was the one used for training. We see that the various algorithms work similarly well in this case (not taking training cost into account). We note that the variance reduction is close to the theoretical maximum which is restricted by time discretization. Finally we see that the variance reduction is still significant even when the neural network was trained with different model parameters (in our case volatility in the option pricing example). The labels of Figure can be read as follows:

MC + CV Corr op: Monte Carlo estimate with Deep Learning-based Control Variate built using Algorithm 5.

MC + CV Var op: Monte Carlo estimate with Deep Learning-based Control Variate built using Algorithm 4.

MC + CV BSDE solver: Monte Carlo estimate with Deep Learning-based Control Variate built using Algorithm 3.

MC + CV Margrabe: Monte Carlo estimate with Control Variate using analytical solution for this problem given by Margrabe formula.

Figure 2. Left: Variance reduction in terms of number of optimizer iterations. Right: Variance reduction in terms of epsilon. Both are for Example 4.1 and Algorithm 3.

Figure 4. Variance reduction achieved by network trained with but then applied in situations where

. We can see that the significant variance reduction is achieved by a neural network that was trained with ‘incorrect’ σ. Note that the ‘MC + CV Margbrabe’ displays the optimal variance reduction that can be achieved by using exact solution to the problem. The variance reduction is not infinite even in this case since stochastic integrals are approximated by Riemann sums.

![Figure 4. Variance reduction achieved by network trained with σ=0.3 but then applied in situations where σ∈[0.2,0.4]. We can see that the significant variance reduction is achieved by a neural network that was trained with ‘incorrect’ σ. Note that the ‘MC + CV Margbrabe’ displays the optimal variance reduction that can be achieved by using exact solution to the problem. The variance reduction is not infinite even in this case since stochastic integrals are approximated by Riemann sums.](/cms/asset/3b5b1336-233a-4533-9c7d-1881e1005167/ramf_a_2030773_f0004_oc.jpg)

Table 1. Results on exchange option problem on two assets, Example 4.1. Empirical Variance and variance reduction factor.

Table 2. Results on exchange option problem on two assets, Example 4.1.

Example 4.2

Low-dimensional problem with explicit solution – Approximation of Price using PDE solver compared to Control Variate

We consider exchange options on two assets as in Example 4.1. We consider algorithm 3 that can be applied in two different ways:

It directly approximates the solution of the PDE (Equation5

(5)

(5) ) and its gradient in every point.

We can use

to build the control variate using probabilistic representation of the PDE (Equation6

(6)

(6) ).

We compare both applications by calculating the expected error of the -error of each of them with respect to the analytical solution given by Margrabe formula. From Margrabe's formula, we know that

Let

be the Deep Learning approximation of price at any point at initial time, calculated using Algorithm 3, and

be the Deep Learning approximation of its gradient for every time step in the time discretization. The aim of this experiment is to show how even if Algorithm 3 numerically converges to a biased approximation of

(see Figure left), it is still possible to use

to build an unbiased Monte-Carlo approximation of

with low variance.

Figure 5. Left: Loss of Algorithm 3 and squared error of in terms of training iterations. Right: Expected MSE of the two different approaches with respect to analytical solution in terms of number of Monte Carlo samples.

in terms of training iterations. Right: Expected MSE of the two different approaches with respect to analytical solution in terms of number of Monte Carlo samples.](/cms/asset/0aad9b7f-6754-4100-af43-9b2342d788da/ramf_a_2030773_f0005_oc.jpg)

We organize the experiment as follows.

We calculate the expected value of the

-error of

where each component of

is sampled from a lognormal distribution:

We calculate the expected value of the

-error of the Monte Carlo estimator with control variate where each component of

is sampled from a lognormal distribution:

where

is given by (Equation9

(9)

(9) ), and is calculated for different values of Monte Carlo samples.

We calculate the expected value of the

-error of the Monte Carlo estimator without control variate where each component of

is sampled from a lognormal distribution:

where

Figure provides one realization of the described experiment for different Monte Carlo iterations between 10 and 200. It shows how in this realization, 60 Monte Carlo iterations are enough to build a Monte Carlo estimator with control variate having lower bias than the solution provided by Algorithm 3.

Example 4.3

Low-dimensional problem with explicit solution. Training on random values for volatility

We consider exchange option on two assets. In this case, the exact price is given by the Margrabe formula. The difference with respect to the last example is that now we aim to generalize our model, so that it can build control variates for different Black–Scholes models. For this we take d = 2, , r = 0.05,

,

,

for

.

The payoff is

We organize the experiment as follows: for comparison purposes with the BSDE solver from the previous example, we train our model for exactly the same number of iterations, i.e. ,1380 batches of 5000 random paths

sampled from the SDE Equation12

(12)

(12) , where

. The assets' initial values

are sampled from a lognormal distribution

where

. Since now σ can take different values, it is included as input to the networks at each time step.

The existence of an explicit solution allows to build a control variate of the form (Equation9(9)

(9) ) using the known exact solution to obtain

. For a fixed number of time steps

this provides an upper bound on the variance reduction an artificial neural network approximation of

can achieve.

Figure adds the performance of this model to Figure , where the variance reduction of the Control Variate is displayed for different values of the volatility between 0.2 and 0.4.

Figure 6. Extension of Figure with variance reduction achieved by training the model on different Black–Scholes models.

Example 4.4

High-dimensional problem, exchange against average

We extend the previous example to 100 dimensions. This example is similar to from Broadie, Du, and Moallemi (Citation2015). We will take

,

,

,

,

for

.

We will take this to be

The experiment is organized as follows: we train our models with batches of

random paths

sampled from the SDE (Equation12

(12)

(12) ), where

. The assets' initial values

are sampled from a lognormal distribution

where

,

,

.

We follow the evaluation framework to evaluate the model, simulating paths by simulating (Equation12

(12)

(12) ) with constant

for

. We have the following results:

Table provides the empirical variances calculated over

generated Monte Carlo samples and their corresponding control variates. The variance reduction measure indicates the quality of each control variate method. Table also provides the cost of training for each method, given by the number of optimizer iterations performed before hitting the stopping criteria with

. Algorithm 3 outperforms the other algorithms in terms of variance reduction factor. This is not surprising as Algorithm 3 explicitly learns the discretization of the Martingale representation (Equation (Equation7

(7)

(7) )) from which the control variate arises.

Table provides the confidence interval for the variance of the Monte Carlo estimator, and the Monte Carlo estimator with control variate assuming these are calculated on

random paths.

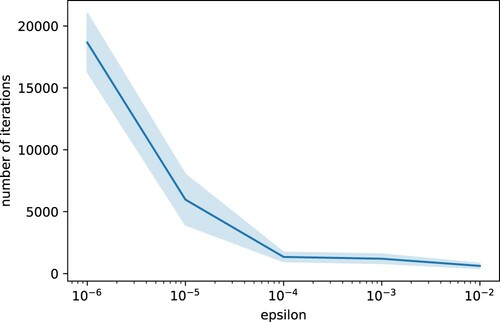

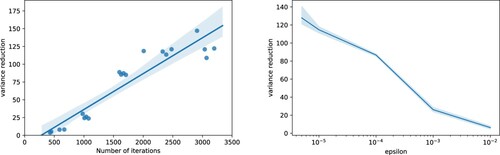

Figures and study the iterative training for the BSDE solver. We train the same model four times for different values of ϵ between 0.01 and

and we study the number of iterations necessary to meet the stopping criteria defined by ϵ, the variance reduction once the stopping criteria is met, and the relationship between the number of iterations and the variance reduction. Note that in this case the variance reduction does not stabilize for

. However, the number of training iterations increases exponentially as ϵ decreases, and therefore we also choose

to avoid building a control that requires a high number of random paths to be trained (Figure ).

Figure 7. Left: Variance reduction in terms of number of optimizer iterations. Right: Variance reduction in terms of epsilon. Both for Example 4.4 and Algorithm 3.

Figure 9. Variance reduction with network trained with but applied for

for the model of Example 4.4. We see that the variance reduction factor is considerable even in the case when the network is used with ‘wrong’ σ. It seems that Algorithm 4 is not performing well in this case.

![Figure 9. Variance reduction with network trained with σ=0.3 but applied for σ∈[0.2,0.4] for the model of Example 4.4. We see that the variance reduction factor is considerable even in the case when the network is used with ‘wrong’ σ. It seems that Algorithm 4 is not performing well in this case.](/cms/asset/68338268-9bc7-47b7-a95a-b0faef6fc875/ramf_a_2030773_f0009_oc.jpg)

Table 3. Results on exchange option problem on 100 assets, Example 4.4. Empirical Variance and variance reduction factor and costs in terms of paths used for training and optimizer steps.

Table 4. Results on exchange option problem on 100 assets, Example 4.4.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Bayer C., and B. Stemper. 2018. “Deep Calibration of Rough Stochastic Volatility Models.” arXiv:1810.03399.

- Beck C., S. Becker, P. Grohs, N. Jaafari, and A. Jentzen. 2018. “Solving Stochastic Differential Equations and Kolmogorov Equations by Means of Deep Learning.” arXiv:1806.00421.

- Beck C., L. Gonon, and A. Jentzen. 2020. “Overcoming the Curse of Dimensionality in the Numerical Approximation of High-Dimensional Semilinear Elliptic Partial Differential Equations”.

- Belomestny D., L. Iosipoi, and N. Zhivotovskiy. 2017. “Variance Reduction via Empirical Variance Minimization: Convergence and Complexity.” arXiv:1712.04667.

- Belomestny D., S. Hafner, T. Nagapetyan, and M. Urusov. 2018. “Variance Reduction for Discretised Diffusions Via Regression.” Journal of Mathematical Analysis and Applications 458 (1): 393–418.

- Berner J., P. Grohs, and A. Jentzen. January, 2020. “Analysis of the Generalization Error: Empirical Risk Minimization Over Deep Artificial Neural Networks Overcomes the Curse of Dimensionality in the Numerical Approximation of Black–scholes Partial Differential Equations.” SIAM Journal on Mathematics of Data Science 2 (3): 631–657.

- Blanka Horvath M. T., and Muguruza Aitor. 2019. “Deep Learning Volatility: A Deep Neural Network Perspective on Pricing and Alibration in (Rough) Volatility Models.” arXiv:1901.09647.

- M. Broadie, Y. Du, and C. C. Moallemi. 2015. “Risk Estimation Via Regression.” Operations Research 63 (5): 1077–1097.

- Chan-Wai-Nam Q., J. Mikael, and X. Warin. 2019. “Machine Learning for Semi Linear Pdes.” Journal of Scientific Computing 79 (3): 1667–1712.

- Chizat L., and F. Bach. 2018. “On the Global Convergence of Gradient Descent for Over-Parameterized Models Using Optimal Transport.” In Advances in Neural Information Processing Systems, 3036–3046.

- Cohen S. N., and R. J. Elliott. 2015. Stochastic Calculus and Applications. Vol. 2. New York: Birkhäuser.

- Cont R., and Y. Lu. March, 2016. “Weak Approximation of Martingale Representations.” Stochastic Processes and Their Applications 126 (3): 857–882.

- Cvitanic J., and J. Zhang. 2005. “The Steepest Descent Method for Forward-backward Sdes.” Electronic Journal of Probability 10: 1468–1495.

- Diederik J. B., and P. Kingma. 2017. “Adam: A Method for Stochastic Optimization.” arXiv:1412.6980.

- Du S. S., X. Zhai, B. Poczos, and A. Singh. 2018. “Gradient Descent Provably Optimizes Over-Parameterized Neural Networks.” arXiv:1810.02054.

- Elbrächter D., P. Grohs, A. Jentzen, and C. Schwab. Dnn expression rate analysis of high-dimensional pdes. 2022. “Application to Option Pricing.” Constructive Approximation 55 (1): 3–7.

- Glasserman P. 2013. Monte Carlo Methods in Financial Engineering. Springer.

- Gonon L., P. Grohs, A. Jentzen, D. Kofler, and D. Šiška. 2021. “Uniform Error Estimates for Artificial Neural Network Approximations for the Heat Equation.” IMA Journal Numerical Analysis. https://academic.oup.com/imajna/advance-article/doi/https://doi.org/10.1093/imanum/drab027/6279436?guestAccessKey=cef28afb-c79a-433a-855c-08f76b4732fd&login=false

- Goodfellow I., J. Shlens, and C. Szegedy. 2014. “Explaining and Harnessing Adversarial Examples.” arXiv:1412.6572.

- Grohs P., F. Hornung, A. Jentzen, and P. von Wurstemberger. 2018. “A Proof That Artificial Neural Networks Overcome the Curse of Dimensionality in the Numerical Approximation of Black-Scholes Partial Differential Equations.” arXiv:1809.02362.

- Grohs P., A. Jentzen, and D. Salimova. 2019. “Deep Neural Network Approximations for Monte Carlo Algorithms”.

- Han J., and J. Long. 2020. “Convergence of the Deep Bsde Method for Coupled Fbsdes.” Probability, Uncertainty and Quantitative Risk 5 (1): 1–33.

- Han J., A. Jentzen, and E. Weinan. 2017. “Solving High-Dimensional Partial Differential Equations Using Deep Learning.” arXiv:1707.02568.

- Henry-Labordere P. 2017. “Deep Primal-Dual Algorithm for BSDEs: Applications of Machine Learning to CVA and IM.” Available at SSRN 3071506.

- Hernandez A. 2016. “Model Calibration With Neural Networks.” Available at SSRN 2812140.

- Horvath B., A. Muguruza, and M. Tomas. 2019. “Deep Learning Volatility.” Available at SSRN 3322085.

- Hu K., Z. Ren, Šiška D., and L. Szpruch. 2021. “Mean-Field Langevin Dynamics and Energy Landscape of Neural Networks.” Annales de l'Institute Henry Poincaré Proability and Statistics 57 (4): 2043–2065.

- Huré C., H. Pham, and X. Warin. 2019. “Some Machine Learning Schemes for High-Dimensional Nonlinear PDEs.” arXiv:1902.01599.

- Hutzenthaler M., A. Jentzen, T. Kruse, and T. A. Nguyen. April, 2020. “A Proof that Rectified Deep Neural Networks Overcome the Curse of Dimensionality in the Numerical Approximation of Semilinear Heat Equations.” SN Partial Differential Equations and Applications 1 (2): 1–34.

- Itkin A. 2019. “Deep Learning Calibration of Option Pricing Models: Some Pitfalls and Solutions.” arXiv:1906.03507.

- Jacquier A. J., and M. Oumgari. 2019. “Deep PPDEs for Rough Local Stochastic Volatility.” Available at SSRN 3400035.

- Jentzen A., D. Salimova, and T. Welti. 2018. “A Proof That Deep Artificial Neural Networks Overcome the Curse of Dimensionality in the Numerical Approximation of Kolmogorov Partial Differential Equations With Constant Diffusion and Nonlinear Drift Coefficients.” arXiv:1809.07321.

- Krylov N. 1999. “On Kolmogorov's Equations for Finite Dimensional Diffusions.” In Stochastic PDE's and Kolmogorov Equations in Infinite Dimensions, 1–63. Springer.

- Krylov N. V. 2002. Introduction to the Theory of Random Processes. Vol. 43. American Mathematical Society.

- Kutyniok G., P. Petersen, M. Raslan, and R. Schneider. 2020. “A Theoretical Analysis of Deep Neural Networks and Parametric pdes”.

- LeCun Y., Y. Bengio, and G. Hinton. 2015. “Deep Learning.” Nature 521 (7553): 436.

- Liu S., A. Borovykh, L. A. Grzelak, and C. W. Oosterlee. 2019. “A Neural Network-Based Framework for Financial Model Calibration.” arXiv:1904.10523.

- McGhee W. A. 2018. “An Artificial Neural Network Representation of the SABR Stochastic Volatility Model.” SSRN 3288882.

- Mei S., A. Montanari, and P.-M. Nguyen. 2018. “A Mean Field View of the Landscape of Two-layer Neural Networks.” Proceedings of the National Academy of Sciences 115 (33): E7665–E7671.

- Milstein G., and M. Tretyakov. 2009. “Solving Parabolic Stochastic Partial Differential Equations Via Averaging Over Characteristics.” Mathematics of Computation 78 (268): 2075–2106.

- Newton N. J. 1994. “Variance Reduction for Simulated Diffusions.” SIAM Journal on Applied Mathematics 54 (6): 1780–1805.

- Øksendal B. 2003. “Stochastic Differential Equations.” In Stochastic Differential Equations, 65–84. Springer.

- Reisinger C., and Y. Zhang. 2020. “Rectified Deep Neural Networks Overcome the Curse of Dimensionality for Nonsmooth Value Functions in Zero-Sum Games of Nonlinear Stiff Systems”.

- Rotskoff G. M., and Vanden-Eijnden E.. 2018. “Neural Networks as Interacting Particle Systems: Asymptotic Convexity of the Loss Landscape and Universal Scaling of the Approximation Error.” arXiv:1805.00915.

- Sabate-Vidales M., D. Šiška, and L. Szpruch. 2020. “Solving Path Dependent pdes with LSTM Networks and Path Signatures”.

- Sergey Ioffe C. S. 2015. “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift.” arXiv:1502.03167.

- Sirignano J., and K. Spiliopoulos. 2017. “DGM: A Deep Learning Algorithm for Solving Partial Differential Equations.” arXiv:1708.07469.

- Sirignano J., and K. Spiliopoulos. 2020. “Mean Field Analysis of Neural Networks: A Central Limit Theorem.” Stochastic Processes and Their Applications 130 (3): 1820–1852.

- Stone H. 2018. “Calibrating Rough Volatility Models: A Convolutional Neural Network Approach.” arXiv:1812.05315.

- Wang Z., and S. Tang. 2021. “Gradient Convergence of Deep Learning-based Numerical Methods for Bsdes.” Chinese Annals of Mathematics, Series B 42 (2): 199–216.

- Weinan E., J. Han, and A. Jentzen. 2017. “Deep Learning-based Numerical Methods for High-dimensional Parabolic Partial Differential Equations and Backward Stochastic Differential Equations.” Communications in Mathematics and Statistics 5 (4): 349–380.

Appendices

Appendix 1.

Martingale Control Variate

Let be a probability space and consider an

-valued Wiener process

. We will use

to denote the filtration generated by W. Consider a

-valued, continuous, stochastic process defined for the parameters

,

adapted to

.

Let be a measurable function. We shall consider path-dependent contingent claims of the form

. Finally we assume that there is a (stochastic) discount factor given by

for an appropriate function

. We will omit the β from the discount factor notation. Let

We now interpret

as some risk-neutral measure and so the

-price of our contingent claim is

By assumption

is

measurable and

. Hence, from the Martingale Representation Theorem, see, e.g., Cohen and Elliott (Citation2015, Th. 14.5.1), there exists a unique process

adapted to

with

such that

(A1)

(A1)

The proof of the existence of the process

, is non-constructive. In the setup of the paper, we used the Markovian property of

to approximate

via the associated linear PDE. In the more general non-Markovian setup, Cont and Lu (Citation2016) provide a numerical method to construct the martingale representation.

Observe that in our setup, ,

for

. Hence tower property of the conditional expectation implies that

(A2)

(A2)

Consequently (EquationA1

(A1)

(A1) ) and (EquationA2

(A2)

(A2) ) imply

We then observe that

If we can generate iid

and

with the same distributions as W and Z respectively then we can consider the following Monte-Carlo estimator of

:

In the companion paper (Sabate-Vidales, Šiška, and Szpruch Citation2020), we provide deep learning algorithms to price path-dependent options in the risk neutral measure by solving the corresponding path-dependent PDE, using a combination of Recurrent Neural networks and path signatures to parametrize the process

.

Appendix 2.

Martingale Control Variate Deep Solvers

A.1. Empirical Correlation Maximization

This method is based on the idea that since we are looking for a good control variate we should directly train the network to maximize the variance reduction between the vanilla Monte-Carlo estimator and the control variates Monte-Carlo estimator by also trying to optimize λ.

Recall we denote . We also denote as

as the stochastic integral that arises in the martingale representation of

. The optimal coefficient

that minimizes the variance

is

Let

denote the Pearson correlation coefficient between

and

, i.e.,

With the optimal

we then have that the variance reduction obtained from the control variate is

See Glasserman (Citation2013, Ch. 4.1) for more details. Therefore we set the learning task as

The implementable version requires the definition of

in (Equation9

(9)

(9) ), where we set

The full method is stated as Algorithm 5.

Appendix 3.

Artificial Neural Networks

We fix a locally Lipschitz function and for

define

as the function given, for

by

. We fix

(the number of layers),

,

(the size of input to layer k) and

(the size of the network output). A fully connected artificial neural network is then given by

, where, for

, we have real

matrices

and real

dimensional vectors

.

The artificial neural network defines a function given recursively, for

, by

We can also define the function

which counts the number of parameters as

We will call such class of fully connected artificial neural networks

. Note that since the activation functions and architecture are fixed the learning task entails finding the optimal

.

Appendix 4.

Additional Numerical Results

Example A.1

Low dimensional basket option

We consider the basket options problem of pricing, using the example from Belomestny, Iosipoi, and Zhivotovskiy (Citation2017, Sec 4.2.3). The payoff function is

We first consider the basket options problem on two assets, with d = 2,

,

,

,

,

for

, and constant strike

. In line with the example from Belomestny, Iosipoi, and Zhivotovskiy (Citation2017, Sec 4.2.3) for comparison purposes we organize the experiment as follows. The control variates on 20, 000 batches of 5000 samples each of

by simulating the SDE Equation12

(12)

(12) , where

. The assets' initial values

are always constant

. We follow the evaluation framework to evaluate the model, simulating

paths by simulating Equation12

(12)

(12) with constant

for

. We have the following results:

Table provides the empirical variances calculated over

generated Monte Carlo samples and their corresponding control variates. The variance reduction measure indicates the quality of each control variate method. Table also provides the cost of training for each method, given by the number of optimizer iterations performed before hitting the stopping criteria, defined defined before with

.

Table provides the confidence interval for the variance of the Monte Carlo estimator, and the Monte Carlo estimator with control variate assuming these are calculated on

random paths.

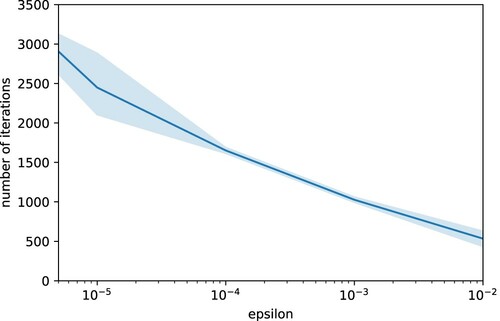

Figures and study the iterative training for the BSDE solver. We train the same model four times for different values of ϵ between 0.01 and

and we study the number of iterations necessary to meet the stopping criteria defined by ϵ, the variance reduction once the stopping criteria is met, and the relationship between the number of iterations and the variance reduction. Note that the variance reduction stabilizes for

. Furthermore, the number of training iterations increases exponentially as ϵ decreases. We choose

.

Table E1. Results on basket options problem on two assets, Example A.1. Models trained with fixed, non-random. Empirical Variance and variance reduction factor are presented.

Table E2. Results on basket options problem on two assets, Example A.1. Models trained with fixed, non-random.

Figure A1. Left: Variance reduction in terms of number of optimizer iterations. Right: Variance reduction in terms of epsilon. Both refer to Algorithm 3 used in Example A.1.

We note that in the example from Belomestny, Iosipoi, and Zhivotovskiy (Citation2017, Sec 4.2.3), the control variate is trained with fixed. Using this setting, Algorithm 2 cannot be used to approximate the control variate in (Equation9

(9)

(9) ): since the network at t = 0,

, is trained only at

, then automatic differentiation to approximate

will yield a bad approximation of

; indeed, during training

is unable to capture how v changes around

at t = 0. For this reason, Algorithm 2 is not included in the following results.

Example A.2

basket option with random sigma

In this example, as in Example 4.2, we aim to show how our approach – where we build a control variate by approximating the process – is more robust compared to directly approximating the price by a certain function in a high-dimensional setting.

We use the methodology proposed in Blanka Horvath and Muguruza (Citation2019), where the authors present a deep learning-based calibration method proposing a two-steps approach: first the authors learn the model that approximates the pricing map using an artificial neural network in which the inputs are the parameters of the volatility model. Second the authors calibrate the learned model using available data by means of different optimization methods.

For a fair comparison between our deep learning-based control variate approach vs. the method proposed in Blanka Horvath and Muguruza (Citation2019), we make the following remarks:

We will only use the first step detailed in Blanka Horvath and Muguruza (Citation2019) where the input to the model that approximates the pricing map is the volatility model's parameters:

, and the initial price is considered constant for training purposes. We run the experiment for d = 5.

In Blanka Horvath and Muguruza (Citation2019), the authors build a training set and then perform gradient descent-based optimization on the training set for a number of epochs. This is somewhat a limiting factor in the current setting where one can have as much data as they want since it is generated from some given distributions. In line with our experiments, instead of building a training set, in each optimization step we sample a batch from the given distributions.

In Blanka Horvath and Muguruza (Citation2019), the price mapping function is learned for a grid of combinations of maturities and strikes. In this experiment, we reduce the grid to just one point considering T = 0.5,

, where

.

We will use Algorithm 3 to build the control variate with the difference that now

will be passed as input to the each network at each time step

.

The experiment is organized as follows:

We train the network proposed in Blanka Horvath and Muguruza (Citation2019) approximating the price using Black–Scholes model and Basket options payoff. In each optimization iteration, a batch of size

, where the volatility model's parameters are sampled using

and

. We keep a test set of size 150,

where

denotes the price and is generated using 50, 000 Monte Carlo samples.

We use Algorithm 3 to build the control variate, where σ and r are sampled as above and are included as inputs to the network. We denote the trained model by

where

. In contrast with Algorithm 3.

We present the following results:

Figure displays the histogram of the squared error of the approximation of the PDE solution

for each instance in

. In this sample, it spans from almost

to

, i.e. ,for almost 5 orders of magnitude.

We build the control variate for that instance in the test set for which

generalizes the worst. For those particular

, Table provides its variance reduction factor.

Table E3. Results on basket options problem on 5 assets, Model trained with non-random , and random

.

Example A.3

High dimensional basket option

We also consider the basket options problem on d = 100 assets but otherwise identical to the setting of Example A.1. We compare our results against the same experiment in Belomestny, Iosipoi, and Zhivotovskiy (Citation2017, Sec 4.2.3, Table 6 and Table 7).

Table shows a significant improvement of the variance reduction factor (10× and 100× better) of all our Algorithms than the methods proposed in Belomestny, Iosipoi, and Zhivotovskiy (Citation2017) and applied in the same example.

Table E4. Results on basket options problem on 100 assets, Example A.3. Models trained with non-random so that the results can be directly compared to Belomestny, Iosipoi, and Zhivotovskiy (Citation2017).

Table E5. Results on basket options problem on 100 assets, Example A.3. Models trained with non-random .

A.2. Empirical Network Diagnostics

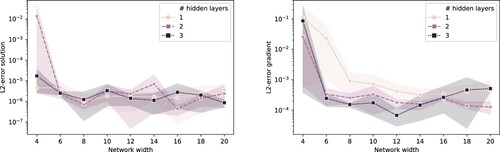

In this section, we consider the exchange options problem on two assets from Example 4.1, where the time horizon is 1 day. We consider different network architectures for the BSDE method described by Algorithm 3 to understand their impact on the final result and their ability to approximate the solution of the PDE and its gradient. We choose this problem given the existence of an explicit solution that can be used as a benchmark. The experiment is organized as follows:

Let L−2 be the number of hidden layers of

and

. Let

be the number of neurones per hidden layer k.

We train four times all the possible combinations for

and for

using

for the stopping criteria. The assets' initial values

are sampled from a lognormal distribution

where

.

We approximate the

-error of

and

with respect to the exact solution given by Margrabe's formula and its gradient.

Figure displays the average of the -errors and its confidence interval. We can conclude that for this particular problem, the accuracy of

does not strongly depend on the number of layers, and that there is no improvement beyond eight nodes per hidden layer. The training (its inputs and the gradient descent algorithm together with the stopping criteria) becomes the limiting factor. The accuracy of

is clearly better with two or three hidden layers than with just one. Moreover, it seems that there is benefit in taking as many as 10 nodes per hidden layer.