Abstract

Student engagement with evaluation surveys has been declining, reducing the reliability and usability of the data for quality assurance and enhancement. One reason, as reported by students, is the perceived low relevance of survey questions to their daily experiences and concerns. Uniform questions, provided by standardised survey instruments, rarely capture the needs of a diverse student population with wide-ranging educational experiences. This article draws on findings from a project that explored student priorities in the module-level experience by involving students in the development of survey questions. Q methodology was used to identify groups of students with similar views and to explore key factors and patterns of thought about the module experience. The findings indicate three distinct groups that reflect different stages of the student university journey, students’ level of maturity and their cognitive engagement. The article reflects on the implications of the findings for quality assurance processes, teaching and student support.

Introduction

In higher education, student evaluations of teaching serve multiple purposes, including public accountability, performance management and the improvement of teaching and learning (Williams & Cappuccini‐Ansfield, 2007; Huxham et al., 2008; Spooren et al., 2013). Students and academic staff regard the last of these as the most important (Nasser & Fresko, 2002; Chen & Hoshower, 2003; Chan et al., 2014). Collecting student feedback via surveys, reporting results in a timely way and acting on them are key elements of quality monitoring and enhancement processes in universities across the world. However, academic staff have questioned the reliability and usability of single institutional measures in the context of institutional diversity (Leathwood & Phillips, 2000), and students have queried the relevance of the questions (Harvey, 2003).

This study addresses these concerns by exploring students’ priorities in the module-level experience and developing a student-generated question bank. It also attempts to identify groups of students who share similar priorities and opinions, and reflects on the implications of these findings for the quality of higher education.

Evaluating module level experience: challenges of measurement

A module, a self-contained, formally structured unit of study with an explicit set of learning outcomes, is an integral part of students’ university experience. Module evaluation surveys, also known as end-of-semester evaluation surveys, unit evaluations in Australia, or course evaluation surveys in the USA and Canada, are usually standardised benchmarking instruments that are devised centrally or partially reproduced from validated national surveys or other published instruments. As Gibbs (2010, p. 27) rightly indicated, ‘almost all such questionnaires are “home-grown” and are likely to be of doubtful reliability and open to all kinds of biases’. It is not uncommon for items from validated instruments designed for other purposes to be taken out of context and used in module evaluation surveys, reducing the validity of the measure (Shah & Nair, 2012).

Module evaluation surveys seek student feedback on what Pastore et al. (2019) refer to as the ‘micro’ level of teaching and learning processes, rather than on wider course (programme of study) or institutional issues. As every student has to complete several module surveys each semester, standardisation of the survey is ‘an inevitable part of developing a sustainable structure for such systems, given the sheer volume of administration created by surveying students on this scale’ (Wiley, 2019, p. 56). However, the experience across modules is not homogeneous: some are taught predominantly via lectures and seminars, while others might be practice-based or linked to placements. Modules can be core or elective, may have different credit weightings, or may be shared across programmes. Some modules are team-taught, while others are delivered by a single academic. Because standardised surveys lack sensitivity to these individual contexts and schedules, they may be only partially effective as a means of evaluation (Wiley, 2019).

In module evaluation surveys, the focus is on the unit of learning that the student is undertaking rather than on individual teachers. In the United Kingdom (UK), many higher education institutions evaluate the module experience as a whole, with students having the option to leave comments about particular teachers. Merging teacher and unit evaluation blocks into a single instrument is common practice in North America and Australia. The teacher evaluation form asks about the teaching behaviours of the instructor (for example, enthusiasm for the material, availability to students, classroom atmosphere and engagement) and, in addition to quality assurance, is used in hiring, tenure or promotion decisions (Gravestock & Gregor-Greenleaf, 2008).

Typical unit evaluation questions might include items on curriculum content, assessment and feedback, teaching delivery, course organisation and learning resources. They are usually worded broadly enough to apply to all modules.

Evaluating module level experience: perceptions of staff and students

The ability to standardise module evaluation surveys and gather large amounts of comparable data quickly has led to their widespread use across the sector, and findings from student surveys are generally considered important evidence for quality assurance and enhancement (Spooren et al., 2013; Pastore et al., 2019). However, despite their ubiquity among higher education institutions, both academic staff and students have expressed scepticism towards institutionally derived module evaluation surveys (Huxham et al., 2008; Spooren et al., 2013; Chan et al., 2014; Borch et al., 2020). Academic staff may view such instruments as superficial or unhelpful (Edström, 2008) and believe that the administration and analysis of surveys are driven mainly by audit and control (Newton, 2000; Harvey, 2002; Borch et al., 2020). When evaluation of modules (units), alongside teacher evaluations, moved from voluntary to mandatory in Australia, with results linked to academic staff performance, it was perceived as an intrusion on academic autonomy (Shah & Nair, 2012). Reliance on student happiness as a measure of educational quality was also criticised (Shah & Nair, 2012).

Serious questions have been raised about bias in student feedback, such as unfairness to particular groups of staff who may attract more negative ratings because of characteristics such as gender or ethnicity, or because of the subject they have been assigned to teach (Uttl & Smibert, 2017; Mengel et al., 2019; Esarey & Valdes, 2020; Radchenko, 2020). In addition to biases detected in numerical ratings, research suggests that student and instructor gender play a role in shaping open-ended evaluations of teaching, especially descriptions of academics’ strengths and weaknesses (Taylor et al., 2021).

Meanwhile, from the perspective of students, it is argued that surveys rarely provide a nuanced understanding of their concerns, issues and acknowledgements (Harvey, 2003) and may sometimes lack face validity (Spooren et al., 2013). For example, in a recent study by Borch et al. (2020), students reported that responding to standard module evaluation questions was difficult because their module might be non-standard or cover many different teaching topics and activities. Academic staff in this Norwegian study also found the centrally derived surveys non-specific and often unusable, and therefore created their own questions or surveys. Huxham et al. (2008) directly compared closed-question surveys with other methods of eliciting student feedback on the same modules. They concluded that the issues students raised through open, free-form feedback methods (for example, focus groups) were much broader, with only about a third of them covered by the standard survey.

A recent study by Wiley (2019) also sought to establish students’ perspectives on module evaluation surveys. Students recognised some benefits of standardised module evaluation, such as consistency and the ability to compare performance across modules, but questioned the instrument’s ability to capture information specific to individual modules. The research also highlighted that students could become disengaged from the process if the survey was poorly timed, which reduced the applicability of some of the questions. In summary, many studies have shown that both staff and students see limitations in the design and procedure of module evaluation surveys.

Improving module evaluations

Given these critiques, a potential path to improvement is tailoring survey content to what students themselves consider most relevant to their learning (Tucker et al., 2003; Harvey, 2011; Spooren et al., 2013; Wiley, 2019). Patton (2008) has also argued that evaluation should be judged by its utility to its intended users, who should be engaged throughout the process. Arguably, such engagement should increase users’ understanding of evaluation and sense of ownership, and ultimately increase uptake. Stein et al. (2020) also suggested a need for partnerships between students, teachers and institutions in approaching evaluation activities. In Borch et al.’s (2020) study, students reported that when staff demonstrated respect for their opinions by engaging in dialogue, they felt more motivated to provide module feedback. This can be a way to make surveys more learner-orientated and useful as pedagogical tools (Borch et al., 2020). Students are also more likely to participate in evaluation surveys if they feel that their feedback will make a meaningful contribution and are assured that the lecturer is listening (Porter, 2004; Coates, 2005; Winchester & Winchester, 2012). Furthermore, Spooren and Christiaens (2017) showed that students who strongly valued the evaluation process gave more positive ratings of their teaching. Tailoring surveys to specific modules can accommodate these nuanced concerns.

A further benefit of gathering information on which module evaluation items students find relevant and important is the identification of clusters of students who share the same priorities. As Culver et al. (2021) emphasised, students’ perceptions of teaching are the product of both their individual characteristics and the teaching and learning environment. Such clusters might coalesce around different approaches to learning, levels of study, academic disciplines, or demographic factors such as gender, age, or educational or socio-economic background. In addition, the survey items that students consider important may illuminate their more general views on what constitutes quality and value for money in higher education (Lagrosen et al., 2004; Jungblut et al., 2015). By learning about student priorities and aligning them with learner characteristics, educators may be able to find out more about the profile of their cohort and address the needs of particular types of learners.

Aims of the research

The overarching aim of the research was to bring the student voice into the process of module evaluation and to understand the similarities and differences in priorities of different demographic groups. Specifically, the project aimed to:

generate a list of questions that reflected student priorities in module level experience and translate it into a bank of questions that module leaders could use to customise their evaluations.

categorise different patterns of thought about module level experience among students to identify clusters of students who share similar concerns and opinions.

Methodology

The study took place in a single UK higher education institution. The project was designed and implemented using Q methodology, which combines the strengths of both qualitative and quantitative research traditions (Brown, 1996) and is considered particularly suitable for researching the diversity of subjective experiences, perspectives and beliefs. The methodology comprises three main stages. Stage one involves developing a concourse: the set of statements to be sorted. Stage two requires participants to sort the statements along a continuum of preference. In stage three, the outputs (the sorts generated) are analysed and interpreted (Brown, 1993). Broad categories of belief are identified through correlation and factor analysis of the ranked statements, with interpretation supported by participant commentaries provided during or after the sorting exercise.

In Q methodology, the factors emerge from participants’ sorting activity rather than from the researcher’s analysis and classification of themes, as in other qualitative methods. For this reason, ‘the analysis … may incorporate less researcher bias than other interpretive techniques’ (Cordingley et al., 1997, p. 41).

Mapped onto the three stages of Q methodology, the project involved three phases.

Phase 1: gathering opinion statements

Student priorities in the module experience were explored via four focus groups attended by twenty-nine students from across the university. To ensure that the perspectives of the whole student body were represented, students from all levels of study and all subject areas were invited to take part in the discussions. Phase 1 participants comprised 15 female and 14 male students: 21 undergraduates (across all three levels of study) and nine postgraduates. Various disciplinary areas were represented, including engineering, mathematics, English, chemistry, sports science, human resource management, computer science and psychology, among others. Six students were international and 23 were home students. Each focus group was campus-based, mixing participants from different levels of study and from the subject areas located on that campus. The four discussions generated 60 statements as a pool of questions for the student question bank.

Phase 2: sorting opinion statements

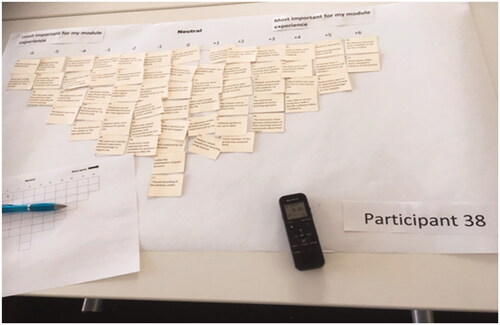

Thirty students, recruited separately using the same criteria (see Table 1 for the full demographic profile of this group), were asked to sort the statements on a continuum from ‘most important question for me’ to ‘least important question for me’, using sort cards and an answer sheet. The answer sheet forces the Q-sorts into a quasi-normal distribution (Figure 1), allowing fewer statements to be placed at either end of the scale and more in the middle (neutral) zone (Brown, 2004).
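
The forced-distribution constraint can be made concrete with a short Python sketch that checks whether a completed sort fills the answer sheet exactly. The column heights below are a hypothetical 13-column grid (rankings -6 to +6, the range of values reported in the Results) holding 60 statements; the study’s actual answer-sheet shape is not reproduced here, and the names `GRID` and `fits_grid` are illustrative only.

```python
from collections import Counter

# Hypothetical answer sheet: ranking value -> number of slots in that column.
# Symmetric and peaked in the middle; the real sheet used in the study may differ.
GRID = dict(zip(range(-6, 7), [2, 3, 4, 5, 6, 6, 8, 6, 6, 5, 4, 3, 2]))


def fits_grid(sort: dict[int, int], grid: dict[int, int] = GRID) -> bool:
    """Return True if `sort` (statement id -> ranking value) fills every column exactly."""
    if len(sort) != sum(grid.values()):
        return False  # every statement must be placed exactly once
    counts = Counter(sort.values())
    # With the total fixed, matching every column count also rules out off-grid values.
    return all(counts.get(value, 0) == capacity for value, capacity in grid.items())


# Build one valid sort by filling the columns left to right with statements 1..60.
values = [value for value, capacity in GRID.items() for _ in range(capacity)]
example_sort = {statement: value for statement, value in zip(range(1, 61), values)}
print(sum(GRID.values()), fits_grid(example_sort))  # 60 True
```

Moving any single statement to another column leaves one column over-full and another short, so the check fails; this is what forces participants to make relative judgements rather than rating many items as equally important.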

Table 1. Loading of research participants on each factor and participants’ demographics (defining sort is indicated by bold font).

The sorting activity was followed by interviews in which participants explained why they had sorted the statements as they did, focusing on the significance of particularly important items. The interviews were recorded and transcribed to inform the later identification of factors.

Phase 3: data analysis

Each completed sort was entered and analysed using a dedicated software package, PQMethod Version 2.35.27 (Schmolk, 2014). The software correlates each Q-sort with every other Q-sort to identify factors that represent shared forms of understanding among participants. The procedure includes principal component analysis, determination of the number of factors, varimax rotation and classification of respondents according to the factor on which each loads. A factor is the weighted average Q-sort of a group of respondents who sorted similarly. Although no participant may perfectly represent a factor, each student is typically more similar to one factor than to the others. A table of all factors and the ranking assigned to each statement in each factor serves as the basis for factor interpretation.
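
The analysis steps described above can be approximated in a few lines of Python with NumPy. This is a minimal sketch, not PQMethod’s own implementation: it assumes principal component extraction on the person-by-person correlation matrix, a standard SVD-based varimax rotation, and one commonly used flagging rule (a loading significant at p < .01, taken as 2.58 divided by the square root of the number of statements, whose square also exceeds half of the participant’s communality). The function names and the synthetic data are hypothetical.

```python
import numpy as np


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation using the common SVD-based iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    objective = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        if objective > 0 and s.sum() < objective * (1 + tol):
            break  # the varimax criterion has stopped improving
        objective = s.sum()
    return loadings @ rotation


def q_factor_analysis(sorts: np.ndarray, n_factors: int):
    """sorts: one row per participant, one column per statement (ranking values)."""
    corr = np.corrcoef(sorts)                       # person-by-person correlations
    eigenvalues, eigenvectors = np.linalg.eigh(corr)
    order = np.argsort(eigenvalues)[::-1]           # largest eigenvalue first
    eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
    loadings = eigenvectors[:, :n_factors] * np.sqrt(eigenvalues[:n_factors])
    rotated = varimax(loadings)
    threshold = 2.58 / np.sqrt(sorts.shape[1])      # significant loading, p < .01
    flags = []
    for row in np.abs(rotated):
        best = int(np.argmax(row))
        h2 = float((row ** 2).sum())                # communality of this participant
        defining = row[best] > threshold and row[best] ** 2 > h2 / 2
        flags.append(best if defining else None)    # None = not a defining sort
    return eigenvalues, rotated, flags


# Synthetic example: two groups of five participants built around two prototype sorts.
rng = np.random.default_rng(0)
grid_values = np.repeat(np.arange(-6, 7), [2, 3, 4, 5, 6, 6, 8, 6, 6, 5, 4, 3, 2])
proto_a, proto_b = rng.permutation(grid_values), rng.permutation(grid_values)
sorts = np.array([p + rng.normal(0, 1.5, 60) for p in [proto_a] * 5 + [proto_b] * 5])
eigenvalues, rotated, flags = q_factor_analysis(sorts, n_factors=2)
print(flags)
```

The added noise means the synthetic rows are only approximate Q-sorts, but each group should still flag onto its own factor. The eigenvalues sum to the number of participants because each standardised sort contributes one unit of variance to the correlation matrix.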

From a methodological standpoint, Q provides a robust method for investigating subjectivity (for example, attitudes, viewpoints and perspectives) and for revealing consensus and disagreement among respondents. As Brown (2019, p. 568) emphasised, ‘it is important to point out that the a priori structuring of Q samples is not for testing, that is, unlike the case in rating scales, no effort is made to prove, for example, that a statement unequivocally belongs in category’. Because participants are required to rank-order the statements along a single continuum defined by the sorting instruction, the result resembles a bell-shaped normal distribution, with more statements in the middle than in the tails. The sorted statement sets are then factor analysed case-wise (by person, as opposed to the standard item-wise factoring) to generate clusters of similar perspectives (Spurgeon et al., 2012). The approach is more engaging and natural for participants than assigning abstract scores on questionnaires with Likert scales (Klooster et al., 2008). Because the main aim is to access the diversity of viewpoints (clusters of subjectivity), applying interval-scale statistical analysis to what are essentially ordinal data can be justified.

The first two phases of the project were facilitated by student researchers and supervised by the project leaders. The student researchers were trained in focus group facilitation and Q-sorting to enable open, cross-level and cross-disciplinary discussion of module experiences, free of power imbalances. University research ethics approval was granted for both phases of the project.

Results

Student-generated questions

The sixty student-generated statements, reflecting students’ needs and priorities in the module experience, were grouped into four main categories: teaching delivery; organisation and communication; learning environment; and student support and well-being (Zaitseva & Law, 2020a).

Teaching delivery questions covered whether teaching was tailored to students’ level of study, the pace of delivery, the logical progression of content and the suitability of the material for all students on multi-programme modules. Students also highlighted the importance of understanding how the module content was relevant to their future. Lecturers’ enthusiasm and ability to motivate and inspire students were reflected in multiple questions. Many students valued lecturers providing opportunities after lectures to finish writing up notes, ask questions, or discuss content informally. The usability of PowerPoint slides for revision, the availability of lecture recordings and the use of interactive elements during lectures were also emphasised.

Organisation and communication questions related to clear and detailed module information, including consistency of communication and advice from staff. Teaching and learning materials being up-to-date and easy to find in the virtual learning environment was another prominent theme, as well as the organisation of practical sessions and the ability to access subject-specific software. Sufficient contact time and interaction with academic staff outside taught sessions were also perceived as important.

Learning environment questions included statements on adequate breaks, suitability of group size for specific activities, availability of space in the laboratories, the impact of disruptions (for example, late arrivals or building noise), appropriate length of lectures and teachers being able to control the audience.

Student support and well-being questions reflected the importance of being supported and respected as an individual. Questions in this category focused on lecturers being approachable and on academics’ ability to connect with students and value their opinions. Students also emphasised the importance of knowledgeable, supportive and accessible project supervisors.

The list of questions generated by students reflected the varied priorities of a diverse cohort. While the overarching categories aligned with those in standard questionnaires, the specific questions were shaped by students’ daily experiences of teaching and learning, indicating both their appreciation of good practice and their desire to address unresolved problems.

Factor extraction and interpretation

The eigenvalue indicates a factor’s statistical strength and explanatory power: the larger the eigenvalue, the more variance the factor explains (Kline, 1994). Usually, factors with eigenvalues below 1.00 are not extracted or retained. Eight factors with eigenvalues above 1.00 were extracted, but when all factor retention criteria were considered (Brown, 1980, p. 223), only three were retained, with eigenvalues of 4.3, 2.7 and 2.4, respectively (Zaitseva & Law, 2020b). The sorts of twenty-five participants defined the three factors: eleven participants loaded on Factor 1, six on Factor 2 and eight on Factor 3 (Table 1).
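
The percentages of variance reported for each factor below are consistent with dividing each eigenvalue by the number of Q-sorts (30), since the correlation matrix of 30 standardised sorts has a total variance (its trace) of 30. A quick Python check of that arithmetic, assuming this is how the reported figures were derived:

```python
N_SORTS = 30  # Phase 2 participants; the trace of their 30 x 30 correlation matrix is 30


def explained_variance(eigenvalue: float, n_sorts: int = N_SORTS) -> float:
    """Percentage of total variance carried by a factor with the given eigenvalue."""
    return 100 * eigenvalue / n_sorts


retained = {"Factor 1": 4.3, "Factor 2": 2.7, "Factor 3": 2.4}  # eigenvalues reported in the text
for name, eigenvalue in retained.items():
    # Each retained factor also passes the eigenvalue > 1.00 rule.
    print(f"{name}: eigenvalue {eigenvalue} > 1.00 is {eigenvalue > 1.0}; "
          f"{explained_variance(eigenvalue):.0f}% of variance")
print(f"Three factors together: {explained_variance(sum(retained.values())):.0f}%")
```

This reproduces the 14%, 9% and 8% reported for Factors 1 to 3, which together account for roughly 31% of the variance in the 30 sorts.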

Factor interpretation requires the integration of the quantitative data provided by the factor arrays and relative rankings tables, demographic data and the qualitative data collected by the interviews. Each factor is usually given a descriptive title to characterise the group identified.

Factor 1: structured and guided module experience

The factor’s eigenvalue is 4.3 and it explains 14% of the study variance. The eleven students significantly associated with this factor were predominantly in their first and second years and represented a mix of subject areas, including humanities and social sciences, science, and engineering and technology. The group contained almost equal numbers of male and female students (six and five, respectively) and of students who were and were not the first in their family to attend university (five and six, respectively). Twenty-two distinguishing statements were representative of this factor (significance at p < 0.01), as summarised below.
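
Whether a statement counts as distinguishing is typically decided by comparing its z-scores across factors against the standard error of their difference. The Python sketch below follows a convention commonly used in Q analysis (and, we assume, in PQMethod): the reliability of a factor defined by n sorts is estimated as 0.80n / (1 + 0.80(n - 1)), the standard error of the factor’s z-scores as the square root of (1 - reliability), and a difference is significant at p < 0.01 when it exceeds 2.58 standard errors of the difference. The function names and example z-scores are hypothetical; only the defining-sort counts (11, 6 and 8) come from this study.

```python
import math


def factor_reliability(n_defining: int, avg_reliability: float = 0.80) -> float:
    """Reliability of a factor defined by n_defining sorts, each assumed to have reliability 0.80."""
    return avg_reliability * n_defining / (1 + avg_reliability * (n_defining - 1))


def z_score_se(n_defining: int) -> float:
    """Standard error of a factor's z-scores (the z-scores themselves have SD 1)."""
    return math.sqrt(1 - factor_reliability(n_defining))


def is_distinguishing(z: float, n_defining: int, others: list[tuple[float, int]],
                      critical: float = 2.58) -> bool:
    """True if z differs from every other factor's z-score beyond critical x SE of the difference."""
    for z_other, n_other in others:
        se_diff = math.sqrt(z_score_se(n_defining) ** 2 + z_score_se(n_other) ** 2)
        if abs(z - z_other) <= critical * se_diff:
            return False
    return True


# Defining-sort counts from this study: Factor 1 = 11, Factor 2 = 6, Factor 3 = 8.
for n in (11, 6, 8):
    print(n, round(factor_reliability(n), 3), round(z_score_se(n), 3))

# Hypothetical statement: z = 1.5 on Factor 1, 0.2 on Factor 2 and 0.4 on Factor 3.
print(is_distinguishing(1.5, 11, [(0.2, 6), (0.4, 8)]))
```

Factors defined by more sorts have smaller standard errors, so smaller z-score differences are enough for a statement to distinguish them.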

Students in this group value structured module content and a well-defined, guided learning experience (Everything I needed for exam and/or coursework was covered in this module (+6)). This value is the item’s ranking in the factor array, which is derived from z-scores: standardised weighted averages of the Q-sorts that load significantly on the factor (Mullen et al., 2022). The higher the value, the more strongly and positively participants feel about the statement. Students in this group also value clear instructions for lab and practice-based sessions (+6). While they appreciate it when a module sparks their curiosity (The module helped me to develop interest for the subject area (+5)), they tend to rely on the resources or guidance provided (There was enough material present on the slides for later revision (+4)). The group appreciates the approachability of staff and the provision of support (I could approach my lecturer and ask for help if I didn’t understand something (+5)). These students are also concerned with the fairness of assessment (+3) and of the marking of teamwork (+4).
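
The step from weighted average to factor-array ranking can be sketched in Python with NumPy. This example assumes the weighting commonly used in Q analysis, w = f / (1 - f²) for a defining sort with loading f, so that sorts loading more highly count for more; it standardises the weighted statement totals into z-scores and then maps them back onto a hypothetical 60-statement grid (-6 to +6). The grid shape and the function name `factor_array` are assumptions, not the study’s exact configuration.

```python
import numpy as np

# Hypothetical grid: how many statements sit at each ranking value from -6 to +6.
GRID_COUNTS = [2, 3, 4, 5, 6, 6, 8, 6, 6, 5, 4, 3, 2]


def factor_array(defining_sorts: np.ndarray, loadings: np.ndarray):
    """defining_sorts: one row per defining Q-sort; loadings: each sort's loading on the factor."""
    weights = loadings / (1 - loadings ** 2)       # higher loadings weigh more heavily
    totals = weights @ defining_sorts              # weighted total for each statement
    z = (totals - totals.mean()) / totals.std()    # z-scores across statements
    grid_values = np.repeat(np.arange(-6, 7), GRID_COUNTS)  # ascending, 60 values
    ranking = np.empty_like(grid_values)
    ranking[np.argsort(z)] = grid_values           # lowest z -> -6, highest z -> +6
    return z, ranking


# Illustration: three identical defining sorts should reproduce that sort as the factor array.
rng = np.random.default_rng(1)
prototype = rng.permutation(np.repeat(np.arange(-6, 7), GRID_COUNTS))
sorts = np.vstack([prototype, prototype, prototype])
z, ranking = factor_array(sorts, np.array([0.8, 0.7, 0.6]))
print(ranking[:10])
```

In real data the defining sorts differ, and the factor array is a consensus ranking in which a value such as +6 marks the statements with the highest z-scores for that factor.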

Students loading on Factor 1 did not prioritise assessment flexibility (for example, being able to choose from a range of assessments (–3)), and they valued lecturers being open to discussion after class less highly (–2). Lecture recordings or PowerPoint slides being released in advance were ranked relatively low (–4). In summary, the group associated with Factor 1 represents students who tend to be in the early stages of their university journey; they value a well-structured and supportive learning environment and demonstrate assessment-related anxiety.

Factor 2: maximising learning experience

The factor’s eigenvalue is 2.7; it explains 9% of the study variance and has 26 distinguishing statements. The six students associated with this factor were predominantly final-year undergraduates, representing humanities and social sciences, health and business. As with Factor 1, the group contained almost equal numbers of male and female students and of students who were and were not the first in their family to attend university.

Factor 2 students value interaction with staff (Lecturer(s) made appropriate time available for questions (+6); Lecturer(s) are open to discussion in class (+4)) and clear communication (+3). They appreciate engaging delivery (+5) and the ability to choose from a range of assessments (+2). Access to slides before the lecture and to recordings afterwards (+6), and having all necessary resources provided on the virtual learning environment (VLE) (+5), are important for this group of students.

These students are not particularly concerned about unequal workload in group work (–4) or fairness of assessment (–3), and they rated the lecturer’s ability to motivate students and an appropriate amount of contact time relatively low (–5 and –3, respectively). The organisation of practical sessions was also of limited concern for this group, but this might be related to the nature of their subjects. Students associated with Factor 2 appear to be motivated and confident learners, often at the final stage of their university journey, who demonstrate cognitive effort and a strategic approach to their studies. They are keen to maximise the learning experience, appreciate the opportunity to shape their own assessment choices and to interact with staff, and value the availability of information and resources.

Factor 3: settling into a culture of research and scholarship

The factor’s eigenvalue is 2.4; it explains 8% of the study variance and has 23 distinguishing statements. Eight students are associated with this factor: mainly final-year undergraduates and postgraduate taught students, with a few second-year students, representing engineering and technology, science and health. Five are female and three male, with an equal split in family educational background (four students are the first in their family to attend university and four are not).

This group is concerned with the successful completion of the dissertation, research, or final-year project. They want their supervisor to be an expert in the subject area (+5) and their queries to be answered promptly (+6). The importance of research-led teaching is also reflected in their prioritisation of lecture content that includes current and up-to-date research (+4).

Although this group spans various levels of study, its members share some priorities with Factor 1 students (The lectures were tailored well for my level of study (+5); The module content had a logical progression (+4)), while also looking ahead to post-university life (I can see the relevance of this module to my future (+5)).

Other indicators included a need for lecture recordings (I believe I would benefit from recordings of the lectures (+6); I found recordings of the lectures useful (+3)). This group also placed more emphasis than the other two on being respected by their lecturers as individuals (+3); in seeming contradiction, however, they gave a low rating to Student opinions are valued and engaged with (–5). Students loading on this factor were also less concerned about lectures being dynamic (–6) and about marking criteria being applied fairly by all markers (–5).

Reflecting on the differences in students’ priorities and implications for quality assurance

Exploring students’ module-level experience in a student-led environment and eliciting their priorities revealed a nuanced picture linked to student characteristics. The findings and their implications for quality assurance and enhancement in higher education are discussed below.

Three distinctive perspectives and implications for learner development

The findings illustrate at least three distinctive perspectives on module level experience.

The empirically derived observations indicated that level of study, and therefore students’ maturity, was a noticeable factor for these groups. There were also some disciplinary influences (for example, Factor 2 was represented mainly by humanities and social sciences students, and there was some association with science and engineering subjects in Factor 3). Neither gender nor being the first generation to attend university showed an empirical association with any of the factors.

Factor 1 students relied on the provision of a highly structured environment, required support and guidance, and seemed to show signs of extrinsic motivation. Some were recent school leavers and so may have been used to more structured teaching and learning while still transitioning to independent learning. Their priorities may indicate a more strategic, assessment-oriented approach to learning (Biggs, 1988), as opposed to deep learning, in which students strive to grasp the full meaning of the material. Ramsden (1988) argued that a student’s approach to learning is both personal and situational; it is not purely an individual characteristic but rather a response to the teaching environment. Biggs (1999) also noted that approaches to learning can be modified by the teaching and learning context and by learners themselves. The priorities of these students could therefore indicate a need to provide a teaching environment that nurtures and facilitates deeper engagement with their studies from the very beginning of their university journey.

According to Malone and Lepper (1987), there are four basic factors for a higher level of intrinsic motivation in learning: challenge, curiosity, control and fantasy (encompassing the emotions and thinking processes of the learner). These would seem important for Factor 1 students to develop, although Haggis (2003, p. 102) offers a counterpoint. Haggis develops a broader critique that challenges the assumption that deep approaches ought to be fostered in higher education, suggesting that this assumption may reflect the ‘elite’ goals and values of academics (‘gatekeepers’) themselves but has little relevance to the majority of students in a mass higher education context.

Approaches to learning develop over time, and there are indications in our data of students growing in maturity during their degree programmes. Factor 2 students are mainly in their final year of study and becoming more motivated and engaged with their work. Their priorities reflect intrinsically driven behaviours and a desire to capitalise on their strengths. Factor 3 students, meanwhile, appear to be venturing into a research journey, with some needing more support and reassurance from their supervisors. They valued research-informed teaching and showed signs of settling into a culture of scholarship. This cluster was dominated by science and engineering disciplines, which may explain some of their ratings. For example, although they wanted to be respected by lecturers, they did not think it important that ‘student opinions are valued and engaged with’. The interviews pointed to a perception of scientific knowledge as objective. The low concern about markers applying the criteria fairly was due to many assessments being mathematical or statistical, leaving less room for varied interpretations by markers.

Implications for quality assurance and enhancement: developing a partnership approach

Quality in higher education can be conceptualised as exception, perfection, fitness-for-purpose, value-for-money or transformation (Harvey & Green, 1993). Factor 1 students gave a high ranking to items focussed on the mechanics of running and organising the module, which could show that they subscribed to the notion of quality as perfection: meeting their needs consistently, without ambiguity, and getting everything right first time (Harvey & Green, 1993). Meanwhile, Factor 2 students were more focussed on the extra value they could gain from the module and on opportunities to maximise their learning, as shown in items such as ‘lecturers made appropriate time available for questions’. Possibly, this group of students is more focussed on the transformative power of education and would regard a module that facilitated this as being of high quality. Factor 3 students, in turn, gave a high rating to ‘I can see the relevance of this module to my future’, which might imply a certain level of uncertainty in relation to complex research topics, with students questioning ‘why am I doing this?’, thus emphasising fitness-for-purpose. Echoing Jungblut et al. (2015), the findings show that what students value in their higher education experience is heterogeneous and may depend on the stage of their university journey.

The project findings demonstrated that ‘recasting student evaluations of teaching within a narrative of “partnership”’ (Stein et al., 2020, p. 13) is paramount for quality assurance in a higher education context. Isaeva et al. (2020) also recommended that quality assurance should be built as a partnership with students, something that the approach described here can facilitate. Student engagement in the process of devising questions is beneficial for both students and staff: students are given an authentic opportunity to provide feedback, while for staff the results of the evaluation should lead to increased awareness of, and insight into, student learning approaches and processes (Borch et al., 2020). For example, if many students in a cohort rate group assessment as unfair, this might indicate a noticeable proportion of Factor 1 students in that cohort, which would suggest a need to help students develop more functional learning approaches and self-regulatory skills.

Making sure that the everyday experiences and concerns of students, whatever stage of their learning journey they are at, are reflected in the evaluation questions will make their feedback more informative, reliable and usable for quality assurance and enhancement purposes. Starting from an ‘evaluative dialogue’ (discussion with course representatives) would be one way for module leaders to gain insight into potential student priorities for the module, which could then be tested with the wider student body in the survey. As a next step, practitioners could use the methodology as a ‘research-informed’ way of enhancing communication with students and understanding their needs.

Concluding notes

Since the Student Question Bank was introduced into module evaluation in 2020, with a recommendation to add at least one student-devised question to the main module questionnaire, the number of module leaders using the questions has grown each semester. Anecdotal evidence suggests that this has also prompted staff to talk to students and write additional bespoke questions, gradually making this an integral and essential part of the quality enhancement process, rooted in partnership.

Disclosure statement

No potential conflict of interest was reported by the authors.

Data availability statement

The data that support the findings of this study were fully anonymised and are available at: https://figshare.com/.

References

- Biggs, J.B., 1988, ‘Assessing student approaches to learning’, Australian Psychologist, 23(2), pp. 197–206.

- Biggs, J.B., 1999, ‘What the student does: teaching for enhanced learning’, Higher Education Research & Development, 18(1), pp. 57–75.

- Borch, I., Sandvoll, R. & Risør, T., 2020, ‘Discrepancies in purposes of student course evaluations: what does it mean to be satisfied?’, Education Assessment, Evaluation and Accountability, 32, pp. 83–102.

- Brown, M., 2004, ‘Illuminating patterns of perception: an overview of Q Methodology’. Available at https://apps.dtic.mil/sti/pdfs/ADA443484.pdf (accessed 13 June 2022).

- Brown, S.R., 1980, Political Subjectivity (New Haven, CT, Yale University Press).

- Brown, S.R., 1993, ‘A primer on Q Methodology’, Operant Subjectivity, 16(3/4), pp. 91–138.

- Brown, S.R., 1996, ‘Q Methodology and qualitative research’, Qualitative Health Research, 6(4), pp. 561–67.

- Brown, S.R., 2019, ‘Subjectivity in the human sciences’, The Psychological Record, 69(4), pp. 565–79.

- Chan, C.K.C., Luk, L.Y.Y. & Zeng, M., 2014, ‘Teachers’ perceptions of student evaluations of teaching’, Educational Research and Evaluation, 20(4), pp. 275–89.

- Chen, Y. & Hoshower, L., 2003, ‘Student evaluation of teaching effectiveness: an assessment of student perception and motivation’, Assessment & Evaluation in Higher Education, 28, pp. 71–88.

- Coates, H., 2005, ‘The value of student engagement for higher education quality assurance’, Quality in Higher Education, 11(1), pp. 25–36.

- Cordingley, M.E., Webb, C. & Hillier, V., 1997, ‘Q Methodology’, Nurse Researcher, 4, pp. 31–45.

- Culver, K., Bowman, N. & Pascarella, E., 2021, ‘How students’ intellectual orientations and cognitive reasoning abilities may shape their perceptions of good teaching practices’, Research in Higher Education, 62, pp. 765–88.

- Edström, K., 2008, ‘Doing course evaluation as if learning matters most’, Higher Education Research & Development, 27(2), pp. 95–106.

- Esarey, J. & Valdes, N., 2020, ‘Unbiased, reliable, and valid student evaluations can still be unfair’, Assessment & Evaluation in Higher Education, 45(8), pp. 1106–20.

- Gibbs, G., 2010, Dimensions of Quality (York, Higher Education Academy). Available at https://www.heacademy.ac.uk/sites/default/files/dimensions_of_quality.pdf (accessed 13 June 2022).

- Gravestock, P. & Gregor-Greenleaf, E., 2008, Student Course Evaluations: Research, models and trends (Toronto, Higher Education Quality Council of Ontario). Available at https://teaching.pitt.edu/wp-content/uploads/2018/12/OMET-Student-Course-Evaluations.pdf (accessed 13 June 2022).

- Haggis, T., 2003, ‘Constructing images of ourselves? A critical investigation into “approaches to learning” research in higher education’, British Educational Research Journal, 29(1), pp. 89–104.

- Harvey, L., 2002, ‘Evaluation for what?’, Teaching in Higher Education, 7(3), pp. 245–63.

- Harvey, L., 2003, ‘Student feedback’, Quality in Higher Education, 9(1), pp. 3–20.

- Harvey, L., 2011, ‘The nexus of feedback and improvement’, in Nair, C.S., Patil, A. & Mertova, P. (Eds.), 2011, Student Feedback: The cornerstone to an effective quality assurance system in higher education, pp. 3–26 (Cambridge, Woodhead).

- Harvey, L. & Green, D., 1993, ‘Defining quality’, Assessment & Evaluation in Higher Education, 18(1), pp. 9–34.

- Huxham, M., Laybourn, P., Cairncross, S., Gray, M., Brown, N., Goldfinch, J. & Earl, S., 2008, ‘Collecting student feedback: a comparison of questionnaire and other methods’, Assessment & Evaluation in Higher Education, 33(6), pp. 675–86.

- Isaeva, R., Eisenschmidt, E., Vanari, K. & Kumpas-Lenk, K., 2020, ‘Students’ views on dialogue: improving student engagement in the quality assurance process’, Quality in Higher Education, 26(1), pp. 80–97.

- Jungblut, J., Vukasovic, M. & Stensaker, B., 2015, ‘Student perspectives on quality in higher education’, European Journal of Higher Education, 5(2), pp. 157–80.

- Kline, P., 1994, An Easy Guide to Factor Analysis (New York, NY, Routledge).

- Klooster, P.M., Visser, M. & de Jong, M.D., 2008, ‘Comparing two image research instruments: the Q-sort method versus the Likert attitude questionnaire’, Food Quality and Preference, 19(5), pp. 511–18.

- Lagrosen, S., Seyyed-Hashemi, R. & Leitner, M., 2004, ‘Examination of the dimensions of quality in higher education’, Quality Assurance in Education, 12(2), pp. 61–9.

- Leathwood, C. & Phillips, D., 2000, ‘Developing curriculum evaluation research in higher education: process, politics and practicalities’, Higher Education, 40, pp. 313–30.

- Malone, T.W. & Lepper, M.R., 1987, ‘Making learning fun: a taxonomy of intrinsic motivations for learning’, in Snow, R.E. & Farr, M.J. (Eds.), 1987, Aptitude, Learning, and Instruction, Volume 3: Conative and affective process analyses, pp. 223–53 (Hillsdale, NJ, Lawrence Erlbaum Associates).

- Mengel, F., Sauermann, J. & Zölitz, U., 2019, ‘Gender bias in teaching evaluations’, Journal of the European Economic Association, 17(2), pp. 535–66.

- Mullen, R.F., Fleming, A., McMillan, L. & Kydd, A., 2022, Q Methodology: Quantitative aspects of data analysis in a study of student nurse perceptions of dignity in care (London, SAGE).

- Nasser, F. & Fresko, B., 2002, ‘Faculty views of student evaluation of college teaching’, Assessment & Evaluation in Higher Education, 27, pp. 187–98.

- Newton, J., 2000, ‘Feeding the beast or improving quality? Academics’ perceptions of quality assurance and quality monitoring’, Quality in Higher Education, 6(2), pp. 153–63.

- Pastore, S., Manuti, A., Scardigno, F., Curci, A. & Pentassuglia, M., 2019, ‘Students’ feedback in mid-term surveys: an explorative contribution in the Italian university context’, Quality in Higher Education, 25(1), pp. 21–37.

- Patton, M.Q., 2008, Utilization-focused Evaluation, fourth edition (Thousand Oaks, CA, Sage).

- Porter, S., 2004, ‘Raising response rates: what works?’, New Directions for Institutional Research, (121), pp. 5–21.

- Radchenko, N., 2020, ‘Student evaluations of teaching: unidimensionality, subjectivity, and biases’, Education Economics, 28(6), pp. 549–66.

- Ramsden, P., 1988, ‘Context and strategy: situational influences on learning’, in Schmeck, R.R. (Ed.), 1988, Learning Strategies and Learning Styles, pp. 159–84 (New York, Plenum Press).

- Schmolck, P., 2014, PQ Method Manual. Available at http://schmolck.userweb.mwn.de/qmethod/pqmanual.htm (accessed 13 June 2022).

- Shah, M. & Nair, C.S., 2012, ‘The changing nature of teaching and unit evaluations in Australian universities’, Quality Assurance in Education, 20(3), pp. 274–88.

- Spooren, P., Brockx, B. & Mortelmans, D., 2013, ‘On the validity of student evaluation of teaching: the state of the art’, Review of Educational Research, 83(4), pp. 598–642.

- Spooren, P. & Christiaens, W., 2017, ‘I like your course because I believe in (the power of) student evaluations of teaching (SET). Students’ perceptions of a teaching evaluation process and their relationships with SET scores’, Studies in Educational Evaluation, 54, pp. 43–9.

- Spurgeon, L., Humphreys, G., James, G. & Sackley, C., 2012, ‘A Q-methodology study of patients’ subjective experiences of TIA’, Stroke Research and Treatment, Article ID 486261.

- Stein, S.J., Goodchild, A., Moskal, A., Terry, S. & McDonald, J., 2020, ‘Student perceptions of student evaluations: enabling student voice and meaningful engagement’, Assessment & Evaluation in Higher Education, 46(6), pp. 837–51.

- Taylor, M., Su, Y., Barry, K. & Mustillo, S., 2021, ‘Using structural topic modelling to estimate gender bias in student evaluations of teaching’, in Zaitseva, E., Tucker, B. & Santhanam, E. (Eds.), 2021, Analysing Student Feedback in Higher Education: Using text mining to interpret student voice, pp. 51–67 (London, Routledge).

- Tucker, B., Jones, S., Straker, L. & Cole, J., 2003, ‘Course evaluation on the web: facilitating student and teacher reflection to improve learning’, New Directions for Teaching and Learning, 96, pp. 81–94.

- Uttl, B. & Smibert, D., 2017, ‘Student evaluations of teaching: teaching quantitative courses can be hazardous to one’s career’, PeerJ, 5, e3299. Available at https://peerj.com/articles/3299/ (accessed 13 June 2022).

- Wiley, C., 2019, ‘Standardised module evaluation surveys in UK higher education: establishing students’ perspectives’, Studies in Educational Evaluation, 61, pp. 55–65.

- Williams, J. & Cappuccini‐Ansfield, G., 2007, ‘Fitness for purpose? National and institutional approaches to publicising the student voice’, Quality in Higher Education, 13(2), pp. 159–72.

- Winchester, M.K. & Winchester, T.M., 2012, ‘If you build it will they come? Exploring the student perspective of weekly student evaluations of teaching’, Assessment & Evaluation in Higher Education, 37(6), pp. 671–82.

- Zaitseva, E. & Law, A., 2020a, Student Question Bank. Available at https://doi.org/10.6084/m9.figshare.17391470 (accessed 6 July 2022).

- Zaitseva, E. & Law, A., 2020b, Q methodology calculations. Available at https://doi.org/10.6084/m9.figshare.17390939 (accessed 6 July 2022).