Abstract

Initial Judgment of Solvability (iJOS) is a metacognitive judgment that reflects solvers’ first impression as to whether a problem is solvable. We hypothesized that iJOS is inferred by combining prior expectations about the entire task with heuristic cues derived from each problem’s elements. In two experiments participants first provided quick iJOSs for all problems, then attempted to solve them. We manipulated expectations by changing the proportion of solvable problems conveyed to participants (33%, 50%, or 66%), while the true proportion was 50% for all. In Experiment 1 we used the non-verbal Raven’s matrices and examined nameability as the element-based heuristic cue. Unsolvable matrices were generated by switching locations of elements in original Raven’s matrices. In Experiment 2 we used the verbal Compound Remote Associate (CRA) problems and examined word frequency in the language as the element-based heuristic cue. Unsolvable CRAs were random word triads from the same word pool. The results were consistent in suggesting that quick iJOS integrates prior expectations and experience-based heuristic cues. Notably, iJOS was predictive of the subsequent solving attempt only for Raven’s matrices.

Problem solving is classically defined as overcoming the gap between a known state and a goal state when it is impossible to reach the goal state in an immediate and obvious way (Duncker, 1945). Newell and Simon (1972) described problem solving as a mental activity whose main components are knowledge states, operators for changing one state into another, and constraints on applying the operators. This definition deals with cognitive challenges of problem solving, but also hints at the importance of metacognitive processes. Metacognitive monitoring is the process by which people assess their own states of knowledge, while metacognitive control encompasses applying strategies and regulating effort towards achieving one’s goals based on monitoring output.

Meta-reasoning processes are the metacognitive processes that accompany solving problems (Ackerman & Thompson, 2017). As people are reluctant to invest time and effort in a task for which the probability of success is low (e.g., De Neys et al., 2013; see Stanovich, 2009, for a review), the meta-reasoning framework suggests that the very first metacognitive control decision made when encountering a problem is whether to attempt solving it at all. This decision is informed by the monitoring type called initial Judgment of Solvability (iJOS; Ackerman & Thompson, 2017): the solver’s assessment of the likelihood that a problem is solvable (rather than unsolvable) prior to starting the solving process. By this theorizing, biases in these initial judgments are expected to mislead effort regulation, leading people to waste time on unsolvable problems and/or abandon solvable ones (e.g., Payne & Duggan, 2011). Lauterman and Ackerman (2019) demonstrated this misleading power of iJOS on effort regulation with Raven’s matrices, showing that more time was invested in solving attempts for problems initially judged as solvable rather than unsolvable regardless of their objective solvability. Recently, Burton et al. (in press) found similar predictive power of iJOS with anagrams in some conditions, but not in others.

Very little is known so far about how iJOS is inferred. In previous studies researchers required participants to provide an iJOS for each problem very quickly (0.5–4 s), a time frame that was sufficient to glean a first impression, but insufficient for solving any of the problems (Ackerman & Beller, 2017; Burton et al., in press; Lauterman & Ackerman, 2019; Markovits et al., 2015; Topolinski & Strack, 2009). Nevertheless, iJOS was clearly not arbitrary, even when it did not reflect actual solvability. For example, Topolinski et al. (2016) collected iJOS for solvable anagrams (scrambled words) and similar unsolvable ones (where no word could be formed from the given letters). Their stimuli included letter sets that were more and less easy to pronounce. They found that iJOS was higher for anagrams that were easy (vs. hard) to pronounce. These findings suggest utilization of heuristic cues that are not associated with actual solvability. As such, iJOS is similar to other metacognitive judgments, which have also been shown to rely on heuristic cues (see Ackerman, 2019; Koriat, 1997, for reviews).

In most meta-reasoning studies, as demonstrated above, as well as in most meta-memory research (e.g., word pairs: Koriat, 1997; Undorf et al., 2018; knowledge questions: Ackerman et al., 2020), the tasks have been verbal. The present study examined how iJOS integrates two clearly distinct information sources while comparing a non-verbal task, Raven’s matrices, to a verbal task, Compound Remote Associates (CRA; Bowden & Beeman, 1998; Bowden & Jung-Beeman, 2003).

Sources of heuristic cues for metacognitive judgments

Ackerman (2019) suggested a taxonomy whereby heuristic cues are organized in three levels. Level 1 is self-perceptions: a person’s beliefs about his/her own traits, abilities, and knowledge. A key aspect of self-perceptions is confidence in one’s ability to succeed in a given task (e.g., Dunning et al., 2003). Level 2 is task characteristics: beliefs about factors affecting performance in a task as a whole (e.g., a set of exam questions or a problem set). Task characteristics may include time frame: time pressure versus ample time (e.g., Ackerman & Lauterman, 2012); test type: multiple-choice versus open-ended questions (e.g., Ozuru et al., 2012); learning procedure: availability of study aids (e.g., Mudrick et al., 2019; Wiley et al., 2018); and beliefs regarding the effectiveness of such study aids (e.g., Serra & Dunlosky, 2010). Level 3 is momentary experiences. These are item-based cues (Koriat, 1997) available to the solver throughout the specific solving episode, i.e., before, during, and after attempting each task item (e.g., a question or problem). Most studies dealing with heuristic cues for metacognitive judgments focus on level 3. However, only a small number of non-verbal cues have been considered (e.g., Besken, 2016).

An example of an item-based cue that has been uniquely studied in non-verbal problems is symmetry. Reber et al. (2008) presented participants with a simple arithmetic verification task (e.g., 12 + 24 = 36; true or false?), in which the digits in each equation were replaced by the appropriate numbers of dots. The dots were arranged in either symmetric or asymmetric patterns, and the equations were shown with either correct or incorrect results for verification. Reber et al. found that participants were more likely to judge the arithmetic statement as true when the dots were arranged in symmetric patterns. Thus, that study suggests that symmetry is an item-based (level 3) heuristic cue.

In the present study, in addition to our main contributions, we offer nameability of presented shapes as a novel heuristic cue for metacognitive judgments in non-verbal problems, and examine whether it is an item-level heuristic cue underlying iJOS. We chose nameability because it entails translating visual information into verbal representations, and thus bridges between non-verbal and verbal item characteristics.

Overall, it has long been assumed in metacognitive research that item-based subjective experience (level 3) typically dominates task characteristics (level 2). For instance, Rabinowitz et al. (1982) found that people differentiate between related and unrelated word pairs to be memorized (an item-based cue), but pay less heed to the imagery strategy they were asked to apply (a task characteristic) despite this strategy being highly effective in improving memory performance (see also Koriat, 1997). This dominance of item-level experiences over task-level characteristics has been found with various memorization study designs (e.g., spaced vs. massed rehearsals; Logan et al., 2012), and also in reading comprehension (e.g., computer vs. paper; Ackerman & Lauterman, 2012) and in reasoning (e.g., solving syllogisms with vs. without training; Prowse et al., 2009). Notably, in most of these studies the researchers assumed that people hold implicit theories (or beliefs) about task characteristics (e.g., the benefits of rehearsal for memorization; Kornell & Son, 2009), but do not take these theories sufficiently into account in their metacognitive judgments. The present study examined the effects of task-level characteristics on metacognitive judgments when the task-level characteristic is explicitly stated, rather than inferred from implicit theories.

When people face a problem, they have both prior expectations based upon the context of the problem, which entail top-down inference processes (e.g., multiple choice vs. open-ended test formats), and item-based cues derived from encountering the problem, which entail bottom-up inference processes (e.g., font size, image sharpness, familiarity). We examined whether one set of processes (top-down or bottom-up) dominates the other when people have access to both sources of information while making a quick iJOS. Such integration of different sources of information has been recently studied in memorization tasks (see Undorf et al., 2018), but has not yet been systematically examined in meta-reasoning contexts.

In the present study, each complete set of problems and its framing provide task-level heuristic cues, and each problem provides item-level cues. For each task, we included both solvable and unsolvable problems, and manipulated what we told participants about the proportions of each. We examined how iJOS reflects the integration between this task-level information and specific item characteristics chosen for each experiment (these will be explained in detail below).

Integrating prior expectations and experience-based heuristic cues

The lexical definition of expectations is “a strong belief that something will happen or be the case in the future” (Oxford English Dictionary). Expectations can be considered as prior beliefs that guide one’s interpretation of incoming streams of evidence (Sherman et al., 2015). In this study, we focus on expectations derived from information about the task obtained before one begins the solving attempt.

When inferring solvability, the interaction between prior expectations, based on information provided about the task, and experience-based heuristic cues, derived from attempting each item (problem), may take three forms: (A) reliance mainly upon prior expectations; (B) reliance mainly on item-based subjective cues; or (C) an integration of both. Some researchers argue that people’s cognitive resources are too limited to handle more than one cue at a time (Todd et al., 2012). There is evidence from memorization tasks that adults do integrate multiple heuristic cues when they judge their learning (Koriat & Levy-Sadot, 2001; Koriat et al., 2006; Undorf et al., 2018; Undorf & Bröder, 2020). Nevertheless, it is possible that under time constraints, as required for judging solvability based on a brief glance, they may rely on only one kind of cue (see Pachur & Bröder, 2013, for a review). If this is the case, do people rely upon expectations (possibility A) or upon item-based cues (possibility B)?

If expectations help people interpret incoming streams of evidence (Sherman et al., 2015), then efficient processing can be achieved by restricting perceptual inference in line with prior expectations. For example, in the context of a perceptual task, Sherman et al. (2015) asked participants to report the presence or absence of a near-threshold Gabor patch (a visual stimulus used to measure people’s vision threshold, comprised of blurry lines created by varying grey backgrounds). Expectations were manipulated by changing the probability that the Gabor patch would be present versus absent over blocks of trials (25%, 50% or 75% probability of target presence). Participants were asked to state whether the Gabor patch was present, and then to report their confidence (yes/no) in their response. Sherman et al. found that as the probability of target presence increased, participants were more likely to be confident in their perceptions that the Gabor patch was present. In the context of problem solving, Payne and Duggan (2011) also manipulated information about the probability that problems were solvable. They found lower confidence in unsolvable decisions when participants were informed that a higher proportion of problems were solvable. Together, the results of previous studies regarding effects of prior expectations on judgments of solvability suggest that unlike in judgments of learning, people base their meta-reasoning judgments mostly on their prior expectations. Notably, though, in those studies there was no time restriction for providing metacognitive judgments.

The third possibility is that problem-solvers do indeed integrate prior expectations and item-based heuristic cues (possibility C). This possibility is extensively discussed in decision-making research. Some researchers suggest additive or compensatory use of several cues (e.g., Brehmer, 1994; Einhorn et al., 1979). A prominent example is the diffusion models based on Ratcliff (1978). By these models, when facing two-alternative forced-choice tasks, the decision process begins at a starting point, usually called parameter z, and gradually advances toward one of two response boundaries. If the a priori starting point z is closer to one of the two boundaries than to the other, response bias is present. This starting point is influenced by prior expectations and thus can be experimentally manipulated, for example by providing probabilities of solvability (e.g., Arnold et al., 2015). Since iJOS is a type of two-alternative decision, either solvable or unsolvable, we can hypothesize that iJOS is adjusted to prior expectations in addition to information derived from each encountered stimulus.
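The role of the starting point can be illustrated with a discrete random walk. This is a simplified stand-in for the continuous diffusion process, not the Ratcliff (1978) model itself. The sketch below uses the classic gambler’s-ruin formula to compute the probability of ending at the upper (“solvable”) boundary. The function name, the boundary separation of 10 steps, and the step probabilities are illustrative assumptions and are not taken from the study.

```python
def prob_upper_boundary(start: int, boundary: int, p_up: float) -> float:
    """Probability that a +/-1 random walk starting at `start` reaches
    `boundary` before reaching 0 (gambler's-ruin formula).

    `start` plays the role of the starting point z (prior expectations);
    `p_up`, the chance of a step toward the upper boundary, plays the role
    of the evidence supplied by the item itself.
    """
    if not 0 <= start <= boundary:
        raise ValueError("start must lie between 0 and boundary")
    if not 0.0 < p_up < 1.0:
        raise ValueError("p_up must be strictly between 0 and 1")
    if p_up == 0.5:
        # No drift: the outcome depends only on the starting point.
        return start / boundary
    ratio = (1.0 - p_up) / p_up
    return (1.0 - ratio ** start) / (1.0 - ratio ** boundary)


# Hypothetical settings: 10 steps separate the two boundaries.
neutral = prob_upper_boundary(start=5, boundary=10, p_up=0.5)    # unbiased start
biased = prob_upper_boundary(start=7, boundary=10, p_up=0.5)     # start nearer "solvable"
combined = prob_upper_boundary(start=7, boundary=10, p_up=0.55)  # bias plus item evidence
```

In this toy version, moving the starting point toward the upper boundary raises the chance of a “solvable” response even when the item carries no evidence, and item evidence adds to that bias, which corresponds to possibility C.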

To examine which of the three possibilities fits the cue utilization that underlies iJOS, we applied data analysis methods derived from signal detection theory (SDT). By this theory, the criterion for judging a problem as solvable or unsolvable is initially set based upon prior expectations, and then adjusted by the evidence derived from item-based cues. Thus, this method allows exposing whether iJOS is grounded in prior expectations, reliance on item-based cues, or integration of both sources.

Overview of the study

To examine the effects of prior expectations and item-based cues on iJOS in a generalized manner, we used two problem-solving tasks: Raven’s matrices in Experiment 1 (Figure 1) and CRAs in Experiment 2. Each CRA item includes three words, and participants’ task is to find a fourth word which generates a compound word or a phrase with each of the given words (e.g., PINE, CRAB, SAUCE; the solution word is APPLE). In reporting both experiments we employ the same terminology, such that the task is the complete set of problems, an item is a single problem, and elements are the building blocks of each item: eight figures in a Raven’s matrix, and three words in a CRA. In both experiments, we sought to examine the effects on iJOS of (a) expectations based on information about the solvability rate in the whole task, and (b) specific item-level cues chosen for this study. We used nameability as the item-level cue for Raven’s matrices, and frequency of each word in a semantic corpus for CRAs. In both cases, the item-level cue properly applies to the elements of each item: the figures in the Raven’s matrices, and the words in the CRAs. Full details about these cues are provided below in the introduction to each experiment.

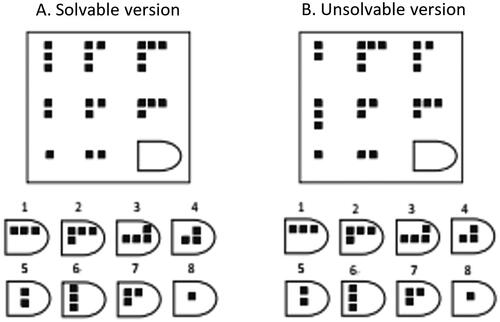

Figure 1. Example of an easy Raven’s matrix (Raven et al., 1993) in a solvable version (A) and an unsolvable version, in which two pairs of elements within the solvable version were switched (B).

We generated unsolvable versions of items in each task by manipulating their elements. Unsolvable Raven’s matrices were generated for Experiment 1 by switching the positions of elements in original Raven’s matrices, thus breaking the rules while keeping the visual elements identical to those in the solvable matrices (Figure 1). Unsolvable CRAs were produced by creating random word triads using words drawn from different solvable CRA items.

Both experiments had a two-phase structure similar to that of previous iJOS research (e.g., Burton et al., in press; Lauterman & Ackerman, 2019). In the first phase, participants provided an iJOS (yes/no) after brief exposure to each problem. In the second phase, they solved all problems without a time limit, and either provided a solution or chose the “unsolvable” option. Providing a solution indicated that the participant thought the problem to be solvable, that is, a positive final JOS. Choosing “unsolvable” indicated a negative final JOS. Above and beyond our main goal of examining cue integration between task-level and item-level cues, we also expected to replicate Lauterman and Ackerman’s (2019) findings that iJOS would have predictive power for solving time (response time) in the second phase and for final JOS.

Expectations were manipulated between participants by varying the prior information provided about the proportion of solvable problems in the item pool (33% or 66% in Experiment 1; 33%, 50%, or 66% in Experiment 2). The actual solvability rate was 50% for all conditions. In preparing the stimuli we took pains to ensure that both item-based cues (figure nameability in Raven’s matrices and word frequency in CRAs) characterized the stimuli orthogonally to solvability, such that all levels of nameability and word frequency were comparably represented in solvable and unsolvable item sets for each participant. We expected to find a similar pattern for prior expectations and item-based cues in both tasks, with two main effects: the higher the expected solvability rate, the higher the chance of a positive iJOS; and the greater the nameability or word frequency, the higher the chance of a positive iJOS. To facilitate consideration of alternative explanations, we also examined whether nameability and word frequency make unique contributions to iJOS even after controlling for response time, a dominant cue for metacognitive judgments (e.g., Thompson et al., 2013). Finally, to examine the possibility that the role of expectations shifted as participants progressed through the task (e.g., with the effects of expectations most prominent early in the task), we tested for effects of the items’ serial order.

Experiment 1

In Experiment 1 we had two main goals. First, we examined whether the nameability of visual stimuli serves as an item-based cue for iJOS in non-verbal problems. Second, we sought to identify unique contributions of top-down expectations and the bottom-up item-based cue of nameability.

We used Raven’s matrices in both their standard and advanced versions (Raven et al., 1993). Each problem consists of elements organized in a 3 × 3 matrix, with a blank space in the bottom right corner. See Figure 1, panel A, for an example. The solver’s task is to discern the rules governing the arrangement of the eight given elements, and thereby to identify the missing element among eight presented options. The underlying processes applied when solving Raven’s matrices are widely recognized as general rather than specific to this particular test (Carpenter et al., 1990; Raven, 2000).

Lauterman and Ackerman (2019) also examined iJOS using Raven’s matrices. They created an unsolvable version of each original matrix by switching the locations of elements so as to break the rules governing the matrices’ rows and columns. They generated two sets of fifteen matrices balanced by difficulty. Each participant received one set in its solvable version and the other in its unsolvable version, with the individual items intermixed. Participants first viewed each of the thirty matrices for up to four seconds (the mean was 2 s), without the answer options, and indicated for each matrix whether they judged it to be solvable or unsolvable. Lauterman and Ackerman found that participants were sensitive to the difficulty of the original matrices even when the rules were broken. That is, participants tended to judge the difficult matrices as unsolvable and the easy matrices as solvable, regardless of their actual solvability. In addition, they found that iJOS was predictive of several aspects of the subsequent solving phase: a positive iJOS predicted more time investment in the solving phase; a tendency for a positive final solvability decision (final JOS) regardless of objective solvability; and higher confidence in the final answer for solvable matrices. A secondary goal of the present study was thus to replicate this predictive value of iJOS for the solving phase.

In the present study, we hypothesized that people use the nameability of the elements as an item-based heuristic cue for the solvability of the matrices. Nameability can be thought of as the degree to which an element used in a matrix resembles something recognizable and therefore nameable (e.g., a rectangle). More formally, we define nameability as a measure of the ease with which a presented shape can be recoded into a verbal representation. Classic memory research distinguishes between two interconnected modes of coding for any information item: visual and verbal, where stimuli from one domain can be recoded into representations from the other (Brandimonte & Gerbino, 1996; Paivio, 1971). The verbal representation code is more available for use than the visual code, an advantage also found in problem-solving tasks (e.g., Brandimonte & Gerbino, 1993; DeShon et al., 1995). This inequality between the verbal and visual codes thus generates an advantage for nameable over unnamable shapes (Boucart & Humphreys, 1992). Specifically, in order to solve a Raven’s matrix, one must verbalize the laws governing the columns and rows, making nameability a helpful characteristic. Indeed, the literature on Raven’s matrices suggests that performance is better when the elements within matrices are nameable, compared with unfamiliar or non-representational shapes which are harder to describe (Meo et al., 2007; Roberts et al., 2000).

We used a norming test to generate two measures of nameability for each matrix—a whole-matrix score, based on seeing all eight elements of the matrix together, and a figure-by-figure score, where each element is viewed in isolation and these ratings are then averaged. More precisely, for the whole-matrix score we presented the whole matrix and asked “How easy it is to give a name to the figures included in this matrix?” For the figure-by-figure score we presented each of the eight elements of the matrix separately, intermixed with elements from other matrices, and asked in each case “How easy it is to give a name to this figure?” We then averaged the relevant eight assessments to generate a figure-by-figure nameability score for each matrix.

In the main experiment, we manipulated prior expectations as to the percentage of solvable matrices such that either one-third or two-thirds of the items were said to be solvable. A third condition, where participants were correctly informed that half the items were solvable, was taken from the data collected for Lauterman and Ackerman (2019). We hypothesized that participants told that a higher percentage of matrices were solvable would be more likely to judge any given matrix as solvable (meaning, in terms of SDT, that their decision criterion would be lower; see details below). We also hypothesized that response time would be negatively correlated with iJOS, but would leave a unique effect for expectations and nameability. We tested our hypotheses using hierarchical logistic regression, examining whether iJOS was associated with prior expectations (possibility A above), nameability (possibility B), or both (possibility C), above and beyond the correlation between iJOS and response time.

Method

Participants

An a priori power analysis with G*Power indicated that a total sample of 76 participants would be sufficient to achieve statistical power of .80 (1 − β = .80) to detect a moderate effect size (f² = .15) for a multiple-regression analysis with three predictors, one between-participants variable with three groups and two within-participants variables (Faul et al., 2007). The full sample comprised 107 participants before exclusions, as detailed below.

Seventy-one undergraduate students from the Technion took part in the current data collection (54% female; Mage = 25.0, SD = 2.9) in exchange for course credit. All participants provided informed consent. They were randomly assigned to one of two groups based on their manipulated prior expectations about the percentage of solvable matrices, one-third (N = 35) or two-thirds (N = 36). In Lauterman and Ackerman’s (2019) study, one group went through exactly the same procedure, except that they were informed that half (50%) the matrices were solvable. We included that group as a control group for comparison (N = 36, 53% female; Mage = 25.0, SD = 2.4).

The real percentage of solvable matrices was 50% for all three groups. Planned exclusions were combinations of two of the following criteria: time spent on the task more than 2 SD below or above the mean; providing fewer than 80% of the iJOS responses on time; insufficient variability in supplied iJOS (more than 80% with the same iJOS); and a rate of correct answers more than 2 SD below the mean.

Materials

We used thirty Raven’s matrices (Raven et al., 1993), including twenty-five from the Advanced Progressive Matrices (APM) Set II (matrix numbers 6–29 and 31), and five from the Standard Progressive Matrices (SPM, matrix numbers 29–33). These matrices were chosen by Lauterman and Ackerman (2019) to generate a large range of success rates, 17%–96%. Another eight matrices were used for instruction and practice.

As described above, in a norming test for the present study, we collected two subjective nameability scores for the matrices: a whole-matrix score, reflecting participants’ impression of the elements’ nameability after seeing the matrix as a whole; and a figure-by-figure score, where we averaged nameability scores provided for each of the eight elements in isolation. This procedure enabled us to explore whether the iJOS was more tightly associated with the nameability of elements as gleaned from a global impression of the whole matrix, or from a more focused impression of each figure. Norming-test participants were drawn from the same population as the main experiment but did not participate in the main experiment (N = 35, 43% female, Mage = 25.0 years). All norming-test participants provided both whole-matrix and figure-by-figure scores for ten matrices, comprising one set of matrices out of three, with each set seen by 11 or 12 respondents. The nameability ratings were elicited in two phases. First, the ten matrices were displayed one by one, as in the main experiment, but without the answer options. Participants were asked to assess how easy it was to give a name to the figures included in that matrix on a scale between 1 and 7. The instructions explicitly solicited participants’ global impression regarding ease of naming the elements within each matrix. In the second phase, the eighty elements contained in the ten matrices of the previous phase were displayed one by one in a random order. Participants were asked how easy it was to give a name to each figure, again on a 7-point Likert scale.

For each matrix, the final whole-matrix nameability score was defined as the mean of the scores provided by all participants who saw that matrix (M = 4.28, min = 2.20, max = 6.00). Inter-rater reliability was α = .74, .80, and .88 for the three sets. To calculate the figure-by-figure scores for each matrix, we first calculated the mean rating for each element, and then averaged the mean scores for the eight elements included in each matrix (M = 4.85, min = 2.58, max = 6.54). The two scores were correlated (r = .71, p < .0001), but the whole-matrix nameability scores were significantly lower than the figure-by-figure scores, t(29) = 4.00, p < .0001, d = 0.56.
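The two scoring rules described above can be sketched in a few lines of Python. The ratings below are made up for illustration, and the function names are hypothetical; only the averaging logic follows the text.

```python
from statistics import mean


def whole_matrix_score(ratings):
    """Mean of the 1-7 ratings given by all raters who saw the whole matrix."""
    return mean(ratings)


def figure_by_figure_score(element_ratings):
    """Average the per-element mean ratings across a matrix's elements.

    `element_ratings` holds one list of rater scores per element.
    """
    return mean(mean(ratings) for ratings in element_ratings)


# Made-up ratings for one matrix: three raters, eight elements.
whole = whole_matrix_score([4, 5, 3])
by_figure = figure_by_figure_score([
    [6, 5, 7], [4, 4, 5], [6, 6, 6], [3, 4, 2],
    [5, 5, 5], [7, 6, 5], [4, 3, 5], [6, 6, 6],
])
```

Averaging element means first, rather than pooling all ratings, gives each element equal weight even if elements were rated by different numbers of respondents.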

For each original matrix, Lauterman and Ackerman (2019) created an unsolvable version by randomly choosing four of the eight elements and switching their locations, breaking the rules of the matrix. See example in Figure 1, panel B. Under this procedure, the solvable and unsolvable versions contained the same eight elements, meaning the nameability ratings for each solvable version applied equally to its parallel unsolvable one. The original matrices were divided into two sets balanced for difficulty and the two nameability scores. Each participant received solvable matrices from one set and unsolvable matrices from the other set, counterbalanced across participants.
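A minimal sketch of this kind of element switching is shown below. It assumes the four chosen elements are swapped as two pairs, as the Figure 1 caption describes; the function name, the element labels, and the seed are hypothetical. The sketch does not check that the swap actually breaks the matrix rules, which would require knowledge of each matrix.

```python
import random


def make_unsolvable(elements, rng):
    """Return a copy of `elements` with two randomly chosen pairs swapped.

    `elements` lists the eight given cells of a matrix in reading order.
    Four distinct positions are drawn and swapped pairwise, so the same
    eight elements appear in a different arrangement.
    """
    if len(elements) != 8:
        raise ValueError("expected the eight given elements of a 3 x 3 matrix")
    a, b, c, d = rng.sample(range(8), 4)
    switched = list(elements)
    switched[a], switched[b] = switched[b], switched[a]
    switched[c], switched[d] = switched[d], switched[c]
    return switched


# Hypothetical labels standing in for the eight figures of one matrix.
original = ["e1", "e2", "e3", "e4", "e5", "e6", "e7", "e8"]
unsolvable = make_unsolvable(original, random.Random(0))
```

Because only positions change, any element-level property such as nameability is identical for the two versions.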

Procedure

The experiment was administered in groups in a small computer lab. At the start, participants read instructions for solving Raven’s matrices and practiced on three matrices. They were then informed of the supposed proportion of solvable matrices, either one-third or two-thirds, depending on their experimental group.

The main experiment started with an iJOS block in which all the matrices were presented one by one. Each matrix was displayed for up to four seconds, without the answer options, as done by Lauterman and Ackerman (2019). This time limit allowed participants to glean a first impression of the figures but not to solve the problems. Participants clicked a “Yes” button if they judged the matrix to be solvable and a “No” button otherwise. They were instructed to make their decision quickly, based on their “gut feeling,” without trying to solve the matrix. They practiced this task on four matrices, half of them unsolvable. If a participant did not respond in the allotted time, the message “Too slow” appeared, and the program displayed the next matrix without collecting a response for the missed one.

In the second phase, all thirty matrices were presented again, in the same order. Each matrix, whether solvable or unsolvable, was displayed with the eight original Raven’s solution options along with an “Unsolvable” button. After choosing an answer, participants reported their confidence that their answer, whether a solution option or “Unsolvable,” was correct, on a 0 to 100% scale. There was no time limit for this phase. The entire procedure, including instructions and practice, took about 45 min.

Results and Discussion

Twelve participants were excluded from the analyses based on the planned exclusion criteria (9 for investing time more than 2 SD below the sample’s mean; 2 for investing time more than 2 SD above the sample’s mean; 1 for providing fewer than 80% of the iJOS responses on time). Thus, the analyses were based on 30 participants in the one-third condition, 29 participants in the two-thirds condition, and 36 in the half condition taken from Lauterman and Ackerman (2019). An average of 1.5 iJOS responses per participant were not provided on time and were excluded from the data. See descriptive statistics in Table 1.

Table 1. Experiment 1: Means (SD) by objective solvability, experiment phase, and manipulated expected solvability rate for each group.

Decision criterion and discrimination

A central question in the present study is whether different expectations regarding the distribution of solvable and unsolvable matrices changed participants’ discrimination between the two, and/or biased the decision criterion for solvability. To address this question, we conducted analyses guided by SDT, using the following variables: hit rate (H), the number of solvable matrices correctly judged as solvable divided by the total number of solvable problems; false alarm rate (F), the number of unsolvable matrices incorrectly judged as solvable divided by the total number of unsolvable problems; the individual’s decision criterion (c), the threshold for declaring a matrix to be solvable/unsolvable; and discrimination (d′), the extent to which the participant correctly distinguished between solvable and unsolvable matrices (see Footnote 2). The results of the SDT analysis are presented in Table 2.
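The four SDT variables defined above can be illustrated with a minimal Python sketch. It uses the standard equal-variance formulas, d′ = z(H) − z(F) and c = −0.5 · [z(H) + z(F)]. The function name `sdt_indices` and the log-linear correction for extreme rates are assumptions made for illustration and are not taken from the present study.

```python
from statistics import NormalDist


def sdt_indices(hits, n_solvable, false_alarms, n_unsolvable):
    """Return (H, F, d_prime, c) for one participant's iJOS responses.

    hits: solvable problems judged "solvable"
    false_alarms: unsolvable problems judged "solvable"
    """
    # Log-linear correction (an illustrative assumption) keeps both rates
    # strictly between 0 and 1 so that the z-transform stays finite.
    h = (hits + 0.5) / (n_solvable + 1)
    f = (false_alarms + 0.5) / (n_unsolvable + 1)

    z = NormalDist().inv_cdf        # inverse of the standard normal CDF
    d_prime = z(h) - z(f)           # discrimination
    c = -0.5 * (z(h) + z(f))        # decision criterion
    return h, f, d_prime, c


# Hypothetical participant: 15 solvable and 15 unsolvable matrices.
h, f, d_prime, c = sdt_indices(hits=11, n_solvable=15,
                               false_alarms=8, n_unsolvable=15)
```

Under these formulas, a negative c reflects a liberal criterion (a tendency to respond “solvable”), and a d′ above zero reflects discrimination between solvable and unsolvable items.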

Table 2. Means (SD) for Signal Detection Theory (SDT): False alarms, hits, c (decision criterion) and d′ (discrimination) for each expected solvability rate in phase 1 of Experiment 1.

Prior expectations significantly affected participants’ threshold for judging the matrices as solvable. The decision criterion (c) was lower when participants expected two-thirds of the matrices to be solvable relative to half, and lower again in the half condition relative to the one-third condition. Notably, discrimination (d′) did not differ across the conditions, F(2, 92) = 1.26, MSE = 0.33, p = .30, ηp² = 0.03, and across the entire sample it was larger than zero, t(94) = 4.13, p < .0001, d = 0.42. These findings indicate that participants differentiated between solvable and unsolvable matrices, despite having time only for a brief glance at the matrices.

Integrating expectations with nameability

Next, we examined whether participants weighed expectations and nameability separately (possibility A and possibility B above) or together (possibility C above). Given the nested nature of the data, we used a two-level hierarchical logistic regression model (level 1: participants; level 2: matrices) using the R package lme4 (Bates et al., Citation2015; R Development Core Team, Citation2008). The independent variables were objective solvability (dummy coded: solvable 1, unsolvable 0), prior expectations (one-third/half/two-thirds, where “half” is the baseline category; see Footnote 3), and the whole-matrix nameability score; all independent variables were used as fixed-effect predictors. The dependent variable was iJOS (yes/no). In all models the random intercepts for participants allowed for individual differences in the means of the dependent variable. Significance values for interactions were generated using likelihood-ratio tests comparing the full statistical model against a reduced model, without the interaction effect.
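For orientation, the main-effects model described above can be written out as follows. This is a sketch of one plausible reading of the description, not the authors’ exact specification: the symbol names, the indexing (i for matrices, j for participants), and the normality assumption for the random intercepts are ours.

```latex
\operatorname{logit} P(\mathrm{iJOS}_{ij} = 1)
  = \beta_0 + u_j
  + \beta_1\,\mathrm{Solvable}_{ij}
  + \beta_2\,\mathrm{OneThird}_{j}
  + \beta_3\,\mathrm{TwoThirds}_{j}
  + \beta_4\,\mathrm{Nameability}_{i},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2)

% Likelihood-ratio test for the interaction terms, where \ell denotes the
% maximized log-likelihood of the full and the reduced model:
\chi^2 = 2\left(\ell_{\mathrm{full}} - \ell_{\mathrm{reduced}}\right)
```

Here the “half” condition is the reference category, so β₂ and β₃ express the one-third and two-thirds groups relative to it, and u_j is the random intercept for participant j.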

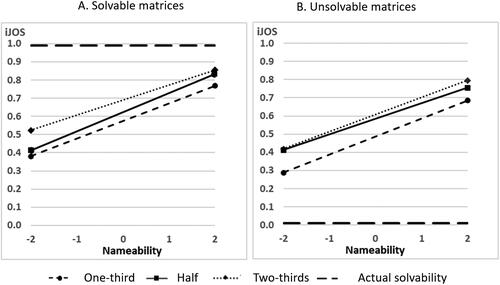

In accordance with the SDT results, we found a main effect of objective solvability, b = 0.33, SE = 0.08, p < .0001, indicating more positive iJOS ratings for solvable than for unsolvable matrices (see simple effects in ) and differences between all three groups in predicting iJOS (one-third compared to the half condition: b = −0.34, SE = 0.11, p = .002; two-thirds compared to the half condition: b = 0.24, SE = 0.11, p = .03).

The unique contribution of this analysis is considering the role of nameability. Indeed, the whole-matrix nameability score had a main effect on iJOS, b = 0.43, SE = 0.04, p < .0001, indicating that the probability of a positive iJOS was greater for matrices whose elements were perceived as more nameable. Allowing for an interaction with the independent variables did not improve the model fit, χ² = 7.45, p = .11. See Figure 2. Notably, the figure-by-figure nameability scores, used instead of whole-matrix nameability scores, did not predict iJOS, b = 0.04, SE = 0.04, p = .29. These results support the prediction that both prior expectations and perceived nameability affect iJOS as independent heuristic cues, at least when nameability of the matrix elements is gleaned from a single global impression.

Figure 2. Experiment 1: Probability of positive initial Judgment of Solvability (iJOS) predicted by expected percentage of solvable matrices (between participants) and whole-matrix nameability score (within participants) for solvable matrices (panel A) and unsolvable matrices (panel B). Actual solvability is constant in each panel and is drawn to emphasize iJOS bias.

Order effects

We expected that the probabilities for “solvable” and “unsolvable” answers would be constant throughout the first phase. To test this, we added the serial order of each matrix to the HLM model. Indeed, order had no effect, b = −0.0002, SE = 0.005, p = .96. Notably, no main effects changed in this model, suggesting that the solvability rate information provided at the task outset stayed active in participants’ minds, and that there was no effect of accumulating experience with the task.

Fluency

We interpret our results as showing that nameability operates as an experience-based cue, such that matrices whose elements are readily named are processed more fluently than those with less-nameable elements. If this fluency is expressed in response time, including this measure in the model should cancel out nameability’s predictive value for iJOS. Indeed, adding response time to the model yielded a main effect, b = −0.12, SE = 0.05, p = .03. However, the predictive power of nameability was robust, b = 0.42, SE = 0.04, p < .0001. We conclude that nameability takes effect above and beyond the aspect of fluency encompassed in response time.

Nameability and difficulty

So far, we have shown that nameability is predictive of iJOS. Lauterman and Ackerman (Citation2019) also found that iJOS was associated with the objective difficulty of the original matrices, even with respect to iJOSs provided for the unsolvable versions. A correlation between nameability and the difficulty of the original matrix might explain their finding. We tested this possibility using the data of Lauterman and Ackerman (Citation2019). Whole-matrix nameability scores were strongly correlated with the matrices’ difficulty scores (r = .663, p < .0001), meaning that matrices perceived to generally have easy-to-name elements were easier to solve than those rated to have lower nameability. In line with the regression results above, the figure-by-figure nameability scores were not correlated with original matrix difficulty (r = .187, p = .30). Thus, although the two nameability scores were correlated, only the whole-matrix scores predicted iJOS and were also correlated with difficulty. See the General Discussion.

When we added objective difficulty to the regression model, the effect of nameability became only marginally significant, b = 0.11, SE = 0.06, p = .056, while the effect of objective difficulty was significant, b = 0.50, SE = 0.06, p < .001. Since difficulty is not a heuristic cue that can be perceived quickly, this result suggests that Raven’s matrices are characterized by other valid cues that can be perceived quickly and that are also correlated with difficulty, in addition to whole-matrix nameability.

The predictive power of iJOS for the reasoning phase

Ackerman and Thompson (Citation2017) referred to iJOS as leading (or misleading) effort regulation in the solving process. Lauterman and Ackerman (Citation2019) indeed found iJOS to be predictive of solving time, final JOS, and confidence in provided answers (either a solution or “unsolvable”) in the group which correctly knew that half the problems were solvable (see also Burton et al., in press). We examined the replicability of the predictive power of iJOS in the two groups added as part of the present experiment. Indeed, we replicated the findings that in unsolvable matrices a positive iJOS (“solvable”) predicted greater time investment than a negative iJOS. For solvable matrices, iJOS did not predict time investment. We also replicated the finding that positive iJOS predicted positive final JOS. We did not find predictive power of iJOS for confidence, as found by Lauterman and Ackerman (Citation2019); but notably, their finding was concealed when matrix difficulty was included in the model. See the detailed report of the results in the Appendix. It includes some contributions of the present study to understanding the predictive power of iJOS.

In sum, this experiment highlights the appreciable sophistication of how people quickly assess solvability, as at least two main sources of information are considered: prior information regarding the entire task, and item-based cues. In the present study, the item-based cues include both whole-matrix nameability, which is based on the given stimuli, and response time, which reflects other aspects of the assessment process. The results are congruent with the hypothesis that a priori expectations bias the decision criterion, while nameability strengthens the impression of solvability for solvable items. We also replicated previous findings of Lauterman and Ackerman (Citation2019) showing predictive power of iJOS for measures of the solving process—time investment, final JOS, and confidence in the final answer for items judged to be solvable.

Experiment 2

In Experiment 2, we used the CRA task (Bowden & Beeman, Citation1998, Bowden & Jung-Beeman, Citation2003) to compare the results of Experiment 1 to those obtained with a more traditional, verbal task. In the CRA task, participants are presented with triads of isolated words. In each solvable triad, all three words are commonly associated with the same fourth word so as to form a compound word or a two-word phrase (e.g., PIPE—WOOD—HOT: STOVE). In this study, unsolvable triads comprised random collections of three words with no common association. Studies suggest that both the solving process and iJOS in CRA tasks are based on unconscious spreading activation of semantic associations (Bolte & Goschke, Citation2005; Bowers et al., Citation1990; Kenett & Faust, Citation2019; Sio et al., Citation2013; Smith et al., Citation2013). In particular, fluent activation of semantic knowledge increases the chance that a triad will be judged as solvable, meaning that fluency is used as an experience-based cue for iJOS. Undorf and Zander (Citation2017) studied fluency using a similar paradigm in the context of memorization. Notably, while a triad of words can be unsolvable as a CRA problem, regardless of the invested effort, memorizing the same triad is a matter of investing enough time (Ackerman & Beller, Citation2017).

In Experiment 2, as in Experiment 1, we examined whether both prior expectations and item-based subjective cues predict iJOS. Prior expectations were manipulated in the same way as in Experiment 1. This time we collected new data for all three groups, telling them that 33%, 50%, or 66% of the problems were solvable.

For this experiment, we used word familiarity as the item-based cue, where familiarity was operationalized by the frequency with which each word is used in the language. This verbal cue was chosen because it is similar to nameability in being associated with the problem’s elements, rather than with characteristics of the problem as a whole. Metacognitive research has confirmed the use of familiarity as a heuristic cue (Koriat & Levy-Sadot, Citation2001; Markovits et al., Citation2015). Fiacconi and Dollois (Citation2020) found a small but reliable effect of word frequency in a meta-analytic review of memorization studies, where high-frequency words were judged as more likely to be remembered than low-frequency words. In the case of CRA problems familiarity was expected to be a misleading cue, since finding the common word that relates to the given word triad is more challenging when the words are used in many contexts and associations (Olteţeanu et al., Citation2019).

Word frequency was obtained from the Word-Frequency Database for Printed Hebrew (Frost & Plaut, Citation2005), which supplies the mean occurrence of specific Hebrew words per million printed words. We hypothesized that word frequency would affect fluency in an experience-based manner, similarly to the nameability of figures in Raven’s matrices. We dissociated familiarity and solvability by using unsolvable problems matched in familiarity to solvable problems. This way, familiarity was necessarily non-predictive of solvability. We used a similar set of analyses and expected to find similar results to Experiment 1, with familiarity instead of nameability.

Method

Participants

A power analysis like the one conducted for Experiment 1 suggested 76 participants as the minimal sample size. Ninety-nine undergraduate students from the Technion participated in the study (47% female; Mage = 25.0, SD = 2.6) in exchange for course credit or monetary payment (about $10). All participants reported speaking Hebrew at a mother-tongue level. They were randomly assigned to one of three groups, which were told that the proportion of solvable problems in the set was, respectively, one-third (N = 30), half (N = 33), or two-thirds (N = 36). The actual solvable rate was 50% of the problems for all groups. The exclusion criteria were as in Experiment 1.

Materials

The thirty Hebrew CRA problems used by Ackerman (2014; Experiments 2–4) and Ackerman et al. (Citation2020; Experiment 3a) were used for this study. Success rates in these problems were in the range of 20%–80%. Frequency scores for each word were taken from the Word-Frequency Database for Printed Hebrew (Frost & Plaut, Citation2005) and normalized to the range −0.35–7.97. For each triad we calculated the mean frequency score for its three words (M = 0, SD = 0.59, Min = −0.31, Max = 2.74).
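The familiarity score per triad can be sketched in Python as follows. The sketch assumes that the normalization was a z-score across the word pool, which is suggested by the z-score axis label of Figure 3 but is not spelled out here. The function names and the example frequencies are hypothetical placeholders, not values from the Frost and Plaut database.

```python
from statistics import mean, pstdev


def standardize(freq_per_million):
    """Map {word: occurrences per million} to {word: z-score} over the pool."""
    mu = mean(freq_per_million.values())
    sigma = pstdev(freq_per_million.values())   # population SD of the pool
    return {w: (f - mu) / sigma for w, f in freq_per_million.items()}


def triad_familiarity(triad, z_scores):
    """Mean standardized frequency of the three words in a triad."""
    return mean(z_scores[w] for w in triad)


# Hypothetical word pool with made-up frequencies (illustration only).
pool = {"w1": 12.0, "w2": 3.5, "w3": 250.0, "w4": 40.0, "w5": 7.0, "w6": 95.0}
z = standardize(pool)
score = triad_familiarity(("w1", "w2", "w3"), z)
```

A single very frequent word pulls the triad mean upward, which would be consistent with the right-skewed range of triad scores reported above.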

The thirty problems were divided into two sets balanced in mean frequency score. The sets were also balanced for difficulty based on success rates in Ackerman et al. (Citation2020) with a sample from the same population. For each participant, one set served as the solvable problems. The words in the second set were used to create random triads as the unsolvable problems, after verifying that each generated unsolvable triad indeed had no common association. The role of the two sets was counterbalanced across participants.
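One way to generate the random triads is sketched below. It reshuffles the words of one set until no generated triad reproduces an original solvable triad. This automated check is weaker than the verification described above: confirming that a triad has no common association still requires human judgment. The function name, the seed parameter, and the placeholder words are assumptions for illustration.

```python
import random


def make_unsolvable_triads(solvable_triads, seed=0):
    """Recombine the words of solvable triads into random triads.

    Reshuffles until no generated triad contains exactly the same three
    words as an original triad. It does NOT check for other common
    associations; that step needs manual verification.
    """
    if len(solvable_triads) < 2:
        raise ValueError("need at least two triads to recombine words")
    rng = random.Random(seed)                       # seeded for reproducibility
    words = [w for triad in solvable_triads for w in triad]
    originals = {frozenset(t) for t in solvable_triads}
    while True:
        rng.shuffle(words)
        triads = [tuple(words[i:i + 3]) for i in range(0, len(words), 3)]
        if all(frozenset(t) not in originals for t in triads):
            return triads


# Hypothetical placeholder words standing in for one set of CRA triads.
example_set = [("a1", "a2", "a3"), ("b1", "b2", "b3"),
               ("c1", "c2", "c3"), ("d1", "d2", "d3")]
unsolvable = make_unsolvable_triads(example_set, seed=1)
```

Because the unsolvable triads reuse the same words as the set they were drawn from, the word pool is identical across item types by construction.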

Procedure

The procedure resembled that of Experiment 1 except for the use of CRA problems instead of Raven’s matrices. At the beginning of the session, participants read instructions for solving CRA problems and practiced on two problems. They were then informed of the supposed proportion of solvable problems for their assigned group—either one-third, half, or two-thirds.

In the first phase, following Ackerman and Beller (Citation2017), participants had two seconds to provide an iJOS for each problem. This time limit allowed participants to read the words, but not to solve the problems. Participants were first instructed how to judge solvability, as was done in Experiment 1, and practiced this task on two triads. Then the thirty triads were displayed one by one. If participants did not respond in the allotted time, a message reading “Too slow” appeared on the screen. The program then moved on to the next triad without collecting a response for the missed problem.

In the second phase, all thirty triads were presented again, in the same order. Participants were asked to write the fourth word that formed a valid phrase with each of the three displayed words, or to click “Unsolvable.” After choosing a response, participants reported their confidence, as in Experiment 1. The entire procedure took about 30 min.

Results and Discussion

Based on the planned exclusion criteria, nine participants were excluded from the analyses (3 for spending time more than 2 SD below the sample’s mean; 4 for providing fewer than 80% valid iJOSs; 2 for particularly low success rates, more than 2 SD below the sample’s mean success rate). Thus, the analyses were based on ninety participants: 26 in the one-third condition, 30 in the half condition, and 34 in the two-thirds condition. Descriptive statistics are presented in Table 3.

Table 3. Experiment 2: Means (SD) by objective solvability, experiment phase, and manipulated expected solvability rate for each group.

As a manipulation check we examined whether the inclusion of common words indeed increased the difficulty of CRA problems. We looked at the solvable CRAs only and used a mixed logistic regression to estimate the effect of familiarity (mean normalized frequency of the three words in each triad) on the rate of correct solutions. Indeed, familiarity had a negative effect on success rates, b = −0.59, SE = 0.14, p < .0001, meaning fewer correct solutions were produced as the triads’ words were more familiar.

We analyzed the data in a similar manner to that employed in Experiment 1, starting by using SDT to look at the effect of expectations on iJOS. Following that, we examined whether participants’ iJOS would incorporate both prior expectations and familiarity.

Decision criterion and discrimination

As in Experiment 1, we used SDT to examine whether the iJOS decision criterion (c) and discrimination (d′) between solvable and unsolvable problems differed between the prior expectations groups. See Table 4. A one-way ANOVA comparing c for the three conditions revealed a significant effect, F(2, 87) = 8.90, MSE = 0.87, p < .001, ηp² = 0.17. Post-hoc tests revealed that c was significantly higher in the one-third condition than in the half condition, p = .01, d = 0.81, meaning that participants who expected more problems to be solvable had a lower threshold for judging the CRAs as solvable. There was no significant difference between the half and two-thirds conditions, p = .3, d = 0.3. A one-way ANOVA comparing d′ between the three prior expectations conditions revealed no significant differences, F < 1, and across the entire sample d′ was larger than zero, t(87) = 2.62, p = .01, d = 0.28. Thus, as in Experiment 1, discrimination was equivalent for all three prior expectations conditions. Overall, iJOS discriminated between solvable and unsolvable items.

Table 4. Means (SD) for Signal Detection Theory (SDT): False alarms, hits, c (decision criterion) and d′ (discrimination) for each expected solvability rate in phase 1 of Experiment 2.

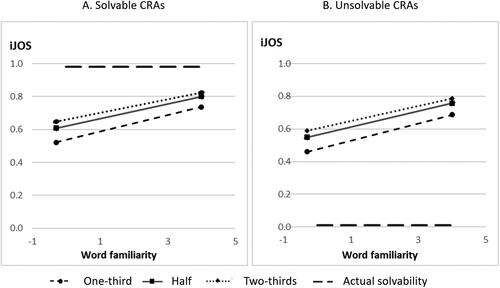

Turning to our hypothesis that familiarity would serve as an item-based cue for iJOS above and beyond prior expectations, as in Experiment 1, we fitted a two-level hierarchical logistic regression model (level 1: participants; level 2: CRAs). Objective solvability, prior expectations (one-third/half/two-thirds), and familiarity score were the independent variables. As in the SDT analysis, solvability, b = 0.25, SE = 0.08, p = .004, and prior expectations had main effects on iJOS, with the one-third condition predicting a lower iJOS rate than the half condition, b = −0.35, SE = 0.13, p = .005, and no significant difference between the half and the two-thirds conditions, b = 0.17, SE = 0.12, p = .10.

Familiarity had a main effect as well, b = 0.22, SE = 0.08, p = .005, such that the probability of a positive iJOS was greater for CRAs assembled from more familiar words. Allowing for interactions did not improve the model fit, χ² = 5.95, p = .50. See Figure 3. These results suggest that participants took into consideration both prior expectations and the words’ familiarity, in a similar way to expectations and nameability in Experiment 1.

Figure 3. Experiment 2: Probability of positive initial Judgment of Solvability (iJOS) by expected percentage of solvable Compound Remote Associates (CRAs) and word familiarity (z-score) for solvable CRAs (panel A) and unsolvable CRAs (panel B). Actual solvability is constant in each panel and is drawn to emphasize iJOS bias.

Order effects

As in Experiment 1, serial order had no effect, b = −0.007, SE = 0.005, p = .16. Thus, judgments were made item-by-item in the verbal task as well. The main effects of objective solvability, prior expectations and familiarity did not change when serial order was included in the model.

Fluency

As in Experiment 1, adding response time to the model above yielded a negative main effect, b = −0.38, SE = 0.14, p = .008, while familiarity was still a significant predictor, b = 0.22, SE = 0.08, p = .006. Thus, the effect of familiarity was found above and beyond fluency operationalized as response time.

The predictive power of iJOS for the reasoning phase

As in Experiment 1, we examined the predictive power of iJOS for solving time, final JOS, and confidence in the answer. The detailed results appear in the Appendix. Overall, unlike with Raven’s matrices both in Lauterman and Ackerman (Citation2019) and in Experiment 1 of the present study, iJOS did not predict the solving process for CRAs, as it was not predictive of time investment, final JOS, or final confidence. Also unlike in Experiment 1, neither prior expectations nor familiarity predicted final JOS. See the General Discussion for possible reasons for this difference.

In sum, Experiment 2 generalized most findings of Experiment 1 regarding the cue integration underlying iJOS. As in Experiment 1, iJOS was predicted by prior information regarding the whole task and item-based heuristic cues. In both experiments iJOS discriminated between solvable and unsolvable problems. However, though secondary to this study, iJOS predicted time investment, final JOS, and to some extent also confidence in Experiment 1 but not in Experiment 2.

General Discussion

This research contributes to the emerging literature dealing with meta-reasoning in general, and specifically with heuristic bases for meta-reasoning judgments. This study focused on iJOS in the presence of unsolvable problems. Like other metacognitive judgments, iJOS is thought to be based on three levels of heuristic cues: 1) self-perceptions; 2) task characteristics; and 3) momentary experiences (Ackerman, Citation2019). We demonstrated the concurrent use of cues from level 2 and level 3 for this quick metacognitive judgment. In two experiments we manipulated the proportion of problems believed to be solvable in the entire task, as information eliciting top-down prior expectations (level 2). At the item level (level 3), we considered two item-based heuristic cues: nameability of elements in Raven’s matrices and familiarity of words in CRAs. We also examined whether these item-based cues made a unique contribution to iJOS above and beyond response time, which represented processing fluency.

The pattern of combining prior expectations with item-based cues was consistent across both experiments. In both task types, participants discriminated well between solvable and unsolvable problems. This robust reliability of the brief iJOS is appreciable.

Another finding common to both experiments was the effect of prior expectations on iJOS. In both experiments, the decision criteria across the groups pointed to a general tendency toward more “solvable” responses. Delving into differences between the groups revealed that participants adjusted their iJOS to what they were told about the proportion of solvable problems at the instructions stage, even though the same proportion of problems were objectively solvable. However, the half-solvable group did not significantly differ from the two-thirds group in Experiment 2.

Utilization of item-based cues was also consistent across both experiments. In both task types, the heuristic cues we considered were derived from the elements of each problem (figures within each matrix in Experiment 1; the words within each triad in Experiment 2), while the essence of the solution lay in the relations between the problem’s elements (rules governing the rows and columns of a Raven’s matrix; a common fourth word in CRAs). We designed the tasks such that both item-based cues, nameability and word frequency, were non-predictive of problems’ solvability, which was what the participants were asked about in the iJOS phase. Nevertheless, both cues had unique predictive values for iJOS. Thus, objective solvability, prior expectations, and the relevant item-based cue all generated significant contributions to iJOS. Moreover, none of these effects were concealed by fluency, operationalized by response time, although response time was a significant predictor of iJOS. This collection of findings highlights the sophistication encompassed in people’s quick initial impression regarding the solvability of problems. It also enriches recent findings about cue integration in non-pressured metacognitive judgments in a memory context (Undorf et al., Citation2018; Undorf & Bröder, Citation2020).

The reliability of iJOS

There is almost no evidence in the literature regarding the reliability of iJOS in non-verbal problems. Reber et al. (Citation2008) found that participants performed at chance levels when they had to judge the correctness of dot-pattern addition equations under a time limit of 600 msec, but performed at above-chance levels under a time limit of 1800 msec. Reber et al. concluded that this brief time allowed for the collection of enough valid information to assess the correctness of the equation (e.g., assessing the number of dots in one line and the number of lines), but participants still utilized symmetry as a (misleading) cue for correctness. Lauterman and Ackerman (Citation2019) found iJOS to be reliable when unsolvable Raven’s matrices were produced by switching the places of two elements of the original matrices, but not when four elements were switched. In Experiment 1 of the present study we used the Raven’s paradigm with four elements switched and found iJOS to be reliable, unlike Lauterman and Ackerman (Citation2019). Clearly, this discrimination is not devoid of errors, as participants produced a large number of mistaken iJOSs (see ). Nevertheless, this discrimination between solvable and unsolvable matrices was resistant to the inaccurate information provided about the proportion of solvable matrices. This finding suggests that participants collected enough valid information to perform at better-than-chance level even when they provided their iJOS in only 2.2 s on average. The mismatch between the two studies’ findings, even though the present study used the same stimuli and population as Lauterman and Ackerman (Citation2019), calls for future research to delve into the exact conditions that support discrimination above chance.

With CRAs the evidence is also inconsistent across studies. In previous studies, coherence judgments regarding CRAs (or the similar RAT problems) discriminated at above-chance level between solvable (coherent) and unsolvable (incoherent) problems (Bolte & Goschke, Citation2005; Maldei et al., Citation2019; Topolinski, Citation2014; Topolinski & Strack, Citation2009; Zander et al., Citation2016). In contrast, when participants were asked about the solvability of CRA problems, Ackerman and Beller (Citation2017) found no discrimination between solvable and unsolvable word triads. In particular, Ackerman and Beller found that this lack of discrimination was consistent regardless of whether participants were asked whether the problem was solvable or whether they were asked about their own ability to solve the problem. In the present study, in Experiment 2, when we assessed discrimination as done by Ackerman and Beller (Citation2017), we found discriminability. Thus, iJOS discriminability for both Raven’s and CRAs is inconsistent across highly similar studies. Future research is called for to shed light on the underlying processes involved.

The reliability of iJOS found here with Raven’s matrices and CRA problems may not share the same explanation. It is broadly agreed that a fast, “gut feeling” judgment regarding the coherence of word triads is based on mechanisms of spreading activation in the semantic net (Anderson, Citation1983; Kajic & Wennekers, Citation2015; Christensen & Kenett, Citationin press). It seems plausible that there is partial activation of the solution concept, among other related terms. Bolte and Goschke (Citation2008) generalized the semantic activation theory to a non-verbal task. They used the Waterloo Gestalt Closure Task (Bowers et al., Citation1990), in which participants view fragmented line drawings which either represent meaningful objects (coherent fragments) or cannot be connected into meaningful objects. Participants discriminated at above-chance level between meaningful and meaningless objects even if they did not recognize the target objects. Bolte and Goschke (Citation2008) concluded that an implicit perception of coherence in the gestalt figure guided discrimination. That is, even when the gestalt figure was not identified, it carried enough information to allow a reliable judgment. In our study, the unsolvable Raven’s matrices shared their elements with original solvable matrices. Thus, the existence of rules in the solvable matrices might generate a feeling of coherence that was absent in the shuffled elements within unsolvable matrices. This explanation is not based on semantic activation, but on holistic perceptual processes.

Nameability

In Experiment 1 we demonstrated the use of nameability as an item-based heuristic cue for a variety of metacognitive judgments: iJOS, final JOS, and final confidence. This finding contributes a new heuristic cue that has not yet been discussed in the context of meta-reasoning. Nameability carries the advantage of dual representation, both visual and verbal. However, this explanation is challenged by our finding that only one of the two nameability scores (the whole-matrix score, but not the figure-by-figure score) was predictive of iJOS. This finding points to the role of a general impression rather than the use of specific verbal codes. A possible reason for this difference may lie in the need for compatibility between the type of experience that serves as a cue and the type of outcome judgment called for (Vogel et al., Citation2020). It may be that as the Raven’s task calls for finding rules at the whole-matrix level, predictive cues are also assessed at the whole-matrix level. Future studies are called for to consider what aspect of nameability contributes to its predictive value for the various meta-reasoning judgments. Notably, we did not eliminate the possibility that participants in fact used other, similar, heuristic cues suitable for non-verbal problems, such as familiarity, or the frequency with which a shape is encountered in daily life or in other contexts. Still, such cues must correlate with the nameability of the matrix figures as gleaned from a global impression, and not from focused perusal of the individual elements. More generally, this study paves a way towards exposing heuristic cues underlying metacognitive judgments in non-verbal tasks, which have been severely understudied relative to semantic cues.

Reliability of experience-based heuristic cues

Notably, nameability is usually associated with ease of solving. Nameability has been found to be beneficial for success in several visual problem-solving tasks, including Raven’s matrices (Boucart & Humphreys, Citation1992; Brandimonte & Gerbino, Citation1993; Brandimonte et al., Citation1992; Schooler et al., Citation1993). We used both nameability and familiarity as misleading cues for solvability. Note, though, that for the CRAs, familiarity not only was non-predictive of solvability, but its actual effects run in the opposite direction: familiarity deceptively hints at an easy solving process, but in fact highly familiar words generate a large solution space to be scanned, which in turn makes the problem harder to solve. However, these cues were not manipulated, but tied to the chosen stimuli. As a result, their contribution is still correlational rather than a proof of causality (Ackerman, Citation2019). We call for future research to go further up the causality ladder, to strengthen our understanding of factors underlying iJOS and the solving processes that follow.

The predictive power of iJOS

The predictive power of iJOS differed between the two experiments. In Experiment 1, with Raven’s matrices, iJOS predicted measures of the solving process (time investment, final JOS, and, to some extent, final confidence), replicating the findings of Lauterman and Ackerman (2019). In Experiment 2, with CRAs, we did not find predictive effects of iJOS. Interestingly, the processes underlying iJOS generalized across the two paradigms, whereas its predictive value for the subsequent solving process differed, suggesting that the two stages differ in their metacognitive processes.

Raven’s matrices differ from CRA problems in two main respects. The former is a visual task, and the solution is based on analytic processing (see Mackintosh & Bennett, 2005, for a review), while the latter is a semantic task calling for creative processes (Kenett & Faust, 2019; Stevens & Zabelina, 2019; Zmigrod et al., 2015). The differences we found in the predictive power of iJOS might be explained by these characteristics, but this possibility should be examined further to clarify when iJOS predicts subsequent reasoning stages.

This study still leaves open questions regarding the mechanisms whereby information and experiences are integrated into metacognitive judgments. In the procedure we applied, we gave most participants incorrect information about the proportion of problems that were solvable. This disparity between what participants were told and the true proportion could have influenced participants’ momentary experiences. More generally, it is unclear whether prior expectations are updated by item-based heuristic cues, or, alternatively, whether people avail themselves of these two sources concurrently. Notably, though, the items’ sequential order did not affect our results. Thus, pure experience with the task, without feedback, does not seem to change cue integration as examined in this study.

Conclusions

This study contributes to the theoretical framework of meta-reasoning and extends our knowledge regarding iJOS. We found effects for non-verbal problems similar to effects found with verbal problems. Specifically, this study demonstrates two novel attributes of iJOS. First, it exposes quick integration of top-down prior expectations with bottom-up momentary experiences in iJOS. Second, it highlights the role of non-verbal factors in meta-reasoning processes and in directing effort investment. In particular, it introduces a new heuristic cue, nameability, relevant for non-verbal tasks. Another novel result is that whole-matrix nameability judgments of Raven’s problems predicted iJOS, whereas figure-by-figure nameability scores for the individual elements did not. In addition, the study broadens the scarce literature dealing with the predictive power of iJOS for regulating the problem-solving process when considering Raven’s matrices, and exposes a boundary condition for this predictive power when dealing with CRAs. Meta-reasoning processes are involved in solving non-verbal problems, which are common in real-life situations such as navigation, engineering, design, gaming, and education, as well as verbal problems, which are relevant for conversation, reading, marketing, forensic investigations, and education. Thus, discovering new components in this puzzle carries numerous practical implications for better understanding how people behave when facing cognitive challenges.

Acknowledgements

We thank Meira Ben-Gad for editorial assistance. Results data are available online at https://osf.io/7e5wx/

Notes

1 In Experiment 1, a control group (50%), in which participants were correctly informed that half the problems were solvable, was taken from data published previously in Lauterman and Ackerman (2019). This group was drawn from the same population as in the current study, and had the same age, gender, and SAT score distributions.

2 The $\Phi^{-1}$ (inverse phi) function was used to convert probabilities into z scores. For example, $\Phi^{-1}(.05) = -1.64$, which means that a one-tailed probability of .05 requires a z score of $-1.64$. On this basis, d′ and c can be calculated as follows (Macmillan, 1993): (1) $d' = \Phi^{-1}(H) - \Phi^{-1}(F)$; (2) $c = -[\Phi^{-1}(H) + \Phi^{-1}(F)]/2$.

3 We also analyzed the data without the half (50%) group and found the same effects.
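
As an illustration of the calculation described in Note 2, the following minimal Python sketch computes d′ and c from a pair of rates. It assumes that H and F denote the hit rate and the false-alarm rate, as is standard in signal detection theory. The function names and the example rates are hypothetical and are not taken from the study's analysis code or data.

```python
from statistics import NormalDist


def inverse_phi(p: float) -> float:
    """Convert a probability into a z score (inverse standard normal CDF)."""
    return NormalDist().inv_cdf(p)


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Sensitivity: d' = inverse_phi(H) - inverse_phi(F)."""
    return inverse_phi(hit_rate) - inverse_phi(false_alarm_rate)


def criterion(hit_rate: float, false_alarm_rate: float) -> float:
    """Response bias: c = -[inverse_phi(H) + inverse_phi(F)] / 2."""
    return -(inverse_phi(hit_rate) + inverse_phi(false_alarm_rate)) / 2


if __name__ == "__main__":
    # Reproduces the example in Note 2: a probability of .05 maps to z = -1.64.
    print(round(inverse_phi(0.05), 2))

    # Hypothetical rates, chosen only to show the two measures.
    h, f = 0.80, 0.30
    print(d_prime(h, f), criterion(h, f))
```

Rates of exactly 0 or 1 have no finite z score, and `inv_cdf` raises an error for them. The note does not state how such cases were handled, so any correction applied before calling these functions would be a further assumption.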

References

- Ackerman, R. (2019). Heuristic cues for meta-reasoning judgments: Review and methodology. Psihologijske Teme, 28(1), 1–20. https://doi.org/10.31820/pt.28.1.1

- Ackerman, R., & Beller, Y. (2017). Shared and distinct cue utilization for metacognitive judgements during reasoning and memorisation. Thinking & Reasoning, 23(4), 376–408. https://doi.org/10.1080/13546783.2017.1328373

- Ackerman, R., Bernstein, D. M., & Kumar, R. (2020). Metacognitive hindsight bias. Memory & Cognition, 48(5), 731–744. https://doi.org/10.3758/s13421-020-01012-w

- Ackerman, R., & Lauterman, T. (2012). Taking reading comprehension exams on screen or on paper? A metacognitive analysis of learning texts under time pressure. Computers in Human Behavior, 28(5), 1816–1828. https://doi.org/10.1016/j.chb.2012.04.023

- Ackerman, R., & Thompson, V. A. (2017). Meta-reasoning: Monitoring and control of thinking and reasoning. Trends in Cognitive Sciences, 21(8), 607–617. https://doi.org/10.1016/j.tics.2017.05.004

- Ackerman, R., Yom-Tov, E., & Torgovitsky, I. (2020). Using confidence and consensuality to predict time invested in problem solving and in real-life web searching. Cognition, 199, 104248. https://doi.org/10.1016/j.cognition.2020.104248

- Anderson, J. R. (1983). A spreading activation theory of memory. Journal of Verbal Learning and Verbal Behavior, 22(3), 261–295. https://doi.org/10.1016/S0022-5371(83)90201-3

- Arnold, N. R., Bröder, A., & Bayen, U. J. (2015). Empirical validation of the diffusion model for recognition memory and a comparison of parameter-estimation methods. Psychological Research, 79(5), 882–898. https://doi.org/10.1007/s00426-014-0608-y

- Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

- Besken, M. (2016). Picture-perfect is not perfect for metamemory: Testing the perceptual fluency hypothesis with degraded images. Journal of Experimental Psychology: Learning, Memory, and Cognition, 42(9), 1417–1433. https://doi.org/10.1037/xlm0000246

- Bolte, A., & Goschke, T. (2005). On the speed of intuition: Intuitive judgments of semantic coherence under different response deadlines. Memory & Cognition, 33(7), 1248–1255. https://doi.org/10.3758/bf03193226

- Bolte, A., & Goschke, T. (2008). Intuition in the context of object perception: Intuitive gestalt judgments rest on the unconscious activation of semantic representations. Cognition, 108(3), 608–616. https://doi.org/10.1016/j.cognition.2008.05.001

- Boucart, M., & Humphreys, G. W. (1992). Global shape cannot be attended without object identification. Journal of Experimental Psychology: Human Perception and Performance, 18(3), 785–806. https://doi.org/10.1037//0096-1523.18.3.785

- Bowden, E. M., & Beeman, M. J. (1998). Getting the right idea: Semantic activation in the right hemisphere may help solve insight problems. Psychological Science, 9(6), 435–440. https://doi.org/10.1111/1467-9280.00082

- Bowden, E. M., & Jung-Beeman, M. (2003). Normative data for 144 compound remote associate problems. Behavior Research Methods, Instruments, & Computers, 35(4), 634–639. https://doi.org/10.3758/bf03195543

- Bowers, K. S., Regehr, G., Balthazard, C., & Parker, K. (1990). Intuition in the context of discovery. Cognitive Psychology, 22(1), 72–110. https://doi.org/10.1016/0010-0285(90)90004-N

- Brandimonte, M. A., & Gerbino, W. (1993). Mental image reversal and verbal recoding: When ducks become rabbits. Memory & Cognition, 21(1), 23–33. https://doi.org/10.3758/bf03211161

- Brandimonte, M. A., & Gerbino, W. (1996). When imagery fails: Effects of verbal recoding on accessibility. In C. Cornoldi, R. H. Logie, M. A. Brandimonte, D. Reisberg, & G. Kaufmann (Eds.), Stretching the imagination: Representation and transformation in mental imagery (pp. 31–76). Oxford University Press.

- Brandimonte, M. A., Hitch, G. J., & Bishop, D. V. (1992). Verbal recoding of visual stimuli impairs mental image transformations. Memory & Cognition, 20(4), 449–455. https://doi.org/10.3758/bf03210929

- Brehmer, B. (1994). The psychology of linear judgement models. Acta Psychologica, 87(2-3), 137–154. https://doi.org/10.1016/0001-6918(94)90048-5

- Burton, O. R., Bodner, G. E., Williamson, P., & Arnold, M. M. (in press). How accurate and predictive are judgments of solvability? Explorations in a two-phase anagram solving paradigm. Metacognition and Learning.

- Carpenter, P. A., Just, M., & Shell, P. (1990). What one intelligence test measures: A theoretical account of processing in the Raven’s progressive matrices test. Psychological Review, 97(3), 404–431. https://doi.org/10.1037/0033-295X.97.3.404

- Christensen, A. P., & Kenett, Y. N. (in press). Semantic network analysis (SemNA): A tutorial on preprocessing, estimating, and analyzing semantic networks. Psychological Methods. https://doi.org/10.1037/met0000463

- De Neys, W., Rossi, S., & Houdé, O. (2013). Bats, balls, and substitution sensitivity: Cognitive misers are no happy fools. Psychonomic Bulletin & Review, 20(2), 269–273. https://doi.org/10.3758/s13423-013-0384-5

- DeShon, R. P., Chan, D., & Weissbein, D. A. (1995). Verbal overshadowing effects on Raven’s Advanced Progressive Matrices: Evidence for multidimensional performance determinants. Intelligence, 21(2), 135–155. https://doi.org/10.1016/0160-2896(95)90023-3

- Duncker, K. (1945). On problem-solving. Psychological Monographs, 58(5), i–113. https://doi.org/10.1037/h0093599

- Dunning, D., Johnson, K., Ehrlinger, J., & Kruger, J. (2003). Why people fail to recognize their own incompetence. Current Directions in Psychological Science, 12(3), 83–87. https://doi.org/10.1111/1467-8721.01235

- Einhorn, H. J., Kleinmuntz, D. N., & Kleinmuntz, B. (1979). Linear regression and process-tracing models of judgment. Psychological Review, 86(5), 465–485. https://doi.org/10.1037/0033-295X.86.5.465

- Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/bf03193146

- Fiacconi, C. M., & Dollois, M. A. (2020). Does word frequency influence judgments of learning (JOLs)? A meta-analytic review. Canadian Journal of Experimental Psychology = Revue Canadienne de Psychologie Experimentale, 74(4), 346–353. https://doi.org/10.1037/cep0000206

- Frost, R., & Plaut, D. (2005). The Word-frequency Database for Printed Hebrew, http://word-freq.mscc.huji.ac.il, The Hebrew University of Jerusalem.

- Kajic, I., & Wennekers, T. (2015, December). Neural network model of semantic processing in the Remote Associates Test [Paper presentation]. Workshop on Cognitive Computation: Integrating Neural and Symbolic Approaches, 29th Annual Conference on Neural Information Processing Systems (NIPS 2015), Montreal.

- Kenett, Y. N., & Faust, M. (2019). A semantic network cartography of the creative mind. Trends in Cognitive Sciences, 23(4), 271–274. https://doi.org/10.1016/j.tics.2019.01.007

- Koriat, A. (1997). Monitoring one’s own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349–370. https://doi.org/10.1037/0096-3445.126.4.349

- Koriat, A., & Levy-Sadot, R. (2001). The combined contributions of the cue-familiarity and accessibility heuristics to feelings of knowing. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27(1), 34–53. https://doi.org/10.1037/0278-7393.27.1.34

- Koriat, A., Ma’ayan, H., & Nussinson, R. (2006). The intricate relationships between monitoring and control in metacognition: Lessons for the cause-and-effect relation between subjective experience and behavior. Journal of Experimental Psychology: General, 135(1), 36–69. https://doi.org/10.1037/0096-3445.135.1.36

- Kornell, N., & Son, L. K. (2009). Learners’ choices and beliefs about self-testing. Memory, 17(5), 493–501. https://doi.org/10.1080/09658210902832915

- Lauterman, T., & Ackerman, R. (2019). Initial judgment of solvability in non-verbal problems–A predictor of solving processes. Metacognition and Learning, 14(3), 365–383. https://doi.org/10.1007/s11409-019-09194-8

- Logan, J. M., Castel, A. D., Haber, S., & Viehman, E. J. (2012). Metacognition and the spacing effect: The role of repetition, feedback, and instruction on judgments of learning for massed and spaced rehearsal. Metacognition and Learning, 7(3), 175–195. https://doi.org/10.1007/s11409-012-9090-3

- Mackintosh, N. J., & Bennett, E. S. (2005). What do Raven’s Matrices measure? An analysis in terms of sex differences. Intelligence, 33(6), 663–674. https://doi.org/10.1016/j.intell.2005.03.004

- Macmillan, N. A. (1993). Signal detection theory as data analysis method and psychological decision model. In G. Keren & C. Lewis (Eds.), A handbook for data analysis in the behavioral sciences: Methodological issues (pp. 21–57). Erlbaum.

- Maldei, T., Koole, S. L., & Baumann, N. (2019). Listening to your intuition in the face of distraction: Effects of taxing working memory on accuracy and bias of intuitive judgments of semantic coherence. Cognition, 191, 103975. https://doi.org/10.1016/j.cognition.2019.05.012

- Markovits, H., Thompson, V. A., & Brisson, J. (2015). Metacognition and abstract reasoning. Memory & Cognition, 43(4), 681–693. https://doi.org/10.3758/s13421-014-0488-9

- Meo, M., Roberts, M. J., & Marucci, F. S. (2007). Element salience as a predictor of item difficulty for Raven’s Progressive Matrices. Intelligence, 35(4), 359–368. https://doi.org/10.1016/j.intell.2006.10.001

- Mudrick, N. V., Azevedo, R., & Taub, M. (2019). Integrating metacognitive judgments and eye movements using sequential pattern mining to understand processes underlying multimedia learning. Computers in Human Behavior, 96, 223–234. https://doi.org/10.1016/j.chb.2018.06.028

- Newell, A., & Simon, H. A. (1972). Human problem solving. Prentice-Hall.

- Novick, L. R., & Sherman, S. J. (2003). On the nature of insight solutions: Evidence from skill differences in anagram solution. The Quarterly Journal of Experimental Psychology. A, Human Experimental Psychology, 56(2), 351–382. https://doi.org/10.1080/02724980244000288