Abstract

How people distinguish well-justified from poorly justified arguments is not well understood. To study the involvement of intuitive and analytic cognitive processes, we contrasted participants’ personal beliefs with argument strength, determined in relation to established criteria of sound argumentation. In line with previous findings of myside bias, participants (N = 249) made more errors on conflict than on no-conflict trials. On conflict trials, errors and correct responses were practically equal in response times and in mouse-tracking indices of hesitation. Like recent findings on formal reasoning, these findings indicate that correct reasoning about informal arguments may not require corrective analytic processing. We compared findings across four argument schemes but found few differences. The findings are discussed in light of intuitive logic theories and the notion that evaluating informal arguments could be based on implicit knowledge of argument criteria.

Argumentation is a ubiquitous part of human interaction. We encounter arguments in newspapers and advertisements, from friends and acquaintances, and on social media. Not all discussion is argumentation; argumentation entails presenting standpoints and supporting arguments to persuade or convince a real or imagined discussion partner or audience (van Eemeren et al., 2014, Chapter 1.1). Our study concerns authentic argumentation, which is studied within the fields of informal logic, argumentation theory, and rhetoric (van Eemeren et al., 2014, Chapter 1.1). Authentic argumentation differs from formal logic in context, form, and truth values. Whereas formal logic is decontextualised, adheres to a strict form, and consists of premises that are either true or false, informal argumentation depends on context, is often less clear in form, and rests on probabilities. Additionally, informal arguments typically leave a premise implicit, making it necessary to read between the lines (Galotti, 1989). Evaluating argumentation is an important civic skill that we need daily. However, the cognitive processes involved are not well understood. The aim of the present study is to examine these processes.

When asked to evaluate the overall quality of argumentation, or to distinguish sound from unsound arguments, people with no education in argumentation analysis tend to fare quite well. With some consistency across countries, laypeople’s evaluations of informal arguments tend to be largely in line with the principles of sound argumentation described in argumentation theory (Demir & Hornikx, 2022; Neuman et al., 2006; van Eemeren et al., 2009). However, research indicates that arguments are often processed superficially. The key to assessing arguments is understanding the relationships between claims and arguments (Angell, 1964; Shaw, 1996), yet people tend not to spontaneously engage in this key task of evaluating how arguments support claims, even though they can do so when specifically asked to (Shaw, 1996). Instead, people tend to elaborate on their own preferred explanations (Kuhn & Modrek, 2017). As further evidence of superficial processing, the mental models that people form of arguments are imprecise (Britt et al., 2008).

Dual-process theories of reasoning

We suggest that the processes involved in argument evaluation can be understood in the context of dual-process theories of thinking and reasoning. These theories concern the interplay of autonomous, effortless, intuitive “Type 1” processes and controlled, effortful, analytic “Type 2” processes (De Neys & Pennycook, 2019; Evans & Stanovich, 2013). One of the main findings of this field is that when a cognitively less demanding solution to a problem is available, people on average favour it over a more demanding one. Often, this leads to errors and biases in reasoning (Stanovich, 2009). Influential findings indicate that fast responses tend to be based on whether a conclusion fits the reasoner’s prior beliefs rather than on logical reasoning (Evans & Curtis-Holmes, 2005). Earlier corrective, or default-interventionist, accounts assumed that this is because logically correct reasoning requires the involvement of Type 2 processing (Evans, 2003). Applied to informal arguments, these corrective accounts might predict that correctly evaluating the relation between standpoints and arguments is demanding and requires activating analytic thinking processes.

However, more recent research has shown that logical answers may also arise rapidly and that logic may interfere with fast belief-based processing (Bago & De Neys, 2017; Newman et al., 2017). Accordingly, more recent accounts suggest that judgments may also arise from intuitions without the involvement of Type 2 processes. According to these “intuitive logic” accounts, initial Type 1 processes may lead to correct responses on many types of reasoning tasks, because people have internalised the principles needed for these tasks to the point of automaticity (De Neys, 2018, 2023). Such theories might predict that both correct and flawed judgments of informal arguments can arise through intuitive processing: like logical principles, principles of sound argumentation may have been internalised to the point of automaticity.

So far, few studies have investigated informal argumentation from a dual-process perspective. Hornikx et al. (2021) recently studied how people evaluate the strength of causal arguments under time pressure (a condition designed to elicit intuitive judgments) and without time pressure (allowing analytic processing). They found that evaluations given under time pressure were unrelated to those given without time pressure. However, the time-pressured evaluations were also unrelated to participants’ acceptance of the arguments’ claims, indicating that the evaluations were not strongly based on participants’ personal views on the topics. Thus, it is unclear what conclusions can be drawn about the intuitive processing of arguments. In another study, Svedholm-Häkkinen and Kiikeri (2022) used mouse tracking to investigate how people distinguish classic argumentation fallacies from reasonable arguments. Their data were incompatible with corrective accounts: making errors was slower than responding correctly and was associated with a larger initial pull towards the correct response option. While making errors, participants might have been implicitly aware that they were violating relevant principles of sound argumentation. However, the results were inconclusive because the study design did not determine whether participants’ prior beliefs conflicted with their reasoning.

Creating cognitive conflict: personal views vs. argument strength

To enable clearer conclusions about the involvement of intuitive and analytic processes in argument evaluation, the present study differentiates arguments that present a conflict between personal beliefs and principles of sound argumentation from arguments that present no conflict. In research on formal reasoning, studies often create conflicts between intuitive and analytic processes by using reasoning tasks that contrast general knowledge with logical validity (Bago & De Neys, 2017; Newman et al., 2017). Here, we apply this design to informal argumentation. The arguments concern topics on which participants have strong personal views, so that participants agree with some arguments and disagree with others. In addition, half the arguments are strong and half are weak in relation to the criteria for different types of arguments described in argumentation theory (van Eemeren et al., 2004). Thus, half the trials involve a conflict between participants’ personal beliefs and argument strength, while on the other half personal beliefs and argument strength are aligned, so that these trials involve no conflict. Correctly evaluating arguments on conflict trials therefore requires overcoming myside bias: the common tendency for evaluations of the strength or validity of stimuli to be affected by one’s personal views rather than by the actual strength or validity of the stimulus. Myside bias has been robustly documented with various methods and many types of stimuli, including the evaluation of informal argumentation (Caddick & Feist, 2021; Edwards & Smith, 1996; Hoeken & van Vugt, 2016; McCrudden & Barnes, 2016; Mercier, 2016; Stanovich & West, 1997; Taber & Lodge, 2006; Wolfe & Kurby, 2017).

Examining mouse trajectories

To trace the processing behind argument evaluations, the present study uses mouse tracking (Freeman, 2018). Mouse tracking builds on the finding that small movements of the hand during decision-making reflect hesitation between response options. Thus, mouse trajectories can be inspected to determine whether decisions are made in a straightforward manner or after weighing different options. Several indices can be calculated to describe mouse trajectories. We examine the most established measure, the area under the curve (AUC) between the observed trajectory and an idealised straight-line trajectory from the start point to the chosen response (Freeman, 2018). In addition, we measure response times (RT), as these are often interpreted as indicating the amount of conflict, with faster responses expected in less conflicted conditions (Bonner & Newell, 2010; Voss et al., 1993).
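As a rough illustration of how AUC can be computed, the following Python sketch takes a trajectory as a list of (x, y) points and integrates its perpendicular deviation from the straight line joining its first and last points. It assumes, for illustration rather than as a description of this study’s pipeline, that y increases upwards and that trajectories have already been remapped so that the chosen option lies to the upper right of the start point; positive values then mean a bow towards the unchosen option. The function name is hypothetical, and the absolute scale of AUC depends on how coordinates are normalised.

```python
import math


def auc_signed(trajectory):
    """Signed area between a trajectory and the straight start-to-end line.

    trajectory: list of (x, y) points, y increasing upwards, assumed remapped so
    the chosen option is to the upper right of the start point. Positive values
    then indicate curvature towards the unchosen (left) option.
    """
    (x0, y0), (xn, yn) = trajectory[0], trajectory[-1]
    length = math.hypot(xn - x0, yn - y0)
    if length == 0:
        raise ValueError("start and end points coincide")
    # Unit vector along the idealised straight path and its left-hand normal.
    ux, uy = (xn - x0) / length, (yn - y0) / length
    nx, ny = -uy, ux
    # Project each point onto the straight-path axis and onto the normal.
    along = [(x - x0) * ux + (y - y0) * uy for x, y in trajectory]
    perp = [(x - x0) * nx + (y - y0) * ny for x, y in trajectory]
    # Trapezoidal integration of the perpendicular deviation along the path.
    area = 0.0
    for i in range(len(trajectory) - 1):
        area += (along[i + 1] - along[i]) * (perp[i] + perp[i + 1]) / 2
    return area


print(auc_signed([(0, 0), (0.5, 0.5), (1, 1)]))  # 0.0: a perfectly straight path
print(auc_signed([(0, 0), (0, 1), (1, 1)]))      # about 0.5: bows towards the left
```

Because the straight line itself has zero perpendicular deviation, the trapezoidal sum equals the signed area enclosed between the trajectory and that line.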

Importantly, corrective theories predict that conflict trials will be more error-prone, slower, and associated with more hesitation than no-conflict trials. The latest intuitive logic theories (De Neys, 2023) predict that conflict will increase errors, response times, and hesitation if two or more competing intuitions are strong and analytic thinking is needed to resolve the conflict; if one intuition clearly dominates the others, analytic thinking is not triggered. However, the theories differ clearly in their predictions regarding errors on conflict trials, that is, trials on which participants respond based on their personal beliefs rather than on principles of sound argumentation. Corrective theories predict these errors to be relatively fast and hesitation-free, because they indicate that analytic processing has not been activated. In contrast, intuitive logic theories predict that these errors are associated with response times and hesitation equal to those of correct responses. This is because both correct and erroneous responses are seen to arise to a similar degree from competition between different intuitions, some belief-based and others based on an internalised understanding of the principles of good argumentation.

The importance of studying different types of arguments

For results to be generalisable, we believe it is important to study different types of informal argumentation. For this, we turn to argumentation theory, which offers the concept of the argument scheme, describing how standpoints and arguments relate to each other (Walton et al., 2008). Some of the most common argument schemes are arguments from consequence, from analogy, from signs (so-called symptomatic arguments), and from authority (Hoeken et al., 2012; van Eemeren et al., 2004, 2009). Table 1 presents descriptions and examples of arguments following each of these schemes. By including arguments from several schemes, our approach is broader than much previous cognitive psychological research on informal arguments, which has often focused on one type of argument at a time, particularly arguments from consequence (Bonnefon, 2012; Hahn, 2020; Hahn & Oaksford, 2007; for inclusive treatments, see e.g., Hoeken et al., 2012, 2014; Hoeken & van Vugt, 2016; Thompson et al., 2005; van Eemeren et al., 2009).

Table 1. Example arguments from each scheme used in the study, with commentary in italics.

Extending research to several argument schemes is important because evaluating arguments from different schemes ostensibly presents different cognitive tasks. In the terminology of pragma-dialectical argumentation theory, each scheme is associated with scheme-specific “critical questions” that are used when evaluating argumentation (van Eemeren et al., 2004). For arguments from consequence, reasoners should ask how likely the presented consequences are. For arguments from analogy, reasoners should evaluate the relevant similarities and differences between the points of comparison. For symptomatic arguments, the task is to evaluate whether the property referred to is truly symptomatic of the case in question. For arguments from authority, the task is to assess the credibility of the purported authority, for example in terms of expertise in the relevant domain and impartiality. An interview study (Schellens et al., 2017) indicated that when critically discussing arguments, laypeople overwhelmingly focused on scheme-specific criteria, such as whether an authority had expertise in the right area. For the present study, we created strong and weak arguments using the criteria described for each argument scheme in the argumentation literature (Hoeken et al., 2012; van Eemeren et al., 2004; Walton et al., 2008). We set no hypotheses regarding differences between the schemes.

The present study

This study compares the proportions of correct responses, response times, and mouse-tracking measures between arguments that create cognitive conflict and arguments that create no conflict. Based on previous research on myside bias, we expect all these measures to show myside bias (Hypotheses 1a–1c below). Further, in line with intuitive logic theories, we expect response times and mouse-tracking measures to indicate that overcoming myside bias requires no more effort than responding in a way that expresses myside bias (Hypotheses 2a and 2b below). We preregistered the following hypotheses (https://osf.io/bcr4a/):

Hypothesis 1a. Conflict trials are associated with more errors in evaluation than no-conflict trials.

Hypothesis 1b. Responses to conflict trials are slower than responses to no-conflict trials.

Hypothesis 1c. Conflict trials are associated with more hesitation than no-conflict trials, as measured by the mouse-tracking index AUC (area under curve).

Hypothesis 2a. On conflict trials, errors are as slow as correct responses.

Hypothesis 2b. On conflict trials, errors are associated with the same mouse-tracking indices of hesitation as correct responses.

Materials and methods

Participants

The participants were 249 residents of the United Kingdom whose first language was English. Just over half, 136 (54%), were female, 113 were male, and one participant preferred not to state their gender. The mean age was 44 years (SD = 14, range 18–77), with all adult ages well represented. Most were employed either full-time (52%) or part-time (17%), and 9% were students. The participants were recruited through the Prolific platform and paid £2 for participation.

Procedure

The study received ethical approval from the Ethics Committee of the Tampere Region. The study was run in English. The median duration of the study was 9 min. Participants were directed to a website external to Prolific, where the study was implemented in JavaScript running in the browsers of the participants’ personal computers. The participants indicated informed consent by ticking a box and were given the chance to review a privacy notice. Participants who consented to participate were directed to a page with a welcome message, instructions, and a comprehension check. Of the final sample, 93% passed the comprehension check on the first try, and 7% on the second try (participants who failed twice were directed back to Prolific). The instructions for the study were as follows:

Welcome to the study!

In this study, you will be presented with a range of arguments for claims on different topics. Your task is to evaluate how well the arguments justify the claims. If you find that an argument is a good justification for a claim, you should respond that it is strong. If you find that an argument does a poor job at justifying a claim, you should respond that it is weak.

You will see several different arguments for the same claims in scrambled order. The number of arguments is 48, so it is not useful to get stuck on individual arguments.

Halfway through the study, participants were presented with the following attention check: “The next question is very simple. When asked for your favourite colour, you must select ‘green’. This is an attention check. Based on the text you read above, what colour have you been asked to enter?” (any option other than green was considered failing). After all arguments had been evaluated, there was another attention check: “Please indicate your agreement with the statement below: I know every language in the world” (rated 1–6 from “strongly disagree” to “strongly agree”, with ratings above 2 considered failing). At the end of the study, participants reported their personal opinions on the topics of the arguments and whether they had used an external mouse or a touchpad in the study. Finally, participants saw a debriefing message informing them that their mouse coordinates had been recorded during the study and that they had the right to withdraw their mouse coordinate data from the dataset without losing their monetary compensation. One participant opted to withdraw their data.
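The two attention-check pass rules can be written as a small predicate. This is a hypothetical sketch: the function name and input types are assumptions, the case-insensitive comparison of the colour answer is an added convenience, and how failing participants were handled is not specified here.

```python
def passed_attention_checks(colour_answer: str, language_rating: int) -> bool:
    """Return True if a participant passed both attention checks.

    colour_answer: option chosen on the mid-study check; only "green" passes.
    language_rating: agreement (1-6) with "I know every language in the world";
    ratings above 2 count as failing.
    """
    if not 1 <= language_rating <= 6:
        raise ValueError("language_rating must be on the 1-6 scale")
    colour_ok = colour_answer.strip().lower() == "green"
    language_ok = language_rating <= 2
    return colour_ok and language_ok


print(passed_attention_checks("green", 1))  # True
print(passed_attention_checks("Green", 3))  # False: rating above 2
print(passed_attention_checks("blue", 1))   # False: wrong colour
```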

Arguments

The 48 arguments concerned three societal issues of varying topicality: the death penalty, monetary compensation to phishing victims, and the urgency of measures to combat climate change. For each topic, we formulated 16 arguments counterbalanced on strength, side, and argument scheme. Half of the arguments met the criteria for strong arguments given by argumentation theories (strong), and half violated them (weak). On each topic, half of the arguments were for the claim and half against it. On each topic, there was an equal number of arguments following each of the four argument schemes: causal, analogous, symptomatic, and authority arguments.
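The counterbalancing amounts to a fully crossed 3 (topic) × 2 (strength) × 2 (side) × 4 (scheme) design with one argument per cell. The sketch below enumerates those cells with `itertools.product`; the shorthand labels are hypothetical and stand in for the actual arguments listed in the code book.

```python
from collections import Counter
from itertools import product

# Shorthand labels (hypothetical, for illustration only).
TOPICS = ("death penalty", "phishing compensation", "climate measures")
STRENGTHS = ("strong", "weak")
SIDES = ("for", "against")
SCHEMES = ("causal", "analogous", "symptomatic", "authority")


def design_cells():
    """One (topic, strength, side, scheme) tuple per argument: 3 x 2 x 2 x 4 = 48."""
    return list(product(TOPICS, STRENGTHS, SIDES, SCHEMES))


cells = design_cells()
print(len(cells))                             # 48
print(Counter(topic for topic, *_ in cells))  # 16 arguments per topic
```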

The topics were selected through a pilot study. In the pilot study, sets of arguments on five different topics were tested on a sample of 10 people with the same demographic filters as in the main study (UK residents whose first language is English). Pilot participants provided numerical and written evaluations of the strength of the arguments, as well as their personal opinions on the topics, how strongly they felt about the topics, and the suitability of each argument for a UK context. The final three topics were chosen because their claims divided opinions and because the arguments were suited to a UK context. Where necessary, arguments were revised based on the findings from the pilot study.

The final arguments were on average 137 characters long (SD = 26). Length did not differ between argument schemes, F(3,44) = 1.226, p = .31, nor between weak and strong arguments, F(1,46) = 0.508, p = .48, or arguments for and against a topic, F(1,46) = 0.61, p = .439. All arguments are available in the code book: https://osf.io/yqs79/.

Conflict and no-conflict trials

Participants were shown the claims for the three topics that the arguments had concerned. They indicated their views on a six-point scale (1 = I strongly disagree, 6 = I strongly agree); because the scale has an even number of points, no rating fell on the midpoint. For each participant and each topic individually, arguments were defined as either mysided or othersided depending on the participant’s personal view on the topic and the direction of the argument. For participants who disagreed with a proposition (personal views below the scale midpoint), arguments for the proposition were defined as othersided and arguments against it as mysided. For participants who agreed with a proposition (personal views above the scale midpoint), arguments for the proposition were defined as mysided and arguments against it as othersided. Trials on which participants’ personal beliefs and argument strength conflicted (weak mysided arguments and strong othersided arguments) were then defined as conflict trials, and trials on which personal beliefs and argument strength aligned (strong mysided arguments and weak othersided arguments) were defined as no-conflict trials.
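The classification rule can be expressed compactly. In this sketch (function names and string labels are hypothetical), an argument is mysided when its side matches the participant’s position, and a trial is a conflict trial exactly when mysidedness and weakness coincide, that is, for weak mysided and strong othersided arguments.

```python
MIDPOINT = 3.5  # midpoint of the 1-6 agreement scale; no rating can equal it


def is_mysided(view: int, side: str) -> bool:
    """An argument is mysided if its side matches the participant's view of the claim."""
    if not 1 <= view <= 6:
        raise ValueError("view must be on the 1-6 scale")
    if side not in ("for", "against"):
        raise ValueError("side must be 'for' or 'against'")
    agrees = view > MIDPOINT
    return (side == "for") == agrees


def trial_type(view: int, side: str, strength: str) -> str:
    """'conflict' for weak mysided or strong othersided arguments, else 'no-conflict'."""
    if strength not in ("strong", "weak"):
        raise ValueError("strength must be 'strong' or 'weak'")
    mysided = is_mysided(view, side)
    conflict = mysided == (strength == "weak")
    return "conflict" if conflict else "no-conflict"


print(trial_type(5, "for", "weak"))        # conflict (weak mysided)
print(trial_type(5, "against", "strong"))  # conflict (strong othersided)
print(trial_type(5, "for", "strong"))      # no-conflict (strong mysided)
print(trial_type(2, "for", "weak"))        # no-conflict (weak othersided)
```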

Data screening

Because touchpad use results in jerkier movement data (Freeman & Ambady, 2010), the study description asked people to participate only if they were using an external mouse, describing the study as “working best” with an external mouse. However, at the end of the study, 44% of participants reported having used a touchpad. The touchpad users’ data were inspected visually, and all dependent variables were compared between touchpad users and external mouse users. Touchpad users gave significantly faster responses (median 2210 ms vs. 4924 ms for external mouse users), but the groups did not differ in the proportions of correct responses or in AUC. Moreover, we visually identified 13 participants (5% of the sample) with particularly jerky trajectories; however, removing them had no discernible effect on the results. Thus, no data were excluded from analyses on these grounds.

Despite the instructions to respond with the mouse, it was technically also possible to respond using keyboard shortcuts. Most participants (88%) used a mouse on all 48 arguments; the rest used keyboard shortcuts on some of the trials. Thus, trajectory data were unavailable for 11% of trials.

Inspection of RTs showed that 23% of trials were 1000 ms or shorter. A realistic minimum time to read an argument may be about 1000 ms, so faster trials might provide unreliable data. However, removing these very fast trials had only a marginal effect on the results and changed no conclusions. Restricting the analyses to trials longer than 3000 ms likewise made no difference to the conclusions. Fifty-two trials (0.4% of trials) were longer than a minute, likely because participants took a break from the study; the RTs and trajectories of these extremely long trials were excluded from analyses. All other available data were used in the analyses.

We inspected AUC for outliers and found that 1478 trials (12% of all trials) had AUCs more than 3 SD above the mean. Removing these trials had no effect on the conclusions, so they were retained in the analyses.
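The screening rules above can be sketched as a single function over one set of trials. Assumptions for illustration: the 3 SD AUC cutoff is computed over all retained trials pooled (the text does not say whether it was computed per participant or per condition), trials without trajectories carry `None`, and the function name is hypothetical. Only trials longer than a minute are removed; fast trials and AUC outliers are merely flagged, matching the decision to retain them.

```python
from statistics import mean, stdev

LONG_TRIAL_MS = 60_000  # longer than a minute: RT and trajectory excluded
FAST_TRIAL_MS = 1_000   # this fast or faster: examined in a sensitivity analysis
AUC_SD_CUTOFF = 3       # AUC more than 3 SD above the mean: flagged, but retained


def screen_trials(rts_ms, aucs):
    """Return (kept, fast, auc_outliers) as lists of trial indices.

    rts_ms: response times in milliseconds.
    aucs: AUC per trial, or None where no trajectory was recorded
    (e.g. keyboard responses).
    """
    kept = [i for i, rt in enumerate(rts_ms) if rt <= LONG_TRIAL_MS]
    fast = [i for i in kept if rts_ms[i] <= FAST_TRIAL_MS]
    kept_aucs = [aucs[i] for i in kept if aucs[i] is not None]
    cutoff = mean(kept_aucs) + AUC_SD_CUTOFF * stdev(kept_aucs)
    auc_outliers = [i for i in kept if aucs[i] is not None and aucs[i] > cutoff]
    return kept, fast, auc_outliers
```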

Finally, we inspected whether any participants gave the same response to all arguments, which would have indicated low effort. However, all participants varied their responses during the study.

Because some participants made either errors on all arguments of a certain type or no errors (e.g., on conflict arguments on climate measures, 27 participants gave only correct responses and 33 participants gave only incorrect responses), no RT and AUC data were available for them for the response type they never gave. To preserve sample size in the ANOVAs, we used multiple imputation to replace the missing cells for participants who were missing values on at most five cells (each cell representing a combination of the conflict, response, and topic factors, e.g., conflict trials with error responses on the topic of climate measures). Multiple imputation was performed using the mice package for R (van Buuren & Groothuis-Oudshoorn, 2011). After imputation, full RT data were available for 223 participants. One additional participant was missing from the AUC analyses because they had missing trajectories due to using a touchpad mouse. Thus, full AUC data were available for 222 participants.
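The eligibility rule for imputation can be sketched as follows: aggregate each participant’s trials into the 12 conflict × response × topic cells and keep the participant if at most five cells are empty. The aggregation by mean, the labels, and the function names are assumptions; the imputation itself was done with the mice package for R and is not reproduced here.

```python
from itertools import product
from statistics import mean

# Hypothetical labels: 2 (conflict) x 2 (response) x 3 (topic) = 12 cells.
CELLS = list(product(("conflict", "no-conflict"),
                     ("correct", "error"),
                     ("death penalty", "phishing", "climate")))
MAX_MISSING_CELLS = 5


def cell_means(trials):
    """Aggregate one participant's trials into per-cell means (None if a cell is empty).

    trials: iterable of (conflict, response, topic, value) tuples, e.g. value = log RT.
    """
    values = {cell: [] for cell in CELLS}
    for conflict, response, topic, value in trials:
        values[(conflict, response, topic)].append(value)
    return {cell: (mean(v) if v else None) for cell, v in values.items()}


def retained_for_anova(means):
    """Keep (and later impute) participants missing at most MAX_MISSING_CELLS cells."""
    missing = sum(1 for m in means.values() if m is None)
    return missing <= MAX_MISSING_CELLS
```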

The data were analysed using R (R Core Team, 2022) with the psych (Revelle, 2021), effectsize (Ben-Shachar et al., 2020), pracma (Borchers, 2022), and BayesFactor (Morey & Rouder, 2023) packages. The data and R code are openly available at https://osf.io/5edbk/.

Inference criteria

In all statistical analyses, we used the standard p < .05 criterion to determine whether results differed significantly from those expected if the null hypothesis were true. Where the sphericity assumption was violated, we report the Greenhouse-Geisser epsilon and the corrected p-value. Because Hypotheses 2a and 2b predicted that no differences would be found, which cannot be established with null hypothesis significance testing (NHST), we base our conclusions on Bayes factors (this deviates from the preregistered inference criteria, which did not take into account that NHST cannot establish support for the null). Following Kass and Raftery (1995), we consider absolute log Bayes factors (|logBF|) between 0 and 0.5 worth a mention, between 0.5 and 1 substantial, between 1 and 2 strong, and above 2 decisive evidence for one model over another. Negative log Bayes factors indicate support for the null hypothesis, and positive log Bayes factors indicate support for the tested effect. In addition, we report effect sizes. We considered partial eta squared (ηp2) values smaller than 0.01 in analyses of variance, and Cohen’s d values smaller than 0.20 in t-tests, as indicating no difference between the studied conditions. Further, we considered ηp2 ≥ 0.06 as medium and ηp2 ≥ 0.14 as large effects, and d ≥ 0.50 as medium and d ≥ 0.80 as large effects.
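The inference criteria can be summarised as lookup functions. In this sketch, a value lying exactly on a boundary shared by two adjacent ranges (for example, 0.5) is assigned to the higher category, which is an assumption because the stated ranges share their endpoints; the function names are hypothetical, and the 0.01 to 0.06 and 0.20 to 0.50 bands are labelled "small" for illustration.

```python
def bf_evidence(log_bf10: float) -> tuple:
    """Kass and Raftery (1995) category and direction for a log Bayes factor."""
    size = abs(log_bf10)
    if size < 0.5:
        category = "worth a mention"
    elif size < 1:
        category = "substantial"
    elif size <= 2:
        category = "strong"
    else:
        category = "decisive"
    if log_bf10 > 0:
        direction = "effect"
    elif log_bf10 < 0:
        direction = "null"
    else:
        direction = "neither"
    return category, direction


def eta_label(eta_p2: float) -> str:
    """Partial eta squared: < .01 no difference, >= .06 medium, >= .14 large."""
    if eta_p2 < 0.01:
        return "none"
    if eta_p2 < 0.06:
        return "small"
    if eta_p2 < 0.14:
        return "medium"
    return "large"


def d_label(d: float) -> str:
    """Cohen's d (absolute): < .20 no difference, >= .50 medium, >= .80 large."""
    d = abs(d)
    if d < 0.20:
        return "none"
    if d < 0.50:
        return "small"
    if d < 0.80:
        return "medium"
    return "large"


print(bf_evidence(-2.240))                # ('decisive', 'null')
print(bf_evidence(0.797))                 # ('substantial', 'effect')
print(eta_label(0.21), d_label(0.09))     # large none
```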

Results

The claims divided opinions, and all claims received ratings spanning the entire scale. However, ratings of the claim concerning measures to combat climate change were heavily skewed to the right, with only 16% rating their agreement above the midpoint of the scale. Table 2 shows agreement with the claims of the arguments, and Supplemental Figure S1 shows the distributions of these views.

Table 2. Agreement with the claims.

Responses

Arguments were rated correctly on 64% of the analysed trials. For each scheme, strong arguments were rated as strong more often than weak arguments were (causal: t(248) = 26.404; analogous: t(248) = 15.442; symptomatic: t(248) = 15.755; authority: t(248) = 22.034; all ps < .001, Cohen’s ds from 1.18 to 2.13, logBF10 from 80 to 162). In line with Hypothesis 1a, participants made more errors on conflict trials (mean proportion correct = 47%) than on no-conflict trials (mean proportion correct = 81%), t(248) = −25.14, p < .001, with a large effect size, Cohen’s d = 2.68, and a Bayes factor showing decisive evidence for a model including the effect of conflict over a null model, logBF10 = 153.245.

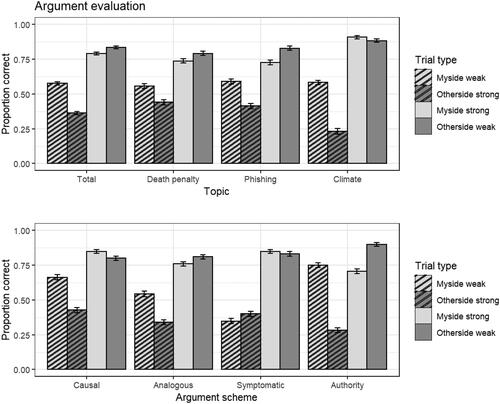

As an exploratory analysis, we ran pairwise comparisons within each topic and each argument scheme separately. The effect of conflict held within each topic and each argument scheme (all ps < .001, Cohen’s ds from 1.26 to 2.73, logBF10 from 50 to 156). Figure 1 shows the proportions of correctly rated arguments separately for each topic and argument scheme.

Further, to explore whether the strength of the participants’ personal views affected the results, we ran additional analyses using personal views as continuous variables. The results indicate that the effects of argument strength were largely independent of the participants’ personal views. Detailed results are reported in the Supplemental material.

Response times

The median response time (RT) was 4036 ms, with a high skew to the right (skewness = 3.81). For analyses, we used the natural logarithm of RT.
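A sketch of the transformation: taking the natural logarithm compresses the long right tail typical of RT distributions. The skewness function uses the simple moment-based definition g1 = m3 / m2^1.5; statistical packages may apply small-sample corrections, so their values can differ slightly. The RT values and function names are illustrative, not study data or the authors’ code.

```python
import math


def log_rt(rts_ms):
    """Natural logarithm of each response time (values must be positive)."""
    return [math.log(rt) for rt in rts_ms]


def skewness(values):
    """Moment-based sample skewness g1 = m3 / m2 ** 1.5."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


rts = [1000, 2000, 3000, 4000, 60000]  # illustrative values, not study data
print(round(skewness(rts), 2), round(skewness(log_rt(rts)), 2))
```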

Hypothesis tests

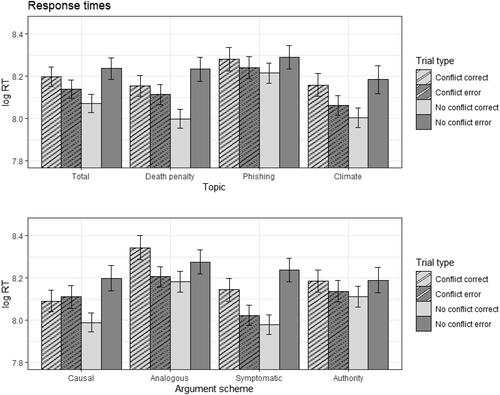

To test Hypotheses 1b and 2a, we ran a 2 × 2 factorial ANOVA with conflict (yes, no) and response (correct, error) as within-subjects independent variables and RT as the dependent variable. Hypothesis 1b was that responses to conflict trials would be slower than responses to no-conflict trials. Against this hypothesis, there was no overall difference in RTs between conflict trials (median = 4952 ms, logRT M = 8.15, SD = 0.65) and no-conflict trials (median = 4974 ms, logRT M = 8.15, SD = 0.66). Conflict had no main effect, F(1,222) = 0.020, p = .886, ηp2 < 0.001, logBF10 = −2.240, indicating decisive evidence for the null. Response had a small main effect, F(1,222) = 9.762, p = .002, ηp2 = 0.04, logBF10 = 0.742, and there was a large interaction between the factors, F(1,222) = 50.797, p < .001, ηp2 = 0.21, logBF10 = 20.531. Hypothesis 2a was that on conflict trials, errors would be as slow as correct responses. To test this hypothesis, we ran a pairwise comparison of correct and error responses within conflict trials. Against this hypothesis, both NHST and Bayes factors indicated that errors (median 4820 ms, logRT M = 8.14, SD = 0.65) were faster than correct responses (median 4944 ms, logRT M = 8.18, SD = 0.70), t(222) = 2.513, p = .013, with substantial evidence for the difference, logBF10 = 0.797. However, the effect size was negligible, d = 0.09, indicating that the difference was practically meaningless. Figure 2 shows the mean log-transformed response times on different types of trials. Supplemental Figure S3 shows the median response times on different types of trials in the original metric (milliseconds).
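For a 2 × 2 fully within-subjects ANOVA with complete data, each effect has one numerator degree of freedom, and its F statistic equals the square of a one-sample t statistic on the corresponding per-participant contrast; the pairwise comparison within conflict trials is the same kind of t-test. The sketch below uses this identity and applies equally to RT and AUC cell means. It is an illustration under stated assumptions: the cell keys and function names are hypothetical, and because the reported analyses were run in R on multiply imputed data, it will not reproduce the published values.

```python
import math
from statistics import mean, stdev

# Hypothetical cell keys: (conflict, response) -> one participant's cell mean.
CC, CE = ("conflict", "correct"), ("conflict", "error")
NC, NE = ("no-conflict", "correct"), ("no-conflict", "error")

CONTRASTS = {
    "conflict": lambda c: (c[CC] + c[CE]) / 2 - (c[NC] + c[NE]) / 2,
    "response": lambda c: (c[CC] + c[NC]) / 2 - (c[CE] + c[NE]) / 2,
    "interaction": lambda c: (c[CC] - c[CE]) - (c[NC] - c[NE]),
    "conflict: correct vs error": lambda c: c[CC] - c[CE],
}


def one_sample_t(scores):
    """t statistic for H0: mean(scores) == 0 (requires non-zero variance)."""
    return mean(scores) / (stdev(scores) / math.sqrt(len(scores)))


def within_2x2(participants):
    """Return {effect: (t, df)}; for the three ANOVA effects, F(1, df) = t ** 2."""
    df = len(participants) - 1
    return {name: (one_sample_t([f(p) for p in participants]), df)
            for name, f in CONTRASTS.items()}
```

Computing each effect from a per-participant contrast keeps the error term specific to that effect, which is what the repeated-measures ANOVA does for one-degree-of-freedom effects.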

Explorative analyses

Exploratorily, we added topic as an independent variable to the ANOVA. RTs differed somewhat between topics, being overall longer for the arguments on phishing than for the other topics. However, there was no evidence that the pattern of results differed between topics. We also explored adding argument scheme as an independent variable to the ANOVA; the pattern of results did not differ between argument schemes. For details, see the Supplemental material.

As for the proportions of correct responses, additional analyses (reported in the Supplemental material) gave no indication that the results differed across levels of personal views.

AUC

The AUC variable was approximately normally distributed. It correlated only weakly with RT, r = .15, p < .001, across trials.

Hypothesis tests

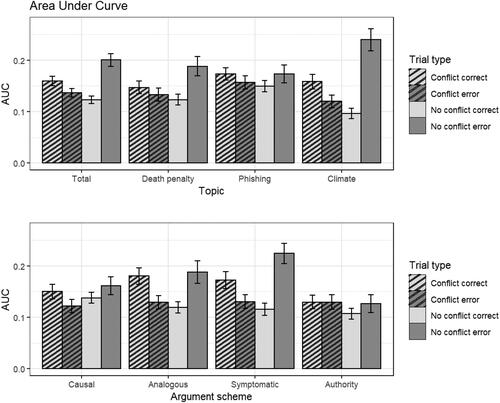

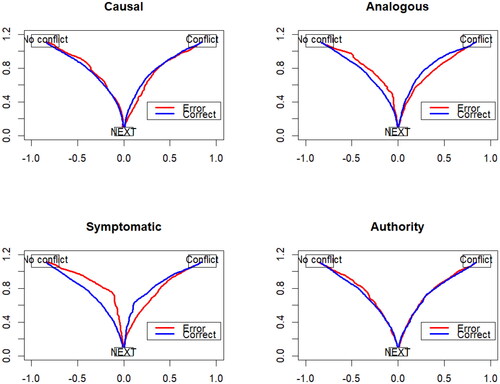

Figure 3 shows AUC for different types of trials. To test Hypotheses 1c and 2b, we ran a 2 × 2 factorial ANOVA with conflict (yes, no) and response (correct, error) as within-subjects independent variables and AUC as the dependent variable. Hypothesis 1c was that AUC would be larger on conflict trials than on no-conflict trials. Descriptively, AUC was slightly smaller on conflict trials (M = 0.14, SD = 0.11) than on no-conflict trials (M = 0.16, SD = 0.12), and the evidence was against a main effect of conflict, F(1,221) = 3.683, p = .056, ηp2 = 0.02, logBF10 = −0.992. The main effect of response was significant, F(1,221) = 16.081, p < .001, ηp2 = 0.07, logBF10 = 2.277, as was the interaction of conflict and response, F(1,221) = 29.007, p < .001, ηp2 = 0.12, logBF10 = 35.029. Hypothesis 2b was that on conflict trials, there would be no difference in AUC between errors and correct responses. To test this, we made a pairwise comparison within conflict trials. AUC was larger on trials on which participants gave correct responses (M = 0.16, SD = 0.13) than on trials on which they made errors (M = 0.14, SD = 0.13), t(221) = 2.173, p = .031. Thus, the results were against Hypothesis 2b, although the small effect size, d = 0.16, indicates no meaningful difference, and the evidence was weak, logBF10 = 0.384 (“worth a mention”, according to Kass & Raftery, 1995).

Explorative analyses

Exploratorily, we tested adding topic and argument scheme as independent variables to the ANOVA, as we had done in the analyses of RT. Detailed results are in the Supplementary material; Table 3 presents the results separately for each topic and scheme. When examined separately for each topic, NHST analyses and Bayes factors gave somewhat different results. NHST indicated effects of conflict, and of response within conflict trials, for arguments on climate change but not for arguments on the other topics, whereas Bayes factors indicated no evidence for any differences.

Table 3. Analyses of differences in the area under curve, separately for arguments on each topic and each argument scheme.

When examined separately for each argument scheme, the results of NHST and Bayes factors converged. There was no evidence for an effect of conflict in any scheme. Within conflict trials, AUC differed between correct responses and errors on analogous and symptomatic arguments but not on causal or authority arguments. However, the evidence for the effects in analogous and symptomatic arguments was not strong. Nevertheless, these results suggest that the marginal overall difference in AUC between correct and error responses on conflict trials was driven by the analogous and symptomatic arguments and was not present in the other types of arguments. The accompanying figures illustrate these results.

Additional analyses (reported in the Supplemental material) gave no indication that the effects differed across levels of personal views.

Discussion

The present results add to previous research showing the ubiquity of myside bias in diverse types of tasks (Caddick & Feist, 2021; Edwards & Smith, 1996; Hoeken & van Vugt, 2016; McCrudden & Barnes, 2016; Mercier, 2016; Stanovich & West, 1997; Taber & Lodge, 2006; Wolfe & Kurby, 2017). When the claims of arguments and the participants’ personal views matched, participants correctly distinguished weak from strong arguments four out of five times. These findings align with much previous work showing that, overall, people with no training in argument analysis tend to evaluate informal arguments in line with the criteria for sound argumentation established by scholars (for a review, see Hornikx et al., 2021). However, when claims and personal views conflicted, performance dropped to 58% for weak arguments and 36% for strong arguments. In these conflict trials, the participants’ evaluations were strongly influenced by their personal views on the issues. For example, participants who believed that climate action cannot wait were reluctant to classify any arguments for the opposing side as strong, and reluctant to see fault in arguments that supported their views even when those arguments violated the principles of sound argumentation. Participants who held the opposite view struggled to give credit to strong arguments for the urgency of climate action and to admit that some arguments against it were weak.

Corrective and intuitive logic dual-process theories and informal argumentation

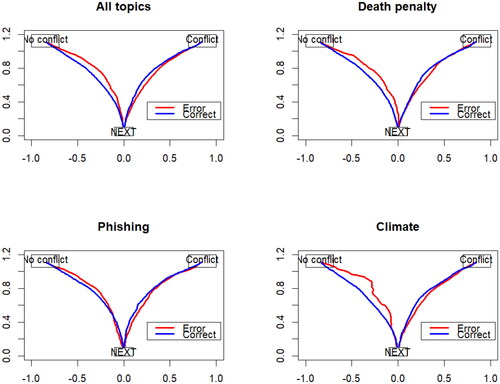

The main aim of the present study was to shed light on the types of cognitive processing involved when people judge the strength of arguments. To uncover how people process conflicts between personal views and the principles of good argumentation, we used mouse tracking to compare the two types of responses given in these conflict situations. Selecting responses in line with the principles of good argumentation did take longer and involve larger deviations in mouse trajectories than responding on the basis of personal views, but the small effect sizes indicated that these differences were practically meaningless. Moreover, the mere presence of conflict had no effect on response times or mouse trajectories.

These results are therefore incompatible with the traditional corrective dual-process theories (Evans, 2008). Corrective theories would predict that when participants respond in line with generally acknowledged principles of sound argumentation, they would tend to move the mouse first towards the response that aligns with their personal views, and only then towards the correct option. Instead, the present results are more compatible with the notion that participants moved the mouse directly to their chosen response, both when they responded based on personal views and when they responded based on principles of sound argumentation. These results align with previous mouse-tracking studies indicating that responses on other types of tasks, such as decisions regarding moral dilemmas, can be made without analytic intervention (Gürçay & Baron, 2017; Koop, 2013; but see Szaszi et al., 2018, and Travers et al., 2016, for mouse-tracking findings showing that correct responses on some formal reasoning tasks involve corrective mouse movements).

Can the results, then, be accounted for by intuitive logic theories? Intuitive logic theories, or Dual Process Theory 2.0 (De Neys, 2018, 2023; De Neys & Pennycook, 2019), have been developed to account for accumulating empirical findings showing that correct responding often does not require effortful correction. In diverse domains, including formal, moral, and social reasoning, logical responses and other responses long assumed to require effort typically arise intuitively. The evidence shows that while slow and effortful deliberation does sometimes correct faulty intuitions, in the types of tasks that have been studied, correct responses most typically arise rapidly and effortlessly (reviewed in De Neys, 2023). Thus, the best reasoners are not those who are best at correcting their faulty intuitions, but rather those who have the best intuitions (Bago & De Neys, 2019; De Neys & Pennycook, 2019; Thompson et al., 2018).

In the most recent formulation of the intuitive logic theory, De Neys (2023) suggests that all types of responses, including normatively correct ones, can be cued by intuitions. In this view, adults have internalised many of the principles needed to solve the types of tasks used in reasoning studies, to the point that these principles can be activated automatically. The present findings are compatible with this view. Following this line of thinking, the participants may have evaluated everyday arguments by relying on smart intuitions derived from relevant criteria for argument quality that they have internalised. By this account, people could recognise reasonable arguments as reasonable, and violations of argument criteria as violations, intuitively and effortlessly, without the need to activate Type 2 processing.

However, an alternative explanation for the present results is that analytic processing was involved, but in correct and biased responses alike. As dual-process theories maintain (Evans, 2019), the function of analytic thinking is often to validate and justify intuitive insights, not only to correct them. For example, a recent study (Bago et al., 2023) found that on the politicised topic of climate change, when participants were able to deliberate, they adjusted their judgments of argumentative texts to be more in line with their existing beliefs than when deliberation was restricted. Thus, participants in the present study may have been deliberating even when they responded in line with their personal views. Analytic processes may have been recruited for this either after the participants finished reading the materials or already while they were reading them.

To date, dual-process theories do not specify any absolute time limits for intuition. Thus, as in any study attempting to tease apart the involvement of intuition and analytic thinking, response times are of limited use for drawing conclusions. Bago and De Neys (2019) suggest a pragmatic criterion: responses that fall within the average time required to read the stimuli cannot involve much analytic thinking. In the present study, the median response time was around 4 s, which is not much longer than the time needed to read the arguments. By this standard, many of the present responses fell within the time limits of what can reasonably be considered intuitive. Thus, the present results are compatible with the notion that, even if not all correct responses stemmed from intuitive processing, some proportion of them may have.
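The pragmatic criterion of Bago and De Neys (2019) can be expressed as a per-trial comparison between response time and a reading-time baseline. The sketch below assumes such a baseline is available for each item (for instance, the mean time a separate group needs simply to read it) and reports the share of responses that fall within it. The function name, item labels, and numbers are hypothetical illustrations, not data or procedures from the present study.

```python
def share_within_reading_time(trials, reading_baseline):
    """Proportion of trials whose RT does not exceed the item's reading baseline.

    trials: iterable of (item_id, rt_seconds) pairs.
    reading_baseline: dict mapping item_id -> mean reading time in seconds
    (assumed to have been measured separately).
    """
    trials = list(trials)
    if not trials:
        raise ValueError("no trials to classify")
    within = sum(1 for item, rt in trials if rt <= reading_baseline[item])
    return within / len(trials)


# Hypothetical example data (not from the study).
baseline = {"a1": 3.8, "a2": 4.2}
trials = [("a1", 3.1), ("a1", 5.0), ("a2", 4.0), ("a2", 6.3)]
print(share_within_reading_time(trials, baseline))  # prints 0.5
```

A response falling within the baseline does not prove that it was intuitive; as noted above, time limits are only a rough, pragmatic indicator.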

However, it is also possible that participants made their decisions before reading to the end of the sentences. This could be interpreted as a type of bias in which participants ignore the reasons altogether and respond on the basis of their personal views alone. Because all stimuli presented the claim before the reason, it is certainly possible that this occurred in some proportion of the trials. However, the relatively high level of performance speaks against the interpretation that this happened frequently. For example, in evaluating strong otherside arguments, which seemed to be the most difficult type of argument to judge objectively, performance was far from zero, with more than a third of these arguments evaluated correctly. In the case of weak myside arguments, performance was as high as 58%. Thus, more often than not, the participants admitted that some of the arguments in favour of their preferred stance were weak. Overall, the results suggest that people tended to read the stimuli in full. Nonetheless, to rule out the explanation that participants made their decisions without reading the reasons, future studies should vary the format of the arguments so that, in some of the stimuli, the reasons precede the claims.

To determine the extent to which people use intuitive and analytic thinking to evaluate argument strength, more research is needed. One promising method might be to ask participants to explain their responses. As dual-process theories define them, one difference between intuition and deliberation is transparency (De Neys, 2023; Evans & Stanovich, 2013). If a response is based on deliberation, people should be able to explain, when asked, why they think the way they do. In contrast, intuitions cannot be explained (Bago & De Neys, 2019; Epstein, 2010; Kahneman, 2003).

Thus, future studies should combine quantitative evaluations of argument strength with requests for participants to explain their responses.

Can principles of sound argumentation be internalised?

If future studies support the notion that informal arguments can sometimes be evaluated correctly by intuition, this raises the question of which principles are intuitively activated. Have people internalised scheme-specific criteria for sound argumentation? For example, do people intuitively note whether a causal argument refers to consequences that are likely, or whether a symptomatic argument refers to relevant characteristics (signs)? Recent research on formal reasoning has called into question the notion that people could internalise very complicated logical or other types of principles. For example, Meyer-Grant et al. (2022) found that the logic-liking effect, which is often used as evidence for the intuitive logic account, can be explained in terms of experimental confounds. In another study, Ghasemi et al. (2022) found that superficial features of reasoning tasks that resemble logic can have effects on reasoning similar to those of actual logic. Thus, findings thought to indicate that intuitive logic interferes with belief-based processing may be due to so-called pseudovalidity. For example, on conditional reasoning tasks, participants may learn the heuristic that correct responses tend to be the ones where the conclusions are negative. Thus, Ghasemi et al. suggest, response patterns on reasoning tasks may be due to learning that takes place during a study session, rather than indicating a deeply internalised understanding of logical principles accumulated over a lifetime. Whether similar processes are involved in how people evaluate informal argumentation is a question for future research.

Evaluating arguments from different argument schemes

According to theories of argument evaluation, different aspects are relevant depending on the type of argument one is confronted with (van Eemeren & Grootendorst, 2004; Walton et al., 2008). By differentiating between causal, analogous, symptomatic, and authority arguments, we were able to construct arguments that were either weak or strong based on how well they supported the claims from the perspective of the critical questions associated with each argument type (Hoeken et al., 2020; van Eemeren et al., 2009, Chapter 7). However, we found only small differences in the patterns of results for different argument schemes. First, people were equally biased towards their personally favoured stances when they evaluated arguments from all schemes. Second, response times were also similar across argument schemes. Data on the shape of mouse trajectories indicated that overcoming myside bias may have required marginally more effort in analogous and symptomatic arguments than in causal or authority arguments, but the effect sizes indicated that these differences are of no practical importance. Thus, in terms of the involvement of intuitive and analytic processes, arguments representing different schemes seemed to be processed in the same way. This does not rule out that evaluating different types of arguments differs in other ways. It is possible that arguments from different schemes draw on different abilities or different types of knowledge. For example, evaluating them may vary in its reliance on the ability to form rich mental models (Johnson-Laird, 2008), to activate existing knowledge, or in some other respect. More research is needed to explore these possibilities.

Caveats

One factor that may limit the generalisability of the present type of research is that we studied individual arguments without context. A fundamental challenge when studying the cognitive processing of informal argumentation is contextuality. Authentic argumentation typically does not consist of short, individual standpoints each supported by exactly one argument. Rather, it tends to consist of less structured, less clearly formulated, meandering lines of reasoning. In the end, the quality of argumentation often depends on the starting points of the arguers (van Eemeren et al., 2014, Chapter 10.6). A reasonable evaluation should take into account the dialogic nature of argumentation, the arguers, and their context. However, methodologies such as mouse tracking require standardisation of the stimulus materials. Despite these challenges, for the sake of ecological validity, future studies should test whether the findings obtained using individual arguments also apply when people deal with arguments in context.

Another limitation relates to the skewed distributions of the participants’ views on the topics of the arguments. In particular, the vast majority of the participants strongly believed that climate measures cannot wait and that the death penalty should not be reinstated. This may limit the generalisability of the results. More research is needed to replicate the results using arguments on topics on which participants are more evenly divided for and against the claims. Moreover, the small number of participants who were climate sceptics or supported the death penalty might have limited the possibility of finding additional effects of personal views on the results (which were explored in the supplemental analyses).

Conclusions

Judging the strength of arguments ostensibly requires evaluating aspects such as the likelihood of consequences, the similarities and differences between points of comparison, and the credibility of authorities. Whichever aspect of an argument one is dealing with, evaluating it requires filling in the missing links between arguments and standpoints (Galotti, 1989; van Eemeren et al., 2014, Chapter 1.3.2). The present results on evaluating informal argumentation were not consistent with the traditional corrective view that correct responses involve first an incorrect intuition and then an effortful correction by analytic thinking. Rather, the results were compatible with the “intuitive logic” notion that many rules and principles needed in reasoning can be internalised to the point of automaticity and accessed effortlessly (De Neys, 2018; Thompson et al., 2018). However, alternative explanations cannot be ruled out by the present data, and more research is needed.

The task of evaluating informal arguments thus appears to place cognitive demands on reasoners similar to those of formal reasoning tasks of comparable length. Theoretically, this is not so surprising because, as Evans (2012) suggested, there may not be different kinds of human reasoning, just different traditions for studying formal and informal reasoning in psychology. However, the intuitive logic account has to date been developed mainly in the context of deductive formal reasoning (Bago & De Neys, 2017; De Neys, 2012; Newman et al., 2017). The present study is among the first to report results that are compatible with the intuitive logic, or smart intuitions, account using a task involving informal argumentation. Continuing this line of work will bring further clarity to how intuitive and analytic thinking interact in the types of informal thinking tasks that people face every day.

Acknowledgements

Thanks to Eero Häkkinen for programming the experiment and assistance in data handling.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

References

- Angell, R. B. (1964). Reasoning and logic. Appleton-Century-Crofts.

- Bago, B., & De Neys, W. (2017). Fast logic? Examining the time course assumption of dual process theory. Cognition, 158, 90–109. https://doi.org/10.1016/j.cognition.2016.10.014

- Bago, B., & De Neys, W. (2019). The smart system 1: Evidence for the intuitive nature of correct responding in the bat-and-ball problem. Thinking & Reasoning, 25(3), 257–299. https://doi.org/10.1080/13546783.2018.1507949

- Bago, B., Rand, D. G., & Pennycook, G. (2023). Reasoning about climate change. PNAS Nexus, 2(5), pgad100. https://doi.org/10.1093/pnasnexus/pgad100

- Ben-Shachar, M., Lüdecke, D., & Makowski, D. (2020). effectsize: Estimation of effect size indices and standardized parameters. Journal of Open Source Software, 5(56), 2815. https://doi.org/10.21105/joss.02815

- Bonnefon, J.-F. (2012). Utility conditionals as consequential arguments: A random sampling experiment. Thinking & Reasoning, 18(3), 379–393. https://doi.org/10.1080/13546783.2012.670751

- Bonner, C., & Newell, B. R. (2010). In conflict with ourselves? An investigation of heuristic and analytic processes in decision making. Memory & Cognition, 38(2), 186–196. https://doi.org/10.3758/MC.38.2.186

- Borchers, H. W. (2022). pracma: Practical numerical math functions. R package version 2.4.2. https://CRAN.R-project.org/package=pracma

- Britt, M. A., Kurby, C. A., Dandotkar, S., & Wolfe, C. R. (2008). I agreed with what? Memory for simple argument claims. Discourse Processes, 45(1), 52–84. https://doi.org/10.1080/01638530701739207

- Caddick, Z. A., & Feist, G. J. (2021). When beliefs and evidence collide: Psychological and ideological predictors of motivated reasoning about climate change. Thinking & Reasoning, 28(3), 428–464. https://doi.org/10.1080/13546783.2021.1994009

- De Neys, W. (2012). Bias and conflict: A case for logical intuitions. Perspectives on Psychological Science, 7(1), 28–38. https://doi.org/10.1177/1745691611429354

- De Neys, W. (2023). Advancing theorizing about fast-and-slow thinking. Behavioral and Brain Sciences, 46, e111. https://doi.org/10.1017/S0140525X2200142X

- De Neys, W. (Ed.). (2018). Dual process theory 2.0. Routledge.

- De Neys, W., & Pennycook, G. (2019). Logic, fast and slow: Advances in dual-process theorizing. Current Directions in Psychological Science, 28(5), 503–509. https://doi.org/10.1177/0963721419855658

- Demir, Y., & Hornikx, J. (2022). Sensitivity to argument quality: Adding Turkish data to the question of cultural variability versus universality. Communication Research Reports, 39(2), 104–113. https://doi.org/10.1080/08824096.2022.2045930

- Edwards, K., & Smith, E. E. (1996). A disconfirmation bias in the evaluation of arguments. Journal of Personality and Social Psychology, 71(1), 5–24. https://doi.org/10.1037/0022-3514.71.1.5

- Epstein, S. (2010). Demystifying intuition: What it is, what it does, and how it does it. Psychological Inquiry, 21(4), 295–312. https://doi.org/10.1080/1047840X.2010.523875

- Evans, J. St. B. T. (2003). In two minds: Dual-process accounts of reasoning. Trends in Cognitive Sciences, 7(10), 454–459. https://doi.org/10.1016/j.tics.2003.08.012

- Evans, J. St. B. T. (2008). Dual-processing accounts of reasoning, judgment, and social cognition. Annual Review of Psychology, 59(1), 255–278. https://doi.org/10.1146/annurev.psych.59.103006.093629

- Evans, J. St. B. T. (2012). Questions and challenges for the new psychology of reasoning. Thinking & Reasoning, 18(1), 5–31. https://doi.org/10.1080/13546783.2011.637674

- Evans, J. St. B. T. (2019). Reflections on reflection: The nature and function of type 2 processes in dual-process theories of reasoning. Thinking & Reasoning, 25(4), 383–415. https://doi.org/10.1080/13546783.2019.1623071

- Evans, J. St. B. T., & Curtis-Holmes, J. (2005). Rapid responding increases belief bias: Evidence for the dual-process theory of reasoning. Thinking & Reasoning, 11(4), 382–389. https://doi.org/10.1080/13546780542000005

- Evans, J. St. B. T., & Stanovich, K. E. (2013). Dual-process theories of higher cognition: Advancing the debate. Perspectives on Psychological Science, 8(3), 223–241. https://doi.org/10.1177/1745691612460685

- Freeman, J. B. (2018). Doing psychological science by hand. Current Directions in Psychological Science, 27(5), 315–323. https://doi.org/10.1177/0963721417746793

- Freeman, J. B., & Ambady, N. (2010). MouseTracker: Software for studying real-time mental processing using a computer mouse-tracking method. Behavior Research Methods, 42(1), 226–241. https://doi.org/10.3758/BRM.42.1.226

- Galotti, K. M. (1989). Approaches to studying formal and everyday reasoning. Psychological Bulletin, 105(3), 331–351. https://doi.org/10.1037/0033-2909.105.3.331

- Ghasemi, O., Handley, S., Howarth, S., Newman, I. R., & Thompson, V. A. (2022). Logical intuition is not really about logic. Journal of Experimental Psychology: General, 151(9), 2009–2028. https://doi.org/10.1037/xge0001179

- Gürçay, B., & Baron, J. (2017). Challenges for the sequential two-system model of moral judgement. Thinking & Reasoning, 23(1), 49–80. https://doi.org/10.1080/13546783.2016.1216011

- Hahn, U. (2020). Argument quality in real world argumentation. Trends in Cognitive Sciences, 24(5), 363–374. https://doi.org/10.1016/j.tics.2020.01.004

- Hahn, U., & Oaksford, M. (2007). The rationality of informal argumentation: A Bayesian approach to reasoning fallacies. Psychological Review, 114(3), 704–732. https://doi.org/10.1037/0033-295X.114.3.704

- Hoeken, H., & van Vugt, M. (2016). The biased use of argument evaluation criteria in motivated reasoning: Does argument quality depend on the evaluators’ standpoint? In F. Paglieri, L. Bonelli, & S. Felletti (Eds.), The psychology of argument – Cognitive approaches to argumentation and persuasion (pp. 197–210). College Publications.

- Hoeken, H., Hornikx, J., & Linders, Y. (2020). The importance and use of normative criteria to manipulate argument quality. Journal of Advertising, 49(2), 195–201. https://doi.org/10.1080/00913367.2019.1663317

- Hoeken, H., Šorm, E., & Schellens, P. J. (2014). Arguing about the likelihood of consequences: Laypeople’s criteria to distinguish strong arguments from weak ones. Thinking & Reasoning, 20(1), 77–98. https://doi.org/10.1080/13546783.2013.807303

- Hoeken, H., Timmers, R., & Schellens, P. J. (2012). Arguing about desirable consequences: What constitutes a convincing argument? Thinking & Reasoning, 18(3), 394–416. https://doi.org/10.1080/13546783.2012.669986

- Hornikx, J., Weerman, A., & Hoeken, H. (2021). An exploratory test of an intuitive evaluation method of perceived argument strength. Studies in Communication Sciences, 22(2), 311–324. https://doi.org/10.24434/j.scoms.2022.02.003

- Johnson-Laird, P. (2008). How we reason. Oxford University Press.

- Kahneman, D. (2003). A perspective on judgment and choice: Mapping bounded rationality. American Psychologist, 58(9), 697–720. https://doi.org/10.1037/0003-066X.58.9.697

- Kass, R. E., & Raftery, A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90(430), 773–795. https://doi.org/10.1080/01621459.1995.10476572

- Koop, G. J. (2013). An assessment of the temporal dynamics of moral decisions. Judgment and Decision Making, 8(5), 527–539. https://doi.org/10.1017/S1930297500003636

- Kuhn, D., & Modrek, A. (2017). Do reasoning limitations undermine discourse? Thinking & Reasoning, 24(1), 97–116. https://doi.org/10.1080/13546783.2017.1388846

- McCrudden, M. T., & Barnes, A. (2016). Differences in student reasoning about belief-relevant arguments: A mixed methods study. Metacognition and Learning, 11(3), 275–303. https://doi.org/10.1007/s11409-015-9148-0

- Mercier, H. (2016). The argumentative theory: Predictions and empirical evidence. Trends in Cognitive Sciences, 20(9), 689–700. https://doi.org/10.1016/j.tics.2016.07.001

- Meyer-Grant, C. G., Cruz, N., Singmann, H., Winiger, S., Goswami, S., Hayes, B. K., & Klauer, K. C. (2022). Are logical intuitions only make-believe? Reexamining the logic-liking effect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 49(8), 1280–1305. https://doi.org/10.1037/xlm0001152

- Morey, R., & Rouder, J. (2023). BayesFactor: Computation of Bayes factors for common designs. R package version 0.9.12-4.5. https://CRAN.R-project.org/package=BayesFactor

- Neuman, Y., Weinstock, M. P., & Glasner, A. (2006). The effect of contextual factors on the judgement of informal reasoning fallacies. Quarterly Journal of Experimental Psychology, 59(2), 411–425. https://doi.org/10.1080/17470210500151436

- Newman, I. R., Gibb, M., & Thompson, V. A. (2017). Rule-based reasoning is fast and belief-based reasoning can be slow: Challenging current explanations of belief-bias and base-rate neglect. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(7), 1154–1170. https://doi.org/10.1037/xlm0000372

- R Core Team. (2022). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

- Revelle, W. (2021). psych: Procedures for psychological, psychometric, and personality research. R package version 2.1.3. Northwestern University. https://CRAN.R-project.org/package=psych

- Schellens, P. J., Šorm, E., Timmers, R., & Hoeken, H. (2017). Laypeople’s evaluation of arguments: Are criteria for argument quality scheme-specific? Argumentation, 31(4), 681–703. https://doi.org/10.1007/s10503-016-9418-2

- Shaw, V. F. (1996). The cognitive processes in informal reasoning. Thinking & Reasoning, 2(1), 51–80. https://doi.org/10.1080/135467896394564

- Stanovich, K. E. (2009). Distinguishing the reflective, algorithmic, and autonomous minds: Is it time for a tri-process theory? In J. St. B. T. Evans & K. Frankish (Eds.), In two minds: Dual processes and beyond (pp. 55–88). Oxford University Press.

- Stanovich, K. E., & West, R. F. (1997). Reasoning independently of prior belief and individual differences in actively open-minded thinking. Journal of Educational Psychology, 89(2), 342–357. https://doi.org/10.1037/0022-0663.89.2.342

- Svedholm-Häkkinen, A. M., & Kiikeri, M. (2022). Cognitive miserliness in argument literacy? Effects of intuitive and analytic thinking on recognizing fallacies. Judgment and Decision Making, 17(2), 331–361. https://doi.org/10.1017/S193029750000913X

- Szaszi, B., Palfi, B., Szollosi, A., Kieslich, P. J., & Aczel, B. (2018). Thinking dynamics and individual differences: Mouse-tracking analysis of the denominator neglect task. Judgment and Decision Making, 13(1), 23–32. https://doi.org/10.1017/S1930297500008792

- Taber, C. S., & Lodge, M. (2006). Motivated skepticism in the evaluation of political beliefs. American Journal of Political Science, 50(3), 755–769. https://doi.org/10.1111/j.1540-5907.2006.00214.x

- Thompson, V. A., Evans, J. St. B. T., & Handley, S. J. (2005). Persuading and dissuading by conditional argument. Journal of Memory and Language, 53(2), 238–257. https://doi.org/10.1016/j.jml.2005.03.001

- Thompson, V. A., Pennycook, G., Trippas, D., & Evans, J. St. B. T. (2018). Do smart people have better intuitions? Journal of Experimental Psychology: General, 147(7), 945–961. https://doi.org/10.1037/xge0000457

- Travers, E., Rolison, J. J., & Feeney, A. (2016). The time course of conflict on the Cognitive Reflection Test. Cognition, 150, 109–118. https://doi.org/10.1016/j.cognition.2016.01.015

- van Buuren, S., & Groothuis-Oudshoorn, K. (2011). mice: Multivariate imputation by chained equations in R. Journal of Statistical Software, 45(3), 1–67. https://doi.org/10.18637/jss.v045.i03

- van Eemeren, F. H., Garssen, B., Krabbe, E. C. W., Snoeck Henkemans, A. F., Verheij, B., & Wagemans, J. H. M. (2014). Handbook of argumentation theory. Springer.

- van Eemeren, F. H., Garssen, B., & Meuffels, B. (2009). Fallacies and judgments of reasonableness: Empirical research concerning the pragma-dialectical discussion rules. Springer.

- van Eemeren, F. H., & Grootendorst, R. (2004). A systematic theory of argumentation: The pragma-dialectical approach. Cambridge University Press.

- Voss, J. F., Fincher-Kiefer, R., Wiley, J., & Silfies, L. N. (1993). On the processing of arguments. Argumentation, 7(2), 165–181. https://doi.org/10.1007/BF00710663

- Walton, D., Reed, C., & Macagno, F. (2008). Argumentation schemes. Cambridge University Press.

- Wolfe, M. B., & Kurby, C. A. (2017). Belief in the claim of an argument increases perceived argument soundness. Discourse Processes, 54(8), 599–617. https://doi.org/10.1080/0163853X.2015.1137446