ABSTRACT

How can we better mediate processes of learning at large institutions? Learning analytics are used primarily in online and blended learning environments to expose patterns in learning behaviour or interaction. They make use of digital traces from virtual learning environments and combine these with other learner data. The goal is to assist both educators and learners in improving their practice. In this work, we apply the concepts of ‘psychological tools’ and ‘more knowledgeable others’ from Lev Vygotsky, and Reuven Feuerstein's model of Mediated Learning Experiences, to define prerequisites that learning analytics at scale must meet in order to be a meaningful tool for mediating learning. We present findings from an in-depth qualitative study with educators and learners at a large, online institution of higher education. We then map insights gained from this study to our model of mediated learning. The resulting analysis and recommendations indicate that we need more inclusive and dedicated practices in learning analytics development, as well as institutional will to create more complex and meaningful tools.

1. Introduction

Educational philosophers Vygotsky, Dewey and Piaget argued that learning is an active process in which we build knowledge through engaging with our environment and manipulating it (Vygotsky 1986; Dewey 1938; Piaget 1964). New knowledge is formed when preconceptions and new observations clash or are thrown off balance, an event most likely to occur in a social context with many different agents acting according to their own set of beliefs and motivations (Brooks 1990, 68). Lev Vygotsky proposed that engagement with knowledgeable others and with technology/tools for learning can lead toward ‘higher mental processes’ (such as metacognition), which facilitate an individual's transition from one set of ideas to another (mediated learning) (Kozulin and Presseisen 1995; Kozulin 1998). Reuven Feuerstein built on this work, proposing a range of criteria as essential for producing an impactful Mediated Learning Experience (MLE) (Presseisen and Kozulin 1992; Feuerstein, Feuerstein, and Falik 2015).

In online or blended learning environments, individuals may not have as much contact with others, or be able to witness their learning strategies (Zilka, Cohen, and Rahimi 2018). However, one benefit of online and blended learning is that the students' activities are somewhat more visible to the institution through trace data and other interactions with the technology used for educational delivery. Institutions can analyse this data and use it to promote students' and educators' understanding of their learning strategies and approaches (Winne 2017). This process and its outcomes are what we refer to as ‘Learning Analytics’.

In this work, we have applied the educational construct of ‘Mediated Learning’ to understand the potential of learning analytics research to shape learning or teaching processes.

We identified the following questions as being necessary to consider. The first two questions map back to the concept of ‘psychological tools’ from Vygotsky; the last two questions refer back to the Universal Criteria of Mediated Learning from Feuerstein:

How do learners and educators understand learning? How do they currently try to influence it?

Which factors appear to impact their perceptions and behaviours around learning analytics as a tool to understand learning?

What patterns can be observed in how educators and learners want to interact with learning analytics?

To what extent can we map these patterns to the framework of Mediated Learning to formulate a general set of guidelines for implementing learning analytics at scale?

To answer these questions, we conducted a series of in-depth qualitative interviews and focus groups about learning analytics with 18 educators and 22 learners at a large online university between 2016 and 2018. Our studies probed the challenges and barriers that educators and learners perceive around their practice, and the affordances they could most easily perceive in using educational data associated with learning analytics to inform how they teach or learn. In this paper, we present the analysis of these studies and our proposal for evaluating the potential of learning analytics platforms and technologies using the lens of mediated learning.

In the following sections, we first review briefly some challenges related to using learning analytics at scale, from their conception to deployment and evaluation. We provide the theoretical background for proposing Mediated Learning as a model for exposing and mitigating those challenges. We then describe our methodology and participants, and present key findings from our study. Finally, we close with some recommendations for institutions seeking to increase the impact of their learning analytics initiatives for mediating learning.

2. Challenges of learning analytics at scale

Learning analytics should (a) provide information that is relevant to various stakeholders (including students) and (b) create behavioural change in practice (Ferguson 2012). In this section, we discuss two main challenges to meeting those goals.

The first challenge is to identify information that is relevant. Ideally, one could demonstrate a clear link between the outputs of learning analytics, what a person did in response and the outcome of that decision on learning or teaching (Clow 2012). Within higher education institutions (HEIs) and with large numbers of students, however, impact can be difficult to establish at this level of granularity (Gašević, Dawson, and Siemens 2015; Tsai et al. 2019).

Educators and learners have different ways of understanding and measuring learning. One approach is therefore to use learning theories that are more holistic and interoperable with multiple learning strategies and learning analytics. For example, in Self-Regulated Learning (SRL) (Zimmerman and Schunk 2001; Matcha, Gasevic, and Pardo 2019), the learner learns to be responsible for setting and monitoring progress toward goals. However, the focus on learner autonomy can leave some learners behind, as many learners lack the skills necessary for recognising, understanding and regulating their learning strategies (Steiner, Nussbaumer, and Albert 2009). Hadwin and Oshige (2011) have argued that learning analytics can also support socially-shared regulated learning to help mitigate some of this risk, but these approaches do not appear to be applied at large-scale institutions. In addition, SRL theory does not adequately consider the extent to which learning analytics are perceived as adequate ‘evidence’ of learning or learning behaviour. There are reasonable concerns that they track what can be measured, rather than what needs to be known, which is problematic when establishing what successful learning looks like (Biesta 2007). This same concern can be mapped to the concept of Open Learner Modelling, in which learners gather individual insights from a wide variety of data about their learning behaviour and achievement (Bodily 2018).

Another solution that institutions may choose is to use more general proxies (such as retention or engagement with a virtual learning environment) as a substitute for specific learning models. The purpose is then to identify patterns and make predictions about learner behaviour (Liñán and Pérez 2015). Students whose patterns indicate that they might be more likely to leave their studies or to receive a low grade typically receive an intervention. This may include getting in touch with the student or directing them to other support services (Kuzilek et al. 2015).

However, this approach makes identifying specific impacts of learning analytics difficult. Impacts will be more related to macro effects on learning design or identifying at-risk learners (Sclater, Peasgood, and Mullan 2016; Ferguson and Clow 2017; Ifenthaler and Yau 2020). Research indicates that evaluations can also focus too much on usability (Verbert et al. 2013; Park and Jo 2015; Bodily and Verbert 2017), which risks conflating attitudes about a concept (in this case learning analytics) with attitudes about how that concept has been operationalised (for example, specific tools and their functionality) (Greenberg and Buxton 2008).

The second challenge relates to how we should design learning analytics when there are many potential stakeholders. Gathering ‘requirements’ may involve asking future users what data they would like to have, or distilling their needs from other data (Dyckhoff et al. 2012). However, research indicates that learners are consulted less often than educators (Verbert et al. 2013; Park and Jo 2015) and consultations with learners have been generally guided. In addition, the diversity of responses can be vast, motivating the belief that personalisation of learning analytics is the way forward for impacting learning (Dyckhoff et al. 2012; Schoonenboom 2014; Schumacher and Ifenthaler 2018).

There are cases in which personalisation may be both unnecessary and undesired. Khan (2017) noted that learners (and educators) may still lack foundational knowledge about learning analytics and their significance to be able to propose meaningful suggestions. In addition, fully customisable dashboards may be overwhelming for those without experience. Dyckhoff et al. (2012) suggest that different ‘sets of indicators’ might be useful in helping to semi-automate some processes that would be useful for novice versus expert users. In the section below, we discuss the ways in which Mediated Learning Theory can help bridge some of these challenges and improve our knowledge of how learning analytics could potentially impact learning.

3. Mediated learning

In this work, we propose the educational construct of ‘Mediated Learning’ as a learning theory that provides criteria to assess the potential of learning analytics research to shape learning or teaching processes. Essentially, it answers the question: What properties should learning analytics have to mediate learning? Institutions can then conduct an assessment of their practices and repair those challenges first, before engaging in more in-depth analyses of learning analytics' impact. In this section, we outline the main tenets of this construct and how it relates to the challenges described in the previous section.

As we outlined in the introduction to this article, Vygotsky's contributions to mediated learning involved how we gain access to new ways of looking at the world and interacting with it (Kozulin and Presseisen 1995; Kozulin 1998). Vygotsky's theories went on to form the basis for Activity Theory (Engeström 1999), which explored factors such as background, motivations and relationships that are influential on our learning and behaviour. However, Activity Theory does not appear to suggest a clear pathway for mobilising the theory into a practice that can be implemented. Reuven Feuerstein filled this gap with a more socio-cultural perspective on mediated learning, which includes a range of criteria he proposes as essential for producing an impactful Mediated Learning Experience (MLE) (Presseisen and Kozulin 1992; Feuerstein, Feuerstein, and Falik 2015). We used these criteria in our analysis to assess what learning analytics can offer learners, both in terms of the technology that is currently used and how it could be developed further in the future.

Feuerstein proposed three universal criteria that all Mediated Learning Experiences (MLEs) will share. The first criterion is ‘Mediation of Intentionality and Reciprocity’. What is important for this criterion is that the learner understands that learning is a mutual process and that the way they learn is as important as what they learn (Ukrainetz et al. 2000). This might involve activities such as integrating a learner's perspective directly into the organisation of the classroom routines or negotiating rules of communication.

The second criterion is ‘Mediation of Transcendence’, which refers to information that is made available to the learner with broader context (Presseisen and Kozulin 1992). Transcendence is mediated when the learner is guided in the process of categorising information and incorporating it into known models. For example, the language teacher might offer a student a way to classify a verb on the basis of its ending or remind the student whether it is a regular or irregular verb. This is an important factor in ensuring that learners can transfer what they have learned to different contexts, a skill that many learners find difficult to master (Ge and Land 2003).

The final criterion is ‘Mediation of Meaning’. In any given learning experience, the mediation of meaning involves ensuring that the learner is capable of grasping why something should be learned and for what it may be useful (Presseisen and Kozulin 1992). One way to do this is to begin with what a learner finds meaningful already. A Montessori approach, for example, leverages the learner's own attention toward learning what is interesting for them, so that whatever the student learns makes a more lasting ‘impression’ (Feez 2007, 79).

Other areas of mediation that impact ‘propensity to learn’, according to Feuerstein, are: a feeling of competence (a sense of self-efficacy), regulation and control of behaviour, sharing behaviour (between and among peers), individualism and psychological differentiation, all goal-related activity (including goal-seeking, planning, achieving, etc.), a desire for challenge, an appreciation for novelty and complexity, optimistic alternatives, a feeling of belonging and a sense of the human being as a ‘changing entity’ (Presseisen and Kozulin 1992). This list, which is not exhaustive, is reminiscent of some of the learning theories that are associated with learning analytics, such as self-regulated and socially-shared regulated learning. Mediated Learning provides a ‘stage-setting’ frame for those activities to take place. This means that if we evaluate learning analytics in terms of their potential to mediate learning, we will also evaluate their potential to contribute to self-regulated learning.

We determined that Mediated Learning Theory could help us to organise certain patterns that emerged in the data and identify important relationships, in particular how to understand the space between learners' goals and their achievements. The aim is to test learning analytics against the universal criteria to analyse its potential as a mediatory agent in learning, delivering transition and change toward achievable goals.

4. Affordances

Though we identified Mediated Learning as the lens through which to analyse our data, we needed an additional model for how to collect our data. This model had to be consistent with Mediated Learning Theory, meaning that we would need to connect it to the psychological tools that learners already have. For this reason, we identified ‘affordances’ as a useful approach. We felt this would best represent the opportunities that educators and learners can already see and would be more likely to adopt.

‘Affordances’ are properties of an object that are perceived by a user, presenting action potential for what can be done with that object (Norman 1999). Using a hammer as an example, one can perceive the qualities of a hammer: that it is hard, that it is heavy, that it can be held in one hand. What can be done with that hammer is limited by its properties, but also by the creative observation and consideration of the user.

To capture both participants' understanding of how learning analytics can be used, as well as how they would specifically use it themselves (metacognition), we did not consider only one type of learning analytics tool. Instead, we shared with participants an array of possible tools and their functionalities, and collected affordances relating to all of them.

Affordance Theory has become a popular lens through which to interpret human understanding of information systems (Pozzi, Pigni, and Vitari 2014). However, Oliver (2016) has cautioned technologists and developers against assuming too much about intentions to adopt technology on the basis of affordances. For this reason, our methodology pays extra attention to those affordances which prompt metacognitive reflection in learners (any statement that reflects ‘cognition about cognition’; Flavell 1979), as these indicate a clearer connection between an affordance and an intention to use an object for a specific, already acknowledged purpose.

5. Methods

Given that we wished to identify what is important to educators and learners, in as much detail as possible, our research was based on a qualitative approach (Creswell and Poth 2017; Lincoln and Denzin 2000). We chose to conduct a case study, because it was necessary to make observations within a ‘real life’ environment (Yin 2003), where learning analytics are deployed and could be expanded. We focussed on a single case, so that the variable we want to explore, what learning analytics need to provide to mediate learning, is not dependent on certain institutional features and factors that we did not have the resources to explore.

The case study environment is a long-time provider of distance and online education with considerable access to learner data and a strong institutional identity of technology-enhanced, inclusive learning. Learning analytics has garnered serious interest in the institution, due to its potential to identify at-risk learners, to evaluate learning design and assessment, to categorise learners and to understand more about the social aspects of learning.

We used a combination of qualitative interviewing (Creswell and Clark 2007) and focus groups (Gibbs 1997) to examine perceptions of learning analytics first more generally, and then with more attention to the meso-level, organisational and departmental factors involved. All interviews and focus groups were transcribed, coded and analysed through a combination of constant comparison (Charmaz 2011, 2014), participant checking (Charmaz 2014) and exchanges with experts in the field about emerging categories of importance (Barbour 2001).

5.1. Participants and procedure

The study involved educators (n = 18) and learners (n = 22) currently in practice at the case study site. Most students were undergraduates (n = 20) who had completed their first year. Four educators were in senior roles with 5+ years of experience. The rest were in junior roles with less than 3 years of experience. We identified participants using a convenience sampling strategy (Charmaz and Belgrave 2012) through email, message board and personal contact. This was followed by purposive sampling as categories of interest began to emerge from the data (Charmaz 2014; Bryant and Charmaz 2007). When ‘constant comparison’ among the transcripts and the emergent theoretical categories no longer produced new insights, saturation was determined to have been achieved (Charmaz 2011). Focus groups took place in both homogeneous and heterogeneous groups in terms of domain, background and level of experience, to test the strength of emerging categories (Charmaz and Belgrave 2012). Focus groups were not mixed between learners and educators, in order to avoid power dynamics that might discourage open communication about learning and teaching practices.

Before each interview or focus group, participants signed a consent form and were made aware of data protection measures. Interviews and focus groups were conducted by Skype, Web-Ex or face-to-face, at the convenience of the participant. Each interview was expected to take approximately 60 minutes with educators and 25 minutes with students (because learners do not tend to have much exposure to learning analytics; Dawson et al. 2014). Each focus group lasted approximately 1.5–2 hours.

The question schedule was the following:

What challenges do you face currently in your practice?

What would you like to achieve?

How do you plan to get there?

How could learning analytics assist?

For the last question, participants were given a basic description of some of the data that is captured in learning analytics research. Any affordances they perceived in using that information were documented.

6. Findings

The findings below are divided by (a) the challenges that participants perceived in their practice, (b) the ways they recognise learning, (c) their current strategies, and (d) their ideas about how learning analytics could assist them in meeting their objectives.

6.1. Educator challenges

Educators reported 26 distinct types of challenges, which could be classified into 9 larger themes: communication with learners (lack of visual referencing, lack of feedback), developing interaction among students (difficulties creating a sense of community or generating discussion), institutional challenges (pressure, confusion of roles), a lack of information about learner background (with regard to demographic information, learner diversity, previous knowledge and experience), progress (interpreting progress, flawed assessment procedures), dynamic agency (transience of learners' goals, peaks and troughs in learner engagement), ethics (around both data protection and retention), and realities (such as societal changes in the structure of education or the use of technology, which cannot be avoided).

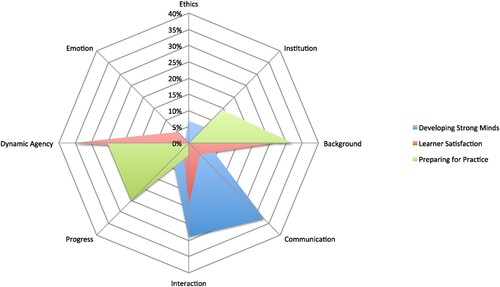

We conducted a frequency analysis of themes across the participants. We observed that the 8 main challenges (excluding ‘realities’) clustered around three larger educational goals mentioned by educators: to ‘satisfy learners’, to ‘develop strong minds’ or to ‘prepare learners for practice’ (Figure 1).
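As an illustration only, a frequency analysis of this kind could be sketched as follows. The participant labels, theme codes and the `theme_frequencies` helper are hypothetical and do not come from the study data; the sketch assumes that each participant is counted at most once per theme, which may differ from the counting rule actually used.

```python
from collections import Counter

# Hypothetical coded data: participant label -> theme codes assigned to
# that participant's statements. Labels and codes are illustrative only.
coded_statements = {
    "P1": ["communication", "progress", "communication"],
    "P2": ["learner background", "progress"],
    "P3": ["ethics", "communication"],
}


def theme_frequencies(coded):
    """Count how many participants mentioned each theme at least once."""
    counts = Counter()
    for themes in coded.values():
        # set() ensures a participant contributes once per theme,
        # however often they repeated it (an assumption of this sketch).
        counts.update(set(themes))
    return counts


frequencies = theme_frequencies(coded_statements)
for theme, n_participants in frequencies.most_common():
    print(f"{theme}: mentioned by {n_participants} participant(s)")
```

Counting participants rather than raw mentions keeps one talkative participant from dominating a theme; counting raw mentions instead would only require dropping the `set()` call.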

Educators working in the Science, Technology, Engineering and Maths (STEM) faculty, for example, as well as those in Finance and Accounting, tended to report more frequently about preparing learners for practice and challenges around understanding learner backgrounds and current progress.

You just don't know anything about them and it's very frustrating. I suppose you have to hope that they've managed to gain some ability to manage their own learning. – Harry

Educators from the Arts and Humanities, as well as the Social Sciences, tended to focus in their statements on developing strong minds, and challenges often related back to assessment procedures and perceived demands of the domain.

In a real classroom, you would see people looking at you or even raising their hands, but in the virtual classroom, there is this awkward silence all the time. Even though you have the option of raising your hand in [name of software], nobody ever does that. – Uwe

The goal of Learner Satisfaction (making learners happy) was most related to challenges of using Massive Open Online Courses (MOOCs) or having class sizes of 150+ students.

6.2. Learner challenges

For learners, we identified three major motivations for learning, which were to ‘achieve one's own personal best’, to ‘obtain qualifications’ for a particular type of employment and for the ‘pure joy of learning’. These motivations often contextualised the types of challenges they perceived.

First-time STEM students at the case study site appear to share the same goals as educators working in STEM: to focus on the development of qualifications toward a specific professional context.

I really thought it would make me better at my job, give me an advantage over other people. – Jonah

For those students, challenges can be around dissatisfaction with institutional links to industry and clarity of study materials (as many students with this motivation reported self-study as their preferred mode).

First-time students in the Arts and Humanities tended to express more abstract goals of obtaining as much theoretical and practical knowledge as possible, to develop a strong mind, rather than having their eye on a specific professional context.

So, for me, I think the main thing is, I just want to see what I can do. It's seeing how far I can push myself in a sense of my ability. – Harriett

For students in this category, challenges around recognising learning progress and the ability to compare with others are more common.

Learners who are motivated by the joy of learning reported some of the same challenges as the other two groups, depending on what they enjoy most about the learning process.

However, at the case study site, many students have had another career or educational experience before starting their studies. One participant described how his return to education late in life has impacted his goals:

Obviously, if this was going to be used some way in your career, for your future, you always want to do your best, right? And so I think a normal student, a younger student, who is wanting to make use of his degree, would be more concerned than I was. – Ralf

In addition, many of the students we spoke to were entering a completely new discipline after many years in an entirely unrelated discipline. In these cases, learners' goals and strategies tended to map more closely to their first discipline, rather than the one represented by their studies at the case study site. One participant, returning to the Humanities after a career in finance said:

I can only say that I am a product of 50 years of being results orientated. – Chris

Understanding the role of previous education and professional experience in shaping how learning is practised and recognised was determined to be critical for understanding learner journeys. We discuss this in more detail in the section on Learner Strategies.

6.3. How educators recognised learning

Tables 1–4 show the major and sub-categories (open codes) that relate to how educators understand learning. While there were many commonalities shared across educators (such as sensing reciprocity from learners and feeling positive about the learning experience from their own perspective), some sets of indicators tended to cluster together. Table 1 includes indicators that were shared among all educators. Table 2 includes indicators most often referred to by educators who were focussed on practice. Table 3 shows the indicators for recognising learning through learner satisfaction and finally, Table 4 describes the indicators used in recognising learning through development. Some of the indicators that educators name are objective, for example, in the demonstration of a skill or retention.

Table 1. Recognising learning through reciprocity.

Table 2. Recognising learning through practice.

Table 3. Recognising learning through learner satisfaction.

Table 4. Recognising learning through development.

Lucy: In terms of content, I like to think about it in terms of whether or not it can stand up to scrutiny from the outside.

Ivan: Yes, the student should be able to pursue a PhD degree in Manchester and not look like [an idiot] when they show up for the interview because whatever they've been taught is not what is accepted within the community.

Other indicators require some subjective and reflexive judgements along the way. For example, cohesion is described as an overall sense-making activity, looking at the learner's work and deciding if it is well-rounded and appropriate.

It's in those little details. Does the person know how to transition from one idea to the next? Is there a flow? Does it ‘hang together’? You get a sense for that. A student who is not just repeating back what you say, but knows how to, I suppose, navigate around the topic. – Nora

Educators understand excitement and energy through recognising an improvement in the speed and quality of learner contributions to the class, where the threshold for an ‘improvement’ is defined by the educator through their experience of the cohort.

Educators are also able to operationalise these indicators and in some cases translate them to machine-readable proxies. Table 5 provides a list of the proxies educators reported during our study. This list is not exhaustive, but it does demonstrate that educators are drawing from some of the same sources of information as many learning analytics tools and technologies, such as participation in learning forums and improvement over time.

Table 5. Educator proxies for learning.

6.4. How learners recognised learning

Learners reported recognising learning typically through comparisons, even with themselves:

I go back to those first tutorials and think, oh my God, seriously, look how far I've come. I know I've learned a vast amount this year, a serious amount. I think it's from looking back and realising that actually, that was really difficult at the time and now that's okay. – Vicky

Table 6 outlines the different types of comparisons and other indicators that learners reported using to understand how they are doing in their studies.

Table 6. How learners recognise learning.

However, learners interpret these indicators in different ways. If a learner is hoping to pass with a 65/100 and then receives a 75/100, for example, the learner will view this as a sign of learning. However, if that learner had planned to get a top mark, and then received a 75/100, something has most likely gone wrong.

I got low 70s for my first TMAs this year, which surprised me, because I was a 80s kind of student the previous year. It got me thinking about whether or not I was taking it in. – Mascha

Unfortunately, it can be easy to miss these sorts of subtle problems, because they rely on having access to the individual learner's goals, which are often obfuscated for the educator (and they are not always linear or comparable with previous results). And as one participant noted, these goals may change for each learning experience.

I wouldn't really be able to say, simply based on my marks if I was doing well or not. I mean, compared to what? Each assignment is so different, each module, each tutor. I can get 1sts for 4 modules and 3rds for the rest. My performance has very little to say about what I've actually learnt. – Moritz

In addition, it can be difficult to spot some needs for learning, because a learner's capacity is hard to determine. As one educator noted:

You have to think about the high performers too, and actually, the mid-range students. Where are they? How can they be supported? I would really love to understand that middle-of-the-road type of student. Where is the support for that student? – Nora

However, some types of comparisons were relatively common for learners to report. For example, in terms of comparing performance or activity against that of other students, it was quite common for learners to be aiming at passing, doing average, doing better than average, and scoring within the top 10%. Those that were interested in being able to compare with the discipline often related this to being able to compare one's own work with an acceptable example. This was particularly true for writing assignments or other types of non-algorithmic activities.

6.5. Learner strategies

The ways in which learners in our study recognised learning were also reflected in the types of strategies they reported. Learner strategies appeared to follow primarily one of three different approaches. In the first approach, which we called an ‘Open Strategy’, learners reported that they (a) were currently open to questioning their current strategies and (b) were actively seeking inspiration from outside of themselves. These learners tended to have more general than specific strategies. They also tended to struggle with the isolation of online learning. Learners with open strategies reported that they found it difficult to cope when their social needs in learning were not met.

I do like to discuss what I'm thinking and the opportunity really isn't there. I think, maybe, if I was discussing it more in a group, I might hear things, bounce ideas around and then come away and maybe just do one draft and then change it a little bit…. – Harriett

Learners with ‘Pragmatic Strategies’ (a) already had specific strategies for optimising performance, (b) were typically seeking the easiest path to achieve the aim, and (c) generally looked only for specific advice from other people around them. Pragmatic learners tended to express more confidence around their learning and were, surprisingly, most eager to share their strategies with others. In gaining strategies from others, pragmatic learners described their behaviour more like information gathering, seeking out specific answers to specific questions from classmates and tutors.

I've found I learn well when I have very concrete examples of things, of how to play out key concepts. I've also realised that I am not terribly good at focussing on key concepts and I love going my own way, exploring my own literature and my own roots. – Jaisha

In contrast, learners with ‘Applied Strategies’ appeared to organise their learning around personal mastery. Such learners (a) already had strategies for maximising performance, (b) were typically seeking the best path to achieve the aim, and (c) looked for both general and specific advice from other people around them.

Learners with a background in STEM, medicine and finance exhibited more pragmatic and applied strategies. Some learners even described very clearly how this background affected their current learning experience. Learners with a background in the Arts and Humanities were the only learner participants to report having open strategies. Participants with open strategies saw interaction with others as a large part of their own learning.

6.6. Intentions and affordances

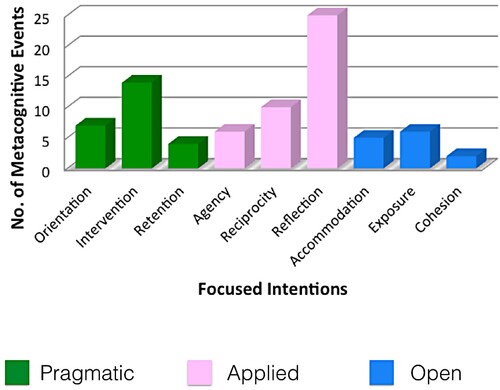

Affordance statements that the participant related directly to their own, specific practice were coded as ‘Metacognitive Activity’. These were important, as we have previously stated, to capture the psychological tools that participants currently have accessible. During the course of the focus groups, we captured 179 metacognitive events. Through removal of repetitions by single participants, this was reduced to 46 metacognitive events.

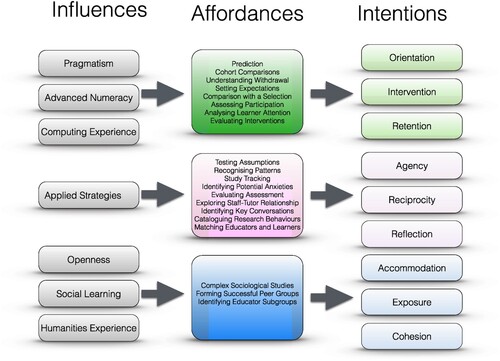

The 46 metacognitive events were then grouped into categories based on the most important general intention of that activity, as reported by the participant. This process produced 9 categories, which we titled ‘Focussed Intentions’. Table 7 describes each of those intentions in more detail. Figure presents the number of metacognitive events that were identified for each of the focussed intentions. We observed that focussed intentions also clustered according to the learning strategies and teaching goals of participants.
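The two bookkeeping steps described above (removing repetitions by a single participant, then counting events per focussed intention) can be illustrated with a minimal sketch. This is not the coding procedure used in the study, which was carried out qualitatively. The rows, participant labels and intention names below are hypothetical placeholders, and treating a "repetition" as the same statement from the same participant is an assumption made only for illustration.

```python
from collections import Counter

# Hypothetical coded events: (participant, affordance statement, focussed intention).
# These rows are illustrative placeholders, not data from the study.
coded_events = [
    ("P1", "plan study time", "reflection"),
    ("P1", "plan study time", "reflection"),    # repeated by the same participant
    ("P2", "plan study time", "reflection"),    # same statement, different participant
    ("P2", "form peer groups", "reciprocity"),
    ("P3", "predict drop-out", "retention"),
]


def deduplicate(events):
    """Drop repeats of the same statement by the same participant, keeping first-seen order."""
    seen = set()
    unique = []
    for participant, statement, intention in events:
        key = (participant, statement)  # assumed definition of a repetition
        if key not in seen:
            seen.add(key)
            unique.append((participant, statement, intention))
    return unique


def count_by_intention(events):
    """Count how many events fall under each focussed intention."""
    return Counter(intention for _, _, intention in events)


unique_events = deduplicate(coded_events)
counts = count_by_intention(unique_events)
print(len(coded_events), "events reduced to", len(unique_events))
print(dict(counts))
```

The same statement made by two different participants is kept as two events here, since only repetitions by a single participant are removed.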

Table 7. Intentions behind affordances.

Findings indicate that most metacognitive activity was located around affordances for reflection and reciprocity, such as recognising patterns in the data and testing pedagogical assumptions, activities that required participants to consider what it is that they are trying to accomplish and how it can be measured:

One idea that I've had…some sort of study planning tool…and you can plot your study of each topic based on the recommended hours and extra activity and it sends a notification to your phone and emails you to remind you of your study plans each day. – Jonah (Learner)

The ability to ‘play with models’ also featured strongly here:

If I had the choice, I would not have some top down, ‘this is the best’…I would make it sort of automatic so people can access it and play with it and do creative things with it. – Jeremy (Educator)

When connecting affordances with real practice, educators and learners were looking to resolve real challenges, such as exploring their own assumptions, seeking patterns in their own data, finding novel ways of identifying learner emotion and of gaining access to different strategies and choices.

The ways in which educators and learners recognised learning were reflected in the types of affordances they perceived in using learning analytics to support their practice. If comparison with the discipline was important to the learner, for example, the learner would typically see affordances in text-based analysis of essays, such as extracting key concepts and determining the quality and trajectory of dialogue. They also saw more affordances for using learning analytics to support social processes, like the formation of peer learning groups:

I suppose to be able to highlight those students and put, you know, like-minded students together, because there are students that don't want to get involved. And I appreciate that there's people who just kind of want to get their head down, do their own work. But I'm not like that. – Vicky (Learner)

Figure illustrates these groupings as well as the contextual features reported by participants as influencing those intentions. Individuals in our study with more pragmatic approaches to education and experience with computing, for example, tended to focus on affordances around retention and interventions based on predictions. Individuals with more open approaches to education tended to seek out the more complex composite proxies for examining cohesion, accommodating different learner needs, and exposing learners to new ideas. Applied strategies were most associated with developing reciprocity in learning, working with the agency of learners and focussing on reflexive activities. Individuals with a pragmatic strategy, particularly when they had an understanding of advanced numeracy and computing, tended to perceive more affordances for helping learners to understand what is expected of them, to understand when they are struggling as quickly as possible, and to do whatever possible to retain the student. Individuals with a very open strategy, particularly if they highly valued interaction in learning, were more likely to perceive affordances that could help to identify and accommodate special needs. In addition, they were more likely to promote affordances that bring people together in the process of creating meaning.

Individuals who were transitioning between domains, and those who reported well-developed applied strategies, circumscribed a middle space between the two territories of open, social learning and optimised, effective strategies. In this space, the intentions appeared to be focussed on creating buy-in to improve value by involving different stakeholders more in the process of data collection and interpretation. In addition, affordances tended to invite learners to consider the process of learning to be as important as the outcomes of learning. When discussing the potential of using learning analytics to track learners' histories while they study, for example, one student said:

If there are things people are looking at that I'm not looking at, I'd find that really interesting to see, in case there is something I find useful. It's a bit like peering into someone else's mind. – Moritz (Learner)

Finally, there is a sense that learning analytics can aid in the process of reflection on a much deeper level, still using all of the same tools and technologies. For example, participants who occupied this middle space tended to describe using learning analytics to understand more about how to optimise the teacher-student relationship, and to recognise different types of patterns in behaviour, including at the strategy level, in order to make the inner workings of individual study more transparent for learners.

One interesting point of note: for nearly all learners, tracking educator activity was important, whereas none of the educators mentioned this as a possibility. Some of the same measurements that educators would use to measure the interest and willingness of the learner were also proposed by learners to assess the commitment of the tutor, their responsiveness and their own interest in what they are teaching. Likewise, tracking student activity was an affordance that was only interesting to some students, whilst it was mentioned as an affordance by most educators participating in the study.

7. Discussion

With regard to RQ1 (how learners and educators understand their learning), our findings indicate that, while there is considerable diversity, we can also observe clusters in which the construct of learning is similarly understood. The factors that appeared to impact their perceptions and behaviours around learning analytics (RQ2) were most related to their previous experience with computing, their domain background (past and present), and their attitudes about assessment within their domain (for example, whether they believed it was possible to adequately assess skills in their area using the means currently provided). We observed patterns in the types of challenges shared by educators and learners with similar goals, as well as in the ways that they currently attempted to recognise their learning and in the affordances they saw in learning analytics to assist them in this task (RQ3).

With regard to how we can map such patterns to the framework of Mediated Learning (RQ4), we consider the following:

7.1. Psychological tools

Asking participants to reflect more generally on the properties of analytic data and its uses allowed them to vocalise their specific or individual interests in certain practices, rather than prompting them into a usability evaluation. From here, we could more easily assess which psychological tools learners have available, and where they see possibility to transition to new tools. It makes sense that we observe discipline clusters both in educators and students, as the discipline sometimes sets the psychological tools which are preferred or most useful in that domain.

We also found that learning analytics could operate as a ‘more knowledgeable other’ (Presseisen and Kozulin Citation1992; Kozulin Citation1998), if they give students access to other ways of thinking about or practising learning. In a typical classroom, learners can peer over each other's shoulders or read the faces in the room to understand where they stand, who is succeeding and to whom they might go for help. Learners in online or blended environments do not find it as easy to compare themselves with others. Learning analytics could help fill this gap, with appropriate input and guidance from educators and learners.

7.2. Mediation of intentionality and reciprocity

Most learning analytics initiatives at scale are not student-facing. Thus, they do not comply with Feuerstein's first universal criterion of a ‘Mediated Learning Experience’ (Presseisen and Kozulin Citation1992, 13). In order for this criterion to be met, learners would have to be much more deliberately integrated in how learning analytics are used and evaluated. The educators and learners in our study have provided many new and interesting proposals for learning analytics that indicate their need for more relational and interactive data. While these may not be features that are easy to implement, they can potentially say more about learning than current approaches. This can be considered the ‘high hanging fruit’ of learning analytics development.

7.3. Mediation of transcendence

Building on previous work on the limitations of self-regulated learning, we can conclude that mediation of transcendence would require much more facilitation of the process of monitoring one's own learning and of using technology to assist with this process. We did not find any evidence that learners were receiving these two types of training, embedded in their learning journey, other than in cases in which the educator was specifically interested in supporting this activity as part of their pedagogical process. This has implications for equality and inclusion moving forward, if those with certain skills and interests are more easily or sufficiently served by learning analytics than others.

7.4. Mediation of meaning

In our study, we observed that learners have their own ways of recognising their learning. Likewise, educators have their own home-grown analytics for understanding the learning of their students. Learning analytics researchers and technologists have already accepted that focussing on relevant data is integral to the success of learning analytics initiatives. However, this need not be entirely personalised. We found that clusters of indicators often occurred among individuals who shared the same types of psychological tools. We can leverage this knowledge to create a smaller selection of different learning analytics pathways and reduce some of the cost of fully personalised approaches.

7.5. Recommendations

On the basis of the above, we can make several recommendations for realising the potential of learning analytics to mediate learning (RQ3):

Consult with different departments in learning analytics pilots and evaluations. Ensure a sample that adequately represents faculty diversity.

Consider incorporating other types of data sources and machine learning techniques to ensure that a diversity of indicators can be covered.

Get user feedback on learning analytics outputs such as predictions or recommendations.

In the best case, provide an interactive dashboard for both learners and educators.

Ensure adequate training and experimentation phases for novices.

This set of guidelines is unique in that it outlines the pre-conditions necessary for learning analytics to have an impact on mediating learning. First, learning analytics should be inclusive and co-created. Second, learning analytics should be linked with meaningful assessment. Third, learning analytics should be reciprocal and interactive, so that users can provide feedback. Finally, and perhaps most importantly, learning analytics should be embedded within the professional practice and training of staff.

7.6. Limitations and future work

These findings could be further explored and validated using a survey instrument with a wider sample of educators and learners. Do these clusters stand up to scale? Can some of what learners and educators described, in terms of their activities, also be ‘seen’ in their traces in the VLE and online? How do systems work for non-normative students or those with less representation at the institution? These are important questions for immediate future research.

8. Conclusion and contribution

Learning analytics should provide learners with ‘actionable insights’ to support their learning journey (Ferguson Citation2012). We have operationalised this goal as ‘mediating learning’ and used the models of mediated learning from Vygotsky and Feuerstein to define that goal more precisely. We gathered evidence from learners and educators around the psychological tools they currently use to understand their learning, their understanding of learning analytics in general (Mediation of Intentionality and Reciprocity), their proposals for how anyone could use learning analytics to mediate learning (Mediation of Transcendence) and how they would specifically use learning analytics technology to support their own practice (Mediation of Meaning). Our guidelines for mediating learning provide a minimum viable context in which we argue that learning analytics could be most successful at helping learners use analytics to understand more about their learning. In addition, we presented an alternative perspective to personalisation that is less costly, highlighting how common aims lead to certain shared, observable behaviours. Finally, our guidelines echo the need for more diverse voices in learning analytics development and implementation. If learning analytics are based on data derived from what are perceived to be inadequate procedures, encouraging adoption will be difficult. With this knowledge, institutions looking to implement learning analytics initiatives to support learners at scale can consider whether they have enough time and resources to ensure the diversity of data, enough training and exposure to learning analytics, and reciprocal mechanisms to get meaningful feedback from users within all stakeholder groups.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Barbour, R. S. 2001. “Checklists for Improving Rigour in Qualitative Research: A Case of the Tail Wagging the Dog?” BMJ: British Medical Journal 322 (7294): 1115–1117.

- Biesta, G. 2007. “Why ‘What Works’ Won't Work: Evidence-based Practice and the Democratic Deficit in Educational Research.” Educational Theory 57 (1): 1–22.

- Bodily, R. 2018. “Open Learner Models and Learning Analytics Dashboards: A Systematic Review.” In Proceedings of the 8th International Conference on Learning Analytics and Knowledge, 41–50. ACM.

- Bodily, R., and K. Verbert. 2017. “Trends and Issues in Student-Facing Learning Analytics Reporting Systems Research.” In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, 309–318. ACM.

- Brooks, J. G. 1990. “Teachers and Students: Constructivists Forging New Connections.” Educational Leadership 47 (5): 68–71.

- Bryant, A., and K. Charmaz. 2007. The Sage Handbook of Grounded Theory. London: Sage.

- Charmaz, K. 2011. “Grounded Theory Methods in Social Justice Research.” The Sage Handbook of Qualitative Research 4: 359–380.

- Charmaz, K. 2014. Constructing Grounded Theory. London: Sage.

- Charmaz, K., and L. Belgrave. 2012. “Qualitative Interviewing and Grounded Theory Analysis.” The SAGE Handbook of Interview Research: The Complexity of the Craft 2: 347–365.

- Clow, D. 2012. “The Learning Analytics Cycle: Closing the Loop Effectively.” In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, 134–138. ACM.

- Creswell, J. W., and V. L. P. Clark. 2007. “Designing and Conducting Mixed Methods Research.”

- Creswell, J. W., and C. N. Poth. 2017. Qualitative Inquiry and Research Design: Choosing Among Five Approaches. London: Sage Publications.

- Dawson, S., D. Gašević, G. Siemens, and S. Joksimovic. 2014. “Current State and Future Trends: A Citation Network Analysis of the Learning Analytics Field.” In Proceedings of the Fourth International Conference on Learning Analytics and Knowledge, 231–240. ACM.

- Dewey, J. 1938. The Theory of Inquiry. New York: Holt, Rinehart & Winston.

- Dyckhoff, A. L., D. Zielke, M. Bültmann, M. A. Chatti, and U. Schroeder. 2012. “Design and Implementation of a Learning Analytics Toolkit for Teachers.” Educational Technology & Society 15 (3): 58–76.

- Engeström, Y. 1999. “Activity Theory and Individual and Social Transformation.” Perspectives on Activity Theory 19 (38): 19–30.

- Feez, S. M. 2007. “Montessori's Mediation of Meaning: A Social Semiotic Perspective”. Unpublished PhD thesis. University of Sydney. http://hdl.handle.net/2123/1859.

- Ferguson, R. 2012. “Learning Analytics: Drivers, Developments and Challenges.” International Journal of Technology Enhanced Learning 4 (5–6): 304–317.

- Ferguson, R., and D. Clow. 2017. “Where is the Evidence? A Call to Action for Learning Analytics.” In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, 56–65. ACM.

- Feuerstein, R., R. Feuerstein, and L. H. Falik. 2015. Beyond Smarter: Mediated Learning and the Brain's Capacity for Change. New York: Teachers College Press.

- Flavell, J. H. 1979. “Metacognition and Cognitive Monitoring: A New Area of Cognitive–developmental Inquiry.” American Psychologist 34 (10): 906–911.

- Gašević, D., S. Dawson, and G. Siemens. 2015. “Let's Not Forget: Learning Analytics are About Learning.” TechTrends 59 (1): 64–71.

- Ge, X., and S. M. Land. 2003. “Scaffolding Students' Problem-solving Processes in An Ill-structured Task Using Question Prompts and Peer Interactions.” Educational Technology Research and Development 51 (1): 21–38.

- Gibbs, A. 1997. “Focus Groups.” Social Research Update 19 (8): 1–8.

- Greenberg, S., and B. Buxton. 2008. “Usability Evaluation Considered Harmful (Some of the Time).” In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 111–120. ACM.

- Hadwin, A., and M. Oshige. 2011. “Self-regulation, Coregulation, and Socially Shared Regulation: Exploring Perspectives of Social in Self-regulated Learning Theory.” Teachers College Record 113 (2): 240–264.

- Ifenthaler, D., and J. Y.-K. Yau. 2020. “Utilising Learning Analytics to Support Study Success in Higher Education: a Systematic Review.” Educational Technology Research and Development 68 (4): 1961–1990.

- Khan, O. 2017. “Learners' and Teachers' Perceptions of Learning Analytics (LA): A Case Study of Southampton Solent University (SSU).” 14th International Conference on Cognition and Exploratory Learning in Digital Age (CELDA 2017).

- Kozulin, A. 1998. Psychological Tools: A Sociocultural Approach to Education. Cambridge, MA: Harvard University Press.

- Kozulin, A., and B. Z. Presseisen. 1995. “Mediated Learning Experience and Psychological Tools: Vygotsky's and Feuerstein's Perspectives in a Study of Student Learning.” Educational Psychologist 30 (2): 67–75.

- Kuzilek, J., M. Hlosta, Z.-Z. Herrmannova, and A. Wolff. 2015. “OU Analyse: Analysing At-risk Students At the Open University.” Learning Analytics Review, 1–16.

- Liñán, L. C., and Á. A. J. Pérez. 2015. “Educational Data Mining and Learning Analytics: Differences, Similarities, and Time Evolution.” International Journal of Educational Technology in Higher Education 12 (3): 98–112.

- Lincoln, Y. S., and N. K. Denzin. 2000. The Handbook of Qualitative Research. Thousand Oaks, CA: Sage.

- Matcha, W., D. Gašević, and A. Pardo, et al. 2019. “A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-regulated Learning Perspective.” IEEE Transactions on Learning Technologies 13 (2): 226–245.

- Norman, D. A. 1999. “Affordance, Conventions, and Design.” Interactions 6 (3): 38–43.

- Oliver, M. 2016. “What is Technology.” In Wiley Handbook of Learning Technology, 35–57.

- Park, Y., and I.-H. Jo. 2015. “Development of the Learning Analytics Dashboard to Support Students' Learning Performance.” Journal of Universal Computer Science 21 (1): 110–133.

- Piaget, J. 1964. “Part I: Cognitive Development in Children: Piaget Development and Learning.” Journal of Research in Science Teaching 2 (3): 176–186.

- Pozzi, G., F. Pigni, and C. Vitari. 2014. “Affordance Theory in the IS Discipline: A Review and Synthesis of the Literature.”

- Presseisen, B. Z., and A. Kozulin. 1992. “Mediated Learning: The Contributions of Vygotsky and Feuerstein in Theory and Practice.”

- Schoonenboom, J. 2014. “Using An Adapted, Task-level Technology Acceptance Model to Explain why Instructors in Higher Education Intend to Use Some Learning Management System Tools More Than Others.” Computers & Education 71 (1): 247–256.

- Schumacher, C., and D. Ifenthaler. 2018. “Features Students Really Expect From Learning Analytics.” Computers in Human Behavior 78 (1): 397–407.

- Sclater, N., A. Peasgood, and J. Mullan. 2016. Learning Analytics in Higher Education. London: JISC.

- Steiner, C. M., A. Nussbaumer, and D. Albert. 2009. “Supporting Self-regulated Personalised Learning Through Competence-based Knowledge Space Theory.” Policy Futures in Education 7 (6): 645–661.

- Tsai, Y.-S., O. Poquet, D. Gašević, S. Dawson, and A. Pardo. 2019. “Complexity Leadership in Learning Analytics: Drivers, Challenges and Opportunities.” British Journal of Educational Technology 50 (6): 2839–2854.

- Ukrainetz, T. A., S. Harpell, C. Walsh, and C. Coyle. 2000. “A Preliminary Investigation of Dynamic Assessment with Native American Kindergartners.” Language, Speech, and Hearing Services in Schools 31 (2): 142–154.

- Verbert, K., E. Duval, J. Klerkx, S. Govaerts, and J. L. Santos. 2013. “Learning Analytics Dashboard Applications.” American Behavioral Scientist 57 (10): 1500–1509.

- Vygotsky, L. S. 1986. “Thought and Language-Revised Edition.”

- Winne, P. H. 2017. “Learning Analytics for Self-Regulated Learning.” In Handbook of Learning Analytics. Beaumont, AB: Society for Learning Analytics Research.

- Yin, R. K. 2003. “Designing Case Studies.” Qualitative Research Methods 5 (14): 359–386.

- Zilka, G. C., R. Cohen, and I. Rahimi. 2018. “Teacher Presence and Social Presence in Virtual and Blended Courses.” Journal of Information Technology Education: Research 17: 103–126.

- Zimmerman, B. J., and D. H. Schunk. 2001. Self-regulated Learning and Academic Achievement: Theoretical Perspectives. New York: Routledge.