ABSTRACT

Dichotic listening is a well-established method to non-invasively assess hemispheric specialization for processing of speech and other auditory stimuli. However, almost six decades of research also have revealed a series of experimental variables with systematic modulatory effects on task performance. These variables are a source of systematic error variance in the data and, when uncontrolled, affect the reliability and validity of the obtained laterality measures. The present review provides a comprehensive overview of these modulatory variables and offers both guiding principles as well as concrete suggestions on how to account for possible confounding effects and avoid common pitfalls. The review additionally provides guidance for the evaluation of past studies and help for resolving inconsistencies in the available literature.

The term dichotic listening refers to a class of experimental paradigms traditionally applied to assess hemispheric differences in auditory processing, and today can be seen as an established paradigm in research and clinical settings (Lezak, Howieson, Loring, & Fischer, Citation2004; Ocklenburg & Güntürkün, Citation2018; Tervaniemi & Hugdahl, Citation2003). At the core of this class of paradigms is the dichotic presentation: a pair of auditory stimuli is presented simultaneously using headphones, with one of the two stimuli presented to the left ear, and the other one presented to the right ear. The instruction usually asks the participant to indicate what she/he hears on the given trial. When verbal stimulus material is used (e.g., spoken words or syllables), the stimuli presented to the right ear are typically reported (or detected) more accurately and faster than those presented to the left ear.

This right-ear advantage (REA), following the initial description and validation of the phenomenon by Doreen Kimura (Kimura, Citation1961a, Citation1961b), is nowadays widely interpreted to reflect left hemispheric dominance for speech processing. Conversely, a left-ear advantage is thought to indicate “atypical” right-hemispheric dominance. Models explaining this association have been presented in an excellent review by Hiscock and Kinsbourne (Citation2011) and will not be discussed here. Besides differences in direction, variations in the magnitude of perceptual laterality can also be considered (e.g., McManus, Citation1983; Zaidel, Citation1983). For this purpose, the difference in the number of correctly reported left- (Lc) and right-ear (Rc) stimuli can be expressed as a continuous variable, referred to as laterality index (LI; Bryden & Sprott, Citation1981; Marshall, Caplan, & Holmes, Citation1975). In its simplest form, it is determined as the difference relative to the sum of the correctly recalled stimuli (i.e., LI = (Rc−Lc)/(Rc+Lc)). Differences in magnitude have been interpreted either as reflecting gradual differences in the quality of hemispheric processing and/or as a delay or degradation of information processing due to inter-hemispheric integration (Braun et al., Citation1994; Jäncke, Citation2002; Zaidel, Citation1983).
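The simplest form of the laterality index given above can be written as a short Python sketch. The function name and the example counts are illustrative assumptions and are not taken from any of the cited studies.

```python
def laterality_index(right_correct: int, left_correct: int) -> float:
    """Simplest LI: (Rc - Lc) / (Rc + Lc).

    Positive values indicate a right-ear advantage, negative values a
    left-ear advantage; the index ranges from -1 to +1.
    """
    total = right_correct + left_correct
    if total == 0:
        # With no correct reports the index is undefined (division by zero).
        raise ValueError("LI is undefined when no stimulus was correctly reported")
    return (right_correct - left_correct) / total


# Hypothetical participant: 70 correct right-ear and 50 correct left-ear reports.
example_li = laterality_index(right_correct=70, left_correct=50)
print(round(example_li, 3))
```

Because the difference is scaled by the number of correct reports, participants with different overall accuracy can be compared on the same scale.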

Irrespective of the preferred model of interpretation, or whether one is interested in measuring direction or magnitude of laterality, the main concern when planning a dichotic-listening experiment is to get reliable and valid measures in a time-efficient way. Since the conceptualization of dichotic listening almost 60 years ago, a plethora of studies has provided a wealth of evidence on how characteristics of the experimental set-up (e.g., stimulus order, number of trials) and cognitive preferences (e.g., attentional control) introduce systematic response biases to dichotic listening. When uncontrolled or ignored, these modulating variables may represent a source of systematic error variance, threatening reliability of the obtained measures and efficiency of the paradigm. The present review provides a comprehensive overview of these modulating variables, offers suggestions on how to control these effects, and aims to help avoid potential pitfalls. Following the most common application of dichotic listening, the present review is specifically concerned with verbal dichotic-listening paradigms, which are designed to assess hemispheric differences in speech and language processing (Bryden, Citation1988; Tervaniemi & Hugdahl, Citation2003). However, most, if not all, variables discussed here will also affect performance in paradigms that aim to use dichotic listening to study other topics, such as emotional processing (Grimshaw, Kwasny, Covell, & Johnson, Citation2003; Voyer, Dempsey, & Harding, Citation2014), cognitive-control functions (Hjelmervik et al., Citation2012; Hodgetts, Weis, & Hausmann, Citation2015), or hemispheric integration (Steinmann et al., Citation2014).

Stimulus characteristics

Stimulus material

At the heart of planning a dichotic-listening paradigm is the selection of appropriate stimulus material. Stimulus material can be considered appropriate if it promises a valid inference about the underlying construct of interest based on the measured variable, that is, construct validity (Pedhazur & Schmelkin, Citation2013). As the construct of interest is speech-processing asymmetry, this objective has been approached by selecting verbal stimulus material in which the two stimuli contrasted in a dichotic presentation differ in a phonological feature relevant for speech processing. Three main types of stimulus material have prevailed in laterality research: numeric words, (non-numeric) words, and (non-word) syllables.

The use of numeric stimuli has the longest tradition in laterality research, as monosyllabic digit names were used by Doreen Kimura in her seminal articles (Kimura, Citation1961a, Citation1961b). Influenced by Broadbent’s (Citation1956) selective attention studies, she used series of three dichotically presented digit pairs (Kimura, Citation2011). Kimura’s studies were the first to validate dichotic listening against more direct indices of brain asymmetry, such as the sodium amytal (Wada) test or performance deficits due to known brain lesions, establishing the paradigm as a method of laterality research (Kimura, Citation1967). Both single-digit (e.g., Musiek, Citation1983) and two-digit numbers (Dos Santos Sequeira et al., Citation2006) have been successfully employed, and today dichotic-listening paradigms with digits are frequently used in clinical settings for the assessment of speech processing development and binaural integration (e.g., Cameron, Glyde, Dillon, Whitfield, & Seymour, Citation2016; Musiek, Citation1983).

Early on, also non-numeric words, mostly mono- or bisyllabic nouns, have been successfully introduced as alternative to numeric stimuli (Bryden, Citation1964) and validated, i.a. in split-brain patients (Sparks & Geschwind, Citation1968) and using the Wada test (Strauss, Gaddes, & Wada, Citation1987). Wexler and Halwes (Citation1983) later advocated the use of rhyming words as dichotic pairs (e.g., gage and cage), with the intention to increase the likelihood that the two stimuli fuse into one percept (as discussed in section: inter-channel spectro-temporal overlap). This Fused Dichotic Rhyming Word (FDRW) approach has been validated against the Wada test (Fernandes, Smith, Logan, Crawley, & McAndrews, Citation2006; Zatorre, Citation1989) and hemispherectomy (de Bode, Sininger, Healy, Mathern, & Zaidel, Citation2007), and represents one of the most-frequently used dichotic-listening paradigms in the context of laterality research today (e.g., Bryden & MacRae, Citation1988; McCulloch, Lachner Bass, Dial, Hiscock, & Jansen, Citation2017; Roup, Wiley, & Wilson, Citation2006).

The use of non-word syllables was pioneered by Studdert-Kennedy, Shankweiler, and Schulman (Citation1970), systematically testing consonant-vowel-consonant (CVC) syllables. The main finding was that the right-ear advantage is strongest and most consistent when the paired stimuli differ in the initial plosive stop consonant (e.g., /bap/-/dap/). Difference in the final consonant (e.g., /dab/-/dap/) produced less consistent effects across participants, while differences in the medial vowel did not yield a right-ear preference (e.g., /dab/-/deb/). This observation inspired the adaption of even more simplified consonant-vowel (CV) syllable paradigms in various forms (e.g., Bryden, Citation1975; Hugdahl & Anderson, Citation1984; Shankweiler & Studdert-Kennedy, Citation1975). However, also vowel-consonant-vowel syllables (e.g., /aba/-/apa/) have been suggested as alternative stimulus material (Wexler & Halwes, Citation1985). Today, the most widely used syllable version employs stimuli that combine the six plosive consonants (i.e., /b/, /d/, /g/, /p/, /t/, /k/) with a vowel (usually /a/) to syllables (sCV; e.g., Hugdahl et al., Citation2009; Moncrieff & Dubyne, Citation2017; Westerhausen et al., Citation2018). The sCV stimulus material has also been successfully validated against the Wada test (Hugdahl, Carlsson, Uvebrant, & Lundervold, Citation1997), in studies on surgical patients (Clarke, Lufkin, & Zaidel, Citation1993; de Bode et al., Citation2007), and appears concordant with asymmetry measures obtained from functional MRI (Van der Haegen, Westerhausen, Hugdahl, & Brysbaert, Citation2013; Westerhausen, Kompus, & Hugdahl, Citation2014a) and other behavioural tasks such as verbal visual-half field experiments (e.g., Van der Haegen & Brysbaert, Citation2018).

As indicated above, all three classes of stimulus material have been validated, for example, yielding good consistency between Wada test classifications and the direction of the LI. Nevertheless, comparative magnitude-based analyses using different classes of stimulus material in the same participants suggest that these stimulus materials cannot be used interchangeably. That is, correlations of LIs obtained with different stimulus material are surprisingly low despite high reliability (Jäncke, Steinmetz, & Volkmann, Citation1992; Teng, Citation1981; Wexler & Halwes, Citation1985). A good example is provided by Wexler and Halwes (Citation1985), who found a correlation of r = .15 for LIs determined from a VCV-syllable and a FDRW-based paradigm, together with a retest reliability of r = .90 and .89 for the VCV and the FDRW paradigm, respectively. However, the two paradigms did not differ only in the stimulus material used: differences in response format and inter-channel stimulus overlap might also have negatively affected the inter-correlation. Nevertheless, a straightforward interpretation of the low inter-correlation (despite satisfactory reliability for each single task) is that the two tests measure different aspects or stages of speech processing. The mapping of speech input onto semantic representations requires analyses on various levels of representation, typically assumed to range from distinctive stimulus features, via phoneme and syllabic structure, to phonological word forms and semantic meaning (Hickok & Poeppel, Citation2007). Thus, it can be speculated that non-word syllables might be distinguished during an earlier processing stage than rhyming words. Different processing stages, in turn, are likely associated with varying degrees of hemispheric differences along the processing stream (Specht, Citation2014), potentially producing weakly correlated LIs.
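To illustrate why the low inter-correlation cannot be attributed to unreliable measurement, the following sketch applies the classical correction for attenuation to the values reported by Wexler and Halwes (Citation1985). The correction is an illustration added here under the assumptions of classical test theory; it is not an analysis reported in the cited study, and the function name is hypothetical.

```python
import math


def disattenuated_correlation(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Classical correction for attenuation: r_xy / sqrt(rel_x * rel_y).

    Estimates the correlation between two measures if both were measured
    without error, given their observed correlation and reliabilities.
    """
    if rel_x <= 0 or rel_y <= 0:
        raise ValueError("reliabilities must be positive")
    return r_xy / math.sqrt(rel_x * rel_y)


# Values from the text: r = .15 between paradigms, retest reliabilities .90 and .89.
r_corrected = disattenuated_correlation(0.15, 0.90, 0.89)
print(round(r_corrected, 2))
```

With reliabilities this high, the corrected value stays close to the observed correlation, which is consistent with the interpretation that the two paradigms capture largely different aspects of speech processing.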

In summary, the three discussed classes of stimulus material (numeric words, non-numeric words, and non-word syllables) can be considered appropriate for the assessment of hemispheric asymmetry. However, each class of stimulus material likely assesses asymmetry during different stages of speech processing. Thus, the decision on which stimulus material to use might also be based on considerations as to which stage of speech processing is of interest in the study.

Stimulus difficulty

Stimuli differ in the ease with which they are perceived. For example, words differ in the frequency in which they occur in written and spoken language, and it is known that high-frequency words are processed faster and remembered better than low-frequency words in printed or spoken form (Brysbaert, Mandera, & Keuleers, Citation2018; Monsell, Doyle, & Haggard, Citation1989).

This fact raises concern that using words of different word frequency in dichotic listening might systematically affect the ear preference. Techentin and Voyer (Citation2011) systematically combined low- and high-frequency (non-rhyming) words in dichotic listening. While a significant REA was found in all four conditions, trials in which a high-frequency word on the right ear was combined with a low-frequency word on the left ear showed a smaller REA than the other combinations. At first glance, this finding appears counterintuitive as the faster processing of high-frequency words can be expected to benefit the processing of the right-ear stimulus and to enhance the magnitude of the REA rather than reducing it. However, Strouse Carter and Wilson (Citation2001) acknowledge that word identification not only depends on the word frequency but also is affected by the neighbourhood density of that word (i.e., the amount of memory representations of phonologically similar words that are activated by the same input word, see neighbourhood activation models, e.g., Luce & Pisoni, Citation1998). Thus, the authors defined lexical difficulty considering word frequency and neighbourhood density together: high-frequency words from low-density neighbourhoods (e.g., dish or pump) are easy to identify, whereas low-frequency words from high-density neighbourhoods (e.g., weave or mock) are particularly hard to identify. Strouse Carter and Wilson (Citation2001) demonstrated that the “easy” to identify words dominate the response pattern when paired with “hard” words in dichotic listening. That is, presenting the “easy” word to the left ear and the “hard” word to the right ear produced a small (non-significant) left-ear advantage. The reversed set-up (“easy” word to the right ear, “hard” to the left) resulted in a REA, which was additionally found to be significantly increased compared to the REA yielded when using pairings of equal difficulty (both words “easy” or both “hard”).

However, not only words, but also phonemes and syllables differ in their frequencies, and one might speculate that also the identification of (non-word) syllables in dichotic listening is affected by the frequency in which the initial phoneme or the syllable occurs in the language. In French, for example, words starting with an unvoiced plosive consonant are more frequent than those starting with voiced plosives (Bedoin, Ferragne, & Marsico, Citation2010). That syllable frequency has an effect on performance has been demonstrated in studies showing that words are recognized or identified more slowly when the initial syllable is of high frequency than when it is of low frequency, independent of the frequency of the full words (Conrad & Jacobs, Citation2004; Perea & Carreiras, Citation1998). Thus, in line with the neighbourhood activation model (Luce & Pisoni, Citation1998), high frequency initial syllables appear to relate to a dense neighbourhood of potential words and take longer to categorize than low frequency syllables. Nevertheless, there is no study in the literature systematically examining phoneme/syllable frequency effects on performance in dichotic-listening paradigms.

While further research into the effect of lexical/item difficulty in dichotic listening appears warranted, the initial findings suggest that it deserves to be considered when planning the experiment. Ideally, the two words/syllables forming a dichotic stimulus pair would be matched for lexical difficulty. However, such matching appears especially challenging when rhyming words are to be used, as here the degrees of freedom for stimulus selection are substantially reduced (Techentin & Voyer, Citation2011). Thus, the best advice at the current state of knowledge appears to be to balance stimulus presentation by presenting all word pairs in both orientations (i.e., left ear-right ear and, reversed, right ear-left ear) so that possible effects of item difficulty would be averaged across these trials, and not substantially affect the mean estimate of laterality.
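The balancing advice can be sketched as a small trial-list generator in Python. This is a minimal illustration: the function name, the trial representation, and the word pairs are hypothetical (the words are the "easy" and "hard" examples mentioned above, paired arbitrarily), and a real experiment would add further constraints on trial order.

```python
import random


def counterbalanced_trials(pairs, seed=0):
    """Return a shuffled trial list in which every pair occurs in both orientations."""
    trials = []
    for first, second in pairs:
        # Orientation 1: first item to the left ear, second to the right ear.
        trials.append({"left": first, "right": second})
        # Orientation 2: the same pair with the ears swapped.
        trials.append({"left": second, "right": first})
    # A fixed seed keeps the (assumed) randomized order reproducible.
    random.Random(seed).shuffle(trials)
    return trials


# Hypothetical pairs combining an "easy" with a "hard" word.
word_pairs = [("dish", "weave"), ("pump", "mock")]
trials = counterbalanced_trials(word_pairs)
for trial in trials:
    print(trial["left"], "|", trial["right"])
```

Because each item is presented equally often to each ear, an item that dominates the response pattern adds equally to left-ear and right-ear reports, so its effect on the mean laterality estimate should cancel across the two orientations.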

Other stimulus characteristics

When recording the spoken stimuli to be used in the paradigm, the aim should be to create stimuli which are as similar as possible to each other, keeping constant all non-relevant stimulus features, like pitch of the voice, sound length (e.g., of the constituting phonemes), loudness, or timbre. Thus, stimuli are usually spoken in neutral tone and constant intonation. Computer-synthesized speech sounds have been used in the past (Cutting, Citation1976), arguably allowing better control and equalization of the stimulus properties. Nevertheless, most paradigms used today rely on recorded natural speech tokens, spoken by a voice actor and edited to meet the above requirements (Bedoin et al., Citation2010; Hugdahl et al., Citation2009; Musiek, Citation1983; Wexler & Halwes, Citation1983). One might argue that any such editing somewhat blurs the distinction between natural and synthetic speech, but it seems unavoidable if non-relevant stimulus features are to be kept matched. Stimuli recorded from female (Dos Santos Sequeira et al., Citation2006; Penner, Schläfli, Opwis, & Hugdahl, Citation2009; Techentin & Voyer, Citation2011) and male voice actors (Hugdahl et al., Citation2009; Westerhausen et al., Citation2018; Wexler & Halwes, Citation1983) have been used. However, possible effects of male-female acoustic differences on laterality, or interactions with the sex of the participant, have not been systematically studied.

Inter-channel onset and offset alignment

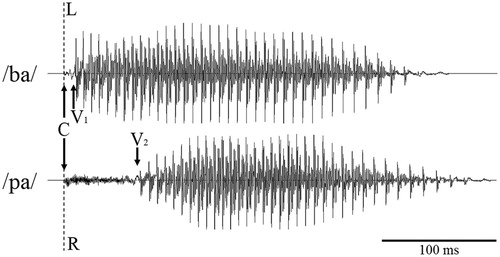

The key definition of dichotic listening is the synchronous presentation of the two paired stimuli in the left and right audio channel. This is usually achieved by temporally aligning the stimuli to achieve simultaneous onset. As exemplified for sCV stimuli in Figure 1, the alignment is based on the first burst in the waveform of the stimuli, marking the initial plosion of the stop consonant (Speaks, Niccum, & Carney, Citation1982). Such careful alignment is important, as a stimulus onset-asynchrony between auditory channels of about 6–8 ms can result in a “break-up” of binaural tone pulses into two separately perceived stimuli (Babkoff, Citation1975). In dichotic listening, too, perceptual laterality appears to be susceptible to onset asynchronies (Berlin, Lowe-Bell, Cullen Jr, Thompson, & Loovis, Citation1973; Studdert-Kennedy et al., Citation1970; Wood, Hiscock, & Widrig, Citation2000). In English-speaking samples, onset asynchronies favour the trailing stimulus irrespective of the ear it is presented to. Thus, it has been shown that a left-ear stimulus lagging the right-ear stimulus by about 20–30 ms even results in a left-ear advantage (Berlin et al., Citation1973; Studdert-Kennedy et al., Citation1970).

Figure 1. Waveforms for the syllables /ba/ and /pa/ as used in dichotic listening aligned between channels (L, left; R, right) to the onset of the “burst” of the consonant (C). V1 and V2 mark the approximate onset of the vowel in both syllables. The short interval between C and V1 for /ba/ (i.e., the short voice-onset time) is characteristic for voiced syllables. The relatively longer voice-onset time, C to V2, for /pa/ is characteristic for an unvoiced syllable. Onset alignment with comparable vowel length leads (a) to offset differences between syllables and (b) to a reduced spectro-temporal overlap between mixed-voicing pairs, like /ba/ and /pa/.

At the same time, stimuli also might differ in their length so that the end of the sounds is temporally shifted. Considering sCV syllables in Figure 1, for example, the waveform of the unvoiced /pa/ syllable is, due to the difference in voice-onset time, longer than the waveform of the syllable /ba/ (Simos, Molfese, & Brenden, Citation1997). Such stimulus length differences have been widely accepted as a natural feature of the stimulus material and tolerated as part of the paradigm (D'Anselmo, Marzoli, & Brancucci, Citation2016; Hugdahl et al., Citation2009). Others have attempted to equate the length of the stimuli (Bryden & Murray, Citation1985; Moncrieff, Citation2015; Techentin & Voyer, Citation2011), where the main challenge is to ensure that the length adjustment does not substantially change the acoustical characteristics of the stimuli. Direct comparisons between length-adjusted and unadjusted stimuli are missing and an evaluation of the effects of length differences is currently not possible.
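The onset alignment described in this section can be sketched as follows. The sketch assumes that the consonant burst can be located with a simple amplitude threshold, which is a simplification introduced here for illustration; in practice the burst is typically identified by inspecting the waveform. The function names, the threshold, and the toy sample values are hypothetical.

```python
def burst_onset(samples, threshold):
    """Index of the first sample whose absolute amplitude reaches the threshold."""
    for index, value in enumerate(samples):
        if abs(value) >= threshold:
            return index
    raise ValueError("no sample reaches the threshold")


def align_onsets(left, right, threshold=0.1):
    """Pad the channels with silence so that both bursts start at the same sample."""
    onset_left = burst_onset(left, threshold)
    onset_right = burst_onset(right, threshold)
    target = max(onset_left, onset_right)
    # Leading silence shifts the channel with the earlier burst to the common onset.
    left_aligned = [0.0] * (target - onset_left) + list(left)
    right_aligned = [0.0] * (target - onset_right) + list(right)
    # Trailing silence equalizes the channel length; offset differences between
    # the stimuli themselves remain, as no time-stretching is applied.
    n_samples = max(len(left_aligned), len(right_aligned))
    left_aligned += [0.0] * (n_samples - len(left_aligned))
    right_aligned += [0.0] * (n_samples - len(right_aligned))
    return left_aligned, right_aligned, target


# Toy waveforms (hypothetical values): a shorter and a longer stimulus.
short_stimulus = [0.0, 0.0, 0.5, 0.4, 0.3]
long_stimulus = [0.0, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]
left_channel, right_channel, onset = align_onsets(short_stimulus, long_stimulus)
print(onset, len(left_channel), len(right_channel))
```

The sketch only synchronizes the onsets. Equating stimulus length would require an additional editing step, which, as noted above, risks changing the acoustical characteristics of the stimuli.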

Inter-channel spectro-temporal overlap: perceptual fusion

Non-rhyming words, like star and pot, even when perfectly aligned in onset and offset, will differ substantially in their spectral (e.g., onset of the first formant) and temporal (e.g., onset or length of the vowel) properties. To maximize the overlap between the two sounds, it has thus been suggested to pair stimuli, which only differ in one phoneme (Wexler, Citation1988; Wexler & Halwes, Citation1983), such as rhyming words (Fernandes & Smith, Citation2000; Hiscock, Cole, Benthall, Carlson, & Ricketts, Citation2000; Wexler & Halwes, Citation1983) or CV/CVC syllables with the same vowel (Hugdahl et al., Citation2009; Shankweiler & Studdert-Kennedy, Citation1975). The aim is to achieve spectro-temporal overlap that is so complete that the stimuli are likely to perceptually fuse (Cutting, Citation1976; Repp, Citation1976). That is, although two stimuli are presented, the two stimuli will be subjectively perceived as one stimulus (Westerhausen, Passow, & Kompus, Citation2013). The sounds /ba/ and /ga/ presented dichotically might be perceived as a single /ga/. The dichotic word pair tower and power would, for example, be perceived as power.

Perceptual fusion has the benefit that the information to be processed by the participant on each trial is reduced to a single item, which renders the task easy from a cognitive perspective (Wexler, Citation1988). This claim is supported by empirical findings and theoretical considerations. Asbjørnsen and Bryden (Citation1996) demonstrated that the performance in a dichotic-listening paradigm using fused-rhyming word pairs is only minimally affected by instructing the participants to selectively attend to and report from only one ear at a time. The effect of attention instruction was much stronger in an sCV paradigm consisting of fusing and non-fusing stimulus pairs. Using the same sCV paradigm, Westerhausen et al. (Citation2013) compared the responses to fusing and non-fusing stimulus pairs and found both shorter response times and reduced inferior-frontal brain activation for fusing stimuli, together supporting the interpretation that the processing of fusing stimuli is cognitively less demanding. Also, theoretically analysing the task demands suggests that a “fused” percept (as compared to a “non-fused” situation) reduces the working-memory load from two to one item, requires only one stimulus to be identified, simplifies the response selection to a repetition of the one perceived stimulus, eliminates response order confounds, and minimizes potential effects that “top-down” preferences for one or the other channel may have. Finally, the retest reliability of a paradigm using fused-rhyming words was found to be r = .85, substantially higher than the r = .45 found when non-rhyming/non-fusing word pairs were used (Hiscock et al., Citation2000).

However, the tendency for perceptual fusion strongly depends on the phonological properties of the contrasted stimuli even when they appear very similar (Repp, Citation1976). For example, when using plosives as initial phonemes, the voicing of the stimuli systematically affects the spectro-temporal overlap of the two auditory channels (Brancucci et al., Citation2008). Voiced syllables, such as /ba/ or /da/, are characterized by strong rhythmic vibrations of the vocal cord during articulation, while unvoiced syllables, such as /pa/ or /ta/, do not show such vibrations. Importantly, the voicing property of these stimuli is associated with differences in the voice-onset time, which is the interval between the release of the initial consonant phoneme (referred to as C in Figure 1) and the onset of the vocal-cord vibrations (V1 and V2, respectively) of the vowel (Simos et al., Citation1997). Voiced syllables have a relatively short voice-onset time (in English 10–27 ms; see Speaks, Niccum, Carney, & Johnson, Citation1981; Voyer & Techentin, Citation2009) while unvoiced syllables (e.g., /pa/ or /ka/) have a longer voice-onset time (50–83 ms). Combining stimuli of different voicing categories to dichotic stimulus pairs consequently reduces the temporal overlap of the two channels. Synchronized to start at the consonant “occlusion,” the voice onset is naturally delayed in the unvoiced relative to the voiced syllable, making them less likely to fuse into one percept than pairs formed by combining stimuli within the same voicing category (Westerhausen et al., Citation2013). Furthermore, mixed-voicing category pairs are not only less likely to perceptually fuse, they also show systematic differences in laterality measures.
That is, the unvoiced initial phoneme stimulus dominates perception, irrespective of whether the unvoiced stimulus is presented to the right or the left ear (e.g., Arciuli, Rankine, & Monaghan, Citation2010; Berlin et al., Citation1973; Moncrieff & Dubyne, Citation2017; Speaks et al., Citation1981; Voyer & Techentin, Citation2009). Presenting an unvoiced sCV syllable to the right and a voiced to the left ear, yields a (pronounced) right-ear advantage, whereas presenting an unvoiced syllable to the left and a voiced to the right ear, typically results in a significant left-ear advantage (Rimol, Eichele, & Hugdahl, Citation2006). It has to be noted that the effect of voicing is somewhat reduced in FDRW paradigms (McCulloch et al., Citation2017) and studies in non-Germanic languages suggest differences in the magnitude (Westerhausen et al., Citation2018) or the direction (Bedoin et al., Citation2010) of the voicing effect. Nevertheless, utilizing mixed-voicing pairings will introduce trial-to-trial variability to the paradigm.

Taken together, using stimulus pairs resulting in a high inter-channel stimulus overlap is beneficial as long as perceptual fusion is achieved. Perceptual fusion reduces the cognitive demands of the task and minimizes the potential effect of top-down attentional shifts. Thus, it may be considered beneficial to pilot test stimulus material for fusion before the actual experiment (for a possible approach to a pilot test, see Westerhausen et al., Citation2013). However, one might also argue that within a balanced experimental design—with an equal amount of mixed-voicing pairs favouring the left and the right ear, respectively—the voicing effect would “average out” across trials, and not affect the mean estimation of laterality. Even if this assumption holds, it reduces internal consistency of the paradigm, as trial-to-trial variability is introduced. Also, it implicitly increases the number of required trials, as one unbiased laterality estimate requires averaging the response of two mixed-voicing pair trials, rendering the paradigm less time-efficient.
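The balance between same-voicing and mixed-voicing pairs in an sCV stimulus set can be made explicit with a short enumeration. The sketch assumes the six plosive-vowel syllables with the vowel /a/ described earlier and counts every left/right orientation as a separate pair; the variable and function names are illustrative.

```python
from itertools import permutations

# Voicing categories of the six stop-consonant syllables (vowel /a/ assumed).
VOICED = {"ba", "da", "ga"}
UNVOICED = {"pa", "ta", "ka"}


def classify_pairs():
    """Split all ordered (left, right) pairs of different syllables by voicing."""
    syllables = sorted(VOICED | UNVOICED)
    same_voicing, mixed_voicing = [], []
    for left, right in permutations(syllables, 2):
        if (left in VOICED) == (right in VOICED):
            same_voicing.append((left, right))
        else:
            mixed_voicing.append((left, right))
    return same_voicing, mixed_voicing


same_voicing, mixed_voicing = classify_pairs()
# Mixed pairs split evenly by the ear that receives the unvoiced syllable.
unvoiced_right = [pair for pair in mixed_voicing if pair[1] in UNVOICED]
unvoiced_left = [pair for pair in mixed_voicing if pair[0] in UNVOICED]
print(len(same_voicing), len(mixed_voicing), len(unvoiced_right), len(unvoiced_left))
```

Under these assumptions, the full set of ordered pairs is balanced for the voicing effect by construction, yet more than half of the pairs are mixed-voicing pairs and thus contribute the trial-to-trial variability discussed above. Restricting the paradigm to same-voicing pairs would avoid this at the cost of a smaller stimulus set.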

Stimulus-presentation features

Number of trials

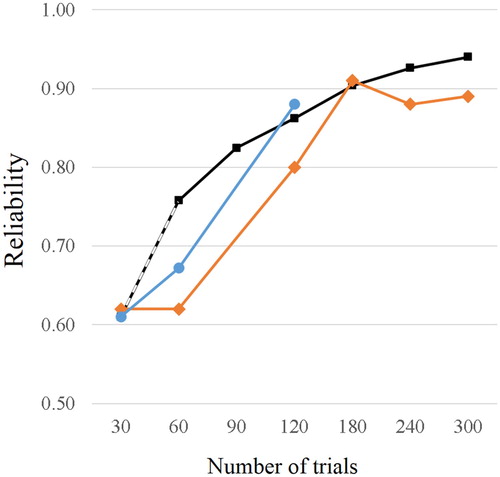

In general, in accordance with the Spearman-Brown formula for test length adjustment (Pedhazur & Schmelkin, Citation2013), an increase in the number of trials will also increase the reliability of the test, although with diminishing returns. In line with this prediction, Speaks et al. (Citation1982) report an increase in reliability with increasing number of trials in a free-report dichotic-listening paradigm using sCV syllables. As can be seen in Figure 2, the product-moment correlation between two test halves increases from r = .62 for both 30 and 60 trials, via r = .80 for 120 trials, to r = .91 for 180 trials, but does not increase beyond this value for 240 or 300 trials. Comparing published reliability data across different studies and samples (but using comparable syllable-based paradigms), similar estimates can be found (Figure 2). Reliability estimates of r = .61 for a 30 trial paradigm (Hugdahl & Hammar, Citation1997), between r = .61 and .70 for 60 trials (Bryden, Citation1975; Shankweiler & Studdert-Kennedy, Citation1975; Van der Haegen & Brysbaert, Citation2018), and of r = .84 and .91 for 120 trials (Springer & Searleman, Citation1978; Wexler, Halwes, & Heninger, Citation1981) have been reported. Taken together, the results suggest that satisfactory reliabilities of r > .80 can be expected when about 120 trials are used. This is also supported by Hiscock et al. (Citation2000), who demonstrated that also for the FDRW test, no substantial improvement of reliability estimates can be observed above 120 trials.
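The Spearman-Brown prediction can be sketched in a few lines of Python. The example starts from the reliability of r = .61 reported for a 30 trial paradigm (Hugdahl & Hammar, Citation1997); the function name and the selection of target test lengths are illustrative assumptions.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability of a test lengthened by `length_factor`.

    Spearman-Brown formula: k * r / (1 + (k - 1) * r), where k is the ratio
    of the new to the original test length.
    """
    return length_factor * reliability / (1 + (length_factor - 1) * reliability)


base_trials, base_reliability = 30, 0.61
predicted = {
    n_trials: spearman_brown(base_reliability, n_trials / base_trials)
    for n_trials in (60, 90, 120, 180)
}
for n_trials, value in predicted.items():
    print(n_trials, round(value, 2))
```

The predicted values rise steeply at first and then flatten, which illustrates the diminishing returns of adding trials. The formula assumes that added trials are equivalent to the existing ones, so effects such as participant fatigue are not captured.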

Figure 2. Reliability as a function of trial number in free-report dichotic-listening paradigm using (non-word) CV syllables. Orange line: empirical reliability estimates as reported by Speaks et al. (Citation1982). Blue line: average empirical reliability across different studies (for 30 trial paradigm from Hugdahl & Hammar, Citation1997, for 60 trials from Bryden, Citation1975; Shankweiler & Studdert-Kennedy, Citation1975; Van der Haegen & Brysbaert, Citation2018, and for 120 trials from Springer & Searleman, Citation1978; Wexler et al., Citation1981). Black line: estimated reliability calculated using the Spearman-Brown formula based on the empirical reliability of .61 reported for 30 trials by Hugdahl and Hammar (Citation1997). All three plots indicate reliability >.80 at 120 trials.

However, the number of trials alone is obviously not sufficient to achieve acceptable reliability, as other variables will affect a paradigm’s reliability. Accordingly, reported reliability estimates of dichotic listening are in general heterogeneous (Voyer, Citation1998), and estimates between r = .63 (Wexler & King, Citation1990, using a FDRW paradigm) and r = .91 (Wexler et al., Citation1981; using a VCV paradigm) can be observed even in paradigms that consist of 120 trials. Furthermore, it also has to be acknowledged that reliability calculated as product-moment correlations (1) does not account for the fact that the correlated measures are repeated measures of the same variable with the same test (i.e., the measures are from the same “class” of data), and (2) only considers the covariance of the two measures, as mean differences between the two measures are implicitly removed during the calculation of the correlation coefficient (McGraw & Wong, Citation1996). The first critique point has been addressed in only one previous study (Bless et al., Citation2013) which used intra-class correlations (ricc), and yielded estimates between ricc = .70 and .78 in a 30 trial sCV paradigm (ricc calculated as two-way mixed effect model for single measurement and measurement consistency; i.e., ICC(C,1) according to McGraw & Wong, Citation1996). Reanalysing the data of Bless et al. (Citation2013) by also accounting for mean differences (i.e., using the ICC(A,1) model, see McGraw & Wong, Citation1996), the reliability estimates drop slightly to ricc = .68 and .77, respectively. Applying the Spearman-Brown formula (Pedhazur & Schmelkin, Citation2013) to predict ricc for longer tests, reliabilities increase to .86 and .89 for 90 and 120 trials, respectively (based on the ricc= .68 estimate from Bless et al., Citation2013).
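The difference between the consistency and the absolute-agreement form of the intra-class correlation can be sketched with the mean-square formulas given by McGraw and Wong (Citation1996) for single measurements. The data below are hypothetical LIs for four participants tested twice and are not taken from Bless et al. (Citation2013); the function name is illustrative.

```python
def icc_single(scores):
    """Return (ICC(C,1), ICC(A,1)) for a participants-by-sessions table.

    `scores` holds one row per participant and one column per session.
    """
    n = len(scores)          # number of participants (rows)
    k = len(scores[0])       # number of sessions (columns)
    grand_mean = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Consistency: session mean differences are ignored.
    icc_c = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    # Absolute agreement: session mean differences lower the estimate.
    icc_a = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )
    return icc_c, icc_a


# Hypothetical LIs: every participant scores .10 higher in the second session.
hypothetical_lis = [[0.10, 0.20], [0.30, 0.40], [-0.10, 0.00], [0.50, 0.60]]
icc_consistency, icc_agreement = icc_single(hypothetical_lis)
print(round(icc_consistency, 2), round(icc_agreement, 2))
```

In this constructed example the rank order and spacing of participants are identical across sessions, so the consistency estimate is at its maximum, while the systematic shift between sessions lowers the absolute-agreement estimate. This mirrors the direction of the drop described above, although the size of the drop depends on the data.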

The number of 90 to 120 trials (in a free report, single stimulus per trial set-up) appears to be a good benchmark for experimental planning but cannot be the sole measure to guarantee reliable data since many other variables, as discussed in the present review, will affect reliability. Also, the experiment has to be feasible, considering that an excessive number of trials will tire the participants or may breach given time constraints. However, a 120-trial experiment will likely not take more than ten minutes. Nevertheless, the quest for the optimal number of trials will always represent a compromise between feasibility and reliability considerations. Finally, for dichotic-listening studies, as for all studies, authors should be encouraged to consider providing reliability estimates with their findings. For this, ideally, the paradigm would have to be run twice on the same participants. However, also calculating split-half reliability (corrected for test length) might serve as a good approximation.
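A split-half reliability estimate corrected for test length can be sketched as follows. The sketch assumes an odd-even split of the trial sequence, codes each trial as "R" (right-ear stimulus reported) or "L" (left-ear stimulus reported), and uses hypothetical data for four participants; other splitting schemes are equally possible, and all names are illustrative.

```python
import math


def laterality_index(responses):
    """LI = (Rc - Lc) / (Rc + Lc) from a sequence of 'R'/'L' trial codes."""
    right, left = responses.count("R"), responses.count("L")
    return (right - left) / (right + left)


def pearson(x, y):
    """Product-moment correlation of two equally long lists."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def split_half_reliability(trials_by_participant):
    """Return (half-test correlation, Spearman-Brown corrected reliability)."""
    first_half = [laterality_index(t[0::2]) for t in trials_by_participant]
    second_half = [laterality_index(t[1::2]) for t in trials_by_participant]
    r_half = pearson(first_half, second_half)
    # Correction to full test length (length factor 2).
    return r_half, 2 * r_half / (1 + r_half)


# Hypothetical response sequences of four participants (eight trials each).
data = [list("RRRRRRLR"), list("RRRRLLLL"), list("RRLRLLLL"), list("RRRRRRRL")]
r_half, r_full = split_half_reliability(data)
print(round(r_half, 2), round(r_full, 2))
```

The corrected value estimates the reliability of the full-length test from a single session, which makes it a practical approximation when the paradigm cannot be run twice on the same participants.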

The number of stimulus pairs per trial

Dichotic-listening paradigms have used experimental set-ups based on single (Hugdahl et al., Citation2009; Wexler & Halwes, Citation1983) or multiple stimulus-pair presentations per trial (Dos Santos Sequeira et al., Citation2006; Kimura, Citation1961a, Citation1961b; Musiek, Citation1983). For example, Kimura’s initial study consisted of blocks of three digit pairs, which were presented in close succession, while the participant had to wait until all three pairs had been presented before reporting the stimuli. However, early replication studies revealed that the number of presentations per trial significantly modulates the magnitude of the right-ear advantage (Bryden, Citation1962): as the number of presentations per trial increases, the right-ear advantage is reduced (see also Penner et al., Citation2009). This reduction can be attributed to differences in task demands, short-term memory load, and stimulus-retention interval between single- and multi-presentation trials. In a single-pair set-up, participants are confronted with one pair of stimuli at a time and are instructed to respond without any time delay. In a multiple-pair set-up, when participants are confronted with two, three, or more stimulus pairs per trial, the load is increased to four, six, or more stimuli per trial. In addition, the multiple stimulus pairs are presented sequentially within a trial so that early stimuli of a trial have to be retained in memory for several seconds and a sequence of verbal or manual responses has to be coordinated (for similar reasoning see Voyer et al., Citation2014).

As the total number of stimuli increases, the participants approach the limits of short-term memory capacity (Cowan, Citation2010), so that not all perceived stimuli will be correctly recalled. In fact, studies using multi-pair set-ups are often designed to go beyond the capacity limit in order to provoke a reasonable number of errors, as a perfect recall of all stimuli would prevent finding a difference between left- and right-ear recall (Bryden, Citation1988). This act of “forgetting” is reflected in primacy and recency effects for stimulus recall in dichotic listening (Aghamollaei et al., Citation2013; Penner et al., Citation2009). For example, systematically comparing trials of three, four, and five consonant-vowel syllable pairs, Penner et al. (Citation2009) showed that stimuli at both early and late serial positions were more likely to be reported correctly than those at intermediate positions. The stimulus pairs at later serial positions showed the strongest REA, contributing significantly more to the overall REA than earlier positions. Furthermore, when instructed to report multiple stimuli after each trial, participants are likely to develop a report strategy (Bryden, Citation1962; Freides, Citation1977), which will systematically influence which stimuli will be forgotten. Stimuli to be reported early are more likely to be recalled correctly than stimuli at later positions of the intended report order. Illustrating this effect, Freides (Citation1977) was able to show that participants, who usually prefer to start by reporting the right-ear stimuli, show a reduced REA when voluntarily starting with the left-ear stimuli (in a free-recall situation). When explicitly instructed to start the report with the left-ear stimuli, these participants even yielded a left-ear advantage.

Finally, the length of the retention interval also affects performance in at least two ways. Firstly, it has been shown that a longer retention interval, even in single-pair trials, accentuates the perceptual preference (D'Anselmo et al., Citation2016). Secondly, ongoing presentation of additional stimuli appears to interfere with ongoing retention of earlier stimuli. That is, the perceptual preference is weakened when the participants are asked to perform a verbal task (e.g., arithmetic calculation) during retention (Belmore, Citation1981; Yeni-Komshian & Gordon, Citation1974). Thus, it appears that the temporal delay allows the initial right-ear biased stimulus representation to be further enhanced by controlled rehearsal processes, as long as these are not interfered with by other phonological tasks (Voyer et al., Citation2014). The idea of an ongoing rehearsal process is also supported by the report that many participants tend to “whisper or mumble the numbers to themselves” (Bryden, Citation1962, p. 297), at least when the presentation rates allow for it. In this, the findings resemble studies on interference effects during retention of verbal material within the phonological loop (Baddeley, Citation2012; Baddeley, Thomson, & Buchanan, Citation1975), only that here the “interference” also reduces the magnitude of the ear advantage in addition to the performance level.

Taken together, multi-pair set-ups pose additional demands on working-memory resources, systematically affecting the obtained laterality estimates. These additional demands make a multi-pair trial more susceptible to confounding effects, as individuals or groups differ in their working-memory functioning. Thus, although multi-pair trials typically yield a robust right-ear advantage, it is “unreasonable to assume that hemispheric specialization [is] the sole factor affecting the magnitude of the right ear advantage” (Bryden, Citation1988, p. 4). Single-pair trials appear better suited for laterality estimates as these draw less on additional cognitive functions.

Sound level of stimuli and stimulus presentation

The stimulus-presentation sound level should be set to a comfortable hearing intensity. Thus, intensity levels between 70 (e.g., Voyer et al., Citation2014) and 80 dB(A) (e.g., Hiscock et al., Citation2000) are typically used. Measured as mean intensity across the entire stimulus, the intensity may vary slightly between different items (Moncrieff & Dubyne, Citation2017). Nevertheless, it is of crucial importance that presentation sound levels are comparable between the left and right channel, as inter-aural intensity differences significantly affect the magnitude of ear preference (e.g., Berlin, Lowe-Bell, Cullen Jr, Thompson, & Stafford, Citation1972; Bloch & Hellige, Citation1989; Hugdahl, Westerhausen, Alho, Medvedev, & Hämäläinen, Citation2008; Tallus, Hugdahl, Alho, Medvedev, & Hämäläinen, Citation2007). In fact, an intensity difference in favour of the left-ear channel of about 6 to 12 dB has been shown to abolish or reverse the right-ear advantage found at 0 dB difference (Berlin et al., Citation1972; Hugdahl et al., Citation2008; Robb, Lynn, & O’Beirne, Citation2013; Westerhausen et al., Citation2009).
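
As a first check on the digital stimulus files, the level difference between the two channels can be computed from the sample values. The sketch below is an illustration only: the function names are hypothetical, the channels are assumed to be available as lists of non-silent sample amplitudes, and the result is a relative level in dB, not a calibrated dB(A) value. Verifying the sound pressure level actually delivered by each earphone still requires a sound level meter.

```python
from math import log10, sqrt


def rms_level_db(samples):
    """Mean (RMS) level of one channel in dB relative to an amplitude of 1.0."""
    rms = sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * log10(rms)


def interaural_difference_db(left, right):
    """Level difference in dB; positive values favour the right channel."""
    return rms_level_db(right) - rms_level_db(left)


# Hypothetical example: the right channel has twice the amplitude of the left
left_channel = [0.5, -0.5] * 100
right_channel = [1.0, -1.0] * 100
difference = interaural_difference_db(left_channel, right_channel)
print(round(difference, 2))  # about 6.02 dB in favour of the right channel
```

A doubling of amplitude corresponds to roughly 6 dB, that is, to the lower end of the range of inter-aural differences reported above to abolish or reverse the right-ear advantage.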

Furthermore, the stimulus-presentation sound level needs to be considered in the context of the ambient noise level in the test room, as it has been demonstrated that the presence of background noise significantly alters the cognitive demands of a task (Peelle, Citation2018) and reduces the magnitude of the right-ear preference in dichotic listening (Dos Santos Sequeira, Specht, Hämäläinen, & Hugdahl, Citation2008; Dos Santos Sequeira, Specht, Moosmann, Westerhausen, & Hugdahl, Citation2010; McCulloch et al., Citation2017). Noise levels will vary between test rooms, but test computers and ventilation will represent major noise sources. Typically, ambient noise levels are on the order of 40 dB(A). Previous studies suggest that stimuli presented at 30 to 40 dB(A) above the ambient noise level allow a dichotic-listening test to be conducted successfully (Hiscock et al., Citation2000). Sound-attenuating or noise-cancelling headphones might be used to provide additional shielding. It also appears reasonable to avoid lateralized sound sources in the test room in order not to bias the signal-to-noise ratio in an asymmetric fashion.

Response collection and instruction

Response format

Verbal and manual response methods have a tradition in dichotic-listening research. For verbal responses, the participant is typically instructed to repeat orally after each trial the stimulus or stimuli she/he perceives, and the response is either recorded for later analysis or ad-hoc scored by an experimenter (e.g., Bryden, Citation1964; Hugdahl et al., Citation2009; Penner et al., Citation2009). Manual responses have been collected by asking the participants to check off stimuli on a prepared sheet of paper (Hiscock et al., Citation2000; Wexler & Halwes, Citation1983) or, in computerized versions of the paradigm, via keyboards (Westerhausen et al., Citation2018), mouse click (D'Anselmo et al., Citation2016; McCulloch et al., Citation2017), touch screen (Bless et al., Citation2013, Citation2015), or external response-button boxes (Bayazıt, Öniz, Hahn, Güntürkün, & Özgören, Citation2009; Hahn et al., Citation2011).

While there is, to date, no experimental evidence for which method to prefer, each method of responding is associated with apparent advantages and disadvantages. Regarding verbal responses, one concern is that hemispheric dominance for speech production during the later response phase might modulate or override the perceptual laterality resulting from the earlier speech perception phase. Although such alterations are conceivable, speech perception and production are usually found to be co-lateralized when tested in the same individuals (Cai, Lavidor, Brysbaert, Paulignan, & Nazir, Citation2008; Ocklenburg, Hugdahl, & Westerhausen, Citation2013), so that severe alterations at least concerning the direction of the preference appear unlikely.

A more technical concern is the registration of the participant’s verbal response for data analysis. This will usually be done by the experimenter, either ad-hoc during the experiment or post-hoc based on the audio recordings of the test session. Compared to a situation in which the participants manually log responses themselves, this can be a source of error variance. On the other hand, the verbal response method is arguably more flexible as the number of possible responses is not limited by the number of response buttons/alternatives available. Any manual response device has to assign a response button to each possible answer from which the participant is asked to select the response. Thus, verbal responses appear beneficial for paradigms that use a large number of different stimuli (e.g., Moncrieff, Citation2015; Strouse Carter & Wilson, Citation2001). Also, verbal responding allows the experimenter to record responses that cannot readily be anticipated, like the fusing of two stimuli into a third, not presented percept (Repp, Citation1976).

The manual response method allows replacing the asymmetrically coordinated verbal response system with a bilateral response-selection system, as the control of motor responses is located in the hemisphere contralateral to the responding hand. However, this advantage can best be utilized if both left- and right-hand responses are included in the experimental design in a counterbalanced way. This consideration especially applies when response-time measures are the planned outcome variable. To illustrate this with an example, using only right-hand responses would systematically bias the response times in samples consisting of both left and right speech-dominant individuals. That is, for left-dominant individuals, the right-hand responses would be controlled from the same hemisphere in which the speech processing takes place. For right-dominant individuals, speech processing and motor control would be in different hemispheres, requiring an additional step of inter-hemispheric coordination. This additional step has been shown to slow the response time significantly (Jäncke, Citation2002; Jäncke & Steinmetz, Citation1994). Thus, it introduces a systematic bias to the disadvantage of the right-dominant group. Nevertheless, considering accuracy measures, it can be questioned whether the additional coordination step would lead to substantial modulation of direction or magnitude of the ear preference since it appears unlikely that the short delay would significantly change the already completed stimulus identification.

It has also been argued that using manual instead of verbal responses modifies the cognitive demands of the paradigm, as the manual response requires additional response-selection processes including visual-motor coordination (Van den Noort, Specht, Rimol, Ersland, & Hugdahl, Citation2008). For example, considering a paradigm using the full set of sCV syllables, a participant will be confronted with six response alternatives, among which (at least) one has to be selected. Furthermore, the additional demand can be considered to vary as a function of the number of response options provided, as it is known from classical choice-reaction experiments that the response time increases monotonically with the number of choices (e.g., Smith, Citation1968).

One special method of manual response collection, which minimizes the response to a single button press, has been developed in the form of dichotic target-detection paradigms, usually referred to as dichotic monitoring (Geffen, Citation1976; Geffen & Caudrey, Citation1981; Grimshaw et al., Citation2003; Jäncke, Citation2002). In the least demanding version, participants are instructed to press a response button only when a predefined target stimulus appears. For example, using sCV stimuli Jäncke et al. (Citation1992) selected the syllable /ta/ as target and obtained a REA in both hit rate and response time. Monitoring tasks have demonstrated good retest reliability for classification (using words as stimuli; Geffen & Caudrey, Citation1981) and magnitude of the ear preference in hit rate and response time (using sCV stimuli; Jäncke et al., Citation1992). However, one disadvantage of monitoring paradigms is that they are less efficient than alternatives as more trials are required. That is, a target stimulus can only be presented in a small number of trials (e.g., 12% and 16.6% in Geffen & Caudrey, Citation1981 and Jäncke et al., Citation1992, respectively) to allow for meaningful response collection. Also, the task demands in a monitoring task differ from the demands in a free-recall paradigm, as monitoring additionally requires the participant to compare a categorized stimulus with a predefined, to-be-maintained target “template” stimulus. Small to medium (r = .11 to .50) correlations between laterality coefficients for target monitoring and free-recall sCV paradigms (Jäncke et al., Citation1992) can be seen as support for the notion that monitoring tasks measure different aspects of speech perception than free-recall tasks.
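
The efficiency argument can be made concrete with a short calculation. The sketch below assumes, purely for illustration, that an experimenter wants 30 trials containing the target; this number is hypothetical and not taken from the cited studies, whereas the two target percentages are those quoted above. Exact fractions are used to avoid floating-point rounding when taking the ceiling.

```python
from fractions import Fraction
from math import ceil


def trials_needed(target_trials, target_percent):
    """Total trials required to obtain `target_trials` trials with a target."""
    share = Fraction(str(target_percent)) / 100  # e.g. 12 -> 3/25
    return ceil(Fraction(target_trials) / share)


# Hypothetical goal of 30 target trials
print(trials_needed(30, 12))    # 250 trials at a 12% target rate
print(trials_needed(30, 16.6))  # 181 trials at a 16.6% target rate
```

In a free-report paradigm, by contrast, every trial yields a scorable response, so the same 30 observations would require only 30 trials.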

Taken together, when primary outcome measures are accuracy measures both verbal and manual response formats appear suitable. Although the cognitive demands differ between these response formats, substantial effects on accuracy measures and derived laterality indices appear unlikely (but deserve further investigation). Computerized paradigms additionally offer the possibility of collecting response times. This is mainly done using manual responses, although also articulation-related onset response times might be recorded via microphones. Finally, considering clinical, developmental, or ageing samples, the response format necessarily has to account for possible physical or cognitive response restrictions of the participants.

Stimulus-to-response delay

A retention interval between stimulation and response collection, as discussed above, seems to encourage subvocal rehearsal processes to keep the verbal stimulus activated in working memory (Baddeley, Citation2012). When not interfered with, these rehearsal processes apparently enhance the perceptual laterality, as increasing the retention interval from immediate recall to one or three seconds increases the magnitude of the REA (D'Anselmo et al., Citation2016). However, the retention interval also keeps the encoded stimuli vulnerable to interference from newly incoming verbal material, which decreases laterality and accuracy (Belmore, Citation1981; Voyer et al., Citation2014; Yeni-Komshian & Gordon, Citation1974). Thus, whether with or without interference, delayed response collection likely increases error variance of laterality estimates and, thus, an immediate response collection appears beneficial. This observation can additionally inform the decision regarding the format of response collection (see previous section) as, for example, a complicated response format might lead to significant response delays.

Number of responses per trial

Considering multiple-stimulus-pair trials, naturally, more than one answer is expected, with consequences for stimulus retention and report accuracy as discussed above. However, also in single-pair trials, participants have been asked to report both stimuli (Speaks et al., Citation1982; Studdert-Kennedy et al., Citation1970). Arguably, the working-memory requirements for processing these two stimuli are small in single-pair compared to multi-pair trials. Reliability measures appear comparable for sCV paradigms (comparable number of trials), with r = .61 and .62 for single-response (Hugdahl & Hammar, Citation1997) and dual-response modes (Speaks et al., Citation1982), respectively, suggesting little influence on data quality. However, direct comparisons in the same participants are missing. In addition, report-order effects cannot be excluded when participants are asked to report both stimuli. The REA was found to be stronger when determined based on the first response than when calculated on the second response (Studdert-Kennedy et al., Citation1970). Furthermore, when perceptually fusing stimulus pairs are used, it appears more natural to ask for only one response per trial, also to prevent guessing (Wexler, Citation1988). Thus, taken together, it appears reasonable to recommend using single responses in single-pair paradigms. This can, for example, be achieved by instructing participants to repeat the stimulus heard clearest or best on each trial (e.g., Hugdahl et al., Citation2009).

Inter-trial interval

Systematic studies on the effect of inter-trial intervals are missing. The typical inter-trial interval for single-trial paradigms in current behavioural studies ranges from 3000 (Hahn et al., Citation2011) to 4000 ms (Hodgetts et al., Citation2015; Westerhausen et al., Citation2015a), leaving sufficient time for response collection and not introducing time pressure. Accordingly, studies in developmental, ageing, or clinical samples might benefit from longer inter-stimulus intervals, or self-paced experimental set-ups. Multi-presentation trials naturally require longer inter-trial delays to allow for multiple answers. An additional 1000 to 2000 ms time per expected response appears suitable (Penner et al., Citation2009).

Inter-trial effects and trial order

It has been demonstrated that performance in a given trial can be influenced by the trial order, inasmuch as negative priming for repeated stimuli has been reported using sCV syllables (Sætrevik & Hugdahl, Citation2007a, Citation2007b). That is, a stimulus that is a repetition of a stimulus presented in the immediately preceding “prime” trial is less likely reported on the current “probe” trial. This negative priming is independent of whether the repeated stimulus is the right- or the left-ear stimulus of the prime trial or whether it was actually reported in the prime trial. Therefore, the measured perceptual laterality in the probe trial is systematically modulated by negative priming. The REA is significantly enhanced when the left-ear stimulus is repeated and is even turned to a left-ear advantage when the right-ear stimulus is repeated.

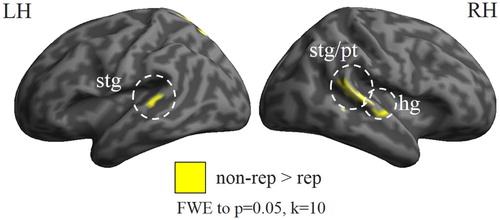

In an fMRI analysis of the same sCV paradigm, it was demonstrated that stimulus repetition is associated with suppression of brain activation (Westerhausen, Kompus, Passow, & Hugdahl, Citation2014b). Dichotic stimulation with one stimulus repeated in the probe trial evoked a reduced brain activation as compared to trials not including a repeated stimulus. As shown in Figure 3, the suppression was found bilaterally in primary auditory cortex and in posterior superior temporal sulcus. Notably, repetition suppression is usually associated with performance facilitation, such as (positive) priming, rather than negative priming (Barron, Garvert, & Behrens, Citation2016; Grill-Spector, Henson, & Martin, Citation2006). However, repetition suppression effects are typically studied with regard to a single stimulus, whereas dichotic listening naturally deals with two stimuli. The observed suppression in the above fMRI study, thus, reflects the joint response to the two presented stimuli: the neuronal response to the repeated stimulus, which can be expected to be suppressed by the repetition, as well as the neuronal response to the non-repeated stimulus, which is presumably unaffected. Taken together, the stimulus repetition would shift the relative balance of the neuronal representation of the two stimuli to favour the non-repeated stimulus. It can be speculated that this shift underlies the negative priming effect.

Figure 3. Effect of stimulus repetition in dichotic listening on the BOLD response. Results from an fMRI study on N = 113 subjects (age: 29.3 ± 7.1 years; 49 females) using sCV syllables as stimulus material. Stronger activation in trials which did not contain a repeated stimulus (non-rep), as compared to trials which repeated a stimulus from a preceding trial (rep). Significant activation differences were found bilaterally in superior temporal gyrus (stg) and planum temporale (pt), as well as in the right Heschl’s gyrus (hg). Family-wise error correction (FWE) to p = .05 and a cluster threshold of 10 voxels were applied. Reported in Westerhausen et al. (Citation2014b); reanalysed data from Kompus et al. (Citation2012).

Irrespective of the neuronal mechanisms underlying the negative priming effect, given a limited number of stimuli it appears difficult to completely avoid such “carry-over effects” from one trial to the next. It is, however, possible to control for them. Using a pseudorandomized order and carefully balancing for stimulus repetition will reduce possible negative priming biases compared to an ad-hoc computer-controlled randomization of trials.
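
One way to implement such a pseudorandomized order is sketched below. It follows a stricter strategy than balancing, namely excluding any trial that shares a syllable with the immediately preceding trial, and is meant as an illustration, not as the procedure used in the cited studies. The syllable set, the function name, and the restart-on-dead-end strategy are assumptions.

```python
import random
from itertools import permutations


def build_trial_order(syllables, seed=0, max_attempts=10000):
    """Order all dichotic (left, right) pairs so that no syllable is
    repeated from one trial to the next."""
    rng = random.Random(seed)
    trials = list(permutations(syllables, 2))  # all pairs of two different syllables
    for _ in range(max_attempts):
        remaining = trials[:]
        rng.shuffle(remaining)
        order = [remaining.pop()]
        while remaining:
            previous = set(order[-1])
            # Only trials sharing no syllable with the preceding trial qualify
            candidates = [t for t in remaining if previous.isdisjoint(t)]
            if not candidates:
                break  # dead end: discard this attempt and reshuffle
            choice = rng.choice(candidates)
            remaining.remove(choice)
            order.append(choice)
        if not remaining:
            return order
    raise RuntimeError("no valid trial order found")


# Illustrative set of six stop-consonant-vowel syllables
order = build_trial_order(["ba", "da", "ga", "pa", "ta", "ka"])
print(len(order))  # 30 dichotic trials
```

If repetitions are to be balanced across conditions rather than removed, the candidate filter would have to be replaced by a counter that tracks how often left-ear and right-ear repetitions have occurred.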

Selective attention instruction or free-report?

A cognitive factor that has to be considered in dichotic listening is voluntary, top-down attentional preference. A free-report instruction, that is, asking participants to repeat, after each trial, the stimulus/stimuli they have perceived clearest, gives participants room to develop a response strategy, for example, by deciding to direct attention selectively to the left or right ear in a voluntary fashion. This possibility not only introduces noise to the data, in the form of trial-to-trial variability; it also, if attention is consistently directed to one ear, introduces systematic biases to the assessment of perceptual laterality. Since the early dichotic “shadowing” experiments by Broadbent (Citation1952) and Cherry (Citation1953), it is known that attentional selection can be utilized to follow an auditory stream presented to one ear while efficiently ignoring a competing stream presented to the other ear. Studies assessing directed attention in dichotic listening in the context of laterality research typically reveal substantial effects on the observed laterality scores (e.g., Asbjørnsen & Bryden, Citation1996; Bloch & Hellige, Citation1989; Bryden, Munhall, & Allard, Citation1983; Foundas, Corey, Hurley, & Heilman, Citation2006; Hjelmervik et al., Citation2012; Hugdahl & Andersson, Citation1986; Moncrieff & Dubyne, Citation2017; Mondor & Bryden, Citation1991; Westerhausen et al., Citation2006). That is, when participants are instructed (verbally or cued by monaural tones) to attend selectively to the right ear, the magnitude of the right-ear advantage is increased relative to a free-recall condition. However, when instructed to attend selectively to the left ear, participants frequently reveal a left-ear advantage. Thus, voluntary biases to report stimuli from one ear (based on an experimental selective-attention instruction or initiated by arbitrary attentional strategies) have a strong modulatory effect on perceptual laterality, and have to be considered when planning an experiment using dichotic listening.

To control for attention biases, it has been suggested to replace the free-report approach with directed-attention paradigms, and to estimate perceptual laterality by only utilizing the correctly detected stimuli from the to-be-attended ear (e.g., Bryden et al., Citation1983). That is, laterality would be determined by comparing the number of correctly reported right-ear stimuli under “attend right” instruction with the number of correct left-ear reports under “attend left” instruction. Although typically yielding a right-ear advantage, this approach implicitly relies on the assumption that attending to and reporting the stimulus from the left and from the right ear demand the same cognitive processes. That is, the effect of attention is assumed to be independent of and additive to the “true” underlying perceptual laterality, so that the attentional enhancement in accuracy would be more or less comparable between the attend-left and attend-right condition. However, this equivalence assumption has been substantially challenged in recent years (Hugdahl et al., Citation2009). It has been noted that the “default” perceptual bias in favour of right-ear input makes it easier to attend to the right- than to the left-ear stimulus and that attentional selection consequently cannot be seen as an additive effect but rather represents an interaction between baseline stimulus preference and attention instruction.

This interpretation finds support in a series of studies showing a behavioural dissociation between the “attend right” and the “attend left” condition. For example, compared to healthy controls, individuals with Alzheimer’s disease (Gootjes et al., Citation2006), attention-deficit/hyperactivity disorder (Dramsdahl, Westerhausen, Haavik, Hugdahl, & Plessen, Citation2011), dyslexia (Hakvoort et al., Citation2016), Klinefelter syndrome (Kompus et al., Citation2011), and schizophrenia (Green, Hugdahl, & Mitchell, Citation1994) show an impaired ability to selectively attend to the left-ear stimulus, whereas their performance is unaltered for attending to the right ear. Studies in healthy ageing indicate that older compared to young individuals exhibit a reduced ability to follow the instruction to attend to the left-ear stimulus while no differences are found in the “attend right” condition (Takio et al., Citation2009; Westerhausen, Bless, Passow, Kompus, & Hugdahl, Citation2015b). The dissociation is further supported by studies on healthy young participants. Firstly, response times for recall and target detection under attend-left instruction are significantly slower than response times under attend-right instruction (Clark, Geffen, & Geffen, Citation1988). Secondly, imaging studies reveal activation differences between the two attention conditions (Kompus et al., Citation2012). That is, the left inferior frontal gyrus and left caudate nucleus were more strongly activated in the attend-left than in the attend-right condition, whereas right inferior frontal gyrus/caudate nucleus were activated to a comparable degree in both conditions. The left fronto-striatal differences suggest that the cognitive processes are not comparable between these two conditions, and have been interpreted to reflect the higher cognitive demands associated with the “attend-left” condition (Kompus et al., Citation2012).

Taken together, selectively attending to the right or left ear, respectively, appears to create two different experimental conditions with different cognitive demands. As a result, individuals with lower as compared to higher cognitive abilities will struggle more with the attend-left than the attend-right condition. Hence, it appears inadequate to combine correct answers of these conditions to estimate perceptual laterality. A free-report instruction, given its lower cognitive demands, appears to be a better-suited approach as it minimizes the effect that individual or group differences in cognitive abilities can potentially have on the laterality estimate. Nevertheless, the free-report set-up remains challenged by the presence of trial-to-trial or individual variability in attentional biases. One promising mitigation strategy that has been suggested (Westerhausen & Kompus, Citation2018; Wexler, Citation1988) is to only use stimulus combinations which fuse into one percept (e.g., sCV combinations from the same voicing category, or rhyming words). As discussed above, confronted with fusing stimuli, participants will often not realize that two different stimuli were presented (Westerhausen et al., Citation2013), and attention effects during response selection are minimized (Asbjørnsen & Bryden, Citation1996).

Participant variables and generalizability

Participant characteristics and exclusion criteria

Dichotic-listening paradigms are easy to perform and have a straightforward instruction, making them suitable for various research questions and samples, allowing investigators to study child development (e.g., Hakvoort et al., Citation2016; Moncrieff, Citation2015; Westerhausen, Helland, Ofte, & Hugdahl, Citation2010) and ageing (e.g., Passow et al., Citation2012; Roup et al., Citation2006; Westerhausen et al., Citation2015b) as well as address various clinical questions (e.g., Bruder et al., Citation2016; Gootjes et al., Citation2006; Tanaka, Kanzaki, Yoshibayashi, Kamiya, & Sugishita, Citation1999). However, one core prerequisite for a reliable assessment of laterality is good peripheral hearing and, even more importantly, the absence of substantial hearing acuity differences between the ears. As would be predicted, asymmetric hearing loss will bias perceptual laterality in favour of the “better” ear (Speaks, Bauer, & Carlstrom, Citation1983; Speaks, Blecha, & Schilling, Citation1980). From studies on the effect of interaural-intensity difference in stimulation, it can be predicted that acuity differences of about 6 to 12 dB have the potential to override the right-ear preference (Berlin et al., Citation1972; Hugdahl et al., Citation2008). Thus, it is essential for participants to undergo hearing testing before inclusion in a study. This can be done using a conventional pure-tone audiometric screening for speech-relevant frequencies (i.e., testing for tones of frequencies between 250 and 3000 Hz). Various exclusion criteria have been suggested but typical threshold values are (a) an overall hearing impairment of more than 20 dB (across all frequencies tested and across both ears) and (b) an inter-aural hearing threshold difference of more than 10 dB (Hugdahl et al., Citation2009).
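
The two criteria can be expressed as a simple screening rule. The sketch below is one possible reading of them and rests on assumptions: thresholds are stored per ear as a mapping from test frequency (Hz) to hearing threshold (dB), the overall impairment is taken as the mean across all frequencies and both ears, and the inter-aural difference is taken as the difference between the two per-ear means. The function name, the data layout, and the example values are hypothetical.

```python
def screen_hearing(left_db, right_db, max_mean_db=20.0, max_asymmetry_db=10.0):
    """Apply overall-threshold and inter-aural-difference criteria.

    left_db, right_db: dicts mapping frequency in Hz to threshold in dB.
    Averaging across frequencies is an assumption of this sketch.
    """
    mean_left = sum(left_db.values()) / len(left_db)
    mean_right = sum(right_db.values()) / len(right_db)
    overall = (mean_left + mean_right) / 2
    asymmetry = abs(mean_left - mean_right)
    return {
        "overall_db": overall,
        "asymmetry_db": asymmetry,
        "include": overall <= max_mean_db and asymmetry <= max_asymmetry_db,
    }


# Hypothetical audiogram for one participant
left = {250: 10, 500: 10, 1000: 15, 2000: 15, 3000: 20}
right = {250: 5, 500: 5, 1000: 5, 2000: 5, 3000: 5}
print(screen_hearing(left, right)["include"])  # True: both criteria are met
```

Returning the two computed values alongside the decision keeps the inter-aural difference available for later use, for example as a covariate in group-level analyses.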

For some samples, in particular ageing samples, an exclusion based on the overall threshold might result in a substantial dropout of participants (Van Eyken, Van Camp, & Van Laer, Citation2007). Thus, rather than excluding participants, a previous ageing study relaxed the criterion and adjusted the overall sound level to compensate for individual deficits (Passow et al., Citation2012). Analogously, asymmetrical hearing loss might be compensated by adjusting the stimulus presentation intensity in favour of the disadvantaged ear. However, such asymmetric compensation demands rigorous testing as it has not been done previously. An alternative approach could be to include inter-aural acuity differences as a covariate of non-interest in group-level analyses. The effect of interaural-intensity differences on laterality is linear (Hugdahl et al., Citation2009; Robb et al., Citation2013; Westerhausen et al., Citation2009), so that similar linear associations for inter-aural acuity differences could be predicted.

Generalizability across languages

The REA in verbal dichotic listening is a global phenomenon, as it has been reported across various languages and language families (Bless et al., 2015). Nevertheless, significant differences between languages can be found when analysing stimulus-related factors that modulate the perceptual bias. For example, while stimulus dominance of unvoiced over voiced sCV stimuli has been frequently replicated in English or Norwegian native speakers (e.g., Rimol et al., 2006; Voyer & Techentin, 2009), the effect was found to be attenuated in Estonian native speakers (Westerhausen & Kompus, 2018). That is, in a cross-language comparison, stimulus voicing explained 69% of the variance in a Norwegian sample, while it explained only 18% in an Estonian sample. This difference has been attributed to differences in the relevance of initial plosive consonant contrasts between the two languages. Norwegian, like English, is characterized by a clear distinction between voiced and unvoiced initial plosives, which usually carry semantic distinctions (e.g., /bɪn/ vs. /pɪn/). Within the standard Estonian language repertoire, this distinction does not occur (Westerhausen & Kompus, 2018). Comparably, in French native speakers, Bedoin et al. (2010) found a reversal of the voicing effect, as the voiced rather than the unvoiced stop consonant dominated the response pattern. In French, the unvoiced-voiced contrast for stop consonants is reflected in short positive vs. long negative voice-onset times, rather than in long vs. short positive onset times as in English.

Modulatory effects, as shown for the effect of voicing, may differ between languages. It has to be emphasized that most of the findings summarized in the present paper are based on samples speaking a Germanic language, mostly English and Norwegian. Other languages are less frequently used in dichotic-listening paradigms, and across-language comparisons are rarely conducted. Thus, any generalization of the modulatory effects discussed in the present review to other languages has to be taken with caution. Moreover, the stimulus material should naturally be appropriate for the native language of the participant. Homonym trials—trials binaurally presenting the same stimulus—as pretest or intermixed between the dichotic trials can serve as a test to verify whether the participants are able to identify the selected stimuli, and can help to demonstrate stimulus appropriateness.
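As an illustration of how homonym trials could be used for this check, the following minimal Python sketch computes the proportion of correct identifications per stimulus from trial-level data. The trial format (left stimulus, right stimulus, response) and the function name are assumptions made for this example; the text does not specify a minimum accuracy, so no cut-off is built in.

```python
def homonym_accuracy(trials):
    """Return the proportion of correct responses per stimulus on homonym trials.

    trials: iterable of (left_stimulus, right_stimulus, response) tuples.
    Dichotic trials (different stimuli in the two ears) are ignored.
    """
    counts = {}
    for left, right, response in trials:
        if left != right:
            continue  # dichotic trial, not a homonym trial
        hits, total = counts.get(left, (0, 0))
        counts[left] = (hits + (response == left), total + 1)
    return {stimulus: hits / total for stimulus, (hits, total) in counts.items()}
```

Stimuli with low identification accuracy on homonym trials would then be candidates for replacement before dichotic testing in the given language sample.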

Concluding remarks

The present review provides an overview of modulatory variables, all of which have the potential to affect the reliability of measures of perceptual laterality in dichotic-listening paradigms. As outlined in Table 1, these variables can also be understood as examples of more general principles, which might be helpful to consider during study design. Optimizing dichotic-listening paradigms accordingly will reduce or control sources of error variance and improve the reliability of the obtained laterality measures. However, while reliable measurements are by definition a necessary condition for obtaining valid estimates, they are not sufficient to guarantee validity. Additionally, it has to be ascertained that the paradigm indeed measures the construct it is supposed to measure. In the past, dichotic listening with verbal stimulus material (as reviewed above) has been successfully used to predict hemispheric asymmetry for speech processing as obtained from more direct assessment methods (e.g., Wada test, lesion studies). Increasing the reliability by optimizing stimulus material and testing procedures promises to further improve such inferences. However, this promise remains to be tested empirically in future validation studies. Finally, the review also underlines that dichotic-listening experiments differ substantially, with consequences for reliability and interpretation. It has to be acknowledged that the term dichotic listening refers to a class of experimental paradigms, rather than one single paradigm. It appears negligent to ignore these differences when comparing or integrating results across different paradigms. Thus, the modulatory variables outlined here might also aid the understanding of consistencies and inconsistencies in the literature in future reviews and meta-analyses.

Table 1. Five design principles for a reliable dichotic-listening paradigm, measures for adhering to these principles, and the benefits achieved.

Acknowledgements

The author would like to thank Kristiina Kompus, University of Bergen, Norway, for her thoughtful comments on an earlier version of the manuscript.

Disclosure statement

No potential conflict of interest was reported by the author.

ORCID

René Westerhausen http://orcid.org/0000-0001-7107-2712

References

- Aghamollaei, M., Jafari, Z., Tahaei, A., Toufan, R., Keyhani, M., Rahimzade, S., & Esmaeili, M. (2013). Dichotic assessment of verbal memory function: Development and validation of the Persian version of dichotic verbal memory test. Journal of the American Academy of Audiology, 24(8), 684–688.

- Arciuli, J., Rankine, T., & Monaghan, P. (2010). Auditory discrimination of voice-onset time and its relationship with reading ability. Laterality: Asymmetries of Body, Brain and Cognition, 15(3), 343–360.

- Asbjørnsen, A. E., & Bryden, M. (1996). Biased attention and the fused dichotic words test. Neuropsychologia, 34(5), 407–411.

- Babkoff, H. (1975). Dichotic temporal interactions: Fusion and temporal order. Perception & Psychophysics, 18(4), 267–272.

- Baddeley, A. D. (2012). Working memory: Theories, models, and controversies. Annual Review of Psychology, 63, 1–29.

- Baddeley, A. D., Thomson, N., & Buchanan, M. (1975). Word length and the structure of short-term memory. Journal of Verbal Learning and Verbal Behavior, 14(6), 575–589.

- Barron, H. C., Garvert, M. M., & Behrens, T. E. (2016). Repetition suppression: A means to index neural representations using BOLD? Philosophical Transactions of the Royal Society B, 371(1705), 20150355.

- Bayazıt, O., Öniz, A., Hahn, C., Güntürkün, O., & Özgören, M. (2009). Dichotic listening revisited: Trial-by-trial ERP analyses reveal intra-and interhemispheric differences. Neuropsychologia, 47(2), 536–545.

- Bedoin, N., Ferragne, E., & Marsico, E. (2010). Hemispheric asymmetries depend on the phonetic feature: A dichotic study of place of articulation and voicing in French stops. Brain and Language, 115(2), 133–140.

- Belmore, S. M. (1981). The fate of ear asymmetries in short-term memory. Brain and Language, 12(1), 101–115.

- Berlin, C. I., Lowe-Bell, S. S., Cullen Jr, J. K., Thompson, C. L., & Loovis, C. F. (1973). Dichotic speech perception: An interpretation of right-ear advantage and temporal offset effects. The Journal of the Acoustical Society of America, 53(3), 699–709.

- Berlin, C. I., Lowe-Bell, S. S., Cullen Jr, J. K., Thompson, C. L., & Stafford, M. R. (1972). Is speech “special”? Perhaps the temporal lobectomy patient can tell us. The Journal of the Acoustical Society of America, 52(2B), 702–705.

- Bless, J. J., Westerhausen, R., Arciuli, J., Kompus, K., Gudmundsen, M., & Hugdahl, K. (2013). Right on all occasions?—On the feasibility of laterality research using a smartphone dichotic listening application. Frontiers in Psychology, 4, 42. doi: 10.3389/fpsyg.2013.00042

- Bless, J. J., Westerhausen, R., Torkildsen, J. V., Gudmundsen, M., Kompus, K., & Hugdahl, K. (2015). Laterality across languages: Results from a global dichotic listening study using a smartphone application. Laterality, 1–19. doi: 10.1080/1357650X.2014.997245

- Bloch, M. I., & Hellige, J. B. (1989). Stimulus intensity, attentional instructions, and the ear advantage during dichotic listening. Brain & Cognition, 9(1), 136–148.

- Brancucci, A., Della Penna, S., Babiloni, C., Vecchio, F., Capotosto, P., Rossi, D., … Rossini, P. M. (2008). Neuromagnetic functional coupling during dichotic listening of speech sounds. Human Brain Mapping, 29(3), 253–264.

- Braun, C. M., Sapinleduc, A., Picard, C., Bonnenfant, E., Achim, A., & Daigneault, S. (1994). Zaidel's model of interhemispheric dynamics: Empirical tests, a critical appraisal, and a proposed revision. Brain & Cognition, 24(1), 57–86.

- Broadbent, D. E. (1952). Listening to one of two synchronous messages. Journal of Experimental Psychology, 44(1), 51–55.

- Broadbent, D. E. (1956). Successive responses to simultaneous stimuli. Quarterly Journal of Experimental Psychology, 8(4), 145–152.

- Bruder, G. E., Alvarenga, J., Abraham, K., Skipper, J., Warner, V., Voyer, D., … Weissman, M. M. (2016). Brain laterality, depression and anxiety disorders: New findings for emotional and verbal dichotic listening in individuals at risk for depression. Laterality: Asymmetries of Body, Brain and Cognition, 21(4–6), 525–548.

- Bryden, M. (1962). Order of report in dichotic listening. Canadian Journal of Psychology, 16, 291–299.

- Bryden, M. (1964). The manipulation of strategies of report in dichotic listening. Canadian Journal of Psychology/Revue canadienne de psychologie, 18(2), 126–138.

- Bryden, M. (1975). Speech lateralization in families: A preliminary study using dichotic listening. Brain and Language, 2, 201–211.

- Bryden, M. (1988). An overview of the dichotic listening procedure and its relation to cerebral organization. In K. Hugdahl (Ed.), Handbook of dichotic listening: Theory, methods and research (pp. 1–43). Chichester: Wiley & Sons.

- Bryden, M., & MacRae, L. (1988). Dichotic laterality effects obtained with emotional words. Neuropsychiatry, Neuropsychology, Behavioral Neurology, 1, 171–176.

- Bryden, M., Munhall, K., & Allard, F. (1983). Attentional biases and the right-ear effect in dichotic listening. Brain and Language, 18(2), 236–248.

- Bryden, M., & Murray, J. E. (1985). Toward a model of dichotic listening performance. Brain & Cognition, 4(3), 241–257.

- Bryden, M., & Sprott, D. (1981). Statistical determination of degree of laterality. Neuropsychologia, 19(4), 571–581.

- Brysbaert, M., Mandera, P., & Keuleers, E. (2018). The word frequency effect in word processing: An updated review. Current Directions in Psychological Science, 27(1), 45–50.

- Cai, Q., Lavidor, M., Brysbaert, M., Paulignan, Y., & Nazir, T. A. (2008). Cerebral lateralization of frontal lobe language processes and lateralization of the posterior visual word processing system. Journal of Cognitive Neuroscience, 20(4), 672–681.

- Cameron, S., Glyde, H., Dillon, H., Whitfield, J., & Seymour, J. (2016). The dichotic digits difference test (DDdT): Development, normative data, and test-retest reliability studies part 1. Journal of the American Academy of Audiology, 27(6), 458–469.

- Cherry, E. C. (1953). On the recognition of speech with one, and with two ears. Journal of the Acoustical Society of America, 25, 975–979.

- Clark, C., Geffen, L., & Geffen, G. (1988). Invariant properties of auditory perceptual asymmetry assessed by dichotic monitoring. In K. Hugdahl (Ed.), Handbook of dichotic listening: Theory, methods and research (pp. 71–83). Chichester: Wiley & Sons.

- Clarke, J. M., Lufkin, R. B., & Zaidel, E. (1993). Corpus callosum morphometry and dichotic listening performance: Individual differences in functional interhemispheric inhibition? Neuropsychologia, 31(6), 547–557.

- Conrad, M., & Jacobs, A. (2004). Replicating syllable frequency effects in Spanish in German: One more challenge to computational models of visual word recognition. Language and Cognitive Processes, 19(3), 369–390.

- Cowan, N. (2010). The magical mystery four: How is working memory capacity limited, and why? Current Directions in Psychological Science, 19(1), 51–57.

- Cutting, J. E. (1976). Auditory and linguistic processes in speech perception: Inferences from six fusions in dichotic listening. Psychological Review, 83(2), 114–140.

- D'Anselmo, A., Marzoli, D., & Brancucci, A. (2016). The influence of memory and attention on the ear advantage in dichotic listening. Hearing Research, 342, 144–149.

- de Bode, S., Sininger, Y., Healy, E. W., Mathern, G. W., & Zaidel, E. (2007). Dichotic listening after cerebral hemispherectomy: Methodological and theoretical observations. Neuropsychologia, 45(11), 2461–2466.

- Dos Santos Sequeira, S., Specht, K., Hämäläinen, H., & Hugdahl, K. (2008). The effects of background noise on dichotic listening to consonant–vowel syllables. Brain and Language, 107(1), 11–15.

- Dos Santos Sequeira, S., Specht, K., Moosmann, M., Westerhausen, R., & Hugdahl, K. (2010). The effects of background noise on dichotic listening to consonant-vowel syllables: An fMRI study. Laterality, 15(6), 577–596.

- Dos Santos Sequeira, S., Woerner, W., Walter, C., Kreuder, F., Lueken, U., Westerhausen, R., … Wittling, W. (2006). Handedness, dichotic-listening ear advantage, and gender effects on planum temporale asymmetry—a volumetric investigation using structural magnetic resonance imaging. Neuropsychologia, 44(4), 622–636.

- Dramsdahl, M., Westerhausen, R., Haavik, J., Hugdahl, K., & Plessen, K. J. (2011). Cognitive control in adults with attention-deficit/hyperactivity disorder. Psychiatry Research, 188(3), 406–410.

- Fernandes, M. A., & Smith, M. L. (2000). Comparing the fused dichotic words test and the intracarotid amobarbital procedure in children with epilepsy. Neuropsychologia, 38(9), 1216–1228.

- Fernandes, M. A., Smith, M., Logan, W., Crawley, A., & McAndrews, M. (2006). Comparing language lateralization determined by dichotic listening and fMRI activation in frontal and temporal lobes in children with epilepsy. Brain and Language, 96(1), 106–114.

- Foundas, A. L., Corey, D. M., Hurley, M. M., & Heilman, K. M. (2006). Verbal dichotic listening in right and left-handed adults: Laterality effects of directed attention. Cortex, 42(1), 79–86.

- Freides, D. (1977). Do dichotic listening procedures measure lateralization of information processing or retrieval strategy? Perception & Psychophysics, 21(3), 259–263.

- Geffen, G. (1976). Development of hemispheric specialization for speech perception. Cortex, 12(4), 337–346.

- Geffen, G., & Caudrey, D. (1981). Reliability and validity of the dichotic monitoring test for language laterality. Neuropsychologia, 19(3), 413–423.

- Gootjes, L., Bouma, A., Van Strien, J. W., Van Schijndel, R., Barkhof, F., & Scheltens, P. (2006). Corpus callosum size correlates with asymmetric performance on a dichotic listening task in healthy aging but not in Alzheimer's disease. Neuropsychologia, 44(2), 208–217. doi: 10.1016/j.neuropsychologia.2005.05.002