ABSTRACT

The left hemisphere is known to be generally predominant in verbal processing and the right hemisphere in non-verbal processing. We studied whether verbal and non-verbal lateralization is present in haptics by comparing discrimination performance between letters and nonsense shapes. We addressed stimulus complexity by introducing lower case letters, which are verbally identical with upper case letters but have a more complex shape. The participants performed a same-different haptic discrimination task for upper and lower case letters and nonsense shapes with the left and right hand separately. We used signal detection theory to determine discriminability (d′) and criterion (c), and we measured reaction times. Discrimination was better for the left hand for nonsense shapes, marginally but not significantly better for the right hand for upper case letters, and did not differ between the hands for lower case letters. For lower case letters, the right hand showed a strong bias to respond “different”, while the left hand showed faster reaction times. Our results are in agreement with the right lateralization for non-verbal material. Complexity of the verbal shape is important in haptics, as the lower case letters seem to be processed as less verbal and more as spatial shapes than the upper case letters.

Introduction

It is now well established that the two brain hemispheres differ with regard to the way and degree in which they are involved in cognitive processes (Gazzaniga, 1995, 2005). Functional brain lateralization, or asymmetry, is understood as the left or right hemisphere being specialized for certain functions. The advantage of one hemisphere over the other is typically manifested by differential neural and behavioural performance with lateralized activation in the cortex, faster reaction times or greater accuracy rates for that particular task.

Arguably, the most studied lateralized function is verbal versus non-verbal processing, which is typically measured by the accuracy of the performance or processing speed of certain verbal/non-verbal tasks. The left hemisphere is predominant for language in the majority of right-handed people (Knecht et al., 2000; Somers et al., 2015). Therefore, a left hemisphere advantage is expected and generally found for verbal material, while a right hemisphere advantage is found for non-verbal or spatial processing, assessed with either visual or auditory stimuli (see Mildner, 2007, for a review).

Previous studies on laterality have mainly used visual or auditory stimuli and relatively less is known about lateralization in the tactile modality. There is some evidence from neuroimaging studies for distinct involvement of the hemispheres in various tactile discrimination tasks (Stoeckel et al., 2003, 2004). For example, in the Stoeckel et al. (2004) study, participants had to discriminate between pairs of shapes (parallelepipeds) presented with an inter-stimulus interval of approximately 15 s and explored with the right hand. The authors reported laterality effects at different processing stages of the discrimination task with the right superior parietal lobule predominantly activated at the discrimination stage of this task, and the left superior parietal lobule predominantly activated during the interval to support the maintenance of the information. However, as only the right hand was used for discrimination in this study, a comparison with left-hand performance would be needed in order to make conclusions on hemispheric laterality. Van Boven, Ingeholm, Beauchamp, Bikle, and Ungerleider (2005) reported evidence for a lateralization of function in a tactile discrimination task involving grating orientation or grating location along a finger. In particular, for each participant, the tactile grating stimuli were passively presented to the index finger of either their left or right hand. The results suggested greater activation in the left intraparietal sulcus for the tactile orientation task, and greater activation in the right temporoparietal junction during the grating location task. The authors concluded that the left hemisphere was more associated with processing of the fine spatial details of the tactile grating whilst the right hemisphere had an advantage for processing tactile shape as a whole, which is more useful for determining tactile location.

Behavioural studies on tactile laterality have focused on hand-dependent performance as a measure for contralateral hemispheric laterality. This is based on the anatomical structure of the lemniscal system which carries information for discriminative touch from one side of the body, crosses over the midline and projects information to the opposite hemisphere. Behavioural studies of tactile laterality aim to stimulate one side of the body in order to invoke activation in the opposite hemisphere. Hence, in tactile tasks, any difference in terms of accuracy or reaction times across the hands can be interpreted as an advantage for one of the hands, and the respective contralateral hemisphere, over the other hand (Mildner, 2007).

Most of the early studies in the tactile domain have used nonverbal stimuli such as pressure sensitivity, point localization, two-point discrimination, vibrotactile discrimination and line orientation discrimination to study lateralization, although some studies have explored laterality in verbal processing (see Bryden, 1982 for a review). Lateralization to the right hemisphere for non-verbal tasks with tactile stimuli has been reported (Benton, Levin, & Varney, 1973; Cohen & Levy, 1986; Dodds, 1978; Fagot, Lacreuse, & Vauclair, 1997; Witelson, 1974). However, a left-hemisphere advantage for verbal tactile material does not always emerge (see Fagot et al., 1997 for a review; Witelson, 1974). Moreover, some studies have found lateralization to the right hemisphere even for verbal material (O'Boyle, Van Wyhe-Lawler, & Miller, 1987; Walch & Blanc-Garin, 1987). Also, there is some evidence for left-hand advantage in reading Braille letters although the results have been inconsistent (see Fagot et al., 1997 for a review). Thus, it has been suggested that the lateralization in the tactile modality might differ from that in the visual or auditory domains in that verbal material may be better processed by the right hemisphere because it is initially encoded as spatial information before it is transferred into a verbal code (Witelson, 1974). However, there are studies which have found support for a double dissociation in the tactile modality similar to that of other modalities, with evidence for a preference for verbal information within the left and non-verbal within the right hemisphere (Borgo, Semenza, & Puntin, 2004; Oscar-Berman, Rehbein, Porfert, & Goodglass, 1978).

Borgo et al. (2004) argued that one of the reasons why a left hemisphere advantage for verbal stimuli in the tactile modality seems to be elusive may be the lack of control over the participant's encoding of the stimuli during exploration. The authors hypothesized that laterality effects depend not only on stimulus type but also on the encoding strategy. In their study, they used upper and lower case letters as verbal stimuli and notched circles as non-verbal stimuli for haptic discrimination. The authors aimed to control for the encoding strategy by introducing two conditions for the letter task; participants had to match the pairs of letters for similarity based on either their physical identity (AA) or name identity (Aa). In addition to the left-hand advantage for the nonverbal discrimination of stimuli (notched circles), there was a right-hand advantage for matching letters only in the name identity condition, which was considered as the real verbal task, but not in the physical condition, which was considered as a spatial task. This finding suggested that the encoding strategy (verbal vs. spatial) is an important factor in laterality effects. The results reported by Passarotti, Banich, Sood, and Wang (2002) also support the idea of a left hemisphere advantage for haptically explored geometrical shapes which were compared categorically, and a right hemisphere advantage when the shapes had to be compared by physical features. In the categorical matching task, participants had to decide whether two shapes belonged to the same shape category (e.g., both triangles), while in the physical matching task they had to decide whether the two shapes were physically identical (identical triangles). The categorical and physical tasks in this experiment share similar characteristics to the name identity and physical identity tasks, respectively, reported by Borgo et al. (2004).
Taken together, these two studies suggest that not only stimulus characteristics, but also the task demands can have an impact on hemispheric preferences. This perhaps sheds light on why some studies failed to find evidence for laterality effects based on either the verbal or non-verbal nature of the tactile stimulus types (Dowell et al., 2018; Stoycheva & Tiippana, 2018). In sum, evidence for tactile laterality effects is not consistent across studies and thus, laterality in the tactile domain is far less understood than in vision and audition.

In a previous study, we investigated haptic laterality for verbal and non-verbal stimuli (Stoycheva & Tiippana, 2018). We used a discrimination task to measure performance in each of the two hands separately for three types of shapes: letters, geometric shapes and nonsense shapes. We chose these stimulus types so that they differed in the possibility of being verbalized, with the assumption that letters were the most verbal (i.e., easily named), followed by geometrical stimuli, and the nonsense shapes were the least verbal. Nonsense shapes were considered as non-verbal because they were least likely to be associated with common names. In contrast to nonsense shapes, geometrical shapes were spatial stimuli that could nevertheless be associated with verbal information, such as a name or category, and thus we assumed that they fell in the middle of the verbal-non-verbal continuum. Stoycheva and Tiippana (2018) used a same-different task using stimulus pairs, and performance was determined, using signal detection theory, as discriminability (d′) (Macmillan & Creelman, 2005). Each stimulus was actively explored with one hand for 1 s and retention intervals of 5, 15 and 30 s were used between the stimuli in the pair as there is evidence that longer retention can amplify laterality differences (Evans & Federmeier, 2007; Moscovitch, 1979, for a review; Oliveira, Perea, Ladera, & Gamito, 2013). Indeed, we found a difference in performance between the hands over different retention times. While performance with the right hand/left hemisphere decreased with increasing retention time, performance with the left hand/right hemisphere was maintained up to 15 s inter-stimulus interval.

Interestingly, performance between the hands was not affected by stimulus type, which suggests that there was no laterality effect for verbal or non-verbal stimuli. Performance was worse for the nonsense shapes compared to the letters and geometrical shapes but there was no performance difference between the latter two (i.e., similar performance for letters and geometrical shapes), possibly because both can be encoded verbally or due to other contributing factors such as complexity, concreteness, meaningfulness or familiarity. If letters and geometric shapes share similar characteristics, then indeed that might have contributed to similar performance in their discriminability. Thus, an interesting open question is whether haptic performance would differ between verbal stimuli which clearly differ in some of these other characteristics.

Aims and hypotheses

In the present study, we further investigated the haptic processing of verbal and non-verbal shapes by each of the two hands. We used the same discrimination task as in our previous study (Stoycheva & Tiippana, 2018). We used three stimulus types: upper case letters, lower case letters and nonsense shapes. Upper and lower case letters were verbal stimuli, and nonsense shapes were considered as non-verbal stimuli. In accordance with the participants’ native language, we used letters from the Latin alphabet in the current study, while in our previous study letters were chosen from the Cyrillic alphabet. Unlike the previous study, geometrical shapes were not used, as performance with them did not differ from that with letters. Instead, lower case letters were introduced in the current study.

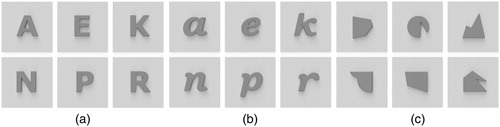

A second aim was to look at stimulus complexity as one of the characteristics that may have contributed to our previous results by varying not only verbality but also shape complexity. Specifically, our aim was to investigate how complexity of shape interacts with verbality to affect performance in each of the hands in a haptic discrimination task. To that end, two letter types, upper and lower case letters matched in verbality and meaning, were used as stimuli in order to vary shape complexity within the verbal group. Here we defined letter shape complexity in reference to the typography of the letter, in which a serif font type is categorized as more complex (i.e., with more features) than a sans serif font. The upper case letter stimuli were printed in sans serif font (Verdana) and lower case in serif font (Bookman Old Style) as well as being italicized (see ). The upper case letters, therefore, consisted of straight and simple strokes, whereas lower case letters contained more features, including curves and intricate elements. We assumed that because of the curvier and more intricate font style, the lower case letters might require more engagement of spatial encoding and spatial transformation than the upper case letters, which consisted of simpler elements. The nonsense shapes were novel, complex shapes not associated with commonly accepted names. As they were difficult to verbalize, they were more likely to be associated with non-verbal processing.

If the ability to verbalize the stimulus determines performance, we expected that the letter shapes (upper and lower case) would be more readily discriminated than the nonsense shapes. More importantly, a right hand-advantage would be expected for both letter shapes. However, if shape complexity plays an important role in haptic discrimination, regardless of the verbal nature of the stimuli, then a difference in performance would be expected between the upper and lower case letters. Furthermore, a left-hand advantage might be expected for nonsense shapes.

In addition to performance (discriminability or d′) and response bias (criterion), we also measured reaction times. According to previous literature on lateralization, faster reaction times could be expected with the right hand for verbal stimuli and with the left hand for nonsense stimuli. Furthermore, increasing the complexity of the shape may increase the reaction time; therefore, reaction times may be faster for upper case letters than for lower case letters.

Methods

Participants

The participants were 24 right-handed students (20 females and 4 males) recruited from the University of Helsinki. All reported the Finnish language as their mother tongue. Their ages ranged from 22 to 50 years, with a mean of 26.3 years (SD = 7.03). They all volunteered to take part in the experiment and received course credit for participation. All participants completed questionnaires indicating that none had neurological, learning, language, memory or sensory deficits. The research received ethical approval from the University of Helsinki Review Board in the Humanities and Social and Behavioural Sciences.

Stimuli and apparatus

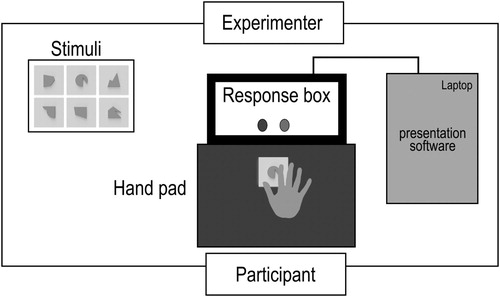

The apparatus consisted of a hand pad and response box connected to a laptop, which were placed on the experimental table (see Figure 1). Participants wore black glasses during the actual experiment; thus, apparatus and stimuli were out of view of the participant during the trials. Participants were instructed to sit comfortably on a chair with one hand (left or right, depending on the experimental condition) rested on the hand pad, which was placed centrally in front of the participant’s body. The hand was positioned facing downwards, with the palm and fingers resting on the pad. Prior to a trial, the participant lifted their hand slightly from the pad so that the experimenter could position a stimulus on the pad, oriented towards the participant. Stimuli were always presented in sequence, one at a time. Responses were provided by the participant using response buttons on a Cedrus RB-840 response box, which was positioned centrally behind the hand pad (see Figure 1). Presentation software (Neurobehavioral Systems, Albany, CA, USA) was used to run the experiment on a laptop (HP Elitebook, 8460p) and to record the participant’s responses and response times provided via the response box. The presentation order of the stimuli was displayed on the monitor for the experimenter.

Figure 1. Schematic illustration of the apparatus used in the Experiment. The apparatus and stimuli were out of view of the participant during the trials.

The haptic stimulus set comprised three types: upper case letters, lower case letters and nonsense shapes. Each stimulus type included six shapes, as illustrated in . All stimuli were raised shapes which were 3D printed from gray plastic. All three types of stimuli were scaled in size to the approximate dimensions of 4 cm in length, 4 cm in width and 0.7 cm in depth. Each stimulus was glued onto an individual Plexiglass platform of 10 cm × 10 cm × 0.3 cm. The letters used were A, E, K, N, P and R, chosen from the Latin alphabet, and all these letters are commonly used in Finnish. The upper case letters were presented in Verdana font (bold, approximately 144 pt in size). The lower case letters were presented in Bookman Old Style (semi-bold and italic, 199 pt to yield letter sizes comparable to the upper case letters). The shapes of some lower case letters were additionally modified manually to remove distinctive features of individual letters due to the Bookman Old Style typeface (such as right angles and corners) and to ensure that all serif features were curved in shape. The nonsense shape stimuli were the same as those used in our previous experimental design (Stoycheva & Tiippana, 2018). 3D modelling of raised 2D shapes was done with Autodesk Fusion 360 (San Rafael, CA, USA) to modify all objects with 1 mm fillet on the top edges and outer corners. 3D printing was done with the Form 2 printer from Formlabs (Somerville, MA, USA), with standard grey resin (V4) at 100 µm resolution. The printouts were further manually touched up to remove any 3D printing-related artefacts.

Design

The experiment was based on a fully factorial, within-subjects design with stimulus type (3: upper case letters, lower case letters and nonsense shapes) and hand used for stimulus exploration (2: left or right) as factors. Stimulus complexity was nested within this design. Each participant performed six experimental blocks, that is, three stimulus types with the left and with the right hand separately. Each block consisted of 30 same and 30 different stimulus pairs.

Half of the participants started with the left hand and the other half with the right hand. Thus, all three stimulus types were first performed with one hand, and after that in the same order with the other hand. For example, the left hand started with upper case letters, then lower case letters and last nonsense shapes, after which the right hand performed upper case letters, lower case letters and nonsense shapes in the same order as the left hand. The order of the stimulus blocks was counterbalanced across participants and trial order was randomized within each stimulus block.
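As an illustration only, one way to assemble a block of 30 same and 30 different pairs from six shapes is sketched below. The source does not state how the pairs were composed, so the construction here (all 30 ordered pairs of distinct shapes, plus each shape paired with itself five times) is an assumption, and `build_block` and its parameters are hypothetical names.

```python
import itertools
import random


def build_block(shapes, n_same_repeats=5, seed=None):
    """Return a shuffled list of (first, second, label) trials.

    Assumption (not stated in the source): "different" trials are all
    ordered pairs of distinct shapes, and "same" trials repeat each
    shape n_same_repeats times.
    """
    rng = random.Random(seed)
    different = [(a, b, "different")
                 for a, b in itertools.permutations(shapes, 2)]
    same = [(s, s, "same") for s in shapes for _ in range(n_same_repeats)]
    trials = different + same
    rng.shuffle(trials)  # trial order randomized within the block
    return trials


block = build_block(["A", "E", "K", "N", "P", "R"], seed=1)
n_same = sum(1 for t in block if t[2] == "same")
n_different = sum(1 for t in block if t[2] == "different")
```

With six shapes this yields 6 × 5 = 30 different pairs and 6 × 5 = 30 same pairs, matching the block size described above.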

Performance was measured as discriminability (d′), criterion (c) and response times.

Procedure

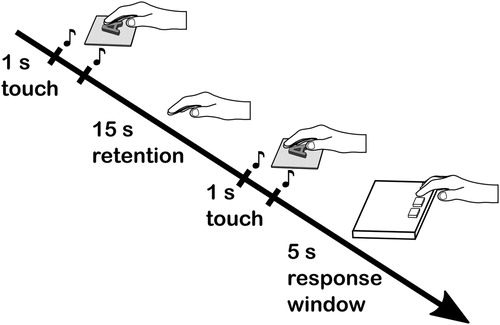

The experiment was based on a “same-different” discrimination task in which two stimuli were presented in succession in a trial as illustrated in . In each trial, the participant was instructed to explore the first stimulus with the left or right hand (depending on the test condition), then explore the second stimulus using the same hand and to respond whether the stimuli were the same or different as fast and as accurately as possible. Prior to the main experiment, the participants were able to see the stimulus set before each experimental block, but the task was performed using haptics only and the stimuli were out of view. Thus, during the task, the participants wore black glasses which prevented them from seeing the stimuli in each trial but still allowed for normal movement of the eyes. All letter stimuli were presented facing the participant in an upright orientation. The orientation of the nonsense stimuli was consistent within a trial and across blocks.

Participants could explore each stimulus in a trial for 1 s, and a retention interval (inter-stimulus interval) of 15 s separated the first and second stimulus. A time limit for responding was set at 5 s. The timing of each sequence of events was indicated by a 0.3 s, 55 dB(A) SPL click sound for the start and end of the exploration time. The participant responded by pushing one of two buttons on the response pad with the same hand which was used for tactile exploration. The left button was pushed to respond “same” and the right button to respond “different”. Prior to the main experiment, the participants received a short training session with a few stimuli which were not used in the experiment.

The experiment was typically completed over two sessions of three blocks each. Participants were given a self-timed break between each experimental block. Altogether, the experiment took about 3 h for each participant to complete, and in most cases (80% of participants) it was completed over 2 days (on average, one 1.5 h session per day); otherwise, one session was conducted in the morning and the other in the afternoon.

Analyses

To analyse the results, we applied signal detection theory and thus calculated the discriminability index d′ and the criterion c (Macmillan & Creelman, 2005). We also recorded the reaction times (RT). Values of d′ indicated how accurately the stimuli were discriminated: the higher the value, the better the performance. The parameter c measured the tendency or the bias of the participant to respond either as “same” or “different”. According to the signal detection theory, we defined our correct and incorrect responses as shown in Table 1. We calculated hits (HIT) and false alarms (FA) so that a HIT meant that two “different” stimuli were correctly discriminated as “different” and an FA meant that two “same” stimuli were incorrectly identified as “different”. The HIT and FA values were transformed into z-values. The discriminability index was calculated by the formula d′ = z(HIT) − z(FA) and the criterion by the formula c = −0.5 [z(HIT) + z(FA)]. HIT values of 1 were adjusted with the formula 1 − 1/(2N) and FA values of 0 were substituted with the formula 1/(2N), where N is the number of trials, which in our task is the number of stimulus pairs (Miller, 1996).
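The calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than the analysis code used in the study: the function names are hypothetical, and applying the 1/(2N) adjustment symmetrically to any rate of 0 or 1 (not only HIT = 1 and FA = 0) is an assumption made for robustness.

```python
from statistics import NormalDist


def adjust_rate(count, n):
    """Convert a count to a rate, replacing 1 with 1 - 1/(2N) and 0 with 1/(2N).

    Assumption: the adjustment is applied to both extremes for both
    hits and false alarms.
    """
    rate = count / n
    if rate == 1.0:
        return 1 - 1 / (2 * n)
    if rate == 0.0:
        return 1 / (2 * n)
    return rate


def dprime_and_criterion(hits, n_different, false_alarms, n_same):
    """Return (d', c), where a hit is a 'different' response to a different pair."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    z_hit = z(adjust_rate(hits, n_different))
    z_fa = z(adjust_rate(false_alarms, n_same))
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


# Hypothetical block: 24 of 30 different pairs and 24 of 30 same pairs correct.
d, c = dprime_and_criterion(hits=24, n_different=30, false_alarms=6, n_same=30)
# Hypothetical perfect block, which requires the 1/(2N) adjustment.
d_perfect, c_perfect = dprime_and_criterion(30, 30, 0, 30)
```

Because a hit is defined as a correct “different” response, a negative c under this convention indicates a tendency to respond “different” and a positive c a tendency to respond “same”.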

Table 1. Definitions of the values which were calculated for signal detection theory analyses.

We conducted a repeated measures ANOVA where d′ and c were dependent variables while the stimulus type (upper case letters, lower case letters, nonsense shapes) and hand (left and right) were independent variables. With the statistical test for d′ we measured the accuracy of each hand at discriminating each stimulus type. We applied pairwise comparisons (Bonferroni corrections) for the interaction between hand and stimulus type. This allowed us to investigate the hypothesis that hand performance is associated with greater accuracy for certain stimulus types and to compare performance across different stimulus types for each hand. The statistical test for c was run to investigate whether there was a bias to respond “same” or “different” across trials. In this test, pairwise comparisons (Bonferroni corrections) were applied to the interaction between hand and stimulus type. This allowed us to compare differences between hands/hemispheres in making a “same” or “different” decision according to different stimulus types.

For reaction times, the type of correct response (hit, correct rejection) was an additional independent variable, in order to test whether there was an influence of the type of correct response (hits or correct rejections; see Table 1) on reaction times. Thus, we conducted a repeated measures ANOVA on RTs with response type (hits and correct rejections), stimulus type and hand as factors. This analysis allowed us to test whether response times were affected by hand, stimulus type or specific correct response. A difference in response times across hands for certain responses may suggest the relative involvement of each hemisphere in making a decision for sameness or difference across stimuli.

The assumption of normality was met for all data with the exception of the criterion measure for nonsense stimuli explored by the right hand (p = 0.04). There were two violations of the sphericity assumption, which were corrected with Greenhouse–Geisser corrections. The first was for hand in the c (criterion) test and the other was for correct responses (hit, correct rejection) for lower case letters in the reaction time test. The outliers in the reaction time measures were removed from the analyses, using ±3 * MAD (median absolute deviation) as the criterion (Leys, Ley, Klein, Bernard, & Licata, 2013). The outlier response count (across all participants) was 145 for hits and 139 for correct rejections. Together, these outliers represent 3.3% of the total responses (count 8640).
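The MAD-based exclusion rule can be illustrated with the short sketch below. It is not the authors' code: `remove_mad_outliers` is a hypothetical name, and scaling the MAD by 1.4826 (the consistency constant for normally distributed data, as commonly recommended for this method) is an assumption, since the text only states the ±3 * MAD criterion.

```python
from statistics import median


def remove_mad_outliers(values, threshold=3.0, scale=1.4826):
    """Keep values within median ± threshold * MAD.

    Assumption: the MAD is multiplied by 1.4826; the source states only
    the ±3 * MAD criterion.
    """
    values = list(values)
    med = median(values)
    mad = scale * median([abs(v - med) for v in values])
    if mad == 0:
        # No spread around the median, so no value can be flagged.
        return values
    return [v for v in values if abs(v - med) <= threshold * mad]


# Hypothetical reaction times in seconds; 5.0 is far from the rest.
rts = [1.0, 1.1, 0.9, 1.2, 1.0, 5.0]
kept = remove_mad_outliers(rts)
```

Unlike a rule based on the mean and standard deviation, the median and MAD are barely affected by the extreme values being screened, which is the motivation for this criterion.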

Results

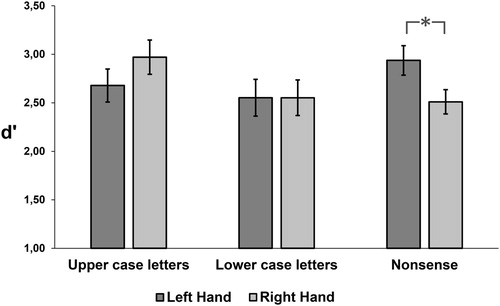

Discriminability d′

There was no main effect of stimulus type or hand for d′. However, the interaction between these factors was significant [F (2,46) = 7.39, p < 0.001] as shown in . The pairwise comparisons showed that the performance of the left hand was better than the right hand for nonsense shapes (p < 0.01). Although performance of the right hand was marginally better than the left hand for upper case letters, this comparison failed to reach significance (p = 0.054). Furthermore, the performance of the right hand was better for upper case letters than for lower case letters (p < 0.03) and nonsense shapes (p < 0.01).

Criterion c

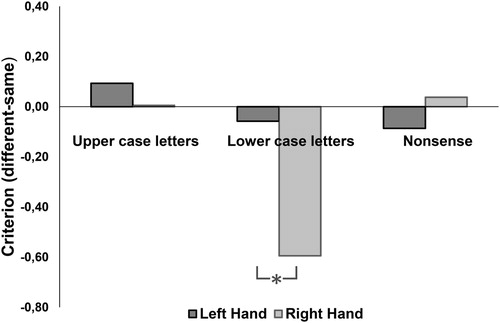

There was a main effect of stimulus type [F(2, 46) = 43.2, p < 0.001] and a main effect of hand [F(1, 23) = 12.1, p < 0.01]. Also, the interaction between stimulus type and hand was significant [F(2, 46) = 22.1, p < 0.001] as shown in Figure 5.

Figure 5. Plot showing Criterion c responses in the haptic discrimination for upper- and lower-case letters and nonsense shapes across the left and right hands.

The pairwise comparisons showed a significant difference between lower case letters compared to upper case letters (p < 0.001) and to nonsense shapes (p < 0.001) for the right hand. For lower case letters there was a bias to respond “different” (c = −0.32), while upper case letters (c = 0.09) and nonsense shapes (c = 0.02) showed little bias when responding with the right hand (Figure 5). There were no significant biases for the left hand or other stimulus types.

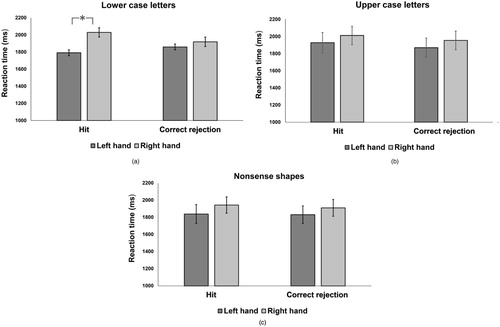

Reaction time (RT)

There was a three-way interaction (p < 0.03), with no other significant findings. As can be seen from Figure 6, the reaction times for hits and correct rejections differed across stimulus types and hands. Consequently, we conducted further separate ANOVAs for each stimulus type, with response type and hand as factors.

Figure 6. Reaction times for hit responses (different pairs correctly judged as different) and correct rejections (same pairs correctly judged as same) for lower case letters (a), upper case letters (b), and nonsense shapes (c).

We found that for lower case letters, the main effect of hand approached significance (p = 0.052) and the interaction between hand and response was significant (p < 0.001). Pairwise comparisons showed that hit responses by the left hand were faster than those by the right hand (see Figure 6(a)). There was no significant effect for the other two stimulus types (see Figure 6(b,c)).

Discussion

Differences between the two hemispheres in the ability to distinguish verbal and nonverbal stimuli

We found evidence for functional laterality in a haptic discrimination task since performance by the left hand was better than the right hand for nonsense shapes. Additionally, the right hand showed a tendency for better performance in discriminating upper case letters but the hand difference did not reach statistical significance.

Our results are not in complete agreement with previous studies which found the usual division of better performance by the right hand (left hemisphere) for letters and by the left hand (right hemisphere) for non-verbal shapes in the tactile modality (Borgo et al., 2004; Oscar-Berman et al., 1978). Our result of a left-hand advantage for the discrimination of non-verbal shapes is in line with previous findings from tactile research where only non-verbal stimuli were used (Fagot & Vauclair, 1993, 97; Summers & Lederman, 1990 for a review). Most non-verbal stimuli used in tactile studies are very simple, such as two-point discrimination or frequency discrimination; therefore, our result extends the evidence for a left hand-right hemisphere advantage to more complex non-verbal stimuli such as the nonsense 2D shapes used in the current experiment.

The responses to the upper case letters did not suggest a clear advantage for right hand-left hemisphere. That might be because participants relied on spatial encoding as well, which may have reduced the verbal effect. This result suggests that in the tactile modality it is more difficult to obtain a clear left hemisphere advantage for verbal stimuli than a right hemisphere advantage for non-verbal stimuli. There are a number of reasons why this may be the case, including the nature of encoding of object stimuli in the tactile domain, which is serial by nature. Moreover, haptic processing may rely more on spatial encoding of the shape before verbal encoding can occur (see Fagot et al., 1997 for a review; Witelson, 1974).

Performance by the right hand was significantly better with the upper case than with lower case letters. As the lower case letters were included in this study in order to introduce greater complexity in the physical features of the verbal stimuli, this result suggests that letter complexity may indeed have played a role regardless of verbality. Thus, even within the stimulus types considered as verbal, the results suggest that the spatial features of the letters can influence performance. We assumed that our lower case letters, though still verbal, were more complex than upper case letters due to differences in typography associated with the spatial features of the letters (serif and italics). Hence, worse performance of the right hand for lower than upper case letters suggests that more complex letter shapes were less legible and consequently were more poorly discriminated by the right hand-left hemisphere compared to shapes with straighter and simpler elements. This finding is consistent with the conclusion made by Bradshaw and Nettleton (1983) in their review on hemispheric asymmetries in the visual domain. The authors argued that the right visual field-left hemisphere advantage is more likely to appear for simpler shapes while the left visual field-right hemisphere advantage is expected for more complex items. Also in the visual domain, it was found that all upper case text was more legible than all lower case and mixed case text (Arditi & Cho, 2007). Thus, perhaps due to their simpler and more legible shapes, the upper case letters used in the current study may have influenced the distinction between the hands with better right hand-left hemisphere discrimination, while such a tendency did not appear for lower case letters. However, the lower case letters did invoke a left hand-right hemisphere advantage in terms of reaction times, a result which will be discussed below.

The lower case letters were the only stimuli which did not show any tendency for a laterality effect in performance (measured by the discriminability index d′). Whilst performance by the right hand was significantly better for upper than for lower case letters, the performance of the right hand for lower case letters did not differ from that for nonsense shapes. This is in contrast to what was expected, that is, better performance for the lower case letters than for the nonsense shapes due to verbal coding. It may be that lower case letters and nonsense shapes were equally complex, which overrode the advantage of a verbal code. The upper case letters were better discriminated than lower case letters and nonsense shapes with the right hand, presumably because of easier verbal recoding. The nonsense shapes were better discriminated by the left hand, presumably because of the advantage of the right hemisphere in perceiving spatial complexity. It is possible that the lower case letters represented shapes that invoked both verbal and spatial coding, by the left and right hemisphere, respectively.

However, even though the lower case letters were familiar in identity, the specific shape in which they were presented may have made them ambiguous and difficult to recognize as letters, and therefore less likely to be verbally coded. For example, Mildner (2007) noted that a left visual field advantage can emerge initially during a difficult and novel verbal task because of the involvement of the right hemisphere in the pre-processing of novel material. Similar pre-processing by the right hemisphere was hypothesized by Bryden and Allard (1976) in a divided visual field study on identifying upper case letters in different typefaces, some of which were quite unusual. There was a left visual field-right hemisphere advantage for some of the typefaces in the verbal identification of letters. The authors explained this finding as a need for feature-based pre-processing of the difficult material before that material could be regarded as verbal. Hence, in visuo-spatially challenging conditions, the involvement of the right hemisphere may be strong enough to reduce or even supersede the left hemisphere advantage for verbal material. In agreement with this idea, a right hemisphere advantage has been found for the identification of masked visual letters versus a left hemisphere advantage for the same letters unmasked (Polich, 1978). In another visual study, rounded and straight Cyrillic letters were presented in either the left or the right visual field for identification through naming (Pentcheva, Velichkova, & Lalova, 1999). Pentcheva et al. found a left visual field-right hemisphere advantage for the recognition of the rounded letters. In a more recent study, Asanowicz, Smigasiewicz, and Verleger (2013) reported a right hemisphere advantage for identifying visually presented letters and digits in a divided visual field paradigm involving the rapid presentation of targets and non-target strings.
These studies in the visual domain support the notion that a hemispheric advantage depends not solely on verbality but also on the physical characteristics of the stimuli and the nature of the task. In the current study, the lower case letters may have been more intricate and unusual than the upper case letters. The left hemisphere, which was generally expected to show an advantage for verbal stimuli, might have been challenged significantly more by the lower case letters because their verbal characteristics were combined with a highly complex spatial structure. Thus, the involvement of the right hemisphere in the pre-processing of the difficult material might have prevented the emergence of the left hemisphere advantage.

In our previous study, we did not find evidence for a right-hand advantage for letters (Stoycheva & Tiippana, 2018), although this could be related to the specific shape of the letter stimuli used in that study. In the current study, the Latin upper case letters were made with straighter and simpler line elements, whilst the previous study used Cyrillic letters that had curvier and more complex elements. Jordanova and Bogdanova (1997) studied haptic discrimination using a same-different judgement task with upper case Cyrillic letters and found that letters composed of curvy elements (e.g., “C”, “З”) were poorly recognized relative to letters with straight elements (e.g., “T”, “X”). The authors associated the curvy configurations with higher perceptual demands, which consequently decreased accuracy. Thus, in the study conducted by Stoycheva and Tiippana (2018), the curvy Cyrillic letter shapes might have invoked a stronger involvement of the right hemisphere in order to deal with the perceptual demands, which may have abolished the left hemisphere advantage.

Response bias of the hemispheres in making “same” and “different” decisions

As regards the response criterion, there was a stronger bias towards responding “different” to the lower case letters when the right hand was used than in any other condition. There was little or no bias in the responses to the upper case letters and nonsense shapes. This contrasts with the finding that lower case letters were the only stimulus type which did not evoke a tendency for laterality effects in discriminability. Such distinct processing might be partially due to the stimulus characteristics, which are nominally verbal but have a rather complex spatial structure. Additionally, the right-hand bias towards a “different” response might also be related to more analytical processing in the left hemisphere. That is, the left hemisphere tends to process information in an analytical way, whereas the right hemisphere tends to use a more holistic approach (see Bradshaw & Nettleton, 1983; Mildner, 2007, for reviews). Analytical processing is understood as encoding the stimulus sequentially and extracting specific details, while holistic processing refers to perceiving the stimulus as a whole, attending to the overall configuration and synthesizing the stimulus information. In our study, the lower case letters might have invoked a more analytical coding strategy than the upper case letters. This emphasis on detail may have resulted in a decision bias towards “different” rather than “same” for the right hand.
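
To make the distinction between discriminability and response bias concrete, the following minimal sketch computes d′ and c from the response counts of one hand and stimulus type. It is an illustration under stated assumptions, not necessarily the computation used in this study: it applies the standard yes/no formulas of signal detection theory, treats a “different” response on a different trial as a hit and a “different” response on a same trial as a false alarm, and uses a log-linear correction to avoid infinite z-scores. Same-different designs can also be analysed with other decision models. The function name and the example counts are hypothetical.

```python
from statistics import NormalDist


def sdt_indices(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) under the yes/no equal-variance model.

    Assumed coding (illustrative only): "different" is the signal, so a hit
    is responding "different" on a different trial and a false alarm is
    responding "different" on a same trial.
    """
    # Log-linear correction (assumption): add 0.5 to each cell so that
    # rates of exactly 0 or 1 never produce infinite z-scores.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Hypothetical counts, not data from the study.
unbiased = sdt_indices(hits=40, misses=10, false_alarms=10, correct_rejections=40)
biased = sdt_indices(hits=45, misses=5, false_alarms=25, correct_rejections=25)
print(unbiased, biased)
```

Under this coding, a criterion near zero indicates no bias, and a negative criterion indicates a tendency to respond “different” whether or not the stimuli actually differ, so two conditions can share a similar d′ while differing in c.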

Reaction time responses of the hands/hemispheres

The analysis of response times suggested a left hand-right hemisphere advantage. Even though the results did not support a double dissociation in response times to trials evoking a “different” versus a “same” response, we found that, for lower case letters only, the left hand was faster than the right hand when responding “different”, with no difference between the hands when responding “same”. We are not aware of any other study which has investigated response times across the two hands in a same-different task for shape discrimination; it is therefore difficult to relate our finding to similar findings in the tactile domain. However, there is one study on tactile discrimination of gratings which found a same-different effect on reaction times (Yu, Yang, & Wu, 2013). Yet this effect was not associated with hand laterality, as only the right hand was used for the actual discrimination task and the left hand was used for giving the response. Specifically, the study reported better accuracy and faster reaction times for “same” versus “different” conditions. Faster reaction times for “same” responses are relatively common in visual research (Eviatar, Zaidel, & Wickens, 1994; see Farell, 1985, for a review). We did not find such an effect, but our reaction time effect is also a hand laterality effect: the left hand was faster than the right hand in responding “different” (for lower case letters). Our result can, therefore, be linked to a relatively consistent finding, albeit in a different modality (vision), in which “different” responses are faster when associated with the right hemisphere and when letters have to be matched by shape as opposed to by name (Boles, 1981). As we found a left-hand reaction time advantage only for lower case letters and not for upper case letters, this may be due to the left hand-right hemisphere advantage for spatial processing.
As discussed above, we presumed that the right hemisphere was initially activated in the pre-processing of the lower case letters as more spatially complex stimuli. Moreover, this involvement might have reduced the left hemisphere advantage for verbal material whilst simultaneously invoking a right hemisphere advantage in terms of reaction times. However, an advantage in reaction times does not necessarily mean an advantage in accuracy, as there might be tasks where it is possible to trade speed for accuracy (see Eviatar & Zaidel, 1992, for a review). In the present study, the reaction time advantage was found only for “different” responses. Furthermore, the longer reaction times for right-hand responses of “different” suggest that the right hand-left hemisphere requires more time than the left hand-right hemisphere. This again might be related to the analytical approach with which the left hemisphere tends to operate versus the more holistic processing of the right hemisphere. Indeed, it has been suggested that the “same” response in a same-different judgement task is made on the principle of matching to sample, while the “different” response includes the same process but with additional processing of the individual stimulus characteristics, where all features need to be checked before responding (Jordanova & Bogdanova, 1997; Kornblum, 1973). This feature-based analysis of the stimuli, in which the left hemisphere is thought to be more involved, can result in slower responses of the right hand (left hemisphere) in contrast to the left hand (right hemisphere), which processes the overall shape, a strategy that is likely to be faster.

Conclusions

Our results show that there is a left hand-right hemisphere advantage in the processing of non-verbal stimuli in the haptic modality. As we did not find a clear right hand-left hemisphere advantage for letters, we presume that the laterality effects in the haptic modality for verbal materials are weak. Furthermore, lateralization is defined not merely by the verbal characteristics of the material but also by the complexity of the stimulus shape. Thus, greater spatial complexity of the shape might first invoke processing in the right hemisphere, which can reduce or abolish the left hemisphere advantage for verbal material. We also found that for lower case letters, the right hand-left hemisphere tends to respond “different” and is slower than the left hand-right hemisphere in making “different” decisions. This might be due to the analytical approach of the left hemisphere, which might take longer when processing more complex verbal stimuli.

Acknowledgement

We thank undergraduate students Anniina Koutonen, Heini Landén, Elli Muttonen, Sonja Salo and Eetu Vilminko for their participation in data acquisition.

Data availability statement

The data that support the findings of this study are available from the corresponding author, Polina Stoycheva, upon reasonable request.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Arditi, A., & Cho, J. (2007). Letter case and text legibility in normal and low vision. Vision Research, 47(19), 2499–2505. doi: 10.1016/j.visres.2007.06.010

- Asanowicz, D., Smigasiewicz, K., & Verleger, R. (2013). Differences between visual hemifields in identifying rapidly presented target stimuli: Letters and digits, faces, and shapes. Frontiers in Psychology, 4, 452. doi: 10.3389/fpsyg.2013.00452

- Benton, A. L., Levin, H. S., & Varney, N. R. (1973). Tactile perception of direction in normal subjects. Implications for hemispheric cerebral dominance. Neurology, 23(11), 1248–1250. doi: 10.1212/wnl.23.11.1248

- Boles, D. B. (1981). Variability in letter matching asymmetry. Perception & Psychophysics, 29(3), 285–288. doi: 10.3758/bf03207297

- Borgo, F., Semenza, C., & Puntin, P. (2004). Hemispheric differences in haptic scanning of verbal and spatial material by adult males and females. Neuropsychologia, 42(14), 1896–1901. doi: 10.1016/j.neuropsychologia.2004.05.010

- Bradshaw, J. L., & Nettleton, N. C. (1983). Human cerebral asymmetry. Upper Saddle River, NJ: Prentice-Hall.

- Bryden, M. P. (1982). Laterality: Functional asymmetry in the intact brain. Waterloo, Ontario: Academic Press.

- Bryden, M. P., & Allard, F. (1976). Visual hemifield differences depend on typeface. Brain and Language, 3(2), 191–200. doi: 10.1016/0093-934x(76)90016-x

- Cohen, H., & Levy, J. J. (1986). Sex differences in categorization of tactile stimuli. Perceptual and Motor Skills, 63(1), 83–86. doi: 10.2466/pms.1986.63.1.83

- Dodds, A. G. (1978). Hemispheric differences in tactuo-spatial processing. Neuropsychologia, 16(2), 247–250. doi: 10.1016/0028-3932(78)90115-x

- Dowell, C. J., Norman, J. F., Moment, J. R., Shain, L. M., Norman, H. F., Phillips, F., & Kappers, A. M. L. (2018). Haptic shape discrimination and interhemispheric communication. Scientific Reports, 8(1), 377. doi: 10.1038/s41598-017-18691-2

- Evans, K. M., & Federmeier, K. D. (2007). The memory that’s right and the memory that’s left: Event-related potentials reveal hemispheric asymmetries in the encoding and retention of verbal information. Neuropsychologia, 45(8), 1777–1790. doi: 10.1016/j.neuropsychologia.2006.12.014

- Eviatar, Z., & Zaidel, E. (1992). Letter matching in the hemispheres: Speed-accuracy trade-offs. Neuropsychologia, 30(8), 699–710. doi: 10.1016/0028-3932(92)90040-S

- Eviatar, Z., Zaidel, E., & Wickens, T. (1994). Nominal and physical decision criteria in same-different judgments. Perception & Psychophysics, 56(1), 62–72. doi: 10.3758/bf03211691

- Fagot, J., Lacreuse, A., & Vauclair, J. (1993). Haptic discrimination of nonsense shapes: Hand exploratory strategies but not accuracy reveal laterality effects. Brain and Cognition, 21(2), 212–225. doi: 10.1006/brcg.1993.1017

- Fagot, J., Lacreuse, A., & Vauclair, J. (1997). Role of sensory and post sensory factors on hemispheric asymmetries in tactual perception. In S. Christman (Ed.), Cerebral asymmetries in sensory and perceptual processing (pp. 469–497). Amsterdam: Elsevier Science B.V.

- Farell, B. (1985). “Same”-“different” judgements: A review of current controversies in perceptual comparisons. Psychological Bulletin, 98(3), 419–456. doi: 10.1037/0033-2909.98.3.419

- Gazzaniga, M. S. (1995). Principles of human brain organization derived from split-brain studies. Neuron, 14(2), 217–228. doi: 10.1016/0896-6273(95)90280-5

- Gazzaniga, M. S. (2005). Forty-five years of split-brain research and still going strong. Nature Reviews Neuroscience, 6(8), 653–659. doi: 10.1038/nrn1723

- Jordanova, M., & Bogdanova, E. (1997). Same-different judgement and information processing in six years old children. Journal of Bulgarian Academy of Science, 1, 239–249.

- Knecht, S., Drager, B., Deppe, M., Bobe, L., Lohmann, H., Floel, A., … Henningsen, H. (2000). Handedness and hemispheric language dominance in healthy humans. Brain, 123(Pt 12), 2512–2518. doi: 10.1093/brain/123.12.2512

- Kornblum, S. (1973). Attention and performance (Vol. IV). New York: Academic Press.

- Leys, C., Ley, C., Klein, O., Bernard, P., & Licata, L. (2013). Detecting outliers: Do not use standard deviation around the mean, use absolute deviation around the median. Journal of Experimental Social Psychology, 49(4), 764–766. doi: 10.1016/j.jesp.2013.03.013

- Macmillan, N., & Creelman, C. (2005). Detection theory: A user’s guide. New York: Cambridge University Press.

- Mildner, V. (2007). Cognitive neuroscience of human communication. New York: Psychology Press.

- Miller, J. (1996). The sampling distribution of d′. Perception & Psychophysics, 58(1), 65–72. doi: 10.3758/bf03205476

- Moscovitch, M. (1979). Information processing and the cerebral hemispheres. In M. S. Gazzaniga (Ed.), Handbook of behavioural neurobiology (Vol. Neuropsychology, pp. 379–446). New York: Plenum Press.

- O'Boyle, M. W., Van Wyhe-Lawler, F., & Miller, D. A. (1987). Recognition of letters traced in the right and left palms: Evidence for a process-oriented tactile asymmetry. Brain and Cognition, 6(4), 474–494. doi: 10.1016/0278-2626(87)90141-2

- Oliveira, J., Perea, M. V., Ladera, V., & Gamito, P. (2013). The roles of word concreteness and cognitive load on interhemispheric processes of recognition. Laterality, 18(2), 203–215. doi: 10.1080/1357650X.2011.649758

- Oscar-Berman, M., Rehbein, L., Porfert, A., & Goodglass, H. (1978). Dichhaptic hand-order effects with verbal and nonverbal tactile stimulation. Brain and Language, 6(3), 323–333. doi: 10.1016/0093-934x(78)90066-4

- Passarotti, A. M., Banich, M. T., Sood, R. K., & Wang, J. M. (2002). A generalized role of interhemispheric interaction under attentionally demanding conditions: Evidence from the auditory and tactile modality. Neuropsychologia, 40(7), 1082–1096. doi: 10.1016/s0028-3932(01)00152-x

- Pentcheva, S., Velichkova, M., & Lalova, J. (1999). Neuropsychological examinations of global and analytical strategy during processing of verbal and non-verbal information. Bulgarian Psychological Research, 1(2), 99–129.

- Polich, J. M. (1978). Hemispheric differences in stimulus identification. Perception & Psychophysics, 24(1), 49–57. doi: 10.3758/bf03202973

- Somers, M., Aukes, M. F., Ophoff, R. A., Boks, M. P., Fleer, W., de Visser, K. C., … Sommer, I. E. (2015). On the relationship between degree of hand-preference and degree of language lateralization. Brain and Language, 144, 10–15. doi: 10.1016/j.bandl.2015.03.006

- Stoeckel, M. C., Weder, B., Binkofski, F., Buccino, G., Shah, N. J., & Seitz, R. J. (2003). A fronto-parietal circuit for tactile object discrimination: An event-related fMRI study. Neuroimage, 19(3), 1103–1114. doi: 10.1016/s1053-8119(03)00182-4

- Stoeckel, M. C., Weder, B., Binkofski, F., Choi, H. J., Amunts, K., Pieperhoff, P., … Seitz, R. J. (2004). Left and right superior parietal lobule in tactile object discrimination. European Journal of Neuroscience, 19(4), 1067–1072. doi: 10.1111/j.0953-816x.2004.03185.x

- Stoycheva, P., & Tiippana, K. (2018). Exploring laterality and memory effects in the haptic discrimination of verbal and non-verbal shapes. Laterality, 23(6), 684–704. doi: 10.1080/1357650X.2018.1450881

- Summers, D. C., & Lederman, S. J. (1990). Perceptual asymmetries in the somatosensory system: A dichhaptic experiment and critical review of the literature from 1929 to 1986. Cortex, 26(2), 201–226. doi: 10.1016/s0010-9452(13)80351-6

- Van Boven, R. W., Ingeholm, J. E., Beauchamp, M. S., Bikle, P. C., & Ungerleider, L. G. (2005). Tactile form and location processing in the human brain. Proceedings of the National Academy of Sciences of the United States of America, 102(35), 12601–12605. doi: 10.1073/pnas.0505907102

- Walch, J. P., & Blanc-Garin, J. (1987). Tactual laterality effects and the processing of spatial characteristics: Dichaptic exploration of forms by first and second grade children. Cortex, 23(2), 189–205. doi: 10.1016/s0010-9452(87)80031-x

- Witelson, S. F. (1974). Hemispheric specialization for linguistic and nonlinguistic tactual perception using a dichotomous stimulation technique. Cortex, 10(1), 3–17. doi: 10.1016/s0010-9452(74)80034-1

- Yu, Y., Yang, J., & Wu, J. (2013). Limited persistence of tactile working memory resources during delay-dependent grating orientation discrimination. Neuroscience and Biomedical Engineering, 1(1), 65–67. doi: 10.2174/2213385211301010011