ABSTRACT

A common theme across phenomena such as vitality, vigor, and fatigue is that they all refer to some aspect of energy. Since experience sampling methodology has become a major approach, there is a significant need for a time-efficient and valid measure of energetic activation. In this study, we develop a single-item pictorial scale of energetic activation and examine its validity. We examine the convergent, discriminant, and criterion-related validity of the pictorial scale and scrutinize the practical advantages of a pictorial vs. a purely verbal item with respect to response latencies and user experience ratings. We conducted two consecutive experience sampling studies among 81 and 109 employees across 15 and 12 days, respectively. Multilevel confirmatory factor analyses provide evidence that the pictorial scale converges strongly with vitality and vigor, relates to fatigue, is distinct from facets of core affect, and shows the expected correlations with antecedents of energetic activation. Energetic activation as measured with the pictorial scale was predicted by sleep quality and basic need satisfaction, and in turn predicted work engagement. The pictorial scale was superior to a purely verbal scale regarding response latencies and participant-rated user experience. Hence, the pictorial scale is a valid, time-efficient, and user-friendly measure suited for experience sampling research.

In occupational health psychology, there are numerous indicators of employee well-being, such as job satisfaction (Judge & Ilies, 2004), work engagement (Christian et al., 2011), thriving (Kleine et al., 2019), subjective vitality (Fritz et al., 2011; Ryan & Frederick, 1997), and fatigue (Frone & Tidwell, 2015). Several of these concepts either explicitly or implicitly refer to individual levels of energy. Quinn et al. (2012) have reviewed several streams of research on energy-related constructs and have proposed the concept of human energy as an overarching perspective across domains and disciplines. In their broad conceptualization of human energy, they distinguish between physical energy and energetic activation. While physical energy refers to the capacity to do work and focuses on energy as reflected at the physiological level (e.g., blood glucose or available ATP in body cells), energetic activation refers to the subjective experience of human energy (e.g., feelings of liveliness). More specifically, Quinn et al. (2012) define energetic activation as “the subjective component of [a] biobehavioral system of activation experienced as feelings of vitality, vigor, enthusiasm, zest etc.” (p. 341).

Accordingly, instruments typically applied to capture aspects of energetic activation include measures of subjective vitality (Ryan & Frederick, 1997), vigor (McNair et al., 1992; Shirom, 2003), fatigue (Frone & Tidwell, 2015; McNair et al., 1992), and ego-depletion (Bertrams et al., 2011). These instruments typically consist of 5 to 10 items and may be too long in settings where maximum test economy is crucial, for example in experience sampling methodology (ESM) research (Beal, 2015; Ilies et al., 2016). Typical ESM studies include multiple observations or self-reports from an individual over time and allow researchers to study processes at the intraindividual level (McCormick et al., 2018). Increasingly, studies include multiple self-reports per day and analyze trajectories in psychological states over the course of the day (Hülsheger, 2016). Even research on physiological variables, such as blood pressure (Ganster et al., 2018), or on user interaction with digital devices, such as time spent on social media during work (Mark et al., 2014), relies heavily on self-reported well-being measures to facilitate interpretation of objective data. Given the trend towards increasingly intensive longitudinal research on psychological states throughout the workday (Beal, 2015), ESM researchers would benefit from a short measure of energetic activation that reduces participants’ burden, avoids survey fatigue, and ultimately prevents high dropout rates (Gabriel et al., 2019). Pictorial scales might prove particularly useful here because they typically consist of few items and are therefore very time-efficient, and because they may reduce cognitive load by helping respondents to relate visually to the presented concept.

Hence, in the work at hand we introduce a single-item pictorial scale of energetic activation and examine its convergent, discriminant, and criterion-related validity across two studies. In the first study, we focus on the convergent and discriminant validity of the scale as a measure of state energetic activation. To this end, we differentiate between validity at the within-person level (differences within persons across days) and at the between-person level (differences between persons in their average level across days). We (1) include multi-item measures of vitality and fatigue to establish convergent validity and (2) distinguish the pictorial scale empirically from facets of affect to provide evidence for discriminant validity.

In the second study, we revisit convergent validity and broaden the set of indicators of energetic activation. Moreover, we add evidence on the criterion-related validity of the pictorial scale by studying time-lagged associations with variables tapping into both antecedents (sleep quality and basic need satisfaction) and consequences (work engagement) of energetic activation. Finally, we scrutinize its practical utility by comparing several variants of our proposed measure in terms of administration time and user reactions. Our study thus provides a tool particularly tailored to ESM research and to the continuous tracking of employees’ energetic activation.

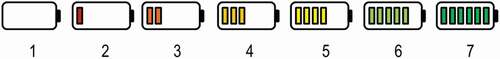

Leveraging the battery-metaphor to capture energetic activation

A recurring theme in the literature on occupational strain and recovery is the metaphor of individuals needing to “recharge their batteries” (e.g., Fritz et al., 2011; Querstret et al., 2016) after expending resources while working or facing stressful situations. The analogy between humans and batteries is also ubiquitous in lay theories of (job-related) well-being, as reflected in quotes like “Reading allows me to recharge my batteries.” (Batteries Quotes, 2021). The ubiquity of the battery metaphor for describing human energy in common sense (Ryan & Deci, 2008) makes images a straightforward option for capturing energetic activation. Taking the battery metaphor literally, we set out to develop and evaluate a pictorial scale of energetic activation in this study. More specifically, we use battery icons ranging from an empty battery to a fully charged battery to assess individual energetic activation. Battery icons are ubiquitous in everyday life because most mobile electronic devices display their current battery status prominently in this form. We follow up on classic research on pictorial scales of job satisfaction (i.e., the Faces scale; Kunin, 1955; Wanous et al., 1997), organizational identification (Bergami & Bagozzi, 2000; Shamir & Kark, 2004), and affect (Bradley & Lang, 1994) and propose a single-item pictorial scale to measure energetic activation: the battery scale.

The value of pictorial scales

We draw on prior research on pictorial scales to argue for the value of a pictorial scale of energetic activation. For instance, the Faces scale of job satisfaction (Kunin, 1955) uses icons of faces ranging from frowning to smiling to reflect individual job satisfaction levels. In a meta-analysis, Wanous et al. (1997) found that the Faces scale yields a corrected correlation of ρ = .72 with multi-item verbal scales of overall job satisfaction. Furthermore, the self-assessment manikin (SAM) scales (Bradley & Lang, 1994) use icons of manikins to capture pleasure, activation, and dominance. Although the SAM item meant to reflect dominance correlates only modestly (r < .24) with semantic differential ratings, the SAM items of pleasure and activation converge very strongly with ratings from corresponding adjective lists, as reflected in correlations of r > .90 (Bradley & Lang, 1994). These findings suggest that single-item pictorial scales can achieve considerable levels of validity while being very time-efficient.

Developing a pictorial rather than a purely verbal single-item scale of energetic activation has specific advantages. First, we draw on the field of user interface design and cognitive ergonomics (i.e., engineering psychology), where researchers face the similar challenge of explaining and representing abstract concepts within interfaces with the help of analogies (Holyoak, 2005) that users know from other areas of daily life. In this field, rich visual representations of such metaphors are also deemed important to utilize their full potential.

Second, people nowadays are highly familiar with battery-powered systems. Research on human-battery interaction suggests that people may directly associate concrete experiences with the visual representations of battery states commonly used in interface design, because battery charge is a precious resource in the everyday use of mobile phones and other technical devices (Franke & Krems, 2013; Rahmati & Zhong, 2009). Hence, it may be much easier for respondents to relate to a concrete visual representation of a battery state than to an abstract numerical percentage value.

Third, especially in the ESM setting, it is vital to develop a scale that drastically reduces demands on cognitive processing (i.e., minimal visual and cognitive workload) and therefore allows quick completion. Research on graph perception in applied settings indicates that analog representations (e.g., a bar graph, as in a battery icon) yield better performance in processing quantities than digital numerical displays (Wickens et al., 2013). Hence, a pictorial battery scale also has perceptual advantages. Consistent with these arguments, ESM research comparing the user experience of different scale formats for measuring affect found that participants preferred pictorial items over typical verbal items and adjective lists (Crovari et al., 2020).

Fourth, (common) method bias (Podsakoff et al., 2012; Spector, 2006) is regularly discussed as a problem in research drawing primarily on self-reports, such as ESM research. Applying different response formats across scales has been proposed as a strategy to reduce common method bias (Podsakoff et al., 2003). Given that the response format of a pictorial scale differs considerably from that of typical verbal items, a pictorial scale of energetic activation may help to minimize common method bias.

Fifth, a verbal single item may force researchers to limit its content to one aspect to keep the item clear (e.g., feeling vital). This may require excluding other focal aspects of the phenomenon from the measurement (e.g., feeling alert) and may either render measurement deficient or alter the focus of what is being captured (e.g., alertness only). We argue that a scale applying metaphors and images may allow for a more holistic measurement of a phenomenon such as energetic activation without requiring these kinds of compromises.

A pictorial scale of momentary energetic activation for ESM research

In our first study, we focus on convergent validity and discriminant validity of the proposed battery scale in the context of ESM research. In the second study, we additionally address criterion-related validity, time taken to respond, and user experience of the pictorial scale.

Study 1

Convergent validity: subjective vitality and fatigue

First, we study the convergent validity of the battery scale as a state measure of momentary energetic activation in an ESM study. If the battery scale measures energetic activation, it should correlate highly with prototypical indicators of energetic activation. In their definition of energetic activation, Quinn et al. (2012) refer explicitly to the experience of vitality. Accordingly, we consider vitality a core indicator of energetic activation that is apt to serve as a criterion for examining the convergent validity of the battery scale. More specifically, Ryan and Frederick (1997) describe subjective vitality as the subjective experience of possessing energy and aliveness. This description aligns well with the core of the concept of energetic activation. Hence, if the battery scale captures energetic activation, it should correspond closely to ratings of subjective vitality.

To provide a more differentiated picture of where the battery scale is located within the nomological network of constructs tapping into energetic activation, we investigate links to a second variable, namely fatigue. Fatigue has been defined as “a feeling of weariness, tiredness or lack of energy” (Ricci et al., 2007, p. 1) and is conceptually similar to energetic (de)activation. According to this definition, fatigue seems to be the exact opposite of vitality. Some researchers have defined energetic activation in terms of both high levels of vitality and low levels of fatigue (Fritz et al., 2011; Thayer et al., 1994). However, researchers have found only moderate negative correlations between vitality and fatigue (Fritz et al., 2011; Zacher et al., 2014). Furthermore, a large body of literature suggests that the absence of energetic activation is not identical to high levels of fatigue or exhaustion, or vice versa (e.g., Demerouti et al., 2010; González-Romá et al., 2006). For instance, vitality and fatigue relate differentially to correlates of energetic activation, such as basic need satisfaction (Campbell et al., 2018). Hence, we consider links between the battery scale and fatigue to supplement our analysis of convergent validity. We expect the battery scale to converge more strongly with vitality than with fatigue because the vitality perspective is more consistent with a concept of energetic activation that ranges from no energetic activation at all to high levels of energetic activation. The battery metaphor is also more compatible with this view (empty battery = no energy available). Besides studying correlations among energetic activation as measured with the battery scale, vitality, and fatigue, we also examine, as an indicator of sensitivity, whether the battery scale shows levels of variability across time similar to those of the verbal scales.

Discriminant validity: enthusiasm, tension, and serenity

Second, we examine the discriminant validity of the battery scale. If the battery scale is valid, it should not only correlate highly with established measures of energetic activation but also be empirically distinct from variables other than energetic activation. We study links of the battery scale to different facets of core affect consistent with the circumplex model of affect (Russell, 1980; Warr et al., 2014). According to this taxonomy, affect can be distinguished along two dimensions: pleasure and activation. The resulting quadrants of affect are high-activation pleasant affect (HAPA or enthusiasm), high-activation unpleasant affect (HAUA or tension), low-activation pleasant affect (LAPA or serenity), and low-activation unpleasant affect (LAUA).
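The quadrant scheme above can be expressed as a small lookup keyed by whether a state is pleasant and whether it is high in activation. This is a minimal illustrative sketch only: the function name `quadrant`, the assumption that both dimensions are available as centered scores, and the zero cut-off are hypothetical and are not part of the studies reported here.

```python
# Hypothetical illustration of the circumplex quadrants described in the text.
# Keys are (is_pleasant, is_high_activation).
QUADRANTS = {
    (True, True): "HAPA (enthusiasm)",
    (False, True): "HAUA (tension)",
    (True, False): "LAPA (serenity)",
    (False, False): "LAUA",
}


def quadrant(pleasure: float, activation: float) -> str:
    """Return the quadrant label for centered pleasure and activation scores.

    Scores above zero count as pleasant or high-activation; the zero cut-off
    is an illustrative assumption, not part of the circumplex model itself.
    """
    return QUADRANTS[(pleasure > 0, activation > 0)]


print(quadrant(0.8, 0.6))   # HAPA (enthusiasm)
print(quadrant(-0.5, 0.7))  # HAUA (tension)
```

In the studies themselves, the quadrants are measured with separate adjective scales rather than derived from two scores; the lookup only makes the two-dimensional logic of the taxonomy explicit.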

HAPA or enthusiasm refers to feeling enthusiastic, elated, and cheerful and thus may be the facet of core affect with the strongest links to energetic well-being. On the one hand, Quinn et al. (2012) explicitly include feelings of enthusiasm in their definition of energetic activation. Consistent with this view, enthusiasm correlates considerably with feelings of liveliness (Ryan & Frederick, 1997). On the other hand, Daniels (2000) distinguished energetic activation theoretically and empirically from the pleasure-depression continuum of core affect. As measured with the PANAS, HAPA has also been shown to be empirically distinct from physical and mental energy (r < .70; C. Wood, 1993). In a similar vein, (high-activation) positive affect has been shown to yield only moderate correlations with subjective vitality (r = .36; Ryan & Frederick, 1997). Hence, we expect energetic activation as measured with the battery scale to be distinct from enthusiasm, although the correlation between energetic activation and enthusiasm might be substantial (Nix et al., 1999; Ryan & Deci, 2008).

HAUA refers to feeling tense, uneasy, and upset; one major theme within HAUA is thus tension. According to the five-factor model of affective well-being, the energetic activation continuum ranging from tiredness to vigor is distinct from the comfort-anxiety continuum, and there is evidence for this distinction (Daniels, 2000). The distinction between energetic activation and tension is also consistent with other conceptualizations of affect that distinguish two dimensions of activation (namely positive and negative activation) corresponding to two systems of biobehavioral activation (Watson et al., 1999). In a similar vein, Thayer (1989) distinguished between energetic activation and tense activation and found differential effects of the two. Research on the undoing effect of positive affect (Fredrickson & Levenson, 1998) suggests that the effects of energetic activation may be the reverse of the effects of tense activation (see Quinn et al., 2012, for a review and for a discussion of distinguishing one vs. two types of activation). Research on (high-activation) negative affect in a broad sense and subjective vitality found moderate correlations between the two constructs (r = −.30; Ryan & Frederick, 1997). Research on the Profile of Mood States scales (McNair et al., 1992) suggests that the tension facet correlates to varying degrees with facets of energetic activation (vigor: r = −.17; fatigue: r = .61) but emerges as an empirically distinct factor in factor analysis (e.g., Albani et al., 2005; Andrade & Rodríguez, 2018). The literature on human energy, energetic activation, and energy management consistently defines and describes energetic activation as a positive (rather than a neutral) state negatively related to tense activation (Quinn et al., 2012).
For instance, Shirom (2003) refers to vigor (a concept overlapping considerably with energetic activation) as an aspect of positive affect. This is consistent with research on subjective vitality from the perspective of self-determination theory (Ryan & Deci, 2008; Ryan & Frederick, 1997). Ryan and Frederick (1997) found that vitality correlates positively with positive affect (a prototypical measure of HAPA) and negatively with negative affect (a proxy of tension). In other words, both conceptual arguments and empirical evidence consistently suggest that energetic activation is negatively linked to tension. Hence, we expect energetic activation as measured with the battery scale to be distinct from tension.

While high-activation affect has been studied extensively, less research has considered low-activation affect and its links to energetic activation. LAPA or serenity refers to feeling serene, calm, and relaxed. There is evidence that vitality and LAPA are empirically distinct (e.g., Longo, 2015; Yu et al., 2019), although correlations are rather high, peaking around r = .70 in some studies. Hence, we expect energetic activation as measured with the battery scale to be distinct from serenity.

Given that LAUA refers to feeling without energy, sluggish, and dull (Kessler & Staudinger, 2009) and thus overlaps considerably with fatigue, we do not examine LAUA explicitly.

Consistent with the distinction between energetic activation and tense activation outlined above (Reis et al., 2016; Steyer et al., 2003; Thayer et al., 1994), we expect that energetic activation as measured with the battery scale relates positively to HAPA but negatively to HAUA. This pattern would be consistent with the conceptual distinction between energetic activation and tense activation as subjective correlates of two different biobehavioral systems (Thayer, 1989; Watson et al., 1999) and with empirical evidence on subjective vitality (Ryan & Frederick, 1997). We assume that subjective theories of affect and energy reflect the distinction between energetic and tense activation. Hence, we expect that energetic activation as measured with the battery scale will reflect high levels of energetic activation and, to some extent, low levels of tense activation. Furthermore, the battery metaphor refers to the availability of resources: the battery status is meant to reflect an individual’s resource status in terms of energy at a given point in time. When individuals talk about “recharging their batteries”, they probably mean that their momentary resource status is lower than the preferred optimum. It is highly plausible that individuals will feel alive and vital or full of energy after “recharging their batteries”, that is, when their resource status is high. It is less plausible that they will feel tense, anxious, and annoyed afterwards. On the contrary, a high resource status may facilitate feelings of optimism (Ragsdale & Beehr, 2016) and serenity in the face of threats or challenges (Tuckey et al., 2015), due to the availability of energetic resources (Halbesleben et al., 2014; Quinn et al., 2012).

Methods

Procedure

We conducted an experience sampling study to examine the assumptions outlined above. The ESM protocol asked participants to provide self-reports twice a day across three workweeks: in the morning upon getting up and in the afternoon upon leaving work. More specifically, we sent email invitations from Monday to Friday for three consecutive weeks, so the ESM study comprised up to 30 self-reports per person across 15 days. Accordingly, the ESM data have a multilevel structure, with self-reports nested in persons. We informed participants about the general aims of our study before they took the surveys and obtained their informed consent before they started the baseline survey.

We administered online electronic surveys and recorded data in an anonymized way. Participants were informed that participation was voluntary and that they were free to quit the study at any time. Our study fully conformed to the guidelines regarding ethical research of the [institution blinded for peer-review]. Participants accessed the survey via their web browsers and were free to use any electronic device they preferred for taking the surveys.

Sample

A large portion of the participants were employed persons enrolled in a psychology program at a German university that offers distance learning courses. Participants could earn required study credits for providing self-reports in this study. Of the 86 persons who participated in our ESM study, 81 provided usable daily self-reports: we excluded the self-reports of five participants because they provided fewer than three usable self-reports each. In total, we analyzed 1914 self-reports nested in 81 participants (953 morning surveys and 961 afternoon surveys). On average, each participant provided 24 of the theoretically possible 30 self-reports (a response rate of 79%).
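The sample figures above can be checked with simple arithmetic: 15 days with two surveys per day give 30 possible reports per person, and the response rate is the number of analyzed reports divided by the number of possible reports across all 81 retained participants. The sketch below uses only the counts reported in the text; the variable names are illustrative.

```python
# Response-rate arithmetic for Study 1, using the counts reported in the text.
DAYS = 15            # three workweeks, Monday to Friday
SURVEYS_PER_DAY = 2  # morning and afternoon
participants = 81
morning, afternoon = 953, 961

possible_per_person = DAYS * SURVEYS_PER_DAY    # 30
total_reports = morning + afternoon             # 1914
mean_per_person = total_reports / participants  # about 23.6, reported as 24
response_rate = total_reports / (participants * possible_per_person)

print(possible_per_person, total_reports)       # 30 1914
print(f"{mean_per_person:.1f} reports per person, response rate {response_rate:.0%}")
# 23.6 reports per person, response rate 79%
```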

Of the 81 participants, 67 were female and 14 were male. Age ranged from 18 to 60 years (M = 36.04, SD = 10.90). In total, 65 participants held regular tenured employment, two were civil servants, ten were self-employed, one worked as an intern, and eight held other forms of employment. On average, participants worked 32 hours per week (M = 32.11, SD = 12.65). The average tenure with the current organization was six years (M = 6.14, SD = 7.30). Participants came from different industries, mainly healthcare (28%), public administration (13%), the service sector (11%), commerce (10%), education (9%), manufacturing (6%), and hospitality (5%), as well as other branches. Thirty-three participants worked in large organizations (250 employees and above), 18 in mid-sized organizations (50 to 250 employees), 13 in small organizations (10 to 49 employees), and 17 in very small organizations (1 to 9 employees). In our sample, 26 participants (32% in total) held a leadership position, working as lower-level (16 participants), middle-level (5 participants), or upper-level managers (5 participants). The majority of participants (68) had a direct supervisor.

Measures

An overview of the scales applied in Study 1 is presented in Table S1 in the supplemental materials. The battery scale, vitality, fatigue, enthusiasm, serenity, and tension refer to momentary experiences and were measured in the morning and afternoon surveys. We asked participants to rate how they felt right now across all items.

Battery scale

We used the following instruction for the battery scale: “How one feels at the moment is often described in terms of the state of charge of a battery, ranging from ‘depleted’ to ‘full of energy’. Please indicate which of the following symbols best describes your current state.” The instruction was tailored to tap into momentary energetic activation. We presented the icons depicted in Figure 1 below the instruction, with radio buttons coded from 1 to 7.

Multi-item energetic activation scales

We measured vitality with three items of the subjective vitality scale (Ryan & Frederick, 1997) adapted to German and the ESM context (Schmitt et al., 2017). A sample item is “I feel alive and vital.” We measured fatigue with three items from the Profile of Mood States (McNair et al., 1992) adapted to German (Albani et al., 2005). We used the items “exhausted,” “worn out,” and “tired.”

Core affect

We measured enthusiasm (HAPA) with three items from a German four-quadrant scale of affect (Kessler & Staudinger, 2009): “elated,” “enthusiastic,” and “euphoric.” We measured serenity (LAPA) with three items (“relaxed,” “serene,” and “at rest”) from the same scale. We measured tension (HAUA) with three items from the tension facet of the Profile of Mood States (POMS; McNair et al., 1992): “tense,” “on edge,” and “nervous.” We combined subscales from two different affect scales because the POMS contains only one subscale tapping into HAPA, namely vigor, a construct with considerable overlap with energetic activation. By contrast, the HAUA facet of the four-quadrant scale of Kessler and Staudinger (2009) does not tap into tension but captures a mix of anxiety and anger, states that may relate differentially to energetic activation. Reliabilities of all multi-item scales as reflected in McDonald’s omega were acceptable to excellent, ranging from ωwithin = .77 for tension to ωwithin = .91 for vitality. Reliabilities at the between-person level were above .90 (see Table 1 for details).
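For readers unfamiliar with McDonald's omega, a minimal sketch for a single-factor (congeneric) model is shown below: ω = (Σλ)² / ((Σλ)² + Σθ), where λ are the factor loadings and θ the residual variances. It assumes uncorrelated residuals and, if residuals are not supplied, standardized loadings (θ = 1 − λ²). In the multilevel case, the same formula is applied separately to the within-person and between-person loadings. The loadings in the example are hypothetical and are not estimates from Study 1.

```python
from typing import Optional, Sequence


def mcdonald_omega(loadings: Sequence[float],
                   residuals: Optional[Sequence[float]] = None) -> float:
    """McDonald's omega for a one-factor model with uncorrelated residuals.

    If residual variances are not given, loadings are assumed to be
    standardized, so each residual variance is 1 - loading**2.
    """
    if residuals is None:
        residuals = [1.0 - l ** 2 for l in loadings]
    common = sum(loadings) ** 2  # variance attributable to the common factor
    return common / (common + sum(residuals))


# Hypothetical standardized within-person loadings of a three-item scale.
print(round(mcdonald_omega([0.85, 0.88, 0.90]), 2))  # 0.91
```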

Table 1. Correlations, means, standard deviations, reliabilities, and intra-class correlation coefficients among focal variables in Study 1.

Analytic strategy

Given that we have repeated self-reports from each participant, our data have a nested structure. We leverage multilevel confirmatory factor analyses (MCFA) for ESM data. MCFA allows distinguishing between two levels of analysis: Within-person and between-person. This approach is particularly useful to analyze the validity of the battery scale at the within-person level. At the same time, we can consider whether our assumptions hold for links at the between-person level.

We specified measurement models for all scales and estimated the standardized covariances among all factors using Mplus 8 (Muthén & Muthén, 1998). We specified a two-level model (up to 30 self-reports nested in persons) with six factors (battery scale, vitality, fatigue, enthusiasm, serenity, and tension) homologous across levels. For the battery scale, we specified a latent factor with one indicator and fixed the residual variance of this indicator to zero. Mardia’s test indicated that the focal scales were not multivariate normal. In this case, conventional maximum likelihood estimation may yield biased standard errors. Hence, we estimated all models with the robust maximum likelihood estimator (MLR), which is recommended when the assumption of multivariate normality (kurtosis, skewness) is violated and which provides robust estimates of the standard errors (Li, 2016). We applied the Satorra-Bentler scaled χ2 for comparing models throughout all analyses (Satorra & Bentler, 2010).
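Because MLR chi-square values are scaled, the difference between two nested models cannot simply be subtracted. As an illustration, the sketch below implements the widely used scaled difference formula of Satorra and Bentler (2001), which takes the MLR chi-square, scaling correction factor, and degrees of freedom of both models. The 2010 article cited above refines this test so that the statistic cannot become negative; that refinement is not implemented here, and we do not claim this sketch reproduces the exact computation used in Study 1. All input values in the example are hypothetical.

```python
def sb_scaled_diff(t0: float, c0: float, d0: int,
                   t1: float, c1: float, d1: int) -> tuple[float, int]:
    """Satorra-Bentler (2001) scaled chi-square difference test.

    t0, c0, d0: scaled chi-square, scaling correction factor, and df of the
    more restrictive (nested) model; t1, c1, d1: the same for the less
    restrictive model. Returns the scaled difference statistic and its df.
    """
    if d0 <= d1:
        raise ValueError("the nested model must have more degrees of freedom")
    cd = (d0 * c0 - d1 * c1) / (d0 - d1)  # scaling correction for the difference
    trd = (t0 * c0 - t1 * c1) / cd        # scaled difference statistic
    return trd, d0 - d1


# Hypothetical values for a restrictive and a less restrictive model.
stat, df = sb_scaled_diff(t0=100.0, c0=1.2, d0=20, t1=50.0, c1=1.1, d1=15)
print(round(stat, 3), df)  # 43.333 5
```

The resulting statistic is referred to a chi-square distribution with d0 − d1 degrees of freedom (e.g., via `scipy.stats.chi2.sf`) to obtain a p value.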

Cheung and Wang (2017) suggest that correlations among constructs below .70 provide evidence for discriminant validity. In addition, we report the average variance extracted for the multi-item scales to apply the Fornell-Larcker criterion for discriminant validity (Fornell & Larcker, 1981); this criterion is not applicable to single-item measures such as the battery scale. According to the Fornell-Larcker criterion, the squared correlation between two constructs should be lower than the average variance extracted by the items of each of the scales considered.
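The two discriminant-validity heuristics described above can be sketched as follows: the average variance extracted (AVE) is the mean of the squared standardized loadings of a scale, and the Fornell-Larcker criterion requires the squared correlation between two constructs to be lower than the AVE of both. The function names and all input values are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted from the standardized loadings of one scale."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def discriminant_checks(r: float, ave_a: float, ave_b: float,
                        threshold: float = 0.70) -> tuple[bool, bool]:
    """Apply two discriminant-validity heuristics to a factor correlation r.

    Returns (|r| below the threshold, Fornell-Larcker criterion met), where the
    Fornell-Larcker criterion requires r**2 to be lower than both AVEs.
    """
    below_threshold = abs(r) < threshold
    fornell_larcker = r ** 2 < min(ave_a, ave_b)
    return below_threshold, fornell_larcker


# Hypothetical loadings and factor correlation for two three-item scales.
ave_a = ave([0.85, 0.88, 0.90])  # about 0.769
ave_b = ave([0.75, 0.80, 0.70])  # about 0.564
print(discriminant_checks(0.72, ave_a, ave_b))  # (False, True)
```

As the example shows, the two heuristics can disagree for the same pair of constructs, which is one reason to report both where they are applicable.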

Furthermore, we calculated intraclass correlation coefficients (ICC1). We focus on comparing ICC1 values across measures of energetic activation to explore whether the battery scale is as sensitive to within-person fluctuation in energy as the multi-item verbal scales are. We estimated multilevel reliability (Geldhof et al., 2014) of the multi-item scales and report McDonald’s omega (Hayes & Coutts, 2020) separately at the within-person and the between-person level of analysis.
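ICC1 expresses the proportion of total variance that lies between persons; lower values indicate more within-person fluctuation. Multilevel software typically estimates it from the variance components of a two-level null model. As a swapped-in, simpler alternative, the sketch below uses the classic one-way ANOVA estimator, with an adjusted group size k0 to handle persons who provided different numbers of reports. The ratings are hypothetical battery-scale scores (1 to 7) for three persons, not data from Study 1.

```python
from statistics import mean


def icc1(groups: list[list[float]]) -> float:
    """One-way ANOVA estimator of ICC(1) for possibly unbalanced groups.

    Each inner list holds the repeated reports of one person.
    """
    J = len(groups)                   # number of persons
    N = sum(len(g) for g in groups)   # total number of reports
    grand = sum(sum(g) for g in groups) / N
    ssb = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ssw = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    msb = ssb / (J - 1)               # between-person mean square
    msw = ssw / (N - J)               # within-person mean square
    k0 = (N - sum(len(g) ** 2 for g in groups) / N) / (J - 1)  # adjusted group size
    return (msb - msw) / (msb + (k0 - 1) * msw)


# Hypothetical battery ratings: three reports from each of three persons.
ratings = [[5, 6, 7], [2, 3, 4], [4, 5, 6]]
print(round(icc1(ratings), 3))  # 0.667
```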

Results

Means, standard deviations, and correlations among the focal variables in Study 1 are presented in Table 1. Correlations at the between-person level refer to the correlations among the person means of the focal variables. The person mean is the average level of a given variable across all self-reports of that person (e.g., the average level of energetic activation across repeated measures for each person). The ICC1 values of the focal scales are also presented in Table 1. ICC1 ranged from .37 (battery scale in the afternoon) to .57 (enthusiasm in the morning). The battery scale and the vitality scale yielded similar patterns of variability across time (see Table S2 in the supplemental materials). Thus, the battery scale is as sensitive to changes in energetic activation within persons as the vitality scale is.
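The split into person means (between-person part) and deviations from those means (within-person part) can be illustrated with a few lines of code. This is a minimal sketch on hypothetical records; the field names `person`, `battery`, and `vitality` are assumptions. Note that MCFA estimates latent between-person covariances, whereas this sketch only computes observed person-mean correlations of the kind described for Table 1.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean


def person_means(records, var):
    """Average each person's repeated reports of `var` (between-person part)."""
    by_person = defaultdict(list)
    for rec in records:
        by_person[rec["person"]].append(rec[var])
    return {p: mean(vals) for p, vals in by_person.items()}


def within_deviations(records, var):
    """Deviation of each report from that person's mean (within-person part)."""
    means = person_means(records, var)
    return [rec[var] - means[rec["person"]] for rec in records]


def pearson(xs, ys):
    """Pearson correlation of two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical ESM records: two reports for each of three persons.
records = [
    {"person": "A", "battery": 5, "vitality": 4.0},
    {"person": "A", "battery": 3, "vitality": 3.0},
    {"person": "B", "battery": 6, "vitality": 5.0},
    {"person": "B", "battery": 6, "vitality": 4.5},
    {"person": "C", "battery": 2, "vitality": 2.5},
    {"person": "C", "battery": 4, "vitality": 3.5},
]
bat = person_means(records, "battery")
vit = person_means(records, "vitality")
persons = sorted(bat)
r_between = pearson([bat[p] for p in persons], [vit[p] for p in persons])
print(bat)                                     # {'A': 4, 'B': 6, 'C': 3}
print(within_deviations(records, "battery"))   # [1, -1, 0, 0, -1, 1]
```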

To examine the validity of the battery scale, we estimated a series of nested models ranging from a single-factor model, in which all indicators load on one factor, to a 6-factor model, in which all items load on their respective factors (battery, vitality, fatigue, enthusiasm, tension, serenity). The 6-factor model achieved fit superior to plausible alternative models (Satorra-Bentler scaled Δχ2 > 1799.264, df = 24, p < .001). Comparisons across models are presented in Table S3. The 6-factor model achieved an acceptable fit, as reflected in CFI = .950, TLI = .933, RMSEA = .046, SRMRwithin = .045, and SRMRbetween = .094.

MCFA provides estimates for coefficients separately for Level 1 (within-person) and Level 2 (between-person). We focus on the estimated standardized covariances between the battery scale and the other factors to infer convergent and discriminant validity. However, we present estimated standardized covariances across all combinations of factors in Table S4 in the supplemental materials for completeness.

Convergent validity

We examined the convergent validity of the battery scale using the focal 6-factor model introduced above. The battery scale correlates positively with vitality (ψwithin = .80, SE = 0.02, p < .001) and negatively with fatigue (ψwithin = −.74, SE = 0.02, p < .001). Given that the standardized covariances of the battery scale with both vitality and fatigue exceed .70 in absolute value, we infer convergent validity of the battery scale with the multi-item verbal measures of energetic activation.

Discriminant validity

We examined the discriminant validity of the battery scale using the same 6-factor model. According to the criteria defined above, the battery scale measures something clearly distinct from enthusiasm (ψwithin = .57, SE = 0.03, p < .001), serenity (ψwithin = .50, SE = 0.04, p < .001), and tension (ψwithin = −.36, SE = 0.04, p < .001). Given that these standardized covariances fall below .70 in absolute value, we infer discriminant validity of the battery scale with respect to all facets of core affect considered. Covariances and loadings across all scales at the within-person and the between-person level of analysis estimated in the 6-factor model are presented in Figure S1 in the supplemental materials.
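The decision rule applied in the convergent and discriminant validity checks reduces to comparing the absolute size of each standardized within-person covariance with .70. The sketch below applies that rule to the Study 1 estimates reported above. Treating a value of exactly .70 as discriminant is an assumption of this sketch, because the text only speaks of values "above" and "below" .70; the names `classify` and `THRESHOLD` are hypothetical.

```python
THRESHOLD = 0.70  # cutoff on the absolute standardized covariance


def classify(psi, threshold=THRESHOLD):
    """Label a standardized within-person covariance with the battery scale.

    Values strictly above the threshold in absolute size count as convergent;
    treating exactly .70 as discriminant is an assumption of this sketch.
    """
    return "convergent" if abs(psi) > threshold else "discriminant"


# Within-person estimates reported for Study 1 (psi_within).
study1 = {"vitality": 0.80, "fatigue": -0.74, "enthusiasm": 0.57,
          "serenity": 0.50, "tension": -0.36}

for factor, psi in study1.items():
    print(f"{factor:<10} {psi:+.2f} -> {classify(psi)}")
```

Using the absolute value matters for fatigue and tension, whose covariances with the battery scale are negative.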

Our findings support the idea that the battery scale taps into momentary levels of energetic activation and corresponds most closely to momentary subjective vitality. The estimated covariances of the battery scale and of the vitality scale with the facets of core affect are almost identical, with one exception: the battery scale correlates less strongly with enthusiasm (ψwithin = .57, SE = 0.03, p < .001) than vitality does (ψwithin = .71, SE = 0.02, p < .001). Hence, the battery scale may be particularly useful when researchers aim to differentiate between energetic activation and enthusiasm.

Discussion

Our analysis of the battery scale suggests that it converges particularly strongly with self-reports of state subjective vitality and is substantially linked to state fatigue. Applying rigorous analytic approaches, we found that energetic activation measured with the battery scale is linked to different facets of core affect, especially enthusiasm, yet is empirically distinct from these affective states and highly similar to ratings of momentary subjective vitality. In sum, the results of Study 1 suggest that the battery scale is a valid measure of energetic activation in terms of momentary subjective vitality. However, the study has several limitations. First, we had to confine ourselves to a limited set of correlates of the battery scale, all measured concurrently. Second, we did not examine criterion-related validity. Finally, we did not address whether the battery scale is as time-efficient and participant-friendly as expected. Hence, we set out to address these issues in depth in a second study.

Study 2

Building on the findings of Study 1, we set out to broaden the empirical evidence on the validity of the battery scale. First, we revisit its convergent validity. Second, we examine the criterion-related validity of the battery scale. Third, we consider practical issues of applying single-item measures of energetic activation (SIMEA) like the battery scale and investigate the effects of including (vs. omitting) pictorial elements on response latencies and participant-rated user experience of the battery scale.² Whereas revisiting convergent validity replicates and extends Study 1, examining the criterion-related validity and the practical usefulness of SIMEA like the battery scale constitutes the most novel contribution of Study 2. Below, we introduce different variants of SIMEA derived from the battery scale presented in Study 1. Accordingly, in Study 2, we use the term SIMEA (rather than battery scale) to denote this class of measures.

Broadening the evidence on the convergent validity of the single-item measure of energetic activation

Study 1 provided evidence that the battery scale, as a specific type of SIMEA, converges strongly with ratings of subjective vitality and fatigue. However, including additional measures of energetic activation would help locate the battery scale more precisely within the nomological network of energetic activation measures. Hence, we included the vigor sub-scale of the Profile of Mood States (McNair et al., 1992). Prototypical items refer to feeling energetic, lively, active, full of life, alert, and vigorous (Wyrwich & Yu, 2011). These states correspond closely to the definition of energetic activation (Quinn et al., 2012) and overlap considerably with states referred to in prototypical items of subjective vitality (Ryan & Frederick, 1997). We add vigor as another facet of energetic activation that may capture unique aspects of energetic activation, because in Study 1 vitality and fatigue correlated at ψwithin = −.79, approaching the level of conceptual redundancy. By contrast, researchers studying fatigue in relation to vigor have found within-person correlations as low as r = −.20 (Zacher et al., 2014). Because the reliability of our fatigue measure in Study 1 was lower than expected and impaired overall model fit, we modified the set of fatigue indicators. We retain vitality as a measure of energetic activation to replicate findings from Study 1 and to allow comparisons across studies.

Examining the criterion-related validity of the single-item measure

In Study 2, we examine criterion-related validity by studying links between energetic activation as measured by the SIMEA variants and three correlates of energetic activation: (1) sleep quality (Bastien et al., 2001) and (2) basic need satisfaction (Deci & Ryan, 2000) as antecedents, and (3) work engagement (Rich et al., 2010; Rothbard & Patil, 2011) as a consequence of energetic activation (Quinn et al., 2012). To address this issue rigorously, we focus on time-lagged (rather than concurrent) associations between energetic activation and these criterion variables, so that predictors precede outcomes in time. In general, we expect the SIMEA to tap into vitality and therefore to show a pattern of associations with these criterion variables similar to that of subjective vitality.

Sleep is considered a major opportunity to recoup depleted resources or recharge batteries (Crain et al., 2018). Hence, the quality of sleep during the night should predict levels of vitality the next morning. Consistent with this notion, recent experience sampling research has found links between sleep quality and vitality at the within-person level (Schmitt et al., 2017). Drawing on this line of research, we expect that sleep quality predicts (higher levels of) energetic activation as reflected in the SIMEA.

Drawing on self-determination theory (Deci & Ryan, 2000) and research on basic need satisfaction (Sheldon et al., 2001), we focus on autonomy, relatedness, and competence need satisfaction. The satisfaction of basic needs has been theorized to be energizing in nature (Ryan & Frederick, 1997). Consistent with this view, experience sampling research has provided evidence for within-person links between basic need satisfaction and energetic activation as reflected in vitality and fatigue (Campbell et al., 2018; van Hooff & Geurts, 2015). Although most previous studies on basic need satisfaction and energetic activation have not distinguished between autonomy, relatedness, and competence need satisfaction, we differentiate these three facets to compare their relative importance for energetic activation. We expect that satisfaction of the psychological needs for (a) autonomy, (b) relatedness, and (c) competence predicts (higher levels of) energetic activation as reflected in the SIMEA.

We also examine the link between energetic activation and work engagement. Rothbard and Patil (2011) describe work engagement as “a dynamic process in which a person both pours personal energies into role behaviors (self-employment) and displays the self within the role (self-expression)” (p. 59). According to this conceptualization, work engagement consists of three components, namely attention, absorption, and effort (Rothbard & Patil, 2011). In this approach, work engagement refers explicitly to drawing on personal resources in general and, more specifically, to devoting energy to work. Hence, this conceptualization aligns best with our aim of linking energetic activation and engagement. Drawing on Quinn et al. (2012), we argue that having energy available, as reflected in energetic activation, predicts energy investment, as reflected in the facets of work engagement. We expect that energetic activation as reflected in the SIMEA predicts work engagement in terms of (a) attention to work, (b) absorption by work, and (c) effort.

Saving participant time through including pictorial elements

We explicitly included pictorial elements in the battery scale because the battery icons are likely to make the item easier to respond to. Rather than taking the advantages of a pictorial scale for granted, we scrutinize the role of pictorial elements empirically. In Study 2, we created a purely verbal SIMEA that uses the same instruction as the battery scale but omits the battery icons. Responses on the purely verbal SIMEA range from 1 (depleted) to 7 (full of energy) and correspond as closely as possible to the battery scale. In this sense, the purely verbal SIMEA explicitly leverages the battery metaphor but omits pictorial elements. We present the verbatim instruction in Table S6 in the supplemental materials.

Besides comparing the battery scale with a purely verbal SIMEA, we were interested in whether the instruction can be shortened without loss of validity to save additional time. Hence, we developed a third SIMEA that combines a minimal instruction with the same pictorial response options as the battery scale. Removing explicit verbal labels from the battery scale further reduces reliance on verbal language. Furthermore, once participants are familiar with using the battery metaphor to rate their level of energetic activation, it may not be necessary to refer to the metaphor explicitly in the instruction. Presenting the battery icons together with an abridged instruction may thus be sufficient to make the battery metaphor salient to participants. We refer to this third measure as the abridged pictorial SIMEA. We compare response latencies across the three SIMEA variants (battery scale, purely verbal SIMEA, abridged pictorial SIMEA) and expect the battery scale to yield a shorter response latency (reaction time) than the purely verbal SIMEA. Although we compare the battery scale with the two alternative measures just described, our emphasis in Study 2 is on SIMEA as a class of measures leveraging either pictorial elements (battery scale and abridged pictorial SIMEA) or metaphoric elements (purely verbal SIMEA).

Improving user experience through pictorial elements

Above, we have argued that the battery scale may save participant time and that pictorial elements may make the scale easier to use (Crovari et al., 2020). In Study 2, we address this issue empirically. Focusing on the user experience of the battery scale from the participant's perspective seems highly relevant because a superior user experience is likely to improve response rates, an issue that is particularly crucial in ESM research (Gabriel et al., 2019). Accordingly, we compare the perceived user experience across the three SIMEA variants described above (battery scale, purely verbal SIMEA, abridged pictorial SIMEA), using ratings from participants who had become familiar with all three variants during an ESM study. We expect the battery scale to yield a superior user experience compared to the purely verbal SIMEA in terms of (a) simplicity, (b) originality, (c) stimulation, and (d) efficiency. We expect user experience ratings of the abridged pictorial SIMEA to be similar to those of the battery scale, because the battery metaphor is likely to be salient to participants after they have responded several times to the three SIMEA variants. Although salience of the battery metaphor is a precondition for all SIMEA variants, it may not be necessary to mention the metaphor explicitly in the instruction once participants are familiar with it.

Methods

Procedure

The study consisted of three elements: (1) a baseline survey covering demographics, (2) an ESM study across 12 days, and (3) a closing survey covering user experience ratings. The ESM protocol asked participants to provide self-reports three times a day on twelve consecutive days, from Monday of week 1 to Friday of week 2: in the morning upon getting up, around noon at the end of lunch, and in the afternoon upon leaving work (or around the same time on free days). Hence, the ESM study comprised up to 36 self-reports per person. We applied the same sampling strategy as in Study 1. Study 1 and Study 2 were conducted 12 months apart. Comparing participant codes across samples confirmed that the two studies drew on independent, non-overlapping samples. As in Study 1, we informed participants about the general aims of our study before they took the surveys and obtained their informed consent before the start of the baseline survey. We randomly varied the SIMEA variant (battery scale, purely verbal SIMEA, abridged pictorial SIMEA) across the 36 self-reports of each participant. In the closing survey, we debriefed participants, explaining that one of the focal aims of the study was to compare different SIMEA variants and that these variants had been presented to them in random order.

Sample

We applied the same recruitment strategy as in Study 1. Of the 122 participants who took the baseline survey, 117 provided daily self-reports. Hence, in a first step, we excluded five participants from the focal analyses. Among the participants who provided self-reports in the ESM study, eight provided fewer than three usable self-reports. Hence, in a second step, we excluded these eight participants, resulting in a focal sample of N = 109 participants. At the within-person level, we analyzed 2997 self-reports nested in 109 participants (1009 morning surveys, 909 noon surveys, and 1079 afternoon surveys).³ On average, each participant provided 27 of the 36 possible self-reports, corresponding to a response rate of 76%.
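The sample sizes and the response rate follow from simple arithmetic on the reported counts. The sketch below recomputes them; it assumes the response rate is defined as the average number of usable self-reports per focal participant divided by the 36 scheduled surveys, and the variable names are illustrative only.

```python
# Counts reported for Study 2.
baseline = 122
provided_reports = 117        # took part in the ESM phase
excluded_low_reports = 8      # fewer than three usable self-reports
surveys = {"morning": 1009, "noon": 909, "afternoon": 1079}
max_reports = 12 * 3          # 12 days x 3 surveys per day

n_focal = provided_reports - excluded_low_reports
n_reports = sum(surveys.values())
per_person = n_reports / n_focal
response_rate = per_person / max_reports

print(baseline - provided_reports, n_focal, n_reports)  # 5 109 2997
print(f"{per_person:.3f} reports per person, {response_rate:.0%} response rate")
# 27.495 reports per person, 76% response rate
```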

Of the 109 participants in our focal sample, 88 were female, 20 were male, and one person did not provide this information. Age ranged from 19 to 60 years (M = 33.77, SD = 10.03). In total, 80 participants held regular tenured employment, four were civil servants, 16 were self-employed, three were trainees, two worked as interns, and ten held other forms of employment. On average, participants worked M = 30.45 hours per week (SD = 11.58). The average tenure with the current organization was M = 4.57 years (SD = 4.65). Participants came from different industries, mainly healthcare (39%), the service sector (17%), education (8%), public administration (7%), commerce (6%), manufacturing (6%), and hospitality (5%), among other branches. Forty-six participants worked in large organizations (250 employees and above), 20 in mid-sized organizations (50 to 250 employees), 21 in small organizations (10 to 49 employees), and 20 in very small organizations (1 to 9 employees). Twenty participants (18%) held a leadership position, as lower-level (12 participants), middle-level (5 participants), or upper-level managers (3 participants). The majority of our participants (88) had a direct supervisor.

Measures

Single-item measures of energetic activation (SIMEA)

We applied the three SIMEA variants described above. Each experience sampling survey contained exactly one SIMEA variant; the variants were never presented concurrently. We randomized which of the three variants was presented in which survey separately for each participant. Thus, on average, a third of the 36 experience sampling surveys contained the battery scale, another third contained the purely verbal SIMEA, and the remaining third contained the abridged pictorial SIMEA. The battery scale was identical to that in Study 1. The instructions and response options of the purely verbal SIMEA and the abridged pictorial SIMEA are presented in Table S6 in the supplemental materials. The purely verbal SIMEA used the same instruction as the battery scale but replaced the pictorial response options with seven response options ranging from 1 (depleted) to 7 (full of energy). The abridged pictorial SIMEA was similar to the battery scale; the only difference was a shortened instruction merely asking participants to rate which of the seven icons best described how they felt right now.
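The exact randomization algorithm is not described here, so the following is only a sketch of one scheme consistent with "on average a third": each survey draws one variant independently and uniformly at random. The function name, the variant labels, and the use of a seeded generator are assumptions made for illustration.

```python
import random
from collections import Counter

VARIANTS = ("battery", "verbal", "abridged")


def draw_schedule(n_days=12, surveys_per_day=3, seed=None):
    """Hypothetical per-participant schedule: each survey shows one SIMEA
    variant, drawn independently and uniformly at random."""
    rng = random.Random(seed)
    return [[rng.choice(VARIANTS) for _ in range(surveys_per_day)]
            for _ in range(n_days)]


schedule = draw_schedule(seed=42)
counts = Counter(v for day in schedule for v in day)
print(counts)  # about 12 per variant in expectation; a single draw varies
```

A balanced alternative would shuffle a list containing each variant exactly 12 times; independent draws only guarantee equal shares in expectation, which matches the wording "on average".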

Multi-item energetic activation scales (morning, noon, and afternoon surveys)

We measured vitality in the same way as in Study 1. Drawing on the empirical evidence from Study 1, we modified the measurement of energetic activation and included additional items to achieve a more reliable assessment of the focal variables. Given the high correlation between subjective vitality and fatigue in Study 1, we included an additional measure of energetic activation, namely the vigor sub-scale of the Profile of Mood States (POMS; McNair et al., 1992) adapted to German (Albani et al., 2005), to broaden the measurement of energetic activation. The vigor items were “lively,” “energetic,” and “full of life.” To obtain a more reliable measure of fatigue than in Study 1, we captured fatigue with a modified set of three items from the POMS (Albani et al., 2005). More specifically, we removed “tired” and added “weary.”

Sleep quality (morning survey only)

We measured sleep quality with four items from the German version (Dieck et al., 2018) of the Insomnia Severity Index (Bastien et al., 2001). Items were adapted to refer to the previous night's sleep. A sample item is “Please rate the severity of the sleep problem difficulty falling asleep.” Response options ranged from 1 (none) to 5 (very severe). We reverse-coded the sleep-impairment items so that high values correspond to high sleep quality.
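Reverse coding on a 1 to 5 scale maps each response x to 6 − x, so that "none" (1) becomes 5 and "very severe" (5) becomes 1. The sketch below shows this step; averaging the reversed items into a sleep quality score is an assumption, since the text does not say whether items were averaged or summed, and the function names are hypothetical.

```python
def reverse_code(score, low=1, high=5):
    """Reverse a response on a low..high rating scale (1 <-> 5, 2 <-> 4)."""
    if not low <= score <= high:
        raise ValueError(f"score {score} outside {low}..{high}")
    return low + high - score


def sleep_quality(item_scores, low=1, high=5):
    """Mean of reverse-coded impairment ratings; higher = better sleep.

    Averaging (rather than summing) the items is an assumption.
    """
    if not item_scores:
        raise ValueError("no item scores given")
    return sum(reverse_code(s, low, high) for s in item_scores) / len(item_scores)


print(sleep_quality([1, 2, 1, 2]))  # 4.5
```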

Basic need satisfaction (noon survey only)

We measured competence, autonomy, and relatedness need satisfaction with three items each. To measure competence need satisfaction, we applied items from Van den Broeck et al. (2010) adapted to the ESM context (Weigelt et al., 2019). A sample item is “During the day, I felt that I am competent at the things I do.” We measured autonomy and relatedness need satisfaction with the scales proposed by Sheldon et al. (2001) and Van den Broeck et al. (2010), adapted to the ESM context (Heppner et al., 2008). Sample items were “During the day, I felt that my choices expressed my ‘true self’” and “During the day, I felt close and connected with other people I spent time with,” respectively.

Work engagement (afternoon survey only)

We captured work engagement with the work engagement scale proposed by Rothbard and Patil (2011), adapted to the ESM context. The scale distinguishes three facets, namely absorption by work, attention to work, and effort. We chose this scale because all items explicitly focus on agency or behavior and align well with the concept of expending energy. Furthermore, this scale minimizes the risk of tautological associations with energetic activation (D. Wood & Harms, 2016). We asked participants to refer to the current workday. Sample items for the three facets are “I often lost track of time when I was working,” “I concentrated a lot on my work,” and “I worked with intensity on my job,” respectively.

All scales applied in the experience sampling survey achieved acceptable to excellent reliabilities, ranging from ωwithin = .72 for sleep quality to ωwithin = .91 for both vitality and attention. We present detailed reliabilities across all variables in .

Table 2. Correlations, means, standard deviations, reliabilities, and intra-class correlation coefficients among focal variables in Study 1.

User experience (closing survey)

We obtained user experience ratings for each SIMEA variant in the closing survey. More specifically, we presented the three SIMEA variants next to one another and asked participants to rate the user experience of each variant. We selected eight items applicable to the SIMEA variants from a questionnaire on the user experience of software products (Laugwitz et al., 2014). The items use a semantic differential format ranging from 1 to 5, with pairs of adjectives as scale anchors. We focused on four facets of user experience, namely simplicity (sample item: simple/complicated), originality (sample item: unimaginative/creative), stimulation (sample item: uninteresting/interesting), and efficiency (sample item: slow/fast), each measured with two items. Reliabilities of the user experience sub-scales were acceptable to excellent, ranging from ω = .79 (efficiency) to ω = .95 (simplicity and stimulation; see Table S8 in the supplemental materials for details). Given adequate reliability, we formed composite scores for each of the four facets of user experience. In Table S6, we provide details on all scales applied in Study 2.

Time effectiveness of the SIMEA

To examine the time efficiency of the SIMEA variants, we placed the SIMEA on a separate page within the experience sampling survey and tracked response latencies. More specifically, we recorded when participants submitted each survey page and calculated the time taken to respond to the SIMEA as the time stamp of the SIMEA page minus the time stamp of the page preceding it.
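The latency computation amounts to a difference between two page-submission time stamps. The sketch below assumes a hypothetical log format of (page name, submission time) pairs in presentation order; the page names and time stamps are invented, and the survey platform's actual export format is not described in the text.

```python
from datetime import datetime


def simea_latency(page_times, simea_page="simea"):
    """Seconds spent on the SIMEA page of one survey.

    page_times: (page_name, submission_time) pairs in presentation order.
    Latency = submission time of the SIMEA page minus submission time of
    the page immediately preceding it.
    """
    names = [name for name, _ in page_times]
    i = names.index(simea_page)  # raises ValueError if the page is missing
    if i == 0:
        raise ValueError("the SIMEA page has no preceding page")
    return (page_times[i][1] - page_times[i - 1][1]).total_seconds()


# Invented timestamps for one survey.
survey = [
    ("start", datetime(2000, 1, 1, 7, 30, 0)),
    ("simea", datetime(2000, 1, 1, 7, 30, 6, 500000)),
    ("vitality", datetime(2000, 1, 1, 7, 30, 21)),
]
print(simea_latency(survey))  # 6.5
```

Placing the SIMEA on its own page is what makes this difference interpretable: the interval between the two submissions covers reading and answering only that item.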

Analytic strategy

We ran multilevel confirmatory factor analyses, applying the same strategy as in Study 1, to examine the convergent and criterion-related validity of the SIMEA. To establish criterion-related validity, we focused on time-lagged analyses (1) from sleep quality in the morning to energetic activation as measured with the SIMEA at noon, (2) from basic need satisfaction at noon to energetic activation as measured with the SIMEA in the afternoon, and (3) from energetic activation as measured with the SIMEA at noon to work engagement in the afternoon. Hence, our focal analyses address lagged associations. As a supplement to these focal lagged analyses, we report concurrent correlations among all variables in Table S7 in the supplemental materials.
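The lagged design requires pairing a predictor from an earlier survey with an outcome from a later survey on the same person-day. The sketch below shows one way to build such pairs from long-format data; the dictionary layout, slot labels, and variable names are assumptions for illustration and not the authors' data structure.

```python
def lagged_pairs(reports, predictor, pred_slot, outcome, out_slot):
    """Pair an earlier predictor with a later outcome on the same person-day.

    reports: dict keyed by (person, day, slot) -> {variable: value}, where
    slot is "morning", "noon", or "afternoon". Person-days lacking either
    survey (or either variable) are skipped.
    """
    pairs = []
    for person, day, slot in sorted(reports):
        values = reports[(person, day, slot)]
        if slot != pred_slot or predictor not in values:
            continue
        later = reports.get((person, day, out_slot), {})
        if outcome in later:
            pairs.append((person, day, values[predictor], later[outcome]))
    return pairs


# Invented data: day 2 has no noon survey, so it yields no pair.
reports = {
    ("p1", 1, "morning"): {"sleep": 4.0},
    ("p1", 1, "noon"): {"simea": 5},
    ("p1", 2, "morning"): {"sleep": 3.0},
}
print(lagged_pairs(reports, "sleep", "morning", "simea", "noon"))
# [('p1', 1, 4.0, 5)]
```

The same helper covers the other two lags, for example `lagged_pairs(reports, "simea", "noon", "attention", "afternoon")` for predicting afternoon attention to work from the noon SIMEA.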

In the focal analyses, we do not distinguish between the three SIMEA variants but treat SIMEA as a class of measures. To this end, we pooled the data from all three SIMEA variants into a single SIMEA indicator, whose value on each occasion stems from whichever variant was presented. However, we also specified an additional set of MCFA models to examine whether the three SIMEA variants yield similar or different results in terms of criterion-related validity. In these MCFA models, we had three separate indicators representing (1) the battery scale, (2) the purely verbal SIMEA, and (3) the abridged pictorial SIMEA. Because these three indicators were never measured concurrently, their covariances had to be fixed to a specific value.⁴ Following the rationale of measurement invariance tests, we compared constrained models, in which the covariances of the SIMEA indicators with the criterion variables were constrained to be equal across variants, to unconstrained models, in which these covariances were estimated freely. If constraining parameters in this way does not substantially impair model fit, the associations of the three SIMEA variants with sleep, basic need satisfaction, and work engagement can be considered equivalent.
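Why the covariances among the three variant indicators cannot be estimated becomes clear when the data are laid out row by row: each survey fills exactly one variant column, so no two variant columns are ever observed together. The sketch below illustrates this layout with hypothetical column names; which value the covariances were fixed to is left to the paper's footnote and is not assumed here.

```python
VARIANTS = ("battery", "verbal", "abridged")


def indicator_columns(variant, score):
    """One survey's SIMEA score as a pooled column plus per-variant columns.

    Only the administered variant's column is filled; the other two are None
    because each survey presents exactly one variant.
    """
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant: {variant}")
    row = {"simea_pooled": score}
    for v in VARIANTS:
        row[f"simea_{v}"] = score if v == variant else None
    return row


def ever_co_observed(rows, col_a, col_b):
    """True if two columns are ever observed together in the same row."""
    return any(r[col_a] is not None and r[col_b] is not None for r in rows)


rows = [indicator_columns("battery", 5), indicator_columns("verbal", 4),
        indicator_columns("abridged", 6)]
print(ever_co_observed(rows, "simea_battery", "simea_verbal"))  # False
```

The pooled column, by contrast, is observed in every row, which is why the focal analyses can treat the SIMEA as a single indicator.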

We ran multilevel regression models using the “nlme” package (Pinheiro & Bates, 2000) for R to compare response latencies across the three SIMEA variants. More specifically, we regressed each response latency on two dummy variables indicating whether the variant presented was the purely verbal SIMEA (0 = no, 1 = yes) and whether it was the abridged pictorial SIMEA (0 = no, 1 = yes). Hence, the battery scale served as the reference category (0/0). We included the number of trials as a covariate to account for practice effects, which might be reflected in decreasing response latencies. More specifically, we operationalized practice effects as the number of times a participant had so far provided ratings on any of the SIMEA variants.
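The coding scheme described here can be sketched as a small data-preparation step: two dummies with the battery scale as reference, plus a running trial count across all variants. This is a hypothetical illustration; whether the count starts at 1 and whether it was computed before or after outlier exclusion are assumptions, and the actual model was fitted in R with nlme.

```python
VARIANTS = ("battery", "verbal", "abridged")


def code_latencies(sequence):
    """Model-ready rows for one participant.

    sequence: (variant, latency_seconds) pairs in time order. Dummy coding
    uses the battery scale as the reference category (verbal = 0,
    abridged = 0); trial counts every SIMEA rating so far, of any variant.
    """
    rows = []
    for trial, (variant, latency) in enumerate(sequence, start=1):
        if variant not in VARIANTS:
            raise ValueError(f"unknown variant: {variant}")
        rows.append({
            "latency": latency,
            "verbal": int(variant == "verbal"),
            "abridged": int(variant == "abridged"),
            "trial": trial,  # 1-based count, including the current rating
        })
    return rows


for row in code_latencies([("battery", 7.1), ("verbal", 9.8), ("abridged", 6.4)]):
    print(row)
```

Rows like these could then be passed to a mixed-model routine with random intercepts for participants, such as `lme` in R's nlme or `mixedlm` in Python's statsmodels.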

We ran a one-factor repeated-measures multivariate analysis of variance (MANOVA) to compare user experience across the three SIMEA variants (battery scale, purely verbal SIMEA, abridged pictorial SIMEA).

Results

Convergent validity

We present correlations among the focal variables in (see also Table S7 in the supplemental materials). ICC1 across measures ranged from .32 (vitality in the afternoon) to .51 (vigor in the morning). We ran MCFA models to estimate the convergent validity among the battery scale, subjective vitality, vigor, and fatigue. A 4-factor model homologous across levels of analysis fit the data better than any alternative model in which items from different scales loaded on a common factor (Satorra-Bentler scaled Δχ² > 210, df = 6, p < .001). Comparisons across models are presented in Table S9. The 4-factor model achieved excellent fit, as reflected in CFI = .991, TLI = .987, RMSEA = .025, SRMRwithin = .013, and SRMRbetween = .037.

We found that energetic activation as measured with the SIMEA variants converges particularly strongly with vitality (ψwithin = .80, SE = 0.01, p < .001) and vigor (ψwithin = .79, SE = 0.01, p < .001). The SIMEA variants also correlate highly with fatigue (ψwithin = −.69, SE = 0.02, p < .001). We report loadings and standardized covariances among the focal scales in Figure S2 in the supplemental materials. These results confirm the findings from Study 1 that the SIMEA variants, including the battery scale, measure energetic activation and correspond most closely to the experience of vitality and vigor. Although the SIMEA correlated highly with fatigue, this correlation fell slightly below the .70 threshold in absolute value. However, fatigue taps into aspects distinct from, yet highly correlated with, each of these measures of energetic activation: vigor correlated ψwithin = −.69 and vitality ψwithin = −.71 with fatigue. That is, the established multi-item measures relate to fatigue about as strongly as the SIMEA does. Hence, we do not interpret these findings as a lack of convergent validity of the SIMEA variants. In sum, the results suggest that the SIMEA variants are best suited to capture the aspects of energetic activation also reflected in scales of vitality and vigor.

Criterion-related validity

We ran a set of MCFA models to examine whether sleep quality in the morning predicts energetic activation at noon. We found that a 2-factor model homologous across levels of analysis had a good fit as reflected in CFI = .982, TLI = .974, RMSEA = .021, SRMRwithin = .047, and SRMRbetween = .057. At the within-person level, sleep quality in the morning was linked to (higher levels of) energetic activation as measured with the SIMEA at noon (ψwithin = .29, SE = 0.04, p < .001). We report all estimated factor loadings and standardized covariances at the within-person and at the between-person level in Figure S3 of the supplemental materials.

We ran another set of MCFA models to examine whether basic need satisfaction at noon is linked to energetic activation in the afternoon. We found that a 4-factor model (SIMEA-autonomy-relatedness-competence) homologous across levels of analysis had a good fit as reflected in CFI = .979, TLI = .972, RMSEA = .028, SRMRwithin = .057, and SRMRbetween = .064. The 4-factor model fit the data better than any alternative model combining items from different scales to load on a common factor. At the within-person level, autonomy need satisfaction (ψwithin = .17, SE = 0.05, p = .002), relatedness need satisfaction (ψwithin = .15, SE = 0.05, p = .001), and competence need satisfaction (ψwithin = .10, SE = 0.05, p = .032) at noon were linked to (higher levels of) energetic activation as measured with the SIMEA variants in the afternoon. We report all estimated factor loadings and standardized covariances at the within-person and at the between-person level in Figure S5 of the supplemental materials.

We applied a similar strategy to examine whether energetic activation as measured with the SIMEA at noon is linked to work engagement in the afternoon. We found that a 4-factor model (SIMEA-attention-absorption-effort) homologous across levels of analysis had an adequate fit as reflected in CFI = .950, TLI = .933, RMSEA = .045, SRMRwithin = .058, and SRMRbetween = .055. The 4-factor model fit the data better than any alternative model combining items from different scales to load on a common factor. At the within-person level, energetic activation as measured with the SIMEA variants at noon was linked to (higher levels of) attention to work (ψwithin = .23, SE = 0.06, p < .001), absorption by work (ψwithin = .29, SE = 0.06, p < .001), and effort (ψwithin = .21, SE = 0.07, p = .001) in the afternoon. We report all estimated factor loadings and standardized covariances at the within-person and at the between-person level in Figure S7 of the supplemental materials.

We compared the criterion-related validity across the three SIMEA variants by comparing the constrained vs. unconstrained models. Constraining the covariances of the SIMEA indicators with the criterion variables to be equal across variants did not deteriorate model fit for sleep quality (Satorra-Bentler scaled Δχ² = 4.041, df = 4, n.s.), basic need satisfaction (Satorra-Bentler scaled Δχ² = 16.536, df = 12, n.s.), or work engagement (Satorra-Bentler scaled Δχ² = 14.512, df = 12, n.s.). We provide detailed results in Table S10 in the supplemental materials. Hence, the criterion-related validity of the SIMEA concerning sleep quality, basic need satisfaction, and work engagement can be considered equivalent across SIMEA variants. For completeness, we report the estimated covariances for each of the three SIMEA variants in Figures S4, S6, and S8 in the supplemental materials.

We ran additional analyses to put the criterion-related validity of the SIMEA variants into context. More specifically, we tested lagged associations between vitality and the criterion variables. We report the estimated standardized covariances for these models in Figures S11 to S13 in the supplemental materials. In essence, vitality and the SIMEA variants yielded very similar associations with the criterion variables. Hence, these additional analyses further corroborate the notion that the SIMEA variants achieve a high level of criterion-related validity.⁵

Differential response latencies

We ran multilevel models to compare response latencies across the three variants of the SIMEA (battery scale, purely verbal SIMEA, abridged pictorial SIMEA). After excluding outliers with response latencies above 15 seconds (corresponding to 1.5 interquartile ranges above the third quartile), we ran analyses on 2809 response latencies from 109 participants (see Footnote 6).
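The 15-second cutoff corresponds to Tukey's upper fence, that is, the third quartile plus 1.5 times the interquartile range. A minimal standard-library sketch of this rule follows, assuming the fence is computed on the pooled latencies. Note that `statistics.quantiles` defaults to its "exclusive" quartile method, which may differ slightly from the quartile definition of the software used for the reported analyses. The function names are hypothetical.

```python
import statistics


def tukey_upper_fence(latencies, k=1.5):
    """Third quartile plus k interquartile ranges."""
    q1, _, q3 = statistics.quantiles(latencies, n=4)  # three quartile cut points
    return q3 + k * (q3 - q1)


def drop_slow_responses(latencies, k=1.5):
    """Keep latencies at or below the upper fence; return (kept, fence)."""
    fence = tukey_upper_fence(latencies, k)
    return [x for x in latencies if x <= fence], fence
```

Only the upper fence is applied here, mirroring the text, which excludes unusually slow responses only.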

We regressed response latency in seconds on the dummy variables (purely verbal SIMEA yes/no and abridged SIMEA yes/no), the number of trials (i.e., self-reports provided), and the number of trials × variant interactions to model initial differences in response latency, practice effects, and differential practice effects across variants. We compared linear and logarithmic growth because response latencies across the three variants likely approach an asymptote. A logarithmic model outperformed a linear model in terms of AIC and BIC (see Footnote 7). Modeling the variant × time interaction allows us to estimate initial differences in response latencies while accounting for practice effects. The results of the focal model are presented in Table 3. The initial response latency of the battery scale was approximately 7 seconds (intercept: γ00 = 7.28, SE = 0.26, t = 28.54, p < .001) and decreased over time (γ10 = −0.57, SE = 0.10, t = −5.91, p < .001); over the course of the study, response latencies approached a level of about 5 seconds. Participants took approximately 3 seconds longer to respond to the purely verbal variant of the SIMEA than to the pictorial variants (battery scale and abridged SIMEA vs. purely verbal SIMEA, γ20 = 2.79, SE = 0.36, t = 7.79, p < .001). We found no differences between the abridged pictorial SIMEA and the variants applying the complete instruction (i.e., battery scale and purely verbal SIMEA, γ30 = −0.39, SE = 0.34, t = −1.14, p = .253). The difference between the purely verbal variant of the SIMEA and the pictorial variants attenuated over time (γ40 = −0.92, SE = 0.14, t = −6.67, p < .001). The trajectories of response latencies for the three SIMEA variants are displayed in Figure S14 in the supplemental materials. In sum, the pictorial variants of the SIMEA save participants considerable time compared with the purely verbal variant, particularly during the first 10 to 15 self-reports within the study.
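To illustrate how the fixed effects combine, the sketch below computes model-implied latencies from the coefficients reported above. It rests on two assumptions not stated in the text: time enters the logarithmic model as ln(trial), so that trial 1 maps to 0 and the intercept equals the initial latency, and the abridged × time interaction, which is not reported here, is omitted. The constant names and `predicted_latency` are hypothetical.

```python
import math

# Fixed effects reported for the focal model (seconds). The battery scale is
# the reference category; verbal and abridged are the two dummy variables.
GAMMA_00 = 7.28   # intercept: battery scale at the first trial
GAMMA_10 = -0.57  # log-time slope (practice effect)
GAMMA_20 = 2.79   # purely verbal SIMEA vs. pictorial variants
GAMMA_30 = -0.39  # abridged pictorial SIMEA
GAMMA_40 = -0.92  # purely verbal SIMEA x log-time interaction


def predicted_latency(trial, verbal=False, abridged=False):
    """Model-implied response latency (s) for a given trial number.

    Assumption: time is coded as ln(trial). The abridged x time term is
    not reported in the text and is therefore left out.
    """
    if trial < 1:
        raise ValueError("trials are counted from 1")
    log_t = math.log(trial)
    v, a = float(verbal), float(abridged)
    return (GAMMA_00 + GAMMA_10 * log_t + GAMMA_20 * v + GAMMA_30 * a
            + GAMMA_40 * v * log_t)
```

Under these assumptions, the verbal-minus-battery gap is 2.79 seconds at the first trial and shrinks to about 0.67 seconds by the tenth. The sketch is only meant to show how the coefficients combine and should not be read as a reproduction of the trajectories in Figure S14.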

Table 3. Results from multilevel modelling predicting response latency in seconds as a function of number of trials taken and variant of the SIMEA.

In addition, we analyzed the response latencies of the multi-item scales tapping into energetic activation (i.e., vitality, fatigue, and vigor). Given that the multi-item verbal scales were presented on the same survey page, we calculated the average response time per item and multiplied it by three to estimate how long it takes on average to respond to a set of three verbal items (i.e., one multi-item scale). We ran a multilevel model including the number of trials in the same way as described above. Participants initially took approximately 9 seconds (intercept: γ00 = 8.89, SE = 0.18, t = 49.37, p < .001), and response latencies became shorter over time due to practice effects (γ10 = −0.80, SE = 0.05, t = −16.18, p < .001). Figure S14 in the supplemental materials shows the trajectories of response latencies across the different measures. Given that the practice effects of the multi-item scales are virtually identical to those of the battery scale, the time advantage of the battery scale over verbal scales is robust even after more than 30 self-reports. In sum, the pictorial SIMEA variants provide a time advantage of approximately 2 seconds per self-report compared with short multi-item scales. The time advantage of the purely verbal SIMEA is less pronounced.
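The per-scale estimate is simple arithmetic: the time spent on the shared survey page is divided by the number of items on that page and multiplied by three. The sketch below makes this explicit. The page time and item count in any call are hypothetical (nine items would correspond to three three-item scales, an assumption), and `per_scale_latency` is an illustrative name; only the two intercepts are taken from the text.

```python
def per_scale_latency(page_seconds, items_on_page, items_per_scale=3):
    """Estimate the time needed for one multi-item scale.

    Response time is recorded per survey page, so the page time is spread
    evenly across all items on the page and scaled to one three-item scale.
    """
    if items_on_page <= 0:
        raise ValueError("items_on_page must be positive")
    return page_seconds / items_on_page * items_per_scale


# Initial (first-trial) intercepts reported in the text, in seconds.
MULTI_ITEM_INTERCEPT = 8.89
BATTERY_INTERCEPT = 7.28
initial_advantage = MULTI_ITEM_INTERCEPT - BATTERY_INTERCEPT
```

With the reported intercepts, the initial advantage of the battery scale is 8.89 − 7.28 = 1.61 seconds, which the text rounds to about 2 seconds.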

Differences in user experience across variants of the SIMEA

We received complete user experience ratings from 90 participants. In Table S8 in the supplemental materials, we present reliabilities and intercorrelations among the four facets of user experience. The moderate correlations among the facets suggest that our scales capture empirically distinct aspects of user experience. We compared the user experience ratings across the three variants of the SIMEA applying MANOVA. Table 4 presents the means and standard deviations for (a) simplicity, (b) originality, (c) stimulation, and (d) efficiency across variants. User experience ratings differed considerably across variants, F(8, 350) = 19.07, p < .001, ηp² = .304. Post-hoc comparisons revealed that the battery scale yielded higher user experience ratings than the purely verbal SIMEA, with mean differences ranging from 0.63 (efficiency) to 1.37 (originality). The abridged pictorial variant of the SIMEA achieved user experience ratings similar to those of the battery scale. These results were consistent across the four facets of user experience considered. Hence, although the inclusion of pictorial elements may not affect the validity of the battery scale, our results suggest that it improves the participant experience considerably.
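As a consistency check on the reported effect size, partial eta squared can be recovered from an F statistic and its degrees of freedom as ηp² = F · df_effect / (F · df_effect + df_error). The one-line sketch below (function name hypothetical) applies this to F(8, 350) = 19.07 and reproduces the reported ηp² = .304 after rounding.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return f * df_effect / (f * df_effect + df_error)
```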

Table 4. Comparison of mean usability ratings across variants of the SIMEA.

Discussion

In Study 2, we replicated and extended the empirical evidence on the convergent validity of the battery scale as a specific form of SIMEA. We found that the SIMEA variants converge strongly with vitality and vigor and also correlate strongly with fatigue. At the same time, vitality and vigor did not converge more strongly with fatigue than the SIMEA variants did. Hence, our results support the conceptual distinction between vitality/vigor vs. fatigue. The SIMEA variants are better suited to capturing energetic activation in terms of vitality and vigor than in terms of fatigue.

Addressing the issue of criterion-related validity, we examined whether sleep quality and basic need satisfaction relate to energetic activation as measured with the SIMEA variants. We found evidence for lagged associations in that sleep quality in the morning predicted higher levels of energetic activation at noon. All facets of basic need satisfaction at noon were linked to higher levels of energetic activation in the afternoon. Furthermore, energetic activation as measured with the SIMEA variants at noon was linked to all facets of work engagement in the afternoon.

Addressing the practical advantages of applying a pictorial rather than a purely verbal SIMEA, we found that including pictorial elements reduced the time it takes to respond to the scale by nearly 3 seconds, with an average initial response latency of around 7 seconds for the original variant of the battery scale. Accounting for practice effects, the response latency can be expected to approach 5 seconds. Hence, our study not only provides a reliable estimate of the time it takes to respond to the battery scale; it also shows explicitly that including pictorial elements in the SIMEA reduces survey length in terms of average time spent per item. First, the battery scale is superior to the purely verbal SIMEA. This time advantage is most pronounced during the first self-reports within an ESM study. In other words, pictorial elements alone make a difference during the initial phase of an ESM study, when participants become familiar with the protocol and the specific materials of the study. Second, the battery scale provides a time advantage of about 2 seconds compared with a verbal scale of only three items. Hence, applying a pictorial SIMEA like the battery scale rather than a verbal multi-item scale likely reduces participant burden and improves response rates across ESM surveys.

Finally, we compared user experience ratings across the three SIMEA variants. We found that the variants including pictorial elements, namely battery icons ranging from a depleted to a fully charged battery, achieved superior user experience ratings across all domains considered. Hence, our results support applying the SIMEA in its pictorial form as a means to improve the user experience of the ESM survey. Ultimately, the superior user experience of the battery scale may help encourage participants to keep completing surveys beyond the first days of an ESM study, the phase in which participants decide whether to commit to continuing.

General discussion

In the work at hand, we set out to examine the convergent, discriminant, and criterion-related validity and the practical usefulness of a newly developed single-item pictorial scale of energetic activation: the battery scale. Study 1 focused on examining the convergent and discriminant validity of the battery scale. Study 2 focused on extending evidence on convergent validity, probing criterion-related validity, and studying the benefits of a pictorial scale like the battery scale in terms of test economy and user experience.

Theoretical implications

Consistently across studies, we found that energetic activation as measured with the battery scale and SIMEA variants derived from the battery scale converges with widely used multi-item verbal scales of energetic activation, namely vitality, vigor, and fatigue. The SIMEA variants correspond most closely to vitality and vigor. This finding suggests that the battery scale as a specific variant of SIMEA is a valid instrument for measuring a core aspect of energetic activation in ESM research.

We found considerable links between the battery scale and facets of core affect. However, our results provide empirical evidence for distinguishing energetic activation from affect per se. Our results are consistent with the conceptual distinction between energetic activation and facets of core affect (Daniels, 2000; Ryan & Frederick, 1997), although the link between enthusiasm and energetic activation was considerably stronger than the modest links between subjective vitality and positive affect reported in prior empirical research (Ryan & Frederick, 1997; C. Wood, 1993). Put differently, although energetic activation in terms of vitality is linked to high-activation positive affect, the two constructs can clearly be distinguished. Of note, the battery scale as a specific variant of SIMEA yielded lower correlations with enthusiasm than the subjective vitality scale did. Hence, the battery scale may even be superior to the vitality scale in distinguishing energetic activation from enthusiasm.

We found that the SIMEA variants considered are linked to variables that prior research has identified as predictors of vitality, namely sleep quality (Schmitt et al., 2017) and basic need satisfaction (Campbell et al., 2018; Vergara-Torres et al., 2020). We also found that energetic activation as measured with the SIMEA variants was linked to devoting energy to work as reflected in attention, absorption, and physical engagement. The SIMEA variants yielded a pattern of associations with these criterion variables very similar to that of vitality, a multi-item verbal measure of energetic activation.

Our analysis of response latencies and user experience provides evidence that the SIMEA variants, and especially the battery scale, are very parsimonious in terms of participant time and effort and hence well suited for application in ESM research. In sum, we have provided strong evidence that the battery scale is very similar to a multi-item verbal measure of vitality concerning convergent, discriminant, and criterion-related validity, as well as variability across time. These findings indicate that the battery scale is a valid and cost-effective measure of state energetic activation, particularly suited for ESM research.

Practical implications

The battery scale as a specific SIMEA variant provides a time-efficient way of measuring energetic activation. At the same time, the battery scale, though not perfectly valid, is probably as good as it may get when applying a single-item measure in applied research (Fisher et al., 2016). Although a single item cannot provide nuanced insights, it may facilitate intensive longitudinal research with multiple repeated measures per day, as is typical in ecological momentary assessment research (Syrek et al., 2018). For instance, tracking energetic activation across the workday may help identify periods of optimal functioning without considerably disturbing participants during work. Researchers may want to provide personalized online feedback to participants (Arslan et al., 2019) as an incentive for participation (Gabriel et al., 2019). Feedback on trajectories of energetic activation may even be part of specific (Lambusch et al., 2022; G. Spreitzer & Grant, 2012) or more general stress prevention and health promotion programs (Tetrick & Winslow, 2015). Besides being a valid measurement instrument, the battery scale as a specific SIMEA variant may be a helpful tool to facilitate self-insight among participants. It may also be useful for assessing energetic activation when participant involvement in research is low. Our results suggest that the battery scale may work even when verbal instructions are reduced to a minimum, as in the case of the abridged pictorial SIMEA. A minimal instruction may be particularly useful in contexts where literacy is an issue or where contextual constraints do not permit interrupting employees for more than a few seconds. Of note, however, we think that the abridged pictorial SIMEA likely requires a minimum level of familiarity with applying the battery metaphor to rate energetic activation. Given that the time advantage of the abridged pictorial SIMEA vs. the battery scale is modest and fades across trials, we conclude that participants in ESM studies will likely not read the instruction thoroughly each time they access a survey. Hence, we recommend applying the more comprehensive instruction of the battery scale to have the best of both worlds: an explicit and clear instruction and the time advantage of a pictorial scale.

Strengths and limitations

The research presented has several strengths, such as drawing on rich ESM data with relatively low rates of missing data, leveraging multilevel structural equation modeling techniques, and replicating some of the core findings across two consecutive studies. However, several cautionary notes are warranted when interpreting our results. First, the sample sizes in our studies are not particularly large. Nevertheless, we believe that we provide a good deal of initial evidence on various aspects of construct and criterion-related validity in a common research setting. Jointly, the two studies provide a precise and rich picture of the psychometric properties of the battery scale and its location in the nomological network.