ABSTRACT

This paper considers legal contestation in the UK as a source of useful reflections for AI policy. The government has published a ‘National AI Strategy’, but it is unclear how effective this will be given doubts about levels of public trust. One key concern is the UK’s apparent ‘side-lining’ of the law. A series of events was convened to investigate critical legal perspectives on the issues, culminating in an expert workshop addressing five sectors. Participants discussed AI in the context of wider trends towards automated decision-making (ADM). A recent proliferation in legal actions is expected to continue. The discussions illuminated the various ways in which individual examples connect systematically to developments in governance and broader ‘AI-related decision-making’, particularly due to chronic problems with transparency and awareness. This provides fresh and current insight into the perspectives of key groups advancing criticisms relevant to policy in this area. Policymakers’ neglect of the law and legal processes is contributing to quality issues with recent practical ADM implementation in the UK. Strong signals are now required to break the vicious cycle of increasing mistrust and move to an approach capable of generating public trust. Suggestions are summarised for consideration by policymakers.

Background

Artificial intelligence (AI) is a hot topic in public policy around the world. Alongside efforts to develop and use information technology, AI has become a ubiquitous feature in visions of the future of society. This paper considers the relevance of legal and regulatory frameworks in guiding these initiatives and ideas, challenging the ‘wider discourse … drawing us away from law, … towards soft self-regulation’ (Black and Murray 2019). As discussed under ‘Findings’ below, it suggests that AI has become a problematic concept in policy. Policymakers might more responsibly focus on how to foster good decision-making as technology changes.

The present study focuses on the United Kingdom (UK), where the government has expressed strong interests in the ‘wondrous benefits of this technology, and the industrial revolution it is bringing about’ (Johnson 2019), and wishes to lead the G7 in working ‘together towards a trusted, values-driven digital ecosystem’ (DCMS 2021c). The UK government also claims a legal system that is ‘the envy of the world’ (Lord Chancellor et al. 2016), which suggests that its experience in applying relevant frameworks may be of general interest.

In domestic policy, the ‘grand challenge’ of putting the UK ‘at the forefront of the AI and data revolution’ has been the government’s first Industrial Strategy priority since 2017 (BEIS 2017). A new National AI Strategy was published in September 2021, aiming to: ‘invest and plan for the long-term needs of the AI ecosystem’; ‘support the transition to an AI-enabled economy’; and ‘ensure the UK gets the national and international governance of AI technologies right’ (Office for Artificial Intelligence et al. 2021).

But this new strategy must also address a major problem. Although it considers that its objectives ‘will be best achieved through broad public trust and support’, levels of public trust are low. A deterioration in trust in the technology sector (or ‘techlash’) has been remarked upon globally (West 2021). It is certainly apparent in the UK, where already-poor perceptions of AI seem to be deteriorating sharply. According to one survey, only about 32% of people in the UK trust the AI sector of the technology industry. This proportion is lower, and has fallen faster, than in any other ‘important market’ in the world, with a drop of 16% since 2019 (Edelman 2020, 2021).

Although UK policymakers have consistently acknowledged that participatory processes to develop and apply clear legal and regulatory standards are important to the development of public trust, so far that has not translated into an effective programme of action. One early paper in 2016 highlighted the importance of clarifying ‘legal constraints’, maintaining accountability and cultivating dialogue (Government Office for Science 2016). In practice, the various proposals, guidance documents and statements of ethical principle have often lacked enforceability mechanisms as well as public profile or credibility.

One example is the idea of possible ‘data trusts’ to address power imbalances and mobilise class action remedies. UK policymakers see data trusts more as a ‘means of boosting the national AI industry by creating a framework for data access’, overcoming privacy and other objections (Artyushina 2021). It has long been observed that this lacks credibility from the point of view of an individual ‘data subject’ (Rinik 2019). Hopes for data trusts as ‘bottom-up’ empowerment mechanisms have receded over time, with experiences like Sidewalk Toronto providing a ‘cautionary tale about public consultation’ even as consortium pilots have apparently shown their value in a ‘business scenario’ (Delacroix and Lawrence 2019; ElementAI 2019; Zarkadakis 2020). Associated discussions about new forms of governance capable of empowering individuals in their interactions with dominant organisations have tended to focus on market participation rather than civic values, thereby ‘limiting the scope of what is at stake’ (Lehtiniemi and Haapoja 2020; Micheli et al. 2020).

Another example is the government’s Data Ethics Framework, which was last updated in 2020 (Central Digital & Data Office 2020). The Framework’s successive versions have become more detailed in their warning that officials should ‘comply with the law’, now annexing a list of relevant legislation and codes of practice. But the recommendation that teams should ‘involve external stakeholders’ is treated separately and merely as a matter of good development practice. The Framework makes no connection to public participation in the articulation of public values: it neither considers that relevant information technology projects may involve standard-setting nor acknowledges that the law requires engagement with external parties in various operational respects.

The problem does not appear to be lack of care or attention outside government. In criminal justice, for example, Liberty (the UK’s largest civil liberties organisation) and The Law Society (the professional association for solicitors in England and Wales) each released high-profile reports in 2019 identifying problems with rapid algorithmic automation of decision-making (Liberty 2019; The Law Society 2019).

The problem instead seems to lie in standards of decision-making within government. Despite growing concerns in policy circles about the problem of public mistrust, ‘law and legal institutions have been fundamentally side-lined’ in favour of discussions about AI ethics (Yeung 2019). But this has not settled questions of legal liability (Puri 2020). And ethics have become less accommodating, for example with a growing sense that ‘government is failing on openness’ when it comes to AI (Committee on Standards in Public Life 2020). A 2020 ‘barometer’ report characterised the ‘lack of clear regulatory standards and quality assurance’ as a ‘trust barrier’ likely to impede realisation of the ‘key benefits of AI’ (CDEI 2020).

Under these conditions, some elements of civil society have started to emphasise legal challenges to specific applications, both to enable greater participation in the policy arena and to highlight the growing impact of AI on UK society. For example, in a prominent judicial review of police trials of automated facial recognition (AFR) software in public places, the Court of Appeal ruled that applicable data protection, equalities and human rights laws had been breached, establishing a key reference point for the AFR debate (R (Bridges) v CC South Wales Police [2020] EWCA Civ 1058) (hereafter, Bridges). The Home Office also suffered a series of high-profile setbacks in the face of legal challenges to the automation of its immigration processes (BBC 2020; NAO 2019).

With the arrival of the Covid-19 pandemic and its associated spur to uses of technology, the issues of participation and trust have become more prominent and provocative. Although the A-level grading controversy in Summer 2020Footnote1 did not involve AI, there were high-profile public protests against ‘the algorithm’ (Burgess 2020; Kolkman 2020). The Chair of the Centre for Data Ethics and Innovation (CDEI)Footnote2 resigned as Chair of Ofqual,Footnote3 reportedly over lack of ministerial support (The Guardian 2020). More recently, attention has focused on levels of transparency in the National Health Service (NHS) deal with the technology company Palantir, again spawning legal challenges (BBC 2021b). In these circumstances, it is not surprising that the government’s own AI Council advisory group has observed in its Roadmap recommendations on the government’s strategic direction on AI that the subject is ‘plagued by public scepticism and lack [of] widespread legitimacy’ and that ‘there remains a fundamental mismatch between the logic of the market and the logic of the law’ (AI Council 2021).

The UK is plainly not the only jurisdiction facing these issues, although Brexit arguably puts it under special pressure to justify its approach given the European Union’s (EU) growing reputation for proactive leadership in the field (CDEI 2021). The EU is considering feedback on a proposed AI Regulation to harmonise and extend existing legal rules, both to ‘promote the development of AI and [to] address the potential high risks it poses to safety and fundamental rights’ (European Commission 2021). The United States (US) has developed a research-oriented, university-based approach to legal implications which connects technology industry and philanthropic interests to practising lawyers, civil society and government as well as academia (AI Now Institute 2021; Crawford 2021). The White House has now started to canvass views on a possible ‘Bill of AI Rights’ (Lander and Nelson 2021), a ‘pro-citizen approach [which appears] in striking contrast to that adopted in the UK, which sees light-touch regulation in the data industry as a potential Brexit dividend’ (Financial Times 2021a). China’s apparently top-down approach instead looks to be ‘shaped by multiple actors and their varied approaches, ranging from central and local governments to private companies, academia and the public’, and its ‘regulatory approach to AI will emerge from the complex interactions of these stakeholders and their diverse interests’ (Arcesati 2021; Roberts et al. 2021). Overall, a global perspective emphasises both the broad, multi-faceted nature of the challenge in understanding appropriate forms of governance for this complex technology and the value of expanding and diversifying suggestions for ways in which it could be governed.

Workshop. This paper presents a policy-oriented analysis of a workshop held on 6 May 2021, the culmination of a series of collaborative events over the first half of 2021 to discuss AI in the UK from a critical legal perspective. These ‘Contesting AI Explanations in the UK’ events were organised by the Dickson Poon School of Law at King’s College London (KCL) and the British Institute for International and Comparative Law (BIICL).

The main background to the workshop was a public panel event held on 24 February 2021, a recording and summary of which was published shortly afterwards (BIICL 2021a). The panel event focused primarily on relevant technology in current law. The key motivations for the panel were as follows:

Encouraging discussion of legally-required ‘explanations’ beyond data protection regulation. The so-called ‘right to explanation’, constructed out of several provisions of the EU General Data Protection Regulation 2016 (GDPR – now the UK GDPR), has unleashed a cascade of academic debate and technological initiatives as well as relatively helpful regulatory guidance (ICO 2020). The recent Uber and Ola decisions arising from rideshare drivers’ legal challenges to their employers have also tested relevant provisions before the courts for the first time, fuelling media coverage (Gellert, van Bekkum, and Borgesius 2021; The Guardian 2021). But data protection is clearly not a complete – nor necessarily even a particularly satisfactory – solution to the legal issues raised by machine learning technologies (Edwards and Veale 2017). It has been argued persuasively that ‘the GDPR lacks precise language as well as explicit and well-defined rights and safeguards against automated decision-making’ (Wachter, Mittelstadt, and Floridi 2017), even if the entitlement to ‘meaningful information’ is undeniably significant (Selbst and Powles 2017). And there are, for example, profound implications to work out with respect to current public law and employment law rights and obligations (Allen and Masters 2021; Maxwell and Tomlinson 2020).Footnote4

Fostering broader conversations amongst lawyers about experiences ‘contesting’ AI-related applications. The UK’s approach to policy has been narrower than in the US or EU, eschewing, in particular, the idea that the AI revolution needs a broad-based legal response. This has tended to obstruct calls for ‘lawyers, and for regulators more generally, to get involved in the debate and to drive the discussion on from ethical frameworks to legal/regulatory frameworks and how they might be designed’ (Black and Murray 2019). There is an antagonistic relationship between the government and ‘activist lawyers’ (Fouzder 2020), including organisations like Liberty, Foxglove and AWO that have contested the deployment of relevant systems. The law and court or regulatory processes are strangely excluded from discussions about what ‘public dialogue’ means in this context (Royal Society & CDEI 2020).

Aside from validating these points, the key observations taken forward from the panel event into the framing of the workshop event were as follows:

The depth of the issues involved calls for ‘upstream’ feedback from legal experience into policy. Whereas both the law and legal processes tend to focus on particular examples, especially involving the rights of individuals considered after harms may have occurred, the recent proliferation of cases suggests that systematic regulatory and governance issues are involved at various stages of use. Responsible innovation principles, for example, would imply – amongst other things – anticipating and mitigating risks in advance of the occurrence of harms (Brundage 2019; UKRI Trustworthy Autonomous Systems Hub 2020).

The breadth of the issues warrants cross-sectoral analysis. Although lawyers and policymakers specialising in information and technology have long been interested in connections with public and administrative law, recent experience suggests significant differences as well as similarities in the ways in which law and technologies intersect in specific fields of activity (for example, education as compared to health).

Methods

The workshop reported in this paper was designed and implemented as a participatory action research initiative inspired by the ‘Policy Lab’ methodology (Hinrichs-Krapels et al. 2020). Policy Labs are one example of a recent expansion in methods for the engagement of broader networks in policy processes, especially focused on ‘facilitating research evidence uptake into policy and practice’.

Six ‘sectors’ were identified for separate group discussions: constitution, criminal justice, health, education, finance and environment (others, including immigration, tax and media, were considered suitable but discarded due to limited resources). Initial planning concentrated on identifying and securing the participation of researcher group leads (the co-authors of this paper), for broader expertise and sustainability. Each group lead was supported by a member of the KCL & BIICL team in facilitating the group discussion at the workshop. Eventually, only five sector groups were taken forward because of difficulty applying the selected framing to the environment sector. It seems likely that environmental issues will grow in prominence, for example considering developments in climate modelling and future-oriented simulations. But, in the time available, none of the qualified interlocutors contacted believed that current legal and regulatory decision-making in this sector implicates AI sufficiently to justify the approach.

Workshop participants were recruited by invitation in a collaboration between the organisers and the group leads for respective groups, using existing professional networks as well as contacting individuals through public profiles. Participants were targeted flexibly using the following inclusion criteria, bearing in mind the value of domain expertise as well as appropriate gender and ethnic diversity: (A) civil society activists – individuals or organisations whose day-to-day work involves legal challenges to AI-related decision-making; (B) practitioners – legal professionals with significant expertise relevant to associated litigation; (C) researchers – legal academics or other researchers familiar with relevant research debates. Exclusion criteria were not specified, although it was noted that including non-legally qualified policymakers, computer scientists, media figures or members of the public more generally might risk weakening the grounding of discussions in technical legal observations. The final number of participants was 33. Their names and organisational affiliations are listed in the Appendix.

Relevant concepts were clarified in advance using a participant information sheet and defined in the agenda document on the day. The event used a broad, inclusive definition of ‘AI’ based on the Russell & Norvig approach adopted by the Royal Society for UK policy discussion purposes (Royal Society 2017; Russell and Norvig 1998): ‘systems that think like humans, act like humans, think rationally, or act rationally’. This definition acknowledges that AI – as generally understood – implicates aspects of the wider ‘big data’ ecosystem, as well as the algorithms that exist in it, reflecting the ‘fuzzy, dynamic, and distributed materiality of contemporary computing technologies and data sets’ (Rieder and Hofmann 2020). It was also clarified that participants might raise legal principles and processes they considered relevant even if they did not directly arise from or implicate circumstances involving AI technology.

‘Contestation’ was defined to include critical analysis as well as social action (Wiener 2017), here in the form of legal processes (in the sense of activities seeking to invoke the power of a tribunal or regulatory authority to enforce a law, including investigations or actions implicating relevant principles). The critical emphasis in this component of the framing was noted to carry a risk of being perceived as negative and ‘anti-technology’, and participants were explicitly encouraged to maintain a positive, constructive approach (bearing in mind benefits as well as risks of technology and suggesting improvements). ‘Explanation’ was connected to its meaning in data protection law (see Background), but also to its broader and more general (and interactive) social scientific meanings (Miller 2018). ‘Governance’ was defined generally as the processes by which social order and collective action are organised (explicitly including elements like administrative practices, corporate governance and civil society initiatives as well as official governmental authority) (Stoker 2018).

The workshop opened with an initial conversation between Swee Leng Harris (Luminate/Policy Institute at King’s) and Professor Frank Pasquale (Brooklyn Law School), broadly following the group agenda format, to support framing and stimulate thinking ahead of the group discussions. This was a broad-ranging conversation which included consideration of the potential for law to ‘help direct – and not merely constrain’ the development of AI (Pasquale 2019). Prof. Pasquale’s suggested priorities as part of a ‘multi-layered’ approach to legally-informed AI policy were as follows:

Developing models of consent capable of reinforcing the role of full information to individuals, but also building more collective levels of consent and supporting people in keeping track of what they have consented to (Pasquale 2013).

Implementing initiatives to inform and educate decision-makers, especially judges, about benefits and risks of AI, inspired by US research about the effects of economics training on judicial decision-making (Ash, Chen, and Naidu 2017).

Each sectoral group then engaged in a conversation covering three questions, which were distilled from the previous panel event through an iterative process of discussion between the co-authors of this paper:

To what extent are legal actions implicated in contestations of current or potential AI applications in this sector?

Are potential harms from AI well understood and defined in the sector?

How might reflections on legal actions and potential harm definitions improve relevant governance processes?

Groups were not designed to be fully or consistently representative of their respective fields of activity; they were free to concentrate on areas of specific interest or expertise. An interim blog post was published pending this fuller analysis, following participant requests for a summary of the workshop to be made available for their working purposes immediately after the workshop (BIICL 2021b).

Findings

This section starts with a terminological distinction before reporting the main findings of the workshop. In each group discussion, participants expressed doubts about the utility of the term ‘AI’ in advancing common understandings of the issues in law. In some discussions (especially in the Health and Finance groups) this reflected an emphasis on a balanced approach to the technology itself, including recognising and supporting positive applications such as properly validated clinical decision support tools or effective fraud detection systems. The Education group also discussed difficulties in creating a legally meaningful definition of AI.

A more consistent concern, reflected in all group discussions, was the need to situate the question of AI-related harms within the wider issues of poor-quality computer systems, systems management, data practices and – ultimately – decision-making processes. For legal purposes, it is not always useful or possible to distinguish AI-specific problems from mistakes or harmful outputs arising from uses of complex systems generally. It is often unclear whether a particular system even uses AI in a technical sense. The Criminal Justice, Health and Education group discussions all included comments suggesting that incentives to make inflated claims about system capabilities are problematic. In other words, putting AI on the tin helps sell the product even if it does not contain AI. The Constitution group included a comment that decision-makers are often clearly struggling to exercise their duties (e.g. the duty of candour) because of poor levels of understanding about how these systems work.

As a result, the term ‘Automated Decision-Making’ (ADM) was generally preferred for participants’ purposes. No participant advanced an explicit definition of ADM. The term is generally familiar from the GDPR (ICO 2021a), although participants discussed partly-automated decisions as well as the fully-automated decisions addressed by the GDPR.

By no means does all ADM involve AI. The wider scope of the term ADM was considered useful for including a broad range of computational approaches, and for shifting the focus from technological methods (such as machine learning) to their institutional applications (emphasising uses).

Equally, not all AI necessarily entails ADM of any consequence. However, the discussions indicated that AI was typically understood as a subset, or special class, of ADM. Most participants were concerned with existing, human-reliant decision-making processes and systems, and considered AI from that perspective as a contribution to increasing automation (verging towards autonomy). There was also closer attention to consequences than causes, effectively rendering irrelevant any AI systems that are not somehow associated with ADM. As one participant in the Health group put it:

The primary focus [in law] should be on risk / harm / impact. I.e. what is the risk that the technology will result in serious harm? Focusing on the context and impact seems more important than the nature or complexity of the tech.

Legal actions

Table 1 sets out examples of legal actions raised in the group discussions (with identifiers used in brackets for the remainder of this section). Some examples were discussed in detail (e.g. 3), while others were mentioned in passing to illustrate a point (e.g. 5). The example raised in the most vivid terms (‘brutal’, ‘egregious and astonishing’) was that of the Post Office Horizon system (2).Footnote5 The fact that this example did not actually involve AI helps reinforce the point that participants saw the issues mainly through the broader lens of ADM.

Table 1: Examples of legal actions raised at the workshop.

Most of the examples raised were recent (2020–2021) or ongoing. Each of the group discussions raised the idea that it is early days for relevant legal contestation. Various reasons were given for this observation, including limited scope of technological deployment, obstacles to transparency and the limited availability of avenues for action. There was, however, a general expectation that legal actions will increase in coming years, especially given an apparent appetite for developing contestation perspectives and exploring new legal avenues.

These consistent impressions were nonetheless formed against a backdrop of contrast in the number, depth and variety of examples discussed by the various groups. Dissimilarities in group composition and interests probably explain some of these differences, but the differences were nonetheless indicative of sectoral distinctions, since the groups followed a consistent agenda. Discussion in the Constitution group (and to a lesser extent Criminal Justice, with which it shared key examples) suggested a longer and broader track record of relevant legal contestation. The Health, Education and Finance groups raised fewer examples and, at least for Education and Finance, were more tentative in considering them. The Health group discussed the example of the Information Commissioner’s Office (ICO)Footnote6 investigation of Royal Free – Google DeepMind (15)Footnote7 in detail, noting that the extended data protection investigation in fact had ‘nothing to do with AI’ from a technical perspective (but instead concerned the data used to test a system). The Health group also discussed a dispute between digital health provider Babylon Health and a concerned professional who advanced criticisms about safety and commercial promotion in relation to its chatbot, apparently engaging regulators’ cautious attention (14), as well as the Palantir example mentioned above (16). For the Finance group in particular, the lack of clear examples was perhaps remarkable given the sector’s long experience of using algorithmic approaches and evidence of relatively high levels of current AI deployment (CCAF 2020; FCA 2019).

Examples discussed by the groups covered a wide range of action types, forums, substantive grounds and procedural avenues. Actual or threatened litigation involving the UK courts was prominent in the Constitution and Criminal Justice groups’ examples (especially implicating the Home Office and police forces). The Education group chiefly referred to recent litigation in the Netherlands that may suggest potential grounds for actions in the UK (18, 19). Discussion in the Health and Finance groups, on the other hand, tended to focus more on regulatory interventions in a fragmented regulatory landscape. The Health group emphasised gaps between the Care Quality Commission (CQC) and the Medicines and Healthcare products Regulatory Agency (MHRA), which are both UK health sector regulatory bodies. The Finance group considered the mandates of the Financial Conduct Authority (FCA), Prudential Regulation Authority (PRA) and Competition and Markets Authority (CMA), which are all UK regulatory bodies relevant to financial markets. Two examples of ethics functions were raised (10, 12), neither of which carried any legal weight beyond an advisory role.

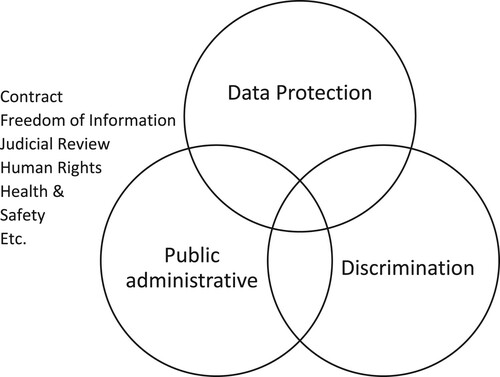

Relevant law. The group discussions referred to a broad range of relevant law. A simplified visual representation of the main areas is set out in the accompanying figure.

Data protection law featured prominently throughout the group discussions. Interestingly, the focus tended to be on Data Protection Impact Assessments (DPIAs – GDPR Art.35) as a key substantive and procedural element in wider actions, rather than on ‘explanation’ (in the sense of GDPR Arts. 22, 13–15, etc.) (19). There were repeated comments regarding ongoing contestation over the appropriate role of DPIAs, including, for example, their use in clarifying the duty of fairness as a pre-condition for lawful processing, and their place in the ICO’s regulatory responsibilities.

The area of law considered in most detail, though almost exclusively in the Constitution and Criminal Justice group discussions, was public administrative law. Judicial review examples received considerable attention, as did contestation over common law principles of procedural fairness (both generally and in relation to data protection and human rights legislation). The public sector equality duty (PSED)Footnote8 was also highlighted as a set of principles evolving through judicial consideration, especially in Bridges (3), which held that South Wales Police had not given enough consideration to the risk of discrimination when using automated facial recognition. Not surprisingly, given the salience of fundamental rights in legal contestation, the proportionality principle was also invoked repeatedly.

In this context, two points were raised that hinted at the relevance of broader cultural and political factors. The first related to rights and, in particular, the European Convention on Human Rights (ECHR) Article 8 right to privacy, as applied through the Human Rights Act (HRA). One participant suggested that UK courts tend to ‘downplay’ the intrusive implications of police initiatives for human rights, despite landmark Strasbourg or domestic ECHR cases, e.g. Marper or Bridges (3, 11). The second point addressed the Freedom of Information Act (FOIA), which was consistently regarded across groups as an essential (though often frustrated) support for transparency in this context. Here, the reference was to analyses indicating falling government responsiveness to FOIA requests (Cheung 2018).

While discrimination and equality problems were raised frequently as relevant issues across all group discussions, it was remarkable that few of the examples, apart from Bridges (3) and Home Office Streaming (4)Footnote9, directly engaged relevant legislation as a primary basis for contestation. Various participants suggested that there is strong potential to do so in future legal actions, subject to the emergence of cases with relevant claimant characteristics.

Private law issues arose across the groups but were discussed most extensively in the Education and Finance groups (although the Post Office Horizon example also involved contractual issues (2)). The main points in question involved consumer protection in contract, although the Finance group also touched on misrepresentation issues (22). Conceptually, this connected to references in the Education group to the validity of consent to data processing (17).

Access to justice. There was extensive reference across all groups to problems of legal standing and to the law’s heavy reliance on the notion that harms are individual and that rights of action are normally exercised individually (see for estimates of number of cases against estimated number of affected people). There was some limited discussion of the need to develop better collective procedures, such as under Art.80 GDPR, although the Court’s refusal in Bridges to consider AFR as a ‘general measure’ indicates the depth of opposition to ideas of collective rights and any associated collective action (DCMS 2021d; Purshouse and Campbell 2021).

The main weight of comments addressed practical limitations on individuals bringing claims as a factor in access to justice. Three factors were considered significant. First, such actions are costly, given the likely need for expert evidence or input as well as the limits of legal aid. Second, there is the problem of motivation. Partly this is because ‘people don’t want to complain’, but also, as discussed in the Education group, because people such as students and employees have no real prospect of achieving satisfactory outcomes given the power imbalances in their broader relationships. Under current regimes, they instead face the real prospect of actively harmful outcomes.

Above all, the groups commented on a third factor as a more distant but severe constraint on individual claims: the general lack of public information. Although these fundamental transparency issues were described in various terms, participants raised three broad aspects:

above all, for ordinary people, achieving basic awareness (disclosure of the existence of a relevant system, notification, knowing what’s happening, overcoming secrecy);

more relevant for technical steps in bringing actions, developing understanding about automated decision-making systems (building on basic awareness, assembling meaningful information, questioning decision-makers’ presentation of systems, revisiting previous authority based on incomplete understanding); and

generally, realising that there is the potential to contest outcomes and standard setting (both in theory and in practice).

The idea was repeatedly raised in the Constitution group that relevant legal action in the UK is increasingly targeted at the second aspect above, in ‘tin-opener’ claims that seek essentially to push beyond cursory, awareness-only disclosure of the existence of relevant ADM initiatives. The Home Office Streaming example (4) was offered as a case in point, with disclosure about the workings of a system leading directly to settlement.

It was interesting to consider parties’ incentives in relation to ‘tin-opener’ claims. Another example suggested that opacity motivates claimants to raise a broader range of potential issues than they might under clearer circumstances (17). The idea was also raised that defendants might be strongly deterred by the associated publicity: comments in the Finance group suggested that reputational risk is a major consideration for financial organisations, driving a preference for settlement out of court.

Effectiveness of outcomes. There were divergent views across the group discussions on the effectiveness of outcomes from legal actions. In terms of litigation, 2020 was highlighted as a positive year because of notable successes in Bridges, Streaming and A-levels (3, 4, 5). One Constitution group participant said:

I think one big outcome is that the government lawyers and the civil service side are starting to realize the importance of these considerations as well when they're relying on this technology. And that [goes to the] point about bias towards automation. I think government actors are now considering this and the prospect of challenges before they overly rely on stuff.

Perspectives in the other groups were less upbeat about outcomes to date. There were comments about the limitations of relevant judicial processes, which address harms only retrospectively and generate narrow outcomes on individual claims. In the Criminal Justice and Health groups especially, judicial review was seen to offer insufficient scope to challenge system inaccuracies, discrimination or situations in which decision-makers simply assert dubious factual claims about systems. Technology was seen to move too fast and too flexibly, and thus to be able to evade legal constraints; examples included DeepMind simply obtaining data from the US to train its AKI algorithm (15) and Babylon Health presenting its chatbot as advisory rather than medical or diagnostic (14). Legal actions were considered to fuel dissimulation by decision-makers, with organisations making minor changes to systems and then retreating behind veils of secrecy and public ignorance.

Two themes emerged from these more pessimistic comments. The first contrasted public law litigation with regulatory action and private disputes, both of the latter being less visible but probably more common as an avenue for legal action at scale. Although there were specific exceptions, generally participants tended to take a sceptical view of regulators’ appetite to enforce the law strictly or gather information about relevant harms. In the Health group, AI was seen by some to get a ‘free ride’ (especially in administrative applications involving triage, appointments, etc.); in Education, regulators were described as trying to delegate the issues to organisations; and in Finance, it was speculated that there are probably a large number of private complaints which remain unpublished and/or are never labelled as ADM issues.

The second theme emerged from the strong contrast between the Constitution and Criminal Justice groups’ discussions of the outcomes from Bridges (3). The Constitution group considered it a ‘mixed bag’: it remains unknown, pending FOIA requests, whether AFR is on hold or still in use; but Singh LJ’s judgment on PSED is a significant advance. The Criminal Justice group instead focused on how Bridges adds a further example in the sector – with Marper (11) – of contestation working perversely to legitimise authorities’ initiatives (Fussey and Sandhu 2020). Bridges was variously described as helping surveillance practices to develop; provoking guidance explicitly designed to encourage and enable use of AFR (Surveillance Camera Commissioner 2020); enabling ‘gestural compliance’; merely leading to ‘improved procedures’; and winning only ‘minor concessions’. The Criminal Justice discussion expressed frustration at a perceived tendency to be satisfied with ‘winning on a technicality’ while ignoring the gradual erosion of basic rights such as the presumption of innocence (Art.6(2) ECHR). It considered not just how to regulate a particular technology, but whether the technology should be used at all.

Understanding potential harms

There was broad consensus amongst the groups that our understanding of relevant potential harms from recent ADM has started to take shape, although it remains limited. The key cause of harm that all groups were concerned to establish was errors from poor data, algorithms or their use. In addition, each group discussed categorisation or social grouping undertaken as part of decision processes, not just in terms of error risk, but also in terms of a growing sense of alienation amongst human operators as well as subjects of ADM systems. These were seen as problems generated by and requiring solutions from humans rather than being technologically based and capable of technical solutions. Workshop participants tended to consider that by far the greatest risk of harm from ADM systems is operator confusion and incompetence. All groups except for Finance discussed a sense of psychological and cultural aversion to questioning ADM systems. For instance, it took years to establish the extent of system deficiencies in the TOEIC example (1).Footnote10

Most group discussions concentrated on this issue and on other barriers to understanding harms through empirical observations. Some participants, especially in the Health group, considered distinctions between levels of harm (from minimal to very significant) and types of harm (for example, physical injury, material loss, rights-based, etc.). However, most groups were more interested in understanding the ways in which relevant harms are becoming clearer over time. Potential harms were seen to include fundamental issues that may take decades to emerge, for example the possible relevance of data captured during children’s and young people’s education to their future employment prospects. There was a general sense that the law will gradually illuminate more such harms as time passes, with legal actions playing a leading role. Nonetheless, the groups discussed four main obstacles to this gradual progression towards a more balanced approach to system capability:

Intellectual property (IP). In all of the groups except Finance, it was observed that public authorities looking to implement ADM systems are often not entitled to know much about the systems they are using because of suppliers’ ‘aggressive’ commercial confidentiality standards and associated practices. This was repeatedly observed to be a major impediment to ADM-related transparency and therefore accountability. The Constitution group especially considered this to be a major reason why relevant harms are ‘very well hidden’. This logic suggests public procurement practices, including relevant contractual standards, as a potential major focus for efforts to reduce ADM-related harms.

Lack of clear responsibility. ADM harms were often discussed as occurring in ways that involve both boundaries between institutional regimes (e.g. between service audit and medical devices in Health or between assessment standards and content delivery in Education) and incentives to devolve responsibilities to machines (e.g. trying to avoid the cost of large administrative teams in Health scheduling or the potential failures of human judgement in Education grading). This was described in the starkest terms in the Criminal Justice group as ‘agency laundering’, or the delegation of discretionary authority to technology (Fussey, Davies, and Innes 2021). The Education group observed that there are significant silos within organisations as well as between them, indicating that ADM is causing profound confusion. The Health group discussed narratives about technological implementation being used deliberately to distract from other issues, suggesting that the confusion itself can serve other purposes.

Harm diffusion. ADM-related harms were regarded as diffuse in two senses. First, they often affect large numbers of people in ways that are subtle or intangible, or that only relatively infrequently develop into evident detriment. Second, they may aggregate through the interaction of apparently distinct systems into ‘compound harms’ (or even ‘vicarious harms’). In both the Constitution group, with respect to benefit claims over privacy infringement (6, 3), and the Health group, with respect to preoccupation with acute care over social services, participants were concerned that appreciation of ADM-related harms is skewed towards harms for which data are readily available and which are relatively easy to quantify.

Relationships with clear power imbalances. This was a particular focus for discussion in the Education group, which observed that students have no real choice but to accept the terms imposed in relation to systems like those increasingly used for e-proctoring (e.g. 17). However, the issue also appeared in the Constitution (generally in terms of decisions by state authorities), Criminal Justice (mainly in terms of surveillance but also, for example, in pre-trial detention decisions) and Finance groups (where it was doubted that consumers read or understand the terms offered to them).

The picture that emerged from the group discussions on understandings of potential harm was therefore one of regulatory uncertainty rather than of legislative necessity. None of the groups described a clear AI-specific gap in the law. The priority for the discussions was instead that regulatory authorities should clarify and enforce existing law in relation to ADM. Especially in Criminal Justice, Health and Education, the groups discussed a permissive, non-transparent environment in which there are significant gaps between practices and applicable law and regulation. Actors were described as exercising discretion in ADM implementation, for example with police forces gravitating towards AFR but carefully avoiding any data linking that might provide insights into (and therefore responsibilities for) child protection. Regulators were described as pursuing their own preferences (in part because of budget pressures), for example with the ICO seen across the groups as preferring guidance and advisory intervention in cases over energetic enforcement of individual data protection or freedom of information claims.

That said, the general view of the sufficiency (or at least abundance) of applicable law in this area did not discourage some participants from arguing in favour of legal initiatives. As one participant in the Constitution group put it, ‘sufficient and good and best are three very different things’. Two types of overall systemic harm were especially prominent considerations. Firstly, some participants in the Criminal Justice group were worried about the possibility of ‘chilling effects’ in view of the various factors outlined above, with people disengaging as it becomes less and less clear where they might raise suspicions of harm with any expectation of being taken seriously. Secondly, the Finance group discussed periodic chaotic breakdowns as a form of potential harm, based on the experience with algorithmic trading systems and ‘flash crashes’ (21).

Governance processes

The group discussions addressed the institutional environment in which AI technology is being introduced. In the Health and (especially) Education groups, participants’ comments tended to convey impressions of disorder because of uncertainty over regulatory mandates and general confusion over the implications of ADM. In Criminal Justice and Finance, there was a greater impression of order but also of suspicion that relevant authorities (especially police forces) are implementing or authorising the deployment of AI in an uncritical manner. In the Constitution group, participants considered that the government appears to be actively hostile to the key legal mechanisms for AI contestation: judicial review; the Human Rights Act; the Data Protection regime; and Freedom of Information rights. There was also a comment that ADM-related precedents in higher courts are often not applied consistently by individual judges, leading to a dilution of principles (e.g. 3, 9). Overall, the sense was of a highly AI-supportive (though not well-organised) policy environment, tending towards excessive permissiveness that includes disregard for the rule of law and for effective quality control for ADM systems.

The key concern for all groups was the lack of examples in which AI deployment has been discouraged or refused permission on legal grounds, for example, because of assessed risk of harm or persistent organisational non-compliance. This concern was especially elevated in the Criminal Justice group given that relevant decisions are associated with coercive outcomes and the view that legal contestation has so far had very limited outcomes (‘fiddling in the margins’, as one participant put it). The Constitution group also remarked on the poor track record of implementing ADM in the immigration system (1, 4, 8). There were repeated suggestions across groups that ADM should be banned outright in certain contexts (such as for social scoring or in applications involving clearly disproportionate risks to human safety), or at least that it should be clarified that current law does not permit ADM in such contexts.

The groups tended to consider that there is a need for greater attention to and investment in institutional efforts around ADM initiatives, even if that means taking longer to do things properly rather than rushing ahead. For example, New Zealand’s approach to AFR was contrasted with the UK’s approach to date (Purshouse and Campbell 2021). Three main themes were identified as warranting attention in the UK:

The highest priority is for organisations to promote transparency, which means at a minimum notifying people that relevant algorithms are being used. Lack of public information about significant ADM is now becoming a major driver of legal contestation (see Constitution group comments above). All groups (except Finance) stated that DPIAs should be published – this was the clearest and most frequent governance recommendation from the workshop. The Health group also discussed MHRA starting to publish details of its assessments and actions as a positive step towards transparency. In the Finance group, one participant suggested that banks are voluntarily moving to publish digital ethics codes because of their assessment of reputational risks.

Broader risk assessment and mitigation strategies around ADM initiatives were considered desirable, including consideration of legal as well as ethical frameworks and paying special attention to stakeholder engagement. In the Constitution group, Canada’s Algorithmic Impact Assessment Tool (Treasury Board of Canada Secretariat 2021) was contrasted favourably with the ‘litany of frameworks and guidelines that the UK government’s promulgated’. The Criminal Justice group considered lessons from the ‘West Midlands’ model in some detail (Oswald 2021) and an end-to-end approach was recommended (beyond the conventional before-and-after assessment); it was observed to be strange that intelligence agencies are subject to closer scrutiny than the police in the UK in terms of ADM-related surveillance.

Ultimately, for many, the objective was seen to be improved ADM design standards. The example of regulatory endorsement of ‘Privacy by Design’ by the Spanish data protection regulator was offered in the Education group (AEPD 2019). Participants in the Health and Education groups in particular saw the greatest potential for AI in designs capable of supporting currently applicable standards and systems, building on current checks and balances rather than bypassing them.

The group discussions paid less attention to the means through which policymakers might stimulate the regulatory and institutional environment to develop these themes in practice. Some groups considered that consumer protection mechanisms are more relevant than is currently recognised, especially in Health (potentially not just MHRA but along the lines of the Advertising Standards Authority, to address inflated claims about technological capability) and in Finance (perhaps expanding consumer finance regimes on unfair contract terms or focusing the Financial Ombudsman Service on ADM-related concerns).

The issue of regulatory alignment and coordination was also raised repeatedly. This is the well-rehearsed idea, revisited by the House of Lords in 2020 for example (House of Lords Liaison Committee 2020), that the large and growing number of relevant official bodies and mandates in the UK makes the question of their cooperation especially important. There are of course many options for pursuing this objective, including hierarchical and collaborative models. The Health group questioned whether some form of ‘superstructure’ should exist in the sector. The Finance group highlighted the Digital Regulation Cooperation Forum (DRCF), formed in 2020 as a joint initiative of three regulators concerned with ‘online regulatory matters’ (DRCF 2021). Only the Constitution group contemplated the role of Parliament in detail. The comparative absence of this element from the other groups’ discussions suggested that institutional arrangements for democratic accountability or legislative oversight of ADM may be relatively neglected in the UK.

Discussion

The findings broadly validate the methods applied for this research. The workshop discussions provided an opportunity to gather information about the dispersed, formative processes of law-based governance of AI in the UK both across a range of legal domains and across various fields of social activity. The information was not objective; the discussions were explicitly partial (the Education group focused on tertiary education, for example). One particular issue with the composition of some groups (Constitution, Criminal Justice and Education) was the lack of a ‘public servant’ perspective in the sense of a participant with current first-hand experience of the government (or ‘defendant’) position in legal actions. It is also worth reiterating that the ‘contestation’ framing of the workshop deliberately encouraged critical analysis, which therefore opens the findings to charges of undue negativity.

Nevertheless, the chosen approach offered an unusually broad experience-based insight into the practical implications of AI in law, drawing on the views of practitioner professionals as well as those of researchers. It therefore addressed the significant contemporary UK AI policy agenda from the fresh perspective of a group with some practical experience of relevant harms and associated reasons for mistrust.

The remainder of this section presents the four main observations which, in the authors’ view, are suggested by the Findings of the workshop. A summary statement is followed in each case by reflections outlining why the authors consider the observation appropriate and significant.

AI-related decision-making

Poor-quality public policy is starting to undermine the prospect that the UK will be able to take full advantage of AI technology.

It was striking that the groups spent more time discussing the contextual processes of design and deployment around AI systems than considering the specific problem of understanding their logic. This was evident in their clear preference for the concept of ADM over AI, which contrasts with some of the priorities in computer science. Although ‘black box’ AI opacity (Knight 2017) was considered relevant, the focus here was on ‘process transparency’ (processes surrounding design, development and deployment) more than ‘system transparency’ (operational logic) (Ostmann and Dorobantu 2021).

System transparency is important, notably in helping explanations for people working with systems in organisations seeking to deploy ADM (supporting human intervention as mandated in the GDPR). But process transparency issues extend far beyond questions of machine learning, novel forms of data or even automation, for example involving initial decisions about where and how to try to involve AI techniques in existing processesFootnote11 or affecting external stakeholders who may never have any direct interaction with relevant machine systems. So the policy challenge is best described generally as ‘AI-related decision-making’.

The discussions accordingly suggested to the authors that an implementation gap (or policy failure) is starting to emerge in UK AI policy, as actual implementation output increasingly contrasts with the intended ideal (Hupe and Hill 2016). On the one hand, current UK policy material tends to incorporate the law (especially data protection) as a relatively minor consideration in neat, rational ethical-AI design processes (Leslie 2019). Technology is idealised as a problem for law, for example in terms of the implications of technological change for the future of the professions (Susskind and Susskind 2015). This is an appealing approach which fits nicely with emphasis on the apparent ‘power and promise’ of AI as a transformative technology (Royal Society 2017).

The workshop discussions, on the other hand, indicated that at least some lawyers see the issues from the opposite side, less considered in current UK policy documents: the law as a challenge for new technologies and for initiatives justified on technological grounds. In common with some historians (Edgerton 2007), they tend to regard claims of novelty or transformation as superficial. From this point of view, AI is one of many expressions of long-running governance processes, which we should consider subjecting to applicable norms.

This main observation from the workshop is more immediate and tangible than technological visions in policy documents. At least in a significant number of applications in the emergent track record, recent ADM implementation in the UK appears to be poor quality in that relevant system uses are causing a diversity of harms to large numbers of people. Not all, or so far even most, of these harms arise directly from shortcomings in AI technology itself. Instead, they arise from irresponsible decisions by implementing organisations. And with the passage of time, understandings of harm in law are evolving so that issues previously understood almost exclusively in terms of data protection are now seen also to engage discrimination law, public administrative law, consumer protection standards, etc.

At least if we take social failures as seriously as mechanical failures as a design consideration (Millar 2020), this suggests a need to re-evaluate assumptions and practices. It challenges assumptions about reduced reliance on the ‘domain knowledge’ that proved so essential for expert systems (Confalonieri et al. 2021), for example, or the idea that technology can somehow liberate decision-makers from well-established obligations of transparency and public engagement. Much of the workshop was preoccupied with basic quality standards such as taking steps to inform external stakeholders that a system exists. The discussions implied that it is useful to start from relatively simple norms, like active communications describing an algorithm, its purpose and where to seek further information (BritainThinks 2021), or systematic record-keeping (Cobbe, Lee, and Singh 2021; Winfield and Jirotka 2017). AI needs to be encased in high-quality AI-related decision-making to achieve public legitimacy.

Understanding legal contestation

Law and legal processes offer legitimate channels for public participation in efforts to implement ADM and the sense that they are being marginalised in the UK is provoking resistance.

The workshop discussions provided insight into understandings of legal contestation. It is well-recognised, at least from a socio-technical perspective, that decision processes demand a great variety of explanations at different times and for different reasons (Miller 2018). But whereas much attention regarding the role of the law is understandably focused on discrete examples of legislative provisions (especially the GDPR), the workshop was concerned with actions in the courts and the harder task of trying to understand regulatory dynamics and other broader social manifestations.

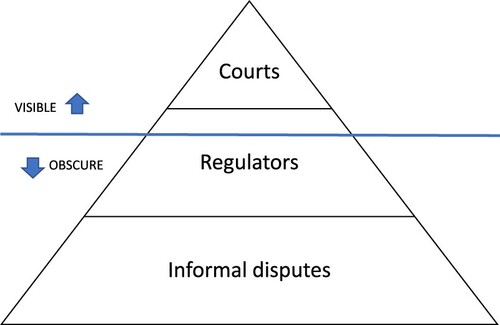

The groups all discussed legal contestation in terms capable of (highly simplified) representation as an iceberg, as shown in the figure above. At the tip, there are court processes which are relatively few in number but visible (and which therefore make up the majority of the numbered examples). Below there are more frequent but less consistently publicised regulatory actions, for example resulting from complaints from members of the public or bodies exercising statutory duties (e.g. example 15). Finally there is the lowest level, comprising the greater mass of ‘informal disputes’, which might include, for example, people complaining to a service provider but not escalating to formal legal mechanisms. These informal disputes are therefore obscure from a legal perspective, in that legal standards are not applied to circumstances in a supervised manner and compliance is not logged.

Each of the group discussions indicated concern about growth of ADM-related interactions in the underwater part of this iceberg. At the level of informal disputes, the groups all talked about mass harms through interactions with systems such as NHS appointments booking, welfare assessments and online banking. Although it was difficult to offer empirical evidence beyond individual cases, many participants offered informal anecdotal evidence or reported other causes for concern.Footnote12 There was interest, for example, in the ways in which sensitive traits can be discovered from non-sensitive data (Rhoen and Feng 2018).

At the level of regulators, the issue was again obscure but indicated by the broad range of organisations considered relevant by the groups. Regulators were considered slow to investigate and publicise ADM-related harms in ways that exposed relevant issues. They were also considered to be hampered by siloed mandates. There was a sense of suspicion in some groups, in the words of a recent policy paper on digital regulation, that the current ‘vision for innovation-friendly regulation’ places greater emphasis on ‘effective mechanism for communicating government’s strategic priorities’ and a ‘deregulatory approach overall’ than it does on genuinely supporting effective coordination to clarify and enforce the law through collaborative regulators’ initiatives like DRCF (DCMS 2021b). There is a confusing array of formal policy documents; for example, it seems that a Data Strategy, an Innovation Strategy, a Digital Strategy and a Cyber Strategy will sit alongside the AI Strategy. Dynamic policy of this kind combines with perceptions that regulators have relatively wide discretion to pursue preferences (see Findings above on the ICO, for example). Especially where trust is lacking, it may quite readily seem that the government is informally discouraging regulators from proactive investigation of harms and enforcement.

Ultimately, the workshop focused attention on whether and how broader contestation dynamics are reflected in the above-water, visible part of the iceberg. Participants consistently expressed curiosity about this point as an important open question. Although significant variations in perspective and motivation were apparent between sectors and participants, the groups tended to agree that the issues are slowly starting to emerge in formal justice systems in ways that are uneven and difficult to predict. One way to understand this is as a confirmation that the law has indeed been unduly overlooked in UK AI policy and implementation. Issues of ‘process transparency’ (see above in this Discussion) and accountability for ADM necessarily extend to wider social issues like political engagement and exclusion, as well as including significant questions about access to justice.

As an aside, it is interesting to consider the relationship of legal transparency to other forms of transparency. Of course, legal actions are not the only medium through which relevant disputes may become visible; for example, there are also journalism and social networking platforms. The workshop discussions did not address the media relationship directly. However, the relationship did appear in discussions in various ways, for example as a means of publicising legal actions or, implicitly, as an alternative that people will use if legal channels are not available to them. This relationship, along with authorities’ responses to publicity, may be an important explanatory factor in the trust dynamics proposed below.

In any case, the workshop indicated that ignoring or downplaying the role of law risks increasingly implicating AI as a ‘legal opportunity’ in broader social dynamics (Hilson Citation2002). Legal opportunities are circumstances in which social movements can, and do seek to, pursue their objectives through legal action. Civil society groups ostensibly focused on issues apparently unrelated to this specific technology, such as social deprivation, equality of opportunity or access to finance, can increasingly find common cause in law over the consequences of automation. Public interest litigation, perhaps especially when ‘crowdsourced’ (Tomlinson Citation2019), can be expected to flourish under these conditions. Arguably, resistance is a defining feature of the ‘world of the law’ (Bourdieu Citation1987). It should therefore come as no surprise that legal professionals take an interest in perceived attempts to ignore an apparently growing body of actual and potential harms.

Public values in ADM

Regrettably for public policymakers in the UK, it is the state and not the private sector that is increasingly associated with examples of apparent ADM-related harm and legal contestation.

Conceptually, the distinction between public and private (or state and non-state) has long been abandoned in favour of a polycentric analysis of governance systems (Black and Murray Citation2019). But the workshop suggested that these old distinctions may remain valid for perceptions of legal contestation and its relationship to trust. In this sense, the fact that the workshop discussions mainly addressed public applications of ADM may reflect relative levels of transparency and accountability rather than prevalence. ADM trends in public systems, especially in criminal justice (as noted in the Background), attracted critical commentary in the UK even before the Bridges appeal judgment (The Law Society Citation2019). Similar criticisms can be seen in the US, for example (Liu, Lin, and Chen Citation2019). The Constitution group were also concerned about immigration systems, although they might equally have criticised ADM initiatives in local government (Dencik et al. Citation2018). However unsatisfactory these examples are, they are at least relatively prominent because of higher levels of scrutiny and accountability in the public sector. The iceberg discussed above is at least partly in view, and its importance can be debated.

The lack of private-sector examples of ADM contestation was less immediately noticeable but very striking, given that it is reasonable to suppose that AI applications are more common in the private sector. This contrast was most evident in the Finance group discussion, which focused on private trading and credit activities. The group speculated that many people are probably not well served by ADM in the sector. But to the extent that the group considered people as decision subjects, it regarded them as potentially incapable of engaging; in the words of one participant, relevant systems are ‘very remote from ordinary people’. It was remarkable and worrying that the one private-sector case pursued through the courts in the UK (Post Office Horizon, 2) was also the starkest example of over-reliance on machine systems. It has prompted the simple observation that the courts should not presume that computer evidence is dependable (Christie Citation2020).

Although each of these fields has the potential to generate policy reflections (for example, about effective market regulation or civil service standards), the policy reflections become sharpest at the public-private intersection where ADM systems are procured. In many of the cases it is unclear who the ultimate decision-makers were (or what they were told or understood about the relevant ADM or other systems). Nevertheless, as the Committee on Standards in Public Life observed, it is a practical reality that governmental authorities purchase most of their computer systems from the private sector (Committee on Standards in Public Life Citation2020). The idea that the government may not only be failing to regulate ADM but even exploiting internal opacities to evade scrutiny is at the heart of one of the most recent and contentious examples in . In this example of the NHS Covid datastore (16), campaigners have successfully challenged the UK government’s lack of disclosure in relation to a Covid-19 analysis contract with Palantir, but it remains unclear exactly what decisions are intended to result from the processing of routinely collected NHS data (BBC Citation2021b; Mourby Citation2020).

This suggests that there is political value in policy initiatives capable of distancing the state from private technology companies, for example by placing stronger emphasis on the government’s role in establishing market standards, by addressing its role as a ‘taker’ of IP standards in procurement contracts, or both.

The vicious cycle of mistrust

Considering legal contestation of ADM illuminates a specific, systemic feedback problem which is negatively affecting UK public trust in relevant technology and which requires a fresh approach.

The workshop discussions indicated that trust dynamics are operating in unintended ways. Part of the original idea of public trust in government AI, expressed in documents like the Government Office for Science’s 2016 paper (Government Office for Science Citation2016), was to develop a virtuous cycle: appropriately transparent and legally compliant systems implementation would engender trust, in turn creating encouraging conditions for organisations to be open about relevant initiatives. This virtuous cycle is represented at the left of . In theory, it might also include constructive design feedback from legal actions into ethical or responsible innovation processes.

The workshop discussions suggested, however, that a vicious cycle has taken hold, as represented at the right of : as more examples emerge of systems that do not comply with the law, trust is eroded and implementers become less motivated to be transparent. Additionally, law tends to be separated from, and deprecated in, ethics and responsible innovation processes as well as policy.

A sense of governmental haplessness has developed in the UK as authorities underestimate legal constraints, for example in the ways in which ADM adoption might in itself constitute unlawful fettering of discretion (Cobbe Citation2019) or may inadvertently expose implicit policy trade-offs (Coyle and Weller Citation2020). It is difficult to cherry-pick aspects of legal and regulatory feedback, for example embracing the legitimating effects while seeking to downplay the pressure placed on implementers to reinterpret regulatory frameworks (Fussey and Sandhu Citation2020). And, as Prof. Pasquale put it in the workshop’s opening conversation, policymakers trying to embrace a technology-inspired ‘move fast and break things’ approach may feel frustrated when legal principles intended to prevent harm to people seem to get in the way. As well as ‘clamping down’ on public interest litigation which uses mechanisms like judicial review (Harlow and Rawlings Citation2016), authorities may simply be incentivised to attempt concealment or dissimulation.

The workshop discussions suggested that UK policymakers could helpfully understand legal contestation as an integral part of these trust dynamics, one that has grown rapidly and is expected to continue growing. Rather than promoting AI, attempts to ignore relevant legal frameworks have simply led to a morass of regulatory uncertainty and unpredictable risks. Given the particular characteristics of legal contestation described above, these considerations have also started to entangle AI policy in apparently quite separate issues such as bulk state surveillance, Judicial Review Reform and debates over Freedom of Information compliance (BBC Citation2021a; Institute for Government Citation2021; Ministry of Justice Citation2021; Murray and Fussey Citation2019). There is no particular reason why ADM initiatives in the UK need to become focal points for controversies of this kind. Where possible, policy initiatives should seek to break the cycle of mistrust.

Conclusion

These reflections on the workshop suggested concrete recommendations for policymakers aiming to foster trust as an enabling factor in UK AI policy, on two levels:

a clear signal of intended general ‘reset’ from vicious to virtuous cycles; and

developing means for ex ante and ongoing, as well as ex post, legal inputs to AI-related decision processes as defined above, in other words the surrounding non-technical aspects that are important to high-quality technology implementation. These should emphasise transparency and compliance-related feedback to mitigate the risk of an increasingly adversarial relationship with relevant legal functions and, instead, seek to exploit their potential to contribute to improved quality standards in ADM implementation.

No single intervention seems likely to be effective in isolation, and there is a wide variety of policy options that may be preferred. What follows is a proposed package of measures suggested by the workshop discussions.

In terms of reset signals, the following recommendations stand out:

Establishing clear limits in law enforcement and migration. Although ADM-related issues within the mandate of the Department of Health and Social Care (DHSC) have attracted more attention since 2020, there are longer-running and apparently more profound problems within the Home Office’s purview (especially in immigration, policing and law enforcement generally). Some form of reform process providing an opportunity to interrupt deteriorating trends (a ‘circuit breaker’, in the language of financial regulation (Lee and Schu Citation2021)) would be useful. For example, these considerations might inform a receptive government response to relevant recommendations of the current House of Lords Justice and Home Affairs Select Committee Inquiry into ‘new technologies and the application of the law’ (Justice and Home Affairs Lords Select Committee Citation2021).

Taking credible steps to strengthen and clarify relevant legal regimes and regulatory mechanisms. After three years, it has become clear that the government missed opportunities when it avoided the recommendations of the House of Lords Artificial Intelligence Select Committee, including its suggestions that the Law Commission make recommendations to ‘establish clear principles for accountability and intelligibility’ and that the National Audit Office (NAO) advise on adequate and sustainable resourcing of regulators (BEIS Citation2018). Another approach may be to develop a ‘Public Interest Data Bill’ along the lines suggested in the AI Roadmap, encapsulating greater transparency, stronger public input rights and proper sanctions on these issues (Tisné Citation2020).

In terms of measures to promote legal contestation inputs as a constructive contribution to improved quality standards in ADM implementation, the workshop suggested the following recommendations:

Introducing a new obligation to publish and consult on DPIAs (Harris Citation2020), which could usefully be piloted in a specific sector (such as tertiary education) and should include resourcing for more effective regulatory support to the organisations initially affected.

Undertaking an evaluation of the FCA Regulatory Sandbox, especially focusing on its performance in promoting relevant technological standards and making recommendations as to its suitability as a model for application in other sectors (e.g. revisiting its use in health).

Reviewing public procurement standards and practices, especially focusing on IP, standard-setting and innovative mechanisms for rebalancing public transparency requirements in relation to commercial confidentiality objectives in due diligence, performance management and dispute resolution arrangements.

Encouraging regulators to review and adapt relevant mechanisms for complaints and engagement with representative bodies (publishing relevant conclusions) in view of the specific risks of ADM, especially in fields of activity in which relevant relationships are imbalanced (law enforcement, education, employment) and/or it has become clear that public awareness of ADM implications is very low (consumer finance, health administration).

Supporting a wider range of regulators to engage in ADM-related investigations and conversations, including for example product safety, consumer protection and standards organisations.

Supporting regulatory cooperation initiatives effectively, both domestically (e.g. DRCF) and internationally (NAO Citation2021b).

These conclusions and recommendations have become more sharply relevant to active UK policy formulation since the workshop, with the government now consulting on data protection reforms (see Footnote 4 above). The authors therefore consider it appropriate to conclude with some final reflections on DCMS’ ongoing data protection consultation (DCMS Citation2021a), as follows:

The proposed data protection reforms do apparently offer some potential for a ‘reset’ from the vicious cycle of mistrust (for example, if compulsory public sector transparency reporting Q4.4.1 is developed into a strong and effective measure including in relation to procurement, or if data protection reforms are accompanied by proper measures beyond data protection Q1.5.19-20).

However, it is far from clear that a reset is intended. The proposed reforms are ‘puzzling’ as a substitution of accountability frameworks (Boardman and Nevola Citation2021). In the context of low trust and growing contestation, ideas for new arrangements will seem vague and unreliable compared with specific plans to remove perceived legal obstacles to the present direction of AI-related decision-making (e.g. the much more detailed proposals at Q4.4.4-7 on ‘public interest’ processing, Q4.4.8 on ‘streamlining’ biometrics matters for the police, and Q4.5.1 on ‘public safety’ justifications). Whatever else, the government is proposing to dismantle accountability mechanisms. For example, it is proposed not just to abolish DPIAs (Q2.2.7-8) but also to remove Art.30 GDPR record-keeping requirements (Q2.2.11). Such measures appear likely to ‘hinder effective enforcement’, despite the claim that the risks will be ‘minimal’.Footnote13