Abstract

Objectives: In the context of women’s health, we examine (1) the role that observational (‘real-world’) studies have in overcoming limitations of randomised clinical trials, (2) the relative advantages and disadvantages of different study designs, (3) the importance of outcome data from observational studies when making health-economic or clinical decisions, and (4) changing perceptions of observational clinical data.

Methods: PubMed and internet searches were used to identify (i) guidance and expert commentary on designing, conducting, analysing, and reporting clinical trials or observational studies, and (ii) supporting evidence of the rapid growth of observational (‘real-world’) studies and publications since the turn of the millennium in the fields of contraception, reproductive health, obstetrics or gynaecology.

Results: The rapidly growing use and validation of large, computerised medical records and related databases (e.g., health insurance or national registries) have played a major part in changing perceptions of observational data among researchers and clinicians. In the past 10 years, a distinct increase in the number of observational studies published tends to confirm their growing acceptance, appreciation and use.

Conclusions: Observational studies can provide information that is impossible or infeasible to obtain otherwise (e.g., impractical, very expensive, or ethically unacceptable). Greater understanding, dissemination, uptake and use of observational data might be expected to drive ongoing evolution of research, data collection, analysis, and validation, in turn improving quality and therefore credibility, utility, and further application by clinicians.

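Chinese abstract (English translation)

Objectives: In the context of women’s health, we examine (1) the role of observational (‘real-world’) studies in overcoming the limitations of randomised clinical trials, (2) the relative advantages and disadvantages of different study designs, (3) the importance of outcome data from observational studies when making health-economic or clinical decisions, and (4) changing perceptions of observational clinical data.

Methods: PubMed and internet search engines were used to identify (i) guidelines and expert consensus on the design, conduct, analysis and reporting of clinical trials or observational studies, and (ii) evidence supporting the rapid growth of observational (‘real-world’) studies and publications since the turn of the millennium in fields such as contraception, reproductive health, obstetrics and gynaecology.

Results: Through rapidly growing use and extensive validation, computerised medical records and related databases (e.g., health insurance or national registries) have played a major part in changing the perceptions of observational data held by researchers and clinicians. Over the past 10 years, the number of published observational studies has increased markedly, which confirms that observational studies are increasingly accepted, appreciated and used.

Conclusions: Observational studies can provide information that is impossible or infeasible to obtain otherwise (e.g., impractical, very expensive, or ethically unacceptable). Greater understanding, dissemination, uptake and use of observational data may drive progress in research, data collection, analysis and validation, in turn improving the quality of research and, consequently, its credibility, utility and further acceptance by clinicians.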

Introduction

Aims

This review examines the role that observational (real-world) studies can and do play in overcoming limitations associated with other study designs, including randomised clinical trials, and discusses the relative advantages and disadvantages of different study designs in the field of gynaecology. It also seeks to understand improvements in both the quality and perceptions of observational versus randomised clinical data, as well as the potential importance of considering outcome data from observational studies when making health-economic or clinical decisions. Furthermore, observational data can be useful when developing guidelines and protocols to inform clinical practice, particularly in the context of gynaecology and reproductive health care. Improvements in study methodology and the completeness of data acquisition, along with the importance of validating data, are also addressed, since historically the quality of observational studies has varied hugely. Randomised clinical trials had similar problems in the early years of their use, especially in developing countries.

What are observational data?

Observational data can be defined as data generated from experience with routine medical care that has been systematically recorded, originally as administrative claims (e.g., insurance/payers’ administration), in electronic medical records (e.g., clinical management in primary/secondary care databases) or national registries (e.g., birth or cancer registries), in a manner that can be used for the purposes of research [Citation1]. The data should be derived from heterogeneous, large, (ideally) unselected populations. An important factor when considering the use of observational data is that acceptable quality standards for the data must have been established for the source(s) from which the data originated [Citation2].

Comparison of clinical trial and observational study designs

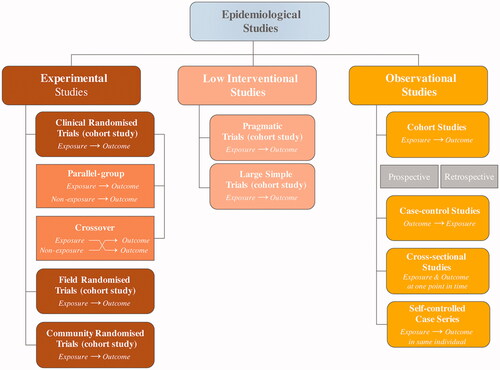

Several trial designs exist (Figure 1). It is important to understand the relative strengths and weaknesses of these clinical trial and observational study types (Table 1). However, a clear-cut distinction does not necessarily exist between pragmatic trials and experimental (clinical) trials; most clinical trials are neither entirely pragmatic nor entirely experimental – they lie on a continuum [Citation3–8].

Table 1. Strengths and weaknesses of different types of randomised clinical trials and observational studies [Citation3,Citation5–8].

Changing perceptions of observational studies versus randomised clinical data

Data derived from randomised clinical trials are often held in higher regard than those from observational studies, with a perception that observational studies are less valid and can inflate positive treatment results or underestimate safety data as compared with randomised clinical trials [Citation9]. However, comparative analyses of observational and clinical study results have demonstrated that data from well-designed observational studies do not consistently or systematically overestimate the magnitude of treatment effects when compared with data from randomised clinical trials on the same topic [Citation9,Citation10].

Evidence levels

‘Good’ and ‘bad’ examples of prospective clinical trials and observational studies exist, depending on a range of variables. For this reason, systematic ratings of evidence levels have been developed, such as that of the Centre for Evidence-Based Medicine (CEBM), which rates evidence from level 1 to 5 (best to worst, with detailed criteria). Recommendations are then graded A–D depending on the level of evidence used (Table 2) [Citation11]. This system ensures consistent verification of data quality and credibility; however, it does have its flaws. It is important to note that a randomised clinical trial should not necessarily be ranked as evidence level 1 simply because of its design; it may be very poorly designed and/or implemented. Furthermore, based on current rating systems, such as the one illustrated in Table 2, observational data will never offer level 1 evidence. Whether this remains appropriate is a matter of debate given the increasing importance and credibility of observational data. In this context, it is noteworthy that recommendations graded below A are less likely to be adopted in either clinical practice or clinical practice guidelines, which means that observational data cannot be effectively deployed at present.

Table 2. CEBM grades of recommendation.

Rationale for growing significance of observational study data

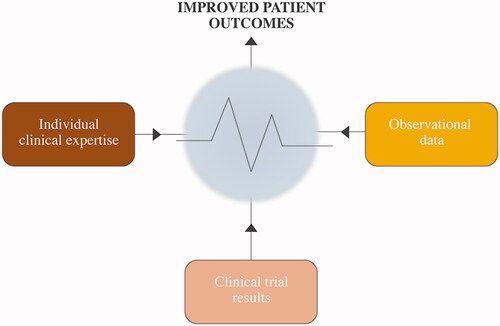

Ideally, evidence-based good clinical practice relies on a combination of clinical experience (both personal and published real-world data) and experimental (clinical) research implemented, for example, via clinical practice guidelines (Figure 2).

As experience accumulates over time, our ability to detect true treatment effects increases. Consequently, as more emphasis is placed on effectiveness in specific populations and health care systems, observational data become increasingly relevant. Furthermore, methodological improvements, including more sophisticated choice of datasets, better statistical methods and development of guidance supporting consistency of approach, mean that observational studies are becoming a much more credible study genre than before, although data quality remains essential [Citation9,Citation12,Citation13].

Methods are being actively developed and implemented in an attempt to further strengthen observational data collection and analysis. Previously (up to approximately the 1990s), many observational studies involved direct contact with participants (e.g., field-based studies) to obtain information. Since then, computerised sources of information (electronic databases) have gradually come to dominate the field. The evolution of large, computerised health databases has contributed to improving the perception, uptake and application (comparative effectiveness research) of observational data. The ability to analyse/query such databases in real time is particularly relevant because rapid responses are increasingly important in managing a wide variety of public health issues, such as risk-benefit of medical or surgical interventions [Citation12–18].

The influence of observational studies has grown in recent years

It is notable that the number of observational studies published has increased markedly in the past 10 years (data not shown), indicating their growing acceptance and appreciation, and perhaps recognition of their value in providing data that complement those from randomised clinical trials. It may also suggest increasing observational research in the fields of contraception, reproductive health, obstetrics and gynaecology. Indeed, it is now understood that observational studies, including various data sources (e.g., disease/hospital registries, primary/secondary care medical records, databases of health-insurance claims, and surveys), can provide information that is impossible or infeasible to obtain otherwise (i.e., impractical, too expensive, or ethically unacceptable) [Citation19].

Table 3 presents circumstances under which observational studies and real-world data sources are valuable and potentially supportive of data from randomised clinical trials [Citation20], along with examples of key trials in the fields of contraception and reproductive health care, and the impact that they have had on clinical practice [Citation21–36]. The first column covers particular requirements that an observational study might address; the second column provides specific examples of studies meeting those requirements; the third column provides insight into how data meeting the requirements might impact on clinical practice, i.e., additional context.

Table 3. Circumstances under which observational studies are valuable and can be supportive of randomised clinical trials.

Limitations and strengths of observational data

Limitations of randomised clinical trials versus observational studies

The strengths and weaknesses of the basic clinical and observational study types presented in Table 1 may be condensed to illustrate the strengths of observational studies versus randomised clinical trials as a whole (Table 4).

Table 4. Limitations of randomised clinical trials versus observational studies.

In general, data collected from patients in real-world settings reflect what physicians see in routine clinical practice (e.g., adherence patterns and training needs), improve understanding of infrequent events, and enable analysis of long-term risks and benefits of given interventions [Citation20].

Inherent limitations of observational studies

Bias and confounders, and their management, are vital considerations in all study types. The most important biases are generally those produced in (i) the definition and selection of the study population, and (ii) data collection. Confounders are those variables that have an impact on the measured outcome independently of the intervention under investigation; in other words, confounding reflects the association between different determinants of an effect (outcome) in the population [Citation37]. Careful study design and analysis are needed to avoid bias and adjust for confounders.

Confounders, and differences between individuals exposed and unexposed to treatment

An investigator is not necessarily directly interested in confounders, but they may affect the results of a study if not taken into account, and can contribute to an observational study receiving a poor evidence rating (see Table 2) [Citation11]. Statistical analyses typically involve three types of variable: exposure, outcome, and confounders. The causal question being investigated usually determines exposure and outcome, but confounders can be unclear; they require identification, and analyses should be adjusted accordingly. An example of a causal diagram (Figure 3) illustrates how confounders can play a role in the epidemiology of birth defects. Advanced maternal age may increase the risk of maternal obesity, which is associated with certain birth defects. However, advanced maternal age also increases the chance of periconceptional multivitamin use (folate supplementation may reduce the risk of neural tube defects) [Citation38].

Figure 3. Causal diagram of factors confounding the relationship between maternal obesity and birth defects. Adapted from Hernán et al. [Citation38].

![Figure 3. Causal diagram of factors confounding the relationship between maternal obesity and birth defects. Adapted from Hernán et al. [Citation38].](/cms/asset/1ba2ea18-b050-404d-80e8-93b42737436b/iejc_a_1361528_f0003_c.jpg)
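The effect of adjusting for a confounder can be illustrated with a small stratified analysis. The following Python sketch is illustrative only: the counts are invented (they are not taken from Hernán et al. or any other cited study), a single binary confounder is assumed, and the Mantel-Haenszel risk ratio is used as one common adjustment method rather than the method of any study discussed here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stratum:
    """Counts for one level of the confounder (hypothetical data)."""
    exposed_events: int
    exposed_total: int
    unexposed_events: int
    unexposed_total: int


def crude_risk_ratio(strata: List[Stratum]) -> float:
    """Risk ratio after pooling all strata, i.e. ignoring the confounder."""
    exposed_events = sum(s.exposed_events for s in strata)
    exposed_total = sum(s.exposed_total for s in strata)
    unexposed_events = sum(s.unexposed_events for s in strata)
    unexposed_total = sum(s.unexposed_total for s in strata)
    return (exposed_events / exposed_total) / (unexposed_events / unexposed_total)


def mantel_haenszel_risk_ratio(strata: List[Stratum]) -> float:
    """Mantel-Haenszel risk ratio, a weighted summary across strata."""
    numerator = 0.0
    denominator = 0.0
    for s in strata:
        n = s.exposed_total + s.unexposed_total
        numerator += s.exposed_events * s.unexposed_total / n
        denominator += s.unexposed_events * s.exposed_total / n
    return numerator / denominator


# Invented counts: the exposure is more common, and the outcome more frequent,
# in the second stratum, but within each stratum the risks are identical.
strata = [
    Stratum(exposed_events=4, exposed_total=200,
            unexposed_events=16, unexposed_total=800),
    Stratum(exposed_events=36, exposed_total=600,
            unexposed_events=24, unexposed_total=400),
]

crude = crude_risk_ratio(strata)
adjusted = mantel_haenszel_risk_ratio(strata)
print(f"crude RR = {crude:.2f}, adjusted RR = {adjusted:.2f}")
```

In this invented example the crude risk ratio of 1.5 falls to 1.0 after stratification, because the apparent association is produced entirely by the unequal distribution of the confounder between exposed and unexposed individuals.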

Confounding by indication

Patients in routine clinical practice who receive drugs, or see a gynaecologist, are quite different from those who do not, whereas patients in the two arms of a randomised clinical trial are to all intents and purposes equal except for the intervention. Confounding by indication is a generalised problem in the real-world setting; it occurs when a variable is a risk factor for a condition among non-exposed individuals and, at the same time, is the reason to receive treatment without being an intermediate step in the causal pathway between the treatment and the condition [Citation39].

The association of hormonal contraception with depression can be used as an example of how steps can be taken to avoid potential confounding by indication. Pregnant women (having no use for hormonal contraception and a potentially cautious attitude towards antidepressant use), as well as those with a history of mental illness, might be excluded from a prospective observational study. Risk factors for being treated for depression can include help-seeking behaviour, contact with the health care system, attitudes of health care professionals and so forth. A statistical approach, such as an analysis comparing each woman with herself for antidepressant use before and after the period of hormonal contraception, would facilitate elimination of many possible confounders considered to be relatively constant over time (e.g., alcohol, smoking habits, body mass index, education, attitudes towards the use of medicine in general, low social status, etc.).
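A within-person comparison of this kind can be sketched as a matched-pair analysis. The Python example below is a simplified illustration, not the analysis used in any cited study: the records are invented, each woman is assumed to contribute one ‘before’ and one ‘after’ period, and the conditional (matched-pair) odds ratio is estimated from discordant pairs only.

```python
from typing import List, Tuple


def matched_pair_odds_ratio(pairs: List[Tuple[bool, bool]]) -> float:
    """Conditional odds ratio from (used_before, used_after) pairs.

    Only discordant pairs are informative: women whose antidepressant use
    differs between the two periods. Characteristics that stay constant
    within a woman cancel out of the comparison.
    """
    after_only = sum(1 for before, after in pairs if after and not before)
    before_only = sum(1 for before, after in pairs if before and not after)
    if before_only == 0:
        raise ValueError("odds ratio is undefined without 'before only' pairs")
    return after_only / before_only


# Invented data: (antidepressant use before, antidepressant use after)
pairs = (
    [(False, False)] * 80   # no use in either period
    + [(True, True)] * 5    # use in both periods
    + [(False, True)] * 10  # use only in the 'after' period
    + [(True, False)] * 5   # use only in the 'before' period
)

print(matched_pair_odds_ratio(pairs))
```

Factors that change over time within the same woman (e.g., age) are not removed by this design and would still need to be handled separately.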

One approach to reduce confounding by indication is to compare drugs with similar indications/contraindications, or to have access to detailed information on treatment indications that allow adjustments in the analysis.

Incomplete data on predictors

Adjustment for confounding factors is mandatory when analysing observational data. Data on all relevant factors must be available, and should be confirmed as being as valid and complete as possible. In this respect, databases of health insurance claims are more prone to selection bias than electronic medical records; e.g., in the USA, patients with health insurance are more likely to be from a higher income bracket and therefore to have received better health care and have a better standard of living than patients without health insurance.

Incomplete data on events

All relevant events should be identified and fully recorded, and all identified events should be confirmed as actually relevant. Validation studies are key here to ensure both the sensitivity and specificity of an investigational strategy.
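As a minimal sketch of how a validation study might summarise the performance of an event-identification strategy, the following Python example computes sensitivity, specificity and positive predictive value from a two-by-two table. The counts are invented for illustration, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ValidationCounts:
    """Search strategy cross-tabulated against a reference standard."""
    true_positives: int    # flagged by the search and confirmed as events
    false_positives: int   # flagged by the search but not confirmed
    false_negatives: int   # confirmed events that the search missed
    true_negatives: int    # correctly not flagged


def sensitivity(c: ValidationCounts) -> float:
    """Proportion of real events that the strategy identifies."""
    return c.true_positives / (c.true_positives + c.false_negatives)


def specificity(c: ValidationCounts) -> float:
    """Proportion of non-events that the strategy correctly leaves out."""
    return c.true_negatives / (c.true_negatives + c.false_positives)


def positive_predictive_value(c: ValidationCounts) -> float:
    """Proportion of flagged records that are confirmed as real events."""
    return c.true_positives / (c.true_positives + c.false_positives)


# Invented counts for illustration only.
counts = ValidationCounts(true_positives=90, false_positives=10,
                          false_negatives=30, true_negatives=870)

print(f"sensitivity = {sensitivity(counts):.3f}")
print(f"specificity = {specificity(counts):.3f}")
print(f"PPV         = {positive_predictive_value(counts):.3f}")
```

With these invented counts the strategy rarely flags irrelevant events (high specificity and positive predictive value) but misses a quarter of the real ones, which corresponds to the two requirements described above.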

Selection procedure

This is particularly important in (but not unique to) case-control studies. Controls should be representative of the population that gave rise to the cases, and must be sampled independently of exposure. It is also important that exposure status is not influenced by individual responses to a drug; selection of prevalent users should be avoided. However, new users should receive long-term follow-up, if relevant to the aims of the study. The French E3N study (Table 3) represents a good example of such follow-up: participating women have received questionnaires every two years since 1990, and the study is ongoing [Citation24,Citation25].

Key indicators of high-quality observational data

Reproducibility, data completeness and predictors

It is essential that evidence obtained from a given data source can be replicated, or is comparable with data from another source (e.g., randomised clinical trials or other observational studies, disease registries or surveys on the same topic). Data completeness is highly important. Data for as many predictors as possible for the condition being studied should be available; such predictors include those of characterisation (e.g., comorbidity, prior drug use, lifestyle factors such as smoking or alcohol use, demographics such as age, gender, body mass index and deprivation), and those of outcome (identification of study events using clear and reproducible strategies based on a combination of diagnostic codes, free text, and laboratory test values, as applicable).

Validation of data

Data validation (the verification of reproducibility or comparability with other studies as described above) is commonly done by comparing data recorded in a computerised database with those in paper medical records or in other databases (e.g., comparison of hospitalisations recorded in a primary care database with those recorded in a secondary care database). This is also the key to confirming the validity of the methodology used to identify the relationship between exposure and study events (outcomes); where possible, exposure should itself be confirmed, for example by relating prescription issue to prescription filling or purchase. The methodological steps of the validation process should be reported clearly and systematically, and should include a description of assumptions and inferences, as well as interpretation of potential under- or over-estimation of the outcome of interest. An example of such validation is that for an observational study of uterine fibroids in the UK, based on data in The Health Improvement Network (THIN), and reported by Martín-Merino et al. [Citation40].

It should be noted that no uniform criteria exist for validating data in a database, although various approaches have been detailed, e.g., for diagnostic coding within the General Practice Research Database (GPRD) [Citation41], for database selection and use in pharmaco-epidemiological research [Citation42], and for quality assessment of health care data used in clinical research [Citation43].

Ascertainment and case validation when using a primary care database in observational studies

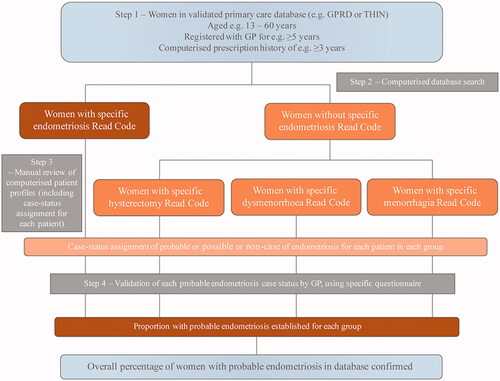

Step 1 – Choosing a primary care database

The GPRD and THIN are examples of large primary care databases in the UK that have acceptable internal and external validities overall, supported by peer-reviewed studies by external researchers as well as data provided by the database owners. As such, they represent sound ‘starting-point’ databases for an observational study [Citation44]. Nonetheless, it should be remembered that even such databases of generally acceptable (and accepted) validity are not automatically valid for all the conditions and diagnoses for which a researcher might wish to use them.

Step 2 – Conducting a computerised search

Having chosen a database with acceptable validity as a first step, care needs to be taken in the second step of conducting an initial, computerised search of that database. Factors to consider include (i) establishing a robust definition of outcome of interest by implementing specific diagnostic algorithms based on codes listed in an appropriate clinical dictionary, and (ii) making use of specific, objective, eligibility criteria [Citation44].
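The two factors above can be sketched as a simple filter over patient records. The Python example below is hypothetical throughout: the record fields, the diagnostic codes (`FIB-1`, `FIB-2`) and the eligibility thresholds are placeholders invented for illustration and do not correspond to any real clinical dictionary or to the criteria of any cited study.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass
class PatientRecord:
    """Minimal, invented representation of a primary care record."""
    patient_id: str
    age: int
    years_registered: float
    diagnosis_codes: Set[str]


def find_candidate_cases(records: Iterable[PatientRecord],
                         outcome_codes: Set[str],
                         min_age: int,
                         max_age: int,
                         min_years_registered: float) -> List[str]:
    """Return IDs of eligible patients with at least one outcome code."""
    candidates = []
    for record in records:
        # (ii) objective eligibility criteria, applied identically to everyone
        eligible = (min_age <= record.age <= max_age
                    and record.years_registered >= min_years_registered)
        # (i) outcome definition based on a pre-specified code list
        has_outcome = bool(record.diagnosis_codes & outcome_codes)
        if eligible and has_outcome:
            candidates.append(record.patient_id)
    return candidates


# Placeholder codes and invented records, for illustration only.
OUTCOME_CODES = {"FIB-1", "FIB-2"}
records = [
    PatientRecord("P1", 34, 3.0, {"FIB-1"}),
    PatientRecord("P2", 52, 6.0, {"FIB-1"}),           # outside the age range
    PatientRecord("P3", 40, 0.5, {"FIB-2"}),           # registered too briefly
    PatientRecord("P4", 29, 2.0, {"OTHER"}),           # no outcome code
    PatientRecord("P5", 45, 5.0, {"FIB-2", "OTHER"}),
]

candidates = find_candidate_cases(records, OUTCOME_CODES,
                                  min_age=15, max_age=49,
                                  min_years_registered=1.0)
print(candidates)
```

The patients returned by such a search are only candidate cases; their status still has to be confirmed in the subsequent review and validation steps.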

Step 3 – Manually reviewing computerised patient profiles (ascertainment)

It is vital to assess whether the validity of results from the initial, computerised search is acceptable (i.e., a confirmation rate close to 90%) or whether more information needs to be obtained. This requires conscientious manual review of the computer profiles of patients identified in the search, including (i) information stored as free text (e.g., physician narratives, diagnostic procedures, referral/discharge letters), and (ii) assignment of case status (i.e., probable, possible, or non-case) for each patient [Citation44].

Free text can contain substantial additional information on diagnoses that is hidden from the computerised search. Manual review of free text potentially allows ‘Read code’ misclassifications to be identified and diagnoses/referrals to be dated accurately [Citation45].

Step 4 – Case status validation by general practitioner (GP)

The assignment of case status in the third step requires validation by the relevant GP for each case. This may be done by sending specifically designed questionnaires to collaborating GPs; in addition, the database owner may be requested to provide anonymised copies of original medical records (e.g., consultant letters, post-mortem reports). Time and expense can be saved by doing such validation on a random sample of the patient records identified in the database, provided that a high positive predictive value is anticipated on the basis of previous ascertainment [Citation44]. Figure 4 illustrates steps 1–4 described above.
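When validation is performed on a random sample, the resulting confirmation rate is an estimate with sampling uncertainty. As a minimal sketch, the Python function below computes a Wilson score interval for the proportion of sampled cases confirmed by the GP. The numbers are invented, and the choice of the Wilson interval is an assumption made for illustration; the source does not specify an interval method.

```python
import math
from typing import Tuple


def wilson_interval(confirmed: int, reviewed: int,
                    z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a confirmation rate (default z gives ~95%)."""
    if reviewed <= 0:
        raise ValueError("at least one record must be reviewed")
    if not 0 <= confirmed <= reviewed:
        raise ValueError("confirmed must lie between 0 and reviewed")
    p = confirmed / reviewed
    z2 = z * z
    denominator = 1 + z2 / reviewed
    centre = (p + z2 / (2 * reviewed)) / denominator
    half_width = (z * math.sqrt(p * (1 - p) / reviewed
                                + z2 / (4 * reviewed ** 2))) / denominator
    return centre - half_width, centre + half_width


# Invented example: 90 of 100 sampled cases confirmed by the GP.
low, high = wilson_interval(confirmed=90, reviewed=100)
print(f"confirmation rate = 0.90, interval = {low:.3f} to {high:.3f}")
```

A wide interval would suggest that the sample is too small to support the assumption of a high positive predictive value, in which case more records would need to be validated.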

What guidelines are available for conducting, interpreting and reporting observational studies?

Clinicians, regulators, patients, payers and policy makers will only take observational data into account if the quality is assured. A number of standards and guidelines have been published to support the conduct, analysis and reporting of observational studies and data (see Panel) [Citation2,Citation46–52].

When reading an article reporting results from a clinical trial or observational study, critical appraisal of the information is essential to ensure that the information and data presented are of good quality and fit for purpose. Reporting should be transparent, allowing the reader a thorough and unambiguous understanding of the research (i.e., what was done, what was found, and what conclusions were made), including assessment of the strengths and weaknesses in study design, conduct, and analysis. The STROBE guidance represents a good example of how to approach such assessment [Citation52].

Panel. Standards and guidelines for observational studies.

Study design

- Agency for Healthcare Research and Quality (AHRQ): Developing a protocol for observational comparative effectiveness research [Citation46]

- European Network of Centres for Pharmacoepidemiology and Pharmacovigilance (ENCePP) checklist for study protocols [Citation47]

- ENCePP Guide on methodological standards in pharmacoepidemiology (Revision 5) [Citation48]

- International Society for Pharmacoeconomics and Outcomes Research (ISPOR) Good research practices for retrospective database analysis task force report (Parts I–III) [Citation49–51]

Data interpretation

- The GRACE checklist: A validated assessment tool for high quality observational studies of comparative effectiveness [Citation2]

Data reporting

- The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies [Citation52]

Conclusions

Robust evidence-based practice is essential for ensuring the current and future quality of health care. Owing to the respective strengths and weaknesses inherent to clinical trials and observational studies (involving routine clinical experience), it is highly important to consider a combination of study types when making clinical decisions and, as far as possible, not be reliant on just one type or the other. No study setting is a ‘Holy Grail’ and data derived from different study settings are often complementary.

Clinical decision-making, including the development of guidelines and protocols for patients or clinicians, can be distilled to a systematic approach involving the ‘five As’: Ask the correct question (this might arise due to a recurring problem in the clinic, be raised during a conference, through interaction with colleagues, or while reading journal articles); Acquire information (clinical trial and/or observational study and/or systematic review results) to address the question; Appraise the information in a critical and systematic manner (can the data be validated? What level/grade of evidence is available, i.e. are the data complete and of good quality?); Apply the appropriate evidence in practice (involving clinicians’ consensus and, potentially, guideline or protocol development/implementation); and Assess (analyse) the care provided in a systematic manner to determine its true benefit (including factors such as cost-effectiveness or risk-benefit) for patients, and whether clinicians are complying with guidance/protocol(s) [Citation53].

In recent years, observational study data have become better perceived due to the greater availability of information databases of acceptable quality, and improvements and innovations in methodology [Citation12–18], resulting in increasing numbers of publications and a growing impact on daily practice. Greater understanding, dissemination, uptake and use of observational data might be expected to drive ongoing evolution of real-world research, data collection, analysis, and validation, thereby in turn improving quality and hence credibility, utility, and further application by clinicians.

Acknowledgements

This publication and its content are solely the responsibility of the authors, who received editorial and writing support that was paid for by Bayer AG.

Disclosure statement

The authors, Oskari Heikinheimo, Johannes Bitzer and Luis García Rodríguez, have acted as consultants to Bayer AG and received consultancy honoraria unrelated to the creation of this paper. OH is employed by the Hospital District of Helsinki and Uusimaa, the University of Helsinki and Finnish Medical Society Duodecim. He also serves occasionally on advisory boards or as invited speaker in educational events organised by Bayer Healthcare, MSD/Merck, Actavis, Gedeon Richter, and Sandoz. JB has received honoraria for lectures and participation in advisory boards from Bayer AG, Merck, Libbs, Actavis, Teva, Exeltis, Gedeon Richter, Boehringer-Ingelheim, Vifor, Lilly, Pfizer, HRA, Abbott, Mithra, Pierre Fabre, and Aspen. LAGR works for Centro Español de Investigación Farmacoepidemiológica (CEIFE), which has received research funding from Bayer AG. He has also received honoraria for serving on advisory boards for Bayer AG.

References

- Sherman RE, Anderson SA, Dal Pan GJ, et al. Real-world evidence – what is it and what can it tell us? N Engl J Med. 2016;375:2293–2297.

- Dreyer NA, Bryant A, Velentgas P, et al. The GRACE checklist: a validated assessment tool for high quality observational studies of comparative effectiveness. JMCP. 2016;22:1107–1113.

- CEBM.net [Internet]. Oxford: Centre for Evidence-Based Medicine; [cited 2017 Feb 26]. Available from: http://www.cebm.net/study-designs/

- CHCUK.co.uk [Internet]. Contin: What are pragmatic clinical trials?; 2015. [cited 2017 Mar 17]. Available from: http://www.chcuk.co.uk/wp-content/uploads/2015/11/2015-11-22_Pragmatic-Clinical-Trials_CHCUK1.pdf

- NPCNOW.org [Internet]. Washington: National Pharmaceutical Council. Making informed decisions: assessing the strengths and weaknesses of study designs and analytic methods for comparative effectiveness research. Velentgas P, Mohr P, Messner DA, editors. 2012 February [cited 2017 Mar 19]. Available from: http://www.npcnow.org/system/files/research/download/experimental_nonexperimental_study_final.pdf

- OUP. Oxford; Oxford University Press. In: Smith PG, Morrow RH, Ross DA, editors. Field Trials of Health Interventions: A Toolbox. 3rd ed.; 2015 Jun 1. Chapter 1, Introduction to field trials of health interventions [cited 2017 Mar 19]. Available from: https://www.ncbi.nlm.nih.gov/books/NBK305510/

- Petersen I, Douglas I, Whitaker H. Self controlled case series methods: an alternative to standard epidemiological study designs. BMJ. 2016;354:i4515.

- Cai T, Zheng Y. Evaluating prognostic accuracy of biomarkers in nested case-control studies. Biostatistics. 2012;13:89–100.

- Benson K, Hartz AJ. A comparison of observational studies and randomized, controlled trials. N Engl J Med. 2000;342:1878–1886.

- Concato J. Randomized, controlled trials, observational studies, and the hierarchy of research designs. N Engl J Med. 2000;342:1887–1892.

- CEBM.net [Internet]. Oxford: Centre for Evidence-Based Medicine; [cited 2017 Feb 26]. Available from: http://www.cebm.net/oxford-centre-evidence-based-medicine-levels-evidence-march-2009/

- Hernán MA. With great data comes great responsibility: publishing comparative effectiveness research in epidemiology. Epidemiology. 2011;22:290–291.

- Amarasingham R, Audet AM, Bates DW, et al. Consensus statement on electronic health predictive analytics: a guiding framework to address challenges. EGEMS (Wash DC). 2016;4:1163.

- Andrews EB, Margulis AV, Tennis P, et al. Opportunities and challenges in using epidemiologic methods to monitor drug safety in the era of large automated health databases. Curr Epidemiol Rep. 2014;1:194–205.

- Etheredge LM. A rapid-learning health system. Health Aff (Millwood). 2007;26:w107–w118.

- Hernán MA, Robins JM. Using big data to emulate a target trial when a randomized trial is not available. Am J Epidemiol. 2016;183:758–764.

- Hershman DL, Wright JD. Comparative effectiveness research in oncology methodology: observational data. J Clin Oncol. 2012;30:4215–4222.

- Kaggal VC, Elayavilli RK, Mehrabi S, et al. Toward a learning health-care system – knowledge delivery at the point of care empowered by big data and NLP. Biomed Inform Insights. 2016;8:13–22.

- Dreyer NA. Making observational studies count; shaping the future of comparative effectiveness research. Epidemiology. 2011;22:295–297.

- Dreyer NA, Tunis SR, Berger M, et al. Why observational studies should be among the tools used in comparative effectiveness research. Health Affairs. 2010;29:1818–1825.

- Hannaford PC, Iversen L, Macfarlane TV, et al. Mortality among contraceptive pill users: cohort evidence from Royal College of General Practitioners' Oral Contraception Study. BMJ. 2010;340:c927.

- Iversen L, Sivasubramaniam S, Lee AJ, et al. Lifetime cancer risk and combined oral contraceptives: the Royal College of General Practitioners' Oral Contraception Study. Am J Obstet Gynecol. 2017;216:580.e1–580.e9.

- Charlton M, Rich-Edwards JW, Colditz GA, et al. Oral contraceptive use and mortality after 36 years of follow-up in the Nurses’ Health Study: prospective cohort study. BMJ. 2014;349:g6356.

- Fournier A, Berrino F, Clavel-Chapelon F. Unequal risks for breast cancer associated with different hormone replacement therapies: results from the E3N cohort study. Breast Cancer Res Treat. 2008;107:103–111.

- Cadeau C, Fournier A, Mesrine S, et al. Interaction between current vitamin D supplementation and menopausal hormone therapy use on breast cancer risk: evidence from the E3N cohort. Am J Clin Nutr. 2015;102:966–973.

- Virkus RA, Løkkegaard E, Lidegaard Ø, et al. Risk factors for venous thromboembolism in 1.3 million pregnancies: a nationwide prospective cohort. PLoS One. 2014;9:e96495.

- Dinger J, Do Minh T, Heinemann K. Impact of estrogen type on cardiovascular safety of combined oral contraceptives. Contraception. 2016;94:328–339.

- Cea Soriano L, Asiimwe A, García Rodríguez LA. Prescribing of cyproterone acetate/ethinylestradiol in UK general practice: a retrospective descriptive study using The Health Improvement Network. Contraception. 2017;95:299–305.

- Cea Soriano L, Wallander MA, Andersson S, et al. The continuation rates of long-acting reversible contraceptives in UK general practice using data from The Health Improvement Network. Pharmacoepidemiol Drug Saf. 2015;24:52–58.

- Heikinheimo O, Gissler M, Suhonen S. Age, parity, history of abortion and contraceptive choices affect the risk of repeat abortion. Contraception. 2008;78:149–154.

- Tolsgaard MG, Rasmussen MB, Tappert C, et al. Which factors are associated with trainees' confidence in performing obstetric and gynecological ultrasound examinations? Ultrasound Obstet Gynecol. 2014;43:444–451.

- Ludvigsson JF, Ström P, Lundholm C, et al. Maternal vaccination against H1N1 influenza and offspring mortality: population based cohort study and sibling design. BMJ. 2015;351:h5585.

- RCGPOCS. Oral contraceptives, venous thrombosis, and varicose veins. Royal College of General Practitioners' Oral Contraception Study. J R Coll Gen Pract. 1978;28:393–399.

- Leung VW, Soon JA, Lynd LD, et al. Population-based evaluation of the effectiveness of two regimens for emergency contraception. Int J Gynaecol Obstet. 2016;133:342–346.

- Cottreau CM, Ness RB, Modugno F, et al. Endometriosis and its treatment with danazol or lupron in relation to ovarian cancer. Clin Cancer Res. 2003;9:5142–5144.

- Skovlund CW, Mørch LS, Kessing LV, et al. Association of hormonal contraception with depression. JAMA Psychiatry. 2016;73:1154–1162.

- Delgado-Rodríguez M, Llorca J. Bias. J Epidemiol Community Health. 2004;58:635–641.

- Hernán MA, Hernández-Díaz S, Werler MM, et al. Causal knowledge as a prerequisite for confounding evaluation: an application to birth defects epidemiology. Am J Epidemiol. 2002;155:176–184.

- Salas M, Hofman A, Stricker BH. Confounding by indication: an example of variation in the use of epidemiologic terminology. Am J Epidemiol. 1999;149:981–983.

- Martín-Merino E, Wallander MA, Andersson S, et al. The reporting and diagnosis of uterine fibroids in the UK: an observational study. BMC Womens Health. 2016;16:45.

- Khan NF, Harrison SE, Rose PW. Validity of diagnostic coding within the general practice research database: a systematic review. Br J Gen Pract. 2010;60:e128–e136.

- Hall GC, Sauer B, Bourke A, et al. Guidelines for good database selection and use in pharmacoepidemiology research. Pharmacoepidemiol Drug Saf. 2012;21:1–10.

- NIHcollaboratory.org [Internet]. Durham: National Institutes of Health Collaboratory. Assessing data quality for healthcare systems data used in clinical research (version 1.0). Zozus MN, Ed Hammond W, Green BB, et al. 2014 [cited 2017 Apr 18]. Available from: https://www.nihcollaboratory.org/Products/Assessing-data-quality_V1%200.pdf

- García Rodríguez LA, Ruigómez A. Case validation in research using large databases. Br J Gen Pract. 2010;60:160–161.

- Tate AR, Martin AG, Ali A, et al. Using free text information to explore how and when GPs code a diagnosis of ovarian cancer: an observational study using primary care records of patients with ovarian cancer. BMJ Open. 2011;1:e000025.

- AHRQ Publication No. 12(13)-EHC099. Rockville: Agency for Healthcare Research and Quality. In: Velentgas P, Dreyer NA, Nourjah P, Smith SR, Torchia MM, editors. Developing a protocol for observational comparative effectiveness research: a user’s guide; 2013. [cited 2017 Feb 26]. Available from: http://www.ncbi.nlm.nih.gov/books/NBK126190/

- ENCePP.eu [Internet]. London: European Network of Centres for Pharmacoepidemiology and Pharmacovigilance. Checklist for study protocols (revision 3). [cited 2017 Feb 26]. Available from: http://www.encepp.eu/standards_and_guidances/checkListProtocols.shtml

- ENCePP.eu [Internet]. London: European Network of Centres for Pharmacoepidemiology and Pharmacovigilance. Guide on methodological standards in pharmacoepidemiology (revision 5). EMA/95098/2010. [cited 2017 Feb 26]. Available from: http://www.encepp.eu/standards_and_guidances/methodologicalGuide.shtml

- Berger ML, Mamdani M, Atkins D, et al. Good research practices for comparative effectiveness research: defining, reporting and interpreting nonrandomized studies of treatment effects using secondary data sources: the ISPOR good research practices for retrospective database analysis task force report – Part I. Value Health. 2009;12:1044–1052.

- Cox E, Martin BC, Van Staa T, et al. Good research practices for comparative effectiveness research: approaches to mitigate bias and confounding in the design of nonrandomized studies of treatment effects using secondary data sources: the International Society for Pharmacoeconomics and Outcomes Research good research practices for retrospective database analysis task force report – Part II. Value Health. 2009;12:1053–1061.

- Johnson ML, Crown W, Martin BC, et al. Good research practices for comparative effectiveness research: analytic methods to improve causal inference from nonrandomized studies of treatment effects using secondary data sources: the ISPOR good research practices for retrospective database analysis task force report – Part III. Value Health. 2009;12:1062–1073.

- von Elm E, Altman DG, Egger M, et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. J Clin Epidemiol. 2008;61:344–349.

- de Groot M, van der Wouden JM, van Hell EA, et al. Evidence-based practice for individuals or groups: let's make a difference. Perspect Med Educ. 2013;2:216–221.