Abstract

Introduction

The use of laparoscopic and robotic liver surgery is increasing. However, it presents challenges such as a limited field of view and organ deformations. Surgeons rely on laparoscopic ultrasound (LUS) for guidance, but mentally correlating ultrasound images with pre-operative volumes can be difficult. To this end, surgical navigation systems are being developed to support intra-operative understanding. One approach is to perform intra-operative ultrasound 3D reconstructions, whose accuracy depends on the tracking of the LUS probe.

Material and methods

This study evaluates the accuracy of LUS probe tracking and ultrasound 3D reconstruction using a hybrid tracking approach: the LUS probe is tracked from laparoscope images, while an optical tracker tracks the laparoscope. The accuracy of hybrid tracking is compared with full optical tracking using a dual-modality tool. Ultrasound 3D reconstruction accuracy is assessed on an abdominal phantom whose CT is transformed into the optical tracker's coordinate system.

Results

Hybrid tracking achieves a tracking error < 2 mm at distances of up to 10 cm between the laparoscope and the LUS probe. The ultrasound reconstruction accuracy is approximately 2 mm.

Conclusion

Hybrid tracking shows promising results that can meet the required navigation accuracy for laparoscopic liver surgery.

Introduction

The use of laparoscopic liver surgery is increasing, showing better short-term results, better quality of life, and similar oncological outcomes compared with open surgery [Citation1–4]. However, laparoscopic procedures present unique challenges that need to be addressed. Before surgery, surgeons rely on pre-operative images such as Computed Tomography (CT) and/or Magnetic Resonance Imaging (MRI) for surgical planning. To facilitate pre-operative planning, 3D models of organs, including vascular structures and tumors, are extracted from the pre-operative images [Citation5]. Intra-operatively, surgeons rely on laparoscopic video and ultrasound to navigate the organ, mentally linking this information with the pre-operative images. However, the orientation and deformation state of the organ during the procedure differ from those in the pre-operative data due to several factors such as pneumoperitoneum, gravity, instrument manipulation, and breathing [Citation6]. These factors, together with the limited field of view of the laparoscope, make it difficult to link the intra-operative scene with the pre-operative data [Citation7]. In this context, intra-operative CT scans can provide a 3D representation of the surgical scene and can be registered with pre-operative CTs and planning to compensate for pneumoperitoneum. However, the position, orientation, and deformation state of the organ change multiple times during the procedure, so a single intra-operative CT cannot solve the problem; moreover, CT scanners are not common in operating theaters.

To address this challenge, several surgical navigation solutions have been explored in pre-clinical and clinical research. Pelanis et al. [Citation8] proposed a solution using tracked fiducials injected into the liver to compensate for deformations. Other groups have explored biomechanical models registered to the intra-operative scene using laparoscope video (Teatini et al. [Citation9]) or ultrasound (Rabbani et al. [Citation10]). Ultrasound reconstruction techniques have also been investigated [Citation11–15], as they have the potential to overcome the aforementioned difficulties during laparoscopic resection. These techniques generally require pose information from a tracked ultrasound probe.

Optical tracking (OT) and electromagnetic tracking (EMT) are the tracking techniques most commonly used in surgical navigation. However, the laparoscopic ultrasound (LUS) probe has a flexible tip, which makes external OT impractical: markers attached to the handle outside the patient cannot follow the articulation of the tip inside the abdomen. EMT has been used for direct tip tracking [Citation11,Citation16,Citation17], but it has limitations such as a small measurement volume and susceptibility to interference from nearby metallic objects [Citation18,Citation19].

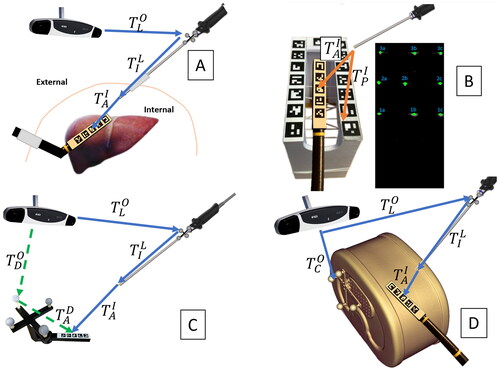

The LUS tip can also be tracked with vision-based methods from the laparoscope images by adding a recognizable pattern on its back side. In this case, the laparoscope itself is tracked by an external OT and serves as a secondary tracking camera inside the abdominal cavity [Citation10,Citation20,Citation21] (Figure 1(A)). We will henceforth refer to this method as ‘hybrid tracking’. This method has the potential to be accurate with a non-invasive setup. However, several factors may limit its accuracy, such as the use of a chain of two tracking arrangements and the fact that the laparoscope's digital camera is not designed for high-precision measurements.

Figure 1. Transformation diagrams: (A) hybrid tracking of a LUS. (B) Free-hand ultrasound calibration. (C) Hand-eye calibration and tracking accuracy evaluation. (D) Ultrasound compounding evaluation.

To the best of our knowledge, no previous work has provided a comprehensive evaluation of both tracking accuracy and ultrasound 3D reconstruction accuracy using the same evaluation setup. Our objective is therefore to systematically assess the overall accuracy under conditions similar to those encountered in laparoscopic surgery. This work includes:

- an accuracy assessment of the hybrid tracking system compared with external OT;

- an evaluation of the accuracy of ultrasound 3D reconstruction from LUS B-mode images, tracked via hybrid tracking, compared with a CT ground truth.

These evaluations aim to show that hybrid tracking can be used as an accurate LUS probe tracking strategy for 3D ultrasound reconstruction. In clinical practice, this would simplify the complex, expertise-dependent mental process of linking pre-operative images and planning with the intra-operative scene by providing accurate intra-operative 3D reconstructions of the organ's inner structures, enabling surgical navigation without the need for a complex setup. Furthermore, accurate intra-operative 3D reconstructions could potentially be used to update 3D models generated from pre-operative CTs, augmenting the intra-operative surgical scene.

Material and methods

Material

The proposed workflow uses an NDI Polaris Vega XT optical tracker (Northern Digital Inc., Waterloo, Canada) and an Olympus ENDOEYE FLEX 3D stereo-laparoscope (Olympus Corporation, Tokyo, Japan) providing left and right images, each with a resolution of 1920 × 1080 and a depth of field of 18–100 mm. For this study, only the left images were used, to avoid corrupted results caused by the timing jitter observed between the left and right channels. The results of this work may therefore be relevant to both stereo and monocular laparoscopes. The tip of the flexible laparoscope is fixed with a 3D printed tube so that it behaves as a rigid scope, as required by the hybrid tracking application. The laparoscope handle is tracked by the OT through an attached optical marker shield. The ultrasound machine is a Hitachi Aloka (Hitachi Ltd., Tokyo, Japan) with an LUS probe and a B-mode image resolution of 640 × 480. A grid of five ArUco markers is attached to the back side of the LUS tip; each ArUco marker is 7.5 mm in size, and adjacent markers are 2.5 mm apart. As a ground truth reference, we produced a tool that can be tracked with two different modalities. The tool was 3D printed with a Prusa i3 MK3S+ (Prusa Research, Prague, Czech Republic) and can be tracked either by the OT, through its set of optical markers, or through vision-based tracking (VBT), by identifying an ArUco marker arrangement identical to the one attached to the LUS tip. We evaluated the tracking accuracy using this tool, as shown in Figure 1(C). For the ultrasound 3D reconstruction accuracy assessment, we employed a CIRS Triple-Modality Abdominal Phantom (CIRS Inc., Norfolk, VA, USA). This phantom contains vascular structures and tumors, and we attached an optical marker set to it to enable its tracking via OT (Figure 1(D)). Additionally, we 3D printed a free-hand ultrasound calibration phantom with three N-shaped wires inserted, which can be tracked via VBT (Figure 1(B)).
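The template corner points $p_A$ used in the tracking-error computation follow directly from the grid geometry given above (7.5 mm markers, 2.5 mm apart). The minimal Python sketch below generates them under an assumed layout: the five markers are taken to lie in a single row on the z = 0 plane, with the origin at the centre of the strip. The actual arrangement and origin of the grid are not specified here, so treat both, and the function name, as hypothetical.

```python
# Hypothetical layout: five square ArUco markers in one row on the z = 0 plane,
# origin at the centre of the strip. Sizes are taken from the text (mm).
MARKER_SIZE_MM = 7.5
GAP_MM = 2.5


def aruco_template_corners(n_markers=5, size=MARKER_SIZE_MM, gap=GAP_MM):
    """Return (x, y, z) corner points in mm, four per marker, each marker in the
    top-left, top-right, bottom-right, bottom-left order used by OpenCV ArUco."""
    total_width = n_markers * size + (n_markers - 1) * gap
    x_start = -total_width / 2.0
    half = size / 2.0
    corners = []
    for k in range(n_markers):
        left = x_start + k * (size + gap)
        right = left + size
        corners.extend([
            (left, half, 0.0),
            (right, half, 0.0),
            (right, -half, 0.0),
            (left, -half, 0.0),
        ])
    return corners


p_A = aruco_template_corners()
print(len(p_A))  # 20 corners for this layout
```

With this layout the number of corners N is 20; a different arrangement of the five markers changes the coordinates but not the count.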

System calibration

Before evaluating the accuracy of tracking and ultrasound reconstruction, it is crucial to calibrate the system. There are two calibration processes involved: hand-eye and free-hand ultrasound calibration.

The hand-eye calibration computes the transformation between the coordinate system of the optical reflectors attached to the laparoscope and the coordinate system of the laparoscope images. The free-hand ultrasound calibration, in turn, computes the transformation between the coordinate system of the pattern on the LUS tip and the coordinate system of the LUS images.
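Once both calibrations are known, hybrid tracking reduces to chaining rigid transforms: the OT pose of the laparoscope reflectors, the hand-eye matrix, the vision-based pose of the probe ArUco grid, and the free-hand ultrasound calibration. The Python sketch below composes 4 × 4 homogeneous matrices to map an ultrasound image point into the OT frame. All numeric poses are hypothetical, and the helper names (`rot_z`, `chain`, `apply`) are illustrative only.

```python
import math


def rot_z(deg, t=(0.0, 0.0, 0.0)):
    """4x4 rigid transform: rotation of `deg` degrees about z, then translation t (mm)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def chain(*transforms):
    """Compose left to right, so chain(A, B, C) maps a point p to A(B(C(p)))."""
    result = transforms[0]
    for T in transforms[1:]:
        result = matmul(result, T)
    return result


def apply(T, p):
    """Apply a 4x4 transform to a 3D point."""
    return tuple(T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3] for i in range(3))


# Hypothetical poses, for illustration only (mm, degrees).
T_ot_lap = rot_z(90, (100.0, 0.0, 0.0))    # laparoscope reflectors -> OT (optical tracking)
T_lap_img = rot_z(0, (0.0, 0.0, 50.0))     # laparoscope image -> reflectors (hand-eye calibration)
T_img_aruco = rot_z(0, (0.0, 0.0, 80.0))   # probe ArUco grid -> laparoscope image (vision-based tracking)
T_aruco_us = rot_z(0, (0.0, 10.0, 0.0))    # ultrasound image -> probe ArUco grid (free-hand US calibration)

T_ot_us = chain(T_ot_lap, T_lap_img, T_img_aruco, T_aruco_us)
print(apply(T_ot_us, (0.0, 0.0, 0.0)))  # approximately (90.0, 0.0, 130.0)
```

Because every link is a rigid transform, errors in any single link (for example the hand-eye matrix) propagate directly into the final ultrasound pose, which is why both calibrations are evaluated before tracking accuracy.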

Hand-eye calibration

The hand-eye calibration matrix is obtained as described in [Citation22], using the dual-modality tool shown in Figure 1(C) as the calibration target. Using the same ArUco marker arrangement as the target for hand-eye calibration and later for tracking was found to be more advantageous than using the commonly used checkerboard, because certain types of errors cancel out. The dual-modality tool is moved in front of the laparoscope, and around 50 samples are collected uniformly across a volume of approximately 5 × 5 × 10 cm³.

Free-hand ultrasound calibration

Free-hand ultrasound calibration is the process of finding the transformation matrix that links the ultrasound image to the coordinate system of the ArUco grid on the ultrasound probe. Inspired by the work of Carbajal et al. [Citation23], we 3D printed a calibration phantom with three N-shaped wires and a reference ArUco grid, as shown in Figure 1(B). The phantom is tracked by the Olympus laparoscope through an attached ArUco grid sticker. Using the phantom ArUco grid yields a lower calibration error than using optical marker spheres attached to the phantom, because all the tracking is then performed in the laparoscope coordinate system, so the free-hand ultrasound calibration does not depend on the accuracy of the laparoscope hand-eye calibration.

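Wire intersections with the ultrasound image plane (see Figure 1(B)) are estimated as described in [Citation23]. Denoting them by x, they are mapped from the phantom ArUco grid into the laparoscope image coordinate system and then into the ultrasound probe ArUco coordinate system:

$$x_I = {}^{I}T_{P}\, x_P \tag{1}$$

$$x_A = \left({}^{I}T_{A}\right)^{-1} x_I \tag{2}$$

where ${}^{I}T_{P}$ is the pose of the phantom ArUco grid in the laparoscope image, ${}^{I}T_{A}$ is the pose of the ultrasound probe ArUco grid in the laparoscope image, and $x_I$, $x_P$, and $x_A$ are the wire intersections expressed in the camera image, phantom ArUco grid, and ultrasound probe coordinate systems, respectively.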
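N-wire calibration builds on a classic similar-triangle construction: the fractional distance of the diagonal-wire intersection from one parallel-wire intersection, measured in the image, equals its fractional position along the diagonal wire in the phantom. The plain-Python sketch below applies that ratio and then carries the point into the probe ArUco frame as in Equations (1) and (2): first into the laparoscope image frame with the phantom grid pose, then into the probe frame with the inverse of the probe grid pose. It assumes image coordinates already scaled to millimetres and diagonal endpoints `d1`, `d3` known from the phantom design; these names, the phantom geometry, and the poses are hypothetical, and the refinements of Carbajal et al. are not reproduced.

```python
import math


def nwire_diagonal_point(a_img, b_img, c_img, d1, d3):
    """Similar-triangle N-wire estimate of where the image plane cuts the diagonal wire.

    a_img, c_img: image positions (mm) of the two parallel-wire intersections.
    b_img: image position (mm) of the diagonal-wire intersection.
    d1, d3: diagonal-wire endpoints in phantom ArUco coordinates (mm); d1 lies on
            the parallel wire imaged at a_img, d3 on the one imaged at c_img.
    """
    alpha = math.dist(a_img, b_img) / math.dist(a_img, c_img)
    return tuple(p1 + alpha * (p3 - p1) for p1, p3 in zip(d1, d3))


def rigid_inverse(T):
    """Inverse of a 4x4 rigid transform [R | t], i.e. [R^T | -R^T t]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]  # R transposed
    t = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [Rt[0] + [t[0]], Rt[1] + [t[1]], Rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def apply(T, p):
    """Apply a 4x4 transform to a 3D point."""
    return tuple(T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3] for i in range(3))


def translation(x, y, z):
    """4x4 pure-translation transform."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical N: parallel wires along z at x = 0 and x = 20 mm, diagonal from (0,0,0) to (20,0,30).
x_P = nwire_diagonal_point((0.0, 0.0), (10.0, 0.0), (20.0, 0.0),
                           (0.0, 0.0, 0.0), (20.0, 0.0, 30.0))
T_I_P = translation(0.0, 0.0, 100.0)    # phantom ArUco grid -> laparoscope image (hypothetical)
T_I_A = translation(5.0, 0.0, 100.0)    # probe ArUco grid -> laparoscope image (hypothetical)
x_I = apply(T_I_P, x_P)                 # phantom grid -> image frame
x_A = apply(rigid_inverse(T_I_A), x_I)  # image frame -> probe ArUco frame
print(x_P, x_A)
```

Each image yields one such $x_A$ per N; paired with the corresponding image point $x_U$, these form the correspondences used to estimate the calibration matrix.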

Fifty pairs of wire intersections, in the ultrasound image ($x_U$) and in the ultrasound probe coordinate system ($x_A$), are acquired and used to compute the transformation matrix between the ArUco markers and the ultrasound image, using the VTK implementation of Horn's method and removing outliers with random sample consensus (RANSAC). The calibration error is finally computed by reprojecting the sampled points with the obtained calibration matrix.
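As a dependency-free illustration of the same idea, and not the VTK code itself, the sketch below implements Horn's closed-form unit-quaternion solution for the rigid transform between paired points, obtains the dominant eigenvector by shifted power iteration instead of a full eigen-solver, and wraps the fit in a basic RANSAC loop that reports the RMS residual of the inliers as a calibration error. It assumes a purely rigid model (no scaling) with ultrasound pixel coordinates already converted to millimetres; the threshold, iteration counts, and synthetic data are hypothetical.

```python
import math
import random


def horn_rigid(src, dst):
    """Least-squares rigid fit dst ~ R @ src + t via Horn's unit-quaternion method."""
    n = len(src)
    cs = [sum(p[k] for p in src) / n for k in range(3)]
    cd = [sum(q[k] for q in dst) / n for k in range(3)]
    # Cross-covariance S[a][b] = sum_i (src_i - cs)[a] * (dst_i - cd)[b]
    S = [[sum((p[a] - cs[a]) * (q[b] - cd[b]) for p, q in zip(src, dst)) for b in range(3)]
         for a in range(3)]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [[sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
         [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
         [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
         [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz]]
    # Shifting by the Frobenius norm makes every eigenvalue >= 0, so power iteration
    # converges to the eigenvector of the largest eigenvalue: the optimal quaternion.
    shift = math.sqrt(sum(v * v for row in N for v in row))
    M = [[N[i][j] + (shift if i == j else 0.0) for j in range(4)] for i in range(4)]
    q = [1.0, 0.5, 0.25, 0.125]
    for _ in range(300):
        w = [sum(M[i][j] * q[j] for j in range(4)) for i in range(4)]
        norm = math.sqrt(sum(x * x for x in w)) or 1.0
        q = [x / norm for x in w]
    q0, qx, qy, qz = q
    R = [[q0*q0 + qx*qx - qy*qy - qz*qz, 2*(qx*qy - q0*qz), 2*(qx*qz + q0*qy)],
         [2*(qx*qy + q0*qz), q0*q0 - qx*qx + qy*qy - qz*qz, 2*(qy*qz - q0*qx)],
         [2*(qx*qz - q0*qy), 2*(qy*qz + q0*qx), q0*q0 - qx*qx - qy*qy + qz*qz]]
    t = [cd[i] - sum(R[i][k] * cs[k] for k in range(3)) for i in range(3)]
    return R, t


def transform(R, t, p):
    """Apply rotation R and translation t to point p."""
    return [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]


def ransac_horn(src, dst, threshold=1.0, iterations=200, seed=0):
    """Fit random 3-point subsets, keep the largest inlier set, refit on it.
    Returns (R, t, inlier indices, RMS residual of the inliers in mm)."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        idx = rng.sample(range(len(src)), 3)
        R, t = horn_rigid([src[i] for i in idx], [dst[i] for i in idx])
        inliers = [i for i in range(len(src))
                   if math.dist(transform(R, t, src[i]), dst[i]) < threshold]
        if len(inliers) > len(best):
            best = inliers
    R, t = horn_rigid([src[i] for i in best], [dst[i] for i in best])
    sq = [math.dist(transform(R, t, src[i]), dst[i]) ** 2 for i in best]
    return R, t, best, math.sqrt(sum(sq) / len(sq))


# Synthetic example (hypothetical): a planar 5 x 4 grid of image points (mm, z = 0),
# rotated 30 degrees about z and translated, with two corrupted correspondences.
c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
R_true = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
t_true = [5.0, -3.0, 12.0]
src = [(float(x), float(y), 0.0) for x in range(0, 50, 10) for y in range(0, 40, 10)]
dst = [transform(R_true, t_true, p) for p in src]
dst[0][0] += 10.0
dst[7][1] -= 8.0
R, t, inliers, rms = ransac_horn(src, dst)
print(len(inliers), rms)
```

Power iteration is used only to keep the example free of third-party packages; a proper eigen-decomposition or an SVD-based solver would normally be preferred in practice.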

Tracking accuracy evaluation

Figure 1(C) shows the setup for the tracking accuracy evaluation, which involves the following transformations:

- ${}^{OT}T_{R_T}$ is the transformation from the dual-modality tool optical reflectors to the OT coordinate system.
- ${}^{R_T}T_{A}$ is the transformation from the ArUco grid of the dual-modality tool to its optical reflectors. It is computed by least-squares fitting between the ArUco template 3D points and the 3D coordinates of the ArUco grid corners, sampled in the optical marker coordinate system with an optically tracked stylus.
- ${}^{OT}T_{R_L}$ is the transformation from the laparoscope optical reflectors to the OT coordinate system.
- ${}^{R_L}T_{I}$ (laparoscope image to laparoscope optical reflectors) is the hand-eye calibration matrix obtained as described by Lai et al. [Citation22].
- ${}^{I}T_{A}$ is the transformation from the ArUco marker grid of the dual-modality tool to the laparoscope image coordinate system.

${}^{OT}T_{A}^{hyb}$ and ${}^{OT}T_{A}^{opt}$ are the poses of the ArUco grid in the OT coordinate system estimated by hybrid and by optical tracking, respectively:

$${}^{OT}T_{A}^{hyb} = {}^{OT}T_{R_L}\, {}^{R_L}T_{I}\, {}^{I}T_{A} \tag{3}$$

$${}^{OT}T_{A}^{opt} = {}^{OT}T_{R_T}\, {}^{R_T}T_{A} \tag{4}$$

This enables a direct comparison of hybrid tracking with the standard external OT approach.

More than a million samples are acquired uniformly by moving the tool in front of the laparoscope at different inclinations and positions within a sampling volume of around 10 × 10 × 15 cm³. Tracking and video data were temporally synchronized by minimizing the root mean squared error between the tool ArUco grid points $p_A$ transformed by ${}^{OT}T_{A}^{hyb}$ and by ${}^{OT}T_{A}^{opt}$, respectively. The 3D root mean squared error for each sample is computed as:

$$p^{hyb}_{i} = {}^{OT}T_{A}^{hyb}\, p_{A,i} \tag{5}$$

$$p^{opt}_{i} = {}^{OT}T_{A}^{opt}\, p_{A,i} \tag{6}$$

$$e_{RMS} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left\lVert p^{hyb}_{i} - p^{opt}_{i}\right\rVert^{2}} \tag{7}$$

where $p_A$ is the set of 3D template points of the ArUco corners of the target tool and N is the number of corners of the ArUco grid pattern.
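Both the per-sample error and the temporal synchronization compare the template corners mapped by the hybrid pose with those mapped by the optical pose. The Python sketch below computes that per-sample RMS distance and, as a hypothetical synchronization strategy, searches for the integer frame lag between the two streams that minimizes the mean error. Shifting by whole frames is an assumption; the actual procedure may interpolate timestamps instead, and the poses below are synthetic.

```python
import math


def translation(x, y, z):
    """4x4 pure-translation transform (used here only to build test poses)."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def apply(T, p):
    """Apply a 4x4 transform to a 3D point."""
    return tuple(T[i][0] * p[0] + T[i][1] * p[1] + T[i][2] * p[2] + T[i][3] for i in range(3))


def sample_rmse(T_hyb, T_opt, template):
    """RMS distance between the template corners mapped by the hybrid and the optical pose."""
    sq = [math.dist(apply(T_hyb, p), apply(T_opt, p)) ** 2 for p in template]
    return math.sqrt(sum(sq) / len(sq))


def best_lag(hyb_poses, opt_poses, template, max_lag):
    """Hypothetical synchronization: try integer frame shifts of the optical stream and
    return (lag, mean RMSE) for the shift with the lowest mean per-sample RMSE."""
    best = None
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(hyb_poses[i], opt_poses[i + lag])
                 for i in range(len(hyb_poses)) if 0 <= i + lag < len(opt_poses)]
        if not pairs:
            continue
        err = sum(sample_rmse(a, b, template) for a, b in pairs) / len(pairs)
        if best is None or err < best[1]:
            best = (lag, err)
    return best


# Hypothetical data: the tool moves along x; hybrid sample i matches optical sample i + 3.
template = [(0.0, 0.0, 0.0), (7.5, 0.0, 0.0), (7.5, 7.5, 0.0)]
opt_poses = [translation(0.5 * k * k, 0.0, 0.0) for k in range(20)]
hyb_poses = [translation(0.5 * (i + 3) ** 2, 0.0, 0.0) for i in range(15)]
print(best_lag(hyb_poses, opt_poses, template, max_lag=5))  # (3, 0.0)
```

The motion in the example is deliberately non-uniform so that only one lag aligns the streams; with constant-velocity motion several lags could give similar errors.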

Ultrasound reconstruction accuracy evaluation

A CIRS Triple-Modality Abdominal Phantom is used for the ultrasound reconstruction accuracy evaluation. An optical marker set is attached to it to enable its tracking via OT (see Figure 1(D)).

The ultrasound reconstruction algorithm proposed by Berge et al. [Citation15] is used to reconstruct 50 sweeps performed over the phantom. The same algorithm is also used to perform ten multi-sweep reconstructions, each containing three tumors. The imaging depth of the LUS probe is set to 8 cm to capture at least three of the tumors contained in the phantom and part of the vasculature. During the acquisitions, the LUS probe is moved at an average speed of about 5 mm/s. Tumors are segmented using the Segment Editor module of 3D Slicer [Citation24].
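The compounding method of Berge et al. weights contributions with orientation-dependent uncertainty, which is beyond a short example. To show only the basic principle of turning tracked B-mode frames into a volume, the sketch below uses a much simpler, different technique, pixel nearest-neighbour compounding: each pixel is mapped through its frame's image-to-world transform and averaged into the voxel it falls in. Frame poses, pixel positions in millimetres, and the voxel size are assumed inputs, and all values are hypothetical.

```python
import math
from collections import defaultdict


def compound_pnn(frames, voxel_mm):
    """Pixel nearest-neighbour compounding (NOT the Berge et al. method).

    frames: list of (T, pixels), where T is the 4x4 transform from the B-mode image
            plane (mm, z = 0) to the world/OT frame and pixels is a list of
            ((u_mm, v_mm), intensity).
    Returns {voxel index (i, j, k): mean intensity of the pixels that fell in it}.
    """
    acc = defaultdict(lambda: [0.0, 0])  # voxel -> [intensity sum, pixel count]
    for T, pixels in frames:
        for (u, v), value in pixels:
            # Map the image-plane point (u, v, 0) into world coordinates.
            x, y, z = (T[i][0] * u + T[i][1] * v + T[i][3] for i in range(3))
            key = (math.floor(x / voxel_mm), math.floor(y / voxel_mm), math.floor(z / voxel_mm))
            acc[key][0] += value
            acc[key][1] += 1
    return {key: total / count for key, (total, count) in acc.items()}


identity = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
shifted = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.3], [0.0, 0.0, 0.0, 1.0]]
frames = [
    (identity, [((0.2, 0.2), 10.0), ((1.5, 0.2), 20.0)]),
    (shifted, [((0.4, 0.1), 30.0)]),
]
print(compound_pnn(frames, voxel_mm=1.0))  # {(0, 0, 0): 20.0, (1, 0, 0): 20.0}
```

Voxels that receive no pixel are simply absent here; practical compounding methods also fill such holes and, as in Berge et al., weight overlapping contributions rather than averaging them uniformly.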

A CT scan of the phantom with 0.5 mm axial slice thickness is acquired with a SOMATOM Definition Edge CT scanner (Siemens Healthineers, Erlangen, Germany) while the set of optical reflector markers is attached to it. All vessels and tumors are segmented with the same 3D Slicer module. Since the phantom is tracked via OT, we link its CT to the OT coordinate system by segmenting the optical reflectors visible in the CT. The transformation between the CT and OT coordinate systems is computed by least-squares fitting of the centroids of the reflector markers visible in the CT to the reflector marker geometry defined in the NDI description file. All tumors contained in each reconstruction obtained from the ultrasound sweeps are segmented and compared quantitatively with the CT tumor segmentations, which are considered the ground truth. We considered three metrics, computed for each pair of corresponding CT and US tumor segmentations:

- the distance between the center of mass of each ultrasound tumor segmentation and that of its corresponding CT segmentation,

- the Dice score between each ultrasound tumor segmentation and its corresponding CT segmentation,

- the Hausdorff distance between each ultrasound tumor segmentation and its corresponding CT segmentation.
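For voxel segmentations resampled onto a common grid, the three metrics can be sketched as below. This brute-force Python version assumes both segmentations are sets of integer voxel indices on the same grid with a known spacing in millimetres, and it computes the Hausdorff distance over all voxels; dedicated tools typically use surface points and distance transforms, so values may differ from those of the software used in this study. The example "tumors" are hypothetical.

```python
import math


def to_mm(voxel, spacing):
    """Voxel index -> position in mm."""
    return tuple(voxel[k] * spacing[k] for k in range(3))


def center_of_mass_mm(voxels, spacing):
    """Centroid (mm) of a set of voxel indices on a grid with the given spacing."""
    n = len(voxels)
    return tuple(sum(v[k] for v in voxels) * spacing[k] / n for k in range(3))


def dice(a, b):
    """Dice score 2|A & B| / (|A| + |B|) of two voxel sets on the same grid."""
    return 2.0 * len(a & b) / (len(a) + len(b))


def hausdorff_mm(a, b, spacing):
    """Symmetric Hausdorff distance (mm) over all voxels, brute force O(|A||B|)."""
    pa = [to_mm(v, spacing) for v in a]
    pb = [to_mm(v, spacing) for v in b]
    d_ab = max(min(math.dist(p, q) for q in pb) for p in pa)
    d_ba = max(min(math.dist(q, p) for p in pa) for q in pb)
    return max(d_ab, d_ba)


# Hypothetical 2 x 2 x 2 voxel "tumors" on a 0.5 mm grid, offset by one voxel along x.
spacing = (0.5, 0.5, 0.5)
ct = {(x, y, z) for x in range(2) for y in range(2) for z in range(2)}
us = {(x + 1, y, z) for (x, y, z) in ct}
com_dist = math.dist(center_of_mass_mm(ct, spacing), center_of_mass_mm(us, spacing))
print(com_dist, dice(ct, us), hausdorff_mm(ct, us, spacing))  # 0.5 0.5 0.5
```

The example also illustrates the size sensitivity of the Dice score: a one-voxel (0.5 mm) shift halves the Dice score of this tiny object, while the two distance metrics stay at 0.5 mm.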

Additionally, for each multi-sweep ultrasound reconstruction, we computed the rigid transformation between the reconstruction and the CT by least-squares fitting of the centers of mass of the three tumors it contains to the centers of mass of the corresponding tumors in the CT. The absolute rotational and translational errors for each multi-sweep are extracted from this rigid transformation matrix.
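Extracting rotational and translational errors from a rigid transform requires choosing an Euler-angle convention, which is not stated here. The sketch below assumes $R = R_z(\text{yaw})\,R_y(\text{pitch})\,R_x(\text{roll})$, decomposes each 4 × 4 matrix accordingly, and summarizes the absolute parameters over several multi-sweeps as mean and sample standard deviation (also an assumption). The two example registrations are hypothetical.

```python
import math
import statistics


def matmul3(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def matrix_from_euler_zyx(roll, pitch, yaw, t):
    """4x4 rigid transform with R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (degrees) and translation t."""
    cr, sr = math.cos(math.radians(roll)), math.sin(math.radians(roll))
    cp, sp = math.cos(math.radians(pitch)), math.sin(math.radians(pitch))
    cy, sy = math.cos(math.radians(yaw)), math.sin(math.radians(yaw))
    Rx = [[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]]
    Ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    Rz = [[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]]
    R = matmul3(Rz, matmul3(Ry, Rx))
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def euler_zyx_from_matrix(T):
    """Inverse of the above, valid away from pitch = +/-90 degrees.
    Returns ((roll, pitch, yaw) in degrees, (tx, ty, tz))."""
    pitch = math.asin(max(-1.0, min(1.0, -T[2][0])))
    roll = math.atan2(T[2][1], T[2][2])
    yaw = math.atan2(T[1][0], T[0][0])
    return tuple(math.degrees(a) for a in (roll, pitch, yaw)), (T[0][3], T[1][3], T[2][3])


def abs_parameter_summary(transforms):
    """Mean and sample standard deviation of |roll|, |pitch|, |yaw|, |tx|, |ty|, |tz|."""
    rows = []
    for T in transforms:
        angles, trans = euler_zyx_from_matrix(T)
        rows.append([abs(v) for v in angles + trans])
    return [(statistics.mean(col), statistics.stdev(col)) for col in zip(*rows)]


# Hypothetical residual registrations of two multi-sweeps to the CT.
T1 = matrix_from_euler_zyx(2.0, -1.0, 3.0, (0.4, -0.2, 0.1))
T2 = matrix_from_euler_zyx(-2.0, 1.0, -3.0, (-0.4, 0.2, -0.1))
print(euler_zyx_from_matrix(T1))
print(abs_parameter_summary([T1, T2]))
```

For the small residual rotations expected here, the choice of convention has little effect on the reported magnitudes, but it should be stated alongside the results.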

Results

System calibration

The mean 3D root mean squared error over the 50 samples used for the hand-eye calibration is 0.6 ± 0.1 mm, while the free-hand ultrasound calibration resulted in a mean 3D error of 0.4 ± 0.1 mm.

Tracking accuracy evaluation

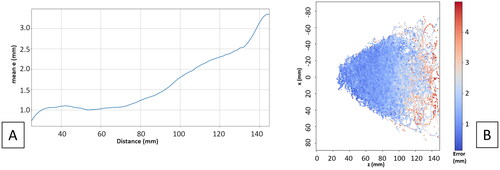

Figure 2 shows the mean 3D tracking error as a function of the distance between the laparoscope and the target tool. For distances of up to 90 mm between the laparoscope and the target tool, the error is < 1.5 mm. Figure 2 also shows that the error has an isotropic distribution, with the working distance being the main factor determining the accuracy.

Ultrasound reconstruction accuracy evaluation

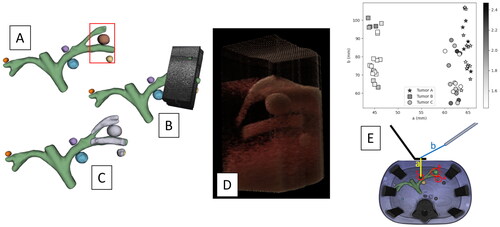

The Hausdorff distance, the distance between centers of mass, and the Dice score between corresponding CT and US tumors are computed (Tables 1 and 2) over the 50 sweeps acquired at different locations of the ultrasound phantom and at different working distances of the laparoscope from the LUS. The error for the deeper tumors appears visually to be larger, or at least more variable, than for the shallower tumors (Figure 3(E)). Table 3 shows the mean and standard deviation of the absolute values of the rigid transformation parameters that register the ten multi-sweeps to the corresponding sub-volume in the CT.

Figure 3. Ultrasound reconstruction results: (A) phantom CT segmentation. (B) Ultrasound compounding. (C) Ultrasound compounding segmentation (light grey). (D) Ultrasound compounding rendering. (E) Distance between center of mass of tumor in ultrasound reconstruction and CT: a is the distance between tumor and LUS probe; b is the distance between laparoscopic camera and LUS probe.

Table 1. Hausdorff and centers of mass distances between CT and US.

Table 2. Dice score.

Table 3. Mean and standard deviation of the absolute rigid transformation parameters (Euler angles and translation vector) between multi-sweep ultrasound reconstructions and the ground truth CT of the phantom.

Figure 3 shows an example of ultrasound reconstruction from the dataset used for this evaluation.

Discussion

This study provides a comprehensive evaluation of both tracking accuracy and ultrasound 3D reconstruction accuracy using the same evaluation setup. It demonstrates that a tracking error of < 2 mm can be achieved when the LUS is filmed from distances of up to 10 cm, which are common in laparoscopic procedures. Furthermore, our ultrasound reconstruction results show that when the LUS is filmed from < 10 cm, the average error compared with a registered CT in a phantom setting is approximately 2 mm.

Other researchers have explored using the laparoscope as a tracking tool for the LUS. Rabbani et al. [Citation10] evaluated the accuracy of ultrasound image positioning using a fixed laparoscope as the reference system, without external optical tracking. Ma et al. [Citation21] assessed the accuracy of ultrasound image positioning by varying the orientation of an optically tracked laparoscope; they performed an ultrasound reconstruction of an artery phantom and co-registered it with a 3D model obtained from a CT scan of the same phantom. Jayarathne et al. [Citation20] evaluated the accuracy of LUS tracking using a tool that can be tracked both by optical tracking and by the laparoscope.

The present study addresses several limitations and provides an end-to-end evaluation workflow for the described hybrid tracking approach. Our findings indicate that the approach meets the accuracy requirements for the intended clinical application, with tracking accuracy and ultrasound reconstruction accuracy at comparable levels. However, the evaluation of tracking accuracy is constrained by an imperfect ground truth, owing to optical tracking and dual-modality tool registration errors, as illustrated by the dashed arrows in Figure 1. Likewise, the ground truth used for assessing ultrasound reconstruction is imperfect, as it is subject to optical tracking and CT-to-optical-tracker registration errors. Consequently, the error values presented in the Results section may be considered an upper-bound estimate of the error in the phantom evaluation.

Because we compare segmentations of volumes obtained from two distinct imaging modalities, it is challenging to identify a single metric that fully characterizes the reconstruction accuracy. The Dice score, for instance, may not be an optimal metric in this context because of its sensitivity to tumor size, particularly for small tumors such as A and C.

The multi-sweep accuracy evaluation (Table 3) shows that fusing multiple ultrasound reconstructions generates larger volumes that are geometrically consistent: the mean residual root mean squared error after applying a corrective rigid registration between the CT and the large ultrasound volume is < 0.5 mm. It would therefore be interesting to evaluate whether a large multi-sweep ultrasound volume could be used to register CTs from different deformation states. In this perspective, our future work will investigate how accurately this multi-sweep approach could be used clinically to register pre-operative CTs to intra-operative ultrasound reconstructions.

Our benchtop accuracy evaluation of the tracking method has some limitations. In a clinical setting, additional error sources are expected, such as organ movements, gas insufflation, and deformations due to LUS pressure. Furthermore, we evaluated only a single type of ultrasound probe and laparoscope, although both are very similar to those commonly used in minimally invasive surgery. Future investigations will therefore focus on evaluating the performance of this tracking approach in real clinical scenarios, using intra-operative CT as the reference standard.

To summarize, this study demonstrates that hybrid tracking can achieve an accuracy of approximately 2 mm in both LUS tracking and reconstructing tumors within a phantom model. These findings instill confidence in the potential of hybrid tracking to meet the clinical navigation accuracy requirements for laparoscopic liver surgery, which the authors estimate to be approximately 6 mm.

Declaration of interest

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Abu Hilal M, Aldrighetti L, Dagher I, et al. The Southampton consensus guidelines for laparoscopic liver surgery: from indication to implementation. Ann Surg. 2018;268(1):11–18. doi: 10.1097/SLA.0000000000002524.

- Fretland ÅA, Dagenborg VJ, Waaler Bjørnelv GM, et al. Quality of life from a randomized trial of laparoscopic or open liver resection for colorectal liver metastases. Br J Surg. 2019;106(10):1372–1380. doi: 10.1002/bjs.11227.

- Fretland ÅA, Dagenborg VJ, Bjørnelv GMW, et al. Laparoscopic versus open resection for colorectal liver metastases: the Oslo-COMET randomized controlled trial. Ann Surg. 2018;267(2):199–207. doi: 10.1097/SLA.0000000000002353.

- Aghayan DL, Kazaryan AM, Dagenborg VJ, et al. Long-Term oncologic outcomes after laparoscopic versus open resection for colorectal liver metastases: a randomized trial. Ann Intern Med. 2021;174(2):175–182. doi: 10.7326/M20-4011.

- Pelanis E, Kumar RP, Aghayan DL, et al. Use of mixed reality for improved spatial understanding of liver anatomy. Minim Invasive Ther Allied Technol. 2020;29(3):154–160. doi: 10.1080/13645706.2019.1616558.

- Teatini A, Pelanis E, Aghayan DL, et al. The effect of intraoperative imaging on surgical navigation for laparoscopic liver resection surgery. Sci Rep. 2019;9(1):18687. doi: 10.1038/s41598-019-54915-3.

- Paolucci I, Sandu R-M, Sahli L, et al. Ultrasound based planning and navigation for non-anatomical liver resections – an Ex-Vivo study. IEEE Open J Eng Med Biol. 2020;1:3–8. doi: 10.1109/OJEMB.2019.2961094.

- Pelanis E, Teatini A, Eigl B, et al. Evaluation of a novel navigation platform for laparoscopic liver surgery with organ deformation compensation using injected fiducials. Med Image Anal. 2021;69:101946. doi: 10.1016/j.media.2020.101946.

- Teatini A, Brunet J-N, Nikolaev S, et al. Use of stereo-laparoscopic liver surface reconstruction to compensate for pneumoperitoneum deformation through biomechanical modeling. VPH2020-Virtual physiological human, Paris, France. 2020.

- Rabbani N, Calvet L, Espinel Y, et al. A methodology and clinical dataset with ground-truth to evaluate registration accuracy quantitatively in computer-assisted laparoscopic liver resection. Comput Methods Biomech Biomed Eng Imaging Vis. 2022;10(4):441–450. doi: 10.1080/21681163.2021.1997642.

- Langø T, Vijayan S, Rethy A, et al. Navigated laparoscopic ultrasound in abdominal soft tissue surgery: technological overview and perspectives. Int J Comput Assist Radiol Surg. 2012;7(4):585–599. doi: 10.1007/s11548-011-0656-3.

- Nakamoto M, Nakada K, Sato Y, et al. Intraoperative magnetic tracker calibration using a magneto-optic hybrid tracker for 3-D ultrasound-based navigation in laparoscopic surgery. IEEE Trans Med Imaging. 2008;27(2):255–270. doi: 10.1109/TMI.2007.911003.

- Jayarathne UL, Moore J, Chen ECS, et al. Real-time 3D ultrasound reconstruction and visualization in the context of laparoscopy. In: Descoteaux M, Maier-Hein L, Franz A, et al., editors. Medical image computing and computer-assisted intervention – MICCAI 2017. Cham: Springer International Publishing; 2017. p. 602–609. doi: 10.1007/978-3-319-66185-8_68.

- Smit JN, Kuhlmann KFD, Ivashchenko OV, et al. Ultrasound-based navigation for open liver surgery using active liver tracking. Int J Comput Assist Radiol Surg. 2022;17(10):1765–1773. doi: 10.1007/s11548-022-02659-3.

- Berge CSZ, Kapoor A, Navab N. Orientation-driven ultrasound compounding using uncertainty information. In: Stoyanov D, Collins DL, Sakuma I, et al., editors. Information processing in computer-assisted interventions. Cham: Springer International Publishing; 2014. p. 236–245. doi: 10.1007/978-3-319-07521-1_25.

- Solberg OV, Langø T, Tangen GA, et al. Navigated ultrasound in laparoscopic surgery. Minim Invasive Ther Allied Technol. 2009;18(1):36–53. doi: 10.1080/13645700802383975.

- Xiao G, Bonmati E, Thompson S, et al. Electromagnetic tracking in image‐guided laparoscopic surgery: comparison with optical tracking and feasibility study of a combined laparoscope and laparoscopic ultrasound system. Med Phys. 2018;45(11):5094–5104. doi: 10.1002/mp.13210.

- Bø LE, Leira HO, Tangen GA, et al. Accuracy of electromagnetic tracking with a prototype field generator in an interventional OR setting. Med Phys. 2011;39(1):399–406. doi: 10.1118/1.3666768.

- Liu X, Plishker W, Shekhar R. Hybrid electromagnetic-ArUco tracking of laparoscopic ultrasound transducer in laparoscopic video. J Med Imaging. 2021;8(1):015001. doi: 10.1117/1.JMI.8.1.015001.

- Jayarathne UL, McLeod AJ, Peters TM, et al. Robust intraoperative US probe tracking using a monocular endoscopic camera. In: Mori K, Sakuma I, Sato Y, et al., editors. Medical image computing and computer-assisted intervention – MICCAI 2013. Berlin, Heidelberg: Springer Berlin Heidelberg; 2013. p. 363–370. doi: 10.1007/978-3-642-40760-4_46.

- Ma L, Wang J, Kiyomatsu H, et al. Surgical navigation system for laparoscopic lateral pelvic lymph node dissection in rectal cancer surgery using laparoscopic-vision-tracked ultrasonic imaging. Surg Endosc. 2021;35(12):6556–6567. doi: 10.1007/s00464-020-08153-8.

- Lai M, Shan C, de With PHN. Hand-eye camera calibration with an optical tracking system. In: ICDSC '18: International Conference on Distributed Smart Cameras. Eindhoven, Netherlands: ACM; 2018. p. 1–6. doi: 10.1145/3243394.3243700.

- Carbajal G, Lasso A, Gómez Á, et al. Improving N-wire phantom-based freehand ultrasound calibration. Int J Comput Assist Radiol Surg. 2013;8(6):1063–1072. doi: 10.1007/s11548-013-0904-9.

- Segment Editor. 3D Slicer version 5.2.2. 2023. https://www.slicer.org.