Abstract

Humans create many risks, ranging from those that are relatively negligible and easily managed to those that are far less manageable and pose a threat to the existence of humanity, the lives of numerous other species and/or the functionality of local and global ecosystems. The literature on the process of anthropogenic risk creation is limited and piecemeal, and there has been far greater emphasis on using reactive approaches to deal with anthropogenic risks (e.g. risk management) than on employing proactive approaches to avert further risk creation (e.g. responsible innovation). An obvious starting point for averting or reducing future anthropogenic risk creation is to better understand the generic features of the risk creation process and to identify points at which that process might be better controlled or averted. To this end, this paper presents a simplified conceptual Model of Anthropogenic Risk Creation (MARC) that provides a broad descriptive overview of the sequential stages that appear to have been evident in several historic and contemporary instances of anthropogenic risk creation. By explicating the stages in the risk creation process, MARC highlights the key points at which more attention could be given (e.g. by innovators, policymakers, regulators) to implementing or encouraging greater risk prevention or limitation. Moreover, MARC can help to stimulate critical debate about the extent to which humanity inadvertently creates adverse conditions, such as those that inhibit human prosperity and sustainability, and the extent to which anthropogenic risk creation is adequately understood, researched and managed. This paper also critically reflects upon related issues, such as risk creation as a learning process and the relative merits of initiatives to promote greater responsibility in research and innovation. Important areas for future research on the anthropogenic risk creation process are discussed.

1. Overview

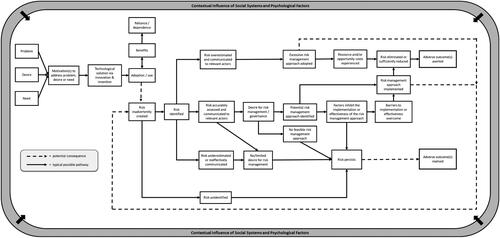

Many risks are created by humans. For example, the risks of climate change, ocean acidification and air pollution have been attributed to the burning of fossil fuels, the risk of species extinction has been associated with the habitat destruction caused by urbanization and deforestation, and many human health risks have been linked to modifiable lifestyle behaviors (Intergovernmental Panel on Climate Change 2014; Lelieveld et al. 2015; Mokdad et al. 2004; Orr et al. 2005; Travis 2003). Indeed, humans have created some risks that now threaten their own existence, the existence of numerous other species and the functionality of local and global ecosystems (Bostrom 2013; Tonn and Stiefel 2013). Nonetheless, humanity is also capable of reducing the extent to which it inadvertently creates risk and of better managing the risks that it has created and continues to create (Bostrom 2013; Matheny 2007; Tilman et al. 2017). However, the effective management of these risks might only be realized if humanity better understands the processes of anthropogenic risk creation and the factors that determine whether such risks can be prevented or effectively mitigated. Here, we define risk as the probability of an adverse outcome, and we define anthropogenic risk creation as the processes by which innovative human actions increase the probability of specific adverse outcomes that can affect humans, other life forms or the natural environment. To help achieve these important risk management aims, this paper presents a simplified descriptive Model of Anthropogenic Risk Creation (MARC) that conceptualizes the generic stages of anthropogenic risk creation (see the figure at the start of this section).

Rather than aiming to model the specific details of one particular anthropogenic risk creation process, MARC provides a broad overview of the sequential stages that appear to have been evident in numerous historic and contemporary instances of inadvertent anthropogenic risk creation. The model depicts the relationships between these stages and visualizes the potential pathways that can be followed from the initial stages, in which a risk(s) is elicited, through to the final stages, in which an adverse outcome(s) is either averted or realized. MARC is predominantly relevant to societal-level risks that threaten humans and the natural environment, but it may be equally relevant to localized or individual-level risks and to other risk domains (e.g. financial markets). Furthermore, because the concepts and relationships depicted in MARC are evident in many aspects of human life and transcend many academic disciplinary boundaries, the model has the potential to benefit a range of individuals and organizations involved in innovation and risk management processes (e.g. inventors, scientists, businesses, policymakers, regulators) and researchers from across disciplines.

This paper initially presents a general overview of MARC that explains its conceptual nature and details its specific aims. Then, the various stages of the model are discussed with specific reference to how those stages have often been evident in past and current anthropogenic risk creation processes. In this part of the paper, these processes are presented in sub-sections (e.g. Solutions, adoption and dependence; Anthropogenic risk creation; Identification and assessment of anthropogenic risk; Communication and management of anthropogenic risks) that correspond to the main sequence of events depicted in MARC. A worked example is then presented to provide a concrete illustration of how MARC can represent the main sequence of events in a risk creation process. Next, the paper discusses related topical issues, such as the extent to which risk creation is a valuable learning process, and whether the risk creation process can be effectively managed through the ‘Responsible Research and Innovation (RRI)’ agenda. Finally, some limitations of MARC are discussed and important areas for related future research are proposed.

2. The aims of MARC

The overarching aim of MARC is to help develop meaningful insights into the processes that underlie inadvertent anthropogenic risk creation and, therefore, to contribute to a future in which such risks might be prevented or more effectively minimized and managed. More specifically, MARC aims to provide four key benefits. First, the extant literature on inadvertent risk creation is relatively small, piecemeal and primarily focused on debating the extent to which risk creation can result in culpability in legal contexts (e.g. Handmer 2008; Husak 1998; Lewis and CICERO 2012). Hence, the literature provides only limited and implicit details of the stages that can occur during the whole risk creation process. To partially address this limitation, MARC provides a unified representation of the risk creation process and highlights the critical stages that have commonly been evident in past and current anthropogenic risk creation processes. Second, MARC explicates the relationships between the various stages of the innovation and invention processes during which risks can develop and manifest. Hence, MARC highlights the points at which more attention could be given to implementing or encouraging risk identification, assessment, communication and/or management. For example, individuals and organizations engaged in innovation and invention processes could ‘map’ the development and implementation of their activities onto MARC and, where there is evidence that an innovation or invention is currently on a pathway that could lead towards the realization of adverse outcomes, instigate corrective actions at these preliminary stages. Third, individuals and organizations involved in the oversight, observation and/or governance of innovations and technological developments (e.g. regulators, journalists, academics, policymakers) could use MARC to track these developmental processes and to identify whether risks are likely to manifest from emerging innovations and technologies or from the ways in which those innovations and technologies are employed. Hence, MARC could help to explicate instances where anthropogenic risk issues may be poorly managed or ignored and could highlight potential pathways towards more timely interventions and risk management processes. Fourth and finally, the model should serve to stimulate critical debate about (a) the role that humanity often plays in inadvertently creating conditions that inhibit human prosperity and sustainability and (b) the extent to which anthropogenic risk creation is adequately understood and researched and could be better managed. It is important to note that the development of MARC was not driven by any fundamental opposition to technological progress but, rather, by a desire to contribute to reducing the risks that might unintentionally emerge from future technological developments. Relatedly, MARC is not intended to depict the anthropogenic process of creating technologies that are deliberately designed to produce adverse outcomes for others (e.g. weapons of war, malicious computer software).

3. The risk creation process as depicted in MARC

3.1. Solutions, adoption and dependence

Life has always presented many obstacles to the survival, prosperity and happiness of humans. In an effort to overcome these obstacles, humans have repeatedly used intellect and ingenuity to develop innovative and inventive solutions. These adaptive responses have been employed to address basic human needs (e.g. access to food, water, shelter, sanitation), as well as to satisfy more hedonic interests (e.g. tourism, entertainment, convenience). Because innovations and inventions have played a fundamental role in facilitating survival, improving prosperity and increasing pleasure, humans have been quick to adopt these ‘technologies’ (we define the term ‘technology’ or ‘technologies’ as the processes and items that are created and used by humans to achieve objectives). In many cases, humans have developed such a strong reliance on these technologies that abstaining from their use can seem highly objectionable or may even be extremely hazardous or fatal. For example, if most industrialized nations were to stop using a technology such as the combustion engine instantaneously in an effort to reduce global CO2 emissions, maintaining current rates of food production and distribution would be near impossible, which could result in widespread famine. Indeed, we posit that the capacity to abstain from a technology may be reduced by the extent to which (i) a dependence on the technology has developed over time, (ii) the use of the technology is embedded within socio-economic systems and/or (iii) there is a lack of alternative and equally beneficial technologies.

Hence, as depicted on the far left of MARC (see the figure in Section 1), humans have typically responded to many problems, needs and desires through innovative technological solutions. These solutions have often been adopted on a wide scale and become embedded in the effective functioning of socio-technical systems, to the point of heavy reliance and even temporary existential dependence. This would be largely unproblematic if the use of such technologies were sustainable and non-hazardous or, failing that, if effective alternative solutions could be identified and adopted easily and efficiently.

3.2. Anthropogenic risk creation

While the principal focus of the abovementioned innovation and invention processes has been to create beneficial outcomes, many of the resultant technologies have inadvertently been the source of potential or realized adverse outcomes (Beck 1992; Denney 2005; Nekola et al. 2013). For example, while the combustion engine was developed as a source of mechanical power to enable humans to travel faster and further and to alleviate the burden of heavy labor, it is now also a key contributor to the potential existential risk posed by climate change (Intergovernmental Panel on Climate Change 2014). In the 1950s, the medication thalidomide was given to pregnant women to prevent nausea, but it was later discovered that it increased the risk of infants being born with phocomelia (malformation of the limbs) or other congenital abnormalities (McBride 1977). While humans first initiated tobacco smoking circa 5,000 BCE based on a desire for pleasurable sensations and as part of spiritual rituals, it was not until the 20th century that extensive evidence started to emerge regarding the numerous risks that its habitual use posed to human health (in 2008, the World Health Organization declared tobacco smoking to be ‘… the single most preventable cause of death in the world today’) (Musk and De Klerk 2003; World Health Organization 2008). Social media websites were created with the intention of enabling people to connect, cooperate and form relationships more easily (Kaplan and Haenlein 2010). However, many social media sites have rapidly come to be used by political parties, terrorist groups and nation states in ways that can pose a risk to social stability, democratic processes and public safety (Brown and Pearson 2018; Persily 2017; Ward 2018).
Hence, both history and the contemporary world are filled with a wide range of highly beneficial technologies that have been born out of necessity and/or good intentions, but which have inadvertently elicited some probability of an adverse outcome(s). This is represented in MARC, with risk shown as a potential unintended consequence of the adoption and use of technologies that address anthropogenic problems, desires and needs. In other words, risks and benefits are rarely mutually exclusive.

As suggested above, it seems reasonable to infer that when people have engaged in innovation processes, their initial focus has been on the benefits that can be achieved (Lach and Schankerman 2008; Melenhorst, Rogers, and Bouwhuis 2006; Wright 1983). While the most obvious perceived benefit may have been to resolve a problem or to satisfy a need/desire, a range of parallel benefits may also have motivated the innovator(s). For example, intrinsic objectives (e.g. altruistic, egotistical, affective [relief, pleasure]) or extrinsic goals (e.g. financial rewards, career progression, social recognition) may have been driving forces of comparable or greater influence. Indeed, these additional motivational forces may even have led the innovators to ignore (either consciously or unconsciously) or downplay the potential for their innovation to create risk. Alternatively, the risks may simply have been underestimated or entirely unknown.

A further point worth mentioning is that many technologies found to present a risk of adverse outcomes have been labelled ‘man-made hazards’ and, therefore, have often been considered distinct from ‘natural hazards’ such as floods, earthquakes or communicable diseases (El-Sabh and Murty 2012). However, some scholars have argued that the probability that a ‘natural hazard’ results in an adverse outcome(s) is largely determined by the extent to which humans are proximate to the hazard (e.g. building homes on flood plains or in areas with high radon levels) or are intellectually and physically equipped to manage the risk (e.g. having sufficient medical knowledge and equipment to respond to a pandemic). Hence, it could be argued that some degree of anthropogenic risk creation is implicitly evident even for so-called ‘natural hazards’, because people often elect to engage in risky behaviors, whether through willful choice, ignorance or absolute necessity, for evident benefits (e.g. economic gains, access to natural resources), or they fail to instigate sufficient risk management measures (Brienen et al. 2010; Eiser et al. 2012; Lewis and CICERO 2012; Tierney 2018). However, these types of risks are distinct from those depicted in MARC because the underlying hazards have not been direct products of ‘innovative human actions’ as per our aforementioned definition of anthropogenic risk creation. In other words, MARC only depicts the threats that have their origins in human agency (Bostrom 2013; Sears 2020).

3.3. Identification and assessment of anthropogenic risk creation

In the creation of many risks, the risk attributable to the causal innovation or invention has remained unidentified for a prolonged period. The risk has only been identified after the technology has been through relatively large-scale development and/or production processes and adopted by a relatively high number of users. In many cases, it has only been through prolonged, widespread and repetitive use of a technology that its potential and propensity to elicit adverse outcomes have become evident and measurable. For example, the severe adverse health effects of exposure to anthropogenic building materials such as asbestos, creosote and polychlorinated biphenyls were identified long after their widespread use over several decades (Carpenter 1998; Karlehagen, Andersen, and Ohlson 1992; Selikoff, Churg, and Hammond 1964). More recently, the numerous benefits of plastics (e.g. versatile, durable, cheap, light) have caused them to become one of the world’s most widely used materials. However, it is only in recent years that the range of risks that plastics pose as an environmental pollutant and as a potential threat to animal and human health has started to become more evident (Eriksen et al. 2014; Geyer, Jambeck, and Law 2017; Pivokonsky et al. 2018; Santillo, Miller, and Johnston 2017). Thus, although this is not always the case, the irony tends to be that the extent of an anthropogenic risk is typically only identified after widespread use of, and/or heavy dependence on, the technology has developed. This is depicted in MARC, which shows that the adoption and use of beneficial technologies typically results in some form of reliance on that technology, but that this adoption also has the potential to simultaneously and inadvertently elicit a risk(s) that can remain unidentified for a prolonged period.

3.4. Scientific and social consensus on anthropogenic risk creation

The identification of anthropogenic risks has generally arisen through anecdotal observations and/or formal empirical assessments. In the case of anecdotal observations, adverse outcomes have appeared to correlate with the use of specific technologies or inventions (e.g. medical practitioners observing a higher prevalence of premature deaths among individuals who smoke cigarettes). Concerns about these potential correlations have then typically motivated formal investigations and/or scientific examinations with a view to detecting potential causal mechanisms or confirming relationships between the technology and the adverse outcome(s). Similarly, scientific knowledge of the physical world has pointed towards potential anthropogenic causes of observed adverse outcomes, and these potential causes have then been subject to empirical assessment. For example, the hypothesized relationship between ozone depletion and the use of manufactured chemicals such as chlorofluorocarbons was subsequently confirmed by scientific tests in the 1970s and 1980s (Andino and Rowland 1999; US National Research Council 1982). However, there have also been circumstances in which individuals and/or groups have posited that anthropogenic innovation and invention processes could lead to certain adverse outcomes despite an absence of supporting scientific evidence or anecdotal observations. For instance, concerns over the potential adverse health effects of genetically modified (GM) foods led to extensive regulations governing the production and sale of GM foods in many countries. This occurred despite the absence of evidence or incidents to suggest that GM foods are harmful to human health (American Medical Association 2001; Gaskell et al. 2004).
This illustrates how, in some cases, there have been epistemic uncertainties about the risk posed by some technologies, but this has not prevented the risk from being ‘socially constructed’ as objectively valid and, therefore, being subject to precautionary risk management measures (Dake 1992; Kasperson et al. 1988).

Historically, one issue that has sometimes arisen in risk identification processes is an overestimation or underestimation of the extent to which a technology increases the probability of an adverse outcome (Flynn, Slovic, and Kunreuther 2001; Slovic, Fischhoff, and Lichtenstein 1979). Overestimations have led to heightened concerns and, therefore, to the instigation of measures that were aimed at managing the risk but that also incurred disproportionately high time and resource costs. Consequently, the opportunity to invest that time and those resources more productively elsewhere was forfeited. Examples of such overestimations include restrictions on cellphone use at UK gas stations due to unsubstantiated fears that the phones would ignite gas fumes (Burgess 2007) and, arguably, the preparations made across numerous countries in anticipation of the widespread economic, social and technical disruptions that were expected to be caused by the ‘millennium bug’ (MacGregor 2003). By contrast, underestimation of a risk has, on some occasions, prevented or attenuated the appetite to put sufficient risk management approaches in place. This has resulted in the persistence of the risk and, therefore, a greater potential for the relevant adverse outcome to be realized. One pertinent example is the 2011 Fukushima Daiichi nuclear power station accident, which was caused by an earthquake-triggered tsunami. Synolakis and Kânoğlu (2015) found that the risk analysis for the power station had underestimated both the maximum probable tsunami size and the frequency of large tsunamis. This was the third most severe nuclear accident in history and was considered to have been preventable (Synolakis and Kânoğlu 2015).
Hence, as captured by MARC, anthropogenic risks are often subject to overestimation or underestimation and, consequently, this determines the extent of the risk management actions that are taken and the extent to which the risk persists.

A further consideration is that when risks have had an anthropogenic source, some degree of culpability may have existed for the risk creator(s). Consequently, there have been instances where the risk creator(s), or even those who benefit from the underlying technologies, have been motivated to downplay the relevant evidence or to attenuate concerns (Kasperson et al. 1988). By contrast, those who could be adversely affected by the risk may have seen some value in doing the opposite (Renn et al. 1992). Similarly, when there has been conflicting evidence and contrasting narratives, uncertainty and confusion have sometimes emerged at the societal level regarding the relationship between a focal technology and the likelihood, temporal proximity, frequency and/or magnitude of the associated adverse outcome(s). A potential consequence of all this has been delays in commitments to risk management processes (Hood, Rothstein, and Baldwin 2001). For example, in the 1960s, scientists started to identify corroborative and compelling evidence that human greenhouse gas emissions were causing pronounced changes to the earth’s climate (see Weart 2003, 2008). Although such evidence continued to accumulate throughout the subsequent decades, it was only in 2019 that the governments of some industrialized nations first committed to legally binding agreements to achieve net zero carbon emissions by the middle of the 21st century and that some cities, local councils and organizations formally declared a ‘climate emergency’ (Smith-Schoenwalder 2019; UK Department for Business, Energy and Industrial Strategy). A key contributing factor in the delay between the identification of climate change and action to address it has been the uncertainties and disputes surrounding evidential validity, anthropogenic origins and responsibility for its management (Kitcher 2010; Weart 2003, 2008).
Similar controversies surrounding the potential risks posed by other anthropogenic technologies, such as genetically modified crops, pesticides, nuclear power, nanomaterials and air pollutants, have all played some role in determining the nature, level and timing of risk management actions (Cox 1997; Engelhardt, Engelhardt, and Caplan 1987; Gray and Hammitt 2000; Pidgeon, Harthorn, and Satterfield 2011; Toke 2004). Hence, anthropogenic risks can be overestimated or underestimated, potentially resulting in the disproportionate allocation of risk management resources, and, as depicted in MARC, they can often persist beyond the risk identification stage because of controversies surrounding the extent of the risk and the nature of potential risk management actions.

3.5. Communication and management of anthropogenic risks

Assuming that an anthropogenic risk has been identified and accurately assessed, an initial key feature of the risk management process has often been effective risk communication. That is, efforts are made to communicate the risk to parties who have the extant or potential capacity to manage, or facilitate the management of, the risk (Breakwell 2007). While history has shown that effectively communicating the risk to these parties can elicit the requisite risk management action, there are many instances where this has not been the case (Fischhoff 1995; Powell and Leiss 1997). For example, textual warnings on cigarette packets have had limited impacts on smoking cessation rates (Hammond 2011; Thrasher et al. 2012), and despite high levels of awareness of environmental issues such as greenhouse gas emissions and plastic pollution (Syberg et al. 2018), both global carbon emissions and plastic production are projected to continue rising during the forthcoming decades (Geyer, Jambeck, and Law 2017; Lebreton and Andrady 2019). Hence, as shown in MARC, anthropogenic risks can be accurately assessed and communicated, yet this does not necessarily result in proportionate risk management responses and, therefore, the risks can persist. In other words, risk communication cannot be assumed to be a panacea for initiating the management of anthropogenic risks.

In some instances, suitable approaches for managing anthropogenic risks have been identified and successfully implemented (e.g. fitting seatbelts and airbags in automobiles). This has typically resulted in the elimination or reduction of the risk and, therefore, greater avoidance of the associated adverse outcome. However, the implementation of effective risk management approaches has often been prevented or temporarily thwarted by a range of factors. These factors have included a high dependence on the technology that elicits the risk, limited resources to fund and operate the approach, resistance among those who benefit from the existence of the risk (e.g. cigarette manufacturers), or controversies surrounding the extent of the risk or the effectiveness of the potential risk management methods. For example, plastic pollution presents a range of potential risks to ecosystems and human health, but many factors (e.g. the absence of equally beneficial alternative materials, some plastics being non-recyclable, monetary costs, limited demand for recycled plastic materials) limit the extent to which humanity can reduce its reliance on plastics and/or reduce plastic pollution (Carney Almroth and Eggert 2019).

As depicted in MARC, even when the barriers to implementing a risk management approach are overcome, the approach itself might inadvertently elicit additional secondary risk(s). For example, this is quite common among medicinal drugs that aim to manage the risk of a specific health condition but inadvertently elicit a risk of severe or even life-threatening side effects (Edwards and Aronson 2000). Similarly, there are now many concerns about the potential for inadvertent risks from a range of potential geo-engineering approaches to managing climate change (Corner et al. 2013; Corner and Pidgeon 2010). Hence, as represented by the various pathways emanating from the ‘Risk identified’ stage in MARC, the route from identifying a risk management approach through to averting the adverse outcome can be thwarted by a range of factors.

3.6. Contextual influence of social systems and psychological factors

The preceding sub-sections (3.1–3.5) have all made implicit reference to the fact that each stage of the risk creation process is typically subject to the influence of the prevailing social systems (e.g. culture, political stability, economic development, power distribution, legal frameworks, epistemic advancements, internationalization) and of the psychological processes experienced by all individuals involved and potentially affected (e.g. aspirations, perceived risks, past experiences, value judgments, motivation). That is, these systems and processes create unique contextual circumstances that may substantially influence which pathway, from all those depicted in MARC, is followed and at what point in time the transition is made from one stage to the next. For example, whether a society invests time and resources in developing technological solutions to an extant environmental problem can depend on whether specific social systems (e.g. funding availability, political will, cultural norms) and psychological factors (e.g. levels of perceived risk and benefit, belief systems, knowledge) are conducive to such actions. Likewise, whether an identified health risk is accurately assessed and effectively managed can depend on the prevailing socio-psychological factors, such as epistemological progress and expert judgments, at each point in the assessment process. The potential influence of these socio-psychological processes on each stage of the risk creation process is depicted in MARC via an ‘outer ring’ labelled Contextual Influence of Social Systems and Psychological Factors.

3.7. An example of using MARC

As outlined earlier, MARC has the potential to facilitate better management of anthropogenic risks. To demonstrate how MARC might function in this way, the example of using Artificial Intelligence (AI) to aid the diagnosis of medical conditions will now be considered. This is, of course, a simplified and fictitious (though realistic) example that is meant to illustrate what could happen and how the model might assist stakeholders (e.g. technology innovators, policymakers) to make better risk management decisions. By consulting the MARC figure in Section 1, readers can follow (from left to right) the various stages and alternative pathways in MARC in conjunction with the following narrative.

Humanity has a need/desire to obtain accurate and fast diagnoses of medical conditions. In response to this need, AI-based diagnostic solutions are invented that work accurately and quickly and that avoid the potential for error-prone human judgments (Szolovits 2019). Following a series of successful clinical trials, these AI diagnostic technologies are rapidly adopted by healthcare institutions across the world and are gradually accepted by society as a more trusted and accurate form of diagnosis than that previously provided by medical professionals. Consequently, medical professionals become solely reliant on the AI technology for diagnoses and gradually become de-skilled in this aspect of their practice.

After a number of years of relying on AI diagnostic tools, it transpires that some of these technologies had regularly misdiagnosed a substantial proportion of patients and that this had led to a number of severe adverse outcomes (e.g. deaths, secondary health conditions, unnecessary surgeries, costly treatments). In other words, an anthropogenic risk had been inadvertently created when the technology was first employed and it remained unidentified for an extended period, resulting in the realization of adverse outcomes.

From the point in time that the inadvertent risk from the AI technology is identified, the magnitude of that risk requires accurate assessment. If the risk is overestimated, excessive risk management approaches might be adopted (e.g. banning [or at least forgoing] the use of all AI diagnostic tools, many of which might still provide superior diagnosis to that of clinicians). This might create secondary inadvertent risks (e.g. patients left without technologies or medical professionals capable of providing accurate diagnoses) and/or large financial costs to healthcare systems (e.g. money wasted on redundant AI diagnostic tools, new diagnostic training for clinicians). Conversely, if the risk is underestimated or poorly communicated, this would probably result in a lack of appetite to rectify the problem. Hence, the misdiagnosis risk would then manifest and the same adverse outcomes would continue to be realized.

Let us assume that the risk from the AI diagnostics is accurately identified and is effectively communicated to those parties (e.g. AI developers and manufacturers, policy makers, medical professionals, regulators) who have the potential capacity to address the risk. If feasible risk management approaches exist and those parties have a sufficient desire and the resources to implement those approaches (e.g. recall defective products, tighten regulations), then there is a strong likelihood that the risk can be managed and the adverse outcomes averted. However, these parties might encounter a range of socio-psychological factors that inhibit the implementation or effectiveness of the identified risk management approach(es). For example, inhibiting factors could be a lack of suitable alternative diagnostic methods, widespread epistemic uncertainties regarding the prevalence of misdiagnoses by AI technologies, public risk perceptions and mistrust of the technology, or political lobbying pressures from AI manufacturers not to prohibit certain AI diagnostic products. It is only when such barriers to effective risk management are overcome that the risk management approaches can be implemented and the misdiagnosis risk can be eliminated or sufficiently reduced.

As illustrated with the example above, MARC could serve as an important descriptive tool that can represent the creation and various manifestations of an anthropogenic risk. However, as well as being descriptive in nature, MARC might also be used in this instance (and many others) to provide some foresight to those involved in the development of novel technologies. For example, the model helps to make the creators mindful that, following the technology’s adoption, risk can manifest along different pathways, each of which may require different responses and lead to different outcomes. Hence, this could help the creators to recognize that they need to be ready to monitor for any early signs of adverse outcomes associated with their technology and, furthermore, to put in place pre-emptive measures to ensure that any risks that emerge following the technology’s adoption are accurately measured and can be effectively communicated and managed. Likewise, the creators could, at an early stage in the technology’s development and/or adoption, identify and develop proportionate means of overcoming any factors that might inhibit the implementation of effective risk management approaches. Returning to our earlier example, an AI diagnostic manufacturer that is aware that a hazardous flaw could emerge in its technology might establish an early warning system for end-users and be ready to provide each end-user with the details of alternative AI diagnostic products that can be used until the flaw is resolved. Hence, MARC provides more than just a visual representation of the typical historical pathways that have been evident in anthropogenic risk creation. Crucially, it sets out the potential future pathways for emerging technology innovations and, in doing so, can be used to motivate innovators to develop foresight into how they might avert, rather than realize, adverse outcomes. In other words, MARC could help humanity to focus more time, energy and resources on prevention (e.g. risk mitigation, risk reduction) rather than on cure (i.e. risk management).

3.8. Summarizing MARC

MARC consolidates the piecemeal perspectives on anthropogenic risk creation and explicates the generic features of the underlying processes. By doing this, the model provides a clear, sequential and holistic representation of the risk creation process. Importantly, MARC acknowledges that (a) risk is predominantly an inadvertent and accidental side-effect of innovative actions aimed at addressing extant wants, needs and problems, and (b) the value of averting risk creation during innovative processes may be overlooked or ignored, largely because the incentives (both intrinsic and extrinsic to the individual) for producing technologies that address these wants, needs and problems can outweigh the incentives for mitigating potential risks. Hence, MARC draws attention to the need for proactive approaches to avert further risk creation, rather than focusing on managing extant risks, and provides a framework for innovators and risk regulators/managers to ‘map’ the pathway of innovative processes and identify where corrective actions/interventions might be beneficial. MARC also acknowledges that, despite awareness of anthropogenic risks and the need for them to be managed, there can be a number of reasons (e.g. epistemic uncertainty, technological dependency, lack of wherewithal or resources) why such risks may not be effectively managed once created.

4. Risk creation as a learning process

While the benefits of avoiding anthropogenic risk creation may seem obvious, we acknowledge that total avoidance of risk is probably impossible and, arguably, may not even be an entirely desirable state (Husak Citation1998). History has shown that risk taking has often been necessary for humans to avoid other threats and to achieve certain goals and aspirations. For example, across the course of human evolution people have engaged in the risks of war to protect what they value and to acquire new resources, have entered dangerous situations to acquire resources, and have cooperated with other individuals, groups and nations at the risk of non-reciprocity, exploitation and un-sustained collective action (Tucker and Ferson Citation2008). In addition, the potential adverse effects of some innovative processes and inventions might be unforeseeable, even when diligent and concerted efforts have been made to identify and minimize potential risks. Moreover, time may provide the opportunity to learn from mistakes and to take corrective actions before adverse outcomes occur. Hence, efforts to completely avoid anthropogenic risk creation may, to some, seem to be an unnecessary, unrealistic and costly pursuit that stifles the innovation processes that help to improve human prosperity. However, in light of the range and potential severity of adverse outcomes associated with human innovations and behaviors across the past few centuries, it seems logical to aim to better manage the risk creation process if humanity is to improve its track-record of innovating and inventing without also creating societal-level risks that pose substantial threats to humanity, other species and the natural environment. Furthermore, as detailed in MARC and discussed above, prudent efforts will be required to ensure that risk management processes themselves do not result in inadvertent secondary risks.

5. Responsible innovation and risk

As illustrated in MARC, anthropogenic risk can inadvertently manifest during and after the adoption of innovations and inventions. Hence, one key focus for addressing the issue of anthropogenic risk creation is to identify and manage potential risks prior to the adoption stage or, at least, prior to the dependence stage. Indeed, the potent and far-reaching adverse impacts of some anthropogenic innovations and inventions have long been recognized across several academic fields (e.g. ethics, law, sociology) and, more recently, efforts to address this issue have been galvanized in the ‘responsible research and innovation’ (RRI) agenda (Owen, Macnaghten, and Stilgoe Citation2012; Stilgoe, Owen, and Macnaghten Citation2013). The RRI concept gained prominence and traction around 2010 as a key policy approach adopted by the European Union’s (EU) Framework Programmes to address the problems surrounding the regulation of scientific innovation (European Union Citation2019). RRI is defined by the EU as ‘… an approach that anticipates and assesses potential implications and societal expectations with regard to research and innovation, with the aim to foster the design of inclusive and sustainable research and innovation’ (European Union Citation2019).

In terms of its capacity to better prevent or limit anthropogenic risk creation processes, the RRI agenda has many merits. For example, RRI provides some scope to democratize the governance of the intentions of innovators, and it may motivate researchers and innovators to anticipate and assess potential unintended consequences and, in doing so, implicitly foster an awareness of risk, responsibility and accountability. Furthermore, the RRI agenda can reframe responsibility in innovation so that it is perceived to go beyond challenging morally questionable behaviors (e.g. manipulating data) to include long-term, societal-level impacts (Owen and Goldberg Citation2010; Owen, Macnaghten, and Stilgoe Citation2012; Stilgoe, Owen, and Macnaghten Citation2013). However, despite its many merits, there are several criticisms that can be levelled at RRI, each of which casts some doubt on its capacity to mitigate all forms of anthropogenic risk creation. For instance, the approach is a set of guiding principles rather than statutory regulations and, therefore, has no specific power to compel certain practices or to take legal action against those who do not adhere. Furthermore, while the RRI agenda has primarily been promoted within Europe and similar approaches have been adopted in other nations (Owen, Macnaghten, and Stilgoe Citation2012), it is not yet employed worldwide. In addition, the RRI agenda fails to offer any rewards and, consequently, often fails to motivate compliance among researchers and innovators, who generally operate in competitive cultures that are focused on short-term achievements (Blok and Lemmens Citation2015; Pain Citation2017).

Perhaps the greatest limitation to the RRI approach is that it is primarily aimed at those involved in scientific research and innovation and, therefore, does not offer guidance to, or implicitly confer any duties upon, commercial actors. This seems ironic given that businesses are regularly engaged in research and innovation processes, and most are involved, whether directly or indirectly, in either controlling, extracting or processing resources, and promoting or facilitating technological consumption. Hence, businesses can be vital actors in the key risk creation stages as depicted in MARC (e.g. innovation/invention, adoption, reliance/dependence, inadvertent risk), but remain free to choose whether to comply with ethical codes, such as the RRI principles. Although many businesses do aim to minimize or avoid any part in anthropogenic risk creation through responsible practices and the application of related corporate social responsibility (CSR) actions (de Saille and Medvecky Citation2016; Halme and Korpela Citation2014), a systematic literature review found that evidence of responsible innovation in business was scarce (Lubberink et al. Citation2017). Hence, questions remain regarding the extent to which the RRI initiative has sufficient specificity, adoption, power and scope to effectively influence anthropogenic risk creation across all social, economic and commercial contexts.

6. Some limitations of MARC

While MARC provides a descriptive overview of the general anthropogenic risk creation process, the simplified conceptual nature of the model does lead to some limitations that should be noted. First, because MARC is descriptive rather than prescriptive, it does not provide explicit solutions or risk management guidance. Nonetheless, it does explicate the stages at which risk emerges and manifests and, therefore, draws attention to the need for more care, analysis and oversight at specific stages during innovation. Second, because MARC provides a generic representation of the risk creation process, it does not feature detailed descriptions of the different and nuanced processes that may occur at each stage within different contexts. For example, the model refers to processes such as risk identification and risk management, but it does not describe what these entail, nor does it elucidate how variations in the effectiveness of these processes might affect the probability of the adverse outcome. As a case in point, when risks are identified with medicinal drugs, the risk management response from the pharmaceutical industry is often heavily guided by government regulations, but when environmental risks are identified, the risk management responses are often piecemeal and delayed due to epistemic uncertainties and contentions surrounding cause-effect relationships and attribution of liability (Kitcher Citation2010). Hence, anthropogenic risk creation and its management may vary to some degree between situations, but the specific details underlying these contextual variations may not always be fully captured by MARC. Nonetheless, more detailed accounts of some of these processes can be found in other publications and frameworks (e.g. SARF as a model of risk communication: Kasperson et al. Citation1988).

Third, although the structure of MARC implies that the various stages naturally flow in a chronological order, there may be occasions when the stages do not follow in this order and are realized at different times across different contexts. For instance, it could be inferred that MARC depicts the ‘reliance/dependence’ stage as occurring at the same time as the ‘inadvertent risk’ stage. While it is highly plausible that these two stages could occur at roughly the same time, it is also possible that one of these stages could occur long before or after the other. One example of the two stages not occurring at similar times is the anthropogenic creation of health and environmental risks from burning fossil fuels. Arguably, the reliance/dependence (i.e. addiction) phase occurred many decades before evidence emerged of the harmful effects that burning fossil fuels has on living organisms and the natural environment. Fourth, as mentioned earlier, MARC only models the accidental creation of risk and, therefore, does not capture the special case of ‘deliberate risk creation’. Indeed, there may be several benefits to separately modelling the process of intentional risk creation. Nonetheless, MARC may still have some applications for this particular type of risk because deliberate risk creation can result in the existence of technologies that still inadvertently pose secondary risks. For example, many weapons of war have malfunctioned/could malfunction in host territories leading to multiple accidental deaths or have been/could be acquired by ‘enemies’ to harm the creators and their allies (Cook Citation2017; Schlosser Citation2013).

Finally, as with all novel models and frameworks, the accuracy of MARC’s conceptual and structural assertions requires empirical assessment. Irrespective of whether such evaluations produce evidence that supports or contradicts some aspects of the model, this should generate epistemological advancements and help to stimulate important critical debate about the extent to which anthropogenic risk creation is understood and could be better prevented and/or managed.

7. Future research directions

Future research is required that specifically focuses on each of the key risk creation stages that are highlighted in MARC. First, specific research is needed on the socio-psychological (e.g. groupthink, risky-shift, obedience to authority) and psychological processes (e.g. motivational bias, focalism, over-commitment, overconfidence, confirmation bias) that may take place during innovation and invention processes (Bazerman and Moore Citation1994). The aim of this research should be to identify the extent to which these processes contribute to the inadvertent creation and potential neglect of risk and to determine the extent to which these processes manifest because the innovators are motivated or distracted by perceived benefits. In particular, the study of the risk and benefit perceptions of researchers, scientists, innovators and inventors as they conceive, develop, create and promote new technologies and services may prove fruitful. We suggest that those involved in the innovation and production/dissemination processes may become so intently focused on the merits, rewards and kudos of resolving immediate problems and/or satisfying pressing wants and needs that their risk perceptions may be poorly formed, suppressed or overridden by their benefit perceptions (Hanoch, Rolison, and Freund Citation2019). Indeed, extant studies show that perceptions of risks and benefits are often inversely related, leading to the belief that technologies that are high in benefits are low in risk (Alhakami and Slovic Citation1994; Finucane et al. Citation2000; Fischhoff et al. Citation1978; Slovic et al. Citation1991). However, these findings were from samples of the general population, rather than from those engaged in the practical process of technological innovation. Among innovators and commercial actors, one might even observe a stronger inversion between risk and benefit perceptions, because the potential for realizing far-reaching benefits is particularly high and, therefore, the potential for suppressed or ill-developed perceptions of the risk may be much greater. Relatedly, researchers might aim to examine how to increase the incentives for risk identification and decrease the incentives for benefit realization during research, innovation and invention processes. Just as innovators can be driven by intrinsic objectives and extrinsic goals to resolve problems and achieve beneficial outcomes (see above), researchers might assess the influence of providing rewards of equal value for risk identification, reduction and management.

Second, research examining how social and cultural dynamics influence risk-taking and risk creation during innovation and invention processes could also prove highly valuable. As a starting point, this research could be guided by the extant literature on ‘man-made disasters’. For example, Perrow’s normal accidents theory and Turner’s model of man-made disasters both suggest that specific ‘man-made’ disasters (e.g. Chernobyl, Challenger) have inadvertently manifested from social, cultural and technological interactions during operational processes (Perrow Citation2011; Turner Citation1978; Turner and Pidgeon Citation1997). Hence, researchers could examine how such interactions during innovation and invention processes might contribute to the anthropogenic creation of risk. One cautionary observation is that there may be limits to the extent to which extant theories of ‘man-made’ disasters can accurately guide future research on the broader issue of anthropogenic risk creation. This is because, unlike ‘man-made disasters’, which are a single sub-set of anthropogenic risks, the broader category of anthropogenic risks also includes those risks that are geographically, temporally and systemically dispersed, where responsibility for their creation and management is typically more contentious and widespread, and where specific adverse outcomes may be harder to foresee (Renn Citation2016).

Third, greater focus, debate and research are needed on risk governance and regulation in innovation and invention processes. For example, businesses have been one of the central social actors in facilitating and promoting consumption behaviors that have inadvertently created substantial risk to the natural environment. However, there seem to be low levels of formal expectations and regulations placed upon businesses to address the issue of future risk creation, and there seems to be little accountability for risk creation or subsequent risk management by businesses (Halme and Korpela Citation2014; Lubberink et al. Citation2017). Better understanding might provide insights into ensuring that businesses create less risk and play a greater role in risk management. One way to achieve this might be to extend the remit and reach of RRI so that it applies to commercial actors and to ensure that breach of the RRI guidance results in some form of accountability and/or legal redress. However, while it may be prudent to place greater legal requirements on all risk creators to take responsibility for managing the risk(s) that they create and perpetuate, a careful balance may be required to ensure that innovation and invention processes do not become stifled. Strict and onerous requirements could lead to the avoidance of important innovative, entrepreneurial and adaptive actions.

Fourth, MARC is intended to be of generic relevance to all risk types. Consequently, MARC does not necessarily represent the nuanced processes that may be evident for specific types of risk. For example, existential risks (cf. non-existential risks) are considered to have several unique characteristics (e.g. no scope for trial-and-error, mitigation is a global public good) that could make traditional risk management approaches ineffective (Bostrom Citation2002, Citation2013; Leslie Citation1996; Sears Citation2020). Because MARC is not specific to such individual risk types, it does not necessarily represent the full complexity of each risk creation and management process. Hence, future work in this field might focus on developing variants of MARC that are tailored to specific risks. Such variants could provide those involved in innovation and risk management processes with more detailed insights into the potential pathways that can increase or decrease certain risk types.

A final observation based on past instances of anthropogenic risk creation is that much can be gained from aiming to achieve an early consensus on the existence of the risk and its anthropogenic source. As was shown in the case of CFCs contributing to ozone depletion, early identification and attribution of the risk were central to swift remedial action. In the case of climate change, by contrast, epistemic uncertainty about the existence of the risk and its anthropogenic sources has resulted in sluggish global risk management actions. Hence, while early investment in risk identification and assessment might be costly, its capacity to help achieve an early consensus may prove to be a sound investment in the long run. However, in the absence of an early consensus, adopting the precautionary approach/principle until the controversies and uncertainties are resolved (while not assuming that the adoption of the precautionary principle adds any weight to the existence of the risk) could prove wise (Foster, Vecchia, and Repacholi Citation2000). Indeed, this would give risk analysts and other relevant stakeholders time to work towards sound risk assessments that can inform judgments about how, and to what, risk management resources should be allocated.

8. Conclusions

During this century, individuals, communities, societies and governments will probably spend a large portion of their time, energy and resources working to manage risks that have an anthropogenic source. To ensure that humanity can move into a future in which the volume and severity of such risks start to decrease and in which there is less expenditure on remedial actions, a better understanding is needed of the processes that are involved in anthropogenic risk creation. MARC provides a starting point for developing this understanding by promoting reflection, discussion, research and assessment of the innovation and invention processes that humans undertake with the intention of achieving positive outcomes. Hence, it is hoped that MARC can play a small role in creating a future with fewer risks and more benefits.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Alhakami, A. S., and P. Slovic. 1994. “A Psychological Study of the Inverse Relationship between Perceived Risk and Perceived Benefit.” Risk Analysis 14 (6): 1085–1096.

- American Medical Association. 2001. “AMA Report on Genetically Modified Crops and Foods.” https://www.isaaa.org/kc/Publications/htm/articles/Position/ama.htm

- Andino, J. M., and F. S. Rowland. 1999. “Chlorofluorocarbons (CFCs) Are Heavier than Air, so How Do Scientists Suppose That These Chemicals Reach the Altitude of the Ozone Layer to Adversely Affect It?” Scientific American. https://www.scientificamerican.com/article/chlorofluorocarbons-cfcs.

- Bazerman, M. H., and D. A. Moore. 1994. Judgment in Managerial Decision Making. New York: Wiley.

- Beck, U. 1992. Risk Society: Towards a New Modernity. London: Sage.

- Blok, V., and P. Lemmens. 2015. “The Emerging Concept of Responsible Innovation. Three Reasons Why It Is Questionable and Calls for a Radical Transformation of the Concept of Innovation.” In Responsible Innovation 2: Concepts, Approaches, and Applications, edited by B.-J. Koops, I. Oosterlaken, H. Romijn, T. Swierstra, and J. van den Hoven. Cham, Switzerland: Springer. doi:https://doi.org/10.1007/978-3-319-17308-5_2.

- Bostrom, N. 2002. “Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards.” Journal of Evolution and Technology 9 (1): 1–36.

- Bostrom, N. 2013. “Existential Risk Prevention as Global Priority.” Global Policy 4 (1): 15–31. doi:https://doi.org/10.1111/1758-5899.12002.

- Breakwell, G. M. 2007. The Psychology of Risk. Cambridge, UK: Cambridge University Press.

- Brienen, N. C. J., A. Timen, J. Wallinga, J. E. Van Steenbergen, and P. F. M. Teunis. 2010. “The Effect of Mask Use on the Spread of Influenza during a Pandemic.” Risk Analysis 30 (8): 1210–1218. doi:https://doi.org/10.1111/j.1539-6924.2010.01428.x.

- Brown, K. E., and E. Pearson. 2018. “Social Media, the Online Environment and Terrorism.” In Routledge Handbook of Terrorism and Counterterrorism, 149–164. Oxon, UK: Routledge.

- Burgess, A. 2007. “Mobile Phones and Service Stations: Rumour, Risk and Precaution.” Diogenes 54 (1): 125–139. doi:https://doi.org/10.1177/0392192107073435.

- Carney Almroth, B., and H. Eggert. 2019. “Marine Plastic Pollution: Sources, Impacts, and Policy Issues.” Review of Environmental Economics and Policy 13 (2): 317–326. doi:https://doi.org/10.1093/reep/rez012.

- Carpenter, D. O. 1998. “Polychlorinated Biphenyls and Human Health.” International Journal of Occupational Medicine and Environmental Health 11 (4): 291–303. http://europepmc.org/abstract/MED/10028197.

- Cook, A. H. 2017. Terrorist Organizations and Weapons of Mass Destruction: US Threats, Responses, and Policies. Lanham, Maryland: Rowman & Littlefield.

- Corner, A., K. Parkhill, N. Pidgeon, and N. E. Vaughan. 2013. “Messing with Nature? Exploring Public Perceptions of Geoengineering in the UK.” Global Environmental Change 23 (5): 938–947. doi:https://doi.org/10.1016/j.gloenvcha.2013.06.002.

- Corner, A., and N. Pidgeon. 2010. “Geoengineering the Climate: The Social and Ethical Implications.” Environment: Science and Policy for Sustainable Development 52 (1): 24–37. doi:https://doi.org/10.1080/00139150903479563.

- Cox, L. A., Jr. 1997. “Does Diesel Exhaust Cause Human Lung Cancer?” Risk Analysis 17 (6): 807–829. doi:https://doi.org/10.1111/j.1539-6924.1997.tb01286.x.

- Dake, K. 1992. “Myths of Nature: Culture and the Social Construction of Risk.” Journal of Social Issues 48 (4): 21–27. doi:https://doi.org/10.1111/j.1540-4560.1992.tb01943.x.

- de Saille, S., and F. Medvecky. 2016. “Innovation for a Steady State: A Case for Responsible Stagnation.” Economy and Society 45 (1): 1–23. doi:https://doi.org/10.1080/03085147.2016.1143727.

- Denney, D. 2005. Risk and Society. London, UK: Sage.

- Edwards, I. R., and J. K. Aronson. 2000. “Adverse Drug Reactions: Definitions, Diagnosis, and Management.” The Lancet 356 (9237): 1255–1259. doi:https://doi.org/10.1016/S0140-6736(00)02799-9.

- Eiser, J. R., A. Bostrom, I. Burton, D. M. Johnston, J. McClure, D. Paton, J. van der Pligt, and M. P. White. 2012. “Risk Interpretation and Action: A Conceptual Framework for Responses to Natural Hazards.” International Journal of Disaster Risk Reduction 1: 5–16. doi:https://doi.org/10.1016/j.ijdrr.2012.05.002.

- El-Sabh, M. I., and T. S. Murty. 2012. Natural and Man-Made Hazards: Proceedings of the International Symposium Held at Rimouski, Quebec, Canada, 3–9 August, 1986. Dordrecht, Holland: Springer Science & Business Media.

- Engelhardt, H. T., and A. L. Caplan. 1987. Scientific Controversies: Case Studies in the Resolution and Closure of Disputes in Science and Technology. Cambridge, Massachusetts: Cambridge University Press.

- Eriksen, M., L. C. M. Lebreton, H. S. Carson, M. Thiel, C. J. Moore, J. C. Borerro, F. Galgani, P. G. Ryan, and J. Reisser. 2014. “Plastic Pollution in the World’s Oceans: More than 5 Trillion Plastic Pieces Weighing over 250,000 Tons Afloat at Sea.” PLoS One 9 (12): e111913. doi:https://doi.org/10.1371/journal.pone.0111913.

- Finucane, M. L., A. Alhakami, P. Slovic, and S. M. Johnson. 2000. “The Affect Heuristic in Judgments of Risks and Benefits.” Journal of Behavioral Decision Making 13 (1): 1–17.

- Fischhoff, B. 1995. “Risk Perception and Communication Unplugged: Twenty Years of Process.” Risk Analysis 15 (2): 137–145. doi:https://doi.org/10.1111/j.1539-6924.1995.tb00308.x.

- Fischhoff, B., P. Slovic, S. Lichtenstein, S. Read, and B. Combs. 1978. “How Safe Is Safe Enough? A Psychometric Study of Attitudes towards Technological Risks and Benefits.” Policy Sciences 9 (2): 127–152.

- Flynn, J., P. Slovic, and H. Kunreuther, eds. 2001. Risk, Media and Stigma: Understanding Public Challenges to Modern Science and Technology. London, UK: Earthscan.

- Foster, K. R., P. Vecchia, and M. H. Repacholi. 2000. “Risk Management. Science and the Precautionary Principle.” Science 288 (5468): 979–981. doi:https://doi.org/10.1126/science.288.5468.979.

- Gaskell, G., N. Allum, W. Wagner, N. Kronberger, H. Torgersen, J. Hampel, and J. Bardes. 2004. “GM Foods and the Misperception of Risk Perception.” Risk Analysis 24 (1): 185–194. doi:https://doi.org/10.1111/j.0272-4332.2004.00421.x.

- Geyer, R., J. R. Jambeck, and K. L. Law. 2017. “Production, Use, and Fate of All Plastics Ever Made.” Science Advances 3 (7): e1700782. doi:https://doi.org/10.1126/sciadv.1700782.

- Gray, G. M., and J. K. Hammitt. 2000. “Risk/Risk Trade-Offs in Pesticide Regulation: An Exploratory Analysis of the Public Health Effects of a Ban on Organophosphate and Carbamate Pesticides.” Risk Analysis 20 (5): 665–680. doi:https://doi.org/10.1111/0272-4332.205060.

- Halme, M., and M. Korpela. 2014. “Responsible Innovation toward Sustainable Development in Small and Medium-Sized Enterprises: A Resource Perspective.” Business Strategy and the Environment 23 (8): 547–566. doi:https://doi.org/10.1002/bse.1801.

- Hammond, D. 2011. “Health Warning Messages on Tobacco Products: A Review.” Tobacco Control 20 (5): 327–337. doi:https://doi.org/10.1136/tc.2010.037630.

- Handmer, J. 2008. “Risk Creation, Bearing and Sharing on Australian Floodplains.” International Journal of Water Resources Development 24 (4): 527–540. doi:https://doi.org/10.1080/07900620801921439.

- Hanoch, Y., J. Rolison, and A. M. Freund. 2019. “Reaping the Benefits and Avoiding the Risks: Unrealistic Optimism in the Health Domain.” Risk Analysis 39 (4): 792–804. doi:https://doi.org/10.1111/risa.13204.

- Hood, C., H. Rothstein, and R. Baldwin. 2001. The Government of Risk: Understanding Risk Regulation Regimes. Oxford: Oxford University Press.

- Husak, D. N. 1998. “Reasonable Risk Creation and Overinclusive Legislation.” Buffalo Criminal Law Review 1 (2): 599–626. doi:https://doi.org/10.1525/nclr.1998.1.2.599.

- Intergovernmental Panel on Climate Change. 2014. “Summary for Policymakers.” In Climate Change 2014: Mitigation of Climate Change. Contribution of Working Group III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, edited by O. Edenhofer, R. Pichs-Madruga, Y. Sokona, E. Farahani, S. Kadner, K. Seyboth, A. Adler, et al. New York: Cambridge University Press. Retrieved from Intergovernmental Panel on Climate Change website: https://www.ipcc.ch/pdf/assessment-report/ar5/wg3/ipcc_wg3_ar5_summary-for-policymakers.pdf

- Kaplan, A. M., and M. Haenlein. 2010. “Users of the World, Unite! The Challenges and Opportunities of Social Media.” Business Horizons 53 (1): 59–68. doi:https://doi.org/10.1016/j.bushor.2009.09.003.

- Karlehagen, S., A. Andersen, and C.-G. Ohlson. 1992. “Cancer Incidence among Creosote-Exposed Workers.” Scandinavian Journal of Work, Environment & Health 18 (1): 26–29. http://www.jstor.org/stable/40965962. doi:https://doi.org/10.5271/sjweh.1612.

- Kasperson, R. E., O. Renn, P. Slovic, H. S. Brown, J. Emel, R. Goble, J. X. Kasperson, and S. Ratick. 1988. “The Social Amplification of Risk: A Conceptual Framework.” Risk Analysis 8 (2): 177–187. doi:https://doi.org/10.1111/j.1539-6924.1988.tb01168.x.

- Kitcher, P. 2010. “The Climate Change Debates.” Science 328 (5983): 1230–1234. doi:https://doi.org/10.1126/science.1189312.

- Lach, S., and M. Schankerman. 2008. “Incentives and Invention in Universities.” The RAND Journal of Economics 39 (2): 403–433. doi:https://doi.org/10.1111/j.0741-6261.2008.00020.x.

- Lebreton, L., and A. Andrady. 2019. “Future Scenarios of Global Plastic Waste Generation and Disposal.” Palgrave Communications 5 (1): 6. doi:https://doi.org/10.1057/s41599-018-0212-7.

- Lelieveld, J., J. S. Evans, M. Fnais, D. Giannadaki, and A. Pozzer. 2015. “The Contribution of Outdoor Air Pollution Sources to Premature Mortality on a Global Scale.” Nature 525 (7569): 367–371. doi:https://doi.org/10.1038/nature15371.

- Leslie, J. 1996. The End of the World: The Ethics and Science of Human Extinction. London: Routledge.

- Lewis, J., and CICERO (Center for International Climate and Environmental Research – Oslo). 2012. “The Good, the Bad and the Ugly: Disaster Risk Reduction (DRR) versus Disaster Risk Creation (DRC).” PLoS Currents 4: e4f8d4eaec6af8. doi:https://doi.org/10.1371/4f8d4eaec6af8.

- Lubberink, R., V. Blok, J. Van Ophem, and O. Omta. 2017. “Lessons for Responsible Innovation in the Business Context: A Systematic Literature Review of Responsible.” Sustainability 9 (5): 721. doi:https://doi.org/10.3390/su9050721.

- MacGregor, D. G. 2003. “Public Response to Y2K: Social Amplification and Risk Adaptation: Or, ‘How I Learned to Stop Worrying and Love Y2K’.” In The Social Amplification of Risk, edited by Nick Pidgeon, Roger Kasperson, and Paul Slovic, 243–261. Cambridge, UK: Cambridge University Press.

- Matheny, J. G. 2007. “Reducing the Risk of Human Extinction.” Risk Analysis 27 (5): 1335–1344. doi:https://doi.org/10.1111/j.1539-6924.2007.00960.x.

- McBride, W. G. 1977. “Thalidomide and Congenital Abnormalities.” In Problems of Birth Defects: From Hippocrates to Thalidomide and After, edited by T. V. N. Persaud. Lancaster, UK: MTP Press Limited. doi:https://doi.org/10.1007/978-94-011-6621-8_27.

- Melenhorst, A.-S., W. A. Rogers, and D. G. Bouwhuis. 2006. “Older Adults’ Motivated Choice for Technological Innovation: Evidence for Benefit-Driven Selectivity.” Psychology and Aging 21 (1): 190–195. doi:https://doi.org/10.1037/0882-7974.21.1.190.

- Mokdad, A. H., J. S. Marks, D. F. Stroup, and J. L. Gerberding. 2004. “Actual Causes of Death in the United States, 2000.” JAMA 291 (10): 1238–1245. doi:https://doi.org/10.1001/jama.291.10.1238.

- Musk, A. W., and N. H. de Klerk. 2003. “History of Tobacco and Health.” Respirology 8 (3): 286–290. doi:https://doi.org/10.1046/j.1440-1843.2003.00483.x.

- Nekola, J. C., C. D. Allen, J. H. Brown, J. R. Burger, A. D. Davidson, T. S. Fristoe, M. J. Hamilton, et al. 2013. “The Malthusian-Darwinian Dynamic and the Trajectory of Civilization.” Trends in Ecology & Evolution 28 (3): 127–130. doi:https://doi.org/10.1016/j.tree.2012.12.001.

- Orr, J. C., V. J. Fabry, O. Aumont, L. Bopp, S. C. Doney, R. A. Feely, A. Gnanadesikan, et al. 2005. “Anthropogenic Ocean Acidification over the Twenty-First Century and Its Impact on Calcifying Organisms.” Nature 437 (7059): 681–686. doi:https://doi.org/10.1038/nature04095.

- Owen, R., and N. Goldberg. 2010. “Responsible Innovation: A Pilot Study with the U.K. Engineering and Physical Sciences Research Council.” Risk Analysis 30 (11): 1699–1707. doi:https://doi.org/10.1111/j.1539-6924.2010.01517.x.

- Owen, R., P. Macnaghten, and J. Stilgoe. 2012. “Responsible Research and Innovation: From Science in Society to Science for Society, with Society.” Science and Public Policy 39 (6): 751–760. doi:https://doi.org/10.1093/scipol/scs093.

- Pain, E. 2017. “To Be a Responsible Researcher, Reach out and Listen.” Science. doi:https://doi.org/10.1126/science.caredit.a1700006.

- Perrow, C. 2011. Normal Accidents: Living with High Risk Technologies. Updated ed. Princeton, New Jersey: Princeton University Press.

- Persily, N. 2017. “The 2016 US Election: Can Democracy Survive the Internet?” Journal of Democracy 28 (2): 63–76. doi:https://doi.org/10.1353/jod.2017.0025.

- Pidgeon, N., B. Harthorn, and T. Satterfield. 2011. “Nanotechnology Risk Perceptions and Communication: Emerging Technologies, Emerging Challenges.” Risk Analysis 31 (11): 1694–1700. doi:https://doi.org/10.1111/j.1539-6924.2011.01738.x.

- Pivokonsky, M., L. Cermakova, K. Novotna, P. Peer, T. Cajthaml, and V. Janda. 2018. “Occurrence of Microplastics in Raw and Treated Drinking Water.” The Science of the Total Environment 643: 1644–1651. doi:https://doi.org/10.1016/j.scitotenv.2018.08.102.

- Powell, D., and W. Leiss. 1997. Mad Cows and Mother’s Milk: The Perils of Poor Risk Communication. Montreal, Canada: McGill-Queen’s University Press.

- Renn, O. 2016. “Systemic Risks: The New Kid on the Block.” Environment: Science and Policy for Sustainable Development 58 (2): 26–36. doi:https://doi.org/10.1080/00139157.2016.1134019.

- Renn, O., W. J. Burns, J. X. Kasperson, R. E. Kasperson, and P. Slovic. 1992. “The Social Amplification of Risk: Theoretical Foundations and Empirical Applications.” Journal of Social Issues 48 (4): 137–160. doi:https://doi.org/10.1111/j.1540-4560.1992.tb01949.x.

- Santillo, D., K. Miller, and P. Johnston. 2017. “Microplastics as Contaminants in Commercially Important Seafood Species.” Integrated Environmental Assessment and Management 13 (3): 516–521. doi:https://doi.org/10.1002/ieam.1909.

- Schlosser, E. 2013. Command and Control: Nuclear Weapons, the Damascus Accident, and the Illusion of Safety. New York: Penguin.

- Sears, N. A. 2020. “Existential Security: Towards a Security Framework for the Survival of Humanity.” Global Policy 11 (2): 255–266. doi:https://doi.org/10.1111/1758-5899.12800.

- Selikoff, I. J., J. Churg, and E. C. Hammond. 1964. “Asbestos Exposure and Neoplasia.” JAMA 188 (1): 22–26. doi:https://doi.org/10.1001/jama.1964.03060270028006.

- Slovic, P., B. Fischhoff, and S. Lichtenstein. 1979. “Rating the Risks.” Environment 21: 14–39.

- Slovic, P., N. Kraus, H. Lappe, and M. Major. 1991. “Risk Perception of Prescription Drugs: Report on a Survey in Canada.” Canadian Journal of Public Health / Revue Canadienne de Santé Publique 82 (3): S15–S20.

- Smith-Schoenwalder, C. 2019. “What’s Behind the Climate Emergency Declarations?” Retrieved from US News and World Report website: https://www.msn.com/en-us/news/politics/what’s-behind-the-climate-emergency-declarations/ar-AAFTjUz

- Stilgoe, J., R. Owen, and P. Macnaghten. 2013. “Developing a Framework for Responsible Innovation.” Research Policy 42 (9): 1568–1580. doi:https://doi.org/10.1016/j.respol.2013.05.008.

- Syberg, K., S. F. Hansen, T. B. Christensen, and F. R. Khan. 2018. “Risk Perception of Plastic Pollution: Importance of Stakeholder Involvement and Citizen Science.” In Freshwater Microplastics: Emerging Environmental Contaminants?, edited by M. Wagner and S. Lambert, 203–221. Cham, Switzerland: Springer Nature. doi:https://doi.org/10.1007/978-3-319-61615-5_10.

- Synolakis, C., and U. Kânoğlu. 2015. “The Fukushima Accident Was Preventable.” Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 373 (2053): 20140379. doi:https://doi.org/10.1098/rsta.2014.0379.

- Szolovits, P. 2019. Artificial Intelligence in Medicine. New York: Routledge.

- Thrasher, J. F., M. J. Carpenter, J. O. Andrews, K. M. Gray, A. J. Alberg, A. Navarro, D. B. Friedman, and K. M. Cummings. 2012. “Cigarette Warning Label Policy Alternatives and Smoking-Related Health Disparities.” American Journal of Preventive Medicine 43 (6): 590–600. doi:https://doi.org/10.1016/j.amepre.2012.08.025.

- Tierney, K. 2018. “Disaster as Social Problem and Social Construct.” In The Cambridge Handbook of Social Problems, Vol. 2, 79–94.

- Tilman, D., M. Clark, D. R. Williams, K. Kimmel, S. Polasky, and C. Packer. 2017. “Future Threats to Biodiversity and Pathways to Their Prevention.” Nature 546 (7656): 73–81. doi:https://doi.org/10.1038/nature22900.

- Toke, D. 2004. The Politics of GM Food: A Comparative Study of the UK, USA and EU. Oxon, UK: Routledge.

- Tonn, B., and D. Stiefel. 2013. “Evaluating Methods for Estimating Existential Risks.” Risk Analysis 33 (10): 1772–1787. doi:https://doi.org/10.1111/risa.12039.

- Travis, J. M. J. 2003. “Climate Change and Habitat Destruction: A Deadly Anthropogenic Cocktail.” Proceedings of the Royal Society of London. Series B: Biological Sciences 270 (1514): 467–473. http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=1691268. doi:https://doi.org/10.1098/rspb.2002.2246.

- Tucker, W. T., and S. Ferson. 2008. “Evolved Altruism, Strong Reciprocity, and Perception of Risk.” Annals of the New York Academy of Sciences 1128: 111–120. doi:https://doi.org/10.1196/annals.1399.012.

- Turner, B. 1978. Man-Made Disasters. London: Wykeham.

- Turner, B. A., and N. F. Pidgeon. 1997. Man-Made Disasters. Oxford, UK: Butterworth-Heinemann.

- UK Department for Business, Energy and Industrial Strategy. (n.d.). “UK Becomes First Major Economy to Pass Net Zero Emissions Law.” Accessed August 23, 2019. https://www.gov.uk/government/organisations/department-for-business-energy-and-industrial-strategy

- European Union. 2019. “Responsible Research and Innovation.” Accessed August 19, 2019. https://ec.europa.eu/programmes/horizon2020/en/h2020-section/responsible-research-innovation

- US National Research Council. 1982. Causes and Effects of Stratospheric Ozone Reduction: An Update. Washington D.C.: National Academies Press.

- Ward, K. 2018. “Social Networks, the 2016 US Presidential Election, and Kantian Ethics: Applying the Categorical Imperative to Cambridge Analytica’s Behavioral Microtargeting.” Journal of Media Ethics 33 (3): 133–148. doi:https://doi.org/10.1080/23736992.2018.1477047.

- Weart, S. 2003. “The Discovery of Rapid Climate Change.” Physics Today 56 (8): 30–36. doi:https://doi.org/10.1063/1.1611350.

- Weart, S. R. 2008. The Discovery of Global Warming. Cambridge, Massachusetts: Harvard University Press.

- World Health Organization. 2008. WHO Report on the Global Tobacco Epidemic, 2008: The MPOWER Package. Geneva, Switzerland: World Health Organization.

- Wright, B. D. 1983. “The Economics of Invention Incentives: Patents, Prizes, and Research Contracts.” The American Economic Review 73 (4): 691–707. http://www.jstor.org/stable/1816567.