Abstract

Mitigating risk from exposure to indoor radon is a critical public health problem confronting many countries worldwide. To ensure effective radon risk management based on social scientific evidence, it is essential to reduce scientific uncertainty about the state of social methodology. This paper presents a review of methodological (best) practices, and sensitivity to bias, in research on public attitudes and behaviours with regard to radon risks. Using content analysis, we examined characteristics of research design, construct measurement, and data analysis. Having identified certain challenges based on established and new typologies used to assess methodological quality, we find a need for attention to (the limitations of) cross-sectional design, representative and appropriate sampling, and a pluralist approach to methods and analysis. Furthermore, we advocate more comparative research, rigorous measurement and construct validation. Lastly, we argue that research should focus on behavioural outcomes to ensure effective radon risk management. We conclude that for any field to thrive, methodological reflexivity among researchers is crucial. Our recommendations serve as a guide for researchers and practitioners seeking to understand and enhance the rigor of social methodology in their field.

Introduction

To evaluate and solidify (future) contributions of the social sciences to ionizing-radiation risk management, it is essential to take stock of both the substantive findings and the methodological state of the art. While researchers continuously do the former, they are far less active with regard to the latter. This is a pity, given that conclusions drawn from research are only as solid as the underlying methodologies (Bouder et al. 2021; Van Oudheusden, Turcanu, and Molyneux-Hodgson 2018). Of course, it is impossible to study the state of social methodology across the whole domain of risk research. In line with Verhagen et al. (2001), it makes more sense to focus on one or more specific research questions, in order to minimize heterogeneity of outcome measures. In this study we focus on research on public attitudes and behaviours towards radon risks.

Background on the field of Radon

Indoor radon is the most important source of exposure to ionizing radiation and one of the primary causes of lung cancer in the general population (World Health Organization 2009). To protect against the dangers of this radioactive gas, legal frameworks were established in national and supranational regulation such as the European Basic Safety Standards Directive. The most recent amendments to the Directive introduce legally binding requirements related to radon. Article 103 in particular stipulates that Member States should develop national radon action plans. Member States are also required to inform the public about the importance of performing measurements and reducing radon concentrations in their homes (European Union 2013, p. 37).

While protective measures for radon measurement (testing) and radon risk reduction (remediation) are relatively cheap and accessible, and authorities have made considerable efforts to promote them, uptake of these measures has still not reached desired levels (Cholowsky et al. 2021; Perko and Turcanu 2020).

Potential explanations point to a gap in the rate and rigor with which social science insights are incorporated into radon risk management (Bouder et al. 2021; Turcanu et al. 2020). Social scientific insights can clarify the uptake of protective measures and the factors (e.g. attitudes) influencing their implementation or lack thereof, thereby guiding risk management and improving performance (Renn and Benighaus 2013). Hence there is a need for more scientifically based action (Lacchia, Schuitema, and Banerjee 2020).

However, flaws in design, analysis or reporting can cause effects to be under- or overestimated, and thereby obstruct effective evidence-based radon management (Leviton 2017; Higgins et al. 2011). To determine the trustworthiness and applicability of evidence, policymakers and researchers need to be aware of its methodological quality.

To date, no comprehensive overview of methodological criteria has been published specifically with regard to social methodology for radon research, as nearly all reviews in the field focus on exposure to radon (e.g. Su et al. 2022), associations between radon and lung cancer (e.g. Rodríguez Martínez, Ruano Ravina, and Torres Duran 2018), mitigation strategies (Khan, Gomes, et al. 2019), or a specific social method (Martell et al. 2021).

However, to define and evaluate the quality of social methodology, we can draw on various research traditions. An extensive criteriology, developed by (statistical) experts, exists in the field of epidemiological research (Deeks et al. 2003; Sanderson, Tatt, and Higgins 2007; Verhagen et al. 1998; Vandenbroucke et al. 2014). Methodologists from this field tend to focus on, for example, the study design, setting, sample design, and measurement of variables. They are mainly concerned with the validity of the design, and the likelihood that a research design generates unbiased results (Verhagen et al. 2001). Our evaluation criteria were strongly influenced by these guidelines. Although the validity typology is historically intertwined with experimental research (see Campbell, Stanley, and Gage 1963; Cook and Campbell 1979), it has also been used extensively in the social sciences (Shadish 2010; Neall and Tuckey 2014). In practice, we reflect on external validity, since evidence needs to be valid beyond a research sample to be of use for radon management. We also discuss internal validity, because it is essential that causal inferences can be drawn about what influences people to take (or not take) protective action against radon.

We also relied on social methodological literature to inform criteria on methodological quality. Because of its inherent pluralism and reliance on direct public assessments, this literature recognizes the pragmatist tradition. According to pragmatist principles, research should be contextually situated without being committed to any one philosophical tradition, and should take a holistic approach (diverse methods, analytic techniques) to fully understand a problem, here radon (Creswell 2009). The underlying assumption is that research studies contribute to the quality of evidence both individually and in relation to each other; quality is a shared responsibility (Loosveldt, Carton, and Billiet 2004). We therefore do not consider the substantive content to be solely important, and include additional criteria that are relevant to the evaluation of quality, such as awareness of design-specific sources of bias and the degree of diversity in methodological approaches. Here, the completeness of evidence comes under scrutiny.

To summarize, in this review we quantify the nature and extent of limitations to methodological designs within the body of evidence on public attitudes and behaviours regarding radon. We then reflect on best practices in minimizing threats to validity and bias, and give recommendations to improve the depth and reach of the evidence base.

Methods

In this paper we conducted a methodological review, that is, a study in which the methodological characteristics of studies focusing on a similar topic are evaluated in order to highlight limitations of design, conduct and analysis. Although there is no published tool for appraising the risk of bias in methodological studies, such a review should be regarded as a type of observational study. Bias was therefore avoided by conducting a comprehensive, reproducible search to generate a systematic and representative sample (Mbuagbaw et al. 2020).

Unfortunately, relevant research for the present methodological study is generally poorly indexed and dispersed across many different academic disciplines. We chose to focus on peer-reviewed literature, since this is where, in principle, we should find methodologically rigorous research.

Article search

From 23 November to 1 December 2020 we searched four databases: Web of Science, Scopus, Medline and Sociological Abstracts (SA). The search strings, designed in cooperation with an experienced university librarian, consisted of combinations of the risk of interest, ‘radon’ (1), and method keywords (2). A separate search (3) was conducted for combinations of ‘radon’ with the methods ‘survey’ and ‘experiment’, because these methods are also commonly used in the natural sciences. In this separate search, limitations regarding the subject (4) were introduced. For an overview of the search strategy, see Table 1.

Table 1. Search string in Web of Science format.

Article selection

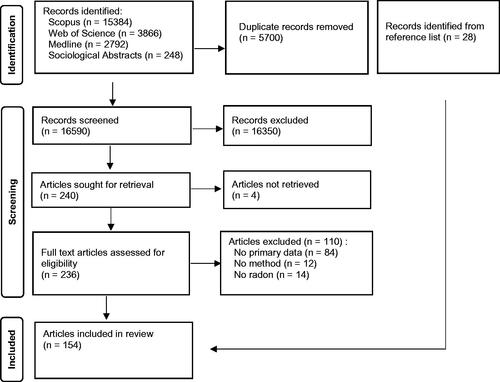

A total of 22,290 identified records were uploaded into EndNote and thereafter to the literature review tool Rayyan (Ouzzani et al. 2016). In the first, automated stage 5,700 duplicates were removed.
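The automated duplicate-removal stage can be pictured with a minimal Python sketch. It does not reproduce how EndNote or Rayyan detect duplicates; the matching rule (same DOI, otherwise same normalized title), the record fields and the example records are all assumptions made for illustration. The last lines only restate the arithmetic of the counts reported above.

```python
import re


def normalize_title(title: str) -> str:
    """Lowercase a title and collapse punctuation/whitespace so near-identical exports match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list[dict]) -> tuple[list[dict], int]:
    """Keep the first record per DOI, or per normalized title when no DOI is present."""
    seen: set[str] = set()
    unique: list[dict] = []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        key = f"doi:{doi}" if doi else f"title:{normalize_title(record['title'])}"
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique, len(records) - len(unique)


# Hypothetical records, as they might arrive from two different databases.
records = [
    {"title": "Radon risk perception: a survey", "doi": "10.1000/example.1"},
    {"title": "Radon Risk Perception - A Survey", "doi": "10.1000/EXAMPLE.1"},
    {"title": "Testing for radon in homes", "doi": None},
    {"title": "Testing for Radon in Homes.", "doi": ""},
]
unique, removed = deduplicate(records)

# Counts reported in the text: identified records minus duplicates gives the screening set.
identified, duplicates = 22_290, 5_700
to_screen = identified - duplicates
```

A rule this simple misses duplicates in which only one copy carries a DOI, which is one reason manual checks remain useful after an automated pass.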

In the second stage, three reviewers screened 16,590 records for eligibility based on title, abstract and metadata. Eligible records included all peer-reviewed social radon articles published in the English language. A sample of about 20% was screened by at least two reviewers, and conflicting decisions (30) were resolved in discussion with a third reviewer.

In the penultimate stage, the full texts of 240 articles that met the initial eligibility criteria were examined. Articles were excluded if they could not be retrieved, presented no primary data (e.g. review articles, excluded to avoid duplication), disclosed no methodological information, and/or only mentioned but did not investigate radon and psycho-social concepts.

In the final stage of study selection, articles were snowballed from the references of the included database articles. The 28 articles resulting from snowballing were screened on the same criteria as the initial database sample.

Data coding

A data extraction form, based on criteriology from classic epidemiological (RCT) and social methodological research, was created in the online tool Qualtrics. For each article, we extracted: 1) bibliographical information, 2) study aim, 3) study design, 4) time-frame, 5) population description, 6) sample strategy, 7) sample size, 8) geographical setting, 9) dependent and independent variables (concepts and indicators), 10) quality assessments (reliability, validity, and qualitative counterparts), 11) type of analysis, 12) unit of analysis, 13) analysis software, 14) data availability (e.g. database), 15) main findings and 16) ethical considerations (conflicts of interest, funding and privacy).

Following a pre-test, a total of ten trained coders extracted the data, and every individual article was reviewed by at least two independent coders. Conflicts (10%) between coders were resolved by two additional independent master coders. The extracted data were later grouped into categories during the data analysis stage.
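As a rough illustration of double coding, the Python sketch below compares two coders' extractions field by field and lists the fields that would go to a master coder. The field names and example codes are hypothetical, and computing the conflict rate per coded field is an assumption; the text does not state whether the reported 10% was calculated per field or per article.

```python
def conflicting_fields(coder_a: dict, coder_b: dict) -> list[str]:
    """Return the extraction fields on which two coders disagree."""
    fields = sorted(set(coder_a) | set(coder_b))
    return [f for f in fields if coder_a.get(f) != coder_b.get(f)]


def conflict_rate(pairs: list[tuple[dict, dict]]) -> float:
    """Share of coded fields that disagree across all double-coded articles (assumed definition)."""
    total = 0
    conflicts = 0
    for a, b in pairs:
        total += len(set(a) | set(b))
        conflicts += len(conflicting_fields(a, b))
    return conflicts / total if total else 0.0


# Hypothetical codes for two articles, each extracted by two independent coders.
pairs = [
    (
        {"design": "quantitative", "time_frame": "cross-sectional", "sampling": "convenience"},
        {"design": "quantitative", "time_frame": "cross-sectional", "sampling": "quota"},
    ),
    (
        {"design": "qualitative", "time_frame": "cross-sectional", "sampling": "convenience"},
        {"design": "qualitative", "time_frame": "cross-sectional", "sampling": "convenience"},
    ),
]
to_resolve = [conflicting_fields(a, b) for a, b in pairs]  # fields passed to master coders
rate = conflict_rate(pairs)
```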

Data analysis

The systematically obtained articles were studied through content analysis; results are presented descriptively in counts and percentages. In line with previous methodological reviews, we grouped results on three dimensions: 1) research design, 2) construct measurement, 3) data analysis (Ketchen, Boyd, and Bergh 2008; Scandura and Williams 2000; Aguinis et al. 2009; Whitten et al. 2007).

Five components of ‘research design’ were considered: the overall design, time-frame, data collection method, design context and sample characteristics. For ‘construct measurement’ we looked at three components of construct validation: validity analysis, reliability tests, and validation techniques for qualitative research. Lastly, for the ‘data analysis’ we looked at the analytic technique and level of analysis. Table 2 provides an in-depth overview of the codes within each component.

Table 2. Taxonomy for content analysis.

In addition to these data, we recorded reflections from the authors of the reviewed articles on the limitations (bias/validity) of their design and/or analysis choices. This provides insight into the limitations of some strategies, and guidance for advancement in the field.

We also reviewed whether the studies focused on cognitive components (knowledge of radon, and perceptions), or behavioural components (testing and mitigation) over time. The cognitive components can be regarded as antecedents to radon behaviour, but we wanted to see if they were studied as such. The coding scheme for this section can be found in Appendix 1.

In order to observe trends over time we reviewed methodological characteristics over three approximately equal time-periods: 1987–1998 (first period), 1999–2009 (second period), 2010–2020 (third period).
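The descriptive tabulation behind such trend comparisons can be sketched in a few lines of Python. The period boundaries come from the text; the article records, the code labels and the helper names are hypothetical and serve only to show how a relative frequency per period is obtained.

```python
from collections import Counter

# Period boundaries as stated in the text (inclusive years).
PERIODS = {
    "first": (1987, 1998),
    "second": (1999, 2009),
    "third": (2010, 2020),
}


def period_of(year: int) -> str:
    """Map a publication year to its review period."""
    for name, (start, end) in PERIODS.items():
        if start <= year <= end:
            return name
    raise ValueError(f"{year} falls outside the review window")


def share_by_period(articles: list[dict], code: str) -> dict[str, float]:
    """Percentage of articles in each period whose codes include `code`."""
    totals = Counter(period_of(a["year"]) for a in articles)
    hits = Counter(period_of(a["year"]) for a in articles if code in a["codes"])
    return {p: round(100 * hits[p] / totals[p], 1) for p in PERIODS if totals[p]}


# Hypothetical coded articles, not taken from the analytical sample.
articles = [
    {"year": 1990, "codes": {"survey", "cross-sectional"}},
    {"year": 1995, "codes": {"survey", "longitudinal"}},
    {"year": 2004, "codes": {"experiment", "cross-sectional"}},
    {"year": 2015, "codes": {"survey", "cross-sectional"}},
    {"year": 2019, "codes": {"interview", "cross-sectional"}},
]
cross_sectional = share_by_period(articles, "cross-sectional")
```

Because an article can carry several codes, percentages computed this way need not sum to 100 within a period.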

Results

In Table 3, we give a longitudinal overview of our analytical sample. We evaluated 154 articles that were published over a 34-year period (see full list in Appendix 2). The number of publications has remained relatively stable, with an average of five articles per year. The relative occurrence of topical components, however, has changed somewhat.

Table 3. Summary of study topics.

The most studied components overall were radon testing and radon perceptions, represented in 70% and 69% of the articles, respectively, followed by knowledge (64%). A clear downward trend in interest in perceptions can be observed: while 76% of the articles published before 1999 investigated this aspect of cognition, the relative frequency declined to 60% in the most recent period. Radon testing was the primary component of investigation overall, but in 1999–2009 (second period) it was discussed in only 57% of the articles.

Remediation was consistently studied the least, compared to the other radon components such as risk perception or radon testing, never in more than 46% of the articles and in only 28% in the latest research period. Here too a downward trend in interest can be observed. Furthermore, in only 35 of the 154 articles, testing and/or remediation were studied as dependent variables.

While four major topics can be distinguished, there is great proliferation in the concepts and dimensions studied within them. Taking radon risk perceptions as an example, Coleman (1993) makes the distinction between personal risk judgements and societal risk judgements. Other authors focus on the perception of the severity of radon risk and susceptibility to radon (Hazar et al. 2014) or radon-induced illness (Weinstein and Sandman 1992), or risk synergies (Rinker, Hahn, and Rayens 2014). This proliferation suggests that many of the social constructs studied within the field are multidimensional and complex.

All mentions of social constructs were given equal weight in Table 3, although not all studies went into equal depth on the topic, because radon was not the primary focus in all articles. A total of 55 articles (37%) investigated radon as part of a broader study on environmental and/or radiological health risks. Park, Scherer, and Glynn (2001), for example, measured people’s risk judgement about four health issues: water contamination, AIDS, heart disease and radon. Some studies also considered interaction effects; the synergistic risk between radon and smoking in particular is frequently explored (e.g. Hampson et al. 2003). The distinction between primary and secondary interest matters because studies exclusively investigating radon have a higher level of detail, and can thus capture behaviours and attitudes more accurately.

Research design

Study design

Concerning methodological approaches, we first looked at the study design. Choices in study design reflect how we see, collect and analyse data. Quantitative explanatory research allows for hypothesis testing with regard to behaviour. Qualitative research, if certain criteria are met, allows for more in-depth study of the context of cognition, affect and behaviour. Neither choice is by definition superior to the other, as both approaches have their limitations. From a pragmatist perspective, we would thus expect to find mixed-methods research or relatively balanced frequencies of qualitative and quantitative methods, in order to compensate for the weaknesses of a single design.

Quantitative research approaches dominated in our analytical sample and were used in 82% of all articles, primarily in explanatory (44%) but also in descriptive (38%) designs (Table 4). Qualitative approaches (10%) and mixed methods (7%) were only marginally used, together in only 27 of the 154 articles. The use of quantitative explanatory research declined over time, as did the use of mixed methods.

Table 4. Summary of study design.

Use of data collection methods (n = 168 methods used) follows that of research approaches. Surveys were used in 70% of all articles; they were consistently popular over the years, and especially so in the most recent decades. Moreover, 16% of the articles conducted experiments, among them survey experiments. Other methods such as interviews, focus groups, media content analysis, educational activities and eye-trackers were used less often.

With the primacy of survey methodology in the field comes awareness of its limitations. Almost 30 articles refer to challenges inherent to surveys. In survey interviews, the presence of a researcher can cause respondents to answer what they think the researcher wants to hear, meaning there is a social desirability bias (remarked by, among others, Cronin et al. 2020). However, self-reports are also susceptible to social desirability (Neri et al. 2018; Gleason, Taggert, and Goun 2021; Nicotera et al. 2006). Respondents’ answers related to action (testing and/or remediation) sometimes reflect how they want to be perceived more than what they will actually do or have done (Losee, Shepperd, and Webster 2020; Jones, Foster, and Berens 2019).

Describing past actions, in particular, poses a risk of recall bias leading to misclassifications (Cronin et al. 2020; Smith and Johnson 1988; Smith et al. 1988). Question wording plays a crucial role too. For instance, Denu et al. (2019) note that when presented with the question ‘Have you tested for radon in this home?’, respondents can focus on the ‘you’ and report ‘no’ even though someone else did or coordinated the testing.

Closely related to the issue of prospective and retrospective measurement is the time frame in which studies are conducted. In the majority of reviewed articles observations were made only once: 79% are cross-sectional studies, and only 21% studied subjects over an extended period of time through a longitudinal design, for instance to assess whether intentions to test or remediate were translated into action (e.g. Ford et al. 1996; Smith, Desvousges, and Payne 1995). There is also a steady increase in the relative frequency of cross-sectional articles, from 69% of the research produced before 1999 to 89% after 2010, with a complementary decrease in longitudinal designs over the same time windows.

The evolution towards cross-sectional studies is counter-intuitive considering that approximately 20 articles mention this design as a limitation of the study. Authors remarked that such studies do not make it possible to determine the temporal relationship between variables (e.g. was the public that tested for radon more knowledgeable about radon prior to testing, or did they become more knowledgeable because they tested?). Furthermore, a non-experimental cross-sectional design does not allow for causal inferences, and relies strongly on potentially inaccurate self-reports of future behaviour, whereby respondents give socially desirable answers to behavioural intention questions but do not actually change their behaviour (Hampson et al. 2000; Laflamme and Vanderslice 2004; Hill, Butterfield, and Larsson 2006; Rinker, Hahn, and Rayens 2014; Huntington-Moskos et al. 2016; Cronin et al. 2020; Larsson et al. 2009; Poortinga, Cox, and Pidgeon 2008; Nwako and Cahill 2020; Martin et al. 2020; Poortinga, Bronstering, and Lannon 2011). While we have seen that many studies try to circumvent the limitation of a one-off design through retrospective and prospective questions, for instance by asking people if they “have taken actions to reduce radon exposure” or “intended to engage in remedial actions” (e.g. Mazur and Hall 1990), such questions are susceptible to bias due to unknown practical barriers (e.g. ability and willingness to pay for mitigation) and social desirability.

Population sampling

Three parameters are important for population sampling: who the respondents are, how many there are, and how they are selected. In Table 5, we summarize the characteristics of the studied populations. When more than one population was studied in the same article, all of them were taken into account.

Table 5. Summary of sample characteristics.

Social radon research has been oriented mostly towards the general residential population (84%). Frequently, however, a detailed description of the study sample was missing. In 88 out of 129 articles the respondents could at most be classified as ‘occupants’. In 37 articles, however, the authors specified that they studied homeowners, in three articles tenants, and in one landlords. This distinction matters, because people can have different responsibilities with regard to testing and remediation, and differences in socioeconomic status may affect results if not controlled for (Hahn, Rayens, Kercsmar, Adkins, et al. 2014; Hahn, Rayens, Kercsmar, Robertson, et al. 2014; Rinker, Hahn, and Rayens 2014).

Studies were also interested in the cognition and behaviour of professionals (16%), specifically health professionals (13), scientific experts (6), and workers in public and private industries such as real estate officers and government employees (5).

Over time, interest in schools and in other specific populations increased. For the school population the relative frequency increased from 5% to 23%, meaning more university students (8), university staff (2), school children (4), school staff (3) and day-care personnel (1) were studied. This also includes research on an aggregate school (2) or day-care (1) level. The category of specific populations includes parents or guardians (5), media publics (3), radon mine visitors (1) and tourists (1).

Regarding the selection of respondents, random selection was reported in 38% of all articles, including random sampling (55), systematic sampling (3) and cluster sampling (1). Yet the relative frequency of random sampling declined over time, from 46% of studies in the first observed time window to 28% in the most recent one (from 30 to 15 studies in absolute terms). Non-probability sampling strategies were used in 68% of studies and included convenience sampling (94), quota sampling (9) and snowball sampling (2). This relative frequency increased to 75% in the most recent time period.

It is important to note that sampling strategies were often poorly described, and that for at least 44% of the articles classification was made by the reviewers based on the description of the sampling procedure. Furthermore, justifications for using a sampling technique were rarely given in terms of appropriateness for the research aim and design. As a consequence, sampling strategies did not always align with study objectives, for instance when parents were sampled to assess the knowledge and perceptions of the national population at large.

In nearly 70 articles, authors recognised the use of non-probability sampling techniques as a clear limitation. They acknowledged that results from non-random samples cannot be generalized to more diverse populations because of, among other things, socio-demographic differences (e.g. Adams, Dewey, and Schur 1993; Baldwin, Frank, and Fielding 1998; Clifford, Hevey, and Menezes 2012; Cronin et al. 2020; Evans et al. 2015; Sanborn et al. 2019; Huntington-Moskos et al. 2016; Butler et al. 2017). In only 23 articles do the authors state, legitimately or not, that findings can be generalized to the broader population. Thus, the studies are limited in external and internal validity. Furthermore, convenience sampling risks a self-selection bias towards those most interested in radon, or those who have recently tested their house (Clifford, Hevey, and Menezes 2012). This has consequences for the conclusions that can be drawn from such data.

However, random samples too are sometimes difficult to generalize from if sample sizes are low. The average sample size of quantitative studies has declined, from 2554 respondents on average per article in 1987–1998 and 2681 respondents in the second period to 790 respondents on average in the most recent period. All samples fall in the range between the maximum of 125,000 respondents (Kendall et al. 2016) and the minimum of 44 respondents (Evdokimoff and Ozonoff 1992).

Even with large sample sizes, studies can still be limited by low (item) response rates, as people may be unwilling (Neri et al. 2018) or unable to respond (Ford and Eheman 1997). For example, the study by Ford and Eheman (1997) originally had a nationally representative sample of 40,000 respondents; however, only 70% had heard of radon and only 5% had tested, which resulted in large standard errors and low external validity, as stated by the authors of the study. Large nationally representative samples may thus not fit every radon study objective.
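Why a small subgroup inflates standard errors even within a large sample can be shown with a short Python sketch. It uses the textbook standard error of a proportion under simple random sampling, which ignores any survey design effects, and it assumes for illustration that the 5% who tested is taken over the full sample of 40,000. It does not reproduce the estimates of the cited study.

```python
from math import sqrt


def proportion_se(p: float, n: int) -> float:
    """Standard error of a sample proportion under simple random sampling."""
    return sqrt(p * (1 - p) / n)


full_sample = 40_000
# Assumption for illustration: the 5% who tested is a share of the full sample.
testers = round(0.05 * full_sample)

# Worst-case proportion (p = 0.5), estimated on the full sample versus among testers only.
se_full = proportion_se(0.5, full_sample)
se_testers = proportion_se(0.5, testers)
```

Under these assumptions any estimate restricted to testers rests on 2,000 respondents, so its standard error is roughly four and a half times that of an estimate based on the full sample.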

In qualitative research generalizability is not the objective, and consequently sample sizes are generally smaller. The average sample sizes observed in the reviewed articles were considerable compared with what is common in qualitative research, with an overall average of 120 respondents per study. The sizes range from 4 interviews (Alsop and Watts 1997) to 1491 telephone calls (Macher and Hayward 1991). There were two studies for which the exact sample size could not be determined (Immé et al. 2014; Mazur 1987).

Geographical context

When describing the studied populations, the geographical context becomes relevant, as this too is a defining characteristic of the respondents. In Table 6, we describe the location and administrative level at which the studies were conducted. The study location has changed considerably over time, but North American literature (71%) still dominates the field, namely the United States (104) and Canada (6). The relative frequency of studies conducted in North America declined from 92% to 58% in the latest time period. This decline is linked to the rise of European studies (23): United Kingdom (13), Italy (7), Sweden (4), Ireland (3), Italy (3), Switzerland (3), Romania (1), Belgium (2), the Netherlands (1), Finland (1), Bulgaria (1) and Slovenia (1). Pioneering research was conducted in the United Kingdom, Ireland and Sweden. From the 2000s on, we see research conducted in Asia, first in Pakistan (3) and later also in Turkey (2), Saudi Arabia (1), Iran (1) and Korea (1).

Table 6. Summary of context characteristics.

The leading role of literature from the United States has implications for radon research, as many findings and measurement instruments originate from this one country. Language and cultural differences can influence the accuracy and applicability of these instruments elsewhere. Consequently, there is uncertainty with regard to the generalizability of the findings and the policy advice to settings with different demographic compositions, cultures, media, economic and/or political systems, and histories. From the review we learned that the degree to which people have tested for radon, their risk perceptions and their knowledge varied as a function of communication strategies and other radon policies, e.g. those related to financial support (Hahn, Rayens, Kercsmar, Robertson, et al. 2014; Neri et al. 2018; Petrescu and Petrescu-Mag 2017; Hazar et al. 2014; Jones, Foster, and Berens 2019). It is thus important to take context characteristics into account when studying people’s cognition and behaviour (Poortinga, Cox, and Pidgeon 2008).

Comparative research can shed light on the transferability of results across settings, which is why we looked at the level at which a study was conducted. Most frequently, the setting was defined at a local (67) or regional (56) level. Regional studies are those in which a geographical region or (American) state is mentioned, while local studies described one or multiple village(s), city/cities or province(s). To a lesser extent, settings were described at an aggregate national (30), meaning country, level. There was only one international study, which studied the Belgian and Slovenian populations (Perko et al. 2012). However, despite little cross-country comparison, tens of studies included participants from different regions within the same country, so there was some comparative research at a lower geographical level.

Construct measurement

Reliability tells us something about how reproducible findings are under the same circumstances, controlling for random error, while validity determines the extent to which methods measure what they claim to measure (Mohajan 2017). From Table 7, we deduced that most studies did not report on construct validation.

Table 7. Summary of construct measurement reports.

Table 8. Summary of data analysis techniques.

The highest frequency of reporting was found for reliability (16), with the preferred reliability criterion being Cronbach’s alpha. This psychometric test gives an indication of internal consistency, i.e. how well multiple items measure the same concept. A potential explanation for the low rate of reliability testing is the use of single indicators in 60% of the articles. This suggests that much of the research assumes, without verification, that constructs are unidimensional. Furthermore, the lack of test-retest reliability, or reliability across time, could likely be explained by the prevalence of cross-sectional studies.
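For readers less familiar with the statistic, Cronbach’s alpha for k items is commonly computed as k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of respondents’ total scores. The Python sketch below implements that standard formula with sample variances. The item scores are hypothetical, and the function name and data layout are choices made for this illustration rather than anything used in the reviewed studies.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score per respondent, summing across items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses: three Likert-type items answered by five respondents.
items = [
    [1, 2, 3, 4, 5],
    [2, 3, 4, 5, 6],
    [1, 2, 3, 4, 5],
]
alpha = cronbach_alpha(items)
```

In this constructed example the three items rise and fall together perfectly, so alpha reaches its upper bound of 1. The formula requires at least two items, which is why the statistic cannot be computed for a single-indicator measure.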

Validity assessments were performed even less frequently than reliability assessments; construct and/or factorial validity were evaluated in only 8 articles. These measures indicate how well the measures fit the theories for which they were designed.

Notably, 20 articles reported using previously validated instruments without reporting any reliability or validity measurements themselves. However, using existing instruments does not guarantee that the results produced will correspond to real-world values. Factors such as the characteristics of participants, the setting of the study and the stimuli could differ from the original research in which the instruments were developed, and affect psychometric properties.

Reliability and validity can only tell us something about the quality of quantitative research; for qualitative studies other procedures exist for assessing rigor: interrater agreement (6), triangulation (1) and protocol pre-testing (1). However, in the most recent time period such assessments were nearly absent.

Data analysis

Lastly, we looked at how data were analysed in radon research and whether the analyses allowed for theory testing and for uncovering the complexities and mechanisms of radon behaviour (see Table 8).

We found that 24% of the articles exclusively presented descriptive statistics, a relative frequency that remained consistent over time. All other articles used multiple analytic approaches. However, these include associations/correlations (45%) and (co)variances (37%), which likewise do not allow for testing of hypotheses and causation.

Regression was the most prevalent approach (49%). However, multilevel modelling, a very useful technique for simultaneously analyzing individual and contextual data, was used in only two studies (Poortinga, Bronstering, and Lannon Citation2011; Poortinga, Cox, and Pidgeon Citation2008). Other, less frequently used techniques included citizen-science-like educational activities (3%), content analysis (2%), thematic analysis (2%), and grounded theory (2%).
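
Fitting a multilevel model requires a dedicated statistical library, so the sketch below shows only a common preliminary step: the intraclass correlation ICC(1) from a one-way ANOVA decomposition, which indicates how much of the variance in an outcome lies between contexts rather than between individuals. It is not a multilevel model and is not drawn from the cited studies. The function name, the restriction to equally sized groups, and the regional scores are assumptions made for illustration.

```python
from statistics import mean


def icc1(groups):
    """Return ICC(1) for equally sized groups of individual scores."""
    k = len(groups)
    n = len(groups[0])
    if k < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need at least two groups of equal size (two or more members each)")
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Mean square between groups (contextual variation).
    ms_between = n * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)
    # Mean square within groups (individual variation).
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    ms_within = ss_within / (k * (n - 1))
    denominator = ms_between + (n - 1) * ms_within
    if denominator == 0:
        raise ValueError("ICC is undefined when all scores are identical")
    return (ms_between - ms_within) / denominator


# Invented risk-perception scores for three respondents in each of three regions.
regions = [
    [2, 3, 3],
    [5, 6, 5],
    [8, 7, 9],
]
icc = icc1(regions)
print(round(icc, 3))
```

A value close to 1, as in this invented example, would mean that most variation sits at the contextual level, which is the situation in which analysing individuals alone is most likely to mislead.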

It would be worthwhile to further diversify the techniques through which data are processed. For example, network analysis and structural equation modelling could be used to model multiple, indirect and/or interrelated influences between actors or variables.

We also looked at the level of analysis, in order to know where agency was usually situated in the social radon studies. Agency is primarily located at the individual level (56%), closely followed by the group level (42%). The relatively high frequency of the latter is explained by the prevalence of household studies, in which family members responded on behalf of their household. Furthermore, experimental studies compared groups rather than individuals. Since radon testing and remediation are in essence an individual responsibility, the focus on the lower level of analysis is understandable. However, systemic characteristics can influence behaviour too.

In 4% of the articles, the analysis was performed on an aggregate national level.

The methodological road ahead

Among the main findings, we identified six key methodological challenges related to data collection, amalgamation, and analysis, which affect the advancement of radon risk research by threatening the validity of findings. Studies in the field generally lack contextual comparison, methodological diversity, adequate samples, longitudinal inquiry, a behaviour-explanatory focus and construct validation. In the following discussion, we consider needs and directions for research and practice. Awareness of, and attention to, biases and threats to validity arising from methodological practices could stimulate better, that is, validated and holistic, social research in the field of radon.

Attend to socio-cultural diversity

The ultimate purpose of social radon research is to learn how to improve the health of the public through their knowledge and behavior, and to use this knowledge to inform public health practice via policy and other interventions. However, translation from research to practice often lags in the larger area of cancer prevention and control, because policy-makers are unable to determine the generalizability and breadth of applicability of research findings (Steckler and McLeroy Citation2008). Furthermore, radon is a global issue, but the socio-economic, political, cultural and historical specificities that shape behaviour differ across contexts (Poortinga, Cox, and Pidgeon Citation2008). Yet it is impossible to test theories and interventions under all possible conditions, owing to conceptual, methodological and logistical complexity, and to costs more generally (Salway et al. Citation2011). In applied fields such as radon research, it is thus necessary to emphasize and strengthen external validity.

The concept of external validity is twofold. First, it refers to generalizability, or the degree to which findings are transferable to similar contexts in terms of, inter alia, socio-political, historical, and cultural characteristics. Second, it refers to applicability, or the degree to which findings are transferable to dissimilar contexts.

From the review we learn that there is great uncertainty about the degree to which results are generalizable to similar contexts, or applicable to contexts with different demographic compositions, cultures, media, economic and/or political systems, and histories (Hahn, Rayens, Kercsmar, Robertson, et al. Citation2014; Neri et al. Citation2018; Petrescu and Petrescu-Mag Citation2017).

Strengthening external validity requires that researchers better describe context features, and capture and explain those features that affect outcomes related to protective behaviour in the context of radon (Leviton Citation2017; Poortinga, Cox, and Pidgeon Citation2008). The latter can be achieved through replication of research in similar and dissimilar contexts, under conditions as close as possible to the original research (Steckler and McLeroy Citation2008). It can also be achieved through more (international) comparative research. Besides providing insights into how contextual characteristics impact health behaviour, such research offers the opportunity to explore local, national and global influences on health, identify common barriers to protective behaviour, and critically assess current policies and interventions in order to identify effective and best practices (Salway et al. Citation2011). Yet from the review we know that there is only one cross-national comparative study, and only a few within-country studies encompassing different regions, suggesting that context-dependency is seldom studied.

It is important to note that while cross-national research is an interesting avenue for further research, comparison of results warrants caution. Comparability issues arise whenever studies addressing the same question (here: how to motivate people to test for and mitigate radon) are compared across different cultures and time periods. Meaningful comparison requires equivalence: using the same mode, sampling procedure, and questions in terms of wording and meaning, in the same context (question order). The same approach is also needed for comparison within culturally diverse nations, for instance those with multiple national languages (Weisberg Citation2005, pp. 303–305). A challenging but innovative approach for the field of radon would thus be to develop new studies to be conducted in parallel in several nations.

Currently, most instruments for measuring the attitudes and behaviours of the public originate from a single country. These instruments, however, are not necessarily appropriate for use in comparative studies. When instruments are used in contexts other than those in which they were developed, adaptations to new populations (differing in language and/or country), settings, or periods may be necessary. One well-known form of adaptation is culture-sensitive translation. What such adaptations should look like in order to remain consistent with the original theoretical foundation of concepts and theories is an empirical question that warrants further investigation (Leviton Citation2017). There is thus a need for more cross-country construct validation.

All these considerations will likely become increasingly important, since we see that funding streams such as the European Commission’s Framework programmes increasingly encourage international collaborations and cross-national comparative research (Salway et al. Citation2011).

Use a multimethod approach

Social studies in the field of radon are dominated by survey research, represented in seven out of ten studies. Like all methods, surveys have limitations, and some were explicitly mentioned by the authors of the reviewed articles (Neri et al. Citation2018; Losee, Shepperd, and Webster Citation2020; Denu et al. Citation2019; Cronin et al. Citation2020). Although these accounts of potential sources of error are useful, the field of survey research has made many advances in documenting and addressing response errors and other survey errors (Battaglia et al. Citation2016; Tourangeau, Rips, and Rasinski Citation2000; Tourangeau Citation2020). For instance, to overcome recall bias, one could ask a second person in a household to fill in the survey, or use event timelines based on well-known national events or individual/local history to help respondents situate behavior accurately in time (Moreno-Serra et al. Citation2022). However, we observe from the review that knowledge about biases and remedial techniques from the larger survey research field is outdated or incomplete in the field of radon. Furthermore, there has been little research measuring the impact of biases on findings. The field would therefore benefit from introducing robustness tests or instruments (e.g. a social desirability scale) to assess existing biases. We acknowledge that the use of additional instruments can introduce new risks, such as survey overload and non-response. These may already cause problems in the field, so trade-offs and optimal approaches specific to the field of radon need to be empirically evaluated.

Despite the predominance of the survey method in the reviewed studies, and the relevance of strengthening this method given its popularity, we do not want to take an isolationist perspective by encouraging the use of only a narrow range of methods or a single method. Although methods must serve the study aim, there are always multiple approaches to a research problem. Pluralism in views and skills is often recognized as an asset, and it is important that pluralism in methods for obtaining and analyzing data is recognized as one too (Dow Citation2012).

Diversity in methods can stimulate collaboration and knowledge transfer across disciplines, and promote interdisciplinarity in the field of radon. Pluralism and mixing of data or methods also stimulate data triangulation, which can help validate claims and enhance the power of prediction. Mixing of methodologies (e.g. survey and interview), a profound form of triangulation, helps to transcend research paradigms and to explain contradictions and connections between quantitative and qualitative data (Olsen Citation2004; Petrescu and Krishen Citation2019; Shorten and Smith Citation2017). However, the main reason for advocating more diversity is that it could increase methodological knowledge in the field of radon, and provide insights into how to overcome the radon risk.

Study longitudinal change

The radon research in this review was conducted over a period of 34 years, with varying time frames, measuring past, current, or future behaviours. Some of these measurements are more reliable than others. Past behaviour may be less well remembered than current behaviour, and actual future behaviour may differ from predicted and reported future behaviour due to unforeseen or undisclosed hindrances (Cronin et al. Citation2020; Larsson Citation2015). This bias can partly be overcome through longitudinal research, more specifically panel analysis that studies the same individuals on the same characteristics over time, or by questioning respondents directly after they perform critical actions such as testing or mitigating. This would require cooperation between social and technical researchers and practitioners. Unfortunately, studies of this type are exceptional and, paradoxically, their prevalence is in decline.

Given that almost all psychological theories are theories of change (Wang et al. Citation2017), and that striving for behaviour change is the essence of radon research, it is only appropriate to use longitudinal research to observe actual change and to strengthen confidence in causal inferences about the predictors of this behaviour change. Other arguments for more longitudinal research are that the field would better understand temporal relationships between variables, the evolution of attitudes and behaviours over time, and the duration of intervention effects (Peterson and Howland Citation1996; Hampson et al. Citation1998; Laflamme and Vanderslice Citation2004).

Optimize the sampling design

Most research studies a general residential population, but we now see a tendency towards the study of more specific audiences. There is still room to improve the level of detail in which the study sample is described, since individual differences, e.g. with regard to homeownership, can affect results if not controlled for (Hahn, Rayens, Kercsmar, Robertson, et al. Citation2014; Larsson et al. Citation2009).

Moreover, we see that participants were randomly selected in only about one in three studies, and that use of this technique is declining over time. This means that in the majority of cases, results cannot be generalized due to self-selection bias. To allow for extrapolation of findings, researchers must sample randomly.
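
As a minimal sketch of what random selection means in practice, the example below draws a simple random sample, in which every unit of a sampling frame has the same probability of selection. The frame of household identifiers, the sample size and the seed are all hypothetical; real studies would typically use more elaborate designs (e.g. stratified or address-based sampling).

```python
import random


def draw_simple_random_sample(sampling_frame, sample_size, seed=None):
    """Draw `sample_size` distinct units from the frame with equal probability."""
    if sample_size > len(sampling_frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    # A dedicated, seeded generator makes the draw reproducible and documentable.
    rng = random.Random(seed)
    return rng.sample(sampling_frame, sample_size)


# Hypothetical frame of 1,000 households in a radon-prone area.
frame = [f"household_{i:04d}" for i in range(1, 1001)]
sample = draw_simple_random_sample(frame, 50, seed=2024)
print(len(sample), len(set(sample)))
```

Because selection depends only on the random draw, and not on who volunteers, this design avoids self-selection at the sampling stage; non-response after selection remains a separate problem.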

However, even studies with randomized sampling designs suffer from limited generalizability and from the unviability of complex statistical modelling, due to small sample sizes resulting mainly from low response rates. Findings from the review indicate that if the objective is to understand predictors of testing, and especially of remediation, samples should be drawn from areas with (likely) more testers and/or remediators, or at least by researchers familiar with the nuances of defining, and conducting research with, radon risk populations. Large national studies (Ford and Eheman Citation1997) or omnibus studies focusing on several topics or risks do not necessarily operate with optimal sample compositions. For instance, a considerable number of individuals with little or no familiarity with radon could leave (sophisticated) questions about radon unanswered, lowering the usable sample size. The central argument here is that the sampling design must be chosen to fit the research aim.

Lastly, more than half of the radon studies focus on differences between individuals, and when groups are studied this is usually in an experimental design. While this is not problematic per se, it neglects that civil society exists. Radon research would benefit from a social psychological approach, which evaluates the possible discrepancy between what someone does and (normatively) ought to do, as well as how individual actions are shaped by others through processes of social influence. This could be achieved through comparison between study populations, or analysis on aggregate and/or multiple levels.

Measure in the plural way

Scientific research aims at producing reliable and reproducible results. However, our review points out that most studies used items of unknown construct validity. This methodological challenge seems to afflict the wider field of radiological protection (Perko et al. Citation2019). This shortcoming should not necessarily be attributed to researchers’ unwillingness to assess the quality of instruments; the underlying cause is likely that the use of single items prevents validation from being performed.

Furthermore, there is a risk of respondent-related measurement error, caused by respondents giving inaccurate or false answers. Researchers who seek to minimize such error must optimize their question wording and study the cognitive response process. According to Tourangeau, Rips, and Rasinski (Citation2000), bias can occur in all stages of the response process. Many errors arise from misinterpretation of questions and/or instructions. Being uninformed about a topic also does not deter people from answering, and the answers of uninformed or unmotivated respondents differ from those of informed respondents, thus creating response bias (Goldsmith and Walters Citation2015). From the review we learn that people uninformed about radon are questioned, yet we do not know how this impacts the validity of the aggregated measures of public opinion and behaviour. Thus there is a need to identify and control for response bias. An explanatory text or video about radon, and a simplified and shorter questionnaire, could be given to those with little or no radon knowledge. What the impact of this experimental approach would be on people’s knowledge, but also on their response style, needs further investigation. Ambiguity in question wording can also impede comprehension. Examples are ambiguity over the referent of radon risk (general/personal risk) in “Are you aware of the health risks associated with exposure to radon?”, or over location (current/past residence) in “have you ever tested your home for radon”. The optimal conceptualization depends on the purpose of the study, but it is essential that researchers reflect on the wording they choose (Tourangeau, Rips, and Rasinski Citation2000).

By using multiple items, one can neutralize measurement error unique to some indicators, and measure complex constructs more rigorously. However, it is essential that research assesses whether the indicators measure the same underlying construct, by means of validity testing.

Take a multidimensional approach to study behaviour

From this review we conclude that a large proportion of radon studies focus on people’s knowledge and perceptions, and that the use of single items can result in less nuanced measurement of these concepts. We also know that cognitive awareness of the radon risk, i.e. being knowledgeable and concerned, is not the most important explanatory variable for taking action against radon (Johnson and Luken Citation1987; Cronin et al. Citation2020). One action, testing, has been studied the most; however, the causal mechanisms were explored in only a few cases. Furthermore, research on remediation is declining over time, even though detecting radon levels does not in itself reduce the risk, which indicates the need for insight into predictors of remediation (Weinstein, Man, and Roberts Citation1990). Good practice, in the form of making the explanation of health behavior the central research aim, can be seen in the studies of Mazur and Hall (Citation1990) and Khan, Krewski, et al. It is also essential that complex constructs that explain behaviour, e.g. risk perceptions, are measured rigorously by capturing conceptually relevant dimensions from the literature. For instance, Coleman’s (Citation1993) study is dimension-rich, in that she considered the affective and cognitive dimensions of risk, and distinguished between social and personal risk judgements. We conclude that there is a need for dimension-rich and behaviour-focused radon research.

Conclusion

There is a growing consensus on the desirability of incorporating social insights into scientific risk analysis as well as the public’s understanding of risk (Klinke, Renn & Goble, 2021). This trend is also apparent in the field of ionizing radiation, where the large EURATOM Horizon 2020 Radonorm project, which aims at managing risk from radon, explicitly acknowledges the importance of social considerations. Yet less attention is given to the specificities of social methodologies in the field of radon and the implications of methodological design choices for knowledge creation, bias and validity. The findings from our review are therefore new, and offer relevant insights to the field.

Our review of the social methodology in radon risk research shows that there is over-reliance on some, and disregard for other aspects of methodology, which limits internal, external, and construct validity of research. The most important threats were: limited consideration of context, predominance of cross-sectional evaluations and survey research, inadequate sampling, little construct validation and finally, insufficient causal explanations of testing and remediating behaviour. This knowledge is important for evaluating conclusions researchers have drawn, and can currently draw, about attitudes, and behaviours of people concerning radon.

Our recommendations to limit, or at least reflect on, methodological threats, can strengthen and expand the social scientific evidence on how to manage risks from radon by stimulating more diverse techniques for data collection and analysis, validation, and better methodological design specifications. This could generate evidence which is rich in perspectives, and robust, for policy-makers and researchers to use and build on. We also hope our review will contribute to improving the quality of reporting about methodology in social radon studies.

Nevertheless, more methodological reviews are needed to uncover specific challenges in other fields of risk research, and to further develop criteriology to evaluate social contributions. We also recommend more systematic sharing of research tools, to promote standardized and validated instruments.

Finally, we want to acknowledge some limitations of our study. First, because our search strategy was based on the presence of keywords in the abstract, we likely missed articles that did not describe their method in the abstract, which might particularly be true for qualitative research. Furthermore, some very specific method keywords might have been overlooked. However, we believe that the search strategy was appropriate for our study objective and that the most common methods in social science were included in the search. Second, our paper did not include grey literature, and we focused only on English-language publications. Therefore, we likely missed literature, particularly from Asia. However, our sample certainly includes accessible and peer-reviewed studies, whose practices could inform policy or research. Lastly, we did not directly link the design and analysis characteristics of studies to research questions and hypotheses. Although this could be seen as a limitation, we indirectly captured the research objectives by focusing only on studies that measure public attitudes and/or behaviours regarding radon. There is also consensus that some practices, e.g. random sampling, reduce systematic bias and produce (quantitative) data of better quality. While other practices, e.g. longitudinal study, are not superior to cross-sectional research, they offer important insights to the literature through their unique perspective, which may now be lacking. So, our methodological recommendations remain valid regardless of the research objectives of the individual studies. Furthermore, validity threats from methodological choices are not unique to radon; the suggestions made can raise awareness, trigger reflection, and serve as guidance for the broader field of risk management.

Acknowledgments

We would like to thank Johanne Longva at the NMBU library for her help with the development of the search protocol. This project has received funding from the Euratom research and training programme 2019–2020 under grant agreement No 900009, as well as from the Research Council of Norway, grant nos. 313070 and 223268.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1 NORM (naturally occurring radioactive materials) residues are an important pathway of radon to the atmosphere. We thus initially conducted a wider search; the findings of this search fall beyond the scope of the present article. The NORM articles unrelated to indoor radon were excluded at the full-text screening stage. The full search strategy can be found in appendix 3.

2 There are several articles in which more than one quantitative or qualitative method, or a combination of both, is used; as such, the number of methods is higher than the number of articles.

4 First Decade: 1987-1998, Second Decade: 1999-2009, Third Decade: 2010-2020

References

*References marked with an asterisk indicate articles included in the systematic review.

- Adams, M., J. Dewey, and P. Schur. 1993. “A Computerized Program to Educate Adults about Environmental Health Risks.” Journal of Environmental Health 56 (2): 13–17.

- Aguinis, H., C. A. Pierce, F. A. Bosco, and I. S. Muslin. 2009. “First Decade of Organizational Research Methods: Trends in Design, Measurement, and Data-Analysis Topics.” Organizational Research Methods 12 (1): 69–112. doi:10.1177/1094428108322641.

- Alsop, S., and M. Watts. 1997. “Sources from a Somerset Village: A Model for Informal Learning about Radiation and Radioactivity.” Science Education 81 (6): 633–650. doi:10.1002/(sici)1098-237x(199711)81:6<633::aid-sce2>3.0.co;2-j.

- Baldwin, G., E. Frank, and B. Fielding. 1998. “U.S. Women Physicians’ Residential Radon Testing Practices.” American Journal of Preventive Medicine 15 (1): 49–53. doi:10.1016/S0749-3797(98)00030-0.

- Battaglia, M. P., D. A. Dillman, M. R. Frankel, R. Harter, T. D. Buskirk, C. B. McPhee, J. M. DeMatteis, and T. Yancey. 2016. “Sampling, Data Collection, and Weighting Procedures for Address-Based Sample Surveys.” Journal of Survey Statistics and Methodology 4 (4): 476–500. doi:10.1093/jssam/smw025.

- Bouder, F., T. Perko, R. Lofstedt, O. Renn, C. Rossmann, D. Hevey, M. Siegrist, et al. 2021. “The Potsdam Radon Communication Manifesto.” Journal of Risk Research 24 (7): 909–912. doi:10.1080/13669877.2019.1691858.

- Butler, K. M., M. K. Rayens, A. T. Wiggins, K. B. Rademacher, and E. J. Hahn. 2017. “Association of Smoking in the Home with Lung Cancer Worry, Perceived Risk, and Synergistic Risk.” Oncology Nursing Forum 44 (2): 55–63. doi:10.1188/17.onf.e55-e63.

- Campbell, D. T., J. C. Stanley, and N. L. Gage. 1963. Experimental and Quasi-Experimental Designs for Research. Boston, MA: Houghton, Mifflin and Company.

- Cholowsky, N. L., J. L. Irvine, J. A. Simms, D. D. Pearson, W. R. Jacques, C. E. Peters, A. A. Goodarzi, and L. E. Carlson. 2021. “The Efficacy of Public Health Information for Encouraging Radon Gas Awareness and Testing Varies by Audience Age, Sex and Profession.” Scientific Reports 11 (1): 11906. doi:10.1038/s41598-021-91479-7.

- Clifford, S., D. Hevey, and G. Menezes. 2012. “An Investigation into the Knowledge and Attitudes towards Radon Testing among Residents in a High Radon Area.” Journal of Radiological Protection: Official Journal of the Society for Radiological Protection 32 (4): N141–N147. doi:10.1088/0952-4746/32/4/n141.

- Coleman, C.-L. 1993. “The Influence of Mass Media and Interpersonal Communication on Societal and Personal Risk Judgments.” Communication Research 20 (4): 611–628. doi:10.1177/009365093020004006.

- Cook, T. D., and D. T. Campbell. 1979. Quasi-Experimentation: Design & Analysis Issues for Field Settings. Boston: Houghton Mifflin.

- Creswell, J. 2009. “Research Design: Qualitative, Quantitative, and Mixed-Method Approaches.” 3rd ed. Los Angeles: Sage.

- Cronin, C., M. Trush, W. Bellamy, J. Russell, and P. Locke. 2020. “An Examination of Radon Awareness, Risk Communication, and Radon Risk Reduction in a Hispanic Community.” International Journal of Radiation Biology 96 (6): 803–813. doi:10.1080/09553002.2020.1730013.

- Deeks, J. J., J. Dinnes, R. D’Amico, A. J. Sowden, C. Sakarovitch, F. Song, M. Petticrew, and D. G. Altman, European Carotid Surgery Trial Collaborative Group. 2003. “Evaluating Non-Randomised Intervention Studies.” Health Technology Assessment (Winchester, England) 7 (27): iii–iix. doi:10.3310/hta7270.

- Denu, R. A., J. Maloney, C. D. Tomasallo, N. M. Jacobs, J. K. Krebsbach, A. L. Schmaling, E. Perez, et al. 2019. “Survey of Radon Testing and Mitigation by Wisconsin Residents, Landlords, and School Districts.” WMJ: Official Publication of the State Medical Society of Wisconsin 118 (4): 169–176.

- Dow, S. C. 2012. “Methodological Pluralism and Pluralism of Method.” In Foundations for New Economic Thinking: A Collection of Essays, edited by S. C. Dow, 129–139. London: Palgrave Macmillan UK.

- European Union. 2013. “Basic Safety Standards. Council Directive 2013/59/Euratom.” Official Journal of the European Union 57: 37. doi:10.3000/19770677.L_2014.01.

- Evans, K. M., J. Bodmer, B. Edwards, J. Levins, A. O’Meara, M. Ruhotina, R. Smith, T. Delaney, R. Hoffman-Contois, L. Boccuzzo, H. Hales, and J. K. Carney. 2015. “An Exploratory Analysis of Public Awareness and Perception of Ionizing Radiation and Guide to Public Health Practice in Vermont.” Journal of Environmental and Public Health 2015: 476495. doi:10.1155/2015/476495.

- Evdokimoff, V., and D. Ozonoff. 1992. “Compliance with EPA Guidelines for Follow-up Testing and Mitigation after Radon Screening Measurements.” Health Physics 63 (2): 215–217. doi:10.1097/00004032-199208000-00012.

- Ford, E. S., and C. R. Eheman. 1997. “Radon Retesting and Mitigation Behavior among the U.S. Population.” Health Physics 72 (4): 611–614. doi:10.1097/00004032-199704000-00013.

- Ford, E. S., C. R. Eheman, P. Z. Siegel, and P. L. Garbe. 1996. “Radon Awareness and Testing Behavior: Findings from the Behavioral Risk Factor Surveillance System, 1989-1992.” Health Physics 70 (3): 363–366. doi:10.1097/00004032-199603000-00006.

- Gleason, J. A., E. Taggert, and B. Goun. 2021. “Characteristics and Behaviors among a Representative Sample of New Jersey Adults Practicing Environmental Risk-Reduction Behaviors.” Journal of Public Health Management and Practice: JPHMP 27 (6): 588–597. doi:10.1097/PHH.0000000000001106.

- Goldsmith, R. E., and H. A. Walters. 2015. “Reducing Spurious and Uninformed Response by Means of Respondent Warnings: An Experimental Study.” Paper Presented at the Proceedings of the 1989 Academy of Marketing Science (AMS) Annual Conference, Cham, 2015.

- Hahn, E. J., M. K. Rayens, S. E. Kercsmar, S. M. Adkins, A. P. Wright, H. E. Robertson, and G. Rinker. 2014. “Dual Home Screening and Tailored Environmental Feedback to Reduce Radon and Secondhand Smoke: An Exploratory Study.” Journal of Environmental Health 76 (6): 156–161.

- Hahn, E. J., M. K. Rayens, S. E. Kercsmar, H. Robertson, and S. M. Adkins. 2014. “Results of a Test and Win Contest to Raise Radon Awareness in Urban and Rural Settings.” American Journal of Health Education 45 (2): 112–118. doi:10.1080/19325037.2013.875960.

- Hampson, S. E., J. A. Andrews, M. Barckley, M. E. Lee, and E. Lichtenstein. 2003. “Assessing Perceptions of Synergistic Health Risk: A Comparison of Two Scales.” Risk Analysis: An Official Publication of the Society for Risk Analysis 23 (5): 1021–1029. doi:10.1111/1539-6924.00378.

- Hampson, S. E., J. A. Andrews, M. E. Lee, L. S. Foster, R. E. Glasgow, and E. Lichtenstein. 1998. “Lay Understanding of Synergistic Risk: The Case of Radon and Cigarette Smoking.” Risk Analysis 18 (3): 343–350. doi:10.1111/j.1539-6924.1998.tb01300.x.

- Hampson, S. E., J. A. Andrews, M. E. Lee, E. Lichtenstein, and M. Barckley. 2000. “Radon and Cigarette Smoking: Perceptions of This Synergistic Health Risk.” Health Psychology 19 (3): 247–252. doi:10.1037//0278-6133.19.3.247.

- Hazar, N., M. Karbakhsh, M. Yunesian, S. Nedjat, and K. Naddafi. 2014. “Perceived Risk of Exposure to Indoor Residential Radon and Its Relationship to Willingness to Test among Health Care Providers in Tehran.” Journal of Environmental Health Science & Engineering 12 (1): 118. doi:10.1186/s40201-014-0118-2.

- Hevey, D. 2017. “Radon Risk and Remediation: A Psychological Perspective.” Frontiers in Public Health 5 (63): 63. doi:10.3389/fpubh.2017.00063.

- Higgins, J. P., D. G. Altman, P. C. Gøtzsche, P. Jüni, D. Moher, A. D. Oxman, J. Savovic, K. F. Schulz, L. Weeks, and J. A. Sterne, Cochrane Statistical Methods Group. 2011. “The Cochrane Collaboration’s Tool for Assessing Risk of Bias in Randomised Trials.” BMJ (Clinical Research ed.) 343: d5928. doi:10.1136/bmj.d5928.

- Hill, W. G., P. Butterfield, and L. S. Larsson. 2006. “Rural Parents’ Perceptions of Risks Associated with Their Children’s Exposure to Radon.” Public Health Nursing (Boston, MA) 23 (5): 392–399. doi:10.1111/j.1525-1446.2006.00578.x.

- Huntington-Moskos, L., M. K. Rayens, A. Wiggins, and E. J. Hahn. 2016. “Radon, Secondhand Smoke, and Children in the Home: Creating a Teachable Moment for Lung Cancer Prevention.” Public Health Nursing (Boston, MA) 33 (6): 529–538. doi:10.1111/phn.12283.

- Immé, G., R. Catalano, G. Mangano, and D. Morelli. 2014. “Radioactivity Measurements as Tool for Physics Dissemination.” Journal of Radioanalytical and Nuclear Chemistry 299 (1): 891–896. doi:10.1007/s10967-013-2712-7.

- Johnson, F. R., and R. A. Luken. 1987. “Radon Risk Information and Voluntary Protection: Evidence from a Natural Experiment.” Risk Analysis: An Official Publication of the Society for Risk Analysis 7 (1): 97–107. doi:10.1111/j.1539-6924.1987.tb00973.x.

- Jones, S. E., S. Foster, and A. S. Berens. 2019. “Radon Testing Status in Schools by Radon Zone and School Location and Demographic Characteristics: United States, 2014.” The Journal of School Nursing: The Official Publication of the National Association of School Nurses 35 (6): 442–448. doi:10.1177/1059840518785441.

- Kendall, G., J. Miles, D. Rees, R. Wakeford, K. Bunch, T. Vincent, and M. Little. 2016. “Variation with Socioeconomic Status of Indoor Radon Levels in Great Britain: The Less Affluent Have Less Radon.” Journal of Environmental Radioactivity 164: 84–90. doi:10.1016/j.jenvrad.2016.07.001.

- Ketchen, D., B. Boyd, and D. Bergh. 2008. “Research Methodology in Strategic Management: Past Accomplishments and Future Challenges.” Organizational Research Methods 11 (4): 643–658. doi:10.1177/1094428108319843.

- Khan, S. M., J. Gomes, and D. R. Krewski. 2019. “Radon Interventions around the Globe: A Systematic Review.” Heliyon 5 (5): e01737. doi:10.1016/j.heliyon.2019.e01737.

- Lacchia, A. R., G. Schuitema, and A. Banerjee. 2020. “‘Following the Science’: In Search of Evidence-Based Policy for Indoor Air Pollution from Radon in Ireland.” Sustainability 12 (21): 9197. doi:10.3390/su12219197.

- Laflamme, D. M., and J. A. Vanderslice. 2004. “Using the Behavioral Risk Factor Surveillance System (BRFSS) for Exposure Tracking: Experiences from Washington State.” Environmental Health Perspectives 112 (14): 1428–1433. doi:10.1289/ehp.7148.

- Larsson, L. S. 2015. “The Montana Radon Study: Social Marketing via Digital Signage Technology for Reaching Families in the Waiting Room.” American Journal of Public Health 105 (4): 779–785. doi:10.2105/AJPH.2014.302060.

- Larsson, L. S., W. G. Hill, T. Odom-Maryon, and P. Yu. 2009. “Householder Status and Residence Type as Correlates of Radon Awareness and Testing Behaviors.” Public Health Nursing (Boston, MA) 26 (5): 387–395. doi:10.1111/j.1525-1446.2009.00796.x.

- Lee, M. E., E. Lichtenstein, J. A. Andrews, R. E. Glasgow, and S. E. Hampson. 1999. “Radon-Smoking Synergy: A Population-Based Behavioral Risk Reduction Approach.” Preventive Medicine 29 (3): 222–227. doi:10.1006/pmed.1999.0531.

- Leviton, L. C. 2017. “Generalizing about Public Health Interventions: A Mixed-Methods Approach to External Validity.” Annual Review of Public Health 38 (1): 371–391. doi:10.1146/annurev-publhealth-031816-044509.

- Loosveldt, G., A. Carton, and J. Billiet. 2004. “Assessment of Survey Data Quality: A Pragmatic Approach Focused on Interviewer Tasks.” International Journal of Market Research 46 (1): 65–82. doi:10.1177/147078530404600101.

- Losee, J. E., J. A. Shepperd, and G. D. Webster. 2020. “Financial Resources and Decisions to Avoid Information about Environmental Perils.” Journal of Applied Social Psychology 50 (3): 174–188. doi:10.1111/jasp.12648.

- Macher, J. M., and S. B. Hayward. 1991. “Public Inquiries about Indoor Air Quality in California.” Environmental Health Perspectives 92: 175–180. doi:10.1289/ehp.9192175.

- Martell, M., T. Perko, Y. Tomkiv, S. Long, A. Dowdall, and J. Kenens. 2021. “Evaluation of Citizen Science Contributions to Radon Research.” Journal of Environmental Radioactivity 237: 106685. doi:10.1016/j.jenvrad.2021.106685.

- Martin, K., R. Ryan, T. Delaney, D. A. Kaminsky, S. J. Neary, E. E. Witt, F. Lambert-Fliszar, et al. 2020. “Radon from the Ground into Our Schools: Parent and Guardian Awareness of Radon.” SAGE Open 10 (1): 215824402091454. doi:10.1177/2158244020914545.

- Mazur, A. 1987. “Putting Radon on the Public’s Risk Agenda.” Science, Technology, & Human Values 12 (3/4): 86–93.

- Mazur, A., and G. S. Hall. 1990. “Effects of Social Influence and Measured Exposure Level on Response to Radon.” Sociological Inquiry 60 (3): 274–284. doi:10.1111/j.1475-682X.1990.tb00145.x.

- Mbuagbaw, L., D. O. Lawson, L. Puljak, D. B. Allison, and L. Thabane. 2020. “A Tutorial on Methodological Studies: The What, When, How and Why.” BMC Medical Research Methodology 20 (1): 226. doi:10.1186/s12874-020-01107-7.

- Mohajan, H. 2017. “Two Criteria for Good Measurements in Research: Validity and Reliability.” Annals of Spiru Haret University. Economic Series 17 (4): 59–82. doi:10.26458/1746.

- Moreno-Serra, R., M. Anaya-Montes, S. León-Giraldo, and O. Bernal. 2022. “Addressing Recall Bias in (Post-)Conflict Data Collection and Analysis: Lessons from a Large-Scale Health Survey in Colombia.” Conflict and Health 16 (1): 14. doi:10.1186/s13031-022-00446-0.

- Neall, A. M., and M. R. Tuckey. 2014. “A Methodological Review of Research on the Antecedents and Consequences of Workplace Harassment.” Journal of Occupational and Organizational Psychology 87 (2): 225–257. doi:10.1111/joop.12059.

- Neri, A., C. McNaughton, B. Momin, M. Puckett, and M. S. Gallaway. 2018. “Measuring Public Knowledge, Attitudes, and Behaviors Related to Radon to Inform Cancer Control Activities and Practices.” Indoor Air 28 (4): 604–610. doi:10.1111/ina.12468.

- Nicotera, G., C. G. Nobile, A. Bianco, and M. Pavia. 2006. “Environmental History-Taking in Clinical Practice: Knowledge, Attitudes, and Practice of Primary Care Physicians in Italy.” Journal of Occupational and Environmental Medicine 48 (3): 294–302. doi:10.1097/01.jom.0000184868.77815.2a.

- Nwako, P., and T. Cahill. 2020. “Radon Gas Exposure Knowledge among Public Health Educators, Health Officers, Nurses, and Registered Environmental Health Specialists: A Cross-Sectional Study.” Journal of Environmental Health 82 (6): 26.

- Olsen, W. 2004. “Triangulation in Social Research: Qualitative and Quantitative Methods Can Really Be Mixed.” Developments in Sociology 20: 103–118.

- Ouzzani, M., H. Hammady, Z. Fedorowicz, and A. Elmagarmid. 2016. “Rayyan—a Web and Mobile App for Systematic Reviews.” Systematic Reviews 5 (1): 210. doi:10.1186/s13643-016-0384-4.

- Park, E., C. W. Scherer, and C. J. Glynn. 2001. “Community Involvement and Risk Perception at Personal and Societal Levels.” Health, Risk & Society 3 (3): 281–292. doi:10.1080/13698570120079886.

- Perko, T., and C. Turcanu. 2020. “Is Internet a Missed Opportunity? Evaluating Radon Websites from a Stakeholder Engagement Perspective.” Journal of Environmental Radioactivity 212: 106123. doi:10.1016/j.jenvrad.2019.106123.

- Perko, T., M. Van Oudheusden, C. Turcanu, C. Pölzl-Viol, D. Oughton, C. Schieber, T. Schneider, et al. 2019. “Towards a Strategic Research Agenda for Social Sciences and Humanities in Radiological Protection.” Journal of Radiological Protection 39 (3): 766–784. doi:10.1088/1361-6498/ab0f89.

- Perko, T., N. Zeleznik, C. Turcanu, and P. Thijssen. 2012. “Is Knowledge Important? Empirical Research on Nuclear Risk Communication in Two Countries.” Health Physics 102 (6): 614–625. doi:10.1097/HP.0b013e31823fb5a5.

- Peterson, E., and J. Howland. 1996. “Predicting Radon Testing among University Employees.” Journal of the Air & Waste Management Association (1995) 46 (1): 2–11. doi:10.1080/10473289.1996.10467435.

- Petrescu, D., and R. Petrescu-Mag. 2017. “Setting the Scene for a Healthier Indoor Living Environment: Citizens’ Knowledge, Awareness, and Habits Related to Residential Radon Exposure in Romania.” Sustainability 9 (11): 2081. doi:10.3390/su9112081.

- Petrescu, M., and A. S. Krishen. 2019. “Strength in Diversity: Methods and Analytics.” Journal of Marketing Analytics 7 (4): 203–204. doi:10.1057/s41270-019-00064-5.

- Poortinga, W., K. Bronstering, and S. Lannon. 2011. “Awareness and Perceptions of the Risks of Exposure to Indoor Radon: A Population-Based Approach to Evaluate a Radon Awareness and Testing Campaign in England and Wales.” Risk Analysis: An Official Publication of the Society for Risk Analysis 31 (11): 1800–1812. doi:10.1111/j.1539-6924.2011.01613.x.

- Poortinga, W., P. Cox, and N. F. Pidgeon. 2008. “The Perceived Health Risks of Indoor Radon Gas and Overhead Powerlines: A Comparative Multilevel Approach.” Risk Analysis: An Official Publication of the Society for Risk Analysis 28 (1): 235–248. doi:10.1111/j.1539-6924.2008.01015.x.

- Renn, O., and C. Benighaus. 2013. “Perception of Technological Risk: Insights from Research and Lessons for Risk Communication and Management.” Journal of Risk Research 16 (3–4): 293–313. doi:10.1080/13669877.2012.729522.

- Rinker, G. H., E. J. Hahn, and M. K. Rayens. 2014. “Residential Radon Testing Intentions, Perceived Radon Severity, and Tobacco Use.” Journal of Environmental Health 76 (6): 42–47.

- Rodríguez Martínez, Á., A. Ruano Ravina, and M. Torres Duran. 2018. “Residential Radon and Small Cell Lung Cancer: A Systematic Review.” Annals of Oncology 29: viii601–viii602. doi:10.1093/annonc/mdy298.015.

- Salway, S. M., G. Higginbottom, B. Reime, K. K. Bharj, P. Chowbey, C. Foster, J. Friedrich, K. Gerrish, Z. Mumtaz, and B. O’Brien. 2011. “Contributions and Challenges of Cross-National Comparative Research in Migration, Ethnicity and Health: Insights from a Preliminary Study of Maternal Health in Germany, Canada and the UK.” BMC Public Health 11 (1): 514. doi:10.1186/1471-2458-11-514.

- Sanborn, M., L. Grierson, R. Upshur, L. Marshall, C. Vakil, L. Griffith, F. Scott, M. Benusic, and D. Cole. 2019. “Family Medicine Residents’ Knowledge of, Attitudes toward, and Clinical Practices Related to Environmental Health: Multi-Program Survey.” Canadian Family Physician Medecin de Famille Canadien 65 (6): e269–e277.

- Sanderson, S., I. D. Tatt, and J. P. Higgins. 2007. “Tools for Assessing Quality and Susceptibility to Bias in Observational Studies in Epidemiology: A Systematic Review and Annotated Bibliography.” International Journal of Epidemiology 36 (3): 666–676. doi:10.1093/ije/dym018.

- Scandura, T., and E. Williams. 2000. “Research Methodology in Management: Current Practices, Trends, and Implications for Future Research.” Academy of Management Journal 43 (6): 1248–1264. doi:10.2307/1556348.

- Shadish, W. R. 2010. “Campbell and Rubin: A Primer and Comparison of Their Approaches to Causal Inference in Field Settings.” Psychological Methods 15 (1): 3–17. doi:10.1037/a0015916.

- Shorten, A., and J. Smith. 2017. “Mixed Methods Research: Expanding the Evidence Base.” Evidence-Based Nursing 20 (3): 74–75. doi:10.1136/eb-2017-102699.

- Smith, V. K., W. H. Desvousges, and J. W. Payne. 1995. “Do Risk Information Programs Promote Mitigating Behavior?” Journal of Risk and Uncertainty 10 (3): 203–221. doi:10.1007/BF01207551.

- Smith, V. K., W. H. Desvousges, A. Fisher, and F. R. Johnson. 1988. “Learning about Radon’s Risk.” Journal of Risk and Uncertainty 1 (2): 233–258. doi:10.1007/BF00056169.

- Smith, V. K., and F. R. Johnson. 1988. “How Do Risk Perceptions Respond to Information? The Case of Radon.” The Review of Economics and Statistics 70 (1): 1–8. doi:10.2307/1928144.

- Steckler, A., and K. R. McLeroy. 2008. “The Importance of External Validity.” American Journal of Public Health 98 (1): 9–10. doi:10.2105/ajph.2007.126847.

- Su, C., M. Pan, Y. Zhang, H. Kan, Z. Zhao, F. Deng, B. Zhao, et al. 2022. “Indoor Exposure Levels of Radon in Dwellings, Schools, and Offices in China from 2000 to 2020: A Systematic Review.” Indoor Air 32 (1): e12920. doi:10.1111/ina.12920.

- Tourangeau, R. 2020. “How Errors Cumulate: Two Examples.” Journal of Survey Statistics and Methodology 8 (3): 413–432. doi:10.1093/jssam/smz019.

- Tourangeau, R., L. J. Rips, and K. Rasinski. 2000. The Psychology of Survey Response. New York, NY: Cambridge University Press.

- Trevethan, R. 2017. “Deconstructing and Assessing Knowledge and Awareness in Public Health Research.” Frontiers in Public Health 5: 194. doi:10.3389/fpubh.2017.00194.

- Turcanu, C., M. Van Oudheusden, B. Abelshausen, C. Schieber, T. Schneider, N. Zeleznik, R. Geysmans, T. Duranova, T. Perko, and C. Pölzl-Viol. 2020. “Stakeholder Engagement in Radiological Protection: Developing Theory, Practice and Guidelines.” Radioprotection 55: S211–S218. doi:10.1051/radiopro/2020036.

- Van Oudheusden, M., C. Turcanu, and S. Molyneux-Hodgson. 2018. “Absent, yet Present? Moving with ‘Responsible Research and Innovation’ in Radiation Protection Research.” Journal of Responsible Innovation 5 (2): 241–246. doi:10.1080/23299460.2018.1457403.

- Vandenbroucke, J. P., E. von Elm, D. G. Altman, P. C. Gøtzsche, C. D. Mulrow, S. J. Pocock, C. Poole, J. J. Schlesselman, and M. Egger, STROBE Initiative. 2014. “Strengthening the Reporting of Observational Studies in Epidemiology (STROBE): Explanation and Elaboration.” International Journal of Surgery (London, England) 12 (12): 1500–1524. doi:10.1016/j.ijsu.2014.07.014.

- Verhagen, A. P., H. C. de Vet, R. A. de Bie, M. Boers, and P. A. van den Brandt. 2001. “The Art of Quality Assessment of RCTs Included in Systematic Reviews.” Journal of Clinical Epidemiology 54 (7): 651–654. doi:10.1016/S0895-4356(00)00360-7.

- Verhagen, A. P., H. C. de Vet, R. A. de Bie, A. G. Kessels, M. Boers, L. M. Bouter, and P. G. Knipschild. 1998. “The Delphi List: A Criteria List for Quality Assessment of Randomized Clinical Trials for Conducting Systematic Reviews Developed by Delphi Consensus.” Journal of Clinical Epidemiology 51 (12): 1235–1241. doi:10.1016/S0895-4356(98)00131-0.

- Wang, M., D. J. Beal, D. Chan, D. A. Newman, J. B. Vancouver, and R. J. Vandenberg. 2017. “Longitudinal Research: A Panel Discussion on Conceptual Issues, Research Design, and Statistical Techniques.” Work, Aging and Retirement 3 (1): 1–24. doi:10.1093/workar/waw033.

- Weinstein, N. D., P. M. Man, and N. E. Roberts. 1990. “Determinants of Self-Protective Behavior: Home Radon Testing.” Journal of Applied Social Psychology 20 (10): 783–801. doi:10.1111/j.1559-1816.1990.tb00379.x.

- Weinstein, N. D., and P. M. Sandman. 1992. “Predicting Homeowners’ Mitigation Responses to Radon Test Data.” Journal of Social Issues 48 (4): 63–83. doi:10.1111/j.1540-4560.1992.tb01945.x.

- Weisberg, H. 2005. The Total Survey Error Approach: A Guide to the New Science of Survey Research. Chicago, IL: University of Chicago Press. doi:10.7208/chicago/9780226891293.001.0001.

- Whitten, P., L. K. Johannessen, T. Soerensen, D. Gammon, and M. Mackert. 2007. “A Systematic Review of Research Methodology in Telemedicine Studies.” Journal of Telemedicine and Telecare 13 (5): 230–235. doi:10.1258/135763307781458976.

- World Health Organization. 2009. WHO Handbook on Indoor Radon: A Public Health Perspective. Geneva: World Health Organization.

Appendix 1.

Coding of study topics

Appendix 2.

List of included articles