ABSTRACT

This study compares the efficacy of computer and human analytics in a commemorative setting. Both deductive and inductive reasoning are compared using the same data across both methods. The data comprises 2490 non-repeated, non-dialogical social media comments from the popular travel site Tripadvisor, supplemented by participant observation at two Anzac commemorative sites, one in Western Australia and one in Northern France. The data was then processed using both Leximancer V4.51 and Dialectic Thematic Analysis. The findings demonstrate that artificial intelligence (AI) was incapable of insight beyond metric-driven content analysis. While human analysis fully deduced the metamodel, AI deduced it only partially. The two methods also differed in their ability to induce themes, with AI producing anodyne, axiomatic concepts. In contrast, human analytics was capable of transcendent themes representing ampliative, phronetic knowledge. The implications of the study suggest (1) tempering the belief that the current iteration of AI can do more than organise, summarise, and visualise data; (2) advocating for the inclusion of preconception and context in thematic analysis; and (3) encouraging a discussion of the appropriateness of using AI in research.

Introduction

To what extent can we trust our computer software to give us a full and accurate analysis of qualitative data? The use of computer software to analyse increasing volumes of research data is a growing phenomenon. Indeed, it is arguable that Big data cannot be managed without it. Underpinning the current definition of Big data is a volume of data too large to be analysed using traditional human methods (Barlas, 2013; Lohr, 2013a, 2013b). This software is evolving toward artificial intelligence (AI), which as a genre of software is considered controversial. Here we see algorithms making decisions based on patterns in data, increasingly relieving humans of this responsibility.

Across the disciplines, several studies have exclusively used computer analytics to process data (for a tourism-related sample, see Cheng, 2016; Chiu et al., 2017; Dongwook & Sungbum, 2017; Li et al., 2018; Rodrigues et al., 2017; Schweinsberg et al., 2017; Silvia et al., 2019). How confident can we be in the ability of AI to accurately identify related concepts, or to insightfully process data through the paradigm of context (Wilk et al., 2019), including transcendent filters such as grief, love, morality, and ethics (on ethics, see van Berkel et al., 2020)?

This study compares the efficacy of computer-assisted data analysis with a combined deductive and inductive version of human analysis, namely Dialectic Thematic Analysis (DTA). Both the human and AI methods use the same combined data from two poignant touristic settings: national war memorials. The dialectic in the manual method is the simultaneous use of an adapted version of Framework Analysis (Ritchie et al., 2003; Ritchie & Spencer, 1994) and Reflexive Thematic Analysis (Braun & Clarke, 2006, 2019; Clarke, 2017). The method is dialectical in the sense that two antithetical processes, one deductive and the other inductive, are combined in one method with the expectation of achieving a fortified, triangulated outcome. The advantage of using dialectics when comparing human analysis with AI is that both Leximancer’s ‘supervised’ [deductive] and ‘unsupervised’ [inductive] modes can be compared using similar logic in a manual method.

The study’s aim is to evaluate the efficacy of both methods using, as far as possible, the same data. The premise supporting the aim is that while AI has its place in research analytics, its current iteration is not yet capable of deep thought. We define deep thought as evocative, contextualised themes typifying phronetic wisdom. Phronesis is an ancient Greek term used not only to describe practical wisdom or action but also a philosophy of seeking the same. We revisit the term in a contemporary setting to denote conclusive output that is practical and useful to society. Therefore, a machine or programme capable of deep thought should be able to produce insightful output that is of practical use to humanity. For the avoidance of doubt, the concept of deep thought is unrelated to Deep Nostalgia, a machine learning programme developed to animate still photographs.

Computer analytics and the research context

Commemoration as a research context is a challenging one. Not only are sensitivities involved, but so is a range of nuanced emotions such as sadness, grief, pride, wonderment, dissonance, and mortality salience. Compounding the challenge is the importance of context. When choosing an interpretivist method, consideration of context is fundamental to the quality of findings. Both Leximancer’s chief scientist and the author Silverman acknowledge the importance of context when interpreting qualitative research (Silverman, 2010, 2013a, 2013b; Smith & York, 2016). Silverman extends this idea, noting that context is perversely exaggerated in contemporary culture: “the environment around the culture has become more important than the phenomenon itself” (2013a, p. 90). Perhaps, but let us at least appreciate that context is salient and certainly not conveniently generic across all data sets. One size [of context] does not fit all; social context is instead unique to the situation and often ephemeral. Yet, paradoxically, Halbwachs (1992) argues it can be concentrated in collective behaviour, implying that social settings contain generic elements. He refers to this as ‘collective memory’. Hirsch (2008, 2012) goes further, claiming that when collective memory is so intense, so poignant, it can transcend generations, as with the Holocaust (Gross, 2006; Nawijn & Fricke, 2015; Weissman, 2018). To a degree, then, it would seem that users of computer analytics apply the same context across different data sets, and they do so at their peril. Yet without the benefit of circumspection, how can we ever know whether applying a communal context is appropriate (van Berkel et al., 2020)?

While computer analytics is often associated with instant displays of summary information, the very nature of textual [only] findings robs practitioners of the rich contextual insight that comes from preconception and circumspection. When researchers attempt to make sense of metric-driven analysis, latent themes can remain hidden. With AI we are left with a pastiche of unremarkable and axiomatic concepts that lack usefulness. Any usefulness of AI is contingent on human stewardship and insight, which in turn relies on knowledge and experience [preconception].

While AI used in research has its place, what has also eluded the discussion is the notion of a ‘black box’ in the data custody chain. This hidden manipulation of data is typified by protected, proprietary software producing results that are considered inviolable. Meanwhile, the literature contains comparisons between popular branded AI tools. Studies comparing NVivo with Leximancer, for example, inform others of the pros and cons, but always with the underlying premise that AI is useful and appropriate (Sotiriadou et al., 2014; Wilk et al., 2019).

Advocates of computer analytics encourage the notion that such assistance is at best complementary and never intended to replace human interpretation (Angus, 2014). “The machine does what the machine is good at so that the person, the analyst, does what they’re good at” (Smith & York, 2016, p. 7, 20). Perhaps, but one must acknowledge that analysis is a linear process: a chain of prescribed steps leading to an outcome. If, during one of the steps, latent truth remains hidden, then the end result is surely compromised by default. Without some knowledge of the phenomenon, how can the researcher judge AI’s interpretation to be truthful?

Leximancer

Leximancer is analytical computer software used in many disciplines, including tourism. It is used to distil large quantities of unstructured data into a few representative themes. The software is analytical in other ways, including highlighting the relative occurrence and co-occurrence of words and themes within a body of text. It is designed to assist researchers in interpreting phenomena. The programme was released to the public in 2004 by Andrew Smith and the team from the Institute for Social Science Research at The University of Queensland. It is a text mining programme that automatically codes collections of natural language. The programme uses statistical algorithms to reveal occurrence, co-occurrence and interconnectivity, which are then displayed via a proprietary map of defined terms with associated summary information. Across the tourism literature, NVivo and Leximancer appear to hold a duopoly of academic interest in the analytical software space (Sotiriadou et al., 2014). While some may suggest that the choice between the two is based on careful consideration, Welsh (2002) concluded that academics do not have the requisite skills to choose, and that it often boils down to something as serendipitous as a ‘colleague’s recommendation’.
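To make occurrence and co-occurrence concrete, the following Python sketch counts how often chosen terms appear, and appear together, within blocks of consecutive sentences. It is not Leximancer’s algorithm, which is proprietary; the two-sentence block size, the naive punctuation-based sentence splitter and the function names are assumptions for illustration only, and the input comments are invented.

```python
import re
from collections import Counter
from itertools import combinations


def sentence_blocks(text, sentences_per_block=2):
    """Split text into consecutive blocks of N sentences (naive punctuation-based splitter)."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [" ".join(sentences[i:i + sentences_per_block])
            for i in range(0, len(sentences), sentences_per_block)]


def occurrence_and_cooccurrence(comments, terms, sentences_per_block=2):
    """Count blocks containing each term, and blocks containing each unordered pair of terms."""
    occurrence, cooccurrence = Counter(), Counter()
    for comment in comments:
        for block in sentence_blocks(comment, sentences_per_block):
            words = set(re.findall(r"[a-z']+", block.lower()))
            present = sorted(t for t in terms if t in words)
            occurrence.update(present)
            cooccurrence.update(combinations(present, 2))  # pairs are alphabetically ordered
    return occurrence, cooccurrence


# Illustrative input only (not study data).
comments = [
    "A tourist can learn a lot here. The knowledge of the guides is superb.",
    "My family visited last year. We left in silence.",
]
occ, co = occurrence_and_cooccurrence(comments, {"tourist", "learn", "knowledge", "family"})
print(occ["family"], co[("knowledge", "learn")])
```

Counting is exact, but nothing in it knows what a word means in context; that limitation is the one examined in the results.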

Leximancer can process impressive quantities of unstructured natural language to facilitate qualitative research, while supporting different types of Thematic Analysis (TA) (Braun & Clarke, 2006, 2019; Braun et al., 2019; Clarke, 2017). These include conventional, directive, latent, and summative Content Analysis (CA); discourse analysis, transcription analysis, recursive abstraction, phenomenology, phenomenography, netnography and sentiment analysis. As a point of distinction, phenomenology is the philosophical study of consciousness and experience. It includes the writings of key luminaries such as Hegel, Kant, and Heidegger (Creswell, 2007; Denzin & Lincoln, 2000, 2018; McGrath, 2006; Taylor, 1977). Phenomenography, on the other hand, is a more recent empirical approach concerned with investigating the ways in which subjects experience or think about something (Richardson, 1999; Ryan, 2000). For example, Abreu Novais et al.’s (2018) touristic study using phenomenography suggests that a prior understanding of what stakeholders think the term means will aid the advancement of ‘destination competitiveness’.

Leximancer is marketed with the proposition of reducing analysis to a fraction of the familiar, laborious ‘old school’ steps, of which Braun and Clarke’s (2006) generic process is an example. Leximancer ushers in a degree of automation akin to plug-and-play, generating concept maps and associated frequencies with linked exemplars in a matter of seconds. More than a decade after its release, improved versions of Leximancer offer a convenient mechanism to process Big data. The price of such convenience, however, is the underlying assumption that users accept the programme’s output as a reasonable representation of reality. Leximancer’s results may be considered literal and, to a degree, anthropologically reified, but nonetheless inviolate. The cautionary tale of such fidelity is one of selling one’s soul to a computer programme by sharing, if not fully delegating, responsibility for thematic analysis to artificial intelligence.

Methodology and Leximancer

Qualitative research often takes an inductive approach. Typically, quantities of unstructured data are distilled and explicated in a systematic way to produce a few manageable, seminal themes. Within this paradigm there are specific methods supporting the praxis, one of which is CA. Weber defines CA as a research method that uses a set of procedures to make valid inferences from text. These inferences are about the sender(s) of the message, the message itself, or the audience of the message (Sandelowski, 1995; Weber, 1990). Theory can play one of three roles in CA: the analysis can be inductive, deductive, or conducted without theory (Elo & Kyngäs, 2008; Hsieh & Shannon, 2005). Weber counsels that the specific type of CA is related to the role of theory in the method, depending on both the researcher and the problem. While this implies a degree of flexibility, one can argue that the choice of type should also reflect the maturity of the phenomenon. Otherwise, as Tesch (1990) suggests, a lack of consideration can limit the method’s application.

Directive CA is used to validate existing theory and has thus been referred to as “deductive use of a theory” (Potter & Levine-Donnerstein, 1999, p. 1281). Hickey and Kipping (1996) describe Directive CA as a more structured process than conventional CA. While CA follows similar steps to TA, the two are distinct in epistemology. CA, especially in the AI space, is a metric-driven exercise, while TA is an interpretive, non-metric search for meaningful themes. These themes represent the distillation of unstructured data into increasing levels of abstraction (Braun & Clarke, 2006, 2019; Braun et al., 2019; Clarke, 2017; Leech & Onwuegbuzie, 2011). Where both content and thematic analysis fall short, and what is missing from the literature, is a prescription of these methods for use in deduction.

A review of the literature does reveal some qualitative deductive models; however, these are mostly specific to student dissertations. There are countless textbook references describing the application of deductive logic to research, but not the process in qualitative methodology. The assumption appears to be that thematic analysis confines itself to inductive reasoning. One exception is Elo and Kyngäs’ (2008) nine-step model; however, it is noticeably distinct from the popular Braun and Clarke exemplar and arguably lacks the latter’s parsimony. Although inductive and deductive processes are related but oppositional, they need not be mutually exclusive. This study’s inductive path is similar to Reflexive TA in that it is akin to methodological LEGO®: it incorporates inductive TA as one of the analytical paths, while the other is deductive.

Commemoration

Nested in the hypernym ‘Heritage Tourism’, commemoration is seen as a form of thanatourism (Hyde & Harman, 2011; Light, 2017; MacCarthy, 2017; Packer et al., 2019; Winter, 2010, 2011, 2019a). The act of travelling to a commemorative site also qualifies as a form of pilgrimage (Stephens, 2014; Winter, 2019b). Thanatourism, a term originally coined by Seaton, is “the presentation and consumption of real and commodified death and disaster sites” (Foley & Lennon, 1996, p. 198; Seaton, 1996). Within the ‘politics of heritage’ (Timothy, 2011; Waterton, 2014), commemoration has an uneasy relationship with thanatourism, given that efforts to commodify the respected dead are often incongruous (Austin, 2002; Stephens, 2014). Stephens (2014) refers to such commemorative exploitation as the “greediness for war memory” (p. 24). More recently, scholars have sought to widen this definition beyond conflict and battlefield sites to include associated meanings such as trauma tourism (Gross, 2006), dystopian and ‘dark aesthetics’ [tourism related] (Podoshen et al., 2015), and suicide tourism (Pratt et al., 2019; Sperling, 2019; Wen et al., 2019).

Commemoration is evolving. A review of the literature suggests that motives for remembrance travel are shifting from valorising war to an emphasis on its consequences and futility (Allem, 2014; Ekins, 2010; Ekins & Barron, 2014; Fathi, 2019; Scates, 2002). One notes a shift from fatalism and pragmatism to a more philosophical contemplation of the meanings and emotions redolent of remembrance (Downes et al., 2015). This is acknowledged by Ashley Ekins, head of the Military History Collection at the Australian War Memorial. Ekins (2010; Ekins & Barron, 2014) notes the scholarly move towards truthfulness on an international level, as opposed to earlier, largely national works driven by jingoism and agenda (see also Fathi, 2019; Scates, 2002, 2006; Winter & Prost, 2005). More recently, the Director of the Australian War Memorial has controversially mooted showcasing the Brereton Report at the memorial. The report details alleged Australian war crimes committed during the Afghanistan campaign. Is this what visitors want in a memorial? While there is no shortage of scholarly claims to truth about war, much less has been published on the affective nature of commemoration. Why do people travel to non-substitutable places to discover, rediscover, valorise and ritualise military provenance?

Motives for commemorative pilgrimage

While schadenfreude, ‘dissonant heritage’ (Light, 2017), or the ‘heritage of atrocity’ (Tunbridge & Ashworth, 1996, Chapters 2–5) might be reason enough for some visitors to dark places, these do not fully explain motives for visiting places of remembrance (Buda, 2015; Dunkley et al., 2011). Indeed, Cave and Buda (2018) refer to a ‘disconnect’ between the term ‘dark tourism’ and what visitors think they are doing. Places representing death, suffering and conflict, while superficially interesting as commodities, can also facilitate immersion into all that is noble and gallant about the human spirit (MacCarthy & Heng Rigney, 2020; Smith, 2014). Commemorative places facilitate meaningful ‘personal heritage’ (Biran et al., 2011), including connections with the past (Winter, 2009), a local community (Stephens, 2007), and a visitor’s deity, often involving a symbolic interface such as a votive (Winter, 2019a) or storytelling (Ryan, 2007).

Memorials, cenotaphs, and visitor centres not only permit validation of one’s place in the national fabric but also play an important role in defining our national identity (Coulthard-Clark, 1993; Fitzsimons, 2014; Hall et al., 2010; Hyde & Harman, 2011; Packer et al., 2019; Park, 2010; Roppola et al., 2019). To some, Australian visitation to Gallipoli has been elevated to a rite of passage, with all this implies (Çakar, 2018, 2020; Scates, 2006). As Park (2010) put it, commemorative travel is an emotional journey into nationhood.

Materials and methods

Methodology

Commemoration and associated pilgrimage are poignant social activities, replete with emotion, ritual and interactionism. In this context, meaning is more important than measurement in what is essentially phenomenology (Denzin & Lincoln, 1998). This lends itself to an interpretivist paradigm. More specifically, a focus on subjective experience from the respondents’ point of view informs the choice of both methodology and method. In keeping with the aim, two contemporary qualitative methods were chosen for comparison: manual Dialectic Thematic Analysis and computer-generated content analysis using Leximancer V4.51.

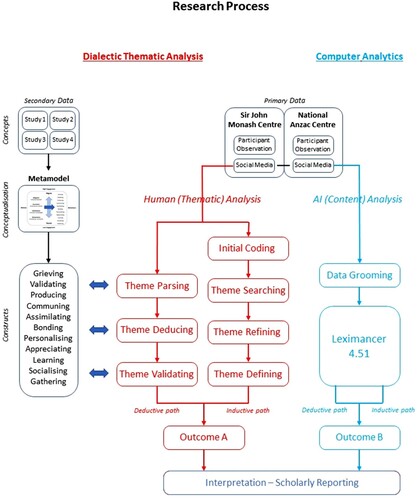

The inductive steps taken in DTA’s manual method are an adaptation of Braun and Clarke’s (2006) magnum opus: (1) Familiarisation; (2) Generation of initial codes; (3) Searching for themes; (4) Reviewing themes; (5) Defining themes; and (6) Scholarly reporting (Braun & Clarke, 2006, 2019; Braun et al., 2019; Clarke, 2017). These steps comprise one path of DTA. The other prescribes the construction of a metamodel, which is then used for deduction (Ritchie & Spencer, 1994; Ritchie et al., 2003; Silverman, 2010).

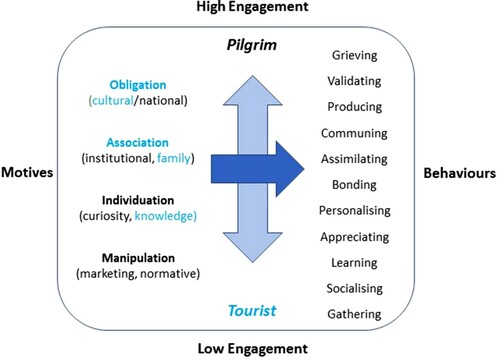

Commemoration as a phenomenon has moved beyond inception and therefore calls for more than discovery or, in this case, rediscovery. The metamodel is therefore essentially an interpretive construct freighted with already known commemorative concepts. The model teases concepts from the literature and ties them together with behaviours, involvement, and process. The deductive path then involves a process similar to Glaser and Strauss’ (1967) constant comparison method (pp. 101–115). As primary data is collected, it is compared against the metamodel in an iterative process (see the figures above). While the action is iterative, the spirit of this deduction is recursive. The question frequently asked is: does this micro interpretation resonate with the macro theoretical model? If not, is this an anomaly, something new, or a limiting exception?
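The bookkeeping side of this constant comparison can be sketched in Python. The sketch assumes the codes attached to each comment come from the human analyst; it only records which metamodel constructs each coded comment supports and flags comments that fit none of them, leaving the ‘anomaly, something new, or limiting exception’ judgement to the researcher. The indicator sets, comment identifiers and function name are hypothetical and do not reproduce the study’s coding frame.

```python
from collections import defaultdict

# Hypothetical slice of a metamodel: construct -> indicator codes (illustrative only).
METAMODEL = {
    "Obligation": {"duty", "owe", "pilgrimage"},
    "Association": {"mates", "community", "together"},
    "Individuation": {"curious", "understand", "learn"},
    "Manipulation": {"brochure", "dragged", "cruise"},
}


def constant_comparison(coded_comments, metamodel=METAMODEL):
    """Compare each (comment_id, codes) pair against the metamodel.

    Returns evidence (construct -> supporting comment ids) and the ids of
    comments matching no construct, which a human then reviews.
    """
    evidence = defaultdict(list)
    unmatched = []
    for comment_id, codes in coded_comments:
        matched = [name for name, indicators in metamodel.items() if indicators & codes]
        for name in matched:
            evidence[name].append(comment_id)
        if not matched:
            unmatched.append(comment_id)
    return dict(evidence), unmatched


# Invented codes; in the study they would come from the human analyst.
coded = [("A", {"duty", "grandfather"}), ("B", {"learn"}), ("C", {"parking"})]
evidence, unmatched = constant_comparison(coded)
print(evidence)
print(unmatched)
```

Keeping the unmatched list explicit mirrors the recursive question in the text: every comment either resonates with the macro model or is surfaced for interpretation.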

The AI portion of the study involved both unsupervised and supervised use of the analytical software Leximancer. Once the data had been collected and groomed, it was processed by the software. The first run captured an unsupervised bird’s-eye view of the combined visitor commentary from both sites: the NAC in Australia and the SJMC in France. In doing so, researchers ‘zeroed’ interpretive efforts by generating a benchmark, an output similar to a control group. Using the programme in this manner is inductive. Following this, seed concepts were introduced and the settings adjusted to focus on specific constructs contained in the metamodel. The programme was run again, this time searching for similarities and differences with preconceived themes/terms (Haynes et al., 2019). Used in this way, the programme is deductive. Both outputs were then compared against the manual DTA outputs. DTA involves manual deduction of the metamodel (as described above) and induction by distilling the data into increasing levels of abstraction using the steps prescribed by Reflexive Thematic Analysis.
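The difference in logic between the unsupervised (inductive) and seeded (deductive) runs can be illustrated with a simplified Python sketch. It is not how Leximancer builds its concepts, since the programme learns its own term weightings; the stop-word list, seed sets, example comments and function names are assumptions. The first function lets frequency decide what matters, while the second only counts what the researcher has already decided to look for.

```python
import re
from collections import Counter

# Assumed, deliberately tiny stop-word list for illustration.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "was", "in", "it", "we", "for", "very"}


def content_words(text):
    """Lower-case word tokens with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def unsupervised_terms(comments, top_n=5):
    """Inductive benchmark: the most frequent content words, with no prior concepts."""
    return Counter(w for c in comments for w in content_words(c)).most_common(top_n)


def seeded_concepts(comments, seeds):
    """Deductive run: number of comments mentioning any seed word of each concept."""
    hits = Counter({concept: 0 for concept in seeds})
    for c in comments:
        words = set(content_words(c))
        for concept, seed_words in seeds.items():
            if words & seed_words:
                hits[concept] += 1
    return hits


comments = ["The memorial was moving.", "A moving visit to the memorial.", "We learn history here."]
print(unsupervised_terms(comments, top_n=2))
print(seeded_concepts(comments, {"Learning": {"learn", "knowledge"}, "Family": {"family"}}))
```

Note how the unsupervised ranking surfaces the axiomatic ‘memorial’ and ‘moving’, while the seeded run reports zero for a concept simply because its seed words are absent.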

A commemorative metamodel

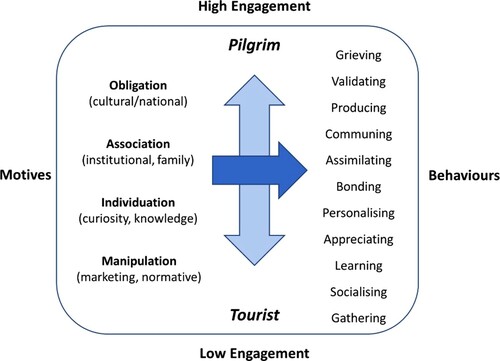

The metamodel used in this study is an amalgam of previously published concepts. Four studies in particular inform the model: (1) Holt’s (1995) ethnographic observations of communing and producing in consumer behaviour; (2) Hyde and Harman’s (2011) motives for secular pilgrimage to Gallipoli, namely spiritual, nationalistic, family, friendship and travel (see also Çakar, 2020); (3) Bigley et al.’s (2010) five motivational factors for Japanese visitors to the Korean Demilitarized Zone, namely appreciation of knowledge/history/culture, curiosity/adventure, political concern, war interest, and nature-based interest; and (4) Winter’s (2010) dichotomy of commemorative visitors into pilgrims and tourists at Belgian and French cemeteries. In this case, Winter’s key findings have been distilled into a continuum of engagement. The metamodel includes a priori concepts such as attendance motives and a range of in situ activities on an internalisation/engagement continuum. Why visitors attend and what they do while attending is depicted on a continuum from incidental tourist to committed pilgrim. A brief explanation of the four title metaphors used to describe motives for visitation in the metamodel follows.

Obligation describes the most committed visitor, essentially a pilgrim. This person feels a sense of solemn duty or obligation to attend commemorative sites, perhaps from a sense of nationalistic, ethnic, or religious identification. An example would be a Jewish visitor to the Auschwitz-Birkenau memorial.

Association refers to the motivation that comes from a sense of community or fraternity. Visitors display identification attitudes towards attendance, attending out of a sense of loyalty to a group. The act of communal attendance is the social glue that binds, generating opportunities for interaction and fostering a communal purpose.

Individuation refers to a widespread curiosity about the meanings associated with traumatic times. In this case, the curiosity is associated with important past events, which are themselves associated with chaos and death.

Lastly, Manipulation refers to the pull factor in commemorative pilgrimage, unassociated with any of the preceding push metaphors. In this case, visitors are commemorating by circumstance, perhaps through marketing efforts or pressure to attend from significant others. This metaphor represents the least engaged and committed visitor, referred to by Winter (2010) as a ‘tourist’.
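Read as an ordinal scale, the four metaphors can be encoded as a small Python data structure. The sketch assumes that the order in which the motives are presented above reflects their position on the tourist/pilgrim continuum, and that the positions are evenly spaced; both are simplifications introduced for illustration, not claims made by the metamodel itself.

```python
from enum import IntEnum


class Motive(IntEnum):
    """Metamodel motives, ordered from least to most engaged (assumed ordinal scale)."""
    MANIPULATION = 1   # pull factor; attends by circumstance (Winter's 'tourist')
    INDIVIDUATION = 2  # curiosity about the meanings of traumatic times
    ASSOCIATION = 3    # loyalty to a community or fraternity
    OBLIGATION = 4     # solemn duty; the committed pilgrim


def continuum_position(motive: Motive) -> float:
    """Place a motive on a 0.0 (incidental tourist) to 1.0 (committed pilgrim) scale."""
    lowest, highest = min(Motive), max(Motive)
    return (motive - lowest) / (highest - lowest)


for m in sorted(Motive, reverse=True):
    print(f"{m.name.title():<13} {continuum_position(m):.2f}")
```

An `IntEnum` keeps the ordering explicit, so comparisons such as ‘more engaged than’ follow directly from the values rather than from prose.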

Data

The combined analysis uses a data set of n = 2490 non-dialogical, unrepeated comments (107,184 words) from the popular travel site Tripadvisor. These are divided into 1975 comments regarding the NAC, 391 regarding the ANM, and 124 regarding the SJMC. The SJMC is considered an inseparable part of the ANM near Villers-Bretonneux. It is unclear why the French sites together attract only about a quarter as many comments (515) as the Australian site (1975). Fortunately, this discrepancy has little bearing on the study, as there is enough data from both sites to identify any etic differences. All data was groomed: spelling errors were corrected, acronyms were expanded (with the exception of ANZAC/Anzac), and repeats were avoided. The comments quoted as evidence in the manuscript had their original errors reinstated for both context and truth, as is the convention.
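The grooming steps can be sketched in Python as acronym expansion followed by removal of repeated comments. The acronym table and the normalisation rule (ignoring case and extra whitespace when detecting repeats) are assumptions; spelling correction is omitted because it would require a dictionary or a manual pass that the study does not specify. Whole-word matching leaves ANZAC untouched, as the grooming rule requires.

```python
import re

# Hypothetical acronym table; ANZAC/Anzac is deliberately not expanded.
ACRONYMS = {
    "NAC": "National Anzac Centre",
    "SJMC": "Sir John Monash Centre",
    "ANM": "Australian National Memorial",
}
_ACRONYM_RE = re.compile(r"\b(" + "|".join(map(re.escape, ACRONYMS)) + r")\b")


def expand_acronyms(text):
    """Replace whole-word acronyms only, so 'ANZAC' is left untouched."""
    return _ACRONYM_RE.sub(lambda m: ACRONYMS[m.group(1)], text)


def groom(comments):
    """Normalise whitespace, expand acronyms, and keep the first copy of each repeated comment."""
    seen, groomed = set(), []
    for comment in comments:
        text = expand_acronyms(" ".join(comment.split()))
        key = text.lower()  # repeats are detected case-insensitively
        if key not in seen:
            seen.add(key)
            groomed.append(text)
    return groomed


raw = ["Loved the NAC.", "loved the  NAC.", "ANZAC spirit alive at the SJMC"]
print(groom(raw))
```

Expanding acronyms before checking for repeats means a comment written with ‘NAC’ and its copy written out in full are treated as the same comment.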

The combined sample is considered non-purposive in that, at the time of collection, the comments were the most recently available. Initially, manual extraction of an arbitrary 500 comments gave way to the use of the social media management software Social Studio, which was later superseded by the dedicated scraping software Octoparse V8 beta. By 2020 all available comments could be scraped and used. There is also a smaller collateral data set of 500 transcribed visitor book comments, plus hand-written notes, digital photographs and a video taken inside the NAC (with permission).

This associated ethnography informed contextualisation. The familiarisation phase included four days of participant observation at the NAC and two days at the SJMC. The NAC engagement in Western Australia was divided into two 2018 visits three months apart, with two researchers interacting with the public and staff. The 2019 SJMC engagement involved two days in situ, with one researcher interacting with the public and staff. Unlike the social media comments, the study’s participant observation is considered purposive, as it entailed targeted engagement with key custodians (see Denzin & Lincoln, 2000, 2018; Silverman, 2010, 2013a, 2013b). This included semi-structured interviews with the National Anzac Centre manager, the marketing officer for the City of Albany and the Deputy Director of the Sir John Monash Centre. The Chief Executive Officer of the City of Albany and the Director of the Sir John Monash Centre were later approached for member-checking of the manuscript. The first author’s military service undoubtedly informed the analysis, as did the second author’s non-military provenance. Using multiple methods, sites, data and researchers satisfies the tenet of triangulation.

Results

AI analysis [Leximancer]

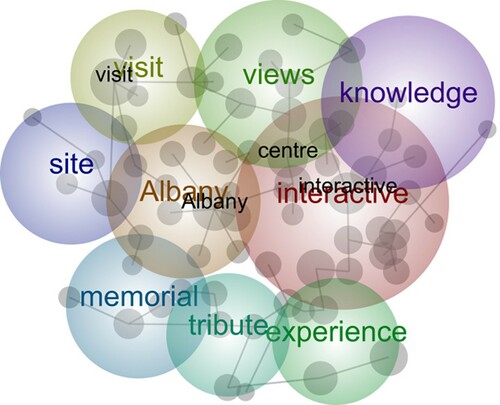

Machine thematic analysis is underpinned by Leximancer’s proprietary ontological bubble maps and associated metric-driven graphs. The concept map above represents the combined data sets of the SJMC/ANM and the NAC using default programme settings. These findings also serve as the equivalent of a control group for the other machine outputs, and the results appear stable.

The thematic bubble map is a ‘zoomed out’ general overview of the textual data. By manipulating the map’s magnification setting and cross-referencing against tabulated quantitative data, the researcher can identify broad themes, concepts, and terms. These summary quantities indicate relative importance and highlight the automatically generated terms that cluster through co-occurrence.

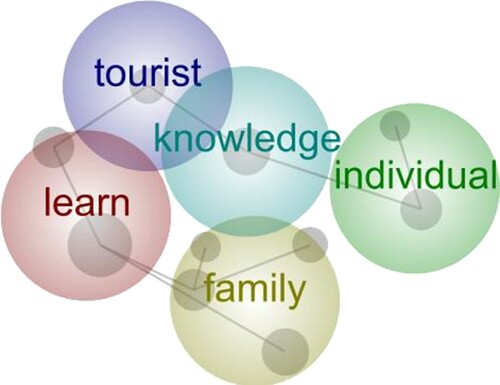

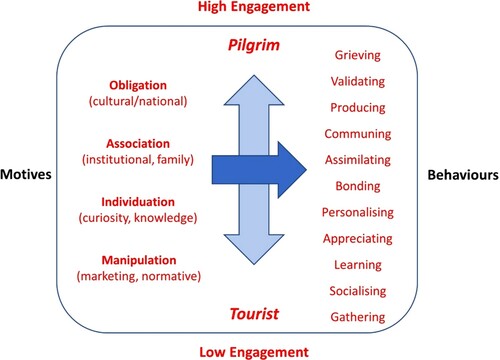

The concept map above represents Leximancer’s analysis using user-defined seed concepts only. This was done to test the programme’s deductive ability. In this case, Leximancer was searching for constructs parsed from the metamodel. The result is one cluster, or nexus, of five identified themes: ‘Tourist’, ‘Knowledge’, ‘Individual’, ‘Learn’ and ‘Family’. Unsurprisingly, Individual and Family are separated in the results, indicating minimal, if any, co-occurrence. In contrast, ‘tourist’, ‘knowledge’ and ‘learn’ often occur in the same sentence blocks in the data. What can one glean from this? One interpretation is that tourists attend commemorative centres to acquire knowledge. This satisfies one element or dynamic in the metamodel: the typified behaviour of ‘Learning’. It is also correctly placed at the lower end of the Pilgrim/Tourist engagement continuum. Arguably, the machine has correctly deduced a previously published conceptual nexus that includes a process. The machine has also correctly identified individual concepts: for example, it separated Individuation from Association (motives for visitation) and identified three other key concepts: Knowledge [learning], Engagement [with commemoration, not each other], and Culture. The limitation is that the metamodel contains 15 more constructs and processes, which eluded discovery by AI. Given the richness of the four studies comprising the metamodel, the absence of more related concepts is concerning and warrants further investigation.

Not shown on the cropped deductive CA map are three concepts that Leximancer discovered but that have no co-occurrence with other concepts: ‘gather’, ‘engage’ and ‘culture’. These concepts are synonyms from the list of 30 seeded words. On further investigation, a manual search shows that Respondent SM147’s use of ‘gather’ is not intended to indicate a congregation of people but rather to communicate a conclusion: ‘I gather the Australian government is planning … ’. In contrast, Respondent SM533 uses ‘gather’ to describe soldiers gathering on ships, rather than the intended sense of visitors gathering as a motive for attendance. Similarly, SM837, SM1055, SM1275, SM1456 and SM1489 use ‘gather’ when referring to the ships anchored in Albany harbour.

SM142 refers to ‘engage’ in the context of storytelling: ‘I found the [personal] stories to [be] most engaging’. SM624 uses it to communicate engagement with young and old. SM794 talks about how (presumably their own) teenagers changed from being initially skeptical to ‘really engaged’. SM1235 refers to it in the context of human interaction, specifically the staff being knowledgeable and ‘happy to engage’. SM1103, SM1291, SM1402 and SM1493 refer to ‘engage’ in the context of a plural entity having affinity with various types of visitors. SM2115 talks about the quality of experience, referring to a ‘personalized’ engagement. While AI is utterly precise in metric manipulation, it is not yet capable of discerning context. This lack of contextual delineation [awareness] appears to pollute the findings with faux importance. AI was, however, capable of deducing one process and five concepts from the metamodel. As far as induction or theme building is concerned, the themes identified from occurrence and co-occurrence appear anodyne. For example, we know the topic is related to ‘memorial’ and ‘tribute’ and are aware that Tripadvisor comments relate to ‘Experience’ and ‘Visit’. If one removes these concepts, there is little left of phronetic value. In this situation, AI was incapable of deep thought. AI’s deductive results are highlighted in blue in the corresponding figure.
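The contextual check described above, reading each occurrence of a seed word within its surrounding text, is essentially a keyword-in-context (KWIC) listing. A minimal Python sketch follows. The example comments are invented paraphrases of the two senses of ‘gather’ discussed above, not respondent data, and the prefix match (so that ‘gathered’ or ‘engaging’ are also caught) is a design assumption. The listing only surfaces context; deciding which sense is intended remains a human judgement.

```python
import re


def kwic(comments, keyword, window=4):
    """Keyword-in-context: each hit of `keyword` (prefix match) with `window` words either side."""
    rows = []
    for comment_id, text in comments:
        words = text.split()
        for i, word in enumerate(words):
            # Strip punctuation before matching, but keep the original word in the output.
            if re.sub(r"\W", "", word.lower()).startswith(keyword):
                left = " ".join(words[max(0, i - window):i])
                right = " ".join(words[i + 1:i + 1 + window])
                rows.append((comment_id, left, word, right))
    return rows


# Invented paraphrases of two senses of 'gather'; not respondent data.
sample = [
    ("X1", "I gather the government is planning changes"),
    ("X2", "Troops gathered on ships in the harbour"),
]
for comment_id, left, hit, right in kwic(sample, "gather", window=2):
    print(f"{comment_id}: ...{left} [{hit}] {right}...")
```

A frequency count would score both lines identically as evidence for ‘gather’; the listing makes the difference in sense visible at a glance.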

Human analysis (DTA)

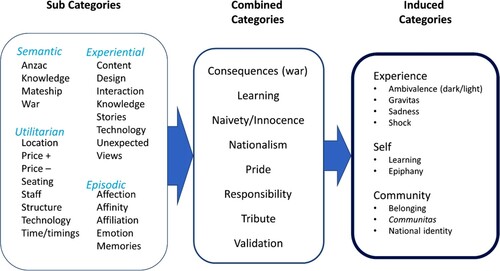

Manual (human) manipulation of the data incorporated two simultaneous logic processes: induction and deduction. The inductive process followed the steps prescribed by Braun and Clarke’s (2006, 2019) TA: coding, theme searching, theme refining and theme defining (see the figure above). Initially, comments were sorted into bucket domains and categorised into four early themes: Semantic (commemorative meanings), Experiential (the experience of visitation), Utilitarian (the logistics of visitation) and Episodic (affective responses to visitation). Consolidation into eight combined categories with more specific headings represents theme refining, followed by the final phase of three overarching themes: the Experience of commemoration, the development of Self, and commemoration as the fabric of Community.

In the final process, the eight categories are further refined into three summative genres. These act as hypernyms for contextual themes. Experience emerged as a recurring theme among respondents as they used social media to share their rational thoughts and emotions. Ambivalence refers to the juxtaposed extremes of dark and light. While commemoration is ostensibly the manifestation of communal memory, it is the traits that stand in antithesis to war that visitors appreciate and valorise. There is an ambivalence at play here: while war and its associated memories are showcased, so too are the positive traits that emerge in spite of war, namely courage, sacrifice, endurance, compassion and caritas [unselfish care for others]. More specifically, commemorative commentary contains varying degrees of respect and gravitas, with the majority written in a tone of serious awe. Meanwhile, others profess shock at the enormity of what they thought they knew but had not stopped to consider. The scale of death and destruction and the magnitude of emotion evoke surprise and a sense of inner conflict.

There is evidence in the data of all concepts and processes contained in the metamodel (.). This goes some way towards supporting the deduction of previously published concepts and processes. A summary of the human deductive reasoning applied to the data is indicated in red ().

Table 1. Human deduction − evidentiary examples.

Discussion

While AI did not perform as well in this instance as one would have hoped, we must be mindful to separate its performance from the elephant in the room. That elephant is the common belief that AI is fundamentally different from us, exemplified by its frequent portrayal as the bogeyman in popular fiction. By ‘othering’ AI, we see it as separate from us, thereby encouraging notions that it lies beyond our control, our understanding, and ultimately user [researcher] responsibility. Allied with this is the fallacy that AI is objectively precise and that its results are therefore superior to ours. Further still is the belief that all of this is inevitable and we should simply accept it. Yet AI is the product of human programming and choices, and humans are flawed. AI reflects these flaws, not only through the GIGO (Garbage In, Garbage Out) principle but also through flawed premises and the programming itself. Examples exist of AI that became flawed through either its initial programming or subsequent interaction. In 2018, Amazon was reported to have scrapped an experimental recruitment programme that had developed a bias against women (Dastin, 2018). Similarly, Microsoft’s chatbot learned anti-Semitic terms from Twitter users, and software designed to recognise facial emotions has been known to be biased in labelling certain ethnicities as happy or angry (Johnson, 2021).

Whether AI results are free from programmed or learned bias is one concern. Another is the importance, and relative importance, that the machine assigns to its output. This matters because the value of the result is the reason research exists at all. Research is often targeted, with the expectation that it will lead to phronetic wisdom; researchers are therefore expecting, and vigilant for, results that benefit their commission or society in some way. AI does not [yet] know this, and in that sense any AI result will likely fall short of our expectations. We must be careful what we wish for as we imagine delegating more control of our research to a machine. Are we willing to cede stewardship of knowledge creation to a computer? While Leximancer’s engineers stress the importance of human interpretation, in other words a symbiotic relationship, one cannot help but notice that the researcher is increasingly relegated. In the forensic chain of knowledge creation, this relegation extends not only to AI determining what the phenomenon is, but also to determining its importance to humanity. In some respects, we are there already: “You just do what you’re told by the app” (Interview 31, Veen et al., 2019; see also ‘Algocratic oversight’ in Aneesh, 2009; Lee et al., 2015). Perhaps this is a Pandora’s box representing one possible future.

Returning to the micro implications of this study, with respect to deducing a pre-theorised metamodel, AI appears less insightful than human analysis. Many themes comprising the metamodel appear in the data yet do not appear in the seeded result. Leximancer did not reveal the full gamut of what appears obvious to researchers who have lived the experience, perhaps because its programming constraints are textual rather than contextual. The programme’s thesaurus is semantic as opposed to episodic. While concepts such as ‘learning’ and ‘family’ are revealed, more esoteric, nuanced, and affective concepts such as gravitas and community are not. These concepts transcend the data: the words that name them do not themselves appear in it. One may offer this by way of explanation and apology. One also notes a lack of emphasis on social relationships: for example, between soldiers, between soldiers and civilians, between soldiers and nation, and between soldiers and their animals. Also missing is the overwhelming appreciation of national pride, sadness, loss and [secular] caritas.
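The textual rather than contextual constraint can be sketched with a toy seeded-concept tagger in Python. This is a hypothetical illustration, not Leximancer's seeding or thesaurus mechanism: the `SEEDS` dictionary, the `tag` function and both comments are invented for the example and are not study data. A comment that uses a seed word for 'family' is tagged immediately, whereas a comment that conveys solemnity without using any seed word returns no concept at all, which mirrors the pattern described above for gravitas and community.

```python
import re

# Hypothetical seed thesaurus: concept -> seed words. An assumption for
# illustration only, not Leximancer's actual seeding or thesaurus.
SEEDS = {
    "family": {"family", "kids", "children"},
    "gravitas": {"gravitas", "solemn", "solemnity"},
}

# Invented example comments (not study data).
COMMENTS = [
    "Great day out for the family, the kids loved it",
    "We stood in silence; nobody spoke on the walk back",
]


def tag(comment, seeds):
    """Tag a comment with every concept whose seed words literally appear in it."""
    words = set(re.findall(r"[a-z']+", comment.lower()))
    return {concept for concept, terms in seeds.items() if words & terms}


if __name__ == "__main__":
    for c in COMMENTS:
        # Purely lexical: a latent concept with no matching word is invisible.
        print(tag(c, SEEDS) or "no concept found", "<-", c)
```

Extending the seed list would catch more surface forms, but any such list still depends on the feeling being expressed in anticipated words, which is where contextual familiarity matters.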

Given familiarity with the phenomenon through (1) contextual priming, (2) triangulation across data sources, (3) triangulation across researchers, and (4) member checks, the inference is irresistible: in the area of deductive thematic analysis, AI’s output has the capacity to distract from reality. AI also failed to highlight key latent themes that the researchers considered relevant. For conventional content analysis, however, AI was genuinely helpful in summarising over 100,000 words; compared with manual coding, the saving in time and effort was stark. In a time-poor workplace with Big data, AI is not only useful but also seductive. Given these advantages and shortfalls, Leximancer may be better suited to a multi-method research design.

One counter that Leximancer advocates might offer to this criticism is that researchers may not appreciate the literal nature of the process. As Scharkow (2013) notes, “The computer has difficulties with categories that rely on contextual knowledge” (p. 771). Software retailers currently counsel that human insight provides the missing link. Therein lies the distinction between machine and human analysis: AI is descriptive, while human analysis is unavoidably, and in many cases unashamedly, interpretive. From an AI advocate’s perspective, this could be construed as a limitation of this study: that we are comparing apples with oranges, and the findings are therefore fraught. Human analysis focuses on theme generation, whereas AI, it can be argued, focuses on one of two CA approaches: syntactical or lexical.

The corollary to this limiting claim is that Leximancer is doing precisely what it is designed to do: nothing is amiss, and it is we who are expecting too much. This is a fair point, but if that is the case, why is Leximancer marketed as a theme-generating tool (Angus, 2014; Leximancer, 2018; Smith & York, 2016)? A further limitation is the use of Leximancer as representative of all analytical programmes. It is obviously not, and other programmes may have been more or less successful in recognising salient themes. Our definition of success in this instance is results benchmarked against what humans found in the same data. This is a world in which humans ultimately decide what is important, and so we are, by default, the gold standard of research phronesis. To be fair to the machine, we made up the rules; both players competed under the same rules, but then we decided who won. In the end, this is our game.

Conclusions

Implied criticism and countercriticism aside, the advice when using computer-assisted CA is (1) familiarity with the data and (2) compulsory immersion in the context, preferably literally and prior to analysis. The principle of Gestalt resonates here: the surrounding context is critical to making sense of social interaction. Such context-derived interpretations will often be polluted by goals, expectations, and assumptions; however, let us not forget that human perception often subordinates reality. In the context of social interaction, it matters less what is real and more what people think is real. This highlights a major distinction between AI and human analysis. AI will always give what is real when, ironically, the more useful outcome is that which relates to human preconception, context, circumspection, and practical wisdom.

On the importance of context, we must bear in mind that contextualising will occur at some point, even when the data is analysed by AI ‘untouched’ by human hands. If one accepts the inevitability of contextualisation by the researcher, then surely the stage at which it occurs, whether the start, the middle, or the end of the research process, is moot. Nor is context homogeneous across sites and time; it is individually constructed and reconstructed to suit the cultural narrative of the commission, and the moment.

Included in the contextual narrative are memories, both individual and collective. Memories of social phenomena are dynamic, forming unique, fleeting interpretive frames through which meaning is derived. Poignant social discourse such as commemoration is nuanced, constructed from multiple perspectives, and influenced by agendas. While a machine-driven ontological result is a neat, descriptive package, it also appears in this case to be somewhat bland. Useful meaning, on the other hand, transcends descriptive meaning, as it is ultimately derived from human perceptions of context and commission. Less controversial, therefore, is using AI to complement rather than control the analytical process. Complementing implies augmenting human analysis while leaving the triaging of relative importance to humans.

Regardless, a discussion of where computer analytics fits in research is long overdue. While it is not the authors’ intention to generalise these findings to a population of research commissions, the overarching advice is generic: temper any hype that AI-derived analysis is inviolable, or that it is currently more than an analytical prosthetic. Unfortunately, there is no insightful ghost in the machine, not yet.

Acknowledgements

We wish to thank our colleagues Eunjung Kim and Stephen Fanning for their assistance in making this project possible. We also wish to thank the wonderful staff at the National Anzac Centre, the City of Albany, the Department of Veterans’ Affairs (Australia) and the Sir John Monash Centre (France) for their participation and patience.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abreu Novais, M., Ruhanen, L., & Arcodia, C. (2018). Destination competitiveness: A phenomenographic study. Tourism Management, 64, 324–334. https://doi.org/10.1016/j.tourman.2017.08.014

- Allem, L. (Presenter). (2014, September 21). A history of forgetting: From shellshock to PTSD. Radio National [Australian Broadcasting Corporation].

- Aneesh, A. (2009). Global labor: Algocratic modes of organization. Sociological Theory, 27(4), 347–370. https://doi.org/10.1111/j.1467-9558.2009.01352.x

- Angus, D. (2014, April 3). Leximancer tutorial 2014 [Video]. https://www.youtube.com/watch?v=F7MbK2AF0qQ&t=2228s

- Austin, N. (2002). Managing heritage attractions: Marketing challenges at sensitive historical sites. International Journal of Tourism Research, 4(6), 447–457. https://doi.org/10.1002/jtr.403

- Barlas, P. (2013). Defining ‘Big data’ not so easy a task it’s a ‘moving target’ processing vast troves of information to get strategic insights is key. Investor’s Business Daily, A04, 04.

- Bigley, J., Lee, C., Chon, J., & Yoon, Y. (2010). Motivations for war-related tourism: A case of DMZ visitors in Korea. Tourism Geographies, 12(3), 371–371. https://doi.org/10.1080/14616688.2010.494687

- Biran, A., Poria, Y., & Oren, G. (2011). Sought experiences at (dark) heritage sites. Annals of Tourism Research, 38(3), 820–841. https://doi.org/10.1016/j.annals.2010.12.001

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Braun, V., & Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qualitative Research in Sport, Exercise and Health, 11(4), 589–597. https://doi.org/10.1080/2159676X.2019.1628806

- Braun, V., Clarke, V., Hayfield, N., & Terry, G. (2019). Thematic analysis. In P. Liamputtong (Ed.), Handbook of research methods in health social sciences (pp. 843–860). Springer.

- Buda, D. M. (2015). The death drive in tourism studies. Annals of Tourism Research, 50, 39–51. https://doi.org/10.1016/j.annals.2014.10.008

- Çakar, K. (2018). Experiences of visitors to Gallipoli, a nostalgia-themed dark tourism destination: An insight from TripAdvisor. International Journal of Tourism Cities, 4(1), 98–109. https://doi.org/10.1108/IJTC-03-2017-0018

- Çakar, K. (2020). Investigation of the motivations and experiences of tourists visiting the Gallipoli peninsula as a dark tourism destination. European Journal of Tourism Research, 24, 1–30. https://www.proquest.com/scholarly-journals/investigation-motivations-experiences-tourists/docview/2369752932/se-2?accountid=10675

- Cave, J., & Buda, D. (2018). The Palgrave handbook of dark tourism studies. Palgrave Macmillan UK.

- Cheng, M. (2016). Sharing economy: A review and agenda for future research. International Journal of Hospitality Management, 57, 60–70. https://doi.org/10.1016/j.ijhm.2016.06.003

- Chiu, W., Bae, J. S., & Won, D. (2017). The experience of watching baseball games in Korea: An analysis of user-generated content on social media using Leximancer. Journal of Sport & Tourism, 21(1), 33–47. https://doi.org/10.1080/14775085.2016.1255562

- Clarke, V. (2017, December 9). What is thematic analysis? [Video]. https://www.youtube.com/watch?v=4voVhTiVydc

- Coulthard-Clark, C. D. (1993). The diggers. Melbourne University Press.

- Creswell, J. W. (2007). Qualitative inquiry & research design: Choosing among five approaches (2nd ed.). Sage Publications.

- Dastin, J. (2018, October 11). Amazon scraps secret AI recruitment tool that showed bias against women. Reuters. https://www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G

- Denzin, N. K., & Lincoln, Y. S. (1998). Strategies of qualitative inquiry. Sage Publications.

- Denzin, N. K., & Lincoln, Y. S. (2000). Handbook of qualitative research. Sage Publications.

- Denzin, N. K., & Lincoln, Y. S. (2018). The Sage handbook of qualitative research (5th ed.). SAGE.

- Dongwook, K., & Sungbum, K. (2017). The role of mobile technology in tourism: Patents, articles, news, and mobile tour app reviews. Sustainability, 9(11), 2082. https://doi.org/10.3390/su9112082

- Downes, S., Lynch, A., & O’Loughlin, K. (2015). Emotions and war: Medieval to romantic literature: Palgrave studies in the history of emotions. Palgrave Macmillan.

- Dunkley, R., Morgan, N., & Westwood, S. (2011). Visiting the trenches: Exploring meanings and motivations in battlefield tourism. Tourism Management, 32(4), 860–868. https://doi.org/10.1016/j.tourman.2010.07.011

- Ekins, A. (2010). 1918 year of victory: The end of the Great War and the shaping of history (1st ed.). Exisle Publishing.

- Ekins, A., & Barron, J. (2014, April 23). Where did the myths of Gallipoli originate? ABC News. https://www.abc.net.au/news/2014-04-24/where-did-the-myths-about-gallipoli-originate/5410642

- Elo, S., & Kyngäs, H. (2008). The qualitative content analysis process. Journal of Advanced Nursing, 62(1), 107–115. https://doi.org/10.1111/j.1365-2648.2007.04569.x

- Fathi, R. (2019). Do ‘the French’ care about Anzac? The Conversation. https://theconversation.com/friday-essay-do-the-french-care-about-anzac-110880

- Fitzsimons, P. (2014). Gallipoli. Random House Australia.

- Foley, M., & Lennon, J. J. (1996). JFK and dark tourism: A fascination with assassination. International Journal of Heritage Studies, 2(4), 198–211. https://doi.org/10.1080/13527259608722175

- Glaser, B., & Strauss, A. (1967). The discovery of grounded theory: Strategies for qualitative research. Aldine Pub.

- Gross, A. (2006). Holocaust tourism in Berlin: Global memory, trauma and the ‘negative sublime’. Journeys, 7(2), 73–100. https://doi.org/10.3167/jys.2006.070205

- Halbwachs, M. (1992). On collective memory (L. Coser, Ed.). University of Chicago Press.

- Hall, J., Basarin, J., & Lockstone-Binney, L. (2010). An empirical analysis of attendance at a commemorative event: Anzac Day at Gallipoli. International Journal of Hospitality Management, 29(2), 245–253. https://doi.org/10.1016/j.ijhm.2009.10.012

- Haynes, E., Garside, R., Green, J., Kelly, M., Thomas, J., & Guell, C. (2019). Semiautomated text analytics for qualitative data synthesis. Research Synthesis Methods, 10(3), 452–464. https://doi.org/10.1002/jrsm.1361

- Hickey, G., & Kipping, C. (1996). Issues in research. A multi-stage approach to the coding of data from open-ended questions. Nurse Researcher, 4(1), 81–91. https://doi.org/10.7748/nr.4.1.81.s9

- Hirsch, M. (2008). The generation of postmemory. Poetics Today, 29(1), 103–128. https://doi.org/10.1215/03335372-2007-019

- Hirsch, M. (2012). The generation of postmemory: Writing and visual culture after the Holocaust. Columbia University Press.

- Holt, D. B. (1995). How consumers consume: A typology of consumption practices. Journal of Consumer Research, 22(1), 1–16. https://doi.org/10.1086/209431

- Hsieh, H., & Shannon, S. (2005). Three approaches to qualitative content analysis. Qualitative Health Research, 15(9), 1277–1288. https://doi.org/10.1177/1049732305276687

- Hyde, K., & Harman, S. (2011). Motives for a secular pilgrimage to the Gallipoli battlefields. Tourism Management, 32(6), 1343–1351. https://doi.org/10.1016/j.tourman.2011.01.008

- Johnson, S. (2021, February 8). Why ‘Othering’ AI keeps us from understanding it. Techonomy. https://techonomy.com/2021/02/why-othering-ai-keeps-us-from-understanding-it/?utm_source=pocket-newtab-intl-en

- Lee, M. K., Kusbit, D., Metsky, E., & Dabbish, L. (2015). Working with machines: The impact of algorithmic and data-driven management on human workers. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 1603–1612). ACM.

- Leech, N. L., & Onwuegbuzie, A. J. (2011). Beyond constant comparison qualitative data analysis: Using NVivo. School Psychology Quarterly, 26(1), 70–84. https://doi.org/10.1037/a0022711

- Leximancer. (2018, April). Leximancer user guide, Release 4.5. Leximancer.

- Li, J., Pearce, P. L., & Low, D. (2018). Media representation of digital-free tourism: A critical discourse analysis. Tourism Management, 69, 317–329. https://doi.org/10.1016/j.tourman.2018.06.027

- Light, D. (2017). Progress in dark tourism and thanatourism research: An uneasy relationship with heritage tourism. Tourism Management, 61, 275–301. https://doi.org/10.1016/j.tourman.2017.01.011

- Lohr, S. (2013a, February 1). The origins of ‘Big data’: An etymological detective story. New York Times. https://bits.blogs.nytimes.com/2013/02/01/the-origins-of-big-data-an-etymological-detective-story/

- Lohr, S. (2013b, February 11). Searching for the origins of the term Big data. Telegraph-Journal. http://ezproxy.ecu.edu.au/login?url=https://search-proquest-com.ezproxy.ecu.edu.au/docview/1285449594?accountid=10675

- MacCarthy, M. J. (2017). Consuming symbolism: Marketing D-Day and Normandy. Journal of Heritage Tourism, 12(2), 191–203. https://doi.org/10.1080/1743873X.2016.1174245

- MacCarthy, M., & Heng Rigney, K. N. (2020). Commemorative insights: The best of life, in death. Journal of Heritage Tourism. https://doi.org/10.1080/1743873X.2020.1840572

- McGrath, S. J. (2006). Early Heidegger and medieval philosophy: Phenomenology for the godforsaken. https://ebookcentral.proquest.com

- Nawijn, J., & Fricke, M. C. (2015). Visitor emotions and behavioral intentions: The case of concentration camp memorial Neuengamme. International Journal of Tourism Research, 17(3), 221–228. https://doi.org/10.1002/jtr.1977

- Packer, J., Ballantyne, R., & Uzzell, D. (2019). Interpreting war heritage: Impacts of Anzac museum and battlefield visits on Australians’ understanding of national identity. Annals of Tourism Research, 76, 105–116. https://doi.org/10.1016/j.annals.2019.03.012

- Park, H. (2010). Heritage tourism: Emotional journeys into nationhood. Annals of Tourism Research, 37(1), 116–135. https://doi.org/10.1016/j.annals.2009.08.001

- Podoshen, J., Venkatesh, V., Wallin, J., Andrzejewski, S., & Jin, Z. (2015). Dystopian dark tourism: An exploratory examination. Tourism Management, 51, 316–328. https://doi.org/10.1016/j.tourman.2015.05.002

- Potter, W., & Levine-Donnerstein, D. (1999). Rethinking validity and reliability in content analysis. Journal of Applied Communication Research, 27(3), 258–284. https://doi.org/10.1080/00909889909365539

- Pratt, S., Tolkach, D., & Kirillova, K. (2019). Tourism & death. Annals of Tourism Research, 78, 1–12. https://doi.org/10.1016/j.annals.2019.102758

- Richardson, J. T. E. (1999). The concepts and methods of phenomenographic research. Review of Educational Research, 69(1), 53–82. https://doi.org/10.3102/00346543069001053

- Ritchie, J., & Spencer, L. (1994). Qualitative data analysis for applied policy research. In A. Bryman & R. G. Burgess (Eds.), Analysing qualitative data. Routledge.

- Ritchie, J., Spencer, L., & O’Connor, W. (2003). Carrying out qualitative analysis. In J. Ritchie & J. Lewis (Eds.), Qualitative research practice: A guide for social science researchers and students (pp. 173–194). Sage.

- Rodrigues, H., Brochado, A., Troilo, M., & Mohsin, A. (2017). Mirror, mirror on the wall, who’s the fairest of them all? A critical content analysis on medical tourism. Tourism Management Perspectives, 24, 16–25. https://doi.org/10.1016/j.tmp.2017.07.004

- Roppola, T., Packer, J., Uzzell, D., & Ballantyne, R. (2019). Nested assemblages: Migrants, war heritage, informal learning and national identities. International Journal of Heritage Studies, 25(11), 1205–1223. https://doi.org/10.1080/13527258.2019.1578986

- Ryan, C. (2000). Tourist experiences, phenomenographic analysis, post-postivism and neural network software. International Journal of Tourism Research, 2(2), 119–131. https://doi.org/10.1002/(SICI)1522-1970(200003/04)2:2<119::AID-JTR193>3.0.CO;2-G

- Ryan, C. (2007). Battlefield tourism: History, place and interpretation. Advances in Tourism Research. Elsevier. https://www-sciencedirect-com.ezproxy.ecu.edu.au/book/9780080453620/battlefield-tourism

- Sandelowski, M. (1995). Qualitative analysis: What it is and how to begin. Research in Nursing & Health, 18(4), 371–375. https://doi.org/10.1002/nur.4770180411

- Scates, B. (2002). In Gallipoli’s shadow: Pilgrimage, memory, mourning and the Great War. Australian Historical Studies, 33(119), 1–21. https://doi.org/10.1080/10314610208596198

- Scates, B. (2006). Return to Gallipoli: Walking the battlefields of the Great War. Cambridge University Press.

- Scharkow, M. (2013). Thematic content analysis using supervised machine learning: An empirical evaluation using German online news. Quality and Quantity, 47(2), 761–773. https://doi.org/10.1007/s11135-011-9545-7

- Schweinsberg, S., Darcy, S., & Cheng, M. (2017). The agenda setting power of news media in framing the future role of tourism in protected areas. Tourism Management, 62, 241–252. https://doi.org/10.1016/j.tourman.2017.04.011

- Seaton, A. V. (1996). Guided by the dark: From thanatopsis to thanatourism. International Journal of Heritage Studies, 2(4), 234–244. https://doi.org/10.1080/13527259608722178

- Silverman, D. (2010). Doing qualitative research (3rd ed.). Sage.

- Silverman, D. (2013a). A very short, fairly interesting and reasonably cheap book about qualitative research (2nd ed.). Sage.

- Silverman, D. (2013b). What counts as qualitative research? Some cautionary comments. Qualitative Sociology Review, 9(2), 48–55. https://www.proquest.com/scholarly-journals/what-counts-as-qualitative-research-some/docview/1425547054/se-2?accountid=10675

- Silvia, S.-B., Daniela, B., & Walesska, S. (2019). The sustainability of cruise tourism onshore: The impact of crowding on visitors’ satisfaction. Sustainability, 11(6), 1510. https://doi.org/10.3390/su11061510

- Smith, L. (2014). Visitor emotion, affect and registers of engagement at museums and heritage sites. Conservation Science in Cultural Heritage, 14(2), 125–132. https://doi.org/10.6092/issn.1973-9494/5447

- Smith, A., & York, S. (2016, July 21). What’s new in Leximancer V4.5 [Video]. https://www.youtube.com/watch?v=FB7KIQSE4-Q

- Sotiriadou, P., Brouwers, J., & Le, T. (2014). Choosing a qualitative data analysis tool: A comparison of NVivo and Leximancer. Annals of Leisure Research, 17(2), 218–234. https://doi.org/10.1080/11745398.2014.902292

- Sperling, D. (2019). Suicide tourism: Understanding the legal, philosophical, and socio-political dimensions. Oxford Scholarship Online. www.oxfordscholarship.com

- Stephens, J. R. (2007). Memory, commemoration and the meaning of a suburban war memorial. Journal of Material Culture, 12(3), 241–261. https://doi.org/10.1177/1359183507081893

- Stephens, J. R. (2014). Sacred landscapes: Albany and Anzac pilgrimage. Landscape Research, 39(1), 21–39. https://doi.org/10.1080/01426397.2012.716027

- Taylor, C. (1977). Hegel. Cambridge University Press.

- Tesch, R. (1990). Qualitative research: Analysis types and software tools. Falmer.

- Timothy, D. (2011). Cultural heritage and tourism: An introduction. Channel View Publications.

- Tunbridge, J. E., & Ashworth, G. J. (1996). Dissonant heritage: The management of the past as a resource in conflict. John Wiley.

- van Berkel, N., Tag, B., Goncalves, J., & Hosio, S. (2020). Human-centred artificial intelligence: A contextual morality perspective. Behaviour & Information Technology, 1–17. https://doi.org/10.1080/0144929X.2020.1818828

- Veen, A., Barratt, T., & Goods, C. (2019). Platform-capital’s ‘app-etite’ for control: A labour process analysis of food delivery work in Australia. Work, Employment and Society, 34(3), 388–406. https://doi.org/10.1177/095001701983691

- Waterton, E. (2014). A more-than-representational understanding of heritage? The ‘past’ and the politics of affect. Geography Compass, 8(11), 823–833. https://doi.org/10.1111/gec3.12182

- Weber, R. P. (1990). Qualitative content analysis. https://methods-sagepub-com.ezproxy.ecu.edu.au/book/basic-content-analysis/n1.xml

- Weissman, G. (2018). Fantasies of witnessing: Postwar efforts to experience the Holocaust. Cornell University Press. https://doi.org/10.7591/9781501730054

- Welsh, E. (2002). Dealing with data: Using NVivo in the qualitative data analysis process. Forum: Qualitative Social Research, 3(2), 1–9.

- Wen, J., Yu, C., & Goh, E. (2019). Physician-assisted suicide travel constraints: Thematic content analysis of online reviews. Tourism Recreation Research, 44(4), 553–557. https://doi.org/10.1080/02508281.2019.1660488

- Wilk, V., Soutar, G., & Harrigan, P. (2019). Tackling social media data analysis. Qualitative Market Research, 22(2), 94–113. https://doi.org/10.1108/QMR-01-2017-0021

- Winter, C. (2009). Tourism, social memory and the Great War. Annals of Tourism Research, 36(4), 607–626. https://doi.org/10.1016/j.annals.2009.05.002

- Winter, C. (2010). Battlefield visitor motivations: Explorations in the Great War town of Ieper, Belgium. International Journal of Tourism Research, 13(2), 164–176. https://doi.org/10.1002/jtr.806

- Winter, C. (2011). First World War cemeteries: Insights from visitor books. Tourism Geographies, 13(3), 462–479. https://doi.org/10.1080/14616688.2011.575075

- Winter, C. (2019a). Pilgrims and votives at war memorials: A vow to remember. Annals of Tourism Research, 76, 117–128. https://doi.org/10.1016/j.annals.2019.03.010

- Winter, C. (2019b). Touring the battlefields of the Somme with the Michelin and Somme tourisme guidebooks. In M. Kirby, M. Baguley, & J. McDonald (Eds.), The Palgrave handbook of artistic and cultural responses to war since 1914: The British Isles, the United States and Australasia (pp. 99–115). Springer International Publishing and Palgrave Macmillan.

- Winter, J., & Prost, A. (2005). The Great War in history: Debates and controversies, 1914 to the present. Cambridge University Press.