ABSTRACT

Algorithmic discrimination has become one of the critical points in the discussion about the consequences of an intensively datafied world. While many scholars address this problem from a purely techno-centric perspective, others try to raise broader social justice concerns. In this article, we join those voices and examine norms, values, and practices among European civil society organizations in relation to the topic of data and discrimination. Our goal is to decenter technology and bring nuance into the debate about its role and place in the production of social inequalities. To accomplish this, we rely on Nancy Fraser’s theory of abnormal justice, which highlights interconnections between maldistribution of economic benefits, misrecognition of marginalized communities, and their misrepresentation in political processes. Fraser’s theory helps situate technologically mediated discrimination alongside other, more conventional kinds of discrimination and injustice, and it privileges attention to the economic, social, and political conditions of marginality. Using a thematic analysis of 30 interviews with civil society representatives across Europe’s human rights sector, we bring clarity to this idea of decentering. We show how many groups prioritize the specific experiences of marginalized groups and ‘see through’ technology, acknowledging its connection to larger systems of institutionalized oppression. This decentered approach contrasts with the process-oriented perspective of tech-savvy civil society groups that shy away from an analysis of systemic forms of injustice.

Contemporary discussion about automated computer systems[1] is feeding into a moral panic for which technology is the savior. As mentioned in various (and usually United States-based) news stories and popular discourse, systems powered by bad data, bad algorithmic models, or both lead to ‘high-tech’ discrimination – misclassifications, over-targeting, disqualifications, and flawed predictions that affect some groups, such as historically marginalized ones, more than others. To remedy this problem, many argue that the introduction of fair, accountable, and transparent machine learning will thwart biased, racist, or sexist automated systems, or so the story goes.

But what computer scientists, engineers, and industry evangelists of fair machine learning get wrong is their assumption that technical tweaks suffice to prevent or avoid discriminatory outcomes. This weakness stems not only from the fact that fairness, the counterpart to discrimination, means many different things depending on one’s normative understanding of equality. It also derives from the fact that these competing frameworks marshal different resources and remedies, which variously involve laws, institutional policies, and procedures and which also require cultural transformation to shift people’s behaviors, norms, and practices towards individuals and groups that differ from the status quo. Moreover, as Young (1990) explains, discrimination is tied to larger processes of oppression, which leave socially different groups susceptible to violence, marginalization, exploitation, cultural imperialism, and powerlessness.

In this article, we grapple with the insufficiency of a techno-centric focus on data and discrimination by decentering debates on algorithmic bias and data injustices and connecting them to ongoing and often entrenched debates about traditional discrimination and injustice that are not technologically mediated. This reflexive turn requires acknowledging not only the growing threats of surveillance capitalism (Zuboff, 2019) but also other social institutions and practices that have contributed to the differential treatment of social groups.

To accomplish this aim, we briefly review the ‘techno-centricity’ of fairness, accountability, and transparency studies, as well as data justice studies, which adopt a more sociotechnical approach but nonetheless privilege technology. We then develop a normative ‘decentered’ framework that relies on Fraser’s (2010) recent theory of social justice. We use this framework to analyze how European civil society groups make sense of data and discrimination. Attending to ideas of maldistribution, misrecognition, and misrepresentation, we conduct a thematic analysis of interviews with 30 civil society representatives in Europe’s human rights sector. We show how many groups prioritize the specific experiences of marginalized groups and ‘see through’ technology, acknowledging its connection to larger systems of institutionalized oppression. This decentered approach contrasts with the process-oriented perspective of tech-savvy civil society groups that shy away from an analysis of systemic forms of injustice. We conclude by arguing for a plurality of approaches that challenge both discriminatory processes (technological or otherwise) and discriminatory outcomes and that reflect the interconnected nature of injustice today.

Technologically mediated discrimination

To appreciate the relevance of Fraser’s theory of justice, it is helpful to understand differences in how discussions of discrimination have centered technology. A comparison between the emergent fields of fairness, accountability, and transparency in machine learning, on the one hand, and data justice, on the other, also reveals how marginalization or systems of oppression do – and do not – feature alongside discussions of technology.

Fairness, accountability, transparency, and data justice in automated systems

Members of a highly influential field focus on engineering and technical choices to deal with problematic automated systems that risk harming specific groups. This field, known as fairness, accountability, and transparency studies, concentrates on various ethical dilemmas related to automated computer systems (Barocas, 2015).

At the outset, fairness, accountability, and transparency studies resonated with early explorations of the nature of bias in the design of computer systems (Friedman & Nissenbaum, 1996) and privacy-preserving data mining (Agrawal & Srikant, 2000). Computer scientists contended with the possibility that the data-mining or machine learning algorithms used to power automated systems could distinguish between people in ways that have negative social consequences (Pedreschi, Ruggieri, & Turini, 2008). To avoid designing systems whose automated decisions lead to prejudice, unfair treatment, and negative and unlawful discrimination, computer scientists and engineers conceptualized and modeled ways to identify and avoid the risk of negative discrimination in automated decision systems (Berendt & Preibusch, 2014).

Today, researchers have identified numerous criteria for determining whether machine learning is fair (Narayanan, 2018). As Gürses (2018) argues, the field has semantically moved away from the discovery and prevention of harms, bias, or discrimination. Instead, it casts algorithmic decision making and automated systems in a more positive light, as concerned with fairness, accountability, and transparency (see also Dwork & Mulligan, 2013).[2] Furthermore, as Binns (2018) suggests, fairness, accountability, and transparency studies fail to air their value-based assumptions about antidiscrimination or fairness, remaining inexplicit about their allegiances to any one normative framework. Moreover, the literature tends to neglect important, entrenched debates within political philosophy about the extent to which ‘all instances of disparity are objectionable’ (Binns, 2018, p. 2). As a result, the field ends up with technical solutions that are overly simplistic and ill-equipped to accommodate the complexity of social life. In fact, fairness, accountability, and transparency studies may overstate the power of technology, generally, and of ‘fairness constraints’ or parametric decision rules in automated computer systems, specifically, to achieve their intended design or engineering aims.

In contrast to fairness, accountability, and transparency scholars, an emergent group of researchers focused on data justice offers a wider perspective through which to consider the problem of algorithmic discrimination.[3] In formative writings on the idea of data justice, scholars appeal to frameworks for wellbeing, to post-structuralist-influenced theories of justice, and to constructivist understandings of technology (as always value-laden) (Dencik, Hintz, & Cable, 2016; Heeks & Renken, 2016; Johnson, 2014; Taylor, 2017). These studies make explicit what equality in data collection, data analytics, and automated data-driven decision-making is for. That is, while fairness, accountability, and transparency studies concentrate on defining ‘fairness constraints’ and what equality is, data justice studies consider what equality is for (see also Sen, 1980). In short, if studies of fairness, accountability, and transparency are centered in the technical domain, then data justice leans towards the sociotechnical.

With their attention to data-driven harms as well as opportunities, data justice studies navigate an uneasy boundary between technological and social determinism. On the one hand, the field surfaces negative externalities caused by data-driven technologies and, as seen in the work of Heeks and Renken (2016), examines structural data injustices. On the other hand, data justice studies offer a range of data governance models to avoid or curtail the deterministic powers of such technologies. For example, Taylor (2017) appeals to process freedoms for meaningful participation in data governance, while Johnson (2016) advocates for participatory design, inclusive data science, and social movements for data justice.

(Dis)advantages of seeing discrimination through technology

Though it might go without saying, both fields agree unequivocally that the technical facets of discrimination matter. Even though they vary in their interpretation of the causes and effects of algorithmic discrimination, the review above reveals powerful insights into the role of technology in discrimination. Fairness, accountability, and transparency studies admit that algorithmic discrimination can mimic ordinary or more conventional forms of discrimination. They believe that engineers and computer scientists can instantiate fairness or engineer discrimination-aware data mining and machine learning. Meanwhile, data justice scholars concentrate on human-centered data governance, albeit in a way that accepts technology’s power and the notion that it ought to and can be just. Either way, technology lies at the center of these fields’ concerns.

The focus on system design and engineering, as well as on data governance, is both an asset and an albatross, however. It is an asset because conceptualizations of discrimination do not typically take a nuanced view of the role of technology. From Lippert-Rasmussen’s (2014) emphasis on differential treatment on the basis of membership in a social group, to Makkonen’s (2012) attention to institutional and structural discrimination, to intersectional approaches to discrimination (Crenshaw, 1991), sociological and legal approaches do not privilege the role of technology in institutionalized racism, sexism, and other forms of oppression. In this sense, the techno-centric literature fills a gap.

The technical focus, however, is equally a liability. It seems to prioritize technical forms of discrimination or unfairness at the expense of other techniques of discrimination faced by individuals or groups who systematically bear the risks and harms of a discriminatory society.[4] So while techniques may vary and evolve over time, discrimination’s targets may remain the same. What remains constant is the marginality and deprivation experienced by socially silenced groups. ‘Who’ matters as much as ‘how.’

In other words, unmediated (or conventional) discrimination exists alongside technologically mediated techniques of discrimination. Algorithmic discrimination and exclusionary automated systems represent one element of a larger ecosystem of discriminatory practices and procedures, and any diagnosis of problems or prescription for remedies would benefit from some measure of reflexivity in relation to this ecosystem.

Reconceptualizing data, discrimination, and injustice: a reflexive turn towards what, who, and how

As raised in the last section, technologically mediated discrimination exists alongside other forms of discrimination that contribute to the systemic marginalization of individuals and groups marked by social difference. Such cohabitation, we argue, requires a reflexive turn that decenters data and data-driven technologies in the debate on discrimination in order to recognize the broader forms of systemic oppression and injustice that yield both unmediated and mediated forms of discrimination. In other words, a reflexive turn in the debate on data-centered discrimination would help position sociotechnical systems of discrimination alongside other modalities and add nuance to questions of what injustices discrimination causes, who is affected, and how discrimination can be remedied.

To guide this effort of decentering data and technology, the work of Nancy Fraser (2010) provides a model. Fraser, whose most recent project is to define what she calls abnormal injustice and, conversely, abnormal justice, adopts a pragmatist’s approach in order to deconstruct the confusing and complex systems of governance in a globalized world. For Fraser, injustice and justice are historically contingent ideas that demand consideration of not only what is unequal, but also who is unequal and how inequality is inscribed in political institutions. In her analysis (Fraser, 2010; see also Fraser & Honneth, 2006), she argues that whereas nineteenth- and twentieth-century problems of injustice primarily corresponded to problems of economic distribution and hence class, twenty-first-century injustice involves additional problems of disrespect for social groups and political exclusion and, accordingly, of culture and politics. In these ‘abnormal’ times, institutions, decision makers, and constituents disagree about the relative importance of maldistribution, misrecognition, and misrepresentation and about the role of class, culture, and politics in the conception of a just society.

As a result, Fraser argues, a more adequate theory of justice must recognize the interrelation between the ‘what,’ ‘who,’ and ‘how’ of justice.[5] While class-conscious distributive advocates emphasize the ‘what,’ advocates of cultural recognition emphasize the ‘who,’ and civically minded supporters of political inclusion emphasize the ‘how,’ a theory of abnormal justice sees the what, who, and how in relational terms. No single priority prevails or constitutes the center of a just society. At every turn, injustices of what, who, and how are interlinked and interfere with participatory parity, or the ability of every social actor to participate meaningfully in society on par with others (Fraser, 2010).

Fraser’s work adds nuance to how discrimination is both understood and remedied. Her tripartite theory of justice interconnects the what, who, and how of discrimination. Discrimination is as much a matter of class as it is of culture and politics, and it originates as much from class hierarchies as from status and political hierarchies. Discrimination is consequential for the distribution of material wealth in society, just as it is for the reinforcement of certain groups’ privilege or domination over others or for the exclusion of particular structures and styles of democratic governance. As Fraser would argue, abnormal times reveal how discrimination functions as a multifaceted problem that demands a multiplicity of solutions.

This model has implications for conceptualizing the role of technology in a just society. The attention to class, culture, and politics and to participatory parity appears to position technology as an adjunct, as opposed to a primary protagonist, in class- or status-reinforcing hierarchies and in the institutional and administrative barriers to organizing around antidiscrimination policies and practices. This is particularly pronounced with respect to questions of the ‘who’ of injustice and cultural hegemony. For Fraser, status-reinforcing hierarchies that lead to cultural domination originate in the realm of the symbolic as opposed to the material (see also Young, 1990, 2000). Technology might amplify processes of symbolic meaning making and shape the social construction of identity markers, but it differs from religion and other social institutions that generate the grounds for othering and subordination.

In total, Fraser’s theory illustrates that injustice is multifaceted and lacks a singular source or solution. While it might be tempting to link the conditions of an unjust society to the proliferation of automated computer systems or the growth of surveillance capitalism, Fraser’s tripartite theory of justice helps to clarify that technology assists and exists alongside, rather than at the center of, a discriminatory and unjust society.

Studying data and discrimination in the wild

If we conceptualize data-driven technologies as one among many techniques of discrimination and data-driven discrimination as one facet of an unjust society, to what extent does a decentered discourse exist in the real world? To answer this question, we turn for the remainder of this article to a thematic analysis of how European civil society understands data-driven discrimination and connects data, discrimination, and inequalities. Rather than approach our investigation from the perspective of what civil society knows, does not know, or needs to know about problems of unfair algorithms or data injustice, our study explores the terrain and texture of civil society discourse on data and data-driven technologies, including when and how technology plays a role in civil society organizations’ work on discrimination, as well as who is impacted and how discrimination can be prevented. We asked: How does European civil society understand and encounter automated computer systems, data, and discrimination? To what extent do maldistribution, misrecognition, or misrepresentation factor into these understandings or encounters?

To answer these questions, we narrowed our attention to the norms, values, and practices of European civil society organizations, specifically those focused on social and human rights (Sanchez Salgado, 2014).[6] These organizations serve as critical actors in public debate, build greater understanding of social concerns, and have unique governance and organizational features (Fuller & McCauley, 2016; Salamon, Sokolowski, & List, 2003). Due to the diverse character of the analyzed population, our sampling method relied on a mix of maximum variation and snowball strategies to identify a relevant sample. We generated a list of 50 organizations from grantee lists, issue networks, and organizational networks, aiming for roughly equal distribution across regions (North, South, East, West) as well as coverage of the European Union as a whole. Our semi-structured interview protocol invited representatives (typically senior-level employees focused on program work) to talk about their opinions and experiences, as well as future programmatic work. Because our four pilot interviewees did not understand or identify with the terms algorithmic discrimination or data-driven discrimination, we modified our protocol to include general questions about technology, data, and discrimination, with follow-up probes about automated technologies. We conducted interviews from August 2017 until March 2018.

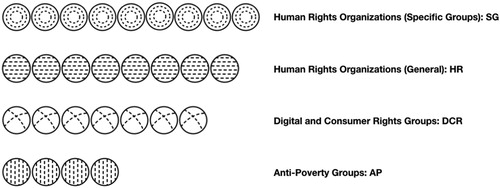

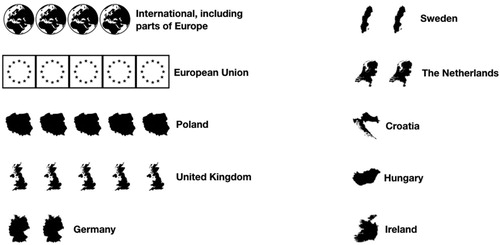

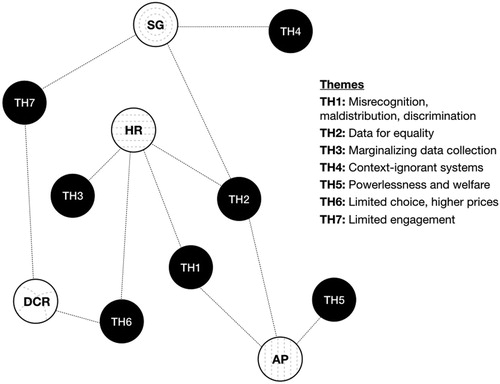

Results presented below are based on interviews with 30 representatives of 28 different civil society organizations, which represent four main types and operate in the European Union. In addition to single- and multi-issue organizations, our sample includes foundations and umbrella organizations (e.g., professional networks or associations). Adopting an iterative strategy (Boyatzis, 2009), we developed a coding frame that allowed us to identify thematic networks (Attride-Stirling, 2001), including those linked to maldistribution, misrecognition, and misrepresentation, as well as those that elucidate what discrimination entails, who is affected, how it might be challenged, and when and how technology intersects with these issues.

Results

By and large, our interviewees did not share stories about automated computer systems, and for those who did, data-driven discrimination was neither an organic nor a primary concern. Across the seven themes presented below, data and digital technology more broadly did feature in discussions of discrimination, though interviewees did not characterize them as the source of injustice.

Theme 1: for members of marginalized groups, misrecognition and maldistribution feed into discrimination

Our interviewees represent a very diverse group of activists and advocates fighting injustices and inequalities that affect the life experiences of marginalized communities. A majority of them drew links between discrimination and forms of injustice, which they typically articulated in relation to a lack of dignified treatment (misrecognition) or a lack of equal rights or access to services (maldistribution). The experience of marginalization among the constituents or communities they serve threaded through these discussions, whereas data and technology were muted.

Interconnected stories of misrecognition and maldistribution revolved primarily around four distinct groups: Romani, migrants, poor people (especially the unhoused), and members of the lesbian, gay, bisexual, transgender, and queer (LGBTQ) community. Discussion of Romani populations centered on stories about police brutality, such as when ‘police raided a village in Slovakia … beating men, women, and children’ (SG 6), and on problems with Romani access to healthcare, education, and other public and private services; interviewees often linked anti-Romanyism to nationalism and populist policy. Struggles involving LGBTQ individuals centered on hate speech, violence, or employment discrimination, which interviewees linked to institutional oppression, traditional societal views, or conservative religious values. Stories of migrants, particularly those from the Middle East, North Africa, or Eastern Europe, detailed racial profiling by police as well as, again, hate speech, workplace discrimination, and unequal access to basic services, which interviewees connected to Europe’s ‘racist practices,’ ‘history of colonialism,’ and ‘myth of white Christian Europe’ (SG 2). Unequal access to rights featured in talk about poor people and the unhoused, a problem that interviewees linked to cultural stereotypes and disrespect for ‘the weakest members of society who have less access to resources and … money’ (AP 4).

However, not all interviewees talked about racism or colonialism or spoke to specific groups’ experiences. In contrast to representatives of antipoverty groups, organizations advocating for specific groups, and generalist human rights organizations, interviewees from digital and consumer rights groups emphasized the universality of the problems they address (see also Theme 7 below).

Theme 2: data for equality matter

For those representing antipoverty and human rights groups, lack of data about marginalized groups means greater marginalization. Put differently, civil society groups need data to make the case for equal treatment. Most commonly, data for equality related to the idea of redistribution and, to a lesser degree, representation. For example, interviewees testified about the ways that collecting protected information could rectify inequalities between groups. As one interviewee said:

[P]eople of African descents and Muslims really push for data collection because they realize that their realities are ignored … [Data] should be part of your obligation to also promote equality and report on what you’ve done so this is part of the equality planning. (SG 2)

Some interviewees acknowledged the risks of data collection and stressed the importance of legal safeguards:

I very much support the idea of data collection in the context of discrimination, including sensitive data only under the circumstances that are provided by law – that it’s anonymous, that the people categorize themselves, and that they agree on giving the data. (HR 8)

On balance, the idea of data for equality might appear technologically centered, since digital tools can aid data collection and analysis. But our interviewees alluded to data-driven research in a more classic (or ‘small data’) sense while neglecting the topic of algorithmic systems. Moreover, they saw data for equality as just one element of, as opposed to the main solution for, the problem of discrimination. In this sense, data for equality subordinates technology to a greater social cause or policy.

Theme 3: data collection marginalizes the already marginalized

Many of the marginalized groups excluded from datasets also face targeted and pervasive surveillance. More often than not, our interviewees connected the problem with broader issues of misrecognition: whether related to information collection, sharing, or analysis by state actors like police, border guards, and public prosecutors, the condition of being hypersurveilled depends on harmful and targeted stereotyping and is justified in terms of public safety or other social values.

Our interviewees connected the misuse of sensitive data (e.g., nationality, economic status, ethnicity) to discrimination and other rights violations. Three examples – none of which involve sophisticated automation – stand out. One interviewee spoke about a Roma register, which local Swedish police created for their jurisdiction but which expanded to include the Roma community countrywide. A second case, in Poland, also involved the misuse of sensitive data: a city government tried to create a database of unhoused or homeless individuals, accessible not only to shelter providers (to manage resources) but also to courts and police (to ensure public safety). The third case, a data sharing agreement between the British government’s Home Office and the National Health Service, targeted migrants, leading many to avoid the healthcare system for fear of surveillance.

In the above examples, algorithmic systems again are far from the focal point. Moreover, the politics of data collection connect to the reproduction of broader injustices. As with the above point about data for equality, data and the technologies that support data collection regimes connect to broader social problems and are rooted in harmful stereotypes and the stigmatization of social groups.

Theme 4: context-ignorant systems harm life chances and decrease the dignity of the marginalized

Interviewees described how flawed database design neglects specific marginalized groups, with dire material and emotional consequences. A clear example relates to national identification systems. In Sweden, for example, national ID numbers have a gender marker (i.e., a numerical code related to one’s gender assigned at birth). When someone changes their legal gender, the national ID number also changes. As one interviewee explained: ‘[P]rivate companies … government agencies … and every official … all ask for your person number … So, if you change that, then a number of problematic situations arise’ (SG 9), including the inability to access prior customer records or health history.

Similarly, migrants from Ukraine and Belarus in Poland face routine bureaucratic obstacles that prevent them from obtaining a PESEL, a personal ID number. Exclusion from PESEL has cascading effects, given that PESEL unlocks access to numerous public services. Describing differential treatment in the education system, one interviewee explained how a migrant whose child lacks a PESEL is locked out of online enrollment systems for nursery and kindergarten:

You cannot insert a child to the online form at the moment when there is no PESEL … And then she [the mother] goes with her broken Polish to kindergarten, trying to explain to somebody. And here it starts: “Ah … you are not from Poland?” (SG 1)

Theme 5: automation in the welfare state will amplify powerlessness of the marginalized

When thinking about the future of welfare administration, interviewees surfaced concerns about the harmful impacts of automated computer systems. Here, interviewees championed a well-functioning welfare state and its task of distributing goods and services. They also emphasized the need for fair procedures that ensure the dignity of welfare recipients. Our interviewees considered the benefits of advanced technologies, though they worried about dehumanization. Welfare administration

requires personal interaction and it requires a person with experience, knowledge, and ability to listen to what the clients have said in order to understand and to identify what the issues and the obstacles are and what the solutions are. (AP 4)

But, according to interviewees, the problem is not so much the technology itself as already existing problems of public administration. Welfare recipients who face welfare bureaucracy already feel powerless, and automation may worsen their powerlessness:

[A]lready … people in public employment services … behaved kind of like computers. [Y]ou either … fit into this box, or you don’t. And if you don’t, “Goodbye.” … [B]ut there was hope because you’re thinking, “They’re still human beings.” (AP 2)

In all, the theme exemplifies the subordinate place of technology in a larger political problem: the architecture of the welfare state. While automated systems factor into this architecture, and while questions about fair administrative procedure abound, technology is secondary to the larger problem that prompted the implementation of automated welfare (i.e., austerity).

Theme 6: automation spells limited choice and higher prices for the marginalized

A small minority of mainly digital and consumer rights groups talked about the misuse of data by companies. Here, conversations yoked problems of technology to issues of marginalization in the context of data-driven price differentiation.

According to one participant, many companies use personalized pricing to offload risk onto consumers. Interviewees also acknowledged that certain populations, such as the elderly, would experience data-driven markets differently. One said:

[M]isuses of data can lead to discrimination … and affect consumers that are in the most vulnerable positions … [T]argeted ads to specific demographics who are more likely to be in financial trouble. They get targeted with toxic stuff. (DCR 3)

Compared to other themes, this consumer-oriented theme is a very clear example of technology and data representing a central matter of concern. Interviewees spoke about detecting, governing, and addressing specific data governance issues. When they linked these issues to the condition of marginality, they concentrated on the mechanics of differential pricing.

Theme 7: work on algorithmic discrimination has limited appeal

For a generalist human rights organization, as well as the digital and consumer rights groups mentioned in Theme 6 above, privacy and data rights, not discrimination, are paramount concerns. Here, references to redistribution, recognition, or representation were almost entirely absent. Interviewees spoke about mass surveillance programs, the broad powers of secret services and law enforcement, and cavalier companies that violate European data protection rules. These conversations did not mention specific populations and suggested that all individuals are equally harmed.

Attention to ‘everyone’ by digital and consumer rights groups is tied to their norms and values. One interviewee explained:

[P]rivacy and freedom of speech can be a bipartisan issue. But discrimination is, much more progressive, inherently progressive force with a particular type of progressive ideology … [W]e don’t feel that we have a mandate to speak out against racism. (DCR 8)

Discussion

The themes above reveal a number of divisions and gaps that help elucidate what it means to decenter technology in discourse – and action – on discrimination. First, misrepresentation, or exclusion from political decision making, did not feature much in conversations about discrimination, data, and technology. Some interviewees implicitly addressed representation by linking problems of discrimination to individuals’ inability to access their full rights as citizens. But on the whole, representation was a blind spot in these conversations, a point that is especially interesting in light of calls for developing a movement for data justice and for public exposure of problematic data collection or use (for examples, see Dencik et al., 2016; Johnson, 2014). We acknowledge, however, that the dearth of attention to representation might be a function of the particularity of European civil society and our focus on already professionalized groups (as opposed to social movement ones).

A second noteworthy gap is that our research did not yield evidence of high-level interest or engagement in topics related to algorithmic discrimination. The timing of our interviews (August 2017–March 2018) may have contributed to the muted attention to algorithmic discrimination. The story of Cambridge Analytica’s misuse of social media data to sway elections broke as we were concluding data collection. Prior to this scandal, news reporting on algorithmic discrimination was poor, in spite of the looming implementation deadline for the General Data Protection Regulation (Gangadharan & Niklas, 2018).

Nevertheless, differences in norms, values, and practices vis-à-vis technology’s central role in social injustice are also at play and reveal important divisions in European civil society. Many of our interviewees discussed more conventional forms of discrimination. The theme of ‘data for equality’ underscores this point. In contrast to privacy and data protection arguments against more data collection (Barocas, 2015), advocates who pushed to collect more data about members of historically marginalized groups did so in order to achieve better life outcomes for those groups. Interviewees’ conversations addressed conditions that contributed to a lack of opportunity or access to resources, as well as solutions that would distribute rights and benefits equally to all populations, including Roma, LGBTQ, and other minority communities. Data for equality is rooted in efforts to challenge marginalization.

Digital and consumer rights advocates took a different tack. They did offer limited commentary about algorithmic systems, but their generalized statements referred to well-known cases from the United States rather than to European examples in their areas of operation. Moreover, discussion quickly shifted from data and discrimination to privacy and data protection. Some advocates gravitated to the latter topics precisely because they recalled challenging collaborations between digital rights and antidiscrimination groups, such as campaigns to safeguard against hate speech. Topics of data protection represented more comfortable spaces for discussion and allowed interviewees to talk about the European General Data Protection Regulation, data processing, data holders, and data subjects.

Had our research included groups from the South (see ), we do not believe our results would have differed significantly. Norms, values, and practices of digital rights and human rights groups do differ (Dencik et al., 2016; Dunn & Wilson, 2013), and these different sectors function as ‘epistemic communities’ (Haas, 1992, p. 3). While geography influences such communities, and while data and technology affect the work of human rights groups in Southern Europe (Topak, 2014), affected populations remain a primary concern for such groups. Nevertheless, to fully understand the nature of epistemic communities, we welcome additional research that highlights the uniqueness of the human rights sector within and between countries and regions.

Had our sample population not included digital and consumer rights groups, the dearth of attention to algorithmic discrimination might be unremarkable. But given that even digital and consumer rights groups broached the topic only tentatively and superficially, the omission reveals a significant difference in how digital and consumer rights groups speak about the populations or communities affected by discrimination and related injustices. The majority of themes articulated above relate to specific marginalized groups, whereas only the ‘limited engagement’ theme reveals references to ‘all’ or ‘everyone’ by digital and consumer rights groups. Even when one consumer rights group elaborated on dynamic pricing in relation to specific vulnerable groups, the interviewee speculated in vague, generalized terms.

In addition, while digital and consumer rights groups are among the most vocal critics of datafication, they lack a vocabulary to discuss discrimination. They called out data protection violations, conceptualized surveillance as a problem that impacts all individuals equally, and championed universal privacy rights, but shied away from a discussion of misrecognition, maldistribution, and social difference. Digital and consumer rights groups rarely spoke to discrimination directly, treating discrimination, and challenges to it, as the domain of other ‘progressive groups.’ Their counterparts, by contrast, were fluent in the language of maldistribution and misrecognition. Evoking Nancy Fraser, they often connected the two. Whether affirming the need for data for equality or criticizing marginalizing forms of data collection, poorly designed systems, or the threat of greater dehumanization in the welfare system, they blended an analysis of the unfair distribution of resources, services, or goods with a critique of racism, colonialism, or, in other words, practices of cultural domination.

The divide between those who prioritize digital technology and those who do not echoes what others have noted in debates about technology governance, including the governance of other, older communication technologies. In the United States, this division was laid bare when activists who embraced an explicit analysis of social, racial, and economic inequalities in marginalized communities defined themselves as committed to the idea of media justice. They set this against media reform, which frames problems of concentration in the media industry as damaging to all citizens and consumers (Gangadharan, 2014; Cyril, 2005, 2008; Snorton, 2010). More recently, digital rights activists have aligned themselves with ‘all’ rather than ‘specific groups,’ criticizing mass surveillance as opposed to the targeted surveillance of already highly surveilled communities (Gürses, Kundnani, & Van Hoboken, 2016). Meanwhile, activists aligned with environmental, labor, and other identity-based rights movements have appeared relatively unmoved by the Snowden revelations, while digital rights activists have sounded the alarm (Dencik et al., 2016).

In the face of such divisions, many would call for bridging, including broader awareness among social, racial, or economic justice advocates of the ways in which technology ‘works.’ But we propose that the divisions between digital rights advocates and other advocates, including those serving specific groups and/or their rights, clarify the concept of decentering. The division shows us that technology animates ‘non-techie’ civil society groups insofar as technological problems connect to specific marginalized groups. Antipoverty organizations, advocates for the rights of specific groups, and human rights groups consider technology important, but in relational terms. Data collection and data-driven systems concern these groups when they interfere with marginalized peoples’ ability to live dignified lives, meet their basic needs, and have a fair chance at opportunity.

In this sense, these kinds of civil society organizations already take a decentered approach to technology in the discourse on discrimination. Their manner of decentering is to ‘see through’ technology and position it in relation to systems of oppression, whose norms and values are wired into technological systems and function as instruments of control, subordination, and normalization. The activists with whom we spoke see power through the design, implementation, and operation of technological systems. They pointed out forms of powerlessness that already exist and the extent to which database systems, for example, both exclude and target, with effects that lead to greater social control and marginalization. This perspective on technology resonates with what surveillance studies and science and technology studies have been saying for decades. Winner’s (1986) interest in the politics of technology, Gandy’s (1993) notion of the panoptic sort, Gilliom’s (2001) interest in computerized overseers of the poor, and Monahan’s (2008) concept of ‘marginalizing surveillance’ (p. 220) all show that technology extends power and can be designed to systematically disadvantage marginalized groups.

Moreover, the themes focused on marginalizing data collection, poorly designed systems that normalize, and dehumanizing automated systems suggest that while individual developers may not be sexist, racist, classist, or otherwise culpable, such technological projects, as a whole, can be tied to larger processes of marginalization. Decentering, in other words, requires that we probe the larger contexts that motivate technological projects, their deployment, and their use, and that we look beyond narrowly defined illegal forms of discrimination. Better-designed welfare automation (e.g., fair algorithms) may neither save a shrinking welfare state nor be the best instrument for transforming cultural understanding of the poor.

Our results also suggest that decentering means beginning and ending with marginality, as opposed to leading with technology, data governance, or digital rights. This does not exclude or devalue technically centered approaches. To be clear, our interviewees acknowledged the importance of data protection measures (e.g., anonymity for the homeless) and advocated for special data rights (e.g., more inclusive national ID systems for transgender people). They also relied on or supported data protection law to demonstrate discrimination (e.g., discrimination against the Roma by Swedish police, or data for equality, that is, collecting data to show differential treatment). But across these examples, civil society representatives tilted data governance and digital rights towards the goal of a more equal and just society, rather than talking about problematic data, problematic data collection, or problematic uses of data in abstract terms or in isolation from larger societal problems.

Conclusion: towards alignment

With decentering, it is possible to recognize the specific impacts of technologically mediated discrimination without claiming that its effects are totalizing. Problems of discriminatory data mining or unfair machine learning are significant, but they do not constitute the primary means by which discrimination, unfairness, or injustice is or will be practiced. Similarly, problems of data collection, open data projects, or other data-based (though not automated) initiatives are also significant, but they, too, do not constitute the primary means by which discrimination, unfairness, or injustice is or will be practiced.

This suggests two reflective points. First, it is worth comparing different problems of abnormal justice: problems of the data economy or surveillance capitalism; problems unattributable or less attributable to the data economy; institutional legacies of racism, colonialism, sexism, heterosexism, and so forth; and the erosion of institutions of democratic contestation. Data and data-driven systems cannot claim all the credit for the structural inequalities of an unjust society. This means we ought to consider, for example, data violence (Hoffmann, 2018) alongside structural violence, and racist search engines (Noble, 2018) alongside racism perpetuated by social institutions. Second, technical analyses, especially fairness, accountability, and transparency studies, would benefit from a deeper exploration of the potential negative externalities of automated systems. In their exploration of optimization technologies, Overdorf, Kulynych, Balsa, Troncoso, and Gürses (2018) offer clues to how this can be done. Nuanced historical context on the discrimination to which automated systems belong will also add much-needed texture to abstract computational models. Without this, challenging technologically mediated discrimination risks insularity at a time when a just society demands greater interconnection and alignment between diverse epistemic communities.

Acknowledgements

Thanks also to Omar Al-Ghazzi, Seda Gürses, Sonia Livingstone, and anonymous reviewers for their comments.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes on contributors

Seeta Peña Gangadharan, PhD, is a scholar and organizer studying technology, governance, and social justice. She currently co-leads Our Data Bodies, a research justice project focused on the impact of data-driven technologies on marginalized communities in the United States. She is an Assistant Professor in the Department of Media and Communications at the London School of Economics and Political Science.

Jędrzej Niklas, PhD, works at the intersection of data-driven technologies, the state, and law. His research focuses on the use of data and new technologies by public administration and on the social and legal impact of digital innovations, especially on the rights of marginalized communities and on social justice. He is currently a Visiting Fellow at the London School of Economics and Political Science.

ORCID

Seeta Peña Gangadharan http://orcid.org/0000-0002-1955-3874

Jędrzej Niklas http://orcid.org/0000-0003-2878-3134

Additional information

Funding

Notes

* This article grew from conversations at a workshop entitled ‘Intersectionality & Algorithmic Discrimination’ (Lorentz Center, Leiden, December 2017).

1 Altogether, systems that variously employ algorithms or use learning models are referred to as automated computer systems, data-driven systems, algorithmic systems, intelligent systems, expert systems, machine learning, or automated systems.

2 The field now organizes a high profile, closely watched conference known for its ‘thought leadership’ on data ethics in the academy, industry, and government.

3 Data justice scholarship is diverse and emergent. Nevertheless, we use ‘data justice studies’ in recognition of the growth in justice-oriented approaches to examining datafication.

4 For examples of the many techniques of discrimination, see Hagman (1971) and Greenberg (2010).

5 Note that Fraser’s theorization evokes earlier work by Young, who paid close attention to issues of representation and closely considered the ‘how’ of political decision making (Young, 1990, 1997, 2000).

6 For brevity, we use the term ‘human rights’ to refer to both social and human rights groups.

References

- Agrawal, R., & Srikant, R. (2000). Privacy-preserving data mining. ACM SIGMOD Record, 29(2), 439–450. doi: 10.1145/335191.335438

- Attride-Stirling, J. (2001). Thematic networks: An analytic tool for qualitative research. Qualitative Research, 1(3), 385–405. doi: 10.1177/146879410100100307

- Barocas, S. (2015). Data mining and the discourse on discrimination. In Proceedings of the data ethics workshop. New York. Retrieved from https://dataethics.github.io/proceedings/DataMiningandtheDiscourseOnDiscrimination.pdf

- Berendt, B., & Preibusch, S. (2014). Better decision support through exploratory discrimination-aware data mining: Foundations and empirical evidence. Artificial Intelligence and Law, 22(2), 175–209. doi: 10.1007/s10506-013-9152-0

- Binns, R. (2018). Fairness in machine learning: Lessons from political philosophy. In S. A. Friedler & C. Wilson (Eds.), Proceedings of the 1st conference on fairness, accountability, and transparency (Vol. 81, pp. 149–159). New York: Proceedings of Machine Learning Research. Retrieved from http://proceedings.mlr.press/v81/binns18a/binns18a.pdf

- Boyatzis, R. E. (2009). Transforming qualitative information: Thematic analysis and code development. Thousand Oaks: Sage.

- Crenshaw, K. (1991). Mapping the margins: Intersectionality, identity politics, and violence against women of color. Stanford Law Review, 43(6), 1241. doi: 10.2307/1229039

- Cyril, M. A. (2005). Media and marginalization. In R. McChesney, R. Newman, & B. Scott (Eds.), The future of media: Resistance and reform in the 21st century (pp. 97–104). New York: Seven Stories Press.

- Cyril, M. A. (2008). A framework for media justice. In S. P. Gangadharan, B. De Cleen, & N. Carpentier (Eds.), Alternatives on media content, journalism, and regulation (pp. 55–56). Tartu, Estonia: Tartu University Press. Retrieved from http://www.researchingcommunication.eu/reco_book2.pdf

- Dencik, L., Hintz, A., & Cable, J. (2016). Towards data justice? The ambiguity of anti-surveillance resistance in political activism. Big Data & Society, 3(2), 1–12. doi: 10.1177/2053951716679678

- Dunn, A., & Wilson, C. (2013). Training digital security trainers: A preliminary review of methods, needs, and challenges (pp. 1–21). Washington, DC: Internews Center for Innovation and Learning. Retrieved from https://internews.org/sites/default/files/resources/InternewsWPDigitalSecurity_2013-11-29.pdf

- Dwork, C., & Mulligan, D. K. (2013). It’s not privacy, and it’s not fair. Stanford Law Review, 66, 35–40.

- Fraser, N. (2010). Scales of justice: Reimagining political space in a globalizing world. New York: Columbia University Press. Retrieved from http://public.eblib.com/choice/publicfullrecord.aspx?p=1584038

- Fraser, N., & Honneth, A. (2006). Redistribution or recognition? A political-philosophical exchange. London; New York: Verso.

- Friedman, B., & Nissenbaum, H. (1996). Bias in computer systems. ACM Transactions on Information Systems, 14(3), 330–347. doi: 10.1145/230538.230561

- Fuller, S., & McCauley, D. (2016). Framing energy justice: Perspectives from activism and advocacy. Energy Research & Social Science, 11, 1–8. doi: 10.1016/j.erss.2015.08.004

- Gandy, O. H. (1993). The panoptic sort: A political economy of personal information. Boulder, CO: Westview.

- Gangadharan, S. P. (2014). Media justice and communication rights. In C. Padovani & A. Calabrese (Eds.), Communication rights and social justice, historical accounts of transnational mobilizations (pp. 203–218). New York: Palgrave Macmillan.

- Gangadharan, S. P., & Niklas, J. (2018). Between antidiscrimination and data: Understanding human rights discourse on automated discrimination in Europe. London: London School of Economics and Political Science. Retrieved from http://eprints.lse.ac.uk/id/eprint/88053

- Gilliom, J. (2001). Overseers of the poor: Surveillance, resistance and the limits of privacy. Chicago: University of Chicago Press.

- Greenberg, J. (2010). Report on Roma education today: From slavery to segregation and beyond. Columbia Law Review, 110(4), 919–1001.

- Gürses, S. (2018, September). Waiting for AI is like waiting for Go. Presented at Choosing to be smart: Algorithms, AI, and avoiding unequal futures, London School of Economics and Political Science. Retrieved from https://media.rawvoice.com/lse_inequalitiesinstitute/richmedia.lse.ac.uk/inequalitiesinstitute/20180928_choosingToBeSmart.mp3

- Gürses, S., Kundnani, A., & Van Hoboken, J. (2016). Crypto and empire: The contradictions of counter-surveillance advocacy. Media, Culture & Society, 38(4), 576–590. doi: 10.1177/0163443716643006

- Haas, P. M. (1992). Introduction: Epistemic communities and international policy coordination. International Organization, 46(1), 1–35. doi: 10.1017/S0020818300001442

- Hagman, D. G. (1971). Urban planning and development – race and poverty – past, present and future. Utah Law Review, 46, 33.

- Heeks, R., & Renken, J. (2016). Data justice for development: What would it mean? Information Development, 34(1), 90–102. doi: 10.1177/0266666916678282

- Hoffmann, A. L. (2018). Data violence and how bad engineering choices can damage society. Medium. Retrieved from https://medium.com/s/story/data-violence-and-how-bad-engineering-choices-can-damage-society-39e44150e1d4

- Johnson, J. A. (2014). From open data to information justice. Ethics and Information Technology, 16(4), 263–274. doi: 10.1007/s10676-014-9351-8

- Johnson, J. (2016). The question of information justice. Communications of the ACM, 59(3), 27–29. doi: 10.1145/2879878

- Lippert-Rasmussen, K. (2014). Born free and equal? A philosophical inquiry into the nature of discrimination. Oxford: Oxford University Press.

- Makkonen, T. (2012). Equal in law, unequal in fact: Racial and ethnic discrimination and the legal response thereto in Europe. Leiden; Boston: Martinus Nijhoff Publishers.

- Monahan, T. (2008). Editorial: Surveillance and inequality. Surveillance & Society, 5(3), 217–226. doi: 10.24908/ss.v5i3.3421

- Narayanan, A. (2018). FAT* tutorial: 21 fairness definitions and their politics. Presented at the Conference on Fairness, Accountability, and Transparency, New York. Retrieved from https://docs.google.com/document/d/1bnQKzFAzCTcBcNvW5tsPuSDje8WWWY-SSF4wQm6TLvQ/edit?us.

- Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York: New York University Press.

- Overdorf, R., Kulynych, B., Balsa, E., Troncoso, C., & Gürses, S. (2018). POTs: Protective Optimization Technologies. ArXiv:1806.02711 [Cs]. Retrieved from http://arxiv.org/abs/1806.02711

- Pedreschi, D., Ruggieri, S., & Turini, F. (2008). Discrimination-aware data mining. In ACM Proceedings. Las Vegas, Nevada. doi: 10.1145/1401890.1401959

- Salamon, L. M., Sokolowski, S. W., & List, R. (2003). Global civil society: An overview (1st ed.). Baltimore, MD: Johns Hopkins Center for Civil Society Studies. Retrieved from http://www.cca.org.mx/apoyos/dls/m4/global.pdf

- Sanchez Salgado, R. (2014). Europeanizing civil society: How the EU shapes civil society organizations. Basingstoke, UK: Palgrave Macmillan.

- Sen, A. (1980). Equality of what? In S. M. McMurrin (Ed.), The Tanner lectures on human values (pp. 197–220). Salt Lake City, UT: University of Utah Press.

- Snorton, R. (2010). New beginnings: Racing histories, democracy and media reform. International Journal of Communication, 2, 23–41.

- Taylor, L. (2017). What is data justice? The case for connecting digital rights and freedoms globally. Big Data & Society, 4(2), 205395171773633. doi: 10.1177/2053951717736335

- Topak, Ö. E. (2014). The biopolitical border in practice: Surveillance and death at the Greece-Turkey borderzones. Environment and Planning D: Society and Space, 32(5), 815–833. doi: 10.1068/d13031p

- Winner, L. (1986). The whale and the reactor. Chicago: University of Chicago Press.

- Young, I. M. (1990). Justice and the politics of difference. Princeton: Princeton University Press.

- Young, I. M. (1997). Unruly categories: A critique of Nancy Fraser’s dual systems theory. New Left Review, 222, 14.

- Young, I. M. (2000). Inclusion and democracy. New York: Oxford University Press.

- Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. New York: PublicAffairs.