ABSTRACT

Very Large Online Platforms (VLOPs) such as Instagram, TikTok, and YouTube wield substantial influence over digital information flows using sophisticated algorithmic recommender systems (RS). As these systems curate personalized content, concerns have emerged about their propensity to amplify polarizing or inappropriate content, spread misinformation, and infringe on users’ privacy. To address these concerns, the European Union (EU) has recently introduced a new regulatory framework through the Digital Services Act (DSA). These proposed policies are designed to bolster user agency by offering contestability mechanisms against personalized RS. As their effectiveness ultimately requires individual users to take specific actions, this empirical study investigates users’ intention to contest personalized RS. The results of a pre-registered survey across six countries – Brazil, Germany, Japan, South Korea, the UK, and the USA – involving 6,217 respondents yield key insights: (1) Approximately 20% of users would opt out of using personalized RS, (2) the intention for algorithmic contestation is associated with individual characteristics such as users’ attitudes towards and awareness of personalized RS as well as their privacy concerns, (3) German respondents are particularly inclined to contest personalized RS. We conclude that amending Art. 38 of the DSA may contribute to leveraging its effectiveness in fostering accessible user contestation and algorithmic transparency.

Introduction

Very large online platforms (VLOPs) such as Instagram, TikTok, or YouTube use algorithmic recommender systems (RS) to filter, sort, and prioritize personalized content for users (Gillespie, Citation2018). RS use a variety of techniques to optimize recommendations, including collaborative filtering (‘based on choices of similar users’), content-based filtering (‘based on the user’s past choices’), and knowledge-based techniques (‘based on the user’s preferences to items features’) (Karimi et al., Citation2018). Such targeted recommendations can be tailored to align with the unique interests of each user to boost user engagement and advertisement revenues (Kozyreva et al., Citation2021). By funneling user attention, RS have emerged as crucial gatekeepers that ultimately decide which content is or is not seen by the wider public (Guess et al., Citation2023; Nyhan et al., Citation2023).

Various stakeholders have critiqued the power of personalized RS to curate information flows. As VLOPs are incentivized to maximize users’ time on the platform for commercial ends, their RS tend to amplify content that users engage with (Fuchs, Citation2021). As side effects, RS were found to prioritize extreme and polarizing content (Baumann et al., Citation2020), potentially contribute to spreading false information (Vosoughi et al., Citation2018), and recommend inappropriate content to children (Papadamou et al., Citation2020). But RS could be designed differently. They could prioritize other normative goals, such as upholding privacy or promoting content diversity. Recent studies suggest that changing the underlying design of the Facebook and Instagram algorithms reduced user exposure to polarizing content and time spent on these platforms (Guess et al., Citation2023; Nyhan et al., Citation2023). Moreover, alternative approaches by open-source platforms like Mastodon opt for a non-personalized approach of curating user feeds by sorting content in reverse-chronological order.

Amidst growing demands for more user control over the algorithmic design of RS, the European Union (EU) has implemented new regulations on RS in the Digital Services Act (DSA). These rules are geared toward bolstering user agency by providing mechanisms to contest personalized algorithmic recommendations on VLOPs. These include transparency on the criteria used to determine which content users see. The DSA further outlines the possibility for platforms to offer users more choices in influencing those criteria. In addition, VLOPs are mandated to offer at least one non-personalized option. The new regulatory framework, thereby, creates conditions for users to express their preferences and contest the dominant recommendation logic. However, the effectiveness of these policies in enabling contestability ultimately hinges on the extent to which users actively engage in such actions.

This paper investigates user contestation of personalized RS on VLOPs. In line with the privacy-sensitive contestation mechanisms outlined in the DSA, we conceptualize algorithmic contestation as users’ choice to opt for a non-personalized RS on VLOPs, thereby taking control over the way they are exposed to content. These contestation mechanisms ultimately require individual capacities. For instance, users’ choices for personalized vs. non-personalized RS likely hinge on individual characteristics such as algorithmic literacy. Thus, gaining insights into individual differences is essential to understanding the efficacy of existing contestation mechanisms and for whom they work. Moreover, we investigate cross-national differences in exercising the option to contest personalized RS. The ongoing policy efforts around the world highlight the demand for comprehensive platform governance to empower users. Moreover, due to the so-called Brussels effect, EU-initiated regulations possibly impact regulation in jurisdictions outside the EU. To understand the complex dynamics between users and contestation mechanisms of personalized RS, we conducted a pre-registered survey in Brazil, Germany, Japan, South Korea, the United Kingdom (UK), and the United States of America (USA) (total N = 6217).

Normative contestation of recommender systems

A robust body of literature has scrutinized the effects of social media on individuals and societies. The main reasoning is straightforward: Given their advertising-based business model, VLOPs are incentivized to maximize users’ time on the platform. Personalized RS powered by personal data and machine learning algorithms accelerate this process by recommending highly engaging content to each individual user. Scholars have raised concerns over privacy and data protection as VLOPs trace and monetize users’ online behavior (Zuboff, Citation2019). By leveraging amassed behavioral data, algorithms can infer more information about individual users than they intended to disclose, including political views or personality traits (Hinds & Joinson, Citation2018; Youyou et al., Citation2015). Another concern relates to the potential lack of news exposure diversity (Helberger et al., Citation2018) and an ensuing risk of polarization (Iyengar et al., Citation2019). Homophilic communication networks, so-called ‘echo chambers’ or ‘filter bubbles’, may emerge on VLOPs, as users receive content recommendations that align with their algorithmic profile (Flaxman et al., Citation2016). The empirical evidence for this assertion is mixed, with some studies supporting it (Cota et al., Citation2019), others refuting it (Haim et al., Citation2018), and some suggesting that some RS can even enhance content diversity (Nguyen et al., Citation2014). Recent comprehensive studies from the US found that changing the algorithmic design of the RS reduced overall usage, exposure to like-minded sources, and uncivil content (Guess et al., Citation2023; Nyhan et al., Citation2023). However, this change did not impact affective polarization, ideological extremity, or belief in false claims (Guess et al., Citation2023; Nyhan et al., Citation2023).

In response to these concerns, policymakers have increasingly emphasized the significance of safeguarding the ability of individuals to contest algorithms (High-Level Expert Group on AI, Citation2019; OECD, Citation2019). According to Kluttz and Mulligan (Citation2019, p. 888), ‘contestability refers to mechanisms for users to understand, construct, shape, and challenge model predictions’. While the concept of contestation varies in its interpretation across academic and policy discourse, the general idea that individuals should be able to resist algorithmic influence on their lives has become popular (Lyons et al., Citation2021; McQuillan, Citation2022).

In this regard, some scholars have argued that individuals should have an opportunity to contest the normative foundations of algorithms (Hildebrandt, Citation2022). In other words, users should have the power to choose the inherent design of an algorithm that affects their daily lives. Algorithms can contain certain normative goals imbued during their design process, i.e., the decision for what goal to optimize the algorithms (Binns, Citation2018). However, these normative goals are contestable as people can differ on which normative goals are or are not desirable (Bayamlioglu, Citation2018; Binns, Citation2018). RS can, for example, be designed to maximize performance and profit (Zuboff, Citation2019), safeguard different conceptualizations of diversity (Helberger et al., Citation2018), or protect users’ privacy (Monzer et al., Citation2020). In each case, a different normative goal shapes the design of the RS. For instance, a privacy-sensitive RS would refrain from using personal user data, rendering the algorithm incapable of delivering personalized recommendations based on a user's profile. Providing users with mechanisms to contest personalized RS means enabling them to exercise control over which recommendation logic should influence their exposure to information.

Many scholars, therefore, promote the view that users should have some control over RS (Harambam et al., Citation2019; Jannach et al., Citation2017; Van den Bogaert et al., Citation2022). Jannach et al. (Citation2017) discuss different mechanisms to facilitate users to control RS and their effects on information exposure. These include preemptive measures such as preference forms, dialogical questionnaires, and ex-post measures, allowing users to modify recommendations after they have been presented. These measures empower users to take control of RS and, in turn, also facilitate contestation of the normative goals of RS. In this regard, we argue that exercising control over the way RS influence their information exposure is also an act of contestation of the underlying recommendation logic. To a certain extent, such control measures are now mandated by the DSA (European Commission, Citation2022), providing a first step towards enabling user contestation of RS.

Contestation of recommender systems in the Digital Services Act

The introduction of the DSA in 2022 highlights the EU legislator's mounting concerns about the societal impact of RS. Recital 70 DSA, for example, emphasizes the significant influence RS can exert on users’ ability to retrieve and interact with information online due to RS’s crucial role in the ‘amplification of certain messages, the viral dissemination of information and the stimulation of online behavior’.

Consequently, the EU legislator has mandated in Art. 27 (1) DSA that providers of online platforms set out in their terms and conditions ‘in plain and intelligible language’, the main parameters used by their RS. Subparagraph (2) further specifies that this must include the most important criteria used in selecting information suggested to the user and the reasons for the relative importance of those parameters.

Such obligations for algorithmic transparency have been advocated in various policy guidelines, including the Council of Europe's (Citation2018) recommendation on the responsibilities of internet intermediaries. Such transparency provisions are essential to enable contestation (Bayamlioglu, Citation2018; Binns, Citation2018). By understanding the primary parameters of the algorithm, users are enabled to form an opinion about whether they align with the normative goals of that algorithm.

Yet, transparency alone is insufficient to safeguard the contestability of RS (van Drunen et al., Citation2022). Concrete obligations to provide users with contestation mechanisms are also needed. Such mechanisms are outlined in Art. 27 (1) and (3) DSA. They require providers of online platforms to clarify in their terms and conditions ‘any options for the recipients of the service to modify or influence’ the key parameters of the RS, provided such options are available. Subparagraph (3) provides an additional requirement specifically for RS that determine the relative order of recommended information. It states that if the platform offers any options for users to modify or influence the main parameters of the RS, these options must be directly and easily available.

Importantly, Art. 27 DSA does not mandate these options to exist. It only requires them to be made accessible if they exist. Nevertheless, Art. 38 DSA stipulates, at the very least, one contestation mechanism. This provision obliges VLOPs to offer users the option to choose a non-personalized RS. Unless VLOPs provide additional functionalities, it is not possible to choose other normative goals, such as a diversity-sensitive RS. Consequently, this study investigates specifically the choice between personalized and non-personalized RS.

Art. 27 (1) and (3) mandate that online platforms must communicate the existence of any options available to modify the RS. Three phases can be distinguished where users can exercise control over RS. These consist of focusing on the input, i.e., altering personal information to change how a recommendation might be personalized, the process, i.e., changing the parameters of the algorithm, or the output, i.e., modifying the order of the results (Jannach et al., Citation2017; Van den Bogaert et al., Citation2022). As such, there are multiple ways for users to potentially tweak the RS.

However, designers of these contestation mechanisms should avoid information overload. A balance must be struck between granting users in-depth control versus maintaining an acceptable ‘cognitive load’ (Jin et al., Citation2018). That is, giving users too much or too technical information about the user profile, the algorithm parameters, or the recommendation output may overwhelm them. Consequently, they may be unable to exercise control options, rendering the contestation mechanisms outlined in the DSA practically obsolete.

Drivers of algorithmic contestation

A straightforward way to meet the requirements of Art. 38 DSA is to give users the option to opt out of the personalized default setting of the RS and instead choose a non-personalized alternative. One promising approach is the use of so-called algorithmic recommender personae, which offer users an ‘intuitive, one-click option to select different sorts of personalized (news) recommendations based on their specific mood, interest and purpose’ (Van den Bogaert et al., Citation2022, p. 2). By selecting a certain type of RS, the user receives different recommendations based on a different underlying algorithmic design (Harambam et al., Citation2019; Van den Bogaert et al., Citation2022). Empirical research suggests that users would appreciate a choice between personalized and non-personalized RS (Harambam et al., Citation2019; Monzer et al., Citation2020).

Contestation mechanisms ultimately require individual users to take specific action. Instead of enforcing privacy sensitivity or content diversity on the system level, the rules are designed to let users choose an RS that aligns with their normative goals. Chater and Loewenstein (Citation2022) refer to such policies as the ‘i-frame’ and question their effectiveness in regulating societal challenges such as climate change, obesity, or retirement savings. As this study explores users’ likelihood of algorithmic contestation, we pose the following research question:

RQ1: To what extent do users intend to contest personalized recommender systems on VLOPs?

Regardless of the general prevalence among users to contest personalized RS on VLOPs, contestation intention is likely to hinge on individual characteristics. This is crucial because the existing contestation mechanisms rely on individual capacities. Thus, shedding light on individual differences paves the way to understanding how the policies function in daily practice and for whom they work. We review the existing empirical literature to assess potential individual drivers for algorithmic contestation.

The Cambridge Analytica scandal, the popular Netflix documentary ‘The Social Dilemma’, and best-selling books such as ‘Digital Minimalism’ (Newport, Citation2019) have brought public attention to the risks VLOPs pose to democracy, (mental) health, and social cohesion. Personalized RS aimed at extracting users’ attention are often identified as the primary culprit for the detrimental effects of VLOPs on individuals and society. Surveys suggest that almost two-thirds of US citizens believe that social media platforms have mostly negative effects on the state of affairs, primarily regarding spreading misinformation and extremism (Auxier, Citation2020). Other studies among teenagers yield more inconclusive results. 31% of respondents describe the impact of VLOPs on their lives as mostly positive, while 24% think that negative effects dominate (Anderson & Jiang, Citation2018). Qualitative studies further suggest that teenagers feel disempowered due to the effectiveness of recommendation algorithms (Youn & Kim, Citation2019). Hence, we argue that users’ risk perception of social media platforms impacts their likelihood of contesting personalized RS.

H1: High perceived risks of social media platforms lead to a higher intention to contest personalized recommender systems on VLOPs.

Another factor likely to impact users’ intention to contest relates to the perceived utility of personalized RS, for instance, for navigating vast information landscapes and keeping abreast of current affairs without actively seeking information. Empirical research on individuals’ attitudes towards personalized RS remains inconclusive, primarily due to the lack of a consistent comparative basis for the type of RS being investigated. For instance, Oeldorf-Hirsch and Neubaum (Citation2023) found that social media users in the US had more positive attitudes towards algorithms compared to German users. Kozyreva et al. (Citation2021) found that audiences from Germany, the UK, and the USA largely oppose targeted political campaigning and, in Germany and the UK, the personalization of news sources. However, other studies indicated that users also perceive individual benefits from algorithmic selection, e.g., following the news (Monzer et al., Citation2020; Thurman et al., Citation2019). Along similar lines, Honkala and Cui (Citation2012) reported high acceptance levels for algorithmic filtering as it facilitates user access to engaging content and increases their perceived control due to the reduced feed volume. Regardless of whether users generally hold positive or negative attitudes towards personalized RS, we reason that these attitudes influence their contestation intention.

H2: Negative attitudes towards content recommendations lead to a higher intention to contest personalized recommender systems on VLOPs.

Even though personalized RS are ubiquitous on VLOPs, many users remain unaware of the covert judgments made by algorithms (Powers, Citation2017). Empirical studies found that less than 50% of users in Germany, the UK, and the USA know that RS curate their social media feeds (Eslami et al., Citation2015; Kozyreva et al., Citation2021). Oeldorf-Hirsch and Neubaum (Citation2023) found higher algorithmic awareness among social media users in the US compared to Germany. And even if they are aware, young people often lack the necessary literacy to protect themselves against the potential impacts of RS (Powers, Citation2017; Swart, Citation2021). Since the algorithms enabling personalization are intangible, their outcomes are easily overlooked. However, awareness of algorithmic personalization is the first step toward understanding and acknowledging its impact (Swart, Citation2021). Studies found that algorithmic awareness increased perceived risks, yet not perceived opportunities from TikTok use (Taylor & Brisini, Citation2024). Moreover, people with higher algorithmic awareness more often counteract algorithmic filtering, for instance, by deleting cookies, clearing their browser history, or enabling the ‘do not track’ option in their browser (Oeldorf-Hirsch & Neubaum, Citation2023). Based on these findings, we argue that algorithmic awareness affects people's likelihood of contesting personalized algorithms.

H3: High awareness of recommendation systems leads to a higher intention to contest personalized recommender systems on VLOPs.

RS are powered by users’ online behavior and personal data to make personalized content recommendations. In addition to the data voluntarily provided by the users, algorithms can infer other details about users predicated on the acquired data. These can include more sensitive information such as political partisanship, personal traits, or sexual orientation (Hinds & Joinson, Citation2018; Youyou et al., Citation2015). The platform could potentially misuse these data, for instance, by selling them to third parties or suffering a data breach. Thus, an inherent trade-off between the utility of getting useful personalized recommendations and concerns about privacy exists (Jeckmans et al., Citation2013; Knijnenburg et al., Citation2012). Empirical studies indicate that individuals in the US and Europe feel they retain minimal control over their personal data and harbor concerns about their digital privacy (Directorate-General for Communication, Citation2019; Vogels & Gelles-Watnick, Citation2023). Users often lack awareness regarding the extent of data collection, the security measures in place to protect it, or the duration for which it is retained (Jeckmans et al., Citation2013). Empirical evidence suggests that users lose trust in RS after learning that personal data was used (Sun et al., Citation2023). Moreover, privacy concerns have been found to undermine trust in the platform company and impede satisfaction with the system (Knijnenburg & Kobsa, Citation2013). However, users also have nuanced opinions on how VLOPs use their personal data. While users mostly accept data usage for recommending events, they largely reject being micro-targeted with political campaign messages on VLOPs (Turow et al., Citation2009). Even though privacy concerns do not necessarily translate into privacy-preserving online behavior (Kozyreva et al., Citation2021), we argue that they sway users’ intention to contest personalized RS.

H4: High privacy concerns lead to a higher intention to contest personalized recommender systems on VLOPs.

RQ2: Are there cross-national differences in citizens’ intention to contest personalized recommender systems on VLOPs?

Method

We ran a pre-registered online survey in six countries (Brazil, Germany, Japan, South Korea, the UK, and the USA) to probe the research questions and test the hypotheses (for a detailed overview of deviations from the pre-registration, see Table A1 in the supplementary information). The goal that drove the country selection was attaining a diverse representation of democratic countries from various continents and different political cultures. We explicitly opted for a global perspective in country selection, acknowledging existing national and supranational policy work on this issue. Moreover, we included countries with a high internet penetration rate and high usage of social media (Schumacher, Citation2020; see Footnote 1). Research suggests that the negative effects of social media platforms on different democratic indicators are most pronounced in established democracies (Lorenz-Spreen et al., Citation2022), arguably making users from those countries more likely to contest personalized RS.

The study received ethical approval from the Ethics Committee of the University of Amsterdam (2022-PCJ-15418). Cross-sectional data were collected through the online panel administered by Kantar Lightspeed, which recruited panelists both on- and offline. Native speakers employed by Kantar translated the questionnaire into different languages.

Sample and filtering process

We collected the data between 13 October and 23 November 2022. Quotas for age, gender, and education were used for each country. A total of 6300 respondents completed the online survey. Upon completion, they received Kantar points to redeem for their local currency. Due to straight-lining or completing the questionnaire unreasonably fast (<270 s), we excluded 83 respondents, resulting in a final sample of 6217. The sample size per country ranges from 1001 (South Korea) to 1048 (USA) respondents. On average, respondents were 49.0 (SD = 17.7) years old, and 50.6% identified as female. The sample in each country is reasonably representative of the general population, although lower-educated and younger citizens are slightly underrepresented. Respondents needed an average of 17.7 min (SD = 2722.8) to complete the questionnaire.

Measures

Unless described otherwise in the measurements section below, we assessed all variables using 5-point Likert scales with a residual option ‘I prefer not to answer’ (for exact wording of all items, see Table A2 in the supplementary information).

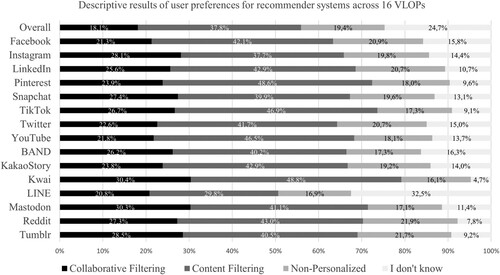

Algorithmic contestation intention

Drawing on the existing provisions in the DSA, we conceptualize algorithmic contestation based on users choosing a non-personalized RS rather than the personalized default option. We measured the dependent variable by showing respondents three different RS options on VLOPs and asking them: ‘If you had the choice, which option would you prefer for your social media feeds?’. The options included two personalized RS: First, collaborative filtering, in which ‘recommendations are based on the choices of other users who are similar to you’ (18.1%), and second, content filtering, in which ‘recommendations are based on the content you watched or read before’ (37.8%). The third option referred to a non-personalized RS in which ‘content is presented randomly’ (19.4%). Respondents could also choose a residual ‘I don't know’ option (24.7%), which we treated as missing values in the data analysis. We collapsed the collaborative filtering and the content filtering options into one category, resulting in a dichotomous variable with 0 = ‘preference for a personalized RS’ (74.3%) and 1 = ‘preference for a non-personalized RS’ (25.7%).
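The recoding described above can be sketched in a few lines. This is a minimal illustration built only from the percentages reported in the text, not the authors' analysis code; the category labels and the `recode` helper are hypothetical names chosen for the example.

```python
# Reported shares of all respondents (percent), taken from the text above.
shares = {
    "collaborative": 18.1,
    "content": 37.8,
    "random": 19.4,
    "dont_know": 24.7,
}


def recode(choice):
    """Map a raw answer to the dichotomous outcome (None = missing)."""
    if choice == "dont_know":
        return None  # residual option is treated as missing
    return 1 if choice == "random" else 0  # 1 = non-personalized RS


# Shares among respondents with a valid (non-missing) answer.
valid_total = sum(v for k, v in shares.items() if recode(k) is not None)
non_personalized = 100 * sum(v for k, v in shares.items() if recode(k) == 1) / valid_total
personalized = 100 * sum(v for k, v in shares.items() if recode(k) == 0) / valid_total

print(round(personalized, 1), round(non_personalized, 1))
```

Because the inputs are already rounded to one decimal, the recomputed shares can differ from the reported 74.3% and 25.7% by about a tenth of a percentage point.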

Our descriptive RQ1 explores differences between VLOPs. We measured this variable using a two-step process. First, respondents indicated with which VLOPs they had an active account (logged in within the last 12 months). We included 16 VLOPs (see Footnote 2) based on their popularity in the six countries under investigation. Second, for each VLOP a respondent had an active account with, we repeated the question above, asking which of the three RS options they would prefer if given the choice.

Perceived risks of social media platforms

To measure respondents’ risk perceptions of VLOPs, respondents indicated to which extent they agreed with nine items referring to health (e.g., ‘Social media platforms make users feel isolated.’), democracy (e.g., ‘Social media platforms encourage the spread of misinformation.’), social cohesion (e.g., ‘Social media platforms harm social relationships.’) and privacy (e.g., ‘Social media platforms do not protect users’ privacy.’). A mean index showed good internal consistency (M = 3.34, SD = 0.93, α = 0.91).
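For readers unfamiliar with the reliability statistics reported for the indices in this section, the following sketch shows how a mean index and Cronbach's α are conventionally computed. It is an illustration under stated assumptions, not the authors' code: the toy responses are invented, and skipping ‘I prefer not to answer’ responses (coded as `None`) when averaging is our assumption about missing-data handling.

```python
from statistics import mean, pvariance


def mean_index(responses):
    """Average one respondent's item scores, skipping missing answers (None)."""
    answered = [r for r in responses if r is not None]
    return mean(answered) if answered else None


def cronbach_alpha(rows):
    """Cronbach's alpha for complete rows of item scores (one row per respondent)."""
    k = len(rows[0])  # number of items in the scale
    item_vars = [pvariance([row[i] for row in rows]) for i in range(k)]
    total_var = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical answers from four respondents to three 5-point Likert items.
toy = [[4, 5, 4], [2, 2, 3], [3, 3, 3], [5, 4, 5]]
print([round(mean_index(r), 2) for r in toy])
print(round(cronbach_alpha(toy), 2))
```

Values of α closer to 1 indicate that the items vary together, which is the sense in which the indices here are described as internally consistent.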

Attitudes towards RS

To measure users’ attitudes towards content recommendations, we adapted three items from Smit et al. (Citation2014) and Thurman et al. (Citation2019), e.g., ‘Automatic recommendations by AI are a good way to follow what goes on in society’. We constructed a reliable mean index (M = 2.95, SD = 1.08, α = 0.88).

Awareness of RS

We used the four-dimensional scale by Zarouali et al. (Citation2021) to measure users’ awareness of content recommendations on VLOPs. Four items referred to content filtering (e.g., ‘AI is used to recommend content to me on social media platforms.’), three items addressed automated decision-making (e.g., ‘AI makes automated decisions on what content I get to see on social media platforms.’), three items alluded to the human-algorithm interplay (e.g., ‘The content that AI recommends to me on social media platforms depends on the data that I make available online.’), and three items addressed ethical considerations (e.g., ‘It is not always transparent why AI decides to show me certain content on social media platforms.’). A mean index showed good internal consistency (M = 3.24, SD = 1.00, α = 0.96).

Privacy concerns

People's privacy concerns were measured using three items we adopted from Knijnenburg et al. (Citation2012), e.g., ‘I'm afraid social media platforms disclose private information about me’. Since the inverse-coded item decreased the scale's reliability, even after recoding, we constructed a mean index with the two remaining items (M = 3.40, SD = 0.97, r = 0.54).

We included seven control variables based on the existing empirical evidence. Trust in social media (M = 2.49, SD = 1.09) was assessed with an item adapted from the European Social Survey (‘How much do you trust … ?’). We measured social media use by asking respondents how many days a week they used the 16 VLOPs (1 = 0 days, 8 = 7 days, M = 4.90, SD = 1.72, α = 0.93). Political interest (M = 3.44, SD = 1.25), political partisanship (1 = very left, 11 = very right, M = 6.19, SD = 2.42), and the sociodemographic variables gender (50.7% female), age (M = 48.95, SD = 17.69), and education (38.97% tertiary education) were all measured with standard single-item questions.

Results

First, we present the descriptive results of people's intention to contest personalized RS (RQ1) and zoom in on differences between platforms. Second, we conducted a mixed-effects logistic regression analysis to identify individual drivers of algorithmic contestation intention (H1-H4) and cross-country differences (RQ2). R version 4.2.3 was used for all statistical analyses (all data and code are openly available: https://osf.io/e5x8j/?view_only=988879ae061c408d843e7dcc774a0c8a).

Descriptive results

Overall, we found that out of all respondents, 37.8% opted for content recommendations based on the content they consumed before (content filtering), while 18.1% would opt for an algorithmic configuration that recommends content based on the preferences of similar users (collaborative filtering). 19.4% would contest personalized recommendations altogether by choosing random recommendations for their social media feeds. As the accompanying figure suggests, little variation across platforms exists. The intention to contest personalized recommendations ranged from 20.9% (Facebook) to 16.1% (Kwai).

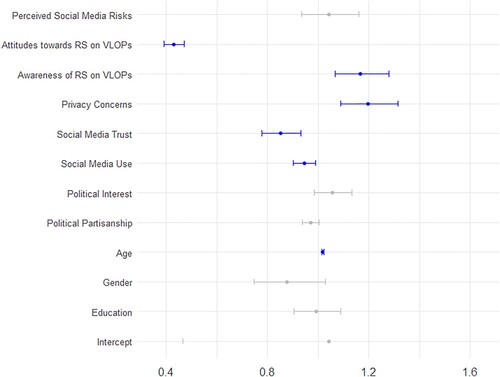

Mixed-effects logistic regression analysis

We addressed H1-H4 by computing a mixed-effects logistic regression analysis using the ‘glmer’ function from the ‘lme4’ package (see Footnote 3). The dichotomous variable Intention for algorithmic contestation was used as the dependent variable (1 = intention to contest personalized RS, 0 = no intention to contest personalized RS). In mixed-effects logistic regression analysis, fixed effects quantify the impact of predictor variables on the dependent variable, assuming these effects to be the same across all groups. Random effects capture the variation in the dependent variable attributable to differences between groups, allowing the intercepts to vary across these groups. The following independent variables were used for estimating the fixed effects: perceived social media risks (H1), attitudes towards content recommendations (H2), awareness of content recommendations (H3), privacy concerns (H4) and several control variables. The countries were used to estimate the random effects.
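As a hedged illustration in our own notation (not taken from the original analysis code), a random-intercept logistic regression of this kind is commonly written as follows. Here i indexes respondents, j indexes countries, Y is the dichotomous contestation intention, the x terms are the predictors and control variables, and the normal distribution of the country intercepts is the usual modelling assumption rather than something stated in the text.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \beta_0 + \sum_{k=1}^{K} \beta_k \, x_{kij} + u_j,
\qquad u_j \sim \mathcal{N}\!\left(0, \sigma_u^2\right)
```

Under this specification, the exponentiated coefficient exp(β_k) is the odds ratio for predictor k, and the country-specific term u_j shifts the intercept for all respondents in country j.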

At the individual level, we found that perceived social media risks are not associated with the intention to contest personalized RS (OR = 1.04, 95% CI [0.94, 1.16]). As this finding does not align with our assumptions, we found no support for H1 (see Figure 2).

Figure 2. Fixed effects on users’ contestation of personalized recommender systems on VLOPs.

Note: N = 4456; Akaike information criterion (AIC) = 4034.1; Bayesian information criterion (BIC) = 4117.4, plot shows odds ratios and confidence intervals for all fixed effects, significant effects are marked in blue
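As a plausibility check on the fit statistics in the note above, the two information criteria can be combined to back out the number of estimated parameters. The sketch below assumes the standard definitions AIC = 2k − 2 ln L and BIC = k ln(n) − 2 ln L, with n equal to the reported N; the function names are hypothetical and the calculation is not part of the original analysis.

```python
import math


def implied_parameter_count(aic, bic, n_obs):
    """Solve BIC - AIC = k * (ln(n) - 2) for k, the number of parameters."""
    return (bic - aic) / (math.log(n_obs) - 2.0)


def implied_log_likelihood(aic, k):
    """Rearrange AIC = 2k - 2 ln L to recover the log-likelihood ln L."""
    return (2.0 * k - aic) / 2.0


if __name__ == "__main__":
    # Values as reported in the figure note.
    k = implied_parameter_count(aic=4034.1, bic=4117.4, n_obs=4456)
    print("implied number of parameters:", round(k, 2))
    print("implied log-likelihood:", implied_log_likelihood(4034.1, round(k)))
```

Under these assumptions, the reported values imply roughly 13 estimated parameters, which would be in line with an intercept, the four hypothesized predictors, a set of control variables, and one random-intercept variance.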

We found a negative and significant association between attitudes towards content recommendations and the dependent variable (OR = 0.43, 95% CI [0.39, 0.47]): the more positive a person's attitudes towards personalized content recommendations on VLOPs, the less likely they were to contest personalized content recommendations. Our findings, therefore, support H2.

Awareness of content recommendations on VLOPs was significantly associated with people’s intention to contest personalized RS (OR = 1.17, 95% CI [1.07, 1.28]): the more people were aware that VLOPs use algorithms to recommend personalized content to their users, the more likely they would opt for non-personalized recommendation algorithms. The result supports H3.

Our study revealed a significant odds ratio for people's privacy concerns (OR = 1.20, 95% CI [1.09, 1.32]), indicating that individuals more concerned about privacy were more likely to contest personalized RS. This result supports H4.

Zooming in on the control variables, we found significant odds ratios for trust in social media (OR = 0.85, 95% CI [0.78, 0.93]) and social media use (OR = 0.94, 95% CI [0.90, 0.99]). The lower respondents’ trust in social media platforms and the less frequently they used these platforms, the more likely they were to contest personalized recommendations. Moreover, our results showed a small significant association between age and the dependent variable (OR = 1.02, 95% CI [1.01, 1.02]), indicating that older respondents were more likely to contest personalized RS.
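To make the reported odds ratios easier to read, the following minimal sketch converts each of them into a percentage change in the odds of contesting per one-unit increase in the predictor and checks whether the 95% confidence interval excludes 1. The numbers are copied from the text above; the function names, and the assumption that the intervals are symmetric Wald intervals on the log-odds scale (used only to approximate standard errors), are ours.

```python
import math

# Odds ratios with 95% confidence intervals (OR, lower, upper) as reported above.
REPORTED = {
    "perceived social media risks (H1)": (1.04, 0.94, 1.16),
    "attitudes towards recommendations (H2)": (0.43, 0.39, 0.47),
    "awareness of recommendations (H3)": (1.17, 1.07, 1.28),
    "privacy concerns (H4)": (1.20, 1.09, 1.32),
    "trust in social media": (0.85, 0.78, 0.93),
    "social media use": (0.94, 0.90, 0.99),
}


def percent_change_in_odds(odds_ratio):
    """Percentage change in the odds per one-unit increase in the predictor."""
    return (odds_ratio - 1.0) * 100.0


def excludes_one(ci_low, ci_high):
    """True if the interval does not contain OR = 1 (i.e. no association)."""
    return ci_low > 1.0 or ci_high < 1.0


def approx_log_odds_se(ci_low, ci_high, z=1.96):
    """Approximate standard error of the log-odds coefficient.

    Assumes a symmetric Wald interval on the log scale (an assumption).
    """
    return (math.log(ci_high) - math.log(ci_low)) / (2.0 * z)


def summarize(reported=REPORTED):
    """Return direction, significance and log-odds details per predictor."""
    rows = {}
    for name, (odds_ratio, ci_low, ci_high) in reported.items():
        rows[name] = {
            "pct_change": round(percent_change_in_odds(odds_ratio), 1),
            "significant": excludes_one(ci_low, ci_high),
            "log_odds": round(math.log(odds_ratio), 3),
            "se": round(approx_log_odds_se(ci_low, ci_high), 3),
        }
    return rows


if __name__ == "__main__":
    for name, row in summarize().items():
        print(name, row)
```

Read this way, an odds ratio below 1 (attitudes, trust, social media use) corresponds to lower odds of contesting as the predictor increases, and an odds ratio above 1 (awareness, privacy concerns) to higher odds.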

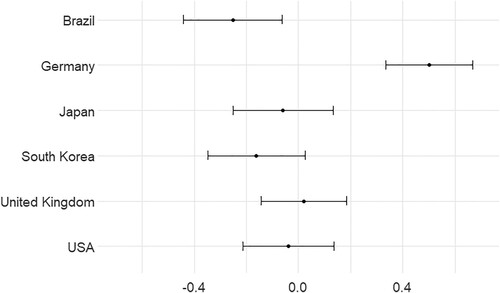

We used the random effects to identify country differences. The results indicated variability in the intercepts across countries (Var = 0.07, SD = 0.26), suggesting differences in the baseline likelihood of contesting personalized RS among the countries studied. To zoom in on the country differences, we used the intercept values for each country (see Figure 3).

Figure 3. Random effects on users’ contestation of personalized recommender systems on VLOPs.

Note: N = 4456; Akaike information criterion (AIC) = 4034.1; Bayesian information criterion (BIC) = 4117.4, plot shows intercept values and confidence intervals for random effects.

For Germany, the intercept was estimated at 0.5 (95% CI [0.78, 0.93]), suggesting that individuals from Germany were significantly more likely to contest personalized RS on social media platforms than the average of all countries in the sample. Conversely, Brazil had an intercept of −0.25 (95% CI [−0.44, −0.06]), indicating that Brazilians showed a significantly lower likelihood of contesting personalized RS compared to the average across countries. The intercepts for South Korea, Japan and the US were negative and non-significant, while the intercept for the UK was positive and non-significant. These findings suggested that respondents from South Korea, Japan, the US and the UK showed a similar intention for algorithmic contestation.
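Because country intercepts in a logistic mixed model are expressed on the log-odds scale, their practical size is easier to judge after exponentiation. The sketch below assumes that the reported intercept values are deviations from the overall intercept on that scale. It also uses the overall share of 19.4% from the descriptive results as a purely hypothetical baseline probability; the model's actual baseline depends on the covariates.

```python
import math

# Country-level intercept deviations (log-odds scale) as reported in the text.
COUNTRY_INTERCEPTS = {"Germany": 0.5, "Brazil": -0.25}
RANDOM_INTERCEPT_VARIANCE = 0.07  # reported variance of the country intercepts
HYPOTHETICAL_BASELINE = 0.194  # illustration only: overall descriptive share


def odds_multiplier(intercept_deviation):
    """Factor by which a country's odds differ from the cross-country average."""
    return math.exp(intercept_deviation)


def inverse_logit(log_odds):
    """Map log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def shifted_probability(baseline_probability, intercept_deviation):
    """Probability after adding a country deviation to the baseline log-odds."""
    baseline_log_odds = math.log(baseline_probability / (1.0 - baseline_probability))
    return inverse_logit(baseline_log_odds + intercept_deviation)


if __name__ == "__main__":
    print("SD of intercepts:", round(math.sqrt(RANDOM_INTERCEPT_VARIANCE), 2))
    for country, deviation in COUNTRY_INTERCEPTS.items():
        print(
            country,
            "odds multiplier:", round(odds_multiplier(deviation), 2),
            "illustrative probability:",
            round(shifted_probability(HYPOTHETICAL_BASELINE, deviation), 3),
        )
```

Under these assumptions, an intercept of 0.5 corresponds to odds of contesting that are about 1.65 times the cross-country average, and an intercept of −0.25 to odds about 0.78 times the average.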

Discussion

This study set out to investigate people's intention to contest personalized RS on VLOPs. Special attention rested on assessing individual drivers and cross-national differences. If given the choice, about one out of five respondents would opt for non-personalized curation of their social media feeds, with little variation among platforms. In line with our assumptions, more negative attitudes toward RS, higher awareness of RS, and more concerns for privacy were associated with a higher intention to contest. Looking at cross-national variation, we observed that German respondents were particularly prone to algorithmic contestations, while respondents from Brazil were least likely to contest.

Contestation as a choice

Our research findings are crucial in the context of the DSA, which guarantees users a non-personalized recommendation option. The data demonstrate that about 20% of all respondents expressed a preference for this non-personalized alternative. The fact that this finding held across all social media platforms raises the question of why the option to choose a non-personalized RS is limited to VLOPs. The insights from this study show that, from a citizen perspective, there are no reasons to differentiate between VLOPs and non-VLOPs in that regard (see Note 4). Our findings support an argument in favor of extending the non-personalization option under Art. 38 DSA to all platforms, including those that are not designated by the European Commission as VLOPs.

Our results further indicate that German respondents are more likely to contest personalized RS than respondents in any other country examined. This finding is consistent with the existing literature. Several factors may contribute to it. On the one hand, Germany has a long tradition of strict privacy and data protection laws, which may lead Germans to be more sensitive to data privacy. Brazil, on the other hand, has only recently introduced stricter data protection regulation inspired by the EU’s GDPR. Due to the Brussels effect, non-EU citizens may benefit from the right to choose a non-personalized recommendation option in the future. However, the fact that Germany stands out regarding users’ contestation intention lends tentative support to the argument that the measures outlined in the DSA might not be as effective in other countries. More empirical research is needed to disentangle the reasons for the cross-country variation our study found.

Algorithmic transparency and the DSA

Increased awareness among users that recommendations on VLOPs are algorithmically curated enhances their propensity to contest personalized RS and choose a non-personalized alternative. This finding highlights the importance of empowering users to choose the design of the recommendation algorithm. It further raises questions concerning algorithmic transparency. The DSA advocates for algorithmic transparency in Art. 27, asserting that the main parameters used in recommender systems should be comprehensible to the average user. In light of our empirical results, this approach appears fruitful, as the mere awareness of RS usage heightens the probability of users contesting personalized systems, enabling users to influence the RS they interact with.

The empirical finding could be interpreted as an argument to reconsider the algorithmic transparency obligations. Art. 27 stipulates that online platforms are required to provide information about the main parameters of their RS within the terms and conditions. Yet, questions regarding the realistic accessibility of this information arise, as previous research suggests that users hardly ever read the terms of use, let alone revisit them regularly (McDonald & Cranor, 2008). Consequently, while there may be algorithmic transparency in principle, it arguably does not translate into transparency in practice because this vital information is obscured within the terms and conditions. To genuinely empower users, a more accessible form of algorithmic transparency should be considered. Regular reminders about the parameters of an RS, along with guidance on how users can influence these parameters, are arguably a more effective approach to achieving actionable transparency and user empowerment. Hence, our study identifies a need to reconsider Art. 27 DSA.

A plea for algorithmic literacy

The observed positive impact of algorithmic awareness underscores the significance of algorithmic literacy for online platform users. The nuanced measurement of this factor suggests that it is insufficient to make users aware that they are receiving highly personalized recommendations. Indeed, algorithmic literacy must extend beyond basic awareness and delve into the more intricate aspects of RS. Users must be educated about the dynamics of algorithms, which often operate with limited or potentially no human oversight (Zarouali et al., 2021). Moreover, users should be made aware of how their own data input serves as training data for these algorithms, enabling the generation of increasingly personalized recommendations over time. In addition, it is crucial for users to understand the potential discriminatory implications of RS. Therefore, a holistic approach to algorithmic literacy is required, encompassing the basic functionality of algorithms and their wider implications in the digital landscape. As such, the role of civil society organizations in enlightening the public about RS worldwide and creating pathways for actionable steps becomes vital. For instance, the Center for Humane Technology has made strides in this direction. Its contributions, including an online course, the ‘Your Undivided Attention’ podcast, and its involvement in the Netflix documentary ‘The Social Dilemma,’ exemplify the educational efforts necessary to heighten public awareness about the potential pitfalls of unchallenged VLOP usage.

Limitations and future outlook

Several limitations of this investigation suggest promising directions for future research. First, our study focused solely on the intention for algorithmic contestation rather than actual behavior. Hence, the question remains whether users, when confronted with the choice, would forgo the convenience of personalized content recommendations in favor of a non-personalized alternative. The potential discrepancy between stated intent and real-world action is known as the intention-behavior gap (Kozyreva et al., 2021). Given the prevalence of personalized RS across social media, e-commerce, and streaming platforms, users might not fully grasp the concept of non-personalized recommendations, such as random or reverse-chronological sorting. Research documents that personalized RS can effectively enhance user experience and allow users to manage information overload (Honkala & Cui, 2012; Zhou et al., 2012). Conversely, one might speculate that platforms employing non-personalized recommendations, such as Mastodon, have struggled to gain popularity due to their inability to provide a tailored user experience. Behavioral insights are needed to determine whether and under which conditions respondents would change their social media settings to select a non-personalized algorithm.

Second, our study examined the contestation of personalized RS as a binary choice in line with the DSA. We focused on contestation based on having the choice between personalized RS and a non-personalized alternative. Our study gives reasons to explore the desirability of recommendation logics that address broader societal concerns, such as the lack of diversity, and offer more sophisticated control options. Harambam and colleagues (2019) proposed a more nuanced approach, suggesting diversified user choices through RS personae. RS personae could offer a refined perspective on RS by including diversity-sensitive options. In addition to personalized and non-personalized options, they suggest diversity-sensitive personae, which they call ‘the explorer’ (offering ‘news from unexplored territories’), ‘the diplomat’ (offering ‘news from the other ideological side’), and ‘the wizard’ (offering ‘surprising news’). Art. 44 (1) DSA paves the way for such a system, stating that the European Commission should promote the setting of voluntary standards. According to subparagraph (i), these standards explicitly include the ‘choice interfaces and presentation of information on the main parameters of different types of recommender systems’, mentioned in Art. 27 and 38 DSA. Such a standard could include algorithmic recommender personae, promoting their use among the VLOPs participating in this standard-setting program. These fine-grained distinctions into different RS personae illustrate that personalized RS are not inherently problematic. Only when they are used for attention extraction, engagement and profit maximization does personalization arguably have detrimental individual and societal effects. Designing RS centered on user goals could recast personalized RS in a more favorable light and enhance the social responsibility of VLOPs. Nevertheless, more empirical evidence is necessary to determine whether users prefer these personalized RS personae over the existing personalized and non-personalized algorithms.

Conclusion

The results of this cross-national study suggest user demand for mechanisms to contest personalized RS on VLOPs by opting for a non-personalized option. Regulatory frameworks like the DSA outline concrete steps to empower users by mandating VLOPs to increase algorithmic transparency and offer non-personalized alternatives. While these policies are an important step towards taking the interests and wishes of users into account, they can only effectively curb the potentially negative implications of personalized RS if users make use of them. The findings indicate that users’ intention for algorithmic contestation hinges on individual and country-level characteristics. We call for extending Art. 38 DSA to encompass all online platforms and refining Art. 27 DSA to make algorithmic transparency more accessible. Additionally, we advocate the promotion of algorithmic literacy as a means to empower users to make well-informed choices concerning the extent to which personalized RS shape their social media consumption.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes on contributors

Christopher Starke

Christopher Starke is an Assistant Professor of the ‘human factor in new technologies’ at the Amsterdam School of Communication Research of the University of Amsterdam. His research interests include the use of AI for democracy and algorithmic fairness.

Ljubiša Metikoš

Ljubiša Metikoš is a PhD student at the Institute for Information Law of the University of Amsterdam. His research interests include governance of recommender systems and artificial intelligence in the judiciary.

Natali Helberger

Natali Helberger is a distinguished University Professor of law & digital technology, with a special focus on AI, at the Institute for Information Law of the University of Amsterdam. She studies the legal, ethical, and public policy-related challenges associated with using algorithms and AI in media, political campaigning, commerce, and the healthcare sector, and the implications this has for users and society.

Claes de Vreese

Claes de Vreese is a University Professor of Artificial Intelligence and Society at the University of Amsterdam, an affiliated professor at the University of Southern Denmark, and a member of the Danish Institute of Advanced Studies. He is a leading partner in the AI, Media & Democracy Lab, in which researchers from the UvA, AUAS, and CWI collaborate with media partners, social partners, and the municipality of Amsterdam. His research interests include understanding and tackling the (un)intended consequences of the use of algorithms and AI in society.

Notes

1 As many countries met our selection criteria, the countries were additionally selected based on the authors’ familiarity with the social media ecosystems in those countries.

2 Facebook, Twitter, Pinterest, Instagram, YouTube, LinkedIn, Tumblr, Reddit, TikTok, Snapchat, Mastodon, LINE, KakaoStory, BAND, Kwai. Due to their market share in the EU, the DSA only defines the following platforms as VLOPs: Facebook, Twitter, Pinterest, Instagram, YouTube, LinkedIn, TikTok, Snapchat. As the market share of most other platforms is significantly higher in other countries in our sample, we also refer to them as VLOPs.

3 We added two robustness checks (see Tables A3 and A4 in the supplementary information): First, we analyzed the data with a standard logistic regression model using the countries as dummy variables. Second, we ran the analysis for all respondents (without excluding straight liners and filtering for time). While we find minor variations in effect sizes, the results of the hypothesis tests remain consistent.

4 Alibaba AliExpress, Amazon Store, Apple AppStore, Booking.com, Facebook, Google Play, Google Maps, Google Shopping, Instagram, LinkedIn, Pinterest, Snapchat, TikTok, Twitter, Wikipedia, YouTube

References

- Anderson, M., & Jiang, J. (2018). Teens, social media & technology 2018. Pew Research Center. https://assets.pewresearch.org/wp-content/uploads/sites/14/2018/05/31102617/PI_2018.05.31_TeensTech_FINAL.pdf

- Auxier, B. (2020). 64% of Americans say social media have a mostly negative effect on the way things are going in the U.S. today. Pew Research Center. https://www.pewresearch.org/fact-tank/2020/10/15/64-of-americans-say-social-media-have-a-mostly-negative-effect-on-the-way-things-are-going-in-the-u-s-today/

- Baumann, F., Lorenz-Spreen, P., Sokolov, I. M., & Starnini, M. (2020). Modeling echo chambers and polarization dynamics in social networks. Physical Review Letters, 124(4), 048301. https://doi.org/10.1103/PhysRevLett.124.048301

- Bayamlioglu, E. (2018). Contesting automated decisions. European Data Protection Law Review, 4(4), 433–446. https://doi.org/10.21552/edpl/2018/4/6

- Bendiek, A., & Stuerzer, I. (2023). The Brussels effect, European regulatory power and political capital: Evidence for mutually reinforcing internal and external dimensions of the Brussels effect from the European digital policy debate. Digital Society, 2(1), 5. https://doi.org/10.1007/s44206-022-00031-1

- Binns, R. (2018). Algorithmic accountability and public reason. Philosophy & Technology, 31(4), 543–556. https://doi.org/10.1007/s13347-017-0263-5

- Bradford, A. (2020). The Brussels effect: How the European Union rules the world. Oxford University Press.

- Chater, N., & Loewenstein, G. (2022). The i-frame and the s-frame: How focusing on individual-level solutions has led behavioral public policy astray. The Behavioral and Brain Sciences, 1–60.

- Cota, W., Ferreira, S. C., Pastor-Satorras, R., & Starnini, M. (2019). Quantifying echo chamber effects in information spreading over political communication networks. EPJ Data Science, 8(1), 1–13. https://doi.org/10.1140/epjds/s13688-019-0213-9

- Council of Europe. (2018). Recommendation CM/Rec(2018)2 of the Committee of Ministers to member States on the roles and responsibilities of internet intermediaries. https://rm.coe.int/0900001680790e14#:~:text=State%20authorities%20should%20protect%20the,Convention%20108%2C%20are%20also%20guaranteed

- Directorate-General for Communication. (2019). Special Eurobarometer 487a: The General Data Protection Regulation [dataset]. https://europa.eu/eurobarometer/surveys/detail/2222

- Eslami, M., Rickman, A., Vaccaro, K., Aleyasen, A., Vuong, A., Karahalios, K., Hamilton, K., & Sandvig, C. (2015). “I always assumed that I wasn’t really that close to [her]”: Reasoning about invisible algorithms in news feeds. Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 153–162).

- European Commission. (2022). The Digital Services Act: Ensuring a safe and accountable online environment. https://commission.europa.eu/strategy-and-policy/priorities-2019-2024/europe-fit-digital-age/digital-services-act-ensuring-safe-and-accountable-online-environment_en

- Flaxman, S., Goel, S., & Rao, J. M. (2016). Filter bubbles, echo chambers, and online news consumption. Public Opinion Quarterly, 80(S1), 298–320. https://doi.org/10.1093/poq/nfw006

- Fuchs, C. (2021). Social media: A critical introduction. SAGE.

- Gillespie, T. (2018). Custodians of the internet: Platforms, content moderation, and the hidden decisions that shape social media. Yale University Press.

- Guess, A. M., Malhotra, N., Pan, J., Barberá, P., Allcott, H., Brown, T., Crespo-Tenorio, A., Dimmery, D., Freelon, D., Gentzkow, M., González-Bailón, S., Kennedy, E., Kim, Y. M., Lazer, D., Moehler, D., Nyhan, B., Rivera, C. V., Settle, J., Thomas, D. R., … Tucker, J. A. (2023). How do social media feed algorithms affect attitudes and behavior in an election campaign? Science, 381(6656), 398–404. https://doi.org/10.1126/science.abp9364

- Haim, M., Graefe, A., & Brosius, H.-B. (2018). Burst of the filter bubble? Digital Journalism, 6(3), 330–343. https://doi.org/10.1080/21670811.2017.1338145

- Harambam, J., Bountouridis, D., Makhortykh, M., & van Hoboken, J. (2019). Designing for the better by taking users into account: A qualitative evaluation of user control mechanisms in (news) recommender systems. Proceedings of the 13th ACM Conference on Recommender Systems, 69–77. https://doi.org/10.1145/3298689.3347014

- Helberger, N., Karppinen, K., & D’Acunto, L. (2018). Exposure diversity as a design principle for recommender systems. Information, Communication & Society, 21(2), 191–207. https://doi.org/10.1080/1369118X.2016.1271900

- High-Level Expert Group on AI. (2019). Ethics guidelines for trustworthy AI. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

- Hildebrandt, M. (2022). The issue of proxies and choice architectures. Why EU Law matters for recommender systems. Frontiers in Artificial Intelligence, 5, 789076. https://doi.org/10.3389/frai.2022.789076

- Hinds, J., & Joinson, A. N. (2018). What demographic attributes do our digital footprints reveal? A systematic review. PLoS One, 13(11), e0207112. https://doi.org/10.1371/journal.pone.0207112

- Honkala, M., & Cui, Y. (2012). Automatic on-device filtering of social networking feeds. Proceedings of the 7th Nordic Conference on Human-Computer Interaction: Making Sense Through Design, 721–730. https://doi.org/10.1145/2399016.2399126

- Iyengar, S., Lelkes, Y., Levendusky, M., Malhotra, N., & Westwood, S. J. (2019). The origins and consequences of affective polarization in the United States. Annual Review of Political Science, 22(1), 129–146. https://doi.org/10.1146/annurev-polisci-051117-073034

- Jannach, D., Naveed, S., & Jugovac, M. (2017). User control in recommender systems: Overview and interaction challenges. E-Commerce and Web Technologies, 278, 21–33. https://doi.org/10.1007/978-3-319-53676-7_2

- Jeckmans, A. J. P., Beye, M., Erkin, Z., Hartel, P., Lagendijk, R. L., & Tang, Q. (2013). Privacy in recommender systems. In N. Ramzan, R. van Zwol, J.-S. Lee, K. Clüver, & X.-S. Hua (Eds.), Social media retrieval (pp. 263–281). Springer London.

- Jin, Y., Tintarev, N., & Verbert, K. (2018). Effects of personal characteristics on music recommender systems with different levels of controllability. Proceedings of the 12th ACM Conference on Recommender Systems (pp. 13–21). https://doi.org/10.1145/3240323.3240358

- Karimi, M., Jannach, D., & Jugovac, M. (2018). News recommender systems – Survey and roads ahead. Information Processing & Management, 54(6), 1203–1227. https://doi.org/10.1016/j.ipm.2018.04.008

- Kluttz, D. N., & Mulligan, D. K. (2019). Automated decision support technologies and the legal profession. Berkeley Technology Law Journal, 34(3), 853–890.

- Knijnenburg, B. P., & Kobsa, A. (2013). Making decisions about privacy: Information disclosure in context-aware recommender systems. ACM Transactions on Interactive Intelligent Systems, 3(3), 1–23. https://doi.org/10.1145/2499670

- Knijnenburg, B. P., Willemsen, M. C., Gantner, Z., Soncu, H., & Newell, C. (2012). Explaining the user experience of recommender systems. User Modeling and User-Adapted Interaction, 22(4), 441–504. https://doi.org/10.1007/s11257-011-9118-4

- Kozyreva, A., Lorenz-Spreen, P., Hertwig, R., Lewandowsky, S., & Herzog, S. M. (2021). Public attitudes towards algorithmic personalization and use of personal data online: Evidence from Germany, Great Britain, and the United States. Humanities and Social Sciences Communications, 8(1), 1–11. https://doi.org/10.1057/s41599-021-00787-w

- Lorenz-Spreen, P., Oswald, L., Lewandowsky, S., & Hertwig, R. (2022). A systematic review of worldwide causal and correlational evidence on digital media and democracy. Nature Human Behaviour, 7, 74–101. https://doi.org/10.1038/s41562-022-01460-1

- Lyons, H., Velloso, E., & Miller, T. (2021). Conceptualising contestability: Perspectives on contesting algorithmic decisions. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW1), 1–25. https://doi.org/10.1145/3449180

- McDonald, A. M., & Cranor, L. F. (2008). The cost of reading privacy policies. I/S: A Journal of Law and Policy for the Information Society, 3(4), 543–568.

- McQuillan, D. (2022). Resisting AI: An anti-fascist approach to artificial intelligence. Policy Press.

- Monzer, C., Moeller, J., Helberger, N., & Eskens, S. (2020). User perspectives on the news personalisation process: Agency, trust and utility as building blocks. Digital Journalism, 8(9), 1142–1162. https://doi.org/10.1080/21670811.2020.1773291

- Newport, C. (2019). Digital minimalism: Choosing a focused life in a noisy world. Penguin.

- Nguyen, T. T., Hui, P.-M., Harper, F. M., Terveen, L., & Konstan, J. A. (2014). Exploring the filter bubble: The effect of using recommender systems on content diversity. Proceedings of the 23rd International Conference on World Wide Web, 677–686. https://doi.org/10.1145/2566486.2568012

- Nyhan, B., Settle, J., Thorson, E., Wojcieszak, M., Barberá, P., Chen, A. Y., Allcott, H., Brown, T., Crespo-Tenorio, A., Dimmery, D., Freelon, D., Gentzkow, M., González-Bailón, S., Guess, A. M., Kennedy, E., Kim, Y. M., Lazer, D., Malhotra, N., Moehler, D., … Tucker, J. A. (2023). Like-minded sources on Facebook are prevalent but not polarizing. Nature, 620, 137–144. https://doi.org/10.1038/s41586-023-06297-w

- OECD. (2019). Recommendation of the Council on Artificial Intelligence. https://legalinstruments.oecd.org/en/instruments/oecd-legal-0449

- Oeldorf-Hirsch, A., & Neubaum, G. (2023). Attitudinal and behavioral correlates of algorithmic awareness among German and U.S. social media users. Journal of Computer-Mediated Communication, 28(5), zmad035.

- Papadamou, K., Papasavva, A., Zannettou, S., Blackburn, J., Kourtellis, N., Leontiadis, I., Stringhini, G., & Sirivianos, M. (2020). Disturbed YouTube for kids: Characterizing and detecting inappropriate videos targeting young children. Proceedings of the International AAAI Conference on Web and Social Media, 14, 522–533. https://doi.org/10.1609/icwsm.v14i1.7320

- Powers, E. (2017). My news feed is filtered? Digital Journalism, 5(10), 1315–1335. https://doi.org/10.1080/21670811.2017.1286943

- Schertel Mendes, L., & Kira, B. (2023). The road to regulation of artificial intelligence: The Brazilian experience. Internet Policy Review.

- Schumacher, S. (2020, April 2). 8 Charts on internet use around the world as countries grapple with COVID-19. Pew Research Center. https://www.pewresearch.org/short-reads/2020/04/02/8-charts-on-internet-use-around-the-world-as-countries-grapple-with-covid-19/

- Smit, E. G., Van Noort, G., & Voorveld, H. A. M. (2014). Understanding online behavioural advertising: User knowledge, privacy concerns and online coping behaviour in Europe. Computers in Human Behavior, 32, 15–22. https://doi.org/10.1016/j.chb.2013.11.008

- Sun, Y., Drivas, M., Liao, M., & Sundar, S. S. (2023). When recommender systems snoop into social media, users trust them less for health advice. Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems.

- Swart, J. (2021). Experiencing algorithms: How young people understand, feel about, and engage with algorithmic news selection on social media. Social Media + Society, 7(2), 205630512110088. https://doi.org/10.1177/20563051211008828

- Taylor, S. H., & Brisini, K. S. C. (2024). Parenting the TikTok algorithm: An algorithm awareness as process approach to online risks and opportunities. Computers in Human Behavior, 150, 107975. https://doi.org/10.1016/j.chb.2023.107975

- Thurman, N., Moeller, J., Helberger, N., & Trilling, D. (2019). My friends, editors, algorithms, and I. Digital Journalism, 7(4), 447–469. https://doi.org/10.1080/21670811.2018.1493936

- Turow, J., King, J., Hoofnagle, C. J., Bleakley, A., & Hennessy, M. (2009). Americans reject tailored advertising and three activities that enable it. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.1478214

- Van den Bogaert, L., Geerts, D., & Harambam, J. (2022). Putting a human face on the algorithm: Co-designing recommender personae to democratize news recommender systems. Digital Journalism, 1–21. https://doi.org/10.1080/21670811.2022.2097101

- van Drunen, M., Zarouali, B., & Helberger, N. (2022). Recommenders you can rely on: A legal and empirical perspective on the transparency and control individuals require to trust news personalisation. JIPITEC, 13(3), 302–322.

- Vogels, E., & Gelles-Watnick, R. (2023, April 24). Teens and social media: Key findings from Pew Research Center surveys. Pew Research Center. https://www.pewresearch.org/short-reads/2023/04/24/teens-and-social-media-key-findings-from-pew-research-center-surveys/

- Vosoughi, S., Roy, D., & Aral, S. (2018). The spread of true and false news online. Science, 359(6380), 1146–1151. https://doi.org/10.1126/science.aap9559

- Youn, S., & Kim, S. (2019). Newsfeed native advertising on Facebook: Young millennials’ knowledge, pet peeves, reactance and ad avoidance. International Journal of Advertising, 38(5), 651–683. https://doi.org/10.1080/02650487.2019.1575109

- Youyou, W., Kosinski, M., & Stillwell, D. (2015). Computer-based personality judgments are more accurate than those made by humans. Proceedings of the National Academy of Sciences, 112(4), 1036–1040. https://doi.org/10.1073/pnas.1418680112

- Zarouali, B., Boerman, S. C., & de Vreese, C. H. (2021). Is this recommended by an algorithm? The development and validation of the algorithmic media content awareness scale (AMCA-scale). Telematics and Informatics, 62, 101607. https://doi.org/10.1016/j.tele.2021.101607

- Zhou, X., Xu, Y., Li, Y., Josang, A., & Cox, C. (2012). The state-of-the-art in personalized recommender systems for social networking. Artificial Intelligence Review, 37(2), 119–132. https://doi.org/10.1007/s10462-011-9222-1

- Zuboff, S. (2019). The age of surveillance capitalism: The fight for a human future at the new frontier of power. Profile Books.