ABSTRACT

Introduction

Research indicates that many tools designed for screening dementia are affected by literacy level. The objective of this study was to estimate the overall effects of this confounding factor. A meta-analysis was conducted to evaluate differences in performance in dementia screening tools between literate and illiterate individuals.

Method

Electronic databases were searched from 1975 to June 2021 to identify empirical studies examining performance in dementia screening tools in literate and illiterate individuals over 50 years old. Data for effect sizes, participant demographic information, and study information were extracted.

Results

We identified 27 studies methodologically suitable for meta-analysis. Multi-level random-effects modeling demonstrated a significant overall effect, with literate participants scoring significantly higher than illiterate participants (g = −1.21, 95% CI = [−1.47, −0.95], p < .001). Moderator analyses indicated significant effects of test type and the presence of cognitive impairment on the extent of the difference in performance between literate and illiterate participants. The difference in performance between groups was smaller in screening tests modified for illiterate individuals (p < .01), and in individuals with cognitive impairment (p < .001).

Conclusions

Our findings substantiate the unsuitability of many dementia screening tools for individuals who are illiterate. The results of this systematic review and meta-analysis emphasize the need for the development and validation of tools that are suitable for individuals of all abilities.

Despite advances in the provision of education in recent decades, a significant proportion of the world’s population still lacks basic literacy skills. In 2019, UNESCO reported that approximately 9% (102 million) of young people and 14% (750 million) of adults are illiterate (UIS, 2019). Elderly illiteracy rates are higher still, with 22% (141 million) of adults over 65 unable to read or write (UIS, 2017).

The main reasons for illiteracy can be broadly divided into two categories: reasons pertaining to health or to social circumstances. Health reasons for illiteracy include learning difficulty or disability and neurological conditions. Social reasons include the absence of an education system, social or cultural disapproval of literacy, child labor, and poverty (Ardila et al., 2010).

It should be noted that, although literacy is highly related to schooling, reading and writing skills can be obtained outside of education. It cannot be assumed, therefore, that an individual who did not attend formal education is illiterate (Ardila & Rosselli, 2007). Individuals who are literate in their native language but unable to read or write proficiently in the language of their country of residence may also be regarded as functionally illiterate. In this sense, linguistic and cultural factors contribute to how illiteracy is defined across different contexts (Vagvolgyi et al., 2016).

Dementia and illiteracy

Assessment of dementia in individuals who are illiterate is complex. It has been shown that individuals who are illiterate are significantly more likely to receive a diagnosis of dementia (Nitrini et al., 2009). There are numerous potential explanations for this.

One possible explanation relates to brain development. The acquisition of literacy skills affects the functional and structural development of the brain (Ardila et al., 2010). The cognitive reserve hypothesis proposes that the neural networks in the brains of illiterate individuals may be more susceptible to disruption or may struggle to compensate for cognitive dysfunction (Manly et al., 2003). This theory is debated within the field of cross-cultural neuropsychology, however, as many have argued that it fails to adequately consider potential confounds, such as testing bias (Ostrosky-Solis, 2007).

Traditional tests used to screen for and diagnose dementia were not developed for individuals who are unable to read or write (Ardila & Rosselli, 2007). Screening tests, such as the Mini-Mental State Examination (MMSE; Folstein et al., 1975), assess specific skills that are enhanced by the process of learning to read (Ostrosky-Solis, 2007). For example, learning to read trains remembering strategies, visuospatial perception, logical reasoning, and fine movements. Individuals who are illiterate often possess a different skill set, one that is more procedural, pragmatic and sensory oriented. However, these skills are less likely to be tested in the process of cognitive assessment. Thus, individuals who are illiterate are at a disadvantage when assessed in the cognitive domains that benefit from literacy skills, even when assessment of these domains does not directly involve reading or writing (Kosmidis, 2018).

Furthermore, individuals who are illiterate usually lack familiarity with testing procedures. People who go through an education system are socialized into a value system that places importance on working alone, memorization, and doing your best to succeed without any obvious immediate benefit to your daily functioning (Nell, 2000). These values may not be held by individuals who did not go through such a system. Thus, differences in performance in cognitive tests may not be completely reflective of differences in cognitive ability, but rather of differences in test-taking abilities and familiarity.

Dementia screening tools

The MMSE is one of the most frequently used screening tools for dementia across the globe, both in research and in clinical practice (Nieuwenhuis-Mark, 2010). However, many studies have demonstrated that the MMSE is affected by educational level, language of administration, and culture (e.g., Black et al., 1999; Goudsmit et al., 2018; Nielsen et al., 2012). Studies have attempted to account for some of these confounds by lowering the cutoff score for some populations (Black et al., 1999; Cassimiro et al., 2017). However, evidence suggests that while modifying the cutoff score in the MMSE may increase specificity, it reduces sensitivity (Ostrosky-Solis, 2007).

In light of this evidence, a number of alternative tools have been developed, such as the Literacy Independent Cognitive Assessment (LICA; Choi et al., 2011), the Community Screening Instrument for Dementia (CSI-D; Hall et al., 2000), the Rowland Universal Dementia Assessment Scale (RUDAS; Storey et al., 2004), and the European Cross-Cultural Neuropsychology Test Battery (Nielsen, Segers, et al., 2018b). These tools were designed for use in multicultural and illiterate/low educated populations. They differ from traditional tools largely by having more of a focus on abilities acquired in everyday life (e.g., experience with shopping for groceries) rather than relying on school-dependent skills and abilities (Nielsen, Segers, et al., 2018b).

Rationale and objective

The number of individuals living with dementia across the globe is estimated to increase from 50 million in 2018 to 152 million in 2050, a 204% increase (WHO, 2019). The reasons for this increase are complex and include factors such as increased life expectancy, improvements in the reporting of health data, and an increase in knowledge about dementia (Alzheimer’s Research UK, 2018).

The prevalence of dementia in low-income countries is currently lower than in high-income countries because life expectancy is lower. As life expectancy increases in low-income countries, so too will the prevalence of dementia. The most drastic increase in the number of people living with dementia is therefore estimated to occur in low-income countries (Prince et al., 2015). The lowest literacy rates are also in these countries (UIS, 2019). In addition, increases in migration and globalization across the world have resulted in more diverse populations with wider ranges of native languages and literacy levels in middle- and higher-income countries (Franzen & European Consortium on Cross-Cultural Neuropsychology, 2021; Nielsen et al., 2012). Finding accurate and appropriate methods of diagnosing dementia in individuals who are illiterate is therefore increasingly relevant. In order to inform the development and promotion of such methods, it is important to understand the extent to which the current methods of screening for dementia are affected by illiteracy.

This review aims to identify, evaluate, and summarize the findings of all studies exploring how illiterate individuals perform in dementia screening tools compared to literate individuals. We hypothesize that the mean score of illiterate individuals in dementia screening tools will be lower than that of literate individuals.

We also aim to investigate whether any differences found between literate and illiterate individuals are moderated by whether or not the dementia screening tools were designed or modified for individuals with low levels of literacy or education, and by the cognitive status of the participants. To our knowledge, this is the first meta-analysis on this topic.

Methods

Search criteria

The meta-analysis was carried out using PRISMA guidelines (Liberati et al., 2009). The PRISMA checklist is included in Appendix A. The protocol for the review was registered on PROSPERO, with the following reference number: CRD42020168484 (https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=168484).

A comprehensive search was conducted on 24 June 2021 using OVID databases (MEDLINE, PsycINFO, and EMBASE), Scopus, Web of Science, Cumulative Index to Nursing and Allied Health Literature (CINAHL), and Google Scholar from 1975 (year of publication of the MMSE; Folstein et al., 1975) to June 2021. The search strategy included combinations of the following phrases:

(dement* OR “cognitive impairment” OR “alzheimer*”) AND (screening OR mmse OR “mini mental” OR “moca” or “montreal cognitive assessment” OR “GPCOG” OR “General Practitioner Assessment of Cognition” OR “mini-cog” OR “mini cog” OR “addenbrooke’s cognitive assessment” OR “ace-r” OR “ace-iii”) AND (literate OR illiterate OR literacy OR illiteracy OR “reading abilit*” OR “reading comprehension”).

Eligibility criteria

Studies that met the following criteria were included in the meta-analysis:

Quantitative study

Measured performance of illiterate and literate adults over 50 years old in a dementia screening tool. Although most studies on dementia focus on participants over 65 years, it is recognized that dementia also affects people under the age of 65 (Van der Flier & Scheltens, 2005). Many research studies reflect this in their age criteria (e.g., Goudsmit et al., 2020; Muangpaisan et al., 2015; Nielsen, Segers, et al., 2018a; Zhou et al., 2006). In order to avoid a potential cultural bias toward studies conducted in countries where 65 years old is deemed the cutoff for older age, an inclusive age range was chosen.

Illiterate participants were illiterate for social reasons (e.g., poverty, lack of education, culture group). Only studies that explicitly stated that participants were illiterate were included; participants with no education were not assumed to be illiterate. Studies that included participants who were illiterate as a result of health conditions (e.g., learning disability, motor/sensory/neurological conditions) were excluded.

Published in English in a peer-reviewed journal

Reported sufficient information to allow for the calculation of the effect size and standard error of difference in performance between the two groups (literate vs. illiterate adults) in the screening tool. The information required for eligibility included mean values, standard deviations and/or standard errors of the mean for each group. Where sufficient information was not available, authors were contacted to request the required data.

Study selection

The lead author assessed all non-duplicate titles and abstracts for suitability for inclusion using Rayyan software (https://www.rayyan.ai/). Full-text manuscripts were assessed using Microsoft Excel V16.51 and Endnote X9 and reasons for exclusion were recorded.

Data extraction

Relevant data were extracted from the studies by the lead author using Microsoft Excel. The extracted data included the year, study design, screening tool used, language of administration, study country, sample size, age range, gender breakdown, cognitive impairment diagnosis, years of education, primary findings, statistical analysis used, and the mean and standard deviation of performance in the cognitive screening tool in literate and illiterate participants.

Quality assessment

The lead author assessed the quality of all included studies, and a random 50% of the papers were assessed by an independent researcher to ensure reliability (kappa = .83, indicating near-perfect agreement). Disagreements were resolved by consensus. Study quality was assessed using the NIH Quality Assessment of Case-Control Studies tool (National Institute of Health, 2014). The NIH Quality Assessment of Case-Control Studies tool does not include specific rules for calculating the quality of the studies. As a general guideline, studies that met 8 or more of the criteria were graded as good, those that met 6 or 7 of the criteria were graded as fair, and those that met 5 or fewer of the criteria were graded as poor.

Of the 12 questions in the tool, two questions were excluded, as they were not relevant to the studies in this review. The question regarding concurrent controls was excluded because ‘cases’ in this review referred to illiterate individuals. Illiterate participants did not become illiterate at a specific moment in time, therefore making it impossible to select concurrent control participants. The question regarding confirming exposure prior to the development of the condition that defined a participant as a case was also excluded for the same reason. The condition that defined a participant as a ‘case’ was illiteracy, which did not develop over time.

Studies with a quality rating of ‘poor’ due to unclear selection of participants or ambiguous differentiation of literate and illiterate participants were excluded from the meta-analysis. As the methods used to delineate the number of participants in each group were questionable in these studies, they were excluded from the quantitative analysis to reduce the risk of bias across the studies.
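The grading guideline and exclusion rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' procedure: the function names, the study labels, and the default of 10 applicable questions (12 in the tool minus the 2 excluded) are assumptions introduced for the example.

```python
def grade_study(criteria_met: int, applicable: int = 10) -> str:
    """Map a count of satisfied criteria to a quality grade.

    `applicable` defaults to 10 (an assumption: the tool's 12 questions
    minus the 2 excluded as not relevant to this review).
    """
    if not 0 <= criteria_met <= applicable:
        raise ValueError("criteria_met must lie between 0 and applicable")
    if criteria_met >= 8:
        return "good"
    if criteria_met >= 6:
        return "fair"
    return "poor"


def eligible_for_meta_analysis(scores: dict[str, int]) -> list[str]:
    """Keep only studies graded 'fair' or 'good'."""
    return [name for name, met in scores.items() if grade_study(met) != "poor"]


# Hypothetical study labels and scores, for illustration only.
example_scores = {"Study A": 9, "Study B": 5, "Study C": 6}
print(eligible_for_meta_analysis(example_scores))
```

Under these assumed scores, only the study that met 5 criteria is dropped from the quantitative synthesis.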

Statistical analyses

Analyses and plots were carried out in RStudio (V1.3.959; RStudio Team, 2020), using R packages ‘meta’ (Balduzzi et al., 2019), ‘metafor’ (Viechtbauer, 2010), and ‘ggplot2’ (Wickham, 2016). The effect sizes of interest were those obtained from comparisons of independent groups (literate vs. illiterate) in terms of cognitive screening test scores. Studies that reported mean scores and standard deviations and/or standard errors of the mean were included in the meta-analysis, and standard errors of the mean were converted to standard deviations prior to calculation of effect size. Effect sizes were calculated as Hedges’ g. Hedges’ g is similar to the more widely used Cohen’s d in that both measures examine the magnitude of mean differences between groups. However, Hedges’ g includes a correction factor for sample size, making it a more suitable measure for comparisons of groups with significantly different sample sizes (Gaeta & Brydges, 2020).
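The effect-size calculation described above can be illustrated with a short Python sketch (the analyses themselves were run in R). It uses the standard formulas for converting a standard error of the mean to a standard deviation and for Hedges’ g with its approximate small-sample correction. The function names and example numbers are hypothetical, and the group ordering (illiterate minus literate, which yields a negative g when literate participants score higher) is an assumption chosen to match the sign of the reported results.

```python
import math


def sem_to_sd(sem: float, n: int) -> float:
    """Convert a standard error of the mean to a standard deviation."""
    return sem * math.sqrt(n)


def hedges_g(mean1, sd1, n1, mean2, sd2, n2):
    """Return (g, standard error of g) for two independent groups."""
    df = n1 + n2 - 2
    # Pooled standard deviation across the two groups.
    pooled_sd = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    d = (mean1 - mean2) / pooled_sd           # Cohen's d
    j = 1 - 3 / (4 * df - 1)                  # small-sample correction factor
    var_d = (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))
    return j * d, j * math.sqrt(var_d)


# Hypothetical screening scores: group 1 = illiterate, group 2 = literate.
sd_illiterate = sem_to_sd(0.5, 64)            # SEM of 0.5 with n = 64 gives SD = 4.0
g, se = hedges_g(20.0, sd_illiterate, 64, 25.0, 4.0, 64)
print(round(g, 3), round(se, 3))
```

The correction factor approaches 1 as the combined sample grows, so g and d differ noticeably only in small studies.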

A multi-level random-effects model was used to weight studies and calculate a summary effect size. A random-effects model was chosen to take into consideration the fact that differences in sample size may create variations in effect sizes across studies. A multi-level model was used to account for dependence within the data. Meta-analytic pooling assumes statistical independence. If there is dependency between effect sizes, this may result in false-positive results. Where authors assess more than one screening tool within the same study, the effect sizes calculated for each tool are not independent (Harrer et al., 2019). Thus, these effect sizes cannot be added to a meta-analytic model without accounting for their dependency. A multi-level model takes these dependencies into account by adding another layer into the structure of the meta-analytic model. This allows the model to account for the fact that some effect sizes are nested within one study. In the present analysis, a three-level model was implemented to model sampling variation for each effect size (Level 1), variation within each study (Level 2), and variation between studies (Level 3; Cheung, 2014; Harrer et al., 2019).
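For readers who prefer notation, the three-level structure described above is commonly written as follows. This is the generic textbook form of a three-level meta-analytic model; the symbols are introduced here for illustration and are not taken from the original analysis.

```latex
\hat{\theta}_{ij} = \mu + \zeta_{(2)ij} + \zeta_{(3)j} + \epsilon_{ij},
\qquad
\epsilon_{ij} \sim N(0, v_{ij}), \quad
\zeta_{(2)ij} \sim N\!\left(0, \tau^{2}_{(2)}\right), \quad
\zeta_{(3)j} \sim N\!\left(0, \tau^{2}_{(3)}\right)
```

Here $\hat{\theta}_{ij}$ is the $i$-th observed effect size (Hedges’ g) in study $j$, $\mu$ is the overall summary effect, $\epsilon_{ij}$ is the sampling error with known variance $v_{ij}$ (Level 1), $\zeta_{(2)ij}$ is the deviation of an effect size within its study (Level 2), and $\zeta_{(3)j}$ is the deviation of study $j$ from the overall effect (Level 3).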

Summary effects were calculated using the restricted maximum likelihood (REML) method. Standard errors and 95% confidence intervals were calculated for each effect size. P-values were calculated to test the null hypotheses, and Q and I² statistics were used to assess heterogeneity and observed variance, respectively. Publication bias and outlier biases were analyzed using Failsafe N (Rosenthal, 1978) and a funnel plot, and asymmetry was tested statistically using Egger’s regression test (Egger et al., 1997). To maintain independence, average study effect sizes were used in the funnel plot and in Egger’s regression test.
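As a rough illustration of the heterogeneity statistics mentioned above, the following Python sketch computes Cochran’s Q and the classical I² from a set of effect sizes and their sampling variances. It is a simplified single-level version using inverse-variance weights, so it is not expected to reproduce the values from the three-level REML model reported in this review. The function name and input data are hypothetical.

```python
def heterogeneity(effects, variances):
    """Return (Q, degrees of freedom, I-squared in percent).

    Uses fixed-effect inverse-variance weights, the classical
    definition of Cochran's Q and I-squared.
    """
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I-squared is the share of total variation beyond chance, floored at 0.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical Hedges' g values with equal sampling variances.
q, df, i2 = heterogeneity([-1.0, -1.5, -0.5], [0.04, 0.04, 0.04])
print(round(q, 2), df, round(i2, 1))
```

In a multi-level model, the total I² is usually split into within-study and between-study components rather than reported as a single classical value.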

The main analyses examined the overall difference in performance in cognitive screening tests between literate and illiterate groups. A sensitivity analysis was carried out to assess whether the results differed across studies with different primary aims. To assess this, studies were divided into one of three groups depending on the objectives of the study. The first group included studies that focused on examining the difference in performance between literate and illiterate participants as their primary research question. The second group included studies that focused on this comparison as a secondary research question. The third group included studies that provided data on cognitive test scores for literate and illiterate participants but did not explicitly compare groups.

Moderator analyses were carried out to assess whether the type of cognitive screening test or cognitive status affected the results of the main analyses. Cognitive screening tests were categorized by whether or not they were designed or modified for individuals with low levels of literacy or education. To assess whether cognitive status impacted on the results, participants were categorized as healthy, as having mild cognitive impairment, or as having dementia.

Results

Results of systematic search

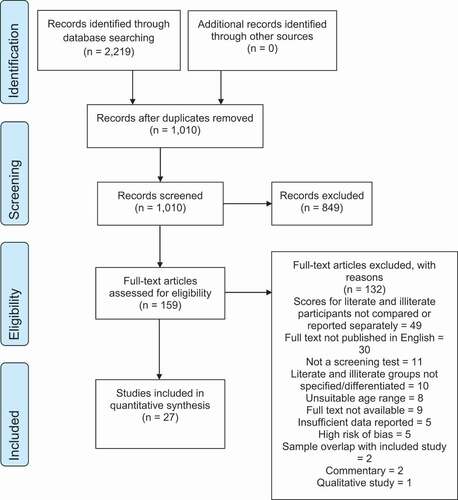

The results of the systematic search are summarized in Figure 1. The search yielded 2,219 studies. After removing duplicates, the titles and abstracts of the remaining 1,010 studies were screened and 849 records were excluded. A total of 159 manuscripts were retrieved and the full texts were assessed for eligibility. Of these records, 132 were excluded, with reasons for exclusion recorded. Twenty-seven studies were identified as meeting the inclusion criteria and were therefore included in the meta-analysis.

Study characteristics

The main characteristics of the included studies are summarized in Table 1. The studies collectively comprised 38,057 participants, with study sample sizes ranging from 34 to 16,488. Of these participants, 13,117 individuals were identified as illiterate, and 24,940 individuals were classified as literate. The gender breakdown of the collective sample was 59% female (n = 22,689) and 40% male (n = 14,607). Four studies, with 761 total participants, did not report the participants’ gender.

Table 1. Characteristics of included studies

Twelve studies either focused on healthy older adults only or did not report the cognitive status of the participants. Fifteen studies included both healthy and cognitively impaired older adults. In these 15 studies, 22,519 individuals were identified as healthy, 604 were classified as having MCI or possible dementia, and 1,176 were reported to have dementia or probable dementia. Five of these studies did not report screening scores separately according to diagnosis and consequently were not included in the moderator analysis.

The majority of the studies (25 of 27 studies) included the MMSE or an adapted version of the MMSE as a cognitive screening tool. Seven studies included a cognitive screening tool that was adapted for use in illiterate/low educated participants. The studies were carried out in 11 different countries and included cognitive screening tools administered in 10 different languages. The most common language was Portuguese (10 studies), followed by Korean (5 studies). Only two studies administered screening tools in English and both of these studies were carried out in the USA.

In 12 of the studies, examining the difference in performance between literate and illiterate participants was the primary research question. In nine studies, this was a secondary research question, with six validation studies, four prevalence studies, two studies designed to obtain norms or cutoff scores, and one assessing factors that contribute to cognitive impairment. Six studies collected and reported data for literate and illiterate participants but did not carry out a statistical comparison between the two groups.

Study quality

Quality assessment ratings for all included studies are outlined in Table 2. Seven studies were rated as good, 20 as fair, and 5 as poor. All but two studies (Lin et al., 2002; Subramanian et al., 2021) clearly defined the aims of the study, and all studies clearly defined the study population. Only one study provided a justification for the sample size (Elbedewy & Elokl, 2020). No study explicitly outlined whether the researchers were blinded to the participants’ literacy status. However, it is likely that maintaining blinding during the process of cognitive assessment would have been difficult. Confounders were adjusted for in the analyses of 17 studies.

Table 2. Quality assessment ratings based on the NIH Quality Assessment of Case-Control Studies tool

Meta-analysis

Study selection and characteristics

Seven studies did not include sufficient data for inclusion in the meta-analysis. The authors of six of these studies were contacted. The authors of one study (Devraj et al., 2014) could not be contacted because there were no author contact details specified in the paper. Two authors replied and provided the necessary data for the meta-analysis (Cassimiro et al., 2017; Goudsmit et al., 2020). Five studies were given a quality rating of ‘poor’ and were therefore excluded from the meta-analysis (Devraj et al., 2014; Gambhir et al., 2014; Hong et al., 2011; Julayanont et al., 2015; Umakalyani & Senthilkumar, 2018). The final number of studies included in the meta-analysis was 27.

Cognitive screening scores in literate vs. illiterate older adults

Effect sizes were calculated for the differences in performance in cognitive screening tests between the literate groups and illiterate groups. A significant overall effect was found, with literate participants scoring significantly higher than illiterate participants (g = −1.21, 95% CI = [−1.47, −0.95], p < .001; Table 3; Figure 2). However, significant heterogeneity was found (Q = 591.37, p < .001, I² = 98.05%). There were no distinct outliers, so all studies were included. Egger’s test showed no statistically significant asymmetry in the funnel plot (p = 0.52). A calculation of Failsafe N indicated that 48,822 undetected negative studies would be needed to change the outcome, suggesting low publication bias.
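To show how a fail-safe number of this kind is obtained, the sketch below implements Rosenthal’s fail-safe N in Python: the number of additional null-result studies needed to pull the combined result above the significance threshold. The z-scores are hypothetical and the function name is an assumption; the sketch is not intended to reproduce the figure of 48,822 reported above.

```python
import math
from statistics import NormalDist


def failsafe_n(z_scores, alpha: float = 0.05) -> int:
    """Rosenthal's fail-safe N for a list of per-study z-scores.

    N = (sum of z)^2 / z_crit^2 - k, where z_crit is the one-tailed
    critical value for `alpha` and k is the number of studies.
    """
    k = len(z_scores)
    z_crit = NormalDist().inv_cdf(1 - alpha)   # about 1.645 for alpha = .05
    n = (sum(z_scores) ** 2) / (z_crit ** 2) - k
    return max(0, math.ceil(n))


# Hypothetical per-study z-scores (effect size divided by its standard error).
print(failsafe_n([3.0, 4.0, 5.0]))
```

Because the sum of the z-scores is squared, the result is the same whether effects are coded as positive or negative, provided they all point in the same direction.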

Table 3. Results from the multi-level random-effects model

Figure 2. Forest plot of multi-level random effects model for studies comparing performance in dementia screening tests in literate and illiterate individuals. Abbreviations: ACE-R: Addenbrooke’s Cognitive Examination-Revised; CAMCOG: Cambridge Cognitive Examination; CAMSE: Chinese adapted Mini-Mental State Examination; CDT: Clock Drawing Test; CMMS: Chinese Mini-Mental Status; K-MMSE: Korean Mini-Mental State Examination; M-MMSE: Modified Mini-Mental State Examination; mCMMSE: Modified Chinese Mini-Mental State Examination; MMSE-37: 37-point version of Mini-Mental State Examination; MMSE-ad: Mini-Mental State Examination adapted; MMSE-mo: Mini-Mental State Examination modified; MMSE: Mini-Mental State Examination; RUDAS: Rowland Universal Dementia Assessment Scale; TMSE: Thai Mini-Mental State Examination

Subgroup analyses

A sensitivity analysis showed no significant effect of study objectives on the difference in performance between literate and illiterate participants (p = 0.88). Literate participants scored significantly higher than illiterate participants in studies that primarily set out to compare these groups, in studies that compared the groups as a secondary analysis, and in studies that did not explicitly compare the groups at all (Table 4).

Table 4. Random effect models sub-grouped according to how studies examined differences between literate and illiterate participants

A moderator analysis showed a significant effect of test type on the difference in performance between literate and illiterate participants (p = .004). Where screening tests were designed or modified for illiterate individuals or individuals with low education, the difference in performance between the two groups was smaller, demonstrated by a lower Hedges’ g. However, literate participants still obtained significantly higher scores than illiterate participants in adapted screening tests (g = −0.81, 95% CI = [−1.32, −0.29], p = .008; Table 5).

Table 5. Random effect models sub-grouped according to test type

In order to examine the effect of cognitive impairment, a further analysis was carried out using only the 10 studies that included participants both with and without cognitive impairment or dementia. In this analysis, too, a significant overall effect was found, with literate participants scoring higher than illiterate participants (g = −1.58, 95% CI = [−2.1, −1.07], p < .001). Again, significant heterogeneity was found (Q = 293.57, p < .001, I² = 92.9%). A moderator analysis showed a significant effect of cognitive impairment on the difference in performance between literate and illiterate participants (p < .001). The difference in performance between groups was greater in healthy participants than in participants with cognitive impairment and dementia. However, there was still a significant difference between literate and illiterate groups in participants with cognitive impairment/dementia (Table 6).

Table 6. Random effect models sub-grouped according to cognitive status

Discussion

Main findings

The results of this systematic review and meta-analysis indicated that illiterate groups scored significantly lower than literate groups in dementia screening tools. The analyses demonstrated that there was less disparity between literate and illiterate populations in tests that are designed or adapted for use with individuals who are illiterate or have low education levels, including modified versions of the MMSE as well as the RUDAS (Goudsmit et al., 2020; Nielsen, 2018) and LICA (Shim et al., 2015). However, in many of these tests, literate participants continued to outperform illiterate participants. The analysis also indicated that the difference between literate and illiterate participants in performance in dementia screening tools was greater when these participants did not have a cognitive impairment.

Interpretation and analysis

Our findings are in line with literature on the topic of illiteracy and cognitive assessment, which suggests that illiterate individuals are at a significant disadvantage when assessed using formal assessment methods (Ardila et al., 2010). Dementia screening tools assess many cognitive domains that rely on skills that are directly or indirectly related to literacy, such as visuospatial function, logical reasoning, and fine motor skills (Kosmidis, 2018). It follows that individuals who never learned to read or write tend to perform more poorly in these domains than those who did.

The most common dementia screening test studied in this review was the MMSE, with 25 of the 27 studies including either the original version or an adapted version of the MMSE. The persistence and predominance of the MMSE within research, despite widespread acknowledgment of its many limitations (Nieuwenhuis-Mark, 2010), represents a significant obstacle in the pursuit of appropriate methods for screening for dementia. This meta-analysis adds further weight to the proposal that the MMSE is unsuitable for use in many populations, and future studies should move away from relying on the MMSE as a screen for cognitive impairment.

The results indicate that, when tests are designed or adapted for use in illiterate populations or populations with low education, there is less disparity in performance between literate and illiterate groups. This analysis should be interpreted with a degree of caution, however, as the group of adapted screening tools consisted of a range of different tools. These tools are relatively new compared to the MMSE and require further assessment to determine their validity and utility across different population groups. One tool that stood out as potentially promising was the RUDAS, with both Goudsmit et al. (2020) and Nielsen (2018) demonstrating that literate and illiterate participants performed similarly in this tool. The RUDAS was developed for use in culturally and linguistically diverse populations. It has been validated in 16 countries, in at least 16 languages (Komalasari et al., 2019).

It should be noted that only two studies included in this review administered cognitive assessments in English, both of which were conducted in the USA (Black et al., 1999; Hamrick et al., 2013). There were no studies conducted in the UK. The dearth of studies on this topic in English-speaking, higher-income countries is likely related to the higher literacy rate of these countries (UIS, 2019). However, the demographic landscape of many countries, including the UK, is changing, and becoming increasingly diverse (Franzen & European Consortium on Cross-Cultural Neuropsychology, 2021; Office for National Statistics, 2018). It is therefore important that even countries with high literacy rates have the resources to provide services that are culturally and educationally competent.

The results also demonstrated that, where individuals were cognitively impaired at the point of administration of the cognitive screen, there was less disparity between literate and illiterate groups. It is possible that, as cognition deteriorates, literate and illiterate groups may become more similar in terms of their cognitive functioning. It is plausible, for example, that literate individuals with dementia may become more impaired in domains related to literacy (e.g., visuospatial function) as their cognition deteriorates (Kim & Chey, 2010). The purpose of screening tools, however, is to provide an initial indication of cognitive changes. It is therefore important that such tools are sensitive to subtle changes. Disparity between literate and illiterate healthy individuals is therefore problematic, as screening tools are most valuable at the point where it is unclear whether an individual is healthy or is beginning to show some cognitive changes (Xu et al., 2003).

Limitations

There are several limitations to this systematic review and meta-analysis. The quality of the studies included in the review is mixed, with only seven of the included studies rated as “good.” The main methodological issues with the studies included lack of sample size justifications and unclear criteria for differentiating between literate and illiterate participants. We attempted to minimize quality issues by only including studies with a rating of “fair” or “good” in the meta-analysis. The quality assessment ratings may also be subject to individual bias. Steps were taken to minimize bias, such as the addition of a second rater.

There are a number of potential sources of heterogeneity in the meta-analysis, as reflected by high I² values. While heterogeneity is to be expected in a meta-analysis, it is important to explore potential sources of variance (Higgins, Citation2008).
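As a rough illustration of how an I² value is obtained, the following sketch computes Cochran's Q and I² for a simple single-level, inverse-variance model. It is not the multi-level model used in this review, and the function name and effect sizes are hypothetical values chosen only for illustration.

```python
from math import fsum


def heterogeneity(effects, variances):
    """Return Cochran's Q, its degrees of freedom, and I^2 as a percentage.

    Simplified single-level sketch; assumes independent effect sizes.
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two effect sizes with matching variances")
    # Inverse-variance weights: more precise studies count for more.
    weights = [1.0 / v for v in variances]
    # Weighted (fixed-effect) pooled estimate used as the reference point for Q.
    pooled = fsum(w * y for w, y in zip(weights, effects)) / fsum(weights)
    # Cochran's Q: weighted sum of squared deviations from the pooled estimate.
    q = fsum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical Hedges' g values and sampling variances (not data from this review).
g_values = [-0.4, -1.0, -1.6, -2.2]
g_variances = [0.04, 0.04, 0.04, 0.04]

q, df, i2 = heterogeneity(g_values, g_variances)
print(f"Q = {q:.2f}, df = {df}, I^2 = {i2:.1f}%")
```

With these illustrative inputs, most of the observed variation exceeds what sampling error alone would produce, which is the pattern that a high I² value summarizes.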

Firstly, there was heterogeneity across the study objectives and designs. Whereas some studies were designed specifically to answer the question of whether literate and illiterate groups differed in terms of performance in a screening tool, this was not the sole focus of every study. A sensitivity analysis was carried out to explore this potential source of heterogeneity. This analysis indicated that the results were not affected by the discrepancies across the studies in terms of their primary objectives, and high I² values remained when random-effects models were fitted by subgroup.

There was also heterogeneity across study populations. Some studies included all healthy participants, whereas others included both healthy participants and participants with MCI and/or dementia. Participants with a wide range of cognitive abilities were therefore included in the main analysis of the meta-analysis, potentially limiting the generalizability of the findings. A moderator analysis was carried out to explore this potential limitation further and indicated that literate and illiterate participants still differed significantly when healthy participants and cognitively impaired participants were analyzed separately.

Finally, there was heterogeneity across the screening tools. For the purpose of this systematic review and meta-analysis, all screening tools were grouped together. Although the MMSE was the most common tool examined across the studies, there existed significant variation in the languages and versions of the MMSE used. The analyses therefore included a variety of different tools administered across a range of different countries and languages. Combining effect sizes for individual studies with different outcome measures may also limit the findings.

Implications and future directions

The results of this systematic review and meta-analysis support the hypothesis that many of the tools used for screening dementia are unsuitable for use in individuals who are illiterate. These findings have significant clinical implications, as they suggest that many of the widely used cognitive screening tools are not fit for purpose for many individuals. Many countries across the world are becoming increasingly multicultural and, as a result, clinicians in higher-income countries, such as the UK, are encountering more individuals from lower-income countries with high illiteracy rates (Nielsen et al., Citation2011). Health services have a duty to provide culturally competent and person-centered care. It is therefore imperative that clinicians are aware of the considerations that should be given when assessing dementia in illiterate individuals. Definitive guidelines around assessing illiterate individuals cannot yet be recommended based on the current research. However, some general recommendations include selecting tools that were designed specifically for use in multicultural and/or illiterate populations, and emphasizing information about changes relative to past functioning and the reasons for the individual's illiteracy. This information should be gathered from interviews with individuals and, where possible, with informants who have known the individual in question for a significant length of time (Kosmidis, Citation2018; Nell, Citation2000).

This review also highlights the substantial heterogeneity in research on dementia screening tools, making it difficult to compare results across studies. This is widely recognized as a problem within the field of dementia research (Costa et al., Citation2017). Recent calls for consensus in the use of assessment tools for dementia highlight the importance of a harmonized approach (Costa et al., Citation2017; Logie et al., Citation2015; Paulino Ramirez Diaz et al., Citation2005). One important aspect of harmonization involves ensuring that tools selected and developed for widespread use are suitable for use across many different populations. Such tools should be cross-culturally valid and should not be affected by education or literacy level (Costa et al., Citation2017; Logie et al., Citation2015). Tools that minimize the effect of education, literacy, and culture have been developed (Choi et al., Citation2011; Hall et al., Citation2000; Nielsen et al., 2018; Storey et al., Citation2004). However, the literature review and data analysis in the present study indicate that more research is required to validate these tools and determine their suitability across a wide range of settings. Such research should focus on determining the sensitivity and specificity of the tools in terms of differentiating between healthy aging, mild cognitive impairment and various types of dementia, the effect of translation, and the impact of confounding variables.

Although the results of this meta-analysis support the hypothesis that many tools are unsuitable for use in individuals who are illiterate, it should be acknowledged that examining mean differences alone does not fully test this hypothesis. To substantiate the hypothesis further, studies are needed that investigate whether the relation between test scores and external factors, such as level of functioning, differs between literate and illiterate individuals. Furthermore, it would be helpful to explore whether the factor structure of cognitive screening tools varies between literate and illiterate groups. For example, using multi-group confirmatory factor analysis would allow researchers to examine whether cognitive tools lack invariance when used with illiterate individuals. Lack of invariance demonstrates that a tool such as a cognitive test triggers systematically different responding in different groups (Brown et al., Citation2015). This is a method used in cross-cultural research to investigate the cross-cultural validity of certain research tools (Brown et al., Citation2015; Losada et al., Citation2012). Unfortunately, none of the studies included in this meta-analysis addressed this question. Further research investigating whether cognitive screening tools lack invariance in illiterate groups would help to bolster the results of this study.

Conclusions

This meta-analysis collated existing data which support the view that many dementia screening tools are unsuitable for individuals who are illiterate. This finding emphasizes the need for the development and use of tools that are suitable for all individuals, regardless of their literacy ability, education or cultural background. The development of screening tools that are unaffected by literacy level is complicated by the fact that many cognitive domains implicated in dementia are influenced by literacy skills. Furthermore, individuals who are illiterate are less likely to be familiar with test-taking procedures, which may impact their performance in formal cognitive tests. Despite these confounding factors, tools that minimize the effect of education, literacy, and culture have been developed. Further research is required to validate such tools and determine their suitability across a wide range of settings. In the meantime, clinicians assessing dementia in individuals with low levels of literacy should consider using such tools where appropriate and should place particular emphasis on information gathering to inform diagnostic decision-making.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Alzheimer’s Research UK. (2018). Global prevalence. Retrieved February 8, 2020, from https://www.dementiastatistics.org/statistics/global-prevalence/

- Ardila, A., Bertolucci, P. H., Braga, L. W., Castro-Caldas, A., Judd, T., Kosmidis, M. H., Matute, E., Nitrini, R., Ostrosky-Solis, F., & Rosselli, M. (2010, December). Illiteracy: The neuropsychology of cognition without reading. Archives of Clinical Neuropsychology, 25(8), 689–712. https://doi.org/10.1093/arclin/acq079

- Ardila, A., & Rosselli, M. (2007). Illiterates and cognition: The impact of education. In B. P. Uzzell, M. O. Pontón, & A. Ardila (Eds.), International handbook of cross-cultural neuropsychology (pp. 181–198). Lawrence Erlbaum Associates.

- Balduino, E., de Melo, B. A. R., de Sousa Mota da Silva, L., Martinelli, J. E., & Cecato, J. F. (2020). The “SuperAgers” construct in clinical practice: Neuropsychological assessment of illiterate and educated elderly. International Psychogeriatrics, 32(2), 191–198. https://doi.org/10.1017/S1041610219001364

- Balduzzi, S., Rücker, G., & Schwarzer, G. (2019). How to perform a meta-analysis with R: A practical tutorial. Evidence-Based Mental Health, 22(4), 153–160. https://doi.org/10.1136/ebmental-2019-300117

- Black, S. A., Espino, D. V., Mahurin, R., Lichtenstein, M. J., Hazuda, H. P., Fabrizio, D., Ray, L. A., & Markides, K. S. (1999). The influence of noncognitive factors on the mini-mental state examination in older Mexican-Americans. Journal of Clinical Epidemiology, 52(11), 1095–1102. https://doi.org/10.1016/S0895-4356(99)00100-6

- Brito-Marques, P. R., & Cabral-Filho, J. E. (2004). The role of education in mini-mental state examination: A study in Northeast Brazil. Arquivos de Neuro-Psiquiatria, 62(2A), 206–211. https://doi.org/10.1016/j.jns.2013.07.1276

- Brown, G. T. L., Harris, L. R., O’Quin, C., & Lane, K. E. (2015). Using multi-group confirmatory factor analysis to evaluate cross-cultural research: Identifying and understanding non-invariance. International Journal of Research & Method in Education, 40(1), 66–90. https://doi.org/10.1080/1743727x.2015.1070823

- Caramelli, P., Carthery-Goulart, M. T., Porto, C. S., Charchat-Fichman, H., & Nitrini, R. (2007). Category fluency as a screening test for Alzheimer disease in illiterate and literate patients. Alzheimer Disease and Associated Disorders, 21(1), 65–67. https://doi.org/10.1097/WAD.0b013e31802f244f

- Cassimiro, L., Fuentes, D., Nitrini, R., & Yassuda, M. S. (2017). Decision-making in Cognitively Unimpaired Illiterate and Low-educated Older Women: Results on the Iowa Gambling Task. Archives of Clinical Neuropsychology: The Official Journal of the National Academy of Neuropsychologists, 32(1), 71–80. https://doi.org/10.1093/arclin/acw080

- Cesar, K. G., Yassuda, M. S., Porto, F. H. G., Brucki, S. M. D., & Nitrini, R. (2017). Addenbrooke's cognitive examination-revised: Normative and accuracy data for seniors with heterogeneous educational level in Brazil. International Psychogeriatrics, 29(8), 1345–1353. https://doi.org/10.1017/S1041610217000734

- Cheung, M. W. (2014, June). Modeling dependent effect sizes with three-level meta-analyses: A structural equation modeling approach. Psychological Methods, 19(2), 211–229. https://doi.org/10.1037/a0032968

- Choi, S. H., Shim, Y. S., Ryu, S. H., Ryu, H. J., Lee, D. W., Lee, J. Y., Jeong, J. H., & Han, S. H. (2011). Validation of the literacy independent cognitive assessment. International Psychogeriatrics, 23(4), 593–601. https://doi.org/10.1017/S1041610210001626

- Contador, I., Del Ser, T., Llamas, S., Villarejo, A., Benito-Leon, J., & Bermejo-Pareja, F. (2017). Impact of literacy and years of education on the diagnosis of dementia: A population-based study. Journal of Clinical and Experimental Neuropsychology, 39(2), 112–119. https://doi.org/10.1080/13803395.2016.1204992

- Costa, A., Bak, T., Caffarra, P., Caltagirone, C., Ceccaldi, M., Collette, F., Crutch, S., Della Sala, S., Demonet, J. F., Dubois, B., Duzel, E., Nestor, P., Papageorgiou, S. G., Salmon, E., Sikkes, S., Tiraboschi, P., van der Flier, W. M., Visser, P. J., & Cappa, S. F. (2017, April). The need for harmonisation and innovation of neuropsychological assessment in neurodegenerative dementias in Europe: Consensus document of the Joint Program for Neurodegenerative Diseases Working Group. Alzheimer’s Research & Therapy, 9(1), 27. https://doi.org/10.1186/s13195-017-0254-x

- Devraj, R., Singh, V. B., Ola, V., Meena, B. L., Tundwal, V., & Singh, K. (2014). Cognitive function in elderly population - An urban community based study in north-west Rajasthan. Journal, Indian Academy of Clinical Medicine, 15(2), 87–90.

- Egger, M., Davey Smith, G., Schneider, M., & Minder, C. (1997, September). Bias in meta-analysis detected by a simple, graphical test. BMJ, 315(7109), 629–634. https://doi.org/10.1136/bmj.315.7109.629

- Elbedewy, R. M. S., & Elokl, M. (2020). Can a combination of two neuropsychological tests screen for mild neurocognitive disorder better than each test alone? A cross-sectional study. Middle East Current Psychiatry, 27(1), 42. https://doi.org/10.1186/s43045-020-00048-7

- Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). Mini-mental state. Journal of Psychiatric Research, 12(3), 189–198. https://doi.org/10.1016/0022-3956(75)90026-6

- Franzen, S., & European Consortium on Cross-Cultural Neuropsychology. (2021). Cross-cultural neuropsychological assessment in Europe: Position statement of the European Consortium on Cross-Cultural Neuropsychology (ECCroN). The Clinical Neuropsychologist, 1–12. https://doi.org/10.1080/13854046.2021.1981456

- Gaeta, L., & Brydges, C. R. (2020, May). An examination of effect sizes and statistical power in speech, language, and hearing research. Journal of Speech, Language, and Hearing Research, 63(5), 1572–1580. https://doi.org/10.1044/2020_JSLHR-19-00299

- Gambhir, I. S., Khurana, V., Kishore, D., Sinha, A. K., & Mohapatra, S. C. (2014). A clinico-epidemiological study of cognitive function status of community-dwelling elderly. Indian Journal of Psychiatry, 56(4), 365–370. https://doi.org/10.4103/0019-5545.146531

- Goudsmit, M., van Campen, J., Franzen, S., van den Berg, E., Schilt, T., & Schmand, B. (2020). Dementia detection with a combination of informant-based and performance-based measures in low-educated and illiterate elderly migrants. The Clinical Neuropsychologist, 35(3), 1–19. https://doi.org/10.1080/13854046.2020.1711967

- Goudsmit, M., van Campen, J., Schilt, T., Hinnen, C., Franzen, S., & Schmand, B. (2018, May-August). One size does not fit all: Comparative diagnostic accuracy of the Rowland Universal Dementia Assessment Scale and the Mini Mental State Examination in a memory clinic population with very low education. Dementia and Geriatric Cognitive Disorders Extra, 8(2), 290–305. https://doi.org/10.1159/000490174

- Hall, K. S., Gao, S., Emsley, C. L., Ogunniyi, A. O., Morgan, O., & Hendrie, H. C. (2000). Community screening interview for dementia (CSI ‘D’); Performance in five disparate study sites. International Journal of Geriatric Psychiatry, 15(6), 521–531. https://doi.org/10.1002/1099-1166%28200006%2915:6%3C521::AID-GPS182%3E3.0.CO;2-F

- Hamrick, I., Hafiz, R., & Cummings, D. M. (2013). Use of days of the week in a modified mini-mental state exam (M-MMSE) for detecting geriatric cognitive impairment. Journal of the American Board of Family Medicine, 26(4), 429–435. https://doi.org/10.3122/jabfm.2013.04.120300

- Harrer, M., Cuijpers, P., Furukawa, T. A., & Ebert, D. D. (2019). Doing meta-analysis in R: A hands-on guide. https://bookdown.org/MathiasHarrer/Doing_Meta_Analysis_in_R/

- Higgins, J. P. (2008, October). Commentary: Heterogeneity in meta-analysis should be expected and appropriately quantified. International Journal of Epidemiology, 37(5), 1158–1160. https://doi.org/10.1093/ije/dyn204

- Hong, Y. J., Yoon, B., Shim, Y. S., Cho, A. H., Lee, E. S., Kim, Y. I., & Yang, D. W. (2011). Effect of literacy and education on the visuoconstructional ability of non-demented elderly individuals. Journal of the International Neuropsychological Society, 17(5), 934–939. https://doi.org/10.1017/S1355617711000889

- Julayanont, P., Tangwongchai, S., Hemrungrojn, S., Tunvirachaisakul, C., Phanthumchinda, K., Hongsawat, J., Suwichanarakul, P., Thanasirorat, S., & Nasreddine, Z. S. (2015). The Montreal Cognitive Assessment-Basic: A screening tool for mild cognitive impairment in illiterate and low-educated elderly adults. Journal of the American Geriatrics Society, 63(12), 2550–2554. https://doi.org/10.1111/jgs.13820

- Katzman, R., Zhang, M., Qu, O. Y., Wang, Z., Liu, W. T., Yu, E., Wong, S. C., Salmon, D. P., & Grant, I. (1988). A Chinese version of the Mini-Mental State Examination; impact of illiteracy in a Shanghai dementia survey. Journal of Clinical Epidemiology, 41(10), 971–978. https://doi.org/10.1016/0895-4356%2888%2990034-0

- Kim, H., & Chey, J. (2010). Effects of education, literacy, and dementia on the clock drawing test performance. Journal of the International Neuropsychological Society, 16(6), 1138–1146. https://doi.org/10.1017/S1355617710000731

- Kim, J., Yoon, J. H., Kim, S. R., & Kim, H. (2014). Effect of literacy level on cognitive and language tests in Korean illiterate older adults. Geriatrics & Gerontology International, 14(4), 911–917. https://doi.org/10.1111/ggi.12195

- Kochhann, R., Varela, J. S., de Macedo Lisboa, C. S., & Chaves, M. L. F. (2010). The Mini Mental State Examination: Review of cutoff points adjusted to schooling in a large Southern Brazilian sample. Dementia & Neuropsychologia, 4(1), 35–41. https://doi.org/10.1590/S1980-57642010DN40100006

- Komalasari, R., Chang, H. C. R., & Traynor, V. (2019, January 3). A review of the Rowland Universal Dementia Assessment Scale. Dementia (London), 18(7–8), 1471301218820228. https://doi.org/10.1177/1471301218820228

- Kosmidis, M. H. (2018). Challenges in the neuropsychological assessment of illiterate older adults. Language, Cognition and Neuroscience, 33(3), 373–386. https://doi.org/10.1080/23273798.2017.1379605

- Leite, K. S. B., Miotto, E. C., Nitrini, R., & Yassuda, M. S. (2017). Boston Naming Test (BNT) original, Brazilian adapted version and short forms: Normative data for illiterate and low-educated older adults. International Psychogeriatrics, 29(5), 825–833. https://doi.org/10.1017/S1041610216001952

- Lenardt, M. H., Michel, T., Wachholz, P. A., Borghi, Â. d. S., & Seima, M. D. (2009). Institutionalized elder women’s performance in the mini-mental state examination. Acta Paulista de Enfermagem, 22(5), 638–644. https://doi.org/10.1590/s0103-21002009000500007

- Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gotzsche, P. C., Ioannidis, J. P., Clarke, M., Devereaux, P. J., Kleijnen, J., & Moher, D. (2009, July). The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: Explanation and elaboration. BMJ, 339(jul21 1), b2700. https://doi.org/10.1136/bmj.b2700

- Lin, K. N., Wang, P. N., Liu, C. Y., Chen, W. T., Lee, Y. C., & Liu, H. C. (2002). Cutoff scores of the Cognitive Abilities Screening Instrument, Chinese version in screening of dementia. Dementia and Geriatric Cognitive Disorders, 14(3–4), 176–182. https://doi.org/10.1159/000066024

- Logie, R. H., Parra, M. A., & Della Sala, S. (2015). From cognitive science to dementia assessment. Policy Insights from the Behavioral and Brain Sciences, 2(1), 81–91. https://doi.org/10.1177/2372732215601370

- Losada, A., de Los Angeles Villareal, M., Nuevo, R., Marquez-Gonzalez, M., Salazar, B. C., Romero-Moreno, R., Carrillo, A. L., & Fernandez-Fernandez, V. (2012, July). Cross-cultural confirmatory factor analysis of the CES-D in Spanish and Mexican dementia caregivers. The Spanish Journal of Psychology, 15(2), 783–792. https://doi.org/10.5209/rev_SJOP.2012.v15.n2.38890

- Manly, J. J., Touradji, P., Tang, M. X., & Stern, Y. (2003, August). Literacy and memory decline among ethnically diverse elders. Journal of Clinical and Experimental Neuropsychology, 25(5), 680–690. https://doi.org/10.1076/jcen.25.5.680.14579

- Mokri, H., Avila-Funes, J. A., Le Goff, L., Ruiz-Arregui, L., Gutierrez Robledo, L. M., & Amieva, H. (2012). Self-reported reading and writing skills in elderly who never attended school influence cognitive performances: Results from the Coyoacan cohort study. The Journal of Nutrition, Health & Aging, 16(7), 621–624. https://doi.org/10.1007/s12603-012-0070-8

- Muangpaisan, W., Assantachai, P., Sitthichai, K., Richardson, K., & Brayne, C. (2015). The distribution of Thai mental state examination scores among non-demented elderly in suburban Bangkok metropolitan and associated factors. Journal of the Medical Association of Thailand, 98(9), 916–924. https://doi.org/10.1590/S1516-44462010005000009

- National Institute of Health. (2014). Quality assessment of case-control studies. https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools

- Nell, V. (2000). Cross-cultural neuropsychological assessment: Theory and practice. Lawrence Erlbaum Associates.

- Nielsen, T. R., Segers, K., Vanderaspoilden, V., Bekkhus-Wetterberg, P., Minthon, L., Pissiota, A., Bjorklof, G. H., Beinhoff, U., Tsolaki, M., Gkioka, M., & Waldemar, G. (2018a, November). Performance of middle-aged and elderly European minority and majority populations on a Cross-Cultural Neuropsychological Test Battery (CNTB). The Clinical Neuropsychologist, 32(8), 1411–1430. https://doi.org/10.1080/13854046.2018.1430256

- Nielsen, T. R., Segers, K., Vanderaspoilden, V., Bekkhus-Wetterberg, P., Minthon, L., Pissiota, A., Bjørkløf, G. H., Beinhoff, U., Tsolaki, M., Gkioka, M., & Waldemar, G. (2018b, October). Effects of Illiteracy on the European Cross-Cultural Neuropsychological Test Battery (CNTB). Archives of Clinical Neuropsychology, 32(8), 1411–1430. https://doi.org/10.1093/arclin/acy076

- Nielsen, T. R., Vogel, A., Gade, A., & Waldemar, G. (2012, December). Cognitive testing in non-demented Turkish immigrants–comparison of the RUDAS and the MMSE. Scandinavian Journal of Psychology, 53(6), 455–460. https://doi.org/10.1111/sjop.12018

- Nielsen, T. R., Vogel, A., Riepe, M. W., de Mendonca, A., Rodriguez, G., Nobili, F., Gade, A., & Waldemar, G. (2011, February). Assessment of dementia in ethnic minority patients in Europe: A European Alzheimer’s Disease Consortium survey. International Psychogeriatrics, 23(1), 86–95. https://doi.org/10.1017/S1041610210000955

- Nieuwenhuis-Mark, R. E. (2010, September). The death knoll for the MMSE: Has it outlived its purpose? Journal of Geriatric Psychiatry and Neurology, 23(3), 151–157. https://doi.org/10.1177/0891988710363714

- Nitrini, R., Bottino, C. M., Albala, C., Custodio Capunay, N. S., Ketzoian, C., Llibre Rodriguez, J. J., Maestre, G. E., Ramos-Cerqueira, A. T., & Caramelli, P. (2009, August). Prevalence of dementia in Latin America: A collaborative study of population-based cohorts. International Psychogeriatrics, 21(4), 622–630. https://doi.org/10.1017/S1041610209009430

- Nitrini, R., Caramelli, P., Herrera Jr, E., Porto, C. S., Charchat-Fichman, H., Carthery, M. T., Takada, L. T., & Lima, E. P. (2004). Performance of illiterate and literate nondemented elderly subjects in two tests of long-term memory. Journal of the International Neuropsychological Society, 10(4), 634–638. https://doi.org/10.1017/S1355617704104062

- Office for National Statistics. (2018). Overview of the UK Population: November 2018. http://www.ons.gov.uk/ons/rel/pop-estimate/population-estimates-for-uk--england-and-wales--scotland-and-northern-ireland/mid-2014/sty---overview-of-the-uk-population.html

- Ortega, L. V., Aprahamian, I., Martinelli, J. E., Cecchini, M. A., Cacao, J. C., & Yassuda, M. S. (2021). Diagnostic Accuracy of Usual Cognitive Screening Tests Versus Appropriate Tests for Lower Education to Identify Alzheimer Disease. Journal of Geriatric Psychiatry and Neurology, 34(3), 222–231. https://doi.org/10.1177/0891988720958542

- Ostrosky-Solis, F. (2007). Educational effects on cognitive functions: Brain reserve, compensation, or testing bias? In B. P. Uzzell, M. O. Pontón, & A. Ardila (Eds.), International Handbook of Cross-Cultural Neuropsychology (pp. 215–225). Lawrence Erlbaum Associates.

- Park, S., Park, S. E., Kim, M. J., Jung, H. Y., Choi, J. S., Park, K. H., Kim, I., & Lee, J. Y. (2014). Development and validation of the Pictorial Cognitive Screening Inventory for illiterate people with dementia. Neuropsychiatric Disease and Treatment, 10, 1837–1845. https://doi.org/10.2147/NDT.S64151

- Paulino Ramirez Diaz, S., Gil Gregorio, P., Manuel Ribera Casado, J., Reynish, E., Jean Ousset, P., Vellas, B., & Salmon, E. (2005, August). The need for a consensus in the use of assessment tools for Alzheimer’s disease: The Feasibility Study (assessment tools for dementia in Alzheimer Centres across Europe), a European Alzheimer’s Disease Consortium’s (EADC) survey. International Journal of Geriatric Psychiatry, 20(8), 744–748. https://doi.org/10.1002/gps.1355

- Prince, M., Wimo, A., Guerchet, M., Ali, G. C., Wu, Y. T., Prina, M., & Alzheimer’s Disease International. (2015). World Alzheimer’s Report 2015, The global impact of dementia: An analysis of prevalence, incidence, cost and trends. London: Alzheimer’s Disease International (ADI).

- Rosenthal, R. (1978). Combining results of independent studies. Psychological Bulletin, 85(1), 185–193. https://doi.org/10.1037/0033-2909.85.1.185

- RStudio Team. (2020). RStudio: Integrated development for R. RStudio, PBC. http://www.rstudio.com/

- Shim, Y., Ryu, H. J., Lee, D. W., Lee, J. Y., Jeong, J. H., Choi, S. H., Han, S. H., & Ryu, S. H. (2015). Literacy independent cognitive assessment: Assessing mild cognitive impairment in older adults with low literacy skills. Psychiatry Investigation, 12(3), 341–348. https://doi.org/10.4306/pi.2015.12.3.341

- Storey, J. E., Rowland, J. T. J., Conforti, D. A., & Dickson, H. G. (2004). The Rowland Universal Dementia Assessment Scale (RUDAS): A multicultural cognitive assessment scale. International Psychogeriatrics, 16(1), 13–31. https://doi.org/10.1017/S1041610204000043

- Subramanian, M., Vasudevan, K., & Rajagopal, A. (2021, January 4). Cognitive impairment among older adults with diabetes mellitus in Puducherry: A community-based cross-sectional study. Cureus, 13(1), e12488. https://doi.org/10.7759/cureus.12488

- UIS. (2017). Literacy rates continue to rise from one generation to the next.

- UIS. (2019). Meeting commitments: Are countries on track to achieve SDG 4?

- Umakalyani, K., & Senthilkumar, M. (2018). A prospective observational study of mini-mental state examination in elderly participants attending outpatient clinic in geriatric department, Madras Medical College, Chennai. Journal of Evolution of Medical and Dental Sciences, 7(3), 315–320. https://doi.org/10.14260/jemds/2018/70

- Vagvolgyi, R., Coldea, A., Dresler, T., Schrader, J., & Nuerk, H. C. (2016). A review about functional illiteracy: Definition, cognitive, linguistic, and numerical aspects. Frontiers in Psychology, 7, 1617. https://doi.org/10.3389/fpsyg.2016.01617

- van der Flier, W. M., & Scheltens, P. (2005, December). Epidemiology and risk factors of dementia. Journal of Neurology, Neurosurgery & Psychiatry, 76(Suppl 5), v2–7. https://doi.org/10.1136/jnnp.2005.082867

- Viechtbauer, W. (2010). Conducting meta-analyses in R with the metafor Package. Journal of Statistical Software, 36(3), 1–48. https://doi.org/10.18637/jss.v036.i03

- WHO. (2019). Dementia fact sheet. Retrieved February 8, 2020, from https://www.who.int/en/news-room/fact-sheets/detail/dementia

- Wickham, H. (2016). ggplot2: Elegant graphics for data analysis. Springer-Verlag.

- Xu, G., Meyer, J. S., Huang, Y., Du, F., Chowdhury, M., & Quach, M. (2003). Adapting mini-mental state examination for dementia screening among illiterate or minimally educated elderly Chinese. International Journal of Geriatric Psychiatry, 18(7), 609–616. https://doi.org/10.1002/gps.890

- Youn, J. H., Siksou, M., Mackin, R. S., Choi, J. S., Chey, J., & Lee, J. Y. (2011). Differentiating illiteracy from Alzheimer's disease by using neuropsychological assessments. International Psychogeriatrics, 23(10), 1560–1568. https://doi.org/10.1017/S1041610211001347

- Zhou, D. F., Wu, C. S., Qi, H., Fan, J. H., Sun, X. D., Como, P., Qiao, Y. L., Zhang, L., & Kieburtz, K. (2006). Prevalence of dementia in rural China: Impact of age, gender and education. Acta Neurologica Scandinavica, 114(4), 273–280. https://doi.org/10.1111/j.1600-0404.2006.00641.x

Appendix

Appendix A: PRISMA checklist of items to include when reporting a systematic review or meta-analysis