ABSTRACT

Introduction

The Digit-Symbol-Substitution Test (DSST) is used widely in neuropsychological investigations of Alzheimer’s Disease (AD). A computerized version of this paradigm, the DSST-Meds, utilizes medicine-date pairings and has been developed for administration in both supervised and unsupervised environments. This study determined the utility and validity of the DSST-Meds for measuring cognitive dysfunction in early AD.

Method

Performance on the DSST-Meds was compared to performance on the WAIS Coding test and a computerized digit symbol coding test (DSST-Symbols). The first study compared supervised performance on the three DSST versions in cognitively unimpaired (CU) adults (n = 104). The second compared supervised DSST performance between CU (n = 60) and mild-symptomatic AD (mild-AD, n = 79) groups. The third study compared performance on the DSST-Meds between unsupervised (n = 621) and supervised settings.

Results

In Study 1, DSST-Meds accuracy showed high correlations with DSST-Symbols accuracy (r = 0.81) and WAIS-Coding accuracy (r = 0.68). In Study 2, when compared to CU adults, the mild-AD group showed lower accuracy on all three DSSTs (Cohen’s d ranging between 1.39 and 2.56), and DSST-Meds accuracy was correlated moderately with Mini-Mental State Examination scores (r = 0.44, p < .001). Study 3 observed no difference in DSST-Meds accuracy between supervised and unsupervised administrations.

Conclusion

The DSST-Meds showed good construct and criterion validity when used in both supervised and unsupervised contexts and provides a strong foundation for investigating the utility of the DSST in groups with low familiarity with neuropsychological assessment.

Introduction

Versions of the Digit Symbol Substitution Test (DSST) are used commonly in neuropsychological research and practice (Jaeger, 2018; Lezak et al., 2012; Spreen & Strauss, 1998; Thorndike, 1919). In Alzheimer’s disease (AD), DSSTs are used to guide decisions about the presence of cognitive dysfunction in the preclinical, prodromal, and dementia stages (Amieva et al., 2019; Donohue et al., 2014). Internet-based studies, aiming to increase access to AD research by individuals in rural, remote, or international areas, also utilize unsupervised versions of the DSST in their cognitive assessments (Germine et al., 2012; Lim et al., 2021). However, as with many neuropsychological tests, the utility of the DSST in measuring central nervous system (CNS) dysfunction in AD can be limited by its deliberately abstract nature, especially in participants from populations with low experience in neuropsychological or educational assessment (Harris et al., 2007; Howieson, 2019; Spooner & Pachana, 2006).

Conventional DSST paradigms typically require individuals to make written responses based on the pairing of abstract geometric symbols (e.g., +, ×) with Arabic numbers (Jaeger, 2018; Thorndike, 1919). The use of abstract stimuli and the clerical nature of neuropsychological tests like this often limit their validity in individuals with low levels of education or from culturally and linguistically diverse (CALD) populations (Ardila, 2005; Ng et al., 2018; Rosselli et al., 2022; Sayegh, 2015; Teng & Manly, 2005). For example, individuals with low education, or from CALD groups, can be classified as impaired on the basis of test performance that reflects low familiarity with neuropsychological assessment rather than true cognitive dysfunction (Sayegh, 2015). One method with potential to improve the utility of the DSST paradigm in groups with low familiarity with neuropsychological assessment is to develop a version that retains the overarching psychometric principles but replaces the deliberately abstract and clerical context with one that is more relevant to test-takers (De La Plata et al., 2008; Lim et al., 2012; Thompson et al., 2020; Wilson et al., 1985). This need increases further when DSSTs are used in unsupervised contexts where no test supervisor is available to answer questions or address concerns (Lim et al., 2021; Perin et al., 2020).

The daily use and management of prescription and non-prescription medications to maintain physical and mental health is an activity of daily living (ADL) common to older adults from all backgrounds (Dorman Marek & Antle, 2008; Elliott & Marriott, 2009; Morgan et al., 2012). Furthermore, the design of medications, recommendations for their use, and expectations around storage and management are the same for all countries and language groups (Centre for Drug Evaluation and Research, 2015, 2016; European Medicines Agency, 2015). Cognitive processes required for optimal medication management include attention, working memory, and executive functions, which guide accuracy and adherence to medication regimens (Elliott et al., 2015; Insel et al., 2006; Stilley et al., 2010; Suchy et al., 2020). Thus, the management of medications is a cognitively demanding, relevant, and common ADL that does not change according to culture or language. Hence, the medications themselves, and their requirement for organization, could provide a context that is familiar and relevant to older adults from diverse backgrounds, and within which a DSST could be designed. This potential is increased by a focus on solid oral dosage medications (e.g., pills, capsules; hereafter termed medicines), which are designed to differ visually from one another in size, shape, and color.

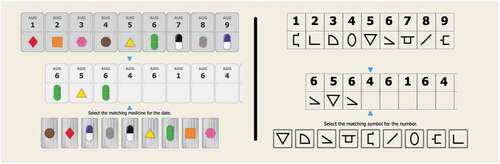

The conventional DSST paradigm can be modified into a medication management context by replacing the abstract symbols with representations of different medicines and replacing Arabic numbers with days or dates (see Figure 1). The decision required of individuals engaging with this type of DSST is “What medicine should I take on this day?” Even though this design change is simple, it is important to determine whether performance on a DSST-Medicines (DSST-Meds) version retains the psychometric characteristics of DSST paradigms in general. A second issue that arises from this modification is that individuals cannot indicate the different medicines using handwritten responses as they do in conventional versions. Previous studies have validated computerized DSSTs that utilize conventional digit-symbol pairs as stimuli and for which responses are made via touchscreen or a mouse (e.g., Akbar et al., 2011; Chaytor et al., 2021; Tung et al., 2016). However, as both the stimulus context and response requirements have been modified on the DSST-Meds, investigations of validity must account for both changes.

As modification of the DSST paradigm to increase its potential for use in diverse populations could require substantial change to the stimuli, administration method, instructions, and potentially even scoring, it is important to ensure, before the new version is evaluated in diverse populations, that such modifications have not diminished the validity of the test itself. This can be achieved by measuring the construct and criterion validity of the test in studies using well-defined clinical groups. In this experimental context, the main reason for investigating the test in the clinical groups is to improve understanding of the test, not vice versa. Specifically, performance on the DSST-Meds can be compared to performance on (i) a conventional DSST such as the Wechsler Adult Intelligence Scale Coding subtest (WAIS-Coding; Wechsler, 2008), which requires handwritten responses, and (ii) a computer-based DSST that utilizes abstract symbols and Arabic numbers as pairs, which requires the same manual response as the DSST-Meds.

Three studies aimed to understand the utility and validity of the DSST-Meds for measuring cognitive dysfunction in early AD, using well-characterized groups of healthy adults and adults with mild clinically defined AD. First, in healthy cognitively unimpaired (CU) adults, the construct validity of the DSST-Meds was investigated by comparing performance to that on paper-based and computer-based conventional DSSTs. The hypothesis for this study was that performance on the DSST-Meds would provide a valid measure of complex attention. The second study investigated the criterion validity of the DSST-Meds by determining its sensitivity to cognitive impairment in early symptomatic AD (hereafter termed mild-AD) and comparing this to that of paper-based and computer-based conventional DSSTs. The hypothesis for this study was that the DSST-Meds would validly determine AD-related impairment in complex attention. The aim of the third study was to explore the extent to which unsupervised administration of the DSST-Meds influenced the sensitivity of the test to AD-related cognitive impairment.

General methods

Materials

The Digit Symbol Substitution Test (Medicines) (DSST-Meds)

The DSST-Meds is a computer-controlled DSST, designed for both supervised and unsupervised administration (Figure 1). The presentation and response recording for the DSST-Meds are controlled by computer software, and the task is presented on a computer screen. In addition to reflecting the characteristics of DSST paradigms, the design of the DSST-Meds display and the software programs was also informed by experience with optimizing computer tests for remote administration (Perin et al., 2020; detailed below). At the top of the display, a key of nine different medicines is presented with unique combinations of medicine size, color, and shape. Each medicine is paired with a date from the same month, shown in ascending order from left to right. The medicine paired with each date and month is randomized in each test administration. In developing the medicine stimuli for the DSST-Meds, the discriminability of each stimulus was checked against computerized filters using the Coblis color blindness simulator tool (Colblinder, 2021). This ensured that the different colors used in the DSST-Meds stimuli could be discriminated from one another across the range of color perception deficits. At the bottom of the display, each medicine from the key is presented from left to right, with its position randomized on each test. In the center of the display, a set of vertically aligned box-pairs is presented adjacent to one another. For each pair, the top box contains a calendar date and the bottom box is empty. A pointer marks the incomplete box-pair requiring a response, and the test-taker must select (via touchscreen or mouse click) from the bottom display the medicine that is paired with the calendar date indicated by the pointer. Selection of the correct medicine generates an animation in which the correct medicine moves to the empty box in the marked box-pair. If an incorrect medicine is selected, no animation ensues and an auditory signal sounds to indicate an error and that another medicine should be chosen. This process continues until the correct medicine is selected. As with conventional DSSTs, the DSST-Meds allows 120 seconds for completion, with the number of medicines selected correctly in this interval being the main performance measure (total correct). The average response time for correct responses is also measured. Before the DSST-Meds begins, an instruction tutorial is shown, in which the rules and requirements for the test, broken down into their component processes, are presented to participants in order of increasing complexity using an operant shaping procedure (Krueger & Dayan, 2009; Skinner, 1938). This shaping procedure teaches test-takers that 1) responses must be made only from the bottom display of medicines, 2) selection should be based on medicine-date pairs in the key at the top of the screen, 3) as soon as one correct response is made, a new decision is required, most likely about a medicine associated with a different date, and 4) there are nine different pairs to act on. This instruction tutorial advances only after three consecutive correct responses are made. The DSST-Meds begins only once the instruction tutorial is completed.
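The two performance measures described above (total correct within 120 seconds, and mean response time for correct responses) can be illustrated with a minimal sketch. The `Response` record and its field names are hypothetical and are not taken from the DSST-Meds software; the sketch assumes only that each selection is logged with a timestamp, a latency, and whether it was correct.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

TEST_DURATION_MS = 120_000  # 120-second limit described in the text


@dataclass
class Response:
    """One selection by the test-taker (hypothetical log record)."""
    time_ms: float   # time since test start when the selection was made
    rt_ms: float     # latency of this selection
    correct: bool    # True if the selected medicine matched the key


def score_dsst(responses: List[Response]) -> Tuple[int, Optional[float]]:
    """Return (total correct, mean RT of correct responses) within the time limit."""
    scored = [r for r in responses
              if r.correct and r.time_ms <= TEST_DURATION_MS]
    total_correct = len(scored)
    # Mean RT is undefined when no correct response was made.
    mean_rt = (sum(r.rt_ms for r in scored) / total_correct
               if total_correct else None)
    return total_correct, mean_rt


# Illustrative log: one error, and one correct response after the time limit.
log = [
    Response(time_ms=1500, rt_ms=1500, correct=True),
    Response(time_ms=2600, rt_ms=1100, correct=False),
    Response(time_ms=3400, rt_ms=800, correct=True),
    Response(time_ms=121_000, rt_ms=900, correct=True),
]
total, mean_rt = score_dsst(log)
```

In this sketch, incorrect selections add nothing to the score but still consume time, which mirrors the rule that the test advances only after a correct selection.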

The Digit Symbol Substitution Test (Symbols) (DSST-Symbols)

The DSST-Symbols test was designed and controlled by the same software used for the DSST-Meds (Figure 1). The only difference between the two DSST versions is that, in the DSST-Symbols, the key presents associations between numbers and symbols, and the symbol missing from the box-pair in the middle of the display is selected from the nine presented at the bottom of the display.

Wechsler Adult Intelligence Scale-Coding subtest (WAIS-Coding)

The Coding subtest of the Wechsler Adult Intelligence Scale (WAIS-IV; Wechsler, 2008) was administered according to standard instructions. The main performance measure was the number of correct symbols written in the empty boxes for each number-symbol pair in 120 seconds.

Study 1

Participants

A sample of 104 healthy adults (n = 46 (44%) females) aged between 18 and 80 years (M age = 48.81, SD = 18.72) was recruited using convenience sampling through social networks and advertisements. At the time of assessment, all were working full-time or part-time and identified themselves as requiring no treatment for psychiatric or neurological disorders (including sleep disorders). All had normal, or corrected-to-normal, vision and audition and, after a practice with the DSST-Symbols, confirmed the absence of any skeleto-motor or CNS-related motor dysfunction or injury that would prevent them from performing the computerized tests. The study was approved by an institutional research and ethics committee, and all participants provided written informed consent prior to participating.

Procedure and data analysis

All participants completed the WAIS-Coding, DSST-Symbols, and DSST-Meds in accordance with their standardized instructions and learning tutorials, in a pseudo-randomized order under direct supervision by a trained research assistant. Prior to assessment, each participant was informed that the study was designed to develop and validate a new cognitive test, and that data from the study would not be used to inform any clinical decisions about any aspect of their cognition.

Data were analyzed in R Version 4.0.5, and a repeated-measures analysis of covariance (ANCOVA) was conducted to compare performance on the WAIS-Coding, DSST-Symbols, and DSST-Meds. Age was included as a covariate given its known effects on cognitive performance. The hypothesis that the DSST-Meds would provide a valid assessment of complex attention was tested by the significance and magnitude of the association between performance on the DSST-Meds and the WAIS-Coding test. Intercorrelations with the other DSST versions were explored to assist in understanding DSST-Meds performance. All associations were computed using partial correlations, with age as a covariate. The magnitude of differences between DSST versions was estimated using Cohen’s d. Magnitudes of difference were classified as trivial if d < 0.2, small if 0.2 ≤ d < 0.5, moderate if 0.5 ≤ d < 0.8, large if 0.8 ≤ d < 1.2, and very large if d ≥ 1.2 (Cohen, 1988; Sawilowsky, 2009).
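The two analytic conventions described above, a partial correlation with age as the covariate and the labeling of Cohen’s d magnitudes, can be sketched as follows. This is an illustrative Python sketch rather than the authors’ R code; the function names and the example data are hypothetical, and the partial correlation uses the standard first-order formula for a single covariate.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_r(x: Sequence[float], y: Sequence[float],
              z: Sequence[float]) -> float:
    """Correlation between x and y with one covariate z (e.g., age) removed."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def classify_d(d: float) -> str:
    """Label |d| using the thresholds stated in the text."""
    size = abs(d)
    if size < 0.2:
        return "trivial"
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "moderate"
    if size < 1.2:
        return "large"
    return "very large"


# Made-up scores for six hypothetical participants (not study data).
age = [25, 34, 47, 58, 66, 79]
meds = [62, 58, 55, 47, 45, 38]
coding = [85, 80, 70, 66, 57, 50]
r_partial = partial_r(meds, coding, age)
```

Under these thresholds, a difference of d = 1.30 is labeled very large and d = 0.26 is labeled small.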

Results and discussion

Within the sample, older age was associated moderately and negatively with correct responses on the DSST-Meds, r = −0.55, 95% CI [−0.67, −0.40], p < .001, and this was similar in magnitude to associations between age and correct responses on the DSST-Symbols, r = −0.51, 95% CI [−0.64, −0.35], p < .001, and WAIS-Coding, r = −0.69, 95% CI [−0.78, −0.57], p < .001. Education was associated with correct responses on the WAIS-Coding, r = 0.23, 95% CI [0.04, 0.40], p = .02, but not on the DSST-Symbols, r = 0.17, 95% CI [−0.02, 0.35], p = .08, or DSST-Meds, r = 0.04, 95% CI [−0.15, 0.23], p = .67. There were no differences between males and females on the WAIS-Coding (p = .49), DSST-Symbols (p = .35), or DSST-Meds (p = .45).

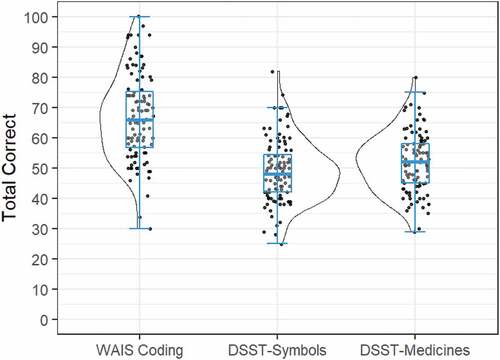

Group mean (SD) correct responses on the WAIS-Coding, DSST-Symbols, and DSST-Meds tests are summarized in Figure 2. The data in Figure 2 show scores on each DSST to be distributed normally, with skewness values close to 0 (WAIS-Coding = 0.13; DSST-Symbols = 0.45; DSST-Meds = 0.21). There was a significant main effect of test, F(2,204) = 118.30, p < .001. Post-hoc paired-samples t-tests indicated that mean correct responses on WAIS-Coding (M = 67.1, SD = 14.3) were significantly greater than those on DSST-Symbols (M = 49.1, SD = 10.3), t(103) = 21.44, p < .001, with a very large effect size (d = 1.30). Correct responses on the DSST-Meds (M = 51.9, SD = 9.82) were also significantly greater than those on the DSST-Symbols, t(103) = 4.52, p < .001, although the magnitude of this difference was small (d = 0.26).

Figure 2. Group mean performance and distribution of scores on the WAIS-Coding, DSST-Symbols, and DSST-Medicines Tests. Box plot represents mean and 95% confidence interval (CI); scatterplot represents individual performance; split violin plot illustrates the distribution of scores.

Strong partial correlations were observed between correct responses on the WAIS-Coding and DSST-Symbols, r = 0.72, 95% CI [0.62, 0.81], p < .001, and between DSST-Symbols and DSST-Meds, r = 0.73, 95% CI [0.63, 0.81], p < .001. The association between WAIS-Coding and DSST-Meds was slightly lower, r = 0.58, 95% CI [0.44, 0.69], p < .001, although comparable to that between WAIS-Coding and DSST-Symbols (confidence intervals of correlations overlap). As expected for time-limited tests, associations between the number of correct responses and the average response time to make those responses were strong (DSST-Symbols, r = 0.93, 95% CI [0.90, 0.95], p < .001; DSST-Meds, r = 0.96, 95% CI [0.94, 0.97], p < .001).

Although the DSST-Meds showed strong construct validity, its association with the WAIS-Coding test was a little weaker than that between the WAIS-Coding test and DSST-Symbols. This difference may reflect random error, as the 95% CIs for each estimate did overlap, even with the substantial power in the model from the large sample size studied. It is also possible that these differences are true and reflect method variance related to the medicine/date pairs used in the DSST-Meds. The extent to which the use of medicine/date pairs influences DSST performance systematically will be considered in more detail in future experiments, for example, by determining whether it is the medicine stimuli specifically, or whether other similar object/date pairs (as opposed to symbol/date pairs) also yield comparable results. Importantly, the results of this first study show that use of medicine/date pairs did not reduce the acceptability or validity of the DSST-Meds. Together, these results support the study hypothesis that the DSST-Meds has good construct validity. Although healthy adults make fewer correct responses on computerized DSSTs than on the conventional DSST version, the DSST-Meds had strong construct validity on the basis that performance accuracy and speed correlated strongly with performance accuracy on computerized and paper-based versions of the conventional DSST. Furthermore, the magnitude of relationships between age and performance was equivalent for the three different DSST versions. Study 2 investigated the criterion validity of the DSST-Meds by comparing sensitivity to cognitive impairment in early AD to that of the DSST-Symbols and WAIS-Coding tests.

Study 2

Participants

A sample of 60 cognitively unimpaired (CU) older adults and 79 adults who had been classified as meeting clinical criteria for mild symptomatic AD dementia (mild AD) were recruited from patients with established diagnoses enrolled in the Australian Imaging, Biomarkers and Lifestyle (AIBL) Study (Fowler et al., 2021). Table 1 summarizes the demographic and clinical characteristics of participants. Participants were recruited through word of mouth at the research institutes where their study was being conducted. As described previously in detail, the clinical classification, including cognitive status, of each participant was determined by an expert clinical panel, based on clinical interview and neuropsychological and psychiatric data. All individuals had normal or corrected-to-normal visual and auditory acuity and motor function.

Table 1. Study 2 participant demographic and clinical characteristics.

Procedure and data analysis

The computerized DSSTs and their associated learning tutorials were as described in Study 1. Both computerized DSSTs were administered as a sub-study for which individuals gave additional consent. The tests were administered in the same assessment, although their order was pseudorandomized between participants. Performance on the WAIS-Coding test and the Mini-Mental State Examination (MMSE; Folstein et al., 1975) was obtained for each participant from their most recent study assessment (median time difference = 2 hours, range 0.25 hours to 2 days). All assessments were administered under the supervision of a trained research assistant. The study was approved by institutional research and ethics committees, and all participants provided written informed consent prior to participating.

Data were analyzed in R Version 4.0.5. A series of ANCOVAs were conducted to determine performance differences between CU and AD groups on the WAIS-Coding, DSST-Symbols, and DSST-Meds. Age was included as a covariate. The magnitude of performance differences between CU and AD groups was estimated using Cohen’s d. Pearson’s correlations were also computed for relationships between performance on the DSST-Meds and disease severity defined using the MMSE score.
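As a point of reference for the between-group effect sizes, the textbook form of Cohen’s d for two independent groups divides the difference in means by the pooled standard deviation. The sketch below shows that unadjusted form only; the values reported in this study came from models with age as a covariate, so the sketch is an assumption-laden illustration rather than a reproduction of the authors’ computation. The function name and example scores are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence


def cohens_d(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Cohen's d for independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = (((n_a - 1) * variance(group_a)
                   + (n_b - 1) * variance(group_b))
                  / (n_a + n_b - 2))
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Made-up scores (not study data): a positive d means group_a scored higher.
cu_scores = [52, 47, 49, 55, 44]
ad_scores = [20, 15, 18, 12, 22]
d = cohens_d(cu_scores, ad_scores)
```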

Results and discussion

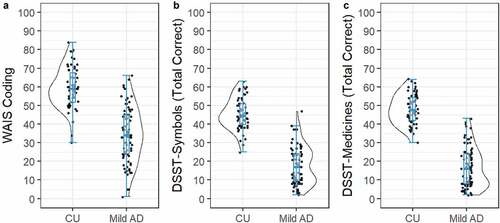

The distribution of correct responses on the different versions of the DSST is shown in Figure 3. The data in Figure 3 show scores on each DSST to be distributed normally, with skewness values close to 0 (WAIS-Coding = −0.21; DSST-Symbols = 0.07; DSST-Meds = 0.04). Table 2 summarizes the results of the ANCOVAs, group mean (SE) performance, and the magnitudes of between-group differences for each DSST version. The mild AD group made significantly fewer correct responses than the CU group on each DSST version (Table 2). The magnitude of impairment (Cohen’s d) in the mild AD group ranged from large to very large (Table 2). Group differences for the DSST-Meds and DSST-Symbols were very large and equivalent (i.e., overlap between Cohen’s d 95% CIs), and both were greater than the magnitude of AD-related impairment observed for the WAIS-Coding test (i.e., no overlap between Cohen’s d 95% CIs).

Figure 3. Group mean performance and distribution of scores on the (a) WAIS-Coding, (b) DSST-Symbols, and (c) DSST-Medicines, for the CU and Mild AD groups.

Table 2. Summary of statistical analyses, and the estimated marginal means (SE), comparing correct responses on the WAIS-Coding, DSST-Symbols, and DSST-Meds tests between CU and mild AD groups.

In the mild AD group, accuracy of performance on the DSST-Meds was associated moderately with the MMSE score, r = 0.44, 95% CI [0.24, 0.60], p < .001, with the strength of this association equivalent to that between the MMSE and DSST-Symbols, r = 0.45, 95% CI [0.25, 0.61], p < .001, and between the MMSE and WAIS-Coding, r = 0.41, 95% CI [0.21, 0.58], p < .001.

As expected, performance on each DSST version was impaired substantially in mild AD, with the magnitude of impairment on both computerized DSST versions greater than that for the WAIS-Coding test (Table 2). Criterion validity of the DSST-Meds was also evident in the moderate association observed between performance and AD disease severity, defined using the MMSE. This association was equivalent in magnitude to that observed between disease severity and performance on the WAIS-Coding and DSST-Symbols. These data indicate that the DSST-Meds has strong criterion validity in symptomatic AD. With the construct and criterion validity of the DSST-Meds established in supervised contexts, Study 3 challenged the usability of the DSST-Meds in older adults in an unsupervised context of use. Study 3 also sought to determine the criterion validity of the DSST-Meds by comparing performance in unsupervised CU adults to that of the supervised CU adults and adults with AD from Study 2.

Study 3

Participants

Participants were 621 CU adults who had completed unsupervised online cognitive assessments at baseline as part of their enrollment in the BetterBrains randomized controlled trial (RCT) (betterbrains.org.au). BetterBrains is a 24-month RCT that aims to prevent cognitive decline in those at risk of developing dementia through interventions targeting one or more known lifestyle risk factors (e.g., physical inactivity, poor diet, low social engagement). Full trial details, including trial procedures and the recruitment of participants, have been published elsewhere (Lim et al., 2021). Briefly, all participants were required to be aged between 40 and 70 years (inclusive), live in Australia, have a first- or second-degree family history of dementia, be fluent in English, have access to an internet-enabled computer or laptop, have at least one modifiable risk factor for dementia, and express a willingness to make a change to their lifestyle and health behavior. Exclusion criteria included a diagnosis of any dementia or mild cognitive impairment, any untreated psychiatric disorder, any history of severe traumatic brain injury, and any recent history (<2 years) of a substance use disorder. The BetterBrains RCT was approved by the Monash University Human Research Ethics Committee. Informed consent was obtained from all participants prior to any study procedures. The trial was prospectively registered on the Australian New Zealand Clinical Trials Registry (ACTRN 12621000458831).

Procedure and data analysis

Once enrolled into the BetterBrains RCT, participants completed a baseline assessment of cognition as well as medical history, health, and lifestyle factors. All assessments were conducted remotely and unsupervised on the BetterBrains online platform. Participants were required to complete all cognitive assessments on a desktop or laptop computer, using a mouse and keyboard to make their responses. The baseline assessment was grouped into seven blocks, each requiring between 20 and 25 minutes to complete. The DSST-Meds was included in the first block of assessment (Lim et al., 2021). No participant enrolled in BetterBrains had completed the DSST-Meds previously. Prior to beginning the unsupervised DSST-Meds, all individuals completed the shaping procedure described in Study 1.

Data were analyzed in R Version 4.0.5. A series of t-tests and chi-square analyses examined demographic differences between completers and non-completers of the DSST-Meds. The unsupervised DSST-Meds was considered complete when the number of true positives was greater than or equal to two and the test duration was 120 seconds. Pearson correlations were computed to examine the relationships between age and both total correct responses and reaction time on the unsupervised DSST-Meds. Reaction time (speed of performance) was recorded in milliseconds and was normalized using a logarithmic base 10 (log10) transformation.
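The completion rule and the reaction-time transformation described above can be expressed compactly. The sketch below is illustrative only: the function names are hypothetical, and the half-second tolerance on the recorded duration is an assumption added so that small timing differences in a logged duration do not fail the check.

```python
import math

REQUIRED_DURATION_S = 120.0   # test duration stated in the text
MIN_TRUE_POSITIVES = 2        # minimum correct responses stated in the text


def is_complete(true_positives: int, duration_s: float,
                tolerance_s: float = 0.5) -> bool:
    """Completion rule: at least two correct responses and a full 120 s run.

    tolerance_s is an assumption of this sketch, not part of the stated rule.
    """
    full_run = math.isclose(duration_s, REQUIRED_DURATION_S,
                            abs_tol=tolerance_s)
    return true_positives >= MIN_TRUE_POSITIVES and full_run


def log10_rt(rt_ms: float) -> float:
    """Log base 10 transform of a reaction time recorded in milliseconds."""
    return math.log10(rt_ms)


def back_transform(log_rt: float) -> float:
    """Convert a log10 reaction time back to milliseconds."""
    return 10 ** log_rt
```

For orientation, a log10 reaction time of 3.39 back-transforms to roughly 2,450 ms, and a value of 3.78 to roughly 6,030 ms.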

A series of ANCOVAs were conducted to compare DSST-Meds performance differences between unsupervised CU, supervised CU, and supervised AD groups on both the number correct and reaction time outcomes. Age was included as a covariate. A series of post-hoc t-tests were then conducted to compare the difference in performance between (a) unsupervised CU and supervised AD and (b) unsupervised and supervised CU groups, to estimate whether there exists any difference in performance across the two administration methods. The magnitude of performance differences between groups was quantified using Cohen’s d.

Results and discussion

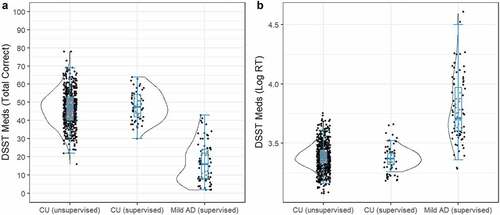

Of the 621 participants who attempted the unsupervised DSST-Meds, 604 (98%) completed the assessment. The mean age of these adults was 59.72 years (SD = 6.56), and 491 (81%) were female. On average, they had completed 15.17 years of education (SD = 4.01). The data in Figure 4 show scores on the DSST-Meds to be distributed normally in each clinical group, with skewness values close to 0 (unsupervised CU = 0.04; supervised CU = 0.06; supervised mild AD = 0.63).

Figure 4. Distribution of DSST-Meds performance accuracy (a) and performance speed score (b) for the CU unsupervised group, the CU supervised group, and the AD supervised group.

In adults who completed the unsupervised DSST-Meds, older age was associated negatively and moderately with correct responses, r = −0.44, 95% CI [−0.50, −0.37], p < .001, and positively and moderately with reaction time, r = 0.43, 95% CI [0.36, 0.49], p < .001. Correct responses were similar for the CU unsupervised (M = 46.8, SE = 0.39) and CU supervised (M = 48.0, SE = 1.24) groups, while the AD group performed worse (M = 16.6, SE = 1.09). Log reaction times were also similar between CU unsupervised (M = 3.39, SE = 0.005) and CU supervised (M = 3.39, SE = 0.015), whereas the AD group was slower (M = 3.78, SE = 0.013). Comparison of performance on the DSST-Meds between the CU unsupervised group, the CU supervised group, and the AD supervised group indicated significant differences between groups on correct responses, F(2,740) = 347.55, p < .001, and on reaction time, F(2,740) = 410.04, p < .001 (Figure 4(a), correct responses; Figure 4(b), reaction times).
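The confidence interval reported for the correlation between age and correct responses can be checked with the Fisher z transformation. The text does not state how the intervals were computed, so the use of this method is an assumption; the sketch takes r = −0.44 and the 604 completers from the text, and the function name is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def fisher_ci(r: float, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Confidence interval for a Pearson correlation via the Fisher z transform."""
    z = math.atanh(r)                      # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)            # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    # Transform the interval bounds back to the correlation scale.
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


lower, upper = fisher_ci(-0.44, 604)
print(f"95% CI [{lower:.2f}, {upper:.2f}]")
```

Under these assumptions the interval rounds to approximately [−0.50, −0.37], which is consistent with the interval reported for correct responses.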

Post-hoc t-tests indicated significant differences in performance between the CU unsupervised and AD supervised groups on the total score, t(740) = 26.11, p < .001, with a very large magnitude of difference, d = 3.13, 95% CI [2.84, 3.42], and on reaction time, t(740) = 28.37, p < .001, with the magnitude of this difference also very large, d = 3.20, 95% CI [2.90, 3.48]. There were no significant differences between the CU unsupervised and CU supervised groups on the total score, t(740) = 0.98, p = .584, d = 0.12, 95% CI [−0.14, 0.39], or on reaction time, t(740) = 0.99, p = .578, d = 0.13, 95% CI [−0.13, 0.40]. Taken together, the results of Study 3 support the usability of the DSST-Meds for unsupervised assessment and demonstrate that differences in assessment modality (i.e., supervised vs. unsupervised) did not diminish the sensitivity of the DSST-Meds to cognitive impairment in mild AD.

General discussion

The results of the three studies indicate that a version of the DSST requiring individuals to learn a set of nine unique pairings between representations of different solid oral dose medications (i.e., medicines) and dates had high construct and criterion validity for characterizing an aspect of cognitive function in CU younger and older adults and in adults with early symptomatic AD. The validity of the DSST-Meds extended to contexts where it was administered to CU adults in unsupervised settings as part of their participation in an online RCT (Lim et al., 2021). Together, these data indicate that the context of the DSST paradigm could be modified so that it had a high similarity to a meaningful ADL while retaining its theoretical and psychometric characteristics. Furthermore, this modification did not disrupt its validity or sensitivity to AD-related cognitive impairment. This evidence supporting the validity of the DSST-Meds in well-studied patient groups now provides a strong foundation for investigation of the utility, acceptability, and validity of the DSST-Meds in individuals with AD who have low experience with psychological assessment, including those from CALD backgrounds where the requirements and conditions of medication management are the same as those in the groups studied here.

The validity of the DSST-Meds was determined first by the strength of association between the efficiency of performance on this test and that on a conventional paper-and-pencil DSST, the WAIS-Coding test. While performance on the two tests was associated strongly, the number of pairs completed accurately on the DSST-Meds was much lower than that on the WAIS-Coding test (i.e., ~15 correct responses). This lower number of correct responses could have arisen from factors such as the differences between the response requirements of the DSST-Meds and WAIS-Coding tests (i.e., computerized vs. manual), or from the requirement of the computerized DSSTs that individuals could not move to their next selection until they had made a correct response. In contrast, the WAIS-Coding test requires only that individuals make some response, correct or incorrect, before advancing to the next decision. It is unlikely that the lower number of correct responses on the DSST-Meds or DSST-Symbols reflected differences in the cognitive operations required to process medicine-date pairs or digit-symbol pairs. This hypothesis is supported by the finding that accuracy of performance on the computerized DSST that utilized digit-symbol pairs (DSST-Symbols) was also much lower (~18 correct responses) than that for the WAIS-Coding test, despite strong associations observed between the two tests. Additionally, accuracy of performance on the DSST-Symbols was equivalent to that observed for the DSST-Meds. The expected reduction in performance accuracy with increasing age was also similar in magnitude on each of the three DSST versions. Finally, the conventional and computerized DSSTs each showed very high sensitivity to cognitive impairment in adults with early AD. Thus, while the requirement that decisions on the DSST-Meds be indicated with a manual touch response, rather than with a graphomotor response, appears to have reduced the number of correct responses made, these different requirements do not change the main cognitive domains measured by the DSST-Meds from those validated in the general DSST paradigm.

One interesting aspect of the results was that, despite the lower number of correct responses made on the computerized DSSTs than on the WAIS-Coding test, the magnitude of impairment in the early AD group was greater for both computerized DSSTs than for the conventional paper-and-pencil DSST. One hypothesis for this difference is that although both CU and AD adults generated fewer responses on the computerized DSSTs than on the paper-and-pencil DSST, variation between individuals in the number of correct responses (i.e., indicated by group accuracy SDs) was also smaller for the computerized DSST versions. This may have arisen because the written responses required to enter the symbol in the empty box that corresponds with the Arabic number in the box-pair display on the WAIS-Coding test require more complex cognitive processing than the simple manual touches required on the computerized DSSTs. This greater complexity may result in greater variability between individuals in this aspect of their test performance. For a given difference in group mean performance, greater between-individual variability within either group will decrease estimates of effect size.
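The relationship between between-individual variability and effect size can be illustrated with a minimal sketch, assuming Cohen's d is computed with a pooled standard deviation. The group sizes match those reported for Study 2, but the means and SDs below are hypothetical values chosen only for illustration and are not data from this study.

```python
import math

def cohens_d(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_a - mean_b) / math.sqrt(pooled_var)

# Group sizes as in Study 2 (CU n = 60, mild-AD n = 79).
n_cu, n_ad = 60, 79

# Hypothetical means and SDs (illustration only, not study data).
# The mean difference is held constant at 10 correct responses.
d_low_variability = cohens_d(30.0, 20.0, 4.0, 4.0, n_cu, n_ad)
d_high_variability = cohens_d(30.0, 20.0, 8.0, 8.0, n_cu, n_ad)

print(f"SD = 4: d = {d_low_variability:.2f}")
print(f"SD = 8: d = {d_high_variability:.2f}")
```

With the mean difference held constant, doubling the within-group SD halves the estimated effect size in this sketch, which is consistent with the hypothesis that smaller accuracy SDs on the computerized DSSTs contributed to their larger effect sizes.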

For conventional paper-and-pencil DSSTs, instructions consist of a standardized passage that is followed by a small set of practice decisions. For example, on the WAIS-Coding test, test-takers are presented with six practice pairs before the test begins (Wechsler, 2008). On the DSST-Meds, individuals were instructed and trained in the rules and requirements of the task using a brief and interactive shaping program that introduced the stimuli and the required response for the test one step at a time (e.g., Krueger & Dayan, 2009). This shaping procedure was developed to enable the self-administration of the DSST-Meds in unsupervised assessment contexts. In this shaping procedure, each participant was required to demonstrate that they understood and were able to comply with test rules and requirements before advancing to the next stage. They were then presented with a series of practice trials, which continued until the participant made correct responses on six consecutive trials. With this criterion achieved, the actual DSST began. Such shaping approaches may not be required in supervised neuropsychological assessments, where the neuropsychologist or psychometrician can determine whether the test-taker understands and can comply with the rules and requirements of the DSST. However, shaping approaches do provide a strong framework for standardized administration of instructions. Furthermore, shaping procedures can provide much greater rates of compliance and successful completion than when the same tests are given after only written instructions (Perin et al., 2020). With the use of this procedure, we demonstrated that the speed and accuracy of performance on the unsupervised DSST-Meds were equivalent to those of CU adults who had completed the DSST-Meds under supervision.
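The practice criterion described above (practice trials continue until six consecutive correct responses are made) can be sketched as a simple stopping rule. This is an illustrative sketch only and not the DSST-Meds software; the function name `trials_to_criterion` and the representation of responses as booleans are assumptions.

```python
from typing import Iterable, Optional

def trials_to_criterion(responses: Iterable[bool], criterion: int = 6) -> Optional[int]:
    """Return the number of practice trials taken to reach `criterion`
    consecutive correct responses, or None if the criterion is never met."""
    streak = 0
    for trial_number, correct in enumerate(responses, start=1):
        # An error resets the run of consecutive correct responses.
        streak = streak + 1 if correct else 0
        if streak == criterion:
            return trial_number
    return None

# Hypothetical practice sequence: two correct, one error, then six correct.
practice = [True, True, False] + [True] * 6
print(trials_to_criterion(practice))
```

In this sketch a single error resets the count, so a participant who errs on the third practice trial reaches the criterion on the ninth trial rather than the sixth.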

The use of a daily medication schedule as a context within which to modify the DSST is based on observations that in conventional versions of the DSST, the digit-symbol pairs are presented in a design similar to that of a Dosette box or Webster pack, often used by individuals to assist with management of their daily medication regimens (Boeni et al., 2014; Conn et al., 2015; Zedler et al., 2011). The digits presented in the DSST digit-symbol keys are always presented in ascending order from left to right. Similarly, Dosette boxes and Webster packs organize medicines in relation to days of the week or month in an ascending sequence from left to right. Once developed and approved by national regulatory authorities, new and existing medicines remain the same in all their contexts of use for adults (Centre for Drug Evaluation and Research, 2015, 2016; European Medicines Agency, 2015). For drugs taken orally, the homogeneity extends beyond chemical composition and formulation to their size, shape, and color (i.e., pills and capsules). This consistency operates irrespective of the linguistic, cultural, or educational background of individuals who have the target disease, disorder, or injury. The international aspect of this approach to obtaining, storing, and taking the same medicine therefore provides a useful context within which to develop a version of a cognitive test that will facilitate understanding in individuals from different backgrounds, languages, and cultures. Thus, as with the symbol sets commonly used in DSSTs, the medicine stimuli used in the DSST-Meds were designed so that they differed from one another and so that no individual medicine stimulus could be considered to have a non-medicine-related semantic meaning in English (e.g., represented a Stop road sign or was part of an international symbol set such as the function keys on a phone keypad). Additionally, in each assessment, each medicine-date pair was randomized. With these principles, it became possible to reconstruct a DSST within a context that was meaningful to older adults without disrupting the underlying construct that has governed the design of many conventional DSSTs.
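One way such randomization could work is sketched below, assuming that the nine dates stay in ascending order (as digits do in a conventional DSST key) while the medicine assigned to each date is shuffled for every assessment. The stimulus labels, the function name `make_key`, and the use of a seed are hypothetical and do not describe the actual DSST-Meds implementation.

```python
import random

def make_key(medicines, dates, seed=None):
    """Pair each date with a randomly assigned medicine stimulus.

    Dates keep their given (ascending) order; only the assignment of
    medicines to dates is randomized. Assumes one medicine per date.
    """
    if len(medicines) != len(dates):
        raise ValueError("medicines and dates must have the same length")
    rng = random.Random(seed)
    shuffled = list(medicines)
    rng.shuffle(shuffled)
    return dict(zip(dates, shuffled))

# Hypothetical stimulus labels for nine medicine-date pairs.
medicines = [f"medicine_{i}" for i in range(1, 10)]
dates = list(range(1, 10))

key = make_key(medicines, dates, seed=2024)
for date, medicine in key.items():
    print(date, medicine)
```

Because every medicine is used exactly once, each assessment presents nine unique pairings, but the specific pairings differ between assessments.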

Conventional DSSTs are used widely in neuropsychology, although theories vary about which aspects of cognition the test assesses. Most compendia classify the DSST as a test of complex attention, which includes sustained attention and response speed, together with visual scanning, motor persistence, and visuomotor coordination (Lezak et al., 2012; Spreen & Strauss, 1998). In AD contexts, poor performance on the DSST is also considered to reflect deficits in executive function (Rapp & Reischies, 2005), while others also emphasize the importance of associative learning (Jaeger, 2018). Thus, while the DSST has strong face and construct validity as a measure of complex attention, acquisition of the number-symbol pairings requires application of executive learning strategies. The sensitivity of DSSTs to early AD therefore most likely reflects the subtle deficits of executive function that arise with early biological changes such as the accumulation of amyloid and tau (Donohue et al., 2014; Lim et al., 2013; Rapp & Reischies, 2005). The results of the current study suggest strongly that these same aspects of cognition are required for optimal performance on the DSST-Meds, though further validation studies will be necessary to refine brain-behavior models that support the use of the DSST-Meds in AD. For example, while neither the AIBL nor the BetterBrains sample had prior exposure to the DSST-Meds, both are longitudinal studies comprising participants who may not be representative of the general population. As such, it will be important for studies to further challenge the use of the DSST-Meds, in both supervised and unsupervised contexts, in individuals who are naïve to detailed computerized cognitive testing.

The results of the current study indicate that the DSST can be reconstructed to reflect a meaningful and complex ADL, and that this may extend the contexts (e.g., supervised vs. unsupervised) in which the test can be applied with the expected validity and sensitivity. They also provide a basis to challenge the use of this DSST in CALD populations to determine whether it has increased utility and acceptability compared to conventional DSSTs. Finally, the findings of this study provide a strong theoretical and practical basis for extending investigations into the sensitivity of the DSST-Meds to mild cognitive impairment (MCI) and moderate AD, as well as its ability to detect change in preclinical, prodromal, and clinical AD groups in both supervised and unsupervised settings.

Data availability

Data from the AIBL study are available for download at https://loni.adni.edu and will require a brief application for data access. Given the nature of health information collected, an approved human research ethics application and a data use agreement are required.

For researchers interested in collaboration, access to data from the BetterBrains RCT will be considered by the BetterBrains scientific management committee upon reasonable request (see www.betterbrains.org.au for more details). Given the nature of health information collected, an approved human research ethics application and a data use agreement will be required. Only blinded baseline data will be made available as the trial is ongoing.

Acknowledgments

The BetterBrains Trial is funded by a National Health and Medical Research Council (NHMRC) Boosting Dementia Research Initiative grant (GNT1171816). Participants have, in part, been recruited from the Healthy Brain Project (healthybrainproject.org.au). The Healthy Brain Project has received funding from the NHMRC (GNT1158384, GNT1147465, GNT1111603, GNT1105576, GNT1104273), the Alzheimer’s Association (AARG-17-591424, AARG-18-591358, AARG-19-643133), the Dementia Australia Research Foundation, the Bethlehem Griffiths Research Foundation, the Yulgilbar Alzheimer’s Research Program, the National Heart Foundation of Australia (102052), and the Charleston Conference for Alzheimer’s Disease.

Disclosure statement

P Maruff is a full-time employee of Cogstate Ltd., the company that provides the Cogstate Brief Battery. A Schembri is a scientific consultant to Cogstate Ltd. All other investigators have no relevant disclosures to report.

References

- Akbar, N., Honarmand, K., Kou, N., & Feinstein, A. (2011). Validity of a computerized version of the symbol digit modalities test in multiple sclerosis. Journal of Neurology, 258(3), 373–379. https://doi.org/10.1007/s00415-010-5760-8

- Amieva, H., Meillon, C., Proust-Lima, C., & Dartigues, J. F. (2019). Is low psychomotor speed a marker of brain vulnerability in late life? Digit symbol substitution test in the prediction of Alzheimer, Parkinson, stroke, disability, and depression. Dementia and Geriatric Cognitive Disorders, 47(4–6), 297–305. https://doi.org/10.1159/000500597

- Ardila, A. (2005). Cultural values underlying psychometric cognitive testing. Neuropsychology Review, 15(4), 185–195. https://doi.org/10.1007/s11065-005-9180-y

- Boeni, F., Spinatsch, E., Suter, K., Hersberger, K. E., & Arnet, I. (2014). Effect of drug reminder packaging on medication adherence: A systematic review revealing research gaps. Systematic Reviews, 3(1), 1. https://doi.org/10.1186/2046-4053-3-29

- Centre for Drug Evaluation and Research. (2015). Size, Shape, and Other Physical Attributes of Generic Tablets and Capsules: Guidance for Industry. https://www.fda.gov/files/drugs/published/Size–Shape–and-Other-Physical-Attributes-of-Generic-Tablets-and-Capsules.pdf

- Centre for Drug Evaluation and Research. (2016). Safety considerations for product design to minimize medication errors: Guidance for industry. https://www.fda.gov/media/84903/download

- Chaytor, N. S., Barbosa-Leiker, C., Germine, L. T., Fonseca, L. M., McPherson, S. M., & Tuttle, K. R. (2021). Construct validity, ecological validity and acceptance of self-administered online neuropsychological assessment in adults. Clinical Neuropsychologist, 35(1), 148–164. https://doi.org/10.1080/13854046.2020.1811893

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge Academic.

- Conn, V. S., Ruppar, T. M., Chan, K. C., Dunbar-Jacob, J., Pepper, G. A., & de Geest, S. (2015). Packaging interventions to increase medication adherence: Systematic review and meta-analysis. Current Medical Research and Opinion, 31(1), 145–160. https://doi.org/10.1185/03007995.2014.978939

- Colblindor. Coblis — Color Blindness Simulator. 2006-2021. https://www.color-blindness.com/coblis-color-blindness-simulator/

- de La Plata, C. M., Vicioso, B., Hynan, L., Evans, H. M., Diaz-Arrastia, R., Lacritz, L., & Cullum, C. M. (2008). Development of the Texas Spanish naming test: A test for Spanish speakers. Clinical Neuropsychologist, 22(2), 288–304. https://doi.org/10.1080/13854040701250470

- Donohue, M. C., Sperling, R. A., Salmon, D. P., Rentz, D. M., Raman, R., Thomas, R. G., Weiner, M., & Aisen, P. S. (2014). The preclinical Alzheimer cognitive composite: Measuring amyloid-related decline. JAMA Neurology, 71(8), 961–970. https://doi.org/10.1001/jamaneurol.2014.803

- Dorman Marek, K., & Antle, L. (2008). Medication Management of the Community-Dwelling Older Adult. In R. G. Hughes (Ed.), Patient safety and quality. An Evidence-Based Handbook for Nurses: Vol. 1. Agency for Healthcare Research and Quality. 499–536.

- Elliott, R. A., Goeman, D., Beanland, C., & Koch, S. (2015). Ability of older people with Dementia or cognitive impairment to manage medicine regimens: A narrative review. Current Clinical Pharmacology, 10(3), 213–221. https://doi.org/10.2174/1574884710666150812141525

- Elliott, R. A., & Marriott, J. L. (2009). Standardised assessment of patients’ capacity to manage medications: A systematic review of published instruments. BMC Geriatrics, 9(1). https://doi.org/10.1186/1471-2318-9-27

- European Medicines Agency. (2015). Good practice guide medication error risk minimisation and prevention. https://www.ema.europa.eu/en/documents/regulatory-procedural-guideline/good-practice-guide-risk-minimisation-prevention-medication-errors_en.pdf

- Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). “Mini-Mental State”: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12(3), 189–198. https://doi.org/10.1016/0022-3956(75)

- Fowler, C., Rainey-Smith, S. R., Bird, S., Bomke, J., Bourgeat, P., Brown, B. M., Burnham, S. C., Bush, A. I., Chadunow, C., Collins, S., Doecke, J., Doré, V., Ellis, K. A., Evered, L., Fazlollahi, A., Fripp, J., Gardener, S. L., Gibson, S., Grenfell, R., & Ames, D. (2021). Fifteen years of the Australian Imaging, Biomarkers and Lifestyle (AIBL) study: Progress and observations from 2,359 older adults spanning the spectrum from cognitive normality to Alzheimer’s Disease. Journal of Alzheimer’s Disease Reports, 5(1), 443–468. https://doi.org/10.3233/ADR-210005

- Germine, L., Nakayama, K., Duchaine, B. C., Chabris, C. F., Chatterjee, G., & Wilmer, J. B. (2012). Is the Web as good as the lab? Comparable performance from web and lab in cognitive/perceptual experiments. Psychonomic Bulletin & Review, 19(5), 847–857. https://doi.org/10.3758/s13423-012-0296-9

- Harris, J. G., Wagner, B., & Cullum, C. M. (2007). Symbol vs. digit substitution task performance in diverse cultural and linguistic groups. Clinical Neuropsychologist, 21(5), 800–810. https://doi.org/10.1080/13854040600801019

- Howieson, D. (2019). Current limitations of neuropsychological tests and assessment procedures. Clinical Neuropsychologist, 33(2), 200–208. https://doi.org/10.1080/13854046.2018.1552762

- Insel, K., Morrow, D., Brewer, B., & Figueredo, A. (2006). Executive function, working memory, and medication adherence among older adults. Journal of Gerontology: Psychological Sciences, 61B(2), 102–107. https://doi.org/10.1093/geronb/61.2.P102

- Jaeger, J. (2018). Digit symbol substitution test. Journal of Clinical Psychopharmacology, 38(5), 513–519. https://doi.org/10.1097/JCP.0000000000000941

- Krueger, K. A., & Dayan, P. (2009). Flexible shaping: How learning in small steps helps. Cognition, 110(3), 380–394. https://doi.org/10.1016/j.cognition.2008.11.014

- Lezak, M. D., Howieson, D. B., Bigler, E. D., & Tranel, D. (2012). Neuropsychological assessment (5th ed.). Oxford University Press.

- Lim, Y. Y., Ayton, D., Perin, S., Lavale, A., Yassi, N., Buckley, R., Barton, C., Bruns, L., Morello, R., Pirotta, S., Rosenich, E., Rajaratnam, S. M. W., Sinnott, R., Brodtmann, A., Bush, A. I., Maruff, P., Churilov, L., Barker, A., & Pase, M. P. (2021). An online, person-centered, risk factor management program to prevent cognitive decline: Protocol for a prospective behavior-modification blinded endpoint randomized controlled trial. Journal of Alzheimer’s Disease, 83(4), 1603–1622. https://doi.org/10.3233/JAD-210589

- Lim, Y. Y., Ellis, K. A., Harrington, K., Kamer, A., Pietrzak, R. H., Bush, A. I., Darby, D., Martins, R. N., Masters, C. L., Rowe, C. C., Savage, G., Szoeke, C., Villemagne, V. L., Ames, D., & Maruff, P. (2013). Cognitive consequences of high Aβ amyloid in mild cognitive impairment and healthy older adults: Implications for early detection of Alzheimer’s disease. Neuropsychology, 27(3), 322–332. https://doi.org/10.1037/a0032321

- Lim, Y. Y., Harrington, K., Ames, D., Ellis, K. A., Lachovitzki, R., Snyder, P. J., & Maruff, P. (2012). Short term stability of verbal memory impairment in mild cognitive impairment and Alzheimer’s disease measured using the International shopping list test. Journal of Clinical and Experimental Neuropsychology, 34(8), 853–863. https://doi.org/10.1080/13803395.2012.689815

- Morgan, T. K., Williamson, M., Pirotta, M., Stewart, K., Myers, S. P., & Barnes, J. (2012). A national census of medicines use: A 24-hour snapshot of Australians aged 50 years and older. Medical Journal of Australia, 196(1), 50–53. https://doi.org/10.5694/mja11.10698

- Ng, K. P., Chiew, H. J., Lim, L., Rosa-Neto, P., Kandiah, N., & Gauthier, S. (2018). The influence of language and culture on cognitive assessment tools in the diagnosis of early cognitive impairment and dementia. Expert Review of Neurotherapeutics, 18(11), 859–869. https://doi.org/10.1080/14737175.2018.1532792

- Perin, S., Buckley, R. F., Pase, M. P., Yassi, N., Lavale, A., Wilson, P. H., Schembri, A., Maruff, P., & Lim, Y. Y. (2020). Unsupervised assessment of cognition in the healthy brain project: Implications for web-based registries of individuals at risk for Alzheimer’s disease. Alzheimer’s and Dementia: Translational Research and Clinical Interventions, 6, 1. https://doi.org/10.1002/trc2.12043

- Rapp, M. A., & Reischies, F. M. (2005). Attention and executive control predict Alzheimer Disease in late life: Results from the Berlin Aging Study (BASE). The American Journal of Geriatric Psychiatry, 13(2), 134–141. https://doi.org/10.1176/appi.ajgp.13.2.134

- Rosselli, M., Uribe, I. V., Ahne, E., & Shihadeh, L. (2022). Culture, ethnicity, and level of education in Alzheimer’s Disease. Neurotherapeutics, 19(1), 26–54. https://doi.org/10.1007/s13311-022-01193-z

- Sawilowsky, S. S. (2009). New effect size rules of thumb. Journal of Modern Applied Statistical Methods, 8(2), 597–599. https://doi.org/10.22237/jmasm/1257035100

- Sayegh, P. (2015). Cross-cultural issues in the neuropsychological assessment of dementia. In R. F. Ferraro (Ed.), Minority and cross-cultural aspects of neuropsychological assessment: Enduring and emerging trends (pp. 54–71). Taylor and Francis Group. http://ebookcentral.proquest.com/lib/monash/detail.action?docID=3569852

- Skinner, B. F. (1938). The behavior of organisms: An experimental analysis. Appleton-Century-Crofts.

- Spooner, D. M., & Pachana, N. A. (2006). Ecological validity in neuropsychological assessment: A case for greater consideration in research with neurologically intact populations. Archives of Clinical Neuropsychology, 21(4), 327–337. https://doi.org/10.1016/j.acn.2006.04.004

- Spreen, O., & Strauss, E. (1998). A compendium of neuropsychological tests: Administration, norms and commentary (2nd ed.). Oxford University Press.

- Stilley, C. S., Bender, C. M., Dunbar-Jacob, J., Sereika, S., & Ryan, C. M. (2010). The impact of cognitive function on medication management: Three studies. Health Psychology: Official Journal of the Division of Health Psychology, American Psychological Association, 29(1), 50–55. https://doi.org/10.1037/a0016940

- Suchy, Y., Ziemnik, R. E., Niermeyer, M. A., & Brothers, S. L. (2020). Executive functioning interacts with complexity of daily life in predicting daily medication management among older adults. Clinical Neuropsychologist, 34(4), 797–825. https://doi.org/10.1080/13854046.2019.1694702

- Teng, E. L., & Manly, J. J. (2005). Neuropsychological testing: Helpful or harmful? Alzheimer Disease and Associated Disorders, 19(4), 267–271. https://doi.org/10.1097/01.wad.0000190805.13126.8e

- Thompson, F., Cysique, L. A., Harriss, L. R., Taylor, S., Savage, G., Maruff, P., & McDermott, R. (2020). Acceptability and usability of computerized cognitive assessment among Australian Indigenous residents of the Torres Strait Islands. Archives of Clinical Neuropsychology, 35(8), 1288–1302. https://doi.org/10.1093/arclin/acaa037

- Thorndike, E. L. (1919). A standardized group examination of intelligence independent of language. Journal of Applied Psychology, 3(1), 13–32. https://doi.org/10.1037/h0070037

- Tung, L. C., Yu, W. H., Lin, G. H., Yu, T. Y., Wu, C. T., Tsai, C. Y., Chou, W., Chen, M. H., & Hsieh, C. L. (2016). Development of a tablet-based symbol digit modalities test for reliably assessing information processing speed in patients with stroke. Disability and Rehabilitation, 38(19), 1952–1960. https://doi.org/10.3109/09638288.2015.1111438

- Wechsler, D. (2008). Wechsler adult intelligence scale (4th ed.). Pearson.

- Wilson, B. A., Cockburn, J., & Baddeley, A. (1985). The Rivermead Behavioural Memory Test (RBMT). Thames Valley Test Company.

- Zedler, B. K., Joyce, A., Murrelle, L., Kakad, P., & Harpe, S. E. (2011). A Pharmacoepidemiologic analysis of the impact of calendar packaging on adherence to self-administered medications for long-term use. Clinical Therapeutics, 33(5), 581–597. https://doi.org/10.1016/j.clinthera.2011.04.020