ABSTRACT

The use of multi-modal approaches, particularly in conjunction with multivariate analytic techniques, can enrich models of cognition, brain function, and how they change with age. Recently, multivariate approaches have been applied to the study of eye movements in a manner akin to that of neural activity (i.e., pattern similarity). Here, we review the literature regarding multi-modal and/or multivariate approaches, with specific reference to the use of eyetracking to characterize age-related changes in memory. By applying multi-modal and multivariate approaches to the study of aging, research has shown that aging is characterized by moment-to-moment alterations in the amount and pattern of visual exploration, and by extension, alterations in the activity and function of the hippocampus and broader medial temporal lobe (MTL). These methodological advances suggest that age-related declines in the integrity of the memory system have consequences for oculomotor behavior in the moment, in a reciprocal fashion. Age-related changes in hippocampal and MTL structure and function may lead to an increase in, and change in the patterns of, visual exploration in an effort to upregulate the encoding of information. However, such visual exploration patterns may be non-optimal and actually reduce the amount and/or type of incoming information that is bound into a lasting memory representation. This research indicates that age-related cognitive impairments are considerably broader in scope than previously realized.

Overview

Multi-modal experimental approaches that include the simultaneous collection of neural activity and behavioral metrics provide a more comprehensive understanding of how the brain supports cognition than the collection of either metric alone. Classic examples of combining the analysis of neural activity with behavioral responses can be found in early neuroimaging studies of subsequent memory. Using event-related fMRI, as opposed to the prevailing blocked designs of the time, Wagner et al. (Citation1998) and Brewer et al. (Citation1998) sorted trials of neural activity at encoding based on whether the participants’ subsequent recognition memory response was correct or incorrect, akin to methods that had already been in use in the ERP literature (Paller et al., Citation1987). Analyses of these newly sorted trials demonstrated activity in medial temporal and frontal regions that predicted whether the encoded stimuli (words: Wagner et al., Citation1998; pictures: Brewer et al., Citation1998) would be later remembered or forgotten. Studies such as these from Wagner, Brewer, and colleagues ushered in a new era of cognitive neuroscience that would use event-related fMRI to directly tie neural activity to behavioral and cognitive outcomes (Rosen et al., Citation1998), and more specifically, to provide a deeper understanding of how the brain supports memory (Wagner et al., Citation1999).

As the methods for neuroimaging data collection became more sophisticated and allowed for more flexibility in the questions that could be asked and answered, so too did the analysis of such data. Building on univariate analyses for neuroimaging that provided an indication of the level of neural activity within individual regions during a specified time period, multivariate analysis approaches provided a broader understanding of dynamic patterns of neural activity over space and time (Haxby et al., Citation2014; Haynes & Rees, Citation2006; Kriegeskorte et al., Citation2006; McIntosh & Mišić, Citation2013; Sui et al., Citation2012). When applied to behavior, multivariate analyses have similar advantages. Relying on a single metric of behavior may be uninformative or may be misinterpreted outside of its broader context, thereby providing limited insight into cognition. Instead, multivariate analyses can reveal a dynamic pattern of behavior, and can illuminate the nature of the representations that are being formed or used (Chadjikyprianou et al., Citation2021; Kwak et al., Citation2021). Here, we review the added benefits of using multivariate approaches to examine dynamic patterns of behavior, with a specific focus on the analysis of eye movements.

Eyetracking is a behavioral method that is particularly well-suited for the application of multivariate analyses. Movements of the eyes (i.e., saccades) can be initiated within 100 ms; likewise, gaze fixations – where the eyes stop on the visual world – typically last around 250–400 ms, depending on the task that is provided to the viewer, and the nature of the information that is being viewed (Henderson et al., Citation2013). Eye movements (specifically, here, we refer to the alternating sequence of saccades and gaze fixations) differ from other measures of behavior, such as button presses or verbal reports, which reflect a response that occurs only at a single point in time, following the culmination of multiple cognitive operations (Ryan & Shen, Citation2020). Thus, eyetracking provides a rich dataset that includes information regarding space (where the eyes land, as well as the distance and the angle of the vectors taken by the eyes to sample visual information) across time (when, during viewing, a specific region is interrogated by the eyes, for how long, and/or the temporal order by which information is sampled). Eyetracking therefore provides two advantages in the study of behavior and cognition. First, eyetracking provides insight into the nature of the information that is being attended to, encoded, and retrieved, in an online fashion (Ryan & Shen, Citation2020). Second, given the fine temporal resolution of eyetracking recordings, the multivariate analysis of eye movements may be akin to that of neural activity, as one can examine how spatial patterns may unfold and change across time. In this review, through a discussion of multi-modal approaches and multivariate analyses that include eyetracking as a measure of behavior alongside the recording of neural activity, we reveal how these methodological advances have contributed to our understanding of how memory changes with aging.

There is a long history of research that has used eyetracking to study cognitive changes associated with aging. Predominantly, eyetracking studies of aging have used the antisaccade task to document age-related changes in the speed of responding, and in executive functions (Butler et al., Citation1999; Fernandez-Ruiz et al., Citation2018). More recently, eyetracking has been used with older adults to explore age-related changes in memory function. As reviewed in more detail in Ryan et al. (Citation2020), older adults show changes in eye movement behavior that are reflective of underlying changes in memory (Wynn, Amer et al., Citation2020). For instance, older adults, particularly those who are beginning to show cognitive decline as measured by standardized neuropsychological tests, do not show the same levels of preferential viewing for a novel image over one that has been repeatedly viewed as younger adults do (Crutcher et al., Citation2009; Whitehead et al., Citation2018). Likewise, older adults do not show disproportionate viewing of a region of a scene that has changed from a prior viewing (Ryan et al., Citation2007; Yeung et al., Citation2019). Deficits in these viewing effects have been linked to alterations in the structure and function of the hippocampus and entorhinal cortex of the medial temporal lobe (MTL) (Yeung et al., Citation2019).

However, these eyetracking studies have typically used single metrics of viewing behavior (e.g., number of fixations, viewing duration), considered independently, to understand age-related changes in memory function. When multi-modal and multivariate analytic approaches that include eyetracking are applied to the study of aging, we see a deficit that is more pervasive and nuanced than previously realized – moment-to-moment exploration is disrupted in aging, suggesting possible disconnections in the transfer of information between the MTL and the oculomotor system, that both contribute to, and reveal deficits in, memory. Moreover, multivariate analyses of eye movement behavior show changes in the fidelity of the information that is encoded and subsequently retrieved by older adults, thereby expanding our knowledge, and providing increased specificity, regarding the nature of age-related memory deficits. Thus, multivariate analyses of behavior provide (at least) two advantages over traditional, univariate, methods: patterns of behavior may be interrogated dynamically, and the nature of the representation that guides viewing behavior can be revealed.

Multi-modal (eyetracking and neuroimaging) approaches

Research employing multi-modal approaches, specifically, the combination of eyetracking and neuroimaging techniques, has shown that there is a close, reciprocal connection between the oculomotor and hippocampal systems (Ryan et al., Citation2020). Hannula and Ranganath (Citation2009) provided the first combined eyetracking and neuroimaging study that examined the role of the hippocampus in guiding where the eyes would look and when. Specifically, they showed that the level of hippocampal activity during viewing of a scene predicted the extent to which subsequent viewing would be directed at one of three faces that had been previously paired with that scene, even in the absence of explicit memory for the face-scene pairing. Their work provided a noninvasive exploration of the coupling between neural activity and behavior, and complemented existing neuropsychological research showing that the hippocampus may have a critical role in the expression of relational memory representations via eye movements (Hannula et al., Citation2007; Ryan et al., Citation2000; Smith et al., Citation2006). We have recently provided a detailed review of multi-modal eyetracking-neuroimaging research focused on memory function that has emerged since the time of Hannula and Ranganath (Citation2009) in Ryan et al. (Citation2020). Therefore, here, we highlight findings that have been published since that time and that use a combined eyetracking-neuroimaging multi-modal approach to further add to our understanding of oculomotor-memory interactions.

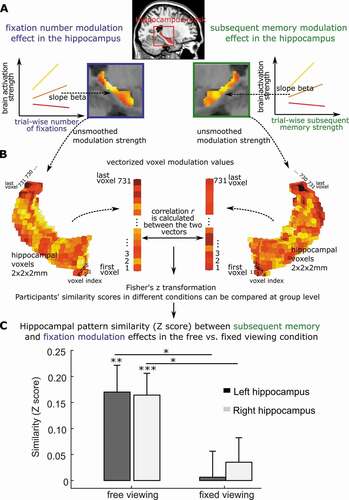

Previously, Liu et al. (Citation2017, Citation2020) showed that the number of gaze fixations made by participants during viewing was positively associated with neural activity, on a trial-wise basis, in the ventral visual stream, and in regions of the medial temporal lobe, including the hippocampus. By examining the overlap between the pattern of neural activity that was modulated by gaze fixations and the pattern of neural activity that was modulated by subsequent recognition memory performance, Liu et al. (Citation2020) showed that there were voxels common to both patterns (Figure 1). The overlap in modulatory activity suggests that gaze fixations contribute information to lasting mnemonic representations, and perhaps reflects information inherent to eye movements regarding the spatial and temporal arrangements of distinct elements.

Figure 1. Brain modulation pattern similarity between eye movements and memory. a. Hippocampal activation can be predicted (or modulated) by both trial-wise number of fixations and subsequent memory strength. The color coding reflects the modulation strength (i.e., prediction slope) by fixations or memory, with warmer (i.e., toward yellow) colors indicating stronger modulation (i.e., larger prediction slopes). A stronger modulation indicates more sensitivity to trial-wise number of fixations (left) or trial-wise subsequent memory strength (right). b. To quantify the similarity of the hippocampal cross-voxel modulation patterns by visual exploration (i.e., the trial-wise number of fixations) and by trial-wise subsequent memory, for each participant, the unsmoothed cross-voxel modulation values were first vectorized for both fixation and memory. Then the Pearson correlation coefficient (r) (or another vector similarity measure) was calculated and Fisher’s Z transformed. Individuals’ hippocampal modulation similarity by memory and fixations (i.e., Fisher’s Z scores) can be compared between conditions across participants. c. Using this procedure, Liu et al. (Citation2020) found similar hippocampal cross-voxel modulation patterns by trial-wise number of fixations and subsequent memory strength, but only when participants were allowed to freely explore the visual stimuli. The modulation similarity was not evident when participants restricted their eye movements. Panels a and c are adapted from Liu et al. (Citation2020).
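The procedure summarized in Figure 1b can be illustrated with a minimal sketch. It assumes that the "modulation strength" of each voxel is approximated by an ordinary least-squares slope of trial-wise activity on a single predictor, which is a simplification of a full parametric modulation model and not necessarily the pipeline used by Liu et al. The function names, array shapes, and synthetic data below are hypothetical.

```python
import numpy as np

def voxel_slopes(activity, predictor):
    """Least-squares slope of each voxel's trial-wise activity on one predictor.

    activity: array of shape (n_trials, n_voxels)
    predictor: array of shape (n_trials,)
    """
    x = predictor - predictor.mean()
    y = activity - activity.mean(axis=0)
    return x @ y / (x @ x)

def modulation_similarity(activity, n_fixations, memory_strength):
    """Fisher-Z-transformed Pearson r between the two cross-voxel slope patterns."""
    fixation_pattern = voxel_slopes(activity, n_fixations)
    memory_pattern = voxel_slopes(activity, memory_strength)
    r = np.corrcoef(fixation_pattern, memory_pattern)[0, 1]
    return np.arctanh(r)  # Fisher's Z transform

# Synthetic, purely illustrative data for one hypothetical participant.
rng = np.random.default_rng(0)
n_trials, n_voxels = 120, 200
n_fixations = rng.poisson(8, n_trials).astype(float)
memory_strength = 0.5 * n_fixations + rng.normal(0, 2, n_trials)
voxel_gain = rng.normal(0, 1, n_voxels)
activity = np.outer(n_fixations, voxel_gain) + rng.normal(0, 1, (n_trials, n_voxels))

z = modulation_similarity(activity, n_fixations, memory_strength)
```

One such Z score per participant and condition (e.g., free versus restricted viewing) could then be entered into a standard paired comparison across participants.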

Liu et al. (Citation2017, Citation2020) examined the association between gaze fixations and neural activity, irrespective of where the gaze fixation landed on the image. Recently, Henderson et al. (Citation2020) expanded on this work by investigating the neural activity that was modulated by the specific type of information that was extracted at the site of each gaze fixation. That is, using fMRI, Henderson et al. (Citation2020) examined the neural activity that was associated with gaze fixations that were directed to areas that had high or low levels of bottom-up visual content (e.g., edge density), and the neural activity that was associated with gaze fixations that were directed to areas that had high or low levels of top-down semantic content. Levels of semantic content were defined by a separate group of viewers who rated the informativeness and recognizability of scene patches. Henderson et al. (Citation2020) found that fixations to regions high in edge density information primarily activated occipital regions, whereas fixations to more semantically informative scene regions elicited activation along the ventral visual stream into the parahippocampal gyrus. The findings from this work were consistent with the interpretations previously set forth by Liu and colleagues; namely, that gaze fixations modulate the hippocampus and extended MTL system through the extraction of higher-level visual information that is ultimately bound into memory.

Recent work has provided further converging evidence that informational content may be driving the relationship between gaze fixations and neural activity in the MTL (see also Wynn, Liu et al., Citation2021), and with subsequent memory. Using parametric modulation analysis, Fehlmann et al. (Citation2020) showed that the number of gaze fixations made to scenes was positively correlated with free recall performance, and was associated with neural activity in visual processing regions, as well as in the MTL, specifically in the parahippocampus. This cluster of fixation-related activity in the parahippocampus was also positively related to subsequent free recall performance. A follow-up experiment found that, through guided viewing, increasing the number of gaze fixations that were specifically directed to informative regions of an image improved later memory performance, whereas guiding viewing to non-informative regions (i.e., regions that were generally not viewed by participants in the first study) decreased subsequent memory performance. Thus, eye movements serve to gather information from the visual world, modulate activity in the MTL, and support subsequent memory. Indeed, Liu et al. (Citation2020) showed that when participants were forced to maintain viewing on a static fixation cross (as opposed to free viewing), neural activity all along the ventral visual stream and into the hippocampus, and functional connectivity among the hippocampus, parahippocampal place area and other cortical regions, were decreased. Restricted viewing was also associated with decreases in later recognition memory. Finally, whereas there was considerable overlap in the neural activity patterns associated with gaze fixations and with subsequent memory during free viewing, this overlap decreased under restricted viewing. Such findings point to the intrinsic link between the oculomotor and extended hippocampal memory system at the level of behavior (i.e., subsequent memory) and at the level of neural activity (Figure 1).

Recent work has also examined how eye movements relate to activity across large-scale brain networks. Koba et al. (Citation2021) similarly went beyond examination of activity in a single neural region to demonstrate how eye movements made under differing fixation instructions influenced the topography and topology of resting-state functional brain networks. Removing eye movement-related neural activity reduced connectivity between functional networks, and increased modularity within networks. Therefore, eye movements may be functional for coordinating activity across functional networks.

Using simultaneous eyetracking and intracranial EEG, Kragel et al. (Citation2021) recorded eye movements and hippocampal theta oscillations as neurosurgical patients completed a spatial memory task in which they had to learn the unique spatial locations of a sequence of objects, and subsequently discern whether an object had been moved to a new location. Examination of the theta phase distributions showed that phase-locking prior to fixation on an object’s original location was associated with the peak of theta, whereas phase-locking following fixations to the novel, updated, location was associated with the trough of theta. The authors suggested that the peak of theta may reflect retrieval processes, such that the previously viewed location for the object had been recalled and then fixated by the eyes. By contrast, the trough of theta phase that followed gaze fixations to the updated locations may be associated with memory encoding. These new findings provide additional support for the coupling of gaze fixations and phase-locking of theta oscillations in the hippocampus (Hoffman et al., Citation2013). Kragel et al. (Citation2021) add to a growing literature on active vision and memory by demonstrating a possible alternation between encoding and retrieval processes that are enacted on a fixation-by-fixation basis, and that may be mediated, at least in part, via theta oscillations.

A related finding, also using intracranial recordings, has provided evidence for saccade phase resetting in the anterior nuclei of the thalamus during active viewing (Leszczynski et al., Citation2020). The thalamus, as part of the extended hippocampal system, is reciprocally connected with the subiculum (Christiansen et al., Citation2016; Frost et al., Citation2021), and receives inputs from the mammillary bodies (Dillingham et al., Citation2015). Thus, the anterior nuclei of the thalamus may be well-situated to provide ongoing modulation of neural activity in the broader MTL via eye movements. Other intracranial EEG and eyetracking work suggests that eye movements may create a type of corollary discharge that is observed within hippocampal activity (Katz et al., Citation2020), suggesting that the motor pattern itself may become part of the mnemonic representation that is built by the hippocampus and MTL (see also Wynn, Liu et al., Citation2021).

Altogether, data from the aforementioned combined eyetracking-neuroimaging studies have demonstrated that gaze behavior modulates oscillatory and neural activity in the brain, perhaps in service of ongoing encoding and retrieval operations. Saccades increase phase-locking of oscillations, and gaze fixations are associated with increased BOLD activity in the hippocampus. Gaze fixations also modulate functional connectivity with the hippocampus, and broader MTL, as well as between broader functional networks. The influence of eye movements on the hippocampal system has downstream consequences for memory, such that alterations in naturalistic eye movement behavior (e.g., restriction of visual exploration) can negatively impact recognition (Liu et al., Citation2020). Multi-modal studies that consider behavior alongside neuroimaging therefore provide a broader understanding of how effector systems may have a reciprocal relationship with neural function. That is, behavioral responses, including the movements of the eyes, may not simply be a passive reflection of the output of neural processing, but rather, may be particularly well-suited to drive or otherwise coordinate neural responses (for further discussion, see Ryan & Shen, Citation2020).

Multi-modal (eyetracking and neuroimaging) approaches as applied to the study of aging

Despite the increase in multi-modal approaches that are applied to the study of memory, there is a paucity of research that has applied such tools to the study of memory function in aging. Specifically, similar to eyetracking-only studies of aging, combined eyetracking-neuroimaging studies of aging have primarily focused on performance on the antisaccade task to understand the integrity of executive function (Fernandez-Ruiz et al., Citation2018; Stacey et al., Citation2021). And, to the best of our knowledge, there have been no combined eyetracking-functional neuroimaging studies of memory, or with specific reference to the hippocampus and broader MTL, in aging using either electroencephalography (EEG), magnetoencephalography (MEG) or fMRI since Liu et al. (Citation2018). In that study, Liu et al. (Citation2018) showed that the gaze fixations of older adults did not modulate the hippocampus and extended system. Further, in older adults, gaze fixations were only weakly related to subsequent, indirect expressions of memory, as indexed by neural repetition suppression effects. Multi-modal investigations such as this are important for understanding the myriad ways that hippocampal and MTL dysfunction may manifest in behavior, and for understanding which neural regions may or may not support relatively intact expressions of memory. Moreover, multi-modal investigations may importantly show which behavioral measures could stand as a reliable proxy for neural integrity.

As an example, researchers have combined eyetracking with structural MRI to show that alterations in memory-related viewing behavior are linked to changes in cortical thickness that may be helpful in identifying those who either have, or may be at risk for, clinically significant cognitive decline. Nie et al. (Citation2020) tested healthy older adults and adults with mild cognitive impairment (MCI) on the Visual Paired Comparison (VPC) task. The VPC is an eyetracking task that has been widely used for decades with humans and non-human animals alike (Fagan, Citation1970; Fantz, Citation1964; Manns et al., Citation2000; S M Zola et al., Citation2000) as a means to indirectly assess memory. On the VPC task, participants view a set of stimuli repeatedly, and subsequently, a repeated image is presented alongside a novel image. Intact memory function is inferred through findings of preferential viewing for the novel, as opposed to the repeated, stimulus. Typically, older adults and individuals with hippocampal compromise show lower levels of preferential viewing compared to younger adults, and control participants, respectively (Crutcher et al., Citation2009; M. Munoz et al., Citation2011; Pascalis et al., Citation2004; Whitehead et al., Citation2018; Zola et al., Citation2013). Nie and colleagues (Nie et al., Citation2020) added to this literature by demonstrating that MCI cases had lower preferential viewing scores than the healthy older adults, and that preferential viewing scores distinguished whether an individual was a healthy older adult or an individual with MCI. Preferential viewing scores were significantly related to cortical thickness in the right hemisphere in regions of the temporal pole, superior frontal lobe, rostral anterior cingulate, and precuneus.

Although research using combined eyetracking and neuroimaging approaches to understand the mechanisms underlying age-related changes in memory has been minimal to date, current evidence suggests there is a disruption in the transfer of information between the oculomotor and the hippocampal memory systems. However, older adults may not necessarily show atrophy or altered function in just a single neural region, or even within a single system of regions. Rather, neuroimaging research has shown that age-related changes in functional connectivity may emerge in parallel with age-related changes in structural connectivity across multiple neural regions and/or networks (Zimmermann et al., Citation2016). Likewise, dysfunction in a limited set of neural regions may result in the over-recruitment of other neural regions (Samson & Barnes, Citation2013), perhaps in a compensatory fashion (Grady et al., Citation2005), or may result in an altered network topology that could either be more integrated or segregated (Henson et al., Citation2016; Yuan et al., Citation2017). Aging has been associated with a multitude of cognitive changes that go beyond long-term memory, including speed of processing, attention, working memory, and other executive functions (Park & Bischof, Citation2013; Park et al., Citation2002). Moreover, the changes that are observed in aging may not necessarily be correlated with one another; differences in brain structure and function, and in cognition, may emerge at different timepoints in the aging trajectory, and subsequently decline at different rates (Park et al., Citation2002). Thus, to provide a comprehensive study of aging, one that considers the complex patterns of brain structure, function, and dysfunction, as well as their association with cognition and patterns of behavior, it is helpful to turn to multivariate approaches for the analysis of aging-related data.

Multivariate approaches for neuroimaging and eye movement data

Recently, multivariate approaches have been applied to the study of eye movements in a manner akin to that of neural activity (i.e., pattern similarity). The advantage of using multivariate approaches to the study of eye movements is similar to that of neural activity; namely, that broader patterns in the data may be derived that include information about correlated metrics. Additionally, an important advantage of applying multivariate approaches to eyetracking data is that the method allows researchers to make broader inferences about the nature of the information that is contained within the pattern; specifically, the patterns may be suggestive of the cognitive processing that is being enacted by the viewer and/or the type of content that is being encoded into, or retrieved from, memory.

As an example, multivariate eye movement analyses have been used to address the question of whether mental states can be decoded from patterns and metrics of eye movements. Classic work from Yarbus (Citation1967) showed that as the top-down goals of the viewer changed, so too did the viewer’s eye movements, despite the same image being viewed, suggesting that differing mental states or goals may be revealed through eye movement behavior. Recent studies suggest that mental states can indeed be decoded from a viewer’s eye movements, under certain conditions. Specifically, as reviewed by Borji and Itti (Citation2014), classification accuracy may vary depending on the classifier method that is used as well as how separable the different cognitive tasks are, whether the stimulus set that is used has the requisite information for the task, whether images are treated separately or as a set, and whether the viewers understand the task that is presented. On the first point, classification performance may be enhanced when information regarding the spatial distribution or location of the eye movements is considered alongside the frequency or duration of the movements (Borji & Itti, Citation2014).

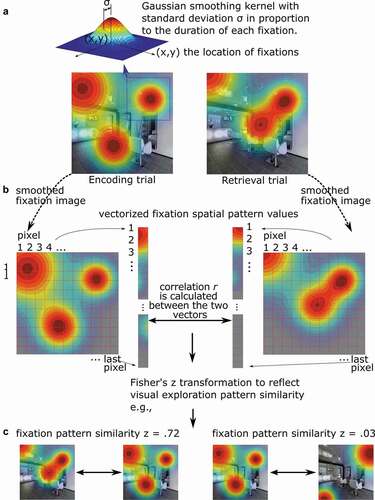

Beyond classifying the cognitive task that is presented to the viewers, multivariate analyses have been used to compare the similarity in the pattern of eye movements across phases of memory experiments (Figure 2). Specifically, as first outlined in Scanpath Theory by Noton and Stark (Citation1971), researchers have asked whether the scanpaths (i.e., gaze patterns) enacted during encoding are recapitulated during a subsequent retrieval phase, and whether the reinstatement of such eye movement patterns is related to memory performance. To quantify the spatial overlap between corresponding encoding and retrieval scanpaths, Wynn, Ryan, et al. (Citation2020) generated density maps from the aggregated fixations of each participant viewing a particular stimulus during encoding and subsequently retrieving that same stimulus when presented with a brief cue (Figure 2). Corresponding (matching image) encoding and retrieval scanpaths were correlated, as were non-corresponding (mismatching image) scanpaths, such that above chance similarity was quantified as match similarity that was greater than mismatch similarity. Lending support to Scanpath Theory, they found that during retrieval, gaze scanpaths reinstated the fixations executed during encoding of the same image, and did so more than chance. Moreover, such reinstatement was associated with both correct recognition of difficult old images and false alarms to lure images, suggesting that gaze reinstatement supports the reactivation of stored memory representations. As described in a recent review (Wynn et al., Citation2019), gaze reinstatement allows viewers to broadly recapitulate the spatiotemporal context of the prior encoding episode, which may allow for further, associated, details to be retrieved from memory. When task demands exceed memory capacity, gaze reinstatement may be obligatorily recruited in order to support task performance (Wynn et al., Citation2019). As such, the extent of the recapitulation has been shown to be positively associated with the accuracy of memory retrieval across a range of tasks (Johansson et al., Citation2021; Wynn, Liu et al., Citation2021; Wynn et al., Citation2018). Just as eye movements during encoding may be guided by visual salience, prior semantic knowledge, or memories that are more episodic in nature, gaze reinstatement may reflect the influence of multiple factors and types of knowledge (Ramey et al., Citation2020; see also Wynn, Buchsbaum et al., Citation2021).
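The match-versus-mismatch logic described above can be sketched as follows. This is a minimal illustration, assuming that pairwise similarity values between encoding and retrieval scanpaths have already been computed (by whatever similarity measure is preferred) and arranged in a square matrix; the function name and the example values are hypothetical and are not taken from any of the cited studies.

```python
import numpy as np

def reinstatement_score(similarity):
    """Mean match similarity minus mean mismatch similarity.

    similarity[i, j] is the similarity between the encoding scanpath for
    image i and the retrieval scanpath for image j, so the diagonal holds
    matching-image pairs and the off-diagonal holds mismatching-image pairs.
    """
    similarity = np.asarray(similarity, dtype=float)
    n = similarity.shape[0]
    match = np.trace(similarity) / n
    mismatch = (similarity.sum() - np.trace(similarity)) / (n * (n - 1))
    return match - mismatch

# Hypothetical similarity values for three images viewed by one participant.
example = np.array([
    [0.70, 0.10, 0.05],
    [0.15, 0.60, 0.10],
    [0.05, 0.20, 0.65],
])
score = reinstatement_score(example)
```

A score above zero indicates that retrieval scanpaths resemble the encoding scanpath for the same image more than they resemble encoding scanpaths for other images, which controls for generic viewing biases such as a tendency to fixate the center of the screen.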

Figure 2. Fixation pattern similarity analyses. a. Each fixation on a visual stimulus is first spatially smoothed using a Gaussian kernel, with the standard deviation σ (or the amplitude d) in proportion to the duration of the fixation. Then the viewing pattern images from all fixations are aggregated according to their locations. The two hypothetical examples depicted are viewing patterns for the same image during an initial encoding task and a subsequent memory test. b. The smoothed viewing pattern images are then vectorized, i.e., values at all pixels are aligned in a certain order in one column. Then the Pearson correlation coefficient (r) (or another vector similarity measure) is calculated and Fisher’s Z transformed to measure the similarity of the two viewing patterns. The similarity depicted here may reflect encoding-retrieval gaze reinstatement. c. As examples, the two viewing patterns on the left cover similar regions of the image and have high similarity, Z = .72, and the two on the right do not cover similar regions and have low similarity, Z = .03.
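The steps in Figure 2 can be sketched in a few lines. The example below is illustrative only: it assumes a fixed kernel width with the amplitude scaled by fixation duration (one of the two options named in the caption), and the kernel width, image size, and fixation coordinates are hypothetical. The correlation is clipped slightly below 1 so that the Fisher transform stays finite for identical maps, which is a pragmatic choice rather than part of the published procedure.

```python
import numpy as np

def density_map(fixations, shape, sigma=20.0):
    """Aggregate fixations into a smoothed viewing-pattern image.

    fixations: iterable of (x, y, duration) with x, y in pixels.
    shape: (height, width) of the stimulus in pixels.
    Each fixation adds a Gaussian whose amplitude is its duration.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    image = np.zeros(shape, dtype=float)
    for x, y, duration in fixations:
        squared_distance = (cols - x) ** 2 + (rows - y) ** 2
        image += duration * np.exp(-squared_distance / (2 * sigma ** 2))
    return image

def map_similarity(map_a, map_b):
    """Vectorize both maps, correlate them, and apply Fisher's Z transform."""
    r = np.corrcoef(map_a.ravel(), map_b.ravel())[0, 1]
    r = np.clip(r, -0.999999, 0.999999)  # keep arctanh finite
    return np.arctanh(r)

# Hypothetical fixations (x, y, duration in ms) on a 160 x 120 pixel image.
shape = (120, 160)
encoding = density_map([(40, 30, 300), (100, 80, 250)], shape)
retrieval_similar = density_map([(45, 35, 280), (95, 75, 260)], shape)
retrieval_different = density_map([(140, 100, 300), (20, 100, 250)], shape)

z_similar = map_similarity(encoding, retrieval_similar)
z_different = map_similarity(encoding, retrieval_different)
```

In this toy case the retrieval pattern that revisits roughly the same regions yields the larger Z value, mirroring the contrast shown in panel c.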

Gaze reinstatement may contribute to memory errors if an erroneous scanpath is elicited in response to a similar stimulus or event as one that had been maintained in memory (Klein Selle et al., Citation2021; Wynn, Ryan et al., Citation2020). Moreover, subsequent memory performance can suffer if the initial scanpath is low in distinctiveness. That is, if the scanpath created during encoding for a given stimulus or event has considerable overlap with a scanpath created for a different stimulus or event, then the reproduction of one of the scanpaths may result in the retrieval of multiple memory representations that interfere with one another and lead to erroneous memory performance (Klein Selle et al., Citation2021; Wynn, Buchsbaum et al., Citation2021). Individuals who form more distinct representations also show later memory advantages (Wynn, Buchsbaum et al., Citation2021). Thus, multivariate examination of the scanpath across time can reveal the nature of the representation that is formed, including the type of content contained therein, and its distinctiveness from other memory representations formed by the same viewer. Such analyses can also highlight the dynamic unfolding or updating of cognitive processes. That is, gaze reinstatement predominantly occurs early during viewing, and declines over time, presumably as retrieval of requisite information has been accomplished, and the viewer can then enact other cognitive processes, such as initiating the relevant decision or response (Wynn, Ryan et al., Citation2020; Wynn et al., Citation2019).

Using combined eyetracking-fMRI, Wynn, Liu et al. (Citation2021) recently examined the neural correlates that predict subsequent gaze reinstatement. Activity in the occipital lobe and in the basal ganglia was positively associated with the amount of gaze reinstatement that was observed at retrieval. Moreover, there was overlap in the pattern of neural activity in the hippocampus that was predictive of subsequent recognition memory and subsequent gaze reinstatement. Together, these results suggest that, consistent with the original formulation of Noton and Stark (Noton & Stark, Citation1971), the scanpath is a sensory-motor effector trace that may become part-and-parcel of a memory representation. Gaze reinstatement thereby serves as a cue to support retrieval of associated details in memory.

Thus, like neuroimaging, eyetracking can provide moment-to-moment information, but with respect to behavior (and underlying cognitive operations), rather than of neural responses. Also, much like multivariate analyses of neural activity, multivariate analyses of eye movements allow for patterns in gaze behavior to be compared across time and space. Further, in the studies noted above, multivariate analyses provided an advantage over standard analyses of single eye movement metrics by revealing the content and quality of the representations that subsequently influence memory performance.

Applying multivariate approaches to eye movement data for the study of aging

Standard, univariate analyses of eye movements have revealed that older adults differ from their younger counterparts on a number of viewing measures. For instance, older adults have demonstrated longer saccade latencies (D.P. Munoz et al., Citation1998) and increased targeting errors (Huddleston et al., Citation2014), and they tend to make more gaze fixations (and, consequently, have shorter gaze durations) than younger adults (Heisz & Ryan, Citation2011; Moghadami et al., Citation2021). However, some eye movement measures may be highly correlated with one another; for instance, during a fixed viewing period, the number of gaze fixations is inversely related to the duration of those fixations. Thus, like neural data, eyetracking data are multidimensional, and in the absence of a multivariate approach, careful consideration must be given to justifying the eye movement metric of interest, lest issues of “cherry-picking” or of multiple comparisons arise.

The application of multivariate analyses of eye movements to the study of age-related changes is only just emerging. However, the research that has been done to date suggests that aging is characterized by alterations in the pattern of visual exploration that reflect underlying changes in the quality of the memory representations that are formed and retrieved, and in how such representations are used to support memory decisions. These changes are thought to reflect age-related alterations in the structure and function of the MTL, and specifically of the hippocampus, as well as a disruption in the information flow between the oculomotor system and the hippocampal memory system. Importantly, research suggests that consideration of multiple metrics of viewing using a multivariate approach may be important for increasing the sensitivity and specificity of detecting impending neurodegeneration (Haque et al., Citation2019; Lagun et al., Citation2011).

Pavisic et al. (Citation2021) considered multiple metrics of viewing behavior using multivariable linear regression to gain insight into the underlying behavioral mechanisms that may explain the visual short-term memory deficits observed in Alzheimer’s disease. Symptomatic and presymptomatic individuals from a familial Alzheimer’s disease cohort were tasked with remembering the locations of visual objects over a short delay. Across all participants, accurate memory for the previously studied location of an object was associated with increased viewing time on that object during encoding, as well as with a more homogeneous distribution of viewing time across all objects within the study display. Compared to control participants, symptomatic individuals showed decreases in time spent fixating the objects, and had a less homogeneous viewing pattern; presymptomatic individuals required more viewing time on the objects compared to the controls to achieve similar accuracy (Pavisic et al., Citation2021). Such findings suggest that encoding processes may be disrupted in individuals who have markers for neurodegeneration, and such deficits may be exacerbated in symptomatic individuals.

We have used Partial Least Squares (PLS) correlation analysis to understand the manner and extent of visual exploration in younger adults, older adults who vary on cognitive status, as measured by the Montreal Cognitive Assessment (MoCA), and in individuals with amnestic mild cognitive impairment (aMCI). PLS correlation is a data-driven analysis approach that is similar to canonical correlation; the goal is to derive linear combinations of two or more groups of variables that maximally relate the groups (Abdi & Williams, Citation2013). PLS analysis results in a series of latent variables (LVs) that express the different relationships between groups. Originally applied to PET imaging data, PLS techniques have been expanded in the past two decades to uncover the spatiotemporal dynamics of neural activity as recorded in fMRI, EEG, or MEG, that relate to different cognitive tasks or behavior (McIntosh & Lobaugh, Citation2004). PLS is also particularly helpful in the analysis of behavioral data, including the metrics derived from eyetracking, as it considers collinearity among the variables (Shen et al., Citation2014). Applying PLS analysis to eye movement data collected during free viewing of scenes for younger adults, older adults, and aMCI cases demonstrated a pattern of viewing that distinguished the groups (Khosla et al., Citation2021). The PLS analysis showed one significant latent variable that, for the younger adults, was expressed as fewer gaze fixations, larger saccade amplitudes, and fewer regions sampled, yet those gaze fixations (and sampled regions) were spread over a greater area of the scene (root mean square distance, or RMSD; Damiano & Walther, Citation2019), with lower entropy (i.e., less randomness) of those eye movements. Amnestic MCI cases expressed the reverse pattern: more gaze fixations, smaller saccade amplitudes, more distinct regions explored, yet a smaller area of the scene was sampled by the eyes (RMSD), with higher entropy. Older adults expressed a similar pattern to that of the aMCI cases (and opposite to that of the younger adults), although not as reliably as the aMCI cases (see also Açik et al., Citation2010).
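
The core computation of PLS correlation, as described in general terms by Abdi and Williams (Citation2013), is a singular value decomposition of the cross-correlation matrix between two sets of variables. The sketch below illustrates that step only; the function name, the variable layout, and the synthetic data are assumptions for the example, and it does not reproduce the specific design used in the studies above. The permutation and bootstrap procedures typically used to assess the significance and reliability of each latent variable are omitted.

```python
import numpy as np

def pls_correlation(X, Y):
    """Minimal PLS correlation sketch.

    X: (n_participants, p) matrix, e.g., eye movement metrics (hypothetical layout).
    Y: (n_participants, q) matrix, e.g., cognitive scores or design variables.
    Returns Y saliences, singular values, X saliences, and the proportion of
    cross-block covariance carried by each latent variable.
    """
    # Standardize each column so that the cross-product is a correlation matrix.
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    Yz = (Y - Y.mean(axis=0)) / Y.std(axis=0, ddof=1)
    R = Yz.T @ Xz / (X.shape[0] - 1)  # (q, p) cross-correlation matrix
    # Each singular triplet defines one latent variable (LV).
    U, s, Vt = np.linalg.svd(R, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return U, s, Vt.T, explained

# Synthetic data only: 60 "participants", 5 viewing metrics, 2 outcome variables.
rng = np.random.default_rng(42)
X = rng.standard_normal((60, 5))
Y = np.column_stack([X[:, 0] - X[:, 1], X[:, 2]]) + 0.5 * rng.standard_normal((60, 2))
U, s, V, explained = pls_correlation(X, Y)
print("Proportion of covariance per LV:", np.round(explained, 2))
print("X saliences for LV1:", np.round(V[:, 0], 2))
```

The X saliences indicate how strongly each metric contributes to a latent variable, which is how a pattern such as "fewer fixations, larger saccade amplitudes" can be read from a single LV.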

Within the older adult group, there was a significant correlation between MoCA scores and the scene exploration metrics. As MoCA scores increased, there was a robust increase in the area of exploration (RMSD) and in entropy. Thus, higher-performing older adults showed a pattern of exploration with respect to entropy that was distinct from that of younger adults, whereas older adults with lower MoCA scores showed a similar pattern of entropy in their viewing to that of younger adults. It may be counterintuitive that higher-performing older adults showed a pattern of viewing that is different from that of younger adults. However, neuroimaging data have consistently shown that higher-performing older adults exhibit distinct patterns of neural engagement compared to younger adults, suggesting that older adults may have adopted different strategies, or use different cognitive operations, in order to circumvent declining abilities (Elshiekh et al., Citation2020; Grady, Citation2000). Thus, altered visual exploration in aging may stem from altered neural engagement.
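
To make the two exploration metrics discussed above concrete, the sketch below computes one plausible version of each. The definitions are assumptions made for illustration and may differ from those used in the cited work: RMSD is taken here as the root mean square distance of fixations from their centroid, and entropy as the Shannon entropy of the distribution of fixations over a coarse spatial grid. The function names and the grid size are hypothetical.

```python
import numpy as np

def rmsd(fix_xy):
    """Root mean square distance of fixations from their centroid (assumed definition)."""
    pts = np.asarray(fix_xy, dtype=float)
    centroid = pts.mean(axis=0)
    return float(np.sqrt(np.mean(np.sum((pts - centroid) ** 2, axis=1))))

def fixation_entropy(fix_xy, width, height, grid=(4, 4)):
    """Shannon entropy (bits) of fixation counts over a spatial grid (assumed definition)."""
    pts = np.asarray(fix_xy, dtype=float)
    counts, _, _ = np.histogram2d(pts[:, 0], pts[:, 1], bins=grid,
                                  range=[[0, width], [0, height]])
    p = counts.ravel() / counts.sum()
    p = p[p > 0]  # empty cells contribute nothing to the entropy
    return float(-(p * np.log2(p)).sum())

# Invented fixation coordinates on a 100 x 100 pixel scene.
clustered = [(10, 10), (12, 11), (11, 13), (9, 12)]
dispersed = [(10, 10), (40, 10), (60, 60), (90, 90)]
for label, fixations in (("clustered", clustered), ("dispersed", dispersed)):
    print(label, round(rmsd(fixations), 1), round(fixation_entropy(fixations, 100, 100), 2))
```

Under these definitions, a viewer who samples many nearby locations can produce a small RMSD together with a large number of fixations, which is why the metrics are considered jointly rather than one at a time.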

A related study (Chan et al., Citation2018) used a hidden Markov modeling approach to characterize two different types of viewing to faces: a “holistic” approach in which eye movements were predominantly centered on the face, and an “analytic” approach in which the eye movements transitioned between the two eyes and the face center. Older adults showed more instances of holistic viewing than younger adults, and holistic viewing was associated with lower recognition performance. Additionally, for the older adults, lower performance on the MoCA was associated with increased incidences of holistic viewing (Chan et al., Citation2018). Presumably, a holistic viewing approach for the older adults in the Chan et al. (Citation2018) study would correspond to a smaller area of visual exploration compared to an analytic viewing approach; if so, that would be consistent with the above-described PLS findings in which older adults explored a smaller area of images compared to younger adults, and those with higher MoCA scores sampled a larger area of the visual scene.

Lower MoCA scores in older adults have been associated with significant volume changes in the entorhinal cortex, and marginal changes in the perirhinal cortex and CA1 subregion of the hippocampus (Olsen et al., Citation2017). This suggests that there are changes in the pattern of viewing of even healthy older adults that either precede impending cognitive decline and/or structural changes in the MTL, or may be invoked as a compensatory shift due to early onset of structural and/or functional changes in the brain. Indeed, functional changes have been observed in the MTL of older adults in the absence of structural changes in region volume (Rondina et al., Citation2016). Given the relationship between visual exploration (i.e., gaze fixations) and functional activity in the hippocampus (Liu et al., Citation2017), increased visual exploration in older adults may reflect a continual effort to encode information into memory, or may be a means by which neural activity in regions of the MTL may be up-regulated (Liu et al., Citation2018).

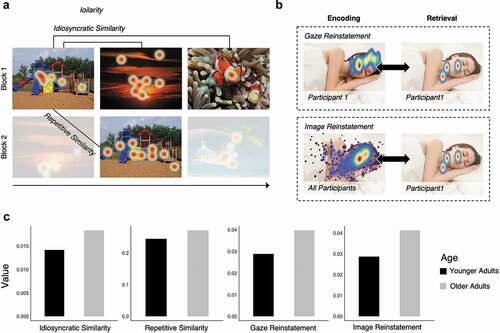

One consequence of viewing the visual world differently from younger adults may be that the information that is extracted is of a different amount or quality from that of younger adults. Alternatively, the altered visual exploration may be an outward manifestation of age-related changes in hippocampally-mediated memory binding abilities. Specifically, the hippocampus has a critical role in binding the relative spatial and temporal relations among elements within a scene or event to form a lasting memory representation (Cohen & Eichenbaum, Citation1993); functional deficits within the hippocampus and the broader MTL may result in increased exploration among objects in an effort to up-regulate the binding process. On either account, one prediction would be that the nature of the information represented by visual exploration differs between older and younger adults. To test this prediction, Wynn and colleagues (Wynn, Buchsbaum et al., Citation2021) examined the extent to which scanpaths that were created during initial viewing of a given image were distinct from those created during repeated viewings of the same image (repetitive similarity) and from those created during the viewing of other images (idiosyncratic similarity) (Figure 3).

Figure 3. Visualization of the eye movement similarity analyses. (a) Idiosyncratic similarity captures the similarity between the eye movements of a single participant viewing different images. Repetitive similarity captures the similarity between the eye movements of a single participant viewing the same image over repetitions. (b) Gaze reinstatement captures the similarity between the eye movements of a single participant encoding and retrieving the same or similar (i.e., lure) image. Image reinstatement captures the similarity between the eye movements of a single participant retrieving an image to a gaze template generated from the eye movements of all participants encoding the same or similar (i.e., lure) image. (c) Age differences in similarity and reinstatement measures; older adults showed significantly higher repetitive similarity and image reinstatement. Idiosyncratic similarity and gaze reinstatement were numerically higher in older versus younger adults, but were not significantly different (figure adapted from data presented in Wynn, Buchsbaum et al., Citation2021).

Specifically, using the same gaze similarity method described previously, gaze density maps were generated for each participant viewing each image and were correlated across repeated presentations of the same image (repetitive similarity) and across distinct images (idiosyncratic similarity). These measures of encoding similarity were used to assess the completeness (i.e., amount of information; repetitive similarity) and the distinctiveness (idiosyncratic similarity) of the memory traces generated for each unique viewing event. Older adults had numerically higher idiosyncratic similarity than younger adults, suggesting that the memory representations for the images were less well differentiated for older compared to younger adults. Likewise, older adults had significantly higher repetitive similarity than younger adults, suggesting that older adults may not have been updating their representations with novel information (i.e., sampling new regions within the image with their eyes) at the same rate as younger adults. These findings suggest that, in addition to being less distinct, the memory representations that were made by older adults may have been lacking in the amount or type of information contained therein. Older adults may re-fixate previously sampled regions in order to re-encode details or to strengthen a weak representation, consistent with the often-reported finding (further replicated in Wynn, Buchsbaum et al., Citation2021) that older adults tend to have a greater amount of visual sampling behavior as indexed by gaze fixations. Other, related, research has shown that individual older adults showed viewing patterns that were similar to the group average (i.e., higher inter-subject correlations) compared to younger adults, at various points during movie watching. Perhaps unsurprisingly, higher levels of similarity with the group average, and therefore less distinctiveness between individuals, were not related to the ability to recall details from memory (Davis & Campbell, Citation2021).
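
The two encoding similarity measures can be sketched as follows for a single participant. This is an illustrative outline under stated assumptions, not the authors' implementation: the dictionary layout, the function names, and the choice to average over all available pairs of maps are hypothetical, and the density maps are random placeholders.

```python
import numpy as np
from itertools import combinations

def fisher_z(map_a, map_b):
    """Fisher Z transformed Pearson correlation between two vectorized maps."""
    r = np.corrcoef(np.ravel(map_a), np.ravel(map_b))[0, 1]
    return float(np.arctanh(np.clip(r, -0.999999, 0.999999)))

def encoding_similarity(maps):
    """maps: {(image_id, repetition): 2D gaze density map} for one participant.

    Returns (repetitive, idiosyncratic): the mean similarity across repetitions
    of the same image, and the mean similarity across different images.
    Assumes at least one pair of each kind is present.
    """
    repetitive, idiosyncratic = [], []
    for (key_a, map_a), (key_b, map_b) in combinations(maps.items(), 2):
        z = fisher_z(map_a, map_b)
        if key_a[0] == key_b[0]:
            repetitive.append(z)      # same image, different repetition
        else:
            idiosyncratic.append(z)   # different images
    return float(np.mean(repetitive)), float(np.mean(idiosyncratic))

# Placeholder maps: two images, two repetitions each, with small repetition noise.
rng = np.random.default_rng(0)
base = {image: rng.random((8, 8)) for image in ("A", "B")}
maps = {(image, rep): base[image] + 0.05 * rng.standard_normal((8, 8))
        for image in ("A", "B") for rep in (1, 2)}
repetitive, idiosyncratic = encoding_similarity(maps)
print(f"repetitive: {repetitive:.2f}, idiosyncratic: {idiosyncratic:.2f}")
```

In this framing, high repetitive similarity means the same regions are revisited across repetitions, and high idiosyncratic similarity means a viewer's maps for different images resemble one another.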

A question, then, is whether the scanpaths that are made by older adults are functional for memory retrieval, as they are for younger adults. However, although gaze reinstatement positively supports memory performance, scanpaths that are less distinct, and/or contain lower-quality information (either in content or strength), may ultimately result, in part, in a failure to subsequently retrieve the appropriate information from memory, thereby increasing memory errors (Klein Selle et al., Citation2021; Wynn, Ryan et al., Citation2020). The scanpath may be an element that is bound within the hippocampal memory representation (Wynn, Liu et al., Citation2021). Due to the flexible nature of memory, the relations among elements within the hippocampal representation may be strengthened, updated, or even recombined through experience. When a scanpath is subsequently retrieved, it may facilitate accurate retrieval of additional, associated details (Wynn et al., Citation2019). Alternatively, an incorrect, but similar, scanpath may be retrieved, which may result in the subsequent erroneous retrieval of associated details.

Wynn and colleagues examined the nature and function of gaze reinstatement in older adults; specifically, the amount of gaze reinstatement, the relationship between gaze reinstatement and successful memory performance, and also whether inappropriate gaze reinstatement explained an increase in false alarms that is often observed in older adults on recognition tasks (Stark et al., Citation2013; Toner et al., Citation2013). First, using a change detection task, Wynn et al. (Citation2018) showed that older adults were just as accurate as younger adults at detecting a change in the relative spatial locations among objects after a variable (750 to 6000 msec) delay, but older adults showed higher levels of gaze reinstatement during the delay interval, prior to making the recognition judgment (for a related finding, see Wynn et al., Citation2016). Further, the amount of reinstatement of encoding-related eye movements during the delay phase was positively associated with change detection performance across both shorter and longer delays for older adults. This was in contrast to the relationship that was observed for younger adults, for whom the positive relationship between gaze reinstatement and change detection performance was only seen at the longer delays (see also Olsen et al., Citation2014). These findings suggest that older adults may need more visual input to facilitate recognition via relational comparison processes (Wynn et al., Citation2016). Moreover, older adults may invoke gaze reinstatement in service of memory performance at lower levels of cognitive demand than younger adults, or when the memory demands of the task exceed available cognitive resources, consistent with findings of neural compensation (Grady, Citation2012; Wynn et al., Citation2019).

A subsequent study used an image recognition memory task (Wynn, Buchsbaum et al., Citation2021) in which the retrieval probes varied in level of completeness (i.e., 0–80% degradation) or duration (250, 500, or 750 ms), to determine whether gaze reinstatement, or even merely reinstatement by the eyes of salient image features, may contribute to memory errors that are made by older adults. During recognition, older adults typically false alarm to lures that are highly similar to previously studied images at a higher rate than what has been observed for younger adults (Stark et al., Citation2013; Toner et al., 2013). This recognition deficit has been thought to be due to a bias in pattern completion processes, in which retrieval of a memory occurs in response to a partial cue. Pattern completion is contrasted against pattern separation, a process whereby similar inputs are orthogonalized into distinct representations in memory (Stark et al., Citation2013). The authors reasoned that if older adults use gaze reinstatement to support recognition memory, they may also use gaze reinstatement to support memory retrieval in response to a partial cue, and that such reinstatement may be, in part, the source of memory errors that occur. Alternatively, memory errors may simply arise, not due to the recapitulation of the older adults’ unique scanpaths, but due to a recapitulation by the eyes of the generally salient, and similar, image features that were present at encoding. To that end, Wynn et al. calculated two eye-movement based reinstatement measures: gaze reinstatement as previously defined, and image reinstatement, which was the correlation of participant-specific retrieval gaze patterns with image-specific encoding gaze patterns, aggregated across all participants (Figure 3b).
Thus, gaze reinstatement captured both the content of the prior images as well as the scanpath itself (i.e., the specific encoding operations used), whereas image reinstatement captured the replay of image-specific content. During the stimulus-free retrieval interval (following the brief presentation of a degraded test probe), older adults showed similar levels of gaze reinstatement, and higher levels of image reinstatement, as compared to younger adults. In younger adults, false alarms to lure images were predicted by the amount of gaze reinstatement, whereas for older adults, false alarms to lure images were predicted by the amount of image reinstatement. Thus, for younger adults, memory errors are due to the recapitulation of encoded image content as well as the experience of encoding (i.e., gaze reinstatement), but for older adults, retrieval of image content was enough to produce memory errors. These findings add to the growing narrative that scanpaths, much like other effector traces, may be an element that is fed forward and bound into hippocampal representations; however, increasing functional disconnections between the oculomotor system and the hippocampal memory system in older adults may result in an inability to leverage the scanpath (i.e., enact gaze reinstatement) to support retrieval of associated details from memory. Gaze reinstatement may contribute to the rich reexperiencing of memory in younger adults, but not in older adults.
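
The distinction between the two reinstatement measures can be illustrated with a short sketch. The function names and array shapes are hypothetical, the maps are random placeholders, and the image template is assumed here to be a simple mean over all participants' encoding maps; the aggregation used in the original study may differ.

```python
import numpy as np

def fisher_z(map_a, map_b):
    """Fisher Z transformed Pearson correlation between two vectorized maps."""
    r = np.corrcoef(np.ravel(map_a), np.ravel(map_b))[0, 1]
    return float(np.arctanh(np.clip(r, -0.999999, 0.999999)))

def gaze_reinstatement(retrieval_map, own_encoding_map):
    """Similarity of a participant's retrieval map to their own encoding map."""
    return fisher_z(retrieval_map, own_encoding_map)

def image_reinstatement(retrieval_map, encoding_maps_all_participants):
    """Similarity of a participant's retrieval map to an image-specific template.

    The template is the mean encoding map across participants (assumed aggregation).
    """
    template = np.mean(np.stack(encoding_maps_all_participants), axis=0)
    return fisher_z(retrieval_map, template)

# Placeholder maps for one image: one participant of interest plus five others.
rng = np.random.default_rng(1)
own_encoding = rng.random((8, 8))
others_encoding = [rng.random((8, 8)) for _ in range(5)]
retrieval = own_encoding + 0.05 * rng.standard_normal((8, 8))

print("gaze reinstatement: ", round(gaze_reinstatement(retrieval, own_encoding), 2))
print("image reinstatement:", round(image_reinstatement(retrieval, [own_encoding] + others_encoding), 2))
```

Because the template pools across viewers, it retains what is common to the image and averages out each viewer's particular scanpath, which is why the two measures can dissociate.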

Altogether, studies that use multivariate approaches for the analysis of eye movement behavior show that aging, and increasing MTL dysfunction (such as in cases of aMCI), are characterized by moment-to-moment changes in the nature and extent of visual exploration, and ultimately, in the information used to support memory performance. Critically, however, absent such multivariate investigations, our understanding of age-related cognitive deficits would have remained limited. That is, by only using univariate analyses, we may have simply concluded that the deficit in aging is one of a failure to encode (e.g., not look at) and/or retrieve information (Ryan et al., Citation2007; Taconnat et al., Citation2020; Yeung et al., Citation2019). However, the above reviewed evidence points to a more nuanced depiction of aging: the nature of the representations that are encoded and subsequently retrieved by older adults may not necessarily contain the same type or fidelity of information as that of younger adults.

Moreover, absent multi-modal and multivariate approaches, our understanding of the role of the hippocampus and broader MTL in visual exploration would have also remained limited. Beyond adding to the growing amount of evidence that the functions of the MTL guide viewing behavior online (Ryan et al., Citation2020), there is now novel evidence that effector traces may be one element that is bound in hippocampally-mediated representations, thereby allowing scanpaths to play a functional role in memory retrieval (Noton & Stark, Citation1971; Wynn et al., Citation2019). This latter finding contributes further to our understanding of memory itself: replay of eye movements may drive the experiential feel of remembering, and due to the flexible nature of memory, may even lead to memory errors (Wynn, Buchsbaum et al., Citation2021).

Remaining questions and future research

Research that employs a multi-modal approach to the study of aging continues to increase; however, the multivariate analyses of behavior, and specifically of eye movements, are emerging, and to date, have only rarely been applied to the study of aging. The field of aging research would benefit from increased incidences of combined multi-modal and multivariate approaches as they can reveal the dynamic unfolding of information that is encoded and subsequently used to support cognitive performance, as well as the underlying neural regions and broader networks that are engaged. In particular, findings from studies that use multi-modal (eyetracking + neuroimaging) and multivariate analysis approaches as applied to the study of aging raise new questions regarding how the nature of the encoding and retrieval processes may differ for older versus younger adults, and how such processing differences impact the resultant representations that are stored in memory. Typically, it has been suggested that age-related changes in visual exploration may simply be due to sensory changes, such as reduced vision or a smaller functional field of view (Scialfa et al., Citation1994; Sekuler et al., Citation2010), or due to changes in the speed of processing, resulting in repetitive or inefficient patterns of viewing behavior (Noiret et al., Citation2017). However, the research reviewed above suggests that age-related changes in visual exploration may go above and beyond mere sensory changes, to reflect ongoing changes in the ability to bind information into lasting mnemonic representations, and to retrieve such representations to support cognition in the moment.

Further work remains to comprehensively understand the changes that occur to the type or amount of information that is contained in mnemonic representations in older compared to younger adults. Research from the developmental literature points to the use of representational similarity analyses within a convolutional neural network model to determine the link between gaze patterns and neural activity; the correspondence between network layers (i.e., those corresponding to lower versus higher-level visual cortex) and gaze patterns allows assumptions to be made regarding the type of information that may be guiding eye movements (in this case, in younger versus older infants) (Kiat et al., Citation2021). A similar approach may prove useful for understanding age-related differences in the neural levels that are engaged to support memory, and consequently, the type of information that is stored. Notably, here, the above reviewed evidence regarding gaze reinstatement in aging suggests that there is an important distinction between whether information is stored, and the type of information that may subsequently be used by older adults to support further visual exploration and cognitive processing. That is, cognitive aging may not always be marked by an absence of stored information, but may also be characterized by an inability to leverage such stored information to support performance. Therefore, research remains to determine what type of mnemonic information can indeed be leveraged, and how it may be accessed, to subsequently support performance. It is perhaps most likely that older adults face a multi-factorial problem that reflects sensory and processing speed changes, as well as structural and functional brain changes that outwardly manifest as changes in visual exploration, thereby impacting the amount and nature of the information that is encoded, as well as the information that can be accessed and/or is prioritized for use. 
In this regard, the use of multi-modal and multivariate approaches in aging research is key to disentangling which age-related deficits may be primary or foundational, and which deficits may be contingent upon, or occur as a consequence of, other cognitive or neural functions that are also disrupted.

Conclusions

By applying multi-modal and multivariate approaches to the study of aging, research has shown that aging is characterized by moment-to-moment alterations in the amount and pattern of visual exploration, and by extension, alterations in the activity and function of the hippocampus and broader MTL. Age-related increases in, or general changes in the patterns of, visual exploration may support the encoding of information, and may contribute to an up-regulation of function within the hippocampus and MTL. However, age-related changes in visual exploration patterns may be non-optimal and may actually reduce the amount and/or type of incoming information, thereby ultimately compromising the contents of the memory representation. Together, methodological advances in multi-modal and multivariate studies suggest that age-related declines in the integrity of the memory system have consequences for oculomotor behavior in the moment, in a reciprocal fashion. Models of cognitive aging may be informed and refined by understanding age-related changes in visual exploration, and in the resultant representations (Wynn, Amer et al., Citation2020), as it is clear from the research reviewed here that age-related cognitive impairments are considerably broader in scope than previously realized. From a more applied standpoint, multivariate analyses of eye movements advance research regarding the potential applications of eyetracking for the diagnosis of neurodegeneration by demonstrating that even in the absence of any explicit memory demands, eye movements during free viewing can be used to identify MTL decline in normal and pathological aging.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Abdi, H., & Williams, L. J. (2013). Partial least squares methods: Partial least squares correlation and partial least square regression. In: Reisfeld B., Mayeno A. (eds) Computational Toxicology. Methods in Molecular Biology (Methods and Protocols), vol 930. Humana Press, Totowa, NJ. https://doi.org/https://doi.org/10.1007/978-1-62703-059-5_23

- Açik, A., Sarwary, A., Schultze-Kraft, R., Onat, S., & König, P. (2010). Developmental changes in natural viewing behavior: Bottomup and top-down differences between children, young adults and older adults. Frontiers in Psychology, 1(NOV), 1–14. https://doi.org/https://doi.org/10.3389/fpsyg.2010.00207

- Borji, A., & Itti, L. (2014). Defending Yarbus: Eye movements reveal observers’ task. Journal of Vision, 14(3), 29–29. https://doi.org/https://doi.org/10.1167/14.3.29

- Brewer, J. B., Zhao, Z., Desmond, J. E., Glover, G. H., & Gabrieli, J. D. E. (1998). Making memories: Brain activity that predicts how well visual experience will be remembered. Science, 281(5380), 1185–1187. https://doi.org/https://doi.org/10.1126/science.281.5380.1185

- Butler, K. M., Zacks, R. T., & Henderson, J. M. (1999). Suppression of reflexive saccades in younger and older adults: Age comparisons on an antisaccade task. Memory & Cognition, 27(4), 584–591. https://doi.org/https://doi.org/10.3758/BF03211552

- Chadjikyprianou, A., Hadjivassiliou, M., Papacostas, S., & Constantinidou, F. (2021). The neurocognitive study for the aging: Longitudinal analysis on the contribution of sex, age, education and APOE ɛ4 on cognitive performance. Frontiers in Genetics, 12:680531. doi: https://doi.org/10.3389/fgene.2021.680531

- Chan, C. Y. H., Chan, A. B., Lee, T. M. C., & Hsiao, J. H. (2018). Eye-movement patterns in face recognition are associated with cognitive decline in older adults. Psychonomic Bulletin and Review, 25(6), 2200–2207. https://doi.org/https://doi.org/10.3758/s13423-017-1419-0

- Christiansen, K., Dillingham, C. M., Wright, N. F., Saunders, R. C., Vann, S. D., Aggleton, J. P., & Barbas, H. (2016). Complementary subicular pathways to the anterior thalamic nuclei and mammillary bodies in the rat and macaque monkey brain. European Journal of Neuroscience, 43(8), 1044–1061. https://doi.org/https://doi.org/10.1111/ejn.13208

- Cohen, N. J., & Eichenbaum, H. (1993). Memory, amnesia, and the hippocampal system. MIT Press.

- Crutcher, M. D., Calhoun-Haney, R., Manzanares, C. M., Lah, J. J., Levey, A. I., & Zola, S. M. (2009). Eye tracking during a visual paired comparison task as a predictor of early dementia. American Journal of Alzheimer’s Disease and Other Dementias, 24(3), 258–266. https://doi.org/https://doi.org/10.1177/1533317509332093

- Damiano, C., & Walther, D. B. (2019). Distinct roles of eye movements during memory encoding and retrieval. Cognition, 184, 119–129. https://doi.org/https://doi.org/10.1016/j.cognition.2018.12.014

- Davis, E.E., Chemnitz, E., Collins, T.K., Geerligs, L., Campbell, K.L. (2021). Looking the same, but remembering differently: Preserved eye-movement synchrony with age during movie watching. Psychology & Aging, 36(5), 604–615. doi: https://doi.org/10.1037/pag0000615

- Dillingham, C. M., Frizzati, A., Nelson, A. J. D., & Vann, S. D. (2015). How do mammillary body inputs contribute to anterior thalamic function? Neuroscience and Biobehavioral Reviews, 54, 108–119. https://doi.org/https://doi.org/10.1016/j.neubiorev.2014.07.025

- Elshiekh, A., Subramaniapillai, S., Rajagopal, S., Pasvanis, S., Ankudowich, E., & Rajah, M. N. (2020). The association between cognitive reserve and performance-related brain activity during episodic encoding and retrieval across the adult lifespan. Cortex, 129, 296–313. https://doi.org/https://doi.org/10.1016/j.cortex.2020.05.003

- Fagan, J. F. (1970). Memory in the infant. Journal of Experimental Child Psychology, 9(2), 217–226. https://doi.org/https://doi.org/10.1016/0022-0965(70)90087-1

- Fantz, R. L. (1964). Visual experience in infants: Decreased attention to familiar patterns relative to novel ones. Science (New York, N.Y.), 146(3644), 668–670. https://doi.org/10.1126/science.146.3644.668

- Fehlmann, B., Coynel, D., Schicktanz, N., Milnik, A., Gschwind, L., Hofmann, P., Papassotiropoulos, A., & de Quervain, D. J.-F. (2020). Visual exploration at higher fixation frequency increases subsequent memory recall. Cerebral Cortex Communications, 1(1). https://doi.org/10.1093/texcom/tgaa032

- Fernandez-Ruiz, J., Peltsch, A., Alahyane, N., Brien, D. C., Coe, B. C., Garcia, A., & Munoz, D. P. (2018). Age related prefrontal compensatory mechanisms for inhibitory control in the antisaccade task. NeuroImage, 165, 92–101. https://doi.org/10.1016/j.neuroimage.2017.10.001

- Frost, B. E., Martin, S. K., Cafalchio, M., Islam, M. N., Aggleton, J. P., & O’Mara, S. M. (2021). Anterior thalamic inputs are required for subiculum spatial coding, with associated consequences for hippocampal spatial memory. The Journal of Neuroscience, 41(30), 6511–6525. https://doi.org/10.1523/JNEUROSCI.2868-20.2021

- Grady, C. L., McIntosh, A. R., & Craik, F. I. M. (2005). Task-related activity in prefrontal cortex and its relation to recognition memory performance in young and old adults. Neuropsychologia, 43(10), 1466–1481. https://doi.org/10.1016/j.neuropsychologia.2004.12.016

- Grady, C. L. (2000). Functional brain imaging and age-related changes in cognition. Biological Psychology, 54(1–3), 259–281. https://doi.org/10.1016/S0301-0511(00)00059-4

- Grady, C. L. (2012). The cognitive neuroscience of ageing. Nature Reviews Neuroscience, 13(7), 491–505. https://doi.org/10.1038/nrn3256

- Hannula, D. E., & Ranganath, C. (2009). The eyes have it: Hippocampal activity predicts expression of memory in eye movements. Neuron, 63(5), 592–599. https://doi.org/10.1016/j.neuron.2009.08.025

- Hannula, D. E., Ryan, J. D., Tranel, D., & Cohen, N. J. (2007). Rapid onset relational memory effects are evident in eye movement behavior, but not in hippocampal amnesia. Journal of Cognitive Neuroscience, 19(10), 1690–1705. https://doi.org/10.1162/jocn.2007.19.10.1690

- Haque, R. U., Manzanares, C. M., Brown, L. N., Pongos, A. L., Lah, J. J., Clifford, G. D., & Levey, A. I. (2019). VisMET: A passive, efficient, and sensitive assessment of visuospatial memory in healthy aging, mild cognitive impairment, and Alzheimer’s disease. Learning & Memory, 26(3), 93–100. https://doi.org/10.1101/lm.048124.118

- Haxby, J. V., Connolly, A. C., & Guntupalli, J. S. (2014). Decoding neural representational spaces using multivariate pattern analysis. Annual Review of Neuroscience, 37(1), 435–456. https://doi.org/10.1146/annurev-neuro-062012-170325

- Haynes, J. D., & Rees, G. (2006). Decoding mental states from brain activity in humans. Nature Reviews Neuroscience, 7(7), 523–534. https://doi.org/10.1038/nrn1931

- Heisz, J. J., & Ryan, J. D. (2011). The effects of prior exposure on face processing in younger and older adults. Frontiers in Aging Neuroscience, 3, 1–6. https://doi.org/10.3389/fnagi.2011.00015

- Henderson, J. M., Goold, J. E., Choi, W., & Hayes, T. R. (2020). Neural correlates of fixated low- and high-level scene properties during active scene viewing. Journal of Cognitive Neuroscience, 32(10), 2013–2023. https://doi.org/10.1162/jocn_a_01599

- Henderson, J. M., Nuthmann, A., & Luke, S. G. (2013). Eye movement control during scene viewing: Immediate effects of scene luminance on fixation durations. Journal of Experimental Psychology: Human Perception and Performance, 39(2), 318–322. https://doi.org/10.1037/a0031224

- Henson, R. N., Greve, A., Cooper, E., Gregori, M., Simons, J. S., Geerligs, L., Erzinçlioğlu, S., Kapur, N., & Browne, G. (2016). The effects of hippocampal lesions on MRI measures of structural and functional connectivity. Hippocampus, 26(11), 1447–1463. https://doi.org/10.1002/hipo.22621

- Hoffman, K. L., Dragan, M. C., Leonard, T. K., Micheli, C., Montefusco-Siegmund, R., & Valiante, T. A. (2013). Saccades during visual exploration align hippocampal 3–8 Hz rhythms in human and non-human primates. Frontiers in Systems Neuroscience, 7, 43. https://doi.org/10.3389/fnsys.2013.00043

- Holden, H. M., Toner, C., Pirogovsky, E., Kirwan, C. B., & Gilbert, P. E. (2013). Visual object pattern separation varies in older adults. Learning & Memory, 20(7), 358–362. https://doi.org/10.1101/lm.030171.112

- Huddleston, W. E., Ernest, B. E., & Keenan, K. G. (2014). Selective age effects on visual attention and motor attention during a cued saccade task. Journal of Ophthalmology, 2014, 1–11. https://doi.org/10.1155/2014/860493

- Johansson, R., Nyström, M., Dewhurst, R., & Johansson, M. (2021). Eye-movement replay supports episodic remembering. ResearchSquare. https://doi.org/10.21203/rs.3.rs-275969/v1

- Katz, C. N., Patel, K., Talakoub, O., Groppe, D., Hoffman, K., & Valiante, T. A. (2020). Differential generation of saccade, fixation, and image-onset event-related potentials in the human mesial temporal lobe. Cerebral Cortex, 30(10), 5502–5516. https://doi.org/10.1093/cercor/bhaa132

- Khosla, A., Kacollja, A., Shahmiri, E., Shen, K., & Ryan, J. D. (2021). Changes in viewing behaviour in healthy aging and amnestic mild cognitive impairment. Journal of Vision, 21(9), 2098. https://doi.org/10.1167/jov.21.9.2098

- Kiat, J. E., Luck, S. J., Beckner, A. G., Hayes, T. R., Pomaranski, K. I., Henderson, J. M., & Oakes, L. M. (2021). Linking patterns of infant eye movements to a neural network model of the ventral stream using representational similarity analysis. Developmental Science, e13155. https://doi.org/10.1111/desc.13155

- Klein Selle, N., Gamer, M., & Pertzov, Y. (2021). Gaze-pattern similarity at encoding may interfere with future memory. Scientific Reports, 11(1), 7697. https://doi.org/10.1038/s41598-021-87258-z

- Koba, C., Notaro, G., Tamm, S., Nilsonne, G., & Hasson, U. (2021). Spontaneous eye movements during eyes-open rest reduce resting-state-network modularity by increasing visual-sensorimotor connectivity. Network Neuroscience, 5(2), 451–476. https://doi.org/10.1162/netn_a_00186

- Kragel, J. E., VanHaerents, S., Templer, J. W., Schuele, S., Rosenow, J. M., Nilakantan, A. S., & Bridge, D. J. (2021). Hippocampal theta coordinates memory processing during visual exploration. eLife, 9. https://doi.org/10.7554/eLife.52108

- Kriegeskorte, N., Goebel, R., & Bandettini, P. (2006). Information-based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America, 103(10), 3863–3868. https://doi.org/10.1073/pnas.0600244103

- Kwak, S., Park, S. M., Jeon, Y.-J., Ko, H., Oh, D. J., & Lee, J.-Y. (2021). Multiple cognitive and behavioral factors link association between brain structure and functional impairment of daily instrumental activities in older adults. Journal of the International Neuropsychological Society, 1–14. https://doi.org/10.1017/S1355617721000916

- Lagun, D., Manzanares, C., Zola, S. M., Buffalo, E. A., & Agichtein, E. (2011). Detecting cognitive impairment by eye movement analysis using automatic classification algorithms. Journal of Neuroscience Methods, 201(1), 196–203. https://doi.org/10.1016/j.jneumeth.2011.06.027

- Leszczynski, M., Staudigl, T., Chaieb, L., Enkirch, S. J., Fell, J., & Schroeder, C. E. (2020). Eye movements modulate neural activity in the human anterior thalamus during visual active sensing. BioRxiv. https://doi.org/10.1101/2020.03.30.015628

- Liu, Z.-X., Rosenbaum, R. S., & Ryan, J. D. (2020). Restricting visual exploration directly impedes neural activity, functional connectivity, and memory. Cerebral Cortex Communications, 1(1), tgaa054. https://doi.org/10.1093/texcom/tgaa054

- Liu, Z.-X., Shen, K., Olsen, R. K., & Ryan, J. D. (2017). Visual sampling predicts hippocampal activity. The Journal of Neuroscience, 37(3), 599–609. https://doi.org/10.1523/JNEUROSCI.2610-16.2016

- Liu, Z.-X., Shen, K., Olsen, R. K., & Ryan, J. D. (2018). Age-related changes in the relationship between visual exploration and hippocampal activity. Neuropsychologia, 119, 81–91. https://doi.org/10.1016/j.neuropsychologia.2018.07.032

- Manns, J. R., Stark, C. E. L., & Squire, L. R. (2000). The visual paired-comparison task as a measure of declarative memory. Proceedings of the National Academy of Sciences of the United States of America, 97(22), 12375–12379. https://doi.org/10.1073/pnas.220398097

- McIntosh, A. R., & Lobaugh, N. J. (2004). Partial least squares analysis of neuroimaging data: Applications and advances. NeuroImage, 23(Suppl. 1), S250–S263. https://doi.org/10.1016/j.neuroimage.2004.07.020

- McIntosh, A. R., & Mišić, B. (2013). Multivariate statistical analyses for neuroimaging data. Annual Review of Psychology, 64(1), 499–525. https://doi.org/10.1146/annurev-psych-113011-143804

- Moghadami, M., Moghimi, S., Moghimi, A., Malekzadeh, G. R., & Fadardi, J. S. (2021). The investigation of simultaneous EEG and eye tracking characteristics during fixation task in mild Alzheimer’s disease. Clinical EEG and Neuroscience, 52(3), 211–220. https://doi.org/10.1177/1550059420932752

- Munoz, D. P., Broughton, J. R., Goldring, J. E., & Armstrong, I. T. (1998). Age-related performance of human subjects on saccadic eye movement tasks. Experimental Brain Research, 121(4), 391–400. https://doi.org/10.1007/s002210050473

- Munoz, M., Chadwick, M., Perez-Hernandez, E., Vargha-Khadem, F., & Mishkin, M. (2011). Novelty preference in patients with developmental amnesia. Hippocampus, 21(12), 1268–1276. https://doi.org/10.1002/hipo.20836

- Nie, J., Qiu, Q., Phillips, M., Sun, L., Yan, F., Lin, X., Xiao, S., & Li, X. (2020). Early diagnosis of mild cognitive impairment based on eye movement parameters in an aging Chinese population. Frontiers in Aging Neuroscience, 12, 221. https://doi.org/10.3389/fnagi.2020.00221

- Noiret, N., Vigneron, B., Diogo, M., Vandel, P., & Laurent, É. (2017). Saccadic eye movements: What do they tell us about aging cognition? Neuropsychology, Development, and Cognition. Section B, Aging, Neuropsychology and Cognition, 24(5), 575–599. https://doi.org/10.1080/13825585.2016.1237613

- Noton, D., & Stark, L. (1971). Scanpaths in eye movements during pattern perception. Science (New York, N.Y.), 171(3968), 308–311. https://doi.org/10.1126/science.171.3968.308

- Olsen, R. K., Chiew, M., Buchsbaum, B. R., & Ryan, J. D. (2014). The relationship between delay period eye movements and visuospatial memory. Journal of Vision, 14(1), 8. https://doi.org/10.1167/14.1.8

- Olsen, R. K., Yeung, L.-K., Noly-Gandon, A., D’Angelo, M. C., Kacollja, A., Smith, V. M., Ryan, J. D., & Barense, M. D. (2017). Human anterolateral entorhinal cortex volumes are associated with cognitive decline in aging prior to clinical diagnosis. Neurobiology of Aging, 57, 195–205. https://doi.org/10.1016/j.neurobiolaging.2017.04.025

- Paller, K. A., Kutas, M., & Mayes, A. R. (1987). Neural correlates of encoding in an incidental learning paradigm. Electroencephalography and Clinical Neurophysiology, 67(4), 360–371. https://doi.org/10.1016/0013-4694(87)90124-6

- Park, D. C., & Bischof, G. N. (2013). The aging mind: Neuroplasticity in response to cognitive training. Dialogues in Clinical Neuroscience, 15(1), 109–119. https://doi.org/10.31887/dcns.2013.15.1/dpark

- Park, D. C., Lautenschlager, G., Hedden, T., Davidson, N. S., Smith, A. D., & Smith, P. K. (2002). Models of visuospatial and verbal memory across the adult life span. Psychology and Aging, 17(2), 299–320. https://doi.org/10.1037/0882-7974.17.2.299

- Pascalis, O., Hunkin, N. M., Holdstock, J. S., Isaac, C. L., & Mayes, A. R. (2004). Visual paired comparison performance is impaired in a patient with selective hippocampal lesions and relatively intact item recognition. Neuropsychologia, 42(10), 1293–1300. https://doi.org/10.1016/j.neuropsychologia.2004.03.005

- Pavisic, I. M., Pertzov, Y., Nicholas, J. M., O’Connor, A., Lu, K., Yong, K. X. X., Husain, M., Fox, N. C., & Crutch, S. J. (2021). Eye-tracking indices of impaired encoding of visual short-term memory in familial Alzheimer’s disease. Scientific Reports, 11(1), 8696. https://doi.org/10.1038/s41598-021-88001-4

- Ramey, M. M., Yonelinas, A. P., & Henderson, J. M. (2020). Why do we retrace our visual steps? Semantic and episodic memory in gaze reinstatement. Learning & Memory, 27(7), 275–283. https://doi.org/10.1101/lm.051227.119

- Rondina, R., Olsen, R. K., McQuiggan, D. A., Fatima, Z., Li, L., Oziel, E., Meltzer, J. A., & Ryan, J. D. (2016). Age-related changes to oscillatory dynamics in hippocampal and neocortical networks. Neurobiology of Learning and Memory, 134(Part A), 15–30. https://doi.org/10.1016/j.nlm.2015.11.017

- Rosen, B. R., Buckner, R. L., & Dale, A. M. (1998). Event-related functional MRI: Past, present, and future. Proceedings of the National Academy of Sciences of the United States of America, 95(3), 773–780. https://doi.org/10.1073/pnas.95.3.773

- Ryan, J. D., Althoff, R. R., Whitlow, S., & Cohen, N. J. (2000). Amnesia is a deficit in relational memory. Psychological Science, 11(6), 454–461. https://doi.org/10.1111/1467-9280.00288