?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

The eigensystem realization algorithm (ERA) is a commonly used data-driven method for system identification and reduced-order modelling of dynamical systems. The main computational difficulty in ERA arises when the system under consideration has a large number of inputs and outputs, requiring to compute a singular value decomposition (SVD) of a large-scale dense Hankel matrix. In this work, we present an algorithm that aims to resolve this computational bottleneck via tangential interpolation. This involves projecting the original impulse response sequence onto suitably chosen directions. The resulting data-driven reduced model preserves stability and is endowed with an a priori error bound. Numerical examples demonstrate that the modified ERA algorithm with tangentially interpolated data produces accurate reduced models while, at the same time, reducing the computational cost and memory requirements significantly compared to the standard ERA. We also give an example to demonstrate the limitations of the proposed method.

1. Introduction

Control of complex systems can be achieved by using low-dimensional surrogate models that approximate the input–output behaviour of the original system accurately, and are much faster to simulate. When access to the internal description of the model is not available, data-driven techniques are used to approximate the system response. The field of subspace-based system identification (SI) provides powerful tools for fitting a linear time-invariant (LTI) system to given input–output responses of the measured system. Applications of subspace-based SI arise in many engineering disciplines, such as in aircraft wing flutter assessment [Citation1,Citation2], vibration analysis for bridges [Citation3], structural health analysis for buildings [Citation4], modelling of indoor-air behaviour of energy efficient buildings [Citation5], flow control [Citation6–Citation8], seismic imaging [Citation9] and many more. In all applications, the identification of LTI systems was crucial for analysis and control of the plant. An overview of applications and methods for subspace-based SI can be found in [Citation10] and more recently in [Citation11,Citation12].

The eigensystem realization algorithm (ERA) by Kung [Citation13] offers one solution to the SI problem, while simultaneously involving a model reduction step. The algorithm uses discrete-time impulse response data to construct reduced-order models via a singular value decomposition (SVD). Importantly, the resulting reduced models retain stability, see Section 2. Starting with Kung’s work [Citation13], various applications and extensions of the algorithm appeared in the literature [Citation1,Citation3,Citation9,Citation14–Citation17]. As an interesting result, Rowley and co-authors showed in [Citation6] that ERA is the data-driven approximation to balanced truncation, and compared ERA to balanced proper orthogonal decomposition (POD) [Citation18,Citation19] for a flow past an inclined plate. Balanced POD was found to provide superior reduced-order models, yet assumes that system matrices and their adjoints are available. The authors in [Citation20] propose a randomized POD technique to reduce the computational cost of extracting the dominant modes of the Hankel matrix.

Mechanical systems with multiple sensors and actuators are modelled as multi-input multi-output (MIMO) dynamical systems. Such systems impose additional computational challenges for SI and for ERA in particular. For instance, ERA requires a full SVD of a structured Hankel matrix, whose size scales linearly with the input and output dimension. Moreover, large Hankel matrices can arise if the dynamics of the system decay slowly.

We propose a SI and model reduction algorithm for MIMO systems which reduces the computational effort and storage compared to the standard ERA, see Section 3. The new algorithm projects the full impulse response data onto smaller input and output subspaces along carefully chosen left and right tangential directions to minimize the effect of the neglected impulse response data. Computing the SVD of the projected Hankel matrix then becomes feasible and can be executed in shorter time with less storage. Moreover, we show that reduced models obtained via the ERA from tangentially interpolated data (TERA) retain stability. Numerical results in Section 4 demonstrate the accuracy and computational savings of the modified ERA with projected data. The error bound in Theorem 3.4 shows the individual contributions of both the data interpolation and Hankel matrix approximation on the reduced-order model.

For notational convenience, we adopt MATLABFootnote1 notation. Given a vector and r ≤ n, the vector x (1: r) denotes the vector of the first r components of x. Similarly, for a matrix

, we denote by A (1: r, 1: r) the leading r × r submatrix of A.

Remark 1.1:

A wide range of excellent model reduction techniques for LTI systems exist in the literature, see [Citation21–Citation23] for an overview. In particular, we shall mention balanced truncation [Citation24,Citation25] and balanced POD [Citation18,Citation19], the iterative rational Krylov algorithm (IRKA) [Citation26], and Hankel norm approximations [Citation27]. We do not propose to use ERA as a model reduction technique when state space matrices are available. We rather suggest to use ERA for the combined task of SI and model reduction where only black-box code or experimental measurements are available. In this case, the aforementioned model reduction techniques are not applicable.

Remark 1.2:

In this paper, our data will be restricted to time-domain samples of the impulse response of the underlying dynamical systems. In the frequency domain, this corresponds to sampling the transfer function and its derivates around infinity. For the cases where one has the exibility in choosing the frequency samples, a variety of techniques become available such as the Loewner framework [Citation28], vector fitting [Citation29,Citation30], realization-independent IRKA (transfer function-IRKA [TF-IRKA]) [Citation31] and various rational least-squares fitting methodologies [Citation30,Citation32–Citation34]. However, as stated earlier, our focus here is ERA and to make it computationally more efficient for MIMO systems with large input and output dimensions.

2. Partial realization and Kung’s algorithm

In practice, experimental measurements and outputs of black-box simulations are sampled at discrete time instances. Therefore, consider the discrete-time LTI system in state–space formFootnote2

where is a discrete-time instance. The initial condition x (0) = x0 is assumed to be zero – the system will be excited through external disturbances. In equations (1) and (2),

and

are, respectively, state-to-state, state-to-input, state-to-output and feedthrough system matrices. The inputs are

and the outputs are

. The system is completely determined by the matrices (A, B, C, D). It is common to define the Markov parameters

so the output response equation for system (1)–(2) becomes

which is known as the external description of the system and is fully determined by the Markov parameters. Unfortunately, in several practical scenarios, the matrices are not available; instead one has access to the sequence of Markov parameters, describing the reaction of the system to external inputs. If only the Markov parameters (and therefore the external description (4)) are available, how can one reconstruct the internal description (1)–(2) of an LTI system? This is the classical problem of partial realization.

Definition 2.1:

[Citation21, Definition 4.46] Given the finite set of p × m matrices , the partial realization problem consists of finding a positive integer n and constant matrices

and

, such that (3) holds.

A finite sequence of Markov parameters is always realizable and there always exists a minimal realization of order n = rank (). Define the Hankel matrix, denoted by

, constructed by the

sampled Markov parameters:

The size of the Hankel matrix grows linearly with m and p. In this work, we propose to construct a projected Hankel matrix that is independent of the input and output dimensions and therefore does not exhibit such growth. For a better understanding of the algorithms to follow, assume for a moment that the system matrices are known, so that the Hankel matrix reads as

It is well known (e.g. [Citation21, Lemma 4.39]) that for a realizable impulse response sequence, the Hankel matrix can be factored into the product of the observability matrix and the controllability matrix

:

The shifted observability matrix satisfies

where and

denote the first and last

block rows of

. Similarly for the controllability matrix, we obtain

.

Silverman [Citation35] proposed an algorithm to construct a minimal realization, which requires finding a rank submatrix of the partially defined Hankel matrix. The algorithm determines the

th order minimal realization directly, and does not involve a model reduction step. If only a degree-

approximation is constructed where

, the algorithm does not guarantee to retain stability.

Kung’s ERA [Citation13] on the other hand, can be divided into two steps, which are briefly reviewed below. To guarantee stability, the following assumption is made in [Citation13].

Assumption 2.2:

Assume that Markov parameters are given and that the given impulse response sequence is convergent in the sense that

As Kung pointed out in his original work [Citation13], this assumption needs further explanation. Clearly for asymptotically stable dynamical systems, as

However, in the case of ERA where only finite length is collected, this assumption means that

; in other words, the Markov parameters have decayed significantly after

steps. Following the original work [Citation13], we shall refer to this assumption as the property of the Markov parameters.

Step 1 of ERA: Low-rank approximation of Hankel matrix. Construct the Hankel matrix (5) from the given impulse response sequence and compute its economy-sized SVD

where and

are orthogonal matrices with

, and

is a square matrix containing singular values,

(called Hankel singular values).Footnote3 Per definition, the Hankel singular values are the singular values of the underlying Hankel operator; see, for example, [Citation21], which are ordered as

. The rank of the Hankel matrix is

, the minimal realization order with

. Choose

and rewrite the decomposition as

where contains the leading

columns of

, the square matrix

and

. The matrices

and

have appropriate dimensions. Consequently,

and

. It follows that

is the best rank approximation of the Hankel matrix

in the

and

norm. The approximation errors are given by

and

.

Step 2 of ERA: Approximate Realization of LTI System. It is the goal of this step to find a realization of the best approximate Hankel matrix

. Kung [Citation13] suggested that

should have ‘Hankel structure’ as well, so that it can be factored into a product of an approximate observability and controllability matrix as

In light of Equation (6), if is the approximation to the observability matrix, then its first block row can be used to estimate

, therefore

where is the

identity matrix. Similarly, the first block column of

yields an approximation of the control input matrix

:

To estimate the system matrix , the shift invariance property (7) is imposed on the approximate controllability and observability matrices as

The matrix again denotes the first

block rows of

. Similarly,

refers to the last

block rows of

. Either equality can be used to solve the least squares problem for

. Without loss of generality, we focus on the first equality involving the observability matrix. Since

is a

matrix, a least squares problem to minimize

has to be solved. The minimizing solution is given by the Moore–Penrose pseudo inverse [Citation36, Chapter 5] as

Define the matrix via

, and similarly for

, so that

is computed as

Theorem 2.3:

[Citation13] If the Markov parameters satisfy Assumption 2.2, then the realization given by from (9), (10), (11) provides a stable discrete-time dynamical system. In addition,

where is the number of outputs,

is the number of inputs,

is the order of the reduced model and

denotes the first neglected Hankel singular value.

Theorem 2.3 reveals that if the original model is stable, then reduced order models of any order obtained through ERA are stable, too, with an a priori error bound for the impulse response reconstruction. The rank

of the Hankel matrix is the order of the minimal realization. However,

can be very large and the resulting model too big for design and control purposes. Instead, one would like to obtain reduced-order models of order

. The choice of

depends on many factors, such as accuracy of the reduced-order model, performance criteria, limitations on implementable model orders etc.

Example 2.4:

This work has been motivated by the need to generate reduced-order models for the indoor-air behaviour in buildings, see [Citation5, Section 4]. The original model of interest has a large number of inputs and outputs, in particular, we are given m = 26 control inputs and p = 42 measured outputs. The impulse response data are sampled over 3600[s] with a Markov parameter measured every 2[s]. With standard ERA, this requires computing an SVD of size 37,800 × 23,400, which is a computationally challenging problem on a standard desktop machine.

3. Proposed method: tangential interpolation-based ERA (TERA)

To circumvent the bottleneck of computing the SVD of a large Hankel matrix, we propose to project the data sequence along left and right tangential directions before assembling the Hankel matrix. The proposed algorithm, denoted by TERA henceforth, has three stages:

Compute tangential directions and project the impulse response data into smaller input and output dimensions, see Section 3.2.

Use ERA on the projected Hankel matrix to obtain an approximation for the smaller input/output dimension, see Section 3.3.

Lift the reduced realization back to the original input and output dimensions to obtain the final approximation, see Section 3.3.

Our approach is motivated by rational approximation by tangential interpolation, as illustrated in the next section.

3.1. Tangential interpolation from data

A thorough treatment of rational interpolation of a given data set along tangential directions can be found in [Citation23,Citation37]. To illustrate the idea, assume for a moment that a discrete-time dynamical system as in (1)–(2) is given. By taking the -transform of these equations, we obtain the transfer function

which maps the inputs to the outputs in the frequency domain via

where

and

denote the

-transforms of

and

, respectively. Model reduction through rational interpolation seeks a reduced-order transfer function

, with

,

and

such that

for a set of interpolation points

. However, for MIMO systems, this is too restrictive since it imposes

conditions for every interpolation point leading to unnecessarily high reduced orders. The concept of tangential interpolation eases those restrictions by only enforcing interpolation along certain directions. Assume that the transfer function

is sampled at

points

along the right tangential directions

and

points

along the left tangential directions

; that is,

and

are measured. Then, the Loewner framework [Citation28] produces a reduced model

that tangentially interpolates the given data, that is,

The details of how the interpolant is constructed can be found in [Citation23,Citation28]; here we only show how

is constructed:

The matrix is related to a divided difference matrix (called the Loewner matrix) corresponding to

. However, in filling the entries of

, neither the full-matrix data

nor

is used; instead the tangential data

and

are used. Thus dependence on the input and output dimensions are avoided. Without this modification, the reduced matrix

would be of dimension

as opposed to

. This is the motivation for our modification to ERA.

Remark 3.1

The choice of interpolation points and tangential directions are of fundamental importance in model reduction by interpolation. The IRKA of [Citation26] provides a locally optimal strategy in the norm. In [Citation31], IRKA has been recently coupled with the Loewner approach to find optimal reduced models in a data-driven setting. However, this approach cannot be applied here since in the ERA setting, the available frequencies are fixed: one can only sample the Markov parameters, which corresponds to sampling the transfer function and its derivatives at infinity.

3.2. Projection of Markov parameters

Inspired by tangential interpolation in the Loewner framework, for systems with high dimensional input and output spaces we will project the impulse response samples onto low dimensional subspaces via multiplications by tangential directions. However, achieving this goal in the ERA set-up comes with major additional difficulties that do not appear in the Loewner framework. Therein, the elegant construction of the reduced-model quantities

and

guarantee that the number of rows and columns still match the original input and output dimensions even when the tangential interpolation is employed. In other words, only the system dimension is reduced without changing the input/output dimensions. However, in ERA, once the Markov parameters

are replaced by the (tangentially) projected quantities

where

and

, the reduced model via ERA will have

inputs and

outputs; thus the original input and output dimensions will be lost. Therefore, one will need to carefully lift this reduced model back to the original

-inputs and

-outputs spaces. The second difficulty arises from the fact that sampling Markov parameters means sampling

only around infinity. Since we are interested in approximating not only the first Markov parameter but also the higher-order ones (up to order

), with an analogy to tangential interpolation, we need to choose the same tangential directions for every sample. Since selecting a single direction for all the Markov parameters will be extremely restrictive, we will pick multiple dominant tangential directions to project all the Markov parameters.

To deal with large input and output spaces, the authors in [Citation20] use a randomized selection of inputs and outputs and subsequently collect primal and dual simulation data reducing computational time and storage requirements for the SVD of the Hankel matrix. However, the method assumes that primal and dual simulations can be performed separately, which is not possible in several situations and which we will not assume. In [Citation6] and [Citation19], the authors consider fluid dynamical applications, where the output of interest is often the entire state, leading to an enormous output space. Hence, standard ERA is not feasible, especially since the complex dynamical behaviour of fluid systems makes it necessary to sample many Markov parameters. The authors suggest to project the output space onto a low-dimensional manifold and use ERA subsequently. However, this is mentioned as a rather short remark without any details or error analysis and an algorithm to recover the original output dimension is not given, a crucial difficulty arising in the ERA setup as mentioned above. Terminal (input/output) reduction algorithms for model reduction of linear (often circuit) systems are considered within the ESVDMOR framework [Citation38–Citation40]. There, it is assumed that the internal description of the systems is available, which can efficiently be exploited in the terminal reduction framework. Moreover, not only the inputs, but also the number of outputs can cause computational challenges. Recall Example 2.4, where both input and output dimensions are large ( and

), which leads to a challenging computation of the SVD. Therefore, we propose a modified ERA method that works with a two-sided interpolation version of the Markov parameters while guaranteeing stability of the reduced model endowed with an error bound. The minimization problem behind the proposed method is to find two projectors

and

that solve

Ideally, one would like to pick individual projectors and

for every Markov parameter to produce the minimal error

, where

. However this is impractical since, in an analogy to tangential interpolation, it would correspond to choosing different tangential directions for

and

. Therefore we restrict ourselves to finding two orthogonal projectors, which are used for the entire dataset of Markov parameters. In Sections 3.3 and 3.4, we will see that this choice of

and

preserves the structure of the Hankel matrix at the cost of a suboptimal approximation error.

Thus, and

will be constructed such that

where and

. The goal is to compute

and

by considering data streams of Markov parameters. As opposed to solving (14) for

and

jointly, we will construct

and

by solving two separate optimization problems. The reason for this is once again due to the preservation of the Hankel structure, and will be clarified in Section 3.4 in the proof of Theorem 3.4. To compute

, we arrange the impulse response sequence in a matrix

and solve the optimization problem

The optimal solution of (16) is given by the SVD of , and

where

denotes the leading

columns of

. The corresponding minimum error is then given by

where

denotes the

th singular value of

. To compute

, we define

and consider the corresponding optimization problem

Similarly, compute the SVD of , and the optimal solution is

, where

. The minimal error is given by

. Recall that our goal is to reduce the size of the Markov parameters, and consequently to lessen the cost of the SVD of the Hankel matrix. The factors

and

are employed to project the Markov parameters using

Equation (19) can be considered analogous to tangential interpolation where the transfer function (

in this case) and its derivatives are sampled along various tangential directions; the columns of

and

. The projected values

are subsequently used to construct a reduced size Hankel matrix

. For this, define the block diagonal matrices

Unlike the case for , the row and column dimensions of

are independent of the original input and output dimensions m and p.

3.3. ERA for projected Hankel matrix and recovering original input/output dimensions

Once the projected Hankel matrix (21) is computed, ERA can be applied. However due to the projected input and output dimensions, control and observation matrices are identified in the reduced output/reduced input spaces. Thus, the goal of TERA is to lift these spaces optimally back to the original dimension to recover the full input and output dimensions. The Hankel matrix from tangentially interpolated data is given by

Using the definitions of , we can rewrite (22) as

and by defining and

, the Hankel matrix from interpolated data can be decomposed such that

This illustrates how to identify from the interpolated Hankel matrix. The best rank

approximation of the projected Hankel matrix is given by the truncated SVD

where and

represent the approximate observability and controllability matrices, respectively. As before, the first block row of

gives an approximation for

, the observation matrix matching the interpolated impulse response, so

Analogously, the first block column of yields an approximation for

, the control input matrix for the interpolated impulse response sequence, which reads as

To solve the least squares problem for the system matrix , one proceeds as in the previous subsection, so that

which is computed as in (11) with appropriate matrices. To illuminate the connection between in (11) obtained from standard ERA and

in (23) obtained from the projected sequence, let

denote the matrix obtained from deleting the last block row and column from

, and similarly for

. Then we have that

, and it readily follows that

Recall from (11) that . Thus,

works with

projected onto the range of

. Note that

unless

is square (i.e. when there is no reduction in input and output dimension in which case one recovers the standard ERA.) The identified system matrices

,

and

match the projected Markov parameters

with a similar type error bound as in the original ERA, see Corollary 3.3. below.

While is an

matrix (matching the original ERA construction),

has

columns (as opposed to

) and

has

rows (as opposed to

). Therefore, we need to lift

and

to the original input/output dimensions. By virtue of the minimization problem (14), the original input–output dimension of the system can be recovered through injection of

to

. Recall that

. Therefore,

approximates

in the least-squares sense. To approximate the original sequence

, replace

with

and

with

. In other words, the original impulse response sequence is approximated via

yielding the final reduced-model quantities

The modified ERA for tangentially interpolated data, henceforth denoted by TERA (tangential ERA), is given in Algorithm 1.

Algorithm 1. TERA

3.4. Error analysis and stability

We first show that TERA retains stability.

Corollary 3.2

Let Assumption 2.2 hold (as in the case of the standard ERA). Then the reduced model given by the matrices in (25) obtained via TERA from the projected data is a stable dynamical system.

Proof. The projected Markov parameters are , where

and

have orthonormal columns. It follows from Assumption 2.2, that

when

. Therefore,

Thus, it follows that as

, so that the projected impulse response satisfies the convergence to zero property. Since the reduced matrix

for TERA is obtained by the standard ERA for the projected data, Theorem 2.3 yields stability of the extracted reduced-order model, which completes the proof.

Using Theorem 2.3, we can directly obtain an error bound for the interpolated Markov parameters.

Corollary 3.3:

With the number of left and right tangential directions , respectively, the error in the Markov parameter sequence generated by TERA is given by

Proof. Recall that when ERA is applied to , it yields a stable reduced-order model as shown in Corollary 3.2. Using

and

in Theorem 2.3, the result follows directly, by replacing all quantities by the ‘hat’ quantities.

Corollary 3.3 gives a bound for the error in the interpolated (projected) Markov parameters. However, the real quantity of interest is the error in the reconstruction of the original full Markov parameter sequence . The next results answers this question.

Theorem 3.4:

Let be the original sequence of Markov parameters, and let

be the identified sequence via TERA in (24). The approximation error is given by

where and

are as defined in (15) and (17), respectively.

Proof.

We use the definitions of and

in (24) to rewrite the error in terms of

and

,

and

, and then split the error into two parts using the projectors

in (16) and

in (18):

Remark 3.5:

In addition to the need to preserve the Hankel structure in the projected data (so that ERA can be applied to the tangentially interpolated data), the error term in (27) also reveals why the projections

and

were computed separately via (16) and (18), respectively; as opposed to the joint optimization problem (14).

4. Numerical results

In this section, we present numerical results for TERA (Algorithm 3.3) and Kung’s standard ERA. To test these algorithms, a mass spring damper model (MSD) and a cooling model for steel profiles (Rail) are considered. The main computational difference between ERA and TERA is the size of the SVD that needs to be computed. As we will illustrate, TERA offers significant computational savings by working with the SVD of a reduced Hankel matrix, see .

Table 1. Specifications, CPU times to execute, and time savings for the numerical examples. Solved on a cluster with a 6-core Intel Xeon X5680 CPU at 3.33GHz and 48GB RAM, with MATLAB2013b.

ERA assumes a discrete-time model. The examples we consider are continuous-time dynamical systems, that is, they have the form

with , where the subscripts are used to emphasize the continuous-time setting. Therefore we convert these continuous-time models to discrete-time via a bilinear transformation [Citation41], mapping the left half-plane onto the unit circle. Once the reduced models are computed via ERA and TERA, we use the original system dynamics only for illustration purposes to present a more detailed comparison both in the time-domain by comparing time-domain simulations and in the frequency domain by comparing Bode plots. We emphasize that the matrices

are never used in the algorithms. Both ERA and TERA have only access to impulse response data.

4.1. Mass spring damper system

This model is taken from [Citation42] and describes a mass spring damper system with masses , spring constants ki and damping coefficients

for

. The state variables are the displacement and momentum of the masses, and the outputs are the velocities of some selected masses. We refer to [Citation42, Section 6] for more details about the model. The model dimension is

, which is equivalent to 500 mass spring damper elements. All masses are

, the spring constants are

and the damping coefficients are

for

. The number of inputs is equal to the number of outputs, namely

. We collect

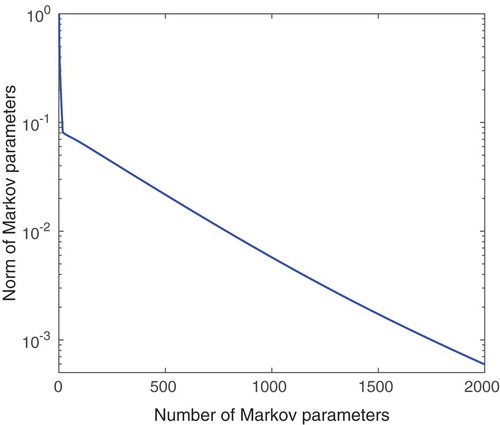

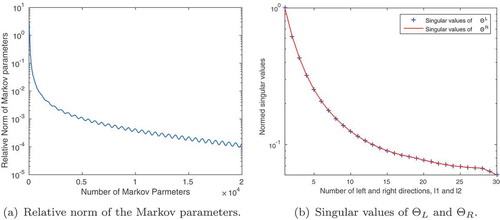

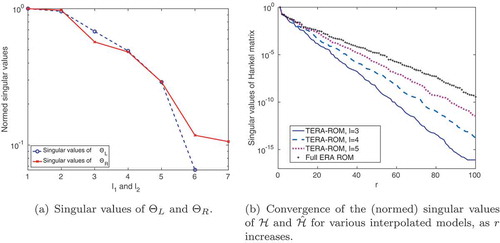

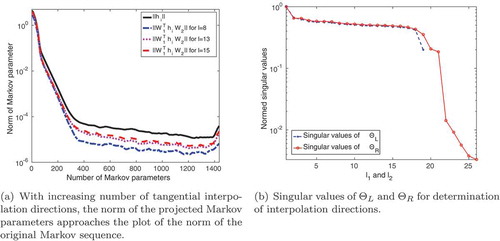

Markov parameters. In , the decay of the normalized Markov parameters is plotted,

. We observe a steep initial decay, followed by a slower decay. shows the singular values of

and

, which in turn help decide how many input/output directions

are needed in the TERA framework.

Figure 1. MSD model: The Markov parameters decay slowly in time (a); the singular values of the matrices and

help guide the decision about the number of input/output interpolation directions needed (b).

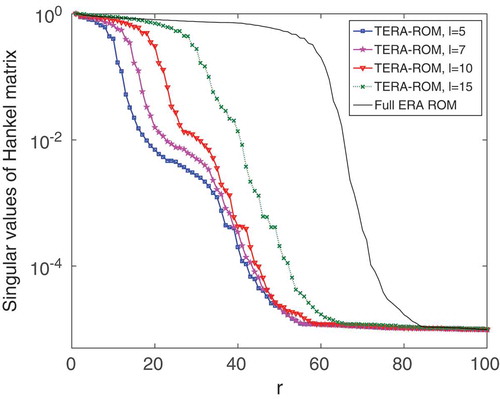

Application of the standard ERA requires computing an SVD of size . On the other hand, in TERA, we pick

, reducing the SVD dimension to

. Even though we picked

for TERA, we illustrate the leading hundred normalized singular values of both the full Hankel matrix

and several projected Hankel matrices

in for various

and

choices. There is a drastic difference in the decay of the singular values. At the truncation order

, the singular values of

have already dropped significantly. In contrast, the singular values of the full Hankel matrix start a rapid decay only after

.

Figure 2. MSD model: The normalized singular values of the Hankel matrices and

are shown in decreasing order. With an increasing number of interpolation directions

, the singular value decay curves approach the singular value decay of the full Hankel matrix

.

We choose the reduced model order as , and apply both ERA and TERA. Theorem 3.4 via the upper bound in (26) can give valuable insight into the success of TERA. Choosing

, and

, the actual relative error in the Markov parameters is

and the upper bound in (26) yields

Notably, the error bound is in the same order of magnitude as the actual error. The main contribution to the upper bound results from the truncation of and

. As a comparison, we also give the actual error and the error bound for the standard

. While the actual relative error due to the standard ERA is

the upper bound for ERA is

As we shall see again in the next example, Kung’s error bound might not be sharp, and in fact may vary by several orders of magnitude from the true error. Thus, since the error bound is above 100%, it would have been hard to know a priori how well the ROM behaves.

To compare the reduced models due to ERA and TERA, both reduced models are converted back to continuous time, yielding

The full model and the reduced systems (29) obtained from ERA and TERA, respectively, are simulated from zero to with zero initial conditions. The input functions were chosen as in [Citation42, Ex. 6.3] to be

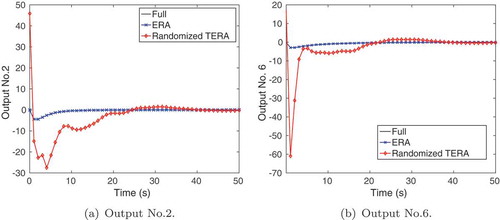

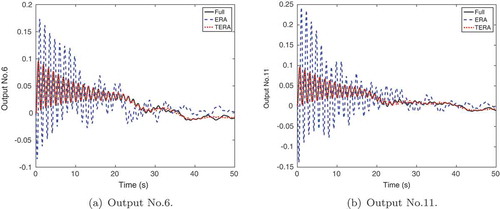

. shows outputs 6 and 11 of time domain simulations for the full model and both reduced models. Here, the TERA-based reduced model follows the full model outputs accurately. In contrast, the ERA-based reduced-order model responses are far from the actual output and produce erroneous results as shown in .

Figure 3. MSD model: Outputs of continuous-time simulations from the full model, and reduced models with . In this case, TERA-ROM matches the output of the full order model better, whereas ERA shows strong deviations compared to the full order model.

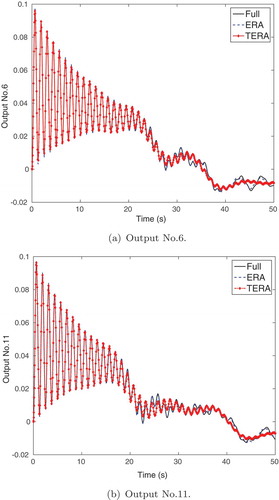

These results illustrate that the reduced order is too low for ERA to produce satisfactory results; thus we increase

to study the performance of ERA more. Based on the plot of the Hankel singular values, , the singular values of the full Hankel matrix start decaying at order

. compares the continuous-time simulations of the full model, and both reduced-order models with

(the left and right interpolation directions for TERA are kept at

). The outputs of the ERA model have now improved in accuracy and mimic the full model outputs accurately.

Figure 4. MSD model: Outputs of continuous-time simulations from the full model, and reduced models with . At higher reduced order dimension, ERA performs better, and is visually indistinguishable from the full model.

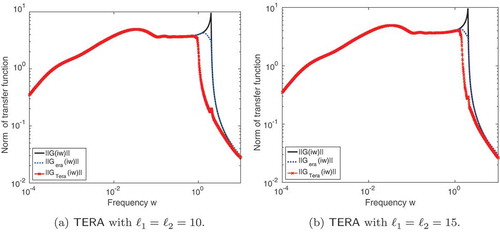

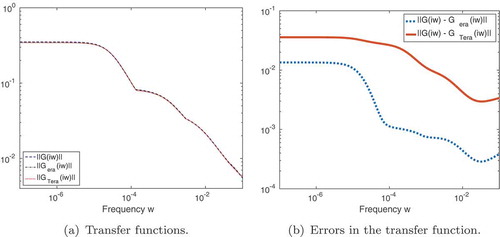

For a better comparison of the performance across all inputs and outputs, we include below, where we show the Bode plots for the full model, ERA and TERA with and

. We see that as we increase the interpolation directions, the frequency response of TERA approaches ERA as expected, and the Bode plot is better matched. Note, that the original system has

inputs and outputs. However, one should observe that even the original full ERA misses the sharp pick of the Bode plot around

rad/sec. This is unfortunately the best one can do with an ERA setting here. Unlike other data-driven approaches, such as the Loewner framework [Citation28] or data-driven optimal

interpolation method TF-IRKA [Citation31], we cannot simply sample the transfer function at various frequencies to have a better/optimal reduced model (and capture the sharp behaviour around

). State-space matrices are assumed not available to start with. All that is available are Markov parameters (data), and the goal is to get a reduced model from this data, which are stable, and have a bound for the sum of squares in the Frobenius norm of the error in the Markov parameters.

Figure 5. MSD model: Transfer function for ERA and TERA where ROMS have order , and the TERA models were obtained by interpolating the data with a different number of directions. In plot (a), the original transfer function shows a peak around

. In both (a) and (b), ERA remains unchanged, and we show that TERA approaches the transfer function obtained from ERA as we increase the number of tangential directions.

We have tried several lower order models and observed that we needed to increase the reduced order to around to have a satisfactory reduced model from ERA. This was also reflected in the decay of the singular values in . Thus, for this example, TERA produced a better reduced-order model than ERA even with a smaller

value at the same time reducing the effort for the SVD from a

matrix to a

matrix. For

, ERA provides a slightly better match in terms of the output of time-domain simulations, yet it still remains more expensive to compute and the advantage of the computational effort for TERA is still persistent. Moreover, the reader should note that a careful balancing of the number of interpolation directions

, and the reduced-order model size

, led to a satisfactory accuracy in the ROM, while saving computational time. We shall add though, that in general we do not expect TERA to outperform ERA in accuracy, as it did in this particular case. Nonetheless, since ERA is only optimal in reconstruction, the fact that an improvement in accuracy occurred here, is not contradictory.

4.2. Cooling of steel profiles – Rail model

The model is taken from the Oberwolfach benchmark collection for model reduction [Citation43] and is further described in [Citation44]. The process is modelled by a two-dimensional heat equation with boundary control input. A finite element discretization results in a model with

states,

outputs and

outputs. The generalized eigenvalue of

, with largest real part is

, which implies that the Markov parameters will decay slowly. It is therefore necessary to sample many Markov parameters to capture enough of the system dynamics. The model is converted to a discrete-time model through the bilinear transformation and simulated to construct

Markov parameters. Once again, the original matrices are only used for Markov parameter generation and never enter into the algorithm. , shows the normalized decay of the Markov parameters over time, that is,

for

. The plot can guide the choice of when to stop collecting data. Next, we investigate the performance of ERA/TERA, with reduced-model order

, unless indicated otherwise. Thus, the standard ERA is applied to the sequence of

Markov parameters in

requiring an SVD of size

. Unless otherwise stated, the Markov parameters are projected with

tangential directions, so that

. Therefore, only a singular value decomposition of size

has to be computed.

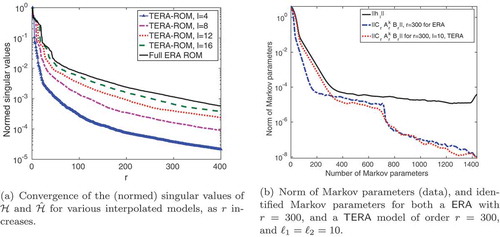

shows the normalized singular values of the matrices and

, respectively. In addition to computational cost limitations, the decay of the singular values gives valuable insight for choosing the tangential truncation orders

and

, since they occur in the error bound in (26). Moreover, in , we see the convergence of the singular values of Hankel matrix from tangentially interpolated data for various values of

and

, as the dimension of the reduced-order model

increases. As the size of the Hankel matrix grows the singular values converge to the full model. The first neglected singular value

enters into the upper bound of the TERA error in Equation (26).

Figure 7. Rail model: The singular values of the matrices as well as of the Hankel matrix provide insight into truncation of input/output directions, as well as the ROM dimension

. In plot (b), for increasing numbers of tangential directions, the singular value decay approaches the behaviour of the ERA model, as expected.

compares the full transfer function with the reduced-order transfer functions

of the ERA and TERA ROMs by showing the amplitude Bode plots. The

error (where the Hardy-norm

) for TERA is

and similarly

for ERA, which is in the same order of magnitude.

Figure 8. Rail model: Bode plots (transfer function) for full model, and the reduced-order models through ERA and TERA. As seen from (a), the transfer functions in the original scaling are visually identical. Thus, plot (b) shows the error of TERA and ERA with respect to the original transfer function.

In the following, we shed some light on the behaviour of the error bound for ERA in (12) as well as the TERA error bound in (26). While the true relative error due to TERA with is

the upper bound from Theorem 3.4 yields

Even though the upper bound is not too pessimistic, it is not as tight as the previous example. This was expected from the slower decay of the Hankel singular values and the singular values of and

. On the contrary, the error bound for the standard ERA is rather pessimistic. While the true relative error due to the standard ERA is

the upper bound for ERA is

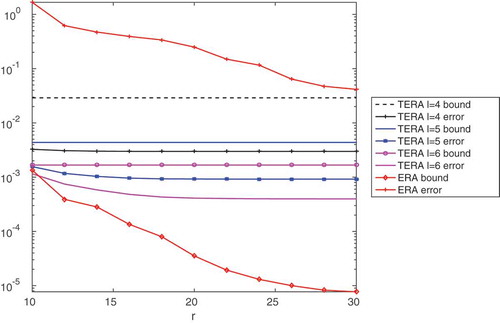

four orders of magnitude higher than the actual error. A more thorough look at the error bound of TERA for various reduced-order model sizes and interpolation directions

and

is given in . ERA obviously produces the lowest errors, yet the error bound is almost three orders of magnitudes higher than the true error. For TERA, we see that the error bound and actual error are within the same order of magnitude, yet the method produces higher errors. The error bound in Equation (26) is dominated by the truncated singular values of the matrices

and

, so that there is no significant decay trend of the error bound with increasing order

.

Figure 9. Rail model: The error bound of ERA (normalized) from (12) and TERA from (26) versus the actual errors.

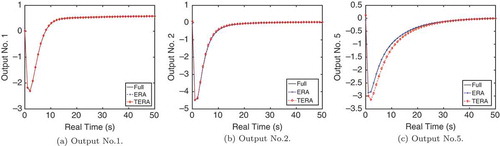

The continuous-time reduced-order models (29) are simulated with an input vector with

, for

. For time stepping, we used ode45 in MATLAB with standard error tolerances. The outputs are compared to the outputs of simulations of the full model. shows outputs No. 1, No. 2 and No. 5 computed from the full model as well as the reduced models obtained through both standard ERA and TERA. In addition to reducing the computational time and memory requirements of standard ERA, the TERA framework performs well in time domain simulations. Outputs No. 1 and No. 2 are captured very accurately. While one can observe a deviation in the approximation of output No. 5, the overall behaviour is still approximated well.

Figure 10. Rail model: The plot shows outputs of time domain simulations of the full and reduced-order models. In plot (a) and (b), all three models provide visually identical results, in plot (c), TERA shows a slight deviation for the first 20 s of simulation.

For both models, to show that the success of the selection of projection/tangential directions for TERA via SVD is not random, we generated tangential directions from a random normal distribution (as opposed to using the singular vectors of and

). This approach gave unsatisfactory results in all the test runs and we, therefore, safely exclude it as a choice for tangential directions. For illustration purposes, for the Rail model, we plotted the results of the continuous-time simulations for output two and six in , where random interpolation directions were used for

, leading to a rather poor model reduction performance.

4.3. Indoor-air model for thermal fluid dynamics

We offer a brief explanation for the limitations of the proposed TERA approach by revisiting the indoor-air behaviour model [Citation5] from Example 2.4. The motivation is that it is often not possible to extract system matrices from commercial software, yet one can use SI methods to obtain reduced-order models for the dynamics. Given the size of the problem (input–output dimension as well as data), it would be computationally beneficial to use ERA with tangentially interpolated data. The tools developed earlier can help us decide whether TERA could be applied here.

We consider a similar model as illustrated in Example 2.4. For this problem, Markov parameters were obtained by simulating the underlying dynamical system using the ANSYS FLUENT software with a spatial discretization of approximately

finite volume elements used in a three dimensional domain. The version of the model we consider here already has a reduced number of outputs,

, yet similar inputs,

. Consequently, this would mean that the standard ERA for this model is computationally demanding, requiring an SVD for a matrix of dimension

; thus reflecting a limitation for the standard ERA itself. Since the internal representation is not available, we cannot provide the same level of detailed comparison as above.

First, we show in , the norm of the Markov parameters of the full model versus the norm of the interpolated Markov parameters

. This shows the information that is retained after the tangential interpolation procedure, which then enters the TERA algorithm. shows the decay of the singular values of

and

to determine the number of necessary interpolation directions. The reader should compare this to and (a), where a faster decay in the singular values is observed. Since the error bound in Theorem 3.4 contains the summed tail of the neglected singular values of

and

, the upper bound is expected to be loose.

Figure 12. Indoor-air model: A high number of interpolation directions is needed to retain enough information from the sequence of Markov parameters. Especially plot (b) shows that decay of the singular values for with respect to

is very slow.

The other ingredient to the error bound in Theorem 3.4 is the first neglected singular value of the full Hankel matrix, . The singular values of the Hankel matrix from interpolated data are shown in . As in the previous examples, the Hankel singular values converge to the true values as we increase the interpolation directions

. However, the convergence is noticeably slower than in the previous two examples. Taken together, one might expect TERA not to yield satisfactory results for small values of

; which could hint at the fact that all inputs and outputs are highly relevant for this particular model as we shall see below. In contrast, for large

, the computational benefit of using TERA is negligible, in which case one would say that the methods ‘fails’.

Figure 13. Indoor-air model: The TERA models slowly approach the ERA model as the number of interpolation directions is increased, see plot (a). Part (b) shows that even the identified Markov parameters from a full ERA model do not match the original sequence well.

Following this a priori analysis, in , we compare the original and identified Markov parameters, using ERA (an expensive computation of the SVD) and TERA with

. In both cases,

is chosen as the ROM model order. We note here that the relative error

is

for ERA and

for TERA. Thus, ERA still performs better, but the errors are still too large for a reduced-order model with good predictive capabilities. In this example, due to several illustrated factors, TERA could not provide a computational benefit to ERA. This illustrates how to approach the problem of deciding whether tangential interpolation of the data prior to SI is beneficial.

5. Conclusions

We modified the standard ERA to handle MIMO systems more efficiently. After the input and output dimensions are reduced by tangential interpolation of the impulse response data, the standard ERA is used on the low dimensional input and output spaces. The observation and control matrices are subsequently lifted back to the original input and output dimensions. The resulting reduced-order model has the original input and output dimensions, and is guaranteed to retain stability. The computational savings for the necessary singular value decomposition are significant, in particular since the complexity of the SVD grows cubically with the size of the Hankel matrix. Moreover, we give criteria to guide the user whether in a particular model using TERA can be beneficial. The a priori error bound in Theorem 3.4 provides a clear picture regarding the contribution of the tangential interpolation error, and the truncation error of the Hankel matrix to the overall error. The numerical findings demonstrate the success of TERA. The algorithm can run with inputs from experiments or black-box code and accurately identify reduced order dynamics.

There are several interesting directions one can pursue. As showed in [Citation6], ERA can be considered a data-driven approximation to balanced truncation. Establishing and understanding a similar connection for TERA might yield other ways of choosing the left and right directions to project the impulse response data. This connection can also help handling the cases where, for example, only the output dimension is massive but there is only a single input. In the setting of balanced truncation, the authors in [Citation45] offered an effective methodology for those situations by employing a numerical quadrature in the computation of one of the gramians involving the other gramian. It will be beneficial to understand the implications for ERA and TERA as well. Moreover, understanding the effect of noise on the TERA computations will also be important.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

Notes

1. MATLAB and Statistics Toolbox Release 2015b, The MathWorks, Inc., Natick, Massachusetts, United States.

2. We follow the original ERA notation and assume a standard state-space, that is, the E-term is E = I. This makes the notation involving Markov parameters and the Hankel matrix much simpler. The theory can be extended to the general case. One of the numerical examples in Section 4 has E ≠ I.

3. We use this term to refer to the singular values of the Hankel matrix.

References

- I. Houtzager, J. Van Wingerden, and M. Verhaegen, Recursive predictor-based subspace identification with application to the real-time closed-loop tracking of flutter, IEEE Trans. Control Syst. Technol. 20 (2012), pp. 934–949. doi:10.1109/TCST.2011.2157694

- D.C. Rebolho, E.M. Belo, and F.D. Marques, Aeroelastic parameter identification in wind tunnel testing via the extended eigensystem realization algorithm, J. Vib. Control 20 (2014), pp. 1607–1621. doi:10.1177/1077546312474015

- M. Döhler and L. Mevel, Fast multi-order computation of system matrices in subspace-based system identification, Control Eng. Pract. 20 (2012), pp. 882–894. doi:10.1016/j.conengprac.2012.05.005

- J.M. Caicedo, S. Dyke, and E. Johnson, Natural excitation technique and eigensystem realization algorithm for phase I of the IASC-ASCE benchmark problem: Simulated data, J. Eng. Mech. 130 (2004), pp. 49–60. doi:10.1061/(ASCE)0733-9399(2004)130:1(49)

- J. Borggaard, E. Cliff, and S. Gugercin, Model reduction for indoor-air behavior in control design for energy-efficient buildings, Proceedings of the American Control Conference, Montreal, 2012, pp. 2283–2288. ISBN 9781457710957

- Z. Ma, S. Ahuja, and C. Rowley, Reduced-order models for control of fluids using the eigensystem realization algorithm, Theor. Comput. Fluid Dyn. 25 (2011), pp. 233–247. doi:10.1007/s00162-010-0184-8

- F. Juillet, P. Schmid, and P. Huerre, Control of amplifier flows using subspace identification techniques, J. Fluid. Mech. 725 (2013), pp. 522–565. doi:10.1017/jfm.2013.194

- C. Wales, A. Gaitonde, and D. Jones, Stabilisation of reduced order models via restarting, Int. J. Numer. Methods Fluids 73 (2013), pp. 578–599. doi:10.1002/fld.v73.6

- J. Mendel, Minimum-variance deconvolution, IEEE Trans. Geosci. Remote Sensing GE-19 (1981), pp. 161–171. doi:10.1109/TGRS.1981.350346

- M. Viberg, Subspace-based methods for the identification of linear time-invariant systems, Automatica 31 (1995), pp. 1835–1851. doi:10.1016/0005-1098(95)00107-5

- S. Qin, An overview of subspace identification, Comput. Chem. Eng. 30 (2006), pp. 1502–1513. doi:10.1016/j.compchemeng.2006.05.045

- E. Reynders, System identification methods for (operational) modal analysis: Review and comparison, Arch. Comput. Methods Eng. 19 (2012), pp. 51–124. doi:10.1007/s11831-012-9069-x

- S.Y. Kung, A new identification and model reduction algorithm via singular value decomposition, Proceedings of 12th Asilomar Conference on Circuits, Systems & Computers, Pacific Grove, CA, 1978, pp. 705–714. IEEE.

- J.-N. Juang and R. Pappa, An eigensystem realization algorithm for modal parameter identification and model reduction, J. Guidance, Control, Dyn. 8 (1985), pp. 620–627. doi:10.2514/3.20031

- G. Pitstick, J. Cruz, and R. Mulholland, Approximate realization algorithms for truncated impulse response data, IEEE Trans. Acoust. Speech Signal Process. 34 (1986), pp. 1583–1588. doi:10.1109/TASSP.1986.1164997

- R. Longman and J.-N. Juang, Recursive form of the eigensystem realization algorithm for system identification, J. Guidance, Control, Dyn. 12 (1989), pp. 647–652. doi:10.2514/3.20458

- J. Singler, Model reduction of linear PDE systems: A continuous time eigensystem realization algorithm, Proceedings of the American Control Conference, Montreal, 2012, pp. 1424–1429.

- K. Willcox and J. Peraire, Balanced model reduction via the proper orthogonal decomposition, AIAA J. 40 (2002), pp. 2323–2330. doi:10.2514/2.1570

- C. Rowley, Model reduction for fluids, using balanced proper orthogonal decomposition, Int. J. Bifur. Chaos 15 (2005), pp. 997–1013. doi:10.1142/S0218127405012429

- D. Yu and S. Chakravorty, A randomized proper orthogonal decomposition technique, arXiv preprint arXiv:1312.3976, 2013.

- A.C. Antoulas, Approximation of Large-Scale Dynamical Systems, Advances in Design and Control Society for Industrial and Applied Mathematics, Philadelphia, PA, 2005.

- U. Baur, P. Benner, and L. Feng, Model order reduction for linear and nonlinear systems: A system-theoretic perspective, Arch. Comput. Methods Eng. 21 (2014), pp. 331–358. doi:10.1007/s11831-014-9111-2

- A.C. Antoulas, C.A. Beattie, and S. Gugercin, Interpolatory model reduction of large-scale dynamical systems, in Efficient Modeling and Control of Large-Scale Systems, J. Mohammadpour and M.K. Grigoriadis, eds., Springer US, Boston, MA, 2010, pp. 3–58.

- B. Moore, Principal component analysis in linear systems: Controllability, observability, and model reduction, IEEE Trans. Automat. Contr. 26 (1981), pp. 17–32. doi:10.1109/TAC.1981.1102568

- C. Mullis and R. Roberts, Synthesis of minimum roundoff noise fixed point digital filters, IEEE Trans. Circuits Syst. 23 (1976), pp. 551–562. doi:10.1109/TCS.1976.1084254

- S. Gugercin, A. Antoulas, and C.A. Beattie, model reduction for large-scale linear dynamical systems, SIAM J. Matrix Anal. Appl. 30 (2008), pp. 609–638. doi:10.1137/060666123

- K. Glover, All optimal Hankel-norm approximations of linear multivariable systems and their L,∞-error bounds, Int. J. Control 39 (1984), pp. 1115–1193. doi:10.1080/00207178408933239

- A. Mayo and A. Antoulas, A framework for the solution of the generalized realization problem, Linear Algebra Appl. 425 (2007), pp. 634–662. doi:10.1016/j.laa.2007.03.008

- B. Gustavsen and A. Semlyen, Rational approximation of frequency domain responses by vector fitting, IEEE Trans. Power Deliv. 14 (1999), pp. 1052–1061. doi:10.1109/61.772353

- Z. Drmač, S. Gugercin, and C. Beattie, Quadrature-based vector fitting for discretized approximation, SIAM J. Sci. Comput. 37 (2015), pp. A625–A652. doi:10.1137/140961511

- C. Beattie and S. Gugercin, Realization–independent approximation, Proceedings of the 51st IEEE Conference on Decision & Control, Maui, HI, IEEE, 2012, pp. 4953–4958.

- C. Sanathanan and J. Koerner, Transfer function synthesis as a ratio of two complex polynomials, IEEE Trans. Autom. Control 8 (1963), pp. 56–58. doi:10.1109/TAC.1963.1105517

- M. Berljafa and S. Güttel, Generalized rational Krylov decompositions with an application to rational approximation, SIAM J. Matrix Anal. Appl. 36 (2015), pp. 894–916. doi:10.1137/140998081

- Z. Drmač, S. Gugercin, and C. Beattie, Vector fitting for matrix-valued rational approximation, SIAM J. Scientific Comput. 37 (2015), pp. A2346–A2379. doi:10.1137/15M1010774

- L.M. Silverman, Realization of linear dynamical systems, IEEE Trans. Automat. Contr. 16 (1971), pp. 554–567. doi:10.1109/TAC.1971.1099821

- G. Golub and C.F. Van Loan, Matrix Computations, Johns Hopkins University, Press, Baltimore, MD, 1996, pp. 374–426.

- C.A. Beattie and S. Gugercin, Model reduction by rational interpolation, in Model Reduction and Approximation for Complex Systems, P. Benner, A. Cohen, M. Ohlberger, and K. Willcox, eds., Marseille, 2014. Available at http://arxiv.org/abs/1409.2140.

- P. Feldmann and F. Liu, Sparse and efficient reduced order modeling of linear subcircuits with large number of terminals, in IEEE/ACM International Conference on Computer Aided Design, 2004. ICCAD-2004, 2004, pp. 88–92.

- P. Liu, S.X. Tan, B. Yan, and B. McGaughy, An extended SVD-based terminal and model order reduction algorithm, Proceedings of the 2006 IEEE International Behavioral Modeling and Simulation Workshop San Jose, CA, 2006, pp. 44–49.

- P. Benner and A. Schneider, Model reduction for linear descriptor systems with many ports, in Progress in Industrial Mathematics at ECMI 2010, M. Günther, A. Bartel, M. Brunk, S. Schöps, M. Striebel, eds., Springer, Berlin, 2012, pp. 137–143.

- U. Al-Saggaf and G. Franklin, Model reduction via balanced realizations: An extension and frequency weighting techniques, IEEE Trans. Automat. Contr. 33 (1988), pp. 687–692. doi:10.1109/9.1280

- S. Gugercin, R. Polyuga, C. Beattie, and A. Van Der Schaft, Structure-preserving tangential interpolation for model reduction of port-Hamiltonian systems, Automatica 48 (2012), pp. 1963–1974. doi:10.1016/j.automatica.2012.05.052

- IMTEK - Simulation, Oberwolfach benchmark collection, 2003. Available at http://www.simulation.uni-freiburg.de/downloads/benchmark.

- A. Unger and F. Tröltzsch, Fast solution of optimal control problems in the selective cooling of steel, ZAMM Z. Angew. Math. Mech. 81 (2001), pp. 447–456. doi:10.1002/(ISSN)1521-4001

- P. Benner and A. Schneider, Balanced truncation for descriptor systems with many terminals, Max Planck Institute Magdeburg Preprint MPIMD/13-17, 2013. Available at http://www2.mpi-magdeburg.mpg.de/preprints/2013/MPIMD13-17.pdf.