ABSTRACT

A new adaptive kernel principal component analysis (KPCA) approach for the control of non-linear discrete systems is proposed. It can be regarded as a new data pre-processing technique: the input vector of the neural network controller is pre-processed by the KPCA method, and the resulting reduced neural network controller is then applied in indirect adaptive control. The influence of this input pre-processing on the accuracy of the neural network controller is discussed through numerical examples: a single-input single-output non-linear discrete system with time-varying parameters and a multi-input multi-output system. Using the KPCA method, a significant reduction in both the control error and the identification error is obtained. According to the lowest mean squared error and mean absolute error, the KPCA neural network with the sigmoid kernel function performs best.

1. Introduction

This work deals with the adaptive control of non-linear discrete systems using neural networks. The indirect adaptive control structure is based on two neural network blocks: one identifies a model of the dynamic behaviour of the system and the other acts as the system controller [Citation1–Citation6].

However, the size of the neural network model or of the neural network controller directly affects the speed of their training phase. The problem of reducing the high dimension of neural networks has been widely discussed, and different techniques have been proposed [Citation7–Citation37].

The first step in a reduction method is either feature selection (a subset of the original inputs is selected) or feature extraction (new features are obtained by transforming the original inputs). In modelling, all available indicators can be used, but correlated or irrelevant features may deteriorate the generalization performance of any model [Citation7–Citation18].

Many dimensionality reduction techniques have been proposed. For instance, Kohonen self-organizing feature maps provide a way of representing multidimensional data in much lower-dimensional spaces [Citation19]; curvilinear component analysis [Citation20] and curvilinear distance analysis [Citation21] have been proposed to reduce the original dimension of face images and data, and for classification in medical imaging [Citation22]; and principal component analysis (PCA) has been widely used to reduce high dimensions in many applications [Citation16–Citation18,Citation23–Citation25].

PCA is a well-known method for feature extraction [Citation23,Citation24]. By calculating the eigenvectors of the covariance matrix of the original inputs, PCA linearly transforms the original high-dimensional input vector into a new low-dimensional one whose components are uncorrelated. As a typical approach, PCA requires the lowest number of basis functions in the sense of model dimension reduction [Citation16–Citation18,Citation23–Citation25].
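The PCA step described above can be sketched in a few lines of NumPy: the inputs are centred, their covariance matrix is eigen-decomposed, and the data are projected onto the eigenvectors with the largest eigenvalues. The function name `pca` and the synthetic data are illustrative assumptions, not material from the cited studies.

```python
import numpy as np

def pca(X, n_components):
    """Project the rows of X (N samples x d features) onto the leading principal axes."""
    Xc = X - X.mean(axis=0)                  # centre each input variable
    C = Xc.T @ Xc / Xc.shape[0]              # covariance matrix of the original inputs
    eigvals, eigvecs = np.linalg.eigh(C)     # eigh: C is symmetric, eigenvalues ascending
    order = np.argsort(eigvals)[::-1]        # reorder by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return Xc @ eigvecs[:, :n_components], eigvals

# Synthetic example: three correlated inputs generated from two latent signals
rng = np.random.default_rng(0)
latent = rng.standard_normal((500, 2))
X = latent @ np.array([[1.0, 0.5, 0.2],
                       [0.0, 1.0, -0.3]])
Z, eigvals = pca(X, n_components=2)

# The retained components are uncorrelated: their covariance matrix is diagonal
print(np.round(np.cov(Z, rowvar=False, bias=True), 6))
print(np.round(eigvals, 6))   # the third eigenvalue is ~0 because X has rank 2
```

Because the three inputs are driven by only two latent signals, two components retain all of the variability, which is exactly the redundancy that PCA removes.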

In other applications, for instance in the study by Zhang et al. [Citation15], a hybrid modelling strategy combines a decoupled non-linear radial basis function neural network model based on PCA with a linear autoregressive exogenous (ARX) model. PCA has also been used to reduce the cross-validation time required to identify optimal model hyper-parameters [Citation25]. In the study by Seerapu and Srinivas [Citation26], PCA was combined with linear discriminant analysis to improve the reduction. In the study by Peleato et al. [Citation27], the use of fluorescence data coupled with PCA-based neural networks to improve the prediction of drinking water disinfection by-products was investigated. In the study by Qinshu et al. [Citation14], PCA was used for feature selection, together with grid search and k-fold cross-validation for parameter optimization of a support vector machine. Finally, other linear dimensionality reduction techniques, such as multidimensional scaling and probabilistic PCA, have been applied to user authentication using keystroke dynamics [Citation28], and other methods have also been proposed [Citation29].

However, PCA is a linear time/space separation method and cannot be directly applied to non-linear systems [Citation30]. Non-linear versions of PCA have therefore been developed using different algorithms. Kernel principal component analysis (KPCA) is a non-linear PCA developed using the kernel method. The kernel method was originally used for support vector machines (SVM) and was later generalized to many algorithms that can be expressed in terms of dot products, such as PCA. Specifically, KPCA first maps the original inputs into a high-dimensional feature space using the kernel method and then performs PCA in that feature space. Linear PCA in the high-dimensional feature space corresponds to a non-linear PCA in the original input space. Another linear transformation method, independent component analysis (ICA), has also been developed. Instead of producing uncorrelated components, ICA attempts to obtain statistically independent components in the transformed vectors. ICA was originally developed for blind source separation and was later generalized to feature extraction [Citation7].

KPCA is an effective method for tackling non-linear data [Citation31]. For instance, in the study by Chakour et al. [Citation32], an adaptive KPCA algorithm is proposed for dynamic process monitoring; it combines two existing algorithms, recursive weighted PCA and moving-window KPCA. Fault detection in non-linear systems using KPCA to extract a reduced number of measurements from the training data has also been studied [Citation33]. In the study by Xiao and He [Citation34], a neural-network-based fault diagnosis approach for analog circuits is developed, using maximal class separability-based KPCA as a pre-processor that reduces the dimensionality of candidate features, so that the optimal features with maximal class separability are used as inputs to the neural networks. In the study by Reddy and Ravi [Citation36], a differential evolution (DE)-trained kernel principal component wavelet neural network (DE-KPCWNN) and a DE-trained kernel binary quantile regression are proposed for classification. In DE-KPCWNN, KPCA is applied to the input data to obtain the kernel principal components, on which the wavelet neural network (WNN) is employed.

In the study by Klevecka and Lelis [Citation37], a functional algorithm for pre-processing input data, which takes into account the specific aspects of teletraffic and the properties of neural networks, is created. Its practical application to forecasting telecommunication data sequences shows that data pre-processing decreases the learning time and increases the plausibility and accuracy of the forecasts.

In this paper, an indirect adaptive control scheme based on neural networks is used. The neural networks use an adaptive learning rate and a reduced derivative of the activation function. The weights of the neural network model and of the neural network controller are updated using the identification error and the control error, respectively, and are used to generate the appropriate control.

On the one hand, in various studies [Citation1,Citation2,Citation5,Citation6,Citation15,Citation38,Citation39], the authors developed many algorithms for indirect adaptive control without any pre-processing, and they did not take into account the high dimension of the neural network.

On the other hand, in the study by Errachdi and Benrejeb [Citation4], the authors developed an algorithm to accelerate the training phase of indirect adaptive control based on a neural network controller, using a variable learning rate and a Taylor expansion of the derivative of the activation function, but they did not address the high dimension of the network. This paper therefore proposes a new algorithm that reduces the input vector of the neural controller in the control system using KPCA. This data pre-processing scheme decreases the learning time and increases the accuracy of the system control.

The present paper is organized as follows. After this introduction, Section 2 presents the proposed KPCA method for system control and develops the neural network controller based on it. Section 3 details the proposed algorithm. In Section 4, non-linear systems are simulated to illustrate the efficiency of the proposed method. Section 5 concludes the paper.

2. The proposed KPCA neural network controller approach

On the basis of its input–output relations, a discrete non-linear system can be expressed by a NARMA (non-linear autoregressive moving average) model [Citation4,Citation35] given by

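$$y(k+1) = f\big(y(k), \dots, y(k-n_y+1),\ u(k), \dots, u(k-n_u+1)\big) \tag{1}$$

where $f(\cdot)$ is the non-linear function mapping specified by the model, $y(k)$ and $u(k)$ are the output and the input of the system, respectively, $k$ is the discrete time, and $n_y$ and $n_u$ are the numbers of past output and input samples, respectively, required for prediction.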

The aim of this paper is to find a control law for the non-linear system given by Equation (1), based on the KPCA approach, such that the system output $y(k)$ tracks, as closely as possible, the desired value $y_d(k)$.

In the indirect control architecture, the weights of the neural network model and of the neural network controller are trained using different errors: the identification error, the estimated tracking error and the tracking error [Citation4].

This architecture comprises two neural blocks: the weights of the neural model are adjusted using the identification error, whereas the weights of the neural controller are trained using the tracking error [Citation4].

The multi-layer perceptron is used in the neural model and in the neural controller. Each block consists of three layers. The sigmoid activation function is used for all neurons [Citation4].

2.1. The neural network model

The neural network model is described as follows.

The output of the hidden layer is described as follows:

$$h_j = \sum_{i=1}^{n_1} w_{ji}\, x_i, \qquad j = 1, \dots, n_2 \tag{2}$$

where $n_1$ is the number of nodes of the input layer, $n_2$ is the number of nodes of the hidden layer and $w_{ji}$ is the hidden weight.

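The input vector of the neural network model is

$$X(k) = \big[y(k), \dots, y(k-n_y+1),\ u(k), \dots, u(k-n_u+1)\big]^T \tag{3}$$

whose components are denoted $x_i$, and where $u(k)$ is the neural network controller output.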

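The output of the neural network model is given by the following equation:

$$\hat{y}(k+1) = \lambda_m\, f\Big(\sum_{j=1}^{n_2} z_j\, f(h_j)\Big) \tag{4}$$

where $f(\cdot)$ is the sigmoid activation function, $\lambda_m$ is a scaling coefficient and $z_j$ is the output weight.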

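The compact form of the output is given by the following equation:

$$\hat{y}(k+1) = \lambda_m\, f\big(Z^T F(W X(k))\big) \tag{5}$$

with $Z = [z_1, \dots, z_{n_2}]^T$ and $W = [w_{ji}]$, where $F(\cdot)$ applies $f$ element-wise.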

The identification error is given by

$$e_i(k+1) = y(k+1) - \hat{y}(k+1) \tag{6}$$

The cost function is given by the following equation:

$$J = \frac{1}{2} \sum_{k=1}^{N} e_i^2(k) \tag{7}$$

where $N$ is the number of observations.

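The output weights are updated by the following equation:

$$z_j(k+1) = z_j(k) + \Delta z_j(k) \tag{8}$$

where $j = 1, \dots, n_2$ and the increment $\Delta z_j(k)$ is obtained by minimizing the cost function of Equation (7):

$$\Delta z_j(k) = -\eta_m(k)\, \frac{\partial J}{\partial z_j} \tag{9}$$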

where $\eta_m(k)$ is the variable learning rate for the weights of the neural network model, given by

(10)

and $f'$ is the derivative of the activation function $f$, defined as follows:

(11)

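The hidden weights are updated by the following equation:

$$w_{ji}(k+1) = w_{ji}(k) + \Delta w_{ji}(k) \tag{12}$$

where $\Delta w_{ji}(k)$ is given by the following equation:

$$\Delta w_{ji}(k) = -\eta_m(k)\, \frac{\partial J}{\partial w_{ji}} \tag{13}$$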
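The updates of the output and hidden weights are gradient-descent steps on the identification error. The NumPy sketch below performs one such step under stated assumptions: the forward pass is taken to be $\hat{y} = \lambda_m f(\sum_j z_j f(h_j))$ with $h_j = \sum_i w_{ji} x_i$ and a sigmoid $f$; the instantaneous cost $e_i^2/2$ is used; the exact sigmoid derivative replaces the reduced derivative of Equation (11); and a fixed learning rate `eta` stands in for the variable rate of Equation (10). All sizes and values are hypothetical.

```python
import numpy as np

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def forward(W, z, x, lam):
    """Assumed model output lam * f(sum_j z_j f(h_j)) with h = W x and sigmoid f."""
    h = W @ x                      # hidden weighted sums h_j
    s = sigmoid(h)                 # hidden activations f(h_j)
    a = z @ s                      # weighted sum entering the output neuron
    return lam * sigmoid(a), s, a

def gradients(W, z, x, y, lam):
    """Gradients of the instantaneous cost J = e^2 / 2, with e = y - y_hat."""
    y_hat, s, a = forward(W, z, x, lam)
    e = y - y_hat
    delta = e * lam * sigmoid(a) * (1.0 - sigmoid(a))   # equals -dJ/da
    grad_z = -delta * s                                  # dJ/dz_j
    grad_W = -np.outer(delta * z * s * (1.0 - s), x)     # dJ/dw_ji
    return grad_W, grad_z, e

rng = np.random.default_rng(0)
n1, n2 = 4, 3                      # hypothetical input and hidden layer sizes
lam, eta = 2.0, 0.1                # hypothetical scaling coefficient and fixed learning rate
W = 0.5 * rng.standard_normal((n2, n1))
z = 0.5 * rng.standard_normal(n2)
x = np.array([0.2, -0.1, 0.4, 0.3])   # e.g. [y(k), y(k-1), u(k), u(k-1)]
y = 0.8                                # measured plant output y(k+1)

grad_W, grad_z, e = gradients(W, z, x, y, lam)
z_new = z - eta * grad_z               # output weights: z_j + Delta z_j
W_new = W - eta * grad_W               # hidden weights: w_ji + Delta w_ji
e_new = y - forward(W_new, z_new, x, lam)[0]
print(abs(e), abs(e_new))              # |e| decreases after the step
```

The analytic gradients can be checked against finite differences of $J$. Replacing the fixed `eta` by a variable learning rate changes only the step size, not the update direction.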

For the stability of the neural network model, a Lyapunov analysis is carried out. Let us define the discrete Lyapunov function

$$V(k) = \frac{1}{2} e_i^2(k) \tag{14}$$

where $e_i(k)$ is the identification error given by Equation (6). The change in the Lyapunov function is obtained by

$$\Delta V(k) = V(k+1) - V(k) = \frac{1}{2}\big[e_i^2(k+1) - e_i^2(k)\big] \tag{15}$$

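The identification error difference can be represented by

$$\Delta e_i(k) = e_i(k+1) - e_i(k) \approx \Big[\frac{\partial e_i(k)}{\partial \mathcal{W}}\Big]^T \Delta\mathcal{W} \tag{16}$$

where $\mathcal{W}$ denotes the synaptic weights of the neural network identifier ($z_j$ and $w_{ji}$). Using Equation (16), the identification error becomes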

(17)

with

(18)

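From Equations (17) and (18), the convergence of the identification error is guaranteed when the change in the Lyapunov function of Equation (14) is negative, which bounds the variable learning rate. A suitable online algorithm is then obtained by choosing the variable learning rate within this bound.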
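As a hedged illustration of where such a learning-rate bound typically comes from, assume the classical linearized argument: with $\Delta\mathcal{W} = -\eta\, \partial J/\partial\mathcal{W} = \eta\, e\, \partial\hat{y}/\partial\mathcal{W}$ and $\partial e/\partial\mathcal{W} = -\partial\hat{y}/\partial\mathcal{W}$, Equation (16) gives $e(k+1) \approx (1 - \eta \|g\|^2)\, e(k)$ with $g = \partial\hat{y}/\partial\mathcal{W}$, so that $\Delta V < 0$ whenever $0 < \eta < 2/\|g\|^2$. This is an assumption about the form of Equations (17) and (18), not a transcription of them; the snippet only evaluates $\Delta V$ for a hypothetical gradient norm.

```python
def lyapunov_increment(e, eta, grad_norm_sq):
    """Delta V for V = e^2 / 2 under the linearized update e(k+1) = (1 - eta * ||g||^2) * e(k)."""
    e_next = (1.0 - eta * grad_norm_sq) * e
    return 0.5 * e_next**2 - 0.5 * e**2

g_sq = 4.0                      # hypothetical value of ||d y_hat / d W||^2 at instant k
eta_max = 2.0 / g_sq            # Delta V < 0 for every 0 < eta < eta_max (here 0.5)
for eta in (0.1, 0.25, 0.49, 0.5, 0.6):
    # 0.5 and 0.6 violate the bound: the error no longer decreases in magnitude
    print(eta, lyapunov_increment(e=1.0, eta=eta, grad_norm_sq=g_sq) < 0)
```

Under this linearization, the choice $\eta = 1/\|g\|^2$ (here 0.25) cancels the error in a single step, which is one common motivation for adapting the learning rate to the current gradient norm.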

2.2. The KPCA neural network controller

The PCA technique is a lower-dimensional projection method that can be used for multivariate data mining [Citation25,Citation30–Citation32,Citation40]. The main idea behind PCA is to represent multidimensional data with fewer variables while retaining the main features of the data. Reducing the dimensionality inevitably loses some features of the data; PCA therefore projects the multidimensional data onto a lower-dimensional space that retains as much of the variability of the data as possible [Citation4,Citation25,Citation30–Citation32,Citation40].

However, PCA is a linear technique and cannot capture the non-linear structure of a data set. For this reason, a non-linear generalization has been proposed using the kernel method, which computes the principal components of the data set mapped non-linearly into a high-dimensional feature space $F$. Because the sample data are only implicitly mapped from the input space to the higher-dimensional feature space $F$, KPCA can be implemented efficiently through the kernel trick and solved as an eigenvalue problem of its kernel matrix.
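The kernel trick can be checked on a small case. For the homogeneous polynomial kernel $k(a, b) = (a^T b)^2$ on $\mathbb{R}^2$, the explicit feature map is $\phi(a) = (a_1^2, \sqrt{2}\,a_1 a_2, a_2^2)$, so evaluating the kernel in the input space gives the same dot product as mapping first and then multiplying. This kernel is chosen only for illustration and is not necessarily one of the kernels used in the paper.

```python
import numpy as np

def phi(a):
    """Explicit feature map of the degree-2 homogeneous polynomial kernel on R^2."""
    return np.array([a[0] ** 2, np.sqrt(2.0) * a[0] * a[1], a[1] ** 2])

def poly_kernel(a, b):
    """Same quantity computed directly in the input space, without building phi."""
    return float(np.dot(a, b)) ** 2

a = np.array([1.0, 2.0])
b = np.array([3.0, -1.0])
print(poly_kernel(a, b), float(np.dot(phi(a), phi(b))))   # both equal 1 (up to rounding)
```

For kernels such as the Gaussian one, the feature space is infinite-dimensional, so only the kernel-side computation is available; this is why KPCA is formulated entirely in terms of the kernel matrix.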

In this section, we propose to reduce the input vector of the neural network controller of the adaptive indirect control structure, which leads to a new architecture: the adaptive indirect KPCA neural network control.

We recall that the input vector of the neural network controller is

(19)

where $y_d(k)$ is the desired value.

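For the input data $x_k$, $k = 1, \dots, N$, $\phi(x_k)$ represents the non-linearly mapped data in the feature space $F$. Assuming that the mapped data are centred, the covariance matrix $C$ of the projected features $\phi(x_k)$ is defined as

$$C = \frac{1}{N} \sum_{k=1}^{N} \phi(x_k)\, \phi(x_k)^T \tag{20}$$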

Its eigenvalues $\mu$ and eigenvectors $\mathbf{v}$ satisfy

$$\mu\, \mathbf{v} = C\, \mathbf{v} \tag{21}$$

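Substituting Equation (20) into Equation (21) gives

$$\mu\, \mathbf{v} = \frac{1}{N} \sum_{k=1}^{N} \big(\phi(x_k)^T \mathbf{v}\big)\, \phi(x_k) \tag{22}$$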

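Hence, every eigenvector $\mathbf{v}$ with $\mu \neq 0$ lies in the span of the mapped data and can be rewritten as

$$\mathbf{v} = \sum_{k=1}^{N} \alpha_k\, \phi(x_k) \tag{23}$$

with $\alpha_k$, $k = 1, \dots, N$, as the expansion coefficients. Equation (21) can then be rewritten as

$$\mu\, \phi(x_l)^T \mathbf{v} = \phi(x_l)^T C\, \mathbf{v}, \qquad l = 1, \dots, N \tag{24}$$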

The kernel function is defined as

$$k(x_i, x_j) = \phi(x_i)^T \phi(x_j) \tag{25}$$

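Substituting Equation (23) into Equation (24) and using Equation (25), one obtains

$$N \mu\, K \boldsymbol{\alpha} = K^2 \boldsymbol{\alpha} \tag{26}$$

where $K$ is the $N \times N$ kernel matrix with entries $K_{ij} = k(x_i, x_j)$ and $\boldsymbol{\alpha} = [\alpha_1, \dots, \alpha_N]^T$. It is therefore sufficient to solve the eigenvalue problem

$$N \mu\, \boldsymbol{\alpha} = K \boldsymbol{\alpha} \tag{27}$$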

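with the coefficient vector $\boldsymbol{\alpha}^q$ of the $q$-th eigenvector normalized so that $(\boldsymbol{\alpha}^q)^T K \boldsymbol{\alpha}^q = 1$, which gives $\mathbf{v}^q$ unit norm. The resulting kernel principal components of an input $x$ can be calculated using

$$t_q(x) = (\mathbf{v}^q)^T \phi(x) = \sum_{i=1}^{N} \alpha_i^q\, k(x_i, x), \qquad q = 1, \dots, p \tag{28}$$

The reduced space of the signal given by Equation (28), spanned by the $p$ retained principal components, constitutes the input vector of the neural network controller.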
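The KPCA steps above (kernel matrix, centring in feature space, eigen-decomposition, normalization and projection) can be sketched in NumPy as follows. The function names `kpca_fit` and `kpca_transform` are hypothetical; centring the kernel matrix is the standard way of enforcing the centred-data assumption. The block ends with a sanity check: with a linear kernel, KPCA must reproduce ordinary PCA scores up to sign.

```python
import numpy as np

def gram_matrix(kernel, A, B):
    """Matrix of kernel evaluations k(a_i, b_j)."""
    return np.array([[kernel(a, b) for b in B] for a in A])

def kpca_fit(X, kernel, n_components):
    """Kernel PCA on the rows of X (N observations x d inputs)."""
    N = X.shape[0]
    K = gram_matrix(kernel, X, X)
    one = np.full((N, N), 1.0 / N)
    Kc = K - one @ K - K @ one + one @ K @ one      # centre the mapped data in feature space
    eigvals, eigvecs = np.linalg.eigh(Kc)           # eigenvalues of Kc are N * mu
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, alphas = eigvals[order], eigvecs[:, order]
    alphas = alphas / np.sqrt(eigvals)              # enforce alpha^T Kc alpha = 1 (unit-norm v)
    return {"X": X, "K": K, "alphas": alphas, "eigvals": eigvals, "kernel": kernel}

def kpca_transform(model, X_new):
    """Kernel principal components t_q(x) = sum_i alpha_i^q k(x_i, x), with centring."""
    X, K = model["X"], model["K"]
    N, M = X.shape[0], X_new.shape[0]
    K_new = gram_matrix(model["kernel"], X_new, X)  # M x N
    one_N = np.full((N, N), 1.0 / N)
    one_M = np.full((M, N), 1.0 / N)
    K_new_c = K_new - one_M @ K - K_new @ one_N + one_M @ K @ one_N
    return K_new_c @ model["alphas"]

# Sanity check: with a linear kernel, KPCA reduces to ordinary PCA (up to sign)
rng = np.random.default_rng(1)
X = rng.standard_normal((50, 4))
linear = lambda a, b: float(a @ b)
Z_kpca = kpca_transform(kpca_fit(X, linear, n_components=2), X)

Xc = X - X.mean(axis=0)
_, V = np.linalg.eigh(Xc.T @ Xc)
Z_pca = Xc @ V[:, ::-1][:, :2]
print(np.allclose(np.abs(Z_kpca), np.abs(Z_pca)))   # True
```

For the controller, `X` would hold the offline observations of the controller input vector and `kpca_transform` would be applied online to the current vector; any other kernel, such as the sigmoid kernel, can be passed instead of `linear`. For kernels that are not positive semi-definite, only components with positive eigenvalues should be retained, since the normalization divides by their square root.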

This dimensionality reduction is employed to reduce the dimension of the feature vectors before they are fed as input to the neural network controller:

$$\tilde{X}(k) = \big[t_1(x(k)), \dots, t_p(x(k))\big]^T \tag{29}$$

The primary purpose of data pre-processing is to modify the input variables so that they better match the predicted output. The main purpose of transforming the neural network data is to modify the distribution of the network input parameters without losing much information.

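Using the reduced input vector $\tilde{X}(k)$, with components $\tilde{x}_i$, the output of the hidden layer of the controller is described as follows:

$$h_j^c = \sum_{i=1}^{p} w_{ji}^c\, \tilde{x}_i, \qquad j = 1, \dots, n_c \tag{30}$$

where $p$ is the number of nodes of the input layer and $w_{ji}^c$ is the hidden weight.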

Similarly, the output of the neural controller is given by the following equation:

$$u(k) = \lambda_c\, f\Big(\sum_{j=1}^{n_c} z_j^c\, f(h_j^c)\Big) \tag{31}$$

where $n_c$ is the number of nodes of the hidden layer, $\lambda_c$ is a scaling coefficient and $z_j^c$ is the output weight.

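The compact form of the control input to the system is given by the following equation:

$$u(k) = \lambda_c\, f\big(Z_c^T F(W_c \tilde{X}(k))\big) \tag{32}$$

with $Z_c = [z_1^c, \dots, z_{n_c}^c]^T$ and $W_c = [w_{ji}^c]$.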

The tracking error is given by the following equation:

$$e_c(k+1) = y_d(k+1) - y(k+1) \tag{33}$$

where $y_d$ is the desired output.

The weights of the neural controller are updated by minimizing the cost function

$$J_c = \frac{1}{2} \sum_{k=1}^{N} e_c^2(k) \tag{34}$$

where $N$ is the number of observations. The output weights are updated by

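$$z_j^c(k+1) = z_j^c(k) + \Delta z_j^c(k) \tag{35}$$

with $j = 1, \dots, n_c$, where $\Delta z_j^c(k)$ is the incremental change of the output weights:

$$\Delta z_j^c(k) = -\eta_c(k)\, \frac{\partial J_c}{\partial z_j^c} \tag{36}$$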

where $\eta_c(k)$ is the variable learning rate for the weights of the neural network controller, given by

(37)

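The hidden weights are updated by

$$w_{ji}^c(k+1) = w_{ji}^c(k) + \Delta w_{ji}^c(k) \tag{38}$$

where $\Delta w_{ji}^c(k)$ is given by

$$\Delta w_{ji}^c(k) = -\eta_c(k)\, \frac{\partial J_c}{\partial w_{ji}^c} \tag{39}$$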

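The kernel matrix $K$ used in the KPCA step is defined as

$$K = \big[k(x_i, x_j)\big]_{i,j=1}^{N} \tag{40}$$

where $k(\cdot,\cdot)$ is a kernel function. In this paper, different kernel functions are used; they are defined in Table 1.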

Table 1. The usual kernel functions.
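The kernels usually listed under this heading in the KPCA literature are the linear, polynomial, Gaussian (RBF) and sigmoid kernels; as an assumption about the contents of Table 1, the sketch below implements these four in NumPy. The hyperparameter values (`degree`, `c`, `sigma`, `a`) are illustrative placeholders, not the settings used in the paper.

```python
import numpy as np

# Illustrative hyperparameters only; the values used in the paper are not assumed here.
def linear_kernel(x, y):
    return float(np.dot(x, y))

def polynomial_kernel(x, y, degree=2, c=1.0):
    return float((np.dot(x, y) + c) ** degree)

def gaussian_kernel(x, y, sigma=1.0):
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.exp(-np.dot(d, d) / (2.0 * sigma ** 2)))

def sigmoid_kernel(x, y, a=0.1, c=0.0):
    # tanh kernel: not positive semi-definite for every (a, c), so check the Gram spectrum
    return float(np.tanh(a * np.dot(x, y) + c))

def gram(kernel, X):
    """Gram (kernel) matrix K_ij = k(x_i, x_j) for the rows of X."""
    return np.array([[kernel(xi, xj) for xj in X] for xi in X])

X = np.array([[0.0, 1.0], [1.0, 0.5], [-0.5, 2.0]])
for kernel in (linear_kernel, polynomial_kernel, gaussian_kernel, sigmoid_kernel):
    print(kernel.__name__, np.round(np.linalg.eigvalsh(gram(kernel, X)), 4))
```

Printing the Gram-matrix eigenvalues is a quick way to see whether a given kernel and parameter choice yields a valid (non-negative) spectrum before running the KPCA eigen-decomposition.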

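The principal components are the first eigenvectors, associated with the highest eigenvalues; they are often sufficient to describe the structure of the data. The number $p$ of retained components satisfies the Inertia Percentage Criterion (IPC) [Citation25] given by

$$\mathrm{IPC}(p) = \sum_{q=1}^{p} I_q \geq \tau \tag{41}$$

with

$$I_q = \frac{100\, \mu_q}{\sum_{l=1}^{N} \mu_l} \tag{42}$$

where $\tau$ is a chosen threshold, in percent.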
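The inertia criterion amounts to keeping the smallest number of leading eigenvalues whose cumulative share of the total reaches a threshold. A minimal sketch, assuming the threshold is expressed as a fraction (0.9 for 90%); the function name and the example eigenvalues are hypothetical.

```python
import numpy as np

def n_components_ipc(eigvals, threshold=0.95):
    """Smallest p whose p largest eigenvalues carry at least `threshold` of the total inertia."""
    mu = np.sort(np.asarray(eigvals, dtype=float))[::-1]   # decreasing order
    mu = np.clip(mu, 0.0, None)                              # drop round-off negatives
    ratio = np.cumsum(mu) / mu.sum()                         # cumulative inertia as a fraction
    p = int(np.searchsorted(ratio, threshold) + 1)          # first index reaching the threshold
    return min(p, len(mu))

print(n_components_ipc([5.0, 3.0, 1.5, 0.4, 0.1], threshold=0.9))   # 3
```

Here the cumulative shares are 50%, 80%, 95%, 99% and 100%, so three components are needed to reach 90%.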

We have developed a neural network controller based on a reduced input vector and a variable learning rate. Consequently, this approach increases the training speed.

For the stability of the neural network controller, a Lyapunov analysis is carried out. Let us define the discrete Lyapunov function

$$V_c(k) = \frac{1}{2} e_c^2(k) \tag{43}$$

where $e_c(k)$ is the control error. The change in the Lyapunov function is obtained by

$$\Delta V_c(k) = V_c(k+1) - V_c(k) = \frac{1}{2}\big[e_c^2(k+1) - e_c^2(k)\big] \tag{44}$$

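The control error difference can be represented by

$$\Delta e_c(k) = e_c(k+1) - e_c(k) \approx \Big[\frac{\partial e_c(k)}{\partial \mathcal{W}_c}\Big]^T \Delta\mathcal{W}_c \tag{45}$$

where $\mathcal{W}_c$ denotes the synaptic weights of the neural network controller ($z_j^c$ and $w_{ji}^c$). Using Equation (45), the control error becomes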

(46)

with

(47)

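From Equations (46) and (47), the convergence of the control error is guaranteed when the change in the Lyapunov function of Equation (43) is negative, which bounds the variable learning rate of the controller. The online algorithm is suitable for real-time applications when the variable learning rate is chosen within this bound.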

3. The proposed algorithm

This section summarizes the proposed online kernel principal component analysis neural network controller algorithm.

Offline phase

(1) Initialize the neural network parameters using $N$ observations.

(2) Determine the kernel matrix $K$, centre the data and perform the eigenvalue decomposition.

(3) Determine the orthogonal eigenvectors and the eigenvalues of the covariance matrix.

(4) Order the eigenvectors in decreasing order of the corresponding eigenvalues.

(5) Compute the reduced input vector given by Equation (28) using the $p$ retained principal components selected by Equations (41) and (42).

Online phase

(1) At time instant $k$, a new data point $x(k)$ is available and the reduced input vector is computed. If the identification error is smaller than a given small constant $\varepsilon_1$, the neural network model, given by Equation (5), approximates the behaviour of the system sufficiently well.

(2) If the tracking error is smaller than a given small constant $\varepsilon_2$, the reduced neural network controller provides a sufficiently accurate control law $u(k)$.

(3) If the condition of step (1) is not satisfied, the synaptic weights of the neural network model are updated using Equations (8) and (12).

(4) If the condition of step (2) is not satisfied, the synaptic weights of the neural network controller are updated using Equations (35) and (38).

(5) End.
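The decision logic of the online phase can be sketched as a small Python function that, at each instant, reports which network needs a weight update. It assumes that both conditions compare the absolute error with its small constant; `UpdateDecision`, `online_decision` and the threshold values are hypothetical names and numbers, and the weight updates themselves are not implemented here.

```python
from dataclasses import dataclass

@dataclass
class UpdateDecision:
    update_model: bool        # True -> update the model weights, Eqs. (8) and (12)
    update_controller: bool   # True -> update the controller weights, Eqs. (35) and (38)

def online_decision(e_identification, e_control, eps_model, eps_control):
    """Steps (1)-(4) of the online phase, assuming both conditions are |error| <= small constant."""
    return UpdateDecision(
        update_model=abs(e_identification) > eps_model,
        update_controller=abs(e_control) > eps_control,
    )

# Hypothetical error values at three successive instants
for e_i, e_c in [(0.20, 0.15), (0.004, 0.12), (0.003, 0.002)]:
    print(online_decision(e_i, e_c, eps_model=0.01, eps_control=0.01))
```

Skipping updates once an error is below its threshold avoids unnecessary weight changes when the model or the controller is already accurate enough.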

4. Simulation results

In this section, two non-linear discrete systems are used: the first is a single-input single-output (SISO) non-linear time-varying system and the second is a multi-input multi-output (MIMO) system.

4.1. Example of time-varying system

The time-varying non-linear system is described by the input–output model in the following equation [Citation41]:

(48)

where $y(k)$ and $u(k)$ are, respectively, the output and the input of the time-varying non-linear system at instant $k$, and the time-varying parameters are given by

(49)

The trajectories of these time-varying parameters are plotted in the corresponding figure.

In order to examine the effectiveness of the proposed dimensionality reduction algorithm, different performance criteria are used.

The mean squared identification error ($MSE_i$) and the mean absolute identification error ($MAE_i$) are, respectively, given by

$$MSE_i = \frac{1}{N} \sum_{k=1}^{N} \big(y(k) - \hat{y}(k)\big)^2 \tag{50}$$

$$MAE_i = \frac{1}{N} \sum_{k=1}^{N} \big|y(k) - \hat{y}(k)\big| \tag{51}$$

where $y(k)$ is the time-varying system output, $\hat{y}(k)$ is the neural network model output and $N$ is the number of observations used.

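The mean squared tracking error ($MSE_c$) and the mean absolute tracking error ($MAE_c$) are, respectively, given by

$$MSE_c = \frac{1}{N} \sum_{k=1}^{N} \big(y_d(k) - y(k)\big)^2 \tag{52}$$

$$MAE_c = \frac{1}{N} \sum_{k=1}^{N} \big|y_d(k) - y(k)\big| \tag{53}$$

where $y_d(k)$ is the desired value.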
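The four criteria share two formulas: the mean of the squared errors and the mean of the absolute errors, applied either to the pair (system output, model output) or to the pair (desired value, system output). A minimal NumPy sketch with made-up signals:

```python
import numpy as np

def mse(reference, estimate):
    """Mean squared error between two equally long signals."""
    r, e = np.asarray(reference, dtype=float), np.asarray(estimate, dtype=float)
    return float(np.mean((r - e) ** 2))

def mae(reference, estimate):
    """Mean absolute error between two equally long signals."""
    r, e = np.asarray(reference, dtype=float), np.asarray(estimate, dtype=float)
    return float(np.mean(np.abs(r - e)))

y = [1.0, 2.0, 3.0, 4.0]        # e.g. system output y(k)
y_hat = [1.0, 2.5, 2.0, 4.0]    # e.g. neural network model output
print(mse(y, y_hat), mae(y, y_hat))   # 0.3125 0.375
```

The identification criteria use `mse(y, y_hat)` and `mae(y, y_hat)`, while the tracking criteria use `mse(y_d, y)` and `mae(y_d, y)`. Since MSE weights large errors more heavily than MAE, reporting both distinguishes occasional large deviations from a uniformly small error.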

We now examine the effectiveness of the proposed dimensionality reduction of the neural network controller input vector in the adaptive indirect control system.

In the offline phase, a reduced number of observations is used to initialize the neural network parameters and to obtain the KPCA parameters: the kernel matrix $K$, the eigenvalues, the eigenvectors and, finally, the reduced input vector given by Equation (28), based on the $p$ retained principal components selected by Equations (41) and (42).

In the online phase, at instant $k$, the reduced input vector of the neural network controller is used.

In this case, the neural network model and the pre-processing neural network controller have the same structure: a single input, one hidden layer and a single output node, with variable learning rates for the neural network model and for the neural network controller, and a scaling coefficient for each network.

To select a suitable kernel function, the kernel functions defined in Table 1 are compared. The simulation results show that the sigmoid kernel gives the lowest value of the identification error criteria, indicating that the sigmoid kernel function is the most reliable.

Table 2. The comparison results of the used kernel function in the identification error.

First, the features are fed directly to the multilayer perceptron (MLP) neural network as inputs, without any KPCA pre-processing, and the output of the online MLP neural network model is compared with the plant output. The MLP has one hidden layer and a variable learning rate. An excellent agreement between the plant output and the desired value is observed, with a low mean squared error.

Next, the output of the reduced online MLP neural network controller is compared with the desired values. In this case, the KPCA method is combined with the multilayer perceptron neural network: KPCA is used as a pre-processing method to reduce the dimension of the features, and the obtained reduced vector is also fed to the online multilayer perceptron neural network, which has one hidden layer and variable learning rates. A good agreement between the desired values and the plant output is observed. To further assess this combination, several kernel functions are tested, and the results are presented in Table 2.

Based on this kernel comparison, the sigmoid function is used as the kernel function in the KPCA technique. The tracking control objective for this system is to follow the reference signal as closely as possible using the proposed pre-processing neural network controller.

In this simulation, the desired value $y_d(k)$ is given by

(54)

The influence of the dimensionality reduction of the neural network controller input vector on the identification error and on the control error is given in Table 3 and Table 4, respectively.

Table 3. The influence of the dimensionality reduction in the identification error.

Table 4. The influence of the dimensionality reduction in the control error.

From and we observe that using the KPCA as a pre-processing phase to reduce the input vector of the neural network controller, the neural network KPCA controller has the smallest performance criteria in the identification error and in the control error

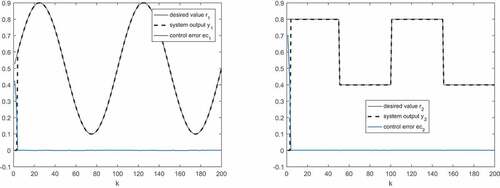

. These results are shown in , and .

The output of the control system with pre-processing is compared with the desired values; in this case, the KPCA method is combined with a multilayer perceptron neural network controller.

The KPCA technique is used as a pre-processing method to reduce the dimension of the features, and the obtained reduced vector is fed to the neural network controller. A good agreement between the desired values and the control system output is observed, although the parameters vary over time. The corresponding control law and control error are also plotted.

These results reveal that the neural network controller using KPCA as a pre-processing technique yields smaller errors than the controller without pre-processing.

Another desired value, given by Equation (55), is used to examine the effectiveness of the proposed dimensionality reduction of the neural network controller input vector in the adaptive indirect control system for the time-varying non-linear system.

Here again, the neural network model and the neural network controller have the same structure: a single input, one hidden layer and a single output node, with a scaling coefficient for each network.

In this simulation, the desired value $y_d(k)$ is given by

(55)

The output of the control system with pre-processing is again compared with the desired values, with the KPCA method combined with a multilayer perceptron neural network controller. A good agreement between the desired values and the control system output is observed, despite the time-varying parameters.

The corresponding control law and control error are also plotted. These results reveal that the neural network controller using KPCA as a pre-processing technique yields smaller errors than the controller without pre-processing.

Table 5 and Table 6 present the influence of the dimensionality reduction on the identification error and on the control error, respectively.

Table 5. The influence of the dimensionality reduction in the identification error.

Table 6. The influence of the dimensionality reduction in the control error.

From Table 5 and Table 6, we observe that, when KPCA is used as a pre-processing phase to reduce the input vector of the neural network controller, the KPCA neural network controller yields the smallest values of the performance criteria for both the identification error and the control error. These results are also illustrated in the corresponding figures.

4.2. Effect of disturbances

Additive noise is injected into the output of the time-varying non-linear system given by Equation (48) in order to test the effectiveness of the pre-processing neural network controller.

To quantify the noise level, the signal-to-noise ratio (SNR) is defined by the following equation:

(56)

where $b(k)$ is a measurement noise bounded in a symmetric interval, and $\bar{y}$ and $\bar{b}$ are the average values of the output and of the noise, respectively. A fixed SNR value is used in this paper.
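As an illustration only, assume that Equation (56) is the common ratio between the energy of the centred output and that of the centred noise. Under that assumption, bounded symmetric noise can be rescaled to reach a chosen SNR, because multiplying the noise by $c$ divides the ratio by $c^2$. The function names, the output signal and the target value 20 are hypothetical and are not the settings of the paper.

```python
import numpy as np

def snr(y, b):
    """Ratio of centred output energy to centred noise energy (assumed form of Eq. (56))."""
    y, b = np.asarray(y, dtype=float), np.asarray(b, dtype=float)
    return float(np.sum((y - y.mean()) ** 2) / np.sum((b - b.mean()) ** 2))

def noise_for_snr(y, target, rng):
    """Uniform noise on a symmetric interval, rescaled so that snr(y, noise) equals target."""
    b = rng.uniform(-1.0, 1.0, size=len(y))
    return b * np.sqrt(snr(y, b) / target)

rng = np.random.default_rng(2)
y = np.sin(0.1 * np.arange(200))          # hypothetical noise-free output
b = noise_for_snr(y, target=20.0, rng=rng)
print(round(snr(y, b), 6))                # 20.0
```

If Equation (56) instead uses a square root of this ratio, the same procedure applies with the target squared.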

Using the first desired value, given by Equation (54), the sensitivity of the proposed pre-processing neural network controller is examined in Table 7 (identification error) and Table 8 (control error).

Table 7. The influence of the dimensionality reduction in the identification error.

Table 8. The influence of the dimensionality reduction in the control error.

These tables show that, when KPCA is used as a pre-processing phase to reduce the input vector of the neural network controller, the KPCA neural network controller yields the smallest values of the performance criteria for both the identification error and the control error.

Using the second desired value, given by Equation (55), the sensitivity of the proposed pre-processing neural network controller is examined in Table 9 (identification error) and Table 10 (control error).

Table 9. The influence of the dimensionality reduction in the identification error.

Table 10. The influence of the dimensionality reduction in the control error.

According to the simulation results, despite the disturbance on the system output and the time-varying parameters, the lowest values of the error criteria are obtained with the combination of the neural network controller and the KPCA technique.

4.3. Example of multi-input multi-output system

In order to examine the effectiveness of the proposed dimensionality reduction algorithm, a multi-input multi-output (MIMO) non-linear system, given by the following equation, is used.

(57)

where $y_i(k)$ and $u_i(k)$, $i = 1, 2$, are, respectively, the outputs and the inputs of the MIMO non-linear system at instant $k$, and $y_{d1}(k)$ and $y_{d2}(k)$ are the reference signals given by

(58)

The control system outputs, the desired values and the control errors are presented in the corresponding figures, together with the trajectories of the control laws $u_1$ and $u_2$. These figures reveal that the neural network controller combined with KPCA as a pre-processing technique gives an excellent agreement between the system outputs and the desired outputs, with small control errors.

In this case, the neural network model and the pre-processing neural network controller have the same structure: a single input, one hidden layer and two output nodes, with variable learning rates for the neural network model and for the neural network controller, and the corresponding scaling coefficients.

The influence of the dimensionality reduction on the model error and on the control error is shown in Table 11 and Table 12, respectively.

Table 11. The influence of the dimensionality reduction in the model error.

Table 12. The influence of the dimensionality reduction in the control error.

5. Conclusion

In this paper, an online combination of a neural network controller and the KPCA method is proposed and applied successfully to indirect adaptive control. Different kernel functions are tested; the lowest values of the identification and control error criteria (MSE and MAE) are obtained with the sigmoid kernel function, which shows that it is the best of the kernels tested. The proposed algorithm is applied successfully, first, to a single-input single-output system, with and without disturbances, where it shows robustness to disturbances and accelerates the learning phase of the neural model and of the neural controller. Second, it is applied to a MIMO system, where it gives good results.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- O. Mohareri, R. Dhaouadi, and A.B. Rad, Indirect adaptive tracking control of a nonholonomic mobile robot via neural networks, Neurocomputing 88 (2012), pp. 54–66. doi:10.1016/j.neucom.2011.06.035.

- A.A. Bohari, W.M. Utomo, Z.A. Haron, N.M. Zin, S.Y. Sim, and R.M. Ariff, Speed tracking of indirect field oriented control induction motor using neural network, Procedia Technol. 11 (2013), pp. 141–146. doi:10.1016/j.protcy.2013.12.173.

- S. Slama, A. Errachdi, and M. Benrejeb, Adaptive PID controller based on neural networks for MIMO nonlinear systems, J. Theor. Appl. Inf. Technol. 97 (2) (2019), pp. 361–371.

- A. Errachdi and M. Benrejeb, Performance comparison of neural network training approaches in indirect adaptive control, Int. J. Control. Autom. Syst. 16 (3) (2018), pp. 1448–1458. doi:10.1007/s12555-017-0085-3.

- N. Ben, W. Ding, D.A. Naif, and E.A. Fuad, Adaptive neural state-feedback tracking control of stochastic nonlinear switched systems: An average dwell-time method, IEEE Trans. Neural Networks Learn. Syst. 30 (4) (2018), pp. 1076–1087. doi:10.1109/TNNLS.2018.2860944.

- N. Ben, L. Yanjun, Z. Wanlu, L. Haitao, D. Peiyong, and L. Junqing, Multiple Lyapunov functions for adaptive neural tracking control of switched nonlinear non-lower-triangular systems, IEEE Trans. Cybern. 99 (2019). doi:10.1109/TCYB.2019.2906372.

- P.O. Hoyer and A. Hyvärinen, Independent component analysis applied to feature extraction from colour and stereo images, Network 11 (3) (2000), pp. 191–210. doi:10.1088/0954-898X_11_3_302.

- L.J. Cao, K.S. Chua, W.K. Chong, H.P. Lee, and Q.M. Gu, A comparison of PCA, KPCA and ICA for dimensionality reduction in support vector machine, Neurocomputing 55 (1–2) (2003), pp. 321–336. doi:10.1016/S0925-2312(03)00433-8.

- I. Guyon and A. Elisseeff, An introduction to variable and feature selection, J. Mach. Learn. Res. 3 (2003), pp. 1157–1182.

- J. Weston, S. Mukherjee, O. Chapelle, M. Pontil, T. Poggio, and V.N. Vapnik, Feature selection for SVMs, Adv. Neural Inform. Process. Syst. 13 (2001), pp. 668–674.

- F.E.H. Tay and L.J. Cao, Saliency analysis of support vector machines for feature selection, Neural Network World 2 1 (2001), pp. 153–166.

- F.E.H. Tay and L.J. Cao, A comparative study of saliency analysis and genetic algorithm for feature selection in support vector machines, Intell. Data Anal. 5 (3) (2001), pp. 191–209. doi:10.3233/IDA-2001-5302.

- K. Lee and V. Estivill-Castro, Feature extraction and gating techniques for ultrasonic shaft signal classification, Appl. Soft Comput. 7 (2007), pp. 156–165. doi:10.1016/j.asoc.2005.05.003.

- H. Qinshu, L. Xinen, and X. Shifu, Comparison of PCA and model optimization algorithms for system identification using limited data, J. Appl. Sci. 13 (11) (2013), pp. 2082–2086. doi:10.3923/jas.2013.2082.2086.

- R. Zhang, J. Tao, R. Lu, and Q. Jin, Decoupled ARX and RBF neural network modeling using PCA and GA optimization for nonlinear distributed parameter systems, IEEE Trans. Neural Networks Learn. Syst. 29 (2) (2018), pp. 457–469. doi:10.1109/TNNLS.2016.2631481.

- M.L. Wang, X.D. Yan, and H.B. Shi, Spatiotemporal prediction for nonlinear parabolic distributed parameter system using an artificial neural network trained by group search optimization, Neurocomputing 113 (2013), pp. 234–240. doi:10.1016/j.neucom.2013.01.037.

- S. Yin, S.X. Ding, A.H. Abandan Sari, and H.Y. Hao, Data-driven monitoring for stochastic systems and its application on batch process, Int. J. Syst. Sci. 44 (7) (2013), pp. 1366–1376. doi:10.1080/00207721.2012.659708.

- E. Aggelogiannaki and H. Sarimveis, Nonlinear model predictive control for distributed parameter systems using data driven artificial neural network models, Comput. Chem. Eng. 32 (6) (2008), pp. 1225–1237. doi:10.1016/j.compchemeng.2007.05.002.

- M. Madhusmita and H.S. Behera, Kohonen self organizing map with modified K-means clustering for high dimensional data set, Int. J. Appl. Inf. Syst. (IJAIS) 2 (3) (2012), pp. 34–39 (Foundation of Computer Science FCS, New York, USA).

- S. Buchala, N. Davey, T.M. Gale, and R.J. Frank, Analysis of linear and nonlinear dimensionality reduction methods for gender classification of face images, Int. J. Syst. Sci. 36 (14) (2005), pp. 931–942. doi:10.1080/00207720500381573.

- M. Lennon, G. Mercier, M.C. Mouchot, and L. Hubert-Moy, Curvilinear component analysis for nonlinear dimensionality reduction of hyperspectral images, Proc. SPIE Image Signal Process. Remote Sens. VII 4541 (2001), pp. 157–168.

- N.K. Batmanghelich, B. Taskar, and C. Davatzikos, Generative-discriminative basis learning for medical imaging, IEEE Trans. Med. Imaging 31 (2012), pp. 51–69. doi:10.1109/TMI.2011.2162961.

- L. van der Maaten, E. Postma, and J. van den Herik, Dimensionality reduction: A comparative review, Tilburg centre for Creative Computing, Tilburg University, Tilburg, The Netherlands, 2009.

- K. Kuzniar and M. Zajac, Data pre-processing in the neural network identification of the modified walls natural frequencies, Proceedings of the 19th International Conference on Computer Methods in Mechanics CMM-2011, Warszawa, 9–12 May, 2011, pp. 295–296.

- V.M. Janakiraman, X. Nguyen, and D. Assanis, Nonlinear identification of a gasoline HCCI engine using neural networks coupled with principal component analysis, Appl. Soft Comput. 13 (2013), pp. 2375–2389. doi:10.1016/j.asoc.2013.01.006.

- K. Seerapu and R. Srinivas, Face recognition using robust PCA and radial basis function network, Int. J. Comput. Sci. Commun. Networks 2 (5) (2012), pp. 584–589.

- N.M. Peleato, R.L. Legge, and R.C. Andrews, Neural networks for dimensionality reduction of fluorescence spectra and prediction of drinking water disinfection by-products, Water Res. 136 (2018), pp. 84–94. doi:10.1016/j.watres.2018.02.052.

- C. Sucheta and K.V. Prema, Effect of dimensionality reduction on performance in artificial neural network for user authentication, 3rd IEEE International Advance Computing Conference (IACC), Ghaziabad, India, 2013.

- G.E. Hinton and R.R. Salakhutdinov, Reducing the dimensionality of data with neural networks, Science 313 (2006), pp. 504–507. doi:10.1126/science.1127647.

- Q. Zhu and C. Li, Dimensionality reduction with input training neural network and its application in chemical process modelling, Chinese J. Chem. Eng. 14 (5) (2006), pp. 597–603. doi:10.1016/S1004-9541(06)60121-3.

- C.-Y. Cheng, C.-C. Hsu, and M.-C. Chen, Adaptive kernel principal component analysis (KPCA) for monitoring small, Ind. Eng. Chem. Res. 49 (2010), pp. 2254–2262. doi:10.1021/ie900521b.

- C. Chakour, M.F. Harkat, and M. Djeghaba, New adaptive kernel principal component analysis for nonlinear dynamic process monitoring, Appl. Math. Inf. Sci. 9 (4) (2015), pp. 1833–1845.

- R. Fezai, M. Mansouri, O. Taouali, M.F. Harkat, and N. Bouguila, Online reduced kernel principal component analysis for process monitoring, J. Process Control 61 (2018), pp. 1–11. doi:10.1016/j.jprocont.2017.10.010.

- Y. Xiao and Y. He, A novel approach for analog fault diagnosis based on neural networks and improved kernel PCA, Neurocomputing 74 (2011), pp. 1102–1115. doi:10.1016/j.neucom.2010.12.003.

- A. Errachdi and M. Benrejeb, On-line identification using radial basis function neural network coupled with KPCA, Int. J. Gen. Syst. 45 (7) (2016), pp. 1–15.

- K.N. Reddy and V. Ravi, Differential evolution trained kernel principal component WNN and kernel binary quantile regression: Application to banking, Knowledge-Based Syst. 39 (2013), pp. 45–56. doi:10.1016/j.knosys.2012.10.003.

- I. Klevecka and J. Lelis, Pre-processing of input data of neural networks: The case of forecasting telecommunication network traffic, Telektronikk 104 (3/4) (2008), pp. 168–178.

- M. Shirzadeh, A. Amirkhani, A. Jalali, and M.R. Mosavi, An indirect adaptive neural control of a visual-based quadrotor robot for pursuing a moving target, ISA Trans. 59 (2015), pp. 290–302. doi:10.1016/j.isatra.2015.10.011.

- S.J. Yoo, J.B. Park, and Y.H. Choi, Indirect adaptive control of nonlinear dynamic systems using self recurrent wavelet neural networks via adaptive learning rates, Inf. Sci. 177 (2007), pp. 3074–3098. doi:10.1016/j.ins.2007.02.009.

- B. Schölkopf and A. Smola, Learning with Kernels, MIT Press, Cambridge, 2002.

- K.S. Narendra and K. Parthasarathy, Identification and control of dynamical systems using neural networks, IEEE Trans. Neural Networks 1 (1) (1990), pp. 4–27. doi:10.1109/72.80202.