ABSTRACT

Agent-based modelling is a powerful tool for simulating human systems, yet when human behaviour cannot be described by simple rules or by the maximization of one’s own profit, we quickly reach the limits of this methodology. Machine learning has the potential to bridge this gap by providing a link between what people observe and how they act in order to reach their goals. In this paper we use a framework for agent-based modelling that incorporates human values such as fairness, conformity and altruism. Using this framework, we simulate a public goods game and compare the results to experimental findings. We find good agreement between simulation and experiment and, furthermore, find that the presented framework outperforms strict reinforcement learning. Both the framework and the utility function are generic enough to be used for arbitrary systems, which makes this method a promising candidate for the foundation of a universal agent-based model.

1. Introduction

Agent-based models (ABMs) [Citation1–4] are rapidly gaining importance in various scientific fields [Citation5–12]. Current crises show us that, when predicting the future, human behaviour can be more challenging to model than any natural phenomenon, yet it is paramount to the time development of any system involving human beings. Sustainability research has been aware of this problem for quite some time [Citation13–16], but the field of virology has also found that human behaviour is difficult to predict [Citation17–19] and that ABMs could help mend this blind spot of many scientific communities [Citation20–22].

The core idea of ABMs is to shift the focus of a model from equations that try to describe macroscopic properties of a system (e.g. pressure and temperature of a gas) to properties of individual components of the system (e.g. position and velocity of each gas molecule). This way, not only can the macroscopic state of the system be calculated, but the states of individual components (i.e. agents) can also be observed. The dynamics of the system are then mainly driven by interactions of agents with other agents or with their environment. A detailed introduction to the concept of agent-based modelling can, for example, be found in [Citation23].

There are two factors that limit the wider use of ABMs: First of all, they are difficult to implement, since they usually rely on a set of rules that govern human behaviour, which are difficult to find [Citation24]. Secondly, and this relates more to using ABMs in scientific communities that are not experienced with this methodology, there is a large gap between the expectation and the capabilities of traditional ABMs: Often the expectation is that ABMs are inherently able to predict human decision making. In reality, describing this process is the most difficult part of designing an ABM [Citation25].

With the advance of machine learning and artificial intelligence [Citation26,Citation27], a solution to this predicament presents itself: We can use an artificial neural network to handle the decision-making of the agents. That way, we do not have to find complicated rules for agent behaviour, but only a utility function that agents want to optimize using the actions available to them. This combination of methods has been used successfully for various systems [Citation28–35]. A generic framework that makes use of this synergy was developed in [Citation36] and expanded with an iterative learning approach in [Citation37]. These two studies aimed to show that an ABM created from a generic framework using machine learning can lead to the same results as a model that was created manually, thereby shifting the problem of defining rules for agent behaviour to the much simpler problem of finding a utility function. In [Citation36] this was done for the Schelling Segregation model [Citation38], while [Citation37] investigated the Sugarscape model [Citation39], a system that is much more difficult to handle for machine learning, since the important states of the system cannot be reached by random decisions of the agents. This problem was solved by using an iterative learning approach. Furthermore, it was found that the framework has some unique advantages, which mainly stem from the fact that a trained neural network has more similarities to a human brain than hard-wired rules. Specifically, the ability to give different agents different experiences proves extremely helpful in modelling human beings [Citation36,Citation37].

Previous studies tried to replicate existing models, like the Schelling Segregation model [Citation38] or the Sugarscape model [Citation39]. In this work we will try to go one step beyond this and replicate actual human behaviour, extracted from a real-life experiment. The challenge here is, of course, that every human has different experiences and goals and that they do not always act in an optimal way. Rather, they act based on their experience and on certain values they hold, like altruism [Citation40,Citation41], fairness [Citation42] or conformity [Citation43,Citation44]. However, exactly for this reason, machine learning is a promising candidate to tackle this challenge.

The paper is organized as follows: Section 2.1 gives a short introduction to the modelling framework used. In Section 2.2 we construct a generic utility function, so that agent behaviour is more realistic than in a simple homo economicus approach. We then use the framework and the utility function to model a public goods game in Section 2.3. Results are compared to experimental findings and discussed in Section 3. Section 4 concludes by recapping the most important findings of this study and putting them into context.

2. Methods

2.1. The framework

The basic ABM framework used in this study is detailed in [Citation37], and the explanations given here closely follow that description. The main goal of the framework is to provide a generic and universal tool for developing ABMs. As in any other ABM, we have to define what agents can sense and what actions are available to them. However, instead of defining all the rules that govern the agents’ behaviour, we simply have to define one utility function that the agents want to optimize. For most systems, this is a significantly simpler problem. Think of a game of chess: Finding explicit rules on how agents should act given the position of each chess piece is nearly impossible. However, we know that they want to win the game, that they can observe all pieces on the board and which moves they can perform. The heavy lifting of finding the link between observation and the optimal action to reach the goal is then performed by a machine learning algorithm. This is closely related to reinforcement learning, yet there are some relevant differences [Citation37]. In detail, the framework has four distinct phases:

Initialization

Experience

Training

Application

In the initialization phase we have to define the agents, what they can sense, what they can do and what goal they want to reach, in the form of a utility function.

In the experience phase, agents make random decisions and save their experiences. One experience consists of what they sensed, what action they took and what result the evaluation of their utility function yielded. Note that the agents do not have direct access to their utility function, so initially they are unaware of any possible connections between their utility and the information they can observe. In that sense, the utility function is used as a black box: The agents know the result of the utility function, but do not know how it relates to the variables they can observe. More importantly, the agents cannot observe all the variables that are needed to calculate the utility function. Only through the use of the artificial neural network can they try to estimate the value of the utility function based on what they observe. Note that different agents can therefore learn different behaviour, depending on what they experienced.

In the training phase, the data gathered in the previous phase are used to train the neural network. Each agent has their own set of experiences and their own neural network. In order to keep the framework versatile, we use a hidden layer approach [Citation45,Citation46] utilizing cross validation [Citation47]. The artificial neural network is implemented using scikit-learn [Citation48]. We use a Multi-layer Perceptron regressor [Citation49] with a hidden layer consisting of 100 neurons and an adaptive learning rate. Its goal is to solve the regression problem of predicting the utility of every possible action in the current situation. For a large class of systems, it was shown that an iterative approach is necessary for successful training [Citation37], in order to avoid the sampling bias caused by taking random actions. In such cases, agents go back to the experience phase and make their decisions not randomly, but using their trained neural network.

In the application phase, the agents (now trained and making ’smart’ decisions) can be used for modelling purposes and observed as in any conventional ABM.
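The four phases can be illustrated with a minimal Python sketch built on scikit-learn’s `MLPRegressor`. Everything problem-specific is a hypothetical toy assumption: the agent observes a single number `obs` in [0, 1], chooses one of five actions, and only receives the value of a hidden utility function (`hidden_utility`) whose formula it never sees. Each agent keeps its own experiences and its own network, and the second round of experience gathering with the trained network mirrors the iterative approach. In scikit-learn an adaptive learning rate only takes effect with `solver="sgd"`, so the sketch assumes that solver; this is not necessarily the original configuration.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

ACTIONS = np.arange(5)  # hypothetical toy action set: 0, 1, 2, 3, 4


def hidden_utility(obs, action):
    """Hypothetical black-box utility; agents only ever see its returned value."""
    return -abs(action / 4 - obs)


class Agent:
    def __init__(self, seed):
        self.rng = np.random.default_rng(seed)
        self.experiences = []  # rows of (observation, action, utility)
        self.trained = False
        # Initialization: each agent owns a network with one hidden layer of 100
        # neurons; learning_rate="adaptive" is only used by the "sgd" solver.
        self.model = MLPRegressor(hidden_layer_sizes=(100,), solver="sgd",
                                  learning_rate="adaptive", max_iter=500,
                                  random_state=seed)

    def act(self, obs):
        if not self.trained:
            # Experience phase: random decisions
            return int(self.rng.choice(ACTIONS))
        # Trained agent: predict the utility of every action and take the best one
        X = np.column_stack([np.full(ACTIONS.size, obs), ACTIONS])
        return int(ACTIONS[np.argmax(self.model.predict(X))])

    def gather(self, n_steps):
        """Record (observation, action, utility) for n_steps decisions."""
        for _ in range(n_steps):
            obs = self.rng.random()
            action = self.act(obs)
            self.experiences.append((obs, action, hidden_utility(obs, action)))

    def train(self):
        # Training phase: regress the utility on (observation, action)
        data = np.array(self.experiences, dtype=float)
        self.model.fit(data[:, :2], data[:, 2])
        self.trained = True


agents = [Agent(seed) for seed in range(3)]
for agent in agents:
    agent.gather(500)  # random experiences
    agent.train()
    agent.gather(200)  # iteration: experiences gathered with the trained network
    agent.train()

# Application phase: the trained agents are used like agents in any other ABM
print([agent.act(0.5) for agent in agents])
```

Because each agent trains on its own random experiences, agents that share the same utility function can still end up with slightly different networks and hence different decisions.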

2.2. Replicating human behaviour

The overall goal of this study is to bridge the gap between expectation and capability of ABMs by providing a universal framework for ABMs that can replicate human behaviour. For this, we need to find a generic utility function that can be used for many different systems. This function has to meet certain requirements:

it should only include observables that are present in most systems

it should include properties that can be different for each individual

it should be able to describe human behaviour beyond homo economicus [Citation50,Citation51] by including values such as fairness and altruism

The first requirement is the strictest, since it means that we can only use variables that are present in any ABM. Since ABMs are extremely diverse, this list is short: All agents have some actions available to them and a goal that they want to reach. Everything else is highly dependent on the system itself. Since the actions are also system-dependent, we can only use the fact that the agents have some goal for constructing a utility function. For most systems, the goal, score or reward of agents is formulated as a payoff, similar to the payoff used in game theory. This payoff can now be used as a basis for our utility function. Note the difference: The payoff describes the score or reward of a single agent, and maximizing this payoff leads to the behaviour of a homo economicus. In contrast, the utility function should go beyond that. When thinking about relevant observables related to the payoff, an agent could use their own payoff or the payoff of others, each in relative or absolute terms. This leads to the observables presented in Table 1.

Table 1. Possible observables that can be used in a generic utility function

Interestingly, each of these observables can be linked to a personal value that governs human behaviour, as shown in Table 2.

Table 2. Personal values that relate to the relevance of the observables from Table 1

This will be the basis for constructing the utility function. We define self interest ($s$), altruism ($a$), conformity ($c$) and fairness ($f$) as values between 0 and 1, with the following meaning:

• $s$ is the payoff the agent wants to receive, given in relation to the maximally possible payoff

• $a$ is the payoff the agent wants others to receive, given in relation to the maximally possible payoff

• $c$ is the proportion (see Equation (3)) between its own payoff and the average payoff of others that the agent wants

• $f$ is a weighting factor that governs how the agent rates the fairness of all payoffs in relation to its own payoff, for states in which all other conditions are met

Additionally, we will use $p$, the payoff of the agent in relation to the maximally possible payoff, and $\bar{p}$, the average payoff of the other agents in relation to the maximally possible payoff.

Mathematically, the utility function consists of three main cost terms, i.e. conditions that need to be met, and an additional reward term that helps in comparing two states in which the cost terms yield the same result. The first term is the self interest cost $C_s$. It is zero if the agent gets at least the payoff it wants to receive; otherwise there is a cost linearly connected to the gap between the received payoff of the agent $p$ and the required payoff $s$:

$$C_s = \max(0,\, s - p) \tag{1}$$

The next cost term is the altruism cost $C_a$. It is constructed in a similar way as the self interest cost, but relates to the average payoff of other agents $\bar{p}$ and the agent’s altruism $a$:

$$C_a = \max(0,\, a - \bar{p}) \tag{2}$$

The final cost term is the conformity cost $C_c$. It is zero if the payoff of the agent $p$ is close enough to the average payoff of other agents $\bar{p}$. The allowed gap is given by the agent’s conformity $c$. If the gap is too big, i.e. the agent’s payoff is either too low or too high, the resulting cost depends linearly on the size of the gap. For the mathematical definition of this cost term, it makes sense to define a proportion term $r$ as

$$r = \begin{cases} 1, & \text{if } p = \bar{p} = 0,\\[4pt] \dfrac{\min(p,\, \bar{p})}{\max(p,\, \bar{p})}, & \text{otherwise,} \end{cases} \tag{3}$$

which yields $r = 1$ if both payoffs are equal and a non-negative value smaller than 1 otherwise. With these definitions, the conformity cost $C_c$ can be expressed as

$$C_c = \max(0,\, c - r) \tag{4}$$

Besides these three cost terms, which decrease the utility, we also need additional terms that increase the utility if the agent has a high payoff or if the distribution of the payoffs is fair, i.e. has a low Gini coefficient $G$ [Citation52,Citation53]. The weighting between these somewhat contradictory conditions is given by the agent’s fairness $f$. Thus, we can define a reward function $R$ as

$$R = (1 - f)\,p + f\,(1 - G) \tag{5}$$

That means that agents with a fairness of 0 only gain utility from their own payoff, while agents with a fairness of 1 only gain utility from a low Gini coefficient.

When combining the cost terms with the reward term, it is important to find a weighting that ensures that the cost terms are the dominant contributions. Since no term can be larger than 1 by definition, this can easily be ensured by multiplying the cost terms by a factor, similar to a Lagrange multiplier. Here a factor of 10 was chosen, meaning that even the maximal reward $R = 1$ can only compensate for a single cost term of $0.1$. Thus, the complete utility function reads

$$U = R - 10\,C \tag{6}$$

with

$$C = C_s + C_a + C_c.$$

We have now constructed a generic utility function that satisfies the conditions given above and that enables us to try to replicate human behaviour in an ABM using the presented framework.
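As a sketch of how this utility function could be evaluated, the Python code below assumes piecewise-linear forms that follow the verbal definitions: the costs are the shortfalls `max(0, s - p)`, `max(0, a - p_bar)` and `max(0, c - r)`, where `r` is the ratio of the smaller to the larger of `p` and `p_bar` (taken as 1 if both are zero), the reward is `(1 - f) * p + f * (1 - G)`, and the costs are weighted by 10. The function names and the choice to compute the Gini coefficient `G` over the relative payoffs of all group members are assumptions for illustration.

```python
import numpy as np


def gini(values):
    """Gini coefficient of non-negative values (0 means perfect equality)."""
    x = np.sort(np.asarray(values, dtype=float))
    n = x.size
    if n == 0 or x.sum() == 0:
        return 0.0
    ranks = np.arange(1, n + 1)
    # Standard formula for values sorted in ascending order
    return float((2 * ranks - n - 1) @ x / (n * x.sum()))


def utility(p, others, s, a, c, f, weight=10.0):
    """Utility of an agent with relative payoff p, given the relative payoffs
    `others` of the other agents (all forms below are assumptions)."""
    p_bar = float(np.mean(others))
    cost_self = max(0.0, s - p)          # self interest cost
    cost_altruism = max(0.0, a - p_bar)  # altruism cost
    if max(p, p_bar) == 0:               # proportion term r
        r = 1.0
    else:
        r = min(p, p_bar) / max(p, p_bar)
    cost_conformity = max(0.0, c - r)    # conformity cost
    reward = (1 - f) * p + f * (1 - gini([p, *others]))
    return reward - weight * (cost_self + cost_altruism + cost_conformity)
```

For example, a pure self-interested agent (`s=1`, all other values 0) obtains a utility of 1.0 when it and everyone else receive the maximal payoff, whereas at half the maximal payoff the self interest cost dominates and the utility drops to -4.5.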

2.3. Testing the framework using a public goods game

In order to test the capabilities of the framework, we will take a look at a system in which human behaviour is quite counter-intuitive and obviously not only governed by maximizing one’s own payoff, namely the public goods game [Citation54]. There are various versions of the public goods game [Citation55–57], yet they all rely on the same principles: Each member of a group is given a certain endowment in the form of monetary units. Each individual can then decide how much (if any) of this endowment they want to contribute to the public good and which part they want to keep for themselves. All contributions to the public good are multiplied by an enhancement factor and then distributed evenly among all individuals, independent of their own contribution. This setup leads to a social dilemma: The maximal public good is reached when everybody invests everything, yet the maximal personal payoff is reached when a person invests nothing, independent of the contributions of others (as long as the enhancement factor is smaller than the group size). In game theory, the action of not investing anything is called a dominant strategy, since it yields the best result in all circumstances. Thus, game theory predicts that each person should choose this action.
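The social dilemma can be checked numerically. The Python sketch below uses the payoff rule described in this paragraph (the kept part of the endowment plus an equal share of the enhanced public good); the endowment of 20 units follows the experiment discussed below, whereas the group size `N = 4` and the enhancement factor `K = 1.6` are assumptions chosen for illustration. For any contributions of the others, contributing nothing maximizes one’s own payoff, although everyone is better off if all contribute everything.

```python
ENDOWMENT = 20  # monetary units per player
N = 4           # group size (assumption)
K = 1.6         # enhancement factor (assumption; the dilemma requires 1 < K < N)


def payoff(own, others):
    """Kept endowment plus an equal share of the enhanced public good."""
    total = own + sum(others)
    return ENDOWMENT - own + K / N * total


def best_response(others):
    """Own contribution (0..ENDOWMENT) that maximizes one's own payoff."""
    return max(range(ENDOWMENT + 1), key=lambda own: payoff(own, others))


# Free-riding is dominant: the best response is 0 whatever the others do ...
print({avg: best_response([avg] * (N - 1)) for avg in (0, 5, 10, 20)})
# ... yet full cooperation beats universal free-riding
print(payoff(20, [20] * (N - 1)), payoff(0, [0] * (N - 1)))
```

Each unit contributed costs its owner one unit but returns only `K / N` units to them, which is why the best response does not depend on the others as long as `K < N`.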

However, human decision making is not that simple. Many other factors, such as fairness [Citation58,Citation59] or altruism [Citation60–62], influence one’s decision. When observing real people in a setting similar to a public goods game, one often finds evidence of cooperation. One of the most prominent examples is a study by Fischbacher et al. [Citation63], in which an experiment was performed to test how people behave in a simple public goods game. The experimental setup was designed so that it was possible to extract which investment people would choose given the contribution of others without having to play the public goods game over several rounds, thereby avoiding learning effects and effects of reciprocation.

Fischbacher et al. found that, even though all participants understood the rules of the game, they followed three different strategies. The simplest strategy, termed free-riding, is to never contribute anything to the public good. Furthermore, they found behaviour called conditional cooperation, i.e. investing an amount similar to the other group members, but slightly biased towards one’s own payoff. The third strategy was called hump-shaped, since people following this strategy behave similarly to conditional cooperators when the overall contribution is low, but invest less and less as the contribution of others increases. This gives us an ideal opportunity to test whether we can replicate these strategies using the presented framework.

We will start by defining which actions are available to our agents. We will use the same values as Fischbacher et al., so the agents can contribute anything from 0 to 20 monetary units. What they can observe is also clear: In the experiment, participants were informed about what the other group members invested on average, so this is what we will use as sensory input in the model. The payoff $\pi_i$ of agent $i$ is then simply given as

$$\pi_i = 20 - x_i + \frac{k}{N}\,X \tag{7}$$

with the own investment $x_i$, the sum of all investments $X$, the enhancement factor $k$ and the number of group members $N$. Note that $X$ can also be calculated from the average investment of others $\bar{x}$, which is the sum over all investments minus the investment of the agent itself, divided by $N - 1$:

$$\bar{x} = \frac{X - x_i}{N - 1} \quad\Longleftrightarrow\quad X = x_i + (N - 1)\,\bar{x} \tag{8}$$

As utility function, we will use Equation (6), as derived in the previous section.

According to the steps of the framework we will now train the agents using random decisions. An iterative form is possible, but not required, since all the possible states of the system can, in principle, be reached using random decisions.

In the application phase we now try to reproduce the experiment performed by Fischbacher et al. [Citation63]. In the model, this can be done in a straightforward way, since we can test how agents would behave, given a hypothetical investment of the other agents, without using this data for training, therefore circumventing complications like reciprocity.
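The experience, training and application phases for this game can be sketched end to end in Python. Several assumptions are made for illustration only: a group size `N = 4` and an enhancement factor `K = 1.6`; payoffs normalized by the largest payoff a player can obtain (free-riding on three full contributors); a single focal agent that uses only self interest and altruism (conformity and fairness set to zero, as in the first parameter scan described below), so that its utility reduces to `p - 10 * (max(0, s - p) + max(0, a - p_bar))`; and network inputs scaled to [0, 1]. In the application phase, the trained network is queried for every hypothetical average contribution of the others, which yields a conditional contribution schedule; the schedule depends on the random seed and on training, so no particular shape should be read from this sketch.

```python
import numpy as np
from sklearn.neural_network import MLPRegressor

ENDOWMENT, N, K = 20, 4, 1.6  # N and K are assumptions
ACTIONS = np.arange(ENDOWMENT + 1)  # contributions 0..20
MAX_PAYOFF = ENDOWMENT + K / N * (N - 1) * ENDOWMENT  # free-riding on full contributors


def payoffs(investments):
    """Payoff of every group member, given all investments."""
    return ENDOWMENT - investments + K / N * investments.sum()


def utility(p, p_bar, s, a):
    """Assumed special case c = f = 0: reward p minus weighted costs."""
    return p - 10 * (max(0.0, s - p) + max(0.0, a - p_bar))


def experience(s, a, n_games, rng):
    """Random decisions; rows of (observed mean of others, own investment, utility)."""
    rows = []
    for _ in range(n_games):
        inv = rng.integers(0, ENDOWMENT + 1, size=N)  # index 0 is the focal agent
        rel = payoffs(inv) / MAX_PAYOFF
        rows.append((inv[1:].mean(), inv[0], utility(rel[0], rel[1:].mean(), s, a)))
    return np.array(rows, dtype=float)


def train(data, seed=0):
    """Training phase: 100 hidden neurons, adaptive learning rate (sgd solver)."""
    model = MLPRegressor(hidden_layer_sizes=(100,), solver="sgd",
                         learning_rate="adaptive", max_iter=500, random_state=seed)
    model.fit(data[:, :2] / ENDOWMENT, data[:, 2])  # inputs scaled to [0, 1]
    return model


def contribution_schedule(model):
    """Application phase: best predicted contribution for each hypothetical mean of others."""
    schedule = []
    for x_bar in range(ENDOWMENT + 1):
        X = np.column_stack([np.full(ACTIONS.size, x_bar), ACTIONS]) / ENDOWMENT
        schedule.append(int(ACTIONS[np.argmax(model.predict(X))]))
    return schedule


rng = np.random.default_rng(1)
data = experience(s=0.5, a=0.5, n_games=3000, rng=rng)
model = train(data)
schedule = contribution_schedule(model)
print(schedule)
```

Querying the network with hypothetical observations never feeds those answers back into training, which is the property that lets the model sidestep reciprocity.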

Naturally, the results of this experiment will depend on the personal values (self interest, conformity, altruism and fairness) that we choose for the agents. Therefore, we will first ignore conformity and fairness and focus on the interplay between self interest and altruism, scanning this parameter space qualitatively. After that, we will try to find reasonable values for these parameters and see whether we can replicate the results obtained by Fischbacher et al.

In order to use the given utility function for modelling purposes, the use of a neural network is absolutely necessary. Since the agents do not have access to all the data required for evaluating the utility function, conventional optimization techniques fail. The Gini coefficient, needed for evaluating the reward term of the utility function, is an example of such missing information. Since only the average investment of the other agents is known, there is no way of obtaining information about the distribution of investments and therefore the distribution of payoffs. Furthermore, many terms of the utility function depend on the behaviour of the other agents rather than on the agent’s own behaviour. This makes the optimization problem exceptionally complex: The agent can only choose its own action, and the influence of this action also depends on the personal values of the other agents and on what they have learned. However, using a neural network offers the possibility to grasp such complex correlations and makes the attempt of modelling this system feasible.
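The missing information can be made concrete with a small Python example, assuming a group size `N = 4`, an enhancement factor `K = 1.6` and the 20-unit endowment (the first two are assumptions). In both groups below the focal agent invests 10 units and observes an average investment of 10 units by the others, so its observation and its own payoff are identical; the payoff distributions, and therefore the Gini coefficient entering the reward term, nevertheless differ.

```python
import numpy as np

ENDOWMENT, N, K = 20, 4, 1.6  # N and K are assumptions


def payoffs(investments):
    """Payoff of every group member, given all investments."""
    inv = np.asarray(investments, dtype=float)
    return ENDOWMENT - inv + K / N * inv.sum()


def gini(values):
    """Gini coefficient of non-negative values (0 means perfect equality)."""
    x = np.sort(np.asarray(values, dtype=float))
    n = x.size
    if x.sum() == 0:
        return 0.0
    ranks = np.arange(1, n + 1)
    return float((2 * ranks - n - 1) @ x / (n * x.sum()))


# Focal agent (index 0) invests 10; the others invest 10 on average in both groups
equal_group = [10, 10, 10, 10]
unequal_group = [10, 0, 10, 20]

for group in (equal_group, unequal_group):
    pay = payoffs(group)
    print(f"observed mean of others = {np.mean(group[1:])}, "
          f"own payoff = {pay[0]}, Gini = {gini(pay):.3f}")
```

An agent that only sees the average of the others cannot tell these two situations apart, so it can only learn the expected effect of its action from experience.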

3. Results

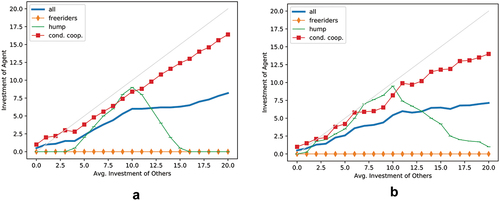

To see which qualitative behaviour is possible within the framework, we perform a parameter sweep for altruism. We keep self interest constant and vary altruism from low to high values. For each value, we train an agent and see how it reacts, given the contribution of others. Results of this simulation are presented in Figure 1, showing the average investments of 10 agents with identical personal values.

Figure 1. Agent behaviour for different values of altruism. The y-axis shows the contribution of the agent, the x-axis shows the average contribution of all other agents.

We find that all three major strategies observed by Fischbacher et al. are present: For low altruism we see free-riding, for medium altruism we see a hump and for high altruism we observe conditional cooperation.

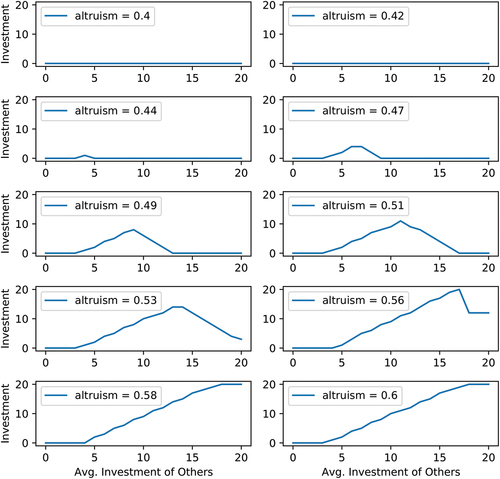

Now that we know that the utility function is in principle capable of producing all observed behaviours, we can try to find reasonable values for the three strategies and see how well they match the experimental observations. The easiest strategy to emulate is free-riding. Since the goal of free-riding is to optimize one’s own payoff, we can simply choose $s = 1$ and keep the other values at 0, meaning that the only relevant goal of the agent is to obtain the maximally obtainable payoff. For the hump-shaped behaviour we can use a mix of altruism and self interest. Setting both of these variables to $0.5$ means that the agent wants 50% of the maximal payoff both for itself and for other agents. If these conditions are met, solutions with a higher payoff for the agent itself are preferred, since $f = 0$. For modelling conditional cooperation we can use not only altruism, but also fairness or conformity. Here we will use conformity, meaning that agents are satisfied as long as their payoff is not more than 20% lower than the average and the average payoff is not more than 20% lower than their own payoff (see Equation (4)).
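The parameter choices for the three strategies can be written down compactly. The Python sketch below is illustrative only: values not stated in the text are set to 0, and it assumes that the proportion term `r` is the ratio of the smaller to the larger of the two relative payoffs (1 if both are zero) and that the conformity cost vanishes when `r >= c`, in which case a conformity value of `c = 0.8` corresponds to the 20% tolerance described above. The dictionary layout and function names are hypothetical.

```python
# Personal values for the three strategies. Unstated values are set to 0, and
# c = 0.8 for conditional cooperation is an assumption matching the "20 %"
# tolerance under the ratio form of r assumed below.
STRATEGIES = {
    "free-riding": {"s": 1.0, "a": 0.0, "c": 0.0, "f": 0.0},
    "hump-shaped": {"s": 0.5, "a": 0.5, "c": 0.0, "f": 0.0},
    "conditional cooperation": {"s": 0.0, "a": 0.0, "c": 0.8, "f": 0.0},
}


def proportion(p, p_bar):
    """Assumed proportion term: smaller over larger relative payoff (1 if both are 0)."""
    if max(p, p_bar) == 0:
        return 1.0
    return min(p, p_bar) / max(p, p_bar)


def conformity_satisfied(p, p_bar, c):
    """True when the (assumed) conformity cost max(0, c - r) is zero."""
    return proportion(p, p_bar) >= c


c = STRATEGIES["conditional cooperation"]["c"]
for p, p_bar in [(0.5, 0.6), (0.45, 0.6), (0.6, 0.5), (0.6, 0.45)]:
    print(p, p_bar, conformity_satisfied(p, p_bar, c))
```

Under these assumptions, an own payoff about 17% below the average (0.5 versus 0.6) is still accepted, whereas 25% below (0.45 versus 0.6) is not, and the same holds symmetrically when the agent earns more than the others.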

Using these values, we try to replicate the experiment performed by Fischbacher et al. We train agents using the values determined above and compare their investments with the experimental results. For this, we model an agent population of the same size and strategy composition as in the original experiment and assign personal values to the agents as explained above. After a training phase in which they play the public goods game, the agents face the same question as in the experiment: What would they invest, given a certain average investment of the other group members? While in the real experiment this process was rather intricate, to ensure that people respond honestly and without being influenced, in the model it is much simpler: We can directly observe how an agent behaves, given the average investment of others, and compare this behaviour to that of the participants of the original experiment. This comparison can be seen in Figure 2. The left side shows the simulation results, while the right side shows the experimental results from [Citation63].

We find that all three strategies are replicated at least qualitatively. Note that the numbers chosen for the personal values were not determined by any form of optimization, but rather by reasonable assumptions. This means that it would be possible to bring the simulation even closer to the experiment by fine-tuning all parameters. However, this was not the goal of this study. We wanted to show that, even without fine-tuning, we can reproduce human behaviour patterns based on simple assumptions.

Beyond the qualitative similarity between experiment and simulation, we also see some quantitative matches: The hump-shaped strategy has a maximal investment of 10 units at an average investment of 10 units in both simulation and experiment. The maximal investments of conditional cooperators and of all agents combined also match closely.

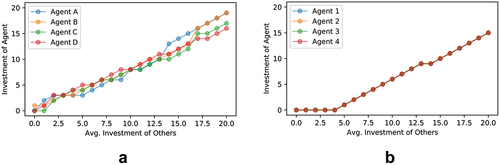

Note that this result differs from what would be obtained with strict reinforcement learning. The goal of reinforcement learning is to obtain an optimal result, i.e. to give the agents all the available information as well as the function they need to optimize, and then to train until they make perfect predictions. In contrast, here we only provide the information humans would have access to and do not provide the utility function itself to the agents. Training is performed until a certain prediction accuracy is reached, but this threshold is chosen below 100% to reflect the fact that people have no accurate, quantitative understanding of their own utility function. Here, this threshold is set to 99.0%, but for thresholds between 95% and 99.9% the results look qualitatively similar. Note that using the prediction accuracy of the neural network is only one way of modelling the inaccuracy of human predictions. While other techniques, like adding noise to the input or output of the neural network, may give more control, using the prediction accuracy has a significant advantage: Any kind of (white) noise averages out over the course of training and will be ignored or averaged by the agents, having little influence on their behaviour outside the training phase. Only by using the prediction accuracy to stop the training at a given point can we make lasting changes to the trained network and therefore to the behaviour of the agents. The inclusion of this human inaccuracy drastically improves the realism of the framework. This can be seen in Figure 3, which compares the results of the framework with those of traditional reinforcement learning.
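Stopping training at a prescribed prediction accuracy can be sketched with scikit-learn’s incremental `partial_fit`. The sketch assumes that prediction accuracy is measured as the coefficient of determination R² (what `MLPRegressor.score` returns) on a held-out validation split; this metric, the helper name `train_until_accuracy` and the toy regression target are assumptions for illustration. Because training is deterministic for a fixed seed, a lower threshold never needs more epochs than a higher one.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor


def train_until_accuracy(X, y, threshold, max_epochs=300, seed=0):
    """Train one epoch at a time; stop once the validation R^2 reaches `threshold`."""
    X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.2,
                                                random_state=seed)
    model = MLPRegressor(hidden_layer_sizes=(100,), solver="sgd",
                         learning_rate="adaptive", random_state=seed)
    epochs, score = 0, -np.inf
    while epochs < max_epochs and score < threshold:
        model.partial_fit(X_tr, y_tr)      # one pass over the training data
        epochs += 1
        score = model.score(X_val, y_val)  # R^2 on held-out data (assumed metric)
    return model, epochs, score


# Hypothetical smooth toy target standing in for the utility of (observation, action)
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(1000, 2))
y = X[:, 0] ** 2 + 0.5 * X[:, 1]

for threshold in (0.95, 0.99):
    _, epochs, score = train_until_accuracy(X, y, threshold)
    print(f"threshold {threshold}: stopped after {epochs} epochs, R^2 = {score:.3f}")
```

Stopping early leaves a systematic, reproducible prediction error in the network, unlike input noise, which tends to average out during training.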

Figure 3. The difference between the presented framework (left) and strict reinforcement learning (right).

Here we see the behaviour of four agents with the same personal values (those used for conditional cooperation). The left side depicts their decisions when this ABM framework is used; the right side shows the result of reinforcement learning. We see two important differences: First, using reinforcement learning leads to the same behaviour for all agents, since they can calculate the effect of each of their actions exactly, independent of the experiences they gathered. Second, we notice some form of risk aversion: In the left panel, agents choose an investment that they are sure will satisfy their need for conformity, while the agents in the right panel choose the absolute minimal investment that meets this requirement.

4. Conclusion

We used a framework for agent-based modelling based on machine learning to model a public goods game. Contrary to the traditional approach, we did not use the payoff of each agent as the sole determinant of an agent’s goal, but rather a universal utility function that includes the agent’s own payoff and the payoff of others to calculate a reward or score for this agent, taking into account its personal values. In order to make this function generic, we considered the relevance of the agent’s own payoff and the payoff of others in both relative and absolute terms, leading to the four values self interest, conformity, altruism and fairness, defined in Section 2.2.

We then compared our simulation results with the experimental results of Fischbacher et al. [Citation63] and found good agreement. This means that the presented framework and utility function are good candidates for modelling various systems from game theory and beyond. We also found that the framework outperforms strict reinforcement learning in terms of realistic agent behaviour (see Figure 3). Even when exactly the same utility function is used, reinforcement learning leads to rigid behaviour, while the framework can produce agents with the same values that still act differently because they gathered different experiences. This leads to a much more realistic description of human behaviour.

Regarding applicability, the framework can be used for most multi-agent systems. If the rules of behaviour are clear and simple to formulate, however, there is no real benefit in using the framework, as the traditional ABM approach leads to the same behaviour. For systems in which

the decision process is complex,

parts of the relevant information are hidden from the agents, or

agents have different values and goals,

using the framework offers a significant advantage. Still, for systems in which the goal of the agents is completely unknown, the framework cannot be used in a straightforward way. Nevertheless, the framework could be used to test different ideas for goals, values or utility functions to see which of them produce the observed behaviour, similar to using traditional ABMs to reverse-engineer rules for behaviour [Citation64,Citation65].

An interesting extension of the framework would be to include the effects of social networks. In the current implementation, every agent can observe every other agent (as was the case in the experiment to which we compared our results). In a larger system, however, people can only observe others who are in some way connected to them. Using the link strength as a weighting factor in the utility function would even enable us to give higher importance to the well-being of close relatives than to that of casual acquaintances. Negative link strengths could even describe some kind of rivalry, which would further improve the realism of the framework. While such an extension would add nothing substantial to this study, it would be an interesting way of further expanding the framework’s scope.

One of the main goals of this framework was to keep it generic enough to work for nearly all systems that can be modelled using an ABM. Both the framework itself and the utility function presented here satisfy these demands. However, it is still not clear whether this formulation of the utility function is the optimal choice for all systems. We showed that it succeeds in replicating realistic human behaviour in the public goods game, yet more testing is necessary before the framework can be released as open source code. Reaching that goal, however, would be a significant step for agent-based modelling, since it would give the scientific community a flexible tool for creating models that can realistically depict human behaviour, even in research communities that are not yet familiar with the method of agent-based modelling.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- S. Abar, G.K. Theodoropoulos, P. Lemarinier, and G.M. O’Hare, Agent based modelling and simulation tools: A review of the state-of-art software, Comput. Sci. Rev. 24 (2017), pp. 13–33. doi:10.1016/j.cosrev.2017.03.001.

- H.R. Parry and M. Bithell, Large scale agent-based modelling: A review and guidelines for model scaling, in Agent-based Models of Geographical Systems, A.J. Heppenstall, A.T. Crooks, L.M. See, and M. Batty, eds., Springer, Heidelberg, 2012, pp. 271–308.

- L. An, Modeling human decisions in coupled human and natural systems: Review of agent-based models, Ecol. Modell. 229 (2012), pp. 25–36. doi:10.1016/j.ecolmodel.2011.07.010.

- M. Barbati, G. Bruno, and A. Genovese, Applications of agent-based models for optimization problems: A literature review, Expert. Syst. Appl. 39 (2012), pp. 6020–6028. doi:10.1016/j.eswa.2011.12.015.

- P. Hansen, X. Liu, and G.M. Morrison, Agent-based modelling and socio-technical energy transitions: A systematic literature review, Energy Res. Social Sci. 49 (2019), pp. 41–52. doi:10.1016/j.erss.2018.10.021.

- M. Lippe, M. Bithell, N. Gotts, D. Natalini, P. Barbrook-Johnson, C. Giupponi, M. Hallier, G.J. Hofstede, C. Le Page, R.B. Matthews et al., Using agent-based modelling to simulate social-ecological systems across scales, GeoInformatica 23 (2019), pp. 269–298. doi:10.1007/s10707-018-00337-8.

- H. Zhang and Y. Vorobeychik, Empirically grounded agent-based models of innovation diffusion: A critical review, Artif. Intell. Rev. 52 (2019), pp. 707–741. doi:10.1007/s10462-017-9577-z.

- G. Letort, A. Montagud, G. Stoll, R. Heiland, E. Barillot, P. Macklin, A. Zinovyev, and L. Calzone, Physiboss: A multi-scale agent-based modelling framework integrating physical dimension and cell signalling, Bioinformatics 35 (2019), pp. 1188–1196. doi:10.1093/bioinformatics/bty766.

- T. Haer, W.W. Botzen, and J.C. Aerts, Advancing disaster policies by integrating dynamic adaptive behaviour in risk assessments using an agent-based modelling approach, Environ. Res. Lett. 14 (2019), pp. 044022. doi:10.1088/1748-9326/ab0770.

- D. Geschke, J. Lorenz, and P. Holtz, The triple-filter bubble: Using agent-based modelling to test a meta-theoretical framework for the emergence of filter bubbles and echo chambers, Br. J. Soc. Psychol. 58 (2019), pp. 129–149. doi:10.1111/bjso.12286.

- E.R. Groff, S.D. Johnson, and A. Thornton, State of the art in agent-based modeling of urban crime: An overview, J. Quant. Criminol. 35 (2019), pp. 155–193. doi:10.1007/s10940-018-9376-y.

- G. Fagiolo, M. Guerini, F. Lamperti, A. Moneta, and A. Roventini, Validation of agent-based models in economics and finance, in Computer Simulation Validation, Springer, Heidelberg, 2019, pp. 763–787.

- L. Whitmarsh, S. O’Neill, and I. Lorenzoni, eds., Engaging the Public with Climate Change: Behaviour Change and Communication, Routledge, Abingdon-on-Thames, 2012.

- J. Urry, Climate Change and Society, in Why the Social Sciences Matter, Springer, Heidelberg, 2015, pp. 45–59.

- B. Beckage, L.J. Gross, K. Lacasse, E. Carr, S.S. Metcalf, J.M. Winter, P.D. Howe, N. Fefferman, T. Franck, A. Zia et al., Linking models of human behaviour and climate alters projected climate change, Nat. Clim. Chang. 8 (2018), pp. 79–84. doi:10.1038/s41558-017-0031-7.

- S. Clayton, P. Devine-Wright, P.C. Stern, L. Whitmarsh, A. Carrico, L. Steg, J. Swim, and M. Bonnes, Psychological research and global climate change, Nat. Clim. Chang. 5 (2015), pp. 640–646. doi:10.1038/nclimate2622.

- N. Chater, Facing up to the uncertainties of covid-19, Nat. Hum. Behav. 4 (2020), pp. 439. doi:10.1038/s41562-020-0865-2.

- J.J. Van Bavel, K. Baicker, P.S. Boggio, V. Capraro, A. Cichocka, M. Cikara, M.J. Crockett, A.J. Crum, K.M. Douglas, J.N. Druckman et al., Using social and behavioural science to support covid-19 pandemic response, Nat. Hum. Behav. 4 (2020), pp. 460–471.

- C. Betsch, How behavioural science data helps mitigate the covid-19 crisis, Nat. Hum. Behav. 4 (2020), pp. 438. doi:10.1038/s41562-020-0866-1.

- E. Cuevas, An agent-based model to evaluate the covid-19 transmission risks in facilities, Comput. Biol. Med. 121 (2020), pp. 103827. doi:10.1016/j.compbiomed.2020.103827.

- C.C. Kerr, R.M. Stuart, D. Mistry, R.G. Abeysuriya, G. Hart, K. Rosenfeld, P. Selvaraj, R.C. Nunez, B. Hagedorn, L. George et al., Covasim: An agent-based model of covid-19 dynamics and interventions, PLoS Comput. Biol. 17 (2021), pp. e1009149.

- P.C. Silva, P.V. Batista, H.S. Lima, M.A. Alves, F.G. Guimarães, and R.C. Silva, Covid-abs: An agent-based model of covid-19 epidemic to simulate health and economic effects of social distancing interventions, Chaos Solitons Fractals 139 (2020), pp. 110088. doi:10.1016/j.chaos.2020.110088.

- C.M. Macal, Everything you need to know about agent-based modelling and simulation, J. Simul. 10 (2016), pp. 144–156. doi:10.1057/jos.2016.7.

- A. Klabunde and F. Willekens, Decision-making in agent-based models of migration: State of the art and challenges, Eur. J. Popul. 32 (2016), pp. 73–97. doi:10.1007/s10680-015-9362-0.

- L. An, V. Grimm, and B.L. Turner II, Meeting grand challenges in agent-based models, J. Artif. Soc. Social Simul. 23 (2020). doi:10.18564/jasss.4012.

- D. Dhall, R. Kaur, and M. Juneja, Machine learning: A review of the algorithms and its applications, Proceedings of ICRIC 2019, Jammu, India, 2020, pp. 47–63.

- P.C. Sen, M. Hajra, and M. Ghosh, Supervised classification algorithms in machine learning: A survey and review, in Emerging Technology in Modelling and Graphics, Springer, Heidelberg, 2020, pp. 99–111.

- E. Samadi, A. Badri, and R. Ebrahimpour, Decentralized multi-agent based energy management of microgrid using reinforcement learning, Int. J. Electr. Power Energy Syst. 122 (2020), pp. 106211. doi:10.1016/j.ijepes.2020.106211.

- C. Bone and S. Dragićević, Simulation and validation of a reinforcement learning agent-based model for multi-stakeholder forest management, Comput. Environ. Urban Syst. 34 (2010), pp. 162–174. doi:10.1016/j.compenvurbsys.2009.10.001.

- A. Jalalimanesh, H.S. Haghighi, A. Ahmadi, and M. Soltani, Simulation-based optimization of radiotherapy: Agent-based modeling and reinforcement learning, Math. Comput. Simul. 133 (2017), pp. 235–248. doi:10.1016/j.matcom.2016.05.008.

- I. Jang, D. Kim, D. Lee, and Y. Son, An agent-based simulation modeling with deep reinforcement learning for smart traffic signal control, in 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea. IEEE, 2018, pp. 1028–1030.

- S. Hassanpour, A.A. Rassafi, V. Gonzalez, and J. Liu, A hierarchical agent-based approach to simulate a dynamic decision-making process of evacuees using reinforcement learning, J. Choice Modell. 39 (2021), pp. 100288. doi:10.1016/j.jocm.2021.100288.

- I. Maeda, D. deGraw, M. Kitano, H. Matsushima, H. Sakaji, K. Izumi, and A. Kato, Deep reinforcement learning in agent based financial market simulation, J. Risk Financ. Manage. 13 (2020), pp. 71. doi:10.3390/jrfm13040071.

- A. Collins, J. Sokolowski, and C. Banks, Applying reinforcement learning to an insurgency agent-based simulation, J. Def. Model. Simul. 11 (2014), pp. 353–364. doi:10.1177/1548512913501728.

- E. Sert, Y. Bar-Yam, and A.J. Morales, Segregation dynamics with reinforcement learning and agent based modeling, Sci. Rep. 10 (2020), pp. 1–12. doi:10.1038/s41598-020-68447-8.

- G. Jäger, Replacing rules by neural networks: A framework for agent-based modelling, Big Data Cognit. Comput. 3 (2019), pp. 51. doi:10.3390/bdcc3040051.

- G. Jäger, Using neural networks for a universal framework for agent-based models, Math. Comput. Model. Dyn. Syst. 27 (2021), pp. 162–178. doi:10.1080/13873954.2021.1889609.

- T.C. Schelling, Dynamic models of segregation, J. Math. Sociol. 1 (1971), pp. 143–186. doi:10.1080/0022250X.1971.9989794.

- J.M. Epstein and R. Axtell, Growing Artificial Societies: Social Science from the Bottom Up, Brookings Institution Press, Washington, DC, 1996.

- E. Fehr and U. Fischbacher, The nature of human altruism, Nature 425 (2003), pp. 785–791. doi:10.1038/nature02043.

- J.A. Piliavin and H.W. Charng, Altruism: A review of recent theory and research, Annu. Rev. Sociol. 16 (1990), pp. 27–65. Available at http://www.jstor.org/stable/2083262.

- K. McAuliffe, P.R. Blake, N. Steinbeis, and F. Warneken, The developmental foundations of human fairness, Nat. Hum. Behav. 1 (2017), pp. 0042. doi:10.1038/s41562-016-0042.

- T. Morgan and K. Laland, The biological bases of conformity, Front. Neurosci. 6 (2012), pp. 87. Available at https://www.frontiersin.org/article/10.3389/fnins.2012.00087.

- J.C. Coultas, When in Rome … an evolutionary perspective on conformity, Group Processes Intergroup Relat. 7 (2004), pp. 317–331. doi:10.1177/1368430204046141.

- L.K. Hansen and P. Salamon, Neural network ensembles, IEEE Trans. Pattern Anal. Mach. Intell. 12 (1990), pp. 993–1001. doi:10.1109/34.58871.

- A. Krizhevsky, I. Sutskever, and G.E. Hinton, ImageNet classification with deep convolutional neural networks, in Proceedings of the Advances in Neural Information Processing Systems, MIT Press, Lake Tahoe, NV, USA, 2012, pp. 1097–1105.

- A. Krogh and J. Vedelsby, Neural network ensembles, cross validation, and active learning, in Proceedings of the 7th International Conference on Neural Information Processing Systems, MIT Press, Cambridge, MA, USA, 1995.

- F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg et al., Scikit-learn: Machine learning in Python, J. Mach. Learn. Res. 12 (2011), pp. 2825–2830.

- F. Murtagh, Multilayer perceptrons for classification and regression, Neurocomputing 2 (1991), pp. 183–197. doi:10.1016/0925-2312(91)90023-5.

- H. Gintis, Beyond homo economicus: Evidence from experimental economics, Ecol. Econ. 35 (2000), pp. 311–322. Available at https://www.sciencedirect.com/science/article/pii/S0921800900002160.

- R.H. Thaler, From homo economicus to homo sapiens, J. Econ. Perspect. 14 (2000), pp. 133–141. Available at https://www.aeaweb.org/articles?id=10.1257/jep.14.1.133.

- R. Dorfman, A formula for the Gini coefficient, Rev. Econ. Stat. 61 (1979), pp. 146–149. Available at http://www.jstor.org/stable/1924845.

- B. Milanovic, A simple way to calculate the Gini coefficient, and some implications, Econ. Lett. 56 (1997), pp. 45–49. Available at https://www.sciencedirect.com/science/article/pii/S0165176597001018.

- R. Axelrod and W. Hamilton, The evolution of cooperation, Science 211 (1981), pp. 1390–1396. Available at https://science.sciencemag.org/content/211/4489/1390.

- M. McGinty and G. Milam, Public goods provision by asymmetric agents: Experimental evidence, Soc. Choice Welfare 40 (2013), pp. 1159–1177. doi:10.1007/s00355-012-0658-2.

- E. Fehr and K.M. Schmidt, A theory of fairness, competition, and cooperation, Q. J. Econ. 114 (1999), pp. 817–868. doi:10.1162/003355399556151.

- J. Andreoni, W. Harbaugh, and L. Vesterlund, The carrot or the stick: Rewards, punishments, and cooperation, Am. Econ. Rev. 93 (2003), pp. 893–902. Available at https://www.aeaweb.org/articles?id=10.1257/000282803322157142.

- E. Fehr and K.M. Schmidt, Fairness, incentives, and contractual choices, Eur. Econ. Rev. 44 (2000), pp. 1057–1068. doi:10.1016/S0014-2921(99)00046-X.

- G.E. Bolton and A. Ockenfels, Self-centered fairness in games with more than two players, Handb. Exp. Econ. Results 1 (2008), pp. 531–540.

- T. Dietz, Altruism, self-interest, and energy consumption, Proc. Natl. Acad. Sci. 112 (2015), pp. 1654–1655. Available at https://www.pnas.org/content/112/6/1654.

- D.G. Rand and Z.G. Epstein, Risking your life without a second thought: Intuitive decision-making and extreme altruism, PLOS ONE 9 (2014), pp. 1–6. doi:10.1371/journal.pone.0109687.

- E. Fehr and B. Rockenbach, Human altruism: Economic, neural, and evolutionary perspectives, Curr. Opin. Neurobiol. 14 (2004), pp. 784–790. Available at https://www.sciencedirect.com/science/article/pii/S0959438804001606.

- U. Fischbacher, S. Gächter, and E. Fehr, Are people conditionally cooperative? Evidence from a public goods experiment, Econ. Lett. 71 (2001), pp. 397–404. doi:10.1016/S0165-1765(01)00394-9.

- N. Gilbert and P. Terna, How to build and use agent-based models in social science, Mind Soc. 1 (2000), pp. 57–72. doi:10.1007/BF02512229.

- K.H. Van Dam, I. Nikolic, and Z. Lukszo, Agent-based Modelling of Socio-technical Systems, Vol. 9, Springer Science & Business Media, Heidelberg, 2012.