?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

We deduce a procedure to apply balanced truncation to parameter-dependent differential-algebraic systems. For that we solve multiple projected Lyapunov equations for different parameter values to compute the Gramians that are required for the truncation procedure. As this process would lead to high computational costs if we perform it for a large number of parameters, we combine this approach with the reduced basis method that determines a reduced representation of the Lyapunov equation solutions for the parameters of interest. Residual-based error estimators are then used to evaluate the quality of the approximations. After introducing the procedure for a general class of differential-algebraic systems, we turn our focus to systems with a specific structure, for which the method can be applied particularly efficiently. We illustrate the efficiency of our approach on several models from fluid dynamics and mechanics.

1. Introduction

In the modelling of various industrial processes one often obtains systems of differential-algebraic equations (DAEs) that are of high dimension. Typical examples are electrical circuits, thermal and diffusion processes, multibody systems, and specific discretized partial differential equations (Brenan, Campbell, and Petzold Citation1995; Campbell Citation1980). Often, these systems are further dependent on parameters that can describe physical properties such as material constants and which are often subject to optimization. The resulting parameter-dependent differential-algebraic systems have the form

where ,

, and

are dependent on parameters

, where

. The input, the state, and the output are denoted by

,

and

for

. The matrix

can be a singular. However, throughout this paper, we assume uniform regularity, i.e., the pencil

, which is a matrix polynomial in the variable

, is assumed to be regular for all

, that is

is not the zero polynomial for all

. Moreover, we assume that the system under consideration is uniformly asymptotically stable, i.e., the system (or the pencil

) is asymptotically stable for all

, meaning that all finite eigenvalues of the pencil

have negative real part for all

. Throughout the derivations provided in this work, we assume that

,

,

, and

are affine in the parameter

, i.e.,

where all are parameter-dependent continuous functions and

,

,

,

are parameter-independent matrices. For reasons of computational efficiency, we always assume that

and we also assume that the number of inputs and outputs is small compared to the dimension of the states, i.e.,

. For ease of notation, it is sometimes handy to put the matrices

,

,

, and

into the sums in (3) and multiply them with the factors

. The model (1) is also referred to as the full-order model (FOM). Often, one also considers the input/output mapping of the system in the frequency domain. This relation is typically expressed by the transfer function

Because of the high state-space dimension of (1) it is useful to apply reduction methods to extract the essential information of the system and its solution. More precisely, we want to determine a (uniformly regular and asymptotically stable) reduced-order model (ROM)

with ,

, and

, where

. The reduced state and output are

and

. The aim is to determine the surrogate model in such a way that the output of the ROM well-approximates the output of the FOM for all admissible inputs

and all parameters

. In terms of the transfer function, this means that the error

is sufficiently small in an appropriate norm for all

, where

denotes the transfer function of the ROM.

For parameter-independent problems, i.e.,

there are several classes of model order reduction techniques. Examples are singular value-based approaches like balanced truncation (Benner et al. Citation2017; Moore Citation1981; Tombs and Postlethwaite Citation1987) and Hankel norm approximations (Glover Citation1984). Additionally, there are Krylov subspace-based methods such as the iterative rational Krylov algorithm (IRKA) (Benner et al. Citation2017; Flagg, Beattie, and Gugercin Citation2012; Gugercin, Antoulas, and Beattie Citation2008; Gugercin, Stykel, and Wyatt Citation2013) and moment matching as well as data-driven methods such as the Loewner framework (Beattie, Gugercin, and Mehrmann Citation2022; Gosea, Poussot-Vassal, and Antoulas Citation2021; Mayo and Antoulas Citation2007). Corresponding methods for parameter-dependent systems are introduced in (Baur, Benner, and Feng Citation2014; Benner et al. Citation2016; Geuss, Panzer, and Lohmann Citation2013).

In this article, we focus on balanced truncation which is one of the most popular reduction techniques. This is mainly due to the guaranteed asymptotic stability of the ROM, the existence of an error bound, and appealing numerical techniques for the involved Lyapunov equations (Benner and Schneider Citation2013; Druskin, Simoncini Citation2011; Schmidt, Wittwar, and Haasdonk Citation2020; Son and Stykel Citation2017). Within balanced truncation, Lyapunov equations corresponding to the original system need to be solved. The solutions of these equations are called Gramians. They describe the input-to-state and state-to-output behavior and are used to construct projection matrices for the reduction. The multiplication of the system matrices with these projection matrices then results in a ROM.

All the above-mentioned methods focus on the case and are, however, not directly applicable in the DAE case. Even if the problem is not parameter-dependent, there are several challenges that one has to face (here we assume for simplicity, that the problem is parameter-independent):

a) Since the matrix is typically singular, the transfer function

is possibly improper, i.e., it may have a polynomial part which is unbounded for growing values of

. If the reduced transfer function

does not match this polynomial part, then the error transfer function

is not an element of the rational Hardy spaces

or

(see, e.g., Zhou, Doyle, and Glover Citation1996) for a definition) and the output error cannot be bounded by the input norm. Thus, a model reduction scheme for DAEs must preserve the polynomial part of its transfer function. This is addressed in (Gugercin, Stykel, and Wyatt Citation2013; Mehrmann and Stykel Citation2005; Stykel Citation2004).

b) In balanced truncation, one has to solve two large-scale Lyapunov equations. In the DAE case, one has to consider a generalization of these – so-called projected Lyapunov equations. Thereby, the Lyapunov equations are projected onto the left and right deflating subspaces corresponding to the finite or infinite eigenvalues of the matrix pencil . This involves specific projectors which are hard to form explicitly and that might destroy the sparsity structure of the coefficient matrices. However, for DAE systems of special structure, the projectors are of a particular form which can be exploited from the numerical point of view. For details, we refer to (Benner, Saak, and Uddin Citation2016; Heinkenschloss, Sorensen, and Sun Citation2008; Saak and Voigt Citation2018; Stykel Citation2008).

Besides the approaches mentioned above, some of the issues in model reduction of DAEs are also addressed in (Alì et al. Citation2014; Banagaaya, Ali, and Schilders Citation2016). Here, the authors introduce an index-aware model order reduction scheme that splits the DAE system into a differential and an algebraic part. However, in this method, it is often difficult to maintain sparsity of the system matrices, and hence, it is in general not feasible for many systems with large dimensions. Also data-driven approaches have been recently extended to differential-algebraic systems, see, e.g. (Antoulas, Gosea, and Heinkenschloss Citation2020; Gosea, Zhang, and Antoulas Citation2020). Finally, we would like to mention (Benner and Stykel Citation2017) which provides an overview of model order reduction for DAE systems.

Different approaches for parametric model reduction have been developed as well. Besides others, there exist two main classes of such methods. First, one could determine global bases for the projection spaces for the entire parametric model, e.g., by concatenating the local bases for different parameter values, see, e.g. (Baur et al. Citation2011). Second, there exist approaches for constructing local bases for a specific parameter value by combining local basis information of several prereduced models at different parameter values, e.g., by interpolating between different models (Eid et al. Citation2011; Geuss, Panzer, and Lohmann Citation2013). We also refer to (Benner, Gugercin, and Willcox Citation2015) for an extensive survey on such methods.

However, to the best of our knowledge, none of the techniques presented in (Benner, Gugercin, and Willcox Citation2015) has already been extended to the case of DAE systems. Therefore, the motivation of this paper is to develop a first method for this purpose based on balanced truncation. If we aim to reduce a parameter-dependent system by balanced truncation in a naive fashion, we would have to solve the Lyapunov equations for each individual parameter of interest, which is computationally prohibitive. To avoid these computational costs, for the case , the authors in (Son et al. Citation2021) apply some interpolation techniques to approximate the Gramians for all admissible parameters. The authors in (Son and Stykel Citation2017), on the other hand, apply the reduced basis method which is a well-established method to reduce parameter-dependent partial differential equations (Hesthaven, Rozza, and Stamm Citation2016; Kunkel and Mehrmann Citation2006). Using this method, the authors determine the approximate subspaces in which the solutions of the corresponding Lyapunov equations are contained in.

In this paper, we extend the reduced basis method to the case of DAE systems where the parametric projected Lyapunov equations are only solved for few sampling parameters. Then, based on these solutions, a reduced subspace in which the Lyapunov equation solutions for all approximately live, is constructed. The latter steps form the computationally expensive offline phase. After that, using the reduced basis representation, the Lyapunov equations can be solved much more efficiently for all

in the online phase. A crucial question in the offline phase is the choice of the sample parameters. Usually, a grid of test parameters is selected. For these, the error is quantified using a posteriori error estimators. Then, new samples are taken at those parameters at which the error estimator estimates the largest error.

In this paper, we generalize the reduced basis balanced truncation method of (Son and Stykel Citation2017) to differential-algebraic systems with a focus on structured systems from specific application areas. The main problems we solve in this paper are the following:

a) We derive error estimators for parameter-dependent projected Lyapunov equations. These can be improved if the given system is uniformly strictly dissipative. Since this condition is not always satisfied, we discuss transformations to achieve this for systems of a particular index 3 structure.

b) We discuss in detail how the polynomial part of parameter-dependent transfer functions of DAE systems can be extracted by efficiently approximating their Markov parameters.

c) We apply this approach to several application problems and illustrate its effectiveness.

Our method also follows the paradigm of decomposing the procedure into an offline and online phase. In the offline phase, we approximate the image spaces of controllability and observability Gramians that are the solutions of projected Lyapunov equations. These approximate image spaces are then used in the online phase, to determine approximations of the Gramians, and hence, the reduced order model, efficiently for all required parameters. The online phase can be performed for any parameter . Due to the reduced dimension obtained by the offline phase, this step is very fast and can be performed repeatedly for all required parameters. Moreover, in this work, we introduce new error estimators, which are used in the offline phase to assess the quality of the reduction.

This paper is organized as follows. In Section 2, two model problems are introduced that motivate the method presented in this paper. In Section 3, we review existing concepts and approaches that will be used later in this paper. Thereby, in Subsection 3.1, we recall projection techniques to decouple the differential and algebraic equations in (Equation1(1a)

(1a) ). Afterwards, in Subsection 3.2, we consider model order reduction by balanced truncation for DAE systems. We further address the numerical challenges that arise in computing the solutions of the required Lyapunov equations. Since solving Lyapunov equations for every requested parameter leads to high computational costs, in Section 4 the reduced basis method is presented, which was first applied to standard Lyapunov equations in (Son and Stykel Citation2017). We derive two error estimators for our method, one of them is motivated by the estimator from (Son and Stykel Citation2017), the other one is an adaption of the estimator presented in (Schmidt, Wittwar, and Haasdonk Citation2020). Here, we also discuss the treatment of the algebraic part of the system that may lead to polynomial parts in the transfer function. Finally, in Section 5, we evaluate the method of this paper by applying it to our model problems from Section 2.

2. Model problems

2.1. Problem 1: Stokes-like DAEs

The first example is the system

which arises, e.g., if we discretize the incompressible Navier–Stokes equation. The parameter-independent version is presented in (Mehrmann and Stykel Citation2005; Stykel Citation2004). The system matrices are dependent on a parameter , where

is the parameter domain. For fluid-flow problems, the matrices

represent the masses and the discretized Laplace operator. Naturally, it holds that

and

for all

, where

(

) denotes a positive (semi)definite matrix

and correspondingly

(

) denotes a negative (semi)definite matrix. The discrete gradient is given by

which we assume to be of full column rank for all

. The matrices

and

are the input and the output matrices, respectively. The state of the system at time

is given by

and

. The vectors

and

are the input and output of the system.

In view of (2) we assume that the symmetry and definiteness of and

is represented in the affine parameter structure as

where ,

are positive parameter-dependent continuous functions with the convention

. Furthermore,

,

,

,

for

are parameter-independent matrices. Moreover, for reasons of computational efficiency, we always assume that

.

2.2. Problem 2: Mechanical systems

The second system we consider is of the form

which results from a linearization of the spring-mass-damper model presented in (Mehrmann and Stykel Citation2005). The mass, damping, and stiffness matrices are assumed to be symmetric and positive definite for all

. The matrices

and

are the input and the output matrices. The matrix

reflects algebraic constraints on the system and is of full column rank. In this example, the state includes the displacement

, the velocity

, and Lagrange multiplier

.

For convenience, we also define

Then with this notation, we can write the mechanical system similarly as in (4) with the difference that the off-diagonal blocks of the state matrix are not the transposes of each other.

Similarly to the first model problem, we assume that ,

, and

can be written as

where ,

,

are positive parameter-dependent continuous functions using the convention

. Furthermore,

,

,

and

,

,

for

are parameter-independent matrices with

.

3. Preliminaries

3.1. Decoupling of differential and algebraic equations

In this section, we briefly describe the projection technique for decoupling the algebraic constraints in DAEs of the form (1) from the remaining differential equations for a fixed parameter and under the assumptions of regularity and asymptotic stability. Therefore, for simplicity of presentation, we omit

in this section. The details of this can be found in (Heinkenschloss, Sorensen, and Sun Citation2008; Saak and Voigt Citation2018; Stykel Citation2004, Citation2006).

As shown in (Berger, Ilchmann, and Trenn Citation2012) there exist regular matrices that transform the system matrices into quasi-Weierstraß form, that is

where and

is nilpotent with nilpotency index

that coincides with the (Kronecker) index of the DAE system (1). Here,

is the number of the finite eigenvalues of the matrix pencil

and

is the number of the infinite ones. Accordingly, spectral projectors onto the left and right deflating subspace of

corresponding to the

finite eigenvalues are defined as

We define

to derive the states

under the assumption that the input is sufficiently smooth and that the consistency conditions are satisfied, i.e., we assume that

. They satisfy

such that

solves EquationEquation (1a)

(1a)

(1a) . We refer to

and

as differential and algebraic states. These states are associated with the proper and improper controllability Gramians

respectively. They span the reachable subspaces of the differential and algebraic states. Analogously, the proper and improper observability Gramians

span the corresponding observability spaces. The proper Gramians are obtained by solving the projected continuous-time Lyapunov equations

and the improper ones by solving the discrete-time Lyapunov equations

In Subsection 3.2.2 we will describe how these projected Lyapunov equations can be solved.

In practice, the projectors and

are computationally expensive to form and even more expensive to compute with as they are dense matrices of large dimension in general. To determine them, the derivations from (Kunkel and Mehrmann Citation2006) can be applied, however they are not numerically stable since a Jordan canonical form needs to be computed. On the other hand, the methods presented in (Banagaaya Citation2014; Campbell, Meyer, and Rose Citation1976), or (März Citation1996) might be used to generate the differential and algebraic state spaces, however, they involve the determination of matrix kernels which are based on matrix decompositions. Suitable parts of the matrices involved in these decompositions are then used to determine the projection matrices

and

. They are in general not of a sparse structure and, therefore, not applicable if we consider matrices of large dimensions. Hence, if possible, it is advantageous to determine the projection matrices analytically. In many application fields, this is possible because the matrices

and

are of special structure that can be utilized for this purpose. The examples in Section 2 have such a numerically advantageous structure. Further examples can be found in (März Citation1996; Stykel Citation2008).

3.2. Model reduction of differential-algebraic systems

The aim of this section is to review methods to reduce the DAE system (Equation1(1a)

(1a) ) for a fixed parameter. We utilize balanced truncation modified for projected systems which is presented in Subsection 3.2.1. Afterwards, in Subsection 3.2.2, we summarize the ADI and Smith methods to solve the involved projected Lyapunov equations. Finally, in Subsection 3.2.3, we present an alternative approach to approximate the algebraic parts of the system (potentially leading to polynomial parts in the transfer function) which is inspired by (Schwerdtner et al. Citation2023).

3.2.1. Balanced truncation

The aim of this subsection is to find a reduced system

with ,

which approximates the input/output behaviour of the original system (Equation1

(1a)

(1a) ) (for a fixed parameter).

Since the different Gramians are positive semidefinite, there exist factorizations

with factors . We consider the singular value decompositions

where the matrix is a diagonal matrix with nonincreasing nonnegative entries that are the proper Hankel singular values and

is a diagonal matrix including the improper nonzero Hankel singular values. With the matrix

which contains the

largest Hankel singular values and

we construct the left and right projection matrices

that balance the system, reduce the differential subsystem, and generate a minimal realization of the algebraic subsystem.

We obtain the reduced system (13) by setting

The generated reduced system has decoupled differential and algebraic states.

If , then the ROM is asymptotically stable and one can estimate the output error of the reduced system by

where and

are the transfer functions of the original and the reduced system [Antoulas Citation2005, Theorem 7.9]. The

-norm is defined as

where

denotes the maximum singular value of its matrix argument.

Remark 1. In the balancing procedure outlined above, it is important that the number of inputs and outputs is very small compared to the state-space dimension, i.e., , which we assume throughout this work. In this case, the Gramians will typically have low numerical rank (Sorenson and Zhou Citation2002), which ensures that a low-rank approximation of the Gramians is possible and numerically efficient and that the FOM can be well-approximated by a ROM of low order. Balanced truncation model reduction is also possible in the case that one of the dimensions

or

is large while the other one is small, see, e.g. (Benner and Schneider Citation2013; Pulch, Narayan, and Stykel Citation2021), However, in this case, the numerical procedure is based on the approximation of an integral by quadrature. Our method cannot be generalized to this more general situation in a straightforward manner, but we believe that it would be an interesting topic for future research.

3.2.2. Solving projected Lyapunov equations

The aim of this subsection is to present numerical techniques to solve the projected Lyapunov equations (Equation10(9)

(9) ) and (Equation12

(12)

(12) ) in order to approximate the Gramians of system (Equation1

(1a)

(1a) ). For standard systems with

, there are several methods to solve the corresponding Lyapunov equations. If small-scale matrices are considered, the Bartels-Stewart algorithm (Bartels and Stewart Citation1972) or Hammarling’s method (Hammarling Citation1982) are used. These methods, however, are inefficient in the case of large matrices. In typical applications of practical relevance, the solution of a Lyapunov equation is often of very low numerical rank. So it is desired to compute low-rank factors of these solutions directly. State-of-the-art methods are the ADI method (Kürschner Citation2016; Penzl Citation2000), the sign function method (Benner et al. Citation2017) or Krylov subspace methods (Simoncini and Druskin Citation2009). In the literature, there are also several extensions to projected Lyapunov equations such as (Heinkenschloss, Sorensen, and Sun Citation2008; Stykel Citation2008).

In this section, we utilize the ADI method to solve the projected continuous-time Lyapunov equation (Equation10(9)

(9) ) and the generalized Smith method to solve the discrete-time Lyapunov equation (Equation12

(11)

(11) ). Here, we follow the ideas from (Stykel Citation2008).

We can use the following lemma from (Stykel Citation2008) to derive an equation that is equivalent to the projected continuous-time Lyapunov equation (Equation9(8)

(8) ).

Lemma 3.1. Let the matrix pencil with

be regular and asymptotically stable. Let further the matrix

be nonsingular and

. Assume that the left and right spectral projectors onto the finite spectrum of

are denoted by

. If

with

is not an eigenvalue of the pencil

, then the projected discrete-time Lyapunov equation

with

is equivalent to the projected continuous-time Lyapunov Equation (Equation9(8)

(8) ), i.e. their solution sets coincide.

Lemma 3.1 motivates the ADI iteration

As shown in (Stykel Citation2008) the following proposition provides the convergence of the iteration (19).

Proposition 3.2. Let the matrix pencil with

be regular and asymptotically stable (i.e., all its finite eigenvalues have negative real part) and let

. Assume that the left and right spectral projectors onto the finite spectrum of

are denoted by

. Suppose that we have given a sequence of shift parameters

with

for all

and with

for some

and all

. Then the iteration (19) converges to the solution

of the projected Lyapunov equation (Equation9

(8)

(8) ).

Remark 2. The periodicity of the shift parameters ensures that they fulfill a non-Blaschke condition that is sufficient for the convergence of the ADI iteration (Massoudi, Opmeer, and Reis Citation2017).

To work with the ADI iteration more efficiently, our aim is to compute low-rank factors of , i.e., we aim to compute a tall and skinny matrix

such that

. We can represent the iteration (20) by the low-rank factors of

with

where and

is chosen as the empty matrix in

. We note that the following properties hold for all

:

We further define

Using (20), we obtain

It remains to solve the discrete-time Lyapunov equation (Equation11(10)

(10) ). Under the assumption that

is nonsingular, (11) is equivalent to the transformed discrete-time Lyapunov equation

This equation could be solved with the Smith method (Stykel Citation2008). Since and the matrix

is nilpotent with the nilpotency index

of the matrix

of the quasi-Weierstrass form of the pencil

, the iteration stops after

steps. This leads to the unique solution

We note that we have to solve multiple linear systems with the matrix to obtain the solution

. Therefore, we can utilize the sparse LU decomposition to do this efficiently. Instead of computing the full matrix

we can also generate the low-rank factors

as

Remark 3. In many relevant applications, the solution can be explicitly calculated by making use of specific block structures in

. Moreover, the improper Hankel singular values are often zero (namely, when the FOM’s transfer function is strictly proper) in which case we do not have to solve the improper Lyapunov equations at all, see also (Stykel Citation2006).

3.2.3. Alternative approach to determine the algebraic parts

Since the reduced basis method presented later in this paper is applied only to the differential part of the system, in this section we discuss ways to construct reduced representations of the algebraic part. One way is to use the reduction provided by the improper Gramians as explained in Subsection 3.2.1. This is a suitable choice for parameter-independent problems. However, it is difficult to build a reduced representation for parameter-dependent problems, if the improper part depends on parameters. Then, the discrete-time Lyapunov equations have to be solved for each parameter value of interest in the online phase which can be too expensive. For this reason, in this subsection, we present an alternative approach that is also viable in the parametric setting and allows an efficient evaluation in the online phase (see also Subsection 4.3).

Our method is inspired by (Schwerdtner et al. Citation2023) in which the polynomial part of the transfer functions of DAE systems of index at most two is approximated, but the methodology can also be extended to systems of even higher index. Consider the transfer function of system (1) (for a fixed parameter) which can be decomposed into its strictly proper part and a polynomial part

where the polynomial part is given as

with ,

, and

with index of nilpotency

as in (8), see (Stykel Citation2004) for the details. Hence, for

and

partitioned conforming to the partioning of (8), the polynomial part can be rewritten as

where ,

denote the

-th Markov parameters of the transfer function. As shown in (Antoulas, Gosea, and Heinkenschloss Citation2020), the strictly proper part

converges to zero for

. For this reason we can approximate the Markov parameters if we consider sufficiently large values of

. We exemplify the methodology for a few cases.

The index one case In this case, we simply have

for a sufficiently large value of and moreover,

for

.

The index two case In this case, for

and hence

. By inserting

we obtain that

Hence, for sufficiently large values we can approximate

The index three case In the index three case, we approximate the polynomial part of the form Inserting

into the polynomial part of the transfer function

provides a real and an imaginary part

Then the Markov parameters are approximated by

for sufficiently large ,

, and

with

.

System realization from Markov parameters If the polynomial part has been extracted and all Markov parameters

,

have been determined we can realize it by a DAE system as follows. Assume that

are compact SVDs of

with positive definite

for all

. Then for

we define the matrices

and

This yields

Now we obtain an overall realization of the algebraic part of the system as

4. Reduced basis method

In this section, we return to the problem of reducing parameter-dependent systems. Since the solution of the Lyapunov equations is the most expensive part of balanced truncation, we aim to limit the number of Lyapunov equation solves. To achieve this, the reduced basis method presented by (Son and Stykel Citation2017) can be applied. Besides the regularity and asymptotic stability of the matrix pencil for all

we impose the following assumption on the problem (1) for the rest of the paper.

Assumption 4.1. We assume that is a bounded and connected domain. We assume further that the matrix-valued functions

,

,

,

in (1) as well as the left and right spectral projector functions

and

on the deflating subspace associated with the finite eigenvalues of

depend continuously on the parameter

.

Assumption 4.1 ensures that the solutions of the parameter-dependent projected Lyapunov equations also depend continuously on . Then, also the ROM depends continuously on

for a fixed reduced order.

In practice, many examples are continuously parameter-dependent, e.g., in electrical circuits, the resistances can be continuously dependent on the temperature. Moreover, inductances and capacitances may be assumed continuous if one wishes to tune them within a parameter optimization. In other examples, for instance, mechanical systems, the parameters may represent (continuously varying) material constants. On the other hand, there are also examples where the dependence on the parameters is not continuous. This is, for example, the case for electrical circuits in which the network topology changes by switching. These discontinuities are often known since, in practice, the user has insight into the considered problem. In this case, the parameter domain could be decomposed into several subdomains, on which the reduction can be performed separately.

In the following, we will focus on the reduction of the differential part of the DAE system (1). The main idea consists of finding a reduced representation of the solutions of the Lyapunov equations (Equation9(8)

(8) ) of the form

where has orthonormal columns with

and where

denotes the projector onto the right deflating subspace of

for the parameter value

. Under the assumption that

is invertible and the pencil

is regular and asymptotically stable (This condition can be guaranteed a priori by the strict dissipativity assumptions in Subsection 4.2), the low-rank factor

solves the reduced Lyapunov equation

The equation replaces the condition

Algorithm 1 Reduced basis method for projected Lyapunov equations (offline phase)

Input: Dynamical system (1), test parameter set , tolerance

.

Output: Reduced basis matrix

1: Choose any .

2: Solve the projected Lyapunov equation (Equation24(23)

(23) ) for

to obtain

.

3: Set .

4: Set .

5: Set .

6: Set .

7: while do

8: Solve the projected Lyapunov equation (Equation24(23)

(23) ) for

to obtain

.

9: Set .

10: Set .

11: Set .

12: Set .

13: end while

In practice, we aim to determine a matrix with

that contains the information of

for all

globally. When we have determined such a

, we set

where denotes the orthonormalized columns of

. If the matrix

has been found, then the ROM for a particular parameter

can be determined very efficiently in the so-called online phase, where one simply solves (22) with (21) and (23) to determine the two Gramians and then projects the system as in Subsection 3.2.1.

The computation of the reduced basis is done beforehand in the offline phase. There, we solve the full-order Lyapunov equation only for those parameters, where their solutions are currently approximated worst to enrich the current reduced basis. A posteriori error estimators are employed on a test parameter set to find these points efficiently.

We determine by considering a test parameter set

. We begin by computing a low-rank factor

in

such that

solves the projected Lyapunov equation

for .

The first reduced basis is then given by . Next, we determine the test parameter

for which the solution

of the Lyapunov equation (Equation24

(24)

(24) ) is approximated worst by using (22), (23), and (24). For that, one of the error estimators

presented in Subsection 4.1 is utilized. For this parameter

, we solve the projected Lyapunov equation (Equation24

(24)

(24) ) with

to obtain the low-rank factor

. Then, we define the new reduced basis by setting

. This procedure is repeated until the error estimator

is smaller than a prescribed tolerance for every test parameter in

. This method results in Algorithm 1.

Remark 4. Under the assumption that the projected Lyapunov equations (Equation9(8)

(8) ), (Equation10

(10)

(10) ) are solved exactly for all parameters

using the reduced Lyapunov equations of the form (22), the

error bound (16) is also valid in the entire parameter domain. Unfortunately, this bound is no longer valid if numerical approximation errors affect the numerically computed Gramians which is already the case in the parameter-independent case. A general and in-depth analysis of the effect of such errors on the Hankel singular values is still an open problem. On the other hand, the numerically computed Hankel singular values can still be used to obtain an approximate error bound.

4.1. Error estimation

We want to estimate the error with the help of the residual

Lemma 4.2. Let be given. Let the matrix pencil

with

be regular and asymptotically stable. Assume that the left and right spectral projectors onto the finite spectrum of

are denoted by

. Let the controllability Gramian

be defined as in (8) and let it be approximated as described in EquationEquation (21)

(21)

(21) . Then the error

solves the projected error equation

Proof. The condition

follows from and

. For the remaining equation, we consider its left-hand side

With ,

and

we obtain

which proves the statement.

Similarly to (Son and Stykel Citation2017), we consider the linear system

with

where the operator denotes the Kronecker product and

the vectorization operator that stacks the columns of the matrix on top of one another. This linear system is equivalent to the projected Lyapunov equation (Equation24

(23)

(23) ). We note that

is a singular matrix and that the unique solvability is enforced by the additional restriction given by the projection with

.

Using the linear system representation and the abbreviations ,

, we can rewrite the error EquationEquation (25)

(25)

(25) as

This equation is equivalent to

Due to the unique solvability of the latter, we obtain

where denotes the Moore-Penrose inverse. Then, we can estimate

Here, denotes the smallest positive singular value of its matrix argument and

is an easily computable lower bound of

. The bound

is the first possible error estimator that provides a rather conservative bound in practice.

To improve the estimator (28), we consider the error bound presented in (Schmidt, Wittwar, and Haasdonk Citation2020). Assume that is an error estimate that is not necessarily an upper bound. An error bound

is derived by

where the residual is defined as

It remains to obtain an error estimate for all

. To do so, we solve the error EquationEquation (26)

(26)

(26) approximately by modifying the projected ADI iteration from Section 3.2.2 and the reduced basis method in Algorithm 1.

The right-hand side of the error EquationEquation (25)(25)

(25) can be written as the product

, where

For simplicity of notation, we consider the modified ADI iteration for the parameter-independent case such that

,

, and

. Analogously to the derivation in Section 3.2.2 (and under the same conditions), it can be shown that the iteration

converges to the solution of (25), where

and

are defined as in (18) for a shift parameter

with

. The factors

and

can be written as

where

for and with

.

We include the modified projected ADI iteration into the reduced basis method presented in Algorithm 1 by solving the error EquationEquation (25)(25)

(25) in Step 2 and 8 to obtain the two factors

with

. The orthonormal basis computation in Step 4 and 4 is replaced by

. Note that here, the columns of

and

span the same subspace. As error estimator, we use

. After we have determined the orthonormal basis, we solve EquationEquation (25)

(25)

(25) on the corresponding subspace to get an approximate error

, that we use for the error estimator

.

Remark 5. If the factor is already of large dimension

, the right-hand side of the error EquationEquation (25)

(25)

(25) described by

and

is of even larger rank

, and hence, the solution

cannot in general be well-represented by low-rank factors. This means that the error space basis might be large which leads to a time consuming error estimation.

4.2. Strictly dissipative systems

For the model examples from Section 2 we can make use of their structure to derive the bound in a computationally advantageous way. Therefore, we will impose an additional assumption on the matrix pencils

in (4) and (6) that is formed from submatrices of

and

.

Definition 4.3. The set of matrix pencils is called uniformly strictly dissipative, if

We will consider this condition for our motivating examples from Section 2 and determine a corresponding lower bound .

4.2.1. Stokes-like systems

We consider the Stokes-like system (4). Here, the pencil set is naturally uniformly strictly dissipative.

As shown in (Stykel Citation2006) the projection matrices are given as

where

Even though for the Stokes-like system in (4) we have for all

, in this section we will already treat the more general case of a uniformly strictly dissipative set of matrix pencils

with nonsymmetric

as this case will arise later in Subsection 4.2.2.

We continue finding a value for .

Theorem 4.4. Let be given and consider the Stokes-like system (4). Let

,

,

,

be defined as in Definition 4.3, (26), (27) with

as in (30), respectively. Then the bound

is satisfied, where denotes the minimum eigenvalue of its (symmetric) matrix arguments.

Proof. Let be as in (31). Making use of the condition

, we can deduce

Define and the matrix

Denoting the smallest positive eigenvalue of a symmetric matrix by , Corollary 3.1.5 from (Horn and Johnson Citation1991) and the eigenvalue properties of the Kronecker product, given in (Horn and Johnson Citation1991; Lancaster and Tismenetsky Citation1985) (see also (Son and Stykel Citation2017) as well as a Rayleigh quotient argument lead to the estimate

□

Since computing the eigenvalues of and

for all requested parameters

can be expensive, there are several ways to find cheap lower bounds of

, some of which are derived in (Son and Stykel Citation2017). Based on these bounds, we can, e.g., estimate

where is an arbitrary-fixed parameter in

and where we make use of the convention that

. Here, we compute the eigenvalues of

and

only once to find a lower bound

of

for every parameter

.

4.2.2. Mechanical systems

we turn to the mechanical system from Subsection 2.2. There, the corresponding matrix pencil set

is not uniformly strictly dissipative. However, it can be made uniformly strictly dissipative by appropriate generalized state-space transformations as we will show now.

Here, we apply a transformation presented in (Eid et al. Citation2011; Panzer, Wolf, and Lohmann Citation2012). We observe that by adding productive zeros and for an auxiliary function yet to be chosen, the system (5) is equivalent to the system

where is equal to

and

equal to

from system (5). The last equation is a direct consequence from the equation above and poses no extra restrictions. These equations can be written as first-order system. By defining the matrices

and replacing by

, we generate a system in the form (5) such that we can apply the methods from Subsection 4.2.1 for this kind of system in the following, if

is chosen properly. This is possible, because even if

is unsymmetric, the projector

in (32) will be the same in both left and right spectral projectors

and

and hence, the estimate in Theorem 4.4 is still valid. Only an estimation of the form (32) is not possible, since the matrices

and

cannot be written as affine decompositions in which all summands are positive or negative semidefinite, respectively. So in our numerical experiments we make use of Theorem 4.4 directly.

Theorem 4.5. Consider the mechanical system (5). Then the matrix pencil set with

and

defined in (33) is uniformly strictly dissipative, if

with

is satisfied for all Proof. As shown in (Panzer, Wolf, and Lohmann Citation2012), the pencil set

is uniformly strictly dissipative, if we choose

such that

Using the estimate for symmetric and positive definite matrices

and

of conforming dimension (Wang, Zhang Citation1992, Theorem 4), we arrive at the choice for

given by

Because of the symmetry and positive definiteness of and the submultiplicativity of the norm we obtain

By Weyl’s lemma (Horn and Johnson Citation1991; Lancaster and Tismenetsky Citation1985), we also have

Therefore, condition (34) is satisfied, if we choose such that

This can be achieved by the choice

□

To avoid computing the eigenvalues of for every parameter

, we further use the estimates

that are a consequence of Weyl’s lemma. Similar estimates are also valid for and

. Then we can further estimate

as

while ensuring that we can choose for all

.

The benefit of the above estimate is that the extremal eigenvalues of the matrices ,

, and

have to be computed only once in the beginning and otherwise, its evaluation is cheap, since

,

,

are scalar-valued functions.

4.3. Treatment of the algebraic parts

The goal of this subsection is to discuss the reduction of the algebraic part of the system which is potentially also parameter-dependent. To do so, we first precompute specific matrices in the offline phase which will later be used to efficiently construct the Markov parameters in the online phase for a particular parameter configuration and then determine a reduced representation of the algebraic subsystem using the construction from the last paragraph in Subsection 3.2.3. In order for this construction to be efficient, we assume that the parameter influence has an additional low-rank structure, i.e., with regard to the affine decomposition (3), we assume that ,

,

, and

are low-rank matrices for

, i.e., they admit low-rank factorizations

To determine the Markov parameters, for a fixed set of sample points ,

, we aim to evaluate

efficiently for all needed parameters

. In other words, we aim for an efficient procedure to evaluate the transfer function

for fixed value

and varying

. Due to (38) and since

and

are assumed to be very small, also the matrix

is of low rank and we can factorize it as

It holds that

With the help of the Sherman-Morrison-Woodbury identity, we obtain

The matrices ,

,

, and

are all of small dimension and they can be precomputed in the offline phase. In the online phase, the small matrix

can be formed (under the assumption that all necessary matrices are invertible) and used for small and dense system solves in order to calculate

with only little effort.

5. Numerical results

In this section, we present the numerical results for the two differential-algebraic systems from Section 2. The computations have been done on a computer with 2 Intel Xeon Silver 4110 CPUs running at 2.1 GHz and equipped with 192 GB total main memory. The experiments use MATLAB®R2021a (9.3.0.713579) and examples and methods from M-M.E.S.S.-2.2 (Saak, Köhler, and Benner Citation2022).

5.1. Problem 1: Stokes equation

We consider a system that describes the creeping flow in capillaries or porous media. It has the following structure

with appropriate initial and boundary conditions. The position in the domain is described by

and

is the time. For simplicity, we use a classical solution concept and assume that the external force

is continuous and that the velocities

and pressures

satisfy the necessary smoothness conditions. The parameter

represents the dynamic viscosity. We discretize system (38) by finite differences as shown in (Mehrmann and Stykel Citation2005; Stykel Citation2006) and add an output equation. Then, we obtain a discretized system of the form (4), which is of index 2. The matrix

depends on the viscosity parameter

.

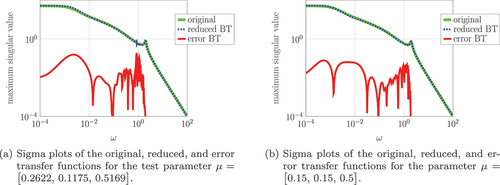

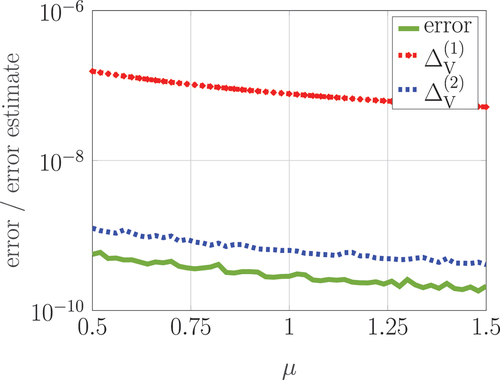

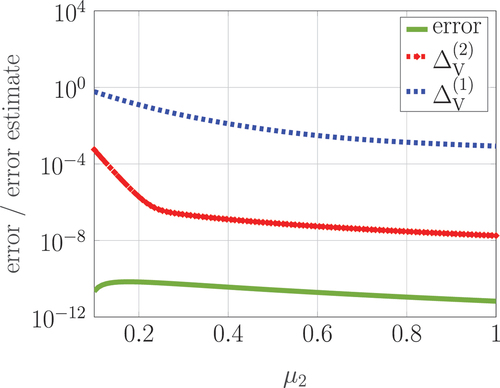

Figure 1. Error and error estimates of the approximated controllability Gramians for the Stokes system (4) after the first iteration of the reduced basis method.

Our constant example matrices are created by the M-M.E.S.S. function

and we choose the dimensions

,

, and the parameter set

. The test parameter set

consists of ten equidistant points within

, i. e.,

. The matrices

and

are zero matrices. The reduced basis method from Section 4 produces bases of dimensions

for both projected continuous-time Lyapunov equations. This basis generation is done in the offline phase, which takes

seconds for this example. For the first iteration of the reduced basis method corresponding to the proper controllability Lyapunov equation, we evaluate the errors and their estimates from EquationEquation (28)

(28)

(28) and (Equation29

(29)

(29) ). They are presented in , where the error is evaluated by

where denotes an accurate approximation of the exact solution

. For evaluating the error, the Lyapunov equations are also solved with the residual tolerance

to obtain an accurate estimate of the exact solution. After the first iteration step, we obtain an error that is already smaller than the tolerance

. Additionally, we see that the error estimator

provides an almost sharp bound of the actual error while

leads to more conservative error bounds.

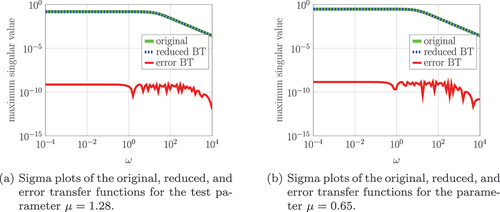

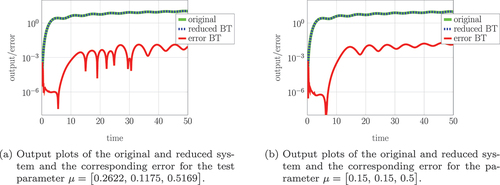

After we have determined the bases, we approximate the proper controllability and observability Gramians to apply balanced truncation in the online phase for two different parameters and

where the first parameter is the eighth entry from the test parameter set

. The reduced systems for both parameters are of dimension

and consist only of differential states. This online phases last for

and

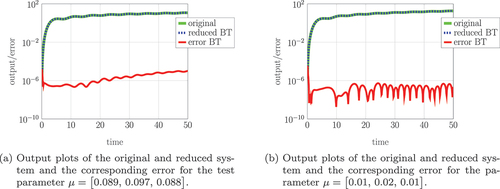

seconds, respectively, for this example. depict the transfer functions of the full and the reduced systems and the corresponding errors. We observe that the error is smaller than

for all

in

. Additionally, in , we show the output

of the full system and the output

of the reduced system for an input

,

, and the initial condition is given as

. We also depict the error

which is smaller than

in the entire time range. Hence, we can observe that the system behaviour is well-approximated by the reduced system.

Now, to demonstrate that the methods presented in this paper are also suitable for systems with an improper transfer function, we modify the system in such a way that the matrices and

are chosen to be

and

That way, we obtain more complex restrictions for

and also evaluate the pressure described by

to generate an output

. We additionally assume that the external force represented by the input function

varies its magnitude depending on the viscosity of the system so that the input matrix

is parameter-dependent, and hence, the polynomial part of the transfer function is parameter-dependent as well.

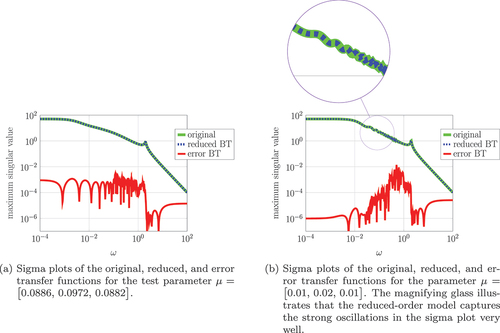

Again, in the offline phase, we execute the reduced basis method to generate bases that approximate the proper controllability and observability spaces (i.e., the image space of the corresponding proper Gramians) of this system. This phase takes seconds for this example. In , the errors and error estimations in the two stages of the reduced basis method corresponding to the proper controllability space are shown. For evaluating the error, the Lyapunov equations are also solved with the residual tolerance

to obtain an accurate estimate of the exact solution. We can observe that after the first iteration step, we obtain an error that is significantly larger than the tolerance

as shown in . Additionally, we see that the error estimator

provides an almost sharp bound of the actual error while

leads to more conservative error bounds. The second iteration of the reduced basis method and the corresponding errors are depicted in . In this case, the error as well as the estimators are smaller than the tolerance

. The final basis projection space dimensions for the projected continuous-time Lyapunov equations are

and

.

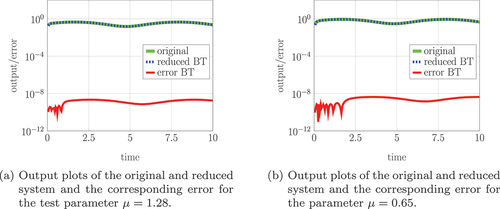

The next step is to use the bases to generate approximations of the system Gramians and to apply balanced truncation, which leads to a reduced system of dimension where we obtain

differential states and

algebraic ones for both parameters

and

. The online phase lasts for

and

seconds if we use balanced truncation via the improper Gramians to reduce the improper system components and

seconds for both parameters if we extract the polynomial part of the transfer function. However, in this example, the parameter dependency does not have a low-rank structure. Thus, for the determination of the polynomial part, it is necessary to solve one linear system of equations of full order in the online phase for each parameter of interest. Explicit formulas of the improper Gramians are also described in (Stykel Citation2006). For a fixed parameter, also an explicit formula

is provided with

compare with (14). This formula replaces the computation of the low-rank factors of the improper Gramians in the online phase. In our setting, (Equation39(38)

(38) ) can be evaluated efficiently since all involved matrices can be precomputed and then appropriately scaled with

in the parametric setting.

Figure 4. Error and error estimates of the approximated gramians for the stokes system (4) with improper parts.

We demonstrate the quality of the reduced systems by evaluating the error of the transfer functions. In the following, we denote the reduced system (error system) with an algebraic part generated by balanced truncation as reduced BT (error BT) and the reduced system (error system) with an algebraic part obtained by approximating the polynomial part of the transfer function as reduced TF (error TF). We show the sigma plot of the original and reduced transfer function as well as the error for two parameters. The first parameter, depicted in , is that is an element from the test parameter set and hence we already know that the error estimators

are smaller than the tolerance and the proper controllability space is well-approximated. The second parameter is

, and the corresponding results are shown in . The polynomial part of the transfer function is clearly observable since it does not tend to zero for growing values of

. We observe that for both parameters, the error is smaller than

in the entire frequency band

and that the parameter chosen from the test parameter set does not lead to significantly better approximations than an arbitrarily chosen parameter from the parameter set

. This allows the conclusion that the basis

approximates the proper controllability space well – for all parameters in

. Additionally, we can observe that both procedures to approximate the improper part of the system lead to satisfactory results.

Finally, to illustrate that the output is well approximated by the reduced output

, in we evaluate the norms of the output error

for

for the test parameter

and the parameter

. We choose the input function

and the initial condition

, in such a way that the consistency conditions are satisfied. We obtain an output error that is smaller than

for all

for both evaluated parameters. Again, we observe that both approximations of the algebraic part of the system lead to results of similar quality.

5.2. Problem 2: Mechanical system

Figure 7. Constrained mass-damper-spring system including three chains of masses that are connected by the mass .

As an example for system (5), we consider a constrained mass-spring-damper system which is depicted in and which is similar to the one considered in (Ugrica Citation2020). The matrices are generated using the function triplechain_MSD from the M-M.E.S.S.-2.2. toolbox, see (Saak, Köhler, and Benner Citation2022) where the mass matrix is built as

for . The stiffness matrix is built as

with and

. We set

so that the dimension of

and

is

and the matrix

is a zero matrix except for the entries

and

. Hence, the first and the last mass are additionally connected by a rigid structure, as shown in . Hence, the first-order system is of dimension

after applying the transformation presented in Subsection 4.2.2. The input matrix

is chosen as a zero matrix with one nonzero entry

The output matrix

is given by

.

These systems occur in the field of damping optimization, see (Benner, Tomljanović, and Truhar Citation2011; Truhar, Tomljanović, and Puvača Citation2019; Truhar and Veselić Citation2009; Ugrica Citation2020), where the damping matrix consists of an internal damping

and some external dampers that need to be optimized. In our example, we choose Rayleigh damping as a model for the internal damping, i.e.,

, where we set

and

. To describe the external damping, we assume that every mass is connected to a grounded damper, where the masses

are connected by dampers with viscosity

, the masses

by dampers with viscosity

and the masses

by dampers with viscosity

as depicted in . The mass

is connected to a grounded damper with fixed viscosity

. The external dampers can be described by the matrices

where denotes the zero matrix in

. Therefore, the overall damping matrix can then be constructed as

where are the viscosities of the dampers, which are optimized and thus variable in our model. This model leads to a differential-algebraic system of index 3. We generate the index-2 surrogate system as shown in Subsection 4.2.2 using the value

from (36).

We consider two parameter settings. In the first one, the system is damped more strongly, and in the second one more weakly. The latter setting is used to illustrate that the slightly damped system is more difficult to approximate by projection spaces of small dimensions, which poses a challenge from the numerical point of view. For both parameter settings, the reduced models are obtained by truncating all state variables corresponding to proper Hankel singular values with .

5.2.1. Setting 1: Stronger external damping

We consider the parameter set and choose the test parameter set

to consist of 50 randomly selected parameter triples from

. Therefore, we use the

operator from MATLAB so that the

-th test parameter is equal to

with fixed seed.

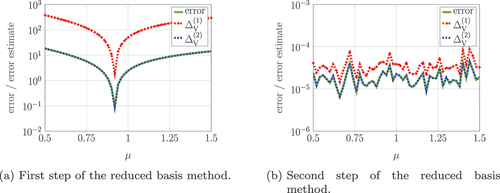

Figure 8. Error and error estimates of the approximated controllability Gramians of the mechanical system (5) with stronger external damping and ,

after the first iteration of the reduced basis method.

In the offline phase, the approximation of the controllability and observability spaces takes seconds for this example. For the proper controllability space, we evaluate the errors and the error estimates that are depicted in for the first iteration of the reduced basis method. For evaluating the error, the Lyapunov equations (Equation9

(8)

(8) ) are also solved by computing the projection matrices

and

explicitly and solving the corresponding Lyapunov equation. Therefore, we fix the parameters

and

and show the errors for the varying second parameter

. After the first iteration and for a basis of dimension

, the maximal error estimate

is equal to

. Hence, the basis approximates the proper controllability space sufficiently well. Again, we see that the error estimator

approximates the error

better than the estimation by

, which massively overestimates the error. Hence, we only use the error estimator

to determine the basis quality.

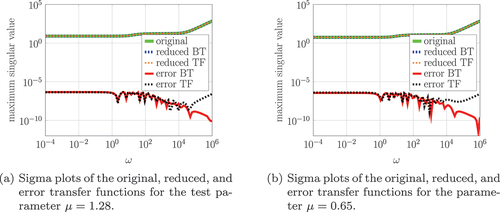

We need two iterations of the reduced basis method to determine the respective observability subspace of dimension of 304. Hence, we have to solve Lyapunov equations of dimensions 219 and 304 in the online phase to compute the Gramian approximations. We apply the online phase for the parameters (which is rounded to 4 digits here) and

. We obtain the two respective reduced systems of dimension 75 and 63, where all states are differential ones. The online phases take

and

seconds, respectively, for this example.

Figure 10. Output and output error of the original and reduced system (5) with stronger external damping.

In , we depict the original, the reduced, and the error transfer function. Additionally, we evaluate the outputs of the original and the reduced system and

. The results are shown in , where we observe the norm of the output error

for

. We choose the input function

and the initial condition

, so that the consistency conditions are satisfied. We observe that the output error is smaller than

for all

. Note that for damping optimization examples, the proper controllability and observability spaces are often hard to approximate by spaces of small dimensions, see (Benner et al. Citation2016; Tomljanović, Beattie, and Gugercin Citation2018). Hence, the tolerances are chosen rather large for this kind of example to achieve satisfying results.

5.2.2. Setting 2: Weaker external damping

Now, we consider a parameter set with viscosities of small magnitudes, that is . The test-parameter set

consists of 50 randomly selected parameters from

. To generate the test parameters, we again use the

operator from MATLAB so that the

-th parameter is equal to

with fixed seed.

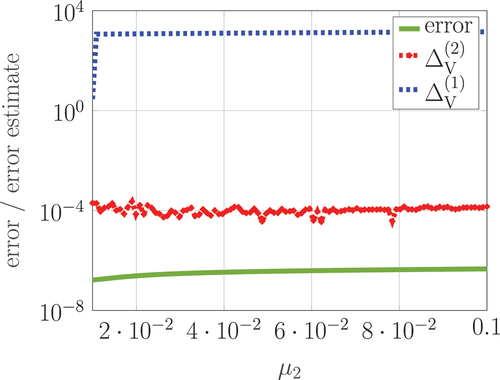

Figure 11. Error and error estimates of the approximated observability Gramians of the mechanical system (5) with weaker external damping and ,

after the first iteration of the reduced basis method.

First, we apply the offline phase of the reduced basis method that takes seconds. When computing the proper controllability space, two iterations are needed to generate a subspace of dimension 449 that leads to error estimates

that are at most

. For the proper observability space, the errors and the error estimates are illustrated in within the first iteration of the reduced basis method with fixed parameters

and

and varying

. For evaluating the error, the Lyapunov equations (Equation10

(9)

(9) ) are also solved by computing the projection matrices

and

explicitly and solving the corresponding Lyapunov equation. After the first iteration, we determine a basis of dimension 223 for which the maximal error estimate

is equal to

, and hence, sufficient accuracy is achieved. As for the first parameter setting, the error estimator

approximates the error

better than the estimation by

. Hence, we use the error estimator

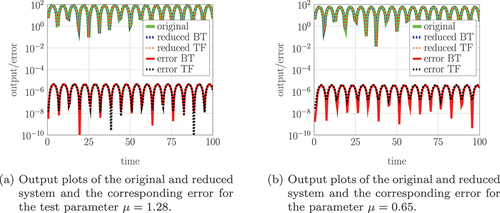

within the reduced basis method. We apply the online phase for the parameters

(which is rounded to 4 digits) and

to derive a reduced-order model of dimension 78 for the first parameter and the reduced dimension 178 for the second one. The online phases need 2.21 and 2.04 seconds, respectively.

Figure 13. Output and output error of the original and reduced mechanical system (5) with weaker external damping.

depicts the original, the reduced, and the error transfer function. Additionally, we evaluate the norm of the output error for

in , where the input function

and the initial condition

are chosen in such a way that the consistency conditions are satisfied. We observe that the output error is smaller than

for all

.

6. Conclusions

This paper has addressed the model reduction of parameter-dependent differential-algebraic systems by balanced truncation. To apply the balancing procedure, we have utilized specific projectors to eliminate the algebraic constraints. This has enabled us to compute the necessary Gramians efficiently by solving projected Lyapunov equations.

To handle the parameter-dependency, we have applied the reduced basis method, which is split into the offline phase and the online phase. In the offline phase, we have computed the basis of the subspace which approximates the solution space of the parametric Lyapunov equations. To assess the quality of this subspace, we have derived two error estimators. Afterwards, in the online phase, we have solved a reduced Lyapunov equation to obtain an approximation of the Gramian for a parameter value of interest efficiently. Therefore, a balanced truncation for this parameter value can also be carried out fast.

This method has been illustrated by numerical examples of index two and three. In particular, we were often able to reduce the associated Lyapunov equations to very small dimensions. We have evaluated our error estimators, where the second one can often estimate the error almost exactly.

Novelty statement

We propose a new method that reduces parametric differential-algebraic equations by combining projection methods for differential-algebraic equations and the reduced basis methods for Lyapunov equations. To apply the reduced basis method, new error estimators are invented. The performance of our new method is illustrated for several examples.

Code availability

The code and data that has been used to generate the numerical results of this work are freely available under the DOI 10.5281/zenodo.10040792 under the 3-clause BSD license and is authored by Jennifer Przybilla.

CRediT author statement

Jennifer Przybilla: Methodology, Software, Data Curation, Writing – Original Draft, Writing – Review & Editing, Visualization; Matthias Voigt: Conceptualization, Writing – Original Draft, Writing – Review & Editing, Supervision.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Alì G, Banagaaya N, Schilders WHA, Tischendorf C. 2014. Index-aware model order reduction for differential-algebraic equations. Math Comput Model Dyn Syst. 20(4):345–373. doi:10.1080/13873954.2013.829501.

- Antoulas AC. 2005. Approximation of Large-Scale Dynamical Systems. In: Advance Des Control Vol. 6 Philadelphia, PA: SIAM Publications, doi:10.1137/1.9780898718713.

- Antoulas AC, Gosea IV, Heinkenschloss M. 2020. Data-driven model reduction for a class of semi-explicit DAEs using the Loewner framework. In: Reis T and Ilchmann A, editors. Progress in Differential-Algebraic Equations II, Differ.-Algebr. Equ. Forum. Cham: Springer; p. 185–210. doi: 10.1007/978-3-030-53905-4_7.

- Banagaaya N. 2014. Index-Aware Model Order Reduction Methods for DAEs. The Netherlands: Proefschrift, Technische Universiteit Eindhoven. doi:10.6100/IR780937.

- Banagaaya N, Ali G, Schilders WHA. 2016. Index-aware Model Order Reduction Methods. In: Atlantis Studies Science Computer Electromagnet. Paris: Atlantis Press; Vol. 2.

- Bartels RH, Stewart GW. 1972. Solution of the matrix equation AX+XB=C: Algorithm 432. Comm ACM. 15(9):820–826. doi:10.1145/361573.361582.

- Baur U, Beattie CA, Benner P, Gugercin S. 2011. Interpolatory projection methods for parameterized model reduction. SIAM J Sci Comput. 33(5):2489–2518. doi:10.1137/090776925.

- Baur U, Benner P, Feng L. 2014. Model order reduction for linear and nonlinear systems: A system-theoretic perspective. Arch Comput Methods Eng. 21(4):331–358. doi:10.1007/s11831-014-9111-2.

- Beattie CA, Gugercin S, Mehrmann V. 2022. Structure-preserving interpolatory model reduction for port-Hamiltonian differential-algebraic systems. In: Beattie C, Benner P, Embree M, Gugercin S, and Lefteriu S, editors. Realization and Model Reduction of Dynamical Systems. Cham: Springer; p. 235–254. doi:10.1007/978-3-030-95157-3_13.

- Benner P, Gugercin S, Willcox K. 2015. A survey of projection-based model reduction methods for parametric dynamical systems. SIAM Rev. 57(4):483–531. doi:10.1137/130932715.

- Benner P, Kürschner P, Tomljanović Z, Truhar N. 2016. Semi-active damping optimization of vibrational systems using the parametric dominant pole algorithm. ZAMM Z Angew Math Mech. 96(5):604–619. doi:10.1002/zamm.201400158.

- Benner P, Ohlberger M, Cohen A, Willcox K. 2017. editors, Model Reduction and Approximation: Theory and Algorithms. Comput. Sci. Eng. SIAM. doi:10.1137/1.9781611974829.

- Benner P, Saak J, Uddin MM. 2016. Balancing based model reduction for structured index-2 unstable descriptor systems with application to flow control. Numer Algebra Cont Optim. 6(1):1–20. doi:10.3934/naco.2016.6.1.

- Benner P, Schneider A. 2013. Balanced truncation for descriptor systems with many terminals. Max Planck Institute Magdeburg Preprints MPIMD/13–17, Available at https://csc.mpi-magdeburg.mpg.de/preprints/2013/MPIMD13-17.pdf.

- Benner P, Stykel T. 2017. Model order reduction for differential-algebraic equations: A survey. In: Ilchmann A and Reis T, editors. Surveys in Differential-Algebraic Equations IV, Differ.-Algebr. Equ. Forum. Cham: Springer; p. 107–160. doi:10.1007/978-3-319-46618-7_3.

- Benner P, Tomljanović Z, Truhar N. 2011. Dimension reduction for damping optimization in linear vibrating systems. ZAMM Z Angew Math Mech. 91(3):179–191. doi:10.1002/zamm.201000077.

- Berger T, Ilchmann A, Trenn S. 2012. The quasi-Weierstraß form for regular matrix pencils. Linear Algebra Appl. 436(10):4052–4069. doi:10.1016/j.laa.2009.12.036.

- Brenan KE, Campbell SL, Petzold LR. 1995. Numerical Solution of Initial-Value Problems in Differential-Algebraic Equations. Philadelphia, PA: SIAM. doi:10.1137/1.9781611971224.

- Campbell SL. 1980. Singular Systems of Differential Equations. In: Research Notes in Mathematics Vol. 40. San Francisco: Pitman Advanced Publishing Program, doi:10.1002/nme.1620150916.

- Campbell SL, Meyer CD, Rose NJ. 1976. Applications of the Drazin inverse to linear systems of differential equations with singular constant coefficients. Comput Math Appl. 31(3):411–425. doi:10.1137/0131035.

- Druskin V, Simoncini V. 2011. Adaptive rational Krylov subspaces for large-scale dynamical systems. Syst Cont Lett. 60(8):546–560. doi:10.1016/j.sysconle.2011.04.013.

- Eid R, Castañé-Selga R, Panzer H, Wolf T, Lohmann B. 2011. Stability-preserving parametric model reduction by matrix interpolation. Math Comput Model Dyn Syst. 17(4):319–335. doi:10.1080/13873954.2011.547671.

- Flagg G, Beattie C, Gugercin S. 2012. Convergence of the iterative rational Krylov algorithm. Syst Cont Lett. 61(6):688–691. doi:10.1016/j.sysconle.2012.03.005.

- Geuss M, Panzer H, Lohmann B. On parametric model order reduction by matrix interpolation. In Proceedings of the 12th European Control Conference, 3433–3438, Strasbourg, France, 2013. doi:10.23919/ECC.2013.6669829.

- Glover K. 1984. All optimal Hankel-norm approximations of linear multivariable systems and their L∞ -error bounds. Internat J Control. 39(6):1115–1193. doi:10.1080/00207178408933239.

- Gosea IV, Poussot-Vassal C, Antoulas AC. 2021. Data-driven modeling and control of large-scale dynamical systems in the Loewner framework: methodology and applications. In: Zuazua E and Trelat E, editors. Numerical Control: Part A, Hadb Numer Anal. Elsevier: North-Holland.

- Gosea IV, Zhang Q, Antoulas AC. 2020. Preserving the DAE structure in the Loewner model reduction and identification framework. Adv Comput Math. 46(3). doi:10.1007/s10444-020-09752-8.

- Gugercin S, Antoulas AC, Beattie C. 2008. model reduction for large-scale linear dynamical systems. SIAM J Matrix Anal Appl. 30(2):609–638. doi:10.1137/060666123.

- Gugercin S, Stykel T, Wyatt S. 2013. Model reduction of descriptor systems by interpolatory projection methods. SIAM J Sci Comput. 35(5):B1010–B1033. doi:10.1137/130906635.

- Hammarling SJ. 1982. Numerical solution of the stable, non-negative definite Lyapunov equation. IMA J Numer Anal. 2(3):303–323. doi:10.1093/imanum/2.3.303.

- Heinkenschloss M, Sorensen DC, Sun K. 2008. Balanced truncation model reduction for a class of descriptor systems with application to the Oseen equations. SIAM J Sci Comput. 30(2):1038–1063. doi:10.1137/070681910.

- Hesthaven JS, Rozza G, Stamm B. 2016. Certified Reduced Basis Methods for Parametrized Partial Differential Equations. Cham: SpringerBriefs Math. Springer. doi:10.1007/978-3-319-22470-1.

- Horn RA, Johnson CR. 1991. Topics in Matrix Analysis. Cambridge: Cambridge University Press. doi:10.1017/CBO9780511840371.

- Kunkel P, Mehrmann V. 2006. Differential-Algebraic Equations: Analysis and Numerical Solution. In: Textbooks in Mathematics. Zürich, Switzerland: EMS Publishing House.

- Kürschner P. 2016, Efficient Low-Rank Solution of Large-Scale Matrix Equations. Dissertation, Otto-von-Guericke-Universität, Magdeburg, Germany: http://hdl.handle.net/11858/00-001M-0000-0029-CE18-2.

- Lancaster P, Tismenetsky M. 1985. The Theory of Matrices. 2nd edition ed. Orlando: Academic Press.

- März R. 1996. Canonical projectors for linear differential algebraic equations. Comput Math Appl. 31(4/5):121–135. doi:10.1016/0898-1221(95)00224-3.

- Massoudi A, Opmeer MR, Reis T. 2017. The ADI method for bounded real and positive real Lur’e equations. Numer Math. 135(2):431–458. doi:10.1007/s00211-016-0805-2.

- Mayo AJ, Antoulas AC. 2007. A framework for the solution of the generalized realization problem. Linear Algebra Appl. 425(2–3):634–662. doi:10.1016/j.laa.2007.03.008.

- Mehrmann V, Stykel T. 2005. Balanced truncation model reduction for large-scale systems in descriptor form. In: Benner P, Mehrmann V, and Sorensen DC, editors. Dimension Reduction of Large-Scale Systems, volume 45 Lect Notes Comput Sci Eng. Berlin/Heidelberg: Springer; p. 83–115. doi:10.1007/3-540-27909-1_3.

- Moore BC. 1981. Principal component analysis in linear systems: controllability, observability, and model reduction. IEEE Trans Automat Control. AC-26(1):17–32. doi:10.1109/TAC.1981.1102568.

- Panzer H, Wolf T, Lohmann B. 2012. A strictly dissipative state space representation of second order systems at-Automatisierungstechnik. 60(7):392–397. doi:10.1524/auto.2012.1015.

- Penzl T. 2000. A cyclic low rank Smith method for large sparse Lyapunov equations. SIAM J Sci Comput. 21(4):1401–1418. doi:10.1137/S1064827598347666.

- Pulch R, Narayan A, Stykel T. 2021. Sensitivity analysis of random linear differential–algebraic equations using system norms. J Comput Appl Math. 397:113666. doi:10.1016/j.cam.2021.113666.

- Quarteroni A, Manzoni A, Negri F. 2016. Reduced Basis Methods for Partial Differential Equations. In: of La Matematica per il 3+2. Cham: Springer International Publishing; Vol. 92.

- Saak J, Köhler M, Benner P. M-M.E.S.S.-2.2 – The Matrix Equations Sparse Solvers library, February 2022. doi:10.5281/zenodo.5938237.

- Saak J, Voigt M. 2018. Model reduction of constrained mechanical systems in M-M.E.S.S. IFAC-PapersOnLine. 51(2):661–666. doi:10.1016/j.ifacol.2018.03.112. 9th Vienna International Conference on Mathematical Modelling, Vienna, 2018.

- Schmidt A, Wittwar D, Haasdonk B. 2020. Rigorous and effective a-posteriori error bounds for nonlinear problems – Application to RB methods. Adv Comput Math. 46:32. doi:10.1007/s10444-020-09741-x.

- Schwerdtner P, Moser T, Mehrmann V, Voigt M. 2023. Optimization-based model order reduction of port-Hamiltonian descriptor systems. Syst Control Lett. 182:105655. doi:10.1016/j.sysconle.2023.105655.

- Simoncini V, Druskin V. 2009. Convergence analysis of projection methods for the numerical solution of large Lyapunov equations. SIAM J Num Anal. 47(2):828–843. doi:10.1137/070699378.

- Son NT, Gousenbourger P-Y, Massart E, Stykel T. 2021. Balanced truncation for parametric linear systems using interpolation of Gramians: a comparison of algebraic and geometric approaches. In: Benner P, Breiten T, Faẞbender H, Hinze M, Stykel T, and Zimmermann R, editors. Model Reduction of Complex Dynamical Systems, volume 171 International Series of Numerical Mathematics. Cham: Birkhäuser; p. 31–51. doi:10.1007/978-3-030-72983-7_2.

- Son NT, Stykel T. 2017. Solving parameter-dependent Lyapunov equations using the reduced basis method with application to parametric model order reduction. SIAM J Matrix Anal Appl. 38(2):478–504. doi:10.1137/15M1027097.

- Sorenson DC, Zhou Y. 2002. Bounds on eigenvalue decay rates and sensitivity of solutions to Lyapunov equations. CAAM Technical Reports TR02-07, Rice University, Available at https://repository.rice.edu/items/7d1381b1-adc7-4282-8486-430784cef15c

- Stykel T. 2004. Gramian-based model reduction for descriptor systems. Math Cont Sign Syst. 16(4):297–319. doi:10.1007/s00498-004-0141-4.

- Stykel T. 2006. Balanced truncation model reduction for semidiscretized Stokes equation. Linear Algebra Appl. 415(2–3):262–289. doi:10.1016/j.laa.2004.01.015.

- Stykel T. 2008. Low-rank iterative methods for projected generalized Lyapunov equations. Electron Trans Numer Anal. 30:187–202. URL http://etna.mcs.kent.edu/vol.30.2008/pp187-202.dir/pp187-202.pdf

- Tombs MS, Postlethwaite I. 1987. Truncated balanced realization of a stable non-minimal state-space system. Internat J Control. 46(4):1319–1330. doi:10.1080/00207178708933971.

- Tomljanović Z, Beattie C, Gugercin S. 2018. Damping optimization of parameter dependent mechanical systems by rational interpolation. Adv Comp Math. 44(6):1797–1820. doi:10.1007/s10444-018-9605-9.

- Truhar N, Tomljanović Z, Puvača M. 2019. Approximation of damped quadratic eigenvalue problem by dimension reduction. Appl Math Comput. 347:40–53. doi:10.1016/j.amc.2018.10.047.

- Truhar N, Veselić K. 2009. An efficient method for estimating the optimal dampers’ viscosity for linear vibrating systems using Lyapunov equation. SIAM J Matrix Anal Appl. 31(1):18–39. doi:10.1137/070683052.

- Ugrica M. Approximation of Quadratic Eigenvalue Problem and Application to Damping Optimization. Doctoral thesis, University of Zagreb, Faculty of Science, Zagreb, Croatia, 2020. URL https://urn.nsk.hr/urn:nbn:hr:217:106554.

- Wang B, Zhang F. 1992. Some inequalities for the eigenvalues of the product of positive semidefinite Hermitian matrices. Linear Algebra Appl. 160:113–118. doi:10.1016/0024-3795(92)90442-D.

- Zhou K, Doyle JC, Glover K. 1996. Robust and Optimal Control. Upper Saddle River, NJ: Prentice-Hall.