ABSTRACT

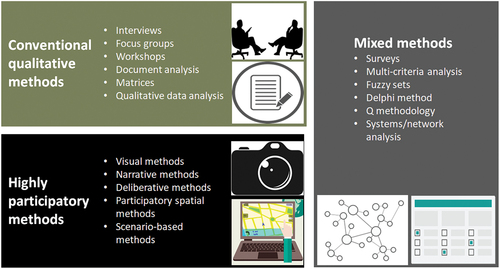

Qualitative methods for impact assessment (IA) represent a broad spectrum of approaches that are important for realising effective IA practice. The purpose of this paper is to identify and promote qualitative methods that are available for use in contemporary and future (next-generation) IA processes. From an extensive literature review, an international survey (145 responses), expert interviews (48 interviewees), and a workshop attended by 27 IA practitioners, 17 qualitative method categories were identified. These were further subdivided into three classes: conventional qualitative methods, highly participatory methods, and mixed methods. Each method is described, and an indication given of how each can be used in IA practice, including the specific stage of the IA process to which they might be applied. Whilst this paper seeks to stimulate practitioners to apply qualitative methods to enrich IA practices, the research also identifies a lack of expertise with social science methods as a significant barrier to the effective use of qualitative methods in IA practice.

1. Introduction

Many methods for obtaining and analysing data may be used at different steps in the impact assessment (IA) process, including baseline data collection, impact identification and prediction, comparison of alternatives, decision-making, mitigation design and implementation, and post-approval follow-up monitoring and management. In IA practice, researchers have long sought to promote and improve quantitative methods (e.g. Bisset 1978; Beanlands and Duinker 1984), despite the potential for such approaches to mask contentious issues and close down discussion. Although qualitative methods have always been used in IA, the extent to which they are used, the types of methods employed, and how the resulting data are applied in IA have not previously been examined explicitly. Further, some practitioners and decision-makers appear to perceive social science methods based on qualitative data as unscientific and subjective, and therefore as providing second-rate evidence (Kørnøv and Thissen 2000; Nitz and Brown 2001; Owens et al. 2004; Retief et al. 2013). And yet subjectivity and value-based decision-making have always been central to IA practice, not only in the assessment of social impacts, where they are core (e.g. as acknowledged in numerous chapters within Vanclay and Esteves 2024), but also in determining impact significance, which is fundamental to the theory and practice of IA (e.g. Ehrlich and Ross 2015; Jones and Morrison-Saunders 2016).

The need for appropriately selected and rigorously applied qualitative methods from the social sciences to be used more widely in IA will only increase as governments move towards enshrining next-generation forms of assessment (e.g. Sinclair et al. 2018) in legislation. Following Sinclair et al. (2021), we view such forms of assessment as bringing together process elements that aim to achieve integrated planning and decision-making for sustainability; address policies and programs as well as projects, and cumulative local, regional, and global effects; and establish decision processes that empower the public, recognize uncertainties, and favour precaution.

The purpose of this paper is to respond to this need by identifying and introducing a range of qualitative methods that are available for use in contemporary and future IA processes. The research reported here is part of a three-year research program, funded by the Impact Assessment Agency of Canada, that specifically sought to understand and promote the effective use of qualitative methods in IA. The Agency took this action following the introduction of the Impact Assessment Act (of Canada) 2019, which broadened the scope of IA in Canada beyond traditional biophysical considerations to include next-generation elements such as a requirement to conduct gender-based analysis, along with the process features identified above. A complete account of the research program is provided in Walker et al. (2023), which also offers more specific guidance on using the methods identified. In this paper, we focus specifically on the typology of qualitative methods that can contribute to sustainability-oriented IA.

2. Methods

Four methods were used to undertake this research: a literature review, an international survey, interviews, and a workshop with international IA practitioners. The literature review was the initial method used to identify qualitative methods and resulted in 22 method categories being established. The survey, interviews, and workshop were subsequently used to examine, refine, and consolidate these categories. We outline each of the four research methods in turn before discussing triangulation and the inter-relationships between them.

2.1 Literature review

Our literature review was undertaken in several stages. An initial scoping review (following Grant and Booth 2009) provided an indication of the breadth and depth of available literature and informed the selection of appropriate search terms for the structured literature review (SLR). On the basis of the scoping review, we decided to incorporate search terms from related fields such as environmental planning and natural resource management, so as to identify qualitative methods that could potentially be applied in IA as well as those already in use. We also decided to include terms related to participatory and community-based methods.

The SLR was based on the following search chain within both Scopus and Google Scholar: (Qualitative OR subjective OR participat* OR community) AND (‘environmental assessment’ OR ‘impact assessment’ OR ‘natural resource management’ OR ‘spatial planning’ OR ‘land planning’ OR ‘land use planning’ OR ‘regional planning’ OR ‘urban planning’ OR ‘environmental planning’). Sources identified were screened for relevance to IA and usefulness of content in terms of understanding the method and its potential application.
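To make the Boolean logic of the search chain concrete, the sketch below applies it as a toy filter to a title or abstract string: at least one term from the first clause AND at least one phrase from the second. It is a hypothetical illustration only, assuming simple case-insensitive matching; Scopus and Google Scholar each parse queries with their own syntax and field rules, and the function name `matches_search_chain` and the sample titles are invented for this example. The truncation wildcard `participat*` is approximated here by the regular expression `participat\w*`.

```python
import re

# First clause of the search chain (OR-ed): at least one term must appear.
# The truncation 'participat*' is approximated by the regex participat\w*.
METHOD_PATTERNS = [r"\bqualitative\b", r"\bsubjective\b", r"\bparticipat\w*", r"\bcommunity\b"]

# Second clause (OR-ed): at least one quoted phrase must appear as a phrase.
FIELD_PHRASES = [
    "environmental assessment",
    "impact assessment",
    "natural resource management",
    "spatial planning",
    "land planning",
    "land use planning",
    "regional planning",
    "urban planning",
    "environmental planning",
]


def matches_search_chain(text: str) -> bool:
    """Return True if text satisfies (method clause) AND (field clause).

    Hypothetical local filter; real database query semantics differ.
    """
    lowered = text.lower()
    has_method = any(re.search(p, lowered) for p in METHOD_PATTERNS)
    has_field = any(
        re.search(r"\b" + re.escape(phrase) + r"\b", lowered) for phrase in FIELD_PHRASES
    )
    return has_method and has_field


if __name__ == "__main__":
    for title in [
        "Participatory mapping in social impact assessment",
        "Qualitative interviews in nursing education",
        "Hydrological modelling for urban planning",
    ]:
        print(matches_search_chain(title), title)
```

Only the first sample title satisfies both clauses; the second lacks a field phrase and the third lacks a method term, which is the AND between the two bracketed groups at work.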

In addition to our SLR searches, we employed the ‘snowball’ methods of Greenhalgh and Peacock (2005) to pursue ‘references of references and electronic citation tracking’ (p. 1065). We also drew upon our ‘existing knowledge and networks’ (Badger et al. 2000, p. 223) to inform our searches, including the external Best Practice Advisory Committee (BPAC) that we established at the outset of the overall research project to guide our work (details of the committee are provided in Walker et al. 2023). This was especially valuable in sourcing grey literature not included in online databases such as Google Scholar.

In selecting papers, we chose sources that:

related to ex-ante assessment of policies, plans, programmes, and projects;

discussed details of a specific method or technique applied to IA or a closely related field;

were accessible (open access or available through university databases); and

were in English.

This resulted in the identification of 423 sources through the scoping review search. A further 30 sources that the BPAC suggested were potentially relevant were also included, giving a total of 453 sources screened. Removal of duplicates and application of the screening criteria left 135 sources for full-text review.

At this point, an initial list of qualitative methods was generated. A targeted literature review was then conducted on each method in the initial list. This involved searching both for uses of the method in IA and related fields, using a search chain similar to that used for the SLR, and for the method alone, especially for methods that are tightly prescribed (such as fuzzy sets and Q methodology). The targeted searches generated data on each method and its appropriate application; its strengths, limitations, and challenges; and practical considerations. Summaries of each method were then prepared using a standard template.

2.2 International survey

We designed and implemented an international online survey that asked IA professionals about their engagement with the 22 methods identified in our initial literature review and with qualitative research more broadly. To this end, we posed a combination of closed- and open-ended questions, as suggested by Bhattacherjee (2012). The questions relevant to this paper's focus on identifying qualitative methods used in contemporary IA are reproduced in Box 1. By necessity, our online survey was long, which runs counter to the advice of research methods scholars such as Deutskens et al. (2004) and Revilla and Ochoa (2017). Following the advice of Neuman (2014) on improving reliability in social research, we piloted the survey with the BPAC prior to dissemination, which led to some refinements.

Box 1 Survey questions pertaining to the identification of qualitative methods in IA

Please indicate the extent to which you use, or engage with, each of the 22 methods [i.e. subsequently itemised] – (closed-question responses: often, sometimes, rarely, or never).

Please identify any additional qualitative methods [i.e. not in the list of 22 provided] that could be applied within IA.

Please identify two methods you have used or engaged with that contributed most to the overall IA objectives.

For each of these two methods, please indicate: a) impact area and IA process steps, b) associated data analysis methods, c) the strengths, weaknesses, and challenges of the methods, and d) practical considerations and tips.

Because the total population of IA professionals who work with qualitative methods is unknown, we relied on non-random convenience and purposive sampling (following Neuman 2014). The survey, which was available for two months in 2022, was distributed via: 1) 238 emails sent directly to potential participants known to have expertise in qualitative research in IA, as identified by the research team, the BPAC, and the literature review; 2) the newsletters and/or social media platforms of nine national and international IA professional associations (e.g. the International Association for Impact Assessment – IAIA, including the IAIA affiliates and SIAHub); and 3) information cards distributed at the IAIA annual meeting in May 2022. A total of 145 responses were received, of which 80 respondents answered positively to the final survey question asking whether they would be willing to participate in a follow-up interview.

2.3 Interviews

A semi-structured interview format was developed (following Al Balushi 2016) to allow in-depth discussions to emerge. The questions asked about two specific methods and their use in IA, including their data collection and analysis procedures, implementation considerations and tips, strengths and challenges, and appropriate contexts for use in IA (i.e. the interview questions were similar in nature to those in the survey; Box 1). The research team collaboratively drafted an interview guide, and four team members conducted pilot interviews to test it, resulting in some refinements.

Interviewees were initially selected from the 80 survey respondents mentioned previously, 46 of whom were identified as together covering experience with the widest possible range of the 22 qualitative methods. Forty of these practitioners subsequently agreed to be interviewed, and a further eight interviews were conducted with IA professionals who had not completed the survey but who were sought out for their known expertise in otherwise under-represented methods. The interviews were conducted via Zoom or Teams, using the platforms' transcription or closed-caption functions to create initial transcripts. Because the quality of these transcripts varied considerably, the audio recordings were used to verify and refine them.

2.4 Workshops

As a research method, workshops can serve as ‘evaluative, reflexive milestones (formative evaluation)’ (Lang et al. 2012, p. 32) as well as being ‘ways of developing or changing existing programmes or interventions … [and] to explore theories or strategies’ (Ritchie and Lewis 2003, p. 290) arising from research. Members of the research team attending the IAIA annual conference in 2022 conducted a workshop to share and test aspects of our overall research program. This workshop, attended by 27 IA practitioners (researchers, non-governmental organization representatives, and government/regulatory professionals), provided an opportunity to verify the list of 22 methods that had emerged from the initial literature review and to begin developing a more in-depth understanding of their application in IA. In addition to reflecting and commenting upon their use of these methods, workshop participants were asked to identify any key methods missing from our list. Methods suggested by participants were subsequently either found to fit within the existing method categories or excluded because they were considered research approaches (e.g. ethnography) or technical tools (e.g. Mentimeter, Zoom) rather than specific research methods.

2.5 Data analysis

The qualitative survey data, interview transcripts, and workshop notes were coded together in NVivo 12 using a hybrid deductive-inductive approach to thematic analysis. Deductive codes were first established for each method based on specific project objectives, such as ‘Strengths & Value,’ ‘Challenges & Limitations,’ ‘Practical Considerations,’ and ‘Contextual Considerations’ (i.e. relevant IA process steps and impact categories). After the data had been coded deductively, an inductive coding process allowed specific themes to emerge from the data (following the process outlined by Braun and Clarke 2006). For example, 40 discrete sections of data had been deductively coded to ‘Practical Considerations’ for the interviews method category. These data were read thoroughly, and initial second-level codes were developed inductively to cluster the data into meaningful groups. The content of the codes was then reviewed again, and codes were merged where they captured related ideas. For instance, on review, the inductive second-level codes ‘respect’ and ‘safe spaces’ were found to contain similar ideas. They were therefore merged into a single code, ‘creating safe spaces,’ which was identified as an important theme in good practice for conducting interviews. This example code structure, along with a few samples of coded data, is shown in Figure 1.
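The merge step described above can be pictured as an operation on a two-level code tree: deductive first-level codes containing inductive second-level codes, each holding the data excerpts assigned to it. The sketch below is a hypothetical stand-in for that bookkeeping, not NVivo's interface (NVivo is operated through its own application, and this code does not interact with it). The code names ‘Practical Considerations’, ‘respect’, ‘safe spaces’, and ‘creating safe spaces’ come from the text; the excerpt identifiers, the extra code ‘scheduling’, and the helper names `build_code_tree` and `merge_codes` are invented for illustration.

```python
from collections import defaultdict

# Hypothetical coded excerpts:
# (deductive first-level code, inductive second-level code, excerpt id).
SEGMENTS = [
    ("Practical Considerations", "respect", "int07-s3"),
    ("Practical Considerations", "safe spaces", "int12-s1"),
    ("Practical Considerations", "safe spaces", "int19-s4"),
    ("Practical Considerations", "scheduling", "int03-s2"),
]


def build_code_tree(segments):
    """Group excerpt ids under first-level and then second-level codes."""
    tree = defaultdict(lambda: defaultdict(list))
    for parent, child, excerpt in segments:
        tree[parent][child].append(excerpt)
    return tree


def merge_codes(tree, parent, old_codes, new_code):
    """Move every excerpt from old_codes into new_code and drop the old codes."""
    children = tree[parent]
    for code in old_codes:
        children[new_code].extend(children.pop(code, []))
    return tree


tree = build_code_tree(SEGMENTS)
merge_codes(tree, "Practical Considerations", ["respect", "safe spaces"], "creating safe spaces")
for code, excerpts in tree["Practical Considerations"].items():
    print(code, excerpts)
```

Keeping excerpts attached to codes through the merge means no coded data are lost when related codes are consolidated, which is the property the review-and-merge step relies on.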

Including data from multiple methods and numerous participants facilitated triangulation, since we were able to ‘learn more by observing from multiple perspectives than by looking from only a single perspective’ (Neuman 2014, p. 166). For example, in establishing the broader themes noted above, we actively sought confirmation from multiple sources of data, such as the coded qualitative data and the targeted literature review summary templates. Further triangulation was conducted during the analysis that matched qualitative methods with particular IA needs and considered how different methods might be used at different stages of IA. This is explained further in the results section in relation to Table 2 and Figure 2.

3. Results

Although 22 qualitative methods were initially identified for use in IA through the literature review (as explained previously), the list was shortened to 17 method categories after all four research methods had been applied. Some entries on the original list were excluded because participants perceived them as research approaches (e.g. participatory rural appraisal) or IA processes (e.g. value mapping) rather than specific methods. Others were excluded because the data did not contain sufficient information about how they can be applied to IA (e.g. qualitative modelling) or how they can be applied in novel or innovative ways in contemporary IA (e.g. checklists). Finally, system analysis and network analysis were combined into a single method category because of their similarities in use and application in IA.

The final list of 17 method categories is presented in alphabetical order in Table 1, along with a brief description and example references for each. Readers are referred to Walker et al. (2023) for more detail on each method category and a more comprehensive reading list. Within some categories, a variety of specific methods can be identified. For example, deliberative methods involve various discussion-based approaches, and the table entry for this category includes three examples. Specific methods were grouped into categories so that the table (and the subsequent discussion of each category) can serve as a simple reference guide for IA practitioners. The descriptions also draw attention to methods frequently used by survey respondents, with ‘frequently’ defined as used by at least 30% of respondents.
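The 30% threshold for ‘frequently’ used methods can be applied mechanically to the closed-question responses in Box 1 (often, sometimes, rarely, or never). The sketch below assumes, purely for illustration, that a respondent ‘uses’ a method if they answered ‘often’ or ‘sometimes’; the text does not state which responses were counted, so that set is left as a parameter. The function name `frequently_used` and the response data are hypothetical.

```python
from typing import Dict, Iterable, List

THRESHOLD_PCT = 30  # 'frequently' = used by at least 30% of respondents (from the text)


def frequently_used(
    responses: Dict[str, List[str]],
    use_responses: Iterable[str] = ("often", "sometimes"),  # assumption, not from the paper
    threshold_pct: int = THRESHOLD_PCT,
) -> List[str]:
    """Return the methods whose share of 'use' responses meets the threshold."""
    counted = set(use_responses)
    selected = []
    for method, answers in responses.items():
        users = sum(answer in counted for answer in answers)
        # Integer comparison avoids floating-point edge cases at exactly 30%.
        if users * 100 >= threshold_pct * len(answers):
            selected.append(method)
    return sorted(selected)


# Invented responses from ten hypothetical respondents per method.
example = {
    "document analysis": ["often"] * 5 + ["sometimes"] * 3 + ["never"] * 2,
    "focus groups": ["sometimes"] * 3 + ["rarely"] * 4 + ["never"] * 3,
    "Q methodology": ["rarely"] * 2 + ["never"] * 8,
}
print(frequently_used(example))
```

Because which answers count as ‘use’ changes the result, stating that choice alongside any frequency threshold keeps the classification reproducible.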

Table 1. Qualitative methods used in impact assessment (adapted from Walker et al. 2023, pp. 38–39).

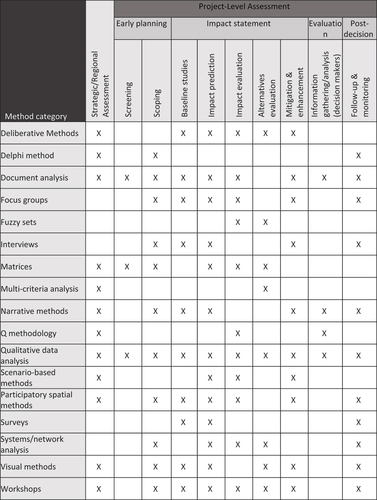

Each qualitative method has unique characteristics that might help determine its suitability for meeting particular IA needs (e.g. the extent to which a method is participatory or can deal with uncertainties) or for the contextual circumstances faced by a practitioner (e.g. the cost and time demands, or technical abilities, needed to apply a method). It is beyond the scope of this paper to fully analyse and explain these characteristics for each of the 17 method categories we identified. However, as a quick reference guide, Table 2 suggests which methods might best be used for various aspects of IA practice. The table was derived by triangulating between data coded to ‘Strengths and Value’ (a deductive subcode established for each method category in NVivo) and the ‘Strengths’ section of the literature review synthesis tables prepared for each method. Although this triangulation was not fully comprehensive (i.e. it did not attempt to identify every relevant circumstance present in the data), the table includes the most prominent themes and IA circumstances. Through the qualitative analysis, the research team also identified the IA stages at which each method has been used or which participants identified as relevant (e.g. early development design and planning, screening and scoping, approval decision-making, and follow-up). These matches between methods and particular IA steps are presented in Figure 2. Because the figure is based on findings from this analysis, there may be additional IA steps at which the methods could be applied that were not identified through this research and are therefore absent from Figure 2.

Figure 2. Impact assessment steps for which each qualitative method has been used or suggested as relevant by research participants (adapted from Walker et al. 2023, p. 42).

Table 2. Matching qualitative methods with particular IA needs (adapted from Walker et al. 2023, p. 44).

4. Discussion

Table 1 describes an array of qualitative methods that can contribute to more effective IA by helping to address subjectivity and value-based decision-making in IA practice. As noted earlier, specific methods were grouped into categories and listed alphabetically so that the table can serve as a simple reference guide for IA practitioners. The 17 method categories are diverse, encompassing conventional qualitative social science methods, innovative participatory methods, and mixed methods that blend qualitative and quantitative data collection and analysis techniques. These methods are not mutually exclusive, and they may be used in combination (for example, visual methods might be used with numerous other categories, including interviews, scenario-based methods, and multi-criteria analysis).

Regardless of the suite of methods employed, an important consideration is whether practitioners have the experience and expertise to use the methods effectively. A cautionary note in this regard was raised in the interviews: several participants commented that there is a general lack of capacity in IA for using social science research methods. For example, one social scientist noted that many people doing IA – even social impact assessment – are trained in the natural sciences and assume that anyone can apply qualitative methods, even without appropriate training. The interviewee was adamant that this should not be the case. It was further suggested that qualitative data analysis in IA can be particularly problematic when it lacks rigour. Further study is needed to clarify the depth and breadth of this problem in IA, but experience, expertise, and rigour are clearly needed for the effective application of any research method, whether qualitative or quantitative (e.g. Neuman 2014; Leavy 2017; Creswell and Creswell 2018).

Technical expertise is particularly important in the case of mixed methods. Another cautionary note raised in the interviews was that inappropriate quantitative methods are often applied to qualitative data: for example, ratings of high/medium/low become 3/2/1, which are then summed or averaged. This is not mathematically defensible, because it treats ordinal data as if they were interval data, i.e. as if the steps between categories were equal. This is one reason that qualitative data are often viewed with suspicion among practitioners. Methods such as fuzzy sets, multi-criteria analysis, and Q methodology offer ways to conduct legitimate mathematical analysis of qualitative data (e.g. Brown 1993; Wood et al. 2007; Geneletti and Ferretti 2015). However, practitioners should also note that these methods have highly prescriptive technical requirements and are not appropriate choices unless suitable expertise is available. Additionally, although quantitative and mixed methods provide findings that are highly legible to decision-makers (because numerical results provide clarity), they can lose the richness, depth, and nuance that qualitative methods provide.
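The problem with recoding high/medium/low as 3/2/1 and averaging can be shown with a small invented example. For ordinal data, any order-preserving numeric coding is equally valid, yet the ranking of two options by their mean can flip depending on which coding is chosen, whereas a ranking by median does not change. The option names, ratings, the alternative 1/2/10 coding, and the helper `rank_options` are hypothetical; the lower median (`statistics.median_low`) is used so that the summary is always an observed category.

```python
from statistics import mean, median_low

# Two codings that both preserve the order low < medium < high.
CODING_321 = {"low": 1, "medium": 2, "high": 3}
CODING_STRETCHED = {"low": 1, "medium": 2, "high": 10}

# Invented significance ratings from three assessors for two options.
RATINGS = {
    "option_a": ["high", "low", "low"],
    "option_b": ["medium", "medium", "medium"],
}


def rank_options(stat, coding):
    """Rank options from highest to lowest by stat() over their coded ratings."""
    scores = {opt: stat([coding[r] for r in rs]) for opt, rs in RATINGS.items()}
    return sorted(scores, key=scores.get, reverse=True)


for name, coding in [("3/2/1", CODING_321), ("1/2/10", CODING_STRETCHED)]:
    print(name, "mean:", rank_options(mean, coding), "median:", rank_options(median_low, coding))
```

Under 3/2/1 the mean ranks option B above option A (2 versus about 1.67), but under 1/2/10 it ranks A above B (4 versus 2); the median ranks B above A under both codings. The point is not that the median is always the right summary, only that conclusions drawn from ordinal ratings should not depend on an arbitrary choice of numbers.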

Table 2 and Figure 2 match qualitative methods with, respectively, particular IA needs and discrete IA process steps. Table 2 is not comprehensive but covers highly salient method characteristics and IA circumstances. Together, the table and figure offer a quick reference guide for practitioners on when and how each method can contribute to IA practice, needs, and process. One important observation is that multiple methods can, and perhaps should (for the sake of triangulation and validity), be used to meet each IA need or objective and at each IA step. Another is that the table and figure reinforce the degree to which document analysis is a foundational method, meeting most IA information needs at most IA steps. It was by far the method most used by survey respondents (83%). These findings underscore the importance of addressing the concerns noted earlier about the lack of rigour in qualitative data analysis. When document reviews centre on rich descriptions of social, cultural, and environmental conditions, they need to go beyond quick scans and overviews; they need to be careful, systematic, and focused on yielding valid interpretations of the variables of interest (Bowen 2009).

Given our emphasis on current and future (next-generation) IA and on addressing subjectivity and value-based decisions, a highly salient consideration in matching methods with IA needs and process steps is the degree to which the methods are participatory (Sinclair et al. 2015; Diduck and Sinclair 2024). As a guide for practitioners, the 17 method categories outlined in Table 1 can be classified into three classes: conventional qualitative methods, highly participatory methods, and mixed methods. We recognise that this classification is rudimentary because the classes overlap; for example, some conventional methods (focus groups and workshops) are inherently participatory, and some mixed methods (e.g. network analysis, multi-criteria analysis, and Q methodology) can be applied in a participatory way.

Participatory methods are considered more innovative, which may explain why they were perceived to be used less frequently than other methods, although research participants cited noteworthy examples. One such example is community mapping, which emerged from participatory rural appraisal (Chambers 2006) and is likely most relevant to community-based IA in rural contexts, particularly in the global South (e.g. Spaling et al. 2011). Another is land use and occupancy mapping (or traditional land use mapping) (Tobias 2014). Because this method involves collecting interview data about Indigenous peoples’ traditional use of resources and occupancy of lands, best practice dictates that it be initiated, led, and controlled by Indigenous peoples; be applied and used in ways that reflect Indigenous worldviews; and support Indigenous sovereignty and self-representation (e.g. Joly et al. 2018). A third example is the use of participatory geographic information systems in research undertaken in the context of IA or environmental planning (Alagan and Aladuwaka 2012; Brown et al. 2014).

5. Conclusions

In this paper, we set out to identify and promote qualitative methods for impact assessment. The 17 method categories described were identified from the literature, and their use in IA by practitioners was confirmed and consolidated through a survey, interviews, and a workshop. Although there are numerous relationships among the 17 methods, and some simply offer alternative means of delivering a particular IA function, each is a unique and discrete approach to collecting and analysing qualitative data. The brief descriptions (summarised in Table 1) and the account of what each method offers IA practice (summarised in Table 2 and Figure 2) are intended as a guide for IA practitioners seeking to enrich their own ‘toolbox’ of IA methods. We hope that promoting these methods will benefit practitioners by introducing ways of doing IA with which they may not previously have been familiar. In support of this, our full report (Walker et al. 2023) identifies ways in which qualitative methods in IA can be strengthened and offers a guide to implementing the methods identified, with IA case examples.

In particular, we hope that this paper stimulates and enriches current and future IA practice and helps shift the trend we found during our research, namely that practice has been limited to a relatively small number of conventional qualitative social science methods (document analysis, interviews, focus groups, workshops, narrative methods, surveys, and qualitative data analysis). A significant caveat remains, however, regarding the concern expressed by interviewees about the expertise required to apply qualitative methods properly. Social science training is essential in most cases; otherwise, the methods will not deliver what is intended.

Ultimately, if practice is to deliver on next-generation IA expectations, considering the values and perspectives of multiple and diverse stakeholder groups becomes increasingly important. The methods highlighted in this paper facilitate this shift towards including broader next-generation considerations in decision-making. The tables and figures presented in this paper are intended to serve as simple reference guides for IA practitioners wishing to broaden their knowledge and application of qualitative methods. However, they represent only a snapshot in time of thinking about the 17 qualitative methods identified in this research. Further innovations will inevitably emerge, as will new applications of qualitative methods for tasks not identified in this research.

Acknowledgments

We are grateful to the many IA practitioners, researchers, and decision-makers who shared their knowledge of these methods. A nine-member Best Practices Advisory Committee provided direction and feedback throughout the process. The research was supported by four research assistants: Anna Pattison, Ran Wei, Flo Kaempf, and Penelope Sanz.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

References

- Alagan R, Aladuwaka S. 2012. Innovative public participatory GIS methodologies adopted to deal with the social impact assessment process challenges: a Sri Lankan experience. J Urban Reg Inf Syst Assoc. 24(2):19–32.

- Al Balushi K. 2016. The use of online semi-structured interviews in interpretive research. Int J Sci Res. 57(4):2319–7064.

- Badger D, Nursten J, Williams P, Woodward M. 2000. Should all literature reviews be systematic? Eval Res Educ. 14(3–4):220–230. doi: 10.1080/09500790008666974.

- Beanlands G, Duinker P. 1984. An ecological framework for environmental impact assessment. J Environ Manag. 18:267–277.

- Bhattacherjee A. 2012. Social science research: principles, methods and practices. In: Textbooks collection, book 3. Global text project. 2nd ed. Tampa (FL): University of South Florida. [accessed 16 Oct 2023]. http://scholarcommons.usf.edu/oa_textbooks/3.

- Bisset R. 1978. Quantification, decision-making and environmental impact assessment in the United Kingdom. J Environ Manag. 7(1):43–58.

- Bisset R. 1980. Methods for environmental impact analysis: recent trends and future prospects. J Environ Manag. 11(1):27–43.

- Bowen GA. 2009. Document analysis as a qualitative research method. Qual Res J. 9(2):27–40. doi: 10.3316/QRJ0902027.

- Braun V, Clarke V. 2006. Using thematic analysis in psychology. Qual Res Psychol. 3(2):77–101. doi: 10.1191/1478088706qp063oa.

- Brown SR. 1993. A primer on Q methodology. Operant Subjectivity. 16(3/4):91–138. doi: 10.22488/okstate.93.100504.

- Brown G, Kelly M, Whitall D. 2014. Which ‘public’? Sampling effects in public participation GIS (PPGIS) and volunteered geographic information (VGI) systems for public lands management. J Environ Plann Manage. 57(2):190–214. doi: 10.1080/09640568.2012.741045.

- Chambers R. 2006. Participatory mapping and geographic information systems: whose map? Who is empowered and who disempowered? Who gains and who loses? Electron J Info Sys Dev Countries. 25(1):1–11. doi: 10.1002/j.1681-4835.2006.tb00163.x.

- Chen Y, Caesemaecker C, Rahman HMT, Sherren K. 2020. Comparing cultural ecosystem service delivery in dykelands and marshes using Instagram: a case of the Cornwallis (Jijuktu’kwejk) river, Nova Scotia, Canada. Ocean Coast Manag. 193:105254. doi: 10.1016/j.ocecoaman.2020.105254.

- Chen Y, Parkins JR, Sherren K. 2019. Leveraging social media to understand younger people’s perceptions and use of hydroelectric energy landscapes. Soc Natur Resour. 32(10):1114–1122. doi: 10.1080/08941920.2019.1587128.

- Creswell JW. 2007. Qualitative inquiry & research design: choosing among five approaches. 2nd ed. London: Sage.

- Creswell JW, Creswell JD. 2018. Research design: qualitative, quantitative, and mixed methods approaches. 5th ed. London: SAGE Publications.

- Crosby N. 1995. Citizens juries: one solution for difficult environmental questions. In: Renn O, Webler T, Wiedemann P, editors. Fairness and competence in citizen participation: evaluating models for environmental discourse. Springer Netherlands; p. 157–174. doi: 10.1007/978-94-011-0131-8_8.

- Deutskens E, De Ruyter K, Wetzels M, Oosterveld P. 2004. Response rate and response quality of internet-based surveys: an experimental study. Mark Lett. 15(1):21–36. doi: 10.1023/B:MARK.0000021968.86465.00.

- Diduck AP, Sinclair AJ. 2024. The promise of social learning-oriented approaches to public participation. In: Handbook of public participation in impact assessment. Edward Elgar Publishing; p. 89–101.

- Dodgson JS, Spackman M, Pearman A, Phillips LD. 2009. Multi-criteria analysis: a manual. UK Department of Communities and Local Government; [accessed 12 Jan 2024]. https://eprints.lse.ac.uk/12761/1/Multi-criteria_Analysis.pdf.

- Duinker PN, Greig LA. 2007. Scenario analysis in environmental impact assessment: improving explorations of the future. Environ Impact Assess Rev. 27(3):206–219. doi: 10.1016/j.eiar.2006.11.001.

- Egan AF, Jones SB. 1997. Determining forest harvest impact assessment criteria using expert opinion: a Delphi study. North J Appl For. 14(1):20–25. doi: 10.1093/njaf/14.1.20.

- Ehrlich A, Ross W. 2015. The significance spectrum and EIA significance determinations. Impact Assess Proj Apprais. 33(2):87–97. doi: 10.1080/14615517.2014.981023.

- European Commission. 1999. Guidelines for the assessment of indirect and cumulative impacts as well as impact interactions. Office for Official Publications of the European Communities; [accessed 14 Jan 2024]. https://ec.europa.eu/environment/archives/eia/eia-studies-and-reports/pdf/guidel.pdf.

- Fischer DW, Davies GS. 1973. An approach to assessing environmental impacts. J Environ Manag. 1:207–227.

- Fishkin JS. 2021. Deliberative public consultation via deliberative polling: criteria and methods. Hastings Cent Rep. 51(S2):S19–S24. doi: 10.1002/hast.1316.

- Gastil J. 2009. A comprehensive approach to evaluating deliberative public engagement. In: MASS LBP, editor. Engaging with impact: targets and indicators for successful community engagement by Ontario’s local health integration networks, Ontario Ministry of Health and Long Term Care, Health System Strategy Division, and the Central, North West and South East LHINs. Toronto (ON): Ontario Ministry of Health and Long Term Care; p. 15–27.

- Geneletti D, Ferretti V. 2015. Multicriteria analysis for sustainability assessment: concepts and case studies. In: Morrison-Saunders A, Pope J, Bond A, editors. Handbook of sustainability assessment. Cheltenham (UK): Edward Elgar; p. 235–264.

- Gislason MK, Morgan VS, Mitchell-Foster K, Parkes MW. 2018. Voices from the landscape: storytelling as emergent counter-narratives and collective action from northern BC watersheds. Health & Place. 54:191–199. doi: 10.1016/j.healthplace.2018.08.024.

- Goater S, Goater R, Goater I, Kirsch P. 2012. This life of mine – personal reflections on the well-being of the contracted fly-in, fly-out workforce. Proceedings from the Eighth AUSIMM Open Pit Operators’ Conference; [accessed 12 Jan 2024]; Perth, Western Australia. https://www.academia.edu/download/33505950/Goater_2012_This_life_of_mine_AUSIMM.pdf.

- González A, Gilmer A, Foley R, Sweeney J, Fry J. 2008. Technology-aided participative methods in environmental assessment: an international perspective. Comput Environ Urban Syst. 32(4):303–316. doi: 10.1016/j.compenvurbsys.2008.02.001.

- Grant MJ, Booth A. 2009. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J. 26(2):91–108. doi: 10.1111/j.1471-1842.2009.00848.x.

- Greenhalgh T, Peacock R. 2005. Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ. 331(7524):1064–1065. doi: 10.1136/bmj.38636.593461.68.

- Hanna K, Noble B. 2015. Using a Delphi study to identify effectiveness criteria for environmental assessment. Impact Assess Proj Apprais. 33(2):116–125. doi: 10.1080/14615517.2014.992672.

- Jenkins J. 2017. Rare earth at Bearlodge: anthropocentric and biocentric perspectives of mining development in a multiple use landscape. J Environ Stud Sci. 7:189–199.

- Joly TL, Longley H, Wells C, Gerbrandt J. 2018. Ethnographic refusal in traditional land use mapping: consultation, impact assessment, and sovereignty in the Athabasca oil sands region. Extr Ind Soc. 5(2):335–343. doi: 10.1016/j.exis.2018.03.002.

- Jones M, Morrison-Saunders A. 2016. Making sense of significance in environmental impact assessment. Impact Assess Proj Apprais. 34(1):87–93. doi: 10.1080/14615517.2015.1125643.

- Kørnøv L, Thissen W. 2000. Rationality in decision- and policy-making: implications for strategic environmental assessment. Impact Assess Proj Apprais. 18(3):191–200. doi: 10.3152/147154600781767402.

- Kwan M-P, Ding G. 2008. Geo-narrative: extending geographic information systems for narrative analysis in qualitative and mixed-method research. Prof Geogr. 60(4):443–465. doi: 10.1080/00330120802211752.

- Lang DJ, Wiek A, Bergmann M, Stauffacher M, Martens P, Moll P, Swilling M, Thomas CJ. 2012. Transdisciplinary research in sustainability science: practice, principles, and challenges. Sustainability Sci. 7(S1):25–43. doi: 10.1007/s11625-011-0149-x.

- Leavy P. 2017. Research design: quantitative, mixed methods, arts-based, and community-based participatory research approaches. New York (NY): The Guilford Press.

- Moen T. 2006. Reflections on the narrative research approach. Int J Qual Methods. 5(4):56–59. doi: 10.1177/160940690600500405.

- Morgan DL. 1996. Focus groups. Annu Rev Sociol. 22(1):129–152. doi: 10.1146/annurev.soc.22.1.129.

- Morris A. 2015. A practical guide to in-depth interviewing. London: SAGE Publications.

- Narayanasamy N. 2008. Participatory rural appraisal: principles, methods and application. London: SAGE Publications.

- Nchanji YK, Levang P, Jalonen R. 2017. Learning to select and apply qualitative and participatory methods in natural resource management research: self-critical assessment of research in Cameroon. Forests Trees Livelihoods. 26(1):47–64. doi: 10.1080/14728028.2016.1246980.

- Neuman WL. 2014. Social research methods: qualitative and quantitative approaches. 7th ed. Harlow: Pearson Education Limited.

- Nitz T, Brown A. 2001. SEA must learn how policy making works. J Environ Assess Policy Manag. 3(3):329–342. doi: 10.1142/S146433320100073X.

- Ørngreen R, Levinsen K. 2017. Workshops as a research methodology. Electron J e-Learn. 15(1):70–81.

- Owens S, Rayner T, Bina O. 2004. New agendas for appraisal: reflections on theory, practice, and research. Environ Plan A. 36(11):1943–1959. doi: 10.1068/a36281.

- Perdicoúlis A, Glasson J. 2006. Causal networks in EIA. Environ Impact Assess Rev. 26(6):553–569. doi: 10.1016/j.eiar.2006.04.004.

- Reed MS, Kenter J, Bonn A, Broad K, Burt TP, Fazey IR, Fraser EDG, Hubacek K, Nainggolan D, Quinn CH, et al. 2013. Participatory scenario development for environmental management: a methodological framework illustrated with experience from the UK uplands. J Environ Manag. 128:345–362. doi: 10.1016/j.jenvman.2013.05.016.

- Retief F, Morrison-Saunders A, Geneletti D, Pope J. 2013. Exploring the psychology of trade-off decision making in EIA. Impact Assess Proj Apprais. 31(1):13–23. doi: 10.1080/14615517.2013.768007.

- Revilla M, Ochoa C. 2017. Ideal and maximum length for a web survey. Int J Mark Res. 59(5):557–565.

- Richey JS, Horner RR, Mar BW. 1985. The Delphi technique in environmental assessment II. Consensus on critical issues in environmental monitoring program design. J Environ Manag. 21(2):147–159.

- Ritchie J, Lewis J. 2003. Qualitative research practice: a guide for social science students and researchers. London: Sage.

- Ross H. 1989. Community social impact assessment: a cumulative study in the Turkey Creek area, Western Australia. East Kimberley working paper No. 27. [accessed 12 Jan 2024]. https://library.dbca.wa.gov.au/static/Journals/081519/081519-27.pdf.

- Rounsevell MDA, Metzger MJ. 2010. Developing qualitative scenario storylines for environmental change assessment. Wiley Interdiscip Rev Clim Change. 1(4):606–619. doi: 10.1002/wcc.63.

- Satterfield T, Gregory R, Klain S, Roberts M, Chan KM. 2013. Culture, intangibles and metrics in environmental management. J Environ Manag. 117:103–114. doi: 10.1016/j.jenvman.2012.11.033.

- Savin-Baden M, Major CH. 2013. Qualitative research: the essential guide to theory and practice. London: Routledge.

- Schieffer A, Isaacs D, Gyllenpalm B. 2004. The world café: part one. World. 18(8):1–9.

- Shopley J, Sowman M, Fuggle R. 1990. Extending the capability of the component interaction matrix as a technique for addressing secondary impacts in environmental assessment. J Environ Manag. 31(3):197–213. doi: 10.1016/S0301-4797(05)80034-9.

- Sieber R. 2006. Public participation geographic information systems: a literature review and framework. Ann Assoc Am Geogr. 96(3):491–507. doi: 10.1111/j.1467-8306.2006.00702.x.

- Sinclair AJ, Diduck AP, Vespa M. 2015. Public participation in sustainability assessment: essential elements, practical challenges and emerging directions. In: Morrison-Saunders A, Pope J, Bond A, editors. Handbook of sustainability assessment. Cheltenham (UK): Edward Elgar; p. 349–374.

- Sinclair AJ, Doelle M, Gibson RB. 2018. Implementing next generation assessment: a case example of a global challenge. Environ Impact Assess Rev. 72:166–176. doi: 10.1016/j.eiar.2018.06.004.

- Sinclair AJ, Doelle M, Gibson RB. 2021. Next generation impact assessment: exploring key components. Impact Assess Proj Apprais. 40(1):3–19. doi: 10.1080/14615517.2021.1945891.

- Spaling H, Montes J, Sinclair AJ. 2011. Best practices for promoting participation and learning for sustainability: lessons from community-based environmental assessment in Kenya and Tanzania. J Environ Assess Policy Manag. 13(3):343–366. doi: 10.1142/S1464333211003924.

- Tang Z, Liu T. 2016. Evaluating internet-based public participation GIS (PPGIS) and volunteered geographic information (VGI) in environmental planning and management. J Environ Plann Manage. 59(6):1073–1090. doi: 10.1080/09640568.2015.1054477.

- Te Boveldt G, Keseru I, Macharis C. 2021. How can multi-criteria analysis support deliberative spatial planning? A critical review of methods and participatory frameworks. Evaluation. 27(4):492–509. doi: 10.1177/13563890211020334.

- Tight M. 2019. Documentary research in the social sciences. London: SAGE Publications.

- Tobias TN. 2014. Research design and data collection for land use and occupancy mapping. SPC Tradit Mar Resource Manag Knowl Inf Bull. 33:13–25.

- Toth FL. 2001. Participatory integrated assessment methods: an assessment of their usefulness to the European environmental agency. European Environment Agency; [accessed 12 Jan 2024]. https://www.eea.europa.eu/publications/Technical_report_no_64/page001.html.

- UK Government. 2017. Futures toolkit: tools for strategic futures for policy-makers and analysts. Cabinet Office and Government Office for Science; [accessed 12 Jan 2024]. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/674209/futures-toolkit-edition-1.pdf.

- Vanclay F. 2015. Qualitative methods in regional program evaluation: an examination of the story-based approach. In: Karlsson C, Andersson M, Norman T, editors. Handbook of research methods and applications in economic geography. Cheltenham: Edward Elgar Publishing; p. 544–570.

- Vanclay F, Esteves AM. 2024. Handbook of social impact assessment and management. Cheltenham: Edward Elgar Publishing.

- Van Riper CJ, Foelske L, Kuwayama SD, Keller R, Johnson D. 2020. Understanding the role of local knowledge in the spatial dynamics of social values expressed by stakeholders. Appl Geogr. 123:102279. doi: 10.1016/j.apgeog.2020.102279.

- Vitous CA, Zarger R. 2020. Visual narratives: exploring the impacts of tourism development in Placencia, Belize. Ann Anthropol Pract. 44(1):104–118. doi: 10.1111/napa.12135.

- Walker H, Pope J, Sinclair J, Bond A, Diduck A. 2023. Qualitative methods for the next generation of impact assessment. Report submitted to: Impact Assessment Agency of Canada, University of Manitoba; [accessed 11 Jan 2024]. https://mspace.lib.umanitoba.ca/items/40910784-63ad-4b1a-a330-158929aa7a6d.

- Wathern P. 1984. Ecological modelling in impact analysis. In: Roberts RD, Roberts TM, editors. Planning and ecology. London and New York (NY): Chapman and Hall; p. 80–98.

- Wiklund H, Viklund P. 2006. Public deliberation in strategic environmental assessment: an experiment with citizens’ juries in energy planning. In: Emmelin L, editor. Effective environmental assessment tools – critical reflections on concepts and practice. Karlskrona, Sweden: Blekinge Institute of Technology; p. 44–59.

- Wood G, Rodriguez-Bachiller A, Becker J. 2007. Fuzzy sets and simulated environmental change: evaluating and communicating impact significance in environmental impact assessment. Environ Plan A. 39(4):810–829. doi: 10.1068/a3878.

- Zadeh LA. 1965. Fuzzy sets. Inf Control. 8(3):338–353. doi: 10.1016/S0019-9958(65)90241-X.