ABSTRACT

During the coronavirus pandemic, conspiracy theories and dubious health guidance about COVID-19 led to public debates about the role and impact of blatant acts of disinformation. But less attention has been paid to the broader media environment, which is how most people understood the crisis and how it was handled by different governments.

Drawing on a news diary study of 200 participants during the pandemic, we found they easily identified examples of “fake news” but were less aware of relevant facts that might help them understand how the UK government managed the crisis. Our content analysis of 1259 television news items revealed broadcasters did not routinely draw on statistics to contextualise the UK’s record of managing the coronavirus or regularly make comparisons with other countries. Given television news bulletins were the dominant news source for many people in the UK, we suggest the information environment gave audiences limited opportunities to understand the government’s performance internationally.

We argue that misinformation is often a symptom of editorial choices in media coverage—including television news produced by public service broadcasters—that can lead to gaps in public knowledge. We conclude by suggesting the concept of the information environment should play a more prominent role in studies that explore the causes of misinformation.

During the coronavirus pandemic, there were heightened concerns about false or misleading information (Poynter 2020). Not long after the virus spread, studies revealed the widespread circulation of so-called “fake news” about the pandemic across many countries (Graham et al. 2020). In particular, scholars drew attention to dubious health guidance and wild conspiracy theories. In doing so, misinformation and disinformation became widely used concepts to represent the spreading of false information (Pamment 2020). But while they are often used interchangeably, misinformation, as the spreading of false or inaccurate information, is conceptually distinct from disinformation, which describes the dissemination of deliberately false information (Wardle 2018).

Our aim in this study is to explore how the public made sense of the coronavirus pandemic within the UK’s information environment, and to enter into debates about the influence of disinformation and misinformation during the pandemic. We draw on the concept of the “information environment” (Jerit, Jason, and Toby 2006, 266) to consider the kind of news people were likely to have been exposed to in order to assess public understanding. Two complementary methods were used to explore the relationship between public knowledge and the information environment. First, a six-week online news diary with 200 participants was conducted in order to consider how the information environment, at a critical point in the pandemic, was shaping their knowledge and understanding of COVID-19, as well as of how the UK government was managing the crisis. Second, to assess the information respondents would have likely encountered when completing the diaries, we carried out a four-week systematic content analysis study of UK television news bulletins. The television news sample generated 1259 news items about the pandemic. Given television news in the UK is a largely trusted and impartial information source (Ofcom 2020a), we did not expect to find the blatant instances of disinformation present on online or social media sites. In fact, we found a few stories in the television sample debunking conspiracy theories and false information. Instead, our analysis centred on assessing the extent to which television news provided information about how the UK handled the pandemic compared to other nations. By assessing our news diary participants’ understanding of disinformation, we first established how knowledgeable they were about blatant instances of false news. We then explored their cross-national understanding of how different nations governed the health crisis.
After all, given the pandemic was global in scope, if the public were exposed to an information environment that regularly compared the UK’s management of the crisis with other nations, it would have enhanced the public’s ability to evaluate the government’s decision-making. We focus specifically on, first, comparative international data, including measures of the death toll across different countries and, second, the role of statistics in news reporting, because understanding how these issues were reported matters to public knowledge. This is important because the public might behave or respond differently depending on their relevant knowledge. For example, how well informed people are might affect their perception of how widely the virus is circulating in the country, or their level of compliance with the government’s measures. Furthermore, public knowledge about the government’s performance provides an indication of how the public relates to and evaluates the government in a moment of crisis.

Our study contributes to broader debates in journalism studies about the relationship between public knowledge and the information environment. By establishing how (mis)informed audiences were during the pandemic, we critically assess how well broadcasters communicated news about the UK government’s governance of the crisis. We conclude by suggesting the concept of the information environment can help scholars better understand the causes of misinformation.

Misinformation, Public Knowledge and Television News

In debates about the communication of the coronavirus pandemic, the media have often centred their attention on conspiracy theories, such as the belief that the virus originated in a lab, or blatant acts of disinformation including myths about home remedies that can cure the disease (Mian and Khan 2020). Popular among these theories has been the claim that 5G technology is responsible for the transmission of the disease, which in the UK led to arson attacks on cell phone towers in April 2020 (Hamilton 2020). The circulation of such fake information has raised concerns about undermining people’s understanding of the crisis.

The media focus on the circulation of so-called “fake news” and conspiracy theories about the pandemic represents a continuation of existing debates about the dangers of misinformation and disinformation, and their persuasive effects on public behaviour. This preoccupation with “fake news”, spread either by political figures or through social media, is justifiable in the context of “post-truth” politics, whereby fake information can dominate electoral campaigns and political debate. However, a focus on demonstrably false information, in our view, often overshadows the ways mainstream media can contribute to reproducing misinformation. A fast-moving, 24-hour news cycle, for example, has put pressure on journalists to prioritise speed over accuracy (Cushion and Sambrook 2016), while the budgets of many newsrooms have been cut in recent years, limiting the time and resources necessary to routinely scrutinise the facts of a news story. This means journalists might inadvertently reproduce misinformation by not supplying enough analysis or contextual information, an omission that can lead to public confusion. Put more simply, longstanding structural conditions of journalism can allow misinformation and disinformation to thrive, rather than their spread being entirely driven by pernicious online or social media networks.

In order to explore disinformation in the context of the coronavirus pandemic, our study begins by focussing on how aware our diary participants were of blatant instances of false information about COVID-19, which is often the focal point of debates about the information environment. Our study found most participants could identify fake news (e.g., disinformation) but a majority felt confused about the UK’s lockdown measures and specific policies. We therefore decided to focus the content analysis on the UK government’s handling of the crisis, first exploring participants’ knowledge of the death toll in the UK compared to other nations, as well as the different lockdown measures implemented cross-nationally. Without claiming any causal effects, we then examined how and to what extent one of the UK’s main information sources, television news bulletins, covered the UK’s handling of the pandemic internationally. In doing so, we can explore the relationship between public knowledge and the information environment in order to identify whether there was any misinformation in people’s understanding of how the UK government handled the pandemic.

There is, of course, a voluminous academic literature across many disciplines that has explored public knowledge about social, political and economic issues (Carpini, Michael, and Keeter 1996). Our interest is in not just exploring factual questions about political knowledge, such as being able to name a local politician, but in more complex debates about people’s understanding of policy issues, which has long been part of interpreting political knowledge in public opinion research (Lewis 2001). We did not expect the public to have precise knowledge about, say, the number of people who had died at a specific point in the pandemic or how the lockdown measures were different in the UK compared to other nations. Instead, we considered more broadly whether the public was knowledgeable about the UK’s comparative handling of the pandemic by exploring if they thought other countries had lower or higher death tolls, or if their lockdowns were stricter or less stringent. While some of these facts and figures, such as interpreting the cross-national death tolls, were contentious because of contrasting data gathering methods, our study explores how television news reported these issues, as well as assessing the public’s knowledge of them including their attitudes towards journalists’ use of statistics.

As part of this body of political knowledge scholarship, studies examining the relationship between media content and public understanding have grown in number and become more sophisticated (Williams and Michael 2011). In this work, the concept of the “information environment” has become a more prominent way of explaining what people think and why they think that way (Jerit, Jason, and Toby 2006, 266). Put simply, it conveys the informational opportunities the media provide to citizens to understand what is, and is not, happening in the world. There are many different ways scholars have examined how the environment shapes conflicting attitudes. Our interest is in the information environment of public service media, which was a dominant news source widely trusted by people during the pandemic (Ofcom 2020a). Previous cross-national studies of audiences and television news have found nations with a robust public service media system are associated with greater knowledge of the world because they create an information rich environment about hard news topics, such as politics and foreign affairs (Curran et al. 2009; Iyengar et al. 2009).

We contribute to these debates by examining television news bulletins in the UK, most of which are informed by public service values and obligations (Cushion 2021), and assessing the extent to which they provided informational opportunities to learn about the coronavirus and how the government handled it. We explore public knowledge within the information environment in terms of disinformation (fake news stories) and misinformation (misunderstanding of policies). Since our content analysis found very few stories about fake health news on television, we focussed on how the bulletins reported the government’s management of the crisis compared to other nations. In doing so, we can examine the relationship between public knowledge and the information environment by tracing any evidence of disinformation about COVID-19 or misinformation in the UK’s handling of the pandemic compared with other nations.

Our study has three research questions:

RQ1: How knowledgeable were respondents about prominent instances of false COVID-19 information?

RQ2: How well informed were respondents about how the UK government handled the pandemic compared to other nations?

RQ3: To what extent did UK television news bulletins compare and contrast the UK government’s response to the pandemic with other nations?

Method

The diary study of news audiences was conducted at the height of the first wave of the health crisis in the UK (between 16 April and 27 May 2020). The use of this method allowed us to qualitatively explore the range of participants’ understanding of the pandemic at a point in time when they were exposed to the information environment, rather than reflecting back in time (Couldry, Livingstone, and Markham 2007). While we asked specific knowledge-based questions, the diary approach also enabled participants to articulate their understanding in their own words. Finally, it allowed us to explore people’s knowledge at separate points in time. Developing a longitudinal record of people’s understanding represents an important feature of diary research (Couldry, Livingstone, and Markham 2007). However, diary research is limited to a small sample, meaning it does not convey a representative picture of public opinion. The online nature of the study also meant participants self-recorded their responses, thus possibly misinterpreting questions in the process.

We employed the online recruitment agency, Prolific, to recruit a mix of 200 people in the UK. The sample included more women than men (146 vs. 54), more centre to left-wing Labour supporters than centre to right-wing Conservative voters (84 vs. 31) and featured a higher level of younger (under 40) than older (41+) people (159 vs. 39). In terms of education, while one participant had no formal education, 87 had secondary school or A-level qualifications, 17 had a technical or community college degree, 60 had an undergraduate degree, 26 had a postgraduate qualification and five had a doctorate. Four participants did not state their education. Whilst our diary sample was not generally representative of the UK population, it revealed a range of views and provided an in-depth assessment of respondents’ knowledge and understanding at critical moments of the pandemic. Over the six-week period, respondents were asked to complete two diary entries a week (12 in total) on a wide range of issues, from their media consumption habits, to their political views and specific questions about their understanding of the pandemic. In this study, we focus on questions we asked respondents in two time periods (between 16 and 19 April and between 11 and 13 May), which explored their knowledge about fake health stories, the death toll in the UK compared to other countries, and their views about how the media, in particular television news, communicated information about how many people had died due to the coronavirus. This enabled us to explore any changes to participants’ knowledge over an approximately three-week period. Respondents were given either two or three days to complete the diary and were paid £60 for their participation in the study.
Specifically, in entry 1 we asked respondents to rank, from highest to lowest, which countries had implemented the strictest lockdown measures at the time the diary was completed, and which countries had registered the most deaths due to the coronavirus. Our aim was to assess how knowledgeable participants were about the UK’s management of the pandemic compared to other countries. We also showed them a BBC News headline from April 4, 2020, which read “Mast Fire Probe Amid 5G coronavirus claims” and then asked what role they thought, if any, 5G had played in spreading the coronavirus. Our intention was to explore the degree to which respondents believed a specific disinformation story. Three weeks later we then asked respondents about some of the blatant instances of disinformation and asked them to state if they thought they were accurate or false. We drew on Ofcom’s (2020a) surveys and media coverage to develop a list of the most prominent claims people had seen or heard. Unlike many studies that recorded exposure to such claims, we also explored whether respondents thought these claims were true or not.

As we identified some misconceptions in respondents’ knowledge about the UK government’s comparative international performance during the pandemic in the first diary entry, three weeks later we explored their knowledge again about death tolls and lockdowns, as well as their more general views on media coverage of these topics. Once again, we asked them to rank, at this point in time, which countries had implemented the strictest lockdown measures so far and which countries had registered the most deaths due to the coronavirus. We also asked them to rank, from highest to lowest, which countries had the highest excess death rate, since it was seen by many scientists as the most reliable metric to compare the death rates of countries. Finally, we invited respondents to more generally reflect on how they thought the media had reported the numbers of deaths related to COVID-19, including whether they thought television news bulletins had fairly or unfairly covered the UK’s figures compared to other countries. We analysed the qualitative data thematically, reading through all comments several times, identifying recurring themes and connecting them throughout the different diary entries. Three themes were particularly prominent in the diary entries explored here, namely misunderstandings expressed by participants in relation to the pandemic, criticisms of media coverage and criticisms of the government.

In entry 1 we had 200 respondents in the diary study, with 170 still actively providing responses in entry 8. At these two points in time, we asked respondents about their media consumption habits, including specifically about whether they watched news every day, most days, once or twice a week or not at all. In entry 1, 159 participants (about eight in 10) watched TV news every day or most days in the last week. Seven out of 10 indicated they watched and trusted BBC news, which was a higher proportion than other television news bulletins. But just before entry 8, consumption fell to almost six in 10 respondents watching TV every day or most days in the last week. The BBC remained the most watched and trusted broadcaster, with half of respondents watching it every day or most days in the last week. Throughout the pandemic, Ofcom (2020a) conducted representative surveys about the news consumption habits of people in the UK. Our diary respondents were in line with Ofcom’s representative surveys taken at a similar point in time, with television news one of the most trusted and consumed sources of news generally and the BBC specifically. Our sample, in other words, represented a demographic mix of news audiences who relied to a large extent on television news bulletins, especially the BBC, during the health crisis. Of course, TV was not the only source used for information about the health crisis, with many participants also relying to a large extent on online news and social media.
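
The proportions quoted in this section follow directly from the reported counts. As a minimal sketch, the arithmetic can be reproduced as below. The counts are those stated in the Method section; the variable and function names are our own illustrative assumptions and not part of any study instrument.

```python
# Counts as reported in the Method section; names are illustrative only.
SAMPLE_SIZE = 200         # respondents in diary entry 1
ACTIVE_AT_ENTRY_8 = 170   # respondents still providing responses in entry 8
TV_NEWS_REGULAR = 159     # watched TV news every day or most days (entry 1)

# Education categories reported for the diary sample.
EDUCATION_COUNTS = {
    "no formal education": 1,
    "secondary school or A-level": 87,
    "technical or community college": 17,
    "undergraduate degree": 60,
    "postgraduate qualification": 26,
    "doctorate": 5,
    "not stated": 4,
}


def share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


retention = share(ACTIVE_AT_ENTRY_8, SAMPLE_SIZE)
tv_regular = share(TV_NEWS_REGULAR, SAMPLE_SIZE)
education_total = sum(EDUCATION_COUNTS.values())

print(f"Retention at entry 8: {retention}%")
print(f"Regular TV news viewers (entry 1): {tv_regular}%")
print(f"Education categories sum to: {education_total}")
```

The regular-viewer share of 79.5% corresponds to the "about eight in 10" reported above, and the education categories account for all 200 participants.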

Given television news was a dominant source of information for our respondents during the pandemic, the content analysis of TV bulletins explored the kind of coverage they were likely to have encountered while completing the diaries. While we cannot isolate cause and effect given the number of news sources respondents drew on, at the very least the content analysis can help us understand the supply of information they were most exposed to—and trusted most—when responding to questions in the diary study. So, for example, when we explored people’s international comparative knowledge about the pandemic, our content analysis can reveal the degree to which different countries appeared in coverage including every statistical reference related to the death rate. We drew on content analysis as a method because it can systematically evaluate coverage across broadcasters and over time, objectively assessing the inclusion and exclusion of information about the pandemic. It was beyond the scope of the study to examine coverage across a wider range of online and social media platforms. But future research could explore whether different types of media reported differently from television news bulletins.

The sample included five major evening bulletins (the BBC News at Ten, ITV News at Ten, Sky News at Ten, Channel 4 at 7pm and Channel 5 at 5pm) over a period of four weeks (14 April–10 May 2020 excluding Easter Monday). We included Sky News in our sample of public service media because of its impartial reputation for producing high quality journalism (Cushion 2021). In order to develop a fair comparative assessment for Channel 4 we only coded the first 25 minutes of its nightly bulletin. It should also be noted that the weekend editions of Channel 5 and ITV were relatively short in length (5 and 15–20 minutes respectively) compared to the other bulletins (typically 20 minutes). The unit of analysis was every coronavirus-related television item. By item we refer to each news convention rather than story (e.g., a stand-alone anchor only item, edited package, live two way and studio interview/discussion).

The content analysis study generated 1259 items across five broadcasters, which were coded by two researchers. The differences in items across broadcasters can be explained not just by the number of items reported, but also by the length of airtime granted to coverage of the pandemic. Items on Channel 4 and Sky News, for example, tended to be longer than on other bulletins, which is why there were fewer of them overall (see Table 1 and Footnote 1).

Table 1. The percentage of news items in the sample across different UK news bulletins.

The content analysis drew on eight variables. First, it recorded the type of television news convention, such as live two-way or an edited package. Second, it assessed whether a news item was predominantly about the pandemic or not. Third, it recorded the geographical focus of the item (UK or international). Fourth, it asked whether a UK item featured any international comparison. Fifth, it recorded the country mentioned for comparison. Sixth, it classified whether the comparison was made in passing or gave more detailed context. Seventh, it asked whether the item featured any visual statistics. Finally, it recorded the nation(s) compared visually with statistics.
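
To make the coding frame concrete, the eight variables can be pictured as a single record per news item. The following is only an illustrative sketch: the class name, field names, category labels and validation rules are our own assumptions for exposition and do not reproduce the actual coding sheet used in the study.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CodedItem:
    """One coronavirus-related news item coded on the eight variables (hypothetical layout)."""
    convention: str                                     # 1. e.g. "edited package", "live two-way"
    mainly_about_pandemic: bool                         # 2. predominantly about the pandemic?
    geographic_focus: str                               # 3. "UK" or "international"
    has_international_comparison: Optional[bool] = None # 4. coded for UK items
    comparison_countries: List[str] = field(default_factory=list)       # 5. country or countries mentioned
    comparison_depth: Optional[str] = None              # 6. "passing" or "detailed"
    has_visual_statistics: bool = False                 # 7. any on-screen statistics?
    visual_comparison_nations: List[str] = field(default_factory=list)  # 8. nations compared visually

    def validate(self) -> None:
        """Raise ValueError if the record breaks the assumed category rules."""
        if self.geographic_focus not in {"UK", "international"}:
            raise ValueError("geographic_focus must be 'UK' or 'international'")
        if self.comparison_depth not in {None, "passing", "detailed"}:
            raise ValueError("comparison_depth must be 'passing' or 'detailed'")
        if self.comparison_countries and not self.has_international_comparison:
            raise ValueError("countries listed but no comparison recorded")


# A made-up item, not drawn from the sample.
example = CodedItem(
    convention="edited package",
    mainly_about_pandemic=True,
    geographic_focus="UK",
    has_international_comparison=True,
    comparison_countries=["Italy"],
    comparison_depth="detailed",
)
example.validate()
print(example)
```

Keeping the comparison fields optional reflects the fact that variables four to six only apply when a UK item draws an international comparison.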

After re-coding approximately 10% of the sample, all variables achieved a high level of intercoder reliability according to Cohen’s Kappa (CK). For news convention, the level of agreement (LoA) was 99.3% and 0.99 CK; for international comparisons, the LoA was 99.3% and 0.95 CK; for the country mentioned in comparison, 84.6% LoA and 0.83 CK; and for the extent of comparison, 84.6% LoA and 0.81 CK. For the use of visual statistics, including international comparisons, the LoA was 97.9% and 0.92 CK. There were no disagreements for geographical focus or whether the dominant theme of the item was about COVID-19 or not.
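
For readers less familiar with these measures, the level of agreement is the share of items on which two coders assigned the same code, while Cohen's Kappa corrects that share for the agreement expected by chance. The sketch below computes both for ten invented codes (not data from our sample); the function names are illustrative and it is not the software used in the study.

```python
from collections import Counter
from typing import Hashable, Sequence


def level_of_agreement(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Proportion of items on which both coders assigned the same code."""
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's Kappa: (p_o - p_e) / (1 - p_e) for two coders."""
    n = len(coder_a)
    p_o = level_of_agreement(coder_a, coder_b)
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: sum over categories of the product of marginal proportions.
    categories = set(counts_a) | set(counts_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_e == 1:
        return 1.0  # both coders used a single identical category throughout
    return (p_o - p_e) / (1 - p_e)


# Invented codes for "does the item feature an international comparison?"
coder_a = ["yes", "yes", "yes", "yes", "no", "no", "no", "no", "yes", "no"]
coder_b = ["yes", "yes", "yes", "no", "no", "no", "no", "no", "yes", "no"]

loa = level_of_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
print(f"LoA: {loa:.1%}, CK: {kappa:.2f}")
```

With these invented codes the coders agree on nine of ten items (an LoA of 90%), and a chance agreement of 50% gives a Kappa of 0.8.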

Fake News or Not?

We began by asking whether respondents could identify examples of COVID-19 related disinformation that had no scientific credibility. In the first diary entry, respondents were asked in an open-ended question what role, if any, they thought 5G had played in spreading the coronavirus. We posed the question underneath a BBC News headline that read: “BBC News—Mast fire probe amid 5G coronavirus claims”. This was used as an example from BBC coverage because the headline arguably helped legitimatise the scientific credibility of 5G spreading the virus by framing it as “5G coronavirus claims” rather than explicitly labelling them false. In other words, the framing of the story from the most trusted source of news in the UK might encourage our respondents to believe the false claims.

Overwhelmingly, however, respondents rejected any connection between 5G and the coronavirus in spite of the BBC news story, with just three out of 200 hinting at a possible connection. In explaining why, many indicated they had encountered it as disinformation because the media, including in BBC news coverage, had drawn attention to these false claims in recent weeks. For example, our content analysis revealed that two days before we put this question to participants, Channel 5 News dedicated an entire item to exploring this issue because Ofcom—the UK’s media regulator—was investigating complaints after the claim was given some legitimacy on a television programme. Even a cursory search in online news showed many UK news providers reported this as a fake news story in April 2020. Given respondents clearly recognised the 5G claim as false, this suggests the media were effective in countering this disinformation.

In order to follow up on participants’ ability to recognise disinformation about the coronavirus, three weeks later we explored their knowledge about false information more comprehensively. At that time, some media reports were raising concerns about the influence “fake news” was having on the public (Wright 2020), highlighting new representative surveys that showed many people had been exposed to disinformation (Ofcom 2020b). Less clear, however, was the kind of false or misleading information they had been exposed to or where it had come from. Moreover, behind the many headlines there appeared to be an assumption that encountering disinformation would affect public understanding of the pandemic.

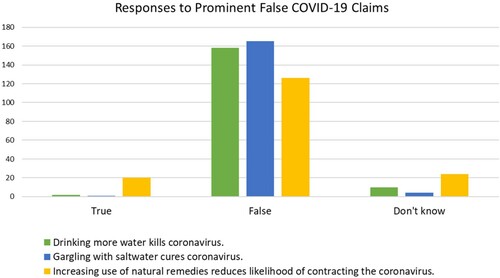

In light of this information environment, we selected some of the most prominent false claims associated with COVID-19 disinformation (Ofcom 2020a) and asked participants to state if they were true, false or if they did not know. We did so in the context of asking other questions about the pandemic, such as whether the UK government had met its target for testing people with suspected coronavirus. As Figure 1 shows, the vast majority of respondents rightly said that drinking more water does not kill the coronavirus, that gargling with saltwater was not a cure for COVID-19, and that increasing use of natural remedies did not help reduce the risk of contracting coronavirus. While “fake news” was easily spotted, however, participants were less aware of facts that may help them understand how the pandemic was being handled by the UK government. So, for example, three in 10 respondents did not know the government had failed to regularly meet its testing targets, while almost a third did not realise that living in more deprived areas of the UK increased the likelihood of catching the coronavirus. In other words, the vast majority of our respondents rejected scientific disinformation about COVID-19. But they appeared to be more susceptible to what might be described as misinformation about the impact of the pandemic in society or the UK government’s handling of the crisis.

We now further develop our analysis of public knowledge during the pandemic by asking respondents more specifically about the UK’s record on managing the health crisis compared to other nations. In doing so, we explore respondents’ (mis)understanding about the UK’s comparative response to the crisis internationally and identify any sources of misinformation about the UK government’s handling of COVID-19.

UK Government’s Handling of the Health Crisis

In order to interpret how well people were informed about the pandemic, our opening diary entry asked respondents to rank the UK’s death rate compared to other countries, along with which nations had implemented the strictest lockdown measures. In our view, these are important facts the public should be broadly informed about in order to understand the UK government’s relative performance and decision-making. It does not mean they need to know the technical nuances of national governmental policy-making. But if respondents believed the UK had a comparatively low death rate and implemented the strictest public lockdown (neither of which was true) it might follow that they think the government’s handling of the crisis was proportionate. Since the pandemic was global, establishing people’s comparative knowledge about how different governments handled the crisis can help us evaluate their understanding of the UK government’s performance. Public knowledge at this time could also influence how people in the UK behaved at a pivotal moment in the pandemic, such as the degree to which they accepted governmental decisions, and followed health guidance and lockdown measures.

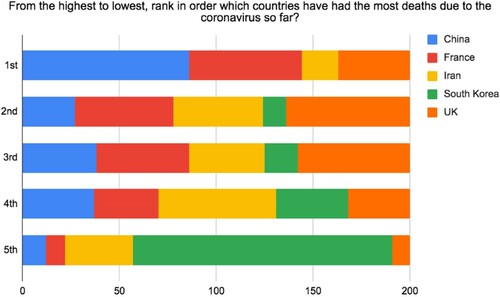

We asked respondents to rank which country—Iran, South Korea, the UK, France and China—had, at that point in time, recorded the highest to lowest number of deaths related to the coronavirus. We selected these countries to reflect a range of nations that, in our assessment, had featured either prominently or marginally in the news in the weeks and months before the diary entry was completed. China, for example, was central to coverage when the pandemic began, while South Korea attracted attention because of its lockdown response. France, and Iran especially, appeared far less newsworthy.

As Figure 2 shows, we found that more than four in 10 of our respondents (43%) incorrectly ranked China as having the highest death rate. This was perhaps understandable because of the media focus on China. But at the time of posing this question, 4632 people had died due to the coronavirus in China, compared to three to four times more in France and the UK respectively. Nearly a third of our participants also mistakenly ranked Iran as having either the highest or second highest death rate. This is despite the fact that Iran had recorded just over 300 more fatalities than China. Of the five countries overall, it was only South Korea that recorded fewer deaths than China.

Figure 2. From the highest to the lowest, the rank order of nations who have recorded the most deaths due to coronavirus according to diary respondents (Entry 1).

The accuracy of official figures about Covid-related death tolls across different nations has been subject to much debate. It has been suggested that some countries, notably China, have under-reported the death rate. But there have also been concerns about the UK government’s figures. At the start of the pandemic, the UK government’s COVID-19 death rate only included data from hospitals, excluding care or nursing homes. According to Discombe (2020), the UK would have had a higher death rate than France at the time we asked respondents if its official figures had included COVID-19 related fatalities beyond hospitals. But, as Figure 2 revealed, respondents were not widely aware the UK had a relatively high death rate. Approximately half of them (49.5%) did not rank the UK as having either the first or second highest death rate. That many people did not appreciate the full scale of coronavirus-related deaths in the UK compared to other countries has significant democratic implications. As Discombe (2020) argued at the time the diary study was completed: “There is huge public debate over how the UK is faring in terms of deaths compared to other European nations and the government and its advisers have constantly referred to the ‘global death comparison’ data to defend their position”. Since the public was not widely aware of the relative death toll differences, they arguably had a limited understanding about how the UK government was handling the pandemic at this point in time.

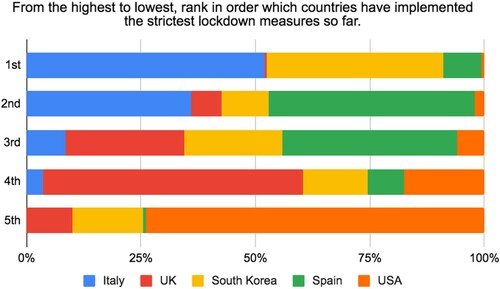

To further explore public knowledge about the UK’s handling of the pandemic, we asked respondents to rank which country had implemented the strictest lockdown measures from highest to lowest. Our interpretation of the relative strictness of lockdown measures was determined by a University of Oxford study (2020), which operationalised national initiatives according to a set of criteria. They concluded that Italy, followed by Spain, South Korea, the UK and US had implemented the most stringent lockdowns at the time we asked respondents. We found that, broadly speaking, respondents correctly identified Italy and Spain as having the strictest lockdowns, while also stating that the US had the lightest measures (see Figure 3).

Figure 3. From highest to lowest, the rank order of nations implementing the strictest lockdown measures according to diary respondents.

At the same time, nearly half of all respondents (49%) ranked South Korea as the country that implemented the first or second strictest national lockdown. This misconception may have been cultivated by South Korea’s early interventions, including wide-scale testing, GPS tracking and quarantine. Unlike European countries, it did not implement tough curfews or limit the workforce to essential services. This suggests many of our respondents were able to identify Italy and Spain as having stricter lockdowns, but did not fully comprehend how different countries comparatively dealt with the crisis. As our analysis of television news demonstrates, broadcasters tended to focus on domestic rather than international news coverage about the lockdown. Given the severity of the pandemic at this point in time, many participants were also perhaps less attentive to international affairs and more focussed on domestic news.

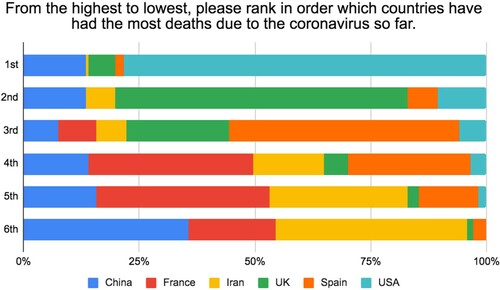

Our assessment of how respondents comparatively understood the UK government’s handling of the health crisis was followed up one month later when we asked them to again rank the UK’s relative death toll compared to other nations. We did so after the UK government had decided to stop publishing the international comparative death rate in its daily briefings from May 12. This received some critical media attention, with headlines and commentators suggesting it was an attempt to cover up the UK’s handling of the pandemic compared to other nations (Jones 2020). We put the same questions to our participants between May 11 and 13, asking them to rank from the highest to lowest the countries which had the most deaths due to the coronavirus, and found that their knowledge about the UK’s relative death toll had increased over time (see Figure 4). Rather than a majority ranking China as having the first or second highest death toll, participants rightly named the US first, while two-thirds also correctly stated the UK was second. This suggests public knowledge improved over time.

Figure 4. From the highest to the lowest, the rank order of nations who have recorded the most deaths due to coronavirus according to diary respondents (Entry 8).

However, during this period of time there were debates about the most credible measure for comparing death rates between countries. After all, the larger the population size of a country, the more likely it is to have higher COVID-19 related fatalities. According to the Financial Times’ data journalist John Burn-Murdoch, who built up over 300,000 followers on Twitter with his daily analysis of the death rate, the “gold-standard for international comparisons of COVID-19 deaths” was the excess death rate. This metric works out the number of additional deaths in a time period compared to the number usually expected. In other words, it looks at the increase in death rates due to the coronavirus and can work out the proportional comparative differences between nations. By that measure, according to the Financial Times (Burn-Murdoch 2020), the UK had the highest excess death rate in the world. It found that the UK’s 42,000 excess fatalities in May 2020 were far higher than the numbers recorded by the US.
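The logic of the excess death metric described above can be illustrated with a minimal sketch. It assumes the simplest reading of the definition: excess deaths are observed deaths minus the number usually expected for the period, and the proportional figure expresses that excess as a percentage of the expected baseline. The class name, function names and the two sets of figures are hypothetical and chosen only for illustration; they are not the Financial Times’ data or necessarily its method.

```python
from dataclasses import dataclass


@dataclass
class MortalityPeriod:
    """All-cause deaths for one country over one time period."""
    country: str
    observed_deaths: float  # deaths actually registered in the period
    expected_deaths: float  # baseline, e.g. an average of earlier years


def excess_deaths(period: MortalityPeriod) -> float:
    """Additional deaths compared to the number usually expected."""
    return period.observed_deaths - period.expected_deaths


def excess_death_rate(period: MortalityPeriod) -> float:
    """Excess deaths as a percentage of the expected baseline."""
    if period.expected_deaths <= 0:
        raise ValueError("expected_deaths must be positive")
    return 100 * excess_deaths(period) / period.expected_deaths


# Hypothetical figures, not real data: Country B is larger and records
# more excess deaths in absolute terms, but Country A is hit harder
# relative to what would normally be expected.
periods = [
    MortalityPeriod("Country A", observed_deaths=150_000, expected_deaths=100_000),
    MortalityPeriod("Country B", observed_deaths=360_000, expected_deaths=300_000),
]

ranked_by_count = sorted(periods, key=excess_deaths, reverse=True)
ranked_by_rate = sorted(periods, key=excess_death_rate, reverse=True)

for p in ranked_by_rate:
    print(f"{p.country}: {excess_deaths(p):,.0f} excess deaths "
          f"({excess_death_rate(p):.1f}% above expected)")
```

In this illustration the two rankings disagree, which is the reason a proportional measure was seen as a fairer basis for comparing countries with different population sizes than a raw death toll.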

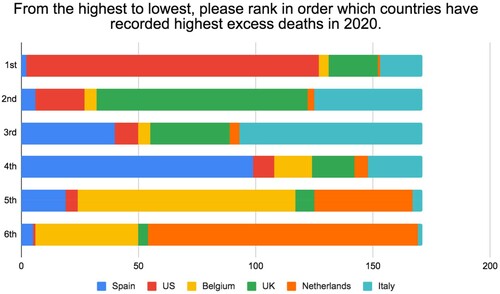

We asked our respondents to rank the excess death rate from a list of countries (the US, UK, Italy, Spain, Netherlands and Belgium) at this point in time. We found an overwhelming majority of our respondents thought the US had the highest excess death rate, while just over one in 10 correctly said the UK had the highest excess death rate (see Figure 5). In fact, almost four in 10 respondents thought the UK had a relatively low excess death rate compared to other countries, placing it fourth, fifth or sixth in our list of six nations.

Figure 5. From the highest to the lowest, the rank order of nations respondents thought had the most excess deaths due to coronavirus.

Overall, we found most respondents had become more informed of the UK’s comparatively high death rate between April and May 2020. As our content analysis shows, the death rate was the most widely conveyed visual statistic across all television news bulletins, so this may have become more widely appreciated over time. But, perhaps more significantly, our respondents did not fully realise the UK had the highest comparative death rate according to the excess death rate, which many scientists consider to be the most credible metric (Lee 2020).

Finally, we asked respondents how they thought television news bulletins had reported the numbers of deaths related to COVID-19 in the UK compared with other countries. Our reading of this qualitative data uncovered widespread public confusion, including mistrust in how the death rate was recorded by the UK government and communicated by journalists. One of the most prominent themes in the responses was participants’ suspicion about UK comparisons with other nations. One observed:

I think originally when they started to announce the deaths on TV, they were only counting people that had died in hospitals which made it very unreliable and unfair. People had started to think the number was lower but these were only what was being accounted for. Then when they introduced deaths that had come from care homes also it hit home as to how real and how high the deaths were!

We also found a significant minority of respondents acknowledged the challenges journalists faced when reporting and comparing complex figures from different countries, but felt they could have been communicated with greater clarity and precision. Some participants explicitly conveyed their mistrust in the use of statistics, with one stating: “I do believe that the actual figures are much higher than what have been reported. I think this could be because of the false data provided to the media from the government.” Another respondent believed that “Parts of TV media have just blindly followed the government and have tried to play down the number of UK deaths.” Notwithstanding the complexity of comparing death rates across nations, some respondents told us they valued them being reported. As one respondent put it:

I think it is fair for TV news bulletins to compare the UK death rate to that of other countries. It enables us to gauge the effect lockdown measures are having in different countries as they have all implemented lockdown differently and to varying degrees.

At this point in time, the UK government announced it would stop including comparative figures about death rates in its regular press briefing (Jones 2020). But our analysis showed many participants wanted journalists to compare and contrast the handling of the pandemic between countries, including analysis about how coronavirus data was generated across different nations. Above all, they wanted journalists to regularly explain the complexity behind statistics so they could be interpreted accurately and fairly.

We now turn to our content analysis of UK television news coverage of the pandemic that examined the degree of domestic and foreign news, the regularity of international comparisons, as well as how comparative death rates were communicated.

International News and Comparative Coverage

Overall, television news about the pandemic was largely focussed on UK domestic issues rather than foreign affairs. As Table 2 shows, 86.7% of items were primarily about the UK compared to 13.3% about international news. However, there were some striking differences between broadcasters. While almost two in 10 BBC News at Ten items were about foreign affairs, for Channel 5 it was fewer than one in 20 items.

Table 2. Percentage proportion of International and UK news items.

Of the 1259 television news items examined about the coronavirus, we found 103 (8.2%) included an international comparison. Table 3 shows all broadcasters focussed more on comparing the UK with other countries than on comparing foreign nations with other countries including the UK.

Table 3. Number of UK and International television news items with international comparison.

The BBC had the most comparative news coverage—with 30 items in total, including a relatively balanced agenda of domestic and international news featuring an international comparison—with Channel 5 producing just 11 items, which had an overwhelming domestic over foreign editorial emphasis. As a proportion of all pandemic coverage, the BBC included an international comparison in one in 10 items compared to one in 20 items on Channel 5. In other words, most of the time evening television news viewers did not encounter stories that compared the UK’s handling of the pandemic with other nations.

We also examined the degree to which different countries were compared by assessing whether comparisons were made in passing or in a more detailed way (see Table 4).

Table 4. The proportion of television UK related news items with detailed or passing references to other countries (N in brackets).

For example, a detailed comparison between nations was provided by the BBC when reporting the UK had the highest death toll in Europe according to the latest government figures. Alongside showing a graph comparing death rates in the UK and Italy, the BBC science editor reported:

The official numbers confirm that Italy has lost 29,315 people, and the UK now slightly more, at 29,427(…) There are important differences between the two countries. The UK has more people than Italy, and London is far bigger than any Italian city. On the other hand, the population of Italy is older and more generations live together, which increases the risk to grandparents. (BBC News at Ten, 5 May 2020, detailed international comparison)

More often, however, broadcasters made far briefer, passing references to other countries, with limited contextual information supporting the comparison. For example, Channel 5 reported that:

Meanwhile teachers [in the UK] question how social distancing could work in schools: morning and afternoon sessions perhaps, like France and Germany from next week. For the UK so far, there is no road map. There is fear of getting it wrong and U-turning back to lockdown. (Channel 5 News at 5, 27 April, in passing international comparison)

The BBC provided the highest proportion of detailed analysis between countries, with well over two in 10 comparisons (22.5%), while for Channel 5 and ITV it was just under two in 10, and approximately one in 10 on Sky News and Channel 4. Overall, in television coverage of the pandemic the vast majority of comparisons with other countries were brief, with limited information or context.

The Comparative Use of Statistics

Overall, 214 news items featured statistics which were visually represented in UK television news coverage of the pandemic. This meant that under one in five news items (17%) included an on-screen statistic. There were, however, some major differences in the use of statistics between broadcasters (see Table 5).

Table 5. The proportion of television news items featuring an on-screen statistic (N in brackets).

Once again, BBC News had the most statistical references, with one in four items including visual coverage of some data or figures, compared to one in five items on Channel 4. Channel 5 had the fewest references to statistics, with well under one in 10 items featuring a figure or data set visually. On BBC, Channel 4 and Sky News, on-screen data about the latest key statistics (for instance, the number of deaths, cases and tests) often punctuated the beginning of each bulletin, but in a relatively brief way. On-screen statistics included line graphs, bar charts, maps, or raw numbers about issues such as mortality rates, new cases, and tests performed.

We further analysed all visually represented statistics across the 214 items and identified 455 in total, with the most on display on BBC News (147), followed by Sky News (135), Channel 4 (74), ITV (65) and Channel 5 (34). Over half of these statistics (241, 53%) related to the death toll, which was the dominant topic used to visually represent figures or data on all broadcasters. We also coded whether the death statistics were presented in comparison with other sets of figures, such as excess mortality or the death toll in other nations. We found that 10% of the death-related statistics referenced “excess deaths” when analysing the impact of the virus in the UK, while most (66%) did not feature any further comparison beyond information concerning daily or weekly death rates. Sky News most frequently included excess deaths figures in its analyses, representing death figures in a more comparative fashion with further variables including UK/international comparisons. Over the four-week study, there were few statistics about infected cases, testing or lockdown measures. With the exception of Sky News, more than seven in 10 statistical references by broadcasters were to raw figures. Six in 10 statistical references on Sky News, by contrast, included a chart, often with more sophisticated statistical elaboration than other broadcasters, such as multi-line graphs examined in studio packages.
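The headline proportions in this part of the content analysis follow directly from the counts reported in the text, and a short sketch can make the arithmetic explicit. The counts below are taken from the article; the variable names and the `share` helper are hypothetical, and rounding to one decimal place is an assumption made for illustration.

```python
# Counts reported in the content analysis.
TOTAL_ITEMS = 1259             # television news items about the pandemic
ITEMS_WITH_COMPARISON = 103    # items including an international comparison
ITEMS_WITH_STATISTIC = 214     # items featuring an on-screen statistic
DEATH_STATISTICS = 241         # on-screen statistics about the death toll
STATISTICS_BY_BROADCASTER = {
    "BBC News": 147,
    "Sky News": 135,
    "Channel 4": 74,
    "ITV": 65,
    "Channel 5": 34,
}


def share(part: int, whole: int, digits: int = 1) -> float:
    """Return part as a percentage of whole, rounded to `digits` places."""
    return round(100 * part / whole, digits)


total_statistics = sum(STATISTICS_BY_BROADCASTER.values())

comparison_share = share(ITEMS_WITH_COMPARISON, TOTAL_ITEMS)
statistic_share = share(ITEMS_WITH_STATISTIC, TOTAL_ITEMS)
death_share = share(DEATH_STATISTICS, total_statistics)

# Each broadcaster's share of all on-screen statistics.
broadcaster_shares = {
    name: share(count, total_statistics)
    for name, count in STATISTICS_BY_BROADCASTER.items()
}

print(total_statistics, comparison_share, statistic_share, death_share)
print(broadcaster_shares)
```

The broadcaster counts sum to the 455 statistics reported, and the three shares reproduce the 8.2%, 17% and 53% figures given in the text. The individually rounded broadcaster shares add up to slightly more than 100%, which is an instance of the rounding effect acknowledged in the Notes.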

Given the complexity of data about the coronavirus and the degree of explanation that is needed to understand some of the figures, as our diary participants acknowledged, we assessed every statistical reference to check if a caveat had been included or not. By that we mean whether a journalist supplied additional background or context to a statistic, such as addressing or clarifying any constraints in the collection or analysis of the data (e.g., “data only includes deaths in hospital” or “there’s a time lag in the data”). We found the BBC was most cautious, with close to three in 10 statistical references reported with some additional clarification compared to just over two in 10 references on Sky News. By contrast, three in 20 statistical references included a caveat on Channel 4, whereas on ITV and Channel 5 fewer than one in 10 references did.

Finally, we examined the geographical relevance of each statistic and established that just over three in 20 (17.4%) were international in scope. The rest focussed mainly on the UK generally, followed by England and Wales, England, and then far fewer references to either Scotland, Wales or Northern Ireland. In sum, fewer than one in five on-screen statistics were international in scope.

Towards a Better Understanding of the Information Environment in Debates About (Mis)informing the Public

Drawing on a news diary study of 200 participants during the height of the pandemic, our study found participants could easily identify a number of “fake news” stories. But they were far less aware of facts that may help them understand how the pandemic was being handled by the UK government, such as whether testing targets were being met or that living in more deprived areas of the UK increased the chances of catching the coronavirus. We also found many participants were unaware that the UK death rate was far higher than that of most other countries. Over time, respondents became more aware of the international death toll, but the majority still did not realise the UK had the highest excess death rate in the world. More generally, participants acknowledged that during the pandemic they were confused by many of the statistics and UK comparisons with other countries.

In order to explore the kind of information most of our respondents encountered when completing the diaries, we also drew on a systematic content analysis of 1259 UK television news items on the pandemic, including every statistical reference about the death toll, during a four-week period when the news diary was undertaken. Overall, we found almost all broadcasters focussed far more on domestic issues about the UK’s handling of the health crisis than comparisons between countries. Moreover, even when another country appeared in domestic coverage, the vast majority of the time it was referenced in passing rather than receiving any sustained comparative coverage. Our study also found the use of data about the pandemic was in short supply, with over four in five items having no supporting visual statistics. Just under one in five of the statistical references that did appear included an international comparison. Despite the complexity of many data sets and figures, most broadcasters did not add any caveats that might help audiences better understand or trust them. When statistics were shown, half were about the death rate, but almost two thirds of these provided no comparative figures with other countries. With perhaps the exception of Sky News, broadcasters granted limited airtime to referencing the excess death rate figure over the four weeks of analysis. In sum, television news reporting during the height of the pandemic did not routinely draw on statistics to put into context the UK’s record of managing the coronavirus, or regularly make comparisons with other countries about how the UK government handled the crisis.

Given the limited information environment, it is perhaps understandable that most respondents did not possess the knowledge to accurately assess the UK’s performance during the pandemic internationally. Taken together, our findings raise a broader normative question long debated in political science about how much the public need to know about political affairs (Lewis 2001). In our view, given many people thought the UK had a relatively low death rate, it follows they might have been misinformed into believing that the government had managed the crisis more effectively than other nations. Outside the UK, for example, the international media were highly critical about how the UK handled the pandemic at this point in time, with comparative data to support their analysis (Wintour 2020).

Overall, our study suggests that while much of the public turned to television news for trusted information at a key point in the pandemic, there were gaps in reporting which may have left audiences confused, as identified by the diary respondents, about the UK’s handling of the crisis internationally. This should not be crudely interpreted as the media being solely responsible for the public’s lack of knowledge about the UK’s death toll or their lack of understanding about how strict the lockdown measures were compared to other countries. There are, of course, many factors that help in raising public understanding of political issues (Delli Carpini and Keeter 1996). We would argue that the broader contribution of our study is to demonstrate that misinformation, or misunderstanding of politics and public affairs, is often a symptom of editorial choices in routine mainstream news reporting, including by public service broadcasters, which can lead to gaps in public knowledge and cause confusion among audiences. Our point is not to single out public service broadcasters. Systematic comparative content studies have shown they provide a more informative agenda of politics and public affairs than many commercial online news outlets (Cushion 2021). Our argument is that even high-quality broadcasters which are legally bound to be impartial and accurate can play a role in spreading misinformation by what they editorially include or exclude in routine coverage. In our view, the concept of the information environment should play a more prominent role in studies theorising and empirically identifying the causes of misinformation. In doing so, it could help explain gaps in people’s knowledge and identify where more background and context to reporting could enhance public understanding. As our study suggests, if broadcasters had provided more detailed cross-country comparisons about how different nations handled the pandemic, it would have opened up more opportunities for viewers to enhance their knowledge and understanding of the UK government’s performance.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Notes

1. Percentages in tables may not add up to 100% due to rounding.

References

- Burn-Murdoch, John. 2020. Comparative Excess Death rate figures on 6 May, 2020 from the Financial Times Source: https://twitter.com/jburnmurdoch.

- Delli Carpini, Michael X., and Scott Keeter. 1996. What Americans Know About Politics and Why It Matters. New Haven: Yale University Press.

- Couldry, Nick, Sonia Livingstone, and Tim Markham. 2007. Media Consumption and Public Engagement: Beyond the Presumption of Attention. Basingstoke: Palgrave Macmillan.

- Curran, James, Shanto Iyengar, Anker Brink Lund, and Inka Salovaara-Moring. 2009. “Media System, Public Knowledge and Democracy: A Comparative Study.” European Journal of Communication 24 (1): 5–26.

- Cushion, Stephen. 2021. “Are Public Service Media Distinctive from the Market? Interpreting the Political Information Environments of BBC and Commercial News in the United Kingdom.” European Journal of Communication. Ifirst.

- Cushion, Stephen, and Richard Sambrook. 2016. Introduction: The Future of 24-Hour News: New Directions, New Challenges. New York: Peter Lang.

- Discombe, Matt. 2020. “Government Has Misled Public Over UK Deaths Being Lower than France.” HSJ, April 14. https://www.hsj.co.uk/coronavirus/government-has-misled-public-over-uk-deaths-being-lower-than-france/7027404.arti.

- Graham, Timothy, Axel Bruns, Guangnan Zhu, and Rod Campbell. 2020. Like a Virus: The Coordinated Spread of Coronavirus Disinformation. Canberra: The Australia Institute.

- Hamilton, Isobel Asher. 2020. ‘77 Cell Phone Towers Have Been Set on Fire so Far Due to a Weird Coronavirus 5G Conspiracy Theory’. Business Insider. Accessed 13 October 2020 https://www.businessinsider.com/77-phone-masts-fire-coronavirus-5g-conspiracy-theory-2020-5.

- Iyengar, S., K. S. Hahn, H. Bonfadelli, and M. Marr. 2009. “‘Dark Areas of Ignorance’ Revisited: Comparing International Affairs Knowledge in Switzerland and the United States.” Communication Research 36 (3): 341–358.

- Jerit, Jennifer, Jason Barabas, and Toby Bolsen. 2006. “Citizens, Knowledge, and the Information Environment.” American Journal of Political Science 50 (2): 266–282.

- Jones, Harrison. 2020. Government ‘Shamelessly Abandons’ Global Comparison of Coronavirus Deaths, The Metro, 12 May.

- Lee, Georgina. 2020. “How Helpful are Coronavirus ‘Excess Deaths’ Figures?” Channel 4 News Fact Check. https://www.channel4.com/news/factcheck/factcheck-how-helpful-are-coronavirus-excess-deaths-figures.

- Lewis, Justin. 2001. Constructing Public Opinion: How Political Elites Do What They Like and Why We Seem to go Along with it. New York: Columbia University Press.

- Mian, Areeb, and Shujhat Khan. 2020. “Coronavirus: The Spread of Misinformation.” BMC Medicine 18 (1): 89.

- Ofcom. 2020a. Covid-19 News and Information: Consumption and Attitudes. Results from Weeks One to Three of Ofcom’s Online Survey. London: Ofcom.

- Ofcom. 2020b. ‘Half of UK Adults Exposed to False Claims About Coronavirus’, 9 April. https://www.ofcom.org.uk/about-ofcom/latest/features-and-news/half-of-uk-adults-exposed-to-false-claims-about-coronavirus.

- Pamment, James. 2020. The EU’s Role in Fighting Disinformation: Taking Back the Initiative. Future Threats, Future Solutions #1.

- Poynter. 2020. ‘Fact-checkers Fighting the COVID-19 Infodemic Drew a Surge in Readers’, 9 June. https://www.poynter.org/fact-checking/2020/fact-checkers-fightingthe-covid-19-infodemic-drew-a-surge-in-readers/.

- University of Oxford. 2020. Coronavirus Government Response Tracker. https://www.bsg.ox.ac.uk/research/research-projects/coronavirus-government-response-tracker.

- Wardle, Claire. 2018. “The Need for Smarter Definitions and Practical, Timely Empirical Research on Information Disorder.” Digital Journalism 6 (8): 951–963.

- Williams, Bruce A., and Michael X. Delli Carpini. 2011. After Broadcast News: Media Regimes, Democracy, and the New Information Environment. Cambridge: Cambridge University Press.

- Wintour, Patrick. 2020. “UK Takes a Pasting from World's Press Over Coronavirus Crisis.” The Guardian, May 12. https://www.theguardian.com/world/2020/may/12/uk-takes-a-pasting-from-worlds-press-over-coronavirus.

- Wright, Mike. 2020. "Survey Reveals Half of People Have Seen Coronavirus Fake News Online". The Telegraph, 9 April.