ABSTRACT

The new commercial-grade Electroencephalography (EEG)-based Brain-Computer Interfaces (BCIs) have led to a phenomenal development of applications across health, entertainment and the arts, while an increasing interest in multi-brain interaction has emerged. In the arts, there are already a number of works that involve the interaction of more than one participant with the use of EEG-based BCIs. However, the field of live brain-computer cinema and mixed-media performances is rather new, compared to installations and music performances that involve multi-brain BCIs. In this context, we present the particular challenges involved. We discuss Enheduanna – A Manifesto of Falling, the first demonstration of a live brain-computer cinema performance that enables the real-time brain-activity interaction of one performer and two audience members; and we take a cognitive perspective on the implementation of a new passive multi-brain EEG-based BCI system to realise our creative concept. This article also presents the preliminary results and future work.

1 Introduction

Since 2007, the introduction of new commercial-grade Electroencephalography (EEG)-based Brain–Computer Interfaces (BCIs) and wireless devices has led to a phenomenal development of applications across health, entertainment and the arts. At the same time, an increasing interest in the brain activity and interaction of multiple participants, referred to as multi-brain interaction (Hasson et al. 2012, 114), has emerged. Artists, musicians and performers have been amongst the pioneers in the design of BCI applications (Nijholt 2015, 316) and while the vast majority of their works use the brain-activity of a single participant, a survey by Nijholt (2015) presents earlier examples that involve multi-brain BCI interaction in installations, computer games and music performances, such as: the Brainwave Drawings (1972) for two participants by Nina Sobell; the music performance Portable Gold and Philosophers’ Stones (Music From the Brains In Fours) (1972) by David Rosenboom, where the brain-activity of four performers was used as creative input; the Alpha Garden (1973) installation and the Brainwave Etch-a-Sketch (1974) drawing game by Jaqueline Humbert, both for two participants.

Nowadays, there is a growing number of works that involve the simultaneous interaction of more than one participant or performer with the use of EEG-based BCIs. The emergence of these applications is not coincidental. On the one hand, amongst artists and performers the notion of communicating and establishing a feeling of being connected with each other and the audience is part of their anecdotal experience. On the other hand, in recent years the advancement of neurosciences and new EEG technology has enabled them to realise works and projects as a manifestation of their intra- and inter-subjective experiences (Zioga et al. 2015, 105). In parallel, a new and increasing interest has emerged in the fields of neuroscience and experimental psychology in studying the mechanisms, dynamics and processes of the interaction and synchronisation between multiple subjects and their brain activity, such as brain-to-brain coupling (Zioga et al. 2015, 104). Recent applications include the computer games Brainball (Hjelm and Browall 2000); BrainPong (Krepki et al. 2007); Mind the Sheep! (Gürkök et al. 2013) and BrainArena (Bonnet, Lotte, and Lecuyer 2013). Amongst the relevant installations are: Mariko Mori's Wave UFO (2003), an immersive video installation (Mori, Bregenz, and Schneider 2003); the MoodMixer (2011–2014) by Grace Leslie and Tim Mullen, an installation with visual display and ‘generative music composition’ of two participants’ brain-activity (Mullen et al. 2015, 217) and a series of projects, like Measuring the Magic of Mutual Gaze (2011), The Compatibility Racer (2012) and The Mutual Wave Machine (2013), by the Marina Abramovic Institute Science Chamber and the neuroscientist Dr Suzanne Dikker (Dikker 2011).
In the field of music performances, examples include: the DECONcert (Mann, Fung, and Garten 2008), where forty-eight members of the audience were adjusting the live music; the Multimodal Brain Orchestra (Le Groux, Manzolli, and Verschure 2010) that involves the real-time BCI interaction of four performers; Ringing Minds (2014) by Rosenboom, Mullen and Khalil that involved the real-time brain-activity of four members of the audience combined into a ‘multi-person hyper-brain’ (Mullen et al. 2015, 222); and The Space Between Us (2014) by Eaton, Jin, and Miranda. In the latter the brainwaves of a singer and a member of the audience are measured and processed in real-time, separately or jointly, in an attempt to bring the ‘moods of the audience and the performer closer together’ (Eaton 2015) with the use of a system that ‘attempts to measure the affective interactions of the users’ (Eaton, Williams, and Miranda 2015, 103).

However, to our knowledge, the use of multi-brain BCIs in the field of live brain-computer mixed-media and cinema performances for both performers and members of the audience is rather new and distinct from applications like those discussed above. Live cinema (Willis 2009) and mixed-media performances (Auslander 1999, 36) are historically established categories in the broader field of the performing arts and bear distinct characteristics that essentially differentiate them from music performances with the addition of ‘dynamical graphical representations’ (Mullen et al. 2015, 212) or VJing practices. In this context, we present in Section 2 the neuroscientific, computational, creative, performative and experimental challenges of the design and implementation of multi-brain BCIs in mixed-media performances. Accordingly, we discuss in Section 3 Enheduanna – A Manifesto of Falling, the first demonstration of a live brain-computer cinema performance, as a complete combination of creative and research solutions, which is based on our previous work (Zioga et al. 2014, 2015) with the use of BCIs in performances that involve audience participation and interaction with a performer (Nijholt and Poel 2016, 81). This new work enables for the first time, to our present knowledge, the simultaneous real-time interaction with the use of EEG of more than two participants, including both a performer as well as members of the audience in the context of a mixed-media performance. In Section 3.1 we discuss the cognitive approach we followed, while in Section 3.2 we present the new passive multi-brain EEG-based BCI system that was implemented for the first time. In Section 3.3 we discuss the creative concept, methods and processes. Finally, Section 4 includes the preliminary results, a discussion of the difficulties we encountered and future work.

2 The challenges of the design and implementation of multi-brain BCIs in mixed-media performances

Today, the new wireless EEG-based devices provide the performers with greater kinetic and expressive freedom, especially when compared to previous wired systems and electrodes used by artists and pioneers like Alvin Lucier (Music For Solo Performer 1965), Claudia Robles Angel (INsideOUT 2009) and others. At the same time, they also offer a variety of connectivity solutions. They enable the computational and creative processing of a wide range of brain- and cognitive-states, according to the tasks executed, consistent with the dramaturgical conditions and the creative concept of the performance (Zioga et al. 2014, 223). However, the design and implementation of multi-brain BCIs in the context of mixed-media performances is linked to a series of neuroscientific, computational, creative, performative and experimental challenges.

2.1 Neuroscientific

Although EEG is a very effective technique for measuring changes in the brain-activity with accuracy of milliseconds, one of its technical limitations is the low spatial resolution, as compared to other brain imaging techniques, like fMRI (functional Magnetic Resonance Imaging), meaning that it has low accuracy in identifying the precise region of the brain being activated.

Additionally, the design and implementation of the EEG-based BCIs presents particular difficulties and is dependent on many factors and unknown parameters, such as the unique brain anatomy of the different participants wearing the devices during each performance, or the type of sensors used. Other unknown parameters include the location of the sensors, which might be differentiated even slightly during each performance, and the ratio of noise and non-brain artifacts to the actual brain signal being recorded. The artifacts can be either ‘internally generated’ or ‘physiological’, that is electromyographic (EMG) from the neck and face muscles, electrooculographic (EOG) from the eye movements and electrocardiographic (ECG) from the heart activity; or ‘externally generated’ or ‘non-physiological’, that is spikes from equipment, cable sway and thermal noise (Nicolas-Alonso and Gomez-Gil 2012, 1238; Swartz Center of Computational Neuroscience, University of California San Diego 2014).

2.2 Computational

As Heitlinger and Bryan-Kinns (2013, 111) point out, Human–Computer Interaction (HCI) research has mainly focused on ‘users’ abilities to complete tasks at desk-bound computers’. This is still evident also in the field of BCIs and the application development across games, interactive and performance art. Often this is necessary, such as in cases where Steady State Visual Evoked Potential (SSVEP) paradigms are used, for which users need to focus their attention on visual stimuli on a screen, for periods of several seconds repeated multiple times. Similar conditions are also encountered in live computer music performances where the performers are limited by the music tasks they need to accomplish. However, for the brain–computer interaction of performers engaging with more intense body movement and making more active use of the performance space, like actors/actresses and dancers, the design of the BCI application needs to be liberated from ‘desk-bound’ constraints.

At the same time, the use of the performance space itself is also limited due to the available transmission protocols, such as Bluetooth, which is very common amongst the wireless BCI devices (Lee et al. 2013, 221) and typically has a physical range of 10 m.

Additionally, the new low-cost headsets that are used by an increasing number of artists creating interactive works have proven to be reliable for the real-time raw EEG data extraction. At the same time, they also include ready-made algorithmic interpretations, filters and ‘detection suites’ which indicate the user’s affective states, such as ‘meditation’ or ‘relaxation’, ‘engagement’ or ‘concentration’, which vary amongst the different devices and manufacturers. However, the algorithms and methodology upon which the interpretation and feature extraction of the brain’s activity is made are not published by the manufacturers (Zioga et al. 2014, 225) and therefore their reliability is not equally verified. In this direction, new research is trying to understand the correlates of individual functions, such as attention regulation, and compare them to published literature (van der Wal and Irrmischer 2015, 192).

Moreover, multi-brain applications designed for simultaneous real-time interaction of both performers and members of the audience in a staged environment, like in mixed-media and live cinema performances, are rather new and have involved so far up to two interacting brains. What kind of methods need to be developed and what tools need to be used in order to visualise, both independently as well as jointly, the real-time brain-activity of multiple participants under these conditions (Zioga et al. 2015, 111)?

2.3 Creative and performative

The use of interactive technology in staged works presents major creative and performative challenges, especially when audience members become participants and co-creators. This occurs because the aim is to achieve ‘a comprehensive dramaturgy’ with ‘a high level of narrative, aesthetic, emotional and intellectual quality’. At the same time a great emphasis is placed ‘on the temporal parameter’ of the interaction, which needs to be highly coordinated compared to interactive installations that in most cases can be activated whenever the visitor wants (Lindinger et al. 2013, 121).

In the case of BCIs, the different systems are categorised as ‘active’, ‘reactive’ and ‘passive’, according to their interaction design and the tasks involved. The ‘passive’ BCIs derive their outputs from ‘arbitrary brain activity without the purpose of voluntary control’, in contrast to ‘active’ BCIs where the outputs are derived from brain activity ‘consciously controlled by the user’, while the ‘reactive’ BCIs derive their outputs from ‘brain activity arising in reaction to external stimulation’ (Zander et al. 2008). At the same time, one of the first creative challenges that the performer/s might face during a mixed-media performance that involves different tasks is the cognitive load. For example, if the performer/s need to dance, act and/or sing, it is highly difficult to execute mental tasks at the same time, such as in the case of active or reactive BCIs.

Additionally, if we would like to involve the real-time brain-interaction of both the performer/s and the participating members of the audience, then we would need to approach the BCI design in a way that addresses the dramaturgical, narrative and participatory level, in order to induce ‘feelings of immersion, engagement and enjoyment’ (Lindinger et al. 2013, 122).

Moreover, in real-time audio-visual and mixed-media performances, from experimental underground acts to multi-million-dollar music concerts touring around the world in big arenas, liveness is a key element and challenge. In the case of performers using laptops and operating software, the demonstration of liveness to the audience can be approached in various ways (Zioga et al. 2014, 226). Last but not least, in the history of arts when a new medium is introduced, the first works are commonly oriented around the capabilities and the qualities of the medium itself. The use of BCIs in interactive art has not been an exception. However, a negative manifestation of this tendency is technoformalism, the fetishising of the technology (Heitlinger and Bryan-Kinns 2013, 112), when the artists’ focus on the medium comes at the expense of the artistic concept and the underpinning ideas of the creative process. In the case of the use of BCIs, how could this be avoided and how can the specific technologies serve the purpose of the creative concept?

2.4 Experimental

When EEG studies are conducted in a lab environment there is greater flexibility and freedom compared to studies attempted in a public space and, moreover, under the tight conditions of a mixed-media performance. In a lab experiment the allocation of a time that is suitable for the research purposes and convenient for the participants is easier and can extend over a longer period, while in a performance setting the study needs to be organised according to the event and venue logistics. Additionally, a lab environment is a more informal and private space compared to a public performance venue, where apart from the researcher/s and the participants, other spectators are also present, a fact that considerably increases the psychological pressure for a successful process. Moreover, conducting an EEG study within the frame of a public mixed-media performance involves many additional elements that need to be coordinated and precisely synchronised, such as live video projections with live electronics and the real-time acquisition and processing of the participants’ data.

3 Enheduanna – A Manifesto of Falling: a live brain-computer cinema performance as a combination of creative and research solutions

Enheduanna – A Manifesto of Falling (2015) is a new interactive performative work, an event that combines live, mediatised representations, more specifically live cinema, and the use of BCIs (Zioga et al. 2015, 107). The project involves a multidisciplinary team. The key collaborators of the project include the first author, as the director, designer of the Brain–Computer Interface system, live visuals and BCI performer; the actress and writer of the inter-text Anastasia Katsinavaki; the composer and live electronics performer Minas Borboudakis; the co-authors as the BCI research supervisory team; the director of photography Eleni Onasoglou; the MAX MSP Jitter programmer Alexander Horowitz; Ines Bento Coelho as the choreographer; the costume designer Ioli Michalopoulou; the software engineer Bruce Robertson and Hanan Makki as the graphic designer.

The performance is an artistic research project, which aims to investigate in practice the challenges of the design and implementation of multi-brain BCIs in mixed-media performances as mentioned in Section 2 and develop accordingly a combination of creative and research solutions. It involves a large production team of professionals, performers and public audiences in a theatre space. The concept of the performance is based on the following elements: the aesthetic, visual and dramaturgical vision of the director; the thematic idea and the inter-text written by the actress; as well as the design and implementation of the passive multi-brain EEG-based BCI System by the authors. More specifically, the work, with an approximate duration of 50 minutes, involves the live act of three performers (a live visuals (live video projections) and BCI performer, a live electronics and music performer, and an actress) and the participation of two members of the audience, with the use of a passive multi-brain EEG-based BCI system (Zioga et al. 2015, 108). The real-time brain-activity of the actress and the audience members controls the live video projections and the atmosphere of the theatrical stage, which functions as an allegory of the social stage. The premiere took place at the Theatre space of CCA: Centre for Contemporary Arts, Glasgow, UK, from 29 to 31 July 2015 (CCA 2015) involving different audience and participants each day (see http://www.polina-zioga.com/performances/2015-enheduanna---a-manifesto-of-falling for a short video trailer of the performance). The technical specifications of the space included a stage with an approximate 60 cm height; a video projection screen with approximate dimensions of 370 cm × 400 cm; an HD video projector; and a 4.1 sound system with two active loudspeakers located at the back of the stage, two more at the far end of the space and one active sub bass unit underneath the middle front of the stage.

The thematic idea of the performance explores the life and work of Enheduanna (ca. 2285-2250 B.C.E.), an Akkadian Princess, the first documented High Priestess of the deity of the Moon Nanna in the city of Ur (present-day Iraq), who is regarded as possibly the first known author and poet in the history of human civilisation, regardless of gender (Zioga et al. 2015, 107). In her most known work, ‘The Exaltation of Inanna’ (Hadji 1988), Enheduanna describes the political conditions under which she was removed from high office and sent into exile. She speaks about the ‘city’, power, crisis, falling and the need for rehabilitation. Her poetry is used as a starting point for a conversation with the work of contemporary writers, Adorno et al. (1950), Angelou (1995), Laplanche and Pontalis (1988), Pampoudis (1989), Woolf (2002) and Yourcenar (1974), that investigate the notions of citizenry, personal and social illness, within the present-day international, social and political context of democracy. The premiere was performed in English, Greek, and French with English supertitles (CCA 2015).

This new work enables for the first time the simultaneous real-time interaction with the use of EEG of more than two participants, including both a performer as well as members of the audience in the context of a mixed-media performance.

3.1 The cognitive approach

As we mentioned in Section 2.3, if we would like to involve the performer/s’ as well as the audience participants’ real-time brain-activity, we need to approach the BCI design in a way that addresses the dramaturgical, narrative and participatory level. At the same time, the actress’ performance in Enheduanna – A Manifesto of Falling involves speaking, singing, dancing and intense body movement, sometimes even simultaneously, as is common in similar staged works. This results in a very important challenge: the cognitive load she is facing, because of which it is not feasible for her to execute at the same time mental tasks such as those used for the control of ‘reactive’ and ‘active’ BCIs, i.e. focusing her attention on visual stimuli on a screen for periods of several seconds repeated multiple times, or trying to imagine different movements (motor imagery). For this reason, the cognitive approach in the design of the performance was focused on the ‘arbitrary brain activity without the purpose of voluntary control’ (Zander et al. 2008) and the BCI system developed is passive for both the performer, as well as for the audience participants. This is a feasible solution for the actress’ cognitive load, but it also presents two opportunities. It allows us to directly compare the brain-activity of the performer and the audience. It also enables us to study and compare the experience and engagement of the audience in a real-life context, which is multi-dimensional and bears analogies to free viewing of films, extensively studied in the interdisciplinary field of neurocinematics (Hasson et al. 2008, 1). This way we pursue a double aim: the development of a multi-brain EEG-based BCI system, which will enable the use of the brain activity of a performer and members of the audience as a creative tool, but also as a tool for investigating the passive multi-brain interaction between them (Zioga et al. 2015, 108).

3.2 The passive multi-brain EEG-based BCI system

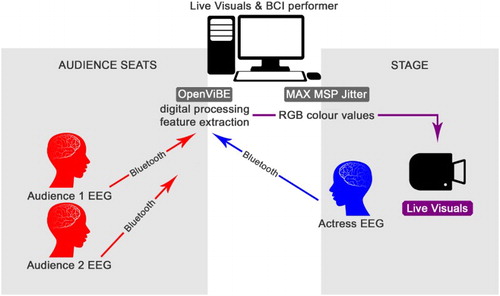

The passive multi-brain EEG-based BCI system consists of the following parts (Figure 1): the performer and the audience participants; the data acquisition; the real-time EEG data processing and feature extraction; and the MAX MSP Jitter programming.

Figure 1. The passive multi-brain EEG-based BCI system. Vectors of human profiles designed by Freepik. ©2015 The authors.

3.2.1 Participants

The participants of the study included the actress and six members of the audience, two for each performance: the dress rehearsal, the premiere and the second public performance. The audience participants were recruited directly by the first author in the close geographical proximity. The inclusion criteria were general adult population, aged 18–65 years old, both female and male. The exclusion criteria were suffering from a neurological deficit and receiving psychiatric or neurological medication. The participants were asked to avoid the consumption of coffee, tea, high caffeine drinks, cigarettes and alcohol, as well as the recreational use of drugs for at least twelve hours prior to the study. They were asked to arrive at the space one hour before the performance, when the venue was closed to the public. This gave sufficient quiet time to discuss any additional questions, answer a preliminary brief questionnaire and start the preparation (Figure 2). Following the opening of the space to the public, they were asked to watch the performance like any other spectator and in the end to complete a final brief questionnaire. The recruitment and the preparation of the actress followed a similar process, inclusion and exclusion criteria, similar informed consent and questionnaires.

Figure 2. The live visuals and BCI performer (first author) preparing two audience participants prior to the performance at CCA: Centre for Contemporary Arts Glasgow, 30–31 July 2015. ©2015 The authors and Catherine M. Weir.

As we mentioned in Section 2.4, when EEG studies are conducted in a lab environment there is greater flexibility and freedom compared to studies attempted in a public space and, moreover, under the tight conditions of a mixed-media performance. In the case of Enheduanna – A Manifesto of Falling the process we describe here, which includes informing the participants and answering their questions a few days prior to the study and also allowing for an hour without the presence of other spectators, has been adequate and helped to minimise the psychological pressure on both the participants as well as the researcher.

The audience participants were seated side by side together with a technical assistant. They were positioned facing the stage, at the left end of the third row, near the director and the MAX MSP Jitter programmer, but also within the standard 10 m range of the Bluetooth connection with the main computer, where the EEG data were collected and processed. At the same time, they had an excellent view of the entire performance and the video projections, while both the audience members as well as the actress were free from ‘desk-bound’ constraints.

3.2.2 EEG data acquisition

The second part of the system involves the use of commercial grade EEG-based wireless devices. More specifically, the participants are wearing the MyndPlay Brain-BandXL EEG Headsets, which were also used during the design phase of the system, in order to ensure that important parameters remain the same.

The headset has two dry sensors with one active, located in the prefrontal lobe (Fp1) (MyndPlay 2015). The choice of the specific device was based on the following criteria:

Low cost—feasible for works that involve multiple participants.

Easy to wear design—crucial for the time constraints of a public event.

Lightweight—convenient for use over prolonged periods such as during a performance.

Aesthetically neutral—easier integration with the scenography and other elements such as the costumes.

Dry sensors—same as [1].

Position of the sensors on the prefrontal lobe—broadly associated with cognitive control.

The three devices (the actress’ and the two audience participants’) are always switched on and connected to the main computer one after another and in the same order, so as to ensure that they are always assigned the same COM ports. The raw EEG data are acquired at a sampling frequency of 512 Hz with a sample count of 32 per sent block and are transmitted wirelessly to the main computer via Bluetooth.
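The acquisition parameters above imply a fixed block rate, which can be checked with simple arithmetic. The following is only an illustrative sketch: the function name `block_timing` is hypothetical, and the only values taken from the text are the 512 Hz sampling frequency and the 32 samples per block.

```python
SAMPLING_RATE_HZ = 512   # sampling frequency stated in the text
SAMPLES_PER_BLOCK = 32   # sample count per sent block stated in the text


def block_timing(sampling_rate_hz, samples_per_block):
    """Return (blocks per second, block duration in milliseconds)."""
    blocks_per_second = sampling_rate_hz / samples_per_block
    block_duration_ms = 1000.0 * samples_per_block / sampling_rate_hz
    return blocks_per_second, block_duration_ms


blocks_per_second, block_duration_ms = block_timing(
    SAMPLING_RATE_HZ, SAMPLES_PER_BLOCK
)
print(blocks_per_second, block_duration_ms)  # 16.0 62.5
```

Under these parameters each device delivers 16 blocks per second, so a new block of raw data arrives every 62.5 ms, before any delay added by Bluetooth transmission or later processing.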

3.2.3 Real-time EEG data processing

In the third part of the system the real-time digital processing of the raw EEG data from each participant and device is performed with the OpenViBE software (Renard et al. 2010), which enabled us to solve efficiently the design of a simultaneous real-time multi-brain interaction of more than two participants. This is achieved by configuring multiple ‘Acquisition Servers’ (OpenViBE 2011a) sending their data to corresponding ‘Acquisition Clients’ (Renard 2015) within the same Designer Scenario (OpenViBE 2011b). This also automatically enables the simultaneous receiving, processing and recording of the data and the synchronisation with the live video projections, as we will demonstrate further along.

The processing continues by selecting the appropriate EEG channel and applying a custom feature extraction that we designed using algorithms that follow the frequency analysis method. Taking into consideration the challenges presented in Section 2, such as the unique brain anatomy of the different participants wearing the device and the location of the sensors, which might be differentiated even slightly during each performance, but also the EEG’s low spatial resolution, our methodology focuses on the oscillatory processes of the brain activity. With the use of band-pass filters, the 4–40 Hz frequencies that are meaningful in the conditions of the performance are selected. More specifically, we process the theta (4–8 Hz) frequency band associated with deep relaxation but also emotional stress, the alpha (8–13 Hz) associated with relaxed but awake state, the beta (13–25 Hz) and the lower gamma (25–40 Hz) that occur during intense mental activity and tension (Thakor and Sherman 2013, 261). Frequencies below 4 Hz, which correspond to the delta band and are associated with deep sleep, are rejected in order to suppress low-frequency noise, EOG and ECG artifacts. Frequencies of 40 Hz and above are also rejected, in order to suppress EMG artifacts from the body muscle movements, high-frequency noise and line noise from electrical devices. This way we improve the ratio of actual brain signal to the noise and non-brain artifacts being recorded. The processing continues by applying time-based epoching, squaring, averaging and then computing the log(1 + x) of each selected frequency band. At the same time, both the raw as well as the processed EEG data of each participant are being recorded as CSV files in order to be analysed off line after the performance (Zioga et al. 2015, 110). The final generated values are sent to the MAX MSP Jitter software using OSC (Open Sound Control) Controllers (Caglayan 2015).
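The chain of band selection, squaring, averaging and log(1 + x) can be illustrated outside OpenViBE with a minimal Python sketch. It is not the scenario used in the performance: it swaps the band-pass filter boxes for a plain discrete Fourier transform, which yields the same mean squared amplitude per band for a single epoch, and the one-second epoch length, the function names and the synthetic test signal are all assumptions made for illustration.

```python
import cmath
import math

FS = 512  # sampling frequency in Hz, as stated in the text

# Band edges in Hz, as listed in the text.
BANDS = {
    "theta": (4, 8),
    "alpha": (8, 13),
    "beta": (13, 25),
    "low_gamma": (25, 40),
}


def band_power(epoch, fs, low, high):
    """Mean squared amplitude of the components of `epoch` in [low, high) Hz."""
    n = len(epoch)
    power = 0.0
    for k in range(1, n // 2):
        if low <= k * fs / n < high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(epoch)
            )
            # Factor 2 accounts for the mirrored negative-frequency bin.
            power += 2.0 * abs(coeff) ** 2 / n ** 2
    return power


def extract_features(epoch, fs=FS):
    """Return log(1 + band power) for each band of one epoch."""
    return {
        name: math.log1p(band_power(epoch, fs, low, high))
        for name, (low, high) in BANDS.items()
    }


# Synthetic one-second epoch (assumed length): a 10 Hz sine of amplitude 2,
# which falls inside the alpha band.
epoch = [2.0 * math.sin(2 * math.pi * 10 * t / FS) for t in range(FS)]
features = extract_features(epoch)
```

For this synthetic epoch the alpha feature dominates and the other three stay close to zero. The log(1 + x) step compresses large power values while keeping the output non-negative, which makes the later scaling to a fixed colour range easier.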

3.2.4 MAX MSP Jitter programming

The fourth part of the system involves the MAX MSP Jitter programming. The processed values of each participant, imported as OSC messages with the use of separate ‘User Datagram Protocol (UDP) receivers’ (Cycling 2016), are scaled to RGB colour values from 0 to 255. More specifically, the processed data from the 13–40 Hz frequency (beta and lower gamma) are mapped to the red value, the data from the 8–13 Hz (alpha) band are mapped to the green value and from the 4–8 Hz (theta) to the blue value. Then, these RGB values of each participant are imported either separately or jointly, depending on the stage of the performance (see Section 3.3) into a ‘swatch’ (max objects database 2015).
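To make the OSC transport more concrete, the sketch below builds and parses a single-float OSC message by hand, following the OSC 1.0 layout (null-padded address, type tag string, big-endian 32-bit float). The address `/actress/alpha` and the helper names are hypothetical; the actual address scheme and ports used in the performance are not given in the text.

```python
import struct


def osc_pad(raw):
    """Null-terminate `raw` and pad it to a multiple of four bytes."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def encode_osc_float(address, value):
    """Encode one OSC message carrying a single float32 argument."""
    return (
        osc_pad(address.encode("ascii"))
        + osc_pad(b",f")
        + struct.pack(">f", value)
    )


def decode_osc_float(packet):
    """Decode a message produced by encode_osc_float."""
    end = packet.index(b"\x00")
    address = packet[:end].decode("ascii")
    offset = (end // 4 + 1) * 4  # skip the address padding
    if packet[offset:offset + 2] != b",f":
        raise ValueError("expected a single float argument")
    offset += 4  # skip the padded type tag string
    (value,) = struct.unpack(">f", packet[offset:offset + 4])
    return address, value


# Hypothetical address for one participant and one frequency band.
packet = encode_osc_float("/actress/alpha", 0.5)
address, value = decode_osc_float(packet)
```

In a setup like the one described, each participant's values would be sent as UDP datagrams to a different port, so that a separate receiver can be dedicated to each participant on the MAX MSP Jitter side.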

The ‘swatch’ provides 2-dimensional RGB colour selection and display and combines the values in real-time, creating a constantly changing single colour. The higher the incoming OSC message value of any given processed frequency, the higher the respective RGB value becomes and the more the final colour shifts towards that shade. The generated colour is then applied as a filter, with a manually controlled opacity of maximum 50%, to pre-rendered black and white video files reproduced in real-time. The resulting video stream is projected on a screen. Its chromatic variations not only correspond to a unique real-time combination of the three selected brain activity frequencies of multiple participants, but also serve as a visualisation of their predominant cognitive states, both independently as well as jointly.
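The mapping from processed values to a colour filter can be sketched in a few lines of Python. This is an illustration rather than the MAX MSP Jitter patch itself: the input range of 0 to 5, the channel-wise averaging used for the joint mode and the alpha-blending formula are assumptions, since the text does not specify them, and all function names are hypothetical.

```python
def scale_to_byte(value, low, high):
    """Linearly map `value` from [low, high] to an integer in 0..255, clamped."""
    if high <= low:
        raise ValueError("high must be greater than low")
    fraction = (value - low) / (high - low)
    return round(255 * min(1.0, max(0.0, fraction)))


def features_to_rgb(features, low=0.0, high=5.0):
    """Map band values to (red, green, blue) as described in the text.

    The input range [low, high] is an assumed calibration, not a source value.
    """
    return (
        scale_to_byte(features["beta_gamma"], low, high),  # 13-40 Hz -> red
        scale_to_byte(features["alpha"], low, high),       # 8-13 Hz -> green
        scale_to_byte(features["theta"], low, high),       # 4-8 Hz -> blue
    )


def combine_jointly(colours):
    """Average several participants' colours channel by channel (assumption)."""
    count = len(colours)
    return tuple(round(sum(c[i] for c in colours) / count) for i in range(3))


def tint_pixel(gray, colour, opacity):
    """Alpha-blend `colour` over a grey pixel, capping opacity at 50%."""
    opacity = min(max(opacity, 0.0), 0.5)
    return tuple(round((1 - opacity) * gray + opacity * c) for c in colour)


rgb = features_to_rgb({"beta_gamma": 5.0, "alpha": 0.0, "theta": 0.0})
joint = combine_jointly([(200, 0, 100), (100, 0, 0)])
pixel = tint_pixel(100, (200, 0, 100), 0.5)
```

With the opacity capped at 50%, the underlying black and white footage always contributes at least half of each pixel, so the image remains legible however saturated the generated colour becomes.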

3.3 The creative concept, methods and processes

We started by presenting the cognitive approach and the passive multi-brain EEG-based BCI system, leaving the creative concept, methods and processes for the current section. However, these three directions have influenced each other and were co-designed during the entire preparation phase of the project. Also, the use of the BCI system has influenced almost every aspect of the creative production, such as the choreography, the scenography and even the lighting and the costumes design. Nevertheless, for the purposes of this article we will focus on directing and live cinema, the interactive storytelling and narrative structure and the live visuals.

3.3.1 Directing and live cinema

As we explained at the beginning of Section 3, a live brain-computer cinema performance is an event that combines live, mediatised representations, more specifically live cinema, and the use of BCIs (Zioga et al. Citation2015, 107). Live cinema, in turn, is defined as ‘[…] real-time mixing of images and sound for an audience, where […] the artist’s role becomes performative and the audience’s role becomes participatory.’ (Willis Citation2009, 11). In the case of Enheduanna – A Manifesto of Falling, there are three performers: the live visuals performer, the actress and the live electronics performer. The actress’ activity and the participatory role of the audience members are enhanced and characterised by the use of their real-time brain-activity as a physical expansion of the creative process, as an act of co-creating and co-authoring, and as an embodied form of improvisation, which is mapped in real-time to the live visuals (Zioga et al. Citation2015, 108). At the same time, the live visuals performer has a significantly different role from those usually encountered in a theatre play. She is the director, video artist and BCI performer on stage, a multi-orchestrator facilitating and mediating the interaction of the actress and the audience participants. Additional elements borrowed from the practices of live cinema include the use of a non-linear narration and storytelling approach through the fragmentation of the image, the frame and the text (Zioga et al. Citation2015, 108).

Moreover, one of the preliminary directing preoccupations has been to avoid technoformalism and to create a work not just orientated towards an entertaining, ‘pleasurable, playful or skilful’ result and interaction, but aiming at a ‘meaning-making’ of historical and socio-political themes (Heitlinger and Bryan-Kinns Citation2013, 113). The goal is to bring together the thematic idea of the life of Enheduanna with the passive brain-interaction of the actress and the audience as two complementary elements. This has been achieved at the conceptual as well as the aesthetic level.

A conceptual and dramaturgical non-linear dialogue is created between: the poetry of Enheduanna (Hadji Citation1988), who speaks about power, crisis, falling and citizenry; the work of contemporary writers who investigate socio-political themes; and the passive brain-interaction of the participants as an allegory of the passive citizenry and its role in the present-day context of democracy.

At the aesthetic level, the basic directing strategy is to create multiple levels of storytelling and interaction. The texts in the three languages of the original literature references, Greek, English and French, are either performed live by the actress or her pre-recorded voice is reproduced together with the live electronics. This symbolically creates the effect of three personalities: the live narrator and two other female commentators, perceived either as external or as two other sides of her consciousness. The basic directing strategy also involves associating the different aspects of the real-time brain-activity of the participants with the colour of the live visuals. However, the visualisation is not uniform throughout the performance. It presents variations that follow the interactive storytelling pattern and the narrative structure presented in the following section.

3.3.2 Interactive storytelling and narrative structure

As Ranciere (Citation2007, 279) argues:

Spectatorship is not the passivity that has to be turned into activity. It is our normal situation. We learn and teach, we act and know as spectators who link what they see with what they have seen and told, done and dreamt [ … ] We have to acknowledge that any spectator already is an actor of his own story and that the actor also is the spectator of the same kind of story.

Figure 3. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, vignette introducing part 1 ‘Me’. ©2015 The first author.

Figure 4. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, vignette introducing part 2 ‘You/We’. ©2015 The first author.

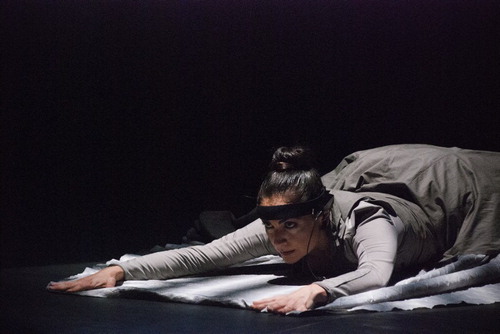

Figure 5. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, scene 3 ‘Me Falling’, at CCA: Centre for Contemporary Arts Glasgow, 30–31 July 2015. ©2015 The authors and Catherine M. Weir.

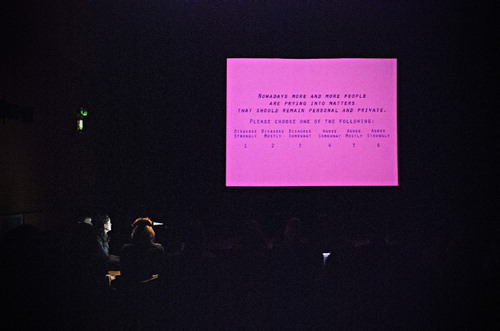

Figure 6. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, scene 4 ‘You measuring the F-scale’, at CCA: Centre for Contemporary Arts Glasgow, 30–31 July 2015. ©2015 The authors and Catherine M. Weir.

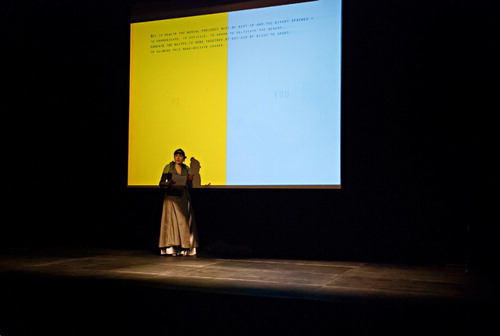

Figure 7. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, first half of scene 5 ‘We’, at CCA: Centre for Contemporary Arts Glasgow, 30–31 July 2015. ©2015 The authors and Catherine M. Weir.

Figure 8. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance, second half of scene 5 ‘We’, at CCA: Centre for Contemporary Arts Glasgow, 30–31 July 2015. ©2015 The authors and Catherine M. Weir.

The two parts of the performance are further divided into five scenes. The first three correspond to part 1 and the last two to part 2: scene 1 ‘Me Transmitting Signals’; scene 2 ‘Me Rising’; scene 3 ‘Me Falling’; scene 4 ‘You Measuring the F-Scale’ and scene 5 ‘We’.

While in part 1 we are introduced to and immersed in the story of Enheduanna in a more traditional theatrical manner, with the actress being perceived by the audience as a third person (she), in part 2 we witness her transformation into a second person (you), when the audience members are addressed (Dixon Citation2007, 561).

This transformation/transition is promoted with the use of different theatrical elements. The actress is present and performing throughout part 1, but at the end of scene 3 she leaves the stage. The lights fade out and two soft spots above the two audience participants are turned on throughout scene 4 and the first half of scene 5. This way the performance becomes a cinematic experience, while the use of light underlines the control of the live visuals by the audience’s brain activity (Figure 6).

The actress reappears on stage at the beginning of scene 5, directly addressing the audience (Figure 7), while their real-time brain-interaction gradually merges and their averaged values control the colour filter applied to the live video stream (Figure 8).

The narrative and dramaturgical structure we described fulfils the directing vision and aim of bringing together the thematic idea of the performance with the use of the interaction technology in a coherent and comprehensive manner. It associates the real-time brain-activity of the participants with the colour of the live visuals, which we will further discuss in the following section, within a consistent storytelling process, and by this it also serves as evidence of liveness.

3.3.3 Live visuals

As we mentioned previously, the live visuals are performed with the MAX MSP Jitter software and consist of two main components: the pre-rendered black and white .wmv video files and the RGB colour filter generated by the processing and mapping of the participants’ EEG data. The video shooting took place on location in Athens, Greece, while the editing and post-production were done with the Adobe After Effects software (Adobe Citation2016).

Regarding the generation of the colour filter, the choice of mapping the selected EEG frequency bands to the specific RGB colours, as described in Section 3.2.4, was based on the cultural associations, historically established in the western world, of specific colours with certain emotions. As we mentioned previously, the blue colour is controlled by the theta frequency band, which is associated with deep relaxation; the green by the alpha band, associated with a relaxed but awake state; and the red by the beta and lower gamma frequency bands, which occur during intense mental activity and tension. This way the transition of the participants from relaxed to more alert cognitive states is visualised in the colour scale of the live visuals as a shift from colder to warmer tints. Taking into account that all the other production elements are in black, white and grey shades, including the costumes and the lighting design, the generated RGB colour filter creates the atmosphere not only of the visuals, but also of the theatrical stage. It becomes real-time feedback on the cognitive state of the participants and sets the emotional direction for the overall performance.

Furthermore, the configuration of the live visuals with the MAX MSP Jitter software is not an entirely automated process. Consistent with the interactive storytelling and narrative structure, certain features are subject to manual control:

- Selection of the processed brain-activity used to generate the RGB colour filter: the actress’ in scenes 1, 2 and 3; one of the audience participants’ in scene 4; the actress’ and one of the audience participants’ in scene 5, first separately and then averaged.

- Selection and triggering of video files and corresponding scenes: performed in coordination with the live electronics performer, who functions as a conductor, leading the initiation of the different scenes and the synchronisation between the audio and the visuals.

- The RGB colour filter saturation level: decreased when the level remains high for a prolonged period of time and becomes unpleasant for the audience, or increased when the level is so low that the result is not visible.

- The RGB colour filter opacity level: 0% during the video vignettes and increased up to 50% during the main scenes. This allows the video vignettes to function as aesthetically neutral intervals, orienting the audience with regard to the narrative structure and the brain-activity interaction.

- The RGB colour filter split in two equal parts: at the beginning of scene 5, the filter on the right half of the screen maps the actress’ brain-activity (marked as ‘Me’) and the filter on the left half maps one of the audience members’ activity (marked as ‘You’) (Figure 7). Towards the middle of the scene, the two parts merge and the filter is averaged (marked as ‘We’) (Figure 8). This way not only is their respective cognitive state visualised, but a real-time comparison between them is also provided, enriching the interactive storytelling and reinforcing the audience’s perception of liveness.
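The per-scene selection and the split and merged filter of scene 5 can be sketched as follows. In the performance these choices are made manually by the live visuals performer; the function below only models the outcome of those choices. The region names (`full`, `left`, `right`), the `merged` flag and the component-wise integer mean are assumptions made for illustration.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]


def average_rgb(a: RGB, b: RGB) -> RGB:
    """Component-wise integer mean of two RGB colours (assumed averaging rule)."""
    return tuple((x + y) // 2 for x, y in zip(a, b))


def filter_for_scene(scene: int, actress: RGB, audience: RGB,
                     merged: bool = False) -> Dict[str, RGB]:
    """Return the filter colour for each screen region in a given scene.

    Scenes 1-3 use the actress' colour and scene 4 uses the audience
    participant's colour. Scene 5 starts split (right half 'Me', left half
    'You') and, once `merged` is set, shows the averaged colour ('We').
    """
    if scene in (1, 2, 3):
        return {"full": actress}
    if scene == 4:
        return {"full": audience}
    if scene == 5:
        if merged:
            return {"full": average_rgb(actress, audience)}
        return {"left": audience, "right": actress}
    raise ValueError(f"unknown scene: {scene}")


if __name__ == "__main__":
    me, you = (200, 50, 0), (100, 150, 20)
    print(filter_for_scene(5, me, you))               # split screen
    print(filter_for_scene(5, me, you, merged=True))  # averaged filter
```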

4 Discussion and future work

In the present article, we described the challenges of the design and implementation of multi-brain BCIs in mixed-media and live cinema performances. We presented ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance as a complete combination of creative and research solutions, which are summarised in Table 1. These well-documented solutions can function as general guidelines; however, we expect that other artists might also investigate individualised approaches, customised and consistent with the specific context of their performances.

Table 1. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance: the solutions to the challenges of the design and implementation of multi-brain BCIs in mixed-media performances. ©2015 The authors.

The first demonstrations of the performance were very well received by the audiences and provided us with valuable feedback from the participants. For example, the actress reported that when she was facing the screen she could observe and identify specific colour changes corresponding to specific phases of her acting. One of the audience participants reported that although he couldn’t understand exactly the brain-activity interaction, he ‘felt somehow connected’ and that in specific parts of the performance the colours expressed his feelings. The pre- and post-performance questionnaires aimed more specifically to reveal whether the participants were able to identify when and how their brain-activity was controlling the live video projections, and which elements of the performance they considered most special. As we mentioned in Section 3.2, we also collected the participants’ raw EEG data, the quantitative and statistical analysis of which revealed a correlation with their answers to the questionnaires. For example, the audience participants highlighted part 2 ‘You/We’ as a special aspect of the performance; during this part their cognitive and emotional engagement also clearly increased, and in a similar manner. We will be able to report more detailed results in the future.

The first demonstrations of the performance also gave us the opportunity to observe, in real-life conditions, challenges that we had not predicted and at the same time to consider the direction of our future work.

At the computational level, one of the first issues was that the BCI devices would connect successfully to the computer via Bluetooth only if they were assigned the same COM ports in each session. To ensure this, we had to switch them on and connect them one after another, always in the same order. This was especially crucial because the current version of the OpenViBE driver searches for devices assigned to COM ports 1–16, so a headset assigned to a higher port number is not recognised. Another computational issue we experienced was the noticeable disconnections of the actress’ headset. By trying different devices, we could verify that these were not due to hardware malfunction. Also, since the actress was always within a distance of 10 m, we assume that the problem is not related to the physical range of Bluetooth either. Nevertheless, this requires further investigation, including experimenting with different communication protocols.
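A simple pre-show check for the COM port constraint could look like the following sketch. It is a hypothetical helper, not part of OpenViBE: it only takes a mapping of headset labels to Windows-style port names, which the operator would have to supply, and reports which headsets fall outside the 1–16 range mentioned above.

```python
import re
from typing import Dict, List

# Highest COM port number searched by the driver, as reported in the text.
MAX_SUPPORTED_PORT = 16


def com_port_number(name: str) -> int:
    """Extract the numeric part of a port name such as 'COM7'."""
    match = re.fullmatch(r"COM(\d+)", name.strip().upper())
    if match is None:
        raise ValueError(f"not a COM port name: {name!r}")
    return int(match.group(1))


def is_port_supported(name: str) -> bool:
    """True if the port number lies within 1..MAX_SUPPORTED_PORT."""
    return 1 <= com_port_number(name) <= MAX_SUPPORTED_PORT


def unsupported_headsets(assignments: Dict[str, str]) -> List[str]:
    """Return the labels of headsets whose assigned port would not be found."""
    return [label for label, port in assignments.items()
            if not is_port_supported(port)]


if __name__ == "__main__":
    # Example assignments; the labels and ports are illustrative only.
    ports = {"actress": "COM5", "audience_1": "COM17", "audience_2": "COM6"}
    print(unsupported_headsets(ports))
```

Running such a check before each session would flag a headset that needs to be reconnected in the usual order before the performance starts.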

At the experimental level, one difficulty we had not predicted was the requirement for the actress not to consume any high-caffeine drink for at least 12 hours before each performance, in this case for three consecutive days. As she explained, even for performers like her who consume moderate amounts of caffeine, that is, one to two cups of coffee per day, it is common practice to drink a cup one hour before the performance in order to feel fresh and energised. In the case of Enheduanna – A Manifesto of Falling, in order to help the actress perform at a high level and at the same time implement the experimental conditions, we realised a programme of rehearsals that started one month earlier, during which she gradually reduced her caffeine consumption. However, we understand that this might not be feasible in all cases and therefore needs reconsideration.

Apart from the unpredicted difficulties we encountered, we can already envision the future potential of our study. On the one hand, the combination of solutions we presented can be adjusted and applied to different contexts, such as other formats of interactive works of art and games, but also neurofeedback applications for multiple participants. On the other hand, the passive multi-brain EEG-based BCI system described in this article has considerable potential for further development. Its architecture can allow different algorithmic processing and interpretation of the EEG data, and it can also incorporate data from virtual and mixed-reality sensors. Additionally, although at this stage we focused on passive brain-interaction for reasons described in detail in the previous sections, we acknowledge that in the future hybrid BCIs that combine different paradigms might enrich the performative experience.

Our future work has the following main objectives: stabilising the system and increasing the number of participants in real-life conditions; developing and evaluating more performances; reporting the detailed results of the behavioural and EEG data; and investigating possible evidence that might or might not support the hypothesis of brain-to-brain coupling between performer/s and audience participants (Zioga et al. Citation2015, 104).

Acknowledgements

This research was made possible with the Global Excellence Initiative Fund PhD Studentship awarded by the Glasgow School of Art. ‘Enheduanna – A Manifesto of Falling’ Live Brain-Computer Cinema Performance has been developed in the Digital Design Studio (DDS) of the Glasgow School of Art (GSA), in collaboration with the PACo Lab at the School of Psychology of the University of Glasgow and the School of Art, Design and Architecture of the University of Huddersfield. The project has been awarded with the NEON Organization 2014–2015 Grant for Performance Production, is supported by MyndPlay and the premiere presentations were made possible also with the support of CCA: Centre for Contemporary Arts Glasgow and the GSA Students’ Association. We would like to heartily thank all the collaborators mentioned in Section 3 and also Alison Ballantine, Brian McGeough, Jessica Evelyn Argo, Jack McCombe, Alexandra Gabrielle, Catherine M. Weir, Shannon Bolen and Erifyli Chatzimanolaki. We are indebted to Dr Francis McKee, Director of CCA and to the members of the audience who volunteered to participate in the study. We would also like to thank the authors, the publishing houses and estates for their permission to use the literature extracts; all the CCA staff members; and Emeritus Prof Basil N. Ziogas for his continued support.

Disclosure statement

No potential conflict of interest was reported by the authors.

Notes on contributors

Polina Zioga is an award-winning multimedia visual artist, lecturer in the School of Art, Design and Architecture of the University of Huddersfield, and affiliate member of national and international organisations for the visual and new media arts. Her interdisciplinary background in Visual Arts (Athens School of Fine Arts) and Health Sciences (National and Kapodistrian University of Athens) has influenced her practice at the intersection of art and science and her doctoral research (Digital Design Studio, Glasgow School of Art) in the field of brain–computer interfaces, awarded with the Global Excellence Initiative Fund PhD Studentship. Her work has been presented internationally since 2004, in solo and group art exhibitions, art fairs, museums, centres for the new media and digital culture, video-art and film festivals and international peer-reviewed conferences (www.polina-zioga.com).

Dr Paul Chapman is Acting Director of the Digital Design Studio (DDS) of the Glasgow School of Art, where he has worked since 2009. The DDS is a postgraduate research and commercial centre based in the Digital Media Quarter in Glasgow, housing state-of-the-art virtual reality, graphics and sound laboratories. Paul holds BSc, MSc and PhD degrees in Computer Science and is a Chartered Engineer, Chartered IT Professional and a Fellow of the British Computer Society. Paul is a Director of Cryptic and an inaugural member of the Royal Society of Edinburgh’s Young Academy of Scotland, which was established in 2011.

Professor Minhua Ma is a Professor of Digital Media & Games and Associate Dean International in the School of Art, Design and Architecture at the University of Huddersfield. Professor Ma is a world-leading academic developing the emerging field of serious games. She has published widely in the fields of serious games for education, medicine and healthcare, and Virtual and Augmented Reality, in over a hundred peer-reviewed publications, including six books with Springer. She has received grants from RCUK, EU, NHS, NESTA, UK government, charities and a variety of other sources for her research on serious games for stroke rehabilitation, cystic fibrosis and autism, and medical visualisation. Professor Ma is the Editor-in-Chief responsible for the Serious Games section of the Elsevier journal Entertainment Computing.

Professor Frank Pollick is interested in the perception of human movement and the cognitive and neural processes that underlie our abilities to understand the actions of others. In particular, current research emphasises brain imaging and how individual differences involving autism, depression and skill expertise are expressed in the brain circuits for action understanding. Research applications include computer animation and the production of humanoid robot motions. Professor Pollick obtained BS degrees in physics and biology from MIT in 1982, an MSc in Biomedical Engineering from Case Western Reserve University in 1984 and a PhD in Cognitive Sciences from The University of California, Irvine in 1991. Following this he was an invited researcher at the ATR Human Information Processing Research Labs in Kyoto, Japan from 1991 to 1997.

ORCID

Polina Zioga http://orcid.org/0000-0003-1317-2074

Paul Chapman http://orcid.org/0000-0002-6390-5558

Minhua Ma http://orcid.org/0000-0001-7451-546X

Frank Pollick http://orcid.org/0000-0002-7212-4622

Notes

1 A live brain-computer mixed-media performance is defined as a real-time audio-visual and mixed-media performance with use of BCIs (Zioga et al. Citation2014, 221).

References

- Adobe. 2016. “After Effects.” Accessed January 6 2016. http://www.adobe.com/uk/products/aftereffects.html.

- Adorno, T. W., E. Frenkel-Brunswik, D. Levinson, and N. Sanford. 1950. The Authoritarian Personality. New York: Harper & Row.

- Angelou, M. 1995. A Brave and Startling Truth. New York: Random House.

- Auslander, P. 1999. Liveness: Performance in Mediatized Culture. New York: Routledge.

- Bonnet, L., F. Lotte, and A. Lecuyer. 2013. “Two Brains, One Game: Design and Evaluation of a Multi-user BCI Video Game Based on Motor Imagery.” IEEE Transactions on Computational Intelligence and AI in Games, IEEE Computational Intelligence Society, 5 (2): 185–198. doi: 10.1109/TCIAIG.2012.2237173

- Caglayan, O. 2015. “OSC Controller.” Accessed January 1 2016. http://openvibe.inria.fr/documentation/unstable/Doc_BoxAlgorithm_OSCController.html.

- CCA. 2015. “Polina Zioga & GSA DDS: “Enheduanna – A Manifesto of Falling” Live Brain-Computer Cinema Performance.” Accessed January 6 2016. http://cca-glasgow.com/programme/555c6ab685085d7e38000026.

- Cycling. 2016. “udpreceive.” Accessed January 1 2016. https://docs.cycling74.com/max5/refpages/max-ref/udpreceive.html.

- Dikker, S. 2011. “Measuring the Magic of Mutual Gaze.” YouTube video, 03:24. Posted by ‘Suzanne Dikker’ (2011) Accessed January 17 2016. https://www.youtube.com/watch?v=Ut9oPo8sLJw.

- Dixon, S. 2007. Digital Performance: A History of New Media in Theater, Dance, Performance Art, and Installation. Cambridge, MA: MIT Press.

- Eaton, J. 2015. “The Space Between Us.” Vimeo video, 06:35. Posted by ‘joel eaton’ (2015). Accessed January 6 2016. https://vimeo.com/116013316.

- Eaton, J., D. Williams, and E. Miranda. 2015. “The Space Between Us: Evaluating a Multi-User Affective Brain-Computer Music Interface.” Brain-Computer Interfaces 2 (2–3): 103–116. doi:10.1080/2326263X.2015.1101922.

- Gürkök, H., A. Nijholt, M. Poel, and M. Obbink. 2013. “Evaluating A Multi-Player Brain-Computer Interface Game: Challenge Versus Co-experience.” Entertainment Computing, 4 (3): 195–203. doi: 10.1016/j.entcom.2012.11.001

- Hadji, T. 1988. Enheduanna: He Epoche, he zoe, kai to Ergo tes. Athens: Odysseas. (in Greek).

- Hasson, U., A. A. Ghazanfar, B. Galantucci, S. Garrod, and C. Keysers. 2012. “Brain-to-brain Coupling: A Mechanism for Creating and Sharing a Social World.” Trends in Cognitive Sciences 16 (2): 114–121. doi:10.1016/j.tics.2011.12.007.

- Hasson, U., O. Landesman, B. Knappmeyer, I. Vallines, N. Rubin, and D. Heeger. 2008. “Neurocinematics: The Neuroscience of Film.” Projections 2 (1): 1–26. doi: 10.3167/proj.2008.020102

- Heitlinger, S., and N. Bryan-Kinns. 2013. “Understanding Performative Behaviour Within Content-Rich Digital Live Art.” Digital Creativity, 24 (2): 111–118. Accessed October 10 2015. doi:10.1080/14626268.2013.808962.

- Hjelm, S. I., and C. Browall. 2000. “Brainball: Using Brain Activity for Cool Competition.” Proceedings of the first nordic conference on computer-human interaction (NordiCHI 2000), Stockholm, Sweden.

- Krepki, R., B. Blankertz, G. Curio, and K.-R. Müller. 2007. “The Berlin Brain-Computer Interface (BBCI) – Towards a new Communication Channel for Online Control in Gaming Applications.” Multimedia Tools and Applications 33 (1): 73–90. doi:10.1007/s11042-006-0094-3.

- Laplanche, J., and J.-B. Pontalis. 1988. The Language of Psychoanalysis. London: Karnac and the Institute of Psycho-Analysis.

- Le Groux, S., J. Manzolli, and P. F. M. J. Verschure. 2010. “Disembodied and Collaborative Musical Interaction in the Multimodal Brain Orchestra.” Proceedings of the 2010 conference on New Interfaces for Musical Expression (NIME 2010), Sydney, Australia, 309–314.

- Lee, S., Y. Shin, S. Woo, K. Kim, and H.-N. Lee. 2013. “Review of Wireless Brain-Computer Interface Systems.” In Brain-Computer Interface Systems – Recent Progress and Future Prospects, edited by R. Fazel-Rezai. InTech. doi:10.5772/56436.

- Lindinger, C., M. Mara, K. Obermaier, R. Aigner, R. Haring, and V. Pauser. 2013. “The (St)Age of Participation: Audience Involvement in Interactive Performances.” Digital Creativity, 24 (2): 119–129. doi:10.1080/14626268.2013.808966.

- Mann, S., J. Fung, and A. Garten. 2008. “DECONcert: Making Waves with Water, EEG, and Music.” In Computer Music Modeling and Retrieval. Sense of Sounds, edited by R. Kronland-Martinet, S. Ystad, and K. Jensen, 487–505. Berlin: Springer.

- max objects database. 2015. “swatch.” Accessed January 1 2016. http://www.maxobjects.com/?v=objects&id_objet=715&requested=swatch&operateur=AND&id_plateforme=0&id_format=0.

- Mori, M., K. Bregenz, and E. Schneider. 2003. Mariko Mori: Wave UFO. Köln: Verlag der Buchhandlung Walther König.

- Mullen, T., A. Khalil, T. Ward, J. Iversen, G. Leslie, R. Warp, M. Whitman, et al. 2015. “MindMusic: Playful and Social Installations at the Interface Between Music and the Brain.” In More Playful Interfaces, 197–229. Singapore: Springer. doi:10.1007/978-981-287-546-4_9.

- MyndPlay. 2015. “BrainBandXL & MyndPlay Pro Bundle.” Accessed January 6 2016. http://myndplay.com/products.php?prod=9.

- Nicolas-Alonso, L. F., and J. Gomez-Gil. 2012. “Brain Computer Interfaces, a Review.” Sensors 12 (2): 1211–1279. doi:10.3390/s120201211.

- Nijholt, A. 2015. “Competing and Collaborating Brains: Multi-Brain Computer Interfacing.” In Brain-Computer Interfaces: Current Trends and Applications, edited by A.E. Hassanien, and A.T. Azar, 313–335. Switzerland: Intelligent Systems Reference Library series, 74, Springer International Publishing. doi:10.1007/978-3-319-10978-7_12.

- Nijholt, A., and M. Poel. 2016. “Multi-Brain BCI: Characteristics and Social Interactions.” In Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience, edited by Dylan D. Schmorrow, and Cali M. Fidopiastis, 79–90. Lecture Notes in Computer Science, 9743, Springer International Publishing. http://link.springer.com/chapter/10.1007%2F978-3-319-39955-3_8.

- OpenViBE. 2011a. “Acquisition Server.” Posted by ‘lbonnet’. Accessed January 1 2016. http://openvibe.inria.fr/acquisition-server.

- OpenViBE. 2011b. “Designer Overview.” Posted by ‘lbonnet’. Accessed January 1 2016. http://openvibe.inria.fr/designer.

- Pampoudis, P. 1989. O Enikos. Athens: Kedros. (in Greek)

- Ranciere, J. 2007. “The Emancipated Spectator.” Artforum. 45: 270–281.

- Renard, Y. 2015. “Acquisition client.” Accessed January 1 2016. http://openvibe.inria.fr/documentation/unstable/Doc_BoxAlgorithm_AcquisitionClient.html.

- Renard, Y., F. Lotte, G. Gibert, M. Congedo, E. Maby, V. Delannoy, O. Bertrand, and A. Lécuyer. 2010. “OpenViBE: An Open-Source Software Platform to Design, Test and Use Brain-Computer Interfaces in Real and Virtual Environments.” Presence: Teleoperators and Virtual Environments 19: 35–53. doi: 10.1162/pres.19.1.35

- Swartz Center of Computational Neuroscience, University of California San Diego. 2014. “Introduction to Modern Brain-Computer Interface Design Wiki.” Last modified June 10 2014. Accessed January 6 2016. http://sccn.ucsd.edu/wiki/Introduction_To_Modern_Brain-Computer_Interface_Design.

- Thakor, N. V., and D. L. Sherman. 2013. “EEG Signal Processing: Theory and Applications.” In Neural Engineering, edited by Bin He, 259–304. New York: Springer Science+Business Media.

- van der Wal, C. N., and M. Irrmischer. 2015. “Myndplay: Measuring Attention Regulation with Single Dry Electrode Brain Computer Interface.” In BIH 2015, edited by Y. Guo, 192–201, LNAI 9250. Accessed January 8 2016. doi:10.1007/978-3-319-23344-4_19.

- Willis, H. 2009. “Real Time Live: Cinema as Performance.” In AfterImage 37 (1): 11–15.

- Woolf, V. 2002. On Being Ill. Ashfield: Paris Press.

- Yourcenar, M. 1974. Feux. Paris: Editions Gallimard. (in French).

- Zander, T. O., C. Kothe, S. Welke, and M. Roetting. 2008. “Enhancing Human-Machine Systems with Secondary Input from Passive Brain-Computer Interfaces.” In Proceedings of the 4th international BCI workshop & training course, Graz, Austria. Graz: Graz University of Technology Publishing House.

- Zioga, P., P. Chapman, M. Ma, and F. Pollick. 2014. “A Wireless Future: Performance art, Interaction and the Brain-Computer Interfaces.” In Proceedings of ICLI 2014 – INTER-FACE: International Conference on Live Interfaces, edited by A. Sa, M. Carvalhais, and A. McLean, 220–230. Lisbon: Porto University, CECL & CESEM (NOVA University), MITPL (University of Sussex).

- Zioga, P., P. Chapman, M. Ma, and F. Pollick. 2015. “A Hypothesis of Brain-to-Brain Coupling in Interactive New Media Art and Games Using Brain-Computer Interfaces.” In Serious Games First Joint International Conference, JCSG 2015, Huddersfield, UK, June 3–4, 2015, Proceedings, edited by S. Göbel, M. Ma, J. Baalsrud Hauge, M. F. Oliveira, J. Wiemeyer, and V. Wendel, 103–113. Springer-Verlag Berlin Heidelberg. doi:10.1007/978-3-319-19126-3_9.