Abstract

Mental models are knowledge structures employed by humans to describe, explain, and predict the world around them. Shared Mental Models (SMMs) occur in teams whose members have similar mental models of their task and of the team itself. Research on human teaming has linked SMM quality to improved team performance. Applied understanding of SMMs should lead to improvements in human-AI teaming. Yet, it remains unclear how the SMM construct may differ in teams of human and AI agents, how and under what conditions such SMMs form, and how they should be quantified. This paper presents a review of SMMs and the associated literature, including their definition, measurement, and relation to other concepts. A synthesized conceptual model is proposed for the application of SMM literature to the human-AI setting. Several areas of AI research are identified and reviewed that are highly relevant to SMMs in human-AI teaming but which have not been discussed via a common vernacular. A summary of design considerations to support future experiments regarding Human-AI SMMs is presented. We find that while current research has made significant progress, a lack of consistency in terms and of effective means for measuring Human-AI SMMs currently impedes realization of the concept.

Relevance to human factors/ergonomics theory

This work summarizes the foundational research on the role of mental models and shared mental models in human teaming and explores the challenges of its application to teams of human and AI agents, which is currently an active topic of research both in the fields of psychology, human factors, cognitive engineering and human-robot interaction. A rich theoretical understanding herein will lead to improvements in the development of human-autonomy teaming technologies and collaboration protocols by allowing a critical aspect of teaming in the joint human-AI system to be understood in its historical context. The inclusion of a significant review of explainable AI will also enable researchers not associated with the development of AI systems to integrate and build upon the significant efforts of colleagues in HRI and AI more generally.

1. Introduction

Mental model theory of an individual, initially put forward by Johnson-Laird in the early 1980s (Johnson-Laird Citation1983, Citation1980), is one attempt at describing an important aspect of human cognition: the way humans interpret and interact with an engineered environment. Mental models are generally defined as the abstract long-term knowledge structures humans employ to describe, explain, and predict the world around them (Converse, Cannon-Bowers, and Salas Citation1993; Van den Bossche et al. Citation2011; Johnson-Laird Citation1983; Rouse and Morris Citation1986; Scheutz, DeLoach, and Adams Citation2017; Norman Citation1987; Johnson-Laird Citation1980). In the 50 years since the term’s inception, mental models have been the subject of significant scientific consideration and the basis for many theoretical and practical contributions to human-automation interaction, human-human teams, and human judgment and decision-making more generally.

Shared mental models (SMMs) are an extension of mental model theory, proposed by Converse, Salas, and Cannon-Bowers as a paradigm to study team training (Converse, Cannon-Bowers, and Salas Citation1993). The central idea of SMMs is that when individual team members’ mental models align—when they have similar understandings of their shared task and each other’s role in it—then this ‘shared’ mental model will allow the team to perform better because they will be able to more accurately predict the needs and behaviors of their teammates. The results of study on human-human SMMs have revealed that teams are indeed more effective when they are able to establish and maintain an SMM, see §2.

With the advent of advanced automation bordering on autonomy from advances in fields such as control theory, machine learning (ML), and artificial intelligence, human mental models are once again of interest as humans endeavor to create effective teams that incorporate automation with capabilities similar to those of a human teammate. However, unlike human-human teams, Human-AI teamsFootnote1 require differential study to fully capture the necessary bi-directional relationship—humans creating MMs of their team, and artificial agents creating MMs of their team— to fully create and understand Human-AI SMMs.

This paper presents a literature review of Human-AI SMMs culminating in a conceptual model. The paper fills the gap in the current literature on SMMs for Human-AI teams, which is currently disjointed, as researchers from diverse fields (psychology, cognitive science, robotics, human-robot interaction, cognitive engineering, human-computer interaction, etc.) investigate similar constructs using different terminology and methods and publish in distinct literatures. The paper seeks to lay the groundwork for future research on Human-AI SMMs by (1) reviewing and summarizing existing work on SMMs in humans, including their definitions, elicitation, measurement, and development; (2) defining Human-AI SMMs and situating them among the larger field of similar constructs; (3) identifying challenges facing future research; and (4) identifying new, developing, or previously unconsidered areas of research that may help to address these challenges. The scope of this review is focused on engineered socio-technical systems in which at least one human and one artificial agent work together in a team and seek to achieve common goals. We emphasize the definitions of the various constructs, conceptual and computational frameworks associated with SMMs, measurements and metrics used to measure SMMs, and open questions that remain.

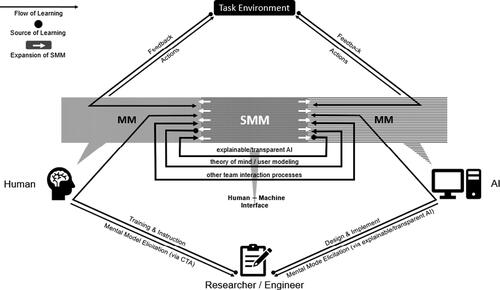

This paper is divided into eight sections. In the next section, §2, we define and summarize mental models in the context of individual humans, including how they are elicited. The third section, §3, discusses SMMs for human-human teams, including metrics, research on their development, and related concepts from our literature review. The fourth section, §4, expands SMMs to human-AI teams. Here we present a conceptual model of Human-AI SMMs based on our synthesis of the literature (see ) and discuss the added difficulties of applying SMM theory to artificial agents. §5 covers some existing or emerging computational techniques for modeling human teammates, a key component of SMMs. §6 provides an overview of the explainable AI movement, which can be seen as intricately linked to the creation and maintenance of both human and artificial components of Human-AI SMMs. The penultimate section, §7, presents considerations for the design and study of Human-AI SMMs. We conclude in section §8.

2. Individual mental models & related concepts

Something like the idea of mental models had been around for decades before mental model theory was coined and popularized by Johnson-Laird in the 1980s. Kenneth Craik, for example, conceived of cognition as involving ‘small-scale models’ of reality as early as 1943 (Craik Citation1943). Jay W. Forrester, the father of system dynamics, speaks of ‘mental images’ and explains that ‘a mental image is a model’ and that ‘all of our decisions are taken on the basis of models’ (Forrester Citation1971). Forrester goes so far as to refer to these mental images as mental models and eloquently describes the concept as follows: ‘One does not have a city or a government or a country in his head. He has only selected concepts and relationships which he uses to represent the real system.’ Other authors referred to this idea as internal representations or internal models before Johnson-Laird (Citation1980), and similar concepts going by different names have also been described and explored in fields such as psychology, computer science, military studies, manual and supervisory control, and learning science. In this section, we review the historical definition of mental models and situate them with similar concepts from neighboring fields and explain their relationship, where applicable. We also discuss the subject of mental model elicitation, including methods and the difficulties involved.

Mental models are simplified system descriptions, employed by humans when systems, particularly modern socio-technical systems, are too complex for humans to understand in full detail. In the process of mental model creation, humans tend to maximize system simplification while minimizing forgone performance. Mental models are ‘partial abstractions that rely on domain concepts and principles’ (Mueller et al. Citation2019, 24). They enable understanding and control of systems at a fraction of the processing cost required by more comprehensive analytical strategies (Norman Citation1987).

Despite the goal of maintaining accuracy, some information is necessarily lost in the abstraction process. Norman explains that mental models often contain partial domain descriptions, workflow shortcuts, large areas of missing information, and vast amounts of uncertainty (Norman Citation1987). Many efforts to characterize expert mental models in system controls applications have ‘resulted in hypothesized mental models that differ systematically from the “true” model of the system involved’ (Rouse and Morris Citation1986; Rouse Citation1977; Van Bussel Citation1980; Van Heusden Citation1980; Jagacinski and Miller Citation1978). For example, humans often adhere to superstitious workflows that consistently produce positive results without fully grasping the system dynamics informing the utility of their strategies - Norman gives the example of users ‘clearing’ a calculator many more times than necessary. The knowledge structures underlying human cognition in these cases are practical, but far from accurate.

One way to enjoy the efficiencies of simplification while avoiding its consequences is to model the system at multiple levels of abstraction and use only the version with enough information for the task at hand. Rasmussen’s work on cognitive task analysis (CTA) and work domain abstraction/decomposition suggests that humans engage in precisely such a process (Rasmussen Citation1979). A large repertoire of useful system representations at various levels of abstraction provides a solid basis from which system management strategies can be generated spontaneously, without needing to consider the whole of system complexity (Rasmussen Citation1979). These mental models allow domain practitioners to ‘estimate the state of the system, develop and adopt control strategies, select proper control actions, determine whether or not actions led to desired results, and understand unexpected phenomena that occur as the task progresses’ (Veldhuyzen and Stassen Citation1977).

Mental models are context-specific and/or context-sensitive (Converse, Cannon-Bowers, and Salas Citation1993; Scheutz, DeLoach, and Adams Citation2017; Rouse and Morris Citation1986). They are created to facilitate task completion in a given work domain—entirely different mental models are used in economic forecasting, for example, versus those employed in air-to-air combat.

Mental models are dynamic but slowly evolving, and they are generally considered to exist in long-term memory. As domain practitioners learn more about the work domain and experience a wider variety of off-normal operating conditions, mental models are updated to account for new information, and are thus also subject to considerable individual differences. How the content and structure of a mental model develop is actively being investigated, with some concluding that they develop gradually, with potential for greater rates of change occurring in less experienced operators (Endsley Citation2016), and others believing that they develop in ‘spurts’, only changing when a new model is presented to replace them (Mueller et al. Citation2019, 74; Einhorn and Hogarth Citation1986).

2.1. Mental model elicitation

Mental model elicitation is the process of capturing a person’s mental model in a form that can examined by others. This can be used to understand a complex sociotechnical process, evaluate the design of a system, or provide insight about how mental models develop or change in certain conditions.

Formally, the mental model that is measured is not the same as the mental model that actually exists. Norman distinguishes between four things: (1) A system, (2) an engineer’s model of the system (i.e., the design documents), (3) a user’s mental model of the system, and (4) a researcher’s mental model of the user’s mental model (Norman Citation1987). The resulting representation - a model of the model - will take different forms depending on the methods used and the needs of the researcher, and it may be subjective or objective, qualitative or quantitative. The methods used to elicit mental models overlap strongly with the field of cognitive task analysis (Bisantz and Roth Citation2007) and can be subdivided into observational methods, interviews, surveys, process tracing, and conceptual methods (Cooke et al. Citation2000). These tactics may be used individually or in combination.

Observation consists simply of watching an operator’s performance and thereby drawing conclusions about their reasoning. This could take a number of forms, including directly monitoring the operator in real time from a distance, embedding an undercover observer into the work domain, or filming the performance of a task (Bisantz and Roth Citation2007). Observational techniques offer intimate detail of how a task is performed; however, without adequate precautions, many methods risk interfering with normal operation. This approach is straightforward and can be useful, but it is sometimes criticized as subjective because of the potential biases of the observer and for producing largely qualitative information (Bisantz and Roth Citation2007; Cooke et al. Citation2000).

Interviews attempt to extract a mental model through conversation with an operator and can be classified as structured, unstructured, or semi-structured (Cooke et al. Citation2000; Bisantz and Roth Citation2007). Unstructured interviews are free-form and give the interviewer the opportunity to probe domain practitioners comprehensively on any areas of interest, such as asking for narrations of critical events or any idiosyncrasies of the person’s performance. Structured interviews aim to be more rigorous or objective by presenting the same questions in the same order to multiple people, enabling a degree of quantifiability. Nonetheless, interviews are still susceptible to biases since they depend on verbal questions and responses, as well as the interviewee’s introspection.

Surveys are quizzes that attempt to capture a mental model in a specific set of quantifiable questions. They have a broad variety of uses, including characterizing a practitioner’s model of a task in general or investigating how a specific performance evolved. Common examples include Likert scales and subject-matter aptitude tests. Surveys can be sufficient in isolation, but they can also be used to supplement other elicitation methods such as observation. Surveys are closely related to structured interviews, only given asynchronously and with a finite selection of answers. In this way, they lose some expressive power but produce more quantifiable results—necessary for many applications. Both surveys and interviews can be used to quiz the operator’s depth of understanding of the environment or to provide insight to how the operator thinks via introspection. Sarter and Woods note in particular the usefulness of mid-operation surveys for measuring situation awareness, a closely related concept to mental models (see below) (Sarter and Woods Citation1991). A specific example of this for simulated environments is Endsley’s Situation Awareness Global Assessment Technique (SAGAT), which occasionally halts a simulation to quiz the user about the state of the world (Endsley Citation1995).

Process tracing can be used to analyze user mental models by collecting and processing electronic data directly from the work domain, including user behavioral records, system state information, verbal reports from the user, and eye movements (Patrick and James Citation2004). These methods can be advantageous because they provide direct, quantitative information that is not subject to the biases of introspection (Cooke et al. Citation2000). An example of a process tracing success is Fan and Yen’s development of a Hidden Markov Model for quantifying cognitive load based on secondary task performance (Fan and Yen Citation2011). However, in many cases, process tracing has not produced such conclusive results. The use of eye tracking in particular has proven difficult to directly correlate with the internal cognition that causes the observed motions.

Conceptual methods generate spatial representations of key concepts in the work domain, along with the constraints and ‘shunts’ that link them together in the mind of the practitioner (Cooke et al. Citation2000; Bisantz and Roth Citation2007). Examples include concept mapping, cluster analysis, multidimensional scaling, card sorting, and Pathfinder (Mohammed, Klimoski, and Rentsch Citation2000; Cooke et al. Citation2000). Conceptual analysis elicitation methods are popular for mental model elicitation, as mental models are often thought to exist in a pictorial or image-like form (Rouse and Morris Citation1986). Once a conceptual map of the work domain has been developed, it can be cross checked against a global reference or the concept map of a teammate. Conceptual methods are the most common among the methods described herein (Lim and Klein Citation2006; Mathieu et al. Citation2000; Van den Bossche et al. Citation2011; Marks, Zaccaro, and Mathieu Citation2000; Resick et al. Citation2010).

A central difficulty of measuring mental models is that the researcher can only measure what they think to ask. This means that unconventional, emergent behaviors are likely to be difficult or impossible to capture. It also requires the researcher themselves to be familiar with the system being modeled. (This familiarity might result from some kind of cognitive task analysis of the work domain (Naikar Citation2005).) Additionally, it must be noted that when measuring mental models, the mere act of asking questions may influence the models’ future development by directing the subject’s attention to previously unconsidered subjects or by restricting it to specific ideas proposed by the question. For example, ‘how fast can the car accelerate?’ suggests this is an important quantity to consider, whereas ‘to what degree is the AI trustworthy?’ may influence the operator to be more skeptical.

Mental model elicitation remains an inexact discipline. All available methods are vulnerable to various biases and confounding variables. French et al. succinctly discuss these difficulties in their review of trust in automation, drawing a connection between trust and mental models as ‘hypothetical latent construct[s] which cannot be directly observed or measured but only inferred’ (French, Duenser, and Heathcote Citation2018, 49-53). Ultimately, there are two ways to measure such a construct: by drawing inferences from observations of an operator’s behavior, or by introspection on the part of the operator—and neither affords a direct view of the underlying construct. Methods relying on introspection, including surveys, interviews, and conceptual methods, provide some insight into the operator’s thought processes; however, it is generally acknowledged that introspection is not fully reliable. Additionally, all such methods are limited by how the participant interprets the questions posed to them. By contrast, behavioral methods, including observation and process tracing, eliminate the need to ask subjective questions but are confounded by the many other factors that may influence behavioral outcomes, including workload, stress, and fatigue.

2.2. Related concepts

Mental models’ qualities place them alongside at least three other constructs: knowledge representation, schema, and situation awareness.

2.2.1. Knowledge representation

Knowledge Representation is broad term prevalent in both psychology and AI literature that concerns concrete models of the information stored in minds. In psychology (Rumelhart and Ortony Citation1977), the focus is on deriving these structures as naturally found in humans, and a subset of this work includes efforts to externalize people’s mental models. AI researchers have also used this term in the context of producing ‘knowledge-based AI’ systems that mimic an information processing model of the human mind—systems that could be considered ‘artificial mental models.’

2.2.2. Schema

Rumelhart and Ortony (Citation1977) define schema as ‘data structures for representing the generic concepts stored in memory,’ which can represent objects, situations, events, actions, sequences, and relationships. Schema (sometimes pluralized ‘schemata’) are the subject of a vast body of literature in the psychology and cognitive science fields (McVee, Dunsmore, and Gavelek Citation2005; Stein Citation1992).

Four essential characteristics of schema are given (Rumelhart and Ortony Citation1977):

Schema have variables

Schema can embed one within the other

Schema represent generic concepts which, taken altogether, vary in their levels of abstraction

Schema represent knowledge rather than definitions

Schema has been discussed widely and their precise definition is at times ambiguous. Some authors view schema as functionally identical to mental models, while Converse et al. present schema as static data structures (as opposed to mental models, which both organize data and can be ‘run’ to make predictions) (Converse, Cannon-Bowers, and Salas Citation1993, 227). One clear difference is their domain of application: Mental model theory has primarily been developed in relation to engineered, socio-technical systems, whereas schema theory has been developed in more naturalistic domains: language, learning, social concepts, etc. The idea of schema predates mental models (Converse, Cannon-Bowers, and Salas Citation1993; Endsley Citation2016).

Scripts are a type of schema that organize procedural knowledge (Dionne et al. Citation2010). They are prototypical action sequences that allow activities to be chunked into hierarchically larger abstractions (Schank and Abelson Citation1977).

2.2.3. Situation awareness

Situation awareness (SA) refers to the completeness of one’s knowledge of the facts present in a given situation. This state of knowledge arises from a combination of working memory, the ability to perceive information from the environment, and the mental models necessary to make accurate inferences from that information. The concept of SA, originally put forth by Mica Endsley, has been thoroughly studied from the perspective of air-to-air combat and military command and control. The general thrust of this subfield has been that a large proportion of accidents in critical systems can be attributed to a loss of situation awareness.

Endsley (Endsley Citation2004, 13-18) defines SA as existing at three levels, with awareness at each level being necessary for the formation of the next:

Level 1: Perception of Elements in the Environment. Deals with raw information inputs, their accuracy, and the ability of the human to access them and devote sufficient attention to each.

Level 2: Comprehension of the Current Situation. Refers to the human’s ability to understand the implications of the raw inputs—the ability to recognize patterns and draw inferences.

Level 3: Projection of Future Status. Refers to the ability to anticipate how the system will evolve in the near future, given its current state.

What may blur the distinction between SA and mental models is Endsley’s formal definition of SA as ‘the perception of the elements in the environment within a volume of time and space, the comprehension of their meaning, and the projection of their status in the near future’ (Gilson et al. Citation1994; Wickens Citation2008; Endsley Citation2004; Endsley Citation2016). Taken on its own, this is very similar to the common definition of mental models as that which is used to ‘describe, explain, and predict’ the environment. The key difference is that mental models are something that exists in long-term memory, regardless of present context, whereas situation awareness arises within the context of a specific situation and results from the application of mental models to stimuli and working memory. Mental models aid situation awareness by providing expectations about the system, which guides attention, provides ‘default’ information, and allows comprehension and prediction to be done ‘without straining working memory’s capabilities’ (Endsley Citation2016; National Academies of Sciences Engineering and Medicine Citation2022).

This same relationship underlies one of the key inconsistencies across all the following literature - the distinction between models and the states of those models - with some studies claiming to have ascertained one’s mental model by deducing the current state of their short-term knowledge. We take the view in this paper that a model is, in all cases, both long-term and functional (it can be ‘run’) (see also Endsley Citation2004, 21). States, like situation awareness, are transient and informed by their underlying models. This close relationship makes measuring one useful for, but not equivalent to, measuring the other.

2.2.4. Summary

All of the preceding terms describe similar phenomena from different subfields of the human sciences. Mental model theory is a perspective drawing largely from seminal works in psychology, applied most commonly in the context of engineered socio-technical systems. Although psychologists were initially interested in the implications of these knowledge structures in a single human, a newer branch of research has focused on the interaction of mental models in groups and work teams (i.e., SMMs). We describe these and related constructs in the next section.

3. Shared mental models (SMMs) & related concepts

SMMs are an extension of mental model theory, proposed and popularized by Converse, Salas, and Cannon-Bowers as a paradigm to study training practices for teams (Converse, Cannon-Bowers, and Salas Citation1993). The core hypothesis is that if team members have similar mental models of their shared task and of each other, then they are able to accurately predict their teammates’ needs and behaviors. This facilitates anticipatory behavior and, in turn, increases team performance. Research has investigated how SMMs can be fostered through training, which models should be shared, and how much overlap is appropriate. It has also investigated how degrees of mental model sharing can be evaluated, to test the hypothesis that SMMs lead to improved performance.

3.1. Structure and dynamics of SMMs

A team is defined in this context as a group of individuals with differing capabilities and responsibilities working cooperatively toward a shared goal. Mental effort in a team is spent on at least two functions: interaction with the task environment, and coordination with the other team members, which Converse et al. term ‘task work’ and ‘team work’ (Converse, Cannon-Bowers, and Salas Citation1993). Since they deal with distinct systems (the task environment and the team), these functions use separate mental models. Converse et al. propose as many as four models per individual:

The Task Model — the task, procedures, possible outcomes, and how to handle them; ‘what is to be done’

The Equipment Model — the technical systems involved in performing a task and how they work; ‘how to do it’

The Team Model — the tendencies, beliefs, personalities, etc. of one’s teammates; ‘what are my teammates like’

The Team Interaction Model — the structure of the team, its roles, and the modes, patterns, and frequency of communication; ‘what do we expect of each other’

Subsequent literature (e.g., Scheutz, DeLoach, and Adams Citation2017)) often reduces this taxonomy to two: the Task Model and Team Model.

An SMM is believed to benefit a team by ‘[enabling members] to form accurate explanations and expectations for the task, and, in turn, to coordinate their actions and adapt their behavior to demands of the task and other team members’ (Converse, Cannon-Bowers, and Salas Citation1993, 228). The process by which SMMs form is not fully understood, though there is some consensus. Team members begin with highly variable prior mental models, which may depend on their past experiences, education, socio-economic background, or individual personality (Converse, Cannon-Bowers, and Salas Citation1993; Schank and Abelson Citation1977). Through training, practice with the task, and interaction with their teammates, they gradually form mental models that enable accurate prediction of team and task behaviors. They may also adjust their behaviors to meet the expectations of their teammates. The exact interaction and learning processes involved, what training methods are most effective, and the rates at which SMMs develop and degrade are primary subjects of research on the topic. We detail some of the prominent works in this area below in §3.4.

3.2. Eliciting SMMs

The most common way to measure an SMM is to elicit mental models of team members individually and derive from these an overall metric of the team, as described in §3.3. However, other approaches examine the team as a whole, analyzing the emergent team knowledge structure as a distinct entity. Cooke argues that the latter approach may be the most important for fostering team performance but notes that very little research has done so (Cooke et al. Citation2004). Based on our analysis, this still seems to be the case, 18 years later.

The holistic approach advocated by Cooke calls for applying elicitation techniques to the team as a group (Cooke et al. Citation2004). For example, if questionnaires are employed, then the team would work together to select their collective responses; if conceptual methods are employed, then the team would cooperatively construct their domain representation. Such methods would help ensure that the same group dynamics that guide task completion (i.e., effects of team member personalities, leadership abilities, communication styles) are also present in SMM elicitation (Cooke et al. Citation2004; Dionne et al. Citation2010).

It is important to note that many experiments purporting to study SMMs do not elicit or apply any metric to them whatsoever. Rather, they may make a qualitative assertion of the presence of an SMM based on a specific intervention, such as cross-training, or some other structuring of the task environment. For example, one study equates the presence of shared displays with shared task models, and the reading of teammate job description with shared team models, but it does not explicitly attempt to measure the resultant or anticipated SMM (Bolstad and Endsley Citation1999).

The elicitation of MMs and the study of SMMs has predominantly been considered from the perspective of establishing a theoretical link between mental model sharedness and improved team performance. As of now, these elicitation methods are not being used to inform the conveyance or convergence of mental models in the human-human or human-AI domain.

3.3. Metrics for SMMs

Though ample work has been done on the elicitation of SMMs, relatively little literature exists on the key problem of synthesizing this information into salient metrics. Most authors agree that mental models are best measured in terms of the the expectations they produce, rather than their underlying representation because it is the expectations themselves that are thought to lead to improved performance (Converse, Cannon-Bowers, and Salas Citation1993; DeChurch and Mesmer-Magnus Citation2010; Jonker, Riemsdijk, and Vermeulen Citation2010; Cooke et al. Citation2000). Techniques to elicit these expectations run the gamut from casual interviews to structured surveys, but all literature on quantitative metrics assumes this information can be ultimately distilled to a discrete set of questions and answers. Examples of the questions involved might include ‘Is team member A capable of X?’, ‘What is the maximum acceptable speed of the vehicle?’, ‘When is it appropriate to send an email?’, or ‘Does team member B know Y?’ Note that the questions need not have quantitative or bounded answers; they may be arbitrary sentences, provided that there is a sound way to test the equivalence of two answers (Jonker, Riemsdijk, and Vermeulen Citation2010).

Nancy Cooke’s seminal work on measuring team knowledge from 2000 is still relevant today, and it has proven to provide the most valuable insight on this subject (Cooke et al. Citation2000). Her work suggests that once data have been elicited about each team member’s mental model, or about the team holistically, at least six kinds of metrics can be used to relate SMMs to task performance: similarity, accuracy, heterogeneous accuracy, inter-positional accuracy, knowledge distribution, and perceived mutual understanding (Cooke et al. Citation2000; Burtscher and Oostlander Citation2019).

3.3.1. Similarity

Similarity refers to the extent to which team mental models are shared (i.e., are equivalent to each other). When using surveys for mental model elicitation, this may refer to the number or percentage of questions answered identically by a pair of teammates. For a concept map, it could refer to the number or percentage of links between domain concepts shared by a pair of teammates (Cooke et al. Citation2000).

It has been repeatedly shown that similarity alone in team mental models does lead to improved team performance: similarity facilitates shared expectations, which results in improved coordination. This appears to apply to both task models and team models. In a field study of 71 military combat teams, conceptual methods demonstrated that both taskwork and teamwork similarity predicted team performance (Lim and Klein Citation2006). Conceptual methods, supported by some qualitative assertions, have been used to the same end in the domain of simulated air-to-air combat (Mathieu et al. Citation2000). Survey techniques and conceptual methods have also been used to show that similarity of task mental models is correlated with team effectiveness in the context of business simulations (Van den Bossche et al. Citation2011).

3.3.2. Accuracy

Cooke writes, ‘all team members could have similar knowledge and they could all be wrong’ (Cooke et al. Citation2000, 164). Accuracy measures aim to account for this by comparing each team member’s task model to some ‘correct’ baseline. This is common with survey techniques, in which accuracy is the number or percentage of questions answered correctly. For conceptual methods, accuracy is the number or percentage of correctly identified domain concepts, as well as the links and constraints connecting them. Overall team accuracy is then measured as some average of these individual scores (Cooke et al. Citation2004).

Both qualitative and conceptual methods for team mental model elicitation have been used to show that accurate task models correlate with team effectiveness in military combat operations (Marks, Zaccaro, and Mathieu Citation2000; Lim and Klein Citation2006). Conceptual methods have also been used to demonstrate a positive correlation between mental model accuracy and team effectiveness in a simulated search and capture task domain (Resick et al. Citation2010). Though mental model accuracy does appear to play a role in teaming, some studies have found only a marginally significant effect (Webber et al. Citation2000).

Note that the correct baseline may sometimes be hard to identify. Jonker et al. identify at least two kinds of accuracy: what they call ‘system accuracy’ (a theoretically ideal mental model which may be hard to derive) and ‘expert accuracy’ (comparisons to an already-trained human expert) (Jonker, Riemsdijk, and Vermeulen Citation2010).

3.3.3. Metrics accounting for specialization

Specialization is a key aspect of teams, as defined by the field. For fairly complex systems, it is unreasonable to expect any team member to have an accurate mental model of the whole task. Thus, Cooke discusses several additional measures to capture appropriate knowledge for specialized roles. Though these metrics offer compelling theoretical improvements over simple similarity and accuracy measures, they appear to be less well represented in the literature.

Heterogeneous Accuracy tests each team member only on knowledge specific to their role; the team is then evaluated as an aggregate of team members’ individual scores.

Inter-positional Accuracy is the opposite of heterogeneous accuracy: it tests team members on the knowledge specific to the roles of the other members. In a study analyzing teamwork from domains as varied as avionics troubleshooting to undergraduate studies, Cooke finds that teams often become less specialized and more inter-positionally accurate with time. Moreover, this increase in inter-positional accuracy is associated with an increase in team performance (Cooke et al. Citation1998).

Knowledge Distribution is similar to Accuracy but instead measures the degree to which each piece of important information is known by at least one teammate (see below, §3.5.6).

3.3.4. Perceived mutual understanding

Thus far, the metrics discussed are concerned only with the objective characterization of team member knowledge. Other authors, however, emphasize the importance of perception—what team members think about the accuracy and similarity of team knowledge (Burtscher and Oostlander Citation2019; Rentsch and Mot Citation2012). Perceived mutual understanding (PMU) ‘refers to subjective beliefs about similarities between individuals’ as opposed to objective knowledge of their existence, and it has also been correlated with team performance (Burtscher and Oostlander Citation2019).

3.3.5. Similarity vs. accuracy

There is some inconsistency in the literature regarding which metrics are appropriate for SMMs. Cooke et al. detail multiple types of accuracy measures and highlight their relevance, and several studies use them as their basis; however, although none dispute its relevance, several authors exclude accuracy from their definitions of SMMs. In Jonker et al.’s conceptual analysis, SMMs are defined explicitly in terms of the questions two models can answer and the extent to which their answers agree, eschewing accuracy measures on the basis that they do not necessarily involve comparisons between teammates (Jonker, Riemsdijk, and Vermeulen Citation2010). DeChurch and Mesmer-Magnus likewise exclude studies that do not explicitly compare teammates’ mental models to each other from their meta-analysis of SMM measurement (DeChurch and Mesmer-Magnus Citation2010).

Intuitively, similarity alone can benefit a team in any situation where an exact protocol is unimportant, so long as teammates can consistently predict each other—for example, the convention of passing on the right in hallways, or assigning a common name to a certain concept. However, there will always be aspects of a task for which there is an objective, right answer, such as the flight characteristics of a plane or the adherence to laws or procedures. Consequently, there are good reasons one may measure the accuracy of mental models alongside their similarity, whether one considers this an element of the ‘shared’ mental model or not.

3.4. Development and maintenance of SMMs in human teams

The previous sections discuss what SMMs are and how they can be characterized, but where do they come from? What mechanisms allow them to form? How can we foster their development and prevent them from degrading? Substantially less research addresses these questions (Bierhals et al. Citation2007), but they are key to our goal of fostering SMMs between humans and machines. In this section, we summarize the relevant literature on the factors and social processes that enable human teams to develop and maintain SMMs.

3.4.1. Development

The nature of SMM development is fundamentally a question of learning. Each human is unique: they approach life with their own mental models of the world. Therefore, for team members’ models to become shared, change must occur to establish common ground. This in turn requires interaction with other team members; left in isolation, an individual’s models will remain stagnant (Van den Bossche et al. Citation2011). Mental model development has been studied through various lenses, including verbal and nonverbal communication, trust, motivation, the presence or absence of shared visual representations of the task environment, and a specific set of processes termed ‘team learning behaviors.’

Verbal communication is the most obvious form of team member interaction that impacts the development of SMMs. The two are mutually reinforcing: communication can lead to the formation of better SMMs, and the existence of better SMMs can also facilitate better communication (Bierhals et al. Citation2007). This will result in either a positive or negative feedback loop: a good SMM will result in constructive communication which will result in a better SMM, and vice versa.

Many studies agree that, while verbal communication is important, far more is said nonverbally—as much as 93 percent of all communication, according to Mehrabian’s mid-century research (Mehrabian Citation1972) (though this exact figure has been disputed (Burgoon, Guerrero, and Manusov Citation2016)). This includes facial expressions, posture, hand gestures, and tone (Burgoon, Manusov, and Guerrero Citation2021). Bierhals et al. Citation2007 study of engineering design teams, and Hanna and Richards Citation2018 study of humans and intelligent virtual agents (IVAs) explore the effects of communication on SMM development (Bierhals et al. Citation2007; Hanna and Richards Citation2018). Both verbal and nonverbal aspects of communication are found to significantly influence development of both shared taskwork and teamwork models. Interestingly, nonverbal communication seems to be more important in the development of taskwork SMMs, whereas verbal communication plays a larger role in development of teamwork models (Hanna and Richards Citation2018).

Beyond communication, higher order social and personal dynamics are at play. These include personal motivation, commitment to task completion, mutual trust, and other emotional processes such as unresolved interpersonal conflicts. Unfortunately, there is a paucity of research concerning the impact of such factors on SMMs. Bierhals et al. make reference to ‘motivational and emotional processes’ to account for peculiarities in their results, but their experiments are not designed to specifically measure these factors (Bierhals et al. Citation2007). An exception is Hanna and Richards’ study, which directly correlates the effects of trust and commitment to SMM development in teams of humans and IVAs (Hanna and Richards Citation2018). (However, these measurements were made only via subjective self-assessments of SMM quality.) Both better shared teamwork and taskwork mental models are found to positively correlate with human trust in their artificial teammate; the effect of sharedness in the taskwork model is found to be slightly stronger than that of the teamwork model. In addition, teammate trust is found to significantly correlate with task commitment, which is found to significantly correlate with improved team performance. From what little research has been done on the topic, it is clear that higher order social and personal dynamics are fundamentally intertwined with SMM development. Further research is warranted to fully discern the nature of this relationship.

Some studies suggest that mutual interaction with shared artifacts, such as shared visual representations of the task environment, can be used to facilitate SMM convergence. Swaab et al.’s study on multiparty negotiation support demonstrates that visualization support facilitates the development of SMMs among negotiating parties (Swaab et al. Citation2002). Bolstad and Endsley’s study on the use of SMMs and shared displays for enhancing team situation awareness also suggests that shared displays help to establish better SMMs, and correlates the presence of such shared artifacts with improved team performance (Bolstad and Endsley Citation1999). These findings support the common assertion that mental models are frequently pictorial or image-like, rather than script-like in a language processing sense or symbolic in a list-processing sense (Rouse and Morris Citation1986). By providing an accessible domain conceptualization in the form of a shared visual display, it is intuitive that team mental models would converge around the available scaffolding.

Scaffolding is also insightful with respect to the role of prior knowledge in mental model development. The term ‘scaffolding’ as a metaphor for the nature of constructive learning patterns originated with Wood, Bruner and Ross (Wood, Bruner, and Ross Citation1976) in the mid 1970s and has resonated with educators ever since (Hammond Citation2001). The basic idea is that educators add support for new ideas around a learner’s pre-existing knowledge structures. As the learner gains confidence with the new ideas, assistance or ‘scaffolding’ can be removed and the learning process repeated. An educator’s ability to add new information to a learner’s knowledge base is largely dependent on the quality of the learners pre-existing schema. Mental models for system control, human-robot interaction, military operations, etc. function in a similar manner. If pre-existing mental models are accurate and robust, they provide a firm starting point for learning new concepts and interpreting new material (Converse, Cannon-Bowers, and Salas Citation1993; Rouse and Morris Citation1986). However, if they are inaccurate, containing information gaps and incoherencies, research suggests that they can be difficult to correct (Converse, Cannon-Bowers, and Salas Citation1993; Rouse and Morris Citation1986). The process of SMM development is thus highly dependent on the experience, education, socio-cultural background, and moreover, pre-existing mental models of each team member. However, despite the apparent importance of team member prior knowledge and experience level, comprehensive analysis of the effects of these factors with regard to SMM development in human-agent teams has not yet been performed.

Team learning behaviors—broadly categorized as construction, collaborative construction (co-construction), and constructive conflict (Van den Bossche et al. Citation2011)—are a convenient way of mapping common behaviors seen in teams to various levels of SMM development. They are formally defined in the literature as ‘activities carried out by team members through which a team obtains and processes data that allow it to adapt and improve’ (Edmondson Citation1999). Research on team learning behaviors is fundamentally informed by theory on negotiation of common ground, an idea originating in linguistics in 1989 (Clark and Schaefer Citation1989) and swiftly adapted and expounded on by subsequent learning science researchers (Beers et al. Citation2007). In theory on negotiation of common ground, communication is viewed as a negotiation, with the ultimate goal of establishing shared meaning or shared belief. Communicators engage in a cyclic process of sharing their mental model, verifying the representations set forth by other team members, clarifying articulated belief statements, accepting or rejecting presented ideas, and explicitly stating the final state of their mental model—whether changed or unchanged (Beers et al. Citation2007). Construction is the first stage of this process—namely, personal articulation of worldview (Beers et al. Citation2007).

Co-construction is the portion of the common ground negotiation process primarily concerned with the acceptance, rejection, or modification of ideas set forth by others (Baker Citation1994). The result of co-construction is that new ideas emerge from the team holistically that were not initially available to each team member (Van den Bossche et al. Citation2011). If team members accept new ideas and converge around a commonly held belief set, an SMM has been developed. Otherwise, a state of conflict exists.

Constructive conflict occurs when differences in interpretation arise between team members and are resolved by way of clarifications and arguments. Constructive conflict, unlike co-collaboration, was found by Bossche et al. to be significantly correlated with SMM development (Van den Bossche et al. Citation2011). Conflict shows that team members are engaging seriously with diverging viewpoints and making an active effort to reconcile their representations based on the most current information. Processing of this caliber may just be the very prerequisite for meaningful mental model evolution (Jeong and Chi Citation2007; Knippenberg, De Dreu, and Homan Citation2004).

3.4.2. Maintenance

Once an SMM has been established, it will be either maintained, be updated, or begin to degrade. Updating refers to the constant need to keep SMM information current and relevant in light of changing system dynamics, whereas maintenance and degradation refer to the fleeting nature of knowledge in human information processing. All three aspects will here be referred to collectively as maintenance. Much like with SMM development, SMM maintenance is largely governed by team member interaction.

In his 2018 PhD dissertation on SMMs, Singh suggests that SMMs are updated through four main processes: perception, communication, inference, and synchronization (Singh Citation2018). Perception refers to sensing the environment. If team members are in a position to detect the same changes in the environment, SMMs can be updated through perception alone. In most cases, however, communication plays a crucial role in mental model maintenance. Much like with SMM development, team members will communicate to engage in team learning behaviors to co-construct interpretations of evolving system dynamics, and thereby keep their SMM up to date (Van den Bossche et al. Citation2011). On a slightly deeper level, inference mechanisms will guide the actual interpretation of perceived system states and verification of communicated beliefs. Though Singh discusses synchronization as a fourth process (Singh Citation2018), it is debatable whether or not this is fundamentally different from communication. It may be helpful to view synchronization processes as a convenient subclass of communication processes, specifically geared toward maintaining SMM consistency.

Because SMM maintenance is an inherently social activity, higher order social and emotional dynamics such as trust, motivation, and commitment are crucial factors. Trust, for example, plays an essential role in communication. An agent’s level of trust in their teammate will directly correlate with the likelihood of adopting that teammate’s communicated beliefs (Singh Citation2018). Research also suggests a correlation between team member motivation for goal completion and SMM maintenance (Wang et al. Citation2013). This relationship is intuitive; up-to-date SMMs facilitate goal completion: teams that are highly motivated to complete the goal will also be motivated to maintain their SMM. Joint intention theory (Cohen and Levesque Citation1991) takes this idea one step further. The theory requires team members to be committed not only to goal completion, but also to informing other team members of important events. In other words, team members must be committed to working as a team. A group of individuals could all be highly motivated to achieve a goal, but if they are not also bought into the team concept, an SMM will likely never form—and if it does, it will degrade quickly.

Skill degradation and forgetfulness literature is insightful with respect to the nature of SMM deterioration. Multiple studies have shown that humans retain cognitive skills only for a finite period of time before substantial degradation ensues (Sitterley and Berge Citation1972; Volz Citation2018; Erber et al. Citation1996). This also applies to SMMs of teamwork and taskwork. To maintain shared teamwork models, teams should train together on a regular basis. Research suggests that teamwork cross-training, even when conducted outside of the conventional work domain, can facilitate SMM maintenance (McEwan et al. Citation2017; Hedges et al. Citation2019), though some training in the work domain should be retained to maintain shared taskwork models. Maintenance of shared taskwork models can also be aided by regular academic training sessions, as well as pre- or post-task briefings (Dionne et al. Citation2010; McComb Citation2007; Johnsen et al. Citation2017).

3.5. Related concepts

As with mental models, literature on SMMs contains many similar and overlapping terms, and certain terms differ among authors.

3.5.1. Team mental models

Team mental models and SMMs generally refer to the same idea: that effective teams form joint knowledge structures to realize performance gains (Cannon-Bowers and Salas Citation2001). Team mental models are defined in the early literature as ‘team members’ shared, organized understanding and mental representation of knowledge about key elements of the team’s relevant environment’ (Lim and Klein Citation2006; Mohammed and Dumville Citation2001; Klimoski and Mohammed Citation1994). Those same seminal works on Team Mental Models made frequent reference to foundational SMM literature and used the terms interchangeably (Mohammed and Dumville Citation2001; Klimoski and Mohammed Citation1994; Converse, Cannon-Bowers, and Salas Citation1993; Rouse and Morris Citation1986). Despite their fundamental similarity, subtle differences seem to have emerged in the way the terms are used. The ‘sharedness,’ for example, of SMMs is indicative of the early belief that similarity among team member mental models was the key determinant of team performance. Later research has demonstrated that additional factors are involved. The use of ‘team mental model’ de-emphasizes sharedness somewhat, and in turn attempts to place the focus on the study of collective knowledge structures that result from teaming (Cooke et al. Citation2000). Note that this term is also sometimes used by other authors as a reference to mental models of teams (team models or team interaction models).

3.5.2. Shared cognition

Shared cognition and SMM literature have common origins in the 1990s’ and early 2000s’ work of researchers such as Cannon-Bowers, Dumville, Mohammed, Klimoski, and Salas (Cooke et al. Citation2000; Converse, Cannon-Bowers, and Salas Citation1993; Klimoski and Mohammed Citation1994; Mohammed and Dumville Citation2001). Such authors sought to explain the fluidity of expert team decision-making and coordination, hypothesizing that similarity in team member cognitive processing enabled increased efficiency (Cannon-Bowers and Salas Citation2001). SMM theory is concerned specifically with the theorized knowledge structures underlying this process, whereas shared cognition focuses more broadly on the process itself.

3.5.3. Team knowledge

Team knowledge is a slight expansion upon the idea of team mental models to include the team’s model of their immediate context, or situation model (Cooke et al. Citation2000). The term was introduced and developed primarily by Nancy Cooke. The word choice for ‘team knowledge’ is specifically selected to specify the study of teams as opposed to dyads or work groups so as to avoid the ambiguity surrounding use of the word ‘shared’ and to focus study on the knowledge structures at play in teaming instead of broader cognitive processes (Cooke et al. Citation2000). Team situation models can be seen as more fleeting, situation-specific, dynamic, joint interpretations of the immediate evolving task context; in this way, it is unclear if or how they are distinct from ‘team situation awareness’, discussed below. Given the concession that SMMs play a primary role in situation interpretation (Cooke et al. Citation2000; Orasanu Citation1990), it is not clear how necessary it is to make this distinction. Substantial attention toward situation models is probably only necessary in fast-paced environments such as emergency response, air-to-air combat, or tactical military operations.

3.5.4. Team cognition

Team cognition takes as its premise that cognitive study can be performed holistically on teams and that the study of this emergent cognition is largely a function of team member interaction (Cooke et al. Citation2013). Though team cognition ‘encompasses the organized structures that support team members’ ability to acquire, distribute, store, and retrieve critical knowledge,’ it is more concerned with the processes than the structures themselves (Fernandez et al. Citation2017). Thus, SMMs can be viewed as the knowledge structures that facilitate team cognition. Cooke specifies team decision-making, team situation awareness, team knowledge (including team mental models and team situation models), and team perception as the basic building blocks of team cognition (Cooke et al. Citation2000).

3.5.5. Distributed cognition

Like shared cognition, distributed cognition suggests that cognitive processes are distributed among team members, but it explores further cognitive distribution in the material task environment—and with respect to time (Hollan, Hutchins, and Kirsh Citation2000). Distributed cognition stems largely from the work of Edwin Hutchins and has much in common with shared cognition. Both recognize the emergence of higher order cognitive processes in complex socio-technical systems that dwell beyond the boundaries of the individual. Both concepts emerged around the same time and were clearly influenced by some of the same ideas. That said, distributed cognition has its own unique perspective on cognitive processes in complex work teams in socio-technical systems. The task environment in the study of emergent cognitive processes is an important concept with respect to human-automation teaming (Hutchins and Klausen Citation1996) and as SMM theory is updated to include artificial agents.

3.5.6. Transactive memory

Transactive memory is intimately related to team and distributed cognition and is formally defined as ‘the cooperative division of labour for learning, remembering, and communicating relevant team knowledge, where one uses others as memory aids to supplant limited memory’ (Ryan and O’Connor Citation2012; Lewis Citation2003; Wegner Citation1987). In other words, not every fact has to be known by every team member, so long as everyone who needs a fact knows how to retrieve it from someone else on the team. Research on this topic conceives of formally analyzing the flow of information between team members as storage and retrieval transactions, analogous to computer processing.

3.5.7. Team situation awareness

Situation awareness may be discussed at the team level, and its relationship to SMMs is analogous to its relationship to mental models at the individual level. As with individual SA, team (or Shared) SA is supported by information availability and adequate mental models. Additionally, team SA is supported by appropriate communication between team members (Entin and Entin Citation2000).

It is worth noting that many questions used to measure SMMs actually measure SA—that is, rather than asking about general knowledge, experiments may query knowledge inferred about the immediate situation as a proxy for measuring the underlying models. For example, one may ask a pilot and copilot what the next instruction from air traffic control (ATC) will be to test of their knowledge of ATC procedures. This is not an incorrect way to measure SMMs — however, in doing so, the researcher must be aware of other factors that influence SA, such as equipment reliability and the limits of attention and working memory, and consider ways to control for these (Endsley Citation2004, 13-29).

4. SMMs in human-AI teams

Recently, researchers have suggested that SMMs are a useful lens to study and improve performance in teams of human and AI agents. That is, human and AI teammates can be led to make accurate predictions of each other and of their shared task and thus achieve better coordination. Attempting to predict human behaviors or intentions and to modify automated system behavior accordingly is an idea that has been investigated previously in the dynamic function allocation (DFA) literature as well as the adaptive automation (AA) literature (Parasuraman et al. Citation1991; Morrison and Gluckman Citation1994; Kaber et al. Citation2001; Rothrock et al. Citation2002; Scerbo, Freeman, and Mikulka Citation2003; Kaber and Endsley Citation2004; Coye de Brunelis and Le Blaye Citation2008; Kim, Lee, and Johnson Citation2008; Pritchett, Kim, and Feigh Citation2014; Feigh, Dorneich, and Hayes Citation2012b), and in some work on AI expert systems (Mueller et al. Citation2019, Section 5). In both DFA and AA, the goals were similar, i.e. to adapt automation to better support humans in their work, the mechanisms were slightly different. DFA and AA generally were based on observing human behavior, inferring workload, and adapting accordingly to keep workload at an acceptable level in a very predictable (or at least so it seemed to the system designers) way. In some cases performance was included as part of the objective function, but often workload bounding was the main driver. By contrast, the idea behind SMMs is more general: that in order to function as a team, both humans and automated agents must have an understanding of shared and individual goals, likely methods appropriate to achieve them, and teammate capabilities, information needs, etc. - and that from this understanding, each may seek to anticipate the behavior of the other and adapt appropriately to support joint work.

However, though a handful of studies have approached Human-AI SMMs, no formulation of the concept has yet been supported with a quantitative link to improved team performance. The challenges center around (1) how the AI’s mental model is conceived of and implemented, (2) how the Human-AI SMM is elicited and measured, and (3) what factors lead to effective formation of a Human-AI SMM.

In the remainder of this work, we hope to lay the groundwork for this future research. This section reviews existing work on the topic; then, stepping back, we present a broad model of the dynamics of SMM formation in a human-AI team. We then use this to detail the theoretical and practical challenges of applying SMM theory in the context of human-AI teams. In the following sections, we highlight various bodies of literature that may address these challenges—including some that have not yet been discussed in terms of SMMs. Finally, we present general considerations for the construction of experiments to evaluate SMMs in human-AI teams.

4.1. Prior work

In 2010, Jonker et al. presented a conceptual analysis of SMMs, specifically formulated to allow human and AI agents to be considered interchangeably (Jonker, Riemsdijk, and Vermeulen Citation2010). They emphasized SMMs as being fundamentally defined by (1) their ability to produce mutually compatible expectations in each teammate and (2) how they are measured. They also advocated the strict use of similarity, not accuracy, in metrics of SMMs. That is, if team members answer questions about the task and team the same way, then their mental models are (according to Jonker) shared.

Following this conceptual work, Scheutz et al. proposed (but did not implement) a detailed computational framework in 2017 to equip artificial agents (specifically robots) for SMM formation (Scheutz, DeLoach, and Adams Citation2017). Gervits et al. then implemented this framework between multiple robots and tested it in a virtual human-robot collaboration task, claiming in 2020 the first empirical support for the benefits of SMMs in human-agent teams (Gervits et al. Citation2020). Another study, by Hanna and Richards in 2018, investigated a series of relationships between communication modes among humans and AI, SMM formation, trust, and other factors, and positive correlations between all of them and team performance were found (Hanna and Richards Citation2018). Zhang proposed RoB-SMM, which focused particularly on role allocation among teams, but only tested the model among teams of artificial agents (Zhang Citation2008).

Although the experimental results among the above studies are encouraging, they far from fully operationalize the SMM concept. Most critically, both Scheutz and Gervits, one building on the other, discuss SMMs as something possessed by an individual teammate (Scheutz, DeLoach, and Adams Citation2017, 5), which is inconsistent with their definition in foundational literature (Converse, Cannon-Bowers, and Salas Citation1993; Jonker, Riemsdijk, and Vermeulen Citation2010) as a condition arising between teammates when their knowledge overlaps. While Scheutz et al. describe a rich knowledge framework for modeling a human teammate, they make no mention of how this can be compared to the actual knowledge state of the human to establish whether this knowledge is truly shared—a shared mental model may or may not exist, but there is no mechanism to test this. Likewise, Gervits et al. make only a qualitative distinction between systems that either did or did not possess an SMM-inspired architecture, rather than a quantitative measurement of SMM quality as described above § 3.3. Their study deals only with SMMs between robot agents, not between robot and human agents; the human’s mental model is not elicited, and sharedness between robot MMs and human MM is not claimed. They use the terms ‘robot SMM’ and ‘human-robot SMM’ to make this distinction, deferring measurement of the latter to future work. The Hanna and Richards study did produce metrics for SMM quality, but only in the form of introspective Likert ratings about the presence of shared understanding, rather than explicit surveys or behavioral measurements of team member expectations (Hanna and Richards Citation2018).

4.2. Conceptual model of human-AI SMMs

We present here a conceptual model of the components and relevant interactions in a human-AI team that are thought to lead to the formation of an SMM (). Our aim here is to summarize the important concepts, to highlight connections to other bodies of research, and to serve as a point of departure for future debate and refinement of the concept.Footnote2

In the simplest case, the system involves two team members—one human and one artificial—their shared task environment, and a researcher. We include the researcher in our model because of the significant role they play in determining the SMM using current elicitation methods and to illustrate the challenges and specific considerations of eliciting mental models from both parties during experimentation. The researcher here is an abstraction for any human involved in designing or implementing the human-AI system; outside of experimental settings, the researcher is replaced with a team of system designers or engineers, but the dynamics described here remain applicable. We focus on a dyad team in this example, but these relationships could be extended pairwise to larger groups (see below).

Each teammate’s mental model may be loosely divided into its task and team components or viewed holistically. Each individual’s model is informed by at least four factors: (1) their prior knowledge, (2) the task environment, (3) the researcher/system designer, and (4) their teammates. In successful SMM formation, as the teammates perform their task, collaborate with each other, resolve conflicts, and engage in a breadth of team learning behaviors, their mental models converge such that each makes equivalent predictions of the task environment and of each other. The portions of their mental models that produce these equivalent expectations are then defined as the SMM.

Prior experience in a human teammate refers to whatever knowledge, habits, skills, or traits the human brings to the task from their life experience. For artificial agents, this factor may not be applicable in an experimental setting, or it may overlap with the inputs given by the researcher.

Both human and artificial agents may learn from the task environment through direct interaction or explicit training. In an AI specifically, this may take the form of reinforcement learning, or the agent may have a ‘fixed’ understanding of the task, having been given it a priori by its designer as part of a development or training phase.

The researcher(s) play a strong role in both agent’s mental models. To the human, the researcher provides training and context that informs how the human will approach their task. They have an even greater influence over the AI (even outside of experimental settings), potentially designing its entire functionality by hand. For any learning components of the AI, the researcher will likely provide ‘warm start’ models derived from baseline training data, rather than deploying a randomly initialized model to interact with the human.

In an experimental setting, the researcher takes steps to elicit and measure the mental models of each teammate, to assess the quality of their shared mental model or whether they have learned what was intended. This process is nontrivial and requires different methods for human and artificial agents (4.3.2).

Finally, the human and AI acquire mental models of each other through mutual interactions. While some work exists on informing humans’ models of AI (e.g. (Bansal et al. Citation2019)), and some exists on forming AI’s models of humans (see §5), little work yet exists on how the social, collaborative processes involved in human SMM formation discussed in §3.4.1 map to the human-AI setting. Furthermore, these interactions are heavily constrained and mediated by the human-machine interface (see §4.3.3). Trust is a major factor in this aspect of the relationship; for more general reviews on trust regulation in human-automation interaction, see French, Duenser, and Heathcote (Citation2018) and Lee and See (Citation2004).

In larger groups, the above relationships are replicated for every pair of teammates which share responsibilities. Between pairs of humans, some elements such as the human-machine interface are omitted, but most factors remain: each human must learn from the task environment, any provided training, and experience working with their teammate. Between pairs of AI agents, opportunities exist for direct communication or for sharing knowledge directly through shared memory, as seen in Scheutz, DeLoach, and Adams (Citation2017). In these cases, the boundaries between agents are blurred and care must be taken in analyses deciding whether to model a team of artificial agents or a single, distributed agent.

4.3. Challenges relative to human-human teams

The literature currently lacks a thorough exploration of what it means to apply the concept of a mental model to an artificial system. By hypothesis, the concept may be applied equally to AI as to humans: whatever expectations a system produces about its environment or teammates that can lead to useful preemptive behavior is relevant to SMM theory. Nevertheless, all contemporary AI falls into the category of ‘narrow’ (as opposed to ‘general’) intelligence; it does not possess the full range of cognitive abilities of a human. All relevant capabilities of the system must be created explicitly. Following from the above model, we now note some additional considerations that AI ‘narrowness’ necessitates in the application of existing SMM literature to mixed teams of humans and AI, and, where possible, we link to the bodies of literature that may address them.

4.3.1. Forming the AI’s mental models

A fully-fledged Human-AI SMM needs an AI with both a task model and a team model. The components of an AI enabling it to perform its core job (task) can roughly be considered its ‘task model’ - that is, the components of a system that explicitly or implicitly represent knowledge of the external world to be interacted with. Virtually any kind of AI could underlie this portion of the artificial teammate. We consider some of the implications this has for the design and study of a Human-AI SMM in §7.2, but in general the processes for creating and optimizing the task AI are beyond the scope of this review.

Generally speaking, an AI designed for a specific task lacks anything that might be described as a ‘team model’; it makes no inferences about the humans using it. However, there have been a number of efforts in computer science and computational psychology to model humans that may be useful to form the team model component of a Human-AI SMM. We detail these in §5.

4.3.2. Eliciting and measuring human-AI SMMs

Although it is generally assumed that humans are able to answer questions about their own knowledge and reasoning, this ability must be specifically designed for in AI systems. In some types of AI, such as the logical knowledge bases used in the work of Scheutz, DeLoach, and Adams (Citation2017), this may be as simple as outputting a state variable. In others, however, particularly deep learning systems, the ability to explain a prediction or engage in any kind of metacognition is a major, largely unsolved problem. This is the subject of the explainable AI field, which we detail further in section 6.

AI’s lack of explainability constrains how mental models can be elicited in human-AI teams. Whatever elicitation method is used to measure the mental model, it must be applicable to both a human and an AI. An AI certainly cannot easily complete a Likert survey, nor participate in an interview; observation methods only apply if the AI has something intelligible to observe. Conceptual methods may be appropriate for AIs built on logical databases but not for others. Surveys asking direct, objective questions about the state of the task and team are likely the most accessible for both types of teammate. Process tracing is also an option, depending on how the task environment is set up. Eye tracking, for example, would make no sense for an AI, but interactions with controls might. This problem of elicitation is still open; to date, no study has collected measurements from both the human and AI members of a team.

Another issue is that whereas humans have a very broad ability to adapt their mental models, in any AI system, there is much that is hard-coded and inflexible, both about how the system performs its job and how it interacts with its teammates. For example, there might be a fixed turn-taking order or frequency of interactions with the system, a fixed vocabulary of concepts, or a limited set of possible commands or interactions. There may be assumptions about the task environment or teammate behavior that are imposed by the AI’s engineers, either to reduce the complexity of the implementation or because they are assumed to be roughly optimal. (One can consider these situations as corresponding to fixed elements of the AI’ s team or task model, respectively.) This means that in a human-AI team there are many aspects of team behavior for which similarity of mental models is no longer sufficient; in all cases where the AI’s behavior is fixed, there is a correct set of expectations the human must adopt, and the question becomes more so one of accuracy, in which the AI’s fixed behaviors are the baseline.

Additionally, including artificial teammates may prompt the modeling and measurement of additional factors that would not ordinarily be considered part of any mental model. For example, Scheutz et al. model cognitive workload of human teammates by monitoring heart rate and use it to anticipate which teammates need additional support (Scheutz, DeLoach, and Adams Citation2017). It is easy to imagine other human traits that could be modeled, such as personality and tendencies or preferences that the human themselves may not even be aware of. Equivalent traits for machines could include processing bottlenecks and any sensor or equipment limitations not explicitly modeled in the AI itself. These traits fall somewhat outside the scope of SMMs as models that produce equivalent expectations, since they exist only in the mental model of one teammate. Nevertheless, they can be useful to model and for fostering performance. (It is a semantic matter for others to debate whether modeling these traits implies a necessary extension of the SMM concept in the human-AI domain or highlights the breadth of factors besides SMMs that impact human-AI teaming.)

4.3.3. The role of the human-machine interface in developing and maintaining SMMs

To develop a successful SMM, team members must be able to acquire knowledge on what their teammates’ capabilities and responsibilities are and collaborate with them toward a common goal. In human-human SMMs, this is largely done through multiple modes of communication, as highlighted in section 3.4.1. For human-AI teams, the communication with and acquisition of information regarding other teammates is informed by and largely dependent on the interface/communication module used to display, acquire, and pass information.