?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Automatic design of organic materials requires black-box optimization in a vast chemical space. In conventional molecular design algorithms, a molecule is built as a combination of predetermined fragments. Recently, deep neural network models such as variational autoencoders and recurrent neural networks (RNNs) are shown to be effective in de novo design of molecules without any predetermined fragments. This paper presents a novel Python library ChemTS that explores the chemical space by combining Monte Carlo tree search and an RNN. In a benchmarking problem of optimizing the octanol-water partition coefficient and synthesizability, our algorithm showed superior efficiency in finding high-scoring molecules. ChemTS is available at https://github.com/tsudalab/ChemTS.

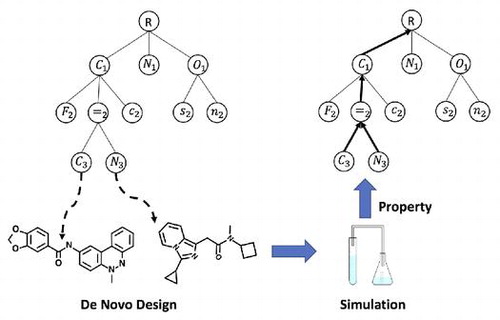

Graphical Abstract

Classification:

1. Introduction

In modern society, a variety of organic molecules are used as important materials such as solar cells [Citation1], organic light-emitting diodes [Citation2], conductors [Citation3], sensors [Citation4] and ferroelectrics [Citation5]. At the highest level of abstraction, design of organic molecules is formulated as a combinatorial optimization problem to find the best solutions in a vast chemical space. Most computer-aided methods for molecular design build a molecule by a combination of predefined fragments (e.g. [Citation6]). Recently, Ikebata et al. [Citation7] succeeded de novo molecular design using an engineered language model of SMILES representation of molecules [Citation8]. It is increasingly evident, however, that engineered models often perform worse than neural networks in text and image generation [Citation9,Citation10]. Gomez-Bombarelli et al. [Citation11] were the first to employ a neural network called variational autoencoder (VAE) to generate molecules. Later Kusner et al. enhanced it to grammar variational autoencoder (GVAE) [Citation12]. SMILES strings created by VAEs are mostly invalid (i.e. they do not translate to chemical structures); so, generation steps have to be repeated many times to obtain a molecule. Segler et al. [Citation13] showed that a recurrent neural network (RNN) using long short-term memory (LSTM) [Citation14] achieves a high probability of valid SMILES generation. In their algorithm, a large number of candidates are generated randomly and a black-box optimization algorithm is employed to choose high-scoring molecules. It is required to generate a very large number of candidates to ensure that desirable molecules are included in the candidate set. Optimization in a too large candidate space can be inhibitively slow.

In this paper, we present a novel Python library ChemTS to offer material scientists a versatile tool of de novo molecular design. The space of SMILES strings is represented as a search tree where the ith level corresponds to the ith symbol. A path from the root toa terminal node corresponds to a complete SMILES string. Initially, only the root node exists and the search tree is gradually generated by Monte Carlo tree search (MCTS) [Citation15]. MCTS is a randomized best-first search method that showed exceptional performance in computer Go [Citation16]. Recently, it has been successfully applied to alloy design [Citation17]. MCTS constructs only a shallow tree and downstream paths are generated by a rollout procedure. In ChemTS, an RNN trained by a large database of SMILES strings is used as the rollout procedure. In a benchmarking experiment, ChemTS showed better efficiency in comparison to VAEs, creating about 40 molecules per minute. As a result, high-scoring molecules were generated within several hours.

2. Method

ChemTS requires a database of SMILES strings and a reward function r(S) where is an input SMILES string. Our definition of SMILES strings contains the following symbols representing atoms, bonds, ring numbers and branches:

{C, c, o, O, N, F, [C@@H], n, -, S,Cl, [O-],[C@H], [NH+],[C@], s, Br, [nH], [NH3+], [NH2+], [C@@], [N+], [nH+], [S@], [N-], [n+],[S@@], [S-], I, [n-], P, [OH+],[NH-], [P@@H], [P@@], [PH2], [P@], [P+], [S+],[o+], [CH2-], [CH-], [SH+], [O+], [s+], [PH+], [PH], [S@@+], /,=, #, 1,2,3,4,5,6,7,8,9,(, ),}. In addition, we have a terminal symbol $. The reward function involves first principle or semi-empirical calculations and describes the quality of the molecule described by S. If S does not correspond to a valid molecule, r(S) is set to an exceptionally small value. We employ rdkit (www.rdkit.org) to check if S is valid or not. Before starting the search, an RNN is trained by the database and we obtain the conditional probability

as a result. The architecture of our RNN is similar to that in [Citation13] and will be detailed in Section 2.1.

MCTS creates a search tree, where each node corresponds to one symbol. Nodes with the terminal symbol are called terminal nodes. Starting with the root node, the search tree grows gradually by repeating the four steps, selection, expansion, simulation and backpropagation (Figure ). Each intermediate node has an upper confidence bound (UCB) score that evaluates the merit of the node [Citation15]. The distinct feature of MCTS is the use of rollout in the simulation step. Whenever a new node is added, paths from the node to terminal nodes are built by a random process. In computer games, it is known that uniformly random rollout does not perform well, and designing a better rollout procedure based on available knowledge is essential in achieving high performance [Citation15]. Our idea is to employ a trained RNN for rollout. A node at level has a partial SMILES string

corresponding to the path from the root to the node. Given the partial string, RNN allows us to compute the distribution of the next letter

. Sampling from the distribution, the string is elongated by one. Elongation by RNN is repeated until the terminal symbol occurs. After elongation is done, the reward of the generated string is computed. In the backpropagation step, the reward is propagated backwards and the UCB scores of traversed nodes are updated. See [Citation17] for details about MCTS.

Figure 1. Monte Carlo tree search. (a) Selection step: the search tree is traversed from the root to a leaf by choosing the child with the largest UCB score. (b) Expansion step: 30 children nodes are created by sampling from RNN. (c) Simulation step: paths to terminal nodes are created by the rollout procedure using RNN. Rewards of the corresponding molecules are computed. (d) Backpropagation step: the internal parameters of upstream nodes are updated.

Figure 2. Best 20 molecules by ChemTS. Blue parts in SMILES strings indicate prefixes made in the search tree. The remaining parts are made by the rollout procedure.

Table 1. Maximum score J at time points 2,4,6 and 8 h achieved by different molecular generation methods.

2.1. Recurrent neural network

Our RNN has a non-deterministic output: an input string is mapped to probability distributions of output symbols

. The RNN represents the function

, where

is a hidden state at position t and

is the one-hot coded vector of input symbol

. The function f is implemented by two stacked gated recurrent units (GRUs) [Citation14], each with 256 dimensional hidden states. The input vector

is fed to the lower GRU, and the hidden state of the lower GRU is fed to the upper GRU. The distribution of output symbol is computed as

, where

is a softmax activation function depending only on the hidden state of the upper GRU.

Given N strings in the training set, we train the network such that it outputs a right-shifted version of the input. Denoted by , the one-hot coded vector of the tth symbol in the ith training string. The parameters in the network

are trained to minimize the following loss function,

where D denotes the relative entropy. Our RNN was implemented using Keras library (github.com/fchollet/keras), and trained with ADAM [Citation18] using a batch size of 256. After the training is finished, one can compute from

. It allows us to perform rollout by sampling the next symbol repeatedly.

3. Experiments

Following [Citation11], we generate molecules that jointly optimize the octanol-water partition coefficient logP and the other two properties: synthetic accessibility [Citation19] and ring penalty that penalizes unrealistically large rings. The score of molecule S is described as(1)

(1)

The reward function of ChemTS is defined as(2)

(2)

ChemTS was compared with two existing methods CVAE [Citation11] and GVAE [Citation12] based on variational autoencoders. Their implementation is available at https://github.com/mkusner/grammarVAE. Both methods perform molecular generation by Bayesian optimization (BO) in a latent space of VAE. RNN, CVAE and GVAE were trained with approximately 250,000 molecules in ZINC database [Citation20]. All methods were trained for 100 epochs. Training took 3.8, 9.4 and 33.5 h, respectively, on a CentOS 6.7 server with a GeForce GTX Titan X GPU. To evaluate the efficiency of MCTS, we prepared two alternative methods using RNN. One is simple random sampling using RNN, where the first symbol is made randomly and it is elongated until the terminal symbol occurs. The other is the combination of RNN and Bayesian optimization [Citation21], where 4000 molecules are made a priori and Bayesian optimization is applied to find the best scoring molecule.

As shown in Table , effectiveness of each method is quantified by the maximum score J among all generated molecules at 2, 4, 6 and 8 h and the speed of molecules generation (i.e. the number of generated molecules per minute). VAE methods performed substantially slower than RNN-based methods, which reflects the low probability of generating valid SMILES strings. ChemTS performed best in finding high-scoring molecules, while the speed of molecular generation (40.89 molecules per minute) was only slightly worse than random generation by RNN (41.33 molecules per minute). The combination of RNN and BO could not find high-scoring molecules. Preparing more candidate molecules may improve the best score, but it would further slow down the molecular generation. In general, it is difficult to design a correct reward function when there are multiple objectives. So, it is important to generate many good molecules in a given time frame to allow the user to browse and select favourite molecules afterwards. See Figure for the best molecules generated by ChemTS.

4. Conclusion

In this paper, we presented a new Python package for molecular generation. It will be further extended to include more sophisticated tree search methods and neural networks. Use of additional packages for computational physics such as pymatgen [Citation22] allows the users to easily implement their own reward function. We look forward to see ChemTS as a part of the open-source ecosystem for organic materials development.

Acknowledgements

We would like to thank Hou Zhufeng, Diptesh Das, Masato Sumita and Thaer M. Dieb for their fruitful discussions.

Additional information

Funding

Notes

No potential conflict of interest was reported by the authors.

References

- Niu G, Guo X, Wang L. Review of recent progress in chemical stability of perovskite solar cells. J Mater Chem A. 2015;3(17):8970–8980.

- Kaji H, Suzuki H, Fukushima T, et al. Purely organic electroluminescent material realizing 100% conversion from electricity to light. Nat Commun. 2015;6:8476.

- Ueda A, Yamada S, Isono T, et al. Hydrogen-bond-dynamics-based switching of conductivity and magnetism: a phase transition caused by deuterium and electron transfer in a hydrogen-bonded purely organic conductor crystal. J Am Chem Soc. 2014;136(34):12184–12192.

- Yeung MCL, Yam VWW. Luminescent cation sensors: from host-guest chemistry, supramolecular chemistry to reaction-based mechanisms. Chem Soc Rev. 2015;44(13):4192–4202.

- Horiuchi S, Tokura Y. Organic ferroelectrics. Nat Mater. 2008;7(5):357–366.

- Podlewska S, Czarnecki WM, Kafel R, et al. Creating the new from the old: Combinatorial libraries generation with machine-learning-based compound structure optimization. J Chem Inf Model. 2017;57(2):133–147.

- Ikebata H, Hongo K, Isomura T, et al. Bayesian molecular design with a chemical language model. J Comput Aided Mol Des. 2017;31(4):379–391.

- Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J Chem Inf Comput Sci. 1988;28(1):31–36.

- Bowman SR, Vilnis L, Vinyals O, et al. Generating sentences from a continuous space. In: Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning (CoNLL); Berlin; 2016. p. 10–21.

- Oord Avd, Kalchbrenner N, Kavukcuoglu K. Pixel recurrent neural networks. In: Proceedings of 33rd International Conference on Machine Learning (ICML); New York City; 2016. p. 1747–1756.

- Gómez-Bombarelli R, Duvenaud D, Hernández-Lobato JM, et al. Automatic chemical design using a data-driven continuous representation of molecules. arXiv preprint arXiv:161002415; 2016.

- Kusner MJ, Paige B, Hernández-Lobato JM. Grammar variational autoencoder. In: Proceedings of 34th International Conference on Machine Learning (ICML); Sydney; 2017. p. 1945–1954.

- Segler MH, Kogej T, Tyrchan C, et al. Generating focussed molecule libraries for drug discovery with recurrent neural networks. arXiv preprint arXiv:170101329; 2017.

- Cho K, van Merrienboer B, Gülçehre Ç, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP); Doha; 2014. p. 1724–1734.

- Browne C, Powley E, Whitehouse D, et al. A survey of Monte Carlo tree search methods. IEEE Trans Comput Intell AI Games. 2012;4(1):1–43.

- Silver D, Huang A, Maddison CJ, et al. Mastering the game of go with deep neural networks and tree search. Nature. 2016;529(7587):484–489.

- Dieb TM, Ju S, Yoshizoe K, et al. MDTS: automatic complex materials design using Monte Carlo tree search. Sci Tech Adv Mater. 2017;18(1):498–503.

- Kingma D, Ba J. Adam: a method for stochastic optimization. arXiv preprint arXiv:14126980; 2014.

- Ertl P, Schuffenhauer A. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J Cheminf. 2009;1(1):8.

- Irwin JJ, Sterling T, Mysinger MM, et al. ZINC: a free tool to discover chemistry for biology. J Chem Inf Model. 2012;52(7):1757–1768.

- Ueno T, Rhone T, Hou Z, et al. COMBO: an efficient Bayesian optimization library for materials science. Mater Discov. 2016;4:18–21.

- Ong SP, Richards WD, Jain A, et al. Python materials genomics (pymatgen): a robust, open-source python library for materials analysis. Comp Mater Sci. 2013;68:314–319.