ABSTRACT

The rising use of technology in classrooms has brought with it a concomitant wave of computer-based assessments. The argument for computer-based testing is often framed in terms of efficiency and data management (computer-based tests allow test data to be processed more efficiently and feedback to be leveraged for student learning more quickly) rather than in terms of the direct effects that students experience from engaging with novel learning tools. Whilst potentially beneficial, for some students the outcomes of computer-based tests may be counter-productive. This review considers the cognitive, and often implicit, consequences of testing mode upon students with reference to testing performance and subjective measures of cognitive load. Considerations for teachers, test writers and future research are presented with a view to raising the significance of learners’ subjective experiences as a guiding perspective in educational policy making.

1. Introduction

The use of computers in Australian secondary school education has increased in recent decades (Hatzigianni et al., Citation2016; Vassallo & Warren, Citation2017). In response, education systems continue to work to understand the educational impact of information and communications technology (ICT) use in the classroom. In many cases, technologies are introduced to learning environments (Vassallo & Warren, Citation2017), while more recently, the use of technologies has made its way into the realm of assessments. For example, the transition to computer-based assessment in the Australian National Assessment Programme in Literacy and Numeracy (NAPLAN) began in 2018, with a view to 100% digitalisation of the process by 2022 (ACARA, Citation2016). Comparatively, PISA’s move towards computer-based assessments began in 2006 (PISA, Citation2010). This trend is echoed by inconsistent evidence proposing that computer-based testing (CBT) may produce equivalent, if not better results than paper-based testing (PBT) (Ackerman & Lauterman, Citation2012; Prisacari & Danielson, Citation2017).

Despite previous research showing mixed effects of test mode (i.e. taking a test on computer compared to paper), these transitions towards increased computer use in assessments have been upheld as a gateway to faster results, more precise and accurate measurement of student achievement and as a form of assessment-for-learning by providing adaptive feedback (Prisacari & Danielson, Citation2017; Selwyn, Citation2014). The assumption underlying these claims is, of course, that computer-based forms of assessment are at the very least equivalent, if not superior, to paper-based assessments. However, it is worth considering whether different forms of an assessment selectively advantage certain students in ways that traditional test measures do not reflect (Martin, Citation2014). For instance, previous studies have shown that computer-based learning and assessment tasks not only produce lower scores for school-aged students (Carpenter & Alloway, Citation2019), but in some instances, these differences are exacerbated for students with lower working memory capacities (Batka & Peterson, Citation2005; Sidi et al., Citation2017). Additionally, alternative measures of student performance such as measures of cognitive efficiency (Paas & Van Merriënboer, Citation1993) suggest that under certain assessment conditions, students may experience subtle changes to their subjective experience of a task that may not be reflected in changes to assessment scores (Galy et al., Citation2012).

One way to conceptualise changes to students’ subjective experiences during learning and assessment processes is through the theoretical framework of cognitive load theory (CLT), which emphasises instructional sequences and materials design in order to improve learning outcomes (J. Sweller, van Merriënboer, et al., Citation1998). CLT proposes that learning is constrained by the limits of human working memory. When the demands of a learning task overwhelm working memory capacity (WMC), a learner experiences excessive cognitive load, which reduces the resources a student has available to meaningfully engage in the learning process (Sweller, Citation1988; Sweller et al., Citation2019).

One of the idiosyncrasies of CLT is that its current models are derived from research and thinking in a range of psychological theories including models of cognitive architecture (Anderson, Citation1983; J. Sweller, J. J. Van Merriënboer, et al., Citation1998) and dual-channel processing models of working memory (Baddeley & Hitch, Citation1994). In its current theoretical form, CLT underpins Sweller’s tripartite model (J. Sweller, J. J. Van Merriënboer, et al., Citation1998) and Mayer’s theory of multimedia learning (Mayer, Citation2003). This broad reach of CLT serves to highlight both its wide-ranging appeal as well as some of the complexities that remain elusive to researchers such as the temporal and task-sensitivity of cognitive load (CL) measures.

While there has been reasonably wide discussion of the applications of CLT to traditional learning environments (Anmarkrud et al., Citation2019), most of this research has focused on ways of improving instructional and material design, giving rise to a collection of well-discussed effects, such as the split-attention effect (the reduction in performance when information must be integrated from multiple sources), the redundancy effect (the reduction in performance when distracting information is included in learning materials), the worked-example effect (the improved performance that results from providing worked examples to lower-ability students) and the modality effect (the improvement in working memory capacity that occurs when information is presented in both visual and phonological modalities) (Sweller et al., Citation2019). However, there are two important issues that have received little attention in the empirical literature. Firstly, reviews by de Jong (Citation2010) and Anmarkrud et al. (Citation2019) both highlighted that very little CLT research has included any data on participant working memory capacity, which is one of the key principles on which CLT is premised.

Additionally, some criticisms have been made of artificial learning contexts in CLT research which are removed from the realities of dynamic classroom environments, thus raising questions about the utility and ecological validity of some research (Ayres, Citation2015; Martin, Citation2014; Mayer, Citation2005; Skulmowski & Xu, Citation2021). For instance, given that a learner’s prior knowledge is a key determinant of the overall cognitive load they experience during a task, many research designs involve artificial time constraints (de Jong, Citation2010). Another means to control the influence of prior knowledge is to engage participants in learning content that is outside their area of expertise, thereby forcing students to learn content they are not necessarily motivated to learn, or material for which the assessment bears no meaningful consequence (e.g. psychology students learning economics – see Korbach et al., Citation2018; Park et al., Citation2015).

Part of the artificiality surrounding some CLT research may stem from the ongoing challenges faced by those attempting to identify valid and reliable measures of cognitive load. For instance, a number of studies report the temporal resolution afforded by physiological measurements such as EEG and pupillometry techniques (Antonenko et al., Citation2010; Haapalainen et al., Citation2010) as well as leveraging the spatial resolution provided by fMRI imaging to confirm distinct categories of cognitive load (Whelan, Citation2007). Likewise, CLT researchers that take a traditional cognitive approach have employed indirect measurement techniques such as secondary-task paradigms that measure students’ monitoring abilities of an unrelated task (Ayres, Citation2001; Brünken et al., Citation2002). However, these approaches to measuring cognitive load ultimately compromise the authenticity of learning environments through their reliance on equipment or, in the case of secondary task procedures, involvement of processes that detract from the underlying learning and assessment tasks.

The aim of this review is to provide a synopsis of test mode effects on cognitive load. While there is little research addressing this explicitly in school-aged learners, a key focus of this review is to consider whether the findings from research conducted on tertiary students can be applied to school-aged learners. The discussion that follows will first present an overview of CLT as a theoretical framework through which to consider recent literature on comparing traditional and computer-based learning materials. Finally, the implications of computer-based assessments will be considered. In drawing conclusions from this review, implications for further research are presented.

2. Methodology

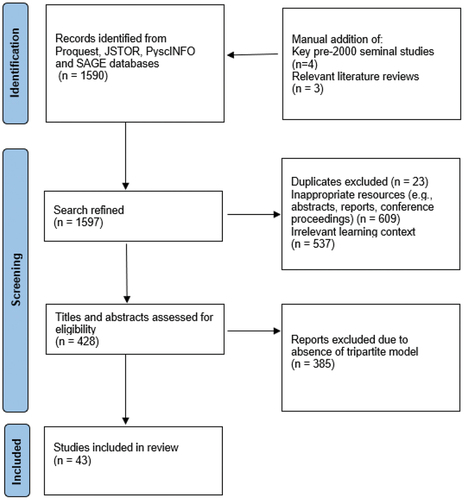

For this narrative-based review, the initial literature search was conducted via four databases: ProQuest, JSTOR, PsycINFO and SAGE. Search terms included cognitive load, multimedia learning, computer-based testing, paper-based testing, working memory and assessment. Given that the literature on CLT, including precursor theories, spans multiple decades, the initial search was restricted to papers published since 2000, as well as those relating specifically to the education of students in primary years and above. Following this initial search, a manual search was used to identify, apart from a number of seminal studies, those papers relating specifically to Sweller’s tripartite model of CLT (J. Sweller, J. J. Van Merriënboer, et al., Citation1998). This list was further refined using content analysis, with papers being included on the basis of learning contexts (the following contexts were excluded: Early Childhood and non-education/industrial applications of CLT, with only primary, secondary and tertiary contexts included), publishing date (i.e. after 2000) and reference to the tripartite theory of cognitive load as the primary theoretical framework – see . In total, 43 empirical studies have been included in the following discussion, plus a number of reviews (see Appendix 1 in the online supplemental materials).

2.1. Overview

The articles included in the review process fell into two broad categories. The first category included articles with a focus on cognitive load and its measurement in the context of learning (n = 24). The second category focused on comparisons of computer and paper-based modalities, of which eight studies included an explicit focus on cognitive load. Of the 43 papers included, 14 sampled school-aged students, with the remaining papers sampling undergraduate or postgraduate students. It was also observed that few studies (n = 7) included measures of working memory capacity, and none of the tools used for this measurement were common to any other studies in this review. Geographically, the research included in this review was sourced from 18 unique countries, with multiple studies coming from (in descending order of frequency) Australia, USA, Germany, the Netherlands, the United Kingdom (UK), Israel, Norway and Canada.

With respect to measurement of cognitive load, studies included in this review reported a variety of approaches. The most commonly reported measurement of subjective experience of cognitive load was the Paas scale (Paas et al., Citation1994), consisting of either 7-point or 9-point self-reports of participant experiences of item difficulty and mental effort (n = 15). Fewer studies (n = 5) employed the NASA-TLX workload scale which required subjective ratings on six dimensions (mental demand, physical demand, temporal demand, performance, effort and frustration). Alternative measures of cognitive load tended to rely on objective measures (dual task or secondary task paradigms [n = 2]; physiological measures such as EEG and pupillometry [n = 7]; reaction time or time-to-completion [n = 2]). Overall, it was observed that subjective rating scales were the most commonly employed measures of cognitive load because they are minimally intrusive and convenient to employ. However, numerous studies attempted to improve the validity of subjective tools by combining these with objective measures (n = 10). Additionally, it was observed that relatively few papers focused on measuring cognitive load in school-aged students (n = 7), with only one of these directly comparing differences between paper and computer-based modes.

The key themes to emerge from this overview were the application of CLT research to comparisons of computer and paper-based modes, the consistency of working memory data in CLT research, the application of testing mode research to different learning contexts (school aged vs university-aged learners) and the variation in methods used for measuring cognitive load.

The review was guided by the following research questions, with a particular focus on school-aged learners:

What are the effects of test mode on cognitive load?

Does the inclusion of working memory data in CLT research help to understand test mode effects?

What is the best way to measure CL in authentic learning contexts?

3. Results

Because the focus of this review is ultimately to provide a deeper understanding of the cognitive load experienced under different testing mode conditions, an overview of cognitive load theory, including recent evolutions to the tripartite model, is presented first. Following this, an analysis of research addressing each of the three main review questions is presented.

3.1. Recent evolution of the tripartite model of cognitive load

CLT posits that a student’s cognitive load is comprised of three types of load. First, load that stems from the complexity of the information being learned is termed intrinsic load (IL). Material that is high in IL is also high in element interactivity, reflecting an increase in the number of units of information that must be concurrently processed in working memory. For example, written instructions to build a simple molecule (such as HCl) are relatively low in IL, compared to written instructions to build a complex model (such as NaHSO4) in which students have to simultaneously consider four different types of atoms, their relative positions and the nature of the bonds between each (Sweller & Chandler, Citation1994). The experience of high IL appears to be dependent on learner characteristics: those with high prior knowledge (and thus well-established, automated schema) or increased WMC are more likely to report lower levels of IL compared to those with low prior knowledge on questions of equivalent element interactivity (Beckmann, Citation2010; Klepsch et al., Citation2017; Naismith et al., Citation2015; Park et al., Citation2015). In attempting to return CLT to ‘the actual zone of constructive applicability’ (Kalyuga, Citation2011, p. 13), some have tried to reconceptualise the nature of IL. Citing the similarities between CLT and theories of motivation in learning, Schnotz and Kürschner (Citation2007) suggested that the load associated with new learning material must operate as a function of the motivation a learner has to engage with it. This line of thinking has led to the reconceptualisation of IL as the load associated with achieving a particular instructional goal (Kalyuga & Singh, Citation2016).

Second, extraneous load (EL) is associated with factors unrelated to learning processes or content. In traditional CLT, EL typically refers to load that learners incur as a result of the nature of instructional design (i.e. how materials are designed, lessons are structured or instructions are given), leading to a number of instructional effects that mediate successful learning (Sweller, Citation2020). The classic example of this has been demonstrated by comparing material in which instructions are presented in a traditional list format, and material in which instructions are integrated into process diagrams (such as completing the wiring for an electrical insulation resistance test). This so-called split-attention effect suggests that information is better learned when EL is lowered by decreasing the unnecessary demands placed on learners by constantly shifting their attention to integrate disparate sources of essential information (Chandler & Sweller, Citation1991). A further example of EL, the seductive details effect (SDE), is typically demonstrated in multimedia contexts by presenting learners with a text containing interesting but irrelevant pictures or videos. The SDE has been reported to decrease test scores and perceptual processing of textual information (Korbach et al., Citation2018; Park et al., Citation2015). However, the experience of EL depends largely on the presence of high levels of IL. That is, when students are given less complex material to learn, the influence of extraneous information disappears (Sweller & Chandler, Citation1994). This is important in the context of testing mode effects, as it implies that any extraneous load associated with the use of computers is less likely to add load that is detrimental to performance during easy, or low-load tasks. Conversely, under high load tasks, the extraneous load associated with computer use will likely have greater bearing on test performance.

Third, cognitive load arising due to learning or schema formation is termed germane load (GL). Although traditionally considered a third category of CL, GL may be more accurately conceptualised as the working memory resources required to process IL (Leppink et al., Citation2015). Galy et al. (Citation2012) placed learners under increasing IL (by increasing task difficulty) and increasing EL (by increasing time pressure), and manipulated the total cognitive resources available to participants by testing students at differing levels of alertness. They proposed that GL is the resultant cognitive resource that remains after the addition of IL, EL and functional state factors such as alertness. This is consistent with recent revisions of CLT proposing that GL be treated completely separately to IL and EL, as a pool of working memory resources that are actually devoted to learning (de Jong, Citation2010; Kalyuga, Citation2011; Sweller, Citation2010), with the implication being that IL and EL combine in a theoretically additive relationship to determine the cognitive load that a learner experiences, subject to individual learner characteristics (motivation, attitudes and prior knowledge). For instance, a learner who is insufficiently challenged by a task may become unmotivated and choose to allocate insufficient resources to complete a relatively simple calculation, and thus fail to meet the learning objectives of that activity and in doing so, report a relatively low CL. Skulmowski and Xu’s (Citation2021) review of CLT in digital learning environments noted a lack of awareness of the role of learning context in CLT research.
To this end, it is worth observing that relatively few of the studies included in this review were based on an assessment of learning in contexts where the material was contextually appropriate for learners, such as assessing psychology undergraduates on psychology-related content or taking observations from students in authentic learning contexts which are genuinely integrated into their regular teaching and learning programmes. We highlight this disconnect because there is a risk that recent developments to the tripartite model of CLT that remove GL altogether from the measurement of CL (Jiang & Kalyuga, Citation2020; Skulmowski & Xu, Citation2021) will further facilitate the decontextualisation of assessments and misalignment between learner characteristics and meaningful forms of assessment in future CL research.

The overarching theme of CLT research is that learning is most effective (and learning errors lowest) when EL is minimised and IL is optimised within the limits of a student’s working memory capacity (Van Merriënboer et al., Citation2006). This has been demonstrated in numerous studies which, in placing learners under increased CL, have observed increased error rate (Ayres, Citation2001, Citation2006), decreased levels of comprehension (Mayes et al., Citation2001), poorer task performance (e.g. test scores) (Emerson & MacKay, Citation2011; Schmeck et al., Citation2015) and lower levels of task-directed visual attention (Debue & Van De Leemput, Citation2014). However, these results may be partly obfuscated by inconsistencies in the operationalisation of cognitive load. Although part of this difficulty may stem from the range of terminology used in the literature (cognitive load, mental effort, mental load, workload), there have been persistent issues with attempts to theorise and empirically differentiate IL, EL and GL. As evidenced by recent discussions pertaining to the relative status of each type of CL, this would suggest that empirically differentiating between the three categories renders CLT unnecessarily idiosyncratic (Jiang & Kalyuga, Citation2020).

3.2. Working memory in CLT research

An important issue that pervades much CLT research is the role of working memory. CLT is clearly premised on the assumption that WMC is the limiting factor in our capacity to learn (Sweller et al., Citation2019). However, several reviews point to a remarkable absence of WMC measurement in CLT research, given its role as a mediating variable in the experience of CL or as a way to validate the subjective scales ubiquitous in the measurement of cognitive load (Anmarkrud et al., Citation2019; de Jong, Citation2010). These observations are consistent with the outcome of the present review in which only seven of the studies included referred to measures of WMC (Batka & Peterson, Citation2005; Chen et al., Citation2018; Galy et al., Citation2012; Logan, Citation2015; Noyes & Garland, Citation2003; Noyes et al., Citation2004; Schwamborn et al., Citation2011). Where recent researchers have included measures of WMC, this has typically served as a control variable to demonstrate empirical homogeneity between groups (Korbach et al., Citation2018; Park et al., Citation2015) rather than to examine the relationship between individual differences in WMC and their respective CL during learning. While empirically sound, this is a much harder requirement to meet when observing cognitive load in authentic learning contexts. Two studies of interest (Chen et al., Citation2018; Mayes et al., Citation2001) have demonstrated a negative relationship between WMC and subjective experiences of cognitive load; however, these both employed between-subjects designs, which may introduce unnecessary variation owing to individual differences.

As for the more subtle cognitive consequences that learners might experience when engaging with different testing modalities, there is evidence that CBT environments change students’ ability to draw effectively on key metacognitive processes such as retrieval, self-regulation and performance monitoring (Ackerman & Lauterman, Citation2012; Logan, Citation2015; Noyes & Garland, Citation2003; Noyes et al., Citation2004; Prisacari & Danielson, Citation2017; Sidi et al., Citation2017), with a resultant increase in the mental effort reported by students (Noyes et al., Citation2004). One way that researchers have tried to gauge the cognitive impacts of the use of computers compared to paper has been to consider if the traditional test performance measures are mediated by working memory capacity. Results from Batka and Peterson (Citation2005) showed that some of the common instructional complexities that arise from using multimedia inefficiently in classrooms, such as presenting information in competing rather than complementary modalities and the presence of redundant information, selectively disadvantage learners with lower WMC. There is supporting evidence from Carpenter and Alloway (Citation2019) demonstrating that children experience an advantage when completing working memory tests on paper compared to on a computer. This presents an interesting issue for CLT because if WMC can be shown to mediate performance on equivalent tests given in different modalities, then there would be reason to assume that the experience of CL would also differ across different testing modalities, given the underlying assumptions about the role of WMC in CLT (i.e. that decreased WMC resources generally lead to increased CL).

Moreover, including WMC as a covariate in analyses of cognitive load may add rigour to CLT research in two ways. Firstly, it may help to control for the working memory demands placed on participants that arise from the measurement of cognitive load itself rather than from the load imposed by learning and assessment tasks (Anmarkrud et al., Citation2019; de Jong, Citation2010). Secondly, while much CLT research attempts to control for the influence of differences in learners’ prior knowledge by having participants learn new material unrelated to the participants’ area of study, WMC may provide an important measure of the ‘system capacity’ (de Jong, Citation2010, p. 123) intrinsic to each learner that exists independent of prior knowledge.

3.3. Measuring cognitive load

Measures of CL fall into three broad categories: physiological, secondary task and subjective measures. Physiological techniques (such as heart rate, heart rate variance, blood pressure, eye-tracking, pupil fixations and diameter) have risen in popularity in response to calls for more objective measures with greater time resolution of changes in cognitive load. Cardiac measures are assumed to reflect autonomic arousal and considered some of the most accurate and responsive measures of cognitive load (Galy et al., Citation2012; Haapalainen et al., Citation2010; Solhjoo et al., Citation2019). In contrast, results using pupillometry measures have been mixed and prone to individual idiosyncrasies (Debue & Van De Leemput, Citation2014; Szulewski et al., Citation2017). From the perspective of artificiality, physiological measures present two key challenges: they are typically indirect measures of CL, and they tend to be intrusive to authentic learning and assessment environments.

Studies using a secondary task typically involve students completing a primary learning task, while performance or reaction times in response to a secondary task (e.g. digit span) or stimulus (e.g. the appearance of an arbitrary response stimulus) are used to indirectly measure CL. Students who are engaged in a primary task of higher IL tend to have slower reaction times and poorer recall of secondary task details (Ayres, Citation2001; Brünken et al., Citation2002). The design of these studies lends itself well to more traditional lines of cognitive science and empirical psychology and improves the temporal resolution of CL measurements, yet these studies also suffer from high levels of artificiality and intrusion in authentic learning environments as they are likely to interfere with test performance.

The most commonly employed techniques are subjective rating scales. The Paas scale (Paas et al., Citation1994) is an adapted version of an item difficulty scale (see Bratfisch et al., Citation1972) that consists of a single scale of either 7 or 9 points prompting learners to rate their invested mental effort. The NASA-TLX workload scale (Hart & Staveland, Citation1988) is multidimensional, collecting subjective ratings on six subscales: mental demand, physical demand, temporal demand, performance, effort and frustration. Numerous studies have shown that these scales are sensitive to manipulations of task complexity, and that students are generally able to reflect minor changes in task difficulty and cognitive effort on these subjective scales (Korbach et al., Citation2018; Schmeck et al., Citation2015). While both scales have been shown to be sensitive to small changes in IL and EL, the Paas scale provides a greater range of CL scores (Naismith et al., Citation2015) and may be less intrusive because it can be administered with a single item rather than relying on multiple responses for each measurement of CL. The Paas scale is generally associated with relatively high measures of reliability, with reported Cronbach’s alpha values ranging from 0.67 to 0.90 (Ayres, Citation2006; Klepsch et al., Citation2017; Paas et al., Citation1994).
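As an illustration of how a reliability figure of this kind can be obtained, the following minimal sketch computes Cronbach’s alpha from repeated ratings using the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). It is not taken from any of the studies cited above: treating each learner’s ratings across several tasks as the "items" is an assumption, and the function name `cronbach_alpha` and the ratings shown are hypothetical.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a table of ratings.

    ratings: one row per learner, each row holding k ratings
    (assumption: one rating per task, treated as the scale "items").
    """
    if len(ratings) < 2:
        raise ValueError("need ratings from at least two learners")
    k = len(ratings[0])
    if k < 2:
        raise ValueError("need at least two ratings per learner")
    if any(len(row) != k for row in ratings):
        raise ValueError("every learner must have the same number of ratings")

    # Sample variance of each item (column) across learners.
    item_variances = [variance(column) for column in zip(*ratings)]
    # Sample variance of each learner's summed rating.
    total_variance = variance([sum(row) for row in ratings])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 9-point mental-effort ratings: four learners, three tasks.
ratings = [
    [3, 4, 3],
    [6, 7, 6],
    [5, 5, 6],
    [8, 9, 8],
]
alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Because the hypothetical learners rate all three tasks in a very similar rank order, the resulting alpha is high; less consistent ratings would pull it down.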

One of the main criticisms of self-report measures of CL is that increasing subjectivity comes at the cost of accuracy. Researchers have explored the effects of asking learners to subjectively rate perceived CL immediately following a learning task compared to a single, delayed rating given at the end of a collection of tasks. Single ratings given at the end of a series of learning tasks tend to be more sensitive to the salience of more difficult questions; subjective ratings are therefore more sensitive to item-level manipulations of CL when taken immediately following each learning or assessment task (Raaijmakers et al., Citation2017; Schmeck et al., Citation2015; Van Gog et al., Citation2012). One advantage of employing subjective scale measures in this way is that it relies on within-subjects research designs, which have been identified as an important recommendation for future CLT research (Brünken et al., Citation2002; Debue & Van De Leemput, Citation2014; Prisacari & Danielson, Citation2017).

A second issue pertaining to subjective measures is that they tend to be sensitive to learner ability and task dynamics. Ayres (Citation2006) collected learners’ subjective ratings on a 7-point scale of task difficulty after they completed a series of mathematical bracket expansion problems. He concluded that subjective ratings are sensitive to subtle differences in element interactivity that may not be reflected in the rates at which learners make errors. Similar findings have been reported by Naismith et al. (Citation2015); however, low-level learners may also have a poorer grasp of task complexities owing to poorer schema formation which would, in turn, render their ratings less valid (Ayres, Citation2006; Klepsch et al., Citation2017). One solution to this may be to incorporate learner training prior to administering CL ratings in which learners are briefed on the concept of cognitive load, and practise rating tasks designed to impose differing levels of load. There is some precedent for this approach, with Klepsch et al. (Citation2017) reporting that when learners were given training to understand CL and to distinguish questions of differing load, they were better discriminators of high compared to low-load tasks. In relation to the interaction between CL measures and task dynamics, Galy et al. (Citation2012) reported that when task difficulty (IL) and time pressure (EL) are increased, learners’ mental efficiency suffers.

This highlights an interesting intricacy of measuring CL: where CL is generally thought to correlate to performance measures such as test scores, it is also likely that subjective experiences of CL may not be reflected by changes in performance measures such as test scores and error rates. Paas and Van Merriënboer (Citation1993) developed a CL efficiency scale that compares standardised scores of mental effort (EZ) and performance (PZ) in a way that allocates positive values where a learner reports PZ>EZ but negative values where EZ>PZ. Given the idiosyncratic nature of individuals’ experiences of cognitive load, efficiency measures may provide a useful way to triangulate CL data. Researchers commonly acknowledge the benefits of validating subjective scale measures by including more objective physiological measures. However, to avoid their intrusion upon authentic learning contexts, triangulating these measures with measures of efficiency may provide a welcome compromise.
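The efficiency measure described above can be sketched in a few lines. The text specifies only the sign behaviour (positive where PZ > EZ, negative where EZ > PZ); the sketch below assumes the commonly cited form of the measure, E = (PZ - EZ) / √2, and assumes that scores are standardised against the sample mean and population standard deviation. The function names and the data are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def z_scores(values):
    """Standardise raw values (assumption: population SD of the sample)."""
    centre = mean(values)
    spread = pstdev(values)
    if spread == 0:
        raise ValueError("cannot standardise values with zero variance")
    return [(value - centre) / spread for value in values]


def efficiency(performance, effort):
    """Return one efficiency score per learner: (PZ - EZ) / sqrt(2)."""
    if len(performance) != len(effort):
        raise ValueError("performance and effort must be the same length")
    pz = z_scores(performance)
    ez = z_scores(effort)
    return [(p - e) / sqrt(2) for p, e in zip(pz, ez)]


# Hypothetical data: test scores and 9-point mental-effort ratings
# for four learners, listed in the same order.
scores = [12, 15, 9, 18]
effort_ratings = [7, 4, 8, 3]

eff = efficiency(scores, effort_ratings)
for learner, value in enumerate(eff, start=1):
    label = "efficient" if value > 0 else "inefficient"
    print(f"Learner {learner}: E = {value:+.2f} ({label})")
```

In this hypothetical sample, the learners who score above the mean while reporting below-average effort receive positive efficiency values, and those who score below the mean while reporting above-average effort receive negative values, which is the pattern the measure is intended to surface even where test scores alone would not.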

Finally, it is worth acknowledging the distinct lack of qualitative, learner-centred experiential data in CLT research. A large part of this may stem from the relatively high proportion of laboratory-based experimental research in the field. There are authors who have placed explicit value upon learner experiences as a means to validating traditional measures of CL through post-task interviews or think-aloud reflections (Naismith et al., Citation2015; Van Gog et al., Citation2012), yet it is hard to rationalise the two contrasting assumptions that a) learners are able to reflect small changes in CL on subjective scales, and b) the extent to which an individual experiences CL may depend on learning processes unique to each individual. An obvious way to address this for future research would be to corroborate subjective CL ratings with think-aloud protocols reported in recent studies.

3.4. Implications of CLT for computer-based learning and assessment

It is worth reiterating at this point that CLT research has traditionally been tasked with the reduction of extraneous and the optimisation of intrinsic load experienced by learners during an instructional sequence in order to free up precious working memory resources and ultimately enhance the learning process leading to long-term memory formation (Sweller, Citation2020). What, then, might the implications be when instructional formats are shifted from traditional student-teacher-blackboard interaction patterns to allow for the inclusion of information presented and assessed on a digital device?

Incorporating the use of multimedia into learning and assessment sequences adds a layer of complexity to our understanding of best practice in the classroom. The variety of potential stimuli (narration, text, static and dynamic images) as well as interactive elements (e.g. open text, drag-and-drop, adaptive feedback) not only increases the opportunities to engage learners in novel learning experiences, but also increases the risk of involving learners in inefficient learning sequences that place increased demands on their working memory capacities. To this end, CLT has played an important role in understanding the cognitive effects incurred when learners engage with multimedia learning environments (Mayer, Citation2019).

A key question in the use of CLT in multimedia learning environments is the way in which digital learning interacts with the theoretical underlying cognitive loads. The fact that engaging with digital learning tools can be hugely motivating is widely accepted. However, the use of digital learning and assessment tools without consideration of the extraneous and germane loads they induce in learners will lead to a detrimental misalignment between instructional design and learning outcomes (Skulmowski & Xu, Citation2021).

In a general sense, there is a lack of consensus on whether learning on computer or learning via more conventional media (i.e. paper) leads to better outcomes, although younger students seem to be put at a greater disadvantage than adult learners when presented with computer-based assessments. A large study by Bennett et al. (Citation2008) compared mathematics CBT and PBT results from 1,970 eighth-grade North American students. They reported significantly higher scores on PBT, although the reported effect size was small (d = .14). Similar findings in favour of paper-based learning and assessment techniques have been reported in other studies of primary-aged learners (Chu, Citation2014; Logan, Citation2015). On the other hand, those studies reporting a CBT advantage also acknowledge that CBT typically disadvantages lower-ability learners (Noyes et al., Citation2004), low-SES learners (Yu & Iwashita, Citation2021) or those with poorer WMC (Logan, Citation2015). This is significant because it suggests that learner performance under different testing modalities becomes more dependent on functional and affective states during learning and assessment tasks than on the differences in question difficulty that are intended to differentiate between learners of differing abilities and content competence.
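For readers less familiar with the effect size statistic reported above, the following Python sketch shows how a standardised mean difference (Cohen's d) is typically computed using a pooled standard deviation. It is a generic illustration under stated assumptions: the function name and the two sets of scores are hypothetical, and the sketch does not reproduce the data or the analysis of Bennett et al. (Citation2008).

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardised mean difference between two groups, using the pooled SD."""
    na, nb = len(group_a), len(group_b)
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = (
        (na - 1) * variance(group_a) + (nb - 1) * variance(group_b)
    ) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical test scores for a paper-based and a computer-based group.
paper = [52, 48, 50, 54, 46]
computer = [51, 47, 49, 53, 45]
d = cohens_d(paper, computer)
```

A positive value here indicates that the first group (paper) scored higher on average. The magnitude expresses that difference in units of the pooled standard deviation, which is why a statistically significant difference in a large sample can still correspond to a small d.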

In directly considering the cognitive load of testing mode, Prisacari and Danielson (Citation2017) conducted a counterbalanced study in which undergraduate chemistry students were randomly assigned to complete a series of quizzes in both computer and paper-based formats. While participants did not report differences in cognitive load or mental effort for either mode, the researchers did report a decrease in the use of scratch paper for computer-based questions compared to paper-based questions designed to induce higher IL (algorithmic and conceptual level questions). Further to this, Sidi et al. (Citation2017) noted that students completing computer-based assessments are likely to be doing so under relatively high time pressures. This increase in EL produced increases in inefficiencies as well as poorer self-monitoring by students who were more inclined to overestimate their abilities in answering questions.

Evidently, there is some precedent for the proposal that learners incur cognitive consequences that may not be reflected in traditional measures of performance when teachers move learning and assessment from paper to computer. To uphold this assertion, cognitive evidence ought to show that differences in cognitive load are selectively associated with areas of the brain typically involved in key cognitive processes such as attention, working memory, motivation and self-regulation. A review by Whelan (Citation2007) supports the notion that different cognitive loads are associated with cortical activation of areas typically associated with key working memory and executive function processes. For example, Whelan’s review found that empirical manipulations of intrinsic load have been observed in neuroimaging studies to selectively increase activity of the dorso-lateral prefrontal cortex, an area typically associated with attention control. Other studies have shown that empirical manipulation of extraneous load can be matched to the processing limitations of verbal processing (i.e. Wernicke’s area) and visual association (posterior parietal lobe) areas. Likewise, manipulations of germane load have been associated with executive functional areas in the superior frontal sulcus of the frontal cortex. We propose that under certain circumstances, there are unavoidable cognitive consequences for learners when engaging with digital learning and assessment activities. Indeed, there is now an emerging argument that these cognitive consequences, especially where increased EL can be offset by concomitant improvements in motivation and content engagement, may lead to better learning outcomes and could be justified through careful instructional design (Skulmowski & Xu, Citation2021). 
To leverage any benefits afforded by CBT, therefore, any increase in such cognitive load must be aligned with a meaningful and authentic form of assessment that engages learners in an ecologically valid manner. In other words, assessing learners on computer for the sake of assessing learners on computer may undermine the original intentions of any assessment.

Test writers should note that school-aged students are more sensitive than older students to the effects of taking assessments on computer. It is worth highlighting that some circumstances may justify the use of computers, particularly in cases where mass distribution and evaluation of test performance renders paper-based assessment less economical. However, in these circumstances additional considerations must be made to account for the additional load that computer-based assessment places on learners and to minimise any associated disadvantage. For instance, there is some evidence that school-aged learners (particularly males) take longer to read information presented on a screen (Ronconi et al., Citation2022), and that this may lead to shallower levels of processing (Delgado & Salmerón, Citation2021; Delgado et al., Citation2018), and subtle changes in eye-tracking patterns (Latini et al., Citation2020). One plausible way that test writers could accommodate these effects is by allowing additional reading time for computer-based tests. Furthermore, split attention effect research from CLT highlights that information presented in ways that require students to shift their attention between multiple sources of information is likely to increase the cognitive demands of a task (Sweller et al., Citation2019). Consistent with research by Prisacari and Danielson (Citation2017) and Pengelley et al. (Citation2023), students sitting tests that require them to show working out (e.g. algebraic and formula rearranging) may be placed at a disadvantage if their CBT environment does not provide a means to do so on screen, and they are forced to show working out on scratch paper while processing and recording final responses on computer.

4. Discussion

In the context of assessment, the current review suggests that CBT formats are not unanimously equivalent to PBT. We have presented mixed evidence for the argument of CBT equivalency and suggest that the extent to which CBT is appropriate is largely dependent on characteristics of both the learner taking the assessment, and the assessment itself. Most of the studies that report CBT inferiority have focused on primary and lower secondary school-aged children (Bennett et al., Citation2008; Carpenter & Alloway, Citation2019; Chu, Citation2014; Logan, Citation2015). This observation is consistent with much of the CLT research that suggests that multimedia learning and assessment environments are likely to fundamentally change the metacognitive strategies that students engage to meet the needs of a given task (Ackerman & Lauterman, Citation2012; Noyes & Garland, Citation2003). Additionally, it is likely that these increased demands selectively disadvantage learners with lower WMC, including younger and developing students.

In the context of this review, the following discussion highlights some key conclusions drawn in relation to our three research questions.

4.1. RQ 1: what are the effects of testing mode on cognitive load?

In the context of recent developments to the triarchic framework of CLT, teachers intending to implement CBT formats ought to consider several important factors. Firstly, traditional CLT might once have suggested that where identical versions of a test can be presented to students in either paper or computer formats, the only difference between the two would be subtle changes in extraneous load arising from the use of a computer. We have presented evidence that this is a reductive assessment of the reality of multimedia learning environments. More recent acknowledgement of the role that motivation must play in determining the CL experienced by an individual for any given task points to the reality that engaging in multimedia environments can be both highly motivating for learners and increasingly taxing on an individual’s germane resources as they navigate complex and non-linear interaction sequences that are embedded with a variety of audio, visual and textual information. If IL is a function of a learner’s motivation to engage with a learning task, or to achieve a particular learning goal (i.e. achieve a successful outcome on a test), then the importance of ecological validity and CLT research in authentic learning contexts cannot be ignored, given the prevalence of studies employing assessment tasks which have limited contextual importance to the learners they are assessing.

Secondly, we have presented evidence that while CBT formats may not necessarily lead to poorer performance in all contexts, they may change the manner in which students engage with test materials – either in terms of their test behaviour (e.g. use of scratch paper) or self-regulatory behaviours (e.g. performance monitoring). It is entirely possible that a learning sequence that places learners under increased EL may not be reflected in commonly employed subjective measures of CL because their underlying working memory capacity and motivation to engage allows them to absorb information and perform appropriately in response to a given task, particularly when presented with tasks with low IL. Further, the extent to which additional computer-induced extraneous load is likely to be considered acceptable to justify the use of CBT is likely to be mediated by individual differences in working memory and computer competence. Whether this then renders the use of CBT unjustifiable is a difficult question, but one worth exploring from an equity perspective.

A key consideration at this point is the significance of test mode effects in instances where they have been shown to have little to no effect on test scores. Firstly, we have presented evidence that many of the studies conducted in contexts with school-aged students indicate that, indeed, computer-based testing tends to lead to poorer test scores (Bennett et al., Citation2008; Carpenter & Alloway, Citation2019; Logan, Citation2015). Of note is that the differences between paper and computer modes are absent in much research conducted on tertiary learners (Prisacari & Danielson, Citation2017), although the paper-based advantage may be present under certain test parameters such as time constraints (Ackerman & Lauterman, Citation2012). Furthermore, if the deleterious effects of computer-based assessment in younger learners are mediated by working memory capacity, then this raises another important issue. For instance, it has been shown that successive tasks that place high demands on learners may lead to a working memory depletion effect (Chen et al., Citation2018). This suggests that the deleterious effects of computer-based tests may not lead to decreased test scores initially, but that they may gradually deplete working memory resources over successive testing events, as is common in national standardised assessments such as NAPLAN.

Another area in which test mode effects are important is the use of scratch paper. Scratch paper use is commonly required for certain types of questions that require algebraic rearrangement, or stepped working out in calculation-based assessment questions. Regardless of whether or not this type of test-taking behaviour has a direct impact on test scores (particularly for students with high working memory capacity), it is often used by teachers to assess and diagnose students’ approaches to addressing a question. Thus, as test mode has been shown to reduce scratch paper use (Prisacari & Danielson, Citation2017), it is reasonable to question the use of learning and assessment practices that interfere with adaptive learning and assessment behaviours. This is especially important in the context of this discussion as we were able to identify only one study considering the effect of test mode on scratch paper use in school-aged learners (Pengelley et al., Citation2023), which suggested that for younger learners CBT environments lead to decreased scratch paper use as students are placed under increasing intrinsic and/or extraneous cognitive load which, in turn, is associated with poorer test performance. Despite few studies having focused on testing mode effects on school-aged learners, the evidence presented in this review (Bennett et al., Citation2008; Carpenter & Alloway, Citation2019; Chen et al., Citation2018; Pengelley et al., Citation2023) suggests it is plausible that the working memory and attentional demands placed on students during computer-based learning and assessment tasks are likely to be exacerbated in children when compared to adults.

Despite the discourse surrounding the inevitability of digitisation in education, educators have been on the brink of technology-induced transformation for many decades (Laurillard, Citation2008). While we remain cautious of hyperbole surrounding the ed-tech revolution (Selwyn, Citation2016), we note that the narrative associated with educational technology typically equates the inevitability of change with social improvement, rather than exploring the effects it has on its primary consumers: teachers and students. In this sense, CLT research would benefit from a refocus on what is actually happening in authentic learning contexts when learners engage in meaningful learning and assessment tasks (Selwyn, Citation2010), given the increased perceptual complexities that are afforded by digital learning and assessment practices.

4.2. RQ 2: does the inclusion of working memory data in CLT research help to understand test mode effects?

We have presented evidence that design features of multimedia tasks may selectively advantage learners based on WMC. This is further supported by neuroimaging evidence indicating that experimental manipulations of CL selectively engage localised cortical areas associated with key working memory processes, thus highlighting the importance of WM processes in understanding the cognitive impacts of different learning and assessment tasks. This is a significant consideration to make when incorporating digital tools into assessment tasks. We find the lack of working memory data in the CLT literature concerning because, as Schnotz and Kürschner (Citation2007) concluded, cognitive task performance is fundamentally determined by working memory constraints, but the learning process relates to changes in the organisation of long-term memory. It is worth highlighting that much CLT and multimedia research does not differentiate between learning processes and cognitive task demands particularly clearly because most researchers rely on test performance (i.e. a measure of learning) given at the end of a sequence of learning to gauge the relative merits of digital learning tools (which are mediated by cognitive processes in working memory as learners engage with learning content). The question of whether testing mode has distinguishable cognitive effects on learners (especially if these effects selectively favour high WMC learners) is certainly one that future research should continue to explore from an equity perspective.

4.3. RQ 3: what is the best way to measure CLT in authentic learning contexts?

The issue of CLT measurement continues to be problematic. On the one hand, there have been promising developments in objective physiological techniques which have improved temporal resolution of measurements while enhancing the validity of operationalisation of cognitive load. However, the fundamental issue put forward in this review relating to the need for greater emphasis on meaningful and authentic learning and assessment contexts renders many measurement techniques intrusive. A weight of evidence suggests the most efficient means for measuring CL in authentic assessment settings is through the use of subjective rating scales such as the 7- or 9-point Paas scale. This is not to say that other techniques are not worth pursuing in different research contexts; however, the ability to insert rating scales alongside authentic assessment questions means that researchers can achieve relatively high item-level resolution of variation in CL during assessments while maintaining the authenticity of an assessment task. Two approaches underrepresented in the literature are the use of an efficiency measure (Paas & Van Merriënboer, Citation1993) that relates performance to mental effort, and post-interview think-aloud tasks as a means to validate subjective ratings provided by learners. Through an experiential lens, this line of research might offer valuable insights in validating and disentangling the different forms of CL, and the way they interact with variations in motivation and assessment format.

5. Limitations

It is important to acknowledge some of the limitations of the current review and its methodology. In the first instance, we sought to only include articles from the year 2000 onwards. This was largely intended to limit the scope of the current discussion to more recent evolutions of CLT and multimedia research and was based on previous acknowledgements on the breadth of CLT research across multiple domains of knowledge (Martin, Citation2014). Secondly, the focus of this review has primarily been on research incorporating CLT as its theoretical framework. We acknowledge that the parallel theory (Mayer’s Cognitive Theory of Multimedia Learning) contains many relevant features that have not been included in this review because the theory lends itself best to principles of instructional design in a multimedia context rather than a comparison between paper and computer. Finally, while every attempt was made to include key information relating to the scope of database searches employed in this review, we acknowledge that the use of manual search techniques is prone to bias which could be avoided if approached in a more systematic manner.

6. Implications for practice and research

While cognitive load theory has led to the identification of many effects that inform cognitively sound instructional design which are, in turn, determined by the mechanics of human cognitive architecture, its application to assessment is relatively new. This review has highlighted several key areas of interest for practitioners and researchers. The main finding of the present review emphasises that additional research on test mode effects in school-aged students is necessary. In terms of classroom practice, the present review suggests that computer and paper-based assessment modes are unlikely to be experienced in equal ways. Computer-based testing may lead to lower scores, and lower levels of positive test-taking behaviours, such as scratch paper use. This has implications for the way in which computer-based tests are written and administered. Moreover, future research should prioritise the measurement of cognitive load in authentic learning environments. Given the geographical breadth of research considered in this review, these recommendations should be considered in an international context of the increased use of computer-based testing modes in high-stakes standardised assessment, such as NAPLAN and PISA.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/1475939X.2024.2367517.

Additional information

Notes on contributors

James Pengelley

James Pengelley is an adjunct lecturer at Murdoch University. His research has focused on the impact of educational technologies on learning environment, classroom discourse and student cognition.

Peter R. Whipp

Peter R. Whipp is the Head of School and Dean of Education at Murdoch University.

Anabela Malpique

Anabela Malpique is a Senior Lecturer in the School of Education, Edith Cowan University, Western Australia. Her research interests focus on literacy development, particularly writing development and instruction. Her research involves developing writers in primary and secondary schools.

References

- ACARA. (2016). NAPLAN online. https://www.nap.edu.au/online-assessment

- Ackerman, R., & Lauterman, T. (2012). Taking reading comprehension exams on screen or on paper? A metacognitive analysis of learning texts under time pressure. Computers in Human Behavior, 28(5), 1816–1828. https://doi.org/10.1016/j.chb.2012.04.023

- Anderson, J. R. (1983). The architecture of cognition (Vol. 5). Harvard University Press. https://go.exlibris.link/7r6yPk8n

- Anmarkrud, Ø., Andresen, A., & Bråten, I. (2019). Cognitive load and working memory in multimedia learning: Conceptual and measurement issues. Educational Psychologist, 54(2), 61–83. https://doi.org/10.1080/00461520.2018.1554484

- Antonenko, P., Paas, F., Grabner, R., & van Gog, T. (2010). Using electroencephalography to measure cognitive load. Educational Psychology Review, 22(4), 425–438. https://doi.org/10.1007/s10648-010-9130-y

- Ayres, P. (2001). Systematic mathematical errors and cognitive load. Contemporary Educational Psychology, 26(2), 227–248. https://doi.org/10.1006/ceps.2000.1051

- Ayres, P. (2006). Using subjective measures to detect variations of intrinsic cognitive load within problems. Learning and Instruction, 16(5), 389–400. https://doi.org/10.1016/j.learninstruc.2006.09.001

- Ayres, P. (2015). State-of-the-art research into multimedia learning: A commentary on Mayer’s handbook of multimedia learning. Applied Cognitive Psychology, 29(4), 631–636. https://doi.org/10.1002/acp.3142

- Baddeley, A. D., & Hitch, G. J. (1994). Developments in the concept of working memory. Neuropsychology, 8(4), 485. https://doi.org/10.1037/0894-4105.8.4.485

- Batka, J. A., & Peterson, S. A. (2005). The effects of individual differences in working memory on multimedia learning. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 49(13), 1256–1260. https://doi.org/10.1177/15419312050490130

- Beckmann, J. (2010). Taming a beast of burden – On some issues with the conceptualisation and operationalisation of cognitive load. Learning and Instruction, 20(3), 250–264. https://doi.org/10.1016/j.learninstruc.2009.02.024

- Bennett, R. E., Braswell, J., Oranje, A., Sandene, B., Kaplan, B., & Yan, F. (2008). Does it matter if I take my mathematics test on computer? A second empirical study of mode effects in NAEP. Journal of Technology, Learning, and Assessment, 6(9). https://ejournals.bc.edu/index.php/jtla/article/view/1639

- Bratfisch, O., Borg, G., & Dornic, O. (1972). Perceived item-difficulty in three tests of intellectual performance capacity [Report]. https://eric.ed.gov/?id=ED080552

- Brünken, R., Steinbacher, S., Plass, J. L., & Leutner, D. (2002). Assessment of cognitive load in multimedia learning using dual-task methodology. Experimental Psychology, 49(2), 109–119. https://doi.org/10.1027//1618-3169.49.2.109

- Carpenter, R., & Alloway, T. (2019). Computer versus paper-based testing: Are they equivalent when it comes to working memory? Journal of Psychoeducational Assessment, 37(3), 382–394. https://doi.org/10.1177/0734282918761496

- Chandler, P., & Sweller, J. (1991). Cognitive load theory and the format of instruction. Cognition and Instruction, 8(4), 293–332. https://doi.org/10.1207/s1532690xci0804_2

- Chen, O., Castro-Alonso, J. C., Paas, F., & Sweller, J. (2018). Extending cognitive load theory to incorporate working memory resource depletion: Evidence from the spacing effect. Educational Psychology Review, 30(2), 483–501. https://doi.org/10.1007/s10648-017-9426-2

- Chu, H.-C. (2014). Potential negative effects of mobile learning on students’ learning achievement and cognitive load – A format assessment perspective. Journal of Educational Technology & Society, 17(1), 332–344. http://www.jstor.org/stable/jeductechsoci.17.1.332

- Debue, N., & Van De Leemput, C. (2014). What does germane load mean? An empirical contribution to the cognitive load theory. Frontiers in Psychology, 5, 1099. https://doi.org/10.3389/fpsyg.2014.01099

- de Jong, T. (2010). Cognitive load theory, educational research, and instructional design: Some food for thought. Instructional Science, 38(2), 105–134. https://doi.org/10.1007/s11251-009-9110-0

- Delgado, P., & Salmerón, L. (2021). The inattentive on-screen reading: Reading medium affects attention and reading comprehension under time pressure. Learning and Instruction, 71, 101396. https://doi.org/10.1016/j.learninstruc.2020.101396

- Delgado, P., Vargas, C., Ackerman, R., & Salmerón, L. (2018). Don’t throw away your printed books: A meta-analysis on the effects of reading media on reading comprehension. Educational Research Review, 25, 23–38. https://doi.org/10.1016/j.edurev.2018.09.003

- Emerson, L., & MacKay, B. (2011). A comparison between paper‐based and online learning in higher education. British Journal of Educational Technology, 42(5), 727–735. https://doi.org/10.1111/j.1467-8535.2010.01081.x

- Galy, E., Cariou, M., & Mélan, C. (2012). What is the relationship between mental workload factors and cognitive load types? International Journal of Psychophysiology, 83(3), 269–275. https://doi.org/10.1016/j.ijpsycho.2011.09.023

- Haapalainen, E., Kim, S., Forlizzi, J. F., & Dey, A. K. (2010). Psycho-physiological measures for assessing cognitive load. Proceedings of the 12th ACM International Conference on Ubiquitous Computing (pp. 301–310). https://doi.org/10.1145/1864349.1864395

- Hart, S. G., & Staveland, L. E. (1988). Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Advances in Psychology, 52, 139–183. https://doi.org/10.1016/S0166-4115(08)62386-9

- Hatzigianni, M., Gregoriadis, A., & Fleer, M. (2016). Computer use at schools and associations with social-emotional outcomes–A holistic approach. Findings from the longitudinal study of Australian children. Computers & Education, 95, 134–150. https://doi.org/10.1016/j.compedu.2016.01.003

- Jiang, D., & Kalyuga, S. (2020). Confirmatory factor analysis of cognitive load ratings supports a two-factor model. The Quantitative Methods for Psychology, 16(3), 216–225. https://doi.org/10.20982/tqmp.16.3.p216

- Kalyuga, S. (2011). Cognitive load theory: How many types of load does it really need? Educational Psychology Review, 23(1), 1–19. https://doi.org/10.1007/s10648-010-9150-7

- Kalyuga, S., & Singh, A.-M. (2016). Rethinking the boundaries of cognitive load theory in complex learning. Educational Psychology Review, 28(4), 831–852. https://doi.org/10.1007/s10648-015-9352-0

- Klepsch, M., Schmitz, F., & Seufert, T. (2017). Development and validation of two instruments measuring intrinsic, extraneous, and germane cognitive load. Frontiers in Psychology, 16(8). https://doi.org/10.3389/fpsyg.2017.01997

- Korbach, A., Brünken, R., & Park, B. (2018). Differentiating different types of cognitive load: A comparison of different measures. Educational Psychology Review, 30(2), 503–529. https://doi.org/10.1007/s10648-017-9404-8

- Latini, N., Bråten, I., & Salmerón, L. (2020). Does reading medium affect processing and integration of textual and pictorial information? A multimedia eye-tracking study. Contemporary Educational Psychology, 62, 101870. https://doi.org/10.1016/j.cedpsych.2020.101870

- Laurillard, D. (2008). Digital technologies and their role in achieving our ambitions for education. Institute of Education, University of London. https://discovery.ucl.ac.uk/id/eprint/10000628/1/Laurillard2008Digital_technologies.pdf

- Leppink, J., van Gog, T., Paas, F., & Sweller, J. (2015). Cognitive load theory: Researching and planning teaching to maximise learning. In J. Cleland & S. Durning (Eds.), Researching medical education (pp. 207–218). Wiley & Sons. https://doi.org/10.1002/9781118838983.ch18

- Logan, T. (2015). The influence of test mode and visuospatial ability on mathematics assessment performance. Mathematics Education Research Journal, 27(4), 423–441. https://doi.org/10.1007/s13394-015-0143-1

- Martin, S. (2014). Measuring cognitive load and cognition: Metrics for technology-enhanced learning. Educational Research & Evaluation, 20(7–8), 592–621. https://doi.org/10.1080/13803611.2014.997140

- Mayer, R. E. (2003). The promise of multimedia learning: Using the same instructional design methods across different media. Learning and Instruction, 13(2), 125–139. https://doi.org/10.1016/S0959-4752(02)00016-6

- Mayer, R. E. (2005). Principles for managing essential processing in multimedia learning: Segmenting, pretraining, and modality principles. In R. Mayer (Ed.), The Cambridge handbook of multimedia learning (pp. 169–182). Cambridge University Press. https://doi.org/10.1017/CBO9780511816819.012

- Mayer, R. E. (2019). Thirty years of research on online learning. Applied Cognitive Psychology, 33(2), 152–159. https://doi.org/10.1002/acp.3482

- Mayes, D., Sims, V., & Koonce, J. (2001). Comprehension and workload differences for VDT and paper-based reading. International Journal of Industrial Ergonomics, 28(6), 367–378. https://doi.org/10.1016/S0169-8141(01)00043-9

- Naismith, L. M., Cheung, J. J., Ringsted, C., & Cavalcanti, R. B. (2015). Limitations of subjective cognitive load measures in simulation‐based procedural training. Medical Education, 49(8), 805–814. https://doi.org/10.1111/medu.12732

- Noyes, J., & Garland, K. (2003). VDT versus paper-based text: Reply to Mayes, Sims and Koonce. International Journal of Industrial Ergonomics, 31(6), 411–423. https://doi.org/10.1016/S0169-8141(03)00027-1

- Noyes, J., Garland, K., & Robbins, L. (2004). Paper‐based versus computer‐based assessment: Is workload another test mode effect? British Journal of Educational Technology, 35(1), 111–113. https://doi.org/10.1111/j.1467-8535.2004.00373.x

- Paas, F., & van Merriënboer, J. (1993). The efficiency of instructional conditions: An approach to combine mental effort and performance measures. Human Factors: The Journal of the Human Factors & Ergonomics Society, 35(4), 737–743. https://doi.org/10.1177/001872089303500412

- Paas, F., van Merriënboer, J., & Adam, J. (1994). Measurement of cognitive load in instructional research. Perceptual and Motor Skills, 79(1), 419–430. https://doi.org/10.2466/pms.1994.79.1.419

- Park, B., Korbach, A., & Brünken, R. (2015). Do learner characteristics moderate the seductive-details-effect? A cognitive-load-study using eye-tracking. Journal of Educational Technology & Society, 18(4), 24–36. https://www.jstor.org/stable/jeductechsoci.18.4.24

- Pengelley, J., Whipp, P. R., & Rovis-Hermann, N. (2023). A testing load: Investigating test mode effects on test score, cognitive load, and scratch paper use with secondary school students. Educational Psychology Review, 35, Article 67. https://doi.org/10.1007/s10648-023-09781-x

- PISA. (2010). PISA computer-based assessment of student skills in science. OECD Publishing. https://doi.org/10.1787/9789264082038-en

- Prisacari, A., & Danielson, J. (2017). Computer-based versus paper-based testing: Investigating testing mode with cognitive load and scratch paper use. Computers in Human Behavior, 77, 1–10. https://doi.org/10.1016/j.chb.2017.07.044

- Raaijmakers, S., Baars, M., Schaap, L., Paas, F., & van Gog, T. (2017). Effects of performance feedback valence on perceptions of invested mental effort. Learning and Instruction, 51, 36–46. https://doi.org/10.1016/j.learninstruc.2016.12.002

- Ronconi, A., Veronesi, V., Mason, L., Manzione, L., Florit, E., Anmarkrud, Ø., & Bråten, I. (2022). Effects of reading medium on the processing, comprehension, and calibration of adolescent readers. Computers and Education, 185, 104520. https://doi.org/10.1016/j.compedu.2022.104520

- Schmeck, A., Opfermann, M., van Gog, T., Paas, F., & Leutner, D. (2015). Measuring cognitive load with subjective rating scales during problem solving: Differences between immediate and delayed ratings. Instructional Science, 43(1), 93–114. https://doi.org/10.1007/s11251-014-9328-3

- Schnotz, W., & Kürschner, C. (2007). A reconsideration of cognitive load theory. Educational Psychology Review, 19(4), 469–508. https://doi.org/10.1007/s10648-007-9053-4

- Schwamborn, A., Thillmann, H., Opfermann, M., & Leutner, D. (2011). Cognitive load and instructionally supported learning with provided and learner-generated visualizations. Computers in Human Behavior, 27(1), 89–93. https://doi.org/10.1016/j.chb.2010.05.028

- Selwyn, N. (2010). Looking beyond learning: Notes towards the critical study of educational technology. Journal of Computer Assisted Learning, 26(1), 65–73. https://doi.org/10.1111/j.1365-2729.2009.00338.x

- Selwyn, N. (2014). Distrusting educational technology: Critical questions for changing times. Routledge, Taylor & Francis Group. https://doi.org/10.4324/9781315886350

- Selwyn, N. (2016). Minding our language: Why education and technology is full of bullshit … and what might be done about it. Learning, Media and Technology, 41(3), 437–443. https://doi.org/10.1080/17439884.2015.1012523

- Sidi, Y., Shpigelman, M., Zalmanov, H., & Ackerman, R. (2017). Understanding metacognitive inferiority on screen by exposing cues for depth of processing. Learning and Instruction, 51, 61–73. https://doi.org/10.1016/j.learninstruc.2017.01.002

- Skulmowski, A., & Xu, M. (2021). Understanding cognitive load in digital and online learning: A new perspective on extraneous cognitive load. Educational Psychology Review, 33(2), 171–196. https://doi.org/10.1007/s10648-021-09624-7

- Solhjoo, S., Haigney, M. C., McBee, E., van Merriënboer, J. J., Schuwirth, L., Artino, A. R., Battista, A., Ratcliffe, T. A., Lee, H. D., & Durning, S. J. (2019). Heart rate and heart rate variability correlate with clinical reasoning performance and self-reported measures of cognitive load. Scientific Reports, 9(1), 1–9. https://doi.org/10.1038/s41598-019-50280-3

- Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12(2), 257–285. https://doi.org/10.1207/s15516709cog1202_4

- Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educational Psychology Review, 22(2), 123–138. https://doi.org/10.1007/s10648-010-9128-5

- Sweller, J. (2020). Cognitive load theory and educational technology. Educational Technology Research & Development, 68(1), 1–16. https://doi.org/10.1007/s11423-019-09701-3

- Sweller, J., & Chandler, P. (1994). Why some material is difficult to learn. Cognition and Instruction, 12(3), 185–233. https://doi.org/10.1207/s1532690xci1203_1

- Sweller, J., van Merriënboer, J., & Paas, F. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10(3), 251–296. https://doi.org/10.1023/A:1022193728205

- Sweller, J., van Merriënboer, J. J., & Paas, F. (2019). Cognitive architecture and instructional design: 20 years later. Educational Psychology Review, 31(2), 261–292. https://doi.org/10.1007/s10648-019-09465-5

- Szulewski, A., Gegenfurtner, A., Howes, D. W., Sivilotti, M. L., & van Merriënboer, J. J. (2017). Measuring physician cognitive load: Validity evidence for a physiologic and a psychometric tool. Advances in Health Sciences Education, 22(4), 951–968. https://doi.org/10.1007/s10459-016-9725-2

- Van Gog, T., Kirschner, F., Kester, L., & Paas, F. (2012). Timing and frequency of mental effort measurement: Evidence in favour of repeated measures. Applied Cognitive Psychology, 26(6), 833–839. https://doi.org/10.1002/acp.2883

- Van Merriënboer, J. J., Kester, L., & Paas, F. (2006). Teaching complex rather than simple tasks: Balancing intrinsic and germane load to enhance transfer of learning. Applied Cognitive Psychology, 20(3), 343–352. https://doi.org/10.1002/acp.1250

- Vassallo, S., & Warren, D. (2017). Use of technology in the classroom. In D. Warren & G. Daraganova (Eds.), Growing up in Australia – The longitudinal study of Australian children, annual statistical report (Vol. 8, pp. 99–112). Australian Institute of Family Studies. https://growingupinaustralia.gov.au/research-findings/annual-statistical-report-2017/use-technology-classroom

- Whelan, R. R. (2007). Neuroimaging of cognitive load in instructional multimedia. Educational Research Review, 2(1), 1–12. https://doi.org/10.1016/j.edurev.2006.11.001

- Yu, W., & Iwashita, N. (2021). Comparison of test performance on paper-based testing (PBT) and computer-based testing (CBT) by English-majored undergraduate students in China. Language Testing in Asia, 11(1), 1–21. https://doi.org/10.1186/s40468-021-00147-0